Abstract

To address the pseudo-Gibbs phenomenon that arises during hyperspectral remote sensing image enhancement, this paper proposes a novel image enhancement method based on the nonsubsampled shearlet transform (NSST). The main motivation of this study is to treat the adjustment of the coefficients in remote sensing image enhancement as a pattern recognition task. First, the input image is decomposed into one low-frequency component and several high-frequency components by NSST. Second, the guided filter is applied to the low-frequency component to improve the contrast, and the improved fuzzy contrast is used to suppress the noise of the high-frequency components. Third, the processed low-frequency and high-frequency coefficients are reconstructed by the inverse nonsubsampled shearlet transform (INSST) to obtain the final enhanced image. The experimental results demonstrate that the proposed approach has clear advantages in terms of both objective metrics and subjective visual quality.

1 Introduction

With the widespread application of imaging-spectrometer remote sensing technology, the demand for high-quality hyperspectral images keeps growing. Owing to unfavorable environmental conditions, however, the contrast and definition of hyperspectral remote sensing images are degraded to varying degrees [23, 45]. Image enhancement is therefore a key technology for recovering and preserving the details of hyperspectral images. Current image enhancement algorithms can be divided into two categories: spatial-domain and transform-domain enhancement [2, 14]. In the spatial domain, histogram equalization (HE) is a traditional enhancement technique [19, 21, 22, 35], but it suffers from over-enhancement and noise amplification. Contrast-limited adaptive histogram equalization (CLAHE) is an effective method for enhancing the local contrast of an image, and it remedies the defects of HE to some extent [9]. Other simple and effective spatial-domain algorithms include dualistic sub-image histogram equalization (DSIHE) [36], unsharp masking [33] and gamma correction [10]. With the rapid development of digital image processing technology, transform-domain enhancement techniques have also been proposed, based for example on the wavelet transform [46], the curvelet transform [28] and the contourlet transform [8]. The contourlet transform provides a flexible multi-resolution, directional and structured decomposition of images [15] and overcomes the inherent inefficiency of the wavelet transform, but it is not shift-invariant. To obtain shift-invariance, the nonsubsampled contourlet transform (NSCT) was proposed on the basis of contourlet theory [4, 37]. Owing to these characteristics, NSCT is now widely used in image fusion and image enhancement algorithms [41, 44].

Pu et al. [29] proposed a hyperspectral remote sensing image enhancement method based on NSCT and unsharp masking to deal with low contrast and noise amplification. This algorithm achieves good results in terms of mean and standard deviation, but it can produce over-enhancement. Liu et al. [24] proposed a hyperspectral remote sensing image enhancement approach based on NSCT and a mean filter. This method performs well in terms of image definition, contrast and peak signal-to-noise ratio (PSNR), but its computational burden is high. Wu et al. [39] proposed a hyperspectral image enhancement technique based on multi-scale retinex (MSR) in the NSCT domain, which is effective in terms of image information entropy and contrast. Li et al. [12] proposed a novel remote sensing image enhancement technique based on NSCT, which yields a significant improvement in image enhancement.

With further research on transform-domain theory, the shearlet transform and the nonsubsampled shearlet transform (NSST) were proposed, and both are widely used in remote sensing image denoising and enhancement [7, 34]. Yang et al. [42] proposed a remote sensing image enhancement algorithm based on the shearlet transform and fuzzy enhancement. Their experimental results demonstrate that this technique obtains a good visual effect and that the entropy and mean are also significantly improved. Wu et al. [40] proposed an adaptive image enhancement technique based on NSST and the constraint of human eye perception information fidelity, which also achieves good results in terms of definition and contrast. Lv et al. [27] proposed a hyperspectral remote sensing image enhancement approach based on NSST and the guided filter, but this method may produce over-enhancement because the high-frequency components are over-processed.

Although these algorithms have achieved certain results in image enhancement, the pseudo-Gibbs phenomenon still exists. To solve this problem, a novel hyperspectral remote sensing image enhancement method based on NSST is proposed in this paper. We mainly focus on the design of enhancement strategies for both the low-frequency and high-frequency components. The main contributions of the proposed algorithm are outlined as follows.

1) We introduce the guided filter into the field of image enhancement. The guided filter model is used to process the low-frequency component. The guided filter is experimentally verified to have a better contrast enhancement effect than traditional bilateral filtering.

2) We present a novel high-frequency denoising strategy based on the improved fuzzy contrast.

3) We propose a new remote sensing image enhancement approach in the NSST domain that uses the enhancement and denoising strategies mentioned above. First, the image is decomposed into one low-frequency component and several high-frequency components by NSST. Second, the guided filter is used to process the low-frequency component to improve the contrast, and the improved fuzzy contrast is adopted to adjust the coefficients of the high-frequency sub-bands. Third, the inverse NSST (INSST) is applied to reconstruct the image, and the final enhanced image is obtained. Extensive experiments are conducted to verify the effectiveness of the proposed algorithm on different remote sensing image enhancement problems. Seven image enhancement algorithms are used for comparison. Experimental results demonstrate that the proposed approach achieves good performance in both subjective and objective assessments.

The remainder of this paper is organized as follows. Section 2 introduces the theory of the nonsubsampled shearlet transform. The proposed hyperspectral remote sensing image enhancement method is described in Section 3. Section 4 presents the experimental results and compares them with other state-of-the-art algorithms. Finally, the conclusions are summarized in Section 5.

2 Theoretical analysis

2.1 Non-subsampled shearlet transform

The non-subsampled shearlet transform is constructed by combining geometry and multi-scale analysis in an affine system. When the dimension n = 2, the affine system of the shearlet transform can be described as follows [6, 38]:

where ϕ ∈ L²(R²), L² denotes the space of square-integrable functions and det denotes the determinant of a matrix. Both A and B are invertible 2 × 2 matrices, and |det B| = 1. If MAB(ϕ) forms a tight frame, the elements of MAB(ϕ) are called composite wavelets. A is called the anisotropic dilation matrix, and its powers A^i are associated with scaling transformations; B is the shear matrix, and its powers B^j are associated with geometric transformations that keep the area constant. The expressions for A and B are as follows [26]:

Setting a = 4 and s = 1, Eq. (2) becomes:
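For readers who want the objects referred to in this subsection written out, the display below gives the form in which the affine system, the matrices A and B, and their values for a = 4 and s = 1 usually appear in the shearlet literature. It is a reconstruction in the notation of this section under that assumption, not a verbatim copy of Eqs. (1)–(3); in particular, the index ranges i, j ∈ Z and k ∈ Z² are the standard ones and are assumed here.

```latex
% Assumed standard form of the affine system with composite dilations
\[
M_{AB}(\phi) = \left\{ \phi_{i,j,k}(x) = \left| \det A \right|^{i/2}
  \phi\!\left( B^{j} A^{i} x - k \right) : i, j \in \mathbb{Z},\; k \in \mathbb{Z}^{2} \right\}
\]

% Anisotropic dilation matrix A and shear matrix B (note det B = 1)
\[
A = \begin{pmatrix} a & 0 \\ 0 & \sqrt{a} \end{pmatrix}, \qquad
B = \begin{pmatrix} 1 & s \\ 0 & 1 \end{pmatrix}
\]

% Values obtained for a = 4 and s = 1
\[
A = \begin{pmatrix} 4 & 0 \\ 0 & 2 \end{pmatrix}, \qquad
B = \begin{pmatrix} 1 & 1 \\ 0 & 1 \end{pmatrix}
\]
```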

The discretization process of NSST can be divided into two steps: multi-scale decomposition and directional localization. The multi-scale decomposition is achieved by a non-subsampled pyramid (NSP) filter bank. The directional localization is accomplished by a shearlet filter (SF).

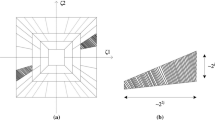

Fig. 1 depicts the frequency-domain decomposition and the frequency-domain support of NSST. The multi-scale and multi-directional decomposition process of NSST is shown in Fig. 2, where the number of NSP decomposition levels is 3.

3 Proposed method

3.1 Guided filter in low-frequency sub-band

The guided filter is widely used in image enhancement and edge-preserving smoothing. In this article, the guidance image, input image and filtered image are denoted by I, p and q, respectively. The guided filter is derived from a local linear model, which is defined as follows [5]:

where i is the pixel index and k is the index of the local square window wk of radius r. The coefficients ak and bk are defined as follows [25]:

where μk and δk denote the mean and variance of the guidance image in the window wk, and n denotes the regularization parameter. The filtered image is computed with the following equation:

where āi and b̄i denote the means of ak and bk over all windows that contain pixel i.
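The four relations described in this subsection are those of the guided image filter of He et al. [5]. Written in the notation used here (δk for the window variance of I and n for the regularization parameter), they usually take the form below. The symbols |w| (number of pixels in a window) and \bar{p}_k (mean of p in window w_k) do not appear in the text above and are introduced only for this sketch.

```latex
% Local linear model inside each window w_k
\[ q_i = a_k I_i + b_k, \qquad \forall\, i \in w_k \]

% Linear coefficients obtained by least squares with regularization n
\[ a_k = \frac{\dfrac{1}{|w|} \sum_{i \in w_k} I_i p_i - \mu_k \bar{p}_k}{\delta_k + n},
   \qquad b_k = \bar{p}_k - a_k \mu_k \]

% Output: average the coefficients of all windows covering pixel i
\[ q_i = \bar{a}_i I_i + \bar{b}_i, \qquad
   \bar{a}_i = \frac{1}{|w|} \sum_{k \in w_i} a_k, \quad
   \bar{b}_i = \frac{1}{|w|} \sum_{k \in w_i} b_k \]
```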

The enhanced image can be achieved by the formula:

where β is a parameter controlling the degree of enhancement.
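A minimal pure-Python sketch of this low-frequency step may help make the data flow concrete. It implements the guided filter of He et al. [5] with a plain box mean and then applies a detail boost controlled by β. The enhancement rule `beta * (p - q) + q`, the self-guidance choice I = p, the border handling, and all function names and default values other than β = 4 are assumptions made for illustration; the paper's own enhancement formula is not reproduced here.

```python
def box_mean(img, r):
    """Mean over each pixel's (2r+1)x(2r+1) window, clipped at the border."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            y0, y1 = max(0, y - r), min(h, y + r + 1)
            x0, x1 = max(0, x - r), min(w, x + r + 1)
            total = sum(img[yy][xx] for yy in range(y0, y1) for xx in range(x0, x1))
            out[y][x] = total / ((y1 - y0) * (x1 - x0))
    return out


def guided_filter(I, p, r=1, eps=1e-3):
    """Guided filter: I is the guidance image, p the input, eps the regularizer."""
    h, w = len(I), len(I[0])

    def mul(A, B):
        return [[A[y][x] * B[y][x] for x in range(w)] for y in range(h)]

    mean_I, mean_p = box_mean(I, r), box_mean(p, r)
    mean_Ip, mean_II = box_mean(mul(I, p), r), box_mean(mul(I, I), r)
    a = [[0.0] * w for _ in range(h)]
    b = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            var_I = mean_II[y][x] - mean_I[y][x] ** 2            # window variance of I
            cov_Ip = mean_Ip[y][x] - mean_I[y][x] * mean_p[y][x]  # window covariance
            a[y][x] = cov_Ip / (var_I + eps)
            b[y][x] = mean_p[y][x] - a[y][x] * mean_I[y][x]
    # Average the coefficients of all windows that cover each pixel.
    mean_a, mean_b = box_mean(a, r), box_mean(b, r)
    return [[mean_a[y][x] * I[y][x] + mean_b[y][x] for x in range(w)]
            for y in range(h)]


def enhance_low_frequency(p, beta=4.0, r=1, eps=1e-3):
    """Assumed enhancement rule: amplify the detail layer (p - q) by beta."""
    q = guided_filter(p, p, r, eps)  # self-guided filtering, an assumption
    return [[beta * (p[y][x] - q[y][x]) + q[y][x] for x in range(len(p[0]))]
            for y in range(len(p))]
```

On a constant image the filter returns the image unchanged, and with a very small regularization value it stays close to the input at a sharp step, which is the edge-preserving behaviour the section relies on. The nested loops are written for readability; an array library would normally replace them.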

3.2 Improved fuzzy contrast in high-frequency sub-bands

The high-frequency sub-bands contain detail information such as the edges and contours of the image, but they also contain most of the noise. By selecting an appropriate threshold, the noise can be suppressed as far as possible while the loss of detail information is kept small. Therefore, the improved fuzzy contrast is used to adjust the high-frequency coefficients of the image.

In the fuzzy algorithm, the membership function is constructed by the following equation [34]:

where Fp and Fe are the reciprocal and exponential fuzzy parameters, respectively, which affect the uncertainty of the fuzzy plane. T(·) denotes the membership transformation, which maps the gray values of an image to the fuzzy domain. di,j is the gray value of the current pixel, and dmax is the maximum gray value. Fp and Fe are defined as follows:

The generalized contrast enhancement operator is used to enhance μi,j, and the corresponding formula is:

where q is 2. The threshold a in Eq. (12) is usually set to 0.5, but a single fixed value is not reasonable for every image. In this paper, the Otsu method is applied to select the best threshold value. The Otsu algorithm divides the pixels of the image into two classes, C1 and C2, according to a gray threshold T. Class C1 consists of the pixels with gray values in [0, T], and class C2 consists of the pixels with gray values in [T + 1, 255]. The between-class variance of C1 and C2 is defined as follows [34]:

where W1(t) and W2(t) are the ratios of the number of pixels in C1 and C2 to the total number of pixels in the image, respectively; U1(t) and U2(t) are the average gray values of the pixels in C1 and C2, respectively. T is swept sequentially over [0, 255], and the value of T that maximizes the between-class variance is taken as the best threshold a.
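The exhaustive search described above can be sketched in a few lines of Python. The function below takes a gray-level histogram and returns the gray level T that maximizes the between-class variance, written here in the common product form W1 · W2 · (U1 − U2)², which is assumed to match the paper's expression. The function name is hypothetical, and the way T is rescaled to the membership-domain threshold a is not specified in the text, so it is left out.

```python
def otsu_threshold(hist):
    """Return the gray level T that maximizes the between-class variance.

    hist[g] is the number of pixels with gray level g.
    Class C1 covers levels [0, T]; class C2 covers [T + 1, len(hist) - 1].
    """
    total = sum(hist)
    total_sum = sum(g * n for g, n in enumerate(hist))
    best_t, best_var = 0, -1.0
    count1 = 0   # number of pixels in C1 so far
    sum1 = 0     # sum of gray levels in C1 so far
    for t in range(len(hist) - 1):
        count1 += hist[t]
        sum1 += t * hist[t]
        count2 = total - count1
        if count1 == 0 or count2 == 0:
            continue  # one class is empty, the variance is undefined
        w1, w2 = count1 / total, count2 / total
        u1, u2 = sum1 / count1, (total_sum - sum1) / count2
        var_between = w1 * w2 * (u1 - u2) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t
```

For a clearly bimodal histogram the returned level falls between the two modes; when several levels give the same variance, the smallest one is kept.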

Finally, the inverse transformation T⁻¹ is applied to the adjusted membership degree \( {\mu}_{ij}^{\prime} \), and the enhanced high-frequency coefficient Dij at the location (i, j) is obtained. The equation is described as follows:
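The three stages of this subsection (membership mapping, contrast operator, inverse mapping) can be illustrated with the short Python sketch below. Several things in it are assumptions and not taken from the paper: the membership function is written in the classical Pal-King form, the operator is a generalized intensification that reduces to the familiar 2μ² and 1 − 2(1 − μ)² pair for a = 0.5 and q = 2, the values Fp = 128 and Fe = 2 are placeholders because the paper's definitions of Fp and Fe are not available here, and the input is taken to be a non-negative magnitude in [0, dmax]. The final clipping at zero is also an added safeguard.

```python
def membership(d, d_max, fp, fe):
    """Map a value d in [0, d_max] to the fuzzy domain (Pal-King form, assumed)."""
    return (1.0 + (d_max - d) / fp) ** (-fe)


def contrast_operator(mu, a=0.5, q=2):
    """Generalized intensification around the threshold a (assumed form).

    Memberships below a are pushed down, memberships above a are pushed up.
    """
    if mu <= a:
        return a ** (1 - q) * mu ** q
    return 1.0 - (1.0 - a) ** (1 - q) * (1.0 - mu) ** q


def inverse_membership(mu, d_max, fp, fe):
    """Inverse of membership(): recover d from a membership degree mu > 0."""
    return d_max - fp * (mu ** (-1.0 / fe) - 1.0)


def fuzzy_enhance(d, d_max=255.0, fp=128.0, fe=2.0, a=0.5, q=2):
    """Apply membership mapping, contrast operator and inverse mapping to d."""
    mu = membership(d, d_max, fp, fe)
    mu_new = contrast_operator(mu, a, q)
    # The inverse mapping can fall below zero for small memberships,
    # so the result is clipped at 0 (a choice made for this sketch).
    return max(0.0, inverse_membership(mu_new, d_max, fp, fe))
```

Values whose membership lies above the threshold are raised and values below it are lowered, which is how small, noise-like coefficients get suppressed while strong details are kept. In the method described here the threshold a would come from the Otsu search instead of the default 0.5.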

3.3 Steps of the proposed method

Step 1: The input image is decomposed into a low-frequency component and some high-frequency components by NSST.

Step 2: The guided filter with Eqs. (4)–(8) is used to improve the contrast of the low-frequency component, and the high-frequency components are processed by the fuzzy contrast with Eqs. (9)–(14).

Step 3: The inverse NSST (INSST) is applied to the processed coefficients to obtain the reconstructed image, and the enhanced image is achieved.

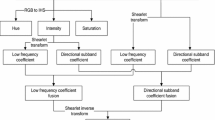

The flow chart of the proposed method is shown in Fig. 3.

4 Results and discussions

In this section, experiments on a large number of hyperspectral remote sensing images are carried out in Matlab 2016b. To verify the effectiveness of the proposed approach, it is compared with seven methods: traditional histogram equalization (HE) [47], image enhancement based on NSCT and unsharp masking (NSCT1) [29], remote sensing image enhancement based on NSST and guided filtering (NSST) [27], image enhancement based on mean filter and unsharp masking in the NSCT domain (NSCT2) [24], linking synaptic computation for image enhancement (LSCN) [43], image enhancement based on the nonsubsampled contourlet transform (NSCT3) [16], and image enhancement based on CLAHE and unsharp masking in the NSCT domain (NSCT4) [17]. We use both visual and objective indicators as evaluation criteria. The objective indicators selected in this paper are entropy (H) [18], measure of enhancement (EME) [20], structural similarity index metric (SSIM) [11, 30], average pixel intensity (API) [27], peak signal-to-noise ratio (PSNR) [1, 13], correlation coefficient (CC) [3], root mean square error (RMSE) [32], relative average spectral error (RASE) [31], and running time (s). The corresponding data are shown in Tables 1, 2, 3, 4 and 5. The higher the values of H, EME, SSIM, API, PSNR and CC, the better the enhancement effect; the lower the values of RMSE, RASE and running time, the better the enhancement effect. In this paper, β is set to 4; NSCT uses 3 decomposition levels with 8, 16 and 16 directions; NSST uses 4 decomposition levels with 1, 2, 4 and 8 directions.

4.1 Subjective assessment

Fig. 4 shows the enhancement results for Image 1, whose size is 512 × 512. Fig. 4(a) is the original image. Fig. 4(b) shows the image enhanced by HE, which is over-enhanced. Fig. 4(c) presents the result of NSCT1, which exhibits obvious distortion. The image enhanced by NSST is shown in Fig. 4(d), and it is also over-enhanced. Fig. 4(e) shows the result of NSCT2, whose contrast is a little low. Fig. 4(f) shows the result of LSCN, whose definition is a little low. Fig. 4(g) shows the image enhanced by NSCT3, in which some blurred areas appear. Fig. 4(h) depicts the result of NSCT4, which makes some regions too dark. Fig. 4(i) is the image enhanced by the proposed algorithm, and it shows an obvious enhancement effect.

Fig. 5 shows the enhancement results for Image 2, whose size is 512 × 512. Fig. 5(a) is the original image. Fig. 5(b) depicts the image enhanced by HE, which is over-enhanced in some regions. Fig. 5(c) is the result of NSCT1, which is too bright. Fig. 5(d) shows the image obtained by NSST, whose visual effect is not good. Fig. 5(e) shows the result of NSCT2, which is a little dark. Fig. 5(f) depicts the image obtained by LSCN, whose definition is relatively low. The image enhanced by NSCT3 is shown in Fig. 5(g), and it is distorted. Fig. 5(h) is the result of NSCT4. The result of the proposed technique is shown in Fig. 5(i), and it has moderate brightness and clear edges.

Fig. 6 shows the results for Image 3, whose size is 512 × 512. Fig. 6(a) presents the original image. Fig. 6(b) is the image enhanced by HE, which makes some regions too dark. Fig. 6(c) depicts the result of NSCT1, which is too bright. Fig. 6(d) shows the image enhanced by NSST, in which the distortion of details is very serious. Fig. 6(e) presents the result of NSCT2, and the image enhanced by LSCN is shown in Fig. 6(f). The results obtained by NSCT3 and NSCT4 are shown in Fig. 6(g)-(h), respectively. Fig. 6(i) depicts the image enhanced by the proposed approach, in which both the objects and the background are clearly visible.

Fig. 7 shows the results for Image 4, whose size is 512 × 512. Fig. 7(a) is the original image. Fig. 7(b) shows the image obtained by HE. Fig. 7(c)-(d) depict the results of NSCT1 and NSST, respectively, and the visual effects of these two images are rather poor. Fig. 7(e)-(f) are the images enhanced by NSCT2 and LSCN, respectively, and both achieve some enhancement effect. Fig. 7(g) shows the result of NSCT3, whose contrast is low. Fig. 7(h) is the image enhanced by NSCT4, which is over-enhanced. The result of the proposed algorithm is shown in Fig. 7(i), and it shows clear edge retention and contrast enhancement.

4.2 Objective assessment

The information entropy (H) is defined as the amount of information contained in an image, and it can be evaluated as follows:

where P(l) denotes the probability density of the image at gray level l, and L is the number of gray levels. A larger value of the information entropy indicates that more information content is available in the enhanced image.
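As a small worked example, the entropy of a gray-level image can be computed from its normalized histogram as in the Python sketch below. The base-2 logarithm (entropy in bits) is the usual convention and is assumed here, as are the function name and the flat-list input format.

```python
import math


def entropy(pixels, levels=256):
    """Shannon entropy in bits of a flat list of integer gray levels."""
    hist = [0] * levels
    for v in pixels:
        hist[v] += 1
    n = len(pixels)
    # Gray levels that never occur contribute nothing to the sum.
    return -sum((c / n) * math.log2(c / n) for c in hist if c)
```

An image with a single gray level has zero entropy, and an image that uses two gray levels equally often has an entropy of one bit.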

The measure of enhancement (EME) is described as follows:

where c is 0.0001. A higher EME indicates a better enhancement effect.

The structural similarity index metric (SSIM) reflects the degree of distortion of the image, and it is defined as follows:

where μx and μy denote the average values of x and y, respectively, and \( {\sigma}_x^2 \) and \( {\sigma}_y^2 \) denote the variances of x and y, respectively. c1 and c2 are constants, defined as follows:

where k1 and k2 are set to 0.01 and 0.03, respectively, and D is the dynamic range of the pixel values.
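The sketch below shows how these quantities combine in the widely used SSIM expression (2μxμy + c1)(2σxy + c2) / ((μx² + μy² + c1)(σx² + σy² + c2)) with c1 = (k1·D)² and c2 = (k2·D)². It is a simplified illustration: the statistics are computed once over the whole image instead of over sliding local windows, the covariance term σxy and the use of the n − 1 normalization follow the common definition and are assumptions with respect to this paper, and the function name is hypothetical.

```python
def ssim_global(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """Single-window SSIM of two equally long flat pixel lists (n >= 2)."""
    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((v - mu_x) ** 2 for v in x) / (n - 1)
    var_y = sum((v - mu_y) ** 2 for v in y) / (n - 1)
    cov_xy = sum((u - mu_x) * (v - mu_y) for u, v in zip(x, y)) / (n - 1)
    c1 = (k1 * dynamic_range) ** 2   # stabilizes the luminance term
    c2 = (k2 * dynamic_range) ** 2   # stabilizes the contrast/structure term
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return num / den
```

Comparing an image with itself gives a value of 1, while an image and its intensity-reversed copy give a negative value because their covariance is negative.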

The average pixel intensity (API) is the mean gray level of the image and serves as an index of contrast. It is defined as follows:

where M and N denote the size of the image, and I(i, j) is the pixel intensity at (i, j).

The peak signal-to-noise ratio (PSNR) reflects the denoising performance of the algorithm: the higher the PSNR, the better the noise suppression of the method. It is defined as follows:

where U denotes the maximum pixel value, and the root mean square error (RMSE) is defined as follows:

where fx,y and hx,y denote the pixels of the enhanced image and the original image, respectively.
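A compact Python illustration of these two metrics follows. It assumes the common forms RMSE = sqrt(mean((f − h)²)) and PSNR = 20·log10(U / RMSE), which is equivalent to 10·log10(U² / MSE); the function names, the nested-list image format and the default peak value U = 255 are choices made for this sketch.

```python
import math


def rmse(f, h):
    """Root mean square error between two images given as nested lists."""
    rows, cols = len(f), len(f[0])
    sq = sum((f[y][x] - h[y][x]) ** 2 for y in range(rows) for x in range(cols))
    return math.sqrt(sq / (rows * cols))


def psnr(f, h, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    e = rmse(f, h)
    return math.inf if e == 0 else 20.0 * math.log10(peak / e)
```

For instance, two images that differ everywhere by one tenth of the peak value have a PSNR of 20 dB.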

The correlation coefficient (CC) measures the similarity of small-scale structures between the original and the enhanced images. Values close to 1 indicate that the two images are highly similar. The corresponding equation is defined as follows:

where Q and P denote the enhanced image and the original image, respectively.
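The correlation coefficient is commonly computed as the Pearson correlation of the two images' pixel values, and the Python sketch below follows that convention as an assumption about the paper's formula. The function name and the flat-list input format are hypothetical, and a constant image (zero variance) is not handled.

```python
import math


def correlation_coefficient(q, p):
    """Pearson correlation between two equally long flat pixel lists."""
    n = len(q)
    mean_q = sum(q) / n
    mean_p = sum(p) / n
    num = sum((a - mean_q) * (b - mean_p) for a, b in zip(q, p))
    den = math.sqrt(sum((a - mean_q) ** 2 for a in q)
                    * sum((b - mean_p) ** 2 for b in p))
    return num / den  # raises ZeroDivisionError if either image is constant
```

Any positive linear change of brightness and contrast leaves the value at 1, which is why CC captures structural similarity and not absolute intensity.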

The relative average spectral error (RASE) is expressed as a percentage. It characterizes the average performance of the method over the given spectral bands, and it can be computed by:

where M denotes the mean radiance of the N original images Fi. The lower the error, the better the algorithm.

Tables 1, 2, 3 and 4 list the objective metrics of the proposed algorithm and the comparison approaches. Table 1 shows that the H, SSIM and RASE values achieved by the proposed technique are the best. Tables 2, 3 and 4 show that the metrics computed for the proposed technique have obvious advantages.

To further verify the effectiveness of the proposed algorithm, 50 hyperspectral remote sensing images of size 512 × 512 from the USC-SIPI Database are selected for simulation, and the average metrics are shown in Table 5. The data show that the proposed algorithm performs well in terms of H, SSIM, PSNR, RMSE and RASE compared with the other seven approaches. The EME and CC of the HE method are the best, and the corresponding values of the proposed algorithm rank third. The API of the NSCT1 algorithm is the best, and that of the proposed technique ranks third. The running time of HE is the shortest; the proposed method ranks second, so its computational efficiency is relatively high. These results indicate that the proposed algorithm enhances hyperspectral remote sensing images effectively and with satisfactory results.

5 Conclusions

In this paper, a hyperspectral remote sensing image enhancement method based on NSST is proposed, and it achieves good performance in both subjective and objective terms. We mainly process the decomposition coefficients of the NSST and make full use of the advantages of the guided filter and fuzzy contrast in image processing. The main steps of the algorithm are as follows. First, the input image is decomposed into one low-frequency component and several high-frequency components by NSST. Second, the guided filter is applied to enhance the contrast of the low-frequency component, and the improved fuzzy contrast is adopted to suppress the noise of the high-frequency components. Third, the inverse NSST is used to reconstruct the image, and the enhanced image is obtained. Simulation results show that the proposed approach outperforms the other state-of-the-art techniques in visual quality assessment, and the experimental data compare favorably with those of the previous methods. However, some parameter values in this algorithm still need to be determined empirically. Future research will focus on the adaptability of the method.

References

Abazari R, Lakestani M (2018) A hybrid denoising algorithm based on shearlet transform method and Yaroslavsky’s filter. Multimed Tools Appl 77(14):17829–17851

Ancuti CO, Ancuti C, De CV (2018) Color balance and fusion for underwater image enhancement. IEEE Trans Image Process 27(1):379–393

Chavan SS, Mahajan A, Talbar SN (2017) Nonsubsampled rotated complex wavelet transform (NSRCxWT) for medical image fusion related to clinical aspects in neurocysticercosis. Comput Biol Med 81:64–78

Da CA, Zhou J, Do MN (2006) The nonsubsampled contourlet transform: theory, design, and applications. IEEE Trans Image Process 15(10):3089–3101

He K, Sun J, Tang X (2013) Guided image filtering. IEEE Trans Pattern Anal Mach Intell 35(6):1397–1409

Huang Z, Ding M, Zhang X (2017) Medical image fusion based on non-subsampled shearlet transform and spiking cortical model. J Med Imaging Health Inf 7(1):229–234

Jafari S, Ghofrani S (2016) Using two coefficients modeling of nonsubsampled shearlet transform for despeckling. J Appl Remote Sens 10(1):015002

Ji X, Zhang G (2017) Contourlet domain SAR image de-speckling via self-snake diffusion and sparse representation. Multimed Tools Appl 76(4):5873–5887

Joseph J, Periyasamy R (2018) A fully customized enhancement scheme for controlling brightness error and contrast in magnetic resonance images. Biomed Signal Processing Control 39:271–283

Kallel F, Sahnoun M (2018) CT scan contrast enhancement using singular value decomposition and adaptive gamma correction. SIViP 12(5):905–913

Li L, Si Y (2018) Enhancement of medical images based on guided filter in nonsubsampled shearlet transform domain. J Med Imaging Health Inf 8(6):1207–1216

Li Q, Jia Z, Qin X (2014) A novel remote sensing image enhancement method based on NSCT. Inf Technol J 13(1):153–158

Li L, Jia Z, Yang J (2016) Noisy remote sensing image segmentation with wavelet shrinkage and graph cuts. J Indian Soc Remote Sens 44(6):995–1002

Li L, Si Y, Jia Z (2017) Remote sensing image enhancement based on non-local means filter in NSCT domain. Algorithms 10(4):116

Li L, Si Y, Jia Z (2017) Remote sensing image enhancement based on adaptive thresholding in NSCT domain. Proceedings of 2nd International Conference on Image, Vision and Computing (ICIVC-2017) pp.319–322

Li L, Si Y, Jia Z (2018) A novel brain image enhancement method based on nonsubsampled contourlet transform. Int J Imaging Syst Technol 28(2):124–131

Li L, Si Y, Jia Z (2018) Medical image enhancement based on CLAHE and unsharp masking in NSCT domain. J Med Imaging Health Inf 8(3):431–438

Li L, Si Y, Jia Z (2018) Microscopy mineral image enhancement based on improved adaptive threshold in nonsubsampled shearlet transform domain. AIP Adv 8(3):035002

Liu Y, Nie L, Han L (2015) Action2Activity: recognizing complex activities from sensor data. Proceedings of the 24th International Joint Conference on Artificial Intelligence (IJCAI-2015) pp. 1617–1623

Liu L, Jia Z, Yang J (2015) A medical image enhancement method using adaptive thresholding in NSCT domain combined unsharp masking. Int J Imaging Syst Technol 25(3):199–205

Liu Y, Nie L, Liu L (2016) From action to activity: sensor-based activity recognition. Neurocomputing 181:108–115

Liu Y, Zhang L, Nie L (2016) Fortune teller: Predicting your career path. Proceedings of the 30th AAAI Conference on Artificial Intelligence (AAAI-2016) pp. 201–207

Liu J, Zhou C, Chen P (2017) An efficient contrast enhancement method for remote sensing images. IEEE Geosci Remote Sens Lett 14(10):1715–1719

Liu L, Jia Z, Yang J (2017) A remote sensing image enhancement method using mean filter and unsharp masking in non-subsampled contourlet transform domain. Trans Inst Meas Control 39(2):183–193

Liu Z, Feng Y, Chen H (2017) A fusion algorithm for infrared and visible based on guided filtering and phase congruency in NSST domain. Opt Lasers Eng 97:71–77

Luo X, Zhang Z, Zhang B (2017) Image fusion with contextual statistical similarity and nonsubsampled shearlet transform. IEEE Sensors J 17(6):1760–1771

Lv D, Jia Z, Yang J (2016) Remote sensing image enhancement based on the combination of nonsubsampled shearlet transform and guided filtering. Opt Eng 55(10):103104

Math SSP, Kaliyaperumal V (2017) Enhancement of SAR images using fuzzy shrinkage technique in curvelet domain. Sādhanā 42(9):1505–1512

Pu X, Jia Z, Wang L (2014) The remote sensing image enhancement based on nonsubsampled contourlet transform and unsharp masking. Concurr Comput Pract Experience 26(3):742–747

Quevedo E, Delory E, Callico GM (2017) Underwater video enhancement using multi-camera super-resolution. Opt Commun 404:94–102

Ren R, Gu L, Fu H (2017) Super-resolution algorithm based on sparse representation and wavelet preprocessing for remote sensing imagery. J Appl Remote Sens 11:026014

Sharif M, Hussain A, Jaffar MA (2015) Fuzzy similarity based non local means filter for Rician noise removal. Multimed Tools Appl 74(15):5533–5556

Singh P, Raman B, Misra M (2018) Just process me, without knowing me: a secure encrypted domain processing based on Shamir secret sharing and POB number system. Multimed Tools Appl 77(10):12581–12605

Tao F, Wu Y (2015) Remote sensing image enhancement based on non-subsampled shearlet transform and parameterized logarithmic image processing model. Acta Geodaet Et Cartographica Sin 44(8):884–892

Wang Y, Pan Z (2017) Image contrast enhancement using adjacent-blocks-based modification for local histogram equalization. Infrared Phys Technol 86:59–65

Wang C, Ye Z (2005) Brightness preserving histogram equalization with maximum entropy: a variational perspective. IEEE Trans Consum Electron 51(4):1326–1334

Wang J, Jia Z, Qin X (2015) Medical image enhancement algorithm based on NSCT and the improved fuzzy contrast. Int J Imaging Syst Technol 25(1):7–14

Wang X, Liu Y, Zhang N (2015) An edge-preserving adaptive image denoising. Multimed Tools Appl 74(24):11703–11720

Wu Y, Shi J (2015) Image enhancement in non-subsampled contourlet transform domain based on multi-scale retinex. Acta Opt Sin 35(3):79–88

Wu Y, Meng T, Wu S (2015) Adaptive image enhancement based on NSST and constraint of human eye perception information fidelity. J Optoelectron Laser 26(5):978–985

Wu C, Liu Z, Jiang H (2017) Choosing the filter for catenary image enhancement method based on the non-subsampled contourlet transform. Rev Sci Instrum 88(5):054701

Yang B, Jia Z, Qin X (2013) Remote sensing image enhancement based on shearlet transform. J Optoelectron Laser 24(11):2249–2253

Zhan K, Shi J, Teng J (2017) Linking synaptic computation for image enhancement. Neurocomputing 238:1–12

Zhang Q, Maldague X (2017) Multisensor image fusion approach utilizing hybrid pre-enhancement and double nonsubsampled contourlet transform. J Electron Imaging 26(1):010501

Zhang J, Geng W, Liang X (2017) Hyperspectral remote sensing image retrieval system using spectral and texture features. Appl Opt 56(16):4785–4796

Zhang Q, Shen S, Su X (2017) A novel method of medical image enhancement based on wavelet decomposition. Autom Control Comput Sci 51(4):263–269

Zhou S, Zhang F, Siddique MA (2015) Range limited peak-separate fuzzy histogram equalization for image contrast enhancement. Multimed Tools Appl 74(17):6827–6847

Acknowledgments

We thank all the volunteers and colleagues who provided helpful comments on previous versions of the manuscript. The experimental measurements and data collection were carried out by Liangliang Li and Yujuan Si. The manuscript was written by Liangliang Li with the assistance of Yujuan Si. We would like to thank Prof. Yujuan Si for her contributions in proofreading the paper. This work was supported by the Key Scientific and Technological Research Project of Jilin Province under Grant Nos. 20150204039GX and 20170414017GH; the Natural Science Foundation of Guangdong Province under Grant No. 2016A030313658; the Innovation and Strengthening School Project (provincial key platform and major scientific research project) supported by Guangdong Government under Grant No. 2015KTSCX175; the Premier-Discipline Enhancement Scheme Supported by Zhuhai Government under Grant No. 2015YXXK02-2; the Premier Key-Discipline Enhancement Scheme Supported by Guangdong Government Funds under Grant No. 2016GDYSZDXK036.

Cite this article

Li, L., Si, Y. Enhancement of hyperspectral remote sensing images based on improved fuzzy contrast in nonsubsampled shearlet transform domain. Multimed Tools Appl 78, 18077–18094 (2019). https://doi.org/10.1007/s11042-019-7203-6
