Abstract

Machines can recognise skin lesion images, and the underlying disease, comparably to an experienced dermatologist by assigning a proper label to each image. In the proposed study, the researchers examined various frameworks for skin cancer detection and melanoma classification. The research includes a unique image pre-processing and enhancement technique followed by image segmentation. The 23 texture and ten shape features of the dataset are further refined with feature engineering techniques. The improved dataset is then processed by Deep Neural Network (DNN) models trained with binary cross-entropy, passing through several combinations of activation layers with varying features and optimisation techniques. As an outcome, the researchers selected a useful, time-saving model that classifies an image as either melanoma or naevus. The model was evaluated with 170 images from MED-NODE and 2000 images from the ISIC dataset. The improved framework achieves an accuracy of 96.8% within a small number of epochs, compared with 12 other machine learning models and five deep learning models. Future work can investigate several classes of skin cancer with an improved dataset.


1 Introduction

Skin cancer can occur at any stage of life; moreover, it can spread to other internal tissues and damage the DNA of their cells. Exposure to ultraviolet (UV) radiation is the primary cause, along with ozone-layer depletion, chemical exposure and genetic factors. Skin cancer takes many forms, of which the most commonly studied are deadly melanoma and naevus (non-melanoma) [42]. Melanoma kills more than ten thousand people in the United States every year, and together with other forms of skin malignancy it leads to more than three million clinical procedures on skin lesions annually in the U.S.A. [9]. In the modern era, over 6% of the US population is affected by this disease. This fatal disease needs to be detected at the earliest possible stage to avoid high treatment costs and risks. The National Institute of Biomedical Imaging and Bioengineering has established a non-invasive imaging procedure that precisely spots cancer without surgery. There are many invasive and expensive methods to determine the stage of the disease, but the best-known technique is dermoscopy. It is a non-invasive approach that requires only an image of the lesion. It supports the early diagnosis of melanoma and uses illuminated magnification to analyse skin lesions. It should be performed by skin specialists with at least five years of experience, and roughly 30% of the diagnostic outcome depends on the skill of these specialists. The need for experienced skin specialists is much greater in low socio-economic nations, and the absence of proper equipment to assess melanoma, together with a lack of awareness of the condition, can lead to more damage in less populated regions. There is therefore a strong need for Computer Aided Diagnosis (CAD) to detect melanoma early [14]. Researchers have evaluated classification schemes that detect melanoma with machine learning or deep learning models.
These approaches used structure-based features, ABCD clinical features or shape attributes. Enrique V. Carrera et al. [2] used an optimised SVM classifier and reported an accuracy of 73% (748 images). A classification model by J. Premaladha et al. [25], combining a hybrid AdaBoost and Support Vector Machine (SVM) with a DNN, was tested and validated on 992 images of malignant and benign lesions and gave an accuracy of 93%. E. Nasr-Esfahani et al. [23] built a Convolutional Neural Network (CNN) and obtained 81% accuracy on 170 images. A CNN was also used by Nayara Moura et al. [22] with ABCD descriptors, reaching an accuracy of 94.9% (814 images). Afonso Menegola [20] used a VGG-16 network to obtain an accuracy of 84.5% (900 images). Researchers have worked towards a correct framework for analysing the disease, but its complexity means the problem still requires attention. A melanoma detection tool may determine the elevation of the lesion and also compile the ABCD information of the mole. These features comprise (1) the symmetry of the lesion (A), (2) the irregularity of the border (B), (3) the number of shades of pigment colour (C) and (4) the diameter (D) of the lesion, with 6 mm as the reference size [22]. Apart from the papers cited above, other works are reviewed in the related work section. In the current study, the researchers have gathered the factors from these studies and worked to improve them. The novelty of this study is as follows: (I) It uses a larger dataset than earlier studies, with 1070 melanoma images and 1100 naevus images. (II) The study deals mainly with image pre-processing, which is composed of intensity normalization, a modified anisotropic diffusion function and a sigmoidal function.
It is faster than the typical 8-neighbourhood Anisotropic Diffusion Filter (ADF), because the present researchers have reduced it to a 4-neighbourhood operation. The method was tested against the source images with the Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index (SSIM) and Mean Square Error (MSE), confirming that it preserves essential information such as edges and colour. The skin lesion must be properly isolated from the background to obtain accurate shape information. Several segmentation methods were implemented, and the segmented lesions were evaluated with the Jaccard index, shape match score, global consistency error and other metrics. Past classification studies made little effort towards proper data refinement techniques. (III) The study also applies different image segmentation techniques and tests them on skin lesion images. (IV) The present researchers have applied the pre-processed dataset, with adequate class variance, to the suggested deep neural network model. The robust dataset performs well with combinations of advanced and typical non-linear activation functions and different sizes of hidden layers.

The motivation for this research comes from recent work on optimisation and hyper-parameter tuning of deep learning models [34, 35, 45], and the present research incorporates those ideas. The suggested framework includes unique image preprocessing, segmentation, feature extraction and dataset preparation. Finally, it classifies the diseases with several Artificial Intelligence (AI) based classification methods. The introduction presents relevant information and existing reports on the detection of melanoma. Section 2 gathers and evaluates a few current studies. The suggested framework and its phases are described in Section 3, whose sub-sections also examine different hyper-parameters of the suggested solution. Section 4 reports the experiments and discusses the comparisons and their impact. Section 5 summarises the work, together with its limitations and the future scope of the research. Finally, Section 6 highlights the findings and reports the outcome of the investigation.

2 Related work

2.1 Data acquisition

There can be various stages in detecting melanoma with an AI-based model. Skin cancer images may be collected from several data sources, such as the MED-NODE [7], ISIC [6] and PH2 [24] databases, as mentioned in various studies.

2.2 Image adjustment and lesion segmentation

The acquired images may contain lighting distortions and undesired objects, which need to be reduced before further processing. Identification of melanoma therefore starts with pre-processing the lesion images and segmenting the affected region. Skin cancer images contain three layers of information, and information extracted from the HSV (Hue, Saturation, Value) layers was effective in the study of [23], a GPU-based computer aided system that tried to detect melanoma from 170 MED-NODE images. Six sigma methods can be used to segment the lesion, as shown in [31]. In [43], the designed framework provides a solution for dermoscopic image segmentation using deep convolutional learning with a pixel-wise labelling technique, and it segmented correctly even in the presence of hair and air/oil bubbles. In [1], researchers emphasised precise segmentation: the proposed full resolution convolutional network (FrCN) directly learns full-resolution features from each individual pixel of the input and segmented correctly in almost 90% of cases. Mishra et al. [21] also observed that viewer bias can affect the evaluation of dermoscopy images when establishing a CAD system, and they used a deep learning technique for automated skin lesion segmentation.

2.3 Feature learning and classification through DNN

Zhang et al. [44] recommended automated evaluation of image features through a deep learning model. A convolutional neural network (CNN) was applied to local regions to identify feasible high-level image characteristics, and the resulting region-based unsupervised feature learning model achieved an accuracy of 81.75%. Yu et al. [46] worked on a framework that addresses the high class variation between melanoma and non-melanoma; local deep descriptors were encoded and classified with a Support Vector Machine (SVM), giving an accuracy of 86%. Google's Inception v4 CNN architecture was trained and validated using dermoscopic images and the corresponding diagnoses [12], and its test set of 100 images was cross-checked by dermatologists in a reader study. The mean sensitivity and specificity of the skilled dermatologists for lesion classification were assessed, and the ROC AUC of the CNN was higher than the mean ROC area of the dermatologists. Salido et al. [28] evaluated their DNN classifier using both pre-processed and source images from the PH2 dataset, obtaining an accuracy of 86% to 94%. Dorj et al. [8] conducted another deep learning study, using a deep convolutional neural network with an Error-Correcting Output Codes (ECOC) SVM to categorise skin cancers such as actinic keratoses, BCC, SCC and melanoma. A pre-trained AlexNet model was used to extract features from 3500 colour images of skin cancers. The reported accuracies were 95.1% for melanoma, 98.9% for SCC and 94.17% for BCC.

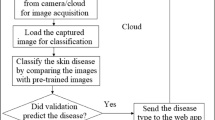

3 Proposed solution

As discussed earlier, a CAD system for melanoma detection can have various phases. The system should find the best feasible way to obtain a better solution than former works through improved methodology, so it needs to incorporate various image enhancement, segmentation and classification techniques. We have already discussed different deep learning methods for classifying melanoma and naevus images; the analysis of features through an AI-based learning model is the centre of the study. The images suffer from noise and low lighting, which have been improved through the proposed preprocessing method. It improves the texture of the image and helps to darken the perimeter of the lesion. A segmentation method such as the active contour model is exceptional when only two contrasting areas exist in the image. Melanoma and naevus look similar to the naked eye, but a few characteristics of texture, colour and shape differentiate them from each other. An effective model requires a large dataset, and we have obtained 2170 dermoscopy images. This brings considerable variation in class features. Sometimes, new features need to be generated to readjust the variance between feature classes. The framework [39, 40] uses a polynomial feature generator that expands the measured data, and the dimension of the feature vector is then reduced with Principal Component Analysis (PCA). The refined dataset was compared against the traditional dataset. The optimised dataset was used as training and validation input for many supervised learning models [38] as well as deep neural network models. The proposed method is shown in Fig. 1 and Table 1.

3.1 Image processing

Understanding the nature of the image [47] is one of the vital tasks for skin experts and in the feature extraction process. Melanoma and other lesion images taken by digital equipment may be further processed with specific preprocessing techniques. Low-contrast images need to be fine-tuned such that the alteration does not change the characteristics of the lesion. The colour, boundary and texture properties of the images are crucial [16, 36] for image pattern analysis and for identifying the shape of the lesion when classifying melanoma.

3.1.1 Contrast enhancement

The intensity of the skin lesion image can be improved through an image normalization technique. The enhanced image carries the relevant information of the skin lesion, which helps when it is processed for segmentation.

Here, norm(I)(x, y) is the normalized intensity of the grey-level pixels, αd is the desired average grey value of the image, νd is the desired variance of the intensity, α is the average intensity value of the grayscale image, and ν is the variance of its intensity.
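The normalization equation itself is not reproduced in this text. A minimal NumPy sketch, assuming the widely used mean-variance form that fits the symbols above, norm(I)(x, y) = αd ± sqrt(νd (I(x, y) - α)² / ν), with the plus sign when I(x, y) > α and the minus sign otherwise. The parameters `target_mean` and `target_var` stand in for αd and νd; their default values are illustrative assumptions, not the paper's settings.

```python
import numpy as np

def normalize_intensity(img, target_mean=128.0, target_var=1000.0):
    """Mean-variance normalization of a grey-level image (assumed form).

    Pixels above the image mean are mapped above target_mean and pixels below
    it are mapped below, so the output has mean alpha_d and variance nu_d.
    """
    img = np.asarray(img, dtype=np.float64)
    alpha = img.mean()  # alpha: mean intensity of the input image
    nu = img.var()      # nu: intensity variance of the input image
    if nu == 0:
        # A constant image carries no contrast; return it at the target mean.
        return np.full_like(img, target_mean)
    delta = np.sqrt(target_var * (img - alpha) ** 2 / nu)
    return np.where(img > alpha, target_mean + delta, target_mean - delta)
```

Because the mapping is an affine rescaling of I(x, y) - α, the output mean and variance equal the chosen targets exactly, regardless of the input's original contrast.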

The noise-removing anisotropic diffusion filter is useful for preserving the edges of the lesion while blurring the background. Its disadvantage is that its kernel is slower to process than those of other filters such as the median or Gaussian filter. We reduced the kernel operation and used a novel modified anisotropic diffusion function together with image normalisation and a sigmoidal function [32].

Here, we have modified the original anisotropic diffusion function, passed it through a selected conduction function and calculated the gradient at the pixel boundaries. The eight-neighbourhood kernel function was converted into a four-neighbourhood one, as shown in Fig. 2. In eq. 3, \( t_{north,south} \) is the threshold calculated for the north-south region of the kernel (Table 2).
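To illustrate what a 4-neighbourhood diffusion step looks like, here is a minimal sketch of the classic Perona-Malik scheme with an exponential conduction function. It is not the authors' modified filter (which uses per-orientation thresholds); `kappa` (gradient threshold), `gamma` (step size) and `n_iter` are illustrative assumptions.

```python
import numpy as np

def anisotropic_diffusion(img, n_iter=10, kappa=20.0, gamma=0.2):
    """Classic Perona-Malik diffusion using only the N, S, E and W neighbours.

    kappa is the gradient threshold (conduction parameter) and gamma the step
    size; gamma <= 0.25 keeps this explicit 4-neighbour scheme stable.
    """
    u = np.asarray(img, dtype=np.float64).copy()
    for _ in range(n_iter):
        # Edge padding gives zero flux across the image border.
        p = np.pad(u, 1, mode="edge")
        north = p[:-2, 1:-1] - u
        south = p[2:, 1:-1] - u
        east = p[1:-1, 2:] - u
        west = p[1:-1, :-2] - u
        # Exponential conduction: close to 1 for small gradients (noise),
        # close to 0 for gradients well above kappa (edges), so edges are kept.
        flux = sum(np.exp(-(d / kappa) ** 2) * d for d in (north, south, east, west))
        u += gamma * flux
    return u
```

Each pixel needs only four differences per iteration instead of eight, which is the source of the speed-up the text attributes to the 4-neighbourhood variant.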

Here, \( \sigma_{north}^2 \) and \( \sigma_{south}^2 \) are the variances of the gradient image in the north and south 3 × 3 regions, \( \mu_{north} \) and \( \mu_{south} \) are the means of the gradient image in the north and south 3 × 3 regions, and \( P_{north} \) and \( P_{south} \) are the prior probabilities that a pixel belongs to the north and south 3 × 3 regions of the gradient image, respectively. As a result, the computation reduces to a single threshold for the north-south pair of orientations. Likewise, it can be applied to the other pairs of orientations, NE-SW, E-W and SE-NW, so the computation reduces to four thresholds. The estimated gradient threshold parameter was used to meet the iteration stopping criterion, and the quality of the skin lesion image was assessed with the Peak Signal-to-Noise Ratio.
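The equation for \( t_{north,south} \) is not reproduced here. Since it is built from two means, two variances and two priors, one standard way to turn such quantities into a single threshold is the Bayes minimum-error boundary between two Gaussian classes. The sketch below uses that technique as an assumed stand-in, not as the paper's exact formula.

```python
import math

def two_class_threshold(mu1, var1, p1, mu2, var2, p2):
    """Grey level t where p1*N(t; mu1, var1) equals p2*N(t; mu2, var2).

    This is the Bayes minimum-error boundary between two Gaussian classes,
    used here as an assumed stand-in for the north-south threshold.
    """
    s1, s2 = math.sqrt(var1), math.sqrt(var2)
    # (t-mu2)^2/var2 - (t-mu1)^2/var1 = 2 ln(p2*s1 / (p1*s2)), written as a*t^2 + b*t + c = 0.
    a = 1.0 / var2 - 1.0 / var1
    b = 2.0 * mu1 / var1 - 2.0 * mu2 / var2
    c = mu2 ** 2 / var2 - mu1 ** 2 / var1 - 2.0 * math.log(p2 * s1 / (p1 * s2))
    if abs(a) < 1e-12:
        return -c / b  # equal variances: the boundary is linear in t
    disc = math.sqrt(max(b * b - 4 * a * c, 0.0))
    roots = [(-b + disc) / (2 * a), (-b - disc) / (2 * a)]
    lo, hi = min(mu1, mu2), max(mu1, mu2)
    between = [r for r in roots if lo <= r <= hi]
    # Prefer the root lying between the two means, else the one nearest their midpoint.
    mid = (mu1 + mu2) / 2
    return min(between or roots, key=lambda r: abs(r - mid))
```

With equal variances and equal priors the threshold falls exactly midway between the two means; a larger prior for one region pushes the threshold towards the other region's mean.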

This approach stabilises the intensity values of the pixels. The stabilised intensity is used in subsequent operations, and the magnitude in the interior regions is calculated through the conduction function. The gradient threshold parameter controls the rate of conduction: with a suitably selected threshold S, gradients below the threshold (mostly noise) are smoothed away while stronger edges are preserved. The gradient threshold is an essential parameter because it operates on the boundary of the skin lesion. Noise removal is sensitive to the number of iterations, which must be managed accordingly. The choice of the number of iterations of the AD scheme is crucial, since too many iterations may smudge the border while too few may leave noise unfiltered. The optimal threshold time (T) is related to the number of iterations that keeps the PSNR [13] within its normal range. For that reason, the minimum threshold time Tmin should be approximated for every filtered version of the noisy image, where img0 is the first-iteration image and imgt is the image at the t-th iteration.
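A minimal sketch of the PSNR computation, plus a hypothetical stopping rule that keeps iterating while PSNR(img0, imgt) stays above a floor. The function name `last_iteration_above` and the 30 dB floor are illustrative assumptions; the text does not give the exact criterion.

```python
import numpy as np

def psnr(reference, image, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    reference = np.asarray(reference, dtype=np.float64)
    image = np.asarray(image, dtype=np.float64)
    mse = np.mean((reference - image) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

def last_iteration_above(frames, floor_db=30.0):
    """Hypothetical rule: last t whose PSNR(img_0, img_t) is still >= floor_db.

    frames[0] is img_0 and frames[t] is the image after t diffusion iterations.
    """
    best = 0
    for t in range(1, len(frames)):
        if psnr(frames[0], frames[t]) < floor_db:
            break
        best = t
    return best
```

As diffusion proceeds, imgt drifts away from img0 and the PSNR falls, so a floor on PSNR translates directly into an upper bound on the number of iterations.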

Here, the enhanced image pixels E(i, j) are generated from the above-mentioned equations.
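The sigmoidal function mentioned above is not written out in the text. A minimal sketch of a common sigmoid contrast mapping, assuming the image is first rescaled to [0, 1]; `cutoff` (the grey level mapped to 0.5) and `gain` (the steepness) are illustrative assumptions.

```python
import numpy as np

def sigmoid_contrast(img, cutoff=0.5, gain=10.0):
    """Sigmoid contrast mapping of an image min-max rescaled to [0, 1]."""
    img = np.asarray(img, dtype=np.float64)
    lo, hi = img.min(), img.max()
    scaled = (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)
    # Values below cutoff are pushed towards 0 and values above it towards 1.
    return 1.0 / (1.0 + np.exp(gain * (cutoff - scaled)))
```

A higher `gain` sharpens the separation between dark lesion pixels and brighter skin, which is the property that helps darken the lesion perimeter before segmentation.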

3.1.2 Lesion mask generation

The preprocessed images have distinct boundaries and contrast between the two regions. Different segmentation techniques were evaluated on randomly sampled images. These methods are mainly border-based, threshold-based, region-based or partial differential equation (PDE) based, such as Otsu, superpixel, K-means, Fuzzy C-Means and active contour. Their performance was critically validated by measuring the similarity of pixel assignments with the Jaccard Index, Global Consistency Error, Probabilistic Rand Index, Variation of Information, contour shape matching and object identification. The Active Contour Model (ACM) segmentation technique [18, 26] performed well in the majority of cases at preserving the boundaries. A concise survey was carried out to separate the skin cancer lesion and its shape from the uncontrolled and noisy surroundings. The binary mask of a melanoma or naevus lesion may contain blobs or holes in some situations. We identified the parameter combination giving the best achievable segmentation accuracy and used a flood fill algorithm to fill these regions. We selected the most relevant techniques, whose output resembled manual segmentation in most cases.
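Two of the steps above can be sketched compactly: the Jaccard index between two binary masks, and hole filling by flood-filling the background from the image border (anything the fill cannot reach is treated as part of the lesion). The 4-connectivity choice is an assumption; the text does not specify it.

```python
from collections import deque
import numpy as np

def jaccard_index(mask_a, mask_b):
    """Intersection over union of two binary masks (1.0 when both are empty)."""
    a, b = np.asarray(mask_a, bool), np.asarray(mask_b, bool)
    union = np.logical_or(a, b).sum()
    return 1.0 if union == 0 else np.logical_and(a, b).sum() / union

def fill_holes(mask):
    """Fill background regions not connected to the image border (4-connectivity)."""
    mask = np.asarray(mask, bool)
    h, w = mask.shape
    outside = np.zeros_like(mask)
    # Seed the flood fill with every background pixel on the border.
    queue = deque((r, c) for r in range(h) for c in range(w)
                  if (r in (0, h - 1) or c in (0, w - 1)) and not mask[r, c])
    for r, c in queue:
        outside[r, c] = True
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < h and 0 <= nc < w and not mask[nr, nc] and not outside[nr, nc]:
                outside[nr, nc] = True
                queue.append((nr, nc))
    # Every pixel not reached from the border belongs to the filled lesion mask.
    return ~outside
```

For example, a lesion mask with a one-pixel hole in its interior is returned with the hole filled, and its Jaccard index against the filled mask is 24/25 when the lesion covers 25 pixels.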

3.2 Selection of features

Extracting patterns for the two class labels starts with a problem-solving approach that combines several methods to obtain a robust dataset with proper class variance. Skin lesion images can be analysed based on texture and shape attributes [27]. Texture is a low-level feature that can be used to describe the structure of the skin lesion area; two images may have comparable histograms yet differ in their texture features. We tried various feature extraction methods [29], such as colour, wavelets and local binary patterns. We made an initial combination of Haralick co-occurrence matrix features [19], a collection of 22 attributes. Shape is one of the vital features for these classes, so 12 shape measurements [41] were included in the final dataset. The 34 features were further tuned and optimised [3, 4] to distinguish between the texture features of the training classes. The feature fields are listed below:

- Haralick co-occurrence matrix features (22 features): Autocorrelation, Contrast, Correlation, Cluster Prominence, Cluster Shade, Dissimilarity, Energy, Entropy, Homogeneity, Maximum probability, Sum of squares: Variance, Sum average, Sum variance, Sum entropy, Difference variance, Difference entropy, Information measure of correlation 1, Information measure of correlation 2, Inverse difference (INV), Inverse difference normalized (INN), Inverse difference moment normalized.

- Shape features (12 features): Orientation, Solidity, Perimeter, Minor axis length, Major axis length, Filled area, Area, Convex area, Equivalent diameter, Extent, Asymmetric index, Circularity index.
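A minimal NumPy sketch of how a few of the listed features can be computed: a symmetric, normalized grey-level co-occurrence matrix (GLCM) for a single pixel offset, six of the Haralick features above, and the circularity index 4πA/P². The offset, the number of grey levels, and the particular feature definitions are assumptions; definitions vary between libraries (for example, some report energy as the square root of the value below).

```python
import numpy as np

def glcm(img, levels=8, offset=(0, 1)):
    """Symmetric, normalized GLCM for one non-negative pixel offset.

    img must contain integer grey levels in the range [0, levels).
    """
    img = np.asarray(img)
    dr, dc = offset
    h, w = img.shape
    # Pair each pixel with its neighbour at (dr, dc).
    a = img[: h - dr, : w - dc].ravel()
    b = img[dr:, dc:].ravel()
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (a, b), 1)
    m = m + m.T  # count each pair in both directions
    return m / m.sum()

def haralick_subset(p):
    """Six of the Haralick features named in the list above."""
    i, j = np.indices(p.shape)
    mu_i, mu_j = (i * p).sum(), (j * p).sum()
    nz = p[p > 0]
    return {
        "contrast": ((i - j) ** 2 * p).sum(),
        "energy": (p ** 2).sum(),  # angular second moment
        "homogeneity": (p / (1.0 + (i - j) ** 2)).sum(),
        "entropy": -(nz * np.log2(nz)).sum(),
        "cluster_shade": ((i + j - mu_i - mu_j) ** 3 * p).sum(),
        "cluster_prominence": ((i + j - mu_i - mu_j) ** 4 * p).sum(),
    }

def circularity(area, perimeter):
    """Circularity index 4*pi*A / P**2 (1.0 for a perfect circle)."""
    return 4.0 * np.pi * area / perimeter ** 2
```

A perfectly uniform patch yields zero contrast and entropy and an energy of 1, while an image whose columns alternate between grey levels 0 and 3 yields a horizontal contrast of 9.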

3.3 Feature scaling

The feature dataset can be redefined according to the requirements of various artificial intelligence algorithms [17]. In some cases, the attributes need to be standardised so that each behaves like normally distributed data with unit variance. We applied a transformation that centres each feature on its mean and scales each non-constant feature by its standard deviation, because the features have widely different magnitudes and units. This feature-wise scaling was carried out for all features.
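The feature-wise standardisation described above can be sketched as follows. Returning the training mean and standard deviation so that the validation split can reuse them is a design assumption, not something the text specifies.

```python
import numpy as np

def standardize(X):
    """Column-wise z-scores; constant columns are only centred, not divided."""
    X = np.asarray(X, dtype=np.float64)
    mean = X.mean(axis=0)
    std = X.std(axis=0)
    std[std == 0] = 1.0  # avoid dividing constant features by zero
    return (X - mean) / std, mean, std
```

Applying the returned `mean` and `std` from the training split to the validation split, rather than recomputing them, avoids leaking validation statistics into training.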

3.3.1 Polynomial features

The skin lesion features are further expanded into a matrix of new attributes containing all polynomial combinations of the features [30] with degree less than or equal to the specified degree. Each input sample has 34 features. For example, for two features \( f_1, f_2 \) and degree 2, the polynomial features are \( \left[1, f_1, f_2, f_1^2, f_1 f_2, f_2^2\right] \). Here, the expansion is applied to all 34 input features. The total number of polynomial output features is computed by iterating over all appropriately sized combinations of the input features. We also observed that large degrees can cause overfitting.
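A standard-library sketch of the polynomial expansion and of the feature-count arithmetic. With n inputs and maximum degree d there are C(n + d, d) monomials including the constant term. With 34 inputs and degree 2, that gives C(36, 2) - 1 = 629 non-constant terms, which matches the 629 attributes x1 ... x629 mentioned in Sect. 3.3.2; this suggests, although the text does not state it, that a degree-2 expansion was used. scikit-learn's `PolynomialFeatures` produces the same terms in the same order.

```python
from itertools import combinations_with_replacement
from math import comb, prod

def polynomial_features(x, degree=2, include_bias=True):
    """All monomials of the inputs up to `degree`, ordered by degree."""
    terms = [1.0] if include_bias else []
    for d in range(1, degree + 1):
        # Each index tuple such as (0, 0) or (0, 1) encodes f1*f1 or f1*f2.
        for idx in combinations_with_replacement(range(len(x)), d):
            terms.append(prod(x[k] for k in idx))
    return terms

def n_polynomial_features(n_inputs, degree, include_bias=True):
    """Number of monomials of degree <= `degree` in n_inputs variables: C(n + d, d)."""
    return comb(n_inputs + degree, degree) - (0 if include_bias else 1)
```

For x = [2.0, 3.0] the expansion is [1.0, 2.0, 3.0, 4.0, 6.0, 9.0], matching the pattern \( [1, f_1, f_2, f_1^2, f_1 f_2, f_2^2] \). The count grows quickly with the degree, which is one reason large degrees invite overfitting.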

3.3.2 Principal component analysis

The expanded melanoma and non-melanoma dataset was further refined with a linear transformation method, Principal Component Analysis (PCA) [15]. PCA gives insight into the structure of any feature data. The dimension and volume of the dataset are critical in this investigation. PCA is applied to find patterns in the dataset by identifying the relationships between attributes; reducing the dimensionality only works when strong correlations between variables exist. Linear Discriminant Analysis (LDA) and PCA are both linear transformation methods, but PCA finds the directions that maximise the variance of the data, whereas LDA finds the directions that maximise the separation between classes. The extended feature set consists of \( x_{i,j} \), where j indexes the attributes \( [x_1, x_2, \ldots, x_{629}] \), which are reduced to build the final feature sets.
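A minimal PCA sketch via the singular value decomposition of the centred data matrix. The number of retained components is an illustrative parameter; the text does not state how many were kept.

```python
import numpy as np

def pca(X, n_components):
    """Project centred data onto its top principal directions via SVD.

    Returns the projected scores and the fraction of total variance
    explained by each retained component.
    """
    X = np.asarray(X, dtype=np.float64)
    Xc = X - X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing singular value.
    _, s, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = s ** 2 / (len(X) - 1)
    ratio = explained / explained.sum()
    return Xc @ Vt[:n_components].T, ratio[:n_components]
```

When two features are strongly correlated, almost all of their joint variance lands on the first component, which is why PCA shrinks the feature set only when such correlations exist.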

3.4 Proposed architecture of deep neural model

Previous research on deep neural networks (DNNs) suggests several architectures built from stacked layers, each recommended for its own task. Skin lesions such as melanoma and naevus have few distinguishing features between them. Assessing class labels directly from the images is hard for a DNN, even though it is an excellent feature learner: we tested the images directly on convolutional DNN models, but they did not classify well. The images therefore have to be preprocessed and appropriately segmented to obtain the optimised feature set. Our objective was to build a time-saving and accurate supervised deep neural model. To this end, we split and combined several hidden layers and fine-tuned them to identify the essential aspects of the activation functions [10]. The experiment was rigorous, as it combined hyperparameters [11, 33] such as the number of inputs in the dense layers, batch sizes, activation functions and network optimisation methods. The nodes are densely connected, and the final layer produces the binary outcome. The evaluated configurations, and the layers and activation functions through which the data are processed, are summarised in Fig. 3 and Table 3.

3.4.1 Brief of DNN architecture

Hyperparameter tuning covers the number of hidden units, which determines how the deep neural network trains and validates. We evaluated the model with different numbers of hidden layers between the input and output layers, along with different activation functions, as described in Fig. 3. The model was also evaluated with regularisation methods, which can increase accuracy. We found that too few hidden units can cause underfitting. We added a dropout rate of 0.5 at each layer to avoid overfitting. The result is a medium-sized network with enough capacity to learn from the data.
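A NumPy sketch of the forward pass of such a dense binary classifier: ReLU hidden layers, inverted dropout at rate 0.5 during training, and a single sigmoid output unit. The layer sizes, the Glorot-uniform initialisation (from Glorot and Bengio [10]) and the helper names are illustrative assumptions; training (back-propagation and the optimiser) is not shown.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_dense(sizes, rng):
    """Glorot-uniform weights and zero biases for each pair of consecutive layer sizes."""
    params = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        limit = np.sqrt(6.0 / (n_in + n_out))
        params.append((rng.uniform(-limit, limit, (n_in, n_out)), np.zeros(n_out)))
    return params

def forward(params, X, rng=None, drop_rate=0.5):
    """ReLU hidden layers, inverted dropout (training only), sigmoid output unit."""
    h = X
    for W, b in params[:-1]:
        h = relu(h @ W + b)
        if rng is not None:  # dropout is applied only when a training RNG is given
            keep = rng.random(h.shape) >= drop_rate
            h = h * keep / (1.0 - drop_rate)
    W, b = params[-1]
    return sigmoid(h @ W + b).ravel()  # probability of the positive class
```

For example, `init_dense([n_features, 128, 64, 1], rng)` would build a network with two hidden layers. Scaling the surviving activations by 1/(1 - drop_rate) during training means inference needs no rescaling and is deterministic.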

3.4.2 Role of the non-linear activation function

The model was tested with different weight initialisations and a different non-linear activation function on each layer. We used the activation functions Sigmoid, Softmax, tanh and Rectified Linear Unit (ReLU), as well as advanced ReLU variants: S-shaped Rectified Linear Unit, Leaky ReLU, Sine ReLU, Parametric ReLU and Exponential Linear Unit. The rectifier activation function performed best and was the most promising, and its variants also worked well. A sigmoid was used in the last layer for binary classification.
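NumPy sketches of several of the listed activations. The default slopes (`alpha`) are common choices rather than the paper's settings; the S-shaped ReLU and Sine ReLU are omitted here.

```python
import numpy as np

def leaky_relu(z, alpha=0.01):
    return np.where(z > 0, z, alpha * z)

def prelu(z, alpha):
    """Parametric ReLU: same form as leaky ReLU, but alpha is learned per unit."""
    return np.where(z > 0, z, alpha * z)

def elu(z, alpha=1.0):
    # np.minimum avoids overflow in exp for large positive inputs.
    return np.where(z > 0, z, alpha * (np.exp(np.minimum(z, 0.0)) - 1.0))

def softmax(z):
    e = np.exp(z - np.max(z, axis=-1, keepdims=True))  # shift for numerical stability
    return e / e.sum(axis=-1, keepdims=True)
```

For two classes, softmax over the logits [z, 0] equals the sigmoid of z, so a single sigmoid output unit is the natural choice for the binary melanoma/naevus decision.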

3.4.3 Aspects of learning rate, batch sizes

We varied the learning rate from 0.1 to 0.001 and found that smaller values worked well: they slow down learning but converge efficiently. We trained the model with early stopping and recorded the epoch with the highest accuracy. Batch size, the number of samples passed to the network before each parameter update, also plays a crucial role; we tested sizes of 16, 32, 64, 128 and 256, and found that 32 and 64 worked well in most situations.
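A plain-Python sketch of patience-based early stopping and of mini-batching. The `patience` and `min_delta` values are illustrative assumptions. With an 80:20 split of 2170 images (1736 training images), a batch size of 32 gives ceil(1736 / 32) = 55 parameter updates per epoch, the last batch holding 8 samples.

```python
def early_stopping_epoch(val_losses, patience=5, min_delta=0.0):
    """Return the 0-based epoch with the best validation loss, stopping after
    `patience` consecutive epochs without an improvement larger than `min_delta`."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

def minibatches(n_samples, batch_size):
    """Yield index ranges covering n_samples in chunks of batch_size (last may be smaller)."""
    for start in range(0, n_samples, batch_size):
        yield range(start, min(start + batch_size, n_samples))
```

A small patience can stop before a later improvement: in the loss sequence [0.9, 0.7, 0.6, 0.65, 0.66, 0.64, 0.7, 0.5], a patience of 3 stops with epoch 2 as the best, while a patience of 10 reaches epoch 7.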

4 Experiments and results

4.1 Datasets

The investigation uses a skin lesion dataset of 170 images: 70 melanoma and 100 naevus. The Department of Dermatology of the University Medical Center Groningen (UMCG) assembled the whole repository, which was used for the MED-NODE system and for screening purposes.

The second source was the International Skin Imaging Collaboration (ISIC) archive, from which we obtained 2000 images: 1000 melanoma and 1000 naevus. This open-access public archive contains digital images of different kinds of skin lesions and is intended for testing and validating proposed standards for automated diagnostic systems.

4.2 Approach to the machine learning algorithm

We evaluated both the preprocessed image dataset and the source image dataset with different machine learning algorithms. The models were assessed with accuracy and log loss metrics, as shown in Tables 4 and 5.
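The two evaluation metrics can be sketched as follows; log loss is the same quantity as the binary cross-entropy used to train the DNN. The 0.5 decision threshold and the clipping constant `eps` are conventional choices assumed here.

```python
import numpy as np

def accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded probability matches the 0/1 label."""
    y_true = np.asarray(y_true)
    return float(np.mean((np.asarray(y_prob) >= threshold) == y_true))

def log_loss(y_true, y_prob, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    y = np.asarray(y_true, dtype=np.float64)
    p = np.clip(np.asarray(y_prob, dtype=np.float64), eps, 1 - eps)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))
```

Unlike accuracy, log loss penalises confident wrong predictions heavily, so two models with the same accuracy can differ clearly in log loss.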

4.3 Approach to proposed deep neural network

We divided the dataset into training and validation sets in an 80:20 ratio. Each DNN model was run 10 times on the dataset to obtain its average accuracy. We examined the suggested model with various sets of parameters and specifications. Pre-processing had a strong impact, improving the accuracy of distinguishing malignant lesions from naevus by more than 10%. Figs. 4 and 5 compare different hyper-parameter settings. The complex structures were evaluated with the metrics [5, 37] shown in Fig. 6, and the proposed model was compared with other DNN models. Fig. 7 shows the training and validation loss in each epoch for the standard datasets. Fig. 8 shows a lower validation loss with steady improvement in each epoch, which suits the proposed framework (Table 6).
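A sketch of the 80:20 split repeated over 10 runs. Using a different random seed per run and a caller-supplied `evaluate(train_idx, val_idx)` function are assumptions; the text does not say how the runs differed. A stratified split, which keeps the melanoma/naevus ratio fixed in both parts, would be a reasonable alternative.

```python
import numpy as np

def split_80_20(n_samples, seed):
    """Shuffle indices and return (train_idx, val_idx) in an 80:20 ratio."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_train = int(round(0.8 * n_samples))
    return idx[:n_train], idx[n_train:]

def repeated_accuracy(evaluate, n_samples, runs=10):
    """Mean and std of evaluate(train_idx, val_idx) -> accuracy over `runs` random splits."""
    scores = [evaluate(*split_80_20(n_samples, seed)) for seed in range(runs)]
    return float(np.mean(scores)), float(np.std(scores))
```

For the 2170 images used here, each split has 1736 training and 434 validation images; reporting the standard deviation alongside the mean shows how sensitive the accuracy is to the particular split.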

5 Discussion

This research explored different facets of classifying melanoma versus naevus (non-melanoma). These aspects are reviewed and summarised below:

5.1 Advantages

In the current study, the researchers identified various merits of this framework, which are summarised briefly below.

(I) The suggested pre-processing technique improved accuracy by 10–18% in a noticeable number of situations. This suggests that the images available from open archives were not suitable for feature extraction as they were: illumination, noise and undesirable artefacts make them ambiguous for classification. (II) The study also addressed localisation of the affected skin lesion, testing edge-based, clustering and region-based segmentation techniques. It was noticed that the structure of the images hinders isolation of the skin lesion. (III) Normalization, one of the basic feature scaling methods, is useful here for changing the range of intensity values. Rescaling the intensities to a specific range was found to ensure better convergence during back-propagation. It subtracts the mean from each data point and divides by the standard deviation, which gives all instances equal weight. (IV) The feature vectors are optimised through PCA, which identifies important variables that can be used to build a set of principal variables. (V) Texture features such as cluster prominence have more impact on the classification of the images. (VI) Shape features such as the border circularity index and the asymmetric index have the highest correlation with the classification. (VII) A larger dataset (over 2000 images) was used, making the work more effective than studies with small datasets. (VIII) The machine learning models performed better than the DNN on the small dataset but did not perform well when the dataset had large variance. (IX) The DNN has dense hidden layers of varying shape and size. The optimal model should have a suitable number of hidden layers to produce adequate accuracy. Notably, if the size of the hidden layers is fixed, tuning a hyper-parameter such as the kernel does not affect the accuracy.
(X) Issues such as over-fitting and poor generalisation of the architecture are addressed by adding more data to the DNN, including data augmentation, which reduces the need for architectural complexity. Batch normalization and regularization are also applied in the proposed model to resolve these issues. (XI) The researchers employed a first-order gradient descent technique, stochastic gradient descent (SGD) with regularisation, to handle sparse data, and reduced the number of parameters in the neural network. (XII) Batch normalization is used to reduce the training time of the activation layers and speed up execution of the model. It also reduces the noise introduced by the dropout layers.

5.2 Limitations

The challenges were not restricted to the size of the dataset; the study also faced the following limitations.

(I) Images acquired from the internet contain undesired objects, which require extra care during sample selection. (II) The features could be further optimised before being processed by the DNN; proper measurement of the features and their correlations may help to obtain a significant subset, which can be examined in detail in future work. (III) The machine learning models could be further tuned through their hyper-parameters. (IV) It was also observed that reducing the features to a minimum size decreased accuracy by 7–16%.

6 Conclusions

The assessment of skin lesions was carried out with uniquely pre-processed images, and the researchers built an effective DNN for classifying melanoma and non-melanoma (naevus). A detailed comparative analysis of various segmentation techniques (edge-based, clustering, region-based, etc.) was carried out. Feature normalization was used to improve the convergence rate during back-propagation. Most of the hyper-parameters of the deep learning model were tested on a large experimental dataset of 2170 images (2000 from the ISIC Archive and 170 from MED-NODE). Image pre-processing produced a significant improvement in the reported accuracy (>10% for all classifiers). The pre-processed feature set was assessed for accuracy with 12 machine learning classifiers: Logistic Regression (90.55%), C-Support Vector Classification (67.93%), Nu-Support Vector Classification (84.1%), K Neighbors Classifier (78.8%), Decision Tree Classifier (90.78%), Random Forest Classifier (90.32%), AdaBoost Classifier (85.25%), Gradient Boosting Classifier (95.16%), Bernoulli Naive Bayes (78.11%), Gaussian Naive Bayes (60.6%), Linear Discriminant Analysis (90.78%) and Quadratic Discriminant Analysis (96.77%). The pre-processed dataset improved accuracy by 7 to 18%. The proposed deep learning model achieves an accuracy of 96.8% in 0.41 min. In the future, various feature selection and hair removal techniques can be investigated to increase the robustness and efficacy of the recommended system. Machine learning models and their hyper-parameters can also be studied further. There is scope to investigate transformation-based features such as Fourier and wavelet features, as well as feature reduction techniques (e.g., Rough Sets, Linear Discriminant Analysis, Independent Component Analysis). Finally, skin cancer can be investigated further with an improved dataset.

References

Al-masni MA, Al-antari MA, Choi M-T, Han S-M, Kim T-S (2018) Skin lesion segmentation in dermoscopy images via deep full resolution convolutional networks. Comput Methods Prog Biomed 162:221–231

Carrera EV, Ron-Dominguez D (2018) A computer aided diagnosis system for skin cancer detection. In: International Conference on Technology Trends, pp. 553–563, Springer

Chaki J, Dey N (2019) Pattern analysis of genetics and genomics: a survey of the state-of-art. Multimed Tools Appl:1–32

Chaki J, Dey N, Shi F, Sherratt RS. Pattern mining approaches used in sensor-based biometric recognition: a review. IEEE Sensors J. https://doi.org/10.1109/JSEN.2019.2894972

Dahl GE, Sainath TN, Hinton GE (2013) Improving deep neural networks for LVCSR using rectified linear units and dropout. In: Acoustics, Speech and Signal Processing (ICASSP), 2013 IEEE International Conference on, pp. 8609–8613, IEEE

ISIC Archive. https://isic-archive.com/. Accessed: 2019-01-20

Dermatology database used in MED-NODE (2015). http://www.cs.rug.nl/~imaging/databases/melanoma_naevi/. Accessed: 2019-01-20

Dorj U-O, Lee K-K, Choi J-Y, Lee M (2018) The skin cancer classification using deep convolutional neural network. Multimed Tools Appl:1–16

Ferris LK, Gerami P, Skelsey MK, Peck G, Hren C, Gorman C, Frumento T, Siegel DM (2018) Real-world performance and utility of a noninvasive gene expression assay to evaluate melanoma risk in pigmented lesions. Melanoma Res

Glorot X, Bengio Y (2010) Understanding the difficulty of training deep feedforward neural networks. In: Proceedings of the thirteenth international conference on artificial intelligence and statistics, pp. 249–256

Goodfellow I, Bengio Y, Courville A (2016) Deep learning, vol 1. MIT Press, Cambridge

Haenssle HA, Fink C, Schneiderbauer R, Toberer F, Buhl T, Blum A, Kalloo A, Ben Hadj Hassen A, Thomas L, Enk A et al (2018) Man against machine: diagnostic performance of a deep learning convolutional neural network for dermoscopic melanoma recognition in comparison to 58 dermatologists. Ann Oncol

Huynh-Thu Q, Ghanbari M (2008) Scope of validity of PSNR in image/video quality assessment. Electron Lett 44(13):800–801

Jeong C-W, Joo S-C (2018) Skin care management support system based on cloud computing. Multimed Tools Appl:1–12

Jolliffe I (2011) Principal component analysis. In: International encyclopedia of statistical science, pp. 1094–1096, Springer

Kang D, Kim S, Park S (2018) Flow-guided hair removal for automated skin lesion identification. Multimed Tools Appl 77(8):9897–9908

Kotsiantis S, Kanellopoulos D, Pintelas P (2006) Data preprocessing for supervised leaning. Int J Comput Sci 1(2):111–117

Li B, Acton ST (2007) Active contour external force using vector field convolution for image segmentation. IEEE Trans Image Process 16(8):2096–2106

Mäenpää T (2003) The local binary pattern approach to texture analysis: extensions and applications. Oulun yliopisto, Oulu

Menegola A, Fornaciali M, Pires R, Bittencourt FV, Avila S, Valle E (2017) Knowledge transfer for melanoma screening with deep learning. In: Biomedical Imaging (ISBI 2017), 2017 IEEE 14th International Symposium on, pp. 297–300, IEEE

Mishra R, Daescu O (2017) Deep learning for skin lesion segmentation. In: Bioinformatics and Biomedicine (BIBM), 2017 IEEE International Conference on, pp. 1189–1194, IEEE

Moura N, Veras R, Aires K, Machado V, Silva R, Araujo F, Claro M (2018) ABCD rule and pre-trained CNNs for melanoma diagnosis. Multimed Tools Appl:1–20

Nasr-Esfahani E, Samavi S, Karimi N, Soroushmehr SMR, Jafari MH, Ward K, Najarian K (2016) Melanoma detection by analysis of clinical images using convolutional neural network. In: Engineering in Medicine and Biology Society (EMBC), 2016 IEEE 38th Annual International Conference of the, pp. 1373–1376, IEEE

PH2 database (2015). https://www.fc.up.pt/addi/ph2%20database.html. Accessed: 2019-01-20

Premaladha J, Ravichandran KS (2016) Novel approaches for diagnosing melanoma skin lesions through supervised and deep learning algorithms. J Med Syst 40(4):96

Roy P, Goswami S, Chakraborty S, Azar AT, Dey N (2014) Image segmentation using rough set theory: a review. International Journal of Rough Sets and Data Analysis (IJRSDA) 1(2):62–74

Saba L, Dey N, Ashour AS, Samanta S, Nath SS, Chakraborty S, Sanches J, Kumar D, Marinho R, Suri JS (2016) Automated stratification of liver disease in ultrasound: an online accurate feature classification paradigm. Comput Methods Prog Biomed 130:118–134

Salido JAA, Ruiz C Jr (2018) Using deep learning to detect melanoma in dermoscopy images. International Journal of Machine Learning and Computing 8

Samanta S, Ahmed SS, Salem MA-MM, Nath SS, Dey N, Chowdhury SS (2015) Haralick features based automated glaucoma classification using back propagation neural network. In: Proceedings of the 3rd International Conference on Frontiers of Intelligent Computing: Theory and Applications (FICTA) 2014, pp. 351–358, Springer

Sanderson C, Paliwal KK (2002) Polynomial features for robust face authentication. In: Image Processing. 2002. Proceedings. 2002 International Conference on, vol. 3, pp. 997–1000, IEEE

Sankaran S, Hagerty JR, Malarvel M, Sethumadhavan G, Stoecker WV (2018) A comparative assessment of segmentations on skin lesion through various entropy and six sigma thresholds. In: International Conference on ISMAC in Computational Vision and Bio-Engineering, pp. 179–188, Springer

Sau K, Maiti A, Ghosh A. Preprocessing of skin cancer using anisotropic diffusion and sigmoid function, pp. 51–60

Schmidhuber J (2015) Deep learning in neural networks: An overview. Neural Netw 61:85–117

Wang S-H, Lv Y-D, Sui Y, Liu S, Wang S-J, Zhang Y-D (2018) Alcoholism detection by data augmentation and convolutional neural network with stochastic pooling. J Med Syst 42(1):2

Wang S-H, Phillips P, Dong Z-C, Zhang Y-D (2018) Intelligent facial emotion recognition based on stationary wavelet entropy and jaya algorithm. Neurocomputing 272:668–676

Yan C, Xie H, Liu S, Yin J, Zhang Y, Dai Q (2018) Effective Uyghur language text detection in complex background images for traffic prompt identification. IEEE Trans Intell Transp Syst 19(1):220–229

Yan C, Xie H, Liu S, Yin J, Zhang Y, Dai Q (2018) Effective Uyghur Language Text Detection in Complex Background Images for Traffic Prompt Identification. IEEE Trans Intell Transp Syst 19(1):220–229

Yan C, Xie H, Yang D, Yin J, Zhang Y, Dai Q (2018) Supervised hash coding with deep neural network for environment perception of intelligent vehicles. IEEE Trans Intell Transp Syst 19(1):284–295

Yan C, Zhang Y, Xu J, Dai F, Li L, Dai Q, Wu F (2014) A highly parallel framework for HEVC coding unit partitioning tree decision on many-core processors. IEEE Signal Processing Letters 21(5):573–576

Yan C, Zhang Y, Xu J, Dai F, Zhang J, Dai Q, Wu F (2014) Efficient parallel framework for HEVC motion estimation on many-core processors. IEEE Transactions on Circuits and Systems for Video Technology 24(12):2077–2089

Yang M, Kpalma K, Ronsin J (2008) A survey of shape feature extraction techniques

Yogita R, Samina S, Pooja S, Jyotsana G (2018) Review on skin cancer. Asian Journal of Research in Pharmaceutical Science 8(2):100–106

Youssef A, Bloisi DD, Muscio M, Pennisi A, Nardi D, Facchiano A (2018) Deep convolutional pixel-wise labeling for skin lesion image segmentation. In: 2018 IEEE International Symposium on Medical Measurements and Applications (MeMeA), pp 1–6. IEEE

Zhang G, Hsu C-HR, Lai H, Zheng X (2018) Deep learning based feature representation for automated skin histopathological image annotation. Multimed Tools Appl 77(8):9849–9869

Zhang Y-D, Muhammad K, Tang C (2018) Twelve-layer deep convolutional neural network with stochastic pooling for tea category classification on gpu platform. Multimed Tools Appl:1–19

Zhu F, Shao L, Yu M (2014) Cross-modality submodular dictionary learning for information retrieval. In: Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management, pp. 1479–1488, ACM

Zhu F, Shao L, Yu M (2014) Cross-modality submodular dictionary learning for information retrieval. In: Proceedings of the 23rd ACM International Conference on Conference on Information and Knowledge Management, pp. 1479–1488, ACM

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Maiti, A., Chatterjee, B. Improving detection of Melanoma and Naevus with deep neural networks. Multimed Tools Appl 79, 15635–15654 (2020). https://doi.org/10.1007/s11042-019-07814-8

DOI: https://doi.org/10.1007/s11042-019-07814-8