Abstract

In data mining and knowledge discovery applications, outlier detection is a fundamental problem for robust machine learning and anomaly discovery. There are many successful outlier detection methods, including Local Outlier Factor (LOF), Angle-Based Outlier Factor (ABOF), Local Projection Score (LPS), etc. In this paper, we assume that outliers lie in lower density region and they are at relatively larger distance from any points with a higher local density. In order to identify such outliers quantitatively, the paper proposed a decision graph based outlier detection (DGOD) method. The DGOD method works by firstly calculating the decision graph score (DGS) for each sample, where the DGS is defined as ratio between discriminant distance and local density, next ranking samples according to their DGS values, and finally, returning samples with top-r largest DGS values as outliers. Experimental results on synthetic and real-world datasets have confirmed its effectiveness on outlier detection problems, and it is a general and effective information detection method, which is robust to data shape and dimensionality.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Due to vast amounts of multiple distributed sensors, social media has become the most representative and relevant data sources for big data. Information detection is one of the most popular topics in social big data research, especially outlier and detection is the critical issue [4, 36]. In reality, within the massive data, there are inevitable exceptional behaviors or inconsistent patterns that often exhibit as the representations of noises or interesting facts, such as cyber-intrusion and terrorist activities [37]. In data mining and knowledge discovery applications, outliers are also referred as anomalies, deviants, or discordants of data generating process, which will lead to model misspecification, biased parameter estimation and incorrect results [22]. The detection of such unusual characteristics provides useful application-specific insights [1], such as network intrusion detection [10, 12], social media security and trustworthiness evaluation [38, 41], credit card fraud detection, clinical trials, voting irregularity analysis, severe weather prediction, athlete performance analysis, terrorist activity investigation et al. To address these challenges, many researchers have proposed several outlier detection methods according to different definitions of outliers. Johnson [18] suggests that an outlier is an observation which appears to be inconsistent with the rest of the dataset. Barnett and Lewis [3] define outliers as objects that appears to deviate markedly from other samples in which it occurs. The most popular definition of outlier is proposed by Hawkins [13], and he defined outlier as a sample that appears to deviate so much from other samples as to arouse suspicion that it was generated by a different mechanism. Since outlier detection can reveal unusual behaviors, interesting patterns and exceptional events from datasets, it is of great interest to the communities of machine learning and data mining.

There are two perspectives on outlier detection problem formulation, one is learning to rank which output a score about the level of “outlierness” of a sample, and the other is learning to classification which output a binary label indicating whether a sample is an outlier or not. Due to its nature of unsupervised learning, how to detect outliers from normal data samples with noise is often a subjective process. In this work, we formulate the outlier detection problem as a ranking problem, and present a quantified outlierness measure of a sample.

Many efforts have been devoted to detect outliers. In statistical analysis, the simplest method for outlier detection is Z-value test which assumes that the data samples are modeled from a normal distribution. The samples with more than 3 standard deviations from the mean are recognized as outliers. But it is not always the case for real data. Generally speaking, existing outlier detection methods falls into three main categories: distance-based methods, density-based methods and clustering-based methods.

Distance-based methods consider the data points having large average distances to the k-th nearest neighbors as outliers. Distance-based outlier was originally proposed by Knorr and Ng [19] in 1998, in which a sample x i in a dataset X is a DB(p,T)-outlier if at least fraction p of the samples in X lies greater than distance T from x i . Although a number of efficient algorithms for detecting distance-based outliers are proposed, it doesn’t provide a ranking for outliers. Based on the distance of each sample from its k-th nearest neighbor, Sridhar et al. [26] proposed a partition-based algorithm to rank each point and declare the top r points in this ranking to be outliers. To detect outliers in scattered datasets, Zhang et al. proposed Local Distance-based Outlier Factor (LDOF) [39] which is defined as the ratio of the average of distances from a data point to its k-nearest neighbors over the average of pairwise distances among these k-1 data points, and then the degree to which a sample deviates from its neighborhood system is captured. To circumvent the distance concentration problem in high-dimensional space, Liu et al. [21] introduced Local Projection Score (LPS) to represent deviation degree of a sample to its neighbors, in which the LPS can be computed by the technique of low-rank approximation.

Though distance-based methods are simple and elegant, they didn’t work well for datasets that have more complex structures and are sensitive to data locality [5]. Density-based methods are robust to data locality, which assume that the density around an outlier is significantly different from densities around its neighbors. Then outliers can be identified by comparing the density of a sample’s neighborhood with that of its neighbor’s neighborhood. The most popular density-based method is Local Outlier Factor(LOF) [5], in which outliers are detected by measuring the local deviation of a given data point with respect to its neighbors, and outliers are considered as data points that have a substantially lower density than their neighbors. However, selecting neighborhood parameter k in LOF is non-trivial. Following the idea of local density measure, several extensions to the basic LOF model have been proposed. Tang et al. proposed the Connectivity-based Outlier Factor (COF) [32] to deal with the case that a cluster and a neighboring outlier have similar neighborhood densities. Rather than examining an individual sample, the Local Correlation Integral (LOCI) [25] method looks for groups of outliers. LOCI method provides an “outlier plot” which gives the user an idea on how data is distributed in the vicinity of the analyzed sample. From the plot, one can assess whether the sample is inside a cluster, a part of a micro-cluster or if it is an outstanding outlier. Different with LOF, LOCI uses ε-neighborhoods rather than k-nearest neighbors, and can deal with multi-granularity problem in the dataset. Jin et al. proposed a measure of outlierness named INFLO [17] by considering the union of a point’s k-nearest neighbors and its reverse nearest neighbors. In 2011, in order to detect anomalies from high-dimensional data streams, Zhang et al. [40] developed a method named Stream Projected Otlier Detector (SPOD), which constructs sparse subspace template and then anomalies are more likely to be detected in the set of subspaces. To achieve good detection performance, application-dependent feature selection and data partition based on different temporal contexts are conducted. S. Hido et al. [14] proposed density-ratio based outlier detection approach which find outliers in the test set based on the training set consisting only of inliers. The outliers are recognized by the ratio of training and testing data densities. This method can be viewed as supervised outlier detection. However, there are not training data in most cases, and the performance of this method heavily depends on the accuracy of density ratio estimation which is a challenging problem for high-dimensional data sets.

The clustering problem has a complementary relationship to the outlier detection problem, in which points either belong to clusters or outliers. Clustering-based methods define outliers as clusters of small size, especially including the size of one data point. Density-Based Spatial Clustering of Applications with Noise (DBSCAN, [9]) detects outliers by checking the connections between data samples and clusters, and the samples that do not belong to any clusters or belong to small clusters are identified as outliers. In 2008, Jiang et al. [16] proposed a clustering-based outlier detection method, which consists of two stages, firstly, dataset is clustered by on-pass clustering algorithm, then the outlier factors of clusters are determined. The robust clustering with outliers is also called noise clustering, which define outliers in terms of noise distance. Rehm et al. [27] proposed a method to estimate the noise distance in noise clustering based on the preservation of the hypervolume of the feature space. However, how to estimate the hypervolume of the feature space is also a challenge. In 2011, Shi et al. [31] proposed Cluster-Outlier Iterative Detection (COID) method, in which clusters are obtained firstly, then the intra-relationship and inter-relationship are defined. After performing the alteration of clusters and outliers iteratively, COID method consistently outputs natural clusters and outliers. To circumvent sensitivity of parameter k in k-nearest neighbors based methods, Wang et al. [34] proposed a minimum spanning tree clustering based global outlier factor and local outlier factor. In order to handle large-scale datasets, a robust Novel Local Outlier Detection (NLOD, [7]) method is proposed, which finds density peaks of dataset by 3σ standard firstly, then all the samples are clustered by neareast neighbor criterion, finally the local outliers of each cluster are identified by Chebyshev’s inequality and density peak reachability. However, the performance of clustering-based methods are highly dependent on the effectiveness of the clustering algorithm in capturing the cluster structure of normal samples.

Furthermore, there are some other methods are designed for special background. For high dimensional data, Kriegel et al. proposed Angle-Based Outlier Factor (ABOF) [20] to evaluate the variance in angles among the difference vectors from the analyzed sample to other samples in the dataset. However, ABOF only considers the relationships between each sample and its neighbors and does not consider the relationships among these neighbors, thus it may not detect outliers correctly. Scholkipf et al. [30] extended SVM to outlier detection which aims to separate data samples into outliers and inliers by a hyperplane in a Gaussian reproducing kernel Hilbert space. However, setting of tuning parameters in this method is difficult. Manifold is a useful tool to model the structure of data samples. Since manifold learning methods are sensitive to outliers, Onderwater [23] proposed an outlier detection method based on Local Reconstruction Weights (LRW). The samples with large local reconstruction weights can be considered as outliers. By utilizing the concept of the center of gravity, Ha et al. [11] introduced the instability factor of a sample to detect local and global outliers. A sample with a high instability factor is a promising candidate for an outlier. This approach eliminates the problem of density calculation in the neighborhood of a sample, but with a high computational cost. Recently, Huang et al. [15] proposed Rank-Based Detection Algorithm (RBDA) and the degree of isolation of a sample is measured with sum of ranks of a sample. Dufrenois et al. [8] proposed one class Fisher’s linear discriminant criterion and its kernelized version to detect isolate outliers from normal samples.

All these methods are closely related, since they are based on the proximity of samples. However, these methods do not work well if there are various degrees of cluster density in dataset. Also, it is difficult to select appropriate values for the model parameters, such as the size of the neighborhood around a sample. What is more, they are often unsuitable for high-dimensional datasets and for arbitrary datasets without prior knowledge of the underlying data distribution. To overcome these weaknesses and detect all kinds of outliers simultaneously, motivated by the idea in [28], we proposed a Decision Graph based Outlier Detection (DGOD) method. DGOD detects outlier from clusters by incorporating the advantages of density-based and clustering-based methods. Each sample is ranked by the proposed decision graph score. The basic idea of DGOD is illustrated in Fig. 1, and the main contributions of this paper are listed as follows:

-

(1)

Two metrics are proposed to analyze the distribution of data samples, which are called local density and discriminant distance.

-

(2)

A simple and intuitive outlier detection criterion named decision graph score is defined to measure the outlierness of each sample, which is computational efficient and scalable for large- scale datasets.

-

(3)

Comprehensive experiments on several synthetic and real-world datasets demonstrate the efficiency and effectiveness of DGOD method. It is not only computational efficient, but also robust to distribution and dimensionality of datasets.

The rest of the paper is organized as follows. In Section 2, we reviewed some related works on outlier detection. Then the proposed method is presented in Section 3. The experimental analysis is conducted in Section 4. Section 5 concludes the paper and Section 6 outlines the limitations of the proposed method as well as the scope for future work.

2 Related work

In this section, we discuss six related works in the area of detecting outliers, such as Local Outlier Factor (LOF), Local Reconstruction Weights (LRW) based outlier detection method, Rank-Based Detection Algorithm (RBDA), Angle-Based Outlier Factor (ABOF), Density-Based Spatial Clustering of Applications with Noise (DBSCAN) and Local Projection Score (LPS). Table 1 presents the notations used in the remainder of the paper.

2.1 Local outlier factor (LOF)

Let dist k (x i ) be k-distance of a sample x i which is defined as the distance between x i and its kth nearest neighbor. Then k-distance neighbor of x i is defined as

Noted that the cardinality of N k (x i ) is greater than k. Then reachability distance from x i to x j is defined as

Obviously, the reachability distance is not symmetric, i.e., reachdist k (x i → x j ) ≠reachdist k (x j → x i ). It indicates that reachability distance measures the dissimilarity between x i and x j by considering their locality. The reachability distance from x i to its k-distance neighbor is its k-distance, and the reachability distance from x i to the samples that are not its k-distance neighbors is their actual distance. Therefore, statistical fluctuations of pairwise distances for all the nearby samples can be significantly reduced.

In order to compare the densities of different neighborhood sets of samples dynamically, local reachability density of x i is defined as

Here |N k (x i )| is number of samples contained in N k (x i ). Then LOF of a sample x i is defined as the average of the ratio of local reachability of x i and those of x i ‘s k-nearest neighbors

Note that the lower the local reachability density of x i , and the higher the local reachability density of the k-nearest neighbors of x j , then the higher LOF. Obviously, for most samples in a cluster, the LOF values of them are approximately equal to 1.

2.2 Local reconstruction weights (LRW) based method

LRW method derived from local linear embedding [29], and has three steps. Firstly, local reconstruction weights are computed, and then compute the reliability score of each sample point using local reconstruction weights. At last, the outliers are detected using the reliability scores. LRW method assumes that each data point can be linearly reconstructed from its neighborhoods, i.e.

where w ij is x i ’s local reconstruction weight from x j . The objective function (3) is minimized with following two constraints:

First, each data point x i is reconstructed only from its neighbors, enforcing w ij = 0 if x j does not belong to the set of neighbors of x i ; Second, the rows of the weight matrix sum to one, i.e.,

After obtaining the optimal local reconstruction weights, the sample points with large reconstruction weights are suspected as outliers in the dataset. Therefore, the reliability score of each sample can be defined as

2.3 Rank-based detection algorithm (RBDA)

RBDA uses mutual closeness of each data point and its neighbors to detect outliers. Different from other outlier detection methods, RBDA uses rank instead of distance. Let R be the rank matrix of dataset X. If x j is not the k-distance neighbor of x i , or x i is not the k-distance neighbor of x j , R ij = 0; Otherwise, R ij is the rank of distance between x i and x j among sorted distances between any other samples and x j with ascending order.

In order to measure the outlierness of x i , RBDA use the ranks based on neighborhood relationships between x i and N k (x i ), which can be defined as:

If outlierness of x i is large, it will be suspected as an outlier.

2.4 Angle-based outlier factor (ABOF)

The angle-based outlier factor ABOF(x i ) is defined as the variance over the angles between the difference vectors of x i to all pairs of samples in X weighted by the distance of the samples, which can be formulated as:

where 〈⋅, ⋅〉 denote inner product between two vectors. Since for each sample all pairs of samples must be considered, the computational cost is high. Thus in applications, the ABOF can be approximated as follows:

2.5 Density-based spatial clustering of applications with noise (DBSCAN)

In DBSCAN [9] method, outliers are identified as sample points that lie in low-density regions whose nearest neighbors are too far away. Since DBSCAN is a density-based clustering method, the points that do not belong to any of the clusters will be identified as outliers. The cluster of DBSCAN is defined as follows.

Definition 1

The ε -neighborhood of a sample is defined as

Definition 2

A sample x i is directly density-reachable from a sample x j if x i ∈ N ε (x j ) and |N ε (x j )| ≥ MinPts, where MinPts is a given integer.

Definition 3

A sample x i is density-reachable from a sample x j if there is a chain of samples \( {x}_{p_1},{x}_{p_2},\cdots, {x}_{p_m} \), and \( {x}_{p_1}={x}_j,{x}_{p_m}={x}_i \), such that \( {x}_{p_i+1} \)is directly density-reachable from \( {x}_{p_i} \).

Definition 4

A sample x i is density-connected to a sample x j if there is a sample x t sucht that both x i and x j are density-reachable from x t .

Definition 5

A cluster C is non-empty subset of X satisfying the following conditions:

-

(1)

For any x i and x j , if x j is density-reachable from x i that belong to C, then x j will belong to C.

-

(2)

For any x i and x j in C, xi is density-connected to x j

Definition 6

Let C 1, …, C s be the clusters of the dataset X, then

2.6 Local projection score (LPS)

LPS [21] assumes that the sparser the neighborhood of the sample, the higher probability of being outlier the sample. Since the nuclear norm of X can efficiently measure the divergence of X, the nuclear norm of neighborhood is adopted as the outlierness called LPS which is defined as

where \( {\left\Vert X\right\Vert}_{\ast }=\sum \limits_{i=1}^r{\sigma}_i \) and \( N\left({x}_i\right)=\left\{{x}_i^{(1)},{x}_i^{(2)},\cdots, {x}_i^{(k)}\right\} \). Note that the larger the LPS(xi) is, the sparser the neighborhood of xi is. The LPS(xi) can be estimated in low-dimensional embedding space, the projection procedure can be formulated as the following nuclear norm minimization problem:

3 Proposed method

Compared with their neighbors, outliers can be characterized by a lower density and by a relatively large distance from points with higher densities. Based on such observation, the proposed DGOD method uses cluster structure to determine normal samples, from which the outliers are identified.

3.1 Decision graph

Definition 7

The local density ρ i of a sample x i is defined as

where d c > 0 is a given threshold which can be called cutoff distance, and θ(⋅) is an indicator function which is defined as

From Definition 7, we can see that the local density of each sample point is the number of samples contained in a hypersphere with the radius d c which centered in that sample point. The lower local density, the sparser samples distributed. Therefore, local density in Definition 7 is a useful quantity measure to describe distribution of samples. In the application, we can define other form local density similarly, such as

By using the potential entropy of data field, Wang et al. [35] proposed an automatic selection of the threshold value of d c . In data field, the potential of each sample point can be calculated as follows:

which is very similar to the equation that is used to calculate local density. Since the sample points with larger potentials located in the dense region, by using the potential entropy of data field, the optimal threshold value d c can be calculated as \( \frac{3}{\sqrt{2}}\sigma \), where impact factor σ can be chosen with smallest entropy. The entropy H of data field can be calculated as follows:

Definition 8

The discriminant distance δ i of a sample x i is defined as

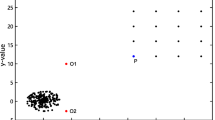

Similar to reachability distance in LOF, the larger discriminant distance of x i , the more likely it is an outlier. It represents the difference between x i and its neighborhood samples. Particularly, for those samples located in two different cluster centers, their discriminant distance may be large, but they cannot be outliers, since their local densities are large. In order to visualize the identification of outliers intuitively, we construct the following decision graph.

Definition 9

Decision Graph. Decision graph of a dataset X is a scatter-plot (ρ i , δ i ) which of the plot of discriminant distance δ i along local density ρ i for each sample.

Here is a toy example for illustrating the idea of decision graph. In Fig. 2, 40 sample points with two clusters are randomly generated. For statement conveniently, each sample point is marked with a number. Intuitively, we can see that from Fig. 2, Point 20, Point 17, Point 13 may be suspected as outliers. This suspicious can be confirmed obviously in Fig. 3, which shows the decision graph of the toy data. Samples with lower density and larger discriminant distance are located in the left corner of decision graph. It indicates that the outlier score of each sample can be calculated based on the decision graph, which is defined by local density and discriminant distance.

3.2 Outlier detection criteria

Finally, we define following outlier detection criteria based on decision graph of dataset.

Definition 10

Decision Graph Score. The decision graph score γ i of a sample x i is defined as

Essentially, discriminant distance is determined by local density. Therefore, the decision graph score is sensitive only to the relative magnitude of local density ρ i in different samples, and it is robust with respect to the choice of d c . Compared with related outlier detection methods, our DGOD method is simple and intuitive.

Figure 4 shows the plot of decision graph score γ i with descending order. We find that the samples with largest decision graph score are Point 20, Point 17 and Point 13. Figure 5 shows the original sample points marked with circles, whose radius represent the value of decision graph score. Obviously, the most suspicious outliers are Point 20, Point 17 and Point 13.

The implementation details of decision graph based outlier detection (DGOD) are summarized in Algorithm 1. It comprises two major procedures: estimating local densities and computing discriminant distances. After sorting decision graph scores in a descending order, the top r samples will be ranked as desired outliers. The dominating steps are pairwise distances computing and sorting, and the computational complexity of pairwise distance matrix computing is O(n 2 d) and the same as Step 2, the computational complexity of DGOD is O(n 2 d).

4 Experimental results

In this section, we show the effectiveness of the proposed method on Synthetic and real-world datasets with known ground-truth outliers. We compared the performance of our proposed method (DGOD) with seven existing approaches, Z-value, LOF, LRW, RBDA, ABOF, DBSCAN and LPS. All methods are implemented using MATLAB 2014b running on Intel core i7 processor with an 8 GB RAM. Although d c can be automatic selected by potential entropy of data field, it is computational expensive. In the experiments, the cutoff distance d c is set as follows: firstly, all pairwise distances between samples are sorted with ascending order, then the distance value in the position of 2 % of total number of samples is set as cutoff distance d c . Given a fixed number of desired outliers, the detected outliers will be obtained by different methods, and then the performances are compared with true outliers.

4.1 Synthetic data

In order to illustrate how DGOD behaves in outlier detection, four synthetic datasets, shown in Figs. 6, 7, 8, and 9, are designed to consider the various situations of datasets structure, including outliers planted in datasets with different local density and multi-granularity, outliers in linear regression and clustering. These four synthetic datasets are named as Local Density Synthetic Dataset, Multi-Granularity Synthetic Dataset, Linear Regression Synthetic Dataset and Two Cluster Synthetic Dataset, respectively. Figsures 6, 7, 8, and 9 displays the detected outliers by different methods on the four datasets. For each method, the detected outliers are marked with different colors and symbols.

Density and multi-granularity of dataset are challenges for outlier detection task, since the normal samples or clusters are generated by placing all points uniformly with varying degrees of densities. From Figs. 6 and 7, we can see that LRW and DBSCAN detected the two ground-truth outliers and DGOD detected only one ground-truth outlier, while other methods failed. It indicates that LRW and DBSCAN may be suitable for Local Density Synthetic Dataset and Multi-granularity Synthetic Dataset.

Outliers in regression and clustering tasks are main issue for robust data mining. In Fig. 8, both DGOD and LRW detect two true outliers successfully, and LOF and RBDA detect only one true outlier, while other methods failed. In Fig. 9, DGOD detected 5 true outliers successfully, and Z-value detected 4 true outliers, LOF and LPS detected 3 true outliers, RBDA detected 2 outliers, while other methods failed. It indicates that DGOD method performs well for robust regression and clustering tasks.

4.2 Real datasets with rare classes

We also applied the DGOD method to six real-world UCI datasets. For each UCI dataset, following the data preprocessing strategy used in [33], the class with minimum number of samples is made `rare’ by removing most of its samples, and the remaining samples are used in the final dataset. Specifically, only 10, 20 and 30% of the samples in the class with minimal size is contained as outlier. The datasets used in experiments are described in Table 2.

The number of detected outliers is presented as the number of true outliers, except for Z-value method. To make a comprehensive comparison, three metrics called Precision, Recall and F 1 measure are adopted in the experiments to evaluate the performances of different methods. We defined Precision (P) as follows

where r 0 is the number of true outliers among r detected outlier by an algorithm. It measures the percentage of true outliers among top r ranked. Note that precision defined above in fact measures the proportion of detected outliers that are correctly identified.

Based on the confusion matrix in Table 3, the Recall (R) and F 1 measure can be computed as follows:

Since the number of detected outliers (#Positive) is fixed as r, the False Alarm Rate (False Positive Rate) in our experiment is 50%.

For each dataset, we select different proportion of outliers for experimental comparable analysis. For each fixed number of outliers, the outliers used in experiment are selected randomly and random selection is repeated 10 times. Finally, the average detection precision, recall and F 1 measure are reported in Tables 4, 5, 6, 7 and 8, in which the optimal result of each measure is presented in bold.

From the Tables 4, 5, 6, 7, and 8, we can clearly observe that, on Sonar and Ionosphere datasets, DGOD performs better than other methods under the three evaluation measures. However, on WDBC and Vowel datasets, DBSCAN performs better than other methods under the three evaluation measures, and the DGOD method is the second best result. For the most cases, DGOD method consistently yields a better performance. Most existing methods first find the neighbors for each sample based on k nearest neighbor, and then compute local density of a sample using the neighbors, where the significant differences between local densities give us more confidence to declare an outlier. However, these methods may not be able to obtain reliable outliers in real world application, due to existing highly heterogeneous neighborhoods of some samples. To handle heterogeneous problems, DGOD uses local density and discriminant distance of each sample to detect more reliable outliers. Since the DGOD method is based on pairwise distances and local densities, when the samples are densely distributed, the performance will be better.

4.3 Real datasets with planted outliers

To validate the effectiveness of DGOD method on high-dimensional data, we conduct an experiment on image data. Firstly, we select Yale face datasetFootnote 1 as normal image set, which contains 165 grayscale images of 15 individuals. Then, we add six cat face images as outliers. Each image is resized to 64 × 64 and can be viewed as a point in 4096 dimensional space.

The experiment aims to recognize cat faces in Yale human face dataset. Figure 10 shows the first six outlier images detected by different methods. Among them, DGOD recognizes five cat faces successfully, and Z-value recognizes only two cat faces, while other methods failed. Moreover, from Fig. 10, we can find that illumination, with/without glasses and expressions are main interfere factors for the detection task. The possible reasons could be as follows: (1) The original Yale face dataset has a clear cluster structure, and the DGOD method considered local density and discriminant distance simultaneously, which make the outliers easier to be detected. (2) The intra-class variations in Yale face dataset distorted the distance neighborhood relationships between images, which further influence the detection rates of baseline methods.

For the purpose of visualization, we employed multi-dimensional scaling [6] to embedding the original face images into two-dimensional space. The comparative results are shown in Fig. 11. The embedding six outliers are marked with red circles. For each method, the marked points with different colors in the plots are the samples with highest outlier score. DGOD and LPS successfully detected the true outliers. LOF and RBDA seem to behave in the similar way. As can be seen in Fig. 11, the detected outliers located in the region of top-left corner. The images located in this region are the faces with darkest illumination.

Table 9 shows the time costs of all methods in face image datasets with planted cat face images. As one can see, DGOD is more efficient than LRW, ABOF, DBSCAN and LPS, and computational comparable with Z-value, LOF and RBDA. Although DGOD and DBSCAN perform comparably under detection rate on some case, this observation experimentally demonstrates that the proposed DGOD method is more efficient than DBSCAN in terms of computational time.

4.4 Parameter sensitivity analysis

In this section, we analyze the performance of the proposed method by varying the cutoff distance d c and the percentage of outliers. Similar to experiments in section 4.3, 10 cat faces are added to Yale face data set successively. To analyze how DGOD method affected by distance metrics between samples, five distance metrics are used in experiment, i.e., Euclidean distance, Minkovski distance, Chebychev distance, cosine distance and spearman distance. Figure 12 shows the effect of types of distance metrics between samples. As can be seen, Euclidean, Minkovski and spearman distance metric in DGOD have better performance than others. Then we set different values of cutoff distance d c in a wide range, the performance of DGOD with different cutoff distance are shown in Fig. 13. According to rule of thumb, we set d c to 2406. It is observed that as the cutoff distance increases, the detection rate of DGOD method increases correspondingly, and when the cutoff distance is greater than 2000, the detection rate tends to stable. There is a wide range for cutoff distance setting. Additionally, from Fig. 14, we can see that, DGOD method is robust to number of outliers.

5 Conclusion

SBD are about daily large data, produced from social communication and news dissemination, and it has been an effective platform for security and privacy issues on both theoretical and applied techniques, especially information control and detection is the critical issue. In order to detected outlier in various complex datasets, we present an outlier detection method by incorporating the idea of density-based and clustering-based methods. The proposed DGOD method starts by computing distance matrix of samples, then the local density and discriminant distance of each sample is computed. Finally, outliers are identified relatively with low local density and high discriminant distance. Empirical results on synthetic and real world dataset have demonstrated the effectiveness of our method in terms of data shape and dimensionality.

6 Limitations and future works

Since DGOD exploits pairwise distances between all samples to get density information, its performance will be affected by the distance computation to some extent. In our future work, we will extend DGOD to kernel version by using kernel similarity function as distance measure between samples. In addition, we would apply the decision graph based outlier detection method to detect abnormal apple samples with diseases by hyperspectral imaging in agricultural product inspection. Moreover, how to embed the proposed information detection method into distributed database system to deal with big social data incrementally is still a challenging problem [2, 24].

References

Aggarwal CC (2013) Outlier analysis[M]. Springer Science & Business Media, New York

Bagui S, Nguyen LT (2015) Database Sharding: to provide fault tolerance and scalability of big data on the cloud. Int J Cloud Appl Comput 5(2):36–52

Barnett V, Lewis T (1994) Outliers in statistical data[M]. Wiley, New York

Bello-Orgaz G, Jung JJ, Camacho D (2016) Social big data: recent achievements and new challenges[J]. Inf Fusion 28(3):45–59

Breunig MM, Kriegel HP, Ng RT et al (2000) LOF: identifying density-based local outliers[C]. Proceedings of ACM sigmod record. ACM 29(2):93–104

Cox T, Cox M (1994) Multidimensional scaling. Chapman & Hall, London

Du H, Zhao S, Zhang D et al (2016) Novel clustering-based approach for local outlier detection[C]. Int Conf Comput Commun 2016:802–811

Dufrenois F (2015) A one-class kernel fisher criterion for outlier detection[J]. IEEE Transactions on Neural Networks and Learning Systems 26(5):982–994

Ester M, Kriegel HP, Sander J et al (1996) A density-based algorithm for discovering clusters in large spatial databases with noise[C]. Kdd 96(34):226–231

Gupta S, Gupta BB (2017) XSS-secure as a service for the platforms of online social network-based multimedia web applications in cloud [J]. Multimed Tools Appl. https://doi.org/10.1007/s11042-016-3735-1

Ha J, Seok S, Lee JS (2014) Robust outlier detection using the instability factor[J]. Knowl-Based Syst 63:15–23

Hamedani K, Liu L, Rachad A et al (2017) Reservoir computing meets smart grids: attack detection using delayed feedback networks[J]. IEEE Trans Ind Inf. https://doi.org/10.1109/TII.2017.2769106

Hawkins D (1980) Identification of outliers. Chapman and Hall, New York

Hido S, Tsuboi Y, Kashima H et al (2011) Statistical outlier detection using direct density ratio estimation[J]. Knowl Inf Syst 26(2):309–336

Huang H, Mehrotra K, Mohan CK (2013) Rank-based outlier detection[J]. J Stat Comput Simul 83(3):518–531

Jiang S, An Q (2008) Clustering-based outlier detection method[C]. In Proceedings of IEEE fifth international conference on fuzzy systems and knowledge discovery 2:429–433

Jin W, Tung AKH, Han J et al (2006) Ranking outliers using symmetric neighborhood relationship[M]. Advances in knowledge discovery and data mining. Springer, Berlin, pp 577–593

Johnson RA, Wichern DW (1992) Applied multivariate statistical analysis[M]. Prentice hall, Englewood Cliffs

Knox EM, Ng RT (1998) Algorithms for mining distancebased outliers in large datasets[C]. In Proceedings of the international conference on very large data bases pp 392–403

Kriegel H P, Zimek A (2008) Angle-based outlier detection in high-dimensional data[C]. In Proceedings of the 14th ACM SIGKDD international conference on knowledge discovery and data mining. ACM 444–452

Liu H, Li X, Li J et al (2017) Efficient outlier detection for high-dimensional data[J]. IEEE Trans Syst Man Cybern Syst. https://doi.org/10.1109/TSMC.2017.2718220

Maimon O, Rockach L (2005) Data mining and knowledge discovery handbook: a complete guide for practitioners and researchers. Springer, New York

Onderwater M (2010) Detecting unusual user profiles with outlier detection techniques. Master Thesis, http://tinyurl.com/vu-thesis-onderwater

Ouf S, Nasr M (2015) Cloud computing: the future of big data management. Int J Cloud Appl Comput 5(2):53–61

Papadimitriou S, Kitagawa H, Gibbons PB et al (2003) Loci: fast outlier detection using the local correlation integral[C]. In Proceedings of IEEE 19th international conference on data engineering pp 315–326

Ramaswamy S, Rastogi R, Shim K (2000) Efficient algorithms for mining outliers from large data sets. ACM SIGMOD Rec 29(2):427–438

Rehm F, Klawonn F, Kruse R (2007) A novel approach to noise clustering for outlier detection[J]. Soft Comput 11(5):489–494

Rodriguez A, Laio A (2014) Clustering by fast search and find of density peaks[J]. Science 344(6191):1492–1496

Roweis ST, Saul LK (2000) Nonlinear dimensionality reduction by locally linear embedding[J]. Science 290(5500):2323–2326

Schölkopf B, Platt JC, Shawe-Taylor J et al (2001) Estimating the support of a high-dimensional distribution[J]. Neural Comput 13(7):1443–1471

Shi Y, Zhang L (2011) COID: a cluster–outlier iterative detection approach to multi-dimensional data analysis[J]. Knowl Inf Syst 28(3):709–733

Tang J, Chen Z, Fu AWC et al (2002) Enhancing effectiveness of outlier detections for low density patterns[M]. Advances in knowledge discovery and data mining. Springer, Berlin, pp 535–548

Tax DMJ, Duin RPW (2004) Support vector data description[J]. Mach Learn 54(1):45–66

Wang X, Wang XL, Ma Y et al (2015) A fast MST-inspired kNN-based outlier detection method[J]. Inf Syst 48:89–112

Wang S, Wang D, Li C et al (2015) Comment on“ Clustering by fast search and find of density peaks”[J]. arXiv preprint arXiv:1501.04267

Wu J, Guo S, Li J et al (2016) Big data meet green challenges: big data toward green applications[J]. IEEE Syst J 10(3):888–900

Wu J, Guo S, Li J et al (2016) Big Data Meet Green Challenges: Greening Big Data[J]. IEEE Syst J 10(3):873–887

Zhang Z, Gupta BB (2017) Social media security and trustworthiness: overview and new direction[J]. Futur Gener Comput Syst. https://doi.org/10.1016/j.future.2016.10.007

Zhang K, Hutter M, Jin H (2009) A new local distance-based outlier detection approach for scattered real-world data[M]. Advances in Knowledge Discovery and Data Mining. Springer, Berlin, pp 813–822

Zhang J, Gao Q, Wang H et al (2011) Detecting anomalies from high-dimensional wireless network data streams: a case study[J]. Soft Comput 15(6):1195–1215

Zhang Z, Sun R, Zhao C et al (2017) CyVOD: a novel trinity multimedia social network scheme[J]. Multimed Tools Appl 76(18):18513–18529

Acknowledgements

The authors would like to thank all the anonymous reviewers and editors for their valuable comments and. Additionally, we would like to thank Bin Liu and Yaguang Jia who critically reviewed the study proposal. This work was supported in part by Yangling Demonstration Zone Science and Technology Planning Project under Grant 2016NY-31, Doctoral Starting up Foundation of Northwest A&F University under Grant 2452015302 and National Natural Science Foundation of China under Grants 61602388.

Author information

Authors and Affiliations

Corresponding authors

Rights and permissions

About this article

Cite this article

He, J., Xiong, N. An effective information detection method for social big data. Multimed Tools Appl 77, 11277–11305 (2018). https://doi.org/10.1007/s11042-017-5523-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-017-5523-y