Abstract

Taking photographs has become part of everyday life. However, the visual quality of a photograph is not always guaranteed due to various factors. One common factor is the low-light imaging condition, which conceals visual information and degrades the quality of a photograph. A low-light image enhancement model should ideally improve contrast, preserve details, and remain robust to noise. To this end, we propose a simple but effective enhancement model based on the simplified Retinex theory, of which the key is to estimate a good illumination map. In our model, we apply an iterative self-guided filter to refine the initial estimation of the illumination map, making it aware of the local structure of the image content. In experiments, we validate the effectiveness of our method in various aspects, and compare our model with several state-of-the-art ones. The results show that our method effectively adjusts the global image contrast, recovers the concealed details, and remains robust to noise.


1 Introduction

With the prevalence of advanced mobile devices, it is very convenient for people to take photographs almost anywhere at any time. Along with the boom of social media platforms, huge numbers of photographs are produced and shared each day, forming an important kind of big data [36]. However, the quality of these visual data is not ensured, as their generating source is quite open. On one hand, most people who take photographs are amateurs or know little about photographic technique, and they tend to choose sub-optimal photographing parameters [23, 28]. On the other hand, there are many challenging photographing conditions that lead to low photo quality, such as bad weather, moving objects, and low-light conditions [4]. Low-light images lower the visual quality for user experience and hinder content understanding in industrial applications.

Various image enhancement techniques are thus highly needed to recover image details or lift image quality. For example, rich user-generated content from social media can be exploited to optimize the scene composition [13, 23, 28]. In these learning-based methods, it is necessary to capture semantic information from a sufficient number of visually similar exemplars. Furthermore, to process an arbitrary new photograph, a large number of exemplars is needed on the server side [26]. In this context, the computation cost and the storage load make the support of cloud computing and high-speed transmission indispensable. Nevertheless, for some tasks, there is no need to process images on the cloud side. For example, to deal with challenging imaging conditions, the image manipulation has to be done at the pixel level, where efficient filtering on the mobile device is more feasible.

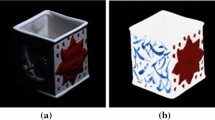

In this research, we aim to enhance low-light images. Specifically, we consider two low-light situations: nighttime and unbalanced light. In dark surroundings, the image histogram is concentrated in the low-intensity range, and most image details are therefore concealed by the low contrast (e.g. the first column in Fig. 1). In unbalanced-light situations, the low-light regions occupy only part of the image due to backlight or sidelight (e.g. the second and third columns in Fig. 1).

These situations pose challenges to the image enhancement task. First, while edge and texture patterns are being enhanced, the imaging noise hidden in the dark regions is likely to be amplified at the same time. Second, to deal with unbalanced-light conditions, an adaptive model is required to dynamically assign enhancing strengths to different regions. Third, the naturalness of the result image should be preserved, such as the basic scene characteristics and the visual consistency. In addition, computational efficiency is very important for applications on mobile devices. The traditional histogram-based [24] and Retinex-based [15, 16] methods often have difficulty meeting all these requirements simultaneously.

In this paper, we propose a Retinex-based low-light enhancement model, shown in Fig. 2. To address the aforementioned challenges, the key issue is to produce a high-quality illumination map T. Specifically, on one hand, to reveal the detailed texture pattern, T is required to be piecewise-smooth for the Retinex model. On the other hand, T is preferred to be structure-aware, since it would introduce visual inconsistency if multiple filtering strengths were assigned to the same semantic region. In our model, we initially estimate the illumination map T through the Max-RGB technique, and then apply a self-guided filter to refine T. The contribution of our paper is two-fold. First, the illumination map is refined for the Retinex model effectively and efficiently. Second, experimental results empirically show that our model achieves a good balance among the requirements of low-light image enhancement. Of note, this paper is an extension of a conference paper [4]. In this version, we provide more details in describing the research background, and introduce more related works. We also provide additional experimental results and their analysis to further validate the effectiveness of our model.

The rest of this paper is organized as follows. We briefly introduce the related works for processing low-light images in Section 2. Section 3 presents the proposed method. We validate our model in Section 4. Section 5 finally concludes the paper.

2 Related works

Image enhancement is usually a prerequisite step in many research fields, such as natural image classification/retrieval [35, 37, 38], medical image processing [8, 39], social media analysis [13, 31], and visual surveillance [21, 34]. The central task of enhancement can also be quite different, e.g., contrast enhancement [29], color enhancement [19], detail enhancement [9], and composition enhancement [23], to name but a few. In this paper, we briefly introduce the related works on low-light enhancement models.

Retinex-based models achieve promising results on enhancing low-light images. Their main idea is to decompose an image into an illumination map and a reflectance map [15, 16], where the reflectance can be treated as the enhanced result. However, this roadmap is limited, as the produced result is often over-enhanced. Guo et al. [7] propose a simplified enhancement model, LIME, which achieves good results. Nevertheless, the model always needs a gamma correction for non-linearly rescaling the refined illumination map. This additional post-processing stage lowers the model’s robustness. Our model is most closely related to the LIME model, but distinguishes itself mainly in its simplicity. By using a self-guided filter, we directly obtain an illumination map that can be used in the enhancing model without further rescaling.

As a classical problem in computer vision and graphics, intrinsic image decomposition can also be used in the enhancement task. Fu et al. [6] propose a weighted variational model for simultaneously estimating reflectance and illumination, and apply this model in manipulating the illumination map. The limitation of this method is that its computational complexity is relatively high, as it aims at simultaneously recovering two channels. Based on the HSV representation, Yue et al. [29] decompose the V channel into an illumination layer and a reflectance layer using the Split Bregman algorithm. After the illumination layer is adjusted through gamma correction and histogram equalization, all the layers and channels are gradually integrated to achieve the final result.

Differently, Dong et al. [3] propose an interesting technical roadmap. They consider the inverse of the estimated illumination map as a hazed image, and obtain enhancement through the dehazing model. Song et al. [25] extend this model and solve the block artifact issue. Although this model obtains satisfying results, its idea lacks a clear physical meaning to some extent.

As another popular method family, histogram based methods are highlighted for their simplicity of reshaping the image histogram into a desired distribution. Traditional methods [1, 17] tend to over- or under-enhance the target image, since they stretch the illumination range without considering image details. Recently, a 2-D histogram based on the layered difference representation is built and effectively applied in the low-light enhancing task [18].

There are also methods that combine different enhancing strategies. Fu et al. [5] propose a fusion-based method. After estimating the illumination layer, they generate multiple enhancing results and then fuse them with a multi-scale pyramid model, which combines the strengths of several enhancing models. Lim et al. [20] propose to split an image into structure, texture and noise components, and use a 2D-histogram-based scheme to enhance low-light images.

Of note, with the rapid development of deep learning models, it is possible to train a deep network to realize the low-light enhancement task. Lore et al. [22] propose a deep autoencoder-based approach to identify signal features from low-light images and adaptively brighten images without over-amplifying the lighter parts in an image.

3 Proposed method

In this section, we present our low-light enhancement model with a refined illumination map. The general framework is shown in Fig. 2. Given an input image, we initially estimate the illumination map with the max-RGB technique. Then we refine the initial estimation through a self-guided filtering process, which firstly removes the fine texture and then iteratively recovers the edge under a rolling guidance. Based on the refined map, the image is enhanced with a simplified Retinex model.

3.1 Simplified Retinex model

The Retinex model represents an observed low-light image \( \mathbf{I}\in {\mathbb{R}}^{{\mathrm{N}}_1\times {\mathrm{N}}_2} \) as the pixel-wise multiplication:

\[ \mathbf{I}=\mathbf{R}\circ \mathbf{T} \tag{1} \]

where \( \mathbf{R}\in {\mathbb{R}}^{{\mathrm{N}}_1\times {\mathrm{N}}_2} \) is the reflectance layer, \( \mathbf{T}\in {\mathbb{R}}^{{\mathrm{N}}_1\times {\mathrm{N}}_2} \) is the illumination map, and \( \circ \) denotes element-wise multiplication. This model assumes that the scene sensed by the human visual system is the product of R and T. Specifically, R is an image under an ideal light condition and is considered as the enhanced result, and T is the illumination map that controls how strongly the image intensity is lowered.

As the problem in Eq. 1 is ill-posed, methods based on full intrinsic decomposition [6] can be adopted. Nevertheless, this process is often time-consuming. In our research, we use a simplified model advocated in [7], which directly recovers R based on an element-wise division R = I/T.

We note that direct element-wise division can be numerically unstable for very low T values. Therefore, to enhance a color image, a constant regularization term ϵ is added to the enhancement model for each channel:

\[ {\mathbf{R}}^c(p)=\frac{{\mathbf{I}}^c(p)}{\mathbf{T}(p)+\epsilon } \tag{2} \]

where c is one channel of RGB, i.e. c ∈ {R, G, B}, and p represents a pixel of an image. This model is extremely simple and fast. To obtain satisfying results, the key is to estimate an appropriate illumination map.
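As an illustration of the regularized division in Eq. 2, the following minimal NumPy sketch divides each color channel by the illumination map plus ϵ. It assumes the form R^c(p) = I^c(p) / (T(p) + ϵ), images stored as floating-point arrays in [0, 1], and a final clipping step; the function name `enhance`, the array layout and the clipping are assumptions made for this sketch, not details given in the paper.

```python
import numpy as np

def enhance(image, illumination, eps=0.15):
    """Apply the simplified Retinex division channel by channel.

    image:        H x W x 3 float array in [0, 1] (assumed layout)
    illumination: H x W float array in [0, 1]
    eps:          constant regularization term (0.15 is the value
                  reported in the experiments section)
    """
    # Broadcast the single-channel map over the three color channels.
    t = illumination[..., np.newaxis]
    result = image / (t + eps)
    # Clipping to the displayable range is an assumption of this sketch.
    return np.clip(result, 0.0, 1.0)
```

With ϵ = 0.15, a dark gray pixel of value 0.1 under illumination 0.1 is mapped to 0.1 / 0.25 = 0.4, so the regularizer also caps the maximum gain at 1/ϵ.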

3.2 Illumination map refinement

To initially estimate each element T(p) of the illumination map, the Max-RGB technique is chosen:

\[ \mathbf{T}(p)=\underset{c\in \left\{R,G,B\right\}}{\max }{\mathbf{I}}^c(p) \tag{3} \]

In this equation, it is assumed that the illumination for each pixel is at least the maximum value among its three channels. Although there are other methods [6, 29] for accurately estimating the illumination map, we still choose the Max-RGB technique in our model for two reasons. First, the Max-RGB technique is extremely simple and fast. Second, the inaccurate estimation can be left for the following refinement stage [7], which produces a better T desired by the low-light enhancement task.
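The Max-RGB estimate amounts to a per-pixel maximum over the color channels. A minimal sketch, assuming an H x W x 3 floating-point array (the function name is hypothetical):

```python
import numpy as np

def max_rgb_illumination(image):
    """Initial illumination map: per-pixel maximum over the R, G, B channels.

    image: H x W x 3 float array; returns an H x W array.
    """
    return image.max(axis=2)
```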

From Eq. 3, we can see that T is estimated pixel by pixel, producing an illumination map that is quite similar to the original image in terms of its local structure and texture pattern. As a result, the texture patterns of a patch in I can be flattened by T during the division process in Eq. 2, which results in detail loss (such as the red rectangle of \( {\mathbf{R}}_0 \) in Fig. 3). There are some simple ways of refining the initial estimation, such as further applying a block-wise mean filter to T. It may seem that the original pattern can be preserved by the smoothed T. However, we note that artifacts are likely to be introduced at the same time, such as the edge reversal effect shown in the yellow rectangles of \( {\mathbf{R}}_1 \) and \( {\mathbf{R}}_2 \) in Fig. 3.
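The flattening effect can be checked with a small worked example. Assume the regularized division \( {\mathbf{R}}^c(p)={\mathbf{I}}^c(p)/\left(\mathbf{T}(p)+\epsilon \right) \) with ϵ = 0.15, and two hypothetical gray pixels of a texture with values 0.2 and 0.4. For a gray pixel all three channels are equal, so the unrefined Max-RGB map simply copies the pixel value, T(p) = I(p):

\[ \begin{aligned} \mathbf{R}(p_1)&=\frac{0.2}{0.2+0.15}\approx 0.571, \\ \mathbf{R}(p_2)&=\frac{0.4}{0.4+0.15}\approx 0.727, \\ \frac{\mathbf{I}(p_2)}{\mathbf{I}(p_1)}&=2.0\kern1em \mathrm{but}\kern1em \frac{\mathbf{R}(p_2)}{\mathbf{R}(p_1)}\approx 1.27. \end{aligned} \]

The contrast between the two texture pixels shrinks from 2.0 to about 1.27. If T were instead constant over the patch, the ratio 2.0 would be kept, which is why a piecewise-constant map is preferred.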

Therefore, we prefer the illumination map to be both piecewise-constant and structure-aware in our research. Specifically, the texture pattern in the same semantic regions in T should be removed, while the boundaries between different regions should be preserved. To this end, we refine the initially estimated T with an iterative self-guided filtering model [30], which includes the following two stages.

The first stage is the texture removal. We convolve T with a Gaussian kernel parameterized by a scale factor \( {\sigma}_s \):

\[ {\mathbf{T}}_g(p)=\frac{1}{K_p}\sum \limits_{q\in N(p)}\exp \left(-\frac{{\left|\left|p-q\right|\right|}^2}{2{\sigma}_s^2}\right)\mathbf{T}(q) \tag{4} \]

where ||p − q|| is the Euclidean distance between two pixels, \( {K}_p=\sum \limits_{q\in N(p)}\exp \left(-\frac{{\left|\left|p-q\right|\right|}^2}{2{\sigma}_s^2}\right) \) is the normalization factor, and N(p) is the neighborhood patch around p. The filtering process can be seen as a Gaussian-weighted average controlled by the spatial parameter \( {\sigma}_s \). In this way, the image details whose spatial scale is below \( {\sigma}_s \) are removed. However, as the boundaries of the main structures in T are blurred during the Gaussian filtering, we need a following stage that restores the edges with \( {\mathbf{T}}_g \) and T.
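The texture removal stage can be sketched as a normalized Gaussian-weighted average over a square neighborhood. The NumPy-only sketch below uses a separable kernel, which is equivalent to the 2-D Gaussian weight on a square patch. The patch radius of about 3σ and the edge-replicating border handling are assumptions of this sketch (the paper does not specify them), and the function names are hypothetical.

```python
import numpy as np

def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian weights; the 3*sigma radius is an assumption."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()

def gaussian_smooth(t, sigma):
    """Gaussian-weighted average of a 2-D map t (texture removal stage).

    Borders are handled by replicating edge pixels, an assumption made
    here instead of renormalizing over a truncated neighborhood.
    """
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(t, r, mode="edge")
    # Filter along rows, then along columns (separable 2-D Gaussian).
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)
```

A flat map is left unchanged, while fine texture such as a one-pixel checkerboard is strongly attenuated.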

The second stage is the iterative edge recovery. According to information theory, it is impossible to recover the blurred edges with \( {\mathbf{T}}_g \) alone. We therefore adopt the idea of joint filtering [2, 12], which leverages multiple image sources. Specifically, we anchor T as the input, and set the blurred \( {\mathbf{T}}_g \) as the initial guidance \( {\mathbf{G}}_0 \). By using the joint filter \( \mathcal{F} \), we obtain \( {\mathbf{G}}_1=\mathcal{F}\left(\mathbf{T},{\mathbf{G}}_0\right) \), which partially recovers the main edge structures (e.g. \( {\mathbf{G}}_1 \) in Fig. 3). We then use \( {\mathbf{G}}_1 \) as the updated guidance for the next round of filtering, \( {\mathbf{G}}_2=\mathcal{F}\left(\mathbf{T},{\mathbf{G}}_1\right) \). We empirically found that \( {\mathbf{G}}_k \) quickly converges to a piecewise-constant map after a few iterations (e.g. the second row in Fig. 3). In our research, we choose the fast guided filter [11] as our joint filter \( \mathcal{F} \) to ensure the efficiency of the whole refinement process. Of note, the iterative edge recovery strategy (illustrated within the dotted line in Fig. 2) differs from the traditional joint filtering framework, since it gradually refines the guidance G while keeping the input image T fixed [30]. Based on the refined illumination map, we can obtain the enhanced image as:

\[ {\mathbf{R}}^c(p)=\frac{{\mathbf{I}}^c(p)}{{\mathbf{G}}_k(p)+\epsilon } \tag{5} \]

where c is one channel of an RGB-based color image, and p represents a pixel. We provide a toy example to demonstrate the effectiveness of our iterative edge recovery in Fig. 3. From \( {\mathbf{G}}_0 \) to \( {\mathbf{G}}_3 \), we observe that the fine-scale texture is smoothed, and the region boundaries become clear. Meanwhile, we observe that the corresponding enhancing results \( {\mathbf{R}}_0 \) to \( {\mathbf{R}}_3 \) gradually improve, as the texture patterns are preserved and the halo effect disappears.
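The iterative edge recovery can be sketched as a loop that keeps the input T fixed and feeds each output back in as the next guidance. For brevity, the sketch below uses the plain guided filter of [12] built from box means rather than the fast (subsampled) variant [11] chosen in the paper. The window radius `r`, the guided-filter regularizer `reg`, the edge-replicating borders and all function names are assumptions of this sketch.

```python
import numpy as np

def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window via an integral image.

    The cost is independent of r; borders replicate edge pixels.
    """
    n = 2 * r + 1
    padded = np.pad(x, r, mode="edge")
    c = np.cumsum(np.cumsum(padded, axis=0), axis=1)
    c = np.pad(c, ((1, 0), (1, 0)), mode="constant")
    s = c[n:, n:] - c[:-n, n:] - c[n:, :-n] + c[:-n, :-n]
    return s / (n * n)

def guided_filter(guide, src, r, reg):
    """Plain guided filter: filter `src` using the structure of `guide`."""
    mean_g = box_mean(guide, r)
    mean_s = box_mean(src, r)
    var_g = box_mean(guide * guide, r) - mean_g ** 2
    cov = box_mean(guide * src, r) - mean_g * mean_s
    a = cov / (var_g + reg)          # local linear coefficient
    b = mean_s - a * mean_g          # local offset
    return box_mean(a, r) * guide + box_mean(b, r)

def refine_illumination(t, g0, r=4, reg=1e-3, iterations=3):
    """Self-guided refinement: G_{k+1} = F(T, G_k), with T kept fixed."""
    g = g0
    for _ in range(iterations):
        g = guided_filter(g, t, r, reg)
    return g
```

A possible call is `refine_illumination(t, box_mean(t, 4))`, where the box mean stands in for the Gaussian-blurred initial guidance. Only the guidance changes across iterations, which is the difference from a conventional joint filter that the text points out.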

The complexity of our model is analyzed as follows. Suppose N is the number of image pixels (\( N={\mathrm{N}}_1\times {\mathrm{N}}_2 \)). The Max-RGB step takes O(3N) time. The texture removal step can also be realized in O(N) time, regardless of its scale parameter \( {\sigma}_s \), with the box filtering technique. As for the iterative guided filtering with k iterations, it needs \( O\left(k\cdot N/{\sigma}_s\right) \) time by using the fast guided filtering technique [11]. Finally, Eq. 5 is applied to the RGB channels respectively, at a computational cost of O(3N). The overall complexity is therefore O(N), which is comparable to other state-of-the-art models.
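Summing the four steps makes the linear bound explicit. In the tally below, \( c_1 \) and \( c_2 \) are unspecified per-pixel constants of the box filter and of one guided-filter pass; they are introduced here only for illustration, and \( {\sigma}_s\ge 1 \) is assumed:

\[ \underset{\mathrm{Max\text{-}RGB}}{\underbrace{3N}}+\underset{\mathrm{texture}\ \mathrm{removal}}{\underbrace{c_1N}}+\underset{k\ \mathrm{guided}\ \mathrm{passes}}{\underbrace{c_2\frac{kN}{\sigma_s}}}+\underset{\mathrm{Eq}.\ 5}{\underbrace{3N}}\le \left(6+c_1+c_2k\right)N=O(N). \]

Since k is a small fixed number of iterations, the bound is linear in the number of pixels.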

4 Experiments and analysis

We conduct the experiments on a laptop with a 2.6 GHz CPU and 8 GB RAM. Our method was implemented with unoptimized code on the Matlab platform in a single thread. We use the experimental data from [7, 18] and the Internet, which cover different low-light conditions such as nighttime, backlight and sidelight.

We first validate the effectiveness of using the refined illumination map. In Fig. 4, we can see that the texture details are lost (e.g. Fig. 4 (g)) if the initial T (Fig. 4 (c)) is not refined. By contrast, our result (Fig. 4 (d)) preserves the details (e.g. Fig. 4 (i)), as the refined map (Fig. 4 (e)) becomes piecewise-smooth (e.g. Fig. 4 (j)). Then we compare different strategies of illumination map refinement in Fig. 5, where we respectively use LIME [7], RTV [27] and self-guided filtering for refining T. The simplified Retinex model in Eq. 2 was used for all three models, and the only difference lies in the refined maps. From visual comparison, we observe that our method achieves the best visual consistency. As shown in the zoomed-in region in Fig. 5, our result is more natural than those of the other two models, since our refinement process keeps referring to the homogeneous guidance image, and therefore becomes more structure-aware with respect to complex local patterns.

In the experimental comparison with other methods, the parameters of our method are set as follows. We empirically set the numerical stability parameter ϵ to 0.15, which works well for all the experimental images under different low-light conditions. As for the illumination map refinement, we empirically set the scale parameter \( {\sigma}_s \) to around 1/250 of the minimum of the image width and height. In experiments, we found that three iterations (k = 3) are enough.
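The reported settings can be collected in a small helper that derives the scale parameter from the image size. The function name and the dictionary keys below are hypothetical; only the three values come from the text.

```python
def default_parameters(width, height):
    """Empirical settings reported in the text for an image of the given size."""
    return {
        "eps": 0.15,                            # numerical stability term
        "sigma_s": min(width, height) / 250.0,  # scale of texture removal
        "iterations": 3,                        # self-guided filtering rounds k
    }
```

For a 1000 x 750 image this gives a scale parameter of 750 / 250 = 3 pixels.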

We first compare our method with several traditional image enhancing methods, namely Histogram Equalization (HE), Adaptive Histogram Equalization (AHE), and Gamma Correction (GC). In the implementation, we directly use the Matlab toolbox to realize HE and AHE. For GC, we empirically set \( {\gamma}_{GC}=0.8 \). We choose one nighttime, one backlight and one sidelight image respectively, and show all their results in Fig. 6. We have the following observations. First, HE and AHE produce over-enhanced results, and they are also sensitive to image noise. Second, the results of GC are more natural and more robust to noise. However, since GC only imposes a global non-linear mapping function on all the pixels, the enhancing effects are less apparent. Third, our results generally have a better global correcting effect, and recover the details from the dark regions while keeping the naturalness of the previously bright regions.
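For readers without the Matlab toolbox, the two global baselines can be approximated in a few lines of NumPy. This is a stand-in sketch, not the toolbox implementation used in the experiments: it assumes intensities in [0, 1], gamma correction of the form I^γ, and a plain 256-bin equalization of a single-channel image.

```python
import numpy as np

def gamma_correction(image, gamma=0.8):
    """Global power-law mapping; gamma < 1 brightens dark pixels."""
    return np.clip(image, 0.0, 1.0) ** gamma

def histogram_equalization(gray, bins=256):
    """Map each intensity to its cumulative frequency (global HE)."""
    hist, _ = np.histogram(gray, bins=bins, range=(0.0, 1.0))
    cdf = np.cumsum(hist).astype(np.float64)
    cdf /= cdf[-1]
    # Bin index of every pixel, with intensity 1.0 placed in the last bin.
    idx = np.minimum((gray * bins).astype(int), bins - 1)
    return cdf[idx]
```

Both mappings depend only on the pixel intensity and not on its position, which is consistent with the observation that such global methods cannot adapt to unbalanced light.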

We then compare our method with several state-of-the-art methods, i.e. LIME [7], Multi-scale Fusion (MF) [5], and Layered Difference Representation (LDR) [18]. In this comparison, we use the default parameter settings in [5, 7, 18]. Of note, we do not apply any post-denoising technique to the results, since it tends to generate an unrealistic cartoon effect. Fig. 7 shows the visual comparison between these state-of-the-art models and ours. We can see that our model achieves the most balanced effect in terms of contrast enhancement, detail recovery and naturalness preservation. For example, our method generates fewer artifacts, such as noise and edge halos in nighttime and backlight images, than LIME and MF. LDR is the most robust to these artifacts, but the global luminance of its results is relatively weak, which limits the detail recovery. As for the running time, our method is comparable to LIME and MF, and is able to process 500K pixels in less than one second. The histogram-based LDR achieves the fastest running time, as its computational load only lies in the optimization of a 256-dimensional histogram.

Finally, we make a further comparison between LIME and our model. Since the refined illumination map based on LIME needs a post-gamma-correction, we show multiple versions of LIME-based results with different values of γ (from 0.5 to 1) together with our results in Fig. 8. We observe that the global lightness of the LIME results heavily depends on the gamma correction parameter. With a large γ, the noise is over-boosted, which lowers both the image quality and the naturalness. By contrast, our model is free of this extra controlling parameter, as the overall intensity distribution of \( {\mathbf{G}}_k \) is always constrained by the self-guided framework.
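The sensitivity to γ can be illustrated numerically. The sketch below assumes that the post-gamma-correction rescales the illumination as T^γ before the division, which is our reading of the LIME pipeline rather than something stated in this paper; the function name is hypothetical.

```python
def gain_after_gamma(t, gamma, eps=0.0):
    """Per-pixel amplification 1 / (t**gamma + eps) for illumination t in (0, 1]."""
    return 1.0 / (t ** gamma + eps)
```

For a dark pixel with illumination 0.25, the gain is 2 at γ = 0.5 and 4 at γ = 1. Under this assumption, doubling γ doubles the amplification of whatever noise is present in that pixel, in line with the trend described above.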

5 Conclusion and discussion

In this paper, we propose a simple but effective low-light enhancement model, which uses a piecewise constant illumination map to recover the concealed image details. To produce such a map, we apply an iterative self-guided filter to partition texture patterns from the initial estimation. We have validated our method by comparing it with several traditional and state-of-the-art methods. Experimental results demonstrate that our method is effective in recovering image details while keeping the visual naturalness.

The future research includes the following aspects. First, we plan to introduce a dynamic regularization term into the model in Eq. 2. For example, a spatially guided map [10] based on the lightness can be built and used to guide the regularization. Second, we note that the way of quantitatively assessing low-light enhancing results is still an open problem. It would be valuable to introduce an aesthetics evaluation model [14] specifically designed for our task. Third, aiming at producing content-aware enhancing results, it would be very useful to introduce high-level semantic information [32, 33] into our model.

References

1. Arici T, Dikbas S, Altunbasak Y (2009) A histogram modification framework and its application for image contrast enhancement. IEEE Trans Image Process 18(9):1921–1935

2. Chen J, Paris S, Durand F (2007) Real-time edge-aware image processing with the bilateral grid. ACM Trans Graph 26(3):article 103

3. Dong X, Wang G, Pang Y (2011) Fast efficient algorithm for enhancement of low lighting video. In: Proceedings of International Conference on Multimedia & Expo (ICME)

4. Feng Z, Hao S (2017) Low-light image enhancement by refining illumination map with self-guided filtering. In: Proceedings of International Conference on Big Knowledge Workshop

5. Fu X, Zeng D, Huang Y, Liao Y, Ding X, Paisley J (2016) A fusion-based enhancing method for weakly illuminated images. Signal Process 129:82–96

6. Fu X, Zeng D, Huang Y, Zhang X, Ding X (2016) A weighted variational model for simultaneous reflectance and illumination estimation. In: Proceedings of Computer Vision and Pattern Recognition (CVPR)

7. Guo X, Li Y, Ling H (2017) LIME: low-light image enhancement via illumination map estimation. IEEE Trans Image Process 26(2):982–993

8. Hao S, Li G, Wang L, Meng Y, Shen D (2016) Learning based topological correction for infant cortical surfaces. In: Proceedings of Medical Image Computing and Computer Assisted Intervention (MICCAI)

9. Hao S, Pan D, Guo Y, Hong R, Wang M (2016) Image detail enhancement with spatially guided filters. Signal Process 120:789–796

10. Hao S, Guo Y, Hong R, Wang M (2016) Scale-aware spatially guided mapping. IEEE Multimedia 23(3):34–42

11. He K, Sun J (2015) Fast guided filter. ArXiv, abs/1505.00996

12. He K, Sun J, Tang X (2013) Guided image filtering. IEEE Trans Pattern Anal Mach Intell 35(6):1397–1409

13. Hong R, Zhang L, Tao D (2016) Unified photo enhancement by discovering aesthetic communities from Flickr. IEEE Trans Image Process 25(3):1124–1135

14. Hong R, Zhang L, Zhang C, Zimmermann R (2016) Flickr circles: aesthetic tendency discovery by multi-view regularized topic modeling. IEEE Trans Multimedia 18(8):1555–1567

15. Jobson J, Rahman U, Woodell A (1996) Properties and performance of a center/surround Retinex. IEEE Trans Image Process 6(3):451–462

16. Jobson J, Rahman U, Woodell A (1997) A multi-scale Retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans Image Process 6(7):965–976

17. Kim Y (1997) Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans Consum Electron 43(1):1–8

18. Lee C, Lee C, Kim C (2013) Contrast enhancement based on layered difference representation of 2D histograms. IEEE Trans Image Process 22(12):5372–5384

19. Lee JY, Sunkavalli K, Lin Z, Shen X, Kweon IS (2016) Automatic content-aware color and tone stylization. In: Proceedings of Computer Vision and Pattern Recognition (CVPR)

20. Lim J, Heo M, Lee C, Kim C (2017) Contrast enhancement of noisy low-light images based on structure-texture-noise decomposition. J Vis Commun Image Represent 45:107–121

21. Liu C, Gong S, Loy C (2014) On-the-fly feature importance mining for person re-identification. Pattern Recogn 47(4):1602–1615

22. Lore K, Akintayo A, Sarkar S (2017) LLNet: a deep autoencoder approach to natural low-light image enhancement. Pattern Recogn 61:650–662

23. Ni B, Xu M, Wang M, Yan S, Tian Q (2013) Learning to photograph: a compositional perspective. IEEE Trans Multimedia 15(5):1138–1151

24. Reza AM (2004) Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J VLSI Signal Process Syst 38(1):35–44

25. Song J, Zhang L, Shen P, Peng X, Zhu G (2016) Single low-light image enhancement using luminance map. In: Proceedings of Chinese Conference of Pattern Recognition (CCPR)

26. Wang S, Gu K, Ma S, Lin W, Liu X, Gao W (2016) Guided image contrast enhancement based on retrieved images in cloud. IEEE Trans Multimedia 18(2):219–232

27. Xu L, Yan Q, Xia Y, Jia J (2013) Structure extraction from texture via relative total variation. ACM Trans Graph 31(6):article 139

28. Yin W, Mei T, Chen C, Li S (2014) Socialized mobile photography: learning to photograph with social context via mobile devices. IEEE Trans Multimedia 16(1):184–200

29. Yue H, Yang J, Sun X, Wu F, Hou C (2017) Contrast enhancement based on intrinsic image decomposition. IEEE Trans Image Process 26(8):3981–3994

30. Zhang Q, Shen X, Xu L, Jia J (2014) Rolling guidance filter. In: Proceedings of European Conference on Computer Vision

31. Zhang L, Li X, Nie F, Yang Y, Xia Y (2016) Weakly supervised human fixations prediction. IEEE Trans Cybernetics 46(1):258–269

32. Zhang H, Shang X, Luan HB, Wang M, Chua TS (2016) Learning from collective intelligence: feature learning using social images and tags. ACM Trans Multimed Comput Commun Appl 13(1):article 1

33. Zhang H, Kyaw Z, Yu J, Chang SF (2017) PPR-FCN: weakly supervised visual relation detection via parallel pairwise R-FCN. In: Proceedings of International Conference on Computer Vision (ICCV)

34. Zhu Y, Lucey S (2015) Convolutional sparse coding for trajectory reconstruction. IEEE Trans Pattern Anal Mach Intell 37(3):529–540

35. Zhu X, Zhang L, Huang Z (2014) A sparse embedding and least variance encoding approach to hashing. IEEE Trans Image Process 23(9):3737–3750

36. Zhu W, Cui P, Wang Z, Hua G (2015) Multimedia big data computing. IEEE Multimedia 22(3):96–100

37. Zhu X, Li X, Zhang S (2016) Block-row sparse multiview multilabel learning for image classification. IEEE Trans Cybernetics 46(2):450–461

38. Zhu X, Li X, Zhang S (2017) Robust joint graph sparse coding for unsupervised spectral feature selection. IEEE Trans Neural Netw Learn Syst 28(6):1263–1275

39. Zhu Y, Zhu X, Kim M, Yan J, Wu G (2017) A tensor statistical model for quantifying dynamic functional connectivity. In: Proceedings of Information Processing of Medical Image (IPMI)

Acknowledgements

The authors sincerely appreciate the useful comments and suggestions from the anonymous reviewers. This work was supported by the National Natural Science Foundation of China under grant numbers 61772171 and 61702156.


Cite this article

Hao, S., Feng, Z. & Guo, Y. Low-light image enhancement with a refined illumination map. Multimed Tools Appl 77, 29639–29650 (2018). https://doi.org/10.1007/s11042-017-5448-5


DOI: https://doi.org/10.1007/s11042-017-5448-5