Abstract

In this paper, we present a robust person tracking method that the particle swarm optimization (PSO) algorithm is used as the tracking strategy. The method is divided into two procedures: object/background segmentation and tracking. For object/background segmentation, we use the temporal differencing to detect the regions of interest. For tracking, the PSO algorithm is used for overcome the robustness problem in the high noisy background and multiple moving persons and/or under occlusion. The particles in PSO represent the position, width and height of the search window, and the fitness function is calculated by the distance of the color feature vector and the histogram intersection. When occluded, we add the motion vector plus the previous position of the tracking model. The particles fly around the search region to obtain an optimal match of the target. The experiments show that the proposed method can track the single person, multiple people even when occluded, and is more efficient and accurate than the conventional particle filter method.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Recently, visual tracking has been a popular application in computer vision, for example, public area surveillance, home care, and robot vision, etc. The abilities to track and recognize moving objects are important. First, we must get the moving region called region of interest (ROI) from the image sequences. There are many methods to do this, such as temporal differencing, background subtraction, and change detection. The background subtract method is to build background model, subtract with incoming images, and then get the foreground objects. K. L. Chan et al.[1] presents a method for foreground detection via a two-step background subtraction. Jalal et al.[7] describes a simple and robust approach for background subtraction in Daubechies complex wavelet domain.

Many methods has been proposed for tracking, for instance, Hayashi et.al [5] use the mean shift algorithm which modeled by color feature and iterated to track the target until convergence. [2] In tracking applications, the task is a dynamic optimization problem which may be influenced by the object state and the time. present a robust human tracking by the particle swarm optimization (PSO) algorithm as a search strategy. [2], condensation algorithm [4], and particle filter [9]. The particle filter method has the excellent ability to track the multiple person in motion with non-linearities and non-Gaussian statistics suggests the potential to provide improved robustness over conventional methods, such as those based on the Kalman filter. But the method for multiple objects tracking by particle filter tends to fail when two or more players come close to each other or overlap. The reason is that the filters’ particles tend to move to regions of high posterior probability.

Then we propose the optimization algorithm for object tracking called particle swarm optimization (PSO) algorithm. PSO is a new population based on stochastic optimization technique, has received more and more attentions because of its considerable success in solving non-linear, multimodal optimization problems. [3, 6, 8, 10–13] implement a multiple head tracking searched by PSO. They use a head template as a target model and count the hair and skin color pixels inside the search window and find the best match representing the human face. Xiaoqin et.al [14] propose a sequential PSO by incorporating the temporal continuity information into the traditional PSO algorithm. And the parameters in PSO are changed adaptively according to the fitness values of particles and the predicted motion of the tracked object. But the method is only for single person tracking.

In addition, temporal differencing is a simple method to detect motion region, but the disadvantage is that if the motion is unobvious, it would get a fragment of the object. This will cause us to track failed. So, we incorporate PSO into our tracking.

The paper is organized as follows. Section 2 introduces human detection. In Section 3, a brief PSO algorithm and the proposed PSO-based tracking algorithm are presented. Section 4 shows the experiments. Section 5 is the conclusion.

2 Human detection and labeling

In this section, we present the method how to detect motion, segment and label each region by 8-connected components. Each moving person has its own label.

2.1 Motion detection

Due to the background may change when robot or camera move, we do temporal differencing to detect motion.

A threshold function is used to determine change. If f(t) is the intensity of frame at time t, then the difference between f(t) and f(t-1) can be presented as

A motion image M(t) can be extracted by a threshold as

If the difference is large than the threshold, it is marked as an active pixel.

The morphological binary operations in image processing, dilation and erosion, are used. Dilation is used to join the broken segments. Erosion is used to remove the noise such as the pixels caused by light changed or fluttering leaves. Dilation and erosion operations are expressed as (3) and (4), respectively.

Let A and B are two sets of 2-D space. \( \widehat{B} \) means the reflection of set B.

Dilation:

Erosion:

Then we separate our image into equal-size blocks, and count the active pixels in each block. If the sum of the active pixels is greater than the threshold (a percentage of block size*block size), the block is marked as an active block which means it is a part of the moving person. Then connect the blocks to form an integrated human by 8-connected components. Fig. 1 shows the result.

2.2 Region labeling

Because we assume to track multiple people, the motion detection may bring many regions. We must label each active block so as to do individual PSO tracking. The method we utilize is 8-connected components. From Fig. 2, each region has its own label indicating an individual.

3 PSO-based tracking

The PSO algorithm is first developed by Kennedy and Eberhart in 1995. The algorithm is inspired by the social behavior of bird flocking. In PSO, each solution is a bird of the flock and is referred to as a particle. At each iteration, the birds tried to reach the destination and influenced by the social behavior. It has been applied successfully to a wide variety of search and optimization problems. Also, a swarm of n individuals communicate either directly or indirectly with one another search directions.

3.1 PSO algorithm

The process is initialized with a group of particles (solutions),[x1,x2,…,xn].(N is the number of particles.) Each particle has corresponding fitness value evaluated by the object function. At each iteration, the ith particle moves according to the adaptable velocity which is of the previous best state found by that particle (for individual best), and of the best state found so far among the neighborhood particles (for global best).

The velocity and position of the particle at each iteration is updated based on the following equations: is Adaptable velocity, Let φ1 = φ1 = 2, rand is random −1 ~ 1

i is particle number P i (t) is optimal position arising from i to end particle number P g (t) is optimal position arising from i to end with all particle number φ1 、 φ2

where φ 1, φ 2 are learning rates governing the cognition and social components. They are positive random numbers drawn from a uniform distribution. And to allow particles to oscillate within bounds, the parameter Vmax is introduced:

3.2 Target model

The process is initialized with a group of particles (solutions),[x1,x2,…,xn].(N is the number of particles.) Each particle has corresponding fitness value evaluated by the object function. At each iteration, the ith particle moves according to the adaptable

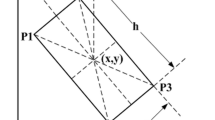

Our algorithm localized the people found in each frame using a rectangle. The motion is characterized by the particle xi = (x, y, weight, height, H, \( \overline{\mathrm{f}} \)) where (x, y) denotes the position of 2-D translation of the image, (weight, height) is the weight and height of the object search window, H is the histogram and \( \overline{\mathrm{f}} \) is the feature vector of the object search window. In the following, we introduce the appearance model.

The appearance of the target is modeled as color feature vector(proposed by Mohan S et al [13]) and gray-level histogram. The color space is the normalized color coordinates (NCC). Because the R and G values are sensitive to the illumination, we transform the RGB color space to the NCC. Here are the transform formulas:

Then the feature represented for color information is the mean value μ, of the 1-D histogram (normalized by the total pixels in the search window). The feature vector for the characterizing of the image is:

Which

K(r) is the Kernel function to give greater weight search area center weight value, and weight value decreases from the center outward.

The distance measurement is the

where D(m, t) is the Mahattan distance between the search window(target found representing by f) and the model (representing by m).

Also, the histogram which is segmented to 256 bins records the luminance of the search window. Then the intersection between the search window histogram and the target model can be calculated. The histogram intersection is defined as follows:

The fitness value of ith particle is calculated by

where ρ1 and ρ2 are the weights of the two criteria, that is the fitness value is a weighted combination.

Because similar colors in RGB color space may have different illumination in gray level, we combine the two properties to make decisions.

3.3 PSO target tracking

Here is the proposed PSO algorithm for multiple peoples tracking. Initially, when the first and two frames come, we do temporal differencing and region labeling to decide how many individual people in the frame, and then build new models for them indicating the targets we want to track. Then as new frame comes, we calculate how many people are in the frame. If the total of found people (represented by F) is greater than the total of the models (represented by M), we build a new model. If F < M, we find out that existing objects occluded or disappear. This situation we discuss in the next section. And if the F = M, we represent PSO tracking to find out where the position of each person exactly. Each person has its own PSO optimizer. In PSO tracking, the particles are initialized around the previous center position of the tracking model as a search space. Each particle represents a search window including the feature vector and the histogram and then finds the best match with the tracking model. This means the position of the model at present. The position of each model is updated every frame and motion vector is recorded as a basis of the trajectory. We utilize the PSO to estimate the position at present.

The flowchart of the PSO tracking process is showed in Fig. 3.

If the total of the targets found is less than the total of the models, we assume there is something occluded or disappeared. In this situation, we match the target list we found in this frame with the model list, determine which model is unseen. And if the position of the model in previous frame plus the motion vector recorded before is out of the boundaries, we assume the model has exited the frame, or the model is occluded. Then how to decide the occlusive model in this frame? We use motion vector information to estimate the position of this model in this frame. The short segmentation of the trajectory is considered as linear. Section 4 will show the experiment result.

4 Experimental results

The proposed algorithm is simulated by Borland C++ on Window XP with Pentium 4 CPU and 1G memory. The image size (resolution) of each frame is 320*240 (width*height) and the block size is 20*20 which is the most suitable size.

The block size has a great effect on the result. If the block size is set too small, then we will get many fragments. If the block size is set too large and the people walk too close, it will judge this as a target. The factor will influence our result and may cause tracking to fail. Figure 4a is the original image demonstrating two walking people. From Fig. 4b, we can see that a redundant segmentation came into being. Then Fig. 4d resulted only one segmentation.

The followings are the result of multiple people tracking by the proposed PSO based tracking. Figure 5 shows the two people tracking. They are localized by two different color rectangles to show their position (the order of the pictures is from left to right, top to down). And Fig. 6 shows the three people tracking without occlusion. From theses snapshots, we can see that our algorithm works on multiple people tracking.

The next experiment is the occlusion handled. The estimated positions of the occlusive people are localized by the model position recorded plus the motion vector. We use a two-person walking video Fig. 7a is the original image samples extracted from a two-people moving video. They passed by, and Fig. 8 is the tracking result.

We evaluate the performance of proposed method with the particle filter method [10]. We experiment to track different scenarios, respectively, for the number of particles, the mean absolute error, execution time, compare the results of PSO and the PF. We use 4 set of video testbench: Indoor 1 (Figs. 9 and 10), taking 35 test images, the number of particles were 20,50,100. Indoor 2 (Figs. 11 and 12), taking 70 test images, the number of particles were 20,50,100. Outdoor 1 (Fig. 13), take 100 test image, the number of particles were 20,50,100. Two people tracking in indoor (Fig. 14) environment, the number of particle were 50. Two people tracking in indoor environment under occlusion (Fig. 8), the number of particle 50. Tables 1 and 2 shows the experiment results.

By the analysis of the results on a two methods, the error from the center point of view, the three same scene, PSO tracking error of between about 3–7 pixels, and PF algorithms of error of about 6–12 pixels from tracking results, the yellow box to PSO tracking results of the first row in the result column PF red box represents the second track, the blue represents both detect the position of the block are subject to change, may be formed in the picture seen, PSO way better able to pinpoint the location of a moving object, although the PF may also correspond to the position of the moving object, but the error will be larger, it is possible to find, track the results of PSO significantly higher than PF to be accurate.

On efficiency the use of PSO processing speed of about 20 to 25 images per second, while the PF algorithm processing speed of about 15–20 frames per second, and when the number of particles for a long time, PSO can still maintain about 20 per second processing speed, but the PF algorithm execution time is noticeably more than about 15 frames per second. Thus seen, PSO algorithm in accuracy and execution performance than PF is more suitable for application in our problem.

The resulting performance evaluation reveals that the proposed method has better tracking precision and higher efficiency than the conventional tracking method (particle filter).

5 Conclusion

A PSO-based multiple persons tracking algorithm is proposed. This algorithm is developed for the application frameworks about the real-time video surveillance and robot vision. We use a robust object/background segmentation method to detect the moving person and then to track the motion in the subsequent images. For overcome the robustness problem in the high noisy background and multiple moving persons and/or under occlusion, we use PSO tracking as a search strategy to track the moving persons in the real-time video. The particles in PSO represent the position, width and height of the search window, and the fitness function is calculated by the distance of the color feature vector and the value of the histogram intersection. When occluded, we add the motion vector plus the previous position of the tracking model. The experiments show our algorithm works excellently, comparing with conventional particle filter method, whether is tracking precision or computational efficiency.

References

Chan KL (2015) Detection of foreground in dynamic scene via two-step background subtraction. Mach Vis Appl 26:723–740

Ching-Han C, Miao-Chun Y (2011) PSO-based multiple people tracking. In: Cherifi H, Zain J, El-Qawasmeh E (eds) Digital information and communication technology and its applications, vol 166. Springer, Berlin Heidelberg, pp 267–276

Cuevas EV, Zaldivar D, Rojas R (2005) Kalman filter for vision tracking. Freie Univ., Fachbereich Mathematik und Informatik, Technical Report

Djaghloul H, Batouche M, Jessel J-P (2010) Automatic PSO-based deformable structures markerless tracking in laparoscopic cholecystectomy. In: Graña Romay M, Corchado E, Garcia Sebastian MT (eds) Hybrid artificial intelligence systems, vol 6076. Springer Berlin, Heidelberg, pp 48–55

Hayashi Y, Fujiyoshi H (2008) Mean-shift-based color tracking in illuminance change. In: Visser U, Ribeiro F, Ohashi T, Dellaert F (eds) RoboCup 2007: robot soccer world cup XI, vol 5001. Springer Berlin, Heidelberg, pp 302–311

Hu M, Hu W, Tan T (2004) Tracking people through occlusions. ICPR’04 2:724–727

Jalal A, Singh V (2011) A robust background subtraction approach based on daubechies complex wavelet transform. In: Abraham A, Lloret Mauri J, Buford J, Suzuki J, Thampi S (eds) Advances in computing and communications, vol 191. Springer Berlin, Heidelberg, pp 516–524

Liu YWWZJ, and Liu XTP (2008) A novel particle filter based people tracking method through occlusion. Proceedings of the 11th Joint Conference on Information Sciences

Ornelas-Tellez F, Graff M, Sanchez E and Alanis A (2014) PSO optimal tracking control for state-dependent coefficient nonlinear systems. In: M. Jamshidi, V. Kreinovich, and J. Kacprzyk, (Eds.) Advance Trends in Soft Computing, vol. 312. Springer International Publishing, pp 403–410

Pan I, Das S (2013) Design of hybrid regrouping PSO–GA based sub-optimal networked control system with random packet losses. Memet Comput 5:141–153

Rymut B, Kwolek B (2014) Real-time multiview human body tracking using GPU-accelerated PSO. In: Wyrzykowski R, Dongarra J, Karczewski K, Waśniewski J (eds) Parallel processing and applied mathematics, vol 8384. Springer Berlin, Heidelberg, pp 458–468

Sulistijono IAaNK (2007) Evolutionary robot vision and particle swarm intelligence robot vision for multiple human tracking of a partner robot. CEC 2007: 1535–1541

Sulistijono IA and Kubota N (2007) Particle swarm intelligence robot vision for multiple human tracking of a partner robot. SICE Annual Conference 2007 pp 604–609

Sulistijono IA, Kubota N (2007) Human head tracking based on particle swarm optimization and genetic algorithm. J Adv Comput Intell Intell Inform 11(6):681–687

Acknowledgements

The authors are also grateful for the funding supported by the Ministry of Science and Technology of Taiwan (R.O.C.) under Grant No. NSC 103-2220-E-008 -003.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Chen, CH., Wang, CC. & Yan, MC. Robust tracking of multiple persons in real-time video. Multimed Tools Appl 75, 16683–16697 (2016). https://doi.org/10.1007/s11042-016-3890-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-016-3890-4