Abstract

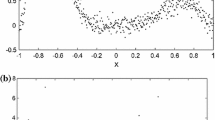

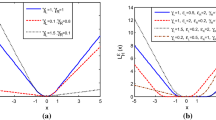

Support vector regression (SVR) has become a state-of-the-art machine learning method for data regression owing to its excellent generalization performance on many real-world problems. The standard SVR determines the regressor using a predefined epsilon tube around the data points: points lying outside the tube contribute to the error, whereas points inside it are simply ignored. To measure the data misfit in this way, the epsilon-insensitive function is introduced as the loss function. Compared with the popular quadratic loss function, it is robust but only continuous, not differentiable everywhere, which makes numerical minimization difficult. The Huber function, by contrast, combines a robust treatment of large errors with a quadratic treatment of small errors and is differentiable everywhere, and it has been used in the literature to measure the data misfit. In this study, we propose a novel robust Huber SVR (HSVR) formulation in the primal in which the regressor is made as flat as possible by taking the regularization term in the L1-norm. Since this regularization term is non-smooth, it is replaced with smooth approximation functions, and the resulting problem formulation is solved by a functional iterative method. Tests were performed on a few synthetic and real-world data sets, and the results confirm the suitability and effectiveness of the proposed robust model.
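The contrast between the loss functions named in the abstract can be made concrete with a minimal sketch. The abstract does not state the exact parameterization, so everything below is an assumption for illustration: the function names, the threshold `delta`, the tube width `eps`, the smoothing parameter `mu`, and the choice of `sqrt(x^2 + mu)` as a smooth stand-in for the absolute value in the L1-norm term. The paper's own smooth approximation functions may differ.

```python
import math


def epsilon_insensitive(r, eps=0.1):
    """Epsilon-insensitive loss: zero inside the tube, linear outside.

    Continuous, but not differentiable at |r| == eps.
    """
    return max(0.0, abs(r) - eps)


def huber(r, delta=1.0):
    """Huber loss (one common parameterization, assumed here):
    quadratic for |r| <= delta, linear beyond it."""
    a = abs(r)
    if a <= delta:
        return 0.5 * r * r
    return delta * (a - 0.5 * delta)


def huber_grad(r, delta=1.0):
    """Derivative of the Huber loss: the residual clipped to [-delta, delta].

    It is continuous everywhere, which is the smoothness property
    the abstract refers to.
    """
    return max(-delta, min(delta, r))


def smooth_abs(x, mu=1e-6):
    """A standard smooth approximation of |x| (illustrative choice only):
    differentiable at x == 0, and it approaches |x| as mu -> 0."""
    return math.sqrt(x * x + mu)


if __name__ == "__main__":
    # Compare the losses on a small and a large residual.
    for r in (0.05, 0.5, 3.0):
        print(r, epsilon_insensitive(r), huber(r), huber_grad(r))
    # Smoothed L1-norm of a hypothetical weight vector.
    w = [0.0, -2.0, 0.5]
    print(sum(smooth_abs(wi) for wi in w))
```

Under these assumptions, small residuals are penalized quadratically and large ones only linearly, so outliers pull on the fit with bounded gradient, while the smoothed absolute value lets gradient-based or iterative schemes handle the L1 regularizer.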

© 2020 Springer Nature Switzerland AG

About this paper

Cite this paper

Puthiyottil, A., Balasundaram, S., Meena, Y. (2020). L1-Norm Support Vector Regression in Primal Based on Huber Loss Function. In: Singh, P., Panigrahi, B., Suryadevara, N., Sharma, S., Singh, A. (eds) Proceedings of ICETIT 2019. Lecture Notes in Electrical Engineering, vol 605. Springer, Cham. https://doi.org/10.1007/978-3-030-30577-2_16

DOI: https://doi.org/10.1007/978-3-030-30577-2_16

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-30576-5

Online ISBN: 978-3-030-30577-2

eBook Packages: Intelligent Technologies and Robotics (R0)