Abstract

We argue that distinct conditionals—conditionals that are governed by different logics—are needed to formalize the rules of Truth Introduction and Truth Elimination. We show that revision theory, when enriched with the new conditionals, yields an attractive theory of truth. We go on to compare this theory with one recently proposed by Hartry Field.

1 Introduction

The conditionals we will be concerned with are those used in stating the rules for truth, Truth Introduction (TI) and Truth Elimination (TE):

- (TI): If A, then ‘A’ is true; and

- (TE): If ‘A’ is true, then A.

We will argue that the two conditionals here are different: they do not mean the same; they are not governed by the same logic.

In outline, our argument will be as follows. We will take it that (TI) and (TE) are the best formulations we have of the principal rules governing truth. Aristotle suggested these rules in the Categories; the medievals called them ‘Aristotle’s Rules’; and at least on this bit of logic no one has improved on Aristotle. We will compare these rules to the inferential behavior of the truth predicate. Whatever readings of the conditionals in (TI) and (TE) allow us to make the best sense of this behavior, those are the right readings for these conditionals. We will argue that the readings that make the best sense of the inferential behavior of truth assign different meanings, and different logics, to the conditionals in (TI) and (TE).

Consider some simple examples of arguments involving ‘true’:

- (A1): Snow is white; therefore, ‘snow is white’ is true.

- (A2): Grass is white; therefore, ‘snow is white’ is true.

- (A3): Suppose everything Fred says is true. Then, everything Fred says is true. So: if everything Fred says is true then everything Fred says is true.

- (A4): Snow is white; therefore, if everything Fred says is true then ‘everything Fred says is true and snow is white’ is true.

- (A5): Grass is not white; therefore, if ‘grass is white or everything Fred says is true’ is true then everything Fred says is true.

Arguments (A1) and (A3) are plainly valid, and (A2) invalid. Furthermore, there are readings of (A4) and (A5) on which they are valid.Footnote 1 Consider next some arguments in which paradoxical self-reference is in play:

- (A6): Given: l = ‘l is not true’. If l is true, then l is not true. So, if l is true, then l is true and also l is not true. We conclude, l is not true.

- (A7): Given: b = ‘if b is true then God exists’. Suppose, b is true. So, if b is true, then God exists. Hence, God exists. We can conclude, therefore, that if b is true then God exists. Hence, b must be true. So, God exists.

Argument (A6) is one-half of the Liar argument, and it is highly perplexing. Its status is unclear, and different logicians have pronounced differently on its validity. Argument (A7) is Curry’s Paradox and it is plainly invalid, though it is debatable where precisely the argument goes wrong. Consider, finally, some arguments containing other sorts of self-reference:

- (A8): Given: t = ‘t is true’. Suppose t is true. Then, ‘t is true’ is true. So: if t is true then ‘t is true’ is true.

- (A9): Given: t = ‘t is true’ and l = ‘l is not true’. Suppose l is not true. Suppose further that l is true. We have a contradiction, and we may conclude t is true. So: if l is not true then t is true if l is true.

- (A10): Given: t = ‘t is true’. Suppose t is true. Then, snow is black.

Arguments (A8) and (A9) do not bring into play the rules for truth, and they appear to be valid. Argument (A10), on the other hand, seems to be invalid: the transition from the Truth-Teller, ‘t is true’, to ‘snow is black’ appears to be unwarranted. The rules of logic, even when supplemented with (TI) and (TE), do not enable us to derive ‘snow is black’ from the Truth-Teller. Simple examples like these can, of course, be multiplied indefinitely. Moreover, less simple (and thus more interesting) examples can also be given.

There is, then, a universe of arguments involving ‘true’. Some of the arguments in this universe are plainly valid; some are plainly invalid; and the status of some others is uncertain and debatable. We want to better understand the logic of these arguments. We want a systematic account that separates valid arguments from invalid ones, and we want an explanation why the valid arguments are valid, and the invalid ones invalid. We have here a problem of logic that is fully classical in form: a problem of understanding validity.

The problem as stated is much too hard, however. To solve it, we would need to solve all the other problems of logic (e.g., those of vagueness); for the validity of an argument containing ‘true’ depends sometimes not only on the rules for truth but also on the behavior of the other elements in it (e.g., vague terms). Let us assume, therefore, that apart from truth and the conditionals, nothing else is problematic in the language. Let us go further and set down the following desideratum on a logic of truth:

- Descriptive Adequacy: Let \(\mathcal {L}\) (the “ground” language) be a classical first-order language with the usual logical resources. For definiteness, let us take identity (=), negation (¬), conjunction (&), and the universal quantifier (∀) as primitives; and let us take disjunction (∨), material conditional (⊃), material equivalence (≡), the existential quantifier (∃), and the constants The True (⊤) and The False (⊥) to be defined in any one of several familiar ways. Let us assume, for simplicity, that \(\mathcal {L}\) has no function symbols.Footnote 2 Let \(\mathcal {L}\) be expanded to \(\mathcal {L}^{+}\) through the addition of a truth predicate T (“true in \(\mathcal {L}^{+}\)”) and possibly one or more conditionals. We assume that \(\mathcal {L}\) has canonical names for the sentences of \(\mathcal {L}^{+}\) (these names may be formed using quotation marks). Then: We want an account of truth and of the conditionals that (i) separates valid arguments of \(\mathcal {L}^{+}\) from invalid ones in a satisfactory way; (ii) provides explanations of why the valid arguments are valid, and invalid ones are invalid; and (iii) sees the logical behavior of T as issuing from (TI) and (TE).

Descriptive Adequacy highlights classical ground languages, but there is nothing special about these languages except their familiarity. The perplexing inferential behavior of truth arises in other logical contexts also: in three-valued, four-valued, infinite-valued, intuitionistic, and relevance logics. Furthermore, concepts other than truth (e.g., “exemplification”) exhibit very similar behavior. This motivates a further desideratum:

- Generality Requirement: The account of truth and conditionals should be generalizable to non-classical logics (such as many-valued, intuitionistic, and relevance logics) and to other concepts (such as “exemplification” and “satisfaction”).

These are the principal desiderata we aim to respect as we work out a logic of truth and conditionals.Footnote 3 We will argue that an enriched version of revision theory is well placed to meet the desiderata. Revision theory provides general schemes for making sense of circular and interdependent definitions. These schemes do not presuppose a specific logic; hence, revision theory is well placed to meet the Generality Requirement. Furthermore, the schemes yield specific theories of truth once we take truth to be a circular concept governed by Aristotle’s Rules.Footnote 4 The resulting theories of truth are attractive, but they suffer from a serious lacuna. They lack conditionals suitable for expressing (TI) and (TE). An effect of this expressive shortcoming is that these theories are unable to account for a large class of arguments. For example, they are unable to provide readings under which (A4) and (A5) are valid. Our aims in this essay are (i) to show how the requisite conditionals can be added to revision theory and (ii) to make a case that the resulting theory meets the requirement of Descriptive Adequacy. It will turn out that the conditionals needed to express (TI) and (TE) differ from one another in their logic and semantics. It will turn out also that the logical behavior of the conditionals is quite extraordinary.

We begin by showing that the conditionals in (TI) and (TE) are not ordinary conditionals. Then we will present an extended revision theory capable of expressing (TI) and (TE), and we will compare this theory with another which also deems these conditionals to be extraordinary.

2 Ordinary Conditionals

We argue that the conditionals in Aristotle’s Rules for truth are not ordinary conditionals. We begin by showing that, in \(\mathcal {L}^{+}\), these conditionals should not be read as the classical material conditional. We will then argue that a shift to a non-classical material conditional does not provide a satisfactory reading, either. This will put us in a position to conclude that the conditionals in Aristotle’s Rules are not ordinary conditionals, in the sense made precise below.

2.1 Classical Material Conditional

If the conditionals in the rules for truth are interpreted as the classical material conditional, then the rules read thus:

- (TI): A ⊃ T‘A’; and

- (TE): T‘A’ ⊃ A.

Under this reading, the rules for truth are inconsistent if a liar sentence is expressible in the language.Footnote 5 This consequence has been embraced by some philosophers, most notably by Charles Chihara. Chihara, following a suggestion of Tarski, defends the above reading of the rules of truth and the resulting idea that truth is an inconsistent concept.Footnote 6

Chihara’s inconsistency view has the merit that it can easily meet the Generality Requirement: it is easy enough to find readings of the conditionals under which the rules for truth are inconsistent in expressively rich non-classical languages. However, as Chihara in effect recognizes, the proposal does poorly on Descriptive Adequacy. The proposal declares arguments valid that are plainly invalid. If the premisses of an argument merely imply the existence of a liar sentence then, according to the inconsistency view, the argument is bound to be valid, irrespective of the argument’s conclusion. More specifically, the inconsistency view declares argument (A7) to be valid when, intuitively, it is invalid. Chihara responds to the problem by suggesting that in working with truth, as in working with any inconsistent theory, one should not blindly follow where validity leads. One should restrict oneself to using only what Chihara calls “reasonable inferences.” Truth, he says, is inconsistent, but this fact does not preclude it from being useful.

However, without a satisfactory demarcation of inferences that are “reasonable,” Chihara’s proposal is no solution to our problem; it provides no illumination of the logic of truth. What advice, for instance, can Chihara offer the ordinary user of ‘true’? That in working with ‘true’ the user should take care to appeal only to “reasonable inferences”? This is not helpful advice unless the theorist is prepared to spell out “reasonable inference”—a task that is much harder than that of providing a logic of truth. Chihara is reducing a hard problem to a virtually impossible one. Neither Chihara nor any other inconsistency theorist we know has provided a satisfactory account of “reasonable inference” or of how we should reason with the concept of truth.Footnote 7

2.2 Non-Classical Ordinary Conditionals

It is an ancient idea that paradoxical sentences are neither true nor false, and lovely contemporary theories of truth have been built on it. Saul Kripke and, independently, Robert L. Martin and Peter Woodruff proved that if the truth predicate is allowed to be partial then attractive fixed-point interpretations can be found for it. More precisely, let M (the “ground” model) be the classical interpretation associated with \(\mathcal {L}\). Set M=〈D,I〉, where D is the domain and I is the interpretation function of M. Now, suppose we allow the truth predicate T of \(\mathcal {L}^{+}\) to be assigned partial interpretations 〈U,V〉, where U∩V = ∅ and U and V are subsets of D. Here U represents the extension, and V the anti-extension, of the predicate. Let M+〈U,V〉 be the model just like M except that it assigns to T the interpretation 〈U,V〉. The truth values of sentences can now be calculated using one of several three-valued semantic schemes. Let us fix on the Strong Kleene scheme, κ, and define the operation \(\kappa _{M}\) as follows:Footnote 8 \(\kappa _{M}(\langle U,V\rangle ) = \langle U',V'\rangle \), where

Observe that if 〈U,V〉 is a fixed point of \(\kappa _{M}\) (that is, if \(\kappa _{M}(\langle U,V\rangle ) = \langle U,V\rangle \)), then, for every sentence A of \(\mathcal {L}^{+}\):

- T‘A’ is true in M+〈U,V〉 iff A is true in M+〈U,V〉; and
- T‘A’ is false in M+〈U,V〉 iff A is false in M+〈U,V〉.

That is, if T is assigned a fixed-point interpretation 〈U,V〉, then T agrees perfectly with what turns out to be true, and what false, in the resulting language (i.e., in the language with the interpretation M+〈U,V〉). Kripke showed that \(\kappa _{M}\) has fixed points.Footnote 9 Furthermore, he showed that \(\kappa _{M}\) has a least fixed point, and that this fixed point captures important intuitive properties of the truth predicate.
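To make the construction concrete, here is a minimal sketch of the least-fixed-point iteration for the Strong Kleene jump. It is a toy under stated assumptions: the domain is replaced by a small, hypothetical stock of named sentences (a liar `l`, a truth-teller `t`, and a few grounded sentences), sentences are encoded by us as nested tuples, and 〈U,V〉 is represented by two sets of names. Starting from 〈∅,∅〉 and applying the jump until nothing changes yields the least fixed point, since the jump is monotone and the stock of sentences is finite.

```python
from typing import Dict, FrozenSet, Optional, Tuple

# Hypothetical encoding: ("atom", bool) is a ground sentence with a fixed value,
# ("T", name) says the sentence called `name` is true, plus "not"/"and"/"or".
Sentence = Tuple
Partial = Tuple[FrozenSet[str], FrozenSet[str]]  # (extension U, anti-extension V)

NAMES: Dict[str, Sentence] = {
    "s": ("atom", True),        # 'snow is white'
    "f": ("atom", False),       # 'grass is white'
    "g": ("T", "s"),            # "'snow is white' is true"
    "n": ("not", ("T", "f")),   # "'grass is white' is not true"
    "l": ("not", ("T", "l")),   # the Liar
    "t": ("T", "t"),            # the Truth-Teller
}


def sk_value(s: Sentence, interp: Partial) -> Optional[bool]:
    """Strong Kleene value of s in M + <U, V>: True, False, or None (neither)."""
    U, V = interp
    tag = s[0]
    if tag == "atom":
        return s[1]
    if tag == "T":
        if s[1] in U:
            return True
        return False if s[1] in V else None
    if tag == "not":
        v = sk_value(s[1], interp)
        return None if v is None else not v
    if tag not in ("and", "or"):
        raise ValueError(f"unknown connective {tag!r}")
    left, right = sk_value(s[1], interp), sk_value(s[2], interp)
    if tag == "and":
        if left is False or right is False:
            return False
        return True if (left is True and right is True) else None
    if left is True or right is True:  # tag == "or"
        return True
    return False if (left is False and right is False) else None


def jump(interp: Partial) -> Partial:
    """kappa_M: collect the sentences that come out true, and those that come out false."""
    U = frozenset(n for n, s in NAMES.items() if sk_value(s, interp) is True)
    V = frozenset(n for n, s in NAMES.items() if sk_value(s, interp) is False)
    return U, V


def least_fixed_point() -> Partial:
    """Iterate kappa_M from <{}, {}>; monotonicity and finiteness make this stop."""
    interp: Partial = (frozenset(), frozenset())
    while True:
        nxt = jump(interp)
        if nxt == interp:
            return interp
        interp = nxt


U, V = least_fixed_point()
print(sorted(U), sorted(V))
```

This prints `['g', 'n', 's'] ['f']`: the liar and the truth-teller land in neither U nor V. In the same fixed point a material conditional of the form T t ⊃ T t (encoded as `("or", ("not", ("T", "t")), ("T", "t"))`) also receives no classical value, which is the kind of gap behind the point about (A3) that follows.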

The fixed-point theories of truth made available by the work of Kripke, Martin, and Woodruff are lovely, but they are not the final word on our problem. First, while the inconsistency view declares too many inferences to be valid, fixed-point theories declare too few of them to be valid. Thus, under the Strong Kleene scheme, a simple argument such as (A3) is invalid.Footnote 10

- (A3): Suppose everything Fred says is true. Then, everything Fred says is true. So: if everything Fred says is true then everything Fred says is true.Footnote 11

Second, the rules for truth cannot be affirmed in the fixed-point languages. Under the conditionals definable in these languages, some instances of (TI) and (TE) turn out not to be true.Footnote 12 To be sure, conditionals are available within three-valued logics which, if present, would render (TI) and (TE) true. The problem is that the addition of these conditionals destroys the fixed-point property. For example, if we add to the Strong Kleene language a conditional, ⇝, that has the semantics,

and that agrees with the material conditional on classical values, then we can preserve (TI) and (TE), but we lose the fixed-point property.Footnote 13

Third, and this point is related to the previous one, fixed points exist only for expressively weak languages.Footnote 14 The problem before us, however, is that of understanding the behavior of truth in expressively rich languages. The fixed-point approach cannot be extended to these languages, and hence it fails to meet the Generality Requirement.

The above considerations motivate a general constraint on the reading of the conditionals in (TI) and (TE). Let ⇒ be an arbitrary conditional, and let the corresponding biconditional be ⇔:

Let us say that ⇒ is ordinary iff an enrichment with a negation connective, ¬, is possible that renders the following a logical law:

(By this definition, a wide range of conditionals count as ordinary, including intuitionistic, relevance, and the Strong Kleene conditionals.) Now, the constraint on the reading of the conditionals in (TI) and (TE) is this: these conditionals cannot be read as one and the same ordinary conditional, ⇒.Footnote 15 For, if we do so, then expressive richness forces the existence of a Liar sentence ¬T l for which we should affirm

But, in light of the above logical law, we should also affirm

We are thus landed in an inconsistency. Hence, if we wish to embrace expressive richness and at the same time avoid inconsistency, as we have argued we should, then there is no choice but to interpret the conditionals in (TI) and (TE) as something other than ordinary conditionals.

3 Step Conditionals

We now introduce two new conditionals within the framework of the revision theory of definitions and truth. As we noted above, revision theory provides general schemes for making sense of circular and interdependent definitions. These schemes yield, in turn, specific theories of truth if we take truth to be a circular concept, with Aristotle’s Rules as its partial definition. Let us review some basic facts about the revision theory of definitions.Footnote 16 This will put us in a position to introduce the new conditionals and to reflect on the resulting theories of truth. Readers not wanting to work through technical details will find an informal summary account of the conditionals in Section 3.4 below.

3.1 Revision Theory of Definitions

Let \(\mathcal {L}^{-}\) be a classical first-order language with interpretation M (=〈D,I〉) that is extended to a language \(\mathcal {L}\) through the addition of a new one-place predicate G. Let G be governed by the following definition,

- (\(\mathcal {D}\)): \(Gx =_{Df} A(x,G)\),

where A(x,G) may contain occurrences of G but may contain no free occurrences of any variable other than x.Footnote 17 In revision theory, circular predicates are understood in terms of revision rules, rules that revise what we shall call hypotheses. We need a few preliminary concepts before we can define this notion.Footnote 18

Let F be the set of formulas of \(\mathcal {L}\) that contain no names, and let V be the set of assignments v of values to the variables (relative to M). Let us say that a pair 〈A,v〉 consisting of a formula and an assignment is similar to a pair 〈B,v′〉 iff both A and B have the same number, n, of free occurrences of variables and there is a formula \(C(z_{1},\dots ,z_{n})\) with precisely n distinct free variables \(z_{1},\dots ,z_{n}\) and

- (i) for some variables \(x_{1},\dots ,x_{n}\), A is an alphabetic variant of \(C(x_{1},\dots ,x_{n})\);Footnote 19
- (ii) for some variables \(y_{1},\dots ,y_{n}\), B is an alphabetic variant of \(C(y_{1},\dots ,y_{n})\); and
- (iii) for all i, \(1\leq i\leq n\), \(v(x_{i}) = v'(y_{i})\).

Then, a hypothesis h is a subset of F×V that satisfies the following condition: if 〈A,v〉 is similar to 〈B,v′〉, then 〈A,v〉∈h iff 〈B,v′〉∈h.

Let C (= \(C(x_{1},\dots ,x_{n})\)) be a formula of \(\mathcal {L}\) with exactly n free variables \(x_{1},\dots ,x_{n}\), and let v be an assignment of values to variables. We say that 〈C,v〉 falls under h relative to M (notation: \(\langle C,v\rangle \in _{M} h\)) iff there is an assignment v′, a sequence (possibly empty) of distinct names \(\langle a_{1},\dots ,a_{m}\rangle \), and a formula C′ (= \(C'(x_{1},\dots ,x_{n},y_{1},\dots ,y_{m})\)) that has precisely n + m free variables, \(x_{1},\dots ,x_{n},y_{1},\dots ,y_{m}\), and that satisfies the following conditions:

- (i) \(C = C'(x_{1},\dots ,x_{n},a_{1},\dots ,a_{m})\);
- (ii) for all i, \(1\leq i\leq n\), \(v'(x_{i}) = v(x_{i})\);
- (iii) for all i, \(1\leq i\leq m\), \(v'(y_{i}) = I(a_{i})\); and
- (iv) \(\langle C',v'\rangle \in h\).

If C(x) is a formula with exactly one free variable, then let the extension assigned to C(x) by hypothesis h (notation: h(C(x))) be the set of those objects d∈D such that \(\langle C(x),v\rangle \in _{M} h\) for some assignment v with v(x) = d.

Set M + h to be an interpretation of \(\mathcal {L}\) that is just like M except that the predicate G is assigned h(A(x,G)). Now, the revision rule, \(\delta _{\mathcal {D},M}\), is an operation on hypotheses that satisfies the following condition: for all formulas B without occurrences of names and all assignments v,

\(\langle B,v\rangle \in \delta _{\mathcal {D},M}(h)\) iff v satisfies B in M + h.

The revision rule provides the key semantical information about the circular predicate G. Intuitively, the rule may be understood thus: the result of applying \(\delta _{\mathcal {D},M}\) to a hypothesis h (i.e., \(\delta _{\mathcal {D},M}(h)\)) provides a better, or at least an equally good, interpretation of G than the hypothesis h does. So, if we begin with an arbitrary hypothesis, we may attempt to improve it through repeated applications, possibly transfinite, of \(\delta _{\mathcal {D},M}\). The result is a revision sequence, which we will define after some preliminary definitions.

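Let On be the class of all ordinals. Let \(\mathcal {S}\) be an On-long sequence of hypotheses, and let \(\mathcal {S}_{\beta }\) be the β-th member of \(\mathcal {S}\). If α is a limit ordinal, then we say that 〈B,v〉 is stably in [stably out of] \(\mathcal {S}\) at α iff there is β < α such that, for all γ with β ≤ γ < α, \(\langle B,v\rangle \in \mathcal {S}_{\gamma }\) [\(\langle B,v\rangle \notin \mathcal {S}_{\gamma }\)].

Similarly, we say that 〈B,v〉 is stably in [stably out of] \(\mathcal {S}\) iff there is an ordinal α such that, for all β ≥ α, \(\langle B,v\rangle \in \mathcal {S}_{\beta }\) [\(\langle B,v\rangle \notin \mathcal {S}_{\beta }\)].

Observe the following facts about arbitrary On-long sequences \(\mathcal {S}\).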

- (i) \(\mathcal {S}\) is bound to contain a cofinal hypothesis: a hypothesis h that occurs over and over again in \(\mathcal {S}\); that is, \(\forall \alpha \exists \beta \geq \alpha (h = \mathcal {S}_{\beta })\).
- (ii) Reflection ordinals are bound to exist for \(\mathcal {S}\). That is, ordinals α exist at which stability coincides with stability in \(\mathcal {S}\) as a whole. More precisely, at α, a pair 〈B,v〉 is stably in [stably out of] \(\mathcal {S}\) at α iff 〈B,v〉 is stably in [stably out of] \(\mathcal {S}\).

We say that a hypothesis h coheres with a sequence \(\mathcal {S}\) at a limit ordinal α iff (i) if a pair 〈B,v〉 is stably in \(\mathcal {S}\) at α then 〈B,v〉∈h, and (ii) if a pair 〈B,v〉 is stably out of \(\mathcal {S}\) at α then 〈B,v〉∉h. Finally, we say \(\mathcal {S}\) is a revision sequence for \( \delta _{\mathcal {D},M}\) iff, for all ordinals α and β,

- (i) if α = β+1 then \(\mathcal {S}_{\alpha } = \delta _{\mathcal {D},M}(\mathcal {S}_{\beta })\); and
- (ii) if α is limit then \(\mathcal {S}_{\alpha }\) coheres with \(\mathcal {S}\) at α.

It is easy to show that all hypotheses h generate revision sequences; that is, there is at least one revision sequence \(\mathcal {S}\) such that \(\mathcal {S}_{0} = h\).

A hypothesis h is said to be recurring for a revision rule \(\delta _{\mathcal {D},M}\) iff h is cofinal in a revision sequence for \(\delta _{\mathcal {D},M}\). Recurring hypotheses are the survivors of the revision process, and they can be used to endow circular predicates with a logic and semantics. Gupta and Belnap provide two broad ways of doing so. Here is one way, which yields their system \(\mathbf {S}^{\#}\):

- (i) A sentence A is valid relative to \(\mathcal {D}\) in M in \(\mathbf {S}^{\#}\) (\(M \models _{\mathcal {D}} A\)) iff for all hypotheses h recurring for \(\delta _{\mathcal {D},M}\) there is a natural number n such that for all natural numbers p ≥ n, A is true in \(M + \delta _{\mathcal {D},M}^{p}(h)\), where \(\delta _{\mathcal {D},M}^{p}(h)\) is the result of p applications of \(\delta _{\mathcal {D},M}\) to h.
- (ii) A sentence A is valid relative to \(\mathcal {D}\) in \(\mathbf {S}^{\#}\) (\(\models _{\mathcal {D}} A\)) iff A is valid relative to \(\mathcal {D}\) in M in \(\mathbf {S}^{\#}\) for all models M of \(\mathcal {L}^{-}\).
- (iii) Relative to \(\mathcal {D}\), premisses \(A_{1},\dots ,A_{n}\) semantically entail a conclusion B (\(A_{1}, \dots, A_{n} \models _{\mathcal {D}} B\)) iff \(\models _{\mathcal {D}} [(A_{1} \& {\dots } \& A_{n}) \supset B]\).

One compelling feature of S # is that it yields an attractive theory of finite definitions, definitions for which transfinite revisions are unnecessary:

A definition \(\mathcal {D}\) is finite iff, for all models M of \(\mathcal {L}\), there is a number n such that, for all hypotheses h, \(\delta ^{n}_{\mathcal {D},M}(h)\) is finitely reflexive for \(\delta _{\mathcal {D},M}\); where h′ is finitely reflexive for \(\delta _{\mathcal {D},M}\) iff, for some natural number p > 0, \(h^{\prime } = \delta _{\mathcal {D},M}^{p}(h^{\prime })\).
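In a finite setting the revision process can be run directly, and the finitely reflexive hypotheses are just the ones lying on a revision cycle. The sketch below is a toy, not the general theory: it assumes a small, hypothetical stock of named sentences, takes a hypothesis to be simply the set of names it counts as true (rather than a set of formula-assignment pairs), and revises classically, as δ does for truth. Because there are finitely many hypotheses, every revision path ends in a cycle, and every hypothesis on a cycle is recurring; so, in this toy, a sentence is valid in the model in \(\mathbf {S}^{\#}\) iff it is true at every hypothesis on a cycle.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Iterator, Set, Tuple

Sentence = Tuple  # hypothetical encoding: ("atom", bool), ("T", name), ("not", A), ("or", A, B)
Hypothesis = FrozenSet[str]  # names of the sentences the hypothesis counts as true

NAMES: Dict[str, Sentence] = {
    "s": ("atom", True),        # 'snow is white'
    "g": ("T", "s"),            # "'snow is white' is true"
    "l": ("not", ("T", "l")),   # the Liar
    "t": ("T", "t"),            # the Truth-Teller
}


def value(s: Sentence, h: Hypothesis) -> bool:
    """Classical value of s in M + h: T is interpreted by the hypothesis h."""
    tag = s[0]
    if tag == "atom":
        return s[1]
    if tag == "T":
        return s[1] in h
    if tag == "not":
        return not value(s[1], h)
    if tag == "or":
        return value(s[1], h) or value(s[2], h)
    raise ValueError(f"unknown connective {tag!r}")


def implies(a: Sentence, b: Sentence) -> Sentence:
    return ("or", ("not", a), b)  # the material conditional a ⊃ b


def revise(h: Hypothesis) -> Hypothesis:
    """Revision rule: the new hypothesis collects the sentences true in M + h."""
    return frozenset(n for n, s in NAMES.items() if value(s, h))


def all_hypotheses() -> Iterator[Hypothesis]:
    keys = sorted(NAMES)
    for r in range(len(keys) + 1):
        for combo in combinations(keys, r):
            yield frozenset(combo)


def reflexive_hypotheses() -> Set[Hypothesis]:
    """Hypotheses h with h = revise^p(h) for some p > 0, i.e. those on a cycle."""
    on_cycle: Set[Hypothesis] = set()
    for h in all_hypotheses():
        orbit = []
        while h not in orbit:
            orbit.append(h)
            h = revise(h)
        on_cycle.update(orbit[orbit.index(h):])
    return on_cycle


def valid_in_model(sentence: Sentence) -> bool:
    """Toy S# test: true at every hypothesis lying on a revision cycle."""
    return all(value(sentence, h) for h in reflexive_hypotheses())
```

With these definitions, `T t ⊃ T t`, `T l ∨ ¬T l`, and `T g` come out valid in the model, while neither `T t` nor `¬T t` is, and likewise for the liar.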

For finite definitions \(\mathcal {D}\), the notion of validity can be greatly simplified:

Furthermore, a simple sound and complete calculus, \(\mathbf {C}_{0}\), can be provided for reasoning with finite definitions. In this calculus, one works with indexed formulas, \(A^{i}\), where i is an integer. We may view the integer index as representing a stage in the revision process. The rules governing the logical connectives are classical, with the proviso that, in an application, the premisses and conclusions must be decorated with the same index. The rules for definitions, DfI and DfE, require shifts in indices, however:

- DfE: \(Gx^{i + 1} \ /\therefore \ A(x, G)^{i}\);

- DfI: \(A(x, G)^{i} \ /\therefore \ Gx^{i + 1}\).

\(\mathbf {C}_{0}\) has one last rule, Index Shift, that allows arbitrary shifting of the index of a formula so long as it contains no occurrences of the defined predicate. Note finally that \(\mathbf {C}_{0}\) is sound for non-finite definitions also, but it fails to be complete for them.Footnote 20 For further information about \(\mathbf {C}_{0}\) and about the revision theory of finite and other definitions, see Gupta and Belnap [34], Martinez [44], and Gupta [32].
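The index discipline of \(\mathbf {C}_{0}\) can be sketched as a checker for single rule applications. This is a hypothetical illustration only: formulas are encoded as nested tuples, the classical rules are checked for their index condition but not for their logical form, and the Liar definition \(Gx =_{Df} \neg Gx\) is treated propositionally as G =Df ¬G.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Formula = Tuple  # hypothetical encoding: ("G",), ("atom", name), ("not", A), ("and", A, B)
G: Formula = ("G",)


@dataclass(frozen=True)
class Line:
    formula: Formula
    index: int  # the revision stage at which the formula is asserted


def mentions_g(f: Formula) -> bool:
    """True iff the defined predicate G occurs somewhere in f."""
    if f == G:
        return True
    return any(isinstance(part, tuple) and mentions_g(part) for part in f[1:])


def ok_classical(premisses: Sequence[Line], conclusion: Line) -> bool:
    """Classical rules: premisses and conclusion carry one and the same index.
    Only this index condition is checked, not the connective rule itself."""
    return all(p.index == conclusion.index for p in premisses)


def ok_dfe(premiss: Line, conclusion: Line, definiens: Formula) -> bool:
    """DfE: from G^{i+1}, infer A(G)^i."""
    return (premiss.formula == G and conclusion.formula == definiens
            and premiss.index == conclusion.index + 1)


def ok_dfi(premiss: Line, conclusion: Line, definiens: Formula) -> bool:
    """DfI: from A(G)^i, infer G^{i+1}."""
    return (premiss.formula == definiens and conclusion.formula == G
            and conclusion.index == premiss.index + 1)


def ok_index_shift(premiss: Line, conclusion: Line) -> bool:
    """Index Shift: re-index freely, provided G does not occur in the formula."""
    return premiss.formula == conclusion.formula and not mentions_g(premiss.formula)


LIAR: Formula = ("not", G)  # definiens of G =Df ~G
```

Because DfE moves from index i+1 to index i, the assumption \(G^{1}\) yields \((\neg G)^{0}\) rather than \((\neg G)^{1}\), so no contradiction at a single stage arises; and Index Shift cannot be used to move G itself between stages.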

3.2 Introducing the New Conditionals

Let us now expand our language to \(\mathcal {L}^{+}\) by adding two new conditionals, the step-down conditional (→) and the step-up conditional (←). The intuitive meaning of these conditionals is roughly as follows. (B→C) says that if B is true at a revision stage then C is true at the previous stage. So, this conditional takes us a step down in the revision process (hence the name we have given it). On the other hand, (C←B) takes us a step up. It says that if B is true at the previous revision stage then C is true at the current stage.Footnote 21 Let us make these ideas precise.
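The intuitive readings can be checked against an explicit, finite sequence of revision stages. In this sketch, which follows the informal glosses only and is not the formal semantics, a stage is just a map from two hypothetical atomic sentences B and C to truth values, and each step conditional is evaluated at a stage n ≥ 1 by looking one stage back.

```python
from typing import Dict, List

Stage = Dict[str, bool]  # truth values of (hypothetical) atomic sentences at one stage


def material(stages: List[Stage], n: int, b: str, c: str) -> bool:
    """B ⊃ C, evaluated entirely at stage n."""
    return (not stages[n][b]) or stages[n][c]


def step_down(stages: List[Stage], n: int, b: str, c: str) -> bool:
    """(B -> C) at stage n: if B holds at stage n, then C holds at stage n - 1."""
    if n < 1:
        raise ValueError("a step conditional needs a previous stage")
    return (not stages[n][b]) or stages[n - 1][c]


def step_up(stages: List[Stage], n: int, c: str, b: str) -> bool:
    """(C <- B) at stage n: if B holds at stage n - 1, then C holds at stage n."""
    if n < 1:
        raise ValueError("a step conditional needs a previous stage")
    return (not stages[n - 1][b]) or stages[n][c]


STAGES: List[Stage] = [
    {"B": False, "C": True},   # stage 0
    {"B": True, "C": False},   # stage 1
    {"B": True, "C": True},    # stage 2
]

for n in (1, 2):
    print(n, material(STAGES, n, "B", "C"),
          step_down(STAGES, n, "B", "C"), step_up(STAGES, n, "C", "B"))
```

At stage 1 the material conditional B ⊃ C is false while both step conditionals are true; at stage 2 the step-down conditional is false while the step-up and material conditionals are true. The three conditionals come apart.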

Let \(\mathcal {L}^{-}\) be, as before, a classical first-order language with interpretation M. Let \(\mathcal {L}^{-}\) be expanded to \(\mathcal {L}^{+}\) by adding to it the two new step conditionals and a one-place predicate G that is governed by the definition,

- (\(\mathcal {D}\)): \(Gx =_{Df} A(x,G)\).

Note that the definiens here, A(x,G), is a formula of \(\mathcal {L}^{+}\) and thus may contain occurrences of step conditionals. Hypotheses h are defined, in a way parallel to before, as certain sets of pairs of formulas of \(\mathcal {L}^{+}\) and assignments of values to variables. Furthermore, we define the notion “v satisfies a formula B of \(\mathcal {L}^{+}\) relative to M and h,” in symbols, \(M, h, v \models ^{+} B\),

in the familiar way, reading G as receiving the interpretation h(A(x,G)) but interpreting the new conditionals as follows:

Define the step biconditional, (B⇔C), thus:

Then, we have

The revision rule, \({\Delta }_{\mathcal {D},M}\), for \(\mathcal {L}^{+}\) is now defined thus: for all formulas B without occurrences of names and for all assignments v,

It is a routine verification that if B is a sentence and v and v ′ are arbitrary assignments, then

So, if B is a sentence, set:

We can now adapt the earlier definitions to obtain the notions “revision sequence for \({\Delta }_{\mathcal {D},M}\)” and “a sentence B of \(\mathcal {L}^{+}\) is valid relative to \(\mathcal {D}\) in M in the system S #” (\(M \models ^{+}_{\mathcal {D}} B\)), and thereby, the notion of entailment (\(A_{1}, \dots, A_{n} \models ^{+}_{\mathcal {D}} B\)). Let us illustrate these notions.

Example 3.2.1 (Definitional equivalence expressed using “⇔”)

Let G be governed by definition \(\mathcal {D}\), and let \(\mathcal {S}\) be an arbitrary revision sequence for \({\Delta }_{\mathcal {D},M}\). Then, for all α≥1,

Hence,

The step biconditional (⇔) thus enables us to reflect the definitional equivalence governing G within \(\mathcal {L}^{+}\). The material equivalence (≡) is inadequate for this task, for if the definiens is ¬Gx, then we have

Nevertheless, ∀x(Gx ⇔ ¬Gx) remains valid. ⊣

Example 3.2.2 (Non-circular definiens from \(\mathcal {L}^{-}\))

Let G be defined non-circularly via the definiens x = a, and let a denote 0 in M. Observe that if \(\mathcal {S}\) is an arbitrary revision sequence for \({\Delta }_{\mathcal {D},M}\), then for all α>1, \(\mathcal {S}_{\alpha }(x = a) = \mathcal {S}_{\alpha }(Gx) = \{0\}\). Hence,

The expected material equivalence of the definiendum with the definiens thus holds. Suppose now that, for some assignment v, \(\langle \bot,v\rangle \in \mathcal {S}_{0}\). Then, we have

Thus, even with non-circular definitions, the revision process can exhibit instability at least up to ordinal ω. This is due to the presence of the new conditionals. In the absence of these conditionals, the revision sequences for non-circular definitions exhibit no instability beyond the first stage.

Example 3.2.3 (Non-circular definiens from \(\mathcal {L}^{+}\))

Let the definiens of G be (x≠b→x = a); and let a and b denote in M, respectively, 0 and 1. Now the interpretation of G in a revision sequence will eventually settle down to {0,1}. But notice that the presence of a step conditional in the definiens entails that it may take the revision process a little longer to reach this interpretation. The greater the embedding of the step arrows within one another in a definiens, the higher in general the revision stage by which the interpretation of the definiendum is bound to become stable.
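Example 3.2.3 can be replayed mechanically on a finite domain. The sketch below rests on assumptions we label plainly: the domain is {0, 1, 2}, hypotheses are restricted to the finitely many closed instances of the definiens and its subformulas, and the step-down arrow is evaluated by our reading of the intuitive gloss, namely that (B→C) holds relative to h iff, if B holds relative to h, then C belongs to h. It enumerates every starting hypothesis and records, for each stage k, the interpretation G receives in M + \(\mathcal {S}_{k}\).

```python
from itertools import product

DOMAIN = (0, 1, 2)
A_REF, B_REF = 0, 1  # in M, 'a' denotes 0 and 'b' denotes 1


def eq(d, e):
    return ("eq", d, e)


def neg(f):
    return ("not", f)


def down(b, c):
    return ("->", b, c)  # step-down conditional (B -> C)


def definiens(d):
    """The instance (d != b -> d = a) of the definiens (x != b -> x = a)."""
    return down(neg(eq(d, B_REF)), eq(d, A_REF))


def subformulas(f):
    yield f
    for part in f[1:]:
        if isinstance(part, tuple):
            yield from subformulas(part)


# Toy hypothesis space: truth values for these finitely many closed formulas.
CLOSURE = sorted({s for d in DOMAIN for s in subformulas(definiens(d))}, key=repr)


def holds(f, h):
    """Whether f holds in M + h (assumed clause for the step-down arrow)."""
    tag = f[0]
    if tag == "eq":
        return f[1] == f[2]
    if tag == "not":
        return not holds(f[1], h)
    if tag == "->":
        return (not holds(f[1], h)) or h[f[2]]
    raise ValueError(f"unknown formula {f!r}")


def revise(h):
    """One application of the revision rule: record what holds in M + h."""
    return {f: holds(f, h) for f in CLOSURE}


def g_interpretation(h):
    """G receives h(A(x, G)): the objects whose definiens instance is in h."""
    return frozenset(d for d in DOMAIN if h[definiens(d)])


def g_by_stage(h0, stages=5):
    h, history = h0, []
    for _ in range(stages):
        history.append(g_interpretation(h))
        h = revise(h)
    return history


HISTORIES = [g_by_stage(dict(zip(CLOSURE, bits)))
             for bits in product((False, True), repeat=len(CLOSURE))]
```

Every starting hypothesis leads G to {0,1} from stage 2 onward, while at stage 1 the arbitrary initial hypothesis still shows through: the object 1 is always included, but 0 and 2 may or may not be. This is the short delay, caused by the step arrow, that the example describes.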

The following two theorems, which are easily verified, settle the behavior of non-circular definitions.Footnote 22

Theorem 3.2.4 (Fixed-points of \({\Delta }_{\mathcal {D},M}\))

Let \(\mathcal {S}\) be an arbitrary revision sequence of \({\Delta }_{\mathcal {D},M}\) , and for some ordinal α, let \(\mathcal {S}_{\alpha }\) be a fixed point of \({\Delta }_{\mathcal {D},M}\) —that is, let \({\Delta }_{\mathcal {D},M}(\mathcal {S}_{\alpha }) = \mathcal {S}_{\alpha }\). Then (a) for all ordinals \(\beta \geq \alpha, \mathcal {S}_{\alpha } =\mathcal {S}_{\beta }\); and (b) for all formulas B and C and all assignments v, the following are equivalent:

- (i) \(M, \mathcal {S}_{\alpha }, v {\models }^{+} (B \rightarrow C)\),
- (ii) \(M, \mathcal {S}_{\alpha }, v {\models }^{+} (C \leftarrow B)\), and
- (iii) \(M, \mathcal {S}_{\alpha }, v {\models }^{+} (B \supset C)\).

Theorem 3.2.5 (Non-circular definitions)

Let the definiens of G be a formula A(x) of \(\mathcal {L}^{+}\) that contains no occurrences of G. Let n be the number of occurrences of the step arrows in A(x), and let \(\mathcal {S}\) and \(\mathcal {S}^{\prime }\) be arbitrary revision sequences for \({\Delta }_{\mathcal {D},M}\). Then:

- (i) for all ordinals \(\alpha > n + 1, \mathcal {S}_{\alpha }(Gx) = \mathcal {S}^{\prime }_{n+2}(Gx);\) and
- (ii) for all ordinals \(\alpha \geq \omega, \mathcal {S}_{\alpha } = \mathcal {S}^{\prime }_{\omega }\).

Hence, all revision sequences for \({\Delta }_{\mathcal {D},M}\) culminate in the same fixed point, and the distinction between the step conditionals and the material conditional collapses. We have:

- (iii) \(\models ^{+}_{\mathcal {D}} \forall x (Gx \equiv A(x))\).

Example 3.2.6 (Circular definiens from \(\mathcal {L}^{+}\))

Let the definiens of G be (Gx → Gx), and let M be an arbitrary ground model with domain D. Further, let X and Y be arbitrary subsets of D, and let \(\mathcal {S}\) be an arbitrary revision sequence for \({\Delta }_{\mathcal {D},M}\) such that

Then it can be verified that, for all n≥0,

Observe that the resulting revision pattern for G is different from the one we obtain with the definiens (Gx ⊃ Gx): in the latter revisions, G invariably receives the entire domain, D, as its interpretation. More generally, the revision patterns we obtain with the definiens (Gx → Gx) are essentially new. These patterns do not occur if G is defined using only the resources of \(\mathcal {L}\) (i.e., without the step conditionals). The addition of the step conditionals makes, therefore, an essential difference to the revision environment.

The hypotheses of revision are infinite sets. The next theorem shows that only a finite part of such a set is relevant to the subsequent behavior of a formula in a revision sequence.

Theorem 3.2.7 (Revision sequences for circular definiens from \(\mathcal {L}^{+}\))

Let the definiens of G be an arbitrary formula A(x,G) of \(\mathcal {L}^{+}\) , and let \(\mathcal {S}\) and \(\mathcal {S}^{\prime }\) be arbitrary revision sequences for \({\Delta }_{\mathcal {D},M}\) such that, for all subformulas B of A(x,G),

Then, if C is an arbitrary formula with n occurrences of the step conditionals, then, for all m, m>n: \(\mathcal {S}_{m}(C) = \mathcal {S}^{\prime }_{m}(C)\).Footnote 23

3.3 The Logic of the Step Conditionals

Let us begin by observing that the logical behavior of the step conditionals is highly unusual. The following fails to be a logical law:

Relatedly, Identity is not a logical law for the step-down conditional. That is, for some definitions \(\mathcal {D}\),

A parallel claim holds for the step-up conditional. Notice also that the two conditionals are governed by different logics. Thus, Exportation holds for the step-up conditional but fails for the step-down conditional:

All the invalidities above can be verified by letting \(\mathcal {D}\) be a simple circular definition, for example, \(Gx =_{\mathrm{Df}} \neg Gx\).
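These invalidities can also be checked mechanically. The sketch below is ours: it encodes formulas as nested tuples (a hypothetical encoding), evaluates them stage by stage following the informal stage semantics given in Section 3.4, and uses the valuation induced by \(Gx =_{\mathrm{Df}} \neg Gx\), under which Ga alternates from stage to stage. It reports the stages at which Identity for both step conditionals, and one instance of Exportation for the step-down conditional, come out false.

```python
from typing import Callable, Iterable, List, Tuple

# Hypothetical tuple encoding of formulas:
#   ("atom", name), ("not", F), ("and", F, G), ("imp", F, G)  [material]
#   ("down", A, B)  step-down conditional (A -> B)
#   ("up", A, B)    step-up conditional (B <- A), antecedent A listed first
Formula = Tuple
Valuation = Callable[[str, int], bool]  # truth value of an atom at a stage


def holds(f: Formula, k: int, val: Valuation) -> bool:
    """Truth of f at revision stage k, following the stage semantics of 3.4."""
    tag = f[0]
    if tag == "atom":
        return val(f[1], k)
    if tag == "not":
        return not holds(f[1], k, val)
    if tag == "and":
        return holds(f[1], k, val) and holds(f[2], k, val)
    if tag == "imp":  # antecedent and consequent at the same stage
        return not holds(f[1], k, val) or holds(f[2], k, val)
    if tag == "down":  # antecedent at k, consequent one stage lower
        return not holds(f[1], k, val) or holds(f[2], k - 1, val)
    if tag == "up":  # antecedent one stage lower, consequent at k
        return not holds(f[1], k - 1, val) or holds(f[2], k, val)
    raise ValueError(f"unknown connective: {tag!r}")


def flip_definition(start: bool) -> Valuation:
    """Valuation induced by Gx =Df ¬Gx: Ga alternates from stage to stage."""
    return lambda name, k: start != (k % 2 == 1)


def failing_stages(f: Formula, val: Valuation,
                   stages: Iterable[int] = range(2, 10)) -> List[int]:
    """Stages (with at least two earlier stages available) where f is false."""
    return [k for k in stages if not holds(f, k, val)]


Ga = ("atom", "Ga")
notGa = ("not", Ga)
identity_down = ("down", Ga, Ga)  # (Ga -> Ga)
identity_up = ("up", Ga, Ga)      # (Ga <- Ga)
# Exportation for ->, instance A = Ga, B = C = ¬Ga:
#   ((A & B) -> C) ⊃ (A -> (B -> C))
export_down = ("imp", ("down", ("and", Ga, notGa), notGa),
               ("down", Ga, ("down", notGa, notGa)))

if __name__ == "__main__":
    val = flip_definition(start=False)  # Ga true exactly at odd stages
    for label, f in [("Identity ->", identity_down), ("Identity <-", identity_up),
                     ("Exportation ->", export_down)]:
        print(label, "fails at stages", failing_stages(f, val))
```

With Ga true exactly at the odd stages, (Ga→Ga) fails at every odd stage and (Ga←Ga) at every even one; the Exportation instance fails wherever Ga is true. A single such model suffices to show that a schema is not a logical law.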

We shall argue below that the step conditionals are the right ones for formulating the rules for truth, (TI) and (TE). We wish to notice now that, although the logical behavior of the step conditionals is unusual, it is simple and straightforward. Let us observe, first, that the step conditionals have natural introduction and elimination rules. The elimination rule for the step-down conditional allows us to infer the indexed formula \(C_{i}\) from \((B \rightarrow C)_{i+1}\) and \(B_{i+1}\). The introduction rule allows us to conclude \((B \rightarrow C)_{i+1}\) if we can derive \(C_{i}\) from \(B_{i+1}\). The rules for the step-up conditional are parallel. In a Fitch-style deduction system, these rules can be represented as follows.

Let \(\mathbf {C}^{+}_{0}\) be \(\mathbf {C}_{0}\) with the addition of the introduction and elimination rules for both step conditionals. The next two theorems relate the calculus \(\mathbf {C}^{+}_{0}\) to validity in \(\mathcal {L}^{+}\).

Theorem 3.3.1 (Soundness of \(\mathbf {C}^{+}_{0}\))

For every sentence B of \(\mathcal {L}^{+}\), if B is deducible in \(\mathbf {C}^{+}_{0}\) from definition \(\mathcal {D}\) (notation: \(\vdash ^{+}_{\mathcal {D}} B\)) then B is valid in \(\mathcal {L}^{+}\) relative to \(\mathcal {D}\) (i.e., \(\models ^{+}_{\mathcal {D}} B\)).

Theorem 3.3.2 (Soundness and completeness of \(\mathbf {C}^{+}_{0}\) for finite definitions)

Let \(\mathcal {D}\) be a definition without any occurrences of the step conditionals. If \(\mathcal {D}\) is a finite definition of \(\mathcal {L}\), then for all sentences B of \(\mathcal {L}^{+}\): \(\vdash ^{+}_{\mathcal {D}} B\) iff \(\models ^{+}_{\mathcal {D}} B\).Footnote 24,Footnote 25

\(\mathbf {C}^{+}_{0}\) is not, in general, complete with respect to validity in S#. There is, however, a weaker sense of validity for which it is complete, regardless of the set of circular definitions.Footnote 26

Let us observe, next, that the two step conditionals are interdefinable. The following equivalences hold:

Moreover, since the following is a logical law,

each of the step conditionals is interdefinable with a modality (□). For instance, we can define □ in terms of → using the following equivalence:

- P□:

-

□A≡(⊤→A).

Or, alternatively, we can define → in terms of □ using the following principle:

- P→:

-

(A→B)≡(A⊃□B).

A parallel principle enables us to define ← directly in terms of □:

- P←:

-

(B←A)≡(□A⊃B).

Observe, finally, that □ is governed by a simple normal modal logic. If we set out this logic, we can recover the logic of the step conditionals from it.

Let SC, the logic of the step conditionals, be the axiomatic system consisting of (i) a set of axioms for classical first-order logic with identity, as set out in, say, Mendelson [49]; (ii) P□, which we treat as a definition of □; (iii) P→ and P←; and (iv) for all formulas A and B, and all G-free formulas C, of \(\mathcal {L}^{+}\), the following formulas:

The rules of inference for SC are (a) modus ponens (from A⊃B and A to infer B); (b) universal generalization (from A to infer ∀x A); and (c) necessitation (from A to infer □A). We understand the notion “theorem of SC” (\(\Vdash_{\mathrm{SC}}\)) in the usual way. Now one can establish the following theorem, which connects the calculus SC with the revision semantics given above.

Theorem 3.3.3 (Soundness and completeness for SC)

For all sentences A of \(\mathcal {L}^{+}\): \(\Vdash_{\mathrm{SC}} A\) iff, for all definitions \(\mathcal {D}\), \(\models _{\mathcal {D}}^{+} A\).Footnote 27

If we drop the introduction and elimination rules for definitions from \(\mathbf {C}_{\mathbf {0}}^{+}\), then the resulting system—FSC, to give it a name—is equivalent to the system SC:

Theorem 3.3.4 (Equivalence of SC with FSC)

For every sentence B of \(\mathcal {L}^{+}\) , B is a theorem of SC iff B is a theorem of FSC.

3.4 An Informal Account of the Step Conditionals

A definition warrants two distinguishable logical transitions: first, from the definiendum to the definiens; and second, from the definiens to the definiendum. For non-circular definitions in, say, a classical context, the logic of these transitions is captured, at least in part, by the material conditional (⊃). Not so, however, once we allow circular and interdependent definitions. It is this consideration that motivates the introduction of the step conditionals. The step conditionals serve the role for definitions in general that the material conditional serves for ordinary, non-circular definitions.Footnote 28

The step-down conditional (→) allows us to represent the move from the definiendum to the definiens; the step-up conditional (←), the move from the definiens to the definiendum. If, as in revision semantics, we think of the interpretation of the definiendum as occupying a stage higher than the corresponding interpretation of the definiens, then the step-down conditional takes us from the higher definiendum stage to the lower definiens stage and, analogously, the step-up conditional takes us from the lower definiens stage to the higher definiendum stage. With non-circular definitions, the distinction between stages is inessential and, hence, so also is the distinction between the two conditionals: both step conditionals reduce to the material conditional. With circular definitions, however, the distinction between stages and conditionals is vital.

The precise semantics for our two conditionals, spelled out above, is complex because not only do we use these conditionals to represent facts about circularly defined predicates, we allow these conditionals to occur in the definientia of new circular definitions. This creates technical complications, and we need to proceed with care. Still, the intuitive idea behind the conditionals is straightforward. Consider a revision sequence of better and better evaluations of the language. Then, (A→B) is true at a stage α+1 iff, if A is true at α+1, then B is true at α—here we are stepping down to the conclusion. And, (B←A) is true at α+1 iff, if A is true at α, then B is true at α+1—here we are stepping up to the conclusion.

It is an easy consequence of the semantics of the step conditionals that any definition, \(\mathcal {D}\),

- (\(\mathcal {D}\)):

-

\(Gx =_{\mathrm{Df}} A(x,G)\),

whether circular or not, implies the conditionals,

Let us define the step biconditional, (B⇔C), thus:

Then, definition \(\mathcal {D}\) invariably implies

although \(\mathcal {D}\) does not, in general, imply

With circular definitions, it is essential to distinguish between the material biconditional and the step biconditional. Only thus can we sustain the idea of coherent circularity.

The step conditionals are far from ordinary, familiar conditionals (such as strict conditionals and counterfactual conditionals). For example, reflexivity fails for them: neither (A→A) nor (A←A) is a logical law. For another example, (A⇔B) is not symmetric; the following fails to be a logical law:

We wish to make three observations to mitigate the seeming counterintuitiveness of these results.

-

(i)

The non-standard character of the conditionals issues directly from the function they are designed to serve. If, for example, we require either one of (A→A) and (A←A) to be a logical law, then the distinction between these conditionals and the material conditional collapses, and we cannot make sense of coherent circularity.

-

(ii)

The non-standard behavior does not undermine the idea that our connectives capture readings of ‘if’. The general notion expressed by the English ‘if’ is that if the antecedent is true at a certain point of evaluation e then the consequent must be true at a related point of evaluation \(e^{\ast}\). We obtain specific readings of ‘if’ by understanding the force of the modality “must” in a particular way and by imposing specific relations between the evaluation points e and \(e^{\ast}\). Thus, in the material conditional, the force of ‘must’ is entirely negated and the evaluation points are identified. In strict conditionals, the evaluation points are identified but the modality retains a significant force.Footnote 29 Conditionals used in connection with generics (“if you strike a match, it lights”) depart from the material conditional still further: in them a strong modality remains in force, and furthermore, the consequent is evaluated at points that are, in general, different from those of the antecedent. (In the example given, the consequent is evaluated at a moment later than that at which the antecedent is evaluated.) Our step conditionals are like the material conditional in that in them, too, the modality carries no force, but they are like the ‘if’ used with generics in that the evaluation points for the antecedent and the consequent can be distinct. As a result, reflexivity fails for the step conditionals, but conditional excluded middle holds. The following schemata are logically valid:

$$\begin{array}{@{}rcl@{}} &&(A \rightarrow B) \vee (A \rightarrow \neg{}B);~ \textnormal{and}\\ &&(B \leftarrow A) \vee (\neg{}B \leftarrow A). \end{array} $$The logic of the English ‘if’ is not simple, and this logic is not stable across different uses of ‘if’. In one set of its uses, namely, those where ‘if’ is used to express the relationship between definienda and definientia, its logic is captured, we believe, by the step conditionals.

-

(iii)

The basic logic of the step conditionals, though non-standard, is quite simple—simpler, we think, than that of other non-classical conditionals. We offered in the previous section simple calculi for reasoning with these conditionals, including a Fitch-style natural deduction system for the step conditionals. At an informal level, perhaps the observations that follow will bring out the simplicity of the logic.

Let us introduce a connective (□) with the following semantics: □A is true at a stage α+1 iff A is true at α.Footnote 30 So, intuitively, □A says that A is true at the previous revision stage. The meaning of the two conditionals can now be captured through the following equivalences:

- P→:

-

(A→B)≡(A⊃□B); and

- P←:

-

(B←A)≡(□A⊃B).

Let us notice, next, that □ is governed by a normal modal logic in which the following schemata are logically valid:

Thus, □ can be distributed across any logical connective in a formula, and the result is a logically equivalent formula. In particular, □ can be pushed into the interior of the formula so that no other logical connective lies within its scope. Let the normal form of a formula be the formula that results when (a) the step conditionals are eliminated using P→ and P←, (b) all occurrences of □ are pushed as far as possible into the interior of the formula, and (c) all occurrences of □ next to identities are deleted. For example, let us understand A, B, and C to be atomic in the formula

Then, step (a) applied to this formula yields

and an application of step (b) now yields

which is the normal form of the initial formula. Let a normal form be classically valid iff it is a theorem of classical logic when all occurrences of □ formulas are taken to be atomic.Footnote 31 Then, the normal form just displayed is classically valid, and this suffices to establish, by the following theorem, the logical validity of the original formula, in the sense of the normal modal logic indicated above. Exportation is thus valid for the step-up conditional, though, as we saw above, it fails for the step-down conditional.

Theorem 3.4.1 (An equivalence concerning normal forms)

A formula containing step conditionals is logically valid iff its normal form is classically valid.

It follows immediately that the propositional logic of the step conditionals is decidable and, more generally, that the question of the validity of any step formula reduces to a question about classical validity.
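Theorem 3.4.1 suggests a direct decision procedure. The following is a minimal sketch for the propositional fragment only (no identities or quantifiers), using a hypothetical tuple encoding of formulas. It carries out steps (a) and (b) in a single pass, treating an atom that lies under k occurrences of □ as a fresh atom, and then tests the resulting normal form by truth tables. It relies on the principles P→ and P← and on the claim above that □ distributes across every connective.

```python
from itertools import product
from typing import Dict, Set, Tuple

# Hypothetical tuple encoding of propositional step formulas:
#   ("atom", p), ("top",), ("not", F), ("and", F, G), ("or", F, G),
#   ("imp", F, G)   material conditional
#   ("box", F)      □F
#   ("down", A, B)  step-down conditional (A -> B)
#   ("up", A, B)    step-up conditional (B <- A), antecedent A listed first
Formula = Tuple


def normal_form(f: Formula, depth: int = 0) -> Formula:
    """Eliminate step conditionals via P→ and P←, then push □ inward.

    An atom p lying under k boxes becomes the fresh atom ("var", p, k).
    """
    tag = f[0]
    if tag == "atom":
        return ("var", f[1], depth)
    if tag == "top":
        return f  # □⊤ is equivalent to ⊤, so boxes on ⊤ can be dropped
    if tag == "not":
        return ("not", normal_form(f[1], depth))
    if tag in ("and", "or", "imp"):
        return (tag, normal_form(f[1], depth), normal_form(f[2], depth))
    if tag == "box":
        return normal_form(f[1], depth + 1)
    if tag == "down":  # P→: (A -> B) ≡ (A ⊃ □B)
        return ("imp", normal_form(f[1], depth), normal_form(f[2], depth + 1))
    if tag == "up":  # P←: (B <- A) ≡ (□A ⊃ B)
        return ("imp", normal_form(f[1], depth + 1), normal_form(f[2], depth))
    raise ValueError(f"unknown connective: {tag!r}")


def atoms(f: Formula) -> Set[Tuple[str, int]]:
    if f[0] == "var":
        return {(f[1], f[2])}
    return set().union(*(atoms(g) for g in f[1:]))


def value(f: Formula, v: Dict[Tuple[str, int], bool]) -> bool:
    tag = f[0]
    if tag == "var":
        return v[(f[1], f[2])]
    if tag == "top":
        return True
    if tag == "not":
        return not value(f[1], v)
    if tag == "and":
        return value(f[1], v) and value(f[2], v)
    if tag == "or":
        return value(f[1], v) or value(f[2], v)
    return not value(f[1], v) or value(f[2], v)  # only "imp" remains


def is_valid(f: Formula) -> bool:
    """Classical validity of the normal form, with each ("var", p, k) atomic."""
    nf = normal_form(f)
    keys = sorted(atoms(nf))
    return all(value(nf, dict(zip(keys, bits)))
               for bits in product([False, True], repeat=len(keys)))


A, B, C, TOP = ("atom", "A"), ("atom", "B"), ("atom", "C"), ("top",)
identity_down = ("down", A, A)
export_down = ("imp", ("down", ("and", A, B), C), ("down", A, ("down", B, C)))
export_up = ("imp", ("up", ("and", A, B), C), ("up", A, ("up", B, C)))
cem_down = ("or", ("down", A, B), ("down", A, ("not", B)))
cem_up = ("or", ("up", A, B), ("up", A, ("not", B)))
p_box = ("and", ("imp", ("box", A), ("down", TOP, A)),
         ("imp", ("down", TOP, A), ("box", A)))
```

On these sample schemata the procedure agrees with the text: Exportation for the step-up conditional, both forms of conditional excluded middle, and P□ come out valid, while Identity and Exportation for the step-down conditional do not.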

We can now easily verify that the following schemata are valid. (The first two of these schemata reflect the classicality of the ground language.)

Note that these schemata are not valid if occurrences of the material conditional are replaced by either of the step conditionals. Note also that analogues of the above schemata hold for the step-up conditional.

Define by recursion:

And set:

Then, for all natural numbers n, the following schemata are valid:

Finally, let us note some schemata that fail to be valid:

The logic of the step conditionals is definitely unusual. However, its propositional fragment is simple, and taken as a whole, the logic is no more complex than classical logic.

A Comparison

In his Hilbert-style axiomatization of calculi for circular definitions, Bruni [11] also extends the language with a new conditional. The principal differences between Bruni’s conditional and the conditionals introduced here are these: (i) Bruni’s conditional connects indexed formulas whereas our step conditionals connect formulas simpliciter. (ii) Bruni’s conditional does not belong to the language in which circular definitions are formulated but, as Bruni puts it, the conditional is “superimposed.” Consequently, Bruni’s conditional cannot occur in the definiens of circular definitions. Our step conditionals, in contrast, may be used to construct new circular definitions. For example, the definition

can be treated within the expanded revision theory, but the corresponding definition that uses Bruni’s conditional cannot be treated by Bruni’s calculi.

We think that the step conditionals make available new, and perhaps simpler, versions of Bruni’s sequent calculi for circular definitions.

3.5 Application to Truth

The above account of definitions and conditionals yields a theory of truth if we read the Tarski biconditionals,

as partial definitions of truth. Under the assumption that only sentences are true, the partial definitions fix a rule of revision, and the semantical scheme sketched above applies. Theorem 3.3.1 continues to hold with the extended notion of definition: \(\mathbf {C}_{\mathbf {0}}^{+}\) is a sound calculus for truth and the step conditionals.

This theory of truth enables us to express (TI) and (TE) in the object language. We propose that the ‘if’ in (TI) should be read as the step-up conditional, and the ‘if’ in (TE) as the step-down conditional. (TI) and (TE) may now be expressed thus:

- (TI s):

-

T(‘A’) ←A; and

- (TE s):

-

T(‘A’) →A.

Consequently, the Tarski biconditionals can also be expressed in the object language. The ‘iff’ in

should, we propose, be read as the step biconditional. That is, we should understand the Tarski biconditionals thus:

We shall call this the step T-biconditional for A. The material T-biconditional for A, in contrast, is this equivalence:

We now argue that the resulting theory of truth satisfies the desiderata laid down in Section 1.

3.5.1 Descriptive Adequacy

We make the following observations in favor of the descriptive adequacy of the resulting theory:

-

(i)

Tarski biconditionals. The proposed reading of ‘iff’ allows us to accept the Tarski biconditionals unrestrictedly and, thus, to respect a motivation for the inconsistency view (Section 2.1). We accept, for example, the Tarski biconditional for the Liar sentence l (where l = ‘¬Tl’):

$$\begin{array}{@{}rcl@{}} T(\textnormal{`}\neg Tl\textnormal{'}) \leftrightarrow \neg{}Tl. \end{array} $$But this biconditional implies no contradictions. In contrast, under the material reading favored by the inconsistency view, namely,

$$\begin{array}{@{}rcl@{}} T(\textnormal{`}\neg Tl\textnormal{'}) \equiv \neg Tl, \end{array} $$the Tarski biconditional for the Liar sentence does imply a contradiction.Footnote 32 There is, thus, a vast difference between the two ways of reading ‘iff’. Under one reading, the Tarski biconditionals express a law governing the concept of truth; under the other reading, they do not.Footnote 33 Indeed, under the material reading some of the Tarski biconditionals are false, as are some of the instances of (TI) and (TE). Neither of the following is a law governing truth:

- (TI ⊃):

-

A⊃T(‘A’), and

- (TE ⊃):

-

T(‘A’)⊃A.

Neither provides a basis for a good classical theory of truth.

-

(ii)

The meaning of truth. Under the proposed reading, the step biconditionals fix the meaning of truth, in the following sense: given any ground model, the biconditionals fix the revision rule for the truth predicate (assuming, as before, that only sentences are true). The theory thus preserves the strong intuitive linkage between the Tarski biconditionals and the concept of truth.Footnote 34

-

(iii)

Paradoxes. The theory rules that the reasoning in Curry’s Paradox, (A7), is invalid.

- (A7):

-

Given: b = ‘if b is true then God exists’. Suppose b is true. So, if b is true, then God exists. Hence, God exists. We can conclude, therefore, that if b is true then God exists. Hence, b must be true. So, God exists.

The transition in the very first step—from “b is true” to “if b is true, then God exists”—is improper. In the theory we have offered, the rules of inference

- (TI R):

-

A; therefore, T(‘A’ ), and

- (TE R):

-

T(‘A’ ); therefore, A

are admissible, in the sense that they hold unrestrictedly in categorical contexts: if A is categorically asserted, then T(‘A’ ) may be categorically asserted; and conversely. However, in a hypothetical context, as in the first step in Curry’s Paradox, unrestricted application is illegitimate. Note that the following inference rules are unrestrictedly valid:

- (TI □):

-

□A; therefore, T(‘A’ ), and

- (TE □):

-

T(‘A’ ); therefore, □A.

These rules are insufficient, however, to generate Curry’s Paradox. From the assumption that b is true, we can deduce □(if b is true then God exists) and, then, if □(b is true) then God exists. But this does not allow us to move to the conclusion that God exists, for we cannot deduce □(b is true) from the initial assumption. The rule “A; therefore, □A” is not a valid inference rule.

A similar analysis shows that the argument in the Liar reasoning (A6) is invalid. Furthermore, the rules governing truth allow no deduction of ‘snow is black’ from the Truth-Teller; (A10) is also invalid. Indeed, the semantics sketched above provides an explanation of why (A10) is invalid.

-

(iv)

Non-vicious reference. If a sentence, say A, does not contain the truth predicate then its step T-biconditional implies its material T-biconditional. For then we have that

$$\begin{array}{@{}rcl@{}} A \equiv \Box A. \end{array} $$The same holds for many sentences containing the truth predicate so long as they do not involve vicious reference (such as that found in the Liar). An example is the sentence Ta, where a denotes

$$\begin{array}{@{}rcl@{}} (T(\textnormal{`}Ta\textnormal{'})\; \&\; T(\textnormal{`}\neg Ta\textnormal{'})). \end{array} $$The distinction between the two readings of the Tarski biconditional, though essential, can thus be neglected when no vicious reference is in play.Footnote 35

-

(v)

Ground logic. The logic of the ground language is not disturbed. The theory allows us to reason with ¬, &, ∀, etc. in classical ways even in the presence of vicious self-reference. Arguments (A3), (A8), and (A9) are ruled valid by the theory. The validity of these arguments, as well as that of many others, can be established in the calculi defined above.Footnote 36

-

(vi)

Expressive power. The theory provides readings under which (A4) and (A5) are valid. Argument (A4) is valid if the conditional in its conclusion is read as the step-up conditional; (A5), on the other hand, is valid if the conditional in its conclusion is read as the step-down conditional. None of these readings can be captured in classical revision theory, which provides only the invalid material conditional readings. Another example that illustrates the expressive weakness of the classical theory is this:

$$\begin{array}{@{}rcl@{}} (T(\textnormal{`}\neg Tl\textnormal{'})~ \textnormal{iff}~ \neg Tl) \ \&\ Q. \end{array} $$Here Q is a truth and l denotes, as before, ¬T l. Plainly, there is an interpretation of this sentence under which it is true. However, ‘iff’ cannot be read here as definitional equivalence, for this kind of equivalence cannot be embedded within truth-functional constructions (at least in classical revision theory). Furthermore, under the material equivalence reading of ‘iff’ the sentence is false. In contrast, if we read ‘iff’ as expressing the step biconditional, we gain an interpretation under which the sentence is true.

-

(vii)

Iterated truth. Define by recursion:

$$\begin{array}{@{}rcl@{}} T^{0}(\textnormal{`}A\textnormal{'}) = A;~ \textnormal{and}~ T^{n+1}(\textnormal{`}A\textnormal{'}) = T(\textnormal{`}T^{n}(\textnormal{`}A\textnormal{'})\textnormal{'}). \end{array} $$Then, for all natural numbers n, the following is a logical truth:

$$\begin{array}{@{}rcl@{}} T^{n}(\textnormal{`}(T(\textnormal{`}A\textnormal{'}) \leftrightarrow A)\textnormal{'}). \end{array} $$We can assert of a Tarski biconditional that it is true, that the truth attribution to it is true, and so on. Note that the following is not a logical truth:

$$\begin{array}{@{}rcl@{}} (T^{n}(\textnormal{`}A\textnormal{'}) \leftrightarrow A). \end{array} $$The biconditional governing iterated truth is properly formulated thus:

$$\begin{array}{@{}rcl@{}} (T^{n}(\textnormal{`}A\textnormal{'}) \leftrightarrow_{n} A). \end{array} $$More generally, the following is a law governing the truth predicate:

- (IT):

-

\((T^{m+n}(\textnormal{`}A\textnormal{'}) \leftrightarrow_{m} T^{n}(\textnormal{`}A\textnormal{'}))\).

But the following is not, in general, valid:

$$\begin{array}{@{}rcl@{}} (T^{m+n}(\textnormal{`}A\textnormal{'}) ~\equiv T^{n}(\textnormal{`}A\textnormal{'})). \end{array} $$Let us notice that the step connectives provide an essential resource for the expression of laws governing truth. An explanation of this is as follows: Wherever circular (and interdependent) concepts are in play, the distinction between revision stages is of vital importance. Some of the laws governing circular concepts concern what happens across stages; others concern what happens within a stage. The usual logical resources (e.g., the truth-functional connectives) are adequate for the expression of intra-stage laws, but they are not adequate for the expression of inter-stage laws. The expression of the latter laws requires step connectives. (TI s), (TE s) and (IT) are examples of inter-stage laws. These laws require the step connectives for their proper formulation.Footnote 37

-

(viii)

Semantic laws. Laws of semantic composition are examples of intra-stage laws; they need to be formulated using the material conditional and biconditional. Examples of these laws are:

$$\begin{array}{@{}rcl@{}} &&T(\textnormal{`}\neg{}A\textnormal{'}) \equiv \neg T(\textnormal{`}A\textnormal{'}), \\&&T(\textnormal{`}A \ \&\ B\textnormal{'}) \equiv (T(\textnormal{`}A\textnormal{'}) \ \&\ T(\textnormal{`}B\textnormal{'})),~ \textnormal{and}\\&&T(\textnormal{`}\forall xGx\textnormal{'}) \supset T(\textnormal{`}Gb\textnormal{'}). \end{array} $$In the schematic formulation above, these laws are implied by the step T-biconditionals and are, therefore, validated by the theory we offer.Footnote 38 Note that if we replace the material conditional and biconditional in these laws by their step counterparts, the results are not in general valid. Versions of semantic laws hold also for iterated truth. For example, we have:

$$\begin{array}{@{}rcl@{}} T^{n}(\textnormal{`}A \ \&\ B\textnormal{'}) \equiv (T^{n}(\textnormal{`}A\textnormal{'}) \ \&\ T^{n}(\textnormal{`}B\textnormal{'})). \end{array} $$
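The contrast between the two readings can be simulated on a toy language. The sketch below is ours and rests on several assumptions: finitely many named sentences (the Liar l, and the sentence Ta of item (iv) together with the sentences it mentions); a revision rule read off the Tarski biconditionals, so that T(‘A’) is true at stage k+1 iff A is true at stage k; and the step T-biconditional taken as the conjunction of (TE s) and (TI s). All names are hypothetical. It checks both T-biconditionals stage by stage, along with the composition law for negation from item (viii).

```python
from typing import Dict, List, Tuple

# Hypothetical toy language of named sentences; formulas are tuples over
#   ("T", name), ("not", F), ("and", F, G)
SENTENCES: Dict[str, Tuple] = {
    "l": ("not", ("T", "l")),                   # the Liar: l = '¬Tl'
    "s": ("and", ("T", "Ta"), ("T", "notTa")),  # the sentence a denotes
    "Ta": ("T", "s"),                           # Ta
    "notTa": ("not", ("T", "s")),               # ¬Ta
}
Hypothesis = Dict[str, bool]  # which named sentences T is true of


def value(f: Tuple, h: Hypothesis) -> bool:
    tag = f[0]
    if tag == "T":
        return h[f[1]]
    if tag == "not":
        return not value(f[1], h)
    if tag == "and":
        return value(f[1], h) and value(f[2], h)
    raise ValueError(f"unknown connective: {tag!r}")


def revision_sequence(h0: Hypothesis, n_stages: int) -> List[Hypothesis]:
    """Assumed revision rule: T('A') holds at stage k+1 iff A holds at stage k."""
    seq = [h0]
    while len(seq) < n_stages:
        prev = seq[-1]
        seq.append({name: value(f, prev) for name, f in SENTENCES.items()})
    return seq


def material_tb(name: str, seq: List[Hypothesis], k: int) -> bool:
    """Material T-biconditional T('A') ≡ A, both sides at stage k."""
    return seq[k][name] == value(SENTENCES[name], seq[k])


def step_tb(name: str, seq: List[Hypothesis], k: int) -> bool:
    """Step T-biconditional at stage k >= 1, taken as (TE s) & (TI s)."""
    t_now = seq[k][name]                           # T('A') at stage k
    a_before = value(SENTENCES[name], seq[k - 1])  # A at stage k-1
    te = not t_now or a_before  # (TE s): T('A') -> A
    ti = not a_before or t_now  # (TI s): T('A') <- A
    return te and ti


if __name__ == "__main__":
    seq = revision_sequence({name: True for name in SENTENCES}, 6)
    for k in range(1, 6):
        print(k, {n: (step_tb(n, seq, k), material_tb(n, seq, k)) for n in ("l", "Ta")})
```

Starting from the hypothesis on which every sentence is true, the step T-biconditionals hold at every stage from 1 on and the material T-biconditional for the Liar fails at every stage. The material T-biconditional for Ta fails at stage 2 but holds from stage 3 on, once its revisions have settled, in line with item (iv).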

Let us consider a possible objection that may be directed against us. “Your theory,” an objector may say to us, “provides a reading of ‘iff’ that preserves the Tarski biconditionals. However, your theory fails to preserve the intuition that the following variant of the biconditionals also holds:

- (∗):

-

A iff T(‘A’).

Formula (∗) is not valid if we read ‘iff’ as material equivalence or as your step biconditional. You fail, therefore, to preserve an important intuition.”

Response. We have pointed out two different readings of ‘iff’, readings that have escaped notice before. Intuitions about what one is inclined to say when one is confused about the readings of ‘iff’ should be treated with care; we cannot rely on them uncritically. Now, we can easily provide a reading of ‘iff’ on which (∗) is valid. However, instead of multiplying readings of ‘iff’, it is better to recognize that the intuition that (∗) is valid is a product of a confusion of two readings of ‘iff’: ‘iff’ as material biconditional and as step biconditional. The confusion leads one to suppose that the step biconditional is governed by a logic more suited to the material biconditional. It leads to the idea that the ‘iff’ in Tarski biconditionals is symmetric, and thus to the thought that (∗) is valid. We are better off rejecting the confusion and along with it (∗).

Note that several variants of the above objection are possible, all based on the mismatch between the logical behavior of the step biconditional and intuitions about the logic of ‘iff’. Our responses to all these objections would parallel what we have just said.Footnote 39

The above considerations, though not conclusive, provide some evidence for the descriptive adequacy of the theory we offer. The literature on truth and the semantic paradoxes is rich, and it certainly provides theories that can claim several of the features listed above. However, as far as we know, none of the available theories can claim all the features.

3.5.2 Generality Requirement

The method presented above of enriching a language with circular definitions and step conditionals is highly general. Our presentation assumed, it is true, that the ground language is classical; but the assumption is inessential. The method can be applied even when the ground language is governed by a non-classical logic—such as a many-valued or a relevance or intuitionistic logic. In all cases, we obtain a theory of truth that validates Aristotle’s Rules, interpreted, as above, using the step conditionals. Indeed, thus interpreted, Aristotle’s Rules fix, irrespective of the ground logic, the revision rule governing truth. Thus, in one sense of meaning, the Rules fix the meaning of the truth predicate. More generally, theories of truth we gain for non-classical languages possess virtues similar to those indicated above for classical languages.

Other circular concepts such as “reference,” “satisfaction,” and “exemplification” are governed by rules analogous to Aristotle’s Rules for truth, and they receive a parallel treatment.Footnote 40 Vagueness can also be treated within a revision-theoretic framework.Footnote 41

The scope of the Generality Requirement can be understood (and should be understood) to include concepts stipulatively defined using circular and interdependent definitions. Finite circular definitions, in particular, provide a good testing ground for a theory of truth, for their behavior is relatively clear and simple. Many otherwise attractive theories of truth (including some revision theories) do not lead to plausible theories of finite circular definitions. We believe that the theory we have offered here does so. We cite Theorem 3.3.2 as evidence.

3.6 A Comparison

We have offered above a theory of self-referential truth for a classical language equipped with additional logical resources, namely, the step biconditionals or, equivalently, □. Aczel and Feferman were perhaps the first logicians to explicitly set down and investigate theories of truth of this kind.Footnote 42 Aczel and Feferman studied, in particular, the type-free axiomatic system S(≡), which they formulated in a classical language extended with a new biconditional (≡).Footnote 43 (They studied also some other systems closely related to S(≡).) The system S(≡) contains as axioms the Tarski biconditionals (and, more generally, axioms for satisfaction) with the biconditional connective read as their new equivalence (≡). Aczel and Feferman proved the consistency of S(≡). We will not give here a detailed account of S(≡), for which the reader should consult Aczel and Feferman [1] or, better, Feferman [22]. We note only some basic differences between reading the Tarski biconditionals in terms of the Aczel-Feferman biconditional and in terms of the step biconditional. For ease of comparison, we use \(\equiv_{AF}\) for the Aczel-Feferman biconditional.Footnote 44

-

(a)

The biconditional \(\equiv_{AF}\), unlike our step biconditional, expresses an equivalence relation. Consequently, in S(≡), both of the following statements are provable for the Liar sentence, ¬Tl:

-

(i)

\(Tl \equiv_{AF} (Tl \;\&\; \neg Tl)\), and

-

(ii)

¬Tl.

Since Tl is not provable, it follows that the theorems of S(≡) are not closed under Truth Introduction: a sentence A can be a theorem while T(‘A’) fails to be a theorem. None of this holds for the step T-biconditionals. These biconditionals imply neither the Liar sentence, ¬Tl, nor the analogue of (i):

$$\begin{array}{@{}rcl@{}} Tl \leftrightarrow (Tl \ \&\ \neg Tl). \end{array} $$Furthermore, if A is provable from the step T-biconditionals then so also is T(‘A’).

-

(b)

In light of the provability of (ii), it is plain that \(A \equiv_{AF} B\) and B can be provable while A may fail to be provable. Not so for the step biconditional: if A⇔B and B are provable then A is also provable.Footnote 45

-

(c)

Aczel and Feferman’s new connective, \(\equiv_{AF}\), expresses some sort of equivalence, but the exact character of this equivalence is unclear (as is noted by Feferman [22], Section 11). The step biconditional, in contrast, does not express an equivalence. In fact, the relation it expresses is neither reflexive, nor symmetric, nor transitive. Nonetheless, the step biconditional expresses a perfectly clear notion. Hence, while the meaning of the Tarski biconditionals is clear when they are interpreted using ⇔, their meaning is not clear when they are interpreted using \(\equiv_{AF}\).

-

(d)

S(≡) seems to be a theory, set out in a classical language, of partial truth. (Indeed, Aczel and Feferman prove the consistency of a system very similar to S(≡) via a Strong Kleene fixed-point construction.) Our theory is also formulated in a classical language. It is not, however, a theory of partial truth; it is a theory of circular truth. The step T-biconditionals have the character they do because they reflect the circularity of the concept of truth.

4 The Field Conditional

4.1 Exposition

Field’s theory of truth combines fixed-point and revision-theoretic ideas in an interesting way. Field supplements the ground language with a truth predicate, T, and a conditional, which we will represent thus: \(\rightarrow_{F}\). Field interprets the truth predicate via the least fixed-point of the Strong Kleene scheme, and the conditional using a revision construction, which we sketch below. The construction involves, as usual, iterative revisions of a hypothesis by a revision rule. Let us first make the idea of hypothesis precise; then we shall turn to Field’s revision rule.Footnote 46

Let M be, as before, a model of the ground language. Let C be the set of all formulas whose main connective is → F , and let V be the set of all assignments of values to variables relative to the domain of M. Then, hypotheses h for revision are certain functions from (C×V) into the set of truth values {t,f,n}.Footnote 47

Let M + h be an interpretation just like M except that it treats the conditional formulas as atomic and interprets them via the hypothesis h. We are now in a familiar three-valued setting and know, therefore, that there must be a Strong Kleene least fixed-point interpretation \(F_{M,h}\) for truth relative to M + h. Let \(M + h + F_{M,h}\) be the interpretation just like M + h except that it assigns to the truth predicate T the interpretation \(F_{M,h}\). We can now use the Strong Kleene rules to evaluate the entire language relative to \(M + h + F_{M,h}\). (We interpret the conditional formulas, as before, using the hypothesis h.) Let \(val_{M,h}\) be the function that assigns to each pair of formula and assignment its truth value in the resulting evaluation. Let ≤ be the following linear ordering of the truth values:

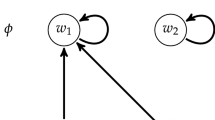
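To make the first ingredient concrete, here is a minimal sketch of a Strong Kleene least fixed-point computation. It rests on our own simplifying assumptions: a finite toy language with no conditionals, so that no hypothesis h is involved. The names `SENTENCES`, `sk_eval`, and `least_fixed_point` are illustrative, not Field’s notation. So is the encoding of f, n, t as 0, 1/2, 1, chosen so that the ordering above coincides with numeric order.

```python
from fractions import Fraction

# f, n, t encoded as 0, 1/2, 1, so that the ordering f <= n <= t is numeric order.
F, N, T = Fraction(0), Fraction(1, 2), Fraction(1)

# Hypothetical toy language: ("atom", bool), ("not", s), ("and", s1, s2),
# and ("T", name), the truth predicate applied to the sentence called name.
SENTENCES = {
    "snow": ("atom", True),           # snow is white
    "T_snow": ("T", "snow"),          # 'snow is white' is true
    "liar": ("not", ("T", "liar")),   # the Liar
    "teller": ("T", "teller"),        # the Truth-Teller
}

def sk_eval(s, interp):
    """Strong Kleene value of s, where interp maps some sentence names to
    t or f; T applied to any other name gets n."""
    kind = s[0]
    if kind == "atom":
        return T if s[1] else F
    if kind == "not":
        return 1 - sk_eval(s[1], interp)
    if kind == "and":
        return min(sk_eval(s[1], interp), sk_eval(s[2], interp))
    if kind == "T":
        return interp.get(s[1], N)
    raise ValueError(f"unknown sentence kind: {kind}")

def least_fixed_point(sentences):
    """Apply the Strong Kleene jump from the empty interpretation until nothing changes."""
    interp = {}
    while True:
        new = {}
        for name, s in sentences.items():
            value = sk_eval(s, interp)
            if value != N:          # only classical values enter the interpretation
                new[name] = value
        if new == interp:
            return interp
        interp = new

lfp = least_fixed_point(SENTENCES)
values = {name: sk_eval(s, lfp) for name, s in SENTENCES.items()}
# values: snow t, T_snow t, liar n, teller n
```

Grounded sentences such as ‘snow is white’ and its truth attribution receive t. Both the Liar and the Truth-Teller remain n in the least fixed point.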

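Then, Field’s revision rule, \(\phi_M\), is defined as follows. Let h be an arbitrary hypothesis, \((A \rightarrow_F B)\) an arbitrary conditional formula, and v an arbitrary assignment of values to variables. Then:

$$\phi_M(h)\bigl((A \rightarrow_F B), v\bigr) = \begin{cases} t & \text{if } \mathit{val}_{M,h}(A, v) \leq \mathit{val}_{M,h}(B, v),\\ f & \text{otherwise.} \end{cases}$$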

Observe that the revised hypothesis assigns a classical truth value, t or f, to each element of its domain.
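Below is a minimal sketch of one application of the rule. It assumes that the Strong Kleene values of antecedents and consequents have already been computed. In the toy, they are supplied directly as constants, with the Liar’s value n taken from the least fixed point. The helper names are hypothetical.

```python
from fractions import Fraction

# Truth values encoded so that the ordering f <= n <= t is numeric order.
F, N, T = Fraction(0), Fraction(1, 2), Fraction(1)

def val(formula, h):
    """Toy stand-in for val_{M,h}: a formula is ("const", v), whose value
    is fixed in advance, or ("cond", name), whose value is read off h."""
    kind, x = formula
    if kind == "const":
        return x
    if kind == "cond":
        return h[x]
    raise ValueError(f"unknown formula kind: {kind}")

def revise(h, conditionals):
    """One application of Field's revision rule: a conditional gets t if
    its antecedent's value is <= its consequent's value, and f otherwise."""
    return {
        name: T if val(a, h) <= val(b, h) else F
        for name, (a, b) in conditionals.items()
    }

LIAR = ("const", N)   # the Liar's value in the Strong Kleene fixed point
TOP = ("const", T)
conditionals = {
    "liar_to_liar": (LIAR, LIAR),   # an instance of A ->_F A
    "top_to_liar": (TOP, LIAR),     # top ->_F Liar
}
h_n = {name: N for name in conditionals}   # the constant hypothesis h_n
h_1 = revise(h_n, conditionals)
# h_1 == {"liar_to_liar": T, "top_to_liar": F}
```

Because x ≤ x for every value x, any instance of \(A \rightarrow_F A\) receives t at every successor stage, even when A is the Liar. The same goes for \(T(\text{‘}A\text{’}) \rightarrow_F A\) and \(A \rightarrow_F T(\text{‘}A\text{’})\), since the fixed point gives \(T(\text{‘}A\text{’})\) the same value as A.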

Let \(h_n\) be the constant hypothesis that assigns the value n to all pairs in \(C \times V\). Construct an On-long revision sequence, \(\mathcal {S}\), that begins with \(h_n\), applies the rule \(\phi_M\) at successor stages, and assigns to unstable elements of \(C \times V\) the value n at limit stages (stable elements keep their stable values). This revision sequence must contain reflection stages α (see Section 3.1). Since the value of \(\mathcal {S}\) at all reflection stages is bound to be the same, the following definition of \(h^{\ast}\) is legitimate: \(h^{\ast} = \mathcal {S}_{\alpha}\), where α is any reflection stage. We shall call \(h^{\ast}\) the reflection hypothesis for M. Now, the canonical model \(M^{\ast}\) over M is this:

$$M^{\ast} = M + h^{\ast} + F_{M,h^{\ast}}.$$

This is the model Field uses to interpret the truth predicate and the conditional.Footnote 48
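The limit rule can be illustrated on a single hypothetical Curry-style conditional K, which says \(T(K) \rightarrow_F \bot\). Over a finite stock of conditionals, successor stages are eventually periodic, so the value at stage ω can be read off the cycle. The sketch assumes this finite setting and computes only the first limit stage, not the full On-long sequence. The function names are our own.

```python
from fractions import Fraction

F, N, T = Fraction(0), Fraction(1, 2), Fraction(1)

def revise_curry(h):
    """Field's rule for the hypothetical sentence K = 'T(K) ->_F bottom'.

    In M + h + F_{M,h}, T(K) takes K's hypothesised value h["K"] and
    bottom takes f, so K is revised to t iff h["K"] <= f.
    """
    return {"K": T if h["K"] <= F else F}

def omega_limit(h0, revise):
    """Hypothesis at stage omega of the revision sequence that starts at h0.

    Over finitely many conditionals the successor stages are eventually
    periodic, so we iterate until some hypothesis recurs. A conditional
    that is constant on the cycle is stable and keeps its value; the
    others are unstable and receive n, as in Field's limit rule.
    """
    seen = []
    h = h0
    while h not in seen:
        seen.append(h)
        h = revise(h)
    cycle = seen[seen.index(h):]
    return {
        name: cycle[0][name] if all(c[name] == cycle[0][name] for c in cycle) else N
        for name in h0
    }

h_omega = omega_limit({"K": N}, revise_curry)   # K gets n at stage omega
```

K oscillates between f and t, so it receives n at stage ω. That returns the sequence to its starting hypothesis, so the same oscillation recurs after every limit. K therefore has the value n in the reflection hypothesis \(h^{\ast}\).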

Let us note that Field improves on Kripke’s theory in one important respect. Unlike Kripke, he can recognize a sense of ‘if’ in which ‘if A then A’ and the Tarski biconditionals are logical truths. Set:

$$A \leftrightarrow_F B =_{df} (A \rightarrow_F B) \,\&\, (B \rightarrow_F A).$$

Then, it can be verified that all sentences of the form

$$A \rightarrow_F A \quad \text{and} \quad T(\text{‘}A\text{’}) \leftrightarrow_F A$$

are bound to be valid in this sense: they are true in the canonical model \(M^{\ast}\) over any ground model M.Footnote 49

4.2 Some Observations

(i) The Field conditional differs semantically from our step conditionals in one important respect. Our step conditionals are cross-stage conditionals: in evaluating these conditionals, one considers the truth values of the antecedent and the consequent at different stages of revision. In contrast, the Field conditional is a same-stage conditional: in evaluating it, one considers the truth values of the antecedent and the consequent at the same stage.

(ii) We have seen that the Field conditional provides a reading of ‘if’ on which ‘if A then A’ is a logical law. Hence, a reading is available to Field on which argument (A3) is valid. However, Field can provide no reading of ‘if’ under which the law ‘if A, then if B then (A & B)’ is valid. Hence, the theory rules invalid some arguments closely related to (A3), for example:

(A3′): Suppose everything Fred says is true. Now suppose that everything Mary says is true. So, everything Fred says as well as everything Mary says is true. By conditional proof, if everything Fred says is true, then if everything Mary says is true then everything Fred says as well as everything Mary says is true.

Field’s theory makes available four different readings of this argument: each of the two occurrences of ‘if’ in the conclusion can be interpreted either as ⊃ or as \(\rightarrow_F\). However, the argument is valid under none of the resulting readings.
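The failure under all four readings can be checked by brute force over the values of A and B. The sketch assumes that A and B contain no conditionals. Their values are then the same at every revision stage, so a Field conditional over them settles at t if its antecedent’s value is ≤ its consequent’s, and at f otherwise. It also assumes that any pair of values is realizable; for instance, A gets n if Fred’s only assertion is the Liar. All helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# f, n, t as 0, 1/2, 1, so that Field's ordering is numeric order.
F, N, T = Fraction(0), Fraction(1, 2), Fraction(1)
VALUES = (F, N, T)

def conj(x, y):
    """Strong Kleene conjunction."""
    return min(x, y)

def hook(x, y):
    """Strong Kleene material conditional, x ⊃ y."""
    return max(1 - x, y)

def field(x, y):
    """Settled value of a Field conditional whose antecedent and
    consequent have stable values x and y."""
    return T if x <= y else F

def law(outer, inner, a, b):
    """'if A, then if B then (A & B)', reading the two ifs as outer/inner."""
    return outer(a, inner(b, conj(a, b)))

def counterexamples(outer, inner):
    """Value pairs (a, b) on which the law fails to receive t."""
    return [(a, b) for a, b in product(VALUES, repeat=2)
            if law(outer, inner, a, b) != T]

readings = {
    "⊃ / ⊃": (hook, hook),
    "⊃ / →F": (hook, field),
    "→F / ⊃": (field, hook),
    "→F / →F": (field, field),
}
failures = {name: counterexamples(*pair) for name, pair in readings.items()}
```

Each reading has a counterexample. For instance, with A = n and B = t the doubly Field reading gets f: t ≤ n fails for the inner conditional, and then n ≤ f fails for the outer one.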

(iii) According to Field’s theory, argument (A10), which has the Truth-Teller as its premiss and ‘snow is black’ as its conclusion, is valid. It also deems valid analogs of (A10) constructed using the Field conditional, such as the following:

$$c = \text{‘}\top \rightarrow_{F} Tc\text{’},\ Tc;\ \text{therefore, snow is black.}$$

This is not only counterintuitive; it puts an unbearable burden on the logic of truth. For it is mysterious how ‘snow is black’ could be deduced, using rules for truth, from the Truth-Teller. The problem could be overcome if Field were to base his construction on certain other three-valued fixed-points and certain other revision sequences. We wish to point out, however, that no choice of specific fixed-point and specific revision sequence is free of unwanted and troublesome validities. The only hope of overcoming the general difficulty is to quantify over a range of fixed points and a range of revision sequences.Footnote 50 If Field were to follow this course, he would need to modify his construction to bring it more in line with the theory we have offered above.Footnote 51
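Why the analog comes out valid can be seen by running Field’s revision rule on it in a toy setting. In this toy, c names ‘\(\top \rightarrow_F Tc\)’, so Tc takes whatever value c is hypothesized to have, and ⊤ takes t. The helper `preserves_t` is our own rendering of validity as preservation of the value t in a single model.

```python
from fractions import Fraction

F, N, T = Fraction(0), Fraction(1, 2), Fraction(1)

def revise_c(h):
    """Hypothetical toy: c names 'top ->_F Tc'. Tc takes c's hypothesised
    value and top takes t, so c is revised to t iff t <= h["c"]."""
    return {"c": T if T <= h["c"] else F}

def preserves_t(premise_values, conclusion_value):
    """Validity as t-preservation in one model: if every premise is t,
    the conclusion must be t as well."""
    return not all(v == T for v in premise_values) or conclusion_value == T

history = [{"c": N}]                 # start from the all-n hypothesis
for _ in range(4):
    history.append(revise_c(history[-1]))
values_of_c = [h["c"] for h in history]   # n, then f from stage 1 onward

Tc = history[-1]["c"]    # the settled value of Tc
identity = T             # 'c = ...' is true, since c names that sentence
snow_is_black = F        # false in a ground model where snow is white
```

The premiss Tc settles at f from the first revision onward, and it does so in every ground model. The argument therefore never has all its premisses t, and preserves t vacuously whatever the conclusion.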

(iv) Field does not provide a definite and precise account of validity even for the propositional fragment of the language. He tells us that

it might be better to adopt the view that what is validated by a given version of the formal semantics outruns “real validity”: that the genuine logical validities are some effectively generable subset of those inferences that preserve value 1 [i.e., t] in the given semantics. (Field [23, p. 277])

But this leaves wide open the logic of the Field conditional.Footnote 52,Footnote 53

4.3 Intersubstitutivity Principle

Our main difficulty with Field boils down to this: that the logic of the Field conditional is obscure; in contrast, the logic of our step conditionals is straightforward. Field has, however, an apparently powerful response: his theory validates the Intersubstitutivity Principle, but the theory we offer does not.

Intersubstitutivity Principle (IP): A and T(‘A’) are intersubstitutable in all extensional contexts. That is, if sentences B and C are exactly alike except that some extensional occurrences of T(‘A’) in one are replaced by A in the other, then B and C are inter-derivable; that is, C can be inferred from B, and B from C.Footnote 54

This principle is bound to fail for us because our theory validates the classical law (A≡A). Hence, our theory rules that (1) is valid:

(1) l = ‘¬Tl’ ⊃ (T(‘¬Tl’) ≡ T(‘¬Tl’)).

Now, if (IP) held, then (1) would be inter-derivable with (2):

(2) l = ‘¬Tl’ ⊃ (T(‘¬Tl’) ≡ ¬Tl).
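What is at stake in passing from (1) to (2) can be checked with a two-row truth table, assuming ordinary two-valued semantics. In any model in which l denotes ‘¬Tl’, the atoms T(‘¬Tl’) and Tl agree, so a single Boolean covers both. In every other model the antecedent l = ‘¬Tl’ is false and both sentences are trivially true. The function names are hypothetical.

```python
# Classical check, restricted to models in which l denotes the sentence ¬Tl.
# There T(‘¬Tl’) and Tl have the same truth value, written p below.

def consequent_1(p: bool) -> bool:
    # Consequent of (1): T(‘¬Tl’) ≡ T(‘¬Tl’)
    return p == p

def consequent_2(p: bool) -> bool:
    # Consequent of (2): T(‘¬Tl’) ≡ ¬Tl, which becomes p ≡ ¬p
    return p == (not p)

sentence_1_values = [consequent_1(p) for p in (True, False)]   # [True, True]
sentence_2_values = [consequent_2(p) for p in (True, False)]   # [False, False]
```

(1) is true in every such model, while (2) is false in every one of them, so (2) cannot be valid.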

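So, (2) would also be valid; this, however, is impossible. In any model in which l denotes ‘¬Tl’, the sentences T(‘¬Tl’) and Tl have the same truth value, so (2) would require T(‘¬Tl’) ≡ ¬T(‘¬Tl’), which no classical model satisfies. Hence, (IP) fails in our theory. This consequence, as Field sees it, is a fatal flaw. Any theory that fails to preserve (IP), Field thinks, ends up stripping the notion of truth of one of its essential functions. Field tells us: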

‘true’ needs to serve as a device of infinite conjunction or disjunction (or more accurately, a device of quantification). ... in order for the notion of truth to serve its purposes, we need what I’ve been calling the Intersubstitutivity Principle. (Field [23, p. 210])

But what precisely is the connection between (IP) and the purposes served by ‘true’? Field’s thought here derives from an idea of W. V. Quine, who proposed that a function of ‘true’ is to enable us to generalize on sentence positions. Quine explains the point as follows in a famous passage in his Philosophy of Logic:

We may affirm the single sentence by just uttering it, unaided by quotation or by the truth predicate; but if we want to affirm some infinite lot of sentences that we can demarcate only by talking about the sentences, then the truth predicate has its use. We need it to restore the effect of objective reference when for the sake of some generalization we have resorted to semantic ascent. (Quine [57, p. 12])

Quine talks about affirmation here, but, as Field observes, the point is broader: ‘true’ serves the generalization function not only when truth attributions are affirmed outright, but also when they are embedded within compound sentences. Consider the following example from Field, in which a truth attribution occurs as the antecedent of a conditional:

(3) If everything that the Conyers report says is true then the 2004 election was stolen.

Suppose that the Conyers report says \(A_1, \ldots, A_n\) and nothing more. Then, the generalization function of ‘true’ requires that (3) be inter-derivable with

(4) If \(A_1\) & … & \(A_n\) then the 2004 election was stolen.

Now, given the supposition, the rules governing quantification ensure that (3) is inter-derivable with

(5) If ‘\(A_1\)’ is true & … & ‘\(A_n\)’ is true then the 2004 election was stolen.

So, to ensure the inter-derivability of (3) and (4), the logic of ‘true’ must render (4) and (5) inter-derivable. Field observes, correctly, that it will not do to confine the intersubstitutability of A and “‘A’ is true” to categorical contexts. The logical rules governing ‘true’ must allow us to move from (4) to (5), and back again. (IP) guarantees that the move is legitimate. Any theory that blocks the move ends up denying an essential function of ‘true’.Footnote 55

Two points should be noted about this argument. First, the argument makes plausible the idea that the generalization function of ‘true’ requires that (4) and (5) be inter-derivable. However, the argument does not establish the precise character of the inter-derivability that needs to obtain. If we run the argument in the simplest case, we arrive at the demand that an inter-derivability needs to obtain between A and “‘A’ is true.” But, plainly, the character of this inter-derivability is not obvious. Indeed, our current inquiry, which has now occupied us for so long, is concerned principally to uncover its precise character.

Second, and this is the more important point, (IP) is not needed to ensure the inter-derivability of (4) and (5). The following weaker principle suffices.

Uniform Substitutivity Principle (UP): Let B be an arbitrary sentence in which all extensional occurrences of the truth predicate T are confined to contexts of the form T(‘A’). Let C be the formula that results when we replace all extensional occurrences of the form T(‘A’) in B by A. (We shall call C the uniform reduct of B.) Then, B and C are inter-derivable.

(IP) allows for mixed substitutions of A for T(‘A’). That is, (IP) allows us to replace some occurrences of the form T(‘A’) by A while leaving other occurrences unchanged. (UP) is not so liberal: if we replace one occurrence, then the principle requires us to replace all extensional occurrences of the form T(‘A’). So, as we saw above, (IP) allows us to derive (2) from (1), and (1) from (2). (UP), on the other hand, allows no such derivations. It allows us to derive only the tautological

l = ‘¬Tl’ ⊃ (¬Tl ≡ ¬Tl)

from (1).
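The contrast between mixed and uniform substitution is easy to make mechanical. The sketch below uses a hypothetical formula representation of our own in which only the quotation form T(‘A’) is rewritten. T applied to a non-quotation term such as l is treated as an unanalysed atom. Quoted material is never rewritten, since it is not in extensional position.

```python
from dataclasses import dataclass

# A hypothetical formula representation, just rich enough for (1), (2), (4), (5).
@dataclass(frozen=True)
class Atom:
    text: str            # an unanalysed sentence, e.g. "Tl" (T applied to the constant l)

@dataclass(frozen=True)
class Not:
    arg: object

@dataclass(frozen=True)
class Bin:
    op: str              # "⊃", "≡", "&", or "if"
    left: object
    right: object

@dataclass(frozen=True)
class TrueOf:
    quoted: object       # T(‘A’): the truth predicate applied to a quotation of A

def substitute(f, pick):
    """Replace the occurrences of T(‘A’) whose left-to-right index i
    satisfies pick(i) by A itself; other occurrences stay unchanged."""
    counter = 0

    def go(g):
        nonlocal counter
        if isinstance(g, TrueOf):
            i = counter
            counter += 1
            return g.quoted if pick(i) else g
        if isinstance(g, Not):
            return Not(go(g.arg))
        if isinstance(g, Bin):
            return Bin(g.op, go(g.left), go(g.right))
        return g  # atoms are left alone

    return go(f)

def uniform_reduct(f):
    """(UP): replace every extensional occurrence of T(‘A’) by A."""
    return substitute(f, lambda i: True)

L_IS_LIAR = Atom("l = ‘¬Tl’")
NOT_TL = Not(Atom("Tl"))
S1 = Bin("⊃", L_IS_LIAR, Bin("≡", TrueOf(NOT_TL), TrueOf(NOT_TL)))   # (1)
S2 = Bin("⊃", L_IS_LIAR, Bin("≡", TrueOf(NOT_TL), NOT_TL))           # (2)

mixed = substitute(S1, lambda i: i == 1)   # (IP)-style: replace only the second occurrence
reduct = uniform_reduct(S1)                # (UP)-style: replace both

A1, A2, STOLEN = Atom("A1"), Atom("A2"), Atom("the 2004 election was stolen")
S5 = Bin("if", Bin("&", TrueOf(A1), TrueOf(A2)), STOLEN)   # (5), with n = 2
S4 = Bin("if", Bin("&", A1, A2), STOLEN)                   # (4), with n = 2
```

Replacing only the second occurrence in (1) yields (2), which (IP) licenses. The uniform reduct of (1) is the tautology l = ‘¬Tl’ ⊃ (¬Tl ≡ ¬Tl). The uniform reduct of (5) is (4), which is all the generalization argument needs.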

This example brings out a vitally important difference between (IP) and (UP): in the presence of self-referential truth, (IP) forces us to deviate from classical logic; (UP), on the other hand, forces no such deviation.Footnote 56

(IP) fails, we noted above, in the theory we have offered. (UP), we now wish to point out, is preserved. Indeed, we can now sharpen (UP): if C is the uniform reduct of B, then although

fails to be valid, the sentence

is valid. Indeed, the latter sentence is a logical consequence of the step T-biconditionals. In short, the generalization function of ‘true’ does not require the strong principle (IP); the weaker principle (UP) suffices. Hence, the generalization function creates no difficulty for our theory.Footnote 57

The problems with the Field conditional are rooted, we think, in Field’s misconceived adherence to (IP). It is this adherence that leads Field to burden his conditional with two separate tasks. The first task is to help overcome an expressive incompleteness in fixed-point theories: more specifically, to equip the language containing the truth predicate with a well-behaved conditional. The second task is to provide one or more readings of ‘if’ that validate Aristotle’s Rules. These are two separate tasks, but they can seem to collapse into one if (IP) is accepted. (IP) implies that a reading of ‘if’ will validate Aristotle’s Rules so long as it validates Identity:

If A, then A.
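Spelled out, the route by which (IP) turns Identity into Aristotle’s Rules is short. In the sketch below, → stands for whichever reading of ‘if’ is in question. Each line after the first is inter-derivable with the first because they differ only in one extensional occurrence of A versus T(‘A’).

```latex
\begin{align*}
  &A \rightarrow A                    && \text{Identity}\\
  &A \rightarrow T(\text{‘}A\text{’}) && \text{(IP) applied to the consequent; this is (TI)}\\
  &T(\text{‘}A\text{’}) \rightarrow A && \text{(IP) applied to the antecedent; this is (TE)}
\end{align*}
```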

The impression arises, therefore, that we can complete both tasks if we can add to the fixed-point language a well-behaved conditional, one that validates Identity. But this thought, rooted in (IP), is erroneous. The problem of interpreting the conditionals in Aristotle’s Rules is quite separate from that of making sense of a well-behaved conditional in the presence of self-referential truth.Footnote 58 The first problem requires us to discover readings of ‘if’ suitable for expressing certain laws governing circular concepts, laws such as Aristotle’s Rules. We showed that no ordinary conditional will do here (Section 2.2), and we went on to argue that two readings of ‘if’ are needed—our step-up and step-down conditionals. Neither of these conditionals, we have seen, validates Identity. The second problem requires us to make sense of truth in an expressively rich context, a context in which fixed-points do not exist. The solution of this problem, we have suggested, requires us to move beyond fixed-point ideas to revision theory. The Field conditional, burdened as it is with two disparate tasks, is unable to perform either one of them in a satisfactory way. The conditional is neither well-behaved nor does it provide a satisfactory reading of Aristotle’s Rules.

Notes

1 Here and below, we treat quotation names as falling among the logical constants; their interpretation does not shift in assessments of validity.