Abstract

Context

One mainstay of soundscape ecology is to understand changes in acoustic patterns, in particular the relative balance between biophony (biotic sounds), geophony (abiotic sounds), and anthropophony (human-related sounds). However, little research has attempted to track these three components automatically.

Objectives

Here, we introduce a 15-year program that aims to estimate soundscape dynamics in relation to possible land use and climate change. We address the relative prevalence of these components during the first year of recording.

Methods

Using four recorders, we monitored the soundscape of a large coniferous Alpine forest at the France-Switzerland border. We trained an artificial neural network (ANN) with mel frequency cepstral coefficients to systematically detect the occurrence of silence and sounds coming from birds, mammals, insects (biophony), rain (geophony), wind (geophony), and aircraft (anthropophony).

Results

The ANN classified each sound type satisfactorily. The soundscape was dominated by anthropophony (75% of all files), followed by geophony (57%), biophony (43%), and silence (14%). The classification revealed the expected phenologies for biophony and geophony and a co-occurrence of biophony and anthropophony. Silence was rare and mostly limited to night-time.

Conclusions

It was possible to track the main soundscape components and to empirically estimate their relative prevalence across seasons. This analysis reveals that anthropogenic noise is a major component of the soundscape of protected habitats, where it can dramatically affect local animal behavior and ecology.

Introduction

Land use and climate change driven by anthropization may alter all types of landscape through mixed effects on plant and animal population ecology (Opdam and Wascher 2004; Opdam et al. 2009; Jennings and Harris 2017). The analysis, prediction and possible mitigation of these effects require appropriate monitoring methods that can retrieve relevant ecological information over large spatial scales and long time periods. Long-term research is a prerequisite to estimate past, current and future changes (Hughes et al. 2017) and to develop general theories in ecology and evolutionary biology (Kuebbing et al. 2018). Ecoacoustics, and more specifically soundscape ecology, which operates at the landscape scale, offers valuable tools to retrieve ecological information with remote field-based sensors on large spatio-temporal scales (Pijanowski et al. 2011; Sueur and Farina 2015). The use of autonomous recorders has led to a long series of recent papers showing, among other things, that ecoacoustics can contribute to a wide range of ecological and conservation applications (Sugai et al. 2018, 2020). Soundscape ecology and ecoacoustics have both been put forward as promising approaches for studying the impacts of climate change (Krause and Farina 2016; Sueur et al. 2019).

A mainstay of soundscape ecology is the understanding of the acoustic patterns that compose a soundscape. These patterns can be separated into three categories: the biophony (biotic sounds), the geophony (abiotic sounds), and the anthropophony (human-related sounds) (Krause 2008). A finer classification can treat technophony, the sound of human technology, as a sub-component of anthropophony (Mullet et al. 2015). Biophony, geophony and anthropophony are repeatedly referred to as major structuring components of soundscapes (Krause 2008; Farina 2014). Biophony contains all sounds produced by living organisms, whether intentional (such as animal communication signals) or incidental (such as leaves rustling). Biophonic sounds can, therefore, serve as proxies of life traits, ecological processes, and biodiversity (Gibb et al. 2018). Acoustic biodiversity can be evaluated with two major types of indicators: acoustic richness and acoustic abundance (Farina 2019). Although geophonic sounds are less studied than anthropogenic and biophonic sounds, they are a major soundscape component, present in every type of environment. Such sounds can be a source of noise or information that can alter species behavior in the short term (Lengagne et al. 2002; Tishechkin 2013; Farji-Brener et al. 2018; Geipel et al. 2019) and the long term (Brumm 2004; Zollinger and Brumm 2015). Anthropogenic noise due to urbanization, transportation, industry, recreation, and military activity is invading all possible landscapes (marine, aquatic and terrestrial), even when they are protected by local or national legislation. Although the negative effects of anthropogenic noise have been thoroughly studied at the individual level through animal behavior and animal physiology studies (Slabbekoorn and Ripmeester 2008; Barber et al. 2009; Brumm 2013; Shannon et al. 2015), less research has been conducted at larger ecological scales.
Anthropophony has been shown to be a prevalent part of preserved soundscapes (Francis et al. 2011a, b; Mullet et al. 2015) and to play a role in structuring soundscapes near massive noise generators, such as industrial sites (Duarte et al. 2015; Deichmann et al. 2017) or highways (Munro et al. 2018; Khanaposhtani et al. 2019). Anthropogenic noise can reduce the benefits that humans may experience from natural soundscapes (Francis et al. 2017). Through cascading effects, it can also change communities of singing species by altering species interactions (Francis et al. 2009), disturbing trophic chains (Hanache et al. 2020), and disrupting pollination and seed dispersal by modifying animal communities (Francis et al. 2012). Because biophony, geophony and anthropophony are part of a single ecological system, it appears necessary to understand their cross-correlated patterns over the long term.

Therefore, as a very first step, it seems essential to automatically assess the presence of biophony, geophony, and anthropophony in long-term soundscape monitoring protocols. This decomposition is challenging since biotic, abiotic and human sound sources can be highly diverse in amplitude, time and frequency patterns; moreover, they often overlap and interfere with each other. So far, such decomposition has been performed either manually through listening and spectrographic visualization (Matsinos et al. 2008; Liu et al. 2013; Duarte et al. 2015; Mullet et al. 2015; Gasc et al. 2018; Rountree et al. 2020) or on the basis of frequency delimitation, with anthropophony assumed to occur mainly between 1 and 2 kHz (Joo et al. 2011; Gage and Axel 2014; Fairbrass et al. 2017; Ross et al. 2018; Doser et al. 2020). However, the choice of frequency limits is questionable, as animals can produce sounds in a lower or higher frequency range (Kasten et al. 2012). The biophony/anthropophony ratio has been quantified through spectrographic decomposition, but neither geophony nor silence was estimated (Dein and Rüdisser 2020). Finally, artificial intelligence appears to be a promising solution to tease apart the soundscape components. Convolutional neural networks (CNN) have indeed been used in acoustic biodiversity monitoring (Sethi et al. 2020), soundscape labelling (Bellisario et al. 2019), species identification (LeBien et al. 2020) and sound source separation (Lin and Tsao 2019). Yet most of these recent tools require substantial effort, as they rely on complex algorithms, large training datasets and costly hardware.

In Western Europe, mountain landscapes may undergo considerable land use and climate change at the same time. The Haut-Jura region, a cold, mid-altitude area spanning France and Switzerland, is a typical example of a mountain landscape that could be substantially altered by climate change. It is dominated by a typical coniferous forest landscape inhabited by a very rich diversity of resident and migrating bird species. The region also includes the city of Geneva, the second-largest urban area in Switzerland, which is served by one of Europe’s major airports. The region is therefore a mix of cold, preserved natural environments and urbanized zones.

To estimate possible long-term changes in soundscape composition in relation to land use and climate change, we started a 15-year monitoring program in one of the largest continuous coniferous forests in Europe, located in the Parc naturel Régional du Haut-Jura and protected by several conservation tools. Using the first year of audio data, encompassing 2336 h of sound files, we first aim to understand the dynamics of the soundscape categories over the seasons. We trained an artificial neural network, based on cepstral features, to automatically classify biophony (birds, mammals and insects), geophony (wind and rain), anthropophony (aircraft) and silence. The annual and diel phenology of each class could then be estimated, serving as a baseline for further long-term research and revealing, for the first time, the predominance of aircraft noise, which competes with biophony and limits silence in a protected natural area.

Material and methods

Study site

The site consisted of a cold temperate climax forest locally named ‘Risoux’. The forest is located in eastern France in the Jura Mountains at the Swiss border, and the closest city is Morez (46° 31′ 22″ N, 6° 01′ 23″ E). The forest consists of a 4400 ha West–East flat anticline crossed by 26 km of roads, hiking and cross-country skiing trails. The climate is semi-continental. The anticline and the average altitude of 1230 m a.s.l. foster cold air conservation, inducing a low annual average temperature (5.5 °C), heavy snowfall (> 2 m), and an extended winter with a very short growing season, between April and October. Vegetation is mainly composed of European spruce (Picea abies), European beech (Fagus sylvatica), and European silver fir (Abies alba), their relative density depending on altitude. Owing to its rich native flora and fauna, this old forest is protected by a natural park (Parc Naturel Régional du Haut-Jura) and has been part of the European Natura 2000 network since 2003. Conservation measures and legal protection aim to ensure the long-term protection of Europe's most valuable and threatened species and habitats, listed under both the European Birds Directive and the Habitats Directive. However, these conservation tools do not exclude human activities, and the Risoux Forest is still exploited for recreational hunting and commercial logging.

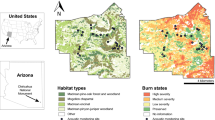

Recording

The forest soundscape was recorded using four automatic SongMeter 4 recorders (Wildlife Acoustics Inc, Concord, MA, USA). These recorders were installed across the Risoux Forest central zone, along a West–East axis. The distance between the recorders was 1.00 ± 0.10 km. The position of the recorders was chosen to (1) cover the forest area, (2) sample a single habitat defined by the dominance of the European spruce, (3) avoid pseudo-replication between neighboring recorders, and (4) avoid anthropogenic noise due to car traffic and local activities (Fig. 1).

Map of the study site. The recording site was located in the Haut-Jura, in France at the border between France and Switzerland (inset). The four recorders were evenly distributed along the central zone of the Risoux forest, which is crossed by roads and ski trails (left). The forest mainly consists of European spruce (Picea abies) (right)

The recorders were attached to trees at a height of 2.5 m and oriented on a 45°–315° axis so that the microphones were parallel to the main circulation axes. The microphones were protected with a plastic roof to reduce rain noise and damage and were facing south to limit ice and moisture. The recorders were powered with an external 12 V battery and were programmed to record for 1 min every 15 min (1’ on, 14’ off, 96 recordings per day) all year round, in accordance with the literature (Depraetere et al. 2012; Bradfer-Lawrence et al. 2019). The recordings started on July 13, 2018 and were programmed to continue for 15 years. Sounds were recorded in lossless stereo .wav format with a 44.1 kHz sampling frequency, 16-bit digitization depth, no analog filtering and a total gain of 42 dB. The left and right channels were considered duplicate sampling units, so only the left channel was analyzed. The sound database covering the first monitoring year began on August 1st, 2018 and ended on July 31st, 2019. This recording schedule resulted in a yearly database of 140,160 sound files (96 × 365 days × 4 sites) for a total of 2336 h of recording. Due to some recorder failures, the effective database contained 137,087 sound files for a total of 2285 h of effective recording.
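The file counts and durations above follow from simple arithmetic on the sampling schedule. A short Python check, using only the dates, duty cycle and effective file count stated in the text, reproduces them:

```python
from datetime import date

# Duty cycle stated in the text: 1 min recorded every 15 min.
MINUTES_PER_FILE = 1
FILES_PER_DAY = 24 * 60 // 15        # 96 recordings per day
N_SITES = 4

start, end = date(2018, 8, 1), date(2019, 7, 31)
n_days = (end - start).days + 1      # inclusive range: 365 days

nominal_files = FILES_PER_DAY * n_days * N_SITES
nominal_hours = nominal_files * MINUTES_PER_FILE / 60

effective_files = 137_087            # after recorder failures (value from the text)
effective_hours = effective_files * MINUTES_PER_FILE / 60

print(n_days, nominal_files, nominal_hours)     # 365 140160 2336.0
print(effective_files, round(effective_hours))  # 137087 2285
print(f"{effective_files / nominal_files:.1%} of scheduled files retained")  # 97.8% ...
```

The same arithmetic shows that about 2.2% of the scheduled files were lost to recorder failures.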

Supervised sound classification

In order to assess variations in the main forest soundscape components over space and time, a supervised sound classification was performed. Five sound types were classified: aircraft (anthropophony), wind (geophony), rain (geophony), biophony, and silence (Fig. 2). Anthropophony was dominated by aircraft, as the recording sites were chosen to be distant from other human activities (ground-based transportation, hiking, skiing, hunting and logging). Biophony was defined as any sound attributed to animal vocalization or movement. Silence was defined as the absence of any emergent sound. Classification was based on an artificial neural network (ANN) trained on a subsample of manually labelled sounds. The subsample contained 1% (i.e. 1402 files) of all recordings and was built to ensure a balanced dataset representative of (1) the four recording sites, (2) the 12 months of the year, (3) the seven days of the week and (4) the 24 h of the day-night cycle. Each recording was then listened to and manually classified according to the five sound types by one of us (EG) using Audacity v2.3.2 (Audacity Team 2019). The files were listened to through high-quality noise-cancelling headphones (Bose Quiet Comfort 35 II, Bose, USA) and visualized as a grey-scale spectrogram with a Fourier window of 1024 samples, no overlap and a dynamic range of 60 dB.
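A balanced 1% training subsample of the kind described above could be drawn by stratified random sampling. The sketch below is an illustration under stated assumptions, not the authors' procedure: it builds a hypothetical file index from the recording schedule, enforces equal allocation over site × month strata only, and relies on random draws within each stratum to spread the files across weekdays and hours.

```python
import random
from collections import Counter
from datetime import datetime, timedelta


def schedule(sites=4, start=datetime(2018, 8, 1), days=365, step_min=15):
    """Hypothetical file index: one (site, timestamp) record per scheduled recording."""
    records = []
    for site in range(1, sites + 1):
        t = start
        for _ in range(days * 24 * 60 // step_min):
            records.append((site, t))
            t += timedelta(minutes=step_min)
    return records


def stratified_sample(records, n, seed=1):
    """Draw n records spread as evenly as possible over (site, month) strata."""
    rng = random.Random(seed)
    strata = {}
    for rec in records:
        strata.setdefault((rec[0], rec[1].month), []).append(rec)
    keys = sorted(strata)
    base, extra = divmod(n, len(keys))      # 1402 / 48 strata -> 29 each, 10 leftovers
    sample = []
    for i, key in enumerate(keys):
        k = base + (1 if i < extra else 0)  # first `extra` strata get one more file
        sample.extend(rng.sample(strata[key], k))  # without replacement
    return sample


records = schedule()
sub = stratified_sample(records, n=round(0.01 * len(records)))
counts = Counter((site, t.month) for site, t in sub)
print(len(records), len(sub))                 # 140160 1402
print(min(counts.values()), max(counts.values()))  # 29 30
```

Balancing weekdays and hours explicitly would require a further allocation step within each stratum; equal site × month allocation is the simplest design that matches the first two criteria.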

Sound types monitored. Examples of each sound type automatically recorded and detected. Short-time Fourier transform parameters: Hanning window of 512 samples with no overlap, 50 dB dynamic range with a relative maximum defined for each sample. Obtained with the seewave R package (Sueur 2018). For the sake of clarity, the duration of the examples was limited to 10 s, with only one type of sound per example, but the ANN could detect several sound types in each recording

The recordings were parameterized with mel frequency cepstral coefficients (MFCCs), obtained with the R package tuneR v1.3.3 (Ligges et al. 2018). Successive MFCCs were computed over time using a moving window of 512 samples with no overlap between successive windows. The resulting time precision (δt = 512/44,100 ≈ 0.0116 s) was sufficient to track fast sound events. This computation resulted in a matrix of 26 rows (MFCCs) and about 86 columns (windows) per second of audio, i.e. roughly 5170 columns for each 1 min recording. The matrix was then compressed by averaging each of the 26 MFCC rows over time, so that each recording was parameterized with a vector of 26 coefficients (Sueur 2018). These coefficients were the input data of the ANN multi-label classification algorithm, run with the R package neuralnet v1.44.2 (Fritsch et al. 2019). The settings of the ANN algorithm were: (1) an input layer of 26 neurons corresponding to the 26 MFCCs, (2) a hidden layer of five neurons, (3) an output layer of five neurons corresponding to the five sound types, and (4) a stopping criterion (threshold for the partial derivatives of the error function) of 0.4. The algorithm was resilient backpropagation with weight backtracking, and the activation function was logistic. Because the algorithm starts from random weights, the ANN procedure was repeated so that the true positive rate (TPR) was maximized and the false positive rate (FPR) minimized. The output of the ANN was the probability of presence of each sound type. The optimal decision threshold for each sound type was automatically determined using the inflection point of the corresponding receiver operating characteristic (ROC) curve. The final selected ANN was then used to classify the remaining dataset (140,160 − 1402 = 138,758 files). The final output was therefore a presence/absence (0/1) decision for each sound type and each recording, yielding a presence/absence matrix of 140,160 rows (recordings) and five columns (sound types).
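The feature pipeline and network shape described above can be sketched in plain Python. This is a hedged illustration: it reproduces the window arithmetic, the time-averaging of the 26 × T MFCC matrix, and a forward pass through a 26-5-5 network with logistic activations. The weights are random placeholders (training by resilient backpropagation, as done with neuralnet, is not shown), the input matrix is fake, and the 0.5 decision thresholds are hypothetical stand-ins for the ROC-derived ones.

```python
import math
import random

SR, WIN = 44_100, 512                 # sampling rate (Hz), window length (samples)
dt = WIN / SR                         # time step of non-overlapping windows, ~0.0116 s
n_windows = (60 * SR) // WIN          # full windows in a 1 min recording (5167)

SOUND_TYPES = ["aircraft", "wind", "rain", "biophony", "silence"]


def average_mfcc(mfcc):
    """Collapse a 26 x T MFCC matrix (rows = coefficients) to one mean per coefficient."""
    return [sum(row) / len(row) for row in mfcc]


def logistic(x):
    """Numerically stable logistic activation."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def dense(inputs, weights, biases):
    """Fully connected layer followed by the logistic activation."""
    return [logistic(b + sum(w * x for w, x in zip(ws, inputs)))
            for ws, b in zip(weights, biases)]


def init_layer(n_in, n_out, rng):
    """Random placeholder weights; a trained network would supply these."""
    weights = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.gauss(0.0, 1.0) for _ in range(n_out)]
    return weights, biases


rng = random.Random(0)
W1, b1 = init_layer(26, 5, rng)       # 26 MFCC inputs -> 5 hidden neurons
W2, b2 = init_layer(5, 5, rng)        # 5 hidden neurons -> 5 sound-type outputs


def classify(features, thresholds):
    """Return per-type presence probabilities and 0/1 decisions (multi-label)."""
    probs = dense(dense(features, W1, b1), W2, b2)
    decisions = {name: int(p >= thresholds[name]) for name, p in zip(SOUND_TYPES, probs)}
    return probs, decisions


# Usage with a fake 26 x n_windows MFCC matrix (hypothetical values).
fake_mfcc = [[rng.gauss(0.0, 1.0) for _ in range(n_windows)] for _ in range(26)]
features = average_mfcc(fake_mfcc)
probs, decisions = classify(features, {name: 0.5 for name in SOUND_TYPES})
print(round(dt, 4), n_windows, len(features))   # 0.0116 5167 26
print(decisions)
```

Because each output neuron has its own logistic activation and threshold, several sound types can be flagged as present in the same recording, which is what makes the classifier multi-label. The network has only 165 weights and biases (26 × 5 + 5 + 5 × 5 + 5), which is consistent with training in minutes on a standard computer.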

Statistical analysis

The presence/absence matrix obtained with the ANN was first treated with an ordination analysis to assess the role of temporal and spatial factors in sound type classification. A multiple correspondence analysis (MCA) was applied to the matrix, using sound type presence/absence as the variables to be explained and (1) recording sites, (2) months, (3) week days and (4) hours as explanatory variables. MCA results were visualized by plotting the projections of both explained and explanatory variables on the first two axes. The analysis was run with the R package FactoMineR v1.34 (Lê et al. 2008).
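An MCA is a correspondence analysis of the complete disjunctive (indicator) table of the categorical variables. The sketch below, assuming NumPy and a toy table with hypothetical values, builds that table and returns the share of total inertia carried by each axis, the quantity reported as "variance explained" for the first two axes. Unlike the FactoMineR analysis, it treats all variables as active and computes no coordinates or contributions.

```python
import numpy as np


def disjunctive(table):
    """Complete disjunctive (indicator) coding of {variable: list of categories}."""
    blocks, names = [], []
    for var, values in table.items():
        levels = sorted(set(values))
        blocks.append(np.array([[v == lev for lev in levels] for v in values], dtype=float))
        names += [f"{var}={lev}" for lev in levels]
    return np.hstack(blocks), names


def mca_inertia_percent(Z):
    """Percentage of total inertia per axis, from a CA of the indicator matrix Z."""
    P = Z / Z.sum()                                      # correspondence matrix
    r, c = P.sum(axis=1), P.sum(axis=0)                  # row and column masses
    S = (P - np.outer(r, c)) / np.sqrt(np.outer(r, c))   # standardized residuals
    eig = np.linalg.svd(S, compute_uv=False) ** 2        # principal inertias, descending
    return 100 * eig / eig.sum()


# Toy table (hypothetical values): two sound types and a time-of-day factor.
table = {
    "aircraft": [1, 1, 0, 0, 1, 0, 1, 0],
    "silence":  [0, 0, 1, 1, 0, 1, 0, 0],
    "period":   ["day", "day", "night", "night", "day", "night", "day", "day"],
}
Z, names = disjunctive(table)
pct = mca_inertia_percent(Z)
print(names)
print(np.round(pct[:2], 2), round(float(pct[:2].sum()), 2))
```

With Q variables and J categories in total, at most J − Q axes carry non-zero inertia, which is why MCA percentages on the first axes tend to look modest compared with a PCA.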

Spatio-temporal trends of sound types were also assessed with generalized additive models (GAMs) and generalized additive mixed models (GAMMs). These models account for nonlinear relationships between the variables to be explained (number of detections per hour and per month) and the explanatory variables, i.e. the spatio-temporal covariates (hour and month as continuous variables) and the sites (categorical variable) (Hastie and Tibshirani 1986). The nonlinear relationships were modelled with smooth functions of covariates, which can be isotropic and/or tensor product smooths (Wood 2017). Tensor product smooths model interactions between covariates measured in different units. A tensor product can include both the main effects of the two variables and their interaction (Fewster et al. 2000). Model selection was based on the Akaike information criterion (ΔAIC < 2) (Burnham and Anderson 2002; Shadish et al. 2014). Seasonal patterns, their differences between sites and common nonlinear temporal trends were explored with boxplots, quantile–quantile plots and frequency plots (Zuur et al. 2010; Zuur and Ieno 2016). Based on this data exploration, a full model was then fitted for each of the five variables to be explained with the function gam from the R package mgcv (Wood 2017), using a Poisson error distribution and a log link function. Collinearity among explanatory variables was assessed on a standard regression model with the variance inflation factor (VIF), using the function vif from the R package car (Fox and Weisberg 2011). Concurvity among smooth functions of covariates was checked using the function concurvity from the R package mgcv (Ramsay et al. 2003; Wood 2017). The residual temporal dependence of each of the five models was visualized with autocorrelation functions (ACF). Since GAMs do not include autocorrelated error structures, the models were refitted as GAMMs with an adaptive autoregressive error structure using the function gamm from the R package mgcv.
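The response variables of these models are counts of detections per hour of the day within each month (and per site where relevant), derived from the ANN presence/absence matrix. A minimal aggregation sketch, assuming a hypothetical record layout of (site, timestamp, {sound type: 0/1}), is:

```python
from collections import Counter
from datetime import datetime


def detections_per_cell(records, sound_type):
    """Count files with `sound_type` detected, per (site, month, hour-of-day) cell.

    `records` is an iterable of (site, timestamp, {sound_type: 0/1}) tuples,
    a hypothetical layout for rows of the presence/absence matrix. Cells with
    no detection are kept with a zero count, which matters for count models.
    """
    counts = Counter()
    for site, ts, labels in records:
        counts[(site, ts.month, ts.hour)] += labels.get(sound_type, 0)
    return counts


records = [
    (1, datetime(2019, 6, 1, 6, 0), {"biophony": 1, "aircraft": 1}),
    (1, datetime(2019, 6, 1, 6, 15), {"biophony": 1, "aircraft": 0}),
    (1, datetime(2019, 6, 2, 6, 30), {"biophony": 1, "aircraft": 1}),
    (1, datetime(2019, 6, 2, 23, 0), {"biophony": 0, "aircraft": 0}),
]
bio = detections_per_cell(records, "biophony")
print(bio[(1, 6, 6)], bio[(1, 6, 23)])   # 3 0
```

Pooling all days of a month into one hourly cell gives up to about 120 files per cell and site (4 files per hour over roughly 30 days), which is the scale of the hourly counts modelled with a Poisson or Tweedie distribution.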
When over-dispersion occurred, models were refitted with a Tweedie distribution. The relevance of this distribution correction was evaluated by AIC comparison. Models were validated after checking residual assumptions with graphical diagnostic tools, using the function gam.check from the R package mgcv, and with the non-parametric tests compiled in the function checkresiduals from the R package forecast (Hyndman and Khandakar 2008). All statistical analyses were performed with R software version 3.6.1 (R Core Team 2019).
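Two of the residual checks mentioned above can be written out directly: the sample autocorrelation function (ACF) with a Ljung–Box portmanteau statistic, the test that forecast::checkresiduals reports for many model types, and the Pearson dispersion statistic commonly used to flag over-dispersion in a Poisson fit. This is an illustrative sketch; the toy residuals and fitted means are hypothetical, and in practice Q would be compared with a chi-squared distribution.

```python
def acf(x, nlags):
    """Sample autocorrelation at lags 0..nlags (biased estimator, as in most software)."""
    n = len(x)
    mean = sum(x) / n
    d = [v - mean for v in x]
    denom = sum(v * v for v in d)
    return [sum(d[t] * d[t + k] for t in range(n - k)) / denom for k in range(nlags + 1)]


def ljung_box(x, h):
    """Ljung-Box statistic Q = n(n+2) * sum_{k=1..h} r_k^2 / (n-k)."""
    n = len(x)
    r = acf(x, h)
    return n * (n + 2) * sum(r[k] ** 2 / (n - k) for k in range(1, h + 1))


def pearson_dispersion(y, mu, n_params):
    """Pearson chi-square / residual df; values well above 1 suggest over-dispersion."""
    chi2 = sum((yi - mi) ** 2 / mi for yi, mi in zip(y, mu))
    return chi2 / (len(y) - n_params)


resid = [1, -1] * 5                    # strongly alternating toy residuals
print(acf(resid, 2))                   # [1.0, -0.9, 0.8]
print(round(ljung_box(resid, 1), 2))   # 10.8
print(pearson_dispersion([0, 6, 0, 6], [3, 3, 3, 3], n_params=1))  # 4.0
```

A dispersion value far above 1, as in the toy example, is the kind of signal that motivates switching from a Poisson to a Tweedie distribution.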

Models for biophony and silence were designed to establish whether the number of detections followed a site-specific pattern over time or whether sites shared a common pattern (Pedersen et al. 2019). Three models were built. Model 1 (M1) tested hourly and monthly effects with a common trend over time for the four sites, using a tensor product smooth combining a cyclic cubic spline (hour) and a thin plate regression spline (month). Model 2 (M2) tested hours, months and sites with a common trend over time and site-specific random deviations around the common trend. It had the same structure as M1 plus an additional three-dimensional tensor product that integrated sites as a random smooth. Model 3 (M3) tested hours, months and sites using a common trend and a site-specific trend over time, with the M1 structure plus a two-dimensional spatio-temporal tensor product for each site. The mathematical expression of each model is detailed in the Supplementary Information.

Regarding biophony, model M1 fitted with a second-order autoregressive structure did not show any residual issues. Model M2 violated the homoscedasticity assumption, even after being refitted with a second-order autoregressive structure, a problem related to the low number of levels of the site variable. Model M3, applied to biophony, did not converge.

Regarding silence, model M1 was adjusted for temporal dependence with a first-order autoregressive structure and fitted with a Tweedie distribution with a square root link and a p parameter of 1.01. Model M3 did not converge, and model M1 had a lower AIC than model M2.

Regarding wind, rain and aircraft, the site effect was not considered due to a risk of pseudo-replication. Model M1 was fitted with a first-order autoregressive structure for aircraft and wind. A Tweedie distribution with a logarithm link and a p parameter of 1.06 was specified for the wind model. For rain, model M1 showed residual patterns, as did a model with an hourly smooth by monthly trend.

Results

Automatic sound type classification

The area under the ROC curve (AUC) obtained when training the artificial neural network was 0.92 for aircraft, 0.93 for wind, 0.88 for rain, 0.85 for biophony, and 0.95 for silence. The respective false positive rates (FPR) and true positive rates (TPR) were 3.75% and 71.66% for aircraft, 13.56% and 72.51% for biophony, 12.99% and 87.05% for wind, 11.11% and 78.26% for rain, and 10.65% and 94.12% for silence. Applying the trained artificial neural network to the total database led to the detection of 102,195 files with aircraft (75%), 58,348 files with biophony (43%), 77,833 files with geophony (57%), 49,287 files with wind (36%), 28,546 files with rain (21%), and 18,890 files with silence (14%).
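The AUC, TPR and FPR values above come from ROC analysis of the ANN output probabilities against the manual labels. The following sketch computes an ROC curve and its trapezoidal AUC for one sound type on a toy example. For threshold selection it uses Youden's J statistic (maximizing TPR − FPR), a common alternative swapped in here for illustration; it is not the inflection-point rule used in the study.

```python
def roc_points(scores, labels):
    """ROC curve as (FPR, TPR, threshold) for 'present if score >= threshold'.

    Assumes both classes (0 and 1) occur in `labels`.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    points = [(0.0, 0.0, float("inf"))]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / neg, tp / pos, t))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0, _), (x1, y1, _) in zip(points, points[1:]))


def youden_threshold(points):
    """Threshold maximizing TPR - FPR; ties go to the highest threshold."""
    fpr, tpr, t = max(points[1:], key=lambda p: p[1] - p[0])
    return t, fpr, tpr


scores = [0.1, 0.4, 0.35, 0.8]   # hypothetical ANN probabilities for one sound type
labels = [0, 0, 1, 1]            # hypothetical manual labels
pts = roc_points(scores, labels)
print(auc(pts))                  # 0.75
print(youden_threshold(pts))     # (0.8, 0.0, 0.5)
```

Each sound type gets its own curve and threshold, so a type such as aircraft can be tuned for a low FPR while another is tuned for a high TPR.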

The percentage of detections per month and per hour revealed specific patterns for each sound type (Figs. 3, 4). Aircraft detections were nearly constant across months and less frequent during the night than during the day, with a maximum around noon and a minimum at 3 am. Wind was almost evenly distributed across the year with the exception of a peak in March, and showed a broad peak around 4 pm. Rain showed a maximum in December and a minimum in January, and was evenly distributed across days and hours. Biophony followed a clear seasonal pattern, with a peak in June corresponding to spring and a trough in January corresponding to winter. In contrast, silence did not show any particular seasonal pattern but a clear diel pattern, with less silence during the day than during the night and a peak at 3 am. This confirmed that silence patterns were opposite to those of aircraft sounds, as suggested by the MCA.

Ordination analysis

The first two axes of the multiple correspondence analysis (MCA) explained 65.13% of the total variance. The third and fourth axes explained an additional 28.78% of the variance but did not reveal meaningful patterns. The projection of the variables to be explained (aircraft, biophony, wind, rain, silence) and the explanatory variables (sites, months, week days and hours) on the first two axes revealed that sites and week days had a weight close to zero (Fig. 5a). A second MCA was therefore computed excluding these variables. The first two axes of this second MCA explained 65.40% of the total variance.

Multiple correspondence analyses (MCA). a First two axes, explaining 66.13% of the total variance. The variables to be explained were aircraft, biophony, wind, rain and silence, and the explanatory variables were site, month, week day and hour. b First two axes, explaining 65.40% of the total variance. The variables to be explained (red) were aircraft, biophony, wind, rain and silence. The explanatory variables were month (green) and hour (blue)

The projection of the variables to be explained on the first two axes showed that silence and aircraft contributed the most to the first MCA dimension, whereas rain and wind contributed strongly to the second dimension (Fig. 5b). Silence and aircraft were negatively associated: silence occurred when aircraft were absent. Rain and wind were positively associated: rain tended to occur together with wind. The projection of the explanatory variables revealed that the hour categories could be separated into two groups: (1) night hours, between 22:00 and 06:00, were located close to silence presence and aircraft absence, and (2) day hours, between 07:00 and 21:00, were located close to silence absence and to aircraft, wind and rain presence. The month categories could be split into three groups: (1) March and December were located close to wind and rain presence, (2) August and September were close to wind and rain absence, and (3) the other months were close to the origin.

Regression analysis

The phenology of each sound type showed a clear pattern according to months and hours (Fig. 6). The model for aircraft explained 93.7% of the variance, with a tensor product term significant at 169.3 estimated degrees of freedom, suggesting a highly nonlinear relationship between the temporal covariates and the number of detections (Fig. 6). Aircraft were characterized by a clear diel pattern, with a morning activity peak followed by a plateau until 10 pm and then a sudden decline, at all four sites. The morning peak was reached at 8 am from November to March and at 7 am for the rest of the year.

The model for wind explained 72% of the variance, with a tensor product term significant at 108.6 estimated degrees of freedom. Wind followed three diel patterns depending on the month of the year: a low, constant number of detections from December to January, and an afternoon bell shape during the rest of the year, spanning 8 am to 7 pm in February and 7 am to 9 pm in August. The number of detections per hour was lowest, at around 40, in October, November and January, and highest, at around 80, in March and July. For rain, the model did not converge and could not be analyzed.

The model for biophony explained 89.7% of the variance, with a tensor product term significant at 121.3 estimated degrees of freedom, suggesting a highly nonlinear relationship between the temporal covariates and the number of detections at the four sites. Biophony detections followed two diel patterns that changed monthly: (1) from March to August, the highest peak was reached in the morning, followed by a downward trend, a second peak and a sudden decline in the following hour, and (2) from September to December, a similar pattern was observed but without the second, afternoon peak. A slight morning peak was also observed in November, probably due to specific autumn sounds such as bird and deer vocalizations. The time of the first peak differed between months, with the earliest activity at 6 am from May to July, progressively shifting to 9 am from December to January. Biophony was also characterized by a yearly trend, with activity increasing from February to June and decreasing two-fold from September to January.

The model for silence explained 93.6% of the variance, with a tensor product term significant at 130.5 estimated degrees of freedom (Fig. 6). Silence occurred at night, following a bell-shaped curve, and was absent during the day. Silence was prevalent from 10 pm to sunrise.

Discussion

Monitoring and understanding natural soundscapes over the long term requires distinguishing biophony, geophony and anthropophony (Krause 2008; Farina 2014). This step is a prerequisite to (1) track the relative importance of each component across space and time and (2) study the dynamics and composition of each component individually (that is, after filtering out the others). However, this preliminary task is challenging because of the variability of each component, to the point that a separation based on simple sound parameterization is not optimal. Convolutional neural networks (CNN) offer attractive solutions but are difficult to implement because they need large training datasets and long computing times. Unsupervised clustering methods solve some of the CNN limitations. They require neither annotations nor specific hardware, but their classification performance depends on the right choice of acoustic features, such as acoustic indices (Sueur et al. 2014; Buxton et al. 2018), to disentangle acoustic components and create meaningful clusters (Phillips et al. 2018). Here, we used a simple artificial neural network (ANN) with a single hidden layer applied to a vector of MFCC features to decompose the soundscape of a cold protected forest over the first year of a 15-year monitoring program. The training dataset contained only a small fraction (1%) of the complete dataset, and its labelling was completed in less than 24 working hours by a single person (EG). The ANN was trained in a few minutes on a standard computer and returned robust results, with an area under the curve between 0.85 and 0.95. This workflow therefore appears reliable and easy to implement on local computers using standard, open-source scientific languages (e.g. R, Python and Julia). The main drawback of this approach was that the ANN output consisted of presence/absence data, so no information on the abundance of the soundscape components was available.
Although this lack of abundance information may limit fine-scale interpretation, presence/absence data can still reveal meaningful soundscape phenology at annual and diel scales.

The annual and diel phenologies of each soundscape component showed patterns that were indeed consistent with the forest structure, surrounding human activities, and local weather. In particular, the annual phenology of biophony followed vegetation phenology, increasing during plant growth and flowering between March and August, i.e. in spring and summer. During this period, the diel phenology of biophony followed the activity of the most acoustically active species. In particular, biophony rose at sunrise and declined at sunset, at the time of the dawn and dusk bird choruses (Gil and Llusia 2020).

It should be noted that anthropophony, which was dominated by aircraft noise, and biophony showed convergent daily patterns all year long, as aircraft followed a clear diel cycle corresponding to human activity at the nearest airport (Geneva, Switzerland). Aircraft were detected in 75% of the files, revealing widespread noise intrusion into the soundscape of a protected, high-diversity forest. Aircraft noise has already been shown to be pervasive at a large scale in preserved or remote areas of the USA (Dumyahn and Pijanowski 2011; Barber et al. 2011; Buxton et al. 2017; Stinchcomb et al. 2020). Aircraft noise affects bird distribution, behaviour and ecology (Gil et al. 2014; Dominoni et al. 2016; Sierro et al. 2017; Wolfenden et al. 2019) and might induce cascading effects on ecosystems and landscapes (Francis et al. 2012). The growth of air transport seems inescapable: French civil air transport is expected to double between 2030 and 2050 (Courteau 2013). The Haut-Jura in particular is characterized by a rising trend in flight activity, reflecting a century of aircraft development. In 2019, Geneva airport welcomed 17.9 million passengers, a 58% increase since 2009 (Genève Aéroport 2020). All year long, daily flight activity at non-cargo airports (such as Geneva airport) starts around 5 am and ends around 10 pm (Wilken et al. 2011), overlapping with the bird choruses. The evaluation of local aircraft noise and its impact on local forest ecosystems is therefore a major issue for landscape conservation. The reduction of aircraft traffic since March 2020 due to the COVID-19 pandemic drastically changed the soundscape, and effects on bird activity have already been documented (Derryberry et al. 2020). Further studies should estimate the possible acoustic interference between aircraft noise and biophony inside and outside lockdown periods.
Such a study should be achieved by estimating sound overlap at a fine time scale and, more importantly, along the frequency spectrum. Referring to civil aircraft traffic data, it would also be possible to compare the acoustic impact of aircraft traffic according to aircraft altitude, trajectory and national and international destinations.
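Overlap along the frequency axis can diverge sharply from simple co-occurrence in time. The minimal sketch below shows this under a strong assumption: binary masks marking which spectrogram cells are occupied by biophony and by aircraft noise are already available on a shared time-frequency grid. Such masks might come from manual annotation or a source-separation step, and producing them is the hard part, which is not shown. The function name and the toy masks are hypothetical.

```python
def overlap_metrics(bio_mask, air_mask):
    """Compare time-only and time-frequency overlap between two sound sources.

    bio_mask, air_mask: equal-sized 2-D lists of booleans indexed
    [frequency_bin][time_frame]; True marks a spectrogram cell occupied
    by biophony or by aircraft noise, respectively.
    Returns (time_overlap, tf_overlap):
      time_overlap: share of biophony frames in which aircraft energy is
                    present in any frequency bin;
      tf_overlap:   share of biophony cells also occupied by aircraft.
    """
    n_freq, n_time = len(bio_mask), len(bio_mask[0])
    bio_frames = [t for t in range(n_time)
                  if any(bio_mask[f][t] for f in range(n_freq))]
    air_frames = {t for t in range(n_time)
                  if any(air_mask[f][t] for f in range(n_freq))}
    shared_frames = [t for t in bio_frames if t in air_frames]

    bio_cells = [(f, t) for f in range(n_freq) for t in range(n_time)
                 if bio_mask[f][t]]
    shared_cells = [(f, t) for f, t in bio_cells if air_mask[f][t]]

    time_overlap = len(shared_frames) / len(bio_frames) if bio_frames else 0.0
    tf_overlap = len(shared_cells) / len(bio_cells) if bio_cells else 0.0
    return time_overlap, tf_overlap


T, F = True, False
# Toy masks; rows are frequency bins from low (first row) to high (last row).
bio = [[F, F, F, F],   # bird song occupies mostly the upper bins...
       [F, F, T, F],
       [F, T, T, F],
       [F, T, F, F]]
air = [[T, T, T, T],   # ...while aircraft energy stays in the lower bins
       [T, T, T, T],
       [F, F, F, F],
       [F, F, F, F]]
print(overlap_metrics(bio, air))  # (1.0, 0.25)
```

In this toy case, aircraft noise is present in every frame that contains biophony, so the time overlap is 1.0. Yet only a quarter of the biophony cells are actually shared with aircraft energy. This illustrates why overlap along the frequency spectrum is the more informative measure of potential masking.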

Wind showed a clear diel acoustic cycle, consistent with the effect of daily air temperature variation on wind strength. On a yearly scale, wind was more frequent during spring days, with a peak before sunset, and was most frequent in March. This pattern matches the seasonality of ‘La Bise’, the local wind blowing from the north or north-east. Rain was correlated with wind, indicating bad weather and, therefore, strong geophony. However, we could not fit a reliable linear model of rain using the temporal explanatory variables. Modelling rainfall probably requires additional environmental variables such as topography (Ranhao et al. 2008), air temperature, atmospheric pressure or humidity (Jennings et al. 2018). The roles of wind and rainfall in animal acoustic communication have rarely been studied. Yet both are major components of the soundscape: they can impair animal acoustic communication (Lengagne and Slater 2002), affect sound propagation (Priyadarshani et al. 2018), and reduce biophony. Climate change might alter rain and wind regimes. In particular, there is some evidence of an emerging consensus among regional climate models that annual wind density might slightly increase in northern Europe by the end of this century (Pryor et al. 2020). In the Jura mountains, an increase in the intensity and frequency of daily precipitation is already perceptible (Scherrer et al. 2016), with high rainfall during late summer afternoons (Barton et al. 2020). Moreover, winter snowfall, which is typical of the Jura, is expected to be gradually replaced by rainfall, increasing the number of rain events in the Risoux Forest (Beniston 2003). All of these meteorological changes will affect soundscape composition, bringing more wind and rain geophony, in particular in spring when biophony peaks.
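Hour of day and date are cyclic variables. A linear model that uses their raw values places 23:00 and 00:00, or 31 December and 1 January, at opposite ends of the scale. A common remedy is to enter each cyclic variable as a sine/cosine pair (harmonic regression); cyclic splines in a GAM serve the same purpose. The sketch below is generic and is not the model fitted in this study. The function name is hypothetical, and the encoding does not address the point above that rain probably depends on non-temporal covariates.

```python
import math


def cyclic_features(hour, day_of_year, days_in_year=365):
    """Encode hour of day and day of year as sine/cosine pairs.

    With raw values, 23:00 and 00:00 (or 31 December and 1 January) sit at
    opposite ends of the scale; on the unit circle they are neighbours.
    Returns [sin_hour, cos_hour, sin_day, cos_day], usable as linear predictors.
    """
    h = 2 * math.pi * hour / 24
    d = 2 * math.pi * (day_of_year - 1) / days_in_year
    return [math.sin(h), math.cos(h), math.sin(d), math.cos(d)]


midnight = cyclic_features(0, 1)
late = cyclic_features(23, 1)
noon = cyclic_features(12, 1)
# Euclidean distance in the hour-of-day plane only (math.dist needs Python 3.8+).
print(round(math.dist(late[:2], midnight[:2]), 3))  # 0.261
print(round(math.dist(noon[:2], midnight[:2]), 3))  # 2.0
```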

As expected, silence occurred when biophony and aircraft noise decreased, and its diel pattern was, overall, opposed to those of the other sound types. Silence was more prevalent during summer nights, probably because the local wind ‘La Bise’ weakens at that time, reducing geophony. In this protected area, silent periods were rare events and were fully constrained by anthropophony. Although the Risoux Forest benefits from substantial protection and conservation measures, animals and visitors have access to only very short windows of quiet.
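One way to quantify "opposed" diel patterns is to take the Pearson correlation between the hourly detection profiles of two sound types. A strongly negative value indicates that one type is frequent when the other is rare. The following is a minimal sketch on invented numbers; it is not an analysis from the study. Hourly values are autocorrelated, so the coefficient should be read descriptively, not tested with a naive p-value. The function also fails if either series is constant, because the denominator becomes zero.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Toy hourly detection fractions at 00, 04, 08, 12, 16 and 20 h (invented).
silence = [0.8, 0.6, 0.1, 0.0, 0.2, 0.7]
aircraft = [0.1, 0.3, 0.9, 1.0, 0.8, 0.2]
print(pearson(silence, aircraft))  # strongly negative: opposed diel patterns
```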

The estimated trends of each soundscape component describe a yearly acoustic profile. In winter, the soundscape is dominated by aircraft. It then progressively becomes more diverse, with biophony present from early spring until the end of summer. The Risoux Forest soundscape is thus strongly shaped by geophony and anthropophony. Here, we only monitored aircraft sounds, which dominated the anthropophony. Other sounds, even minor ones such as gunshots from hunting or chainsaws from logging, should not be neglected and could also be detected with acoustic methods (Hill et al. 2018; Sethi et al. 2020).

The recording sites were close to each other (~1 km apart) and were all located in the same subalpine spruce forest habitat. This spatial design was chosen intentionally so that each recording site could be treated as a replicate sample. Our analysis did not reveal differences between sites, which is consistent with each recorder acting as a replicate. However, we cannot rule out that inter-site differences might emerge from further analyses. Such analyses could cover a longer observation period, that is, several years, and focus on more specific sounds such as bird songs, mammal vocalizations or insect sounds.
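A simple check of whether recorders behave as replicates is a chi-square test of homogeneity on per-site detection counts. For each site, one counts the files with and without a given sound type. The sketch below computes the Pearson statistic by hand. It is a swapped-in illustration, not the analysis used in this study, and the function name and counts are hypothetical. Consecutive files are not independent, so any p-value derived from the statistic will be optimistic. A non-significant result is consistent with site equivalence but does not prove it. Given the statistic and degrees of freedom, the p-value can be obtained with SciPy's `scipy.stats.chi2.sf(statistic, dof)`.

```python
def chi_square_homogeneity(table):
    """Pearson chi-square statistic for a sites x outcomes table of counts.

    table: one row per recording site, e.g. [files with aircraft,
           files without aircraft]. All row and column totals must be > 0.
    Returns (statistic, degrees_of_freedom).
    """
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    grand = sum(row_tot)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_tot[i] * col_tot[j] / grand  # count if no site effect
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(col_tot) - 1)
    return stat, dof


# Four sites with identical detection rates (toy counts): no site effect.
print(chi_square_homogeneity([[75, 25]] * 4))  # (0.0, 3)
```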

Using automatic acoustic analysis, it was therefore possible to provide a first glimpse of the Risoux Forest soundscape structure over a complete year. This first analysis constitutes a baseline for future soundscape monitoring. The trained ANN is efficient enough to analyze forthcoming datasets and to provide a reliable overview of soundscape dynamics over the years. With this first year as a reference, it will be possible to estimate long-term variation in biophony, geophony and anthropophony. It will also be possible to assess any shifts in diel and seasonal patterns that could be linked to climate change. In addition, the automatic identification of biophony makes it possible to select recordings containing biotic sounds and to undertake more specific analyses of acoustic biodiversity. These include long-term studies of the composition and dynamics of acoustic communities. Such studies could focus on the decline of specialist species in mountain environments (Lehikoinen et al. 2015; Scridel et al. 2018) and the increase of generalist species. They could also address shifts in migration and nesting dates that may produce new biophony phenologies. The development of sound classification tools should allow more detailed long-term analyses of the evolution and impact of anthropogenic pressures on protected areas.

References

Audacity Team (2019) Audacity: free audio editor and recorder. Version 2.3.2. Retrieved 15 Feb, 2019, from https://audacityteam.org/

Barber JR, Crooks KR, Fristrup KM (2009) The costs of chronic noise exposure for terrestrial organisms. Trends Ecol Evol 25:180–189

Barber JR, Burdett C, Reed S, Warner K, Formichella C, Crooks K, Theobald D, Fristrup K (2011) Anthropogenic noise exposure in protected natural areas: estimating the scale of ecological consequences. Landsc Ecol 26:1281–1295

Barton Y, Sideris IV, Raupach TH, Gabella M, Germann U, Martius O (2020) A multi-year assessment of sub-hourly gridded precipitation for Switzerland based on a blended radar—rain-gauge dataset. Int J Climatol. https://doi.org/10.1002/joc.6514

Bellisario KM, Broadhead T, Savage D, Zhao Z, Omrani H, Zhang S, Springer J, Pijanowski BC (2019) Contributions of MIR to soundscape ecology. Part 3: tagging and classifying audio features using a multi-labeling k-nearest neighbor approach. Ecol Inf 51:103–111

Beniston M (2003) Climatic change in mountain regions: a review of possible impacts. In: Diaz HF (ed) Climate variability and change in high elevation regions: past, present & future. Springer, Dordrecht, pp 5–31. https://doi.org/10.1007/978-94-015-1252-7_2

Bradfer-Lawrence T, Gardner N, Bunnefeld L, Bunnefeld N, Willis SG, Dent DH (2019) Guidelines for the use of acoustic indices in environmental research. Methods Ecol Evol 10:1796–1807

Brumm H (2004) The impact of environmental noise on song amplitude in a territorial bird. J Anim Ecol 73:434–440

Brumm H (2013) Animal communication and noise. Springer, Berlin

Burnham KP, Anderson DR (2002) Model selection and multimodel inference: a practical information-theoretic approach, 2nd edn. Springer, New York

Buxton RT, McKenna MF, Mennitt D, Fristrup K, Crooks K, Angeloni L, Wittemyer G (2017) Noise pollution is pervasive in U.S. protected areas. Science 356:531–533

Buxton RT, McKenna MF, Clapp M, Meyer E, Stabenau E, Angeloni LM, Crooks K, Wittemyer G (2018) Efficacy of extracting indices from large-scale acoustic recordings to monitor biodiversity. Conserv Biol 32:1174–1184

Courteau R (2013) Sur les perspectives d’évolution de l’aviation civile à l’horizon 2040: préserver l’avance de la France et de l’Europe. 658. Office parlementaire d’évaluation des choix scientifiques et technologiques. Retrieved 15 March, 2019, from https://www.senat.fr/rap/r12-658/r12-658.html

Deichmann JL, Hernandez-Serna A, Amanda Delgado CJ, Campos-Cerqueira M, Aide TM (2017) Soundscape analysis and acoustic monitoring document impacts of natural gas exploration on biodiversity in a tropical forest. Ecol Ind 74:39–48

Dein J, Rüdisser J (2020) Landscape influence on biophony in an urban environment in the European Alps. Landsc Ecol 35:1875–1889

Depraetere M, Pavoine S, Jiguet F, Gasc A, Duvail S, Sueur J (2012) Monitoring animal diversity using acoustic indices: implementation in a temperate woodland. Ecol Ind 13:46–54

Derryberry EP, Phillips JN, Derryberry GE, Blum MJ, Luther D (2020) Singing in a silent spring: Birds respond to a half-century soundscape reversion during the COVID-19 shutdown. Science 370:575–579

Dominoni DM, Greif S, Nemeth E, Brumm H (2016) Airport noise predicts song timing of European birds. Ecol Evol 6:6151–6159

Doser JW, Hannam KM, Finley AO (2020) Characterizing functional relationships between anthropogenic and biological sounds: a western New York state soundscape case study. Landsc Ecol 35:689–707

Duarte M, Sousa-Lima R, Young R, Farina A, Vasconcelos M, Rodrigues M, Pieretti N (2015) The impact of noise from open-cast mining on Atlantic forest biophony. Biol Conserv 191:623–631

Dumyahn S, Pijanowski B (2011) Beyond noise mitigation: managing soundscapes as common-pool resources. Landsc Ecol 26:1311–1326

Fairbrass AJ, Rennett P, Williams C, Titheridge H, Jones KE (2017) Biases of acoustic indices measuring biodiversity in urban areas. Ecol Ind 83:169–177

Farina A (2014) Soundscape ecology: principles, patterns, methods and applications. Springer, Berlin

Farina A (2019) Ecoacoustics: a quantitative approach to investigate the ecological role of environmental sounds. Mathematics. https://doi.org/10.3390/math7010021

Farji-Brener AG, Dalton MC, Balza U, Courtis A, Lemus-Domínguez I, Fernández-Hilario R, Cáceres-Levi D (2018) Working in the rain? Why leaf-cutting ants stop foraging when it’s raining. Insect Soc 65:233–239

Fewster RM, Buckland ST, Siriwardena GM, Baillie SR, Wilson JD (2000) Analysis of population trends for farmland birds using generalized additive models. Ecology 81:1970–1984

Fox J, Weisberg S (2011) An R companion to applied regression. SAGE Publications, Los Angeles

Francis CD, Ortega CP, Cruz A (2009) Noise pollution changes avian communities and species interactions. Curr Biol 19:1415–1419

Francis CD, Ortega CP, Cruz A (2011a) Noise pollution filters bird communities based on vocal frequency. PLoS ONE 6:e27052

Francis CD, Paritsis J, Ortega C, Cruz A (2011b) Landscape patterns of avian habitat use and nest success are affected by chronic gas well compressor noise. Landsc Ecol 26:1269–1280

Francis CD, Kleist NJ, Ortega CP, Cruz A (2012) Noise pollution alters ecological services: enhanced pollination and disrupted seed dispersal. Proc R Soc B: Biol Sci 279:2727–2735

Francis CD, Newman P, Taff BD, White C, Monz CA, Levenhagen M, Petrelli AR, Abbott LC, Newton J, Burson S, Cooper CB, Fristrup KM, McClure CJ, Mennitt D, Giamellaro M, Barber JR (2017) Acoustic environments matter: synergistic benefits to humans and ecological communities. J Environ Manage 203:245–254

Fritsch S, Guenther F, Wright MN (2019) neuralnet: training of neural networks. R package version 1.44.2. Retrieved 15 May, 2019, from https://CRAN.R-project.org/package=neuralnet

Gage SH, Axel AC (2014) Visualization of temporal change in soundscape power of a Michigan lake habitat over a 4-year period. Ecol Inf 21:100–109

Gasc A, Gottesman BL, Francomano D, Jung J, Durham M, Mateljak J, Pijanowski BC (2018) Soundscapes reveal disturbance impacts: biophonic response to wildfire in the Sonoran Desert Sky Islands. Landsc Ecol 33:1399–1415

Geipel I, Smeekes MJ, Halfwerk W, Page RA (2019) Noise as an informational cue for decision-making: the sound of rain delays bat emergence. J Exp Biol 222:jeb192005

Genève Aéroport (2020) Monthly and annual air traffic statistics (passengers, movements, freight). http://www.gva.ch/en/Site/Geneve-Aeroport/Publications/Statistiques. Accessed 30 Oct 2020

Gibb R, Browning E, Glover-Kapfer P, Jones KE (2018) Emerging opportunities and challenges for passive acoustics in ecological assessment and monitoring. Methods Ecol Evol 10:169–185

Gil D, Llusia D (2020) The bird dawn chorus revisited. In: Aubin T, Mathevon N (eds) Coding strategies in vertebrate acoustic communication. Animal signals and communication, vol 7. Springer, Berlin, pp 45–90

Gil D, Honarmand M, Pascual J, Pérez-Mena E, Macías Garcia C (2014) Birds living near airports advance their dawn chorus and reduce overlap with aircraft noise. Behav Ecol 26:435–443

Hanache P, Spataro T, Firmat C, Boyer N, Fonseca P, Médoc V (2020) Noise-induced reduction in the attack rate of a planktivorous freshwater fish revealed by functional response analysis. Freshw Biol 65:75–85

Hastie T, Tibshirani R (1986) Generalized additive models. Stat Sci 1:297–310

Hill AP, Prince P, Piña Covarrubias E, Doncaster CP, Snaddon JL, Rogers A (2018) AudioMoth: evaluation of a smart open acoustic device for monitoring biodiversity and the environment. Methods Ecol Evol 9:1199–1211

Hughes BB, Beas-Luna R, Barner AK, Brewitt K, Brumbaugh DR, Cerny-Chipman EB, Close SL, Coblentz KE, de Nesnera KL, Drobnitch ST, Figurski JD, Focht B, Friedman M, Freiwald J, Heady KK, Heady WN, Hettinger A, Johnson A, Karr KA, Mahoney B, Moritsch MM, Osterback A-MK, Reimer J, Robinson J, Rohrer T, Rose JM, Sabal M, Segui LM, Shen C, Sullivan J, Zuercher R, Raimondi PT, Menge BA, Grorud-Colvert K, Novak M, Carr MH (2017) Long-term studies contribute disproportionately to ecology and policy. Bioscience 67:271–281

Hyndman RJ, Khandakar Y (2008) Automatic time series forecasting: the forecast package for R. J Stat Soft 26:1–22

Jennings MD, Harris GM (2017) Climate change and ecosystem composition across large landscapes. Landsc Ecol 32:195–207

Jennings KS, Winchell TS, Livneh B, Molotch NP (2018) Spatial variation of the rain–snow temperature threshold across the Northern Hemisphere. Nat Commun 9:1148

Joo W, Gage SH, Kasten EP (2011) Analysis and interpretation of variability in soundscapes along an urban rural gradient. Landsc Urban Plan 103:259–276

Kasten EP, Gage SH, Fox J, Joo W (2012) The remote environmental assessment laboratory’s acoustic library: an archive for studying soundscape ecology. Ecol Inf 12:50–67

Khanaposhtani MG, Gasc A, Francomano D, Villanueva-Rivera LJ, Jung J, Mossman MJ, Pijanowski BC (2019) Effects of highways on bird distribution and soundscape diversity around Aldo Leopold’s shack in Baraboo, Wisconsin, USA. Landsc Urban Plan 192:103666

Krause B (2008) Anatomy of the soundscape: evolving perspectives. J Audio Eng Soc 56:73–80

Krause B, Farina A (2016) Using ecoacoustic methods to survey the impacts of climate change on biodiversity. Biol Conserv 195:245–254

Kuebbing SE, Reimer AP, Rosenthal SA, Feinberg G, Leiserowitz A, Lau JA, Bradford MA (2018) Long-term research in ecology and evolution: a survey of challenges and opportunities. Ecol Monogr 88:245–258

LeBien J, Zhong M, Campos-Cerqueira M, Velev JP, Dodhia R, Ferres JL, Aide TM (2020) A pipeline for identification of bird and frog species in tropical soundscape recordings using a convolutional neural network. Ecol Inf 59:101113

Lê S, Josse J, Husson F (2008) FactoMineR: an R package for multivariate analysis. J Stat Soft 25:1–18

Lehikoinen A, Green M, Husby M, Kålås JA, Lindström Å (2015) Common montane birds are declining in northern Europe. J Avian Biol 45:3–14

Lengagne T, Slater PJB (2002) The effects of rain on acoustic communication: tawny owls have good reason for calling less in wet weather. Proc R Soc Lond B 269:2121–2125

Ligges U, Krey S, Mersmann A, Schnackenberg S (2018) tuneR: analysis of music and speech. R package version 1.3.3. Retrieved 15 Feb, 2019, from https://CRAN.R-project.org/package=tuneR

Lin T-H, Tsao Y (2019) Source separation in ecoacoustics: a roadmap towards versatile soundscape information retrieval. Remote Sens Ecol Conserv 6:236–247

Liu J, Kang J, Luo T, Behm H, Coppack T (2013) Spatiotemporal variability of soundscapes in a multiple functional urban area. Landsc Urban Plan 115:1–9

Matsinos Y, Mazaris A, Papadimitriou K, Mniestris A, Hatzigiannidis G, Maioglou D, Pantis J (2008) Spatio-temporal variability in human and natural sounds in a rural landscape. Landsc Ecol 23:945–959

Mullet T, Gage S, Morton J, Huettmann F (2015) Temporal and spatial variation of a winter soundscape in south-central Alaska. Landsc Ecol 31:1117–1137

Munro J, Williamson I, Fuller S (2018) Traffic noise impacts on urban forest soundscapes in south-eastern Australia. Austral Ecol 43:180–190

Opdam P, Wascher D (2004) Climate change meets habitat fragmentation: linking landscape and biogeographical scale levels in research and conservation. Biol Conserv 117:285–297

Opdam P, Luque S, Jones KB (2009) Changing landscapes to accommodate for climate change impacts: a call for landscape ecology. Landsc Ecol 24:715–721

Pedersen EJ, Miller DL, Simpson GL, Ross N (2019) Hierarchical generalized additive models in ecology: an introduction with mgcv. PeerJ 7:e6876

Phillips YF, Towsey M, Roe P (2018) Revealing the ecological content of long-duration audio-recordings of the environment through clustering and visualisation. PLoS ONE 13:1–27

Pijanowski B, Farina A, Gage S, Dumyahn S, Krause B (2011) What is soundscape ecology? An introduction and overview of an emerging new science. Landsc Ecol 26:1213–1232

Priyadarshani N, Castro I, Marsland S (2018) The impact of environmental factors in birdsong acquisition using automated recorders. Ecol Evol 8:5016–5033

Pryor SC, Barthelmie RJ, Bukovsky MS, Leung LR, Sakaguchi K (2020) Climate change impacts on wind power generation. Nat Rev Earth Environ 1:627–643

R Core Team (2019) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna

Ramsay TO, Burnett RT, Krewski D (2003) The effect of concurvity in generalized additive models linking mortality to ambient particulate matter. Epidemiology 14:18–23

Ranhao S, Baiping Z, Jing T (2008) A multivariate regression model for predicting precipitation in the Daqing mountains. Mt Res Dev 28:318–325

Ross SRPJ, Friedman NR, Dudley KL, Yoshimura M, Yoshida T, Economo EP (2018) Listening to ecosystems: data-rich acoustic monitoring through landscape-scale sensor networks. Ecol Res 33:135–147

Rountree RA, Juanes F, Bolgan M (2020) Temperate freshwater soundscapes: a cacophony of undescribed biological sounds now threatened by anthropogenic noise. PLoS ONE 15:1–26

Scherrer SC, Fischer EM, Posselt R, Liniger MA, Croci-Maspoli M, Knutti R (2016) Emerging trends in heavy precipitation and hot temperature extremes in Switzerland. J Geophys Res Atmos 121(6):2626–2637

Scridel D, Brambilla M, Martin K, Lehikoinen A, Iemma A, Matteo A, Jähnig S, Caprio E, Bogliani G, Pedrini P, Rolando A, Arlettaz R, Chamberlain D (2018) A review and meta-analysis of the effects of climate change on Holarctic mountain and upland bird populations. Ibis 160:489–515

Sethi SS, Jones NS, Fulcher BD, Picinali L, Clink DJ, Klinck H, Orme CDL, Wrege PH, Ewers RM (2020) Characterizing soundscapes across diverse ecosystems using a universal acoustic feature set. Proc Natl Acad Sci USA 117:17049–17055

Shadish WR, Zuur AF, Sullivan KJ (2014) Using generalized additive (mixed) models to analyze single case designs. J Sch Psychol 52:149–178

Shannon G, McKenna MF, Angeloni LM, Crooks KR, Fristrup KM, Brown E, Warner KA, Nelson MD, White C, Briggs J, McFarland S, Wittemyer G (2015) A synthesis of two decades of research documenting the effects of noise on wildlife. Biol Rev 91:982–1005

Sierro J, Schloesing E, Pavón I, Gil D (2017) European blackbirds exposed to aircraft noise advance their chorus, modify their song and spend more time singing. Front Ecol Evol 5:68

Slabbekoorn H, Ripmeester EAP (2008) Birdsong and anthropogenic noise: implications and applications for conservation. Mol Ecol 17:72–83

Stinchcomb TR, Brinkman TJ, Betchkal D (2020) Extensive aircraft activity impacts subsistence areas: acoustic evidence from Arctic Alaska. Environ Res Lett 15:115005

Sueur J (2018) Sound analysis and synthesis with R. Springer, Berlin, p 637

Sueur J, Farina A (2015) Ecoacoustics: the ecological investigation and interpretation of environmental sound. Biosemiotics 8:493–502

Sueur J, Farina A, Gasc A, Pieretti N, Pavoine S (2014) Acoustic indices for biodiversity assessment and landscape investigation. Acta Acust United Acust 100:772–781

Sueur J, Krause B, Farina A (2019) Climate change is breaking Earth’s beat. Trends Ecol Evol 34:971–973

Sugai L, Silva T, Ribeiro J Jr, Llusia D (2018) Terrestrial passive acoustic monitoring: review and perspectives. Bioscience 69:15–25

Sugai LSM, Desjonquères C, Silva TSF, Llusia D (2020) A roadmap for survey designs in terrestrial acoustic monitoring. Remote Sens Ecol Conserv 6:220–235

Tishechkin DY (2013) Vibrational background noise in herbaceous plants and its impact on acoustic communication of small Auchenorrhyncha and Psyllinea (Homoptera). Entomol Rev 93:548–558

Wilken D, Berster P, Gelhausen MC (2011) New empirical evidence on airport capacity utilisation: relationships between hourly and annual air traffic volumes. Res Transp Bus Manag 1:118–127

Wolfenden A, Slabbekoorn H, Kluk K, de Kort S (2019) Aircraft sound exposure leads to song frequency decline and elevated aggression in wild chiffchaffs. J Anim Ecol 88:1720–1731

Wood SN (2017) Generalized additive models: an introduction with R, 2nd edn. CRC Press, Boca Raton. https://doi.org/10.1201/9781315370279

Zollinger SA, Brumm H (2015) Why birds sing loud songs and why they sometimes don’t. Anim Behav 105:289–295

Zuur AF, Ieno EN (2016) A protocol for conducting and presenting results of regression-type analyses. Methods Ecol Evol 7:636–645

Zuur AF, Ieno EN, Elphick CS (2010) A protocol for data exploration to avoid common statistical problems. Methods Ecol Evol 1:3–14

Acknowledgements

This work was supported by the Parc naturel du Haut-Jura, which received funding from the Région Bourgogne-Franche-Comté and the Région Auvergne-Rhône-Alpes.

Cite this article

Grinfeder, E., Haupert, S., Ducrettet, M. et al. Soundscape dynamics of a cold protected forest: dominance of aircraft noise. Landsc Ecol 37, 567–582 (2022). https://doi.org/10.1007/s10980-021-01360-1

DOI: https://doi.org/10.1007/s10980-021-01360-1