Abstract

Accurate discrimination of neutron and gamma signals is essential for precise neutron detection. However, traditional discrimination methods lose accuracy under less-than-ideal conditions, such as varying signal-to-noise ratios, varying sampling rates, and pulse pile-up. In this study, a simpler machine learning-based method is proposed for discriminating thermal neutron/gamma pulse shapes. The method was tested on data from a CLYC detector using a 241Am-Be neutron source and compared with three traditional methods and three other machine learning-based methods. The proposed method exhibited excellent anti-noise capabilities, particularly at low signal-to-noise ratios. Even when the standard deviation of the noise reached 0.05, the proposed method achieved an accuracy of 90%, surpassing the six other discrimination methods evaluated.

Introduction

Neutron detection technology plays a crucial role in various fields, including fusion monitoring and accelerator physics research [1]. Accurately distinguishing neutrons from gamma rays is an important prerequisite for monitoring the ion temperature and the angular distribution of neutrons [2]. The natural abundance of 6Li in Cs2LiYCl6:Ce (CLYC) crystals is approximately 7%, and its reaction cross section with thermal neutrons is large (940 barns), making CLYC an excellent material for thermal neutron and gamma discrimination. In many detectors, neutron and gamma signals have different pulse shapes. Pulse shape discrimination (PSD) refers to the process of discriminating neutron and gamma signals according to these differences in pulse shape. PSD has an advantage over pulse height discrimination when the signals to be discriminated have similar amplitudes.

Digital sampling is now more commonly used for PSD than analog circuits [3]: detector signals are digitized by a high-speed ADC and processed offline or online. PSD methods are then used to discriminate neutrons and gamma rays. Traditional PSD methods can be divided into time-domain and frequency-domain approaches. Time-domain methods, including the charge comparison method (CCM) [4], the rise time method [5], pulse gradient analysis (PGA) [6], pulse duration analysis (PDA) and others, are simple and easy to implement. Frequency-domain methods, such as the wavelet transform (WT) [7], the fractal spectrum method [8], and frequency gradient analysis (FGA) [9], demonstrate better discrimination performance but involve complex Fourier or wavelet transforms for signal processing. Moreover, the use of machine learning-based methods in neutron-gamma discrimination has been studied in recent years [10,11,12,13,14,15,16,17,18,19,20]. These methods exhibit higher discrimination accuracy than traditional methods. However, existing machine learning structures are complex and time-consuming, and a back-propagation neural network (BPNN) is prone to becoming trapped in local optima.

The accuracy of most traditional PSD methods may decrease at low signal-to-noise ratios [21]. Additionally, the sampling rate has been shown to affect discrimination performance, which can be a critical factor when building multi-channel imaging arrays or portable instrumentation [22, 23]. Furthermore, serious pulse pile-up poses a significant challenge to PSD in intense neutron fields [24]. Therefore, studying the performance of these methods under different conditions is necessary for improving the reliability and efficiency of detection equipment.

The purpose of this study is to propose a simpler machine learning-based discrimination method. By selecting reference waveforms and a difference factor, the computational complexity is reduced because no single waveform participates in multiple calculations. The weights of the feature sampling points are determined by a genetic algorithm to avoid local optima during parameter convergence. The labels of the train set are jointly determined by three methods to improve its quality. The performance of the proposed method is then compared with that of three traditional discrimination methods (CCM, PGA, PDA) and three machine learning-based methods (BPNN, SVM, KNN) under three different scenarios: varying signal-to-noise ratios, varying sampling rates, and different record lengths.

Principles of the proposed algorithm

Flow of the algorithm

The proposed algorithm follows a specific flow, as illustrated in Fig. 1. The horizontal coordinates are equally spaced because of equal sampling intervals. The key difference between neutron and gamma pulse shapes lies in the vertical coordinates, representing the pulse height. The algorithm leverages these vertical coordinate differences to analyze pulse waveform features.

Initially, the input data are divided into a train set, a validation set and a test set at a ratio of 6:2:2. The algorithm can be divided into three parts, as shown in Fig. 1. In the first part (blue box), the unweighted discrimination function is obtained through a training process. In the second part (red box), the weighted discrimination function is generated. In the third part (yellow box), the weighted discrimination function is used for testing. The blue and red boxes together constitute the training process: a weighted discrimination function is obtained at the end of training, and data from the test set are then input into this function. Detailed explanations of the three parts are provided below.

Part I. Obtaining an unweighted discrimination function

A 2D Cartesian coordinate system is established in which the abscissa represents time and the ordinate represents waveform amplitude. Each sampling point of a waveform can then be represented by its coordinates in this system.

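We use \(N\) to denote the number of sampling points in the pulse waveform, and assume that the train set consists of \(p\) gamma waveforms and \(q\) neutron waveforms. Here \([p]\) denotes the set \(\{1,2,\ldots,p\}\). For any two positive integers \(s \in [p]\) and \(i \in [N]\), we define the coordinates of the \(i\)-th sampling point of the \(s\)-th gamma waveform as \((x_{i}, a_{i}^{s})\). Similarly, for any two positive integers \(t \in [q]\) and \(i \in [N]\), the coordinates of the \(i\)-th sampling point of the \(t\)-th neutron waveform are defined as \((x_{i}, b_{i}^{t})\). We establish the gamma waveform feature matrix \(A\) and the neutron waveform feature matrix \(B\) using the vertical coordinates of the sampling points of the \(p\) gamma waveforms and the \(q\) neutron waveforms, respectively. Each waveform is described by one row vector of the corresponding matrix. Therefore, we have

\[
A = \begin{pmatrix} a_{1}^{1} & a_{2}^{1} & \cdots & a_{N}^{1} \\ a_{1}^{2} & a_{2}^{2} & \cdots & a_{N}^{2} \\ \vdots & \vdots & & \vdots \\ a_{1}^{p} & a_{2}^{p} & \cdots & a_{N}^{p} \end{pmatrix}, \qquad
B = \begin{pmatrix} b_{1}^{1} & b_{2}^{1} & \cdots & b_{N}^{1} \\ b_{1}^{2} & b_{2}^{2} & \cdots & b_{N}^{2} \\ \vdots & \vdots & & \vdots \\ b_{1}^{q} & b_{2}^{q} & \cdots & b_{N}^{q} \end{pmatrix}.
\]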

We take the average of each column of matrix \(A\) to obtain a vector \(a\). Similarly, we take the average of each column of matrix \(B\) to obtain a vector \(b\). We define \(a = (a_{1}, a_{2}, \ldots, a_{N})\) and \(b = (b_{1}, b_{2}, \ldots, b_{N})\), in which

\[
a_{i} = \frac{1}{p}\sum_{s=1}^{p} a_{i}^{s}, \qquad b_{i} = \frac{1}{q}\sum_{t=1}^{q} b_{i}^{t}, \qquad i = 1,2,\ldots,N.
\]

\(a\) and \(b\) are called reference feature vectors for gamma and neutron, respectively.

We subtract the corresponding components of \(a\) and \(b\) and take absolute values to form a vector \(c = (c_{1}, c_{2}, \ldots, c_{N})\), where

\[
c_{i} = \left| a_{i} - b_{i} \right|, \qquad i = 1,2,\ldots,N. \tag{2.5}
\]

Using Eq. (2.5), we compare the magnitudes of all elements of \(c\). We may assume that

\[
c_{i_{1}} \ge c_{i_{2}} \ge \cdots \ge c_{i_{N}}, \tag{2.6}
\]

where \(i_{1}, i_{2}, \ldots, i_{N}\) is a rearrangement of \(1, 2, \ldots, N\) (the original order of the sampling points). Arranging the elements of \(c\) in this descending order gives the vector \(c^{\prime}\):

\[
c^{\prime} = (c_{i_{1}}, c_{i_{2}}, \ldots, c_{i_{N}}).
\]

We define \(y = (y_{1} ,y_{2} , \ldots ,y_{N} )\), which is the feature vector formed by vertical coordinates of sampling points of any waveform in the validation set.

We define the parameter “difference factor” as \(\lambda\), \(1 \le \lambda \le N\). We take the first \(\lambda\) components of \(c^{\prime}\) to form a new vector \(c^{\prime}(\lambda) = (c_{i_{1}}, c_{i_{2}}, \ldots, c_{i_{\lambda}})\), which contains the largest differences between the reference pulse heights. We then select the elements of \(a\), \(b\) and \(y\) that have the same subscripts as the elements of \(c^{\prime}(\lambda)\); the other points of the waveform are no longer considered. The selected elements form \(a^{\prime}(\lambda)\), \(b^{\prime}(\lambda)\) and \(y^{\prime}(\lambda)\) in the same way as \(c^{\prime}(\lambda)\). We define

\[
a^{\prime}(\lambda) = (a_{i_{1}}, a_{i_{2}}, \ldots, a_{i_{\lambda}}), \quad
b^{\prime}(\lambda) = (b_{i_{1}}, b_{i_{2}}, \ldots, b_{i_{\lambda}}), \quad
y^{\prime}(\lambda) = (y_{i_{1}}, y_{i_{2}}, \ldots, y_{i_{\lambda}}).
\]

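We compare the distance between \(y^{\prime}(\lambda)\) and \(a^{\prime}(\lambda)\) with the distance between \(y^{\prime}(\lambda)\) and \(b^{\prime}(\lambda)\). Measuring these distances with the 1-norm, we define the “unweighted discrimination function” \(f(\lambda)\) as

\[
f(\lambda) = \frac{\left\| a^{\prime}(\lambda) - y^{\prime}(\lambda) \right\|_{1}}{\left\| b^{\prime}(\lambda) - y^{\prime}(\lambda) \right\|_{1}}. \tag{2.10}
\]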

By (2.10), we compare the function value with “1” to judge the waveform category. If the function value is less than 1, then it is judged as a gamma waveform. If the function value is more than 1, it is a neutron waveform.
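The construction in Part I can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions rather than the authors' implementation: the function names (`reference_vector`, `ranked_indices`, `f_unweighted`) and the four-point toy waveforms are hypothetical, the 1-norm is assumed for the distance (matching the norm that appears in the weighted function later in the text), and a zero denominator is mapped to infinity as a convenience.

```python
def reference_vector(waveforms):
    """Column-wise mean of a list of equal-length waveforms."""
    n = len(waveforms)
    return [sum(col) / n for col in zip(*waveforms)]

def ranked_indices(a, b):
    """Sampling-point indices sorted by |a_i - b_i|, largest first."""
    c = [abs(ai - bi) for ai, bi in zip(a, b)]
    return sorted(range(len(c)), key=lambda i: c[i], reverse=True)

def f_unweighted(y, a, b, order, lam):
    """Ratio of 1-norm distances to the gamma and neutron references,
    restricted to the lam most discriminating sampling points."""
    idx = order[:lam]
    num = sum(abs(a[i] - y[i]) for i in idx)
    den = sum(abs(b[i] - y[i]) for i in idx)
    return num / den if den else float("inf")

# Hypothetical toy data: the gamma pulses decay faster than the neutron pulses.
gammas = [[0.0, 1.0, 0.4, 0.1], [0.0, 1.0, 0.6, 0.1]]
neutrons = [[0.0, 1.0, 0.8, 0.6], [0.0, 1.0, 0.8, 0.4]]
a = reference_vector(gammas)     # gamma reference feature vector
b = reference_vector(neutrons)   # neutron reference feature vector
order = ranked_indices(a, b)

y = [0.0, 1.0, 0.45, 0.15]       # waveform to classify
label = "gamma" if f_unweighted(y, a, b, order, lam=2) < 1 else "neutron"
print(order[:2], label)
```

Only the two tail samples differ between the toy references, so they are ranked first and the leading samples never enter the ratio for small values of the difference factor.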

We define the discrimination accuracy related to \(\lambda\) as \(P(\lambda )\), \(\lambda = 1,2, \ldots ,N\), which is the ratio of the number of waveforms that are correctly predicted and the number of the total waveforms in the validation set.

When \(\lambda = 1\), we obtain

\[
f(1) = \frac{\left| a_{i_{1}} - y_{i_{1}} \right|}{\left| b_{i_{1}} - y_{i_{1}} \right|}.
\]

We substitute all waveform data in the validation set into \(f(1)\) and compare the function value with 1 to obtain the predicted categories of all waveforms. The accuracy \(P(1)\) is the ratio of the number of correctly predicted waveforms to the total number of waveforms.

Similarly, when \(\lambda = 2\):

\[
f(2) = \frac{\left| a_{i_{1}} - y_{i_{1}} \right| + \left| a_{i_{2}} - y_{i_{2}} \right|}{\left| b_{i_{1}} - y_{i_{1}} \right| + \left| b_{i_{2}} - y_{i_{2}} \right|}.
\]

We substitute all waveform data in the validation set into \(f(2)\) and compare the function value with 1 to obtain the predicted categories of all waveforms. The accuracy \(P(2)\) is the ratio of the number of correctly predicted waveforms to the total number of waveforms.

By analogy, for every \(\lambda = 1,2,\ldots,N\), we substitute all waveform data from the validation set into the corresponding function \(f(\lambda)\) to obtain the predicted categories of all waveforms, and compare the number of correctly predicted waveforms with the total number of waveforms to obtain the accuracy \(P(\lambda)\). We then compare the values \(P(1), P(2), \ldots, P(N)\). Assume that the accuracy is highest when \(\lambda = k\). The unweighted discrimination function is then

\[
f(k) = \frac{\left\| a^{\prime}(k) - y^{\prime}(k) \right\|_{1}}{\left\| b^{\prime}(k) - y^{\prime}(k) \right\|_{1}}. \tag{2.13}
\]
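The search for the best difference factor can be sketched as a simple sweep over \(\lambda\). The sketch below is illustrative only: `l1_ratio` and `best_lambda` are hypothetical names, the validation waveforms are toy values, ties in accuracy are resolved in favour of the smallest \(\lambda\), and a function value of exactly 1 is counted as a neutron, neither of which the text specifies.

```python
def l1_ratio(y, a, b, idx):
    """1-norm distance to the gamma reference over that to the neutron reference."""
    num = sum(abs(a[i] - y[i]) for i in idx)
    den = sum(abs(b[i] - y[i]) for i in idx)
    return num / den if den else float("inf")

def best_lambda(a, b, val_waveforms, val_labels):
    """Return (k, P(k)): the smallest lambda with the highest validation accuracy."""
    c = [abs(ai - bi) for ai, bi in zip(a, b)]
    order = sorted(range(len(c)), key=lambda i: c[i], reverse=True)
    best_k, best_acc = 0, -1.0
    for lam in range(1, len(order) + 1):
        idx = order[:lam]
        preds = ["gamma" if l1_ratio(y, a, b, idx) < 1 else "neutron"
                 for y in val_waveforms]
        acc = sum(p == t for p, t in zip(preds, val_labels)) / len(val_labels)
        if acc > best_acc:          # strict '>' keeps the smallest lambda on ties
            best_k, best_acc = lam, acc
    return best_k, best_acc

# Hypothetical reference vectors and validation data.
a = [0.0, 1.0, 0.5, 0.1]            # gamma reference
b = [0.0, 1.0, 0.8, 0.5]            # neutron reference
val = [[0.0, 1.0, 0.45, 0.15],      # gamma
       [0.0, 1.0, 0.85, 0.45],      # neutron
       [0.0, 1.0, 0.85, 0.25]]      # neutron whose last sample looks gamma-like
labels = ["gamma", "neutron", "neutron"]
k, p_k = best_lambda(a, b, val, labels)
print(k, p_k)
```

In this toy case the third waveform is misclassified when only the single most discriminating point is used and is recovered once the second point is included, which is the situation the sweep is meant to detect.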

Part II. Generating weighted discrimination function

We set the weight vector \(w = (w_{1}, w_{2}, \ldots, w_{k})\), in which each element \(w_{i} \ge 0\), \(i = 1,2,\ldots,k\). We define the weight matrix as \(W = \mathrm{diag}\{w_{1}, w_{2}, \ldots, w_{k}\}\). We modify the unweighted discrimination function \(f(k)\) in (2.13) into a weighted discrimination function \(f\):

\[
f = \frac{\left\| (a^{\prime}(k) - y^{\prime}(k)) W \right\|_{1}}{\left\| (b^{\prime}(k) - y^{\prime}(k)) W \right\|_{1}}. \tag{2.14}
\]

Same as before, we compare the function value with “1” to judge the waveform category.
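Because \(W\) is diagonal with non-negative entries, the weighted 1-norm reduces to a weighted sum of absolute differences. The following sketch shows this under assumptions: `f_weighted` is a hypothetical name, the numbers are toy values, and the zero-denominator guard is a convenience that the text does not discuss.

```python
def f_weighted(y, a, b, idx, w):
    """||(a' - y') W||_1 / ||(b' - y') W||_1 with W = diag(w), w_i >= 0."""
    num = sum(wi * abs(a[i] - y[i]) for i, wi in zip(idx, w))
    den = sum(wi * abs(b[i] - y[i]) for i, wi in zip(idx, w))
    return num / den if den else float("inf")

a = [0.0, 1.0, 0.5, 0.1]       # gamma reference (toy values)
b = [0.0, 1.0, 0.8, 0.5]       # neutron reference (toy values)
idx = [3, 2]                   # selected sampling points i_1, i_2
y = [0.0, 1.0, 0.85, 0.25]     # waveform to classify

only_first = f_weighted(y, a, b, idx, [1.0, 0.0])   # weight on point i_1 only
only_second = f_weighted(y, a, b, idx, [0.0, 1.0])  # weight on point i_2 only
print(only_first < 1, only_second > 1)
```

The two weightings lead to opposite decisions for the same waveform, which illustrates why the choice of weights matters.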

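A genetic algorithm is adopted to construct the coefficient vectors by means of a gene matrix. For any two positive integers \(i \in [k]\) and \(j \in [r]\), we define the \(i\)-th gene of the \(j\)-th array as \(g_{ji}\). We define the gene matrix as

\[
G = \begin{pmatrix} g_{11} & g_{12} & \cdots & g_{1k} \\ g_{21} & g_{22} & \cdots & g_{2k} \\ \vdots & \vdots & & \vdots \\ g_{r1} & g_{r2} & \cdots & g_{rk} \end{pmatrix},
\]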

where \(r\) is the number of individuals, \(k\) is the dimension of individuals, \(G\) is the initial population and the row vector \(g_{j} \left( {j = 1,2, \ldots ,r} \right)\) is the \(j\)-th array.

For any \(j \in [r]\), we take \(g_{j}\) as the coefficient vector of the discrimination function and judge the fitness of \(g_{j}\) by the accuracy obtained when (2.14) is applied to all waveform data in the validation set. Since this holds for any \(j \in [r]\), we can obtain the accuracy of all arrays in the first generation.

As shown in Fig. 2, we select the appropriate row components based on fitness ranking. Crossover and mutation are used to iteratively optimize the elements in the selected components. The optimal coefficient vector \(w\) of the algorithm is the final row component with the highest fitness.
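A genetic search over the weight vector can be sketched as follows. The text fixes only the general scheme (fitness ranking, crossover, mutation), so everything specific here is an assumption made for illustration: the names `accuracy` and `evolve_weights`, truncation selection of the fitter half, single-point crossover, the mutation probability, the population size \(r = 20\), and the toy validation data.

```python
import random

def accuracy(w, a, b, idx, waveforms, labels):
    """Fitness: validation accuracy of the weighted function with weights w."""
    correct = 0
    for y, t in zip(waveforms, labels):
        num = sum(wi * abs(a[i] - y[i]) for i, wi in zip(idx, w))
        den = sum(wi * abs(b[i] - y[i]) for i, wi in zip(idx, w))
        pred = "gamma" if den and num / den < 1 else "neutron"
        correct += pred == t
    return correct / len(labels)

def evolve_weights(fitness, k, r=20, generations=30, p_mut=0.1, seed=0):
    rng = random.Random(seed)
    # Gene matrix G: r individuals (rows), each with k non-negative genes.
    G = [[rng.random() for _ in range(k)] for _ in range(r)]
    for _ in range(generations):
        G.sort(key=fitness, reverse=True)     # rank by fitness
        parents = G[: r // 2]                 # selection: keep the fitter half
        children = []
        while len(parents) + len(children) < r:
            p1, p2 = rng.sample(parents, 2)
            cut = rng.randrange(1, k) if k > 1 else 0   # single-point crossover
            child = p1[:cut] + p2[cut:]
            # Mutation: occasionally redraw a gene.
            child = [rng.random() if rng.random() < p_mut else g for g in child]
            children.append(child)
        G = parents + children
    return max(G, key=fitness)

a = [0.0, 1.0, 0.5, 0.1]                      # gamma reference (toy values)
b = [0.0, 1.0, 0.8, 0.5]                      # neutron reference (toy values)
idx = [3, 2]                                  # selected sampling points
val = [[0.0, 1.0, 0.45, 0.15], [0.0, 1.0, 0.85, 0.45], [0.0, 1.0, 0.85, 0.25]]
labels = ["gamma", "neutron", "neutron"]
best_w = evolve_weights(lambda w: accuracy(w, a, b, idx, val, labels), k=len(idx))
print(best_w, accuracy(best_w, a, b, idx, val, labels))
```

Keeping the parents in the next generation makes the best fitness non-decreasing from one generation to the next, a common design choice that the text neither confirms nor rules out.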

Part III. Testing for neutron-gamma discrimination

In the testing process, the weighted discrimination function obtained during the training process is used. For any waveform in the test set, its discrimination vector \(z^{\prime}(k)\) is obtained by arranging its ordinates in the same sequence of sampling points given by Eq. (2.6), that is, \(z^{\prime}(k) = (z_{i_{1}}, z_{i_{2}}, \ldots, z_{i_{k}})\). Thus

\[
f = \frac{\left\| (a^{\prime}(k) - z^{\prime}(k)) W \right\|_{1}}{\left\| (b^{\prime}(k) - z^{\prime}(k)) W \right\|_{1}}.
\]

We compare the function value with “1” to judge the waveform category. If the function value is less than 1, then it is judged as a gamma waveform. If the function value is more than 1, it is a neutron waveform.

If the value is equal to 1, the waveform category cannot be determined directly. Although the large sample size and the precision of the sampling point values make this case extremely unlikely, the following refinements are proposed for it.

When \(f = 1\), namely,

\[
f = \frac{\left\| (a^{\prime}(k) - z^{\prime}(k)) W \right\|_{1}}{\left\| (b^{\prime}(k) - z^{\prime}(k)) W \right\|_{1}} = 1,
\]

we modify \(a\) and \(b\) again for the specific waveform: among the \(N - k\) discarded sampling points, we select the coordinate components that have a significant impact and include them as well, obtaining \(a^{\prime\prime}\) and \(b^{\prime\prime}\). For the waveform \(z\), we retain the same components as in \(a^{\prime\prime}\) and \(b^{\prime\prime}\) to obtain \(z^{\prime\prime}\), and substitute them into \(f\):

\[
f = \frac{\left\| a^{\prime\prime}(k) - z^{\prime\prime}(k) \right\|_{1}}{\left\| b^{\prime\prime}(k) - z^{\prime\prime}(k) \right\|_{1}}.
\]

Alternatively, an appropriate weight matrix \(W^{\prime}\) can be regenerated, which gives

\[
f = \frac{\left\| (a^{\prime\prime}(k) - z^{\prime\prime}(k)) W^{\prime} \right\|_{1}}{\left\| (b^{\prime\prime}(k) - z^{\prime\prime}(k)) W^{\prime} \right\|_{1}}.
\]

By analogy, the discrimination function can always be adjusted in this way for waveforms with an \(f\) value of 1 to help obtain the desired result.

Experimental methods

Acquisition and division of data sets

As shown in Fig. 3, this study utilized a Cs2LiYCl6:Ce (CLYC) scintillation crystal (approximately Φ30 mm × 60 mm) with 95% enrichment of 6Li. The crystal was coupled to a Hamamatsu R6231-100 PMT with an AMETEK ORTEC 556 high-voltage power supply. Pulse signals from a 241Am-Be neutron source with an activity of \(1.09 \times 10^{10}\) Bq (2023.6) were acquired by a RIGOL DS1104Z oscilloscope with 12-bit resolution and stored by a LabVIEW host-computer program. The shield moderated the neutrons. The sampling rate was 1 GSa/s with a storage depth of 2.4 k pts. The high voltage was set to 1000 V and the impedance was 50 Ω. To balance the numbers of neutrons and gamma rays, two lead plates were placed in front of the CLYC probe so that as many neutron signals as possible were collected. A total of 130,000 neutron and gamma pulse waveforms were acquired.

To reduce the error caused by noise and amplitude differences, the pulse signals were preprocessed in two steps: (1) smoothing filtering and (2) signal normalization.
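The two preprocessing steps can be sketched as below. The text does not state which smoothing filter or which normalization was used, so both choices here are assumptions: a centered moving average stands in for the smoothing filter, and normalization divides by the peak amplitude of a positive-going pulse. The names `moving_average` and `normalize` and the sample values are hypothetical.

```python
def moving_average(x, window=5):
    """Centered moving average; the window shrinks near the edges."""
    half = window // 2
    out = []
    for i in range(len(x)):
        seg = x[max(0, i - half): i + half + 1]
        out.append(sum(seg) / len(seg))
    return out

def normalize(x):
    """Scale so that the maximum amplitude is 1 (assumes a positive-going pulse)."""
    peak = max(x)
    return [v / peak for v in x] if peak else list(x)

pulse = [0.0, 0.1, 2.0, 1.5, 1.1, 0.8, 0.5, 0.3]   # toy samples
clean = normalize(moving_average(pulse, window=3))
print(clean)
```

After normalization, pulses of different energies share the same amplitude scale, so the sampling-point differences used by the algorithm reflect pulse shape rather than pulse height.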

As shown in Fig. 4, most thermal neutrons and gamma rays can be clearly discriminated using the CCM, but the categories of certain waveforms remain unclear. Therefore, to improve the quality of the train set, the pulse categories were determined using three commonly used traditional methods (CCM, PGA, PDA). A pulse was classified as a neutron only if all three methods identified it as such. Similarly, a pulse was classified as a gamma ray only if all three methods classified it as gamma. Otherwise, the pulse was discarded. After repeated testing, the optimal positions of the total and tail gates for CCM were determined to be 800 ns and 300 ns, respectively. The optimal gradient for PGA was taken from the peak to the 250th sampling point after the peak. For PDA, the pulse duration was defined as the time between the 50% amplitude points on the rising and falling edges. For the proposed method, the optimal value of \(k\) in this study was approximately 180.
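The unanimous-vote rule used to label the train set can be sketched as follows. The per-method decisions are hypothetical placeholders; in practice they would come from the CCM, PGA and PDA classifiers, which are not implemented here, and `consensus_label` is a name introduced for illustration.

```python
def consensus_label(votes):
    """Return the common label if every method agrees, otherwise None."""
    first = votes[0]
    return first if all(v == first for v in votes) else None

# Hypothetical per-pulse decisions in the order (CCM, PGA, PDA).
decisions = [
    ("neutron", "neutron", "neutron"),
    ("gamma", "gamma", "gamma"),
    ("neutron", "gamma", "neutron"),   # disagreement: the pulse is discarded
]
labels = [consensus_label(v) for v in decisions]
kept = [(i, lab) for i, lab in enumerate(labels) if lab is not None]
print(kept)
```

Requiring agreement trades sample count for label quality: ambiguous pulses are dropped instead of being assigned a possibly wrong category.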

A total of 40,000 neutron waveforms and 40,000 gamma waveforms were obtained for further processing. In accordance with the ratio of 6:2:2, the 80,000 selected neutron and gamma pulse waveforms were randomly divided into a train set, validation set, and test set. All data sets contained an equal number of neutron and gamma waveforms.
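A class-balanced 6:2:2 split can be sketched as below. The helper name `split_622`, the random seeds, and the use of integer identifiers in place of waveforms are assumptions made for illustration; the text states only that the division was random and that each subset contained equal numbers of neutron and gamma waveforms.

```python
import random

def split_622(items, seed=0):
    """Shuffle and split into train/validation/test at a 6:2:2 ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 6 // 10
    n_val = len(items) * 2 // 10
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]

# Split each class separately so that every subset stays balanced.
neutron_ids = range(40_000)
gamma_ids = range(40_000, 80_000)
n_tr, n_va, n_te = split_622(neutron_ids, seed=0)
g_tr, g_va, g_te = split_622(gamma_ids, seed=1)
train, val, test = n_tr + g_tr, n_va + g_va, n_te + g_te
print(len(train), len(val), len(test))
```

With 80,000 waveforms this yields 48,000, 16,000 and 16,000 waveforms, which agrees with the 16,000 test waveforms used in the noise experiments.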

Evaluation metrics

In this study, the following evaluation metrics were used: accuracy, neutron discrimination error ratio (\(DER_{n}\)), gamma discrimination error ratio (\(DER_{\gamma }\)) and total discrimination error ratio (\(DER_{total}\)). The formulas for these metrics are as follows:

where \(N_{n}\) is the number of total neutron waveforms, \(N_{\gamma }\) is the number of total gamma waveforms, \(N_{{n{ - }method}}\) is the number of neutron waveforms correctly classified by the corresponding method, and \(N_{{\gamma { - }method}}\) is the number of gamma waveforms correctly classified by the corresponding method.

Experimental results and discussion

We tested the robustness of the proposed method under three different scenarios that may occur in practical neutron detection: varying signal-to-noise ratios, varying sampling rates and different record lengths. The train set data were consistent across all machine learning-based methods.

The method failure point was defined as the point where the discrimination accuracy dropped to 50%. A method whose accuracy was less than or equal to this value was considered to have completely lost its neutron-gamma discriminating capability.

Performance of discrimination at varying signal-to-noise ratios

Practical neutron detection often encounters interference from noise. Typically, shot noise and Johnson noise, both of which are Gaussian white noise, have the greatest impact on PSD.

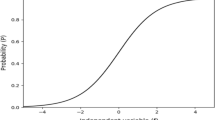

As shown in Fig. 5, Gaussian white noise with standard deviations of 0.01, 0.02, 0.03, 0.04 and 0.05, and absolute noise with amplitude between −0.002 and 0.002 V, were added to the 16,000 pulse waveforms in the test set using LabVIEW to investigate the discrimination performance of various PSD methods at varying signal-to-noise ratios [25, 26]. To study the performance of the methods with different train sets, the anti-noise scenario was divided into two cases. In the first case, no noise was added to the train set. In the second case, noise was added to the train set to simulate varying signal-to-noise ratios: noise with standard deviations of 0.01, 0.02, 0.03, 0.04, and 0.05 was added to equal proportions of the train set.
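Adding zero-mean Gaussian white noise of a given standard deviation can be sketched as follows. The study used LabVIEW for this step; the Python version below is an illustrative stand-in, with a hypothetical function name, a fixed seed for reproducibility, and toy pulse values assumed to be normalized to unit amplitude.

```python
import random

def add_gaussian_noise(pulse, sigma, seed=None):
    """Add independent zero-mean Gaussian noise with standard deviation sigma."""
    rng = random.Random(seed)
    return [v + rng.gauss(0.0, sigma) for v in pulse]

pulse = [0.0, 0.2, 1.0, 0.8, 0.6, 0.45, 0.3, 0.2]   # toy normalized pulse
noisy_versions = {
    sigma: add_gaussian_noise(pulse, sigma, seed=0)
    for sigma in (0.01, 0.02, 0.03, 0.04, 0.05)
}
print(noisy_versions[0.05])
```

Because the pulses are normalized, a standard deviation of 0.05 corresponds to noise of about 5% of the peak amplitude, which is large relative to the small tail amplitudes that many PSD methods rely on.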

Train set without added noise

As shown in Fig. 6, the discrimination accuracy of each algorithm decreased gradually as the standard deviation of the noise increased, while \(DER_{total}\), \(DER_{n}\), and \(DER_{\gamma }\) all increased. When the standard deviation of the noise reached 0.02, the accuracy of the PDA began to diminish rapidly and its DER reached 100%. The PDA became completely ineffective because the heavy noise caused multiple 50% amplitude points to be detected along the rising or falling edge of each pulse. When the noise standard deviation was between 0.02 and 0.05, the proposed algorithm demonstrated the highest accuracy and the lowest \(DER_{total}\), performing similarly to KNN and SVM. Despite being a machine learning-based discrimination method, the BPNN performed unsatisfactorily. This could be attributed to the gradient descent algorithm used to optimize its model parameters, which can become trapped in local optima and fail to reach the parameters that give the best discrimination results. When the standard deviation of the noise reached 0.05, the accuracy of KNN, BPNN and SVM was only 67.8%, 50.3% and 75.1%, respectively. However, the proposed method maintained an accuracy as high as 90%, with a \(DER_{total}\) of only 17.7%, which was the lowest among the seven methods. One likely reason for the strong anti-noise capability of the proposed method is the difference factor, which restricts the comparison to the waveform features that are most easily distinguished. Moreover, data storage, input data bandwidth and redundancy can be reduced by eliminating features that are difficult to distinguish, which is advantageous for improving discrimination in low dimensions. Other machine learning-based methods require processing all sampling points, which could increase the dead time of real-time discrimination systems. In cases where neutron and gamma waveforms differ by a small amount, other methods may misjudge the category. A low signal-to-noise ratio can cause surges in amplitude at points where the original amplitude should be small.

The CCM exhibited the best anti-noise ability among the three traditional discrimination methods. This can be attributed to the fact that the CCM operates by integration, which reduces the effect of random noise. In contrast, the PGA relies on the calculation of the falling edge gradient and is susceptible to noise.

Train set with added noise

As shown in Fig. 7, when the train set was replaced with the noisy one, the accuracy of BPNN, KNN, SVM, and the proposed method improved greatly and declined more slowly as the noise increased, remaining higher than 95% even when the standard deviation of the noise reached 0.05. The CCM, PGA, and PDA do not depend on the train set, so their discrimination performance did not improve noticeably. When the standard deviation of the noise reached 0.05, the accuracy of CCM was 78.9%, and its \(DER_{total}\) was as high as 38.2%. Under the same conditions, the accuracy of the proposed method remained high at 95.7%, which was not much different from that of the other machine learning-based methods. The \(DER_{total}\) of the proposed method was slightly higher than that of the other machine learning-based methods. The higher \(DER_{total}\) was primarily due to \(DER_{\gamma }\), because the gamma waveforms were more affected by noise, which caused their falling edges to resemble those of neutron waveforms. Although the SVM demonstrated the best discrimination performance by using a Gaussian kernel as its kernel function, this also led to more intricate input features and a longer discrimination time.

Performance of discrimination at varying sampling rates

Different sampling rates will affect the discrimination performance. Current digitizer sampling rates for online neutron-gamma discrimination (e.g. Xilinx Kintex-7 XC7K325T PXIe-DAQ) are mainly 10, 20, 40, and 250 MSa/s.

Thus, the LabVIEW resampling VI was used to downsample the 16,000 pulse waveforms in the data set to sampling rates of 100, 50, 20, 10 and 5 MSa/s to investigate the discrimination performance of various PSD methods at varying sampling rates.
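The effect of downsampling on the number of available sampling points can be sketched as below. The algorithm used by the LabVIEW resampling VI is not described in the text, so simple block averaging by an integer factor is swapped in here as an assumption; `decimate` and the synthetic 2400-point record are hypothetical.

```python
def decimate(pulse, factor):
    """Average consecutive blocks of `factor` samples (crude anti-aliasing)."""
    n = len(pulse) // factor
    return [sum(pulse[i * factor:(i + 1) * factor]) / factor for i in range(n)]

ORIGINAL_RATE_MSPS = 1000                      # 1 GSa/s acquisition
full = [float(i % 7) for i in range(2400)]     # synthetic 2.4 k point record

points = {}
for target in (100, 50, 20, 10, 5):            # target rates in MSa/s
    factor = ORIGINAL_RATE_MSPS // target
    points[target] = len(decimate(full, factor))
print(points)
```

Under this assumption, a 2.4 k point record shrinks to 24 points at 10 MSa/s and 12 points at 5 MSa/s, which gives a sense of how few feature points remain at the lowest rates.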

As shown in Fig. 8, the accuracy of each discrimination method remained above 98%, and \(DER_{total}\), \(DER_{n}\) and \(DER_{\gamma }\) remained below 3%, when the sampling rate exceeded 20 MSa/s. However, when the sampling rate was reduced to 10 MSa/s, the accuracy of CCM, PGA, and PDA began to decline rapidly and \(DER_{total}\) increased rapidly. At such a low sampling rate, the waveform lacked the number of feature points required by the traditional methods. The proposed method, along with BPNN, KNN, and SVM, maintained an accuracy of over 99% under the same conditions. When the sampling rate reached 5 MSa/s, the \(DER_{total}\) of the machine learning-based methods gradually increased, and all traditional discrimination methods became ineffective. The proposed method showed poor performance only at a sampling rate of 5 MSa/s, whereas the typical ADC sampling rate for pulse waveform acquisition is 10 MSa/s or higher. KNN achieved the highest accuracy at 5 MSa/s. However, it relies on the selection of the K value, which can be time-consuming when done through K-fold cross-validation.

Performance of discrimination at different record lengths

The neutron flux measurement is directly related to the determination of neutron–nucleus interaction cross sections and the angular distribution of neutrons. Various neutron detectors and dosimeters require calibration based on absolute measurements of neutron flux during production and development. Currently, pulse pile-up events are usually discarded. However, if a discrimination algorithm can effectively distinguish neutrons from gamma rays at different record lengths, then improving the efficiency of detection is possible [27, 28].

When pulse pile-up occurs, the first step of the proposed method is to determine the location at which the pile-up begins. The ratio of the pulse length from the beginning of the pulse to this location, relative to the full waveform length, is then calculated. The pulse type is determined by processing this part of the waveform using the trained model.

The waveform record length in the data set was reduced using LabVIEW to 1/2 (1200 ns), 1/6 (400 ns), 1/8 (300 ns), 1/10 (240 ns), and 1/20 (120 ns) of the original length to investigate the discrimination performance of various PSD methods at different record lengths.
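The record-length reduction amounts to keeping the leading part of each record. A minimal sketch, assuming a 2400-point record at 1 GSa/s (so that one sample corresponds to 1 ns) and a hypothetical helper name `truncate`:

```python
def truncate(pulse, denominator):
    """Keep the first 1/denominator of the record."""
    return pulse[: len(pulse) // denominator]

full = [0.0] * 2400            # 2.4 k points at 1 GSa/s, i.e. 2400 ns
lengths_ns = {d: len(truncate(full, d)) for d in (2, 6, 8, 10, 20)}
print(lengths_ns)
```

The resulting lengths reproduce the durations quoted in the text, from 1200 ns at 1/2 down to 120 ns at 1/20.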

As shown in Fig. 9, the PDA method became completely ineffective when the waveform record length was reduced to 1/2. This method relied on the full waveform record length for discrimination, and it was unable to distinguish neutrons from gamma rays as soon as pulse pile-up occurred at the rising or falling edge of the pulse. The accuracy of the PGA began to decline, and \(DER_{n}\) and \(DER_{total}\) began to increase when the waveform record length was reduced to 1/6. This is because of the reduced number of preserved tail waveform features. Similarly, the accuracy of BPNN began to decline when the waveform record length was reduced to 1/8. At a waveform record length of 1/20 (where pulse pile-up was severe and occurred near the peak position), CCM, PGA, and PDA failed to discriminate effectively. In contrast, KNN, SVM, and the proposed method were still able to achieve a discrimination accuracy of over 98% based on the differences between the rising edges of neutron and gamma ray waveforms.

Traditional discrimination methods require the adjustment of discrimination parameters and often fail to adequately consider the waveform segments that differ most between neutrons and gamma rays [29]. In addition, PGA and PDA use only two feature points from the pulse waveform as discrimination parameters, which makes them vulnerable to noise interference, and any decrease in the number of available feature points (at lower sampling rates or shorter record lengths) increases the discrimination error rate of both. In comparison, machine learning-based methods are relatively robust. The proposed method performed comparably to other machine learning-based methods.

Conclusion

This study proposes a simpler machine learning-based method for thermal neutron/gamma PSD for CLYC scintillators and conducts experiments to test its performance. A comparison with three traditional methods (CCM, PGA, PDA) and three machine learning-based methods (BPNN, SVM, KNN) demonstrates that the proposed method has excellent anti-noise capabilities for discriminating signals at varying signal-to-noise ratios. When the standard deviation of the noise reached 0.05, the proposed method achieved a discrimination accuracy of 90% with a train set without noise, which was 11.8% higher than the traditional discrimination method with the highest accuracy (CCM) and 19.84% higher than the machine learning method with the highest accuracy (SVM). Additionally, it had the lowest discrimination error rate for neutrons. When the train set contained waveforms with added white noise of different standard deviations in equal proportions, the accuracy of the proposed method reached as high as 95.7%. The proposed method performed comparably to other machine learning-based methods when the sampling rate was reduced to 100, 50, 20, and 10 MSa/s, and when the waveform record length was only 1/2, 1/6, 1/8, 1/10, and 1/20 of the original. Even when the sampling rate was as low as 10 MSa/s or the record length was only 1/20 of the complete waveform, the proposed method still achieved accuracies of 99% and 98%, respectively, demonstrating its excellent robustness.

In practical applications, algorithms with strong anti-noise capabilities can reduce the negative impact of electromagnetic fields and other adverse factors on waveforms. Despite a low signal-to-noise ratio, accurate neutron counting for acquiring precise neutron-related data can still be achieved. As part of future work, the proposed algorithm will be implemented on an FPGA to enable real-time discrimination.

Data availability

Data will be made available on request.

References

Chen W, Hu L, Zhong G (2021) Design of the radiation shield and collimator for neutron and gamma-ray diagnostics at EAST. Fusion Eng Des 172:112775. https://doi.org/10.1016/j.fusengdes.2021.112775

Bertalot L, Krasilnikov V, Core L (2019) Present status of ITER neutron diagnostics development. J Fusion Energy 38:283–290. https://doi.org/10.1007/s10894-019-00220-w

Esposito B, Kaschuck Y, Rizzo A (2004) Digital pulse shape discrimination in organic scintillators for fusion applications. Nucl Instrum Methods Phys Res Sect A 518(1–2):626–628. https://doi.org/10.1016/j.nima.2003.11.103

Liu SX, Zhang W, Zhang ZH (2023) Performance of real-time neutron/gamma discrimination methods. Nucl Sci Tech 34(1):8. https://doi.org/10.1007/s41365-022-01160-5

Yamazaki A, Watanabe K, Uritani A (2011) Neutron–gamma discrimination based on pulse shape discrimination in a Ce: LiCaAlF6 scintillator. Nucl Instrum Methods Phys Res Sect A 652(1):435–438. https://doi.org/10.1016/j.nima.2011.02.064

D’Mellow B, Aspinall MD, Mackin RO (2007) Digital discrimination of neutrons and γ-rays in liquid scintillators using pulse gradient analysis. Nucl Instrum Methods Phys Res Sect A 578(1):191–197. https://doi.org/10.1016/j.nima.2007.04.174

Yousefi S, Lucchese L, Aspinall MD (2008) Digital discrimination of neutrons and gamma-rays in liquid scintillators using wavelets. Nucl Instrum Methods Phys Res Sect A 598(2):551–555. https://doi.org/10.1016/j.nima.2008.09.028

Liu MZ, Liu BQ, Zuo Z (2016) Toward a fractal spectrum approach for neutron and gamma pulse shape discrimination. Chin Phys C 40(6):066201. https://doi.org/10.1088/1674-1137/40/6/066201

Liu G, Joyce MJ, Ma X (2010) A digital method for the discrimination of neutrons and gamma rays with organic scintillation detectors using frequency gradient analysis. IEEE Trans Nucl Sci 57(3):1682–1691. https://doi.org/10.1109/tns.2010.2044246

Doucet E, Brown T, Chowdhury P (2020) Machine learning n/γ discrimination in CLYC scintillators. Nucl Instrum Methods Phys Res Sect A 954:161201. https://doi.org/10.1016/j.nima.2018.09.036

Abdelhakim A, Elshazly E (2022) Neutron/gamma pulse shape discrimination using short-time frequency transform. Analog Integr Circ Sig Process 111(3):387–402. https://doi.org/10.1007/s10470-022-02009-y

Gelfusa M, Rossi R, Lungaroni M (2020) Advanced pulse shape discrimination via machine learning for applications in thermonuclear fusion. Nucl Instrum Methods Phys Res Sect A 974:164198. https://doi.org/10.1016/j.nima.2020.164198

Ma T, Song H, Boyang L Y U (2020) Comparison of artificial intelligence algorithms and traditional algorithms in detector Neutron/Gamma discrimination. In: 2020 ICAICE IEEE, pp 173–178. https://doi.org/10.1109/icaice51518.2020.00040

Liu G, Aspinall MD, Ma X (2009) An investigation of the digital discrimination of neutrons and γ rays with organic scintillation detectors using an artificial neural network. Nucl Instrum Methods Phys Res Sect A 607(3):620–628. https://doi.org/10.1016/j.nima.2009.06.027

Lu J, Tuo X, Yang H (2022) Pulse-shape discrimination of SiPM array-coupled CLYC detector using convolutional neural network. Appl Sci 12(5):2400. https://doi.org/10.3390/app12052400

Jung KY, Han BY, Jeon EJ (2023) Pulse shape discrimination using a convolutional neural network for organic liquid scintillator signals. J Instrum 18(03):P03003. https://doi.org/10.1088/1748-0221/18/03/p03003

Jeong Y, Han BY, Jeon EJ (2020) Pulse-shape discrimination of fast neutron background using convolutional neural network for NEOS II. J Korean Phys Soc 77:1118–1124. https://doi.org/10.3938/jkps.77.1118

Griffiths J, Kleinegesse S, Saunders D (2020) Pulse shape discrimination and exploration of scintillation signals using convolutional neural networks. Mach Learn Sci Technol 1(4):045022. https://doi.org/10.1088/2632-2153/abb781

Yoon S, Lee C, Won BH (2022) Fast neutron-gamma discrimination in organic scintillators via convolution neural network. J Korean Phys Soc 80(5):427–433. https://doi.org/10.1007/s40042-022-00398-x

Han J, Zhu J, Wang Z (2022) Pulse characteristics of CLYC and piled-up neutron–gamma discrimination using a convolutional neural network. Nucl Instrum Methods Phys Res Sect A 1028:166328. https://doi.org/10.1016/j.nima.2022.166328

Liu HR, Zuo Z, Li P (2022) Anti-noise performance of the pulse coupled neural network applied in discrimination of neutron and gamma-ray. Nucl Sci Tech 33(6):75. https://doi.org/10.1007/s41365-022-01054-6

Zhang J, Moore ME, Wang Z (2017) Study of sampling rate influence on neutron–gamma discrimination with stilbene coupled to a silicon photomultiplier. Appl Radiat Isot 128:120–124. https://doi.org/10.1016/j.apradiso.2017.06.036

Nakhostin M (2019) Digital discrimination of neutrons and γ-rays in liquid scintillation detectors by using low sampling frequency ADCs. Nucl Instrum Methods Phys Res Sect A 916:66–70. https://doi.org/10.1016/j.nima.2018.11.021

Luo XL, Modamio V, Nyberg J (2018) Pulse pile-up identification and reconstruction for liquid scintillator based neutron detectors. Nucl Instrum Methods Phys Res Sect A 897:59–65. https://doi.org/10.1016/j.nima.2018.03.078

Meng K, Gong P, Liang D (2023) High-speed real-time X-ray image recognition based on a pixelated SiPM coupled scintillator detector with radiation photoelectric neural network structure. IEEE Trans Nucl Sci. https://doi.org/10.1109/tns.2023.3267262

Abdelhakim A, Elshazly E (2023) Efficient pulse shape discrimination using scalogram image masking and decision tree. Nucl Instrum Methods Phys Res Sect A 1050:168140. https://doi.org/10.1016/j.nima.2023.168140

Glenn A, Cheng Q, Kaplan AD (2021) Pulse pileup rejection methods using a two-component Gaussian Mixture Model for fast neutron detection with pulse shape discriminating scintillator. Nucl Instrum Methods Phys Res Sect A 988:164905. https://doi.org/10.1016/j.nima.2020.164905

Yang H, Zhang J, Zhou J (2022) Efficient pile-up correction based on pulse-tail prediction for high count rates. Nucl Instrum Methods Phys Res Sect A 1029:166376. https://doi.org/10.1016/j.nima.2022.166376

Liu HR, Liu MZ, Xiao YL (2022) Discrimination of neutron and gamma ray using the ladder gradient method and analysis of filter adaptability. Nucl Sci Tech 33(12):159. https://doi.org/10.1007/s41365-022-01136-5

Acknowledgements

Ye Ma and Shuang Hang contributed equally to this work. This work was supported by the National Natural Science Foundation of China (Grant No. 12105144), the Primary Research and Development Plan of Jiangsu Province (Grant Nos. BE2022846, BE2023816), the State Key Laboratory of Intense Pulsed Radiation Simulation and Effect (Grant No. SKLIPR2023), the Fundamental Research Funds for the Central Universities (Grant Nos. NC2022006, NG2023002), and the Foundation of Graduate Innovation Center in NUAA (Grant No. xcxjh20220623).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Ma, Y., Hang, S., Gong, P. et al. A method for discriminating neutron and gamma waveforms based on a comparison of differences between pulse feature heights. J Radioanal Nucl Chem 333, 375–386 (2024). https://doi.org/10.1007/s10967-023-09280-x

DOI: https://doi.org/10.1007/s10967-023-09280-x