Abstract

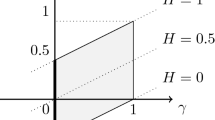

The generalized fractional Brownian motion (GFBM) \(X:=\{X(t)\}_{t\ge 0}\) with parameters \(\gamma \in [0, 1)\) and \(\alpha \in \left( -\frac{1}{2}+\frac{\gamma }{2}, \, \frac{1}{2}+\frac{\gamma }{2} \right) \) is a centered Gaussian H-self-similar process introduced by Pang and Taqqu (2019) as the scaling limit of power-law shot noise processes, where \(H = \alpha -\frac{\gamma }{2}+\frac{1}{2} \in (0,1)\). When \(\gamma = 0\), X is the ordinary fractional Brownian motion. When \(\gamma \in (0, 1)\), GFBM X does not have stationary increments, and its sample path properties such as Hölder continuity, path differentiability/non-differentiability, and the functional law of the iterated logarithm (LIL) have been investigated recently by Ichiba et al. (J Theoret Probab 10.1007/s10959-020-01066-1, 2021). They mainly focused on sample path properties that are described in terms of the self-similarity index H (e.g., LILs at infinity or at the origin). In this paper, we further study the sample path properties of GFBM X and establish the exact uniform modulus of continuity, small ball probabilities, and Chung’s laws of iterated logarithm at any fixed point \(t > 0\). Our results show that the local regularity properties away from the origin and fractal properties of GFBM X are determined by the index \(\alpha +\frac{1}{2}\) instead of the self-similarity index H. This is in contrast with the properties of ordinary fractional Brownian motion whose local and asymptotic properties are determined by the single index H.


1 Introduction and Main Results

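The generalized fractional Brownian motion (GFBM, for short) \(X:=\{X(t)\}_{t\ge 0}\) is a centered Gaussian self-similar process introduced by Pang and Taqqu [28] as the scaling limit of power-law shot noise processes. It has the following stochastic integral representation:

$$\begin{aligned} X(t)=\int _{{\mathbb {R}}}\left( (t-u)_+^{\alpha }-(-u)_+^{\alpha }\right) |u|^{-\gamma /2}\, B(du), \quad t\ge 0, \end{aligned} \tag{1.1}$$

where the parameters \(\gamma \) and \(\alpha \) satisfy

$$\begin{aligned} \gamma \in [0, 1), \quad \alpha \in \left( -\frac{1}{2}+\frac{\gamma }{2}, \, \frac{1}{2}+\frac{\gamma }{2} \right) , \end{aligned} \tag{1.2}$$

and where B(du) is a Gaussian random measure in \({\mathbb {R}}\) with the Lebesgue control measure du. It follows that the Gaussian process X is self-similar with index H given by

$$\begin{aligned} H = \alpha -\frac{\gamma }{2}+\frac{1}{2} \in (0,1). \end{aligned} \tag{1.3}$$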

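When \(\gamma =0\), X becomes an ordinary fractional Brownian motion (FBM) \(B^H\) with \(H=\alpha +\frac{1}{2}\), which can be represented as:

$$\begin{aligned} B^H(t)=\int _{{\mathbb {R}}}\left( (t-u)_+^{\alpha }-(-u)_+^{\alpha }\right) B(du), \quad t\ge 0. \end{aligned} \tag{1.4}$$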

However, when \(\gamma \ne 0\), X does not have the property of stationary increments.
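As a small illustration of the parameter constraints and the self-similarity index described above, the following sketch checks that a pair \((\alpha , \gamma )\) is admissible and returns \(H = \alpha -\gamma /2+1/2\). The function names are hypothetical and are not taken from [28] or [11].

```python
def gfbm_hurst_index(alpha: float, gamma: float) -> float:
    """Return H = alpha - gamma/2 + 1/2 after checking the GFBM parameter range.

    The function name is illustrative only; the admissible range is
    gamma in [0, 1) and alpha in (-1/2 + gamma/2, 1/2 + gamma/2).
    """
    if not 0.0 <= gamma < 1.0:
        raise ValueError("gamma must lie in [0, 1)")
    lower = -0.5 + gamma / 2.0
    upper = 0.5 + gamma / 2.0
    if not lower < alpha < upper:
        raise ValueError(f"alpha must lie in ({lower}, {upper})")
    return alpha - gamma / 2.0 + 0.5


def has_stationary_increments(gamma: float) -> bool:
    """GFBM reduces to ordinary FBM (stationary increments) exactly when gamma == 0."""
    return gamma == 0.0
```

For instance, \(\alpha = 0\) and \(\gamma = 0\) give \(H = 1/2\), which is the Brownian motion case.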

Fractional Brownian motion \(B^H\) has been studied extensively in the literature. It is well known that \(B^H\) arises naturally as the scaling limit of many interesting stochastic systems. For example, [17] or [30, Chapter 3.4] showed that the scaled power-law Poisson shot noise process with stationary increments converges to \(B^H\). Pang and Taqqu [28] studied a class of integrated shot-noise processes with power-law non-stationary conditional variance functions and proved in their Theorem 3.1 that the corresponding scaled process converges weakly to GFBM X.

As shown by Pang and Taqqu [28], GFBM X is a natural generalization of the ordinary FBM. It preserves the self-similarity property while the factor \(|u|^{-\gamma /2}\) introduces non-stationarity of increments, which is useful for reflecting the non-stationarity in physical stochastic systems. Ichiba, Pang and Taqqu [11] raised the interesting question: “How does the parameter \(\gamma \) affect the sample path properties of GFBM?”. They proved in [11] that, for any \(T>0\) and \(\varepsilon >0\), the sample paths of X are Hölder continuous in [0, T] of order \(H-\varepsilon \) and the functional and local laws of the iterated logarithm of X are determined by the self-similarity index H. More recently, Ichiba, Pang and Taqqu [12] studied the semimartingale properties of GFBM X and its mixtures and applied them to model the volatility processes in finance.

In this paper, we study precise local sample path properties of GFBM X, including the exact uniform modulus of continuity, small ball probabilities, Chung’s law of the iterated logarithm at any fixed point \(t > 0\), and the tangent processes. Our main results are Theorems 1.1–1.6 below. They show that the local regularity properties of GFBM X away from the origin are determined by the index \(\alpha +\frac{1}{2}\), instead of the self-similarity index \(H= \alpha -\frac{\gamma }{2}+\frac{1}{2}\). Our results also imply that the fractal properties of GFBM X are determined by \(\alpha +\frac{1}{2}\), see Remarks 3.1 (ii) and 7.1 below. This is in contrast with the ordinary fractional Brownian motion whose local, fractal, and asymptotic properties are determined by the single index H. We remark that our results are also useful for studying other fine sample path properties of GFBM X. For example, one can determine the exact Hausdorff measure functions for various fractals generated by the sample paths and prove sharp Hölder conditions and tail probability estimates for the local times of GFBM X as in, e.g., [32, 34, 38,39,40].

The first result is related to Theorems 3.1 and 4.1 of Ichiba, Pang and Taqqu [11] and provides the exact uniform modulus of continuity for X and its derivative \(X'\) (when it exists) in [a, b], where \(0< a< b< \infty \) are constants.

Theorem 1.1

Let \(X:=\{X(t)\}_{t\ge 0}\) be the GFBM defined in (1.1) and let \(0< a< b< \infty \) be constants.

(a) If \(\alpha \in (-1/2+\gamma /2, 1/2)\), then there exists a constant \(\kappa _1\in (0,\,\infty )\) such that

$$\begin{aligned} \lim _{r\rightarrow 0+} \sup _{a\le t\le b}\sup _{0\le h\le r}\frac{|X(t+h)-X(t)|}{h^{\alpha +\frac{1}{2}}\sqrt{\ln h^{-1}}} =\kappa _1, \ \ \ \text {a.s.} \end{aligned} \tag{1.5}$$

(b) If \(\alpha = 1/2\), then there exists a constant \(\kappa _2\in (0,\, \infty )\) such that

$$\begin{aligned} \lim _{r\rightarrow 0+} \sup _{a\le t\le b}\sup _{0\le h\le r}\frac{|X(t+h)-X(t)|}{h \ln h^{-1}} \le \kappa _2, \ \ \ \text {a.s.} \end{aligned} \tag{1.6}$$

(c) If \(\alpha \in (1/2, 1/2+\gamma /2)\), then X has a modification that is continuously differentiable on [a, b] and its derivative \(X'\) satisfies

$$\begin{aligned} \lim _{r\rightarrow 0+} \sup _{a\le t\le b}\sup _{0\le h\le r}\frac{|X'(t+h)-X'(t)|}{h^{\alpha -\frac{1}{2}}\sqrt{\ln h^{-1}}} =\kappa _3, \ \ \ \text {a.s.}, \end{aligned} \tag{1.7}$$

where \(\kappa _3\in (0,\,\infty )\) is a constant.

Remark 1.1

(i) Theorem 3.1 in [11] states that, for all \(\varepsilon >0\), X has a modification that satisfies the uniform Hölder condition in [0, T] of order \( \alpha -\gamma /2+1/2-\varepsilon \). Our Theorem 1.1 shows that the sample path of X on any interval \([a,\, b]\) with \(a> 0\) is smoother than it is near \(t = 0\), where its behavior is determined by the self-similarity index \( H= \alpha -\gamma /2+1/2\), as suggested by \( {\mathbb {E}}\left[ X(t)^2\right] =c(\alpha , \gamma ) t^{2H}, \) with

$$\begin{aligned} c(\alpha , \gamma )= {\mathcal {B}}(2\alpha +1, 1-\gamma )+ \int _0^{\infty }((1+u)^{\alpha }-u^{\alpha })^2 u^{-\gamma }du. \end{aligned} \tag{1.8}$$

Here and below, \({\mathcal {B}}(\cdot ,\cdot )\) denotes the Beta function.

(ii) We believe that the equality in (1.6) holds. However, we have not been able to prove this. The reason is that, when \(\alpha = 1/2\), the lower bounds in Lemma 3.1 and Proposition 3.2 are different. Similarly, the case of \(\alpha = 1/2\) is excluded in Theorems 1.2–1.4 below.

The next two results are on the small ball probabilities of X. They show a clear difference for the two cases of \(s \in [0, r]\) and \(s\in [t-r, t + r]\) with \(t>r> 0\). The small ball probabilities are not only useful for proving Chung’s law of the iterated logarithm (Chung’s LIL, for short) in Theorem 1.4 but also have many other applications. We refer to Li and Shao [21] for more information.

Theorem 1.2

Assume \(\alpha \in (-1/2+\gamma /2, \,1/2)\). Then, there exist constants \(\kappa _4, \kappa _5\in (0,\infty )\) such that for all \(r>0\) and \( 0<\varepsilon <1\),

where \(H=\alpha -\gamma /2+1/2\).

Theorem 1.3

(a) Assume \(\alpha \in (-1/2+\gamma /2,\,1/2)\). Then, there exist constants \(\kappa _6, \kappa _7\in (0,\infty )\) such that for all \(t>0, r \in (0, t/2)\) and \(\varepsilon \in \left( 0, r^{\alpha +1/2}\right) \),

$$\begin{aligned} \begin{aligned} \exp \bigg (-\kappa _6\,r \, c_1(t)\Big (\frac{1}{\varepsilon }\Big )^{\frac{1}{\alpha +1/2}}\bigg )\le&\, {\mathbb {P}}\bigg \{\sup _{|h|\le r}|X(t+h)-X(t)|\le \varepsilon \bigg \}\\&\, \le \exp \bigg (-\kappa _7\,r\, c_2(t)\Big (\frac{1}{\varepsilon }\Big )^{\frac{1}{\alpha +1/2}}\bigg ), \end{aligned} \end{aligned} \tag{1.10}$$

where \(c_1(t)=\max \left\{ t^{\alpha -\gamma /2-1/2}, t^{-\gamma /(2\alpha +1)}\right\} \) and \(c_2(t) =t^{-\gamma /(2\alpha +1)}\).

(b) Assume \(\alpha \in (1/2,\, 1/2+\gamma /2)\). Then, there exist constants \(\kappa _8, \kappa _9\in (0,\infty )\) such that for all \(t>0, r \in (0, t/2)\) and \(\varepsilon \in \left( 0, r^{\alpha -1/2}\right) \),

$$\begin{aligned} \begin{aligned} \exp \bigg (-\kappa _8\, r\, c_3(t)\Big (\frac{1}{\varepsilon }\Big )^{\frac{1}{\alpha -1/2}}\bigg )\le&\, {\mathbb {P}}\bigg \{\sup _{|h|\le r}|X'(t+h)-X'(t)|\le \varepsilon \bigg \} \\&\, \le \exp \bigg (-\kappa _9\, r\, c_4(t)\Big (\frac{1}{\varepsilon }\Big )^{\frac{1}{\alpha -1/2}}\bigg ), \end{aligned} \end{aligned} \tag{1.11}$$

where \(c_3(t)=\max \left\{ t^{\alpha -\gamma /2-3/2}, t^{-\gamma /(2\alpha -1)}\right\} \) and \(c_4(t) =t^{-\gamma /(2\alpha -1)}\).

The following are Chung’s laws of the iterated logarithm for X and \(X'\). It is interesting to notice that the parameters \(\gamma \) and \(\alpha \) play different roles. Since X and \(X'\) do not have stationary increments when \(\gamma > 0\), the limits in their Chung’s LILs depend on the location of \(t>0\). Equations (1.12) and (1.13) show that the oscillations decrease at the rate \(t^{-\gamma /2}\) as t increases. This provides an explicit answer, in the context of Chung’s LIL, to the aforementioned question of Ichiba, Pang and Taqqu [11] regarding the effect of the parameter \(\gamma \).

Theorem 1.4

(a) If \(\alpha \in (-1/2+\gamma /2,1/2)\), then there exists a constant \(\kappa _{10}\in (0,\infty )\) such that for every \(t>0\),

$$\begin{aligned} \liminf _{r\rightarrow 0+} \sup _{ |h|\le r}\frac{ |X(t+h)-X(t)|}{r^{\alpha +1/2}/(\ln \ln 1/r)^{\alpha +1/2}} =\kappa _{10} t^{-\gamma /2}, \ \ \ \text {a.s.} \end{aligned} \tag{1.12}$$

(b) If \(\alpha \in (1/2, \, 1/2+\gamma /2)\), then there exists a constant \(\kappa _{11}\in (0,\infty )\) such that for every \(t>0\),

$$\begin{aligned} \liminf _{r\rightarrow 0+} \sup _{ |h|\le r}\frac{ |X'(t+h)-X'(t)|}{r^{\alpha -1/2}/(\ln \ln 1/r)^{\alpha -1/2}} =\kappa _{11} t^{-\gamma /2}, \ \ \ \ \text {a.s.} \end{aligned} \tag{1.13}$$

Remark 1.2

From the proofs of Theorems 1.3 and 1.4, we know that if \(\sup _{ |h|\le r}\) is replaced by \(\sup _{ 0\le h\le r}\) in (1.10–1.13), then the corresponding results also hold.
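To make the roles of \(\alpha \), \(\gamma \) and the location t in (1.12) concrete, the following sketch evaluates the normalizing function \(r^{\alpha +1/2}/(\ln \ln 1/r)^{\alpha +1/2}\) and the location factor \(t^{-\gamma /2}\). The function names are hypothetical, and the constant \(\kappa _{10}\) is not computed here because its value is not given explicitly in the statement.

```python
import math


def chung_lil_normalizer(r: float, alpha: float) -> float:
    """Denominator in (1.12): r^{alpha+1/2} / (ln ln 1/r)^{alpha+1/2}.

    Requires 0 < r < 1/e so that ln ln(1/r) is positive.
    """
    if not 0.0 < r < math.exp(-1.0):
        raise ValueError("r must lie in (0, 1/e)")
    return (r / math.log(math.log(1.0 / r))) ** (alpha + 0.5)


def location_factor(t: float, gamma: float) -> float:
    """Location dependence t^{-gamma/2} on the right-hand side of (1.12) and (1.13)."""
    if t <= 0.0:
        raise ValueError("t must be positive")
    return t ** (-gamma / 2.0)
```

With \(\gamma = 0\) the location factor is identically 1, which is consistent with the stationary increments of ordinary FBM.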

For completeness, we also include the following law of the iterated logarithm for GFBM X at any fixed point \(t > 0\). Part (a) of our Theorem 1.5 supplements Theorem 6.1 in [11] where the case of \(t = 0\) was considered. See also Proposition 7.1 at the end of the present paper for a slight improvement of [11, Theorem 6.1] using the time inversion property of GFBM. Theorems 1.4 and 1.5 together describe precisely the large and small oscillations of X in the neighborhood of every fixed point \(t > 0\). As such they are useful for studying fine fractal properties (such as exact Hausdorff measure function and multifractal structure) of the sample path of X.

The topic of LILs for Gaussian processes has been studied extensively by many authors, see for example, Arcones [3], Marcus and Rosen [24], Meerschaert et al. [26]. In particular, Chapter 7 of [24] provides explicit information about the constant in LIL for Gaussian processes with stationary increments under extra regularity conditions on the variance of the increments or the spectral density functions. However, the results in [24] cannot be applied to GFBM X directly. Instead, we will make use of Theorem 5.1 in [26] and the stationary Gaussian process U in Sect. 4 to prove the following theorem. As in Theorem 1.4, our result below describes explicitly the roles played by the parameters \(\gamma \) and \(\alpha \) and the location \(t>0\).

Theorem 1.5

(a) If \(\alpha \in (-1/2+\gamma /2,1/2)\), then there exists a constant \(\kappa _{12}\in (0,\infty )\) such that for every \(t>0\),

$$\begin{aligned} \limsup _{r\rightarrow 0+} \sup _{ |h|\le r}\frac{ |X(t+h)-X(t)|}{r^{\alpha +1/2} \sqrt{\ln \ln 1/r}}=\kappa _{12} t^{-\gamma /2}, \ \ \ \ \text {a.s.} \end{aligned} \tag{1.14}$$

(b) If \(\alpha =1/2\), then there exists a constant \(\kappa _{13}\in (0,\infty )\) such that for every \(t>0\),

$$\begin{aligned} \limsup _{r\rightarrow 0+} \sup _{ |h|\le r}\frac{ |X(t+h)-X(t)|}{r \sqrt{\ln (1/r) \ln \ln 1/r}} = \kappa _{13}t^{-\gamma /2}, \ \ \ \text {a.s.} \end{aligned} \tag{1.15}$$

(c) If \(\alpha \in (1/2, \, 1/2+\gamma /2)\), then there exists a constant \(\kappa _{14}\in (0,\infty )\) such that for every \(t>0\),

$$\begin{aligned} \limsup _{r\rightarrow 0+} \sup _{ |h|\le r}\frac{ |X'(t+h)-X'(t)|}{r^{\alpha -1/2}\sqrt{\ln \ln 1/r}}=\kappa _{14} t^{-\gamma /2}, \ \ \ \ \text {a.s.} \end{aligned} \tag{1.16}$$

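In order to prove the theorems stated above, we consider the following decomposition of X(t) for all \(t \ge 0\):

$$\begin{aligned} X(t)&=\int _{-\infty }^{0}\left( (t-u)^{\alpha }-(-u)^{\alpha }\right) (-u)^{-\gamma /2}\, B(du)+\int _{0}^{t}(t-u)^{\alpha }u^{-\gamma /2}\, B(du)\\&=:Y(t)+Z(t). \end{aligned} \tag{1.17}$$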

Then, the two processes \(Y=\{Y(t)\}_{t\ge 0}\) and \(Z=\{Z(t)\}_{ t\ge 0}\) are independent. The process Z in (1.17) is well defined for \(\alpha > -1/2\) and \(\gamma < 1\) and is called a generalized Riemann–Liouville FBM, following the terminology of Ichiba, Pang and Taqqu [11]. Notice that the ranges of the parameters \(\alpha \) and \(\gamma \) for Z are wider than those in (1.2). As in [28, Remark 5.1], one can verify that Z is a self-similar Gaussian process with Hurst index \(H=\alpha -\frac{\gamma }{2}+\frac{1}{2}\), which is negative if \(\alpha \in (-1/2,\, -1/2 +\gamma /2)\). It follows from Lemma 3.1 below that Z has a modification whose sample function is continuous on \((0, \infty )\) a.s. In Sect. 2, we will prove that Y has a modification that is continuously differentiable in \((0,\infty )\). Hence, in order to study the regularity properties of X, we only need to study in detail the regularity properties of the sample path of Z when the parameters satisfy \(\alpha > -1/2\) and \(\gamma \in [0, 1)\).

Intuitively, if \(u\in [a,b]\subset (0,\infty )\), the perturbation of \(u^{-\gamma /2}\) is bounded and it does not deeply affect the sample path properties of Z(t). Consequently, the process Z shares many regularity properties of the following process:

$$\begin{aligned} Z^{\alpha , 0}(t)=\int _{0}^{t}(t-u)^{\alpha }\, B(du), \quad t\ge 0, \end{aligned} \tag{1.18}$$

which is the Riemann–Liouville FBM introduced by Lévy [20], see also Mandelbrot and Van Ness [23], Marinucci and Robinson [25] for further information. When \(\alpha \ge 1\) is an integer, \(Z^{\alpha , 0}\) is, up to a constant factor, an \(\alpha \)-fold primitive of Brownian motion and its precise local asymptotic properties were studied by Lachal [18].
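The comparison between Z and the Riemann–Liouville FBM can be explored numerically. The sketch below assumes the representation \(Z(t)=\int _0^t (t-u)^{\alpha }u^{-\gamma /2}B(du)\) and approximates it by a crude midpoint Riemann sum against Gaussian increments; setting `gamma=0` gives an approximation of \(Z^{\alpha ,0}\). The function names, the discretization, and the default step count are illustrative assumptions, not a method from the paper, and the approximation is rough for \(\alpha < 0\), where the kernel is singular at \(u = t\).

```python
import math
import random


def z_variance(t: float, alpha: float, gamma: float) -> float:
    """Exact E[Z(t)^2] = t^{2*alpha+1-gamma} * Beta(2*alpha+1, 1-gamma) for the assumed kernel."""
    a, b = 2.0 * alpha + 1.0, 1.0 - gamma
    beta = math.gamma(a) * math.gamma(b) / math.gamma(a + b)
    return t ** (2.0 * alpha + 1.0 - gamma) * beta


def simulate_z(t_grid, alpha, gamma, n_steps=2000, seed=0):
    """Midpoint Riemann-sum approximation of Z on the time points in t_grid.

    A single set of Gaussian increments is shared by all time points, so the
    returned values are samples from one approximate path.
    """
    rng = random.Random(seed)
    du = max(t_grid) / n_steps
    mids = [(i + 0.5) * du for i in range(n_steps)]
    noise = [rng.gauss(0.0, math.sqrt(du)) for _ in range(n_steps)]
    path = []
    for t in t_grid:
        total = 0.0
        for u, db in zip(mids, noise):
            if u < t:  # only the part of the noise on (0, t) contributes
                total += (t - u) ** alpha * u ** (-gamma / 2.0) * db
        path.append(total)
    return path
```

Comparing the empirical variance of many simulated values of Z(t) with `z_variance(t, alpha, gamma)` gives a quick sanity check of the discretization.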

Theorems 1.4–1.5 demonstrate that, since GFBM X has non-stationary increments, the local oscillation properties of X near a point \(t\in (0,\infty )\) are location dependent. As suggested by one of the referees, another way to study the local structure of X near t is to determine the limit (in the sense of all the finite dimensional distributions or in the sense of weak convergence) of the following sequence of scaled enlargements of X around t:

$$\begin{aligned} \left\{ \frac{X(t+r_n\tau )-X(t)}{c_n}\right\} _{\tau \ge 0}, \end{aligned} \tag{1.19}$$

where \( \{r_n\}\) and \( \{c_n\}\) are sequences of real numbers such that \(r_n\searrow 0\) and \(c_n\searrow 0\). The (small scale) limiting process of (1.19), when it exists, is called a tangent process of X at t by Falconer [8, 9]. If the limit in (1.19) exists for \(c_n = r_n^\chi \) for some constant \(\chi \in (0, 1]\) which may depend on t, one also says that X is weakly locally asymptotically self-similar of order \(\chi \) at t (cf. [6]). Tangent processes of stochastic processes and random fields are useful in both theory and applications. We refer to [8, 9] for a general framework of tangent processes and to [4, 5] for some statistical applications. Recently, Skorniakov [31] provided sufficient and necessary conditions for a class of self-similar Gaussian processes to admit a unique tangent process at any fixed point \(t>0\). The theorems in [31] are applicable to the Riemann–Liouville FBM, sub-fractional Brownian motion, and bi-fractional Brownian motion. However, it is not easy to verify that GFBM X satisfies the conditions of Theorem 2.1 of Skorniakov [31]. In the following, we provide some results on the tangent processes of GFBM X by using direct arguments.

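For any fixed \(t\ge 0,\, u> 0\) and a constant \(\chi \in (0, 1]\), define the scaled process \(\big \{V^{t,u}(\tau )\big \}_{\tau \ge 0}\) around t by

$$\begin{aligned} V^{t,u}(\tau )=\frac{X(t+u\tau )-X(t)}{u^{\chi }}, \quad \tau \ge 0. \end{aligned} \tag{1.20}$$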

(a) If \(t = 0\), then, by the self-similarity of X, we take \(\chi = H\) and see that the corresponding tangent process is X itself.

(b) If \(t > 0\), then the choice of \(\chi \) depends on the parameter \(\alpha \).

(b1) If \(\alpha \in (1/2, 1/2+\gamma /2)\), then by the differentiability of X (see also [8, Example 2]), we can take \(\chi = 1\) and derive that, for any \(t > 0\),

$$\begin{aligned} \big \{V^{t,u}(\tau )\big \}_{\tau \ge 0} \text { converges in distribution to }\ \big \{c_{1,1} t^{H-1} B^1(\tau )\big \}_{\tau \ge 0} \end{aligned}$$

in \(C({\mathbb {R}}_+, {\mathbb {R}})\) (the space of continuous functions from \({\mathbb {R}}_+\) to \({\mathbb {R}}\)), as \(u\rightarrow 0+\), where \(B^1(\tau )=\tau {\mathcal {N}}\) with \({\mathcal {N}}\) being a standard Gaussian random variable and

$$\begin{aligned} c_{1,1}=\alpha \left( \int _{-\infty }^{1}(1-v)^{2\alpha -2}|v|^{-\gamma }dv\right) ^{1/2}. \end{aligned}$$

(b2) If \(\alpha \in (-1/2+\gamma /2,1/2)\), then it follows from the decomposition (1.17) and the differentiability of Y (see Proposition 2.1 below) that the weak limit of \(\big \{V^{t,u}(\tau )\big \}_{\tau \ge 0}\) as \(u\rightarrow 0+\) is the same as that of the analogous scaled process for the generalized Riemann–Liouville FBM Z (see Proposition 3.1 below). More precisely, we obtain the result in Theorem 1.6 below.

Theorem 1.6

Assume \(\alpha \in (-1/2+\gamma /2, 1/2), t>0\) and \(\chi = \alpha + \frac{1}{2}\) in (1.20). Then, the process \(\big \{V^{t,u}(\tau )\big \}_{\tau \ge 0}\) defined by (1.20) converges in distribution to \(\big \{ \kappa _{15} t^{-\gamma /2} B^{\alpha +1/2}(\tau )\big \}_{\tau \ge 0}\) in \(C({\mathbb {R}}_+,{\mathbb {R}})\), as \(u\rightarrow 0+\). Here, \(B^{\alpha +1/2}\) is a FBM with index \(\alpha +1/2\), and

The rest of the paper is organized as follows. In Sect. 2, we prove that the sample paths of the process Y are differentiable in \((0, \infty )\) almost surely. In Sects. 3–6, we focus on the generalized Riemann–Liouville FBM Z. More precisely, we give estimates on the moments of the increments, prove the existence of the tangent process and establish the one-sided strong local nondeterminism of Z in Sect. 3; study the Lamperti transformation of Z and give some spectral density estimates in Sect. 4; determine the small ball probabilities for Z in Sect. 5; and prove a Chung’s LIL for Z in Sect. 6. In Sect. 7, we prove the main theorems for GFBM X.

2 Sample Path Properties of Y

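In this section, we consider the process \(Y= \{Y(t)\}_{ t \ge 0}\) defined in (1.17), namely,

$$\begin{aligned} Y(t)=\int _{-\infty }^{0}\left( (t-u)^{\alpha }-(-u)^{\alpha }\right) (-u)^{-\gamma /2}\, B(du), \end{aligned} \tag{2.1}$$

and show that its sample function is smooth away from the origin.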

Lemma 2.1

Assume \(-1/2+\gamma /2<\alpha <1/2+\gamma /2\). Then, for all \(0< s< t<\infty \),

where \(c_{2,1}= \alpha ^2\int _0^{\infty }(1+u)^{2\alpha -2}u^{-\gamma }du\).

Proof

For any \(0< s< t<\infty \) and \(u > 0\), by the mean-value theorem, there exists a number \(\theta (u) \in (0,1)\) (which also depends on s, t) such that

In the proofs of this lemma and Proposition 2.1 below, we will use (2.3) to derive lower and upper bounds for \(|(t+u)^{\alpha }-(s+u)^{\alpha }|\). Hence, we have

Similarly, we can prove that

This proves (2.2). \(\square \)

By Lemma 2.1, the Gaussian property of Y, and the Kolmogorov continuity theorem (see, e.g., [16, Theorem C.6]), we know that, for any \(\varepsilon >0\), Y has a modification that is Hölder continuous with index \(1-\varepsilon \) on any interval [a, b] with \(0<a<b\). We will apply this fact in Sect. 7 to prove Theorems 1.1 and 1.4–1.6 from the results on the generalized Riemann–Liouville FBM Z.

In the following, we prove the differentiability of Y by using the argument in the proof of Lemma 3.6 in [16].

Proposition 2.1

Assume \(-1/2+\gamma /2<\alpha <1/2+\gamma /2\). For any integer \(n \ge 1\), the Gaussian process \(Y=\{Y(t)\}_{t\ge 0}\) has a modification (still denoted by Y) that is continuously differentiable of order n in \((0,\infty )\).

Proof

For any \(t>0\), define

The integrand is in \(L^2((-\infty ,0);{\mathbb {R}})\), and hence, \(\{Y'(t)\}_{ t>0}\) is a well-defined mean-zero Gaussian process. For every \(s, t\in [a,b]\subset (0,\infty )\) with \(s<t\), applying (2.3), we have

This, together with the Kolmogorov continuity theorem and the arbitrariness of a and b, implies that \(Y'\) is continuous in \((0,\infty )\) up to a modification.

Assume that \(\phi \in C_c^{\infty }((0,\infty ))\) (the space of all infinitely differentiable functions with compact supports). By the stochastic Fubini theorem [16, Corollary 2.9] and the formula of integration by parts, we know a.s.,

Applying the stochastic Fubini theorem again, we have

This means that \(Y'(t)\) is the weak derivative of Y(t) for all \(t>0\). Since \(Y'\) is continuous in \((0,\infty )\), (2.7) shows that \(Y'\) is in fact almost surely the ordinary derivative of Y in \((0,\infty )\). By induction, we use the same argument as above to show that, for every integer \(n \ge 1\), Y has a modification that is continuously differentiable of order n in \((0,\infty )\). \(\square \)

3 Moment Estimates for the Increments and One-Sided SLND of Z

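Consider the generalized Riemann–Liouville FBM \(Z= \{Z(t)\}_{t \ge 0}\) with indices \(\alpha \) and \(\gamma \) defined by

$$\begin{aligned} Z(t)=\int _{0}^{t}(t-u)^{\alpha }u^{-\gamma /2}\, B(du), \quad t\ge 0. \end{aligned} \tag{3.1}$$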

This Gaussian process is well defined if the constants \(\alpha \) and \(\gamma \) satisfy \(\alpha > - \frac{1}{2}\) and \(\gamma < 1\) and is self-similar with index \(H = \alpha - \frac{\gamma }{2}+\frac{1}{2}\). Notice that \(H\le 0\) if \(-\frac{1}{2} < \alpha \le -\frac{1}{2}+\frac{\gamma }{2}\) and \(H > 0\) if \(\alpha > -\frac{1}{2}+\frac{\gamma }{2}\).
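The self-similarity index and the parameter range stated above can be checked by a short computation. The following is a sketch under the assumption that Z has the representation \(Z(t)=\int _0^t (t-u)^{\alpha }u^{-\gamma /2}B(du)\), using only the scaling property \(B(c\,dv)\overset{d}{=}c^{1/2}B(dv)\) of the Gaussian random measure and the Itô isometry. For any constant \(c>0\), the substitution \(u=cv\) gives, as processes in t,

$$\begin{aligned} Z(ct)&=\int _0^{t}(ct-cv)^{\alpha }(cv)^{-\gamma /2}\, B(c\, dv) \overset{d}{=} c^{\alpha -\frac{\gamma }{2}+\frac{1}{2}}\int _0^{t}(t-v)^{\alpha }v^{-\gamma /2}\, B(dv)=c^{H}Z(t),\\ {\mathbb {E}}\left[ Z(t)^2\right]&=\int _0^{t}(t-u)^{2\alpha }u^{-\gamma }\, du=t^{2H}\, {\mathcal {B}}(2\alpha +1, 1-\gamma ). \end{aligned}$$

The Beta integral in the second line is finite exactly when \(2\alpha +1>0\) and \(1-\gamma >0\), which matches the conditions \(\alpha >-\frac{1}{2}\) and \(\gamma <1\).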

In this section, we derive optimal estimates on the moments of the increments, prove the existence of the tangent process and establish the one-sided strong local nondeterminism for Z. These properties are useful for studying the sample path properties of Z.

3.1 Moment Estimates

In the following, Lemmas 3.1, 3.2 and 3.3 provide optimal estimates on \({\mathbb {E}}\left[ (Z(t)-Z(s))^2 \right] \). These estimates are essential for establishing sharp sample path properties of Z. Notice that the upper bounds in (i) and (ii) in Lemma 3.1 below are the same (up to a constant factor) when \(\alpha <1/2\). We will use these bounds for estimating the small ball probability and the uniform modulus of continuity in Sects. 5 and 7.

Lemma 3.1

Assume \(\alpha \in (-1/2\,, 1/2]\) and \(\gamma \in [0, 1)\). The following statements hold:

(i) If \(0< s< t <\infty \) and \(0 < s \le 2(t-s)\), then

$$\begin{aligned} c_{3,1} \frac{|t-s|^{2\alpha +1}}{t^{\gamma } }\le {\mathbb {E}}\left[ (Z(t)-Z(s))^2 \right] \le c_{3,2} \frac{|t-s|^{2\alpha +1}}{s^{\gamma } }, \end{aligned} \tag{3.2}$$

where \(c_{3,1}=1/(2\alpha +1)\) and \(c_{3,2}= 2/(1-\gamma )+1/(2\alpha +1) +{\mathcal {B}}(2\alpha +1, 1-\gamma ) 2^{ 2\alpha +1} \).

(ii) If \(0< s< t < \infty \) and \(s > 2(t-s)\), then

$$\begin{aligned} {\mathbb {E}}\left[ (Z(t)-Z(s))^2 \right] \asymp \left\{ \begin{array}{ll} \frac{|t-s|^{2\alpha +1}}{s^{\gamma } } , \ \quad &{}\hbox { if }\ \alpha < 1/2,\\ \frac{(t-s)^2}{s^{\gamma }} \left( 1+\ln \big | \frac{s}{t-s}\big |\right) , \ \quad &{}\hbox { if } \ \alpha =1/2. \end{array} \right. \end{aligned} \tag{3.3}$$

Here and below, for two real-valued functions f and g defined on a set I, the notation \(f \asymp g\) means that

$$\begin{aligned} c \le f(x)/g(x) \le c' \ \ \hbox { for all }\,x \in I, \end{aligned}$$

for some positive and finite constants c and \(c'\) which may depend on \(f,\, g\) and I.
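The lower bound in (3.2) can be checked numerically. The sketch below assumes the representation \(Z(t)=\int _0^t (t-u)^{\alpha }u^{-\gamma /2}B(du)\), under which the Itô isometry gives \({\mathbb {E}}[(Z(t)-Z(s))^2]\ge \int _s^t (t-u)^{2\alpha }u^{-\gamma }du\) for \(0<s<t\). It approximates this integral by the midpoint rule and compares it with \(c_{3,1}|t-s|^{2\alpha +1}/t^{\gamma }\). The function names and the quadrature are illustrative choices only, and the quadrature is intended for \(\alpha \ge 0\), where the integrand is bounded.

```python
def i2_midpoint(s: float, t: float, alpha: float, gamma: float, n: int = 20000) -> float:
    """Midpoint-rule approximation of int_s^t (t-u)^{2*alpha} u^{-gamma} du, for 0 < s < t."""
    h = (t - s) / n
    total = 0.0
    for k in range(n):
        u = s + (k + 0.5) * h
        total += (t - u) ** (2.0 * alpha) * u ** (-gamma)
    return total * h


def lower_bound_32(s: float, t: float, alpha: float, gamma: float) -> float:
    """Left-hand side of (3.2): c_{3,1} |t-s|^{2*alpha+1} / t^gamma with c_{3,1} = 1/(2*alpha+1)."""
    return (t - s) ** (2.0 * alpha + 1.0) / ((2.0 * alpha + 1.0) * t ** gamma)


def in_case_i(s: float, t: float) -> bool:
    """Case (i) of Lemma 3.1: 0 < s < t and s <= 2(t - s)."""
    return 0.0 < s < t and s <= 2.0 * (t - s)
```

When \(\gamma = 0\) the integral equals the lower bound exactly, so any gap between the two quantities comes from the weight \(u^{-\gamma }\ge t^{-\gamma }\) on \([s, t]\).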

Proof

For any \(0< s<t<\infty \), we have

To bound the integral \(I_1\), we make a change of variable with \(u=s-(t-s)v\) to obtain

In order to estimate \(I_1\) in Case (i), we distinguish the two cases \(\alpha \in [0, 1/2]\) and \(\alpha \in (- 1/2, 0)\).

When \(\alpha \in [0, 1/2]\), we use the fact that \(3^\alpha - 2^\alpha \le (1+v)^{\alpha }-v^{\alpha } \le 1\) for all \(v \in [0, 2]\) to derive

When \(\alpha \in (- 1/2, 0)\), we use the fact that \(0< v^{\alpha } -(1+v)^{\alpha } <v^{\alpha } \) for all \(v \in [0, 2]\) to derive

In the above, we made a change of variable and the assumption that \(s \le 2(t-s)\).

For the integral \(I_2\) in (3.4), by the change of variable \(u = s + (t -s)v\), we have

On the other hand, in Case (i), \(\big (\frac{s}{t-s} + v\big )^{-\gamma } \ge \big (\frac{t}{t-s} \big )^{-\gamma }\) for all \(v \in [0, 1]\). Hence,

Consequently, the lower bound in (3.2) follows from (3.4) and (3.9), and the upper bound in (3.2) follows from (3.4), (3.6), (3.7), and (3.8).

Now, we consider Case (ii). Since \(s > 2(t-s)\), we write

We will see that the main term is the integral \(I_{1,2}\). For estimating the integral \(I_{1,1}\), again we distinguish the two cases \(\alpha \in [0, 1/2]\) and \(\alpha \in (- 1/2, 0)\).

When \(\alpha \in [0, 1/2]\), we use the facts that \(2^\alpha - 1 \le (1+v)^{\alpha }-v^{\alpha } \le 1\) for all \( v \in [0, 1]\) and

to derive that

When \(\alpha \in (- 1/2, 0)\), we use the fact that \((1-2^\alpha ) v^\alpha \le v^{\alpha } - (1+v)^{\alpha } \le v^{\alpha }\) for \(v \in [0, 1]\) and (3.11) to get

Next, we estimate the integral \(I_{1,2}\). For \(\alpha \in (- 1/2, 1/2]\), we use the inequality \(|(1+v)^{\alpha }-v^{\alpha }| \asymp v^{\alpha - 1}\) for all \(v \in [1, \infty )\) to derive

where the above equality is obtained by the change of variable \(v = \frac{s}{t-s} w\). By splitting the last integral over the intervals \([\frac{t-s}{s}, \frac{3}{4}]\) and \([\frac{3}{4}, 1]\), we have

Combining (3.10)-(3.15) yields that in Case (ii),

It follows from this and (3.4) that

where \(c_{3,3}> 0\) is a finite constant.

On the other hand, it follows from (3.4), (3.8) and (3.16) that

where \(c_{3,4} > 0\) is a finite constant. This finishes the proof of (3.3). \(\square \)

Remark 3.1

The following are some remarks about Lemma 3.1 and some of its consequences.

(i) It follows from Lemma 3.1 that for any \(0< a< b < \infty \) there exist constants \(c_{3,5}, \cdots , c_{3,8}\in (0,\infty )\) such that for \(s,t\in [a, \, b]\), we have that for \(\alpha \in (-1/2,\, 1/2)\),

$$\begin{aligned} c_{3,5}|t-s|^{2\alpha +1} \le {\mathbb {E}}\left[ (Z(t)-Z(s))^2 \right] \le c_{3,6} |t-s|^{2\alpha +1}; \end{aligned} \tag{3.19}$$

for \(\alpha =1/2\),

$$\begin{aligned} \begin{aligned} c_{3,7}|t-s|^2 \left( 1+ \big |\ln |t-s| \big |\right) \le&\, {\mathbb {E}}\left[ (Z(t)-Z(s))^2 \right] \\&\le c_{3,8} |t-s|^2 \left( 1+ \big |\ln |t-s| \big |\right) . \end{aligned} \end{aligned} \tag{3.20}$$

Consequently, the process Z has a modification that is uniformly Hölder continuous on \([a,\, b]\) of order \(\alpha +1/2-\varepsilon \) for all \(\varepsilon >0\). In the proof of Theorem 1.1 below, we will establish an exact uniform modulus of continuity of Z on any interval [a, b] for \(0< a< b < \infty .\)

(ii) By Lemma 2.1, we see that for \(\alpha \in (-1/2 +\gamma /2,\, 1/2]\), the inequalities (3.19) and (3.20) hold for GFBM X. These inequalities can be applied to determine the fractal dimensions of random sets (e.g., range, graph, level set, etc.) generated by the sample path of X. For example, we can derive by using standard covering and capacity methods (cf. [10, 14, 40]) the following Hausdorff dimension results: for any \(T > 0\),

$$\begin{aligned} \dim _{\mathrm{H}} \mathrm{Gr} X([0, T]) = 2 - \Big (\alpha + \frac{1}{2}\Big ) = \frac{3}{2} - \alpha , \quad \hbox { a.s.}, \end{aligned} \tag{3.21}$$

where \(\mathrm{Gr} X([0, T])= \{(t, X(t)): t \in [0, T]\}\) is the graph set of X, and for every \(x \in {\mathbb {R}}\) we have

$$\begin{aligned} \dim _{\mathrm{H}} X^{-1}(x) = 1 - \Big (\alpha + \frac{1}{2}\Big ) = \frac{1}{2} - \alpha \end{aligned} \tag{3.22}$$

with positive probability, where \(X^{-1}(x) = \{t \ge 0: X(t) = x \}\) is the level set of X. We remark that, due to the \(\sigma \)-stability of Hausdorff dimension \(\dim _{\mathrm{H}}\) [10], the asymptotic behavior of X at \(t = 0\) (hence the self-similarity index H) has no effect on (3.21) and (3.22). Later on, we will indicate how more precise results than (3.21) and (3.22) can be established for X; see Remark 7.1 below.

(iii) Let \(\xi = \{\xi (t)\}_{t\ge 0}\) be a centered Gaussian process. If there exists an even, non-negative, and non-decreasing function \(\varphi (h)\) satisfying \(\lim _{h\downarrow 0}h/\varphi (h)=0\) and

$$\begin{aligned} {\mathbb {E}}[(\xi (t+h)-\xi (t))^2]\ge \varphi (h)^2, \ \ \ \ t\ge 0,h\in (0,1), \end{aligned}$$

then by using the argument in Yeh [41], one can prove that the sample functions of \(\xi \) are nowhere differentiable with probability one. See also [15]. Thus, if \(-1/2<\alpha \le 1/2\), then (3.19) and (3.20) imply that the sample paths of the generalized Riemann–Liouville FBM Z are nowhere differentiable in \((0,\infty )\) with probability one.

The next lemma will be used for studying the tangent processes of Z in Proposition 3.1.

Lemma 3.2

Assume \(\alpha \in (-1/2, 1/2)\) and \(\gamma \in [0, 1)\). Then, for every fixed \(t\in (0,\infty )\),

where \(c_{3,9}= \frac{1}{2\alpha +1}+\int _0^{\infty } \big [(1+v)^{\alpha }-v^{\alpha }\big ]^2dv\).

Proof

For simplicity, we consider the limit in (3.23) as \(s\uparrow t\) only. For \(0< s<t<\infty \), recall from (3.4) the decomposition of \({\mathbb {E}}\left[ (Z(t)-Z(s))^2 \right] \).

The term \(I_2\) is easier, and we handle it first. Since \(u^{-\gamma }\in [t^{-\gamma }, s^{-\gamma }]\) for any \(u\in [s,t]\), we obtain that

This implies that

For the integral \(I_1\), we use (3.5) and write it as

Notice that, for every \(v \in \big (0, s/[2(t-s)] \big )\), we have \(s^{-\gamma } \le \left( s-v(t-s) \right) ^{-\gamma } \le 2^\gamma s^{-\gamma }\). Thus, the dominated convergence theorem gives

On the other hand, we use the inequality \(|(1+v)^{\alpha }-v^{\alpha }| \le |\alpha | v^{\alpha - 1}\) for all \(v \ge 1\) to obtain

It follows that

Therefore, (3.23) follows from (3.25), (3.26), (3.27) and (3.28). The proof is complete. \(\square \)

The following lemma deals with the case when \(\alpha > 1/2\) and provides estimates on the second moments of the increments of Z(t) and its mean-square derivative \(Z'(t)\). The latter estimate allows us to show that \(\{Z(t)\}_{ t \ge 0}\) has a modification whose sample functions are continuously differentiable in \((0,\infty )\).

For simplicity, we only consider the case when \(\alpha \in (1/2,\, 3/2)\) and s, t stay away from the origin. This is sufficient for our study of the sample path properties of GFBM X.

Lemma 3.3

Assume \(\alpha \in (1/2,\, 3/2)\) and \(\gamma \in [0, 1)\). For any \(0<a<b<\infty \), it holds that for any \(s,t\in [a,\,b]\),

here, \(c_{3,10}=\frac{\alpha ^2}{1-\gamma } b^{2\alpha -2}a^{1-\gamma } \) and \(c_{3,11}=\alpha ^2 a^{2\alpha -1-\gamma }{\mathcal {B}}(2\alpha -1, 1-\gamma )\).

The process \(\{Z(t)\}_{t \in [a,b]}\) has a modification, which is still denoted by Z, such that its derivative process \(\{Z'(t)\}_{t \in [a, b]}\) is continuous almost surely. Furthermore, there exist constants \(c_{3,12}, c_{3,13}\in (0,\infty )\) such that for any \(s, t\in [a,b]\),

Proof

The proof of (3.29) is similar to that of Lemma 3.1. Here, we only prove the lower bound in (3.29) and the existence of a modification of Z whose sample functions are continuously differentiable on \((0, \infty )\) almost surely.

For any \(s,t\in [a,b]\) with \(s<t\), by (2.3) and (3.4), we have

Thus, the lower bound in (3.29) holds.

For any \(t\ge 0\), define

with \(Z'(0)=0\). Notice that, since \(\alpha \in (1/2, \, 3/2)\), the process \(\left\{ Z'(t)\right\} _{ t\ge 0}\) is a generalized Riemann–Liouville FBM with indices \(\alpha -1\) and \(\gamma \). It is self-similar with index \({\tilde{H}} = \alpha -1/2 -\gamma /2 \). Hence, by (3.19), we see that (3.30) holds. By the Kolmogorov continuity theorem (see, e.g., [16, Theorem C.6]), the Gaussian property of \(Z'\) and the arbitrariness of a and b, we know that \(Z'\) has a modification (still denoted by \(Z'\)) that is Hölder continuous in \((0,\infty )\) with index \(\alpha -1/2-\varepsilon \) for any \(\varepsilon >0\). With this modification, we define a Gaussian process \({\widetilde{Z}} =\big \{{\widetilde{Z}}(t)\big \}_{t \ge 0}\) by

Then, by the stochastic Fubini theorem [16, Corollary 2.9], we derive that for every \(t \ge 0\),

Hence, \({\widetilde{Z}}\) is a modification of the generalized Riemann–Liouville FBM \(Z=\{Z(t)\}_{t \ge 0}\) and the sample functions of \({\widetilde{Z}}\) are a.s. continuously differentiable in \((0,\, \infty )\). The proof is complete. \(\square \)

3.2 The Tangent Process of Z

Let \(C({\mathbb {R}}_+, {\mathbb {R}})\) be the space of all continuous functions from \({\mathbb {R}}_+\) to \({\mathbb {R}}\), endowed with the locally uniform convergence topology. We say that a family of stochastic processes \(V_n = \{V_n(\tau )\}_{\tau \ge 0}\) converges weakly (or in distribution) to \(V = \{V(\tau )\}_{\tau \ge 0}\) in \(C({\mathbb {R}}_+, {\mathbb {R}})\), if \({\mathbb {E}}[f(V_n)]\rightarrow {\mathbb {E}}[f(V)]\) for every bounded, continuous function \(f: C({\mathbb {R}}_+, {\mathbb {R}}) \rightarrow {\mathbb {R}}\) (cf. [7]).

Proposition 3.1

Assume \(\alpha \in (-1/2, 1/2)\) and \(\gamma \in [0, 1)\). For any \(t,\, u>0\), let

Then, as \(u\rightarrow 0+\), \(\big \{V_Z^{t,u}(\tau )\big \}_{\tau \ge 0}\) converges in distribution to \(\big \{c_{3,9}^{1/2} t^{-\gamma /2} B^{\alpha +1/2}(\tau )\big \}_{\tau \ge 0}\) in \(C({\mathbb {R}}_+,{\mathbb {R}})\), where \(c_{3,9}=\frac{1}{2\alpha +1}+\int _0^{\infty } \big [(1+v)^{\alpha }-v^{\alpha }\big ]^2dv\) is the constant in Lemma 3.2.

Proof

By the self-similarity of Z and (3.23), we know that for any \(t, s>0\),

For any \(t>0, \tau _1, \tau _2\ge 0\), by (3.32), we have

Hence, the Gaussian process \(\{V_Z^{t, u}(\tau )\}_{\tau \ge 0}\) converges to \(c_{3,9}^{1/2} t^{-\gamma /2} B^{\alpha +1/2}\) in finite-dimensional distributions, as \(u\rightarrow 0+\), where \(B^{\alpha +1/2}\) is a FBM with index \(\alpha +1/2\). By Lemma 3.1, there exists a constant \(c_{3,14}\in (0,\infty )\) such that for all \(t>0, u>0, \tau _1, \tau _2\ge 0\),

Hence, by [8, Proposition 4.1], we know the family \(\big \{V_Z^{t,u} \big \}_{u>0}\) is tight in \(C({\mathbb {R}}_+, {\mathbb {R}})\) and then it converges in distribution in \(C({\mathbb {R}}_+,{\mathbb {R}})\), as \(u\rightarrow 0+\). The proof is complete. \(\square \)
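For concreteness, the covariance of the limiting process in Proposition 3.1 can be written down explicitly. The sketch below assumes the standard normalization \({\mathbb {E}}[B^{\alpha +1/2}(1)^2]=1\) for the FBM, which is our reading of the statement; the function name `tangent_covariance` and the argument `c39` (a numerical value of \(c_{3,9}\) supplied by the caller) are hypothetical.

```python
def tangent_covariance(tau1: float, tau2: float, t: float,
                       alpha: float, gamma: float, c39: float) -> float:
    """Covariance of c39^{1/2} * t^{-gamma/2} * B^{alpha+1/2} at times tau1, tau2.

    Assumes a standard FBM, i.e. E[B(1)^2] = 1, with Hurst index alpha + 1/2.
    """
    two_h = 2.0 * alpha + 1.0  # twice the Hurst index of the limiting FBM
    fbm_cov = 0.5 * (tau1 ** two_h + tau2 ** two_h - abs(tau1 - tau2) ** two_h)
    return c39 * t ** (-gamma) * fbm_cov
```

In particular, the variance at time \(\tau \) is \(c_{3,9}\, t^{-\gamma }\tau ^{2\alpha +1}\), so the roughness of the limit is governed by \(\alpha +1/2\) while \(\gamma \) only enters through the scale factor \(t^{-\gamma /2}\).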

3.3 One-Sided Strong Local Nondeterminism

We establish the following one-sided strong local nondeterminism (SLND, for short) for Z. This property is essential for dealing with problems that involve joint distributions of random variables \(Z(t_1), \ldots , Z(t_n)\). From the proof, it is clear that GFBM X has the same SLND property.

Proposition 3.2

(a) Assume \(\alpha \in (-1/2, 1/2]\) and \(\gamma \in [0, 1)\). For any constant \(b>0\), it holds that for any \(s, t\in [0,b]\) with \(s<t\),

$$\begin{aligned} \mathrm {Var}(Z(t)|Z(r): r\le s)\ge \frac{1}{( 2\alpha +1)b^{\gamma }} |t-s|^{2\alpha +1}, \end{aligned} \tag{3.35}$$

where \(\mathrm {Var}(Z(t)|Z(r): r\le s)\) denotes the conditional variance of Z(t) given the \(\sigma \)-algebra \(\sigma (Z(r): r\le s)\).

(b) Assume \( \alpha \in (1/2,\, 3/2)\) and \(\gamma \in [0, 1)\). For any \(b>0\), it holds that for any \(s, t\in [0,b]\) with \(s<t\),

$$\begin{aligned} \mathrm {Var}\left( Z'(t)|Z'(r): r\le s\right) \ge \frac{1}{( 2\alpha -1)b^{\gamma }}|t-s|^{2\alpha -1}. \end{aligned} \tag{3.36}$$

Proof

Assume that \(0 \le s< t\le b\). We write Z(t) as

The first term is measurable with respect to \(\sigma \big (B(r):r\le s\big )\), and the second term is independent of \(\sigma \big (B(r):r\le s\big )\). Since \(\sigma \big (Z(r): r\le s\big ) \subseteq \sigma \big (B(r): r\le s\big )\), we have

This implies (3.35).

The proof of (3.36) is similar. The details are omitted. The proof is complete. \(\square \)
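The bound (3.35) can be checked numerically. The sketch below assumes the representation \(Z(t)=\int _0^t (t-u)^{\alpha }u^{-\gamma /2}\,B(du)\) from Section 1 (it is not restated in this section), so that the part of Z(t) independent of \(\sigma (B(r): r\le s)\) has variance \(\int _s^t (t-u)^{2\alpha }u^{-\gamma }\,du\). The function names are hypothetical, and the midpoint rule is only a rough quadrature when \(\alpha <0\) or \(s=0\).

```python
def innovation_variance(s: float, t: float, alpha: float, gamma: float,
                        n: int = 100_000) -> float:
    """Midpoint-rule value of int_s^t (t-u)^(2 alpha) * u^(-gamma) du."""
    h = (t - s) / n
    total = 0.0
    for k in range(n):
        u = s + (k + 0.5) * h  # midpoint of the k-th cell in (s, t)
        total += (t - u) ** (2.0 * alpha) * u ** (-gamma)
    return total * h


def slnd_lower_bound(s: float, t: float, b: float,
                     alpha: float, gamma: float) -> float:
    """Right-hand side of (3.35): |t-s|^(2 alpha+1) / ((2 alpha+1) b^gamma)."""
    return (t - s) ** (2.0 * alpha + 1.0) / ((2.0 * alpha + 1.0) * b ** gamma)
```

For example, with \(\alpha =1/4\), \(\gamma =1/2\), \(s=1/2\) and \(t=b=1\), the integral equals \(\pi /4-1/2\approx 0.285\), which exceeds the bound \((1/2)^{3/2}/(3/2)\approx 0.236\). The inequality comes from replacing \(u^{-\gamma }\) by its minimum \(b^{-\gamma }\) on \([s,t]\).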

4 Lamperti’s Transformation of Z

Inspired by [35], we consider the centered stationary Gaussian process \(U =\{U (t)\}_{t\in {\mathbb {R}}}\) defined through Lamperti’s transformation of Z:

Let \(r_U(t):={\mathbb {E}} \big [U (0)U (t)\big ]\) be the covariance function of U. By Bochner’s theorem, \(r_U\) is the Fourier transform of a finite measure \(F_U\) which is called the spectral measure of U. Notice that \(r_U(t)\) is an even function and

We can verify that \(r_U(t)=O(e^{- t(1-\gamma )/2})\) as \(t\rightarrow \infty \). It follows that \(r_U(\cdot )\in L^1({\mathbb {R}})\). Hence, the spectral measure \(F_U\) has a continuous spectral density function \(f_U\) which can be represented as the inverse Fourier transform of \(r_U(\cdot )\):

It is known that U has the stochastic integral representation:

where W is a complex Gaussian measure with control measure \(F_U\). Then, for any \(s, t \in {\mathbb {R}}\),

The following lemma provides bounds for \( {\mathbb {E}} \left[ \left( U (s) -U (t)\right) ^2\right] \) when Z has rough (fractal) sample paths.

Lemma 4.1

Assume \(\alpha \in (-1/2, 1/2]\). Then, for any \(b > 0\), there exist positive constants \(\varepsilon _0\), \(c_{4,1}\) and \(c_{4,2}\) such that for all \( s, t \in [0, b]\) with \(|s-t| \le \varepsilon _0\),

where \(\eta = 0\) if \(\alpha \in (-1/2, 1/2)\) and \(\eta =1\) if \(\alpha = 1/2.\)

Proof

Since U is stationary, it is sufficient to consider \({\mathbb {E}} \left[ \left( U (t) -U (0)\right) ^2\right] \) for \(t >0\). It follows from (4.1) and the elementary inequality \((x+y)^2 \le 2 (x^2 + y^2)\) that

This, together with Lemma 3.1, implies that the upper bound in (4.5) holds for all \(s, t \in [0, b]\) with \(|s-t| \le \varepsilon _0\) for some positive constant \(\varepsilon _0\).

On the other hand, the first equation in (4.6) and the inequality \((x+y)^2 \ge \frac{1}{2} x^2 - y^2\) imply

It follows from Lemma 3.1 that the lower bounds in (4.5) hold if \(t>0\) is small enough, say, \(0 < t \le \varepsilon _0\). This completes the proof of Lemma 4.1. \(\square \)
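The exponential decay \(r_U(t)=O(e^{-t(1-\gamma )/2})\) used at the beginning of this section can also be checked numerically. The sketch assumes \(U(t)=e^{-Ht}Z(e^{t})\) and \(Z(t)=\int _0^t (t-u)^{\alpha }u^{-\gamma /2}\,B(du)\), which give, for \(t\ge 0\), \(e^{t(1-\gamma )/2}\, r_U(t)=\int _0^1 (1-ue^{-t})^{\alpha }(1-u)^{\alpha }u^{-\gamma }\,du\), since \(H-\alpha =(1-\gamma )/2\). The function names are hypothetical. The substitution \(u=x^{1/(1-\gamma )}\) removes the singularity at \(u=0\); the quadrature is still rough when \(\alpha <0\).

```python
import math


def scaled_covariance(t: float, alpha: float, gamma: float,
                      n: int = 50_000) -> float:
    """Return exp(t(1-gamma)/2) * r_U(t) for t >= 0 by the midpoint rule.

    Uses u = x^p with p = 1/(1-gamma), so that u^(-gamma) du = p dx.
    """
    p = 1.0 / (1.0 - gamma)
    decay = math.exp(-t)
    h = 1.0 / n
    total = 0.0
    for k in range(n):
        u = ((k + 0.5) * h) ** p  # image of the cell midpoint, 0 < u < 1
        total += ((1.0 - u * decay) * (1.0 - u)) ** alpha
    return total * h * p


def beta_fn(a: float, b: float) -> float:
    """Euler Beta function B(a, b) via the Gamma function."""
    return math.gamma(a) * math.gamma(b) / math.gamma(a + b)
```

As \(t\rightarrow \infty \) the scaled covariance tends to \({\mathcal {B}}(\alpha +1, 1-\gamma )\), and at \(t=0\) it equals \({\mathbb {E}}[Z(1)^2]={\mathcal {B}}(2\alpha +1, 1-\gamma )\), so both ends of the range are easy to compare against `beta_fn`.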

The following are truncation inequalities in Loève [22, Page 209] that are expressed in terms of the spectral density function \(f_U\): for any \(u > 0\), we have

By these inequalities, (4.4) and the upper bound in Lemma 4.1, we have the following properties of the spectral density \(f_U(\lambda )\) at the origin and infinity, respectively.

Lemma 4.2

Assume \(\alpha \in (-1/2, 1/2]\). There exist positive constants \(u_0\), \(c_{4,3}\) and \(c_{4,4}\) such that for any \(u >u_0\),

and

In the above, \(\eta = 0\) if \(\alpha \in (-1/2, 1/2)\) and \(\eta =1\) if \(\alpha = 1/2.\)

5 Small Ball Probabilities of Z

By Lemmas 3.1, 3.3 and Proposition 3.2, we prove the following estimates on the small ball probabilities of the Gaussian process Z and its derivative \(Z'\) when it exists.

Proposition 5.1

(a) Assume \(\gamma \in [0, 1)\) and \(\alpha \in (-1/2+\gamma /2,\,1/2)\). There exist constants \(c_{5,1}, \, c_{5, 2}\in (0,\infty )\) such that for all \(r>0, 0<\varepsilon <1\),

$$\begin{aligned} \exp \bigg (- c_{5,1}\Big (\frac{r^{H}}{\varepsilon }\Big )^{\frac{2}{ 2\alpha + 1}}\bigg )\le {\mathbb {P}}\bigg \{\sup _{s\in [0,\, r]}|Z(s)|\le \varepsilon \bigg \} \le \exp \bigg (- c_{5,2}\Big (\frac{r^{H}}{\varepsilon }\Big )^{\frac{2}{ 2\alpha +1}}\bigg ). \end{aligned} \tag{5.1}$$

(b) Assume \(\gamma \in [0, 1)\) and \(\alpha \in (1/2+\gamma /2, 3/2)\). There exist constants \(c_{5,3}, c_{5, 4}\in (0,\infty )\) such that for all \(r>0, 0<\varepsilon <1\),

$$\begin{aligned} \exp \bigg (- c_{5,3}\Big (\frac{r^{{{\tilde{H}}}}}{\varepsilon }\Big )^{\frac{2}{ 2\alpha - 1}}\bigg )\le {\mathbb {P}}\bigg \{\sup _{s\in [0,\, r]}|Z'(s)|\le \varepsilon \bigg \} \le \exp \bigg (- c_{5,4}\Big (\frac{r^{{{\tilde{H}}}}}{\varepsilon }\Big )^{\frac{2}{ 2\alpha -1}}\bigg ), \end{aligned} \tag{5.2}$$

where \({\tilde{H}} = \alpha -\gamma /2-1/2\).

Remark 5.1

The following are two remarks about Proposition 5.1.

- Notice that the case of \(\alpha = 1/2\) is excluded in Proposition 5.1. The reason is that the bounds of the one-sided SLND in Proposition 3.2 and the optimal bounds in (3.3) do not coincide when \(\alpha = 1/2\). The method for proving (5.1) cannot yield optimal upper and lower bounds in the case of \(\alpha = 1/2\).

- In Part (b), we assume that the self-similarity index \({\tilde{H}} = \alpha -\gamma /2-1/2\) of \(Z'\) is positive. When \({\tilde{H}} \le 0\), (5.2) does not hold. In fact, by using \({\mathbb {E}}[Z'(t)^2] = {\mathbb {E}}[Z'(1)^2] \, t^{2{\tilde{H}}}\), one sees that for any \(0 < \tau \le r\),

$$\begin{aligned} {\mathbb {P}}\bigg \{\sup _{s\in [0,\, r]}|Z'(s)|\le \varepsilon \bigg \} \le {\mathbb {P}}\big \{|Z'(\tau )|\le \varepsilon \big \} = {\mathbb {P}}\left\{ |Z'(1)|\le \tau ^{-{\tilde{H}}} \varepsilon \right\} . \end{aligned}$$

If \({\tilde{H}} < 0\), then the last probability goes to 0 as \(\tau \rightarrow 0\). This implies that for all \(r>0\) and \(\varepsilon >0\) we have \({\mathbb {P}}\big \{\sup _{s\in [0,\, r]}|Z'(s)| \le \varepsilon \big \} = 0\).

When \({\tilde{H}} = 0\), the self-similarity implies that the small ball probability in (5.2) does not depend on r. We will let \(r \rightarrow \infty \) to show that this probability is in fact 0 for all \(\varepsilon >0\). To this end, we consider the centered stationary Gaussian process \(V= \{V(s)\}_{s \in {\mathbb {R}}}\) defined by \(V(s) = Z'(e^{s})\), which is the Lamperti transform of \(Z'\), and apply Theorem 5.2 of Pickands [29]. Notice that the covariance function of V,

Then, \(r_V(s) \le 2^{1-\alpha }\, e^{(\alpha - 1)s}\) for all s large enough. Since \(\alpha = \frac{1+\gamma }{2} < 1\), we have \(\lim \limits _{s \rightarrow \infty } r_V(s) \ln s = 0\), so the condition of [29, Theorem 5.2] is satisfied. Hence,

This implies that for all \(\varepsilon >0\),

For proving the lower bound in (5.1), we apply the general lower bound on the small ball probability of Gaussian processes due to Talagrand (cf. Lemma 2.2 of [32]). We will make use of the following reformulation of Talagrand’s lower bound given by Ledoux [19, (7.11)-(7.13) on Page 257].

Lemma 5.1

Let \(\{ Z(t)\}_{t \in S }\) be a separable, real-valued, centered Gaussian process indexed by a bounded set S with the canonical metric \(d_Z(s, t) = ({\mathbb {E}} |Z(s) - Z(t)|^2)^{1/2}\). Let \(N_\varepsilon (S)\) denote the smallest number of \(d_Z\)-balls of radius \(\varepsilon \) needed to cover S. If there is a decreasing function \(\psi : (0, \delta ] \rightarrow (0, \infty )\) such that \(N_\varepsilon (S) \le \psi (\varepsilon )\) for all \(\varepsilon \in (0, \delta ]\) and there are constants \(K_2 \ge K_1 > 1\) such that

for all \(\varepsilon \in (0, \delta ]\), then there is a constant \(K\in (0,\infty )\) depending only on \(K_1\), \(K_2\) and \(d_Z\) such that for all \(u \in (0, \delta )\),

We are ready to prove Proposition 5.1.

Proof

We will only prove (5.1). The proof of (5.2) is the same because \(Z'\) is also a generalized Riemann–Liouville FBM, with indices \(\gamma \in [0, 1)\) and \(\alpha -1 \in (-1/2 + \gamma /2,\, 1/2)\).

By the self-similarity property of Z, we know that (5.1) is equivalent to the following statement: there exist constants \(c_{5,1}, c_{5,2}\in (0,\infty )\) such that for all \(0<\varepsilon <1\),

In order to prove the lower bound in (5.5), we take \(S = [0, 1]\) and apply Lemma 5.1. For any \(\varepsilon \in (0, \, 1)\), we construct a covering of [0, 1] by sub-intervals of \(d_Z\)-radius \(\varepsilon \), which will give an upper bound for \(N_\varepsilon ([0, 1]).\)

Recall that \({\mathbb {E}}\left[ Z(t)^2 \right] = c\, t^{2H}\) for all \(t \ge 0\), where \(H = \alpha - \frac{\gamma }{2} + \frac{1}{2}>0\). Since constants c here and those in Lemma 3.1 can be absorbed by the constants \(c_{5,1}\) and \(c_{5,2}\) in (5.5), without loss of generality we will take these constants to be 1 (otherwise, we consider the processes obtained by dividing Z by the maximum and minimum of these constants, respectively, and prove the upper and lower bounds in (5.5) separately.)

Let \(t_0=0, t_1 = \varepsilon ^{1/H}\). For any \(n \ge 2\), if \(t_{n-1}\) has been defined, we define

It follows from Lemma 3.1 that

Hence, \(d_Z(t_n,\, t_{n-1}) \le \,\varepsilon \) for all \(n \ge 1\).

Since [0, 1] can be covered by the intervals \([t_{n-1}, \, t_n]\) for \(n = 1, 2, \ldots , L_\varepsilon \), where \(L_\varepsilon \) is the largest integer n such that \(t_n \le 1\), we have \(N_\varepsilon ([0, 1]) \le L_\varepsilon +1 \le 2L_\varepsilon \).

In order to estimate \(L_\varepsilon \), we write \(t_n = a_n \varepsilon ^{1/H}\) for all \(n \ge 1\). Then, by (5.6), we have \(a_1=1\),

Let \(\beta = 1 - \frac{\gamma }{2\alpha + 1} = \frac{2H}{2\alpha + 1}\). We claim that there exist positive and finite constants \(c_{5,5} \le 2^{-\gamma /(2H\beta )}\beta ^{1/\beta }\) and \(c_{5,6} \ge 1\) such that

We postpone the proof of (5.9). Let us estimate \(L_\varepsilon \) and prove the lower bound in (5.5) first.

By (5.9), we have

This implies that for all \(\varepsilon \in (0, 1)\),

Since the function \(\psi (\varepsilon )\) satisfies (5.3) with \(K_1=K_2 = 2^{ \frac{2}{2\alpha + 1}}>1,\) we see that the lower bound in (5.5) follows from (5.10) and (5.4) in Lemma 5.1.

Now, we prove (5.9) by using induction. Clearly (5.9) holds for \(n=1\). Assume that it holds for \(n=k\). Then for \(n=k+1\), it follows from (5.8) and (5.9) that

where the last inequality can be checked by using the mean-value theorem and the facts that \(c_{5,6}\ge 1\) and \(0< \beta < 1\). This verifies the upper bound in (5.9). The desired lower bound for \(a_{n+1}\) is derived similarly using the mean-value theorem and the fact that \(c_{5,5} \le 2^{-\gamma /(2H\beta )}\beta ^{1/\beta }\). Hence, the claim (5.9) holds.
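The growth claim (5.9) and the resulting estimate of \(L_\varepsilon \) can be explored numerically. The sketch below assumes that the recursion (5.8) reads \(a_1=1\), \(a_{n+1}=a_n+a_n^{1-\beta }\), which is the form consistent with the constants \(c_{5,5}\le 2^{-\gamma /(2H\beta )}\beta ^{1/\beta }\) and \(c_{5,6}\ge 1\) in the claim; the display itself is not reproduced here, so this is our reading. All function names are hypothetical.

```python
def indices(alpha: float, gamma: float) -> tuple[float, float]:
    """Return (H, beta) with H = alpha - gamma/2 + 1/2, beta = 1 - gamma/(2 alpha+1)."""
    H = alpha - gamma / 2.0 + 0.5
    beta = 1.0 - gamma / (2.0 * alpha + 1.0)
    return H, beta


def covering_sequence(alpha: float, gamma: float, n_max: int) -> list[float]:
    """Return [a_1, ..., a_{n_max}] for the assumed recursion a_{n+1} = a_n + a_n^(1-beta)."""
    _, beta = indices(alpha, gamma)
    a = [1.0]
    for _ in range(n_max - 1):
        a.append(a[-1] + a[-1] ** (1.0 - beta))
    return a


def lower_constant(alpha: float, gamma: float) -> float:
    """Largest admissible c_{5,5}: 2^(-gamma/(2 H beta)) * beta^(1/beta)."""
    H, beta = indices(alpha, gamma)
    return 2.0 ** (-gamma / (2.0 * H * beta)) * beta ** (1.0 / beta)


def covering_count(eps: float, alpha: float, gamma: float) -> int:
    """L_eps: the largest n with t_n = a_n * eps^(1/H) <= 1."""
    H, beta = indices(alpha, gamma)
    scale = eps ** (1.0 / H)
    a, n = 1.0, 0
    while a * scale <= 1.0:
        n += 1                     # t_n <= 1, so n is admissible
        a += a ** (1.0 - beta)     # advance to a_{n+1}
    return n
```

With \(c_{5,6}=1\), one expects \(c_{5,5}\, n^{1/\beta }\le a_n\le n^{1/\beta }\) for every n, and hence \(L_\varepsilon \) of order \(\varepsilon ^{-\beta /H}=\varepsilon ^{-2/(2\alpha +1)}\). This is the exponent appearing in (5.5).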

Next, we prove the upper bound in (5.5). Lemma 3.1 and Proposition 3.2 show that the conditions of Theorem 2.1 of Monrad and Rootzén [27] are satisfied. Hence, the upper bound in (5.1) follows from Theorem 2.1 of [27]. The proof is complete. \(\square \)

Similarly to the proof of Proposition 5.1, we can prove the following estimates on the small ball probabilities for the increments of Z and \(Z'\) at points away from the origin. We will use these estimates to prove Chung’s LILs for Z and \(Z'\). Also, notice that no extra condition of \(\alpha -\gamma /2-1/2>0\) is needed for (b).

Proposition 5.2

(a) Assume \(\alpha \in (-1/2,\,1/2)\). Then, there exist constants \(c_{5,7}, c_{5,8}\in (0,\infty )\) such that for all \(t>0, r\in \big (0,t/2\big )\) and \(\varepsilon \in \left( 0, r^{\alpha +1/2}\right) \),

$$\begin{aligned} \exp \bigg (-c_{5,7}\, r \, t^{-\frac{\gamma }{2\alpha +1}} \varepsilon ^{-\frac{2}{2\alpha +1}}\bigg )&\le {\mathbb {P}}\bigg \{\sup _{|s|\le r}|Z(t+s)-Z(t)|\le \varepsilon \bigg \}\\&\le \exp \bigg (-c_{5,8}\, r\, t^{-\frac{\gamma }{2\alpha +1}} \varepsilon ^{-\frac{2}{2\alpha +1}}\bigg ). \end{aligned} \tag{5.11}$$

(b) Assume \(\alpha \in (1/2,\, 3/2)\). Then, there exist constants \(c_{5,9}, c_{5,10}\in (0,\infty )\) such that for all \(t>0, r\in \big (0,t/2\big )\) and \(\varepsilon \in \left( 0,r^{\alpha -1/2}\right) \),

$$\begin{aligned} \exp \bigg (-c_{5,9}\, r\, t^{-\frac{\gamma }{2\alpha -1}} \varepsilon ^{-\frac{2}{2\alpha -1}}\bigg )&\le {\mathbb {P}}\bigg \{\sup _{|s|\le r}|Z'(t+s)-Z'(t)|\le \varepsilon \bigg \}\\&\le \exp \bigg (-c_{5,10}\, r\, t^{-\frac{\gamma }{2\alpha -1}} \varepsilon ^{-\frac{2}{2\alpha -1}} \bigg ). \end{aligned} \tag{5.12}$$

Proof

(a). Assume \(\alpha \in (-1/2,\,1/2), 0<r<t/2\). Let \(I(t,r) = [t-r, t+r] \). It follows from Lemma 3.1 that

Hence, there exists a constant \(c_{5,11}\in (0, \infty )\) satisfying that for all \(0<\varepsilon < r^{\alpha +1/2}\),

Then, the function \(\psi (\varepsilon )\) satisfies (5.3) with \(K_1=K_2 = 2^{ \frac{2}{2\alpha + 1}}>1\). Hence, the lower bound in (5.11) follows from (5.4) in Lemma 5.1.

Next, Lemma 3.1 and Proposition 3.2 show that the conditions of Theorem 2.1 of Monrad and Rootzén [27] are satisfied. Hence, the upper bound in (5.11) follows from Theorem 2.1 of [27].

(b). As noted earlier, when \(\alpha \in (1/2,\, 3/2)\) the Gaussian process \(\{Z'(t)\}_{t \ge 0}\) is a generalized Riemann–Liouville FBM. Hence, (5.12) follows from (5.11). This finishes the proof. \(\square \)

6 Chung’s Law of the Iterated Logarithm for Z

As applications of small ball probability estimates, Monrad and Rootzén [27], Xiao [38], and Li and Shao [21] established Chung’s LILs for fractional Brownian motions and other strongly locally nondeterministic Gaussian processes with stationary increments. Notice that the generalized Riemann–Liouville FBM Z does not have stationary increments. Here, we will use the small ball probability estimates and the Lamperti transformation in the last two sections to establish Chung’s LIL for Z at points away from the origin when \(\alpha \in (-1/2,\,1/2)\). The case of \(\alpha = 1/2\) is open for Z as well as GFBM X.

Proposition 6.1

Assume \(\alpha \in (-1/2,\,1/2)\). There exists a constant \(c_{6,1}\in (0,\infty )\) such that for every \(t>0\),

To prove Proposition 6.1, we will make use of the following zero-one law, which implies the existence of the limit on the left-hand side of (6.1). Notice that the constant \(c_{6,1}'\) in (6.2) can be 0 or \(\infty \).

Lemma 6.1

Assume \(\alpha \in (-1/2,\,1/2)\). There exists a constant \(c_{6,1}'\in [0,\infty ]\) such that for every \(t > 0\),

Proof

We start with the stationary Gaussian process \(U = \{U(s)\}_{s \in {\mathbb {R}}}\) in (4.3). As in the proof of Lemma 2.1 in [37], we write for every \(n \ge 1\),

Then, the Gaussian processes \(U_n = \{U_n(s)\}_{s \in {\mathbb {R}}},\, n \ge 1 \), are independent and

where the series is a.s. uniformly convergent on every compact interval in \({\mathbb {R}}\). As in the proof of Proposition 2.1, we can verify that every \(U_n(s)\) is a.s. continuously differentiable (or as in [37], we show that \(U_n(s)\) is almost Lipschitz on compact intervals). Therefore, for every \(x\in {\mathbb {R}}\) and \(N\in {\mathbb {N}}\),

Hence, for every \(x \in {\mathbb {R}} \) and \(c\ge 0\), the event

is a tail event with respect to the sequence \(\{U_n\}_{n \ge 1}\) of independent processes. By Kolmogorov’s zero-one law, we have \({\mathbb {P}}(E_c) = 0\) or 1. Let \(c_{6,1}' = \inf \{c \ge 0: {\mathbb {P}}(E_c) = 1\}\), with the convention that \(\inf \emptyset = \infty \). Then, \(c_{6,1}'\in [0,\infty ]\) and

Moreover, \(c_{6,1}'\) does not depend on x because of the stationarity of U.

Next, we use (6.3) and Lamperti’s transformation to show (6.2). For any \(t > 0\) fixed, \(0< r < t\) and \(|s|\le r\), we write

Since the first term is Lipschitz in \(s \in [-r, r]\) and \(\ln \big ( 1 + \frac{s}{t} \big ) \sim s/t\) as \(s \rightarrow 0\), it can be verified that (6.2) follows from (6.4) and (6.3) with \(x = \ln t\). \(\square \)

It follows from Lemma 6.1 that Proposition 6.1 will be established if we show \(c_{6,1}' \in (0, \infty )\). This is where Propositions 3.2, 5.2, Lemma 4.2 and the following version of Fernique’s lemma from [13, Lemma 1.1, p.138] are needed.

Lemma 6.2

Let \(\{\xi (t)\}_{t\ge 0}\) be a separable, centered, real-valued Gaussian process. Assume that

for some continuous nondecreasing function \(\varphi \) with \(\varphi (0)=0\). For any positive integer \(k>1\) and any positive constants t, x and \(\theta (p), p\in {\mathbb {N}}\), we have

We now give the proof of Proposition 6.1.

Proof of Proposition 6.1

Without loss of generality, we may assume \(t>1\). We first prove the lower bound. For any integer \(n \ge 1\), let \(r_n = e^{-n}\). Let \(0< \delta < c_{5,8}\) be a constant and consider the event

Proposition 5.2 implies that for any \(n\in {\mathbb {N}}\),

Since \(\sum _{n=1}^{\infty } {\mathbb {P}}\{A_n\} < \infty \), the Borel–Cantelli lemma implies

It follows from (6.6) and a standard monotonicity argument that

for some constant \(c_{6,2}\in (0,\infty )\) which is independent of \(t>0\).
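The summability of \({\mathbb {P}}\{A_n\}\) comes from a short computation with the exponent in (5.11). The sketch below assumes that the threshold in \(A_n\) is \(\delta ^{\alpha +1/2}\, t^{-\gamma /2}\, r_n^{\alpha +1/2}\big (\ln \ln (1/r_n)\big )^{-(\alpha +1/2)}\), the same normalization that appears in (6.13); the display defining \(A_n\) is not reproduced here, so this is our reading, and the function names are hypothetical. Under this assumption the exponent equals \((c/\delta )\ln n\) for \(r_n=e^{-n}\), so the upper bound in (5.11) gives \({\mathbb {P}}\{A_n\}\le n^{-c_{5,8}/\delta }\), which is summable exactly when \(\delta <c_{5,8}\).

```python
import math


def h(r: float, alpha: float) -> float:
    """h(r) = r^(alpha+1/2) * (ln ln(1/r))^(-(alpha+1/2)), defined for 0 < r < 1/e."""
    return (r / math.log(math.log(1.0 / r))) ** (alpha + 0.5)


def small_ball_exponent(c: float, r: float, t: float, eps: float,
                        alpha: float, gamma: float) -> float:
    """Exponent c * r * t^(-gamma/(2 alpha+1)) * eps^(-2/(2 alpha+1)) from (5.11)."""
    q = 2.0 * alpha + 1.0
    return c * r * t ** (-gamma / q) * eps ** (-2.0 / q)


def tail_exponent(n: int, c: float, delta: float, t: float,
                  alpha: float, gamma: float) -> float:
    """Exponent in the bound for P(A_n) with r_n = e^(-n), n >= 2 (assumed threshold)."""
    r = math.exp(-n)
    eps = delta ** (alpha + 0.5) * t ** (-gamma / 2.0) * h(r, alpha)
    return small_ball_exponent(c, r, t, eps, alpha, gamma)
```

The powers of t cancel in this computation, which is why the resulting constant \(c_{6,2}\) does not depend on t.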

The upper bound is a little more difficult to prove due to the dependence structure of Z. In order to create independence, as in Tudor and Xiao [35], we will make use of the following stochastic integral representation of Z:

where \(H=\alpha -\gamma /2+1/2\). This follows from the spectral representation (4.3) of U.

For every integer \(n \ge 1\), we take

It is sufficient to prove that there exists a finite constant \( c_{{6,3}}\) such that

Let us define two Gaussian processes \(Z_n\) and \({\widetilde{Z}}_n\) by

and

respectively. Clearly, \(Z(t) = Z_n(t) + {\widetilde{Z}}_n(t)\) for all \(t >0\). It is important to note that the Gaussian processes \(Z_n\, (n = 1, 2, \ldots )\) are independent and, moreover, for every \(n \ge 1,\) \(Z_n\) and \({\widetilde{Z}}_n\) are independent as well.

Denote \(h(r) = r^{\alpha +1/2}\, \big (\ln \ln (1/r)\big )^{-(\alpha +1/2)}\). We make the following two claims:

(i) There is a constant \(\delta > 0\) such that

$$\begin{aligned} \sum _{n=1}^\infty {\mathbb {P}}\bigg \{\sup _{|s|\le t_n} \big |Z_n(t+s)-Z_n(t)\big | \le \delta ^{\alpha +1/2}\,t^{-\gamma /2}\, h(t_n)\bigg \} = \infty . \end{aligned} \tag{6.13}$$

(ii) For every \(\varepsilon > 0\),

$$\begin{aligned} \sum _{n=1}^\infty {\mathbb {P}}\bigg \{\sup _{|s| \le t_n} \big |{\widetilde{Z}}_n(t+s)-{\widetilde{Z}}_n(t)\big | > \varepsilon \,t^{-\gamma /2}\, h(t_n)\bigg \} < \infty . \end{aligned} \tag{6.14}$$

Since the events in (6.13) are independent, we see that (6.10) follows from (6.13), (6.14) and a standard Borel–Cantelli argument.

It remains to verify the claims (i) and (ii) above. By Proposition 5.2 and Anderson’s inequality [2], we have

Hence, (i) holds for \(\delta \ge c_{5,7}\).

In order to prove (ii), we divide it into two terms: For any \(|s|<t_n\),

The second term is easy to estimate: For any \(|s|\le t_n\), there exists \(c_{6,4}\in (0,\infty )\) satisfying that

where the last inequality follows from (4.9).

For the first term \({\mathcal {J}}_1\), we use the following elementary inequalities:

\(1 - \cos x \le x^2\) for all \(x\in {\mathbb {R}}\) and \(\ln (1+x)\le x\) for all \(x\ge 0\) to derive that for any \(|s|\le t_n\),

Here, \(c_{6,5}\) is in \((0,\infty )\). By (4.8) and (4.9), and the fact that \(2n\ge (2\alpha +1)(n+1/2-\alpha )\) for any \(\alpha \in (-1/2+\gamma /2, 1/2)\), we have

for some constant \(c_{6,6}\in (0,\infty )\).

Put \(\delta =(2\alpha +1)(1/2-\alpha )\). By Lemma 3.3, (6.16), (6.17) and (6.18), there exists a constant \(K>0\) such that for \(0\le h\le t_n\),

Put \(x_n=(8\ln n)^{1/2}\). Given \(\varepsilon >0\), define

For large enough n, we have

and

Since

by applying Lemma 6.2 with \(\xi (s)={{\widetilde{Z}}}_n(t\pm s)-{{\widetilde{Z}}}_n(t)\) for \(0\le s\le t_n\), we obtain that

This proves (6.14) and hence Proposition 6.1. \(\square \)

Remark 6.1

In light of Proposition 6.1, it is natural to study Chung’s LIL of Z at the origin. While doing so, we found that there is an error in the proof of Theorem 3.1 in Tudor and Xiao [35], which gives Chung’s LIL for bifractional Brownian motion at the origin. More precisely, the inequality (3.30) in [35] does not hold. Hence, Theorem 3.1 in [35] should be modified as a Chung’s LIL at \(t>0\), which is similar to Theorem 1.4 and Proposition 6.1 in the present paper.

It turns out that Chung’s LIL at the origin for self-similar Gaussian processes that do not have stationary increments such as GFBM X, the generalized Riemann–Liouville FBM Z, and bifractional Brownian motion is quite subtle. A different method than that in the proof of Proposition 6.1 or that in [35] is needed for proving the desired upper bound. Recently, this problem has been studied in the subsequent paper [36] for GFBM X by modifying the arguments of Talagrand [33].

Similarly, for \(\alpha \in (1/2,\, 3/2)\), we have the following Chung’s LIL for \(Z'\).

Proposition 6.2

Assume \(\alpha \in (1/2,\, 3/2)\). There exists a constant \(c_{6,7} \in (0,\infty )\) such that for any \(t>0\),

Proof

Recall from (3.31) that, for \(\alpha \in (1/2,\, 3/2)\), the derivative process \(\{Z'(t)\}_{ t \ge 0}\) is a generalized Riemann–Liouville FBM with indices \(\alpha '=\alpha -1\in (-1/2,\ 1/2)\) and \(\gamma \in (0,1)\). Hence, the proof of (6.19) follows the same lines as the proof of Proposition 6.1. We omit the details. \(\square \)

7 Proofs of the Main Theorems

In this section, we prove our main results for GFBM X stated in Section 1.

7.1 Proof of Theorem 1.1

(a) Assume that \(\alpha \in (-1/2+\gamma /2,1/2)\). By Proposition 2.1, we know that for any \(0<a<b<\infty \), Y(t) is continuously differentiable on [a, b]. Then,

By (1.17), to prove (1.5), it is sufficient to prove that for any \(0<a<b<\infty \),

where \(c_{7,1}\) is a positive constant satisfying

Here, \(c_{3,5},\, c_{3,6}\) are constants given in (3.19).

For any \(\varepsilon >0\), let

Since \(\varepsilon \mapsto J(\varepsilon )\) is non-decreasing, the limit in the left-hand side of (7.1) exists almost surely. Moreover, the zero-one law for the uniform modulus of continuity in [24, Lemma 7.1.1] implies that the limit in (7.1) is a constant almost surely. Hence, it remains to prove that with probability one,

and

The proof of (7.3) is standard. It follows from (3.19) and the metric-entropy bound (cf. Theorem 1.3.5 in [1]), or one may prove this directly by applying Fernique’s inequality in Lemma 6.2 and the Borel–Cantelli lemma. The lower bound in (7.4) follows from the one-sided SLND (3.35) and Theorem 4.1 in [26]. This proves (a).

(b) When \(\alpha =1/2\), we see that (3.20) holds for all \(s, t \in [a, b]\). This implies that the canonical metric of Z satisfies

on [a, b]. Hence, for any \(\varepsilon > 0\) small, the covering number \(N_\varepsilon ([a, b])\) of [a, b] under \(d_Z\) satisfies

where \(c_{7,4}>0\) is a finite constant. It follows from Theorem 1.3.5 in [1] that almost surely for all \(\delta > 0\) small enough,

Notice that, by (3.20), \(d_Z(t, t+h) \le \delta \) is comparable to \(|h| \sqrt{\ln |h|^{-1}} \le \delta \), up to a constant factor. Hence, (7.6) implies that

Now, it can be seen that (1.6) follows from (7.7).

(c). The proof of (1.7) is similar: the uniform modulus of continuity of \(X'\) on [a, b] is the same as that of \(Z'\) on [a, b], and the latter can be derived from (3.30) in Lemma 3.3 and the one-sided SLND (3.36) in Proposition 3.2. We omit the details here. The proof is complete. \(\square \)

7.2 Proofs of Theorems 1.2 and 1.3

Similarly to the proof of Proposition 5.1, by using Lemmas 2.1 and 5.1, we obtain the following lower bounds for the small ball probabilities of Y.

Lemma 7.1

(a) Assume \(\alpha \in (-1/2+\gamma /2, 1/2+\gamma /2)\). Then, there exists a constant \(c_{7,5}\in (0, \infty )\) such that for all \(r>0, \varepsilon >0\),

$$\begin{aligned} {\mathbb {P}}\bigg \{\sup _{0\le s\le r} |Y(s)|\le \varepsilon \bigg \} \ge \exp \bigg (- c_{7,5} \, {r^{H}}\, \varepsilon ^{-1} \bigg ). \end{aligned} \tag{7.8}$$

(b) Assume \(\alpha \in (-1/2+\gamma /2, 1/2+\gamma /2)\). Then, there exists a constant \(c_{7,6}\in (0,\infty )\) such that for all \(t>0, 0<\varepsilon<r<t/2\),

$$\begin{aligned} {\mathbb {P}}\bigg \{\sup _{|s|\le r} |Y(t+s)-Y(t)|\le \varepsilon \bigg \} \ge \exp \bigg (- c_{7,6} \, r \, t^{H-1} \, \varepsilon ^{-1}\bigg ). \end{aligned} \tag{7.9}$$

Proof of Theorem 1.2

By the self-similarity of X, we know that (1.9) is equivalent to the following: For any \(0<\varepsilon <1\),

By (5.1), (7.8) and the independence of Y and Z, we have

where we have used the facts that \(\frac{1}{\varepsilon }\le \left( \frac{1}{\varepsilon } \right) ^{\frac{1}{\alpha +1/2}}\) as \(\varepsilon \in (0,1)\) and \(\alpha <1/2\). This proves the lower bound in (7.10).

On the other hand, by using the Anderson inequality [2] and (5.1), we have

This proves the upper bound in (7.10). The proof is complete. \(\square \)

Similarly to the proof of Theorem 1.2, by using Proposition 5.2 and Lemma 7.1, we can prove Theorem 1.3. The details are omitted.

7.3 Proof of Theorem 1.4

By Proposition 2.1, Y(t) is continuously differentiable on [a, b] for any \(0< a< b< \infty \). Hence, Chung’s LIL for X (or \(X'\) when it exists) at a fixed point \(t > 0\) is the same as that for Z (or \(Z'\)) at t. Therefore, (1.12) and (1.13) follow from (6.1) and (6.19), respectively. \(\square \)

Remark 7.1

The small ball probabilities in Theorems 1.2 and 1.3 and the one-sided SLND in Proposition 3.2 can be applied as in [38] to refine the results in (3.21) and (3.22) by determining the exact Hausdorff measure functions for the graph and level set of GFBM X. We refer to Talagrand [32, 34] for further ideas on investigating other related fractal properties of X.

7.4 Proof of Theorem 1.5 and the time inversion of X

Proof of Theorem 1.5

As in the proof of Theorem 1.4, it is sufficient to prove (1.14)-(1.16) for Z and \(Z'\), respectively.

First, we consider the case when \(\alpha \in (-1/2+\gamma /2,1/2)\). It follows from (6.4) that for any fixed \(t > 0\),

where \(x = \ln t\). By Lemma 4.1, we see that the Gaussian process \(\{U(s) - U(0)\}_{s \in {\mathbb {R}}} \) satisfies the conditions of Theorem 5.1 in [26] with \(N=1\) and \(\sigma (s, t) \asymp |s-t|^{\alpha +1/2}\) on any compact interval of \((0, \infty )\). Consequently, there exists a constant \(\kappa _{12} \in (0, \infty )\) which does not depend on x such that

Combining (7.11) and (7.12) yields (1.14) for Z.

When \(\alpha = 1/2\), we see from Lemma 4.1 that Condition (A1) in [26] is satisfied with \(N=1\) and \(\sigma (s, t) \asymp |s -t| \sqrt{1 + \big |\ln |s-t| \big |}\) on any compact interval of \((0, \infty )\). This case is not explicitly covered by Theorem 5.1 in [26]. However, an examination of its proof shows that we have

for a constant \(\kappa _{13} \in (0, \infty )\) that does not depend on x. Therefore, by combining (7.11) and (7.13) we obtain (1.15) for Z.

Finally, when \(\alpha \in (1/2, \, 1/2+\gamma /2)\), the proof of (1.16) for \(Z'\) is similar to that of (1.14) for Z given above. There is no need to repeat it. The proof of Theorem 1.5 is complete. \(\square \)

The following result is the time inversion property of GFBM X. It allows us to improve slightly Theorem 6.1 in [11], where the law of the iterated logarithm of X at the origin was proved under the extra condition of \(\alpha >0\).

Proposition 7.1

Let \(X=\{X(t)\}_{t\ge 0}\) be a GFBM with parameters \(\gamma \in (0, 1)\) and \(\alpha \in (-1/2+\gamma /2, 1/2+\gamma /2)\). Define the Gaussian process \({{\widetilde{X}}}=\{{{\widetilde{X}}}(t)\}_{t\ge 0}\) by

Then, X and \({{\widetilde{X}}}\) have the same distribution. Consequently, there exists a constant \(\kappa _{15} \in (0,\infty )\) such that

Proof

Since X and \({{\widetilde{X}}}\) are centered Gaussian processes, it is sufficient to check their covariance functions. For any \(0< s\le t\),

where a change of variable \(u=v/(st)\) is used in the second step. This proves the first part of Proposition 7.1.
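For the reader's convenience, the change of variable can be sketched as follows. This is only an illustration under two assumptions that are not restated in this section: that X has the Pang and Taqqu representation \(X(t) = \int _{{\mathbb {R}}} \big ((t-u)_+^{\alpha } - (-u)_+^{\alpha }\big ) |u|^{-\gamma /2} B(du)\) (normalizing constant omitted), and that the time-inverted process has the usual self-similar form \({{\widetilde{X}}}(t) = t^{2H} X(1/t)\) with \(2H = 2\alpha - \gamma + 1\).

```latex
% Sketch under the assumptions stated above; not a verbatim copy of the authors' display.
\begin{align*}
{\mathbb E}\big[\widetilde{X}(s)\widetilde{X}(t)\big]
&= (st)^{2H} \int_{\mathbb R}
   \Big(\big(\tfrac{1}{s}-u\big)_+^{\alpha} - (-u)_+^{\alpha}\Big)
   \Big(\big(\tfrac{1}{t}-u\big)_+^{\alpha} - (-u)_+^{\alpha}\Big)
   |u|^{-\gamma}\, du \\
&= (st)^{2H} \int_{\mathbb R}
   \frac{\big((t-v)_+^{\alpha} - (-v)_+^{\alpha}\big)
         \big((s-v)_+^{\alpha} - (-v)_+^{\alpha}\big)}{(st)^{2\alpha}}
   \, (st)^{\gamma} |v|^{-\gamma}\, \frac{dv}{st} \\
&= \int_{\mathbb R}
   \big((s-v)_+^{\alpha} - (-v)_+^{\alpha}\big)
   \big((t-v)_+^{\alpha} - (-v)_+^{\alpha}\big)
   |v|^{-\gamma}\, dv
 = {\mathbb E}\big[X(s)X(t)\big].
\end{align*}
```

In the second line, \(u = v/(st)\) gives \(\tfrac{1}{s}-u = (t-v)/(st)\), \(\tfrac{1}{t}-u = (s-v)/(st)\) and \(du = dv/(st)\), so the total power of \(st\) inside the integral is \(-2\alpha + \gamma - 1 = -2H\), which cancels the prefactor.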

The time inversion property, together with the LIL of X at infinity in [11, Theorem 5.1], implies (7.14). The proof is complete. \(\square \)
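The change of variable \(u=v/(st)\) used in the proof can also be sanity-checked numerically at the level of integrands. The sketch below is illustrative only. It assumes the kernel \((t-u)_+^{\alpha } - (-u)_+^{\alpha }\) with weight \(|u|^{-\gamma }\) (normalizing constant omitted) and the inverted process \(t^{2H}X(1/t)\); the function names are hypothetical and do not come from the paper.

```python
def kernel(t, u, alpha):
    """Return (t-u)_+^alpha - (-u)_+^alpha, with x_+^alpha := 0 for x <= 0."""
    def pos_pow(x):
        return x ** alpha if x > 0 else 0.0
    return pos_pow(t - u) - pos_pow(-u)


def cov_integrand(s, t, u, alpha, gamma):
    """Integrand of E[X(s)X(t)] under the assumed representation (constant omitted)."""
    return kernel(s, u, alpha) * kernel(t, u, alpha) * abs(u) ** (-gamma)


def inverted_integrand(s, t, v, alpha, gamma):
    """Integrand of the inverted covariance after u = v/(st), Jacobian 1/(st) included."""
    hurst = alpha - gamma / 2 + 0.5  # self-similarity index H
    st = s * t
    return st ** (2 * hurst) * cov_integrand(1 / s, 1 / t, v / st, alpha, gamma) / st


def max_relative_gap(s, t, alpha, gamma, points):
    """Largest relative difference between the two integrands over the given points."""
    gap = 0.0
    for v in points:
        a = cov_integrand(s, t, v, alpha, gamma)
        b = inverted_integrand(s, t, v, alpha, gamma)
        scale = max(abs(a), abs(b), 1e-300)  # guard against division by zero
        gap = max(gap, abs(a - b) / scale)
    return gap


if __name__ == "__main__":
    sample_points = [-5.0, -1.3, -0.2, 0.1, 0.35, 0.6, 1.0]
    print(max_relative_gap(0.7, 1.9, -0.2, 0.4, sample_points))
```

Since the two integrands agree pointwise (up to rounding), their integrals over \({\mathbb {R}}\) agree as well, which is the covariance identity behind the first part of Proposition 7.1. The check avoids numerical quadrature on purpose, because the integrands are singular at \(u=0\) and, for \(\alpha <0\), at \(u=s\) and \(u=t\).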

7.5 Proof of Theorem 1.6

By Proposition 2.1, Y(t) is continuously differentiable in \((0,\infty )\). Hence, for every \(\alpha \in (-1/2+\gamma /2, 1/2)\), \(t>0\) and for every compact set \({\mathcal {K}}\subset {\mathbb {R}}_+\),

Therefore, X has the same tangent process as that of Z at \(t\in (0,\infty )\), and Theorem 1.6 follows from Proposition 3.1. \(\square \)

Notes

We thank the referee for raising this interesting question.

References

Adler, R.J., Taylor, J.E.: Random Fields and Geometry. Springer, New York (2007)

Anderson, T.W.: The integral of a symmetric unimodal function over a symmetric convex set and some probability inequalities. Proc. Amer. Math. Soc. 6, 170–176 (1955)

Arcones, M.A.: On the law of the iterated logarithm for Gaussian processes. J. Theoret. Probab. 8, 877–903 (1995)

Bardet, J.-M., Surgailis, D.: Measuring the roughness of random paths by increment ratios. Bernoulli 17(2), 749–780 (2011)

Bardet, J.-M., Surgailis, D.: Nonparametric estimation of the local Hurst function of multifractional Gaussian processes. Stoch. Process. Appl. 123(3), 1004–1045 (2013)

Benassi, A., Jaffard, S., Roux, D.: Elliptic Gaussian random processes. Rev. Mat. Iberoamericana 13, 19–90 (1997)

Billingsley, P.: Convergence of Probability Measures, 2nd edn. Wiley, New York (1999)

Falconer, K.J.: Tangent fields and the local structure of random fields. J. Theoret. Probab. 15(3), 731–750 (2002)

Falconer, K.J.: The local structure of random processes. J. London Math. Soc. 67(3), 657–672 (2003)

Falconer, K.J.: Fractal Geometry: Mathematical Foundations and Applications, 3rd edn. Wiley, Chichester (2014)

Ichiba, T., Pang, G.D., Taqqu, M.S.: Path properties of a generalized fractional Brownian motion. J. Theoret. Probab. (2021). https://doi.org/10.1007/s10959-020-01066-1

Ichiba, T., Pang, G.D., Taqqu, M.S.: Semimartingale properties of a generalized fractional Brownian motion and its mixtures with applications in finance (2020). arXiv:2012.00975

Jain, N.C., Marcus, M.B.: Continuity of sub-Gaussian processes. Probability on Banach spaces. Adv. Probab. Relat. Top. 4, 81–196 (1978)

Kahane, J.-P.: Some Random Series of Functions, 2nd edn. Cambridge University Press, Cambridge (1985)

Kawada, T., Kôno, N.: A remark on nowhere differentiability of sample functions of Gaussian processes. Proc. Jpn. Acad. 47, 932–934 (1971)

Khoshnevisan, D.: Analysis of Stochastic Partial Differential Equations. CBMS Regional Conference Series in Mathematics, 119. American Mathematical Society (2014)

Klüppelberg, C., Kühn, C.: Fractional Brownian motion as a weak limit of Poisson shot noise processes with applications to finance. Stoch. Process. Appl. 113, 333–351 (2004)

Lachal, A.: Local asymptotic classes for the successive primitives of Brownian motion. Ann. Probab. 25, 1712–1734 (1997)

Ledoux, M.: Isoperimetry and Gaussian analysis. Lectures on probability theory and statistics (Saint-Flour, 1994). Lecture Notes in Math., 1648, 165–294. Springer-Verlag, Berlin (1996)

Lévy, P.: Random functions: general theory with special references to Laplacian random functions. Univ. Calif. Publ. Stat. 1, 331–390 (1953)

Li, W.V., Shao, Q.-M.: Gaussian processes: inequalities, small ball probabilities and applications. In: Rao, C.R., Shanbhag, D. (eds.) Stochastic Processes: Theory and Methods. Handbook of Statistics, vol. 19, pp. 533–597. North-Holland (2001)

Loève, M.: Probability Theory I. Springer, New York (1977)

Mandelbrot, B., Van Ness, J.: Fractional Brownian motions, fractional noises and applications. SIAM Rev. 10(4), 422–437 (1968)

Marcus, M.B., Rosen, J.: Markov Processes, Gaussian Processes, and Local Times. Cambridge University Press, Cambridge (2006)

Marinucci, D., Robinson, P.M.: Alternative forms of fractional Brownian motion. J. Statist. Plann. Inference 80, 111–122 (1999)

Meerschaert, M., Wang, W., Xiao, Y.: Fernique-type inequalities and moduli of continuity for anisotropic Gaussian random fields. Trans. Amer. Math. Soc. 365(2), 1081–1107 (2013)

Monrad, D., Rootzén, H.: Small values of Gaussian processes and functional laws of the iterated logarithm. Probab. Theory Relat. Fields 101, 173–192 (1995)

Pang, G.D., Taqqu, M.S.: Nonstationary self-similar Gaussian processes as scaling limits of power-law shot noise processes and generalizations of fractional Brownian motion. High Freq. 2(2), 95–112 (2019)

Pickands, J., III.: Maxima of stationary Gaussian processes. Z. Wahrsch. Verw. Gebiete 7, 190–223 (1967)

Pipiras, V., Taqqu, M.S.: Long-Range Dependence and Self-Similarity. Cambridge University Press, Cambridge (2017)

Skorniakov, V.: On a covariance structure of some subset of self-similar Gaussian processes. Stoch. Process. Appl. 129(6), 1903–1920 (2019)

Talagrand, M.: Hausdorff measure of trajectories of multiparameter fractional Brownian motion. Ann. Probab. 23, 767–775 (1995)

Talagrand, M.: Lower classes of fractional Brownian motion. J. Theoret. Probab. 9, 191–213 (1996)

Talagrand, M.: Multiple points of trajectories of multiparameter fractional Brownian motion. Probab. Theory Relat. Fields 112, 545–563 (1998)

Tudor, C.A., Xiao, Y.: Sample path properties of bifractional Brownian motion. Bernoulli 13, 1023–1052 (2007)

Wang, R., Xiao, Y.: Lower functions and Chung’s LILs of the generalized fractional Brownian motion (2021). arXiv:2105.03613

Wang, W., Su, Z., Xiao, Y.: The moduli of non-differentiability for Gaussian random fields with stationary increments. Bernoulli 26, 1410–1430 (2020)

Xiao, Y.: Hölder conditions for the local times and the Hausdorff measure of the level sets of Gaussian random fields. Probab. Theory Relat. Fields 109, 129–157 (1997)

Xiao, Y.: Strong local nondeterminism and the sample path properties of Gaussian random fields. In: Lai, T.L., Shao, Q., Qian, L. (eds.) Asymptotic Theory in Probability and Statistics with Applications, pp. 136–176. Higher Education Press, Beijing (2007)

Xiao, Y.: Sample path properties of anisotropic Gaussian random fields. In: Khoshnevisan, D., Rassoul-Agha, F. (eds.) A Mini-course on Stochastic Partial Differential Equations. Lecture Notes in Math., vol. 1962, pp. 145–212. Springer, New York (2009)

Yeh, J.: Differentiability of sample functions in Gaussian processes. Proc. Amer. Math. Soc. 18(1), 105–108 (1967). Correction in Proc. Amer. Math. Soc. 19(4), 843 (1968)

Acknowledgements

The authors are grateful to the anonymous referees for their constructive comments and corrections which have led to significant improvement of this paper. Part of the research in this paper was conducted during R. Wang’s visit to Michigan State University (MSU). He thanks the Department of Statistics and Probability at MSU for their hospitality, and is thankful for the financial support from the CSC Fund, NNSFC 11871382, and the Fundamental Research Funds for the Central Universities 2042020kf0031. The research of Y. Xiao is partially supported by NSF grant DMS-1855185.

Cite this article

Wang, R., Xiao, Y. Exact Uniform Modulus of Continuity and Chung’s LIL for the Generalized Fractional Brownian Motion. J Theor Probab 35, 2442–2479 (2022). https://doi.org/10.1007/s10959-021-01148-8

DOI: https://doi.org/10.1007/s10959-021-01148-8

Keywords

- Gaussian self-similar process

- Generalized fractional Brownian motion

- Exact uniform modulus of continuity

- Small ball probability

- Chung’s LIL

- Tangent process

- Lamperti’s transformation