Abstract

A function on the state space of a Markov chain is a “lumping” if observing only the function values gives a Markov chain. We give very general conditions for lumpings of a large class of algebraically defined Markov chains, which include random walks on groups and other common constructions. We specialise these criteria to the case of descent operator chains from combinatorial Hopf algebras, and, as an example, construct a “top-to-random-with-standardisation” chain on permutations that lumps to a popular restriction-then-induction chain on partitions, using the fact that the algebra of symmetric functions is a subquotient of the Malvenuto–Reutenauer algebra.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Combinatorialists have built a variety of frameworks for studying Markov chains algebraically, most notably the theories of random walks on groups [18, 62] and their extensions to monoids [10, 14, 16]. Further examples include [23, 32, 54]. These approaches usually associate some algebraic operator to the Markov chain, the advantage being that the eigendata of the operator reflects the convergence rates of the chains. As an easy example, consider n playing cards laid in a row on a table, and imagine exchanging two randomly chosen cards at each time step. [24] represents this random transposition shuffle as multiplication by the sum of transpositions in the group algebra of the symmetric group, and deduces from the representation theory of the symmetric group that asymptotically \(\frac{1}{2}n\log n\) steps are required to randomise the order of the cards. See Example 2.1 for more details of the setup.

Analogous to how these frameworks translated the convergence rate calculation into an algebraic question of representations and characters, the present paper gives very general algebraic conditions for a different probability problem: when is a function \(\theta \) of an algebraic Markov chain \(\{X_{t}\}\) a Markov function, meaning that the sequence of random variables \(\{\theta (X_{t})\}\) is itself a Markov chain? The motivation for this is that often, only certain functions of Markov chains are of interest. For example, the random transposition shuffle above may be in preparation for a card game that only uses the cards on the left (Example 2.11), or where only the position of one specific card is important (Example 2.9). One naturally suspects that randomising only half the cards or only the position of one card may take fewer than \(\frac{1}{2}n\log n\) moves, as these functions can become randomised before the full chain does. The convergence rates of such functions of Markov chains are generally easier to analyse when the function is Markov, as the lumped chain\(\{\theta (X_{t})\}\) can be studied independently of the full chain \(\{X_{t}\}\). The reverse problem is also interesting: a Markov chain \(X'_{t}\) that is hard to analyse directly may benefit from being viewed as \(\{\theta (X_{t})\}\) for a more tractable “lift” chain \(\{X_{t}\}\). [17, 25, 28] are examples of this idea.

The aim of this paper is to expedite the search for lumpings and lifts of “algebraic” Markov chains by giving very general conditions for their existence. As formalised in Sect. 2.2, the chains under consideration are associated with a linear transformation \(T:V\rightarrow V\), where the state space is a basis \(\mathscr {B}\) of the vector space V. Our two main discoveries for such chains are:

-

(Section 2.3, Theorem 2.7) if T descends to a well-defined map \(\bar{T}\) on a quotient space \(\bar{V}\) of V that “respects the basis” \(\mathscr {B}\), then the quotient projection \(\theta :V\rightarrow \bar{V}\) gives a lumping from any initial distribution on \(\mathscr {B}\). The lumped chain is associated with \(\bar{T}:{\bar{V}}\rightarrow {\bar{V}}\).

-

(Section 2.4, Theorem 2.16) if V contains a T-invariant subspace \(V'\) that “respects the basis” \(\mathscr {B}\), then \(T:V'\rightarrow V'\) corresponds to a lumping that is only valid for certain initial distributions, i.e. a weak lumping.

Section 2 states and proves the above very general theorems, and illustrates them with numerous simple examples, both classical and new.

Section 3 specialises these general lumping criteria to descent operator chains on combinatorial Hopf algebras [54]—in essence, lumpings from any initial distribution correspond to quotient algebras, and weak lumpings to subalgebras. This is applied to two fairly elaborate examples. Sections 3.2, 3.3, 3.4, 3.5 demystifies a theorem of Jason Fulman [31, Th. 3.1]: the probability distribution of the RSK shape [64, Sec. 7.11] [33, Sec. 4] of a permutation, after t top-to-random shuffles (Example 2.1) from the identity, agrees with the probability distribution of a partition after t steps of a certain Markov chain that removes then re-adds a random box (see Sect. 3.2.1). Fulman remarked that the connection between these two chains was “surprising”, perhaps because it is not a lumping (see the start of Sect. 3). Here, we use the new lumping criteria for descent operator chains to construct a similar chain to top-to-random shuffling that does lump to the chain on partitions, and prove that its probability distribution after t steps from the identity agrees with that of top-to-random.

The second application, in Sect. 3.6, is a Hopf-algebraic re-proof of a result of Christos Athanasiadis and Persi Diaconis [9, Ex. 5.8], that riffle-shuffles and related card shuffles lump via descent set.

2 General Theory

2.1 Matrix Notation

Given a matrix A , let A(x, y) denote its entry in row x, column y, and write \(A^{T}\) for the transpose of A.

Let V be a vector space (over \(\mathbb {R}\)) with basis \(\mathscr {B}\), and \(\mathbf {T}:V\rightarrow V\) be a linear map. Write \(\left[ \mathbf {T}\right] _{\mathscr {B}}\) for the matrix of \(\mathbf {T}\) with respect to \(\mathscr {B}\) . In other words, the entries of \(\left[ \mathbf {T}\right] _{\mathscr {B}}\) satisfy

for each \(x\in \mathscr {B}\).

\(V^{*}\) is the dual vector space to V, the set of linear functions from V to \(\mathbb {R}\). Its natural basis is \(\mathscr {B}^*:=\left\{ x^{*}|x\in \mathscr {B}\right\} \), where \(x^{*}\) satisfies \(x^{*}(x)=1\), \(x^{*}(y)=0\) for all \(y\in \mathscr {B}\), \(y\ne x\). The dual map to \(\mathbf {T}:V\rightarrow V\) is the linear map \(\mathbf {T}^{*}:V^{*}\rightarrow V^{*}\) satisfying \((\mathbf {T}^{*}f)(v)=f(\mathbf {T}v)\) for all \(v\in V,f\in V^{*}\). Dualising a linear map is equivalent to transposing its matrix: \(\left[ \mathbf {T}^{*}\right] _{\mathscr {B}^*}=\left[ \mathbf {T}\right] _{\mathscr {B}}^{T}\).

2.2 Markov Chains from Linear Maps via the Doob Transform

To start, here is a quick summary of the Markov chain facts required for this work. A (discrete time) Markov chain is a sequence of random variables \(\{X_{t}\}\), where each \(X_{t}\) belongs to the state space\(\varOmega \). All Markov chains here are time-independent and have a finite state space. Hence, they are each described by an \(|\varOmega |\)-by-\(|\varOmega |\)transition matrixK: for any time t,

(Here, \(P\{X|Y\}\) is the probability of event X given event Y.) If the probability distribution of \(X_{t}\) is expressed as a row vector \(g_{t}\), then taking one step of the chain is equivalent to multiplication by K on the right: \(g_{t}=g_{t-1}K\). (Some authors, notably [10], take the opposite convention, where \(P\{X_{t}=y|X_{t-1}=x\}:=K(y,x)\), and the distribution of \(X_{t}\) is represented by a column vector \(f_{t}\) with \(f_{t}=Kf_{t-1}\).) Note that a matrix K specifies a Markov chain in this manner if and only if \(K(x,y)\ge 0\) for all \(x,y\in \varOmega \), and \(\sum _{y\in \varOmega }K(x,y)=1\) for each \(x\in \varOmega \). A probability distribution \(\pi :\varOmega \rightarrow \mathbb {R}\) is a stationary distribution if it satisfies \(\sum _{x\in \varOmega }\pi (x)K(x,y)=\pi (y)\) for each state \(y\in \varOmega \). We refer the reader to the textbooks [43, 47] for more background in Markov chain theory.

This paper concerns chains which arise from linear maps. A simple motivating example is a random walk on a group. Given a probability distribution Q on a group G (i.e. a function \(Q:G\rightarrow \mathbb {R}\)), consider the following Markov chain on the state space \(\varOmega =G\): at each time step, choose a group element g with probability Q(g), and move from the current state x to the state xg. This chain is associated with the “right multiplication by \(\sum _{g\in G}Q(g)g\)” operator on the group algebra \(\mathbb {R}G\), i.e. to the linear transformation \(\mathbf {T}:\mathbb {R}G\rightarrow \mathbb {R}G\), \(\mathbf {T}(x):=x\left( \sum _{g\in G}Q(g)g\right) \). To state the relationship more precisely: the transition matrix of the Markov chain is the transpose of the matrix of \(\mathbf {T}\) relative to the basis G of \(\mathbb {R}G\), i.e. \(K=[\mathbf {T}]_{G}^{T}\). (One can define similar chains from left-multiplication operators.)

Before generalising this connection to other linear operators, here are some simple examples of random walks on groups that we will use to illustrate lumpings in later sections.

Example 2.1

(Card shuffling) Random walks on \(G=\mathfrak {S}_n\), the symmetric group, describe many examples of card shuffling. The state space of these chains are the n! possible orderings of a deck of n cards. For convenience, suppose the cards are labelled \(1,2,\ldots ,n\), each label occurring once. There are various different conventions on how to represent such an ordering by a permutation, see [68, Sec. 2.2]. We follow the more modern notation in [8] (as opposed to [6, 11]) and associate \(\sigma \) to the ordering where \(\sigma (1)\) is the label of the top card, \(\sigma (2)\) is the label of the second card from the top, ..., \(\sigma (n)\) is the label of the bottom card. In other words, writing \(\sigma \) in one-line notation \((\sigma (1),\sigma (2),\ldots ,\sigma (n))\) (see Sect. 3.1) lists the card labels from top to bottom. Observe that in this convention, right-multiplication by a permutation \(\tau \) moves the card in position \(\tau (i)\) to position i.

Two simple shuffles that we will consider are:

-

top-to-random [6, 19]: remove the top card, then reinsert it into the deck at one of the n possible positions, chosen uniformly. A possible trajectory for a deck of five cards is

The associated distribution Q on \(\mathfrak {S}_n\) is

$$\begin{aligned} Q(g)={\left\{ \begin{array}{ll} \frac{1}{n} &{} \text{ if } g=(i\ i-1\ \ldots \ 1) \text{ for } \text{ some } i\text{, } \text{ in } \text{ cycle } \text{ notation };\\ 0 &{} \text{ otherwise. } \end{array}\right. } \end{aligned}$$(Note that the identity permutation is the case \(i=1\).) Equivalently, the associated linear map is right-multiplication by

$$\begin{aligned} q=\frac{1}{n}\sum _{i=1}^{n}(i\ i-1\ \ldots \ 1). \end{aligned}$$This Markov chain has been thoroughly analysed over the literature: [7, Sec. 1, Sec. 2] uses a strong uniform time to elegantly show that roughly \(n\log n\) iterations are required to randomise the deck, and [19, Cor. 2.1] finds the explicit probabilities of achieving a particular permutation after any given number of shuffles. The time-reversal of the top-to-random shuffle is the equally well-studied Tsetlin library [67]: [41, Sec. 4.6] describes an explicit algorithm for an eigenbasis, [56] derives the spectrum for a weighted version, and [30] lists many more references.

-

random-transposition [18, 24, Chap. 3D]: choose two cards, possibly with repetition, uniformly and independently. If the same card was chosen twice, do nothing. Otherwise, exchange the two chosen cards. A possible trajectory for a deck of five cards is

The associated distribution Q on \(\mathfrak {S}_n\) is

$$\begin{aligned} Q(g)={\left\{ \begin{array}{ll} \frac{1}{n} &{} \text{ if } g \text{ is } \text{ the } \text{ identity };\\ \frac{2}{n^{2}} &{} \text{ if } g \text{ is } \text{ a } \text{ transposition; }\\ 0 &{} \text{ otherwise. } \end{array}\right. } \end{aligned}$$Equivalently, the associated linear map is right-multiplication by

$$\begin{aligned} q=\frac{1}{n}{{\mathrm{id}}}+\frac{2}{n}\sum \sigma ; \end{aligned}$$summing over all transpositions \(\sigma \). The mixing time for the random-transposition shuffle is \(\frac{1}{2}n\log n\), as shown in [24] using the representation theory of the symmetric group. [20] uses this chain to induce Markov chains on trees and on matchings. A recent extension to random involutions is [13].

Example 2.2

(Flip a random bit) [46]: Let \(G=\left( \mathbb {Z}/2\mathbb {Z}\right) ^{d}\), written additively as binary strings of length d. At each time step, uniformly choose one of the d bits, and change it either from 0 to 1 or from 1 to 0. A possible trajectory for \(d=5\) is

The associated distribution Q is

Equivalently, the associated linear map is right-multiplication by

(The addition in q is in the group algebra \(\mathbb {R}G\), not within the group G.)

[46] investigated the return probabilities of this walk and similar walks on \(\left( \mathbb {Z}/2\mathbb {Z}\right) ^{d}\) that allow changing more than one bit.

As detailed above, the transition matrix of a random walk on a group is \(K=[\mathbf {T}]_{G}^{T}\), where \(\mathbf {T}\) is the right-multiplication operator on the group algebra \(\mathbb {R}G\) defined by \(\mathbf {T}(x):=x\left( \sum _{g\in G}Q(g)g\right) \). This relationship between K and \(\mathbf {T}\) allows the representation theory of G to illuminate the converge rates of the chain. A naive generalisation is to declare new transition matrices to be \(K:=[\mathbf {T}]_{\mathscr {B}}^{T}\), for other linear transformations \(\mathbf {T}\) on a vector space with basis \(\mathscr {B}\). The state space of such a chain is the basis \(\mathscr {B}\), and the intuition is that the transition probabilities K(x, y) would represent the chance of obtaining y when applying \(\mathbf {T}\) to x.

In order for \(K:=[\mathbf {T}]_{\mathscr {B}}^{T}\) to be a transition matrix, we require \(K(x,y)\ge 0\) for all x, y in \(\mathscr {B}\), and \(\sum _{y\in \mathscr {B}}K(x,y)=1\). As noted by Persi Diaconis (personal communication), the non-negativity condition can be achieved by adding multiples of the identity transformation to \(\mathbf {T}\), which essentially keeps the eigendata properties in Theorem 2.3. In any case, many linear operators arising from combinatorics already have non-negative coefficients with respect to natural bases, so we do not dwell on this problem.

The row-sum condition \(\sum _{y\in \mathscr {B}}K(x,y)=1\), though true for many important cases [10, 14], is less guaranteed. There are many possible ways to adjust K so that its row sums become 1. One way which preserves the relationship between the eigendata of \(\mathbf {T}\) and the convergence rates of the chain is to rescale \(\mathbf {T}\) and the basis \(\mathscr {B}\) using the Doob h-transform.

The Doob h-transform is a very general tool in probability, used to condition a process on some event in the future [26]. The simple case of relevance here is conditioning a (finite, discrete time) Markov chain on non-absorption. The Doob transform constructs the transition matrix of the conditioned chain out of the transition probabilities of the original chain between non-absorbing states, or, equivalently, out of the original transition matrix with the rows and columns for absorbing states removed. As observed in the multiple references below, the same recipe essentially works for any arbitrary non-negative matrix K.

The Doob transform relies on a positive right eigenfunction\(\eta \) of K, i.e. a positive function \(\eta :\mathscr {B}\rightarrow \mathbb {R}\) satisfying \(\sum _{y}K(x,y)\eta (y)=\beta \eta (x)\) for some positive number \(\beta \), which is the eigenvalue. (Functions satisfying this condition with \(\beta =1\) are called harmonic, hence the name h-transform.) To say this in a basis-independent way, recall that \(K=[\mathbf {T}]_{\mathscr {B}}^{T}=\left[ \mathbf {T}^{*}\right] _{\mathscr {B}^*}\), so \(\eta \) (or more accurately, its linear extension in \(V^{*}\)) is an eigenvector of the dual map \(\mathbf {T}^{*}:V^{*}\rightarrow V^{*}\) with eigenvalue \(\beta \), i.e. \(\eta \circ T=\beta \eta \) as functions on V.

Theorem 2.3

(Markov chains from linear maps via the Doob h-transform) [44, Def. 8.11, 8.12] [47, Sec. 17.6.1] [69, Lem. 4.4.1] [66, Lem. 1.4, Lem. 2.11] Let V be a finite-dimensional vector space with basis \(\mathscr {B}\), and \(\mathbf {T}:V\rightarrow V\) be a linear map for which \(K:=\left[ \mathbf {T}\right] _{\mathscr {B}}^{T}\) has all entries non-negative. Suppose K has a positive right eigenfunction \(\eta \) with eigenvalue \(\beta >0\). Then

-

i.

The matrix

$$\begin{aligned} \check{K}(x,y):=\frac{1}{\beta }K(x,y)\frac{\eta (y)}{\eta (x)} \end{aligned}$$is a transition matrix. Equivalently, \(\check{K}:=\left[ \frac{\mathbf {T}}{\beta }\right] _{\check{\mathscr {B}}}^{T}\), where \(\check{\mathscr {B}}:=\left\{ \frac{x}{\eta (x)}:x\in \mathscr {B}\right\} \).

-

ii.

The left eigenfunctions \(\mathbf {g}\) for \(\check{K}\), with eigenvalue \(\alpha \) (i.e. \(\sum _{x}\mathbf {g}(x)K(x,y)=\alpha \mathbf {g}(y)\)), are in bijection with the eigenvectors \(g\in V\) of \(\mathbf {T}\), with eigenvalue \(\frac{\alpha }{\beta }\), via

$$\begin{aligned} \mathbf {g}(x):=\eta (x)\times \text{ coefficient } \text{ of } x \text{ in } g. \end{aligned}$$ -

iii.

The stationary distributions \(\pi \) for \(\check{K}\) are precisely the functions of the form

$$\begin{aligned} \pi (x):=\eta (x)\frac{\xi _{x}}{\sum _{x\in \mathscr {B}}\xi _{x}\eta (x)}, \end{aligned}$$where \(\sum _{x\in \mathscr {B}}\xi _{x}x\in V\) is an eigenvector of \(\mathbf {T}\) with eigenvalue 1, whose coefficients \(\xi _{x}\) are all non-negative.

-

iv

The right eigenfunctions \(\mathbf {f}\) for \(\check{K}\), with eigenvalue \(\alpha \) (i.e. \(\sum _{y}K(x,y)\mathbf {f}(y)=\alpha \mathbf {f}(x)\)), are in bijection with the eigenvectors \(f\in V^{*}\)of the dual map \(\mathbf {T}^{*}\), with eigenvalue \(\frac{\alpha }{\beta }\), via

$$\begin{aligned} \mathbf {f}(x):=\frac{1}{\eta (x)}f(x). \end{aligned}$$

The function \(\eta :V\rightarrow \mathbb {R}\) above is called the rescaling function. The output \(\check{K}\) of the transform depends on the choice of rescaling function. Observe that, if \(K:=\left[ \mathbf {T}\right] _{\mathscr {B}}^{T}\) already has each row summing to 1, then the constant function \(\eta \equiv 1\) on \(\mathscr {B}\) is a positive right eigenfunction of K with eigenvalue 1, and using this constant rescaling function results in no rescaling at all: \(\check{K}=K\). This will be the case in all examples in Sects. 2.3, 2.4, so the reader may wish to skip the remainder of this section on first reading, and assume \(\eta \equiv 1\) on \(\mathscr {B}\) in all theorems. (Note that \(\eta \equiv 1\) on \(\mathscr {B}\) does not mean \(\eta \) is constant on V, since \(\eta \) is a linear function. Instead, \(\eta \) sends a vector v in V to the sum of the coefficients when v is expanded in the \(\mathscr {B}\) basis.)

Proof

To prove i, first note that \(\check{K}(x,y)\ge 0\) because \(\beta >0\) and \(\eta (x)>0\) for all x. And the rows of \(\check{K}\) sum to 1 because

Parts ii and iv are immediate from the definition of \(\check{K}\). To see part iii, recall that a stationary distribution is precisely a positive left eigenfunction with eigenvalue 1 (normalised to be a distribution).\(\square \)

Example 2.4

To illustrate the Doob transform, here is the down–up chain on partitions of size 3. (See Sect. 3.2.1 for a general description, and an interpretation in terms of restriction and induction of representations of symmetric groups.)

Let \(V_{3}\) be the vector space with basis \(\mathscr {B}_{3}\), consisting of the three partitions of size 3:

We define below a second vector space \(V_{2}\), and linear transformations \(\mathbf {D}:V_{3}\rightarrow V_{2}\), \(\mathbf {U}:V_{2}\rightarrow V_{3}\) whose composition \(\mathbf {T}=\mathbf {U}\circ \mathbf {D}\) will define our Markov chain on \(\mathscr {B}_{3}\).

\(V_{2}\) is the vector space with basis \(\mathscr {B}_{2}\), the two partitions of size 2

For \(x\in \mathscr {B}_{3}\), define \(\mathbf {D}(x)\) to be the sum of all elements of \(\mathscr {B}_{2}\) which can be obtained from x by deleting a box on the right end of any row. So

Then, for \(x\in \mathscr {B}_{2}\), define \(\mathbf {U}(x)\) to be the sum of all elements of \(\mathscr {B}_{3}\) which can be obtained from x by adding a new box on the right end of any row, including on the row below the last row of x. So

An easy calculation shows that

which has all entries non-negative, but its rows do not sum to 1.

The function \(\eta :\mathscr {B}_{3}\rightarrow \mathbb {R}\) defined by

is a right eigenfunction of K with eigenvalue \(\beta =3\). So applying the Doob transform with this choice of rescaling function amounts to dividing every entry of K by 3, then dividing the middle row by 2 and multiplying the middle column by 2, giving

This is a transition matrix as its rows sum to 1. Observe that \(\check{K}=\left[ \frac{1}{3}\mathbf {U}\circ \mathbf {D}\right] _{\check{\mathscr {B}}_{3}}^{T}\), where

2.3 Quotient Spaces and Strong Lumping

As remarked in the introduction, sometimes only certain features of a Markov chain is of interest, that is, we wish to study a process \(\{\theta (X_{t})\}\) rather than \(\{X_{t}\}\), for some function \(\theta \) on the state space. The process \(\{\theta (X_{t})\}\) is called a lumping (or projection), because it groups together states with the same image under \(\theta \), treating them as a single state. The analysis of a lumping is easiest when \(\{\theta (X_{t})\}\) is itself a Markov chain. If this is true regardless of the initial state of the full chain \(\{X_{t}\}\), then the lumping is strong; if it is dependent on the initial state, the lumping is weak. [43, Sec. 6.3, 6.4] is a very thorough exposition on these topics.

This section focuses on strong lumping; the next section will handle weak lumping.

Definition 2.5

(Strong lumping) Let \(\{X_{t}\},\{\bar{X}_{t}\}\) be Markov chains on state spaces \(\varOmega ,\bar{\varOmega }\), respectively, with transition matrices \(K,{\bar{K}}.\) Then \(\{\bar{X}_{t}\}\) is a strong lumping of\(\{X_{t}\}\)via\(\theta \) if there is a surjection \(\theta :\varOmega \rightarrow \bar{\varOmega }\) such that the process \(\{\theta (X_{t})\}\) is a Markov chain with transition matrix \({\bar{K}}\), irrespective of the starting distribution \(X_{0}\). In this case, \(\{X_{t}\}\) is a strong lift of\(\{\bar{X}_{t}\}\)via\(\theta \).

The following necessary and sufficient condition for strong lumping is known as Dynkin’s criterion:

Theorem 2.6

(Strong lumping for Markov chains) [43, Th. 6.3.2] Let \(\{X_{t}\}\) be a Markov chain on a state space \(\varOmega \) with transition matrix K, and let \(\theta :\varOmega \rightarrow \bar{\varOmega }\) be a surjection. Then \(\{X_{t}\}\) has a strong lumping via \(\theta \) if and only if, for every \(x_{1},x_{2}\in \varOmega \) with \(\theta (x_{1})=\theta (x_{2})\), and every \(\bar{y}\in \bar{\varOmega }\), the transition probability sums satisfy

The lumped chain has transition matrix

for any x with \(\theta (x)=\bar{x}\). \(\square \)

When the chain \(\{X_{t}\}\) arises from linear operators via the Doob h-transform, Dynkin’s criterion translates into the statement below regarding quotient operators.

Theorem 2.7

(Strong lumping for Markov chains from linear maps) [52, Th. 3.4.1] Let V be a vector space with basis \(\mathscr {B}\), and \(\mathbf {T}:V\rightarrow V,\eta :V\rightarrow \mathbb {R}\) be linear maps allowing the Doob transform Markov chain construction of Theorem 2.3. Let \({\bar{V}}\) be a quotient space of V, and denote the quotient map by \(\theta :V\rightarrow {\bar{V}}\). Suppose that

-

1.

the distinct elements of \(\{\theta (x):x\in \mathscr {B}\}\) are linearly independent, and

-

2.

\(\mathbf {T},\eta \) descend to maps on \({\bar{V}}\) - that is, there exists \(\bar{\mathbf {T}}:{\bar{V}}\rightarrow {\bar{V}}\), \(\bar{\eta }:{\bar{V}}\rightarrow \mathbb {R}\), such that \(\theta \circ \mathbf {T}=\bar{\mathbf {T}}\circ \theta \) and \(\bar{\eta }\circ \theta =\eta \).

Then, the Markov chain defined by \(\bar{\mathbf {T}}\) (on the basis \(\bar{\mathscr {B}}:=\{\theta (x):x\in \mathscr {B}\}\), with rescaling function \(\bar{\eta }\)) is a strong lumping via \(\theta \) of the Markov chain defined by \(\mathbf {T}\).

In the simplified case where \(\eta \equiv 1\) on \(\mathscr {B}\) and \(\beta =1\) (so no rescaling is required to define the chain on \(\mathscr {B}\)), such as for random walks on groups, taking \(\bar{\eta }\equiv 1\) on \(\bar{\mathscr {B}}\) satisfies \(\bar{\eta }\circ \theta =\eta \), so condition 2 reduces to a condition on \(\mathbf {T}\) only, and the lumped chain also does not require rescaling.

In the general case, the idea of the proof is that \(\theta \circ \mathbf {T}=\bar{\mathbf {T}}\circ \theta \) is essentially equivalent to Dynkin’s criterion for the unscaled matrices \(K:=[\mathbf {T}]_{\mathscr {B}}^{T}\) and \({\bar{K}}:=[\bar{\mathbf {T}}]_{\bar{\mathscr {B}}}^{T}\), and this turns out to imply Dynkin’s criterion for the Doob-transformed transition matrices.

Proof

Let \(K=[\mathbf {T}]_{\mathscr {B}}^{T}\), \({\bar{K}}=[\bar{\mathbf {T}}]_{\bar{\mathscr {B}}}^{T}\), and let \(\beta \) be the eigenvalue of \(\eta \). The first step is to show that \(\bar{\eta }\) is a possible rescaling function for \(\bar{\mathbf {T}}\), i.e. \(\bar{\eta }\) is an eigenvector of \(\bar{\mathbf {T}}^{*}\) with eigenvalue \(\beta \), taking positive values on \(\bar{\mathscr {B}}\). In other words, the requirement is that \([\bar{\mathbf {T}}^{*}(\bar{\eta })]v=\beta \bar{\eta }v\) for every \(v\in {\bar{V}}\), and \(\bar{\eta }(v)>0\) if \(v\in \bar{\mathscr {B}}\). Since \(\theta :V\rightarrow {\bar{V}}\) and its restriction \(\theta :\mathscr {B}\rightarrow \bar{\mathscr {B}}\) are both surjective, it suffices to verify the above two conditions for \(v=\theta (x)\) with \(x\in V\) and with \(x\in \mathscr {B}\), respectively.

Now

And, for \(x\in \mathscr {B}\), we have \(\bar{\eta }(\theta (x))=\eta (x)>0\).

Now let \(\check{K},\check{\bar{K}}\) denote the transition matrices that the Doob transform constructs from K and \({\bar{K}}\). (Strictly speaking, we do not yet know that the entries of \({\bar{K}}\) are non-negative—this will be proved in Eq. (2.1) below—but the formula in the definition of the Doob transform remains well-defined nevertheless.) By Theorem 2.6 above, it suffices to show that, for any \(x\in \mathscr {B}\) with \(\theta (x)={\bar{x}}\), and any \({\bar{y}}\in \bar{\mathscr {B}}\),

By definition of the Doob transform, this is equivalent to

Because \(\bar{\eta }\theta =\eta \), the desired equality reduces to

Now expand both sides of \(\bar{\mathbf {T}}\circ \theta (x)=\theta \circ \mathbf {T}(x)\) in the \(\bar{\mathscr {B}}\) basis:

Equating coefficients of \({\bar{y}}\) on both sides completes the proof.\(\square \)

Example 2.8

(Forget the last bit under “flip a random bit”) Take \(G=\left( \mathbb {Z}/2\mathbb {Z}\right) ^{d}\), the additive group of binary strings of length d, as in Example 2.2. Then \({\bar{G}}=\left( \mathbb {Z}/2\mathbb {Z}\right) ^{d-1}\) is a quotient group of G, by forgetting the last bit. The quotient map \(\theta :G\rightarrow {\bar{G}}\) induces a surjective map \(\theta :\mathbb {R}G\rightarrow \mathbb {R}{\bar{G}}\).

Recall that the “flip a random bit” chains comes from the linear transformation on \(\mathbb {R}G\) of right-multiplication by \(q=\frac{1}{d}\Big ((1,0,\ldots ,0)+(0,1,0,\ldots ,0)+\cdots +(0,\ldots ,0,1)\Big )\). Since multiplication of group elements descends to quotient groups, forgetting the last bit is a lumping, and the lumped chain is associated with right-multiplication in \(\mathbb {R}{\bar{G}}\) by the image of q in \(\mathbb {R}{\bar{G}}\), which is \(\frac{1}{d}\Big ((1,0,\ldots ,0)+(0,1,0,\ldots ,0)+\ldots +(0,\ldots ,0,1)+(0,\ldots ,0)\Big )\), where there are d summands each of length \(d-1\).

The analogous construction holds for any quotient \({\bar{G}}\) of any group G; see [8, App. IA].

The above principle extends to “quotient sets”, i.e. a set of coset representatives, which need not be groups (and is extended further to double-coset representatives in [25]):

Example 2.9

(“Follow the ace of spaces” under shuffling) Consider \(G=\mathfrak {S}_n\), and let \(H=\mathfrak {S}_{n-1}\) be the subgroup of \(\mathfrak {S}_n\) which permutes the last \(n-1\) objects. Then the transpositions \(\tau _{i}:=(1\ i)\), for \(2\le i\le n\), together with \(\tau _{1}:={{\mathrm{id}}}\), give a set of right coset representatives of H. The coset \(H\tau _{i}\) consists of all deck orderings where the card with label 1 is in the ith position from the top. Recall that right-multiplication is always well defined on the set of right cosets, so any card-shuffling model lumps by taking right cosets. This corresponds to tracking only the location of the card with label 1. [8, Sec. 2] analyses this chain in detail for the “riffle-shuffles” of [11].

The example of lumping to coset representatives can be further generalised to a framework concerning orbits under group actions; notice in the phrasing below that the theorem applies to more than random walks on groups.

Theorem 2.10

(Strong lumping to orbits under group actions) Let V be a vector space with basis \(\mathscr {B}\), and \(\mathbf {T}:V\rightarrow V,\eta :V\rightarrow \mathbb {R}\) be linear maps allowing the Doob transform Markov chain construction of Theorem 2.3. Let \(\{s_{i}:\mathscr {B}\rightarrow \mathscr {B}\}\) be a group of maps whose linear extensions to V commute with \(\mathbf {T}\), and which satisfies \(\eta \circ s_{i}=\eta \). Then the Markov chain defined by \(\mathbf {T}\) lumps to a chain on the \(\{s_{i}\}\)-orbits of \(\mathscr {B}\).

This theorem recovers Example 2.9 above, of lumping a random walk on a group to right cosets, by letting \(s_{i}\) be left-multiplication by i, as i ranges over the subgroup H. Since \(s_{i}\) is left-multiplication and \(\mathbf {T}\) is right-multiplication, they obviously commute. Hence any right-multiplication random walk on a group lumps via taking right cosets.

Proof

Let \(\bar{\mathscr {B}}\) be the sets of \(\{s_{i}\}\)-orbits of \(\mathscr {B}\), and let \(\theta :\mathscr {B}\rightarrow \bar{\mathscr {B}}\) send an element of \(\mathscr {B}\) to its orbit. Let \({\bar{V}}\) be the vector space spanned by \(\bar{\mathscr {B}}\). Then \(\mathbf {T}\) descends to a well-defined map on \({\bar{V}}\) because of the following: if \(\theta (x)=\theta (y)\), then \(x=s_{i}(y)\) for some \(s_{i}\), so \(\mathbf {T}(x)=\mathbf {T}\circ s_{i}(y)=s_{i}\circ \mathbf {T}(y)\) (using \(s_{i}\) to denote the linear extension in this last expression), and so \(\mathbf {T}(x)\) and \(\mathbf {T}(y)\) are in the same orbit. And the condition \(\eta \circ s_{i}=\eta \) ensures that \(\bar{\eta }\) is well defined on the \(\{s_{i}\}\)-orbits. \(\square \)

Below are two more specialisations of Theorem 2.10 that hold for random walks on any group as long as the element being multiplied is in the centre of the group algebra; we illustrate them with card-shuffling examples.

Example 2.11

(Values of the top k cards under random-transposition shuffling) This simple example appears to be new. Recall that the random-transposition shuffle corresponds to right-multiplication by \(q=\frac{1}{n}{{\mathrm{id}}}+\frac{2}{n}\sum _{i<j}(i\ j)\) on \(\mathbb {R}\mathfrak {S}_n\). Because q is a sum over all elements in two conjugacy classes, it is in the centre of \(\mathbb {R}\mathfrak {S}_n\), hence right-multiplication by q commutes with any other right-multiplication operator. Let \(s_{i}:\mathfrak {S}_n\rightarrow \mathfrak {S}_n\) be right-multiplication by \(i\in \mathfrak {S}_{n-k}\), the subgroup of \(\mathfrak {S}_n\) which only permutes the last \(n-k\) objects. Then Theorem 2.10 implies that the random-transposition shuffle lumps to the orbits under this action, which are the left-cosets of \(\mathfrak {S}_{n-k}\) (see also Example 2.9). The coset \(\tau \mathfrak {S}_{n-k}\) consists of all decks whose top k cards are \(\tau (1),\tau (2),\ldots ,\tau (k)\) in that order (i.e. all decks that can be obtained from the identity by first applying \(\tau \) and then permuting the bottom \(n-k\) cards in any way). Hence the lumped chain tracks the values of the top k cards.

Example 2.12

(Coagulation–fragmentation) As noted in [20, Sec. 1.5], the following chain is one specialisation of the processes in [29], modelling the splitting and recombining of molecules.

Recall that the random-transposition shuffle corresponds to right-multiplication by the central element \(q=\frac{1}{n}{{\mathrm{id}}}+\frac{2}{n}\sum _{i<j}(i\ j)\) on \(\mathbb {R}\mathfrak {S}_n\). Let \(s_{i}:\mathfrak {S}_n\rightarrow \mathfrak {S}_n\) be conjugation by the group element i, for all i in \(\mathfrak {S}_n\). This conjugation action commutes with right-multiplication by q:

where the second equality uses that q is central. So Theorem 2.10 implies that the random-transposition shuffle lumps to the orbits under this conjugation action, which are the conjugacy classes of \(\mathfrak {S}_n\). Each conjugacy class of \(\mathfrak {S}_n\) consists precisely of the permutations of a specific cycle type, and so can be labelled by the multiset of cycle lengths, a partition of n (see Sect. 3.1). These cycle lengths represent the sizes of the molecules. As described in [21], right-multiplication by a transposition either joins two cycles or breaks a cycle into two, corresponding to the coagulation or fragmentation of molecules.

Our final example shows that the group \(\{s_{i}\}\) inducing the lumping of a random walk on G need not be a subgroup of G:

Example 2.13

(The Ehrenfest Urn) [18, Chap. 3.1.3] Recall that the “flip a random bit” chain comes from the linear transformation on \(\mathbb {R}\left( \mathbb {Z}/2\mathbb {Z}\right) ^{d}\) of right-multiplication by \(q=\frac{1}{d}\left( (1,0,\ldots ,0)+(0,1,0,\ldots ,0)+\cdots +(0,\ldots ,0,1)\right) \). Let the symmetric group \(\mathfrak {S}_d\) act on \(\left( \mathbb {Z}/2\mathbb {Z}\right) ^{d}\) by permuting the coordinates. Because q is invariant under this action, right-multiplication by q commutes with this \(\mathfrak {S}_d\) action. So the “flip a random bit” Markov chain lumps to the \(\mathfrak {S}_d\)-orbits, which track the number of ones in the binary string. The lumped walk is as follows: if the current state has k ones, remove a one with probability \(\frac{k}{d}\); otherwise add a one. As noted by [18, Chap. 3.1.3], interpreting the state of k ones as k balls in an urn and \(d-k\) balls in another urn gives the classical Ehrenfest urn model: given two urns containing d balls in total, at each step, remove a ball from either urn and place it in the other.

Remark

In the previous three examples, the action \(\{s_{i}:G\rightarrow G\}\) respects multiplication on G:

Then the random walk from right-multiplication by q lumps to the \(\{s_{i}\}\)-orbits if and only if q is invariant under \(\{s_{i}\}\). But Eq. 2.2 need not be true in all applications of Theorem 2.10 - see Example 2.9 where \(s_{i}\) is left-multiplication.

2.4 Subspaces and Weak Lumping

Now turn to the weaker notion of lumping, where the initial distribution matters.

Definition 2.14

(Weak lumping) Let \(\{X_{t}\},\{X'_{t}\}\) be Markov chains on state spaces \(\varOmega ,\varOmega '\), respectively, with transition matrices \(K,K'.\) Then \(\{X'_{t}\}\) is a weak lumping of\(\{X_{t}\}\)via\(\theta \), with initial distribution\(X_{0}\), if there is a surjection \(\theta :\varOmega \rightarrow \varOmega '\) such that the process \(\{\theta (X_{t})\}\), started at the specified \(X_{0}\), is a Markov chain with transition matrix \(K'\). In this case, \(\{X_{t}\}\) is a weak lift of\(\{X'_{t}\}\)via\(\theta \).

[43, Th. 6.4.1] gives a complicated necessary and sufficient condition for weak lumping. (Note that they write \(\pi \) for the initial distribution and \(\alpha \) for the stationary distribution.) Their simple sufficient condition [43, Th. 6.4.4] has the drawback of not identifying any valid initial distribution beyond the stationary distribution—such a result would not be useful for the many descent operator chains which are absorbing. So instead we appeal to a condition for continuous Markov processes [60, Th. 2], which when specialised to the case of discrete time and finite state spaces reads:

Theorem 2.15

(Sufficient condition for weak lumping for Markov chains) [60, Th. 2] Let K be the transition matrix of a Markov chain \(\{X_{t}\}\) with state space \(\varOmega \). Suppose  , and there are distributions \(\pi ^{i}\) on \(\varOmega \) such that

, and there are distributions \(\pi ^{i}\) on \(\varOmega \) such that

-

1.

\(\pi ^{i}\) is nonzero only on \(\varOmega ^{i}\),

-

2.

The matrix

$$\begin{aligned} K'(i,j):=\sum _{x\in \varOmega ^{i},y\in \varOmega ^{j}}\pi ^{i}(x)K(x,y) \end{aligned}$$satisfies the equality of row vectors \(\pi ^{i}K=\sum _{j}K'(i,j)\pi ^{j}\) for all i, or equivalently

$$\begin{aligned} \sum _{x\in \varOmega }\pi ^{i}(x)K(x,y)=\sum _{j}K'(i,j)\pi ^{j}(y) \end{aligned}$$for all i and all y.

Then, from any initial distribution of the form \(\sum _{i}\alpha _{i}\pi ^{i}\), for constants \(\alpha _{i}\), the chain \(\{X_{t}\}\) lumps weakly to the chain on the state space \(\{\varOmega ^{i}\}\) with transition matrix \(K'\). \(\square \)

(This condition was implicitly used in [43, Ex. 6.4.2].)

Remark

As the proof of Theorem 2.16 will show, the case of chains from the Doob transform without rescaling corresponds to each \(\pi ^i\) being the uniform distribution on \(\varOmega ^i\). In this case, the conditions above simplify: we require that \(K'(i,j):=\frac{1}{|\varOmega ^i|}\sum _{x\in \varOmega ^{i},y\in \varOmega ^{j}}K(x,y)\) satisfy \(\frac{1}{|\varOmega ^i|}\sum _{x\in \varOmega }K(x,y)=\frac{1}{|\varOmega ^j|}\sum _{j}K'(i,j)\pi ^{j}(y)\) for all i and all \(y\in \varOmega ^j\). In other words, the only requirement is that \(\sum _{x\in \varOmega }K(x,y)\) depends only on \(\varOmega ^j \ni y\), not on y, a condition somewhat dual to Doob’s.

For Markov chains arising from the Doob transform, the condition \(\pi ^{i}K=\sum _{j}K'(i,j)\pi ^{j}\) translates to the existence of invariant subspaces. It may seem strange to consider the subspace spanned by \(\left\{ \sum _{x\in \mathscr {B}^{i}}x\right\} \), but Example 2.18 and Sect. 3.4.2 will show two examples that arise naturally, namely permutation statistics and congruence Hopf algebras.

Theorem 2.16

(Weak lumping for Markov chains from linear maps) Let V be a vector space with basis \(\mathscr {B}\), and \(\mathbf {T}:V\rightarrow V,\eta :V\rightarrow \mathbb {R}\) be linear maps admitting the Doob transform Markov chain construction of Theorem 2.3. Suppose  , and write \(x^{i}\) for \(\sum _{x\in \mathscr {B}^{i}}x\). Let \(V'\) be the subspace of V spanned by the \(x^{i}\), and suppose \(\mathbf {T}(V')\subseteq V'\). Define a map \(\theta :\mathscr {B}\rightarrow \{x^{i}\}\) by setting \(\theta (x):=x^{i}\) if \(x\in \mathscr {B}^{i}\). Then, the Markov chain defined by \(\mathbf {T}:V\rightarrow V\) lumps weakly to the Markov chain defined by \(\mathbf {T}:V'\rightarrow V'\) (with basis \(\mathscr {B}':=\{x^{i}\}\), and rescaling function the restriction \(\eta :V'\rightarrow \mathbb {R}\)) via \(\theta \), from any initial distribution of the form \(P\{X_{0}=x\}:=\alpha _{\theta (x)}\frac{\eta (x)}{\eta (\theta (x))}\), where the \(\alpha \)s are constants depending only on \(\theta (x)\). In particular, if \(\eta \equiv 1\) on \(\mathscr {B}\) (so no rescaling is required to define the chain on \(\mathscr {B}\)), the Markov chain lumps from any distribution which is constant on each \(\mathscr {B}^{i}\).

, and write \(x^{i}\) for \(\sum _{x\in \mathscr {B}^{i}}x\). Let \(V'\) be the subspace of V spanned by the \(x^{i}\), and suppose \(\mathbf {T}(V')\subseteq V'\). Define a map \(\theta :\mathscr {B}\rightarrow \{x^{i}\}\) by setting \(\theta (x):=x^{i}\) if \(x\in \mathscr {B}^{i}\). Then, the Markov chain defined by \(\mathbf {T}:V\rightarrow V\) lumps weakly to the Markov chain defined by \(\mathbf {T}:V'\rightarrow V'\) (with basis \(\mathscr {B}':=\{x^{i}\}\), and rescaling function the restriction \(\eta :V'\rightarrow \mathbb {R}\)) via \(\theta \), from any initial distribution of the form \(P\{X_{0}=x\}:=\alpha _{\theta (x)}\frac{\eta (x)}{\eta (\theta (x))}\), where the \(\alpha \)s are constants depending only on \(\theta (x)\). In particular, if \(\eta \equiv 1\) on \(\mathscr {B}\) (so no rescaling is required to define the chain on \(\mathscr {B}\)), the Markov chain lumps from any distribution which is constant on each \(\mathscr {B}^{i}\).

Note that, in the simplified case \(\eta \equiv 1\), it is generally not true that the restriction \(\eta :V'\rightarrow \mathbb {R}\) is constant on \(\mathscr {B}'\) - indeed, for \(x^{i}\in \mathscr {B}'\), it holds that \(\eta (x^{i})=|\mathscr {B}^{i}|\). So a weak lumping chain from Theorem 2.16 will generally require rescaling.

Remark

Suppose the conditions of Theorem 2.16 hold, and let \(j:V'\hookrightarrow V\) be the inclusion map. Now the dual map \(j^{*}:V^{*}\twoheadrightarrow V'^{*}\), and \(\mathbf {T}^{*}:V^{*}\rightarrow V^{*}\), satisfy the hypotheses of Theorem 2.7, except that there may not be suitable rescaling functions \(\eta :V^{*}\rightarrow \mathbb {R}\) and \(\bar{\eta }:V'^{*}\rightarrow \mathbb {R}\). Because the Doob transform chain for \(\mathbf {T}^{*}\) is the time-reversal of the chain for \(\mathbf {T}\) [52, Th. 3.3.2], this is a reflection of [43, Th. 6.4.5].

The proof of Theorem 2.16 is at the end of this section.

Example 2.17

(Number of rising sequences under riffle-shuffling) [11, Cor. 2] The sequence \(\{i,i+1,\ldots ,i+j\}\) is a rising sequence of a permutation \(\sigma \) if those numbers appear in that order when reading the one-line notation of \(\sigma \) from left to right. Viewing \(\sigma \) as a deck of cards, this says that the card with label i is somewhere above the card with label \(i+1\), which is somewhere above the card with label \(i+2\), and so on, until the card with label \(i+j\). Formally, \(\sigma ^{-1}(i)<\sigma ^{-1}(i+1)<\cdots <\sigma ^{-1}(i+j)\). Unless otherwise specified, a rising sequence is assumed to be maximal, i.e. \(\sigma ^{-1}(i-1)>\sigma ^{-1}(i)<\sigma ^{-1}(i+1)<\cdots <\sigma ^{-1}(i+j)>\sigma ^{-1}(i+j+1)\).

Following [11], write \(R(\sigma )\) for the number of rising sequences in \(\sigma \). This statistic is also written \({{\mathrm{ides}}}(\sigma )\), as it is the number of descents in \(\sigma ^{-1}\). For example, \(R(2,4,5,3,1)=3\), the three rising sequences being \(\{1\}\), \(\{2,3\}\) and \(\{4,5\}\).

[11] studied the popular riffle–shuffle model, where the deck is cut into two according to a binomial distribution and interleaved. (We omit the details as this shuffle is not the focus of the present paper). This arises from right-multiplication in \(\mathbb {R}\mathfrak {S}_n\) by

[11, Cor. 2] shows that riffle-shuffling, if started from the identity, lumps weakly via the number of rising sequences. This result can be slightly strengthened by applying the present Theorem 2.16 in conjunction with [11, Cor. 3], which proves explicitly that q generates a subalgebra spanned by \(x^{i}:=\sum _{R(\sigma )=i}\sigma \), for \(1\le i\le n\). The authors recognised this subalgebra as equivalent to Loday’s “number of descents” subalgebra [63]. (More precisely: the linear extension to \(\mathbb {R}\mathfrak {S}_n\) of the inversion map \(I(\sigma ):=\sigma ^{-1}\) is an algebra antimorphism—i.e. \(I(\sigma \tau )=I(\tau )I(\sigma )\)—and it sends \(x^{i}\) to the sum of permutations with \(i-1\) descents, which span Loday’s algebra.) Since the basis elements \(x^{i}\) have the form stipulated in Theorem 2.16, it follows that the lumping via the number of rising sequences is valid starting from any distribution that is constant on the summands of each \(x^{i}\), i.e. on each subset of permutations with the same number of rising sequences.

The key idea in the previous example is that, if \(q\in \mathbb {R}G\) generates a subalgebra \(V'\) of \(\mathbb {R}G\) of the form described in Theorem 2.16, then this produces a weak lumping of the random walk on G given by right-multiplication by q. We apply this to the top-to-random shuffle:

Example 2.18

(Length of last rising sequence under top-to-random shuffling) This simple example appears to be new. In addition to the definitions in Example 2.17, more terminology is necessary. The length of the rising sequence \(\{i,i+1,\ldots ,i+j\}\) is \(j+1\). Following [19], write \(L(\sigma )\) for the length of the last rising sequence, meaning the one which contains n. For example, the rising sequences of (2, 4, 3, 5, 1) have lengths 1, 2, 2, respectively, and \(L(2,4,3,5,1)=2\).

Recall that the top-to-random shuffle is given by right-multiplication by

The rising sequences of the cycles \((i\ i-1\ \ldots \ 1)\) are precisely \(\{1\}\) and \(\{2,3,\ldots ,n\}\), and these are the only permutations \(\sigma \) with \(L(\sigma )=n-1\). [19, Th. 4.2] shows that the algebra generated by q is spanned by \(x^{i}:=\sum _{L(\sigma )=i}\sigma \), for \(1\le i\le n\). Thus, Theorem 2.16 shows that top-to-random shuffling weakly lumps via the length of the last rising sequence, starting from any distribution that is constant on permutations with the same last rising sequence length. In particular, since the identity is the only permutation with \(L(\sigma )=n\), the lumping holds if the deck starts at the identity permutation.

Proof of Theorem 2.16

In the notation of Theorem 2.15, the distribution \(\pi ^{i}\) is

which clearly satisfies condition 1.

To check condition 2, write \(\mathbf {T}',\eta '\) for the restrictions of \(\mathbf {T},\eta \) to \(V'\), and set \(K=[\mathbf {T}]_{\mathscr {B}}^{T}\), \(K'=[\mathbf {T}']_{\mathscr {B}'}^{T}\). As in the proof of Theorem 2.7, we start by showing that \(\eta '\) is a possible rescaling function for \(\mathbf {T}'\):

so \(\eta '\) is an eigenvector of \(\mathbf {T}'^{*}\) with eigenvalue \(\beta \). And \(\eta '\) is positive on \(\mathscr {B}'\) because \(\eta '(x^{i})=\sum _{x\in \mathscr {B}^{i}}\eta (x)\), a sum of positive numbers.

Write \(\check{K},\check{K}'\) for the associated transition matrices. (As in the proof of Theorem 2.7, we check that the entries of \(K'\) are non-negative later, in Eq. 2.3.) We need to show that, for all i and for all \(y\in \mathscr {B}\),

Note that \(\pi ^{j}(y)\) is zero unless \(y\in \mathscr {B}^{j}\), so only one summand contributes to the right-hand side. By substituting for \(\pi ^{i},\check{K}\) and \(\check{K}'\), the desired equality is equivalent to

which reduces to

for \(y\in \mathscr {B}^{j}\).

Now, by expanding in the \(\mathscr {B}'\) basis,

On the other hand, a \(\mathscr {B}\) expansion yields

So

and since \(\mathscr {B}\) is a basis, the coefficients of y on the two sides must be equal. \(\square \)

3 Lumpings from Subquotients of Combinatorial Hopf Algebras

This section specialises the strong and weak lumping criteria of Part I to Markov chains from descent operators on combinatorial Hopf algebras [54]. Our main running example (which in fact motivated the entire paper) is a lift for the “down-up chain on partitions”, where at each step a random box is removed and a possibly different random box added, according to a certain distribution (see Sect. 3.2.1). The stationary distribution of this chain is the Plancherel measure \(\pi (\lambda )=\frac{(\dim \lambda )^{2}}{n!}\), where \(\dim \lambda \) is the dimension of the symmetric group representation indexed by \(\lambda \), or equivalently the number of standard tableaux of shape \(\lambda \) (see below for definitions). Because \((\dim \lambda )^{2}\) is the number of permutations whose RSK shape [64, Sec. 7.11] [33, Sec. 4] is \(\lambda \), it is natural to ask if the down–up chain on partitions is the lumping of a chain on permutations with a uniform stationary distribution.

Fulman [31, Th. 3.1] proved that this is almost true for top-to-random shuffling: the probability distribution of the RSK shape after t top-to-random shuffles from the identity, agrees with the probability distribution after t steps of the down–up chain on partitions. However, it is not true that top-to-random shuffles lump via RSK shape. [15, Fig. 1] is an explicit 8-step trajectory of the partition chain that has no corresponding trajectory in top-to-random shuffling. In other words, it is possible for eight top-to-random shuffles to produce an RSK shape equal to the end of the exhibited partition chain trajectory, but no choice of intermediate steps will have RSK shapes equal to the given trajectory. (Technically, this figure is written for random-to-top, the time-reversal of top-to-random, so one should read it backwards from right to left, to apply it to top-to-random shuffles.)

The present Theorem 3.11 finds that top-to-random shuffling can be modified to give an honest weak lift of the down–up chain on partitions: every time a card is moved, relabel it with the current time that we moved the card, then track the (reversed) relative orders of the labels. (A different interpretation without cards is in Sect. 3.4.1) This lift is constructed in two stages—Sect. 3.3 builds a strong lift to tableaux using Hopf algebra quotients, and Sect. 3.4 builds a weak lift to permutations using Hopf subalgebras. Sect. 3.5 then shows that the multistep transition probabilities of the relabelled chain agree with the unmodified top-to-random shuffle, if both are started at the identity, thus recovering the Fulman result.

3.1 Notation

A partition\(\lambda \) is a weakly decreasing sequence of positive integers: \(\lambda :=(\lambda _{1},\ldots ,\lambda _{l})\) with \(\lambda _{1}\ge \ldots \ge \lambda _{l}>0\). This is a partition of n, denoted \(\lambda \vdash n\), if \(\lambda _{1}+\cdots +\lambda _{l}=n\). We will think of a partition \(\lambda \) as a diagram of left-justified boxes with \(\lambda _{1}\) boxes in the topmost row, \(\lambda _{2}\) boxes in the second row, etc. For example, (5,2,2) is a partition of 9, and below is its diagram.

A tableau of shape\(\lambda \) is a filling of each of the boxes in \(\lambda \) with a positive integer. The shift of a tableaux T by an integer k, denoted T[k], increases each filling of T by k. A tableau is standard if it is filled with \(\{1,2,\ldots ,n\}\), each integer occurring once. If no two boxes of a tableau T has the same filling, then its standardisation\({{\mathrm{std}}}(T)\) is computed by replacing the smallest filling by 1, the second smallest filling by 2, and so on. Clearly \({{\mathrm{std}}}(T)\) is a standard tableau, of the same shape as T. A box b of T is removable if the difference \(T\backslash b\) is a tableau. Below shows a tableau of shape (5, 2, 2), its shift by 3, and its standardisation. The removable boxes in the first tableau are 11 and 13.

For a partition \(\lambda \), write \(\dim (\lambda )\) for the number of standard tableaux of shape \(\lambda \), as this is the dimension of the symmetric group representation corresponding to \(\lambda \) [61, Chap. 2].

In the same vein, this paper will regard permutations as “standard words”, using one-line notation: \(\sigma :=(\sigma (1),\ldots ,\sigma (n))\). The length of a word is its number of letters. The shift of a word \(\sigma \) by an integer k, denoted \(\sigma [k]\), increases each letter of \(\sigma \) by k. If a word \(\sigma \) has all letters distinct, then its standardisation\({{\mathrm{std}}}(\sigma )\) is computed by replacing the smallest letter by 1, the second smallest letter by 2, and so on. Clearly \({{\mathrm{std}}}(\sigma )\) is a permutation. For example, \(\sigma =(6,1,4,8,2,11,10,13,5)\) is a word of length 9. Its shift by 3 is \(\sigma [3]=(9,4,7,11,5,14,13,16,8)\), and its standardisation is \({{\mathrm{std}}}(\sigma )=(5,1,3,6,2,8,7,9,4)\).

We assume the reader is familiar with RSK insertion, a map from permutations to tableaux (only the insertion tableau is relevant here, not the recording tableau), see [64, Sec. 7.11] [33, Sec. 4].

A weak-compositionD (also called a decomposition in [3]) is a list of non-negative integers \(\left( d_{1},d_{2},\ldots ,d_{l(D)}\right) \). This is a weak-composition ofn, denoted \(D\vdash n\), if \(d_{1}+\cdots +d_{l}=n\). For example, (1, 3, 0, 2, 2, 0, 1) is a weak-composition of 11.

A compositionI is a list of positive integers \(\left( i_{1},i_{2},\ldots ,i_{l(I)}\right) \), where each \(i_{k}\) is a part. This is a composition of n, denoted \(I\vdash n\), if \(i_{1}+\cdots +i_{l}=n\). For example, (1, 3, 2, 2, 1) is a composition of 11. Define a partial order on the compositions of n: say \(J\le I\) if J can be obtained by joining adjacent parts of I. For example, \((6,2,1)\le (1,3,2,2,1)\), and also \((1,3,4,1)\le (1,3,2,2,1)\).

The descent set of a word \(w=(w_{1},\ldots ,w_{n})\) is defined to be \(\Big \{ j\in \{1,2,\ldots ,n-1\}|w_{j}>w_{j+1}\Big \} \). It is more convenient here to rewrite the descent set as a composition in the following way: a word w has descent composition\({{\mathrm{Des}}}(w)=I\) if \(i_{j}\) is the number of letters between the \(j-1\)th and jth descent, i.e. if \(w_{i_{1}+\cdots +i_{j}}>w_{i_{1}+\cdots +i_{j}+1}\) for all j, and \(w_{r}\le w_{r+1}\) for all \(r\ne i_{1}+\cdots +i_{j}\). For example, the descent set of (6, 1, 4, 8, 2, 11, 10, 13, 5) is \(\{1,4,6,8\}\), and \({{\mathrm{Des}}}(6,1,4,8,2,11,10,13,5)=(1,3,2,2,1)\). Note that \({{\mathrm{Des}}}(\sigma ^{-1})\) consists of the lengths of the rising sequences (as in Example 2.17) of \(\sigma \).

3.2 Markov Chains from Descent Operators, and the Down–Up Chain on Partitions

The Markov chains in this and subsequent sections arise from descent operators on combinatorial Hopf algebras, through the framework of [54] as summarised below.

Loosely speaking, a combinatorial Hopf algebra is a graded vector space \(\mathscr {H}=\bigoplus _{n=0}^{\infty }\mathscr {H}_{n}\) with a basis  indexed by a family of “combinatorial objects”, such as partitions, words, or permutations. The grading reflects the “size” of these objects. \(\mathscr {H}\) admits a linear product map \(m:\mathscr {H}\otimes \mathscr {H}\rightarrow \mathscr {H}\) and a linear coproduct map \(\varDelta :\mathscr {H}\rightarrow \mathscr {H}\otimes \mathscr {H}\) satisfying certain compatibility axioms; see the survey [39] for details. These two operations encode, respectively, how the combinatorial objects combine and break. The concept was originally due to Joni and Rota [42], and the theory has since been expanded in [1, 2, 12, 40] and countless other works.

indexed by a family of “combinatorial objects”, such as partitions, words, or permutations. The grading reflects the “size” of these objects. \(\mathscr {H}\) admits a linear product map \(m:\mathscr {H}\otimes \mathscr {H}\rightarrow \mathscr {H}\) and a linear coproduct map \(\varDelta :\mathscr {H}\rightarrow \mathscr {H}\otimes \mathscr {H}\) satisfying certain compatibility axioms; see the survey [39] for details. These two operations encode, respectively, how the combinatorial objects combine and break. The concept was originally due to Joni and Rota [42], and the theory has since been expanded in [1, 2, 12, 40] and countless other works.

To define the descent operators, it is necessary to introduce a refinement of the coproduct relative to the grading. Given a weak-composition \(D=\left( d_{1},d_{2},\ldots ,d_{l(D)}\right) \) of n, follow [2] and define \(\varDelta _{D}:\mathscr {H}_{n}\rightarrow \mathscr {H}_{d_{1}}\otimes \cdots \otimes \mathscr {H}_{d_{l(D)}}\) to be a projection to the graded subspace \(\mathscr {H}_{d_{1}}\otimes \cdots \otimes \mathscr {H}_{d_{l(D)}}\) of the iterated coproduct \((\varDelta \otimes {{\mathrm{id}}}^{\otimes l(D)-1})\circ \cdots \circ (\varDelta \otimes {{\mathrm{id}}}\otimes {{\mathrm{id}}})\circ (\varDelta \otimes {{\mathrm{id}}})\circ \varDelta \). So \(\varDelta _{D}\) models breaking an object into l(D) pieces, of sizes \(d_{1},\ldots ,d_{l(D)}\), respectively. See the examples below.

Example 3.1

An instructive example of a combinatorial Hopf algebra is the shuffle algebra\(\mathscr {S}\). Its basis is the set of all words in the letters \(\{1,2,\ldots ,N\}\) (for some N, whose exact value is often unimportant). View the word \((w_{1},\ldots ,w_{n})\) as the deck of cards with card \(w_{1}\) on top, card \(w_{2}\) second from the top, and so on, so card \(w_{n}\) is at the bottom. The degree of a word is its number of letters, i.e. the number of cards in the deck. The product of two words, also denoted by  , is the sum of all their interleavings (with multiplicity), and the coproduct is deconcatenation, or cutting the deck. For example:

, is the sum of all their interleavings (with multiplicity), and the coproduct is deconcatenation, or cutting the deck. For example:

(Here, () denotes the empty word, the unit of \(\mathscr {S}\).) Observe that

The four words that appear on the right-hand side are precisely all the possible results after a top-to-random shuffle of the deck (1, 5, 5, 2), and the coefficient of each word is the probability of obtaining it. The same is true for decks of n cards and the operator \(\frac{1}{n}m\circ \varDelta _{1,n-1}\).

Instead of removing only the top card—i.e. creating two piles of sizes 1 and \(n-1\), respectively—consider cutting the deck into l piles of sizes \(d_{1,}\ldots ,d_{l}\) for some weak-composition D of n. Then interleave these l piles together into one pile - i.e. uniformly choose an ordering of all n cards such that any two cards from the same one of the l piles stay in the same relative order. Such a shuffle is described by (a suitable multiple of) the composite operator \(m\circ \varDelta _{D}\). These composites (and their linear combinations) are the descent operators of [55], so named because, on a commutative or cocommutative Hopf algebra, their composition is equivalent to the multiplication in Solomon’s descent algebra [63] of the symmetric group. This descent algebra view will be useful in the proof of Theorem 3.14, relating these shuffles to a different chain on permutations.

[19] studied more general “cut-and-interleave” shuffles where the cut composition D is random, according to some probability distribution P on the weak-compositions of n. These P-shuffles are described by

more precisely, their transition matrices are \(\left[ m\circ \varDelta _{P}\right] _{\mathscr {B}_n}^{T}\), where \(\mathscr {B}_n\) is the word basis of the shuffle algebra. (The notation \(m\circ \varDelta _{P}\), from [54], is non-standard and coined especially for this Markov chain application of descent operators.) Notice that, if P is concentrated at \((1,n-1)\), then \(m\circ \varDelta _{P}=\frac{1}{n}m\circ \varDelta _{1,n-1}\), corresponding to the top-to-random shuffle as described in Example 3.1. The present paper will focus on the case where P is concentrated at \((n-1,1)\), so \(m\circ \varDelta _{P}=\frac{1}{n}m\circ \varDelta _{n-1,1}\) models the “bottom-to-random” shuffle.

[22, 54] extend this idea to other combinatorial Hopf algebras, using \(m\circ \varDelta _{P}\) to construct Markov chains which model first breaking a combinatorial object into l pieces where the distribution of piece size is P, and then reassembling the pieces. In particular, \(\frac{1}{n}m\circ \varDelta _{n-1,1}\) describes removing a piece of size 1 and reattaching it. For general combinatorial Hopf algebras, this construction requires the Doob transform (Theorem 2.3).

To simplify the exposition, focus on the case where \(|\mathscr {B}_{1}|=1\), i.e. there is only one combinatorial object of size 1. (This is not true of the shuffle algebra, where \(\mathscr {B}_{1}\) consists of all the different possible single card labels. Hence, we will ignore the shuffle algebra henceforth, until Sect. 3.5.) Writing \(\bullet \) for this object, \(\varDelta _{1,\ldots ,1}(x)\) then is a multiple of \(\bullet \otimes \cdots \otimes \bullet =\bullet ^{\otimes \deg x}\). [54, Lem 3.3] showed that, under the conditions in Theorem 3.2, this multiple is a rescaling function. (Briefly, conditions i and ii guarantee that \(K:=[m\circ \varDelta _{P}]\), the matrix before the Doob transform, has non-negative entries, and condition iii ensures \(\eta \) is positive on \(\mathscr {B}_n\).)

Theorem 3.2

(Markov chains from descent operators) [54, Lem 3.3, Th. 3.4] Suppose \(\mathscr {H}=\bigoplus _{n}\mathscr {H}_n\) is a graded connected Hopf algebra with a basis  satisfying:

satisfying:

-

o.

\(\mathscr {B}_{1}=\{\bullet \}\);

-

i.

for all \(w,z\in \mathscr {B}\), the expansion of \(m(w\otimes z)\) in the \(\mathscr {B}\) basis has all coefficients non-negative;

-

ii.

for all \(x\in \mathscr {B}\), the expansion of \(\varDelta (x)\) in the \(\mathscr {B}\otimes \mathscr {B}\) basis has all coefficients non-negative;

-

iii.

for all \(x\in \mathscr {B}_n\) with \(n>1\), it holds that \(\varDelta (x)\ne 1\otimes x+x\otimes 1\) (i.e. \(\mathscr {B}_n\) contains no primitive elements when \(n>1\)).

Then, for any fixed n and any probability distribution P(D) on weak-compositions D of n, the corresponding descent operator \(m\circ \varDelta {}_{P}:\mathscr {B}_n\rightarrow \mathscr {B}_n\) given by

and rescaling function \(\eta :\mathscr {B}_n\rightarrow \mathbb {R}\) given by

admit the Doob transform construction of Theorem 2.3.

The stationary distributions of these chains are easy to describe:

Theorem 3.3

[54, Th. 3.12] The unique stationary distribution of the Markov chains constructed in Theorem 3.2 is

independent of the distribution P.

[54, Th. 3.5] derives the eigenvalues of all descent operator chains. For our main example of \(\frac{1}{n}m\circ \varDelta _{n-1,1}\), these eigenvalues are:

Theorem 3.4

[54, Th. 4.4.i] For the descent operator \(\frac{1}{n}m\circ \varDelta _{n-1,1}\), the eigenvalues of the chains constructed in Theorem 3.2 are \(\frac{j}{n}\) for \(0\le j\le n\), \(j\ne n-1\), and their multiplicities are \(\dim \mathscr {H}_{n-j}-\dim \mathscr {H}_{n-j-1}\).

[54, Th. 4.4] also describes some eigenvectors. Since their formulae are complicated and they are not the focus of the present paper, we do not go into detail here.

3.2.1 Example: the Down–Up Chain on Partitions

We explain in detail below the Markov chain that arises from applying the Doob transform to \(\frac{1}{n}m\circ \varDelta _{n-1,1}\) on the algebra of symmetric functions. This chain is one focus of [31].

Work with the algebra of symmetric functions \(\varLambda \) [64, Chap. 7], with basis the Schur functions \(\{s_{\lambda }\}\), which are indexed by partitions. For clarity, we will often write \(\lambda \) in place of \(s_{\lambda }\). The degree of \(\lambda \) is the number of boxes in its diagram.

As described in [39, Sec. 2.5], \(\varLambda \) carries the following Hopf structure:

where \(c_{\nu \mu }^{\lambda }\) are the Littlewood-Richardson coefficients. These simplify greatly when \(\mu \) is the partition (1) - namely \(c_{\nu \mu }^{\lambda }=1\) if the diagrams of \(\lambda \) and \(\nu \) differ by one box, and \(c_{\nu \mu }^{\lambda }=0\) otherwise. (This is one case of the Pieri rule.) Writing \(\lambda \sim \nu \cup \square \) and \(\nu \sim \lambda \backslash \square \) when the diagram of \(\lambda \) can be obtained by adding one box to the diagram of \(\nu \), the above can be summarised as

For example,

To investigate how the Doob transform turns this data into probabilities, it is necessary to first understand the rescaling function \(\eta \). By Theorem 3.2, \(\eta (\lambda )\) is the coefficient of \((1)^{\otimes \deg (\lambda )}\) in \(\varDelta _{1,\ldots ,1}(\lambda )\), i.e. the number of ways to remove boxes one by one from \(\lambda \). Since such ways are in bijection with the standard tableaux of shape \(\lambda \), it holds that \(\eta (\lambda )=\dim \lambda \). Hence, the Doob transform creates the following transition matrix from \(\frac{1}{n}m\circ \varDelta _{n-1,1}\):

The second expression suggests a decomposition of each time step into two parts:

-

1.

Remove a box from \(\lambda \) to obtain \(\nu \), with probability \(\frac{\dim \nu }{\dim \lambda }\).

-

2.

Add a box to \(\nu \) to obtain \(\mu \), with probability \(\frac{1}{n}\frac{\dim \mu }{\dim \nu }\).

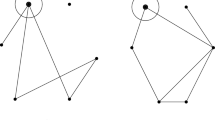

The two parts are illustrated by down-right and up-right arrows, respectively, in the following example trajectory in degree 5:

One easy way to implement step 1, the box removal, is via the hook walk of [37]: uniformly choose a box b, then uniformly choose a box in the hook of b—that is, to the right or below b—and continue uniformly picking from successive hooks until you reach a removable box. Similarly, step 2 can be implemented using the complimentary hook walk of [38]: start at the box (outside the partition diagram) in row n, column n, uniformly choose a box in its complimentary hook—that is, to its left or above it, and outside of the partition—and continue uniformly picking from complimentary hooks until you reach an addable box.

The transition matrix of this chain in degree 3 is

To interpret the Markov chain on partitions from other descent operators \(m\circ \varDelta _{P}\), it is necessary to view a partition of n as an irreducible representation of \(\mathfrak {S}_n\), as explained in [61, Chap. 2]. Then, the multiplication and comultiplication of partitions come, respectively, from the induction of the external product and the restriction to Young subgroups—for irreducible representations corresponding to the partitions \(\mu \vdash i\), \(\nu \vdash j\) and \(\lambda \vdash n\),

So the chains from \(m\circ \varDelta _{P}\) model restriction-then-induction, as detailed below.

Definition 3.5

Each step of the P-restriction-then-induction chain on irreducible representations of the symmetric group \(\mathfrak {S}_n\) goes as follows:

-

1.

Choose a weak-composition \(D=(d_{1},\ldots ,d_{l(D)})\) of n with probability P(D).

-

2.

Restrict the current irreducible representation to the chosen Young subgroup \(\mathfrak {S}_{d_{1}}\times \cdots \times \mathfrak {S}_{d_{l(D)}}\).

-

3.

Induce this representation to \(\mathfrak {S}_n\), then pick an irreducible constituent with probability proportional to the dimension of its isotypic component.

[31] considered similar chains for subgroups of any group \(H\subseteq G\) instead of \(\mathfrak {S}_{d_{1}}\times \cdots \times \mathfrak {S}_{d_{l(D)}}\subseteq \mathfrak {S}_n\).

To calculate the common unique stationary distribution of all these descent operator chains, using Theorem 3.3, first note that, for \(\lambda \vdash n\), the coefficient of \(\lambda \) in \((1)^n\) is given by

To obtain \(\pi (\lambda )\), multiply the above by \(\frac{\eta (\lambda )}{n!}\). As \(\eta (\lambda )\) is also \(\dim \lambda \), this means \(\pi (\lambda )=\frac{(\dim \lambda )^{2}}{n!}\), the Plancherel measure.

3.3 Quotient Algebras and a Lift to Tableaux

The following theorem is a specialisation of Theorem 2.7, about the strong lumping of Markov chains from linear maps, to the case of descent operator chains.

Theorem 3.6

(Strong lumping for descent operator chains) [53, Th. 4.1] Let \(\mathscr {H}\), \(\bar{\mathscr {H}}\) be graded, connected Hopf algebras with bases \(\mathscr {B}\), \(\bar{\mathscr {B}}\), respectively, that both satisfy the conditions in Theorem 3.2. If \(\theta :\mathscr {H}\rightarrow \bar{\mathscr {H}}\) is a Hopf-morphism such that \(\theta (\mathscr {B}_n)=\bar{\mathscr {B}}_{n}\) for all n, then the Markov chain on \(\mathscr {B}_n\) which the Doob transform fashions from the descent operator \(m\circ \varDelta _{P}\) lumps strongly via \(\theta \) to the Doob transform chain from the same operator on \(\bar{\mathscr {B}}_{n}\).

Proof

Condition 1 of Theorem 3.2 requires the distinct images of \(\mathscr {B}_n\) under \(\theta \) to be linearly independent—this is true here by hypothesis.

Condition 2 requires \(\theta \circ (m\circ \varDelta _{P})=(m\circ \varDelta _{P})\circ \theta \), and \(\bar{\eta }\circ \theta =\eta \). The former is true because \(\theta \) is a Hopf-morphism. To check the latter, apply both sides to an arbitrary \(x\in \mathscr {B}_{n}\) and multiply by \(\bar{\bullet }^{\otimes n}\), where \(\bar{\bullet }\) is the unique element of \(\bar{\mathscr {B}}_{1}\); then the condition required is equivalent to

The left-hand side is \(\varDelta _{1,\ldots ,1}(\theta (x))\), by definition of \(\bar{\eta }\). Because \(\theta \) is a Hopf-morphism, this is equal to \((\theta \otimes \cdots \otimes \theta )\circ \varDelta _{1,\ldots ,1}(x)=(\theta \otimes \cdots \otimes \theta )\left( \eta (x)\bullet ^{\otimes n}\right) \), by definition of \(\eta \) (writing \(\bullet \) for the unique element of \(\mathscr {B}_{1}\)). Hence this is \((\theta (\bullet )\otimes \cdots \otimes \theta (\bullet ))\eta (x)\). Since \(\theta (\mathscr {B}_n)=\bar{\mathscr {B}}_n\) for all n, it is true for \(n=1\), which means \(\theta (\bullet )\in \bar{\mathscr {B}}_{1}\). Since \(\bar{\mathscr {B}}_{1}=\{\bar{\bullet }\}\), it must be that \(\theta (\bullet )=\bar{\bullet }\). Hence \((\theta (\bullet )\otimes \cdots \otimes \theta (\bullet ))\eta (x)=\eta (x)\bar{\bullet }^{\otimes n}\), proving Eq. 3.1.\(\square \)

Remark

Observe that the proof does not fully use the assumption \(\theta (\mathscr {B}_n)=\bar{\mathscr {B}}_n\) for all n—all that is required is that \(\theta (\mathscr {B}_n)=\bar{\mathscr {B}}_n\) for the single value of n of interest, and that \(\theta (\mathscr {B}_{1})=\bar{\mathscr {B}}_{1}\). Indeed, if \(\mathscr {B}_n\) can be partitioned into communication classes  for the \(m\circ \varDelta _{P}\) Markov chain (i.e. it is impossible to move between distinct \(\mathscr {B}_n^{(i)}\) using the \(m\circ \varDelta _{P}\) Markov chain), so there is effectively a separate chain on each \(\mathscr {B}_n^{(i)}\), then, to prove a lumping for the chain on one \(\mathscr {B}_n^{(i)}\), it suffices to require \(\theta (x)\in \bar{\mathscr {B}}_n\) only for \(x\in \mathscr {B}_n^{(i)}\) (and \(\theta (\mathscr {B}_{1})=\bar{\mathscr {B}}_{1}\)). This will be useful in Sect. 3.6 for showing that cut-and-interleave shuffles of n distinct cards lump via descent set, as this lumping is false for non-distinct decks.

for the \(m\circ \varDelta _{P}\) Markov chain (i.e. it is impossible to move between distinct \(\mathscr {B}_n^{(i)}\) using the \(m\circ \varDelta _{P}\) Markov chain), so there is effectively a separate chain on each \(\mathscr {B}_n^{(i)}\), then, to prove a lumping for the chain on one \(\mathscr {B}_n^{(i)}\), it suffices to require \(\theta (x)\in \bar{\mathscr {B}}_n\) only for \(x\in \mathscr {B}_n^{(i)}\) (and \(\theta (\mathscr {B}_{1})=\bar{\mathscr {B}}_{1}\)). This will be useful in Sect. 3.6 for showing that cut-and-interleave shuffles of n distinct cards lump via descent set, as this lumping is false for non-distinct decks.

3.3.1 Example: the Down–Up Chain on Standard Tableaux

To use Theorem 3.6 to lift the descent operator chains on partitions of the previous section, we need a Hopf algebra whose quotient is \(\varLambda \), and the quotient map must come from a map from the basis elements of the new, larger Hopf algebra to partitions (or more accurately, to Schur functions). Below describes one such algebra, the Poirer–Reutenauer Hopf algebra of standard tableaux. It was christened \((\mathbb {Z}T,*,\delta )\) in [57], but we follow [27, Sec. 3.5] and denote it by \(\mathbf {FSym}\), for “free symmetric functions”. Its distinguished basis is \(\{\mathbf {S}_{T}\}\), where T runs over the set of standard tableaux. As with partitions, it will be convenient to write T in place of \(\mathbf {S}_{T}\). This algebra is graded by the number of boxes in T. The quotient map \(\mathbf {FSym}\rightarrow \varLambda \) is essentially taking the shape of the standard tableaux—the image of \(\mathbf {S}_{T}\) in \(\varLambda \) is \(s_{{{\mathrm{shape}}}(T)}\).

Because the product and coproduct of \(\mathbf {FSym}\) are fairly complicated, involving Jeu de Taquin and other tableaux manipulations, we describe here only \(m:\mathscr {H}_{n-1}\otimes \mathscr {H}_{1}\rightarrow \mathscr {H}_n\) and \(\varDelta _{n-1,1}\), and direct the interested reader to [57, Sec. 5c, 5d] for details.

If T is a standard tableaux with \(n-1\) boxes, then the product  is the sum of all ways to add a new box, filled with n, to T. For example,

is the sum of all ways to add a new box, filled with n, to T. For example,

The coproduct \(\varDelta _{n-1,1}\) is “unbump and standardise”. [64, fourth paragraph of proof of Th. 7.11.5] explains unbumping as follows: for a removable box b in row i, remove b, then find the box in row \(i-1\) containing the largest integer smaller than b. Call this filling \(b_{1}\). Replace \(b_{1}\) with b, then put \(b_{1}\) in the box in row \(i-2\) previously filled with the largest integer smaller than \(b_{1}\), and continue this process up the rows. In the second term in the example below, these displaced fillings are 4, 3, 2. What unbumping achieves is this: if b was the last number to be inserted in an RSK insertion that resulted in T, then unbumping b from T recovers the tableaux before b was inserted. The coproduct \(\varDelta _{n-1,1}(T)\) is the sum of unbumpings over all removable boxes b of T, then standardising the unbumped tableaux, for example

To describe the down–up chain on standard tableaux (i.e. the chain which the Doob transform fashions from the map \(\frac{1}{n}m\circ \varDelta _{n-1,1}\)), it remains to calculate the rescaling function \(\eta (T)\). This is the coefficient of  in \(\varDelta _{1,\ldots ,1}(T)\), which the description of \(\varDelta _{n-1,1}\) above rephrases as the number of ways to successively choose boxes to unbump from T. Such ways are in bijection with the standard tableaux of the same shape as T, so \(\eta (T)=\dim ({{\mathrm{shape}}}T)\). Hence one step of the down–up chain on standard tableaux, starting from a tableau T of n boxes, has the following interpretation:

in \(\varDelta _{1,\ldots ,1}(T)\), which the description of \(\varDelta _{n-1,1}\) above rephrases as the number of ways to successively choose boxes to unbump from T. Such ways are in bijection with the standard tableaux of the same shape as T, so \(\eta (T)=\dim ({{\mathrm{shape}}}T)\). Hence one step of the down–up chain on standard tableaux, starting from a tableau T of n boxes, has the following interpretation:

-

1.

Pick a removable box b of T with probability \(\frac{\dim ({{\mathrm{shape}}}(T\backslash b))}{\dim ({{\mathrm{shape}}}T)}\), and unbump b. (As for partitions, one can pick b using the hook walk of [37].)

-

2.

Standardise the remaining tableaux and call this \(T'\).

-

3.

Add a box labelled n to \(T'\), with probability \(\frac{1}{n}\frac{\dim ({{\mathrm{shape}}}(T'\cup n))}{\dim ({{\mathrm{shape}}}T')}\). (As for partitions, one can pick where to add this box using the complimentary hook walk of [38].)

Here are a few steps of a possible trajectory in degree 5 (the red marks the unbumping paths):

The transition matrix of this chain in degree 3 is

According to Proposition 3.3, the unique stationary distribution of the down–up chain on tableau is \(\pi (T)=\frac{1}{n!}\eta (T)\times \)coefficient of T in  . Note that there is a unique way of adding outer boxes filled with \(1,2,\ldots \) in succession to build a given tableau T, so each tableau of n boxes appears precisely once in the product

. Note that there is a unique way of adding outer boxes filled with \(1,2,\ldots \) in succession to build a given tableau T, so each tableau of n boxes appears precisely once in the product  . Hence \(\pi (T)=\frac{1}{n!}\eta (T)=\frac{1}{n!}\dim ({{\mathrm{shape}}}T)\).

. Hence \(\pi (T)=\frac{1}{n!}\eta (T)=\frac{1}{n!}\dim ({{\mathrm{shape}}}T)\).

As explained at the beginning of this subsection, the symmetric functions \(\varLambda \) is a quotient of \(\mathbf {FSym}\) [57, Th. 4.3.i], and the quotient map sends \(\mathbf {S}_{T}\) to \(s_{{{\mathrm{shape}}}(T)}\). Applying Theorem 3.6 then gives:

Theorem 3.7

The down–up Markov chain on standard tableaux lumps to the down–up Markov chain on partitions via taking the shape. \(\square \)

By the same argument, the P-restriction-then-induction chains on partitions, for any probability distribution P, lift to \(m\circ \varDelta _{P}\) chains on tableaux, but these are hard to describe.

3.4 Subalgebras and a Lift to Permutations

The following theorem is a specialisation of Theorem 2.16, about the weak lumping of Markov chains from linear maps, to the case of descent operator chains.

Theorem 3.8