Abstract

We consider a method of obtaining non-closed solutions of the first and second Kolmogorov equations for the exponential (double) generating function of transition probabilities for quadratic death-processes of one, two and three dimensions. We obtain a representation for the generating function of transition probabilities in the form of a Fourier series, using generalized hypergeometric functions and Jacobi polynomials.

1 Introduction

An analytical method for the study of Markov processes with a finite or countable set of states is based on the first (backward) and the second (forward) Kolmogorov systems of differential equations for the transition probabilities [1, 10]. There are few cases in which an explicit solution can be found: solutions are known for the simple death-process, the pure birth-process, birth and death processes of linear or Poisson type (see the survey [12], Chap. 2, § 2.1.1), branching processes ([22], Chap. 1, § 8), and some of their modifications.

Different methods have been considered for the simple death-process equations; for instance, operational calculus has been used in [4, 10]. The expressions for the transition probabilities are cumbersome [10] and of little use for the study of asymptotic properties of the random process.

Under special conditions on the Markov process, the second system of differential equations can be combined into a partial differential equation for the generating function of the transition probabilities [12]. In the case of a first-order equation, we have the Markov branching process [2, 22].

The study of Markov death-processes of quadratic type, which lead to an equation of the second order, was begun in [19]; see also [5, 6]. The method of separation of variables was applied to the second Kolmogorov equation, and an expansion for the function of transition probabilities into a Fourier series with two separated variables was obtained, in which the eigenfunctions are the Gegenbauer polynomials [19].

By the same method, Letessier and Valent (see [16, 24], the survey [17], etc.) found solutions for equations of the second, third and fourth orders in the form of Fourier series of special functions. In [16, 17], spectra and eigenfunctions were obtained for some birth and death processes of quadratic, cubic and quartic types. For the second Kolmogorov equation, the authors build series with increasingly complicated functions, where the equation for the eigenfunction belongs to the class of hypergeometric equations (the Fuchs equation of the second order with three singular points) or is a generalized hypergeometric equation.

In [24], for birth and death processes of quadratic type, the second equation is solved in the form of a Fourier series whose eigenvalues are expressed through an elliptic integral, and the equation for the eigenfunction belongs to the class of Heun equations (the Fuchs equation of the second order with four singular points, see [8], Chap. 15, § 3).

The numerical coefficients in [16, 19, 24], etc., were expressed by integral formulas, which are standard in the theory of Fourier series, and remained uncalculated in many cases. It is unclear whether such series for the transition probabilities allow any statements about the asymptotic properties of the Markov processes under consideration. The construction of non-closed solutions of the Kolmogorov equations is connected with the problem of finding the spectrum of these equations [15, 17]. The examples of solutions given in [16, 17, 19, 24], etc., have a discrete spectrum; constructing explicit solutions in the case of a continuous spectrum is more challenging [1, 15].

In the present paper, the development of the method of separation of variables for the Kolmogorov equations is based on the introduction of an exponential generating function for the transition probabilities [12], which allows the first system of differential equations to be combined into a partial differential equation. Applied to the first and second equations simultaneously, the Fourier method gives a series with three separated variables, and the coefficients are calculated by comparison with an expansion of the exponential known in the theory of special functions.

We give examples of the method applied to the equations of the quadratic death-process on N, \(N^2\) and \(N^3\). The resulting series involve generalized hypergeometric functions and Jacobi polynomials. In the last part of the paper, we discuss a possible transition from the non-closed solution of the first and second equations to a solution in integral form.

2 Generalized Markov Death-Process of Quadratic Type

We consider a time-homogeneous Markov process

on the set of states

with transition probabilities

Let us suppose that the transition probabilities have the following form as \(t\rightarrow 0+\) (\(\lambda \ge 0\), \(\mu \ge 0\))

where \(p_0\ge 0\), \(p_1\ge 0\), \(p_0+p_1=1\).

Let us introduce the generating functions of the transition probabilities (\(|s|\le 1\))

The second system of Kolmogorov differential equations for the transition probabilities of the process \(\xi _t\) is equivalent to the partial differential equation [12]

with initial condition \(F_{i}(0;s)=s^i\).

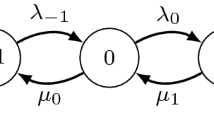

Possible jumps of the stochastic process \(\xi _t\) are shown in Fig. 1. The Markov process stays at the initial state i during a random time \(\tau _i\) with distribution \({\mathbb {P}}\{\tau _i\le t\}=1-e^{-(i(i-1)\lambda +i\mu )t}\). Then the process passes into the state \(i-1\) with the probability \((p_1i(i-1)\lambda +i\mu )/(i(i-1)\lambda +i\mu )\) or into the state \(i-2\) with the probability \(p_0i(i-1)\lambda /(i(i-1)\lambda +i\mu )\), and so on. The state 0 is absorbing. The Markov process \(\xi _t\) is interpreted as a model of a bimolecular chemical reaction with the kinetic scheme \(2T\rightarrow 0,T\); \(T\rightarrow 0\) [5, 12, 19].
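The jump mechanism just described can be illustrated by a short simulation. The following is only a sketch under stated assumptions: the function names `jump_law` and `simulate_path` and all parameter values are hypothetical and chosen for illustration; only the exit rate \(i(i-1)\lambda +i\mu \) and the two jump probabilities are taken from the text.

```python
import random

def jump_law(i, lam, mu, p0):
    """Exit rate of state i and the probabilities of the jumps i -> i-1 and i -> i-2."""
    p1 = 1.0 - p0
    pair_rate = i * (i - 1) * lam      # quadratic part i(i-1)*lambda
    rate = pair_rate + i * mu          # total exit rate i(i-1)*lambda + i*mu
    if rate == 0.0:
        return 0.0, 0.0, 0.0           # no exit possible: the state is absorbing
    to_i_minus_1 = (p1 * pair_rate + i * mu) / rate
    to_i_minus_2 = p0 * pair_rate / rate
    return rate, to_i_minus_1, to_i_minus_2

def simulate_path(i0, lam, mu, p0, rng):
    """Return the list of (time, state) pairs of one trajectory until absorption."""
    t, i = 0.0, i0
    path = [(t, i)]
    while True:
        rate, q1, _q2 = jump_law(i, lam, mu, p0)
        if rate == 0.0:
            return path
        t += rng.expovariate(rate)     # exponential holding time tau_i
        i -= 1 if rng.random() < q1 else 2
        path.append((t, i))

# Illustrative parameters (assumptions, not values from the paper).
path = simulate_path(10, lam=1.0, mu=0.5, p0=0.3, rng=random.Random(0))
```

For \(i=1\) the quadratic part vanishes, so the jump to \(i-2\) has probability zero and the trajectory cannot leave the state space.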

Let \(\mathcal{F}(t;z;s)\) be the exponential (double) generating function

The function \(\mathcal{F}(t;z;s)\) is analytic in the domain \(|z|<\infty \), \(|s|<1\).

The first (backward) and the second (forward) systems of Kolmogorov differential equations for the transition probabilities for the considered Markov process have the form [12]

with initial condition \(\mathcal{F}(0;z;s)=e^{zs}\). The second-order linear partial differential equations (4), (5) are solved by the method of separation of variables.

Furthermore, we need some special functions (see [7, 8, 14, 21], etc). The confluent hypergeometric function is defined by the series (\(b\ne 0,-\,1,-\,2,\ldots \))

and it satisfies the confluent hypergeometric equation

The function (6) is analytic on the complex plane; at some values of the parameters, it can be expressed by the modified Bessel function ([21], Formula 7.11.1.5)

where \(\Gamma (a)\) is the gamma function.

The Jacobi polynomial of n-th order is defined by the formula ([7], § 10.8)

\(n=0,1,\ldots ,\) and it is the unique polynomial solution of the differential (hypergeometric) equation

The following expansion of the exponential is needed in the sequel ([7], § 10.20, Formula (4))

Theorem 1

For the Markov process \(\xi (t)\) on the set of states N under conditions (1) the double generating function of transition probabilities is as follows \((\lambda >0\), \(\mu \ge 0)\)

where \(\Gamma (a)\) is the gamma function, \({}_{1}F_1(a,b;z)\) is the confluent hypergeometric function, \(P_n^{(-1,\beta )}(x)\) are the Jacobi polynomials.

Proof

We look for the solution of the system of Eqs. (4), (5) in the form of a series with three separated variables (\(|s|<1\))

After substitution (11) into (4) and (5), we get the following equations for the functions \(\widetilde{C}_n(z)\) and \(C_n(s)\):

The differential equations (2) and (13) were investigated in [19] under the conditions \(\mu =0\), and \(p_0=0\) or \(p_0=1\). Following [19], we can establish that Eq. (13) acquires the additional boundary condition ‘\(C_n(s)\) is a polynomial’. Then the sequence of ‘eigenvalues’ is \(\lambda _n=n(n-1)\lambda +n\mu \), \(n=0,1,\ldots \) (cf. [14], Vol. II, Chap. 3, § 9.7). Substituting \(x=(2s-1+p_0)/(1+p_0)\) in (13) and denoting \(C_n(s)=y(x)\), we obtain the following equation of type (8),

Consequently, each \(\lambda _n\) has the corresponding ‘eigenfunction’

where

The Eq. (12) takes the form

which is a reduced form of the confluent hypergeometric equation (7) ([14], cf. 2.273(6) at \(a=-p_1\), \(b=\mu /\lambda \), \(\alpha =-p_0\), \(\beta =-\mu /\lambda \), \(\gamma =-n(n-1)-n\mu /\lambda \) ). By the properties of the generating function, we look for a solution that is analytic for all \(z\); following [14], we get

where \({}_1F_1(a;b;z)\) is the confluent hypergeometric function.

Hence, the sought series (11) is

The values \(A_n\) are obtained by comparing the initial condition \(\mathcal{F}(0;z;s)=e^{zs}\) with the expansion of the exponential [see (9)]

We get

and the formula (10). Convergence of the series (10) for all z, s and \(t\in [0,\infty )\) follows from the convergence of expansion (15). \(\square \)
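The ‘eigenvalues’ \(\lambda _n=n(n-1)\lambda +n\mu \) used in the proof coincide with the exit rates of the process, because the generator of a death-process is lower triangular. The sketch below checks this numerically on a small state space. It is not the method of the paper: the matrix exponential is computed by a plain scaling-and-squaring Taylor scheme, and the helper names `generator`, `matmul`, `expm` and the parameter values are assumptions made for illustration.

```python
import math

def generator(n_max, lam, mu, p0):
    """Generator Q on states 0..n_max; lower triangular, so the restriction is exact."""
    p1 = 1.0 - p0
    q = [[0.0] * (n_max + 1) for _ in range(n_max + 1)]
    for i in range(1, n_max + 1):
        pair = i * (i - 1) * lam
        q[i][i] = -(pair + i * mu)            # minus the exit rate of state i
        q[i][i - 1] += p1 * pair + i * mu     # jump i -> i-1
        if i >= 2:
            q[i][i - 2] += p0 * pair          # jump i -> i-2
    return q

def matmul(a, b):
    """Product of two square matrices stored as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def expm(q, t, squarings=8, terms=18):
    """exp(Q t) by scaling and squaring with a truncated Taylor series."""
    n = len(q)
    h = t / 2 ** squarings
    a = [[q[i][j] * h for j in range(n)] for i in range(n)]
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms + 1):
        term = [[x / k for x in row] for row in matmul(term, a)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    for _ in range(squarings):
        result = matmul(result, result)
    return result

# Illustrative parameters (assumptions, not values from the paper).
lam, mu, p0, t = 1.0, 0.5, 0.3, 0.3
P = expm(generator(4, lam, mu, p0), t)
eigenvalues = [n * (n - 1) * lam + n * mu for n in range(5)]
# The diagonal entries P[n][n] should agree with exp(-eigenvalues[n] * t).
```

The diagonal of the exponential of a triangular matrix is the exponential of its diagonal, which is why \(P_{nn}(t)=e^{-\lambda _n t}\) here.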

If \(t=0\), then the formula (10) is the expansion of the exponential \(e^{zs}\) into a series. If \(\mu >0\), \(p_0=1\), then we have the expansion into a series of Jacobi polynomials. If \(\mu =0\), \(p_0=1\), then we have the Sonine expansion ([7], § 7.10.1, Formula (5)).

By (6) we get \({}_1F_1(\mu /\lambda ;\mu /\lambda ;z)=e^z=1+z+z^2/2+\cdots ,\) \({}_1F_1(1+\mu /\lambda ;2+\mu /\lambda ;z)=1+ ((1+\mu /\lambda )/(2+\mu /\lambda ))z+\cdots ,\) \({}_1F_1(2+\mu /\lambda ;4+\mu /\lambda ;z)=1+\cdots ;\) by (14) we get \(P_0^{(-1,\mu /\lambda -1)}(x)=1\), \(P_1^{(-1,\mu /\lambda -1)}(x)=(\mu /(2\lambda ))(x-1)\), \(P_2^{(-1,\mu /\lambda -1)}(x)= ((1+\mu /\lambda )/8)[(2+\mu /\lambda )x^2- (2\mu /\lambda )x-2+\mu /\lambda ]\). Substituting these expressions into (10) and equating the coefficients of the monomials \(1,z,zs,z^2,z^2s,z^2s^2\) with those in (3), we obtain the transition probabilities

These formulas for \(P_{ij}(t)\) may also be obtained by direct solution of the system of Kolmogorov differential equations [10] for the generalized death-process under consideration.
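The low-order expressions quoted above can be checked numerically. The sketch below assumes the standard power series of \({}_1F_1\) and the standard finite-sum representation of the Jacobi polynomials in powers of \((x-1)/2\) and \((x+1)/2\), which remains well defined for the parameter \(-1\); the function names and the test values of \(\mu /\lambda \) and \(x\) are hypothetical.

```python
from math import factorial

def poch(a, k):
    """Pochhammer symbol (a)_k = a (a+1) ... (a+k-1)."""
    out = 1.0
    for j in range(k):
        out *= a + j
    return out

def hyp1f1_coeffs(a, b, n_terms):
    """Taylor coefficients (a)_k / ((b)_k k!) of 1F1(a; b; z)."""
    return [poch(a, k) / (poch(b, k) * factorial(k)) for k in range(n_terms)]

def gbinom(a, k):
    """Generalized binomial coefficient a (a-1) ... (a-k+1) / k! for real a."""
    out = 1.0
    for j in range(k):
        out *= (a - j) / (j + 1)
    return out

def jacobi(n, alpha, beta, x):
    """P_n^{(alpha,beta)}(x) as a finite sum in (x-1)/2 and (x+1)/2."""
    return sum(
        gbinom(n + alpha, n - s) * gbinom(n + beta, s)
        * ((x - 1) / 2) ** s * ((x + 1) / 2) ** (n - s)
        for s in range(n + 1)
    )

# Illustrative values (assumptions): m stands for mu/lambda.
m, x = 0.7, 0.4
p1_closed = (m / 2) * (x - 1)
p2_closed = ((1 + m) / 8) * ((2 + m) * x ** 2 - 2 * m * x - 2 + m)
p1_sum = jacobi(1, -1, m - 1, x)
p2_sum = jacobi(2, -1, m - 1, x)
c_equal = hyp1f1_coeffs(m, m, 3)          # coefficients of e^z
c_shift = hyp1f1_coeffs(1 + m, 2 + m, 2)  # 1, (1+m)/(2+m)
```

Comparing `p1_sum` with `p1_closed`, and `p2_sum` with `p2_closed`, reproduces the two polynomial expressions stated in the text for this choice of \(\mu /\lambda \) and \(x\).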

3 Quadratic Death-Process of Second Dimension

We consider a time-homogeneous Markov process

on the set of states

with transition probabilities

of the following form as \(t\rightarrow 0+\) (\(\lambda >0\))

where \(p_{00}\ge 0\), \(p_{10}\ge 0\), \(p_{01}\ge 0\), \(p_{00}+p_{10}+p_{01}=1\). Using the generating function (\(|s_1|\le 1,|s_2|\le 1\))

we can reduce the second system of differential equations for the transition probabilities of the Markov process \((\xi _1(t),\xi _2(t))\) to the partial differential equation [12]

with initial condition \(F_{(\alpha _1,\alpha _2)}(0;s_1,s_2)=s_1^{\alpha _1}s_2^{\alpha _2}\).

In Fig. 2 we show an example of a realization of the process \((\xi _1(t),\xi _2(t))\). The Markov process stays in its initial state \((\alpha _1,\alpha _2)\) for a random time \(\tau _{(\alpha _1,\alpha _2)}\) with \({\mathbb {P}}\{\tau _{(\alpha _1,\alpha _2)}\le t\}=1-e^{-\alpha _1\alpha _2\lambda t}\). Then, with the probability \(p_{10}\), the process passes to the state \((\alpha _1,\alpha _2-1)\), with the probability \(p_{00}\) the process passes to the state \((\alpha _1-1,\alpha _2-1)\) or with the probability \(p_{01}\) the process passes to the state \((\alpha _1-1,\alpha _2)\). The further evolution of the process is similar. The states \(\{(\gamma _1,0),\ (0,\gamma _2),\ \gamma _1,\gamma _2=0,1,2,\ldots \}\) are absorbing. The ‘embedded Markov chain’ of the process \((\xi _1(t),\xi _2(t))\) is a random walk on \(N^2\).
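A realization of the two-dimensional process of the kind described above can be generated as follows. This is a minimal sketch: the function name `simulate_2d`, the seed and the parameter values are assumptions made for illustration, while the holding-time rate \(\alpha _1\alpha _2\lambda \) and the three jump directions follow the description in the text.

```python
import random

def simulate_2d(a1, a2, lam, p00, p10, p01, rng):
    """One trajectory of (xi_1, xi_2) from (a1, a2) until an absorbing state is hit."""
    if abs(p00 + p10 + p01 - 1.0) > 1e-12:
        raise ValueError("p00 + p10 + p01 must equal 1")
    t = 0.0
    path = [(t, a1, a2)]
    while a1 > 0 and a2 > 0:
        t += rng.expovariate(a1 * a2 * lam)   # holding time with rate a1*a2*lambda
        u = rng.random()
        if u < p10:
            a2 -= 1                           # (a1, a2) -> (a1, a2 - 1)
        elif u < p10 + p00:
            a1, a2 = a1 - 1, a2 - 1           # (a1, a2) -> (a1 - 1, a2 - 1)
        else:
            a1 -= 1                           # (a1, a2) -> (a1 - 1, a2)
        path.append((t, a1, a2))
    return path

# Illustrative parameters (assumptions, not values from the paper).
path2 = simulate_2d(6, 4, lam=1.0, p00=0.5, p10=0.2, p01=0.3,
                    rng=random.Random(1))
```

The loop stops as soon as one coordinate reaches zero, which corresponds to the absorbing states \((\gamma _1,0)\) and \((0,\gamma _2)\).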

The Markov process \((\xi _1(t),\xi _2(t))\) is a model of a population with male individuals and female individuals [11]. The state \((\alpha _1,\alpha _2)\) is interpreted as the existence of a group of \(\alpha _1\) particles of type \(T_1\) and \(\alpha _2\) particles of type \(T_2\); at a random time there is an interaction of a pair of particles, which are transformed into new groups of particles. The main assumptions of the model are as follows: any pair of individuals \(T_1+T_2\) in the population generates descendants independently of the others; the frequency of acts of generation of new individuals is proportional both to the number of individuals of type \(T_1\) and to the number of individuals of type \(T_2\).

Using the exponential generating function

we can reduce the first and the second system of differential equations for the transition probabilities of the Markov process to the form of partial differential equations [12]

with initial condition \(\mathcal{F}(0;z_1,z_2;s_1,s_2)=e^{z_1s_1+z_2s_2}\).

The generalized hypergeometric function is defined by the series

\({}_{0}F_1(b;z)\) is a solution of the equation

The function (19) can be expressed by the modified Bessel function ([21], Formula 7.13.1.1),

Theorem 2

Let a Markov process on the state space \(N^2\) be given by the densities of transition probabilities (16). The double generating function of the transition probabilities is \((p_{10}<1,p_{01}<1)\)

where \({}_0F_1(b;z)\) is the generalized hypergeometric function, \(P_n^{(-1,\beta )}(x)\) are the Jacobi polynomials; if \(\alpha _1\ge \alpha _2\), then \(s_\sigma =s_1\), \(p_{\sigma }=p_{01}\); if \(\alpha _1<\alpha _2\), then \(s_\sigma =s_2\), \(p_{\sigma }=p_{10}\); if \(\alpha _1=0\), \(\alpha _2=0\), then the expression \({(\alpha _1+\alpha _2)/\max (\alpha _1,\alpha _2)}\) is taken to be equal to 1.

Proof

Consider the partial differential equations (17), (18). We look for the solution in the form of a series (\(|s_1|<1\), \(|s_2|<1\))

Substituting (21) in (17) and (18), we get the equations for the functions \(\widetilde{C}_{\alpha _1\alpha _2}(z_1,z_2)\) and \(C_{\alpha _1\alpha _2}(s_1,s_2)\):

From the assumptions on the jumps of the process \(\xi (t)\), it follows that Eq. (23) carries the boundary condition ‘\(C_{\alpha _1\alpha _2}(s_1,s_2)\) is a polynomial’. Then the sequence of ‘eigenvalues’ is \(\lambda _{\alpha _1\alpha _2}=\alpha _1\alpha _2\lambda \), \(\alpha _1,\alpha _2=0,1,\ldots ,\) and from (23) it is not difficult to obtain the corresponding ‘eigenfunction’

where \(P_n^{(-1,\beta )}(x)\) are Jacobi polynomials; \(s_\sigma =s_1\), \(p_{\sigma }=p_{01}\), if \(\alpha _1\ge \alpha _2\) and \(s_\sigma =s_2\), \(p_{\sigma }=p_{10}\), if \(\alpha _1<\alpha _2\).

Hence, the Eq. (22) has the form

By the definition of the function \(\mathcal{F}(t;z_1,z_2;s_1,s_2)\), it follows that the solution must be analytic for all \(z_1,z_2\), hence

where \({}_0F_1(b;z)\) is the generalized hypergeometric function.

To find the values \(A_{\alpha _1\alpha _2}\), let us consider the expansion of the exponential \(e^{z_1s_1+z_2s_2}\). From the series (19) for the generalized hypergeometric function, we obtain the equality

The special functions under consideration satisfy the formulas ([21], Formula 6.8.3.13):

\(k=1,2,\ldots \) Using (24), (25) and (26), we get the sequence of equalities

Comparing the series (21) at \(t=0\) with the expansion of the exponential (27), we obtain

we have the solution (20) of the system (17), (18). Absolute convergence of the series (20) for all \(z_1\), \(z_2\), \(s_1\), \(s_2\) and \(t\in [0,\infty )\) follows from (27). \(\square \)

Now we give formulas for \(P_{(\beta _1,\beta _2)}^{(\alpha _1,\alpha _2)}(t)\) for particular values of \(\alpha _1,\alpha _2,\beta _1,\beta _2\). Using (19), we have \({}_0F_1(1;z)=1+z+\cdots ,\) \({}_0F_1(2;z)=1+z/2+\cdots ,\) \({}_0F_1(3;z)=1+\cdots ,\) \({}_0F_1(4;z)=1+\cdots ;\) by (14) we obtain \(P_0^{(-1,0)}(x)=1\), \(P_0^{(-1,1)}(x)=1\), \(P_1^{(-1,0)}(x)=(1/2)(x-1)\), \(P_1^{(-1,1)}(x)=x-1\). Substituting these into (20), using the expansion \(e^{p_{01}z_1+p_{10}z_2}=1+p_{01}z_1+p_{10}z_2+p_{01}^2z_1^2/2+ p_{01}p_{10}z_1z_2+p_{10}^2z_2^2/2+\cdots ,\) and equating the coefficients of the corresponding monomials \(1,z_1,z_1s_1,z_2,z_2s_2,\ldots ,z_1z_2^2s_2^2,z_1z_2^2s_1s_2^2\) in the resulting series and in the double generating function of the transition probabilities, we have

4 Probabilistic Model of Bimolecular Reaction

We consider a time-homogeneous Markov process

on the set of states

Let us suppose that the transition probabilities

have the following form as \(t\rightarrow 0+\) (\(\lambda >0\))

The second equation for the generating function of the transition probabilities (\(|s_1|\le 1,|s_2|\le 1,|s_3|\le 1\))

is

with initial condition \(F_{(\alpha _1,\alpha _2,\alpha _3)}(0;s_1,s_2,s_3)=s_1^{\alpha _1}s_2^{\alpha _2}s_3^{\alpha _3}\).

The process stays in the state \((\alpha _1,\alpha _2,\alpha _3)\) for a random time \(\tau _{(\alpha _1,\alpha _2,\alpha _3)}\), \({\mathbb {P}}\{\tau _{(\alpha _1,\alpha _2,\alpha _3)}\le t\}= 1-e^{-\alpha _1\alpha _2\lambda t}\), and then it passes to the state \((\alpha _1-1,\alpha _2-1,\alpha _3+1)\). Realization of a process \((\xi _1(t),\xi _2(t),\xi _3(t))\) with initial state \((\alpha _1,\alpha _2,0)\), is shown in the Fig. 3. If \(\alpha _1\ge \alpha _2\), then the process stops at the absorbing state \((\alpha _1-\alpha _2,0,\alpha _2)\), and if \(\alpha _2\ge \alpha _1\), then it stops at \((0,\alpha _2-\alpha _1,\alpha _1)\).
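Since every jump of this process is \((\alpha _1,\alpha _2,\alpha _3)\rightarrow (\alpha _1-1,\alpha _2-1,\alpha _3+1)\), the sequence of visited states is deterministic and only the holding times are random. The sketch below lists the visited states and computes the mean time to absorption as the sum of the mean holding times \(1/(\alpha _1\alpha _2\lambda )\). The function names are hypothetical, and the mean-time formula is an elementary consequence of the exponential holding times rather than a result quoted from the paper.

```python
def reaction_path(a1, a2, a3=0):
    """States visited by the reaction T1 + T2 -> T3 until one reagent is exhausted."""
    states = [(a1, a2, a3)]
    while a1 > 0 and a2 > 0:
        a1, a2, a3 = a1 - 1, a2 - 1, a3 + 1
        states.append((a1, a2, a3))
    return states

def mean_absorption_time(a1, a2, lam):
    """Sum of mean holding times 1 / (lam * (a1 - k) * (a2 - k)) over all jumps."""
    return sum(1.0 / (lam * (a1 - k) * (a2 - k)) for k in range(min(a1, a2)))

states = reaction_path(5, 3)               # ends at (a1 - a2, 0, a2) = (2, 0, 3)
mean_t = mean_absorption_time(2, 2, 1.0)   # 1/4 + 1/1
```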

The Markov process \((\xi _1(t),\xi _2(t),\xi _3(t))\) is a model of the chemical reaction \(T_1+T_2\rightarrow T_3\) [19]. The state \((\alpha _1,\alpha _2,\alpha _3)\) of the process is interpreted as the existence of \(\alpha _1\) elements of type \(T_1\), \(\alpha _2\) elements of type \(T_2\) and \(\alpha _3\) elements of type \(T_3\); at random time moments a pair of elements \(T_1+T_2\) is transformed into an element \(T_3\). In [19] the connection between the second Eq. (29) and the law of mass action known in formal kinetics is discussed, see also [20]. In [1], an expression for the transition probabilities of the process is obtained by the method of Laplace transforms; it is, however, not convenient to use.

For the exponential generating function

we can write the first and the second system of the Kolmogorov differential equations in the following form [12]

with initial condition \(\mathcal{F}(0;z_1,z_2;s_1,s_2,s_3)=e^{z_1s_1+z_2s_2}\).

Theorem 3

Let a Markov process on the state space \(N^3\) be given by the densities of transition probabilities (28). The double generating function of the transition probabilities is

where \({}_0F_1(b;z)\) is the generalized hypergeometric function, \(P_n^{(-1,\beta )}(x)\) are the Jacobi polynomials; if \(\alpha _1\ge \alpha _2\), then \(s_\sigma =s_1\); if \(\alpha _1<\alpha _2\), then \(s_\sigma =s_2\); if \(\alpha _1=0\), \(\alpha _2=0\), then the expression \({(\alpha _1+\alpha _2)/\max (\alpha _1,\alpha _2)}\) is taken to be equal to 1.

The proof of Theorem 3 is similar to that of Theorem 2: the system of Eqs. (30), (31) is solved in the same way by the method of separation of variables. In particular, putting \(p_{10}=p_{01}=0\) (that is, \(p_{00}=1\)) in (20) and \(s_3=1\) in (32), we obtain coinciding formulas.

5 Simple Death-Process. Nonlinear Branching Property of the Transition Probabilities for the Death-Process of the Linear Type

We consider Markov process

on the state space

transition probabilities \(P_{ij}(t)\), \(i,j\in N\), can be expressed in the following form [10], as \(t\rightarrow 0+\),

where \(\varphi _0=0\), \(\varphi _i>0\) at \(i=1,2,\ldots \)

Stages for death-process \(\xi _t\) are shown in Fig. 4. The process stays at the initial state i during a random time \(\tau _i\) with distribution \({\mathbb {P}}\{\tau _i\le t\}=1-e^{-\varphi _i t}\). At the time \(\tau _i\) the process passes to the state \(i-1\); and so on.

Using the double generating function (\(|s|\le 1\)),

we may reduce the first and the second systems of differential equations for transition probabilities to [12]

with initial condition \(\mathcal{F}(0;z;s)=e(zs)\). Here we use the Gel’fond–Leont’ev operator [9] of generalized differentiation

defined for functions analytic in a neighborhood of zero. The function

is an eigenfunction of the operator \(D_z\),

Theorem 4

[12] Let a Markov death-process on the state space N be given by the densities of transition probabilities (33), \(\varphi _{i+1}>\varphi _i\), \(i\in N\), and \(\lim _{i\rightarrow \infty }\varphi _i=\infty \). The double generating function of the transition probabilities may be expressed as the Fourier series

where

The series (37) is absolutely convergent for all z, \(|s|<1\) and \(t\in [0,\infty )\).

Proof

The following expressions for the transition probabilities of the simple death-process are known [10]: \(P_{0j}(t)=\delta _j^0,\ j\in N;\ P_{ij}(t)=0\) at \(j>i\ge 1;\) at \(j\le i\)

From the definition (34) of the double generating function and (38), we get

Convergence for the series \(\mathcal{F}(t;z;s)\) follows from the inequality

for all z and \(|s|<1\). \(\square \)
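For distinct rates \(\varphi _i\), the transition probabilities of a pure death-process admit a well-known partial-fraction form, which the sketch below evaluates. This is an assumption-laden illustration: the formula coded here is the standard textbook one and is not copied from the display (38), whose exact form is not reproduced in this text; the function name `death_transition_prob` and the rate functions are hypothetical. The linear case \(\varphi _i=i\mu \) is used as a check, since there \(P_{ij}(t)\) is a binomial probability.

```python
from math import comb, exp

def death_transition_prob(i, j, t, phi):
    """P_ij(t) for a pure death process with distinct rates phi(k), phi(0) = 0."""
    if j > i:
        return 0.0
    rates = [phi(k) for k in range(j, i + 1)]   # phi_j, ..., phi_i
    prefactor = 1.0
    for r in rates[1:]:                         # phi_{j+1} * ... * phi_i
        prefactor *= r
    total = 0.0
    for k, rk in enumerate(rates):
        denom = 1.0
        for l, rl in enumerate(rates):
            if l != k:
                denom *= rl - rk                # requires pairwise distinct rates
        total += exp(-rk * t) / denom
    return prefactor * total

# Illustrative parameters (assumptions, not values from the paper).
mu, lam, t = 0.8, 1.0, 0.6
linear = lambda k: k * mu                        # death-process of linear type
quadratic = lambda k: k * (k - 1) * lam + k * mu # rates of the process of Sect. 2
row = [death_transition_prob(4, j, t, quadratic) for j in range(5)]
binomial = [comb(5, j) * exp(-j * mu * t) * (1 - exp(-mu * t)) ** (5 - j)
            for j in range(6)]
```

With the rates `quadratic` this applies only when each jump is \(i\rightarrow i-1\), that is, for \(p_0=0\) in the notation of Sect. 2.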

Thus, the solution (37) of the system of Kolmogorov equations (35), (36) is a series with three separated variables. For \(t=0\) we get an expansion of the function

the functions \(\widetilde{C}_n(z)\) and \(C_n(s)\) are connected by an integral transformation.

An important particular case is \(\varphi _{i}=i\mu \), \(i\in N\) (\(\mu >0\)): this is the death-process of linear type. Here \(D_{z}=\mu \,(d/dz)\) and the generating function of transition probabilities \(F_i(t;s)\) satisfies the equation [1, 2, 22] (see Eq. (2) at \(\lambda =0\))

with initial condition \(F_i(0;s)=s^i\). For the process of linear type, it is easy to sum the series (37), and for \(\mathcal{F}(t;z;s)\) we obtain

From the definition \(\mathcal{F}(t;z;s)=\sum ^\infty _{i=0}(z^i/(\mu ^i i!))F_{i}(t;s)\) and the expansion (40) with respect to z, equating coefficients of \(z^i\), we obtain the branching property for the transition probabilities ([22], Chap. 1)

The straightforward solution of the linear first-order partial differential equation (39) by the method of characteristics also gives (41) (see, for instance, [1], § 3.2).

If the process has the nonlinear property (41) of the transition probabilities, then we can interpret it as a death process for particles: at the time moment \(t=0\) there are i identical particles, each of which exists during a random time \(\tau ^{(k)}\), \({\mathbb {P}}\{\tau ^{(k)}\le t\}=1-e^{-\mu t}\); the values \(\tau ^{(k)}\), \(k=1,\ldots ,i\), are independent (the death of one of them means the passage of the Markov process \(\xi _t\) from state i to \(i-1\), and so on).
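The particle interpretation can be checked numerically for the linear death-process. The sketch assumes two standard facts that are not displayed in this text: the generating function for i independent particles with exponential lifetimes is \((1-e^{-\mu t}+se^{-\mu t})^i\), and the second Kolmogorov equation for the linear death-process reads \(\partial F/\partial t=\mu (1-s)\,\partial F/\partial s\). The function names, the step size and the test point are hypothetical.

```python
from math import exp

def F(i, t, s, mu):
    """Generating function of the linear death process started from i particles."""
    survive = exp(-mu * t)               # P{tau > t} for a single particle
    return (1.0 - survive + s * survive) ** i

def pde_residual(i, t, s, mu, h=1e-5):
    """Central-difference residual of dF/dt - mu (1 - s) dF/ds."""
    dF_dt = (F(i, t + h, s, mu) - F(i, t - h, s, mu)) / (2 * h)
    dF_ds = (F(i, t, s + h, mu) - F(i, t, s - h, mu)) / (2 * h)
    return dF_dt - mu * (1.0 - s) * dF_ds

# Illustrative test point (assumption, not a value from the paper).
residual = pde_residual(4, 0.7, 0.3, 1.3)
branching_gap = F(3, 0.7, 0.3, 1.3) - F(1, 0.7, 0.3, 1.3) ** 3
```

A residual close to zero indicates that the product form satisfies the equation, and `branching_gap` compares \(F_3\) with \((F_1)^3\) at the same point.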

6 Concluding Remarks

Powerful analytic methods [2, 22] have been established for the investigation of Markov processes with the branching property. Therefore, for the simple death-process, one can formulate the problem of proving a nonlinear property of the transition probabilities. This problem generalizes the property (41), and it can be reduced to the analytic problem of summing the Fourier series (37) under some assumptions concerning the function \(\varphi _{i}=\varphi (i)\), \(i\in N\).

For the quadratic death-process with \(\varphi _{i}=i(i-1)\lambda \) (\(D_{z}=\lambda z\,(d^2/dz^2)\)), the series (37) (i.e., the series (10) with \(\lambda >0\), \(\mu =0\), \(p_1=1\)) is summed in [12]. Using Gegenbauer’s addition theorem ([7], § 7.6.1), a closed representation of the double generating function of transition probabilities \(\mathcal{F}(t;z;s)\) was obtained. For \(F_i(t;s)\), an integral representation was obtained which has a structure similar to (41).

For the generalized quadratic death-process (with \(\lambda >0\), \(\mu >0\)), it is possible to obtain a closed solution of the Kolmogorov equations (4), (5) by the methods of [12]. We consider the series (10) with the aim of summing it and deriving a nonlinear property for the transition probabilities

where \(X_t,Y_t\) are mutually connected stochastic processes.

For the two-dimensional quadratic death-process, we consider the series (20) with the aim of summing it and deriving a closed solution of the system (17), (18) in a form similar to the nonlinear property (41),

where \(X_t\), \(Y_t\), \(Z_t\), \(U_t\) are mutually connected stochastic processes.

The possibility of an integral representation in the form (42), (43) is discussed in detail in Chapter 5 of [12]. Formulas similar to (42), (43) are established for an epidemic process which is a Markov quadratic death-process on the set of states \(N^2\) [13] (see also [18]).

The above modification of the method of separation of variables, which is applicable to the first and the second Kolmogorov equations, may be useful for other Markov death-processes. For instance, we mention the simple death-process of polynomial type with \(\varphi _{i}=i(i-1)\ldots (i-k+1)\lambda \) (\(k=3,4,\ldots \)); the equations for the double generating function have the following form

with initial condition \(\mathcal{F}(0;z;s)=e^{zs}\).

In the same way, by solving the first and second equations for the transition probabilities of the pure birth-process and of generalized birth-processes, we get a series with three separated variables [23]. For instance, for an interesting application, the quadratic pure birth-process on \(N^2\) [1, 3], the equations for the double generating function have the following form

with initial condition \(\mathcal{F}(0;z_1,z_2;s_1,s_2)=e^{z_1s_1+z_2s_2}\).

The problem of developing the discussed method in the case of Markov birth- and death-processes is difficult.

References

[1] Anderson, W.J.: Continuous-Time Markov Chains: An Applications-Oriented Approach. Springer, New York (1991)

[2] Athreya, K.B., Ney, P.: Branching Processes. Springer, New York (1972)

[3] Becker, N.G.: Interactions between species: some comparisons between deterministic and stochastic models. Rocky Mt. J. Math. 3, 53–68 (1973)

[4] Chen, A., Li, J., Chen, Y., Zhou, D.: Extinction probability of interacting branching collision processes. Adv. Appl. Prob. 44, 226–259 (2012)

[5] Darvey, I.G., Ninham, B.W., Staff, P.J.: Stochastic models for second-order chemical reaction kinetics. Equilib. State. J. Chem. Phys. 45, 2145–2155 (1966)

[6] Daley, D.J., Gani, J.: A random allocation model for carrier-borne epidemics. J. Appl. Prob. 30, 751–765 (1993)

[7] Erdelyi, A. (ed.): Higher Transcendental Functions, vol. 2. McGraw-Hill, New York (1953)

[8] Erdelyi, A. (ed.): Higher Transcendental Functions, vol. 3. McGraw-Hill, New York (1955)

[9] Gel’fond, A.O., Leont’ev, A.F.: A generalization of Fourier series. Mat. Sb. 29, 477–500 (1951). (in Russian)

[10] Gikhman, I.I., Skorokhod, A.V.: Introduction to the Theory of Random Processes, 2nd edn. Dover Publ., New York (1996)

[11] Johnson, N.L., Kotz, S. (eds.): Two-sex problem. In: Encyclopaedia of Statistical Sciences, vol. 9. Wiley, New York (1988)

[12] Kalinkin, A.V.: Markov branching processes with interaction. Russ. Math. Surv. 57, 241–304 (2002)

[13] Kalinkin, A.V., Mastikhin, A.V.: A limit theorem for a Weiss epidemic process. J. Appl. Prob. 52, 247–257 (2015)

[14] Kamke, E.: Differentialgleichungen, Lösungsmethoden und Lösungen, 4th edn. Geest und Portig, Leipzig (1959)

[15] Ledermann, W., Reuter, G.E.H.: Spectral theory for the differential equations of simple birth and death processes. Philos. Trans. R. Soc. Lond. Ser. A 246, 321–369 (1954)

[16] Letessier, J., Valent, G.: Exact eigenfunctions and spectrum for several cubic and quartic birth and death processes. Phys. Lett. Ser. A 108, 245–247 (1985)

[17] Letessier, J., Valent, G.: Some exact solutions of the Kolmogorov boundary value problem. Approx. Theory Appl. 4, 97–117 (1988)

[18] Mastikhin, A.V.: Final distribution for Gani epidemic Markov process. Math. Notes 82, 787–797 (2007)

[19] McQuarrie, D.A., Jachimowski, C.J., Russell, M.E.: Kinetics of small systems. II. J. Chem. Phys. 40, 2914–2921 (1964)

[20] McQuarrie, D.A.: Stochastic approach to chemical kinetics. J. Appl. Prob. 4, 413–478 (1967)

[21] Prudnikov, A.P., Brychkov, Y.A., Marichev, O.I.: Integrals and Series. More Special Functions, vol. 3. Gordon and Breach, New York (1989)

[22] Sevast’yanov, B.A.: Vetvyaščiesya protsessy (Branching processes). Nauka, Moscow (1971) (in Russian) (Also available in German: Sewastjanow, B.A.: Verzweigungsprozesse. Akademie-Verlag, Berlin (1974))

[23] Turkina, L.V.: Solution of the Kolmogorov equations for Markov birth-processes of quadratic type. Graduation thesis, Bauman Moscow State Technical University, p 99 (2008) (in Russian)

[24] Valent, G.: An integral transform involving Heun functions and a related eigenvalue problem. SIAM J. Math. Anal. 17, 688–703 (1986)

Kalinkin, A.V., Mastikhin, A.V. On the Separating Variables Method for Markov Death-Process Equations. J Theor Probab 32, 163–182 (2019). https://doi.org/10.1007/s10959-017-0795-8

Keywords

- Markov death-process

- Transition probabilities

- Equations for exponential generating function

- Exact solutions

- Special functions

- Branching property