Abstract

Consider a multidimensional obliquely reflected Brownian motion in the positive orthant, or, more generally, in a convex polyhedral cone. We find sufficient conditions for existence of a stationary distribution and convergence to this distribution at an exponential rate, as time goes to infinity, complementing the results of Dupuis and Williams (Ann Probab 22(2):680–702, 1994) and Atar et al. (Ann Probab 29(2):979–1000, 2001). We also prove that certain exponential moments for this distribution are finite, thus providing a tail estimate for this distribution. Finally, we apply these results to systems of rank-based competing Brownian particles, introduced in Banner et al. (Ann Appl Probab 15(4):2296–2330, 2005).

1 Introduction

A multidimensional obliquely reflected Brownian motion in a convex polyhedron \(D \subseteq {\mathbb {R}}^d\) has been extensively studied in the past few decades. This is a stochastic process \(Z = (Z(t), t \ge 0)\) which takes values in D; in the interior of D, it behaves as a Brownian motion, and as it hits the boundary \(\partial D\), it is reflected inside D, but not necessarily normally. For every face \(D_i\) of the boundary \(\partial D\), there is a vector \(r_i\) on this face, pointing inside D, which governs the reflection. If \(r_i\) is the inward unit normal vector to the face \(D_i\), then this reflection is normal; otherwise, it is oblique. Special care should be taken for the reflection at the intersection of two or more faces. A formal definition is given in Sect. 2.

One particularly important case is the positive orthant \(D = {\mathbb {R}}^d_+\), where \({\mathbb {R}}_+ := [0, \infty )\). The concept of a semimartingale reflected Brownian motion (SRBM) in the orthant was introduced in [29, 30], as a diffusion limit for series of queues, when traffic intensity at each queue tends to one (the so-called heavy traffic limit). Later, it was applied in the theory of competing Brownian particles (systems of rank-based Brownian particles, when each particle has drift and diffusion coefficients depending on its current ranking relative to the other particles), see [3, 4]. The gap process (vector of gaps, or spacings, between adjacent particles) turns out to be an SRBM in the orthant.

We refer the reader to the comprehensive survey [57] about an SRBM in the orthant.

Reflected Brownian motion in a convex polyhedron was introduced in [56] using a submartingale problem, and in [15] in a semimartingale form: semimartingale reflected Brownian motion, or an SRBM. The paper [15] contains a sufficient condition for weak existence and uniqueness in law; it is stated in Sect. 2.

In this paper, we assume that the condition mentioned above holds; then an SRBM in a convex polyhedron D exists and is unique in the weak sense, and versions of this SRBM starting from different points \(x \in D\) form a Feller continuous strong Markov family.

Of particular interest is a stationary distribution for an SRBM in a convex polyhedron D: a probability distribution \(\pi \) on D such that if \(Z(0) \sim \pi \), then \(Z(t) \sim \pi \) for all \(t \ge 0\). This has been a focus of extensive research throughout the last four decades.
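To make the notion of a stationary distribution concrete, here is a minimal simulation sketch for the simplest possible case, which is not the setting of this paper: a one-dimensional reflected Brownian motion on \({\mathbb {R}}_+\) with drift \(\mu < 0\) and variance \(\sigma ^2\). In that case, the stationary distribution is known to be exponential with rate \(2|\mu |/\sigma ^2\). The function name `simulate_rbm_1d`, the step size, and the parameter values are illustrative assumptions; the discretized scheme slightly underestimates the mean, so only a rough agreement should be expected.

```python
import math
import random


def simulate_rbm_1d(mu, sigma, dt, n_steps, seed=0):
    """Return the time-average of a discretized reflected Brownian motion on [0, inf).

    Hypothetical helper for illustration: each step adds a Gaussian increment
    with drift mu*dt and standard deviation sigma*sqrt(dt), then reflects at 0.
    """
    rng = random.Random(seed)
    z = 0.0
    total = 0.0
    step_sd = sigma * math.sqrt(dt)
    for _ in range(n_steps):
        # Brownian increment with drift, followed by reflection at zero.
        z = max(z + mu * dt + step_sd * rng.gauss(0.0, 1.0), 0.0)
        total += z
    return total / n_steps


mu, sigma = -1.0, 1.0  # assumed example parameters
empirical_mean = simulate_rbm_1d(mu, sigma, dt=0.01, n_steps=200_000)
exact_mean = sigma ** 2 / (2.0 * abs(mu))  # mean of Exp(2|mu|/sigma^2)
print(empirical_mean, exact_mean)
```

The long-run time average approximates the mean of the stationary distribution, which is why it can be compared with \(\sigma ^2/(2|\mu |)\).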

For the orthant (and, more generally, for a convex polyhedron), a necessary and sufficient condition for existence of a stationary distribution is not known. However, there are fairly general sufficient conditions and necessary conditions for the orthant, see [11, 16, 24, 28]. For dimensions \(d = 2\) and \(d = 3\), a necessary and sufficient condition is actually known, see [32] and [27, Appendix A] for \(d = 2\), and [7, 12] for \(d = 3\). For a convex polyhedron (more specifically, a convex polyhedral cone), see [1, 2, 8] for sufficient conditions for existence of a stationary distribution. It was shown in [13, 16] that if a stationary distribution exists, then it is unique.

The exact form of this stationary distribution is known only in a few cases, the most important of which is the so-called skew-symmetry condition. Under this condition, the stationary distribution has a product-of-exponentials form, see [30, 31, 57]. Other known cases (sums of products of exponentials) are studied in [18, 26]. A necessary and sufficient condition for a probability distribution to be stationary is a certain integral equation, called the Basic Adjoint Relationship. However, it is not known how to solve this equation in the general case. Other properties of the stationary distribution were studied in [16].

We complement the results above by finding some new conditions for existence of a stationary distribution for an SRBM in the orthant and in a convex polyhedral cone. To this end, we find a Lyapunov function: this is a function \(V : D \rightarrow [1, \infty )\) such that for some constants \(k, b > 0\) and a set \(C \subseteq D\) which is “small” in a certain sense (we specify later what this means; for now, it is sufficient to take a compact C) the process

$$\begin{aligned} V(Z(t)) - V(Z(0)) + \int _0^t\left[ kV(Z(s)) - b1_C(Z(s))\right] {\mathrm {d}}s,\quad t \ge 0, \end{aligned}$$

is a supermartingale. This is a more general definition than is usually used (with the generator of an SRBM). Under some additional technical conditions (so-called irreducibility and aperiodicity, more on this later), if such a function V can be constructed, then there exists a unique stationary distribution \(\pi \), and the SRBM \(Z = (Z(t), t \ge 0)\) converges weakly to \(\pi \) as \(t \rightarrow \infty \); moreover, the convergence is exponentially fast in t. There is an extensive literature on Lyapunov functions and convergence, see [42–44] for discrete-time Markov chains and [19–21, 25, 45, 46] for continuous-time Markov processes. These methods were applied to an SRBM in the orthant in [9, 24] and to an SRBM in a convex polyhedral cone in [2, 8]. However, these articles construct a Lyapunov function indirectly. In this article, we come up with an explicit formula:

where Q is a \(d\times d\) symmetric matrix such that \(x'Qx > 0\) for \(x \in D\setminus \{0\}\), \(\lambda > 0\) is a certain constant (to be determined later), \(\varphi : {\mathbb {R}}_+ \rightarrow {\mathbb {R}}_+\) is a \(C^{\infty }\) function with

We can also conclude that \(\int _DV(x)\pi (\mathrm{d}x) < \infty \). This explicit form of the function V allows us to find tail estimates for the stationary distribution \(\pi \). Let us also mention the papers [14, 27], which study tail behavior of \(\pi \) in case \(d = 2\). A companion paper [51] studies Lyapunov functions for jump-diffusion processes in \({\mathbb {R}}^d\), as well as for reflected jump diffusions.

The paper is organized as follows. In Sect. 2, we introduce all necessary concepts and definitions, explain the connection between Lyapunov functions, existence of a stationary distribution, and exponential convergence. In Sect. 3, we state the main result and compare it with already known conditions for existence of a stationary distribution; then, we prove this main result. Section 4 is devoted to systems of competing Brownian particles.

1.1 Notation

We denote by \(I_k\) the \(k\times k\)-identity matrix, and by \(\mathbf {1}\) the vector \((1, \ldots , 1)'\) (the dimension is clear from the context). For a vector \(x = (x_1, \ldots , x_d)' \in {\mathbb {R}}^d\), let \(||x|| := \left( x_1^2 + \ldots + x_d^2\right) ^{1/2}\) be its Euclidean norm. The norm of a \(d\times d\)-matrix A is defined by

For any two vectors \(x, y \in {\mathbb {R}}^d\), their dot product is denoted by \(x\cdot y = x_1y_1 + \cdots + x_dy_d\). As mentioned before, we compare vectors x and y componentwise: \(x \le y\) if \(x_i \le y_i\) for all \(i = 1, \ldots , d\); \(x < y\) if \(x_i < y_i\) for all \(i = 1, \ldots , d\); similarly for \(x \ge y\) and \(x > y\). This includes infinite-dimensional vectors from \({\mathbb {R}}^{\infty }\). We compare matrices of the same size componentwise, too. For example, we write \(x \ge 0\) for \(x \in {\mathbb {R}}^d\) if \(x_i \ge 0\) for \(i = 1, \ldots , d\); \(C = (c_{ij})_{1 \le i, j \le d} \ge 0\) if \(c_{ij} \ge 0\) for all i, j.

Fix \(d \ge 1\), and let \(I \subseteq \{1, \ldots , d\}\) be a nonempty subset. Write its elements in the order of increase: \(I = \{i_1, \ldots , i_m\},\ \ 1 \le i_1 < i_2 < \ldots < i_m \le d\). For any \(x \in {\mathbb {R}}^d\), let \([x]_I := (x_{i_1}, \ldots , x_{i_m})'\). For any \(d\times d\)-matrix \(C = (c_{ij})_{1 \le i, j \le d}\), let \([C]_I := \left( c_{i_ki_l}\right) _{1 \le k, l \le m}\).
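As a small illustration of the notation \([x]_I\) and \([C]_I\), the following sketch extracts a subvector and the corresponding principal submatrix. The helper names `sub_vector` and `sub_matrix` are hypothetical, and indices are 1-based to match the text.

```python
def sub_vector(x, I):
    """Return [x]_I: the components of x with (1-based) indices in I, in increasing order."""
    idx = sorted(I)
    return [x[i - 1] for i in idx]


def sub_matrix(C, I):
    """Return [C]_I: the principal submatrix of C with rows and columns indexed by I."""
    idx = sorted(I)
    return [[C[k - 1][l - 1] for l in idx] for k in idx]


x = [10, 20, 30]
C = [[1, 2, 3],
     [4, 5, 6],
     [7, 8, 9]]
I = {1, 3}
print(sub_vector(x, I))  # components x_1 and x_3
print(sub_matrix(C, I))  # rows and columns 1 and 3 of C
```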

A one-dimensional Brownian motion with zero drift and unit diffusion, starting from 0, is called a standard Brownian motion. The symbol \({{\mathrm{mes}}}\) denotes the Lebesgue measure on \({\mathbb {R}}^d\). We write \(f \in C^{\infty }(D)\) for an infinitely differentiable function \(f : D \rightarrow {\mathbb {R}}\).

Take a measurable space \(({\mathfrak {X}}, \nu )\). For any measurable function \(f : {\mathfrak {X}} \rightarrow {\mathbb {R}}\), we denote \((\nu , f) := \int _{{\mathfrak {X}}}f{\mathrm {d}}\nu \). For a measurable function \(f : {\mathfrak {X}} \rightarrow [1, \infty )\), define the norm

$$\begin{aligned} ||\nu ||_f := \sup \limits _{|g| \le f}|(\nu , g)|, \end{aligned}$$

where the supremum is taken over all measurable functions \(g : {\mathfrak {X}}\rightarrow {\mathbb {R}}\) such that \(|g(x)| \le f(x)\) for all \(x \in {\mathfrak {X}}\). For \(f = 1\), this is the total variation norm: \(||\nu ||_{{{\mathrm{TV}}}}\).

2 Definitions and Background

2.1 Definition of an SRBM in a Convex Polyhedron

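Fix the dimension \(d \ge 2\), and the number m of faces. Take m unit vectors \({\mathfrak {n}}_1, \ldots , {\mathfrak {n}}_m\), and m real numbers \(b_1, \ldots , b_m\). Consider the following domain:

$$\begin{aligned} D := \{x \in {\mathbb {R}}^d\mid {\mathfrak {n}}_i\cdot x \ge b_i,\ i = 1, \ldots , m\}. \end{aligned}$$

We assume that each face \(D_i\) of the boundary \(\partial D\):

$$\begin{aligned} D_i := \{x \in D\mid {\mathfrak {n}}_i\cdot x = b_i\},\quad i = 1, \ldots , m, \end{aligned}$$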

is \((d-1)\)-dimensional, and the interior of D is nonempty. Then D is called a convex polyhedron. For each face \(D_i\), \({\mathfrak {n}}_i\) is the inward unit normal vector to this face. Define the following \(d\times m\)-matrix: \(N = [{\mathfrak {n}}_1|\ldots |{\mathfrak {n}}_m]\). Now, take a vector \(\mu \in {\mathbb {R}}^d\) and a positive definite symmetric \(d\times d\)-matrix A. Consider also a \(d\times m\)-matrix \(R = [r_1|\ldots |r_m]\), with \({\mathfrak {n}}_i\cdot r_i = 1\) for \(i = 1, \ldots , m\). We are going to define a process \(Z = (Z(t), t \ge 0)\) with values in D, which behaves as a d-dimensional Brownian motion with drift vector \(\mu \) and covariance matrix A, so long as it is inside D; at each face \(D_i\), it is reflected according to the vector \(r_i\). First, we define its deterministic version: a solution to the Skorohod problem.

Definition 1

Take a continuous function \({\mathcal {X}}: {\mathbb {R}}_+ \rightarrow {\mathbb {R}}^d\) with \({\mathcal {X}}(0) \in D\). A solution to the Skorohod problem in D with reflection matrix R and driving function \({\mathcal {X}}\) is any continuous function \({\mathcal {Z}}: {\mathbb {R}}_+ \rightarrow D\) such that there exist m continuous functions \({\mathcal {L}}_1, \ldots , {\mathcal {L}}_m : {\mathbb {R}}_+ \rightarrow {\mathbb {R}}_+\) which satisfy the following properties:

(i) each \({\mathcal {L}}_i\) is nondecreasing, \({\mathcal {L}}_i(0) = 0\), and can increase only when \({\mathcal {Z}}\in D_i\); we can write the latter property as

$$\begin{aligned} {\mathcal {L}}_i(t) = \int _0^t1({\mathcal {Z}}(s) \in D_i){\mathrm {d}}{\mathcal {L}}_i(s),\quad t \ge 0; \end{aligned}$$

(ii) for all \(t \ge 0\), we have:

$$\begin{aligned} {\mathcal {Z}}(t) = {\mathcal {X}}(t) + R{\mathcal {L}}(t),\quad \hbox {where } {\mathcal {L}}(t) = ({\mathcal {L}}_1(t), \ldots , {\mathcal {L}}_m(t))'. \end{aligned}$$

The function \({\mathcal {L}}_i\) is called the boundary term corresponding to the face \(D_i\).
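For intuition, the simplest instance of the Skorohod problem can be solved explicitly. The sketch below treats the one-dimensional case \(D = {\mathbb {R}}_+\) with \(R = 1\), which lies outside the standing assumption \(d \ge 2\) and is used here only as an illustration. In that case, the classical solution is \({\mathcal {L}}(t) = \max (0, \max _{s \le t}(-{\mathcal {X}}(s)))\) and \({\mathcal {Z}} = {\mathcal {X}} + {\mathcal {L}}\). The function name `skorohod_1d` and the sample path are hypothetical, and the path is sampled on a finite grid.

```python
def skorohod_1d(path):
    """Solve the one-dimensional Skorohod problem on [0, inf) for a sampled path.

    Returns (z, l), where l is the running boundary term and z = path + l.
    Illustrative helper for the case d = 1, R = 1 only.
    """
    z, l = [], []
    running = 0.0  # current value of max(0, max_{s <= t} (-X(s)))
    for x in path:
        running = max(running, -x)
        l.append(running)
        z.append(x + running)
    return z, l


driving_path = [1.0, 0.5, -0.5, -0.25, -1.0, 0.0]  # assumed sample values
z, l = skorohod_1d(driving_path)
print(z)
print(l)
```

On this grid, the boundary term increases only at indices where the reflected path sits at zero, which mirrors property (i).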

For the rest of the article, assume the usual setting: a filtered probability space \((\Omega , {\mathcal {F}}, ({\mathcal {F}}_t)_{t \ge 0}, {\mathbf {P}})\) with the filtration satisfying the usual conditions.

Definition 2

Fix \(z \in D\). Take an \((({\mathcal {F}}_t)_{t \ge 0}, {\mathbf {P}})\)-Brownian motion \(W = (W(t), t \ge 0)\) with drift vector \(\mu \) and covariance matrix A, starting from z. A continuous adapted D-valued process \(Z = (Z(t), t \ge 0)\), which is a solution to the Skorohod problem in D with reflection matrix R and driving function W, is called a semimartingale reflected Brownian motion (SRBM) in D, with drift vector \(\mu \), covariance matrix A, and reflection matrix R, starting from z. It is denoted by \({{\mathrm{SRBM}}}^d(D, R, \mu , A)\). For the case \(D = {\mathbb {R}}^d_+\), we denote it simply by \({{\mathrm{SRBM}}}^d(R, \mu , A)\).

We shall present a sufficient condition for existence and uniqueness taken from [15]. First, let us introduce a concept concerning the geometry of the polyhedron D.

Definition 3

For a nonempty subset \(I \subseteq \{1,\ldots , m\}\), let \(D_I := \cap _{i \in I}D_i\), and let \(D_{\varnothing } := D\). A nonempty subset \(I \subseteq \{1, \ldots , m\}\) is called maximal if \(D_I \ne \varnothing \) and for \(I \subsetneq J \subseteq \{1,\ldots , m\}\), we have: \(D_J \subsetneq D_I\).

Now, let us define certain useful classes of matrices.

Definition 4

Take a \(d\times d\)-matrix \(M = (m_{ij})_{1 \le i, j \le d}\). It is called an \({\mathcal {S}}\)-matrix if for some \(u \in {\mathbb {R}}^d\), \(u > 0\) we have: \(Mu > 0\). It is called completely \({\mathcal {S}}\) if for every nonempty \(I \subseteq \{1,\ldots , d\}\), we have: \([M]_I\) is an \({\mathcal {S}}\)-matrix. It is called a \({\mathcal {Z}}\)-matrix if \(m_{ij} \le 0\) for \(i \ne j\). It is called a reflection nonsingular \({\mathcal {M}}\)-matrix if it is both a completely \({\mathcal {S}}\) and a \({\mathcal {Z}}\)-matrix with diagonal elements equal to one: \(m_{ii} = 1\), \(i = 1, \ldots , d\). It is called strictly copositive if \(x'Mx > 0\) for \(x \in {\mathbb {R}}^d_+\setminus \{0\}\). It is called nonnegative if all its elements are nonnegative.
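The following sketch checks some of these matrix classes in the simplest setting. The \({\mathcal {Z}}\)-matrix test works in any dimension; the other two tests are restricted to \(2\times 2\) matrices and rely on elementary criteria that are assumptions of this sketch rather than statements from the text: a \(2\times 2\) matrix is completely \({\mathcal {S}}\) if and only if its diagonal is positive and either its determinant is positive or both off-diagonal entries are positive, and a symmetric \(2\times 2\) matrix Q is strictly copositive if and only if \(q_{11}, q_{22} > 0\) and \(q_{12} > -\sqrt{q_{11}q_{22}}\). All function names are hypothetical.

```python
def is_z_matrix(M):
    """Check that all off-diagonal entries are nonpositive (any dimension)."""
    n = len(M)
    return all(M[i][j] <= 0 for i in range(n) for j in range(n) if i != j)


def is_completely_s_2x2(M):
    """Check the completely-S property for a 2x2 matrix via an elementary criterion."""
    (a, b), (c, d) = M
    if a <= 0 or d <= 0:
        # The 1x1 principal submatrices must themselves be S-matrices.
        return False
    det = a * d - b * c
    return det > 0 or (b > 0 and c > 0)


def is_strictly_copositive_2x2(Q):
    """Check x'Qx > 0 on the nonnegative quadrant minus the origin (symmetric 2x2 Q)."""
    (a, b), (c, d) = Q
    if b != c:
        raise ValueError("Q must be symmetric")
    if a <= 0 or d <= 0:
        return False
    # b > -sqrt(a*d), written without square roots.
    return b >= 0 or b * b < a * d


R = [[1.0, -0.5], [-0.5, 1.0]]  # assumed example matrix
print(is_z_matrix(R), is_completely_s_2x2(R), is_strictly_copositive_2x2(R))
```

A matrix that passes the first two checks and has unit diagonal, such as the example above, is a reflection nonsingular \({\mathcal {M}}\)-matrix in the sense of Definition 4.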

A useful equivalent characterization of reflection nonsingular \({\mathcal {M}}\)-matrices is given in [53, Lemma 2.3]. Now, let us finally state the existence and uniqueness result, taken from [15].

Proposition 2.1

Assume that for every maximal set \(I \subseteq \{1, \ldots , m\}\) the matrices \([N'R]_I\) and \([R'N]_I\) are \({\mathcal {S}}\)-matrices. Then for every \(z \in D\), there exists a weak version of an \({{\mathrm{SRBM}}}^d(D, R, \mu , A)\), and it is unique in law. Moreover, these processes for \(z \in D\) form a Feller continuous strong Markov family.

Remark 1

For the particularly important case of the positive orthant \(D = {\mathbb {R}}_+^d\), that is, when \(m = d\), \({\mathfrak {n}}_i = e_i\) and \(b_i = 0\) for \(i = 1, \ldots , d\), we have: \(N = I_d\), every nonempty subset \(I \subseteq \{1, \ldots , d\}\) is maximal, and the condition from Proposition 2.1 is equivalent to the matrix R being completely \({\mathcal {S}}\) (because R is completely \({\mathcal {S}}\) if and only if \(R'\) is completely \({\mathcal {S}}\)). This turns out to be not just a sufficient but also a necessary condition, see [47, 55].

A sufficient condition for strong existence and pathwise uniqueness was found in [29] for the orthant: R must be a reflection nonsingular \({\mathcal {M}}\)-matrix. Similar conditions for the general convex polyhedron were found in [23]. However, we shall not need strong existence and pathwise uniqueness in this paper. The generator of this process is given by

with the domain \({\mathcal {D}}({\mathcal {A}})\) containing the following subset of functions:

2.2 Recurrence of Continuous-Time Markov Processes

Let us recall the basic concepts of recurrence, irreducibility, and aperiodicity for continuous-time Markov processes. This exposition is taken from [9, 19, 21, 45, 46].

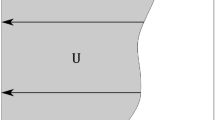

Take a locally compact separable metric space \({\mathfrak {X}}\) and denote by \({\mathfrak {B}}\) its Borel \(\sigma \)-field. Let

be a time-homogeneous Markov family, where X(t) has continuous paths under each measure \({\mathbf {P}}_x\). Denote by \(P^t(x, A) = {\mathbf {P}}_x(X(t) \in A)\) the transition function, and by \(\mathbf {E}_x\) the expectation operator corresponding to \({\mathbf {P}}_x\). Denote by \(P^tf\) and \(\nu P^t\) the action of this transition semigroup on functions \(f : {\mathfrak {X}}\rightarrow {\mathbb {R}}\) and Borel measures on \({\mathfrak {X}}\). Take a \(\sigma \)-finite reference measure \(\nu \) on \({\mathfrak {X}}\). The process X is called \(\nu \)-irreducible if for \(A \in {\mathfrak {B}}\) we have:

If such a measure exists, then there is a maximal irreducibility measure \(\psi \) (every other irreducibility measure is absolutely continuous with respect to \(\psi \)), which is unique up to equivalence of measures. A set \(A \in {\mathfrak {B}}\) with \(\psi (A) > 0\) is accessible. A nonempty \(C \in {\mathfrak {B}}\) is petite if there exists a probability distribution a on \({\mathbb {R}}_+\) and a nontrivial \(\sigma \)-finite measure \(\nu _a\) on \({\mathfrak {B}}\) such that

Suppose that, in addition, this distribution a is concentrated at one point \(t > 0\): \(a = \delta _t\). Equivalently, there exists a \(t > 0\) and a nontrivial \(\sigma \)-finite measure \(\nu _a\) on \({\mathfrak {B}}\) such that

Then the set C is called small. The process is Harris recurrent if, for some \(\sigma \)-finite measure \(\nu \),

Harris recurrence implies \(\nu \)-irreducibility. A Harris recurrent process possesses an invariant measure \(\pi \), which is unique up to multiplication by a constant. If \(\pi \) is finite, then it can be scaled to be a probability measure, and in this case the process is called positive Harris recurrent. An irreducible process is aperiodic if there exists an accessible petite set C and \(T > 0\) such that for all \(x \in C\) and \(t \ge T\), we have: \(P^t(x, C) > 0\).

Definition 5

The process X is called V-uniformly ergodic for a function \(V : {\mathfrak {X}}\rightarrow [1, \infty )\) if it has a unique stationary distribution \(\pi \), and there exist constants \(K, \varkappa > 0\) such that for all \(x \in {\mathfrak {X}}\) and \(t \ge 0\) we have:

$$\begin{aligned} ||P^t(x, \cdot ) - \pi (\cdot )||_V \le KV(x)e^{-\varkappa t}. \end{aligned}$$

Now, let us state a few auxiliary statements. The next proposition was proved in [42, Chapter 6] for discrete-time processes, but the proof is readily transferred to the continuous-time setting.

Proposition 2.2

For a Feller continuous strong Markov family, every compact set is petite.

Lemma 2.3

Take a Feller continuous strong Markov family. Assume \(\psi \) is a reference measure such that there exists a compact set C with \(\psi (C) > 0\). If \(P^t(x, A) > 0\) for all \(t > 0\), \(x \in {\mathfrak {X}}\) and \(A \in {\mathfrak {B}}\) such that \(\psi (A) > 0\), then the process is \(\psi \)-irreducible and aperiodic.

Proof

Irreducibility follows from the definition. For aperiodicity, we can take the compact set C, because it is petite by Proposition 2.2. If \(\psi '\) is a maximal irreducibility measure, then \(\psi (C) > 0\) and \(\psi \ll \psi '\), and so \(\psi '(C) > 0\). The rest is trivial. \(\square \)

Finally, the following statement was proved in [13, Lemma 3.4].

Proposition 2.4

For an \({{\mathrm{SRBM}}}^d(D, R, \mu , A)\) under the conditions of Proposition 2.1, for every \(t > 0\), \(x \in D\), and \(A \subseteq D\) with \({{\mathrm{mes}}}(A) > 0\) we have: \(P^t(x, A) > 0\).

Remark 2

Combining Lemma 2.3 and Proposition 2.4, we get that an \({{\mathrm{SRBM}}}^d(D, R, \mu , A)\) is irreducible and aperiodic.

2.3 Lyapunov Functions and Exponential Convergence

There is a vast literature (some of it was mentioned in Sect. 1) on the connection between Lyapunov functions for Markov processes and their convergence to the stationary distribution. However, for the purposes of this article, we need to state the result in a slightly different form. First, let us define the concept of a Lyapunov function.

Definition 6

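Take a continuous function \(V : {\mathfrak {X}}\rightarrow [1, \infty )\). Suppose there exists a closed petite set \(C \subseteq {\mathfrak {X}}\) and constants \(k, b > 0\) such that the process

$$\begin{aligned} V(X(t)) - V(X(0)) + \int _0^t\left[ kV(X(s)) - b1_C(X(s))\right] {\mathrm {d}}s,\quad t \ge 0, \end{aligned}\tag{2}$$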

is an \((({\mathcal {F}}_t)_{t \ge 0}, {\mathbf {P}}_x)\)-supermartingale for all \(x \in {\mathfrak {X}}\). If, in addition, \(\sup _CV < \infty \), then V is called a Lyapunov function for the process X.

Remark 3

Equivalently, we can request that the process in (2) is a local supermartingale. This is equivalent to it being a supermartingale, because this process is bounded from below by \(-V(x) - bT\) on any time interval [0, T] under the measure \({\mathbf {P}}_x\). (Every local supermartingale which is bounded from below is a true supermartingale; this follows from a trivial application of Fatou’s lemma.)

This definition is taken from [19, Section 3] with minor adjustments, with \(\varphi (s) = ks\) in the notation of [19]. This is a slightly more general definition than is often stated in the literature; a more customary one involves the generator of the Markov family. First, let us state an auxiliary lemma.

Lemma 2.5

For some constant \(c_6 > 0\), we have:

Proof

Because U and V are equivalent in the sense of (4), it suffices to prove the statement of Lemma 2.5 for V instead of U. But this follows from the fact that the process (2) is a supermartingale. Indeed, take \(\mathbf {E}_x\) in (2) and get:

Therefore, \(P^tV(x) \le V(x) + bt\). But \(V(x) \ge 1\), so for \(t \in [0, 1]\), we get: \(P^tV(x) \le (1 + b)V(x)\). This completes the proof of Lemma 2.5. \(\square \)

Next, we present the main result for this subsection.

Theorem 2.6

Assume there exists a Lyapunov function V, and the process is irreducible and aperiodic. Then there exists a unique stationary distribution \(\pi \), the process is V-uniformly ergodic, and we have the following estimate:

Proof

Existence and uniqueness of \(\pi \) together with (3) follow from [19, Proposition 3.1]. If the process is irreducible, then the skeleton chain \((X(n))_{n \in {\mathbb {Z}}_+}\) is irreducible. Applying [19, Theorem 3.3] to the case \(\varphi (x) = kx\), we get that for any \(t_0 > 0\), there exists a function \(\tilde{V} : {\mathfrak {X}}\rightarrow [k, \infty )\), an accessible petite set \(\tilde{C}\) for the skeleton chain \((X(n))_{n \in {\mathbb {Z}}_+}\) and a constant \(\tilde{b} > 0\) such that \(\sup _{\tilde{C}}\tilde{V} < \infty \),

and

Taking \(U := \tilde{V}/k : {\mathfrak {X}}\rightarrow [1, \infty )\), we get: there exists \(\lambda := 1 - k < 1\) and \(b' = \tilde{b}/k > 0\) such that

and

It follows from Proposition 2.4 that the skeleton chain \((X(n))_{n \ge 0}\) is irreducible and aperiodic. By [42, Theorem 5.5.7], the petite set \(\tilde{C}\) is small for this skeleton chain. Since this chain is irreducible and aperiodic, by [21, Theorem 2.1(c)] for some constants \(c_5 > 0\) and \(\rho \in (0, 1)\), we have:

Next, we follow the proof of [21, Theorem 5.2]. Every \(t \ge 0\) can be represented as \(t = n + s\), where \(n \in {\mathbb {Z}}_+\), \(s \in [0, 1)\). Since \(\pi \) is stationary, we have: \(\pi P^s = \pi \). Therefore, for any measurable \(g : {\mathfrak {X}}\rightarrow {\mathbb {R}}\) with \(|g(x)| \le U(x)\),

But from Lemma 2.5 we have:

From (5), because \(|g(x)| \le U(x)\) for \(x \in {\mathfrak {X}}\), we get:

Since \(n \ge t - 1\) and \(\rho \in (0, 1)\),

This proves that, for \(c_7 := c_5c_6\rho ^{-1}\),

This is U-uniform ergodicity. Since the functions U and V are equivalent in the sense of (4), this also means V-uniform ergodicity. \(\square \)
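The passage from the skeleton chain to continuous time in the proof above rests on an elementary bound: writing \(t = n + s\) with \(n \in {\mathbb {Z}}_+\) and \(s \in [0, 1)\), we have \(n \ge t - 1\), so \(\rho ^n \le \rho ^{-1}\rho ^t\) for \(\rho \in (0, 1)\). The following minimal sketch checks this numerically on a grid; the function name `skeleton_bound_holds` and the chosen values of \(\rho \) and t are illustrative assumptions.

```python
import math


def skeleton_bound_holds(rho, t):
    """Check rho**n <= rho**(t - 1), where n is the integer part of t and 0 < rho < 1."""
    n = math.floor(t)  # t = n + s with s in [0, 1)
    return rho ** n <= rho ** (t - 1.0)


rhos = [0.1, 0.5, 0.9]              # assumed sample contraction rates
times = [k / 4 for k in range(81)]  # t = 0, 0.25, ..., 20
all_ok = all(skeleton_bound_holds(rho, t) for rho in rhos for t in times)
print(all_ok)
```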

3 Main Results

3.1 Statement of the General Result

Consider now a special type of a convex polyhedron, namely a convex polyhedral cone:

where N is the \(d\times m\)-matrix constructed in Sect. 2.1. This fits into the general framework of Definition 2, if we let \(b_1 = \ldots = b_m = 0\). What follows is the main result of the paper.

Theorem 3.1

Suppose that conditions of Proposition 2.1 hold. Assume there exists a symmetric nonsingular \(d\times d\)-matrix Q such that:

(i) \(x'Qx > 0\) for \(x \in D\setminus \{0\}\);

(ii) \((R'Qx)_j \le 0\) for \(x \in D_j\), for each \(j = 1,\ldots , m\);

(iii) \(x'Q\mu < 0\) for \(x \in D\setminus \{0\}\).

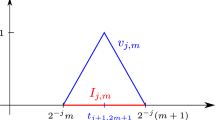

Take a \(C^{\infty }\) function \(\varphi : {\mathbb {R}}_+ \rightarrow {\mathbb {R}}_+\) defined in (1). Denote

Then for \(\lambda \in (0, \Lambda )\), the function

is a Lyapunov function for the \({{\mathrm{SRBM}}}^d(D, R, \mu , A)\). Therefore, the \({{\mathrm{SRBM}}}^d(D, R, \mu , A)\) has a unique stationary distribution \(\pi \), which satisfies

and is \(V_{\lambda }\)-uniformly ergodic.

Remark 4

The quantity \(\Lambda \) is strictly positive. Indeed, the matrix A is positive definite, and Q is nonsingular; so for \(x \ne 0\) we have: \(Qx \ne 0\) and \(x'QAQx = (Qx)'A(Qx) > 0\). Also, \(Q\mu \cdot x = x'Q\mu < 0\), and \(U(x) > 0\) for \(x \in D\setminus \{0\}\). Therefore, the fraction is positive for each \(x \in D\setminus \{0\}\). Since this fraction is homogeneous (invariant under scaling), we can take the minimum on the compact set \(\{x \in D\mid ||x|| = 1\}\). The rest is trivial.

The estimate (8) implies that some exponential moments of \(\pi \) are finite. Namely, let

This quantity is strictly positive, because \(U(x) > 0\) on the compact set \(\{x \in D\mid ||x|| =1\}\). Therefore, for large enough \(||x||\) we have:

and

From here, we get: for every \(a \ge 0\),

Let us compare this result with [1, 2, 8], where a more general case is considered (drift vector and covariance matrix depend on the state). There, a sufficient condition for V-uniform ergodicity is:

(i) the Skorohod problem in D has a unique solution for every driving function, and this solution depends Lipschitz continuously on the driving function, in the metric of \(C([0, T], {\mathbb {R}}^d)\) for every \(T > 0\);

(ii) there exists a vector \(b \in {\mathbb {R}}^m, b > 0\), such that \(Rb = -\mu \).

Condition (i) is stronger than the one from Proposition 2.1. However, we were not able to come up with an example in which the conditions of Theorem 3.1 hold, but condition (i) does not. Some sufficient conditions for (i) to hold are known from [22]. On the other hand, condition (ii) is much simpler than (i)–(iii) from Theorem 3.1. The results from [1, 2, 8] also construct a Lyapunov function indirectly, without giving an explicit formula. This does not allow one to construct explicit tail estimates, as in Theorem 3.1.

3.2 Applications to the Case of the Positive Orthant

Now, let \(D = {\mathbb {R}}^d_+\), that is, \(m = d\) and \(N = I_d\). We have the following immediate corollary of Theorem 3.1.

Corollary 3.2

Assume R is a completely \({\mathcal {S}}\) matrix. Suppose there exists a symmetric strictly copositive nonsingular \(d\times d\)-matrix Q such that QR is a \({\mathcal {Z}}\)-matrix, and \(Q\mu < 0\). Then an \({{\mathrm{SRBM}}}^d(R, \mu , A)\) has a unique stationary distribution \(\pi \) and is \(V_{\lambda }\)-uniformly ergodic for \(\lambda \in (0, \Lambda )\), while \(\pi \) satisfies (8). Here, \(V_{\lambda }\) is defined in (7), and \(\Lambda \) is defined in (6).

Proof

Condition (i) of Theorem 3.1 follows from the definition of copositivity. Condition (ii) follows from the assumption that QR is a \({\mathcal {Z}}\)-matrix, because then for \(z \in D_i\) we have: \(z \ge 0\), but \(z_i = 0\), and so

Condition (iii) follows from \(Q\mu < 0\). \(\square \)
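The hypotheses of Corollary 3.2 are easy to verify mechanically in small dimensions. Below is a minimal sketch for \(d = 2\) using exact rational arithmetic. The matrix R, the drift \(\mu \), and the choice \(Q = R^{-1}\) are hypothetical example data chosen for illustration (they are not taken from the text), and the helper names are assumptions. Strict copositivity is checked through the elementary \(2\times 2\) criterion \(q_{11}, q_{22} > 0\) and \(q_{12} > -\sqrt{q_{11}q_{22}}\).

```python
from fractions import Fraction as F


def inverse_2x2(M):
    """Exact inverse of a nonsingular 2x2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def mat_mul(A, B):
    """Product of two 2x2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def mat_vec(A, v):
    """Product of a 2x2 matrix and a vector."""
    return [sum(A[i][k] * v[k] for k in range(2)) for i in range(2)]


# Hypothetical example data: a symmetric reflection matrix and a negative drift.
R = [[F(1), F(-1, 2)], [F(-1, 2), F(1)]]
mu = [F(-1), F(-1)]

Q = inverse_2x2(R)
QR = mat_mul(Q, R)
Q_mu = mat_vec(Q, mu)

q_symmetric = Q[0][1] == Q[1][0]
q_copositive = (Q[0][0] > 0 and Q[1][1] > 0
                and (Q[0][1] >= 0 or Q[0][1] ** 2 < Q[0][0] * Q[1][1]))
qr_is_z = QR[0][1] <= 0 and QR[1][0] <= 0   # off-diagonal entries of QR
drift_ok = all(c < 0 for c in Q_mu)         # Q mu < 0 componentwise
print(q_symmetric, q_copositive, qr_is_z, drift_ok)
```

Exact fractions are used so that the sign checks on the off-diagonal entries of QR are not affected by rounding.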

A particular example of this is as follows.

Corollary 3.3

Assume R is a \(d\times d\)-reflection nonsingular \({\mathcal {M}}\)-matrix, and there exists a diagonal matrix \(C = {{\mathrm{diag}}}(c_1, \ldots , c_d)\) with \(c_1, \ldots , c_d > 0\) such that \(\overline{R} = RC\) is symmetric. If \(R^{-1}\mu < 0\), then the process \({{\mathrm{SRBM}}}^d(R, \mu , A)\) has a unique stationary distribution \(\pi \), and is \(V_{\lambda }\)-uniformly ergodic with

for \(\lambda \in (0, \Lambda )\), where the function \(\varphi \) is defined in (1), and

In addition, \((\pi , V_{\lambda }) < \infty \) for \(\lambda \in (0, \Lambda )\).

Proof

Just take \(Q = \overline{R}^{-1} = C^{-1}R^{-1}\) in Corollary 3.2. Let us show that the matrix Q is strictly copositive. From [53, Lemma 2.3], \(R^{-1}\) is a nonnegative matrix with strictly positive elements on the main diagonal. Since \(C^{-1}\) is a diagonal matrix with strictly positive elements on the diagonal, the matrix \(\overline{R}^{-1}\) is also a nonnegative matrix with strictly positive elements on the main diagonal. Therefore, for \(x \in {\mathbb {R}}^d_+\), \(x \ne 0\) we have: \(x'\overline{R}^{-1}x > 0\). Now, from \(R^{-1}\mu < 0\) it follows that \(\overline{R}^{-1}\mu < 0\). The rest is trivial. \(\square \)

Example 1

However, Corollary 3.2 can be applied not only to the case when R is a reflection nonsingular \({\mathcal {M}}\)-matrix. Indeed, let \(d = 2\) and

Then R is a completely \({\mathcal {S}}\) matrix. Take the matrix

is a \({\mathcal {Z}}\)-matrix, and \(Q\mu < 0\). However, R is not a reflection nonsingular \({\mathcal {M}}\)-matrix.

It is instructive to compare these results with already known ones. It turns out that the only new statement in Corollary 3.3 is the tail estimate \((\pi , V_{\lambda }) < \infty \) for an explicitly constructed function \(V_{\lambda }\). Existence (and uniqueness) of a stationary distribution and V-uniform ergodicity for some function \(V : {\mathbb {R}}^d_+ \rightarrow [1, \infty )\) are already known from [9, 24] (only there is no simple formula for the Lyapunov function V in these papers: it is simply known that \(V(x) \ge a_1e^{a_2||x||}\) for some \(a_1, a_2 > 0\)). The paper [24] states the fluid path condition, which is sufficient for V-uniform ergodicity: for every \(x \in {\mathbb {R}}^d_+\), any solution of the Skorohod problem in the orthant with reflection matrix R and driving function \(x + \mu t\) must tend to zero as \(t \rightarrow \infty \). This turns out to be a necessary and sufficient condition for the case \(d =3\). In the case \(d = 2\), another necessary and sufficient condition is found: R must be nonsingular and \(R^{-1}\mu < 0\), see [32] and [27, Appendix A]. In fact, the following condition is necessary for existence of a stationary distribution: R is nonsingular and \(R^{-1}\mu < 0\), see [16], [7, Appendix C]. For \(d = 3\), the fluid path condition is stronger than this necessary condition, see [7] and [12].

It is not known for \(d \ge 4\) whether the fluid path condition is necessary. Therefore, Corollary 3.2 might contain results which are new compared to the fluid path condition. However, we do not know any counterexamples to the fluid path condition (that is, cases when it is false, but the stationary distribution exists). This is a matter for future research.

3.3 Proof of Theorem 3.1

Recall from Remark 2 that an \({{\mathrm{SRBM}}}^d(D, R, \mu , A)\) is irreducible and aperiodic. The rest of the proof will be devoted to proving that the function (7) is indeed a Lyapunov function in the sense of Definition 6. Apply the Itô-Tanaka formula to

where \(Z = (Z(t), t \ge 0)\) is an \({{\mathrm{SRBM}}}^d(D, R, \mu , A)\), \(W = (W(t), t \ge 0)\) is the driving Brownian motion for Z, and \(L = (L_1, \ldots , L_m)'\) is the vector of boundary terms. Because of (1), for \(x \in D\) such that \(||x|| \ge s_2\), we have:

First, let us calculate the first- and second-order partial derivatives of U on this set. Since Q is symmetric and \(x'Qx > 0\) for \(x \in D\) such that \(||x|| \ge s_2\), we have:

Therefore,

Now,

As \(||x|| \rightarrow \infty \), these second-order derivatives tend to zero, because \(U(x) \ge K||x||\) for \(x \in D\). Now, let us calculate the first- and second-order partial derivatives for \(V_{\lambda }\):

and

Since \({\mathrm {d}}\langle Z_i, Z_j\rangle _t = a_{ij}{\mathrm {d}}t\) for \(i, j = 1, \ldots , d\), and \(Z(t) = W(t) + RL(t)\), we have:

where for \(x \in D\setminus \{0\}\) we let \(\beta _{\lambda }(x) := a(x)\lambda ^2 - b(x)\), where

and

and, in addition,

Lemma 3.4

The process \(\overline{L} = (\overline{L}(t), t \ge 0)\) is nonincreasing a.s.

Lemma 3.5

For \(\lambda < \Lambda \), there exist \(r(\lambda ), k(\lambda ) > 0\) such that for \(x \in D\), \(||x|| \ge r(\lambda )\), we have: \(\beta _{\lambda }(x) < - k(\lambda )\).

Assuming we have proved these two lemmata, let us complete the proof of Theorem 3.1. Fix \(\lambda \in (0, \Lambda )\). Take a compact set \(C = \{x \in D\mid ||x|| \le r(\lambda )\}\), with \(r(\lambda )\) from Lemma 3.5. By Proposition 2.2, this set is petite. The process

is a local supermartingale, because \(M = (M(t), t \ge 0)\) is a local martingale and by Lemma 3.4. Now,

for \(b_{\lambda } := \max _{x \in C}\left[ \beta _{\lambda }(x)V_{\lambda }(x)\right] \). This maximum is well defined, because \(\beta _{\lambda }V_{\lambda }\) is a continuous function, and C is a compact set. The rest of the proof is trivial.

Proof of Lemma 3.4

We can write \(\overline{L}(t)\) as

But each \(L_j\) can grow only when \(Z \in D_j\), and then \((R'QZ(t))_j = QZ(t)\cdot r_j \le 0\). It suffices to note that \(V_{\lambda }(Z(t)) \ge 0\) and \(U(Z(t)) \ge 0\). \(\square \)

Proof of Lemma 3.5

For each \(x \in D\setminus \{0\}\) we have: if \(b(x) > 0\), then

Note that \(\theta (x) \rightarrow 0\) as \(||x|| \rightarrow \infty \). From this and conditions (i), (ii), and (iii) of Theorem 3.1 it is straightforward to see that

Also, there exist \(r_0, c_0 > 0\) such that for \(x \in D\), \(||x|| \ge r_0\) we have: \(a(x), b(x) \ge c_0\). Now, fix \(\lambda \in (0, \Lambda )\). Then there exists \(\delta > 0\) such that \(\delta \le \lambda \le \Lambda - 2\delta \), and there exists \(r(\lambda )\) such that for \(x \in D,\ ||x|| \ge r(\lambda )\) we have: \(\Lambda (x) \ge \Lambda - \delta \). Without loss of generality, we assume \(r(\lambda ) \ge r_0\). Now, for such x we have:

This completes the proof of Lemma 3.5. \(\square \)

4 Systems of Competing Brownian Particles

4.1 Classical Systems: Definitions and Background

In this subsection, we use definitions from [4]. Assume the usual setting: a filtered probability space \((\Omega , {\mathcal {F}}, ({\mathcal {F}}_t)_{t \ge 0}, {\mathbf {P}})\) with the filtration satisfying the usual conditions. Let \(N \ge 2\) (the number of particles). Fix parameters

We wish to define a system of N Brownian particles in which the kth smallest particle moves as a Brownian motion with drift \(g_k\) and diffusion \(\sigma _k^2\). These systems were studied recently in [3, 34, 35, 38, 48, 49, 52, 53]. Their applications include: (i) mathematical finance, namely modeling the real-world feature of stocks with smaller capitalizations having larger growth rates and larger volatilities; it suffices to take decreasing sequences \((g_k)\) and \((\sigma _k^2)\); see also [10, 37, 40]; (ii) diffusion limits of a certain type of exclusion processes, namely asymmetrically colliding random walks, see [39]; (iii) a discrete approximation to the McKean–Vlasov equation, see [17, 36, 54].

Definition 7

Take i.i.d. standard \(({\mathcal {F}}_t)_{t \ge 0}\)-Brownian motions \(W_1, \ldots , W_N\). For a continuous \({\mathbb {R}}^N\)-valued process \(X = (X(t),\ t \ge 0)\), \(X(t) = (X_1(t), \ldots , X_N(t))'\), let us define \({\mathbf {p}}_t,\ t \ge 0\), the ranking permutation for the vector X(t): this is a permutation on \(\{1, \ldots , N\}\), such that:

(i) \(X_{{\mathbf {p}}_t(i)}(t) \le X_{{\mathbf {p}}_t(j)}(t)\) for \(1 \le i < j \le N\);

(ii) if \(1 \le i < j \le N\) and \(X_{{\mathbf {p}}_t(i)}(t) = X_{{\mathbf {p}}_t(j)}(t)\), then \({\mathbf {p}}_t(i) < {\mathbf {p}}_t(j)\).

Suppose the process X satisfies the following SDE:

Then this process X is called a classical system of N competing Brownian particles with drift coefficients \(g_1, \ldots , g_N\) and diffusion coefficients \(\sigma _1^2, \ldots , \sigma _N^2\). For \(i = 1, \ldots , N\), the component \(X_i = (X_i(t), t \ge 0)\) is called the ith named particle. For \(k = 1, \ldots , N\), the process

is called the kth ranked particle. They satisfy \(Y_1(t) \le Y_2(t) \le \ldots \le Y_N(t)\), \(t \ge 0\). If \({\mathbf {p}}_t(k) = i\), then we say that the particle \(X_i(t) = Y_k(t)\) at time t has name i and rank k.
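The ranking permutation and the rank-based dynamics can be illustrated numerically. The following is a minimal sketch, not the construction used in the paper: it assumes an Euler–Maruyama discretization in which, at each step, the particle currently holding rank k receives drift \(g_k\) and volatility \(\sigma _k\). The function names `ranking_permutation` and `simulate_named_particles`, the step size `dt`, and the zero-based indexing are assumptions made for illustration.

```python
import numpy as np


def ranking_permutation(x):
    """Return p such that x[p[0]] <= x[p[1]] <= ... (zero-based ranks).

    A stable sort keeps tied particles in order of increasing index,
    which mirrors condition (ii): ties are resolved by the lowest name.
    """
    return np.argsort(x, kind="stable")


def simulate_named_particles(x0, g, sigma, dt, n_steps, rng):
    """Euler-Maruyama sketch of the named particles (illustrative only).

    g[k] and sigma[k] are the drift and volatility attached to rank k.
    Returns an array of shape (n_steps + 1, N) with the discretized path.
    """
    x = np.array(x0, dtype=float)
    g = np.asarray(g, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    path = np.empty((n_steps + 1, x.size))
    path[0] = x
    for step in range(1, n_steps + 1):
        p = ranking_permutation(x)
        drift = np.empty_like(x)
        vol = np.empty_like(x)
        drift[p] = g      # the particle with name p[k] holds rank k
        vol[p] = sigma
        noise = rng.normal(0.0, np.sqrt(dt), size=x.size)
        x = x + drift * dt + vol * noise
        path[step] = x
    return path


def ranked_particles(path):
    """Ranked particles Y_1 <= ... <= Y_N at each time step."""
    return np.sort(path, axis=1)
```

Sorting each row of the simulated path gives the ranked particles, and consecutive differences of the sorted rows give a discretized version of the gaps between adjacent particles.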

The coefficients of the SDE (10) are piecewise constant functions of \(X_1(t), \ldots , X_N(t)\), so weak existence and uniqueness in law for such systems follows from [6]. Consider the gap process: an \({\mathbb {R}}^{N-1}_+\)-valued process defined by

It was shown in [4] that this is an \({{\mathrm{SRBM}}}^{N-1}(R, \mu , A)\) in the orthant \(S = {\mathbb {R}}_+^{N-1}\) with parameters

4.2 Main Results

In this subsection, we present results about the gap process: existence of a stationary distribution, Lyapunov functions, and tail estimates. Let \(\overline{g}_k := \left( g_1 + \ldots + g_k\right) /k\) for \(k = 1, \ldots , N\).

Proposition 4.1

The gap process has a stationary distribution if and only if

In this case, it is V-uniformly ergodic with a certain function \(V : {\mathbb {R}}^{N-1}_+ \rightarrow [1, \infty )\).

Proof

This result was already proved in [4], [3, 33, 50], but for the sake of completeness we present a sketch of proof. The matrix R is a reflection nonsingular \({\mathcal {M}}\)-matrix, and

Define the quantities

Then we can rewrite (15) as

Therefore, the gap process has a stationary distribution if and only if each component of this vector is strictly positive, which is equivalent to the condition (14). In this case, the fluid path condition holds by [11], and so by [9] the gap process is V-uniformly ergodic for a certain Lyapunov function \(V : {\mathbb {R}}^{N-1}_+ \rightarrow [1, \infty )\). \(\square \)
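The equivalence used in this proof can be checked numerically. The sketch below rests on assumptions that are not visible in this copy of the text: that R is the tridiagonal matrix with 1 on the diagonal and \(-1/2\) next to it, that \(\mu _k = g_{k+1} - g_k\), and that condition (14) is the averaged-drift condition \(\overline{g}_k > \overline{g}_N\) for \(k = 1, \ldots , N-1\). The function names are hypothetical.

```python
import numpy as np


def gap_reflection_matrix(n_particles):
    """Assumed reflection matrix of the gap process: 1 on the diagonal,
    -1/2 on the two adjacent diagonals, size (N-1) x (N-1)."""
    m = n_particles - 1
    return np.eye(m) - 0.5 * (np.eye(m, k=1) + np.eye(m, k=-1))


def stationary_gap_check(g):
    """Compare the sign condition on R^{-1} mu with the averaged drifts.

    Returns (v, sign_ok, averaged_ok), where v = -R^{-1} mu,
    sign_ok says whether every component of v is strictly positive,
    and averaged_ok says whether g_bar_k > g_bar_N for all k < N.
    """
    g = np.asarray(g, dtype=float)
    n = g.size
    R = gap_reflection_matrix(n)
    mu = np.diff(g)                      # assumed drift vector of the gaps
    v = -np.linalg.solve(R, mu)
    running_means = np.cumsum(g) / np.arange(1, n + 1)
    averaged_ok = bool(np.all(running_means[:-1] > running_means[-1]))
    return v, bool(np.all(v > 0)), averaged_ok
```

Under these assumptions, the two Boolean flags agree for any drift vector, because the kth component of \(-R^{-1}\mu \) equals \(2k(\overline{g}_k - \overline{g}_N)\).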

From Corollary 3.3, we get a concrete Lyapunov function V, namely:

where \(\varphi \) is defined in (1). We use the fact that the matrix R is symmetric, so in the notation of Corollary 3.3 we have: \(C = I_{N-1}\) and \(\overline{R} = R\). Here, we must have \(\lambda < \Lambda \), where

Let us try to estimate the tail of the stationary distribution \(\pi \).

Theorem 4.2

Using the definition of \(b_1, \ldots , b_{N-1}\) from (16), we have:

Proof

From the results of Theorem 3.1, we have:

where K is defined in (9) (in this notation, \(Q = R^{-1}\)). Now, let us estimate \(K\Lambda \) from below. Define \(\Sigma := \{x \in {\mathbb {R}}^{N-1}_+\mid x_1 + \ldots + x_{N-1} = 1\}\).

Lemma 4.3

(i) The norm of the matrix \(R^{-1}\) is equal to

$$\begin{aligned} ||R^{-1}|| = \lambda ^{-1}_1 = \left( 1 - \cos \frac{\pi }{N}\right) ^{-1}; \end{aligned}$$ (17)

(ii) \(U(x) \ge 1\) for \(x \in \Sigma \);

(iii) \(\left| R^{-1}\mu \cdot x\right| \ge 2\min (b_1, \ldots , b_{N-1})\) for \(x \in \Sigma \);

(iv) \(x'R^{-1}AR^{-1}x \le ||R^{-1}||^2||A||\) for \(x \in \Sigma \).

Suppose we proved Lemma 4.3. From part (ii) we get: \(K \ge 1\). Using (ii)–(iv), we obtain:

Finally, using (i), we get:

But

Therefore,

The rest of the proof is trivial.

Proof of Lemma 4.3

(i) The eigenvalues of R are given by (see, e.g., [41])

$$\begin{aligned} \lambda _k = 1 - \cos \frac{k\pi }{N},\quad k = 1, \ldots , N - 1. \end{aligned}$$

The eigenvalues of \(R^{-1}\) are \(\lambda _k^{-1},\ k = 1, \ldots , N - 1\). The matrix \(R^{-1}\) is symmetric, so its norm is equal to the absolute value of its maximal eigenvalue. Therefore, we get (17).

(ii) The matrix \(R^{-1}\) is symmetric and positive definite. Solving the optimization problem \(x'R^{-1}x \rightarrow \min ,\ x\cdot \mathbf {1}= 1\), we get: the minimum is \((\mathbf {1}'R\mathbf {1})^{-1}\), where \(\mathbf {1}'R\mathbf {1}\) is the sum of all elements of R, which, in turn, equals 1.

(iii) Follows from the fact that \(R^{-1}\mu < 0\) and (15).

(iv) Follows from the multiplicative property of the Euclidean norm, and from the fact that for \(x \in \Sigma \) we have: \(||x||^2 = x_1^2 + \ldots + x_{N-1}^2 \le (x_1 + \cdots + x_{N-1})^2 = 1\).

\(\square \)
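Parts (i) and (ii) of this lemma lend themselves to a quick numerical sanity check. The sketch below assumes that R is the tridiagonal matrix with 1 on the diagonal and \(-1/2\) on the adjacent diagonals (its display is not visible in this copy of the text); the function names are hypothetical. It compares the computed eigenvalues with \(1 - \cos (k\pi /N)\), evaluates the spectral norm of \(R^{-1}\), and evaluates the quadratic form \(x'R^{-1}x\) at the Lagrange-multiplier candidate \(x^* = R\mathbf {1}/(\mathbf {1}'R\mathbf {1})\).

```python
import numpy as np


def gap_reflection_matrix(n_particles):
    """Assumed reflection matrix: 1 on the diagonal, -1/2 next to it."""
    m = n_particles - 1
    return np.eye(m) - 0.5 * (np.eye(m, k=1) + np.eye(m, k=-1))


def check_lemma_ingredients(n_particles):
    """Numerical ingredients for parts (i) and (ii) of the lemma."""
    R = gap_reflection_matrix(n_particles)
    k = np.arange(1, n_particles)
    predicted = np.sort(1.0 - np.cos(k * np.pi / n_particles))
    eigenvalues = np.linalg.eigvalsh(R)          # returned in ascending order
    R_inv = np.linalg.inv(R)
    norm_inv = np.linalg.norm(R_inv, 2)          # spectral norm of R^{-1}
    ones = np.ones(n_particles - 1)
    entry_sum = ones @ R @ ones                  # sum of all entries of R
    x_star = R @ ones / entry_sum                # candidate minimizer on x . 1 = 1
    min_value = x_star @ R_inv @ x_star          # equals 1 / entry_sum
    return {
        "eigenvalues": eigenvalues,
        "predicted": predicted,
        "norm_inv": norm_inv,
        "entry_sum": entry_sum,
        "x_star": x_star,
        "min_value": min_value,
    }
```

Since the candidate minimizer has nonnegative components, it lies in \(\Sigma \), so the unconstrained minimum over the hyperplane is attained on the simplex itself.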

4.3 Asymmetric Collisions

One can generalize the classical system of competing Brownian particles from Definition 7 in many ways. Let us describe one of these generalizations. Consider a classical system of competing Brownian particles, as in Definition 7. For \(k = 1, \ldots , N-1\), let

be the semimartingale local time process at zero of the process \(Z_k = Y_{k+1} - Y_k\). We shall call this the collision local time of the particles \(Y_k\) and \(Y_{k+1}\). For notational convenience, let \(L_{(0, 1)}(t) \equiv 0\) and \(L_{(N, N+1)}(t) \equiv 0\). Let

It can be checked that \(\langle B_k, B_l\rangle _t \equiv \delta _{kl}t\), so \(B_1, \ldots , B_N\) are i.i.d. standard Brownian motions. As shown in [3–5], [33, Chapter 3], the ranked particles \(Y_1, \ldots , Y_N\) have the following dynamics:

The collision local time \(L_{(k, k+1)}\) has the physical meaning of the push exerted when the particles \(Y_k\) and \(Y_{k+1}\) collide, which is needed to keep the particle \(Y_{k+1}\) above the particle \(Y_k\). Note that the coefficients at the local time terms are \(\pm 1/2\). This means that the collision local time \(L_{(k, k+1)}\) is split evenly between the two colliding particles: the lower-ranked particle \(Y_k\) receives one half of this local time, which pushes it down, and the higher-ranked particle \(Y_{k+1}\) receives the other half, which pushes it up.

The paper [39] considers systems of Brownian particles in which this collision local time is split unevenly: the part \(q^+_{k+1}L_{(k, k+1)}(t)\) goes to the upper particle \(Y_{k+1}\), and the part \(q^-_kL_{(k, k+1)}(t)\) goes to the lower particle \(Y_k\). Let us give a formal definition.

Definition 8

Fix \(N \ge 2\), the number of particles. Take drift and diffusion coefficients

and, in addition, take parameters of collision

Consider a continuous adapted \({\mathbb {R}}^N\)-valued process

Take other \(N-1\) continuous adapted real-valued nondecreasing processes

with \(L_{(k, k+1)}(0) = 0\), which can increase only when \(Y_{k+1} = Y_k\):

Let \(L_{(0, 1)}(t) \equiv 0\) and \(L_{(N, N+1)}(t) \equiv 0\). Assume that

Then the process Y is called the system of competing Brownian particles with asymmetric collisions. The gap process is defined similarly to the case of a classical system.

Strong existence and pathwise uniqueness for these systems are shown in [39, Section 2.1]. When \(q^{\pm }_1 = q^{\pm }_2 = \ldots = 1/2\), we are back in the case of symmetric collisions.

Remark 5

For systems of competing Brownian particles with asymmetric collisions, we defined only ranked particles \(Y_1, \ldots , Y_N\). It is, however, possible to define named particles \(X_1, \ldots , X_N\) for the case of asymmetric collisions. This is done in [39, Section 2.4]. The construction works up to the first moment of a triple collision. A necessary and sufficient condition for a.s. absence of triple collisions is given in [53]. We will not make use of this construction in our article, instead working with ranked particles.

It was shown in [39] that the gap process for systems with asymmetric collisions, much like for the classical case, is an SRBM. Namely, it is an \({{\mathrm{SRBM}}}^{N-1}(R, \mu , A)\), where \(\mu \) and A are given by (12) and (13), and the reflection matrix R is given by

This matrix is also a reflection nonsingular \({\mathcal {M}}\)-matrix. Therefore, there exists a stationary distribution for this SRBM if and only if \(R^{-1}\mu < 0\). In this case, we can apply the results of [9] again and conclude that the gap process is V-uniformly ergodic with a certain Lyapunov function \(V : {\mathbb {R}}^{N-1}_+ \rightarrow [1, \infty )\). Corollary 3.3 allows us to find an explicit Lyapunov function and provide explicit tail estimates. A remark is in order: the matrix R in (19) in general is not symmetric, as opposed to the matrix R in (11). But if we take the \((N-1)\times (N-1)\) diagonal matrix

then the matrix \(\overline{R} = RC\) is symmetric.
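The effect of such a diagonal scaling can be illustrated numerically. The sketch below assumes a layout for R that is not visible in this copy of the text: 1 on the diagonal, \(-q^-_{k+1}\) in position \((k, k+1)\), and \(-q^+_{k+1}\) in position \((k+1, k)\). Under that assumption, a positive diagonal matrix with \(c_1 = 1\) and \(c_{k+1} = c_k\, q^+_{k+1}/q^-_{k+1}\) makes RC symmetric. The function names and the input convention (arrays holding \(q^{\pm }_2, \ldots , q^{\pm }_{N-1}\)) are hypothetical, and the matrix C produced here need not coincide with the one chosen in the paper.

```python
import numpy as np


def asymmetric_reflection_matrix(q_plus, q_minus):
    """Assumed reflection matrix for asymmetric collisions.

    q_plus and q_minus hold q^+_2..q^+_{N-1} and q^-_2..q^-_{N-1};
    the result is an (N-1) x (N-1) tridiagonal matrix.
    """
    q_plus = np.asarray(q_plus, dtype=float)
    q_minus = np.asarray(q_minus, dtype=float)
    m = q_plus.size + 1
    R = np.eye(m)
    idx = np.arange(m - 1)
    R[idx, idx + 1] = -q_minus   # entries above the diagonal
    R[idx + 1, idx] = -q_plus    # entries below the diagonal
    return R


def symmetrizing_diagonal(q_plus, q_minus):
    """Diagonal matrix C with c_1 = 1 and c_{k+1} = c_k * q^+_{k+1} / q^-_{k+1}."""
    ratios = np.asarray(q_plus, dtype=float) / np.asarray(q_minus, dtype=float)
    return np.diag(np.concatenate(([1.0], np.cumprod(ratios))))
```

When all collision parameters equal 1/2, every ratio is 1 and the scaling reduces to the identity matrix, which matches the symmetric case.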

References

Atar, R., Budhiraja, A., Dupuis, P.: Correction note: “On positive recurrence of constrained diffusion processes”. Ann. Probab. 29(3), 1404 (2001)

Atar, R., Budhiraja, A., Dupuis, P.: On positive recurrence of constrained diffusion processes. Ann. Probab. 29(2), 979–1000 (2001)

Banner, A.D., Fernholz, E.R., Ichiba, T., Karatzas, I., Papathanakos, V.: Hybrid atlas models. Ann. Appl. Probab. 21(2), 609–644 (2011)

Banner, A.D., Fernholz, E.R., Karatzas, I.: Atlas models of equity markets. Ann. Appl. Probab. 15(4), 2296–2330 (2005)

Banner, A.D., Ghomrasni, R.: Local times of ranked continuous semimartingales. Stoch. Process. Appl. 118(7), 1244–1253 (2008)

Bass, R.F., Pardoux, É.: Uniqueness for diffusions with piecewise constant coefficients. Probab. Theory Relat. Fields 76(4), 557–572 (1987)

Bramson, M., Dai, J.G., Harrison, J.M.: Positive recurrence of reflecting Brownian motion in three dimensions. Ann. Appl. Probab. 20(2), 753–783 (2010)

Budhiraja, A., Dupuis, P.: Simple necessary and sufficient conditions for the stability of constrained processes. SIAM J. Appl. Math. 59(5), 1686–1700 (1999)

Budhiraja, A., Lee, C.: Long time asymptotics for constrained diffusions in polyhedral domains. Stoch. Process. Appl. 117(8), 1014–1036 (2007)

Chatterjee, S., Pal, S.: A phase transition behavior for Brownian motions interacting through their ranks. Probab. Theory Relat. Fields 147(1–2), 123–159 (2010)

Chen, H.: A sufficient condition for the positive recurrence of a semimartingale reflecting Brownian motion in an orthant. Ann. Appl. Probab. 6(3), 758–765 (1996)

Dai, J.G., Harrison, J.M.: Reflecting Brownian motion in three dimensions: a new proof of sufficient conditions for positive recurrence. Math. Methods Oper. Res. 75(2), 135–147 (2012)

Dai, J.G., Kurtz, T.G.: Characterization of the stationary distribution for a semimartingale reflecting Brownian motion in a convex polyhedron (2003). Unpublished Manuscript

Dai, J.G., Miyazawa, M.: Stationary distribution of a two-dimensional SRBM: geometric views and boundary measures. Queueing Syst. 74(2–3), 181–217 (2013)

Dai, J.G., Williams, R.J.: Existence and uniqueness of semimartingale reflecting Brownian motions in convex polyhedra. Teor. Veroyatnost. i Primenen. 40(1), 3–53 (1995)

Dai, J.G.: Steady-state analysis of reflected Brownian motions: characterization, numerical methods and queueing applications. Thesis (Ph.D.), Stanford University (1990)

Dembo, A., Shkolnikov, M., Varadhan, S.R.S., Zeitouni, O.: Large deviations for diffusions interacting through their ranks (2013). Preprint. arXiv:1211.5223

Dieker, A.B., Moriarty, J.: Reflected Brownian motion in a wedge: sum-of-exponential stationary densities. Electron. Commun. Probab. 14, 1–16 (2009)

Douc, R., Fort, G., Guillin, A.: Subgeometric rates of convergence of \(f\)-ergodic strong Markov processes. Stoch. Process. Appl. 119(3), 897–923 (2009)

Douc, R., Fort, G., Moulines, E., Soulier, P.: Practical drift conditions for subgeometric rates of convergence. Ann. Appl. Probab. 14(3), 1353–1377 (2004)

Down, D., Meyn, S.P., Tweedie, R.L.: Exponential and uniform ergodicity of Markov processes. Ann. Probab. 23(4), 1671–1691 (1995)

Dupuis, P., Ishii, H.: On Lipschitz continuity of the solution mapping to the Skorokhod problem, with applications. Stochastics 35(1), 31–62 (1991)

Dupuis, P., Ishii, H.: SDEs with oblique reflection on nonsmooth domains. Ann. Probab. 21(1), 554–580 (1993)

Dupuis, P., Williams, R.J.: Lyapunov functions for semimartingale reflecting Brownian motions. Ann. Probab. 22(2), 680–702 (1994)

Fort, G., Roberts, G.O.: Subgeometric ergodicity of strong Markov processes. Ann. Appl. Probab. 15(2), 1565–1589 (2005)

Foschini, G.J.: Equilibria for diffusion models of pairs of communicating computers—symmetric case. IEEE Trans. Inform. Theory 28(2), 273–284 (1982)

Harrison, J.M., Hasenbein, J.J.: Reflected Brownian motion in the quadrant: tail behavior of the stationary distribution. Queueing Syst. 61(2–3), 113–138 (2009)

Harrison, J.M., Reiman, M.I.: On the distribution of multidimensional reflected Brownian motion. SIAM J. Appl. Math. 41(2), 345–361 (1981)

Harrison, J.M., Reiman, M.I.: Reflected Brownian motion on an orthant. Ann. Probab. 9(2), 302–308 (1981)

Harrison, J.M., Williams, R.J.: Brownian models of open queueing networks with homogeneous customer populations. Stochastics 22(2), 77–115 (1987)

Harrison, J.M., Williams, R.J.: Multidimensional reflected Brownian motions having exponential stationary distributions. Ann. Probab. 15(1), 115–137 (1987)

Hobson, D.G., Rogers, L.C.G.: Recurrence and transience of reflecting Brownian motion in the quadrant. Math. Proc. Camb. Philos. Soc. 113(2), 387–399 (1993)

Ichiba, T.: Topics in multi-dimensional diffusion theory: Attainability, reflection, ergodicity and rankings. Thesis (Ph.D.), Columbia University, ProQuest LLC, Ann Arbor, MI (2009)

Ichiba, T., Karatzas, I., Shkolnikov, M.: Strong solutions of stochastic equations with rank-based coefficients. Probab. Theory Relat. Fields 156(1–2), 229–248 (2013)

Ichiba, T., Pal, S., Shkolnikov, M.: Convergence rates for rank-based models with applications to portfolio theory. Probab. Theory Relat. Fields 156(1), 415–448 (2013)

Jourdain, B., Malrieu, F.: Propagation of chaos and Poincaré inequalities for a system of particles interacting through their CDF. Ann. Appl. Probab. 18(5), 1706–1736 (2008)

Jourdain, B., Reygner, J.: Capital distribution and portfolio performance in the mean-field Atlas model (2013). Preprint. arXiv:1312.5660

Jourdain, B., Reygner, J.: Propagation of chaos for rank-based interacting diffusions and long time behaviour of a scalar quasilinear parabolic equation. Stoch. Partial Differ. Equ. Anal. Comput. 1(3), 455–506 (2013)

Karatzas, I., Pal, S., Shkolnikov, M.: Systems of Brownian particles with asymmetric collisions (2012). Preprint. arXiv:1210.0259v1

Karatzas, I., Sarantsev, A.: Diverse market models of competing Brownian particles with splits and mergers (2014). Preprint. arXiv:1404.0748

Kardaras, C.: Balance, growth and diversity of financial markets. Ann. Finance 4(3), 369–397 (2008)

Meyn, S.P., Tweedie, R.L.: Markov Chains and Stochastic Stability. Communications and Control Engineering Series. Springer, London (1993)

Meyn, S.P.: Ergodic theorems for discrete time stochastic systems using a stochastic Lyapunov function. SIAM J. Control Optim. 27(6), 1409–1439 (1989)

Meyn, S.P., Tweedie, R.L.: Stability of Markovian processes. I. Criteria for discrete-time chains. Adv. Appl. Probab. 24(3), 542–574 (1992)

Meyn, S.P., Tweedie, R.L.: Stability of Markovian processes. II. Continuous-time processes and sampled chains. Adv. Appl. Probab. 25(3), 487–517 (1993)

Meyn, S.P., Tweedie, R.L.: Stability of Markovian processes. III. Foster-Lyapunov criteria for continuous-time processes. Adv. Appl. Probab. 25(3), 518–548 (1993)

Reiman, M.I., Williams, R.J.: A boundary property of semimartingale reflecting Brownian motions. Probab. Theory Relat. Fields 77(1), 87–97 (1988)

Reygner, J.: Chaoticity of the stationary distribution of rank-based interacting diffusions (2014). Preprint. arXiv:1408.4103

Sarantsev, A.: Comparison techniques for competing Brownian particles (2015). Preprint. arXiv:1305.1653

Sarantsev, A.: Infinite systems of competing Brownian particles (2015). Preprint. arXiv:1403.4229

Sarantsev, A.: Lyapunov functions for reflected jump-diffusions (2015). Preprint. arXiv:1509.01783

Sarantsev, A.: Multiple collisions in systems of competing Brownian particles (2015). Preprint. arXiv:1309.2621

Sarantsev, A.: Triple and simultaneous collisions of competing Brownian particles. Electron. J. Probab. 20(29), 1–28 (2015)

Shkolnikov, M.: Large systems of diffusions interacting through their ranks. Stoch. Process. Appl. 122(4), 1730–1747 (2012)

Taylor, L.M., Williams, R.J.: Existence and uniqueness of semimartingale reflecting Brownian motions in an orthant. Probab. Theory Relat. Fields 96(3), 283–317 (1993)

Williams, R.J.: Reflected Brownian motion with skew symmetric data in a polyhedral domain. Probab. Theory Relat. Fields 75(4), 459–485 (1987)

Williams, R.J.: Semimartingale reflecting Brownian motions in the orthant. In: Stochastic Networks, volume 71 of IMA Vol. Math. Appl., pp. 125–137. Springer, New York (1995)

Acknowledgments

The author would like to thank Ioannis Karatzas and Ruth Williams for their help and useful discussion. Also, the author would like to thank two anonymous referees for pointing out misprints and for useful comments which helped improve the paper. This research was partially supported by NSF Grants DMS 1007563, DMS 1308340, DMS 1409434, and DMS 1405210.

Cite this article

Sarantsev, A. Reflected Brownian Motion in a Convex Polyhedral Cone: Tail Estimates for the Stationary Distribution. J Theor Probab 30, 1200–1223 (2017). https://doi.org/10.1007/s10959-016-0674-8

DOI: https://doi.org/10.1007/s10959-016-0674-8

Keywords

- Reflected Brownian motion

- Lyapunov function

- Tail estimate

- Generator

- Convex polyhedron

- Polyhedral cone

- Competing Brownian particles

- Symmetric collisions

- Gap process