Abstract

This paper focuses on distributed consensus optimization problems with coupled constraints over time-varying multi-agent networks, where the global objective is the finite sum of all agents’ private local objective functions, and decision variables of agents are subject to coupled equality and inequality constraints and a compact convex subset. Each agent exchanges information with its neighbors and processes local data. They cooperate to agree on a consensual decision vector that is an optimal solution to the considered optimization problems. We integrate ideas behind dynamic average consensus and primal-dual methods to develop a distributed algorithm and establish its sublinear convergence rate. In numerical simulations, to illustrate the effectiveness of the proposed algorithm, we compare it with some related methods by the Neyman–Pearson classification problem.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Distributed algorithms decompose an optimization problem into smaller and more manageable subproblems that can be solved in parallel by a group of agents or processors. Such algorithms are used for large-scale problems in machine learning, wireless sensor networks, social networks, smart grids, etc.

To date, a large number of distributed algorithms have been developed for distributed unconstrained optimization problems. In general, these algorithms can be classified into two categories: dual-decomposition-based algorithms and dynamic-average-consensus-based algorithms. The dual-decomposition-based algorithms utilize a consensus equality constraint to reformulate unconstrained optimization problems into constrained ones such that decision variables of agents are decoupled, then integrate ideas from centralized primal-dual methods. Typical of such algorithms are EXTRA [40], PG-EXTRA [41], NIDS [18], ABC [48], and DCPA [5], etc. On the other hand, the dynamic-average-consensus-based algorithms introduce auxiliary variables to track the average of some quantities that need to reach a consensus among agents, such as tracking the average gradients of all agents’ local objectives. To name a few, Aug-DGM [49], DIGing [26], Push–Pull Gradient [35], Harnessing Smoothness [36], and TV-\(\mathcal{A}\mathcal{B}\) [39], etc. Ideas behind these methods are generalized to design algorithms for solving distributed constrained optimization problems arising in practical applications.

In this paper, we consider the following constrained consensus optimization problem over a multi-agent network consisting of m agents,

where \(\mathcal {K}=\Re ^p_-\times \{0_q\}\), implying that the constraints include inequality and equality. \(f_i:\Re ^n\rightarrow \Re \), \(h_i:\Re ^n\rightarrow \Re ^{p+q}\), \(i=1,2,\dots ,m\), and \(\mathcal {X}\) is a subset of \(\Re ^n\). For each \(i=1,2,\dots ,m\), the local objective \(f_i\) and the constraint function \(h_i\) are only known by agent i, but are not available to other agents. All agents cooperate to find an optimal solution to (DCOP) through peer-to-peer communication and local computation.

1.1 Motivations

The optimization problem (DCOP) is motivated by its applications in stochastic programs, distributed optimization, optimal control, etc. Due to space limitations, we will present two important examples of applications.

Example 1

Consider the following stochastic programming with expectation constraints,

where \(\xi \) is a random vector supported on \(\mathcal {P}\subseteq \Re ^n\) and independent of x. \(F(x,\xi ): \Re ^p\times \mathcal {P}\rightarrow \Re \) and \(G(x,\xi ):\Re ^p\times \mathcal {P}\rightarrow \Re ^q\). The problem (1.1) has many applications in machine learning, finance and data analysis, and operations research, e.g., Neyman–Pearson classification [44] and asset allocation problems [15, 38].

The sample average approximation (SAA) method is widely used for stochastic optimization problems. Applying SAA to solve (1.1), one first generates N independently and identically distributed (i.i.d.) random samples \(\{\xi _i\}_{i=1}^N\) and then uses the averages \(\frac{1}{N}\sum _{i=1}^NF(x,\xi _i)\) and \(\frac{1}{N}\sum _{i=1}^NG(x,\xi _i)\) to approximate f(x) and g(x), respectively. The associated approximation optimization subproblem is

It is well known that optimal solutions of (1.2) asymptotically solve (1.1) as the sample size N tends to infinity. An immediately following question is how to solve such subproblems.

Example 2

If the objective f(x) and constraint function h(x) of (DCOP) both have separable structures, namely, \(x=(x_1,x_2,\dots ,x_m)\), \(f_i(x)=\tilde{f}_i(x_i)\), and \(h_i(x)=\tilde{h}_i(x_i)\), (DCOP) reduces to the following constraint-coupled optimization problem,

The problem (CCOP) has been widely investigated in smart grids, optimal control, machine learning, etc. For instance, in multi-micro energy grid systems [51], the coupled constraints character that the sum of users’ power consumption is equal to the total power generation of grids and the total carbon emissions are not more than a given upper bound. In distributed resource allocation problems [27, 33], the relation of allocating some given resource to agents is formulated as the coupled constraints. Besides, (CCOP) also appears in problems such as distributed model predictive control [6], network utility maximization [20], and distributed estimation [13], etc.

Theoretically, algorithms for (CCOP) can be adopted to solve (DCOP) at the cost of adding \(m-1\) equality constraints, namely, \(x_1=x_2=\dots =x_m\). However, in practical computations, this causes challenges if the network size m and the variable dimension n are relatively large, e.g., \(m=1000\), \(n=100\). Moreover, the consensus constraints, \(x_i=x_j\), for each \(j\in \mathcal {N}_i\) (the set of neighbors of agent i), play an important role in applications such as distributed regression problems [50] and optimal control [24, 45], which motivates us to consider the lifted consensus optimization problem (DCOP).

1.2 Related Work

To accommodate the demands of practical applications, researchers aim at designing distributed algorithms that converge faster and can apply to more general multi-agent networks.

The earlier studies of distributed constrained consensus optimization problems focused on the case where agents’ decisions are only subject to local constraint sets. The distributed projection (sub)gradient method [30] is a seminal work in this direction. Afterward, a large number of dual-decomposition-based [16, 52] or dynamic-average-consensus-based [3, 21, 22] distributed algorithms with fast convergence rates have been proposed to solve such problems.

The first attempt to focus on distributed consensus optimization with equality and inequality constraints may be the work of [53], which presented a distributed primal-dual algorithm with vanishing step-sizes. Afterward, the community aims to design faster convergent distributed algorithms with a fixed step-size for such problems, e.g., see [12, 14, 23]. Under various assumptions such as smoothness and strong-convexity of functions, these algorithms have sublinear or linear convergence rates, while their considered equality and inequality constraints are shared with all agents.

The problem (CCOP) has attracted increasing research interest as the coupled constraints frequently appear in many fields. Approaches directly aiming at (CCOP) typically leverage the Lagrangian duality to deal with the coupled constraints, because the Lagrangian has a separable structure in the primal decision variables and the dual problem is a special case of (DCOP). Works based on the dual-decomposition method are references [2, 10, 27, 42], to mention a few. There are also consensus-based distributed algorithms for such problems, e.g., see [1, 8, 9, 22, 43]. While these mentioned papers considered only the linearly coupled constraints, i.e., \(\mathcal {K}=\{0_q\}\) and each \(\tilde{h}_i\) is affine. The recent works [4, 19, 47] investigated (CCOP) with coupled equality and inequality constraints, and proposed the distributed primal-dual gradient method [19], the integrated primal-dual proximal method [47], and the augmented Lagrangian tracking method [4], in which the integrated primal-dual proximal method has an O(1/k) convergence rate in terms of optimality and feasibility, the other two algorithms asymptotically solve (CCOP) with constant step-sizes but no results on rate analysis.

In general, the dual-decomposition-based algorithms have nice convergence and rates while lacking extensibility to directed and time-varying networks. The dynamic-average-consensus-based algorithms are inexact gradient methods. If the objective and constraint functions do not have good properties, such as strong-convexity or gradient Lipschitz continuity, convergence analysis of these algorithms is difficult. Moreover, such algorithms can be easily extended to directed and time-varying communication networks of multi-agent, but the complex networks also bring challenges in algorithm analysis.

It’s worth noting that the distributed algorithms mentioned so far for the problems (CCOP) or (DCOP) are all executed over a fixed (static) multi-agent network. To my knowledge, there is very little work that investigates (DCOP) over time-varying networks, except for [17]. The authors of [17] proposed a distributed proximal primal-dual algorithm that has an \(O(1/\sqrt{k})\) convergence rate for solving (DCOP).

In this paper, we integrate ideas from the algorithm TV-\(\mathcal{A}\mathcal{B}\) [39] and the primal-dual optimization method to develop a distributed algorithm for solving (DCOP) over time-varying and directed multi-agent networks. The main contributions of the article are threefold. First, this paper considers distributed consensus optimization problems with coupled affine equality and inequality (not necessarily affine) constraints. The objective and constraint functions are only convex and differentiable. Such assumptions are fundamental settings for consensus-based algorithms. The considered problem (DCOP) is more general and challenging than many papers in the literature, e.g., [2, 10, 27]. Second, the investigated multi-agent communication network is more general than the undirected and static ones considered by [2, 10, 19, 27, 42]. Third, The foundational assumptions on the objective and constraint functions, as well as the time-varying communication network of multi-agent bring challenges to analysis of the proposed inexact gradient algorithm, we still establish its convergence and iteration complexity.

The rest of this paper is organized as follows. Section 2 provides necessary preliminaries and constructs the saddle point problem of (DCOP). Section 3 develops a consensus-based distributed primal-dual algorithm. Section 4 presents the main results about the convergence properties of the proposed algorithm. Section 5 simulates the presented algorithm using a realistic example and compares it with some related methods. Finally, Sect. 6 makes some conclusions.

2 Preliminaries

In this section, we will present some preliminaries in graph theory and assumptions on the considered optimization problem and multi-agent network.

2.1 Notion and Notations

At each time slot \(k\ge 0\), a multi-agent system consisting of m agents is modeled as a directed graph \(\mathcal {G}(k)=(\mathcal {V},\mathcal {E}(k))\), where \(\mathcal {V}=\{1,2,\dots ,m\}\) and \(\mathcal {E}(k)\) is the set of directed edges. Let \(A(k)=[a_{ij}(k)]\) and \(B(k)=[b_{ij}(k)]\) be two matrices that are compatible with the graph \(\mathcal {G}(k)\), which means that \((i,j)\in \mathcal {E}(k)\) if and only if \(a_{ij}(k)>0\) and \(b_{ij}(k)>0\). We write \(\mathcal {N}_i^\mathrm{{out}}(k)=\{j\in \mathcal {V}: (i,j)\in \mathcal {E}(k)\}\) and \(\mathcal {N}_i^\mathrm{{in}}(k)=\{j\in \mathcal {V}: (j,i)\in \mathcal {E}(k)\}\) as the sets of out-neighbors and in-neighbors of agent i, respectively. The symbol \(\vert \mathcal {N}_i^\mathrm{{out}}(k)\vert \) (resp. \(\vert \mathcal {N}_i^\mathrm{{in}}(k)\vert \)) represents the out-degree (resp. in-degree) of agent i.

The properties of adjacency matrices of connected (or strongly connected) graphs, such as their eigenvalues and eigenvectors, are closely related to stochastic vectors. Lagrangian function and its saddle points are also fundamental concepts in constrained optimization. To clarify the presentation of the considered problem and algorithm analysis, we introduce the following three notions.

Definition 2.1

(Stochastic vector [29]) A vector \(\pi \in \Re ^m\) is said to be stochastic if its components \(\pi _i\) satisfy that

Definition 2.2

(Absolute probability sequence [39]) For row-stochastic matrices \(\{\mathcal {R}_k\}\), an absolute probability sequence is a sequence \(\{\pi _k\}\) of stochastic vectors such that

Definition 2.3

(Saddle point [37]) Consider a function \(\mathcal {L}:X\times S\rightarrow \Re \), where X and S are non-empty subsets of \(\Re ^n\) and \(\Re ^{p+q}\) respectively, a pair of vectors \((x^*,\lambda ^*)\in X\times S\) is called a saddle point of function \(\mathcal {L}\) over \( X\times S\), if for all \((x,\lambda )\in X\times S\), it holds that

In this paper, the gradient vector of a function f at x is denoted by \(\nabla f(x)\), \(\Vert \cdot \Vert \) represents the Frobenius-norm of a matrix with suitable dimensions, and \(\langle \cdot ,\cdot \rangle \) is the inner product matched with the norm \(\Vert \cdot \Vert \). The projection of a point x onto a subset \(\varOmega \subseteq \Re ^n\) is written as \(P_\varOmega (x)\). For a non-empty subset \(\varOmega \), we denote its relative interior by \(\textrm{ri}(\varOmega )\). \(1_m\) represents the column vector in \(\Re ^m\) whose entries are all ones.

2.2 Assumptions and Saddle Point Problem

This paper focuses on time-varying communication networks of multi-agent. We make the following assumptions on the network communication graphs, which are standard for consensus-based algorithms, e.g., see [29, 30, 39].

Assumption 2.1

(Periodical strong connectivity) There exists a positive integer B such that the directed graph \((\mathcal {V},\bigcup _{t=0}^{B-1}\mathcal {E}(t+k))\) is strongly connected, for all \(k\ge 0\).

Assumption 2.2

(Weights) Let \(A(k)=[a_{ij}(k)]_{m\times m}\) and \(B(k)=[b_{ij}(k)]_{m\times m}\) be two matrices that are compatible with the graph \(\mathcal {G}(k)\).

-

(a)

Stochasticity: Matrices \(\{A(k)\}\) and \(\{B(k)\}\) are row-stochastic and column-stochastic, respectively.

-

(b)

The graph \(\mathcal {G}(k)\) has self-loops, i.e., \(a_{ii}(k)>0, b_{ii}(k)>0, \forall \, i\in V, k\ge 0\).

-

(c)

Uniform positivity: There is a scalar \(\eta \in (0,1)\) such that \(a_{ij}(k)\ge \eta \) and \(b_{ij}(k)\ge \eta , \forall \, (i,j)\in \mathcal {E}(k), k\ge 0\).

The matrices A(k) and B(k) are singly stochastic. Agents can use their out-degrees and in-degrees to construct these two matrices easily, which avoids the difficulty of generating symmetric doubly stochastic adjacency matrices over directed and time-varying networks. Assumption 2.2 (b) implies that each agent performs local computation using information held by itself and received from its in-neighbors. Item (c) indicates that the influence of each agent on the network is not vanishing.

Smooth convex optimization problems are within the range of our consideration, so we make the following standard assumption on (DCOP).

Assumption 2.3

(Convexity and smoothness)

-

(a)

The local function \(f_i\) of agent i is convex and continuous differentiable over the subset \(\mathcal {X}\), \(\forall \, i=1,2,\ldots ,m\).

-

(b)

The function \(h_i^j\) (the j-th coordinate component of \(h_i\)) corresponding to the inequality constraints is convex and continuous differentiable, \(j=1,\ldots ,p\), and \(h_i^l\) corresponding to the equality constraints is affine, \(l=p+1,\ldots ,p+q, \forall \, i=1,\ldots ,m\).

-

(c)

The non-empty subset \(\mathcal {X}\) of \(\Re ^n\) is compact and convex.

To construct a primal-dual method, a fundamental assumption is that the strong duality holds, which is guaranteed by the following Slater’s condition.

Assumption 2.4

(Slater’s condition) Suppose that there exists a point \(\bar{x}\in \textrm{ri}(\mathcal {X})\), such that \(\sum _{i=1}^mh_i(\bar{x})\in \textrm{ri}(\mathcal {K})\).

Let \(\mathcal {K}^\circ \) represent the polar of \(\mathcal {K}\), namely, \(\mathcal {K}^\circ =\Re ^p_+\times \Re ^q\). The saddle point problem of (DCOP) can be formulated as

where \(\mathcal {L}_i(x,\lambda )=f_i(x)+\lambda ^\top h_i(x)\), \(\lambda ^{\textrm{I}}\) and \(\lambda ^{\textrm{E}}\) are the multiplies respectively corresponding to the inequality and equality constraints, and \(~\lambda =(\lambda ^{\textrm{I}},\lambda ^{\textrm{E}})\in \mathcal {K}^\circ \).

Under Slater’s condition, there does not exist a duality gap between the primal problem (DCOP) and its dual. We then attempt to develop a distributed algorithm to solve the max-min problem (2.1) and find an optimal solution to (DCOP).

For any \(\bar{\lambda }\in \mathcal {K}^\circ \), denote

The boundness of multiplier \(\lambda ^{\textrm{I}}\) corresponding to the inequality constraints is indispensable in the design and analysis of our algorithm, which is guaranteed by the following lemma.

Lemma 2.1

([28], Lemma1) For any \(\bar{\lambda }\in \mathcal {K}^\circ \) and \(\alpha \in \Re \), it holds that

where \(\gamma (\bar{x})=\min _{1\le j\le p}\{-\sum _{i=1}^mh_i^j(\bar{x})\}\) and \(\bar{x}\) satisfies Assumption 2.4.

Let \(q^*\) denote the dual-optimal value and \(Q^*\) denote the dual optimal solutions set, namely

According to Lemma 2.1, the optimal multiplier \(\lambda ^{\textrm{I}}\) is bounded. Select an arbitrary vector \(\bar{\lambda }\in \mathcal {K}^\circ \), define

It follows that \(Q^*\subseteq Q(\bar{\lambda })\subseteq Q\). The set Q will be used in designing a distributed algorithm in Sect. 3. Note that Q is the Cartesian product of a ball in \(\Re ^p\) and \(\Re ^q\), and the projection onto Q has a simple closed-form solution.

3 Design of Distributed Algorithm

3.1 Primal-Dual Projected Gradient Method

Consider the following centralized optimization problem with equality and inequality constraints,

where \(\mathcal {K}=\Re ^p_-\times \{0_q\}\). The saddle point problem associated with (3.1) is

where \(\tilde{L}(x,\lambda )=\tilde{f}(x)+\lambda ^\top \tilde{h}(x)\). Given a pair \((x^k,\lambda ^k)\), the primal-dual projected gradient method [7] for solving (3.2) obeys the following rules to generate the successive primal-dual pair \((x^{k+1},\lambda ^{k+1})\),

where \(\alpha _k>0\) is a given step-size. Review the first-order optimality conditions [37, Theorem 36.6] for (3.1), it holds that \(x\in \mathcal {X}\) is an optimal solution to (3.1) if and only if there is a primal-dual pair \((x,\lambda )\) such that

for any scalar \(\alpha >0\). Therefore, the primal-dual projected gradient method also can be viewed as the fixed point method.

3.2 TV-\(\mathcal{A}\mathcal{B}\) Algorithm

Over directed time-varying multi-agent networks consisting of m agents, consider an unconstrained distributed optimization problem:

where \(f_i\) is the local objective function of agent i. The authors of [39] proposed a distributed algorithm referred to as the time-varying \(\underline{\mathcal{A}\mathcal{B}}\) (TV-\(\mathcal{A}\mathcal{B}\)) gradient method for this problem. In specific, the updating rules of variables are

where A(k) and B(k) are row-stochastic and column-stochastic matrices, respectively. The auxiliary variables \(\{y_i^k\}_{i=1}^m\) are used to track the average of \(\{\nabla f_i(x_i^k)\}_{i=1}^m\), precisely, it holds that \(\frac{1}{m}\sum _{i=1}^my_i^k=\frac{1}{m}\sum _{i=1}^m\nabla f_i(x_i^k)\) for all \(k\ge 0\). Each agent i then performs an inexact gradient descent step along the direction \(-y_i^k\). Obeying such distributed strategies, all agents adjust their decision vectors \(\{x_i^k\}_{i=1}^m\) to be consensual (i.e., \(\Vert x_i^k-x_j^k\Vert \rightarrow 0\) as \(k\rightarrow \infty \)) and optimal (i.e., \(\Vert x_i^k-x^*\Vert \rightarrow 0\) as \(k\rightarrow \infty \) for some optimal solution \(x^*\)).

In a multi-agent system, if agents adopts the primal-dual gradient method (3.3) to solve the saddle point problem (2.1) associated with (DCOP), they must access to the global information \(\sum _{i=1}^m\nabla \mathcal {L}_i\). However, in distributed settings, this information is not available to any agent. To avoid such an obstacle, we strategically integrate the ideas behind TV-\(\mathcal{A}\mathcal{B}\) and the primal-dual gradient method to propose the following primal-dual distributed algorithm for solving (DCOP),

where \(z_i^0=\nabla _x\mathcal {L}_i(x_i^0,\lambda _i^0)\) and \( y_i^0=h_i(x_i^0)\), for each \( i=1,2,\ldots ,m\).

In the presented algorithm (3.3), each agent i generates a primal-dual pair \((x_i^k,\lambda _i^k)\) to estimate the saddle point \((x^*,\lambda ^*)\) of the max-min problem (2.1), and employs the sequences \(\{z_i^k\}_{k\ge 0}\) and \(\{y_i^k\}_{k\ge 0}\) to track the average gradients \(\frac{1}{m}\sum _{i=1}^m\nabla _x\mathcal {L}_i(x_i^k,\lambda _i^k)\) and \(\frac{1}{m}\sum _{i=1}^m\nabla _\lambda \mathcal {L}_i(x_i^k,\lambda _i^k)\), respectively. After one round of communication, each agent i mixes the information received from its in-neighbors, then uses the mixed information and local data to output new estimate vector \((x_i^{k+1},\lambda _i^{k+1})\). The local computation of each agent is independent and can be performed by agents in parallel.

Remark 3.1

For the updating formulas of the multipliers \(\lambda _i^k\), we adopt the projection operator \(P_Q\) instead of \(P_{\mathcal {K}^\circ }\). The reason is that we consider the saddle point problem

instead of (2.1). One can get that the problems (2.1) and (3.5) have the same saddle points, e.g., see [53, Lemma 3.1]. The boundness of the sequence \(\{(\lambda _i^k)^{\textrm{I}}\}\) is guaranteed by the bounded set \(Q_I(\alpha )\), where \(\lambda _i^k=\left( (\lambda _i^k)^{\textrm{I}},(\lambda _i^k)^{\textrm{E}}\right) \).

In what follows, we assume that the step-size sequence \(\{\alpha _k\}\) is squared summable, which is also considered by references [2, 10, 32], etc. The step-size conditions are easy to satisfy, e.g., choose \(\alpha _k = c/k^\beta \) with \(\beta \in (1/2,1]\) and constant \(c>0\).

Assumption 3.1

Suppose that the sequence \(\{\alpha _k\}_{k=0}^\infty \) of step-sizes satisfies that

Under Assumption 2.3, the subset \(\mathcal {X}\) is compact. According to the continuity and differentiability of \(f_i\) and \(h_i\), the gradients of \(f_i\) and \(h_i\) are Lipschitz continuous over \(\mathcal {X}\). Without loss of generality, for any \(x, y\in \mathcal {X}\) and \(i\in \mathcal {V}\), assume that there exists a constant \(L>0\) such that

4 Convergence Analysis

In this section, we analyze the proposed Algorithm 1 and show the obtained theoretical results about the convergence and rate. To ease the analysis, we place some supporting lemmas in “Appendix C” and define the following notations,

We then rewrite the iterative schemes of Algorithm 1 into the following compact form,

where the matrices \(\{\mathcal {A}_k\}\) and \(\{\mathcal {B}_k\}\) are also row-stochastic and column-stochastic, respectively. Furthermore, we make a state transformation: \(s^k=V_k^{-1}\eta ^k\), where \(V_k=\textrm{diag}(v_k)\) and \(v_k\) are given by

Consequently, (4.1) and (4.2) can be equivalently rewritten as

where \(\mathcal {R}_k=V_{k+1}^{-1}\mathcal {B}_kV_k\). It can be verified that \(\{\mathcal {R}_k\}\) is a sequence of row-stochastic matrices such that \(\{\frac{1}{2m}v_k\}\) is an absolute probability sequence. For any \(k\ge s\ge 0\), define

As shown in Lemma C.1, for any index \(s\ge 0\), the row-stochastic matrix sequence \(\{\varPhi _\mathcal {A}(k,s)\}\) linearly converges to a matrix \(\varPhi _\mathcal {A}(s)\) whose columns are stochastic vectors, as \(k\rightarrow \infty \). The asymptotic properties of the matrix sequences \(\{\varPhi _\mathcal {A}(k,s)\}\) and \(\{\varPhi _\mathcal {R}(k,s)\}\) are used to estimate consensus errors of the the sequence \(\{\omega ^k\}\).

From (4.2), it holds that

which is equivalent to

Noticing that the initialization \(\eta ^0=\nabla \textrm{L}(\omega ^0)\), the following tracking equations hold,

Define

where the sequence \(\{\phi _k\}\) is given by Lemma C.2. Therefore,

which means that \(\bar{s}^k\) tracks the average gradient of the Lagrange function \(\mathcal {L}(x,\lambda )\). Since the subset \(\mathcal {X}\) is compact and convex, \(\nabla _x\mathcal {L}(x,\lambda )\) and \(\nabla _\lambda \mathcal {L}(x,\lambda )\) are bounded over \(\mathcal {X}\), which implies the sequence \(\{\bar{s}^k\}\) is bounded. Furthermore, the following lemma claims that the sequence \(\{s^k\}\) is also bounded.

Lemma 4.1

There is a constant \(M>0\) such that

Proof

See “Appendix A”. \(\square \)

According to Algorithm 1, each agent i generates the local estimate \(\omega _i^k=(x_i^k,\lambda _i^k)\) to the saddle point \(\omega ^*=(x^*,\lambda ^*)\). It’s necessary to evaluate the error between any two vectors \(\omega _i^k\) and \(\omega _j^k\), or equivalently, the consensus error \(\Vert \omega ^k-1_{2m}\bar{\omega }^k\Vert \), which is the constraint violation of \(x_i=x_j\) and \(\lambda _i=\lambda _j\) for all \((i,j)\in \mathcal {E}_k, k\ge 0\). The following lemma characterizes the consensus errors of the sequences \(\{\omega ^k\}\) and \(\{s^k\}\).

Lemma 4.2

Under Assumptions 2.1–2.4 and 3.1, for the sequences generated by Algorithm 1, it meets that

Proof

See “Appendix B”. \(\square \)

The rest of the convergence analysis is to evaluate the optimality error \(\Vert \bar{\omega }^k-\omega ^*\Vert \), which requires strategically applying the results of Lemmas 4.1 and 4.2, and the supporting lemmas in “Appendix C”.

Theorem 4.1

Under Assumptions 2.1–2.4 and 3.1, for any \(i\in \mathcal {V}\), the sequence \(\{(x_i^k,\lambda _i^k)\}\) generated by Algorithm 1 converges to some saddle point \((x^*,\lambda ^*)\) of the Lagrange function \(\mathcal {L}(x,\lambda )\), i.e.,

Proof

For any \(\omega ^*=(x^*,\lambda ^*)\) \(\in \mathcal {X}\times \mathcal {K}^\circ \), define

We then split the proof into the following three steps.

Step 1: Verify the following inequality,

From (4.4) and noticing the non-expansive projection operator \(P_\varOmega \), one has

Since \(\{\frac{1}{2m}v_k\}\) is a stochastic vector sequence and \(V_k=\textrm{diag}(v_k)\), it follows that

where the last inequality derives from the boundedness of \(\{s^k\}\) (Lemma 4.1). Given that the matrix \(\mathcal {A}_k\) is row-stochastic, it holds that

As to the term \(2\alpha _k\left\langle V_ks^k,\mathcal {A}_k\omega ^k-1_{2m}\omega ^*\right\rangle \), one has

Note that the Lagrange function \(\mathcal {L}(x,\lambda )\) is convex-concave with respect to x and \(\lambda \), applying the subdifferential inequality of convex function yields

Combining the above relations to yield (4.8).

Step 2: Verify that

According to the Cauchy inequality and the Lipschitz continuity of \(\nabla \mathcal {L}\), there exist positive constants \(L_1, L_2\) and \(L_3\) such that

By Lemma 4.2, the three scalar sequences \(\{\vert I_1^k\vert \}\), \(\{\vert I_2^k\vert \}\), and \(\{\vert I_3^k\vert \}\) are summable.

Step 3: Prove the convergence. In the inequality (4.8), choose \(\omega ^*=(x^*,\lambda ^*)\) as an arbitrary saddle point of the Lagrange function \(\mathcal {L}(x,\lambda )\). The definition of saddle points indicates that

Consequently, it follows from Lemma C.3 that the limit \(\lim _{k\rightarrow \infty }\Vert \omega ^k-1_{2m}\omega ^*\Vert \) exists and

In view of \(\sum _{k=0}^\infty \alpha _k=\infty \), it must hold that

Furthermore, the Lagrange function \(\mathcal {L}(x,\lambda )\) is continuous differentiable and convex-concave, hence,

or equivalently, \(\lim _{k\rightarrow \infty }\Vert \bar{\omega }^k-\omega ^*\Vert =0\). From Lemma 4.2, it’s clear that

The proof is completed. \(\square \)

The convergence results of Theorem 4.1 show that for each agent \(i\in \{1,2,\dots ,m\}\), its decision variable sequence \(\{x_i^k\}_{k=0}^\infty \) converges to an optimal solution \(x^*\) of (DCOP), and the Lagrange multiplier estimate sequence \(\{\lambda _i^k\}_{k=0}^\infty \) converges to an dual-optimal solution \(\lambda ^*\). In other words, by communicating with neighbors and computing locally, all agents cooperatively solve (DCOP).

To present results about the convergence rate of Algorithm 1, we define the following weighted sequences:

where \(\bar{x}^k\) and \(\bar{\lambda }^k\) are given by (4.6). The following theorem characters a sublinear convergence rate of Algorithm 1 in terms of the primal-dual gap.

Theorem 4.2

Let \((x^*,\lambda ^*)\) be an arbitrary saddle point of \(\mathcal {L}(x,\lambda )\). Under Assumptions 2.1–2.4 and 3.1, there exists a constant \(M_1>0\) such that

Furthermore, if one chooses the step-size sequence as \(\alpha _k=\frac{c}{k^{1/2+\beta }}\) with \(\beta \in (0,1/2)\) and constant \(c>0\), the primal-dual gap satisfies that

where \(\lfloor n\rfloor \) is the integer part of a positive real number n.

Proof

By the definition of saddle points, it’s clear that

For any fixed \(x\in \Re ^n\) and \(\lambda \in \Re ^{p+q}\), in view of the convexity of the functions \(\mathcal {L}(\cdot ,\lambda )\) and \(-\mathcal {L}(x,\cdot )\), one has

From the proof of Theorem 4.1, it holds that

Let \(M_1:=M^\prime +M^{\prime \prime }\), it’s clear that (4.10) holds. Take \(\alpha _k=\frac{c}{k^{1/2+\beta }}\), one can get the following estimate,

Let \(\phi (x)=x^{1/2-\beta }\) with \(\beta \in (0,1/2)\), it’s easy to verify that \(\phi (x)\) is a concave function, hence,

Choose \(s=\lfloor n/2\rfloor \) in (4.10), it follows that

The proof is completed. \(\square \)

Theorem 4.2 shows that the primal-dual gap \(\mathcal {L}(\tilde{x}_{\lfloor n/2\rfloor }^n,\lambda ^*)-\mathcal {L}(x^*,\tilde{\lambda }_{\lfloor n/2\rfloor }^n)\) decays to zeros at a rate of \(O(\frac{1}{n^{1/2-\beta }})\). If one chooses the value of \(\beta \) to be small enough, the rate is near \(O(1/\sqrt{n})\), where n represents the number of iterations.

5 Numerical Simulations

In this section, we test the proposed algorithm using two examples. The first one is motivated by applications in wireless networks and is used to justify the theoretical results in the paper. Another one is an empirical risk optimization problem to be solved in the Neyman–Pearson (NP) classification [44], which is used to compare the performance of Algorithm 1 with the distributed proximal primal-dual algorithm [17].

5.1 Example in Wireless Networks

Consider the following distributed optimization problem with nonlinear constraints,

where the constraints with this form arise, for instance, in wireless networks to ensure quality of service. This problem is also considered by [17, 25]. Set \(b=5\), \(c_i=i/m\), \(d_i=i/(m+1)\) for each \(i=1,2,\dots ,100\), where the network size (number of agents) \(m=100\). The optimal value \(f^*\) is computed by the convex optimization toolbox [11] (CVX) under the best precision. We then use \(f^*\) as a benchmark for our algorithm.

Convergence of algorithm 1

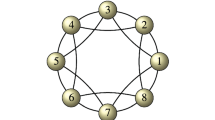

As shown in Fig. 1, the network topology of 100 agents is selected in turn among the line, star, circle, and Erdős–Rényi random graphs with period 4. The corresponding adjacency matrices A(k) and B(k) are constructed by

where \(\vert \mathcal {N}_i^\mathrm{{in}}(k)\vert \) and \(\vert \mathcal {N}_j^\mathrm{{out}}(k)\vert \) are the in-degree of agent i and the out-degree of agent j at time k, respectively.

We execute the proposed Algorithm 1 with step-size \(\alpha _k=1/k^{0.51}\) to solve (5.1) on a laptop computer. The procedure stops if the maximum of objective error and constraint violation decays to smaller than the given tolerance error \(10^{-4}\), where the objective error is \(\vert f(\bar{x}^k)-f^*\vert /\vert f^*\vert \), the constraint violation is the sum of \(\max \left( \sum _{i=1}^{100}-d_i\log (1+\bar{x}^k)-b,0\right) \) (feasibility error) and \(\frac{1}{100}\sum _{i=1}^{100}\Vert x_i^k-\bar{x}^k\Vert \) (consistency error). Here, \(\bar{x}^k=\frac{1}{100}\sum _{i=1}^{100}x_i^k\).

At the beginning of the procedure, each agent i individually chooses the local decision vector \(x_i^0\). As Algorithm 1 runs, Fig. 2 shows that all agents reach a consensus decision by local computations and exchanging information with their neighbors. Furthermore, Fig. 3 shows that this consensus decision vector is an optimal solution with precision of \(10^{-4}\) to the problem (5.1), which verifies the theoretical result of Algorithm 1. In summary, Algorithm 1 guides all agents to adjust their decision vectors to be feasible, consistent, and optimal.

5.2 Neyman–Pearson Classification Problem

Suppose that h is a classifier to predict 1 and \(-1\). The type I error (misclassifying class \(-1\) as 1) and type II error (misclassifying class 1 as \(-1\)) are, respectively, defined by

where \(\psi \) is some merit function. Different from the conventional binary classification in machine learning, the NP classification paradigm is developed to learn a classifier by minimizing type II error subject to that type I error is below a user-specified level \(\tau >0\), see [44] and references therein. Specifically, let \(\mathcal {H}\) be a given class of classifiers. The NP classification is to solve the following problem,

In what follows, we consider its empirical risk minimization counterpart. Given a labeled training dataset \(\{a_i\}_{i=1}^N\) consists of the positive set \(\{a_i^+\}_{i=1}^{N_+}\) and the negative set \(\{a_j^-\}_{j=1}^{N_-}\), The associated empirical NP classification problem is

where \(\ell (\cdot )\) is a loss function, and \(\tau \) is a user-specified level, usually a small value. In this numerical test, we choose \(\ell (\cdot )\) as the \(\ell _2\)-norm regularized logistic loss function, i.e., \(\ell (x^\top a):=\log \left( 1+\exp (-x^\top a)\right) +\theta \Vert x\Vert _2^2\), \(\theta = 10^{-2}\), \(\tau =5\%\). The data \({a_i^+}\)’s and \(a_i^-\)’s are randomly sampled from the dataset CINA [46]. The sample size \(N_+=N_-=1000\) and the variable \(x\in \Re ^{132}\).

We run Algorithm 1 and the distributed proximal primal-dual (DPPD) method of [17] to solve (5.2). The step-size sequences of Algorithm 1 and DPPD are chosen as \(0.1/k^{0.505}\) and \(4/k^{0.5}\), respectively. The subproblems of DPPD are computed by Nesterov’s accelerated gradient method [31] with a tolerance error of \(10^{-6}\). We start these two algorithms from the same initial point and plot the evolution of their objective errors and constraint violations during 1000 iterations, where the objective error is \(\vert f(\bar{x}^k)-f^*\vert /\vert f^*\vert \) and the constraint violation is the sum of \(\max (g(\bar{x}^k),0)\) (feasibility error) and \(\frac{1}{1000}\sum _{i=1}^{1000}\Vert x_i^k-\bar{x}^k\Vert \) (consistency error). Here, \(\bar{x}^k=\frac{1}{1000}\sum _{i=1}^{1000}x_i^k\).

In Fig. 4a, the objective error of DPPD decays to a level of \(10^{-3}\) after about 220 iterations, then suddenly climbs to near \(10^{-1}\) and stays there. While the objective error of Algorithm 1 keeps decreasing except for the first few iterations and reaches \(10^{-4}\) after 1000 iterations. As shown in Fig. 4b, the constraint violation of Algorithm 1 descends faster than that of DPPD, which indicates that the decision variable sequence generated by Algorithm 1 moves to be feasible at a faster speed. Moreover, the ratio of CPU time between Algorithm 1 and DPPD is 1 : 1.25. In summary, Fig. 4 illustrates that Algorithm 1 is efficient and has an advantage in convergence speed over DPPD.

6 Conclusions

This article focused on distributed convex optimization problems with coupled equality and inequality constraints over directed and time-varying multi-agent networks. We proposed a distributed primal-dual algorithm for such problems and established its convergence and iteration complexity. In numerical simulations, we showed that our algorithm is effective for the problem considered in this paper. However, there are still some respects that need to improve. One is that the presented algorithm adopts diminishing step-sizes, which leads to a slow convergence speed. Therefore, we expect to modify the diminishing step-sizes of Algorithm 1 to a fixed step-size in future studies.

Data Availability Statements

The authors declare that all data supporting the findings of this study are available within the article and its supplementary information files.

References

Alessandro, F., Ivano, N., Giuseppe, N., Maria, P.: Tracking-ADMM for distributed constraint-coupled optimization. Automatica 117, 108,962 (2020)

Alessandro, F., Kostas, M., Simone, G., Maria, P.: Dual decomposition for multi-agent distributed optimization with coupling constraints. Automatica 84, 149–158 (2017)

Alessandro, F., Maria, P.: Distributed decision-coupled constrained optimization via proximal-tracking. Automatica 135, 109,938 (2022)

Alessandro, F., Maria, P.: Augmented Lagrangian tracking for distributed optimization with equality and inequality coupling constraints. Automatica 157, 111,269 (2023)

Alghunaim, S.A., Lyu, Q., Yan, M., Sayed, A.H.: Dual consensus proximal algorithm for multi-agent sharing problems. IEEE Trans. Signal Process. 69, 5568–5579 (2021)

Arauz, T., Chanfreut, P., Maestre, J.: Cyber-security in networked and distributed model predictive control. Annu. Rev. Control 53, 338–355 (2022)

Arrow, K.J., Hurwicz, L., Uzawa, H.: Studies in Linear and Nonlinear Programming. Stanford University Press, Palo Alto (1958)

Carli, R., Dotoli, M.: Distributed alternating direction method of multipliers for linearly constrained optimization over a network. IEEE Control Syst. Lett. 4(1), 247–252 (2020)

Chang, T.H.: A proximal dual consensus ADMM method for multi-agent constrained optimization. IEEE Trans. Signal Process. 64(14), 3719–3734 (2016)

Chang, T.H., Nedić, A., Scaglione, A.: Distributed constrained optimization by consensus-based primal-dual perturbation method. IEEE Trans. Autom. Control 59(6), 1524–1538 (2014)

CVX Researc Inc.: CVX: Matlab software for disciplined convex programming (2012). http://cvxr.com/cvx/

Hamedani, E.Y., Aybat, N.S.: Multi-agent constrained optimization of a strongly convex function over time-varying directed networks. In: 2017 55th Annual Allerton Conference on Communication, Control, and Computing (Allerton), pp. 518–525 (2017)

Hao, X., Liang, Y., Li, T.: Distributed estimation for multi-subsystem with coupled constraints. IEEE Trans. Signal Process. 70, 1548–1559 (2022)

Ion, N., Valentin, N.: On linear convergence of a distributed dual gradient algorithm for linearly constrained separable convex problems. Automatica 55, 209–216 (2015)

Lan, G., Zhou, Z.: Algorithms for stochastic optimization with function or expectation constraints. Comput. Optim. Appl. 76, 461–498 (2020)

Lei, J., Chen, H.F., Fang, H.T.: Primal-dual algorithm for distributed constrained optimization. Syst. Control Lett. 96, 110–117 (2016)

Li, X., Feng, G., Xie, L.: Distributed proximal algorithms for multiagent optimization with coupled inequality constraints. IEEE Trans. Autom. Control 66(3), 1223–1230 (2021)

Li, Z., Shi, W., Yan, M.: A decentralized proximal-gradient method with network independent step-sizes and separated convergence rates. IEEE Trans. Signal Process. 67(17), 4494–4506 (2019)

Liang, S., Wang, L.Y., Yin, G.: Distributed smooth convex optimization with coupled constraints. IEEE Trans. Autom. Control 65(1), 347–353 (2020)

Liao, S.: A fast distributed algorithm for coupled utility maximization problem with application for power control in wireless sensor networks. J. Commun. Netw. 23(4), 271–280 (2021)

Liu, C., Li, H., Shi, Y.: A unitary distributed subgradient method for multi-agent optimization with different coupling sources. Automatica 114, 108,834 (2020)

Liu, H., Yu, W., Chen, G.: Discrete-time algorithms for distributed constrained convex optimization with linear convergence rates. IEEE Trans. Cybern. 52(6), 4874–4885 (2022)

Liu, Q., Yang, S., Hong, Y.: Constrained consensus algorithms with fixed step size for distributed convex optimization over multiagent networks. IEEE Trans. Autom. Control 62(8), 4259–4265 (2017)

Liu, T., Han, D., Lin, Y., Liu, K.: Distributed multi-UAV trajectory optimization over directed networks. J. Frankl. Inst. 358(10), 5470–5487 (2021)

Mateos-Núñez, D., Cortés, J.: Distributed saddle-point subgradient algorithms with Laplacian averaging. IEEE Trans. Autom. Control 62(6), 2720–2735 (2017)

Nedić, A., Olshevsky, A., Shi, W.: Achieving geometric convergence for distributed optimization over time-varying graphs. SIAM J. Optim. 27(4), 2597–2633 (2017)

Nedić, A., Olshevsky, A., Shi, W.: Improved convergence rates for distributed resource allocation. In: 2018 IEEE Conference on Decision and Control (CDC), pp. 172–177 (2018)

Nedić, A., Ozdaglar, A.: Approximate primal solutions and rate analysis for dual subgradient methods. SIAM J. Optim. 19(4), 1757–1780 (2009)

Nedić, A., Ozdaglar, A.: Distributed subgradient methods for multi-agent optimization. IEEE Trans. Autom. Control 54(1), 48–61 (2009)

Nedić, A., Ozdaglar, A., Parrilo, P.A.: Constrained consensus and optimization in multi-agent networks. IEEE Trans. Autom. Control 55(4), 922–938 (2010)

Nesterov, Y.: Gradient methods for minimizing composite functions. Math. Program. 140, 125–161 (2013)

Notarnicola, I., Notarstefano, G.: Constraint-coupled distributed optimization: a relaxation and duality approach. IEEE Trans. Control Netw. Syst. 7(1), 483–492 (2020)

Nowak, R.: Distributed EM algorithms for density estimation and clustering in sensor networks. IEEE Trans. Signal Process. 51(8), 2245–2253 (2003)

Polyak, B.: Introduction to Optimization. Optimization Software, New York (2020)

Pu, S., Shi, W., Xu, J., Nedić, A.: Push-pull gradient methods for distributed optimization in networks. IEEE Trans. Autom. Control 66(1), 1–16 (2021)

Qu, G., Li, N.: Harnessing smoothness to accelerate distributed optimization. IEEE Trans. Control Netw. Syst. 5(3), 1245–1260 (2018)

Rockafellar, R.T.: Convex Analysis. Princeton Mathematical Series (1970)

Rockafellar, R.T., Uryasev, S.: Optimization of conditional value-at-risk. J. Risk 2, 21–41 (2000)

Saadatniaki, F., Xin, R., Khan, U.A.: Decentralized optimization over time-varying directed graphs with row and column-stochastic matrices. IEEE Trans. Autom. Control 65(11), 4769–4780 (2020)

Shi, W., Ling, Q., Wu, G., Yin, W.: Extra: an exact first-order algorithm for decentralized consensus optimization. SIAM J. Optim. 25(2), 944–966 (2015)

Shi, W., Ling, Q., Wu, G., Yin, W.: A proximal gradient algorithm for decentralized composite optimization. IEEE Trans. Signal Process. 63(22), 6013–6023 (2015)

Simonetto, A., Jamali-Rad, H.: Primal recovery from consensus-based dual decomposition for distributed convex optimization. J. Optim. Theory Appl. 168(1), 172–197 (2016)

Su, Y., Wang, Q., Sun, C.: Distributed primal-dual method for convex optimization with coupled constraints. IEEE Trans. Signal Process. 70, 523–535 (2022)

Tong, X., Feng, Y., Zhao, A.: A survey on Neyman–Pearson classification and suggestions for future research. WIREs Comput. Stat. 8(2), 64–81 (2016)

Wiltz, A., Chen, F., Dimos, V.D.: A consistency constraint-based approach to coupled state constraints in distributed model predictive control. In: 2022 IEEE 61st Conference on Decision and Control (CDC) pp. 3959–3964 (2022)

Workbench team C: A marketing dataset (2008). http://www.causality.inf.ethz.ch/data/CINA.html

Wu, X., Wang, H., Lu, J.: Distributed optimization with coupling constraints. IEEE Trans. Autom. Control 68(3), 1847–1854 (2023)

Xu, J., Tian, Y., Sun, Y., Scutari, G.: Distributed algorithms for composite optimization: unified framework and convergence analysis. IEEE Trans. Signal Process. 69, 3555–3570 (2021)

Xu, J., Zhu, S., Soh, Y.C., Xie, L.: Augmented distributed gradient methods for multi-agent optimization under uncoordinated constant stepsizes. In: 2015 54th IEEE Conference on Decision and Control (CDC), pp. 2055–2060 (2015)

Yuan, D., Proutiere, A., Shi, G.: Distributed online linear regressions. IEEE Trans. Inf. Theory 67(1), 616–639 (2021)

Zhou, X., Ma, Z., Zou, S., Zhang, J.: Consensus-based distributed economic dispatch for multi micro energy grid systems under coupled carbon emissions. Appl. Energy 324, 119641 (2022)

Zhu, K., Tang, Y.: Primal-dual \(\varepsilon \)-subgradient method for distributed optimization. J. Syst. Sci. Complex. 36, 577–590 (2020)

Zhu, M., Martinez, S.: On distributed convex optimization under inequality and equality constraints. IEEE Trans. Autom. Control 57(1), 151–164 (2012)

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Jalal M. Fadili.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Liwei Zhang: This author was supported by the Natural Science Foundation of China (Nos. 11971089 and 11731013) and partially supported by Dalian High-level Talent Innovation Project (No. 2020RD09).

Appendices

Proof of Lemma 4.1

For simplicity, denote \(\delta _k=V_{k+1}^{-1}\left( \nabla \textrm{L}(\omega ^{k+1})-\nabla \textrm{L}(\omega ^k)\right) \), (4.5) can be equivalently written as \(s^{k+1}=\mathcal {R}_ks^k+\delta _k\). Furthermore, \(s^{k+1}=\varPhi _\mathcal {R}(k,1)s^1+\sum _{l=1}^{k-1}\varPhi _\mathcal {R}(k,l+1)\delta _l+\delta _k\). Since \(\{\frac{1}{2m}v_k\}\) is an absolute probability sequence for the matrix sequence \(\{\mathcal {R}_k\}\), it follows that

Applying the subadditivity of \(\Vert \cdot \Vert \) yields

For the matrix sequence \(\{\varPhi _\mathcal {R}(k,s)\}\), it holds the similar results in Lemma C.3, i.e.,

where \(\rho \in (0,1)\) and \(C_1\) is some positive constant. Due to the compactness of the subset \(\mathcal {X}\), it is clear that \(\delta _k\) is bounded. Denote the upper bound of \(\Vert \delta _k\Vert \) by \(\widetilde{C}\). Therefore,

Proof of Lemma 4.2

Firstly, we need to verify the following inequality,

Then, combining the conditions on the step-size sequence \(\{\alpha _k\}\) in Assumption 3.1 and the results of Lemma C.4, it is easy to demonstrate the claimed results.

(a): From (4.4), there exist \(e^k\) such that \(\omega ^{k+1}=\mathcal {A}_k\omega ^k-\alpha _kV_ks^k+e^k\). Similar to the inequality (A.1), it holds that

Since \(\omega ^{k+1}\) is the projection of \(\mathcal {A}_k\omega ^k-\alpha _kV_ks^k\) onto \(\varOmega \), for any \(\omega \in \varOmega \), one has

which implies that

From Lemma 4.1, it follows that

In view of the results in Lemmas C.3 and C.4, one can get a modified version of the inequality (B.1) as follows,

Under Assumption 3.1 and Lemma C.4 (a), it’s clear that

(b): Multiplying the inequality (B.2) by \(\alpha _{k+1}\) at the both sides yields

where \(C_2, C_3, C_4, C_5\) are some positive constants. Once again, under Assumption 3.1 and Lemma C.4 (b), it is concluded that

In Lemma 4.2, the claimed results about the sequence \(\{s^k\}\) is parallel with \(\{\omega ^k\}\) and its proof is omitted here.

Supporting Lemmas

Lemma C.1

([29], Lemma 4) Under Assumptions 2.1 and 2.2, it holds that

-

(a)

\(\lim _{k\rightarrow \infty }\varPhi _A(k,s)=1_{2m}\mu _s^\top \), where \(\mu _s\in \mathbb {R}^{2m}\) is a stochastic vector for each s.

-

(b)

For any i, the entries \(\varPhi _\mathcal {A}(k,s)_{ij}, j=1,\dots ,2m\), converge to the same limit \((\mu _s)_i\) as \(k\rightarrow \infty \) with a geometric rate, i.e., for each i and \(s\ge 0\),

$$\begin{aligned} \left| \varPhi _\mathcal {A}(k,s)_{ij}-\left( \mu _s\right) _i\right| \le C\beta ^{k-s},\ \forall \, k\ge s, \end{aligned}$$where \(C=2\frac{1+2\eta ^{-B_0}}{1-2\eta ^{B_0}}\left( 1-\eta \right) ^{1/{B_0}}\), \(\beta =(1-\eta ^{B_0})\in (0,1)\), \(\eta \) is the lower bound of Assumption 2.2, \(B_0=(m-1)B\), ingeter B is defined by Assumption 2.1.

Lemma C.2

([39], Corollary 1) Under the assumptions of Lemma C.1, the sequence \(\{\phi _k\}\) is an absolute probability sequence for the matrix sequence \(\{\mathcal {A}_k\}\), where

Lemma C.3

([34], Lemma 11, Chapter 2.2) Let \(\{b_k\}, \{c_k\}, \{d_k\}\) be non-negative sequences. Suppose that \(\sum _{k=0}^\infty c_k<\infty \) and

then the sequence \(\{b_k\}\) converges and \(\sum _{k=0}^\infty d_k<\infty \).

Lemma C.4

([30], Lemma 7) Let \(\beta \in (0,1)\), and \(\{\gamma _k\}\) be a positive scalar sequence.

-

(a)

If \(\lim _{k\rightarrow \infty }\gamma _k=0\), then \(\lim _{k\rightarrow \infty }\sum _{l=0}^k\beta ^{k-l}\gamma _l=0\).

-

(b)

Furthermore, if \(\sum _{k=0}^\infty \gamma _k<\infty \), then \(\sum _{k=0}^\infty \sum _{l=0}^k\beta ^{k-l}\gamma _l<\infty \).

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Gong, K., Zhang, L. Primal-Dual Algorithm for Distributed Optimization with Coupled Constraints. J Optim Theory Appl 201, 252–279 (2024). https://doi.org/10.1007/s10957-024-02393-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-024-02393-7