Abstract

An invariant-point theorem and its equivalent formulation are established in which distance functions are replaced by minimal time functions. It is worth emphasizing here that the class of minimal time functions can be interpreted as a general type of directional distance functions recently used to develop new applications in optimization theory. The obtained results are applied in two directions. First, we derive sufficient conditions for the existence of solutions to optimization-related problems without convexity. As an easy corollary, we get a directional Ekeland variational principle. Second, we propose a new type of global error bounds for inequalities which allows us to simultaneously study nonconvex and convex functions. Several examples and comparison remarks are included as well to explain advantages of our results with existing ones in the literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

It is well known that the important tool for proving the celebrated theorem of Bishop and Phelps [12] on the density of the set of support points of a bounded, closed and convex set in a Banach space is Lemma 1 in [12], which can be considered as an ordering principle. Since its appearance, this lemma has been developed by many mathematicians with wide applications in diverse fields (see, e.g., [1, 20, 23, 28, 43, 46] and the references therein). One of the crucial contributions is the invariant-point theorem established by Dancs et al. [17] under suitable assumptions on the usual distance function. A number of generalizations of this theorem and its equivalent formulations have been obtained by many authors, see, e.g., [9, 25, 31, 33, 34, 44]. Also, the interested reader is referred to [4, 6, 7] for other necessary and sufficient conditions for the existence of invariant points and their analogous in general spaces.

On the other hand, the so-called minimal time functions have been intensively studied in the literature due to their important applications in variational analysis, optimization, control theory, etc. For details, the reader is referred to [16, 27, 29, 35, 37, 38], among many others. This class of minimal time functions is similar to the class of scalarization functions and enjoys striking and useful properties. Recently, researchers have obtained many interesting results by using minimal time functions as directional distance functions. We mention here several significant developments and applications. In 2013, Nam and Zǎlinescu [38] provided some generalized differentiation properties of this class of functions and applied the obtained results to study location problems. Then, Durea et al. [18] improved and generalized the work of Nam and Zǎlinescu. Particularly, Durea et al. [19] introduced the three directional regularity concepts: directional metric regularity, directional linear openness and directional Aubin continuity. To establish necessary and sufficient conditions for these new regularity concepts, they devised a generalized form of the Ekeland variational principle in which the usual distance function is replaced by a directional minimal time function. Besides, it should be mentioned that there are several significant versions of directional regularity notions, which were introduced and investigated in the excellent papers [3, 5, 24, 41]. However, the approach through the directional minimal time function is more general (see [19, Remark 2.3]).

In this paper, following the aforementioned research direction initiated by Durea et al. [19] and being inspired by [11, 14, 15, 17, 18, 28, 31, 34, 38, 42], we choose the general class of minimal time functions as underlying functions for our consideration. An important advantage of this approach is that the obtained results contain the corresponding directional versions, as will be shown by several examples in the next sections.

The layout of the paper is as follows. Section 2 is devoted to some notations, concepts and preliminary facts for our later use. In Sect. 3, we present two sufficient conditions for the existence important points in nonlinear analysis and prove their equivalence. We also show that one of these sufficient conditions characterizes the completeness of a normed space. Section 4 contains applications of results in the previous section developed in two directions. In the first subsection, we investigate the solution existence of approximated equilibrium and equilibrium problems without any convexity requirement. As a direct consequence, we obtain a directional Ekeland variational principle. For the second subsection, we begin by introducing and studying a new type of global error bounds for an inequality defined by a nonconvex function. Then, we specialize this type of global error bounds to the case of a convex continuous function. The final short section includes conclusions.

2 Notations and Preliminaries

Throughout this paper, we use the convention that \(\inf \emptyset = +\infty \) and \(\sup \emptyset = -\infty \). As usual, \(\mathbb {N}\) and \(\mathbb {R}\) denote the set of the natural numbers and that of the real numbers, respectively. Let X be a normed space, whose topological dual \(X^*\) is endowed with the \(\hbox {weak}^*\) topology and let \(A \subset X\). The interior and closure hull of the set A are denoted by int A and \({\overline{A}}\), respectively. Recall that the polar \(A^\circ \) of A is the set \(A^\circ := \{x^*\in X^*\mid \langle x^*,x\rangle \le 1, \forall x\in A\}.\) Put

The support function \(\sigma _A: X^*\rightarrow \mathbb {R} \cup \{+\infty \}\) of A is defined by

Let \(g: X\rightarrow \mathbb R \cup \{+\infty \}\) be a convex function. The domain of g is the set where it is finite and is denoted by \(\text {dom }g:=\{x\in X\mid g(x)< +\infty \}.\) Given \(\bar{x}\in \text { dom }g\), the subdifferential of g at \({\bar{x}}\) in the sense of convex analysis is

We now introduce a broad class of minimal time functions corresponding to control problems with constant dynamics and target sets in normed vector spaces defined as follows:

Definition 2.1

Let F be a nonempty subset of X and let \(\Omega : X\rightrightarrows X\) be a set-valued map. The minimal time function is given by

We shall work not with general functions \(T_{F,\Omega }\). For \(\emptyset \ne K\subset X\), we set:

-

1)

\(T_F(x,K):=T_{F,\Omega }(x,y)\) when \(\Omega (y)\equiv K\);

-

2)

\(T_F(x,y):=T_{F,\Omega }(x,y)\) when \(\Omega \) is the identity map.

In this paper, we only consider two cases above which are sufficient for our purposes.

Remark 2.1

-

(i)

If one of the sets F and K is compact, while the other one is closed, and \(T_{F}(x,K)<+\infty \), then (see, e.g., [18, Proposition 2.3 (i)]) the infimum in (1) is attained.

-

(ii)

The domain of \(T_{F}(\cdot ,K)\) is the set where it is finite and is denoted by

$$\begin{aligned} \text { dom }T_{F}(\cdot ,K):=\{x\in X\mid T_{F}(x,K)<+\infty \}. \end{aligned}$$Then, we have (see, e.g., [38, Proposition 2.1])

$$\begin{aligned} \text { dom }T_{F}(\cdot ,K)= K-\text { cone }F, \end{aligned}$$where \(\text { cone }F:=\bigcup _{\lambda \ge 0}\lambda F\), the usual conical hull.

-

(iii)

By taking some particular cases of F and \(\Omega \), the minimal time function (1) may be: the indicator function, the distance function, or the Minkowski function. The readers are referred to [16, 27, 29, 35, 37] and the references therein for the study of the minimal time function.

-

(iv)

If F is a spherical sector with the apex at the origin and \(\Omega (y)\equiv K\), then this class of functions can be viewed as a general type of directional distance functions (see [14, 15, 18, 19, 38] and below examples).

The following lemma lists some properties of a special case of the minimal time function (1) which are well suited to our aim in the next sections.

Lemma 2.1

Let \({\bar{x}}\) be an arbitrary element of X, let \(F\subset X\) be a nonempty, bounded, closed, and convex set and let \(\Omega \) be the identity map. Consider the minimal time function \(T_F: X\times X\rightarrow \mathbb {R}\cup \{+\infty \}\). Then,

-

(i)

the domain of \(T({\bar{x}}, \cdot )\) is

$$\begin{aligned} \mathrm{dom }T_{F} ({\bar{x}},\cdot ):=\{y\in X\mid T_{F}({\bar{x}},y)<+\infty \}= {\bar{x}} +\mathrm{cone }F. \end{aligned}$$(2)If \(x\in \overline{\mathrm{dom }T_{F} ({\bar{x}},\cdot )}\), then

$$\begin{aligned} \overline{\mathrm{dom }T_{F} (x,\cdot )}\subset \overline{\mathrm{dom }T_{F} ({\bar{x}},\cdot )}. \end{aligned}$$(3) -

(ii)

\(T_F ({\bar{x}},\cdot )\) is convex and lower semicontinuous.

-

(iii)

\(\partial T_F ({\bar{x}},\cdot )({\bar{x}})=C^*\), where \(C^*:=\{u^*\in X^*\mid \sigma _F(u^*)\le 1\}\).

-

(iv)

\(T_F(x,z)\le T_F(x,y)+T_F(y,z)\) for all \(x,y,z\in X\).

Proof

The proofs are similar to the proofs of results in [16, 18, 19, 35, 37, 38]. However, we prefer to give all the details, for the sake of readability.

-

(i)

If \(y \in \text { dom }T_F ({\bar{x}}, \cdot )\), one has \(T_F ({\bar{x}}, y) <+\infty \). Hence, there exists \(t \ge 0\) and \(u \in F\) such that \(y={\bar{x}} + tu \in {\bar{x}}+\text { cone }F\). Since the reverse inclusion is trivially true, equality (2) holds.

For \(y\in \overline{\text { dom }T_F ( x, \cdot )}\), there exist two sequences \((y_n)\subset \text {dom }T_F (x, \cdot )\) and \((u_n)\subset F\) such that

$$\begin{aligned} y_n=x + T_F(x,y_n)u_n\rightarrow y\text { as } n\rightarrow +\infty . \end{aligned}$$(4)On the other hand, we also have two sequences \((z_n)\subset \text {dom }T_F ({\bar{x}}, \cdot )\) and \((v_n)\subset F\) such that

$$\begin{aligned} z_n={\bar{x}} + T_F({\bar{x}},z_n)v_n\rightarrow x \text { as } n\rightarrow +\infty . \end{aligned}$$(5)$$\begin{aligned} {\bar{x}} + T_F({\bar{x}},z_n)v_n + T_F(x,y_n)u_n \rightarrow y \text { as } k\rightarrow +\infty . \end{aligned}$$While the convexity of cone F tells us that

$$\begin{aligned} T_F({\bar{x}},z_n)v_n + T_F(x,y_n)u_n \in \text { cone }F, \end{aligned}$$and hence, by (2), \({\bar{x}} + T_F({\bar{x}},z_n)v_n + T_F(x,y_n)u_n\in \text { dom }T_F ({\bar{x}}, \cdot ).\) This means that \(y\in \overline{\text { dom }T_F ({\bar{x}}, \cdot )}\), so (3) holds.

-

(ii)

Take any \(y_1,y_2 \in \text { dom }T_F({\bar{x}},\cdot )\) and let \(\alpha \in [0,1]\). Then, there exist \(u_1,u_2\in F\) such that \(y_1={\bar{x}} + T_F({\bar{x}}, y_1)u_1 \text { and }y_2={\bar{x}} + T_F({\bar{x}}, y_2)u_2.\) It follows from the convexity of F that

$$\begin{aligned} \begin{array}{ll} \alpha y_1 + (1 -\alpha )y_2&{}={\bar{x}} +\alpha T_F({\bar{x}},y_1)u_1 + (1 -\alpha )T_F({\bar{x}},y_2)u_2\\ &{}\in {\bar{x}} +(\alpha T_F({\bar{x}},y_1) + (1 -\alpha )T_F({\bar{x}},y_2))F. \end{array} \end{aligned}$$By the definition of the minimal time function, one has

$$\begin{aligned} T_F({\bar{x}},\alpha y_1 + (1 -\alpha )y_2) \le \alpha T_F({\bar{x}},y_1) + (1 -\alpha )T_F({\bar{x}},y_2). \end{aligned}$$This means that \(T_F ({\bar{x}},\cdot )\) is convex.

Fix any \(\lambda \ge 0\). We first show that

$$\begin{aligned} \{y\in X\mid T_F ({\bar{x}},y)\le \lambda \} = {\bar{x}} +[0, \lambda ]F. \end{aligned}$$Indeed, for every \(z\in \{y\in X\mid T_F ({\bar{x}},y)\le \lambda \}\), there exists \(u \in F\) such that \(z={\bar{x}} + T_F ({\bar{x}},z)u \in {\bar{x}}+[0,\lambda ]F\). Hence,

$$\begin{aligned} \{y\in X\mid T_F ({\bar{x}},y)\le \lambda \} \subset {\bar{x}} + [0, \lambda ]F. \end{aligned}$$Conversely, let \(y \in {\bar{x}}+ [0,\lambda ]F\). Then, there are \(t \in [0, \lambda ]\) and \(u \in F\) such that \(y={\bar{x}} + tu\). It follows that \(T_F({\bar{x}},y)\le t\le \lambda \). Thus, one has

$$\begin{aligned} \{y\in X\mid T_F ({\bar{x}},y)\le \lambda \} = {\bar{x}} +[0, \lambda ]F. \end{aligned}$$Since \({\bar{x}} +[0, \lambda ]F\) is closed for any \(\lambda \ge 0\), while if \(\lambda <0\), then

$$\begin{aligned} \{y\in X\mid T_F ({\bar{x}},y)\le \lambda \}=\emptyset , \end{aligned}$$we conclude that all lower level sets of \(T_F({\bar{x}},\cdot )\) are closed. This means that \(T_F({\bar{x}},\cdot )\) is lower semicontinuous.

-

(iii)

Let any \(u^*\in \partial T_F ({\bar{x}},\cdot )({\bar{x}})\). Using the fact that \(T_F({\bar{x}},{\bar{x}})=0\), we get

$$\begin{aligned} \langle u^*, y-{\bar{x}}\rangle \le T_F({\bar{x}},y)\text { for all }y\in X. \end{aligned}$$Fix any \(u\in F\) and set \(y_t:={\bar{x}}+tu\) for some \(t>0\). Then, \(y_t\in \text { dom }T_F({\bar{x}},\cdot )\), and hence, one has

$$\begin{aligned} \langle u^*, y_t-{\bar{x}}\rangle =\langle u^*, tu\rangle \le T_F({\bar{x}},y_t)\le t, \end{aligned}$$or equivalently, \(\langle u^*, u\rangle \le 1.\) This means that \(u^* \in C^*\).

To see the reverse inclusion, fix any \(u^*\in C^*\) and \(y \in \text { dom }T_F({\bar{x}},\cdot )\). Then, there is \(u\in F\) satisfying \(y={\bar{x}}+T_F({\bar{x}},y)u.\) Hence, we get

$$\begin{aligned} \langle u^*, y-{\bar{x}}\rangle =\langle u^*, T_F({\bar{x}},y)u\rangle \le T_F({\bar{x}},y)=T_F({\bar{x}},y)-T_F({\bar{x}},{\bar{x}}), \end{aligned}$$which yields \(u^*\in \partial T_F({\bar{x}},\cdot )({\bar{x}}).\)

-

(iv)

First of all, if \(T_F(x,y)\) or \(T_F(y,z)\) equals \(+\infty \), then there is nothing to prove. Otherwise, i.e., both are finite, one can find \(u_1,u_2 \in F\) such that

$$\begin{aligned} y=x+T_F(x,y) u_1 \text { and }z=y+T_F(y,z) u_2, \end{aligned}$$and hence, \(z= x+T_F(x,y) u_1 +T_F(y,z) u_2\). Since F is convex,

$$\begin{aligned} \begin{array}{ll} z= x+T_F(x,y) u_1 +T_F(y,z) u_2\in x+(T_F(x,y) +T_F(y,z))F. \end{array} \end{aligned}$$Consequently, \(T_F(x,z)\le T_F(x,y)+T_F(y,z).\) \(\square \)

The remaining of this section is devoted to some basic concepts of set theory.

Definition 2.2

Let A be a nonempty set and let \(x,y,z\in A\) be arbitrarily chosen. A binary relation \(\preceq \) on A is said to be

-

(i)

reflexive iff \(x\preceq x\).

-

(ii)

transitive iff \(z\preceq y\) and \(y\preceq x\) imply \(z\preceq x\).

-

(iii)

antisymmetric iff \(x\preceq y\) and \(y\preceq x\) imply \(x=y\).

Definition 2.3

Let A be a nonempty set and \(\preceq \) a binary relation on A. We say that \(\preceq \) is

-

(i)

a preorder iff it is reflexive and transitive.

-

(ii)

a partial order iff it is reflexive, transitive and antisymmetric.

Moreover, if a binary relation \(\preceq \) is a preorder/partial order on A, then \((A,\preceq )\) is called a preordered/partial ordered set.

Definition 2.4

-

(i)

Let \((A,\preceq )\) be a preordered set. An element \({\bar{x}}\) in A is said to be

- (\(\hbox {i}_1\)):

-

minimal iff for every \(x\in A\) such that \(x\preceq {\bar{x}}\) one has \({\bar{x}}\preceq x.\)

- (\(\hbox {i}_2\)):

-

strictly minimal iff for every \(x\in A\) such that \(x\preceq {\bar{x}}\) one has \({\bar{x}}= x.\)

-

(ii)

Let \((A,\preceq )\) be a partially ordered set. An element \({\bar{x}}\) in A is said to be minimal iff for every \(x\in A\) such that \(x\preceq {\bar{x}}\) one has \({\bar{x}}= x.\)

Observe that (ii) implies (\(\hbox {i}_2\)) which in its turn implies (\(\hbox {i}_1\)). The converse is not true in general. We next provide a simple example to clarify the above concepts.

Example 2.1

-

(i)

Let \(A=\{\{a\},\{b\},\{a,b\},\{c,d\}\}\) and \(x,y\in A\) be arbitrarily chosen, and let \(\preceq \) be defined by

$$\begin{aligned} x \preceq y\text { iff } |x|\le |y|, \end{aligned}$$where \(|\cdot |\) denotes cardinality of a set. Then, \((A,\preceq )\) is a preordered set. Clearly, \(\{a,b\}\) and \(\{c,d\}\) are minimal, but not strict.

-

(ii)

Let \(A=\{\{a\},\{b\},\{a,b\}\}\) and let \(\preceq \) be defined as in (i). Then, \(\{a,b\}\) is strictly minimal. Note that \((A,\preceq )\) is not a partially ordered set.

Definition 2.5

Given a preordered set \((A,\preceq )\), we say that \((x_n)\subset A\) is a decreasing sequence iff \(x_{n+1}\preceq x_n\) for all \(n\ge 1\). If in addition A is a topological space, the preorder \(\preceq \) is said to be lower closed iff \(L(x):=\{y\in A\mid y\preceq x\}\) is a closed subset of A, for every \(x\in A\).

3 Necessary and Sufficient Conditions for Invariant Points

Let X be a normed space and \(A\subset X\). It is known that (A, d), where \(d(x,y):=\Vert x-y\Vert \) for all \(x,y\in A\), is a metric space. Let \(G: A \rightrightarrows A\) be a set-valued map. A point \({\bar{x}}\) in A is called an invariant point of G iff \(G(\bar{x})=\{{\bar{x}}\}\). We first present a sufficient condition for the existence of an invariant point, which makes use of the minimal time function introduced in the previous sections.

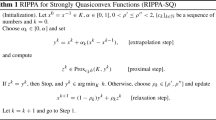

Theorem 3.1

Let X be a Banach space, \(A\subset X\) a closed set and \(F\subset X\) a nonempty, bounded, closed and convex set and let \(G: A \rightrightarrows A\) be a set-valued map. Assume that the following conditions are satisfied

-

(i)

for all \(x\in A\), \(x \in G(x)\) and G(x) is closed;

-

(ii)

for all \(x\in A\), if \(y \in G(x)\) then \(G(y) \subset G(x)\);

-

(iii)

\(\lim _{n\rightarrow \infty } T_{F}(x_{n},x_{n+1}) = 0\), if \(x_{n+1} \in G(x_n)\cap \overline{\text { dom }T_F (x_n, \cdot )}\) for all \(n\ge 1\).

Then, for every \(x_0\in A\), there exists \({\bar{x}} \in G(x_0)\) such that

Consequently, if \(G({\bar{x}})\subset \overline{\text { dom }T_F ({\bar{x}}, \cdot )}\), then \({\bar{x}}\) is an invariant point of G.

The next result is an equivalent reformulation of Theorem 3.1.

Theorem 3.2

Let X, A and F be the same as in Theorem 3.1. Assume that the following conditions hold

-

(i)

the metric space (A, d) is endowed with a preorder \(\preceq \) satisfying the lower closedness on (A, d);

-

(ii)

\(\lim _{n\rightarrow \infty } T_{F}(x_{n},x_{n+1})= 0\), if \((x_n)\subset (A,\preceq )\) is a decreasing sequence and satisfies \(x_{n+1}\in \overline{\text { dom }T_F (x_n, \cdot )}\) for all \(n\ge 1\).

Then, for every \(x_0\in A\), there exists \({\bar{x}}\in \{y\in A\mid y\preceq x_0\}\) such that \({\bar{x}}\) is a strictly minimal element in the set \(A\cap \overline{\text { dom }T_F ({\bar{x}}, \cdot )}\). If, furthermore,

then \({\bar{x}}\) is a strictly minimal element in the set A.

Proof [Direct proof of Theorem 3.1]

First, we observe that, by (i) and the definition of the minimal time function, \(G(x)\cap \overline{\mathrm{dom }T_F (x, \cdot )}\) contains x and is closed for all \(x\in A\). On the other hand, it is known that (A, d) is a complete metric space. If \(\Delta \Big (G(x)\cap \overline{\text {dom }T_F (x, \cdot )}\Big )\), the diameter of \(G(x)\cap \overline{\text { dom }T_F (x, \cdot )}\), is unbounded above for some \(x\in A\), then we replace d by \(d'\) defined as

It is easy to see that this replacement does not affect assumptions (i)–(iii). Hence, we can assume that \(\Delta \Big (G(x)\cap \overline{\text { dom }T_F (x, \cdot )}\Big )\) is bounded for each \(x\in A\). Setting \(x_1:=x_0\). By the definition of \(\Delta \Big (G(x_n)\cap \overline{\text { dom }T_F(x_n,\cdot )}\Big )\), we can take \(x_{n+1}\in G(x_n)\cap \overline{\text { dom }T_F (x_n, \cdot )}\) such that (using \(d'\) if necessary)

We get further from assumption (ii) and Lemma 2.1 (i) that

On the other hand, it follows from (iii) that there exists \(n_0\in \mathbb {N}\) such that \(T_F(x_n,x_{n+1})<+\infty \) for all \(n\ge n_0\). Then, by Lemma 2.1 (i), there is \(u_n\in F\) satisfying \(x_{n+1}=x_{n}+T_{F}(x_{n},x_{n+1}) u_n,\) which immediately yields

This together with (6) implies that

By the boundedness of F and (iii), we obtain

Therefore, Cantor’s intersection theorem tells us that

Since \({\bar{x}}\in G(x_n) \cap \overline{\text { dom }T_F (x_{n}, \cdot )}\) for all \(n\ge 1\), we have \({\bar{x}}\in G(x_0)\) and further that

by assumption (ii) and Lemma 2.1 (i), respectively. It follows from (7) that

Therefore, we must have \(G({\bar{x}})\cap \overline{\text { dom }T_F ( {\bar{x}}, \cdot )}=\{{\bar{x}}\}.\) The equality \(G({\bar{x}})=\{{\bar{x}}\}\) holds trivially when \(G({\bar{x}})\subset \overline{\text { dom }T_F ( {\bar{x}}, \cdot )}\).

[Theorem 3.1\(\Rightarrow \) Theorem 3.2]. We define \(G: A\rightrightarrows A\) by

It follows from the lower closedness of the preorder that G(x) is closed. Hence, the reflexivity and the transitivity of the preorder imply the corresponding assumptions (i) and (ii) of Theorem 3.1 for G. If (\(x_n\)) is a decreasing sequence and satisfies \(x_{n+1}\in \overline{\text { dom }T_F (x_n, \cdot )}\), then one has, by the definition of G and (ii) of Theorem 3.2, that \(x_{n+1}\in G(x_n)\cap \overline{\text { dom }T_F (x_n, \cdot )}\) for all \(n\ge 1\) and

i.e., (iii) of Theorem 3.1 is fulfilled. According to Theorem 3.1, there exists \({\bar{x}}\in G(x_0)\) such that \(G({\bar{x}})\cap \overline{\text { dom }T_F ({\bar{x}}, \cdot )}=\{{\bar{x}}\}.\) Suppose that there exists \(x\in A\cap \overline{\text { dom }T_F ({\bar{x}}, \cdot )}\) satisfying \(x\ne {\bar{x}}\) and such that \(x \preceq \bar{x}.\) Then, the definition of G ensures that \(x\in G({\bar{x}})\cap \overline{\text { dom }T_F ({\bar{x}}, \cdot )}=\{\bar{x}\}\), which is a contradiction. Hence, \({\bar{x}}\in \{x\in A\mid x\preceq x_0\}\) is a strictly minimal element in the set \(A\cap \overline{\mathrm{dom }T_F ({\bar{x}}, \cdot )}\). Clearly, if \(\{y\in A\mid y\preceq {\bar{x}}\}\subset \overline{\mathrm{dom }T_F ({\bar{x}}, \cdot )}\), then \({\bar{x}}\) is a strictly minimal element in the set A.

[Theorem 3.2\(\Rightarrow \) Theorem 3.1]. Define a binary relation \(\preceq \) on A by

By (i) and (ii) of Theorem 3.1, the binary relation \(\preceq \) is a preorder satisfying the lower closedness on (A, d), i.e., (i) of Theorem 3.2 is satisfied. Clearly, for each sequence \((x_n)\) in assumption (iii) of Theorem 3.1 one has that \((x_n)\) is a decreasing sequence and satisfies \(x_{n+1}\in \overline{\text {dom }T_F(x_n,\cdot )}\) and \(\lim _{n\rightarrow \infty } T_{F}(x_{n},x_{n+1}) = 0.\) Hence, by Theorem 3.2, there exists \({\bar{x}}\in \{x\in A\mid x\preceq x_0\}\) such that \({\bar{x}}\) is a strictly minimal element in the set \(A\cap \overline{\mathrm{dom }T_F ({\bar{x}}, \cdot )}\). Then, \({\bar{x}}\) satisfies the conclusions of Theorem 3.1. \(\square \)

The following corollary is the invariant point theorem in [17] (for the case of Banach spaces). It is worth noting that the authors of [17] have used this result to give a simple proof for Ekeland’s variational principle.

Corollary 3.1

Let X, A and G be the same as in Theorem 3.1. Assume that conditions (i) and (ii) of Theorem 3.1 hold. Assume further that

(iii’) \(\lim _{n\rightarrow \infty }\Vert x_{n+1}-x_n\Vert = 0,\) if \(x_{n+1}\in G(x_n)\) for all \(n\ge 1\).

Then G has an invariant point.

Proof

Let F be the closed unit ball. Then, due to the imposed assumptions, all assumptions of Theorem 3.1 are satisfied. We arrive at the conclusion. \(\square \)

In the following simple example, Theorem 3.1 is applicable, while Corollary 3.1 is not.

Example 3.1

Let \(X=\mathbb {R}^m\) (with the Euclidean norm), \( A=\mathbb {R}\times \mathbb {R}^{m-1}_{+}\), where \(\mathbb {R}^{m-1}_{+}\) is the nonnegative orthant of \(\mathbb {R}^{m-1}\), and let \(G: A \rightrightarrows A\) be defined by

Now let \(F=\Pi _{i=1}^{m}[0,1]\). It is easy to see that (i) and (ii) of Theorem 3.1 are fulfilled. If \((x_n)\) is a sequence satisfying \(x_{n+1}\in G(x_n)\cap \overline{\text { dom }T_F(x_n,\cdot )}\), two cases are possible:

-

1)

\(x_{n+1}=x_n\) for all \(n\ge 1.\)

-

2)

\(x_{n+1}\in [x^{1}_{n},0]\times \{x^{2}_{n}\} \times ....\times \{x^{m}_{n}\}\text { for all }n\ge 1.\)

In both cases (1) and (2), we have \(\lim _{n\rightarrow \infty }T_F(x_n,x_{n+1})=0,\) i.e., (iii) of Theorem 3.1 holds. Thus, for every \(x_0\in A\), there exists \({\bar{x}}\in G(x_0)\) such that

$$\begin{aligned} G({\bar{x}})\cap \overline{\text { dom }T_F({\bar{x}},\cdot )}=\{{\bar{x}}\}. \end{aligned}$$By direct checking, one sees that \({\bar{x}}=(0,...,0)\). Moreover, since

$$\begin{aligned} G({\bar{x}})=\{0,...,0\}\subset \mathbb {R}_{+}^{m}=\overline{\text { dom }T_F({\bar{x}},\cdot )}, \end{aligned}$$one has \({\bar{x}}\) is an invariant point of G. However, Corollary 3.1 is not applicable, since, if we take \(x_1:=(-1,1,...,1)\) and

$$\begin{aligned} x_{n+1}:=(-n-1,1,...,1)\in (-\infty ,0]\times \{1\},...,\{1\}=G(x_n) \end{aligned}$$then \(\Vert x_{n+1}-x_{n}\Vert \nrightarrow 0 \text { as } n\rightarrow +\infty .\)

Next, let us give another example in which G has no invariant point but exists \({\bar{x}} \in A\) such that \(G({\bar{x}})\cap \overline{\text { dom }T_F({\bar{x}},\cdot )}=\{{\bar{x}}\}\).

Example 3.2

Let \(X=A=C_{[0,1]}\) (the space of the real continuous functions on [0, 1] with the maximum norm). Let \(G: X\rightrightarrows X\) be defined by

Clearly, G has no invariant point. To apply Theorem 3.1, we take \(F=\{y\in C_{[0,1]}\mid 0\le y(t)\le 1,\forall t\in [0,1] \}\). Assumptions (i) and (ii) hold trivially. It is not hard to see that for all \(x\in X\),

Thus, if \((x_n)\) is a sequence satisfying \(x_{n+1} \in G(x_n) \cap \overline{\text {dom }T_F(x_n,\cdot )}\), then there exists \(y_n\in \{y\in C_{[0,1]}\mid 0\le y(t), \quad \forall t\in [0,1]\}\) such that

where the inequality holds by the definition of G. This clearly implies the equality \(x_{n+1}=x_n\) for all n, and hence \(\lim _{n\rightarrow \infty } T_{F}(x_{n},x_{n+1}) = 0,\) i.e., the assumption (iii) is fulfilled. By theorem 3.1, for every \(x_0\in X\) there exists \({\bar{x}}\in G(x_0)\) such that \(G({\bar{x}})\cap \overline{\text { dom }T_F({\bar{x}},\cdot )}=\{{\bar{x}}\}.\)

We end this section with a result, which together with Theorem 3.1 characterizes completeness.

Theorem 3.3

Let X be a normed vector space. If X is not a Banach space then there exist a closed set \(A\subset X\), a nonempty, bounded, closed and convex set \(F\subset X\) and a set-valued map G satisfying (i)–(iii) of Theorem 3.1 such that G has no invariant point.

Proof

Let \(A=X\) and \(F\subset X\) be a nonempty, bounded, closed and convex set containing the origin as an interior point. Observe first that since \(0\in \mathrm{int}\) F, \(\Vert F^\circ \Vert \) is bounded and \(\overline{\text { dom }T_F(x,\cdot )}=X\) for all \(x\in X\). We next show that

Indeed, for all \(x,y\in X\), one has

where the third equality follows from the bipolar theorem.

On the other hand, since X is not a Banach space, there exists a sequence of nonempty closed sets such that \(X = \Gamma _1\supset ...\supset \Gamma _i\supset ...\) and \(\Delta (\Gamma _i)\rightarrow 0\) as \(i \rightarrow \infty \), but \(\bigcap _{i=1}^{\infty }\Gamma _i=\emptyset \). Now, we can define \(G: X\rightrightarrows X\) as follows:

Clearly, \(x\in G(x)\) and G(x) is closed for all \(x\in X\), i.e., (i) is satisfied. For every \(x\in X\), if \(y\in G(x)\), two cases are possible: either \(y=x\) or \(y\in \Gamma _{i+1}\). If \(y=x\), we obviously have \(G(y)= G(x)\). For the second case, since \(\Gamma _{i+1}\subset ...\subset \Gamma _1\) and \(\bigcap _{i=1}^{\infty }\Gamma _i=\emptyset \), there exists \({\bar{i}}\ge i+1\) such that \(y\in \Gamma _{{\bar{i}}}\text { and }y\notin \Gamma _{{\bar{i}}+1}.\) Then \(G(y)=\Gamma _{{\bar{i}}+1}\cup \{y\}\). Since \(\Gamma _{{\bar{i}}+1}\subset \Gamma _{i+1}\), \(G(y)\subset G(x)\). Thus, (ii) is checked. To verify (iii), let \((x_n)\subset X\) be a sequence satisfying \(x_{n+1}\in G(x_n)\). By the properties of the sequence \((\Gamma _i)\), for every \(x_n\) there exists \(i_n\in \mathbb {N}\) such that

and then \(G(x_n)=\Gamma _{i_n+1}\cup \{x_n\}.\) Since \(x_{n+1}\in G(x_n)\), an argument similar to the verify of (ii) yields \(i_{n+1}\ge i_n\). Thus, if there exists \(n_0\in \mathbb {N}\) such that \(i_{n+1}=i_n\) (i.e., \(x_{n+1}=x_n\)) for all \(n\ge n_0\), then \(\lim _{n\rightarrow \infty }T_F(x_n,x_{n+1})=0.\) Otherwise, we have \(i_n\rightarrow \infty \) whenever \(n\rightarrow \infty \). On the other hand, one gets by (8) that

where the last inequality is valid because \(G(x_{n})\subset \Gamma _{i_n}\). Letting \(n\rightarrow \infty \) and invoking the boundedness of \(\Vert F^\circ \Vert \), we also have \(\lim _{n\rightarrow \infty } T_F(x_{n},x_{n+1})=0.\) Hence, G satisfies all assumptions of Theorem 3.1. However, G has no invariant point. Since if \({\bar{x}}\) exists such that \(G({\bar{x}})=\{{\bar{x}}\}\), we would have

Consequently, \(\Gamma _{i^*+1}=\emptyset \), a contradiction. \(\square \)

4 Applications

Throughout this section, we shall assume that X is a Banach space. By using the results of the previous section, we present several applications in optimization. We also provide some examples for comparisons with existing results in the literature to make sure of the role of our study.

4.1 Existence of Solutions to Optimization-Related Problems

Let A be a subset of X and \(g:A\times A\rightarrow \mathbb {R}\cup \{+\infty \}\). Recall that the equilibrium problem is to find \({\bar{x}}\in A\) such that \(g({\bar{x}}, y)\ge 0\) for all \(y\in A\). This problem is known also as the Ky Fan’s inequality (see [22]). The term “equilibrium problem” first appeared in [36]. It has been developed and applied in many research areas. However, most developments and applications focused on the case where the data had certain convexity assumptions. In this subsection, we derive from Theorem 3.1 sufficient conditions for the existence of solutions to approximate equilibrium and equilibrium problems, without any convexity requirement.

Theorem 4.1

Let X, F and A be the same as in Theorem 3.1 and let \(g:A\times A\rightarrow \mathbb {R}\cup \{+\infty \}\). Assume that the following conditions are satisfied:

-

(i)

for every \(x\in A\), \(g(x,x)= 0\) and \(g(x,\cdot )\) is lower semicontinuous;

-

(ii)

for every \(x,y,z\in A,\) \(g(x,z)\le g(x,y)+g(y,z);\)

-

(iii)

for every \(x\in A\), \(g(x,\cdot )\) is bounded from below on \(A\cap \overline{\text { dom } T_F(x,\cdot )}\).

Then, for any \(x_0\in A\) and any \(\epsilon > 0\), there exists \({\bar{x}}\in S\) such that

where \(S:=\{y\in A\mid g(x_0,y)+\epsilon T_F(x_0,y)\le 0\}.\)

Proof

Fix any \(\epsilon > 0\). We apply Theorem 3.1 with \(G: A\rightrightarrows A\) is defined by

For every \(x\in A\), we have \(x\in G(x)\) by (i) and the definition of the minimal time function. Moreover, G(x) is closed by (i) and Lemma 2.1 (ii). Hence, assumption (i) of Theorem 3.1 is satisfied. For every \(x\in A\), if \(y\in G(x)\) and \(z\in G(y)\), i.e.,

then by summing these inequalities side by side we obtain that

where the last inequality holds by (ii) and Lemma 2.1 (iv). This yields \(z\in G(x)\), i.e., (ii) of Theorem 3.1 is fulfilled. Finally, let \((x_n)\) be a sequence satisfying \(x_{n+1} \in G(x_n)\cap \overline{\text { dom }T_F(x_n, \cdot )}\). By the definition of G, we have

On the other hand, by setting \(u(x_{n}):=\inf _{y\in (G(x_{n})\cap \overline{\text { dom }T_F(x_n, \cdot )})}g(x_n,y)\) we get

where the first inequality holds by Lemma 2.1 (i) and the definition of G. It follows that \(\epsilon T_F(x_n,x_{n+1})\le u(x_{n+1})-u(x_n).\) Observe that for all \(n\ge 1\), we have \(-\infty <u(x_n)\le 0\) by (i) and (iii), and hence \(\sum _{n=1}^{\infty }\epsilon T_F(x_n,x_{n+1})<+\infty .\) This ensures that \(\lim _{n\rightarrow \infty }T_F(x_n,x_{n+1})=0\), i.e., (iii) of Theorem 3.1 holds. According to Theorem 3.1, there exists \({\bar{x}}\in G(x_0)=S\) such that

Consequently,

we are done. \(\square \)

Remark 4.1

In Theorem 4.1, if F is the closed unit ball, then we can obtain Ekeland’s variational principle for an equilibrium problem in [11]. In addition, if \(\epsilon =1\), then Theorem 4.1 becomes Theorem 1 in [42] (for the case of Banach spaces).

As a direct consequence of Theorem 4.1, we derive an Ekeland variational principle in terms of the minimal time functions.

Corollary 4.1

Let X, A and F be the same as in Theorem 4.1. Let \(f: A\rightarrow \mathbb {R}\cup \{+\infty \}\) be a proper lower semicontinuous function. Assume that for each \(x\in A\), f is bounded from below on \(A\cap \overline{\text { dom }T_F(x,\cdot )}\). Then, for any \(x_0 \in A\) and any \(\epsilon > 0\), one can find \({\bar{x}} \in A\) such that

and

Consequently, if f is bounded from below on A, then relation (10) becomes

Proof

Define

It is easy to see that assumptions (i)–(iv) of Theorem 4.1 hold. According to this theorem, there exists \({\bar{x}}\in \{y\in A\mid g(x_0,y)+\epsilon T_F(x_0,y)\le 0\}\) such that

Consequently, (9) and (10) hold.

Now, suppose f is bounded from below on A. Let \(z\in A\setminus \{{\bar{x}}\}\) be such that \(z\notin \overline{\text { dom }T_F({\bar{x}}, \cdot )}\). This means that \(T_F({\bar{x}}, z)=+\infty \), and hence, relation (10) becomes (11). \(\square \)

Remark 4.2

If F is a spherical sector with the apex at the origin, then Corollary 4.1 can be viewed as a directional Ekeland variational principle, which has been recently studied in [19]. Moreover, if F is the closed unit ball, then Corollary 4.1 becomes the famous Ekeland variational principle in [20] (for the Banach space case).

Example 4.1

Let \(X=A=\mathbb {R}\) and \(f:X\rightarrow \mathbb {R}\cup \{+\infty \}\) be defined by

Now, let \(F=[0,1]\). Then, for each \(x\in X\) we have

It is not hard to see that the assumptions of Corollary 4.1 are fulfilled. Therefore, for every \(x_0\in X\) and \(\epsilon >0\), there exists \({\bar{x}}\in X\) such that inequalities (9) and (10) hold.

Remark 4.3

In the above example, we see that since f is unbounded from below on A, Corollary 3.2 in [19] does not work. Thus, Corollary 4.1 is different from Corollary 3.2 in [19].

We now pass to an equilibrium problem.

Theorem 4.2

Let X, F, A and g be as in Theorem 4.1. Assume that

- (i):

-

for each \(x\in A\), \(g(x,x)=0\) and \(g(x,\cdot )\) is lower semicontinuous;

- (ii):

-

for \(x,y,z\in A\) satisfying \(g(x,y)\le 0\) and \(g(y,z)\le 0\), one has \(g(x,z)\le 0\);

- (iii):

-

lim\(_{n\rightarrow +\infty }T_F(x_n,x_{n+1})=0\), if \((x_n)\) is a sequence satisfying

$$\begin{aligned} x_{n+1}\in \overline{\text { dom }T_F(x_n,\cdot )}\text { and }g(x_n,x_{n+1})\le 0,\text { } \forall n\ge 1. \end{aligned}$$

Then, for each \(x_0\in A\), there exists \(\bar{x}\in A\) such that \(g(x_0,{\bar{x}})\le 0\) and

Proof

Employ Theorem 3.1 with \(G(x)=\{y\in A\mid g(x,y)\le 0\}\) for every \(x\in A\). It is easy to see that all assumptions of Theorem 3.1 are satisfied. Clearly, the conclusions of Theorem 3.1 and this theorem are the same. \(\square \)

As we have seen, the proof technique of the above sufficient condition is simple, but seems to also be applicable for many optimization-related problems such as inclusion problems, traffic networks problems and non-cooperative games problems (see, e.g., [31]). The following simple example illustrates Theorem 4.2 which can be interpreted as an instance of an equilibrium in the direction.

Example 4.2

Let \(X=A=\mathbb {R}\), and let \(g: X\times X\rightarrow \mathbb {R}\) be defined by

where \(m\in \mathbb {N}\). The equilibrium problem is

Clearly, this problem does not have any solution. Furthermore, to try with Ekeland’s variational principle for equilibrium problems in [11, 42] we see that Theorem 1 in [42] and Theorem 2.1 in [11] cannot be applied for this case, since for every \(x\in X\), \(g(x,\cdot )\) is not bounded from below on X.

Now, let \(F=[-1,0]\). Then, for each \(x\in X\) we have

Fix any \(x_0\in X\). It is not difficult to verify that the assumptions of Theorem 4.2 are satisfied for g. This meets a direct checking with the result that there exists \({\bar{x}} =x_0\) such that

Let us notice here the importance of the fact that \({\bar{x}} \) is an equilibrium point with respect to its left direction.

4.2 Generalized Global Error Bounds

Let \(f: X \rightarrow \mathbb {R}\). For the inequality

let \(S:=\{x\in X\mid f(x)\le 0\}\) denote the solution set. To avoid trivially, we shall assume that \(\emptyset \ne S\ne X\).

We now introduce a new notion of global error bounds for inequality (12) as follows:

Definition 4.1

Let F be a nonempty subset of X. Inequality (12) is said to have a generalized global error bound with respect to F if there exists \(\tau >0\) such that

where \([f(x)]_+:=\mathrm{max}\{f(x),0\}\) and \(C_F:=\text { dom }T_F(\cdot ,S)=S-\text { cone }F\) (see Remark 2.1 (ii)).

Remark 4.4

-

(i)

We first observe that if F is the closed unit ball, then the concept of the generalized global error bound (13) becomes the concept of the usual global error bounds (see, e.g., [8, 13, 26, 28, 30, 39] and references therein). Moreover, it should be mentioned that the concept of generalized local error bounds with respect to F has been proposed and studied in our paper [34].

-

(ii)

If F is a spherical sector with the apex at the origin, then Definition 4.1 is closely related to the concept of directional metric subregularity in [15]. Indeed, let \(H : X \rightrightarrows \mathbb {R}\) be defined by \(H(x):= [f(x), +\infty )\). Then, inequality (13) can be rewritten as

$$\begin{aligned} T_F(x,H^{-1}(0))\le \tau d(0,H(x))\text { for all } x\in C_F, \end{aligned}$$where \(H^{-1}(0):=\{x\in X\mid 0\in H(x)\}.\) Then, one says that H is globally metrically subregular at 0 with respect to F with modulus \(\tau \).

To establish necessary and sufficient conditions for the existence of generalized global error bounds for a lower semicontinuous function without convexity, we first need the following lemma, which is a type of Lemma 2.42 in [28] and Theorem 3 in [2] in the minimal time function setting.

Lemma 4.1

Let X, F and A be the same as in Theorem 3.2, and let f be a lower semicontinuous function. Suppose that there exists a number \(\beta > 0\) such that for any \(x\in A\) with \(f(x)>0\), there is a \({\bar{y}}\in A\setminus \{x\}\), which satisfies the inequality

Then, for any \(x_0\in A\) satisfying \(f(x_0)>0\), there is a point \({\bar{x}}\in A\) such that \(T_F(x_0,{\bar{x}}) \le \frac{f(x_0)}{\beta }\) and \(f({\bar{x}}) \le 0\).

Proof

Take \(\beta \) and \(x_0\) satisfying the conditions. Set \(\epsilon :=f(x_0)\) and \(\lambda :=\frac{\epsilon }{\beta }\). We apply Theorem 3.2 with the binary relation \(\prec \) defined by

By Lemma 2.1 (ii), the function \(y\mapsto [f(y)]_++T_F(x,y)\) is lower semicontinuous for \(x \in A\). Hence,

is closed in A, for all \(x\in A\). For every \(x\in A\), we have \(x\preceq x\). This means that \(\preceq \) is reflexive. To see the transitivity of \(\preceq \), let \(x,y,z\in A\) be such that \(z\preceq y\) and \(y\preceq x\). Then, we have

Hence,

where the first inequality follows from Lemma 2.1 (iv). This shows that \(z\preceq x\). Therefore, the binary relation \(\preceq \) is a preorder satisfying the lower closedness on A, i.e., assumption (i) of Theorem 3.2 is satisfied. Furthermore, let \((x_n)\) be a decreasing sequence and satisfy \(x_{n+1}\in \overline{\text { dom }T_F(x_n,\cdot )}\). By the definition of the binary relation \(\preceq \), one has

Since \(0\le [f(x_n)]_+<+\infty \) for all \(n\ge 1\),

This immediately implies that \(\lim _{n\rightarrow \infty }T_F(x_n,x_{n+1})=0\), i.e., assumption (ii) of Theorem 3.2 is also fulfilled. By Theorem 3.2, there exists a

such that \({\bar{x}}\) is a strictly minimal element in the set \(\{y\in A\mid y\preceq x_n\}\subset \overline{\text { dom }T(x_n,\cdot )}\). Consequently,

We note that \(\epsilon =f(x_0)\), so \(f(x_0)\le \inf _{x\in A}[f(x)]_++\epsilon \le [f({\bar{x}})]_++\epsilon .\) This together with (15) implies that

and hence, one must have \(T_F(x_0,{\bar{x}})\le \lambda =\frac{f(x_0)}{\beta }.\) Furthermore, we will show that

Indeed, if there exists \(x\in \{y\in A\mid y\preceq {\bar{x}}\}\) such that \(x\ne {\bar{x}}\), then the definition of the binary \(\preceq \) tells us that \( \frac{\epsilon }{\lambda }T_F({\bar{x}}, x)\le [f({\bar{x}})]_+-[f(x)]_+.\) Using the fact that \(0\le [f({\bar{x}})]_+\) and \([f(x)]_+<+\infty \), we get \(x\in \text { dom }T_F({\bar{x}}, \cdot )\), which proves (16). Hence, \({\bar{x}}\) is a strictly minimal element in the set A, or equivalently,

If \(f({\bar{x}})>0\), by (14), there is a \({\bar{y}}\in A\backslash \{{\bar{x}}\}\) satisfying

i.e.,

This clearly contradicts (17). The proof has been completed. \(\square \)

Remark 4.5

When F is the closed unit ball, inequality (14) is called “the Caristi-like condition” by Arutyunov in [2].

Theorem 4.3

Let \(F\subset X\) be a nonempty, compact and convex set, and let f be a lower semicontinuous.

-

(i)

If inequality (12) has a generalized global error bound with respect to F, then there exists a number \(\beta >0 \) such that for any \(x\in C_F\) satisfying \(f(x) > 0\), one has

$$\begin{aligned}{}[f({\bar{y}})]_+ \le f (x) - \beta T_F(x,{\bar{y}})\text { for some } {\bar{y}}\in C_F\setminus \{x\}. \end{aligned}$$(18) -

(ii)

In addition, if we suppose that \(C_F\) is closed, then the converse of (i) is true.

Proof

-

(i)

Suppose there is \(\tau > 0\) such that

$$\begin{aligned} T_F (x, S) \le \tau [f(x)]_+,\quad \forall x \in C_F. \end{aligned}$$Fix arbitrary \(x \in C_F\) with \(f(x) > 0\). Since S is closed, F is compact and \(T_F (x, S)<+\infty \), see Remark 2.1 (i), we can find an \({\bar{y}} \in S\subset C_F\setminus \{x\}\) such that

$$\begin{aligned} T_F(x,{\bar{y}})=T_F (x, S) \le \tau f (x), \end{aligned}$$which means that (18) holds for \(\beta \le \frac{1}{\tau }\).

-

(ii)

Suppose to the contrary that for each \(\tau '\in (0,+\infty )\), there exists \(x_0\in C_F\) such that

$$\begin{aligned} T_F(x_0,S)>\tau ' [f(x_0)]_+. \end{aligned}$$(19)In particular, we can take \(\tau ':=\frac{1}{\beta }\). Clearly, \(x_0\notin S\), i.e., \(f(x_0) >0\). Applying Lemma 4.1 with \(A:=C_F\), there exists \({\bar{x}}\in C_F\) such that \(f({\bar{x}})\le 0\) (i.e., \({\bar{x}}\in S\subset C_F\)) and \(T_F(x_0,{\bar{x}})\le \frac{f( x_0)}{\beta }=\tau ' f(x_0)\). This together with (19) implies that

$$\begin{aligned} \tau ' f(x_0)\ge T_F(x_0,{\bar{x}})\ge T_F(x_0,S)>\tau ' [f(x_0)]_+, \end{aligned}$$a contradiction. The proof is complete. \(\square \)

Remark 4.6

For the special case, where F is the closed unit ball, condition (18) implies the sufficient condition for the existence of usual global/local error bounds studied in [28] and further developed, e.g., in [21, 41].

Next, we give an example to explain the advantage of the second part of Theorem 4.3. Note that this example was first used in [45] (see also [10]) to illustrate the discontinuity of a set-valued map in Hausdorff sense.

Example 4.3

Let \(f_1, f_2 : \mathbb {R}^4 \rightarrow \mathbb {R}\) be defined by \(f_1(x) = x_1\) and

for all \(x = (x_1, x_2, x_3, x_4) \in \mathbb {R}^4\). Define \(f(x) = \max \{f_1(x), f_2(x)\}\), \( x\in \mathbb {R}^4\). Consider the solution set \(S:=\{x\in \mathbb {R}^4\mid f(x)\le 0\}.\) Next, we take \(F:=[0,1]\times [-1,1]\times [-1,1]\times [-1,1]\). Observe first that for every \(x=(x_1,x_2,x_3,x_4)\in \mathbb {R}^4\), if \(x_1>0\) then \(x\not \in S\). This implies that \(S\subset -\text { cone }F\), and hence, we have that \(C_F:=S- \text {cone }F=-\text {cone }F\), and it is closed. Let \(x=(x_1,x_2,x_3,x_4)\in C_F\) be such that \(f(x)>0\). Setting

we get \({\bar{y}}\in S\) (due to \(f_1({\bar{y}})=x_1\le 0\) and \(f_2({\bar{y}})=0\)). This yields \({\bar{y}}\in C_F\setminus \{x\}\). Furthermore, one has

and hence, inequality (18) holds for \(\beta = 1\). According to Theorem 4.3, \(f(x)\le 0\) admits a generalized global error bound with respect to F but does not have an usual global error bound, as shown in [32] and also in [39].

Finally, we specialize the previous result to the case of convex continuous functions. Given a compact and convex \(F\subset X\) and \(x\in X\) with \(T_F( x,S)<+\infty \). The generalized projection of x to S is defined by

where \(\rho _F\) is the Minkowski gauge given by

It is not hard to check that, under our assumptions on F and S, the generalized projection \(\Pi _{F}({\bar{x}},S)\) is always nonempty and \(T_F({\bar{x}},S)=0\) if and only if \( {\bar{x}} \in S\) (see, e.g., [29, Proposition 2.2]).

Theorem 4.4

Let \(F\subset X\) be a nonempty, compact and convex set and let f be a convex continuous function.

-

(i)

If inequality (12) has a generalized global error bound with respect to F, then

$$\begin{aligned} \gamma :=\inf \Big \{\sigma _{F}(-x^*)\mid \text { }x^*\in \partial [f]_+(x),\text { }x\in C_F\setminus S\Big \}>0, \end{aligned}$$(20)(recall that \(C_F:=\mathrm{dom}T_F (\cdot ,S)\)).

-

(ii)

In addition, if we suppose that \(0\in \text { int }F\), then the converse of (i) is true.

Proof

For the first part, let us suppose that there is \(\tau >0\) such that

Hence (cf. [29, Proposition 2.2 (i)])

Fix any \(x\in C_F\setminus S\) and \(x^*\in \partial [f]_+(x))\). Then, one has

On the other hand, since F is compact, S is closed and \(T_F(x,S)<+\infty \), one has \(\Pi _{F}(x,S)\ne \emptyset \). This means that there exists \(\hat{x}\in S\) such that \(\rho _F(\hat{x}-x)=T_{F}(x,S)\). In formula (22), we replace y by \(\hat{x}\) to have

and hence,

Observe that \(\frac{\hat{x}-x}{T_{F}(x,S)}\in F\). Thus, inequality (21) and the definition of the support function imply that

Since \(x^*\) and x are arbitrarily chosen, we conclude that inequality (20) holds.

For the second part, if \( 0\in \) int F then \(C_F=X=\text { dom }T_F(x,\cdot )\) for all \(x\in X\). Hence, (20) can be rewritten as

Suppose to the contrary that for every \(\tau '\in (0,+\infty )\), there exists \(x_0\in X\) such that

Clearly, \(x_0\notin S.\) Set \(\epsilon :=[f(x_0)]_+=f(x_0)>0\) and \(\lambda :=\tau '\epsilon \). Then, one has

and

To employ Theorem 3.1, we set \(A:=X\) and define

It is not difficult to verify that (i)–(iii) of Theorem 3.1 are satisfied by the same argument used in the proof of Theorem 4.1. Then, there is \({\bar{x}}\in X\) such that \({\bar{x}}\in G(x_0)\) and \(G({\bar{x}})={\bar{x}}\) and therefore that

and

It follows that \({\bar{x}}\) is a global minimum of the convex function

Since \([f]_+\) is finite and continuous at \({\bar{x}}\in \text { dom }T_F({\bar{x}}, \cdot )\), one has

This together with Lemma 2.1 (iii) implies that \(0\in \partial [f]_+({\bar{x}})+\frac{1}{\tau '} C^*.\) Then there exist \(x^*\in \partial [f]_+({\bar{x}})\) and \(u^*\in C^*\) such that \(-x^*=\frac{1}{\tau '}u^*,\) and hence,

Furthermore, it follows from (24) and (25) that \({\bar{x}} \in X\setminus S\). We therefore have

which contradicts (23). This completes the proof of the theorem. \(\square \)

Remark 4.7

The existence of error bounds is usually proved by using the Ekeland variational principle in [20]; instead of that, one can apply Theorem 3.1 (invariant points theorem) as seen in the proof of Theorem 4.4. Observe that Theorem 4.4 includes Proposition 2 (ii) of [40]. The following example illustrates the advantage of the necessity part of Theorem 4.4 providing a case when it works well, while Proposition 2 (ii) of [40] is out of use.

Example 4.4

Let m be a arbitrary positive integer satisfying \(m\ge 2\) and let \(X=\mathbb {R}\) and \(f: X \rightarrow \mathbb {R}\) defined by

Clearly, f is a convex continuous function. It is not difficult to verify that

One takes a sequence \((x_k)\subset (0, +\infty )\) satisfying \(x_k \rightarrow 0\) as \(k\rightarrow \infty \). We show that the inequality \(f(x)\le 0\) does not admit any usual global error bound. Suppose on the contrary that there exists \(\tau >0\) such that

Since \(x_k>0\), one has \(1\le \tau x_{k}^{m-1}\text { for all } k\in \mathbb {N}.\) Letting \(x_k\rightarrow 0\) as \(k\rightarrow \infty \), we get a contradiction. Thus, Proposition 2 (ii) of [40] cannot be applied in this case.

Now, let \(F=[0,1].\) Next, we can take \(\tau = 1\) to get

Therefore, \(f (x) \le 0\) has a generalized global error bound with respect to F. Then, Theorem 4.4 tells us that (20) holds. Indeed, by direct computations, one has \(\partial [f]_+(x)=\{-1\} \text { for all }x\in (-\infty ,0)=C_F\setminus S.\) This implies that

i.e., (20) is satisfied.

Remark 4.8

In the above example, we can say that f has a global error bound in the left direction of the solution set.

5 Conclusions

In this paper, we have provided a sufficient condition for a set-valued map, acting from one closed subset of a Banach space to itself, to have an invariant point. We have also shown that this result is equivalent to an ordering principle. The obtained results can be applied to derive sufficient conditions for the existence of solutions to various optimization-related problems. Another direction of the application is related to necessary and sufficient conditions for the existence of a new type of global error bounds.

References

Ansari, Q.H.: Vectorial form of Ekeland-type variational principle with applications to vector equilibrium problems and fixed point theory. J. Math. Anal. Appl. 334(1), 561–575 (2007)

Arutyunov, A.V.: Caristi’s condition and existence of a minimum of a lower bounded function in a metric space. Applications to the theory of coincidence points. Proc. Steklov. Inst. Math. 291, 24–37 (2015)

Arutyunov, A.V., Avakov, E.R., Izmailov, A.F.: Directional regularity and metric regularity. SIAM J. Optim. 18(3), 810–833 (2007)

Arutyunov, A.V., Avakov, E., Gel’man, B.D., Dmitruk, A., Obukhovskii, V.: Locally covering maps in metric spaces and coincidence points. J. Fixed Point Theory Appl. 5(1), 105–127 (2009)

Arutyunov, A.V., Izmailov, A.F.: Directional stability theorem and directional metric regularity. Math. Oper. Res. 31(3), 526–543 (2006)

Arutyunov, A.V., Gel’man, B.D.: On the structure of the set of coincidence points. Sbornik Math. 206(3), 370–388 (2015)

Arutyunov, A.V., Zhukovskiy, E.S., Zhukovskiy, S.E.: On the cardinality of the coincidence set for mappings of metric, normed and partially ordered spaces. Sbornik Math. 209(8), 1107–1130 (2018)

Azé, D., Corvellec, J.-N.: On the sensitivity analysis of Hoffman constants for systems of linear inequalities. SIAM J. Optim. 12(4), 913–927 (2002)

Bao, T.Q., Théra, M.A.: On extended versions of Dancs–Hegedüs–Medvegyev’s fixed point theorem. Optimization 66(6), 875–887 (2017)

Belousov, E.G.: On types of Hausdorff discontinuity from above for convex closed mappings. Optimization 49(4), 303–325 (2001)

Bianchi, M., Kassay, G., Pini, R.: Existence of equilibria via Ekeland’s principle. J. Math. Anal. Appl. 305(2), 502–512 (2005)

Bishop, E., Phelps, R.R.: The support functionals of a convex set. Proc. Sympos. Pure Math. 7, 27–35 (1962)

Chuong, T.D., Jeyakumar, V.: Robust global error bounds for uncertain linear inequality systems with applications. Linear Algebra Appl. 493, 183–205 (2016)

Cibulka, R., Durea, M., Panţiruc, M., Strugariu, R.: On the stability of the directional regularity. Set-Valued Var. Anal. 28(2), 209–237 (2020)

Chelmuş, T., Durea, M., Florea, E.: Directional pareto efficiency: concepts and optimality conditions. J. Optim. Theory Appl. 182(1), 336–365 (2019)

Colombo, G., Wolenski, P.R.: The subgradient formula for the minimal time function in the case of constant dynamics in Hilbert space. J. Glob. Optim. 28, 269–282 (2004)

Dancs, S., Hegedüs, M., Medvegyev, P.: A general ordering and fixed-point principle in complete metric space. Acta Sci. Math. Szeged. 46, 381–388 (1983)

Durea, M., Panţiruc, M., Strugariu, R.: Minimal time function with respect to a set of directions: basic properties and applications. Optim. Methods Softw. 31(3), 535–561 (2016)

Durea, M., Panţiruc, M., Strugariu, R.: A new of directional regularity for mappings and applications to optimization. SIAM J. Optim. 27(2), 1204–1229 (2017)

Eleland, I.: On the variational principle. J. Math. Anal. Appl. 47(2), 324–353 (1974)

Fabian, M.J., Henrion, R., Kruger, A.Y., Outrata, J.V.: Error bounds: necessary and sufficient conditions. Set-Valued Var. Anal. 18(2), 121–149 (2010)

Fan, K.: Minimax inequality and applications. In: Shisha, O. (ed.) Inequalities III, pp. 103–113. Academic Press, New York (1972)

Flores-Bazán, F., Gutiérrez, C., Novo, V.: A Brézis–Browder principle on partially ordered spaces and related ordering theorems. J. Math. Anal. Appl. 375(1), 245–260 (2011)

Gfrerer, H.: On directional metric subregularity and second-order optimality conditions for a class of nonsmooth mathematical programs. SIAM J. Optim. 23(1), 632–665 (2013)

Ha, T.X.D.: Some variants of the Ekeland variational principle for a set-valued map. J. Optim. Theory Appl. 124(1), 187–206 (2005)

Hoffman, A.J.: On approximate solutions of systems of linear inequalities. J. Res. Nat. Bur. Stand. 49, 263–265 (1952)

He, Y., Ng, K.F.: Subdifferentials of a minimum time function in Banach spaces. J. Math. Anal. Appl. 321(2), 896–910 (2006)

Ioffe, A.D.: Variational Analysis of Regular Mappings: Theory and Applications. Springer Monographs in Mathematics, Springer, Cham (2017)

Jiang, Y., He, Y.: Subdifferential properties for a class of minimal time functions with moving target sets in normed spaces. Appl. Anal. 91(3), 491–501 (2012)

Klatte, D.: Hoffman’s error bound for systems of convex inequalities. In: Fiacco, A.V. (ed.) Mathematical Programming with Data Perturbations, pp. 185–199. Marcel Dekker Publ., Moscow (1998)

Khanh, P.Q., Long, V.S.T.: Invariant-point theorems and existence of solutions to optimization-related problems. J. Glob. Optim. 58(3), 545–564 (2014)

Li, G.: On the asymptotically well behaved functions and global error bound for convex polynomials. SIAM J. Optim. 20(4), 1923–1943 (2010)

Lin, L.J., Du, W.S.: Ekeland’s variational principle, minimax theorems and existence of nonconvex equilibria in complete metric spaces. J. Math. Anal. Appl. 323(1), 360–370 (2006)

Long, V.S.T.: A new notion of error bounds: necessary and sufficient conditions. Optim. Lett. 15(1), 171–188 (2021)

Mordukhovich, B.S., Nam, N.M.: Limiting subgradients of minimal time functions in Banach spaces. J. Global Optim. 46(4), 615–633 (2010)

Muu, L.D., Oettli, W.: Convergence of an adaptive penalty scheme for finding constrained equilibria. Nonlinear Anal. 18(12), 1159–1166 (1992)

Nam, N.M., Villalobos, M.C., An, N.T.: Minimal time functions and the smallest intersecting ball problem with unbounded dynamics. J. Optim. Theory Appl. 154(3), 768–791 (2012)

Nam, N.M., Zǎlinescu, C.: Variational analysis of directional minimal time functions and applications to location problems. Set-Valued Anal. 21(2), 405–430 (2013)

Ngai, H.V.: Global error bounds for systems of convex polynomials over polyhedral constraints. SIAM J. Optim. 25(1), 521–539 (2015)

Ngai, H.V., Théra, M.: Error bounds for convex differentiable inequality systems in Banach spaces. Math. Program. Ser. B 104(2–3), 465–482 (2005)

Ngai, H.V., Théra, M.: Directional metric regularity of multifunctions. Math. Oper. Res. 40(4), 969–991 (2015)

Oettli, W., Théra, M.: Equivalents of Ekeland’s principle. Bull. Austral. Math. Soc. 48(3), 385–392 (1993)

Penot, J.-P.: The drop theorem, the petal theorem and Ekeland’s variational principle. Nonlinear Anal. 10(9), 813–822 (1986)

Qiu, J.H.: A pre-order principle and set-valued Ekeland variational principle. J. Math. Anal. Appl. 419(2), 904–937 (2014)

Shironin, V.M.: On Hausdorff continuity of convex and convex polynomial mappings. In: Belousov, E.G., Bank, B. (eds.) Mathematical Optimization: Questions of Solvability and Stability. Moscow Univeristy Publ., Moscow (1986). (in Russian)

Zǎlinescu, C.: Convex Analysis in General Vector Spaces. World Scientific, Singapore (2002)

Acknowledgements

The author would like to thank the editor and the anonymous referee for their useful comments and valuable suggestions, which helped to improve the paper significantly. This research is funded by Vietnam National University HoChiMinh City (VNU-HCM) under Grant Number T2022-18-02.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Nguyen Mau Nam.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Long, V.S.T. An Invariant-Point Theorem in Banach Space with Applications to Nonconvex Optimization. J Optim Theory Appl 194, 440–464 (2022). https://doi.org/10.1007/s10957-022-02033-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-022-02033-y