Abstract

We study a first-order primal-dual subgradient method to optimize risk-constrained risk-penalized optimization problems, where risk is modeled via the popular conditional value at risk (CVaR) measure. The algorithm processes independent and identically distributed samples from the underlying uncertainty in an online fashion and produces an \(\eta /\sqrt{K}\)-approximately feasible and \(\eta /\sqrt{K}\)-approximately optimal point within K iterations with constant step-size, where \(\eta \) increases with tunable risk-parameters of CVaR. We find optimized step sizes using our bounds and precisely characterize the computational cost of risk aversion as revealed by the growth in \(\eta \). Our proposed algorithm makes a simple modification to a typical primal-dual stochastic subgradient algorithm. With this mild change, our analysis surprisingly obviates the need to impose a priori bounds or complex adaptive bounding schemes for dual variables to execute the algorithm as assumed in many prior works. We also draw interesting parallels in sample complexity with that for chance-constrained programs derived in the literature with a very different solution architecture.

1 Introduction

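We study iterative primal-dual stochastic subgradient algorithms to solve risk-sensitive optimization problems of the form

$$\begin{aligned} {{{{\mathcal {P}}}}^\mathrm{CVaR}}: \quad \underset{\varvec{x} \in {\mathbb {X}}}{\text {minimize}} \ \ F(\varvec{x}) := \mathrm{CVaR}_\alpha [f_\omega (\varvec{x})], \quad \text {subject to} \ \ G^i(\varvec{x}) := \mathrm{CVaR}_{\beta ^i} [g^i_\omega (\varvec{x})] \le 0, \ \ i = 1, \ldots , m, \end{aligned}$$(1)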

where \(\omega \in \varOmega \) is random and \(\alpha , \pmb {\beta }:=(\beta ^1, \ldots , \beta ^m)\) in [0, 1) define risk-aversion parameters. The real-valued functions \(f_\omega \), \(g^1_\omega , \ldots , g^m_\omega \) are assumed convex, but not necessarily differentiable, over the closed convex set \({\mathbb {X}}\subseteq {\mathbb {R}}^n\), where \({\mathbb {R}}\) and \({\mathbb {R}}_+\) stand for the sets of real and nonnegative numbers, respectively. Denote by \({\varvec{G}}\) and \({\varvec{g}}_\omega \), the collection of \(G^i\)’s and \(g^i_\omega \)’s, respectively, for \(i = 1,\ldots , m\). \(\mathrm{CVaR}\) stands for conditional value at risk. For any \(\delta \in [0, 1)\), \(\mathrm{CVaR}_\delta [y_\omega ]\) of a scalar random variable \(y_\omega \) with continuous distribution equals its expectation computed over the \(1-\delta \) tail of the distribution of \(y_\omega \). For \(y_\omega \) with general distributions, \(\mathrm{CVaR}\) is defined via the following variational characterization

$$\begin{aligned} \mathrm{CVaR}_\delta [y_\omega ] := \min _{u \in {\mathbb {R}}} \left\{ u + \frac{1}{1-\delta } \mathbb {E} \left[ \max \{ y_\omega - u, 0 \} \right] \right\} \end{aligned}$$(2)

following [36]. For each \(\varvec{x} \in {\mathbb {X}}\), assume that \(\mathbb {E} [ | f_\omega (\varvec{x}) | ]\) and \(\mathbb {E} [ | g^i_\omega (\varvec{x}) | ]\) are finite, implying that F and \({\varvec{G}}\) are well defined everywhere in \({\mathbb {X}}\).
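As a minimal numerical sketch of the two descriptions of \(\mathrm{CVaR}\) given above, the Python snippet below evaluates the variational formula of [36], \(\min _u \{ u + \mathbb {E} [\max \{y_\omega - u, 0\}]/(1-\delta )\}\), on an empirical distribution and compares it with the average over the worst \(1-\delta \) fraction of samples. The function names, the Gaussian samples and the sample size are illustrative assumptions and not part of the paper; the tail-average shortcut assumes that \((1-\delta )\) times the number of samples is an integer.

```python
import numpy as np

def cvar_variational(y, delta):
    """Empirical CVaR via min_u { u + mean(max(y - u, 0)) / (1 - delta) }."""
    y = np.asarray(y, dtype=float)
    # The objective is convex and piecewise linear in u with kinks at the
    # sample values, so a minimizer is attained at one of the samples.
    candidates = np.unique(y)
    excess = np.maximum(y[None, :] - candidates[:, None], 0.0).mean(axis=1)
    return float(np.min(candidates + excess / (1.0 - delta)))

def cvar_tail_average(y, delta):
    """Mean of the largest (1 - delta) fraction of equally weighted samples.

    Assumes (1 - delta) * len(y) is an integer.
    """
    y = np.sort(np.asarray(y, dtype=float))
    k = int(round((1.0 - delta) * y.size))
    return float(y[-k:].mean())

rng = np.random.default_rng(0)
samples = rng.normal(size=1000)  # hypothetical draws of y_omega
for delta in (0.0, 0.5, 0.9):
    print(delta, cvar_variational(samples, delta), cvar_tail_average(samples, delta))
```

With \(\delta = 0\) both functions return the sample mean, and both values grow as \(\delta \) approaches one, in line with the risk-neutral and risk-averse interpretations discussed next.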

\({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) offers a modeler the flexibility to indicate her risk preference in \(\alpha , \pmb {\beta }\). With \(\alpha \) close to zero, she indicates risk-neutrality toward the uncertain cost associated with the decision. With \(\alpha \) closer to one, she expresses her risk aversion toward the same and seeks a decision that limits the possibility of large random costs associated with the decision. Similarly, \(\beta \)’s express the risk tolerance in constraint violation. Choosing \(\beta \)’s close to zero indicates that constraints should be satisfied on average over \(\varOmega \) rather than on each sample. Driving \(\beta \)’s to unity amounts to requiring the constraints to be met almost surely. Said succinctly, \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) permits the modeler to customize risk preference between the risk-neutral choice of expected evaluations of functions to the conservative choice of robust evaluations.

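There is a growing interest in solving risk-sensitive optimization problems with data. See [3, 20] for recent examples that tackle problems with generalized mean semi-deviation risk that equals \(\mathbb {E} [{y_\omega }] + c \mathbb {E} [ \vert {y_\omega } - \mathbb {E} [{y_\omega }] \vert ^{p}]^{1/p}\) for \(p>1\) for a random variable \(y_\omega \). There is a long literature on risk measures, e.g., see [1, 12, 25, 33, 36, 37]. We choose \(\mathrm{CVaR}\) for three particular reasons. First, it is a coherent risk measure, meaning that it is normalized, sub-additive, positively homogeneous and translation invariant, i.e.,

$$\begin{aligned} \begin{aligned}&\mathrm{CVaR}_\delta [0] = 0, \quad \mathrm{CVaR}_\delta [y^1_\omega + y^2_\omega ] \le \mathrm{CVaR}_\delta [y^1_\omega ] + \mathrm{CVaR}_\delta [y^2_\omega ], \\&\mathrm{CVaR}_\delta [t y_\omega ] = t \, \mathrm{CVaR}_\delta [y_\omega ], \quad \mathrm{CVaR}_\delta [y_\omega + t'] = \mathrm{CVaR}_\delta [y_\omega ] + t' \end{aligned} \end{aligned}$$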

for random variables \(y_\omega , y^1_\omega , y^2_\omega \), \(t >0\) and \(t' \in {\mathbb {R}}\). An important consequence of coherence is that F and \({\varvec{G}}\) in \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) inherit the convexity of \(f_\omega \) and \({\varvec{g}}_\omega \). Convexity together with the variational characterization in (2) allow us to design sampling based primal-dual methods for \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) for which we are able to provide finite sample analysis of approximate optimality and feasibility. The popularity of the \(\mathrm{CVaR}\) measure is our second reason to study \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\). Following Rockafellar and Uryasev’s seminal work in [36], \(\mathrm{CVaR}\) has found applications in various engineering domains, e.g., see [22, 27], and therefore we anticipate wide applications of our result. Our third and final reason to study \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) is its close relation to other optimization paradigms in the literature as we describe next.

\({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) without constraints and \(\alpha = 0\) reduces to the minimization of \(\mathbb {E} [f_\omega (\varvec{x})]\), the canonical stochastic optimization problem. With \(\alpha \uparrow 1\), the problem description of \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) approaches that of a robust optimization problem (see [4]) of the form \(\min _{\varvec{x} \in {\mathbb {X}}} \text {ess}\sup _{\omega \in \varOmega } f_\omega (\varvec{x})\), where \(\text {ess}\sup \) denotes the essential supremum. Driving \(\beta \)’s to unity, \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) demands that the constraints be enforced almost surely. Such robust constraint enforcement is common in multi-stage stochastic optimization problems with recourse and discrete-time optimal control problems, e.g., in [16, 39, 40]. \(\mathrm{CVaR}\)-based constraints are closely related to chance constraints introduced by Charnes and Cooper in [12] that enforce \( \mathsf{Pr}\{g_\omega (\varvec{x}) \le 0 \} > 1-\varepsilon \) where \(\mathsf{Pr}\) refers to the probability measure on \(\varOmega \). Even if \(g_\omega \) is convex, chance-constraints typically describe a nonconvex feasible set. It is well known that \(\mathrm{CVaR}\)-based constraints provide a convex inner approximation of chance-constraints. Restricting the probability of constraint violation does not limit the extent of any possible violation, while \(\mathrm{CVaR}\)-based enforcement does so in expectation. \(\mathrm{CVaR}\) is also intimately related to the buffered probability of exceedance (bPOE) introduced and studied more recently in [25, 45]. In fact, bPOE is the inverse function of \(\mathrm{CVaR}\), and hence, problems with bPOE-constraints can often be reformulated as instances of \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\).
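The inner-approximation property mentioned above can be checked numerically. The sketch below is illustrative only: the Gaussian constraint values, the level \(\varepsilon = 0.05\) and the helper name `empirical_cvar` are assumptions made for this example. It shifts a sample of constraint values so that the empirical \(\mathrm{CVaR}_{1-\varepsilon }\) equals zero (using translation invariance) and then confirms that the empirical probability of violation does not exceed \(\varepsilon \).

```python
import numpy as np

def empirical_cvar(y, delta):
    """Mean of the largest (1 - delta) fraction of equally weighted samples.

    Assumes (1 - delta) * len(y) is an integer.
    """
    y = np.sort(np.asarray(y, dtype=float))
    k = int(round((1.0 - delta) * y.size))
    return float(y[-k:].mean())

rng = np.random.default_rng(1)
eps = 0.05
# Hypothetical samples of the constraint value g_omega(x) at a fixed x.
g = rng.normal(loc=-1.0, scale=1.0, size=10000)

# Translation invariance: subtracting the CVaR makes the constraint tight,
# i.e., the shifted samples have empirical CVaR_{1-eps} equal to zero.
g_tight = g - empirical_cvar(g, 1.0 - eps)

violation_probability = float(np.mean(g_tight > 0.0))
print("CVaR of shifted samples:", empirical_cvar(g_tight, 1.0 - eps))
print("empirical Pr{g > 0}:", violation_probability, "<= eps =", eps)
```

The chance constraint holds with slack even though the \(\mathrm{CVaR}\) constraint is tight, which reflects the conservatism of the convex inner approximation.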

It can be challenging to compute \(\mathrm{CVaR}\) of \(f_\omega (\varvec{x})\) or \({\varvec{g}}_\omega (\varvec{x})\) for a given decision variable \(\varvec{x}\) with respect to a general distribution on \(\varOmega \) for two reasons. First, if samples from \(\varOmega \) are obtained from a simulation tool, an explicit representation of the probability distribution on \(\varOmega \) may not be available. Second, even if such a distribution is available, computation of \(\mathrm{CVaR}\) (or even the expectation) can be difficult. For example, with \(f_\omega \) as the positive part of an affine function and \(\omega \) being uniformly distributed over a unit hypercube, computation of \(\mathbb {E} [f_\omega ]\) via a multivariate integral is #P-hard according to [17, Corollary 1]. Therefore, we do not assume knowledge of F and \({\varvec{G}}\) but rather study a sampling-based algorithm to solve \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\).

Solution architectures for \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) via sampling come in two flavors. The first approach is sample average approximation (SAA) that replaces the expectation in (2) by an empirical average over N samples. One can then solve the sampled problem as a deterministic convex program. We take the second and alternate approach of stochastic approximation and process independent and identically distributed (i.i.d.) samples from \(\varOmega \) in an online fashion. Iterative stochastic approximation algorithms for the unconstrained problem have been studied since the early works by Robbins and Monro in [34] and by Kiefer and Wolfowitz in [21]. See [24] for a more recent survey. Zinkevich in [46] proposed a projected stochastic subgradient method that can be applied to tackle constraints in such problems. Without directly knowing \({\varvec{G}}\), we cannot easily project the iterates on the feasible set \(\{ \varvec{x} \in {\mathbb {X}}\ | \ {\varvec{G}}(\varvec{x}) \le 0 \}\). We circumvent the challenge by associating Lagrange multipliers \({\varvec{z}} \in {\mathbb {R}}^m_+\) to the constraints and iteratively updating \(\varvec{x}, {\varvec{z}}\) by using \(f_\omega , {\varvec{g}}_\omega \) and their subgradients via a first-order stochastic primal-dual algorithm for \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) along the lines of [30, 42, 44].

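In Sect. 2, we first design and analyze Algorithm 1 for \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) with \(\alpha =0, \pmb {\beta } = 0\), i.e., the optimization problem

$$\begin{aligned} {{{{\mathcal {P}}}}^{\mathrm{E}}}: \quad \underset{\varvec{x} \in {\mathbb {X}}}{\text {minimize}} \ \ F(\varvec{x}) := \mathbb {E} [f_\omega (\varvec{x})], \quad \text {subject to} \ \ G^i(\varvec{x}) := \mathbb {E} [g^i_\omega (\varvec{x})] \le 0, \ \ i = 1, \ldots , m. \end{aligned}$$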

First-order stochastic primal-dual algorithms have a long history, dating back almost forty years, including that in [15, 24, 30,31,32]. The analyses of these algorithms often require a bound on the possible growth of the dual variables. Borkar and Meyn in [8] stress the importance of compactness assumptions in their analysis of stochastic approximation algorithms. A priori bounds used in [31] are difficult to know in practice and techniques for iterative construction of such bounds as in [30] require extra computational effort. A regularization term in the dual update has been proposed in [23, 26] to circumvent this limitation. Instead, we propose a different modification to the classical primal-dual stochastic subgradient algorithm. With this simple modification, we are able to circumvent the need to bound the dual variables in executing the algorithm. As will become clear in Sect. 2, we rely on the existence of a saddle point of the Lagrangian function for \({{{{\mathcal {P}}}}^{\mathrm{E}}}\), which is typically guaranteed under Slater-type constraint qualification. However, knowledge of that saddle point or a strictly feasible “Slater” point is not required to execute the algorithm nor derive its convergence rate. While the classical primal-dual approach samples once for a single update of the primal and the dual variables, we sample twice–once to update the primal variable and then again to update the dual variable with the most recent primal iterate–thus, adopting a Gauss–Seidel approach in place of a Jacobi framework. For Algorithm 1, we bound the expected optimality gap and constraint violations at a suitably weighted average of the iterates by \(\eta /\sqrt{K}\) for a constant \(\eta \) with a constant step-size algorithm. Using these bounds, we then carefully optimize the step-size that allows us to reach within a given threshold of suboptimality and constraint violation with the minimum number of iterations. 
While we do not bound the dual variables to execute the algorithm or to characterize the \(1/\sqrt{K}\) convergence rate, we do require an overestimate of the distance of the dual initialization from an optimal point to calculate the constant \(\eta \) that in turn is required to optimize the constant step-size. The additional sample required in our update aids in the analysis; however, it comes at the price of making the sample complexity double of the iteration complexity. Given the popularity of decaying step-sizes in first-order algorithms, we also provide stability analysis of our algorithm with such step-sizes. This analysis exploits a dissipation inequality that we derive for our Gauss–Seidel approach. Such a stability analysis is crucial for our primal-dual algorithm, given that we do not explicitly restrict the growth of the dual variables.

In Sect. 3, we solve \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) with general risk aversion parameters \(\alpha , \pmb {\beta }\) using Algorithm 1 on an instance of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) obtained through a standard reformulation via the variational formula for \(\mathrm{CVaR}\) in (2) from [36]. We then bound the expected suboptimality and constraint violation at a weighted average of the iterates for \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) by \(\eta (\alpha , {\varvec{\beta }})/\sqrt{K}\). Upon utilizing the optimized step-sizes from the analysis of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\), we are then able to study the precise growth in the required iteration (and sample) complexity of \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) as a function of \(\alpha , {\varvec{\beta }}\). Not surprisingly, the more risk-averse a problem one aims to solve, the greater this complexity. A modeler chooses risk aversion parameters primarily driven by attitudes toward risk in specific applications. Our precise characterization of the growth in sample complexity with risk aversion will permit the modeler to balance between desired risk levels and computational challenges in handling that risk. We remark that the algorithmic architecture for the risk neutral problem may not directly apply to the risk-sensitive variant for general risk measures. For example, the algorithm described in [20] for general mean-semideviation-type risk measures is considerably more complex than that required for the risk-neutral problem. We are able to extend our algorithm and its analysis for \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) to \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\), thanks to the variational form in (2) that \(\mathrm{CVaR}\) admits. See the discussion after the proof of Theorem 3.1 for a precise list of properties a risk measure must exhibit for us to apply the same trick. 
Using concentration inequalities, we also report an interesting connection of our results to that in [10, 11] on scenario approximations to chance-constrained programs. The resemblance in sample complexity is surprising, given that the approach in [10, 11] solves a deterministic convex program with sampled constraints, while we process samples in an online fashion.
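To make the reformulation of \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) as an instance of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) concrete, the following display gives a sketch of the standard construction based on the variational formula of [36]. The auxiliary scalars \(u^0, u^1, \ldots , u^m\) are names introduced here for illustration, and the notation in Sect. 3 may differ. Substituting the variational formula into the objective and each constraint, and minimizing jointly over \(\varvec{x}\) and the auxiliary scalars, suggests the problem

$$\begin{aligned} \begin{aligned}&\underset{\varvec{x} \in {\mathbb {X}}, \; u^0, \ldots , u^m \in {\mathbb {R}}}{\text {minimize}} \ \ \mathbb {E} \left[ u^0 + \frac{1}{1-\alpha } \max \{ f_\omega (\varvec{x}) - u^0, 0 \} \right] , \\&\text {subject to} \ \ \mathbb {E} \left[ u^i + \frac{1}{1-\beta ^i} \max \{ g^i_\omega (\varvec{x}) - u^i, 0 \} \right] \le 0, \ \ i = 1, \ldots , m. \end{aligned} \end{aligned}$$

Each integrand is jointly convex in \((\varvec{x}, u^i)\) whenever \(f_\omega \) and \(g^i_\omega \) are convex in \(\varvec{x}\), so the problem has the expectation-constrained form of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) over an enlarged decision variable. The factors \(1/(1-\alpha )\) and \(1/(1-\beta ^i)\) scale the subgradients of the integrands, which is one way to see why the constant \(\eta (\alpha , {\varvec{\beta }})\) can be expected to grow with risk aversion.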

We illustrate properties of our algorithm through a stylized example. Our experiments reveal that the optimized iteration count (and sample complexity) for even a simple example is quite high. This limitation is unfortunately common for subgradient algorithms and likely cannot be overcome in optimizing the general nonsmooth functions that we study. While the bounds are order-optimal, our numerical experiments reveal that a solution with desired risk tolerance can be found in fewer iterations than obtained from the upper bound. This is an artifact of optimizing step-sizes based on upper bounds on suboptimality and constraint violation. We end the paper in Sect. 4 with discussions on possible extensions of our analysis.

Very recently, it was brought to our attention that the work in [7] done concurrently presents a related approach to tackle optimization of composite nonconvex functions under related but different assumptions. In fact, their work appeared at the same time as our early version and claims a similar result that does not require bounds on the dual variables. Our analysis does not require or analyze the case with strongly convex functions within our setup and therefore Nesterov-style acceleration remains untenable. As a result, our algorithm is different. Our focus on \(\mathrm{CVaR}\) permits us to further analyze the growth in optimized sample complexity with risk aversion and its connection to chance-constrained optimization that is quite different.

2 Algorithm for \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) and Its Analysis

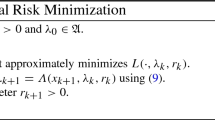

We present the primal-dual stochastic subgradient method to solve \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) in Algorithm 1.

The notation \(\langle \cdot , \cdot \rangle \) stands for the usual inner product in Euclidean space and \(\Vert \cdot \Vert \) denotes the induced \(\ell _2\)-norm. Here, \(\nabla h(\varvec{x})\) stands for a subgradient of an arbitrary convex function h at \(\varvec{x}\). For our analysis, the subgradient in Algorithm 1 for functions \(f_\omega (\varvec{x})\) and \(g_\omega (\varvec{x})\) can be arbitrary elements of the closed convex subdifferential sets \(\partial f_\omega (\varvec{x})\) and \(\partial g_\omega (\varvec{x})\), respectively. We assume that these subdifferential sets are nonempty everywhere in \({\mathbb {X}}\).

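The primal-dual method in Algorithm 1 leverages Lagrangian duality theory. Specifically, define the Lagrangian function for \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) as

$$\begin{aligned} {{\mathcal {L}}}(\varvec{x}, {\varvec{z}}) := F(\varvec{x}) + {\varvec{z}}^{{\intercal }} {\varvec{G}}(\varvec{x}) = \mathbb {E} [{{\mathcal {L}}}_\omega (\varvec{x}, {\varvec{z}})] \end{aligned}$$(6)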

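for \(\varvec{x} \in {\mathbb {X}}\), \({\varvec{z}} \in {\mathbb {R}}^m_+\), where \({{\mathcal {L}}}_\omega (\varvec{x}, {\varvec{z}}) :=f_\omega (\varvec{x}) + {\varvec{z}}^{{\intercal }} {\varvec{g}}_\omega (\varvec{x})\). Then, \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) admits the standard reformulation as a min-max problem of the form

$$\begin{aligned} {{p}^{\mathrm{E}}_\star } := \min _{\varvec{x} \in {\mathbb {X}}} \ \max _{{\varvec{z}} \in {\mathbb {R}}^m_+} \ {{\mathcal {L}}}(\varvec{x}, {\varvec{z}}). \end{aligned}$$(7)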

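Denote its optimal set by \({\mathbb {X}}_\star \subseteq {\mathbb {X}}\). Define the dual problem of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) as

$$\begin{aligned} {{d}^{\mathrm{E}}_\star } := \max _{{\varvec{z}} \in {\mathbb {R}}^m_+} \ \min _{\varvec{x} \in {\mathbb {X}}} \ {{\mathcal {L}}}(\varvec{x}, {\varvec{z}}). \end{aligned}$$(8)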

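Denote its optimal set by \({\mathbb {Z}}_\star \subseteq {\mathbb {R}}^m_+\). Weak duality then guarantees \({{p}^{\mathrm{E}}_\star }\ge {{d}^{\mathrm{E}}_\star }\). When the inequality is met with an equality, the problem is said to satisfy strong duality. A point \((\varvec{x}_\star , {\varvec{z}}_\star ) \in {\mathbb {X}}\times {\mathbb {R}}^m_+\) is a saddle point of \({{\mathcal {L}}}\) if

$$\begin{aligned} {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}) \le {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}_\star ) \le {{\mathcal {L}}}(\varvec{x}, {\varvec{z}}_\star ) \end{aligned}$$(9)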

for all \((\varvec{x}, {\varvec{z}}) \in {\mathbb {X}}\times {\mathbb {R}}^m_+\). The following well-known saddle point theorem (see [6, Theorem 2.156]) relates saddle points with primal-dual optimal solutions.

Theorem

(Saddle point theorem) A saddle point of \({{\mathcal {L}}}\) exists if and only if \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) satisfies strong duality, i.e., \({{p}^{\mathrm{E}}_\star }= {{d}^{\mathrm{E}}_\star }\). Moreover, the set of saddle points of \({{\mathcal {L}}}\) is given by \({\mathbb {X}}_\star \times {\mathbb {Z}}_\star \).

Our convergence analysis of Algorithm 1 requires the following assumptions.

Assumption 1

\({{{{\mathcal {P}}}}^{\mathrm{E}}}{}\) must satisfy the following properties:

(a) Subgradients of F and \({\varvec{G}}\) are bounded, i.e., \(\Vert \nabla F(\varvec{x}) \Vert \le C_F\), \(\Vert \nabla G^i(\varvec{x}) \Vert \le C_G^i\) for each \(i = 1, \dots , m\) and all \(\varvec{x} \in {\mathbb {X}}\).

(b) \(\nabla f_\omega \) and \(\nabla g_\omega ^i\) for \(i = 1, \dots , m\) have bounded variance, i.e., \(\mathbb {E} \Vert \nabla f_\omega (\varvec{x}) - \mathbb {E} [\nabla f_\omega (\varvec{x})] \Vert ^2 \le \sigma _F^2\) and \(\mathbb {E} \Vert \nabla g_\omega ^i(\varvec{x}) - \mathbb {E} [\nabla g_\omega ^i(\varvec{x})] \Vert ^2 \le [\sigma _G^i]^2\) for all \(\varvec{x} \in {\mathbb {X}}\).

(c) \({\varvec{g}}_\omega (\varvec{x})\) has a bounded second moment, i.e., \(\mathbb {E} \Vert g_\omega ^i(\varvec{x}) \Vert ^2 \le [D_G^i]^2\) for all \(\varvec{x} \in {\mathbb {X}}\).

(d) The Lagrangian function \({{\mathcal {L}}}\) admits a saddle point \((\varvec{x}_\star , {\varvec{z}}_\star ) \in {\mathbb {X}}\times {\mathbb {R}}^m_+\).

The subgradient of F and the variance of its noisy estimate are assumed bounded. Such an assumption is standard in the convergence analysis of unconstrained stochastic subgradient methods. The assumptions regarding \({\varvec{G}}\) are similar, but we additionally require the second moment of the noisy estimate of \({\varvec{G}}\) to be bounded over \({\mathbb {X}}\). Boundedness of \({\varvec{G}}\) in primal-dual subgradient methods has appeared in prior literature, e.g., in [42, 44]. The second moment remains bounded if \({g}^i_\omega \) is uniformly bounded over \({\mathbb {X}}\) and \(\varOmega \) for each i. It is also satisfied if \({\varvec{G}}\) remains bounded over \({\mathbb {X}}\) and its noisy estimate has a bounded variance. Convergence analysis of unconstrained optimization problems typically assumes the existence of a finite optimal solution. We extend that requirement to the existence of a saddle point in the primal-dual setting, which by the saddle point theorem is equivalent to the existence of finite primal and dual optimal solutions. A variety of conditions imply the existence of such a point; the next result delineates two such sufficient conditions in (a) and (b), where (a) implies (b).

Lemma 2.1

(Sufficient conditions for existence of a saddle point) For \({{{{\mathcal {P}}}}^{\mathrm{E}}}\), the Lagrangian function \({{\mathcal {L}}}\) admits a saddle point, if either of the following conditions holds:

(a) \({\mathbb {X}}_\star \) is nonempty, \({{p}^{\mathrm{E}}_\star }\) is finite and Slater’s constraint qualification holds, i.e., there exists \( \varvec{x}\) in the relative interior of \({\mathbb {X}}\) for which \({\varvec{G}}(\varvec{x}) < 0\).

(b) \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) admits a finite \((\varvec{x}_\star , {\varvec{z}}_\star ) \in {\mathbb {X}}\times {\mathbb {R}}_+^m\) that satisfies the generalized Karush–Kuhn–Tucker (KKT) conditions given by

$$\begin{aligned} \begin{aligned} 0 \in \partial _x {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}_\star ) + {{\mathcal {N}}}_{{\mathbb {X}}}(\varvec{x}_\star ), \; {G}^i(\varvec{x}_\star ) \le 0, \; z^i_\star G^i(\varvec{x}_\star ) = 0 \end{aligned} \end{aligned}$$(10)

for \(i = 1, \dots , m\), where \({{\mathcal {N}}}_{{\mathbb {X}}}(\varvec{x}_\star )\) denotes the normal cone of \({\mathbb {X}}\) at \(\varvec{x}_\star \).

Proof

Part (a) is a direct consequence of [6, Theorem 1.265]. To prove part (b), notice that (10) ensures the existence of subgradients \(\nabla F(\varvec{x}_\star ) \in \partial F(\varvec{x}_\star )\), \(\nabla {G}^i(\varvec{x}_\star ) \in \partial {G}^i(\varvec{x}_\star )\), \(i=1,\ldots ,m\) and \({\varvec{n}} \in {{\mathcal {N}}}_{\mathbb {X}}(\varvec{x}_\star )\) for which

$$\begin{aligned} \nabla F(\varvec{x}_\star ) + \sum _{i=1}^m z^i_\star \nabla G^i(\varvec{x}_\star ) + {\varvec{n}} = 0. \end{aligned}$$

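Then, for any \(\varvec{x} \in {\mathbb {X}}\), we have

$$\begin{aligned} \begin{aligned} {{\mathcal {L}}}(\varvec{x}, {\varvec{z}}_\star )&= F(\varvec{x}) + \sum _{i=1}^m z^i_\star G^i(\varvec{x}) \\&\ge F(\varvec{x}_\star ) + \sum _{i=1}^m z^i_\star G^i(\varvec{x}_\star ) + \left\langle \nabla F(\varvec{x}_\star ) + \sum _{i=1}^m z^i_\star \nabla G^i(\varvec{x}_\star ), \varvec{x} - \varvec{x}_\star \right\rangle \\&= {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}_\star ) - \langle {\varvec{n}}, \varvec{x} - \varvec{x}_\star \rangle \\&\ge {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}_\star ). \end{aligned} \end{aligned}$$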

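The inequalities in the above relation follow from the convexity of F and \({G}^i\)’s, nonnegativity of \({\varvec{z}}_\star \), and the definition of the normal cone. From the above inequalities, we conclude \({{\mathcal {L}}}(\varvec{x}, {\varvec{z}}_\star ) \ge {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}_\star )\) for all \(\varvec{x} \in {\mathbb {X}}\). Furthermore, for any \({\varvec{z}} \ge 0\), we have

$$\begin{aligned} {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}) - {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}_\star ) = ({\varvec{z}} - {\varvec{z}}_\star )^{{\intercal }} {\varvec{G}}(\varvec{x}_\star ) = {\varvec{z}}^{{\intercal }} {\varvec{G}}(\varvec{x}_\star ) \le 0, \end{aligned}$$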

where the last step follows from the nonnegativity of \({\varvec{z}}\) and (10), completing the proof. \(\square \)

We now present our first main result that provides a bound on the expected distance to optimality and constraint violation at a weighted average of the iterates generated by the algorithm on \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) under Assumption 1. Denote by \({\varvec{C}}_G\), \({\varvec{D}}_G\), and \({\varvec{\sigma }}_G\) the collections of \(C_G^i\), \(D_G^i\), and \(\sigma _G^i\), respectively. We make use of the following notation.

Theorem 2.1

(Convergence result for \({{{{\mathcal {P}}}}^{\mathrm{E}}}\)) Suppose Assumption 1 holds. For a positive sequence \(\{\gamma _k\}_{k=1}^K\), if \(P_3 \sum _{k=1}^K \gamma _k^2 < 1\), then the iterates generated by Algorithm 1 satisfy

for each \(i = 1, \dots , m\), where \(\bar{\varvec{x}}_{K+1} := \frac{\sum _{k=1}^K \gamma _k \varvec{x}_{k+1}}{\sum _{k=1}^K \gamma _k}\). Moreover, if \(\gamma _k =\gamma /\sqrt{K}\) for \(k=1,\ldots ,K\) with \(0< \gamma < P_3^{-1/2}\), then

for \(i = 1,\ldots ,m\), where \(\eta := \frac{P_1 + P_2 \gamma ^2}{4\gamma (1-P_3\gamma ^2)}\).

A constant step-size of \(\gamma /\sqrt{K}\) over a fixed number of K iterations yields the \({{\mathcal {O}}}(1/\sqrt{K})\) decay rate in the expected distance to optimality and constraint violation of Algorithm 1. This is indeed order optimal, as implied by Nesterov’s celebrated result in [32, Theorem 3.2.1].
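As an illustration of how the expression for \(\eta \) in Theorem 2.1 can guide the choice of \(\gamma \), the sketch below minimizes \(\eta (\gamma ) = (P_1 + P_2\gamma ^2)/(4\gamma (1 - P_3\gamma ^2))\) over \(0< \gamma < P_3^{-1/2}\) by a simple grid search and then computes the smallest K for which \(\eta /\sqrt{K}\) falls below a tolerance. The numerical values of \(P_1, P_2, P_3\) and the tolerance are hypothetical placeholders chosen for this example, not values from the paper, and the grid search stands in for whatever optimization the authors use.

```python
import numpy as np

def eta(gamma, P1, P2, P3):
    """Constant in the eta / sqrt(K) bound, as a function of gamma."""
    return (P1 + P2 * gamma**2) / (4.0 * gamma * (1.0 - P3 * gamma**2))

# Hypothetical problem constants (assumed strictly positive).
P1, P2, P3 = 4.0, 10.0, 2.0

# Admissible range 0 < gamma < P3^{-1/2}; eta blows up at both ends,
# so the minimizer is interior and a fine grid locates it well.
gamma_max = P3 ** -0.5
grid = np.linspace(0.0, gamma_max, 100001)[1:-1]
values = eta(grid, P1, P2, P3)
best = int(np.argmin(values))
gamma_star, eta_star = float(grid[best]), float(values[best])

# Smallest K with eta_star / sqrt(K) <= tol.
tol = 0.05
K = int(np.ceil((eta_star / tol) ** 2))
print(f"gamma* ~ {gamma_star:.4f}, eta* ~ {eta_star:.4f}, K >= {K}")
```

Because K scales with \(\eta ^2\), any growth in \(\eta \) translates quadratically into the iteration count needed for a fixed tolerance.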

Remark 2.1

While we present the proof for an i.i.d. sequence of samples, we believe that the result can be extended to the case where \(\omega \)’s follow a Markov chain with geometric mixing rate following the technique in [41]. For such settings, the expectations in the definition of \({F}, {\varvec{G}}\) should be computed with respect to the stationary distribution of the chain. The results will then possibly apply to Markov decision processes with applications in stochastic control.

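Given that the literature on primal-dual subgradient methods is extensive, it is important for us to relate Algorithm 1 and Theorem 2.1 to prior work and to distinguish them from it. Using the Lagrangian in (6), Algorithm 1 can be written as

$$\begin{aligned} \begin{aligned} \varvec{x}_{k+1}&:= {{\,\mathrm{proj}\,}}_{{\mathbb {X}}} \left[ \varvec{x}_k - \gamma _k \nabla _x {{\mathcal {L}}}_{\omega _k}(\varvec{x}_k, {\varvec{z}}_k) \right] , \\ {\varvec{z}}_{k+1}&:= {{\,\mathrm{proj}\,}}_{{\mathbb {R}}^m_+} \left[ {\varvec{z}}_k + \gamma _k \nabla _z {{\mathcal {L}}}_{\omega _{k+1/2}}(\varvec{x}_{k+1}, {\varvec{z}}_k) \right] , \end{aligned} \end{aligned}$$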

where \({{\,\mathrm{proj}\,}}_{\mathbb {A}}\) projects its argument on set \({\mathbb {A}}\). The vectors \(\nabla _x {{\mathcal {L}}}_\omega \) and \(\nabla _z {{\mathcal {L}}}_\omega \) are stochastic subgradients of the Lagrangian function with respect to \(\varvec{x}\) and \({\varvec{z}}\), respectively. Therefore, Algorithm 1 is a projected stochastic subgradient algorithm that seeks to solve the saddle-point reformulation of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) in (7). Implicit in our algorithm is the assumption that projection on \({\mathbb {X}}\) is computationally easy. Any functional constraints describing \({\mathbb {X}}\) that make such projection challenging should be included in \({\varvec{G}}\).
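The projected updates described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: Algorithm 1 itself appears only as an image in this text, so the sketch follows the verbal description (a primal step with sample \(\omega _k\), then a dual step with a fresh sample \(\omega _{k+1/2}\) evaluated at \(\varvec{x}_{k+1}\)). The toy problem, the function name `run_primal_dual` and all numerical values are hypothetical.

```python
import numpy as np

def run_primal_dual(K=20000, gamma0=1.0, seed=0):
    """Gauss-Seidel primal-dual stochastic subgradient sketch on a toy problem.

    Toy instance (hypothetical, not from the paper):
        minimize   E[0.5 * ||x - a_w||^2]  over the box X = [-5, 5]^2
        subject to E[<b_w, x> - 1] <= 0,
    with a_w ~ N((2, 2), I) and b_w ~ N((1, 1), 0.01 * I).
    Its saddle point is x* = (0.5, 0.5) with multiplier z* = 1.5.
    """
    rng = np.random.default_rng(seed)
    a_mean, b_mean = np.array([2.0, 2.0]), np.array([1.0, 1.0])
    gamma = gamma0 / np.sqrt(K)  # constant step size gamma / sqrt(K)
    x, z = np.zeros(2), 0.0
    x_sum = np.zeros(2)
    for _ in range(K):
        # Primal step: draw omega_k and step along a stochastic
        # subgradient of the sampled Lagrangian in x at (x_k, z_k).
        a_k = a_mean + rng.normal(size=2)
        b_k = b_mean + 0.1 * rng.normal(size=2)
        grad_x = (x - a_k) + z * b_k
        x_next = np.clip(x - gamma * grad_x, -5.0, 5.0)  # projection on X
        # Dual step: draw a fresh sample omega_{k+1/2} and evaluate the
        # constraint at the already updated point x_{k+1}.
        b_half = b_mean + 0.1 * rng.normal(size=2)
        z = max(z + gamma * (b_half @ x_next - 1.0), 0.0)  # projection on R_+
        x = x_next
        x_sum += x
    # With a constant step size, the weighted average of x_2, ..., x_{K+1}
    # reduces to their plain average.
    return x_sum / K, z

x_bar, z_last = run_primal_dual()
print("averaged iterate:", x_bar, "last multiplier:", z_last)
```

Note that the dual variable is only projected on the nonnegative orthant; no upper bound on \({\varvec{z}}\) is imposed anywhere in the loop, and each iteration consumes two samples.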

Closest in spirit to our work on \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) are the papers by Baes et al. in [2], Yu et al. in [44], Xu in [42], and Nedic and Ozdaglar in [30]. The stochastic mirror-prox algorithm in [2] and the projected subgradient method in [30] are similar in their updates to ours except in two ways. First, these algorithms in the context of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) update the dual variable \({\varvec{z}}_k\) based on \({\varvec{G}}\) or its noisy estimate evaluated at \(\varvec{x}_k\), while we update it based on the estimate at \(\varvec{x}_{k+1}\). Second, both project the dual variable on a compact subset of \({\mathbb {R}}^m_+\) that contains the optimal set of dual multipliers. While the authors of [2] assume an a priori set to project on, the authors of [30] compute such a set from a “Slater point” that satisfies \({\varvec{G}}(\varvec{x}) < 0\). Specifically, Slater’s condition guarantees that the set of optimal dual solutions \({\mathbb {Z}}_\star \) is bounded (see [6, Theorem 1.265], [18]). Moreover, a Slater point can be used to construct a compact set that contains \({\mathbb {Z}}_\star \), e.g., using [30, Lemma 4.1]. While one can project dual variables on such a set in each iteration, execution of the algorithm then requires a priori knowledge of such a point. We do not assume knowledge of such a point (or any explicit bound on \({\mathbb {Z}}_\star \)) to execute Algorithm 1. Rather, our proof provides an explicit bound on the growth of the dual variable sequence for Algorithm 1, much in line with Xu’s analysis in [42]. Much to our surprise, a minor modification of using a Gauss–Seidel style dual update as opposed to the popular Jacobi style dual update obviates the need for this assumption in the literature for the proofs to work. 
Unfortunately, our Gauss–Seidel style dual update comes at an additional cost of an extra sample required per iteration of the primal-dual algorithm, making the sample complexity double of the iteration complexity. The constant factor of two, however, does not impact the order-wise complexity. We surmise that the additional sample and the Gauss–Seidel update of the dual variable helps to decouple the analysis of the primal and dual updates and points to a possible extension of our result to an asynchronous setting, often useful in engineering applications. We remark that while we do not utilize a priori knowledge of a dual optimal solution to explicitly restrict the dual variables within a set containing it, an overestimate of \(\left\| {\varvec{z}}_1 - {\varvec{z}}_\star \right\| \) is required to compute \(\eta \) to calculate the precise bound in (17). In other words, gauging the quality of the ergodic mean after K iterations still requires that knowledge. We suspect that the distance of the ergodic mean \(\bar{{\varvec{z}}}_{K+1}\) to the dual optimal set is crucial to bound the extent of expected suboptimality and constraint violation. While analysis such as that in [2] achieves it by explicitly imposing a bound on the entire trajectory of \({\varvec{z}}_k\)’s, we do so by assuming a bound on the distance of the initial point to the optimal set and then characterizing the growth over that trajectory.

Our work shares some parallels with that in [42], but has an important difference. Xu considers a collection of deterministic constraint functions, i.e., \({\varvec{g}}_\omega \) is identical for all \(\omega \in \varOmega \), and considers a modified augmented Lagrangian function of the form \({\tilde{{{\mathcal {L}}}}}(\varvec{x}, {\varvec{z}}) := F(\varvec{x}) + \frac{1}{m} \sum _{i=1}^m \varphi _\delta (\varvec{x}, z^i)\), where

for \(i = 1,\ldots ,m\) with a suitable time-varying sequence of \(\delta \)’s. His algorithm is similar to Algorithm 1 but performs a randomized coordinate update for the dual variable instead of (5). To the best of our knowledge, Xu’s analysis in [42] with such a Lagrangian function does not directly apply to our setting with stochastic constraints that is crucial for the subsequent analysis of the risk-sensitive problem \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\).

Finally, Yu et al.’s work in [44] provides an analysis of the algorithm that updates its dual variables using

where \({v}^i := {g}^i_{\omega _{k}}(\varvec{x}_{k}) + \langle \nabla {g}^i_{\omega _k}(\varvec{x}_k) ,\varvec{x}_{k+1} - \varvec{x}_k \rangle \) for \(i=1,\ldots ,m\). In contrast, our \({\varvec{z}}\)-update in (5) samples \(\omega _{k+1/2}\) and sets \(\varvec{v}_k := {\varvec{g}}_{\omega _{k+1/2}}(\varvec{x}_{k+1})\) at the already computed point \(\varvec{x}_{k+1}\). We are able to recover the \({{\mathcal {O}}}(1/\sqrt{K})\) decay rate of suboptimality and constraint violation with a proof technique much closer to the classical analysis of subgradient methods in [9, 30]. Unlike [44], we provide a clean characterization of the constant \(\eta \) in (17) that is crucial to study the growth in sample (and iteration) complexity of Algorithm 1 applied to a reformulation of \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\).

2.1 Proof of Theorem 2.1

The proof proceeds in three steps.

(a) We establish the following dissipation inequality that consecutive iterates of the algorithm satisfy:

$$\begin{aligned} \begin{aligned}&\gamma _k \mathbb {E} [{{\mathcal {L}}}(\varvec{x}_{k+1}, {\varvec{z}}) - {{\mathcal {L}}}(\varvec{x}, {\varvec{z}}_k)] + \frac{1}{2} \mathbb {E} \Vert \varvec{x}_{k+1} - \varvec{x} \Vert ^2 + \frac{1}{2} \mathbb {E} \Vert {\varvec{z}}_{k+1} - {\varvec{z}} \Vert ^2 \\&\quad \le \frac{1}{2} \mathbb {E} \Vert \varvec{x}_k - \varvec{x} \Vert ^2 + \frac{1}{2} \mathbb {E} \Vert {\varvec{z}}_k - {\varvec{z}} \Vert ^2 + \frac{1}{4}P_2\gamma _k^2 + \frac{1}{4}P_3 \gamma _k^2 \mathbb {E} \Vert {\varvec{z}}_k \Vert ^2 \end{aligned} \end{aligned}$$ (21)

for any \(\varvec{x} \in {\mathbb {X}}\) and \({\varvec{z}} \in {\mathbb {R}}_+^m\).

(b) Next, we bound \(\mathbb {E} \Vert {\varvec{z}}_k\Vert ^2\) generated by our algorithm from above using step (a) as

$$\begin{aligned} \mathbb {E} \Vert {\varvec{z}}_k\Vert ^2 \le \frac{P_1 + P_2 A_K}{1 - P_3 A_K} \end{aligned}$$ (22)

for \(k = 1, \ldots , K\), where \(A_K := \sum _{k=1}^K \gamma _k^2\).

(c) We combine the results in steps (a) and (b) to complete the proof.
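To make the bound in (22) concrete, the sketch below evaluates its right-hand side for a given step-size sequence. The function name and the numerical values of \(P_1, P_2, P_3\) are hypothetical placeholders. With the constant step size \(\gamma _k = \gamma /\sqrt{K}\), the sum \(A_K\) reduces to \(\gamma ^2\), so the bound is finite exactly when \(\gamma ^2 P_3 < 1\).

```python
import math

def dual_second_moment_bound(P1, P2, P3, step_sizes):
    """Right-hand side of (22): (P1 + P2*A_K) / (1 - P3*A_K), with A_K = sum of gamma_k^2."""
    A_K = sum(g * g for g in step_sizes)
    if P3 * A_K >= 1.0:
        raise ValueError("bound requires P3 * A_K < 1")
    return (P1 + P2 * A_K) / (1.0 - P3 * A_K)

# Hypothetical constants; constant step size gamma / sqrt(K) gives A_K = gamma^2.
K, gamma = 100, 0.1
steps = [gamma / math.sqrt(K)] * K
bound = dual_second_moment_bound(P1=1.0, P2=2.0, P3=3.0, step_sizes=steps)
```

Note that the value does not depend on K once \(\gamma \) is fixed, which is why no a priori bound on the dual iterates is needed in this regime.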

Define the filtration \(\{{{\mathcal {F}}}_k\}\), where \({{\mathcal {F}}}_k\) is the \(\sigma \)-algebra generated by the samples \(\omega _1, \ldots , \omega _{k-1/2}\) for k being multiples of 1/2, starting from unity. Then, \(\{ \varvec{x}_1, {\varvec{z}}_1, \dots , \varvec{x}_k, {\varvec{z}}_k \}\) becomes \({{\mathcal {F}}}_k\)-measurable, while \(\{ \varvec{x}_1, {\varvec{z}}_1, \dots , \varvec{x}_k, {\varvec{z}}_k, \varvec{x}_{k+1} \}\) is \({{\mathcal {F}}}_{k+1/2}\)-measurable.

\(\bullet \) Step (a)—Proof of (21): We first utilize the \(\varvec{x}\)-update in (4) to prove

for all \(\varvec{x} \in {\mathbb {X}}\). Then, we utilize the \({\varvec{z}}\)-update in (5) to prove

for all \({\varvec{z}} \in {\mathbb {R}}^m_+\). The law of total probability is then applied to the sum of (23) and (24) followed by a multiplication by \(\gamma _k\) yielding the desired result in (21).

Proof of (23): The \(\varvec{x}\)-update in (4) yields

We now simplify the inner product. The product with \(\nabla f_\omega (\varvec{x}_k)\) can be expressed as

where \(\nabla F(\varvec{x}_{k+1})\) denotes a subgradient of F at \(\varvec{x}_{k+1}\). The inequality for the first term follows from the convexity of F. Since  from [5], the expectation of the second summand on the right-hand side (RHS) of (26) satisfies


Taking expectations in (26), the above relation implies

Next, we bound the inner product with the second term on the RHS of (26). To that end, utilize the convexity of member functions in \({\varvec{g}}_\omega \) and \({\varvec{G}}\) along the above lines to infer

To tackle the inner product with the third term in the RHS of (25), we use the identity

The inequalities in (28), (29), and the equality in (30) together give

To simplify the above relation, apply Young’s inequality to obtain

Recall that  , subgradients of F are bounded and \(\nabla f_\omega \) has bounded variance. Therefore, we infer from the above inequality that


Appealing to Young’s inequality m times and a similar line of argument as above gives

Leveraging the relations in (33) and (34) in (31), we get

that upon simplification gives (23).

Proof of (24): From the \({\varvec{z}}\)-update in (5), we obtain

for all \({\varvec{z}} \ge 0\). Again, we deal with the two summands in the second factor of the inner product of (36) separately. The expectation of the inner product with the first term yields (see Footnote 2)

In the above derivation, we have utilized Young’s inequality and the boundedness of the second moment of \({\varvec{g}}_\omega \). Since \({{\mathcal {F}}}_k \subseteq {{\mathcal {F}}}_{k+1/2}\), the law of total probability can be used to condition (37) on \({{\mathcal {F}}}_k\) rather than on \({{\mathcal {F}}}_{k+1/2}\). To simplify the inner product with the second term in (36), we use the identity

Utilizing (37) and (38) in (36) gives (24). Adding (23) and (24) followed by a multiplication by \(\gamma _k\) yields

Taking the expectation and applying the law of total probability completes the proof of (21).

\(\bullet \) Step (b)—Proof of (22): Plugging \((\varvec{x}, {\varvec{z}}) = (\varvec{x}_\star , {\varvec{z}}_\star )\) in the inequality for the one-step update in (21) and summing it over \(k=1, \ldots , \kappa \) for \(\kappa \le K\) gives

for \(\kappa = 1,\ldots ,K\). The above then yields

Notice that \( 2 \mathbb {E} \Vert {\varvec{z}}_{\kappa +1} - {\varvec{z}}_\star \Vert ^2 + 2\Vert {\varvec{z}}_\star \Vert ^2 \ge \mathbb {E} \Vert {\varvec{z}}_{\kappa +1}\Vert ^2\). This inequality and \({\varvec{z}}_1 = 0\) in (41) give

We argue the bound on \(\mathbb {E} \Vert {\varvec{z}}_k\Vert ^2\) for \(k=1,\ldots ,K\) inductively. Since \({\varvec{z}}_1 = 0\), the base case trivially holds. Assume that the bound holds for \(k = 1,\ldots ,\kappa \) for \(\kappa < K\). With the notation \(A_K = \sum _{k=1}^K \gamma _k^2\), the relation in (42) implies

completing the proof of step (b).

\(\bullet \) Step (c)—Combining steps (a) and (b) to prove Theorem 2.1: For any \({\varvec{z}} \ge 0\), the inequality in (21) with \(\varvec{x} = \varvec{x}_\star \) from step (a) summed over \(k=1,\ldots , K\) gives

Using \({\varvec{z}}_1 = 0\) and an appeal to the saddle point property of \((\varvec{x}_\star , {\varvec{z}}_\star )\) yields

In deriving the above inequality, we have utilized the bound on \(\mathbb {E} \Vert {\varvec{z}}_k\Vert ^2\) from step (b) and the definition of \(P_1\) and \(A_K\). To further simplify the above inequality, notice that the saddle point property of \((\varvec{x}_\star , {\varvec{z}}_\star )\) in (9) yields

which implies \({\varvec{z}}_\star ^{\intercal }{\varvec{G}}(\varvec{x}_\star ) \ge 0\). However, the saddle point theorem guarantees that \(\varvec{x}_\star \) is an optimizer of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\), meaning that \(\varvec{x}_\star \) is feasible and \({\varvec{G}}(\varvec{x}_\star ) \le 0\), implying \({\varvec{z}}_\star ^{\intercal }{\varvec{G}}(\varvec{x}_\star ) \le 0\) as \({\varvec{z}}_\star \in {\mathbb {R}}^m_+\). Taken together, we infer

Since \({{\mathcal {L}}}(\varvec{x}, {\varvec{z}})\) is convex in \(\varvec{x}\), Jensen’s inequality and (47) imply

where we recall that \(\bar{\varvec{x}}_{K+1}\) is the \(\gamma \)-weighted average of the iterates. Utilizing (48) in (45), we get

The above relation defines a bound on \(\mathbb {E} [{{\mathcal {L}}}(\bar{\varvec{x}}_{K+1}, {\varvec{z}})]\) for every \({\varvec{z}} \ge 0\). Choosing \({\varvec{z}} = 0\) and noting \(\Vert \mathbb {1}+ {\varvec{z}}_\star \Vert ^2 \ge 0\), we get the bound on expected suboptimality in (15). To derive the bound on expected constraint violation in (16), notice that the saddle point property in (9) and (47) imply

where \(\mathbb {1}^i \in {\mathbb {R}}^m\) is a vector of all zeros except the i-th entry that is unity. Choosing \({\varvec{z}} = \mathbb {1}^i + {\varvec{z}}_\star \) in (49) and the observation in (50) then give

for each \(i=1,\ldots ,m\). This completes the proof of (16). The bounds in (17) are immediate from that in (15)–(16). This completes the proof of Theorem 2.1. \(\square \)

Remark 2.2

The bound in (16) can be sharpened to

using \({\varvec{z}}\) defined by \( {\varvec{z}}^i :={\varvec{z}}^i_\star + {{\,\mathrm{{\mathbb {I}}}\,}}_{\{{G}^i(\bar{\varvec{x}}_{K+1}) > 0 \}} \) for \(i = 1, \ldots , m\) in (49). Here, \({{\,\mathrm{{\mathbb {I}}}\,}}_{\{A\}}\) is the indicator function, evaluating to 1 if A holds and 0 otherwise. This improved bound was suggested to us by an anonymous reviewer. Notice that (52) is a much tighter bound on the expected constraint violation per constraint than (16) when m is large.

In what follows, we offer insights into two specific aspects of our proof. First, we present our conjecture on where the Gauss–Seidel nature of our dual update obtained with an extra sample helps us circumvent the need for an a priori bound on the dual variable. Notice that our dual update allows us to derive the third line of (37) that ultimately yields the term \(-{\varvec{z}}_k^{{\intercal }} {\varvec{G}}(\varvec{x}_{k+1})\) in (24). This term conveniently disappears when (24) is added to the inequality in (23) obtained from the primal update. We conjecture that this cancellation made possible by our dual update makes the theoretical analysis particularly easy. We anticipate that the classical Jacobi-style dual iteration derived with one sample shared within the primal and the dual steps will not lead to said cancellation and yield a term of the form \({\varvec{z}}_k^{{\intercal }} \left[ {\varvec{G}}(\varvec{x}_{k+1}) - {\varvec{G}}(\varvec{x}_{k}) \right] \). Bounding the growth of such a term might prove challenging without an available bound on \(\Vert {\varvec{z}}_k\Vert \) and will likely require a different argument. A detailed comparison between the proof techniques of the Jacobi and the Gauss–Seidel updates is left for future endeavors.

Second, we comment on the presence of a dimensionless constant \(\mathbb {1}\) in \(P_1\) together with \({\varvec{z}}_\star \). We use the inequality in (21) to establish (49) that is valid at all \({\varvec{z}} \ge 0\). Inspired by arguments in [42], we then utilize (49) not only at the dual iterate \({\varvec{z}}_k\), as is often the case with many prior analyses, but also at \({\varvec{z}}=0\) and \({\varvec{z}} = \mathbb {1}^i + {\varvec{z}}_\star \). Specifically, the nature of the Lagrangian function \({{\mathcal {L}}}(\varvec{x}, {\varvec{z}})\) in \({\varvec{z}}\) permits us to relate these evaluations at \({\varvec{z}}=0\) and \({\varvec{z}} = \mathbb {1}^i + {\varvec{z}}_\star \) to the extents of suboptimality and constraint violation, respectively, using

The deliberate inclusion of \(\Vert {\mathbb {1}+{\varvec{z}}_\star } \Vert ^2\) in constant \(P_1\) aids in drowning the effect of the term \(\frac{1}{2}\Vert {{\varvec{z}}}\Vert ^2\) in (49) evaluated at \({\varvec{z}} = \mathbb {1}^i + {\varvec{z}}_\star \) when deriving the bound on the extent of constraint violation, without impacting the same when evaluated at \({\varvec{z}}=0\), used in deriving the bound on the extent of suboptimality.

2.2 Optimal Step Size Selection

We exploit the bounds in Theorem 2.1 to select a step size that minimizes the iteration count to reach an \(\varepsilon \)-approximately feasible and optimal solution to \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) and solve (see Footnote 3)

The following characterization of optimal step sizes and the resulting iteration count from Proposition 2.1 will prove useful in studying the growth in iteration complexity in solving \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) with the risk-aversion parameters \(\alpha , {\varvec{\beta }}\) in the following section.

Proposition 2.1

For any \(\varepsilon > 0\), the optimal solution of (54) satisfies

where \(y = 1 + \frac{P_2}{P_1 P_3}\).

Proof

It is evident from (55) that \(\gamma _\star ^2 < P_3^{-1}\). Then, it suffices to show that \(\gamma _\star \) from (55) minimizes

over \(\gamma > 0\). To that end, notice that

The above derivative is negative at \(\gamma = 0^+\) and vanishes only at \(\gamma _\star \) over positive values of \(\gamma \), certifying it as the global minimizer. \(\square \)

Parameter \(P_1\) is generally not known a priori. However, it is often possible to bound it from above. One can calculate \(\gamma _\star \) and \(K_\star \) using (55), replacing \(P_1\) with its overestimate. Notice that

It is straightforward to verify that \(\frac{\partial K_\star }{\partial P_1} > 0\), \(\frac{d y}{d P_1} \le 0\), and \(\frac{\partial \gamma _\star }{\partial y} \le 0\), and hence, overestimating \(P_1\) results in a larger \(\gamma _\star \). Finally, \(\frac{\partial K_\star }{\partial \gamma } > 0\) for \(\gamma > \gamma _\star \), implying that \(K_\star \) calculated with an overestimate of \(P_1\) is larger than the optimal iteration count, which is the computational burden we must bear for not knowing \(P_1\). Implementing our algorithm does require knowledge of \(P_3\), which in turn depends only on the nature of the functions defining the constraints and not on a primal-dual optimizer.

2.3 Asymptotic Almost Sure Convergence with Decaying Step-Sizes

Subgradient methods are often studied with decaying nonsummable square-summable step sizes, for which they converge to an optimizer in the unconstrained setting. The result holds even for distributed variants and for mirror descent methods (see [13]). Establishing convergence of Algorithm 1 to a primal-dual optimizer of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) is much more challenging without assumptions of strong convexity in the objective. With such step-sizes, we provide the following result to guarantee the stability of our algorithm, which is reminiscent of [28, Theorem 4].

Proposition 2.2

Suppose Assumption 1 holds and \(\{\gamma _k\}_{k=1}^\infty \) is a nonsummable square-summable nonnegative sequence, i.e., \(\sum _{k=1}^\infty \gamma _k = \infty , \sum _{k=1}^\infty \gamma _k^2 < \infty \). Then, \((\varvec{x}_k, {\varvec{z}}_k)\) generated by Algorithm 1 remains bounded and \(\lim _{k\rightarrow \infty } {{\mathcal {L}}}(\varvec{x}_k, {\varvec{z}}_\star ) - {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}_k) = 0\) almost surely.
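As a simple instance of the step-size condition in Proposition 2.2, consider the harmonic choice \(\gamma _k = c/k\), where the constant \(c > 0\) is introduced here only for illustration. It is nonsummable and square-summable since

$$\begin{aligned} \sum _{k=1}^\infty \frac{c}{k} = \infty , \qquad \sum _{k=1}^\infty \frac{c^2}{k^2} = \frac{c^2 \pi ^2}{6} < \infty . \end{aligned}$$

More generally, \(\gamma _k = c/k^p\) satisfies both requirements for any \(p \in (1/2, 1]\), because \(\sum _k k^{-p}\) diverges for \(p \le 1\) while \(\sum _k k^{-2p}\) converges for \(2p > 1\).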

This ‘gap’ function \({{\mathcal {L}}}(\varvec{x}, {\varvec{z}}_\star ) - {{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}})\) closely resembles the duality gap at \((\varvec{x}, {\varvec{z}})\), but is not the same. We are unaware of any results on asymptotic almost sure convergence of primal-dual first-order algorithms to an optimizer for constrained convex programs with convex, but not necessarily strongly convex, objectives. A recent result in [43] establishes such a convergence in primal-dual dynamics in continuous time; our attempts at leveraging discretizations of the same have so far proven unsuccessful.

The proof of Proposition 2.2 takes advantage of the one-step update in (21) that makes it amenable to the well-studied almost supermartingale convergence result by Robbins and Siegmund in [35, Theorem 1].

Theorem

(Convergence of almost supermartingales) Let \(m_k, n_k, r_k, s_k\) be \({{\mathcal {F}}}_k\)-measurable finite nonnegative random variables, where \({{\mathcal {F}}}_1 \subseteq {{\mathcal {F}}}_2 \subseteq \ldots \) describes a filtration. If \(\sum _{k=1}^\infty s_k < \infty \), \(\sum _{k=1}^\infty r_k < \infty \), and

then \(\lim _{k \rightarrow \infty } m_k\) exists and is finite and \(\sum _{k = 1}^\infty n_k < \infty \) almost surely.

Proof of Proposition 2.2

Using notation from the proof of Theorem 2.1, (23) and (24) together yield

We utilize the above to derive a similar inequality, replacing the Lagrangian term evaluated at \(\varvec{x}_{k+1}\) with \({{\mathcal {L}}}(\varvec{x}_{k}, {\varvec{z}}_\star )\) by bounding the difference between them. Then, we apply the almost supermartingale convergence theorem to the result to conclude the proof. To bound said difference, the convexity of \({{\mathcal {L}}}\) in \(\varvec{x}\) and Young’s inequality together imply

where \({\nabla }_x {{\mathcal {L}}}\) denotes a subgradient of \({{\mathcal {L}}}\) w.r.t. \(\varvec{x}\). To further bound the RHS of (61), Assumption 1 allows us to deduce

for any \(\varvec{x} \in {\mathbb {X}}\). Furthermore, the \(\varvec{x}\)-update in (18) and the nonexpansive nature of the projection operator yield

From Assumption 1, we get

and along similar lines

that together in (63) yield

Combining the above with (62) in (61) gives

Adding (67) to (60) and simplifying, we obtain

The above inequality with

becomes (59), where

Each term is nonnegative, owing to (9), and \({\varvec{\gamma }}\) defines a square-summable sequence. Applying [35, Theorem 1], \(m_k\) converges to a constant and \(\sum _{k = 1}^\infty n_k < \infty \). The latter combined with the nonsummability of \({\varvec{\gamma }}\) implies the result. \(\square \)

3 Algorithm for \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) and Its Analysis

We now devote our attention to solving \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) via a primal-dual algorithm. To do so, we reformulate it as an instance of \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) and utilize Algorithm 1 to solve that reformulation with constant step-sizes under a stronger set of assumptions given below. In the sequel, we use \({{\mathcal {L}}}\) to denote the Lagrangian function defined in (6), but with F and \({\varvec{G}}\) as defined in \({{{{\mathcal {P}}}}^\mathrm{CVaR}}{}\).

Assumption 2

\({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) must satisfy the following properties:

(a) Subgradients of F and \({\varvec{G}}\) are bounded, i.e., \(\Vert \nabla f_\omega (\varvec{x}) \Vert \le C_F\) and \(\Vert \nabla g_\omega ^i(\varvec{x}) \Vert \le C_G^i\) almost surely for all \(\varvec{x} \in {\mathbb {X}}\).

(b) \({\varvec{g}}_\omega (\varvec{x})\) is bounded, i.e., \(\Vert g^i_\omega (\varvec{x}) \Vert \le D_G^i\) for all \(\varvec{x} \in {\mathbb {X}}\), almost surely.

(c) The Lagrangian function \({{\mathcal {L}}}\) admits a saddle point \((\varvec{x}_\star , {\varvec{z}}_\star ) \in {\mathbb {X}}\times {\mathbb {R}}^m_+\) (see Footnote 4).

Using the variational characterization (2) of \(\mathrm{CVaR}\), rewrite \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) as

where \({\psi ^h_\omega (\varvec{x}, u; \delta )} :={u + \frac{1}{1-\delta }[h_\omega (\varvec{x}) - u]^+}\) for any collection of convex functions \(h_\omega : {\mathbb {R}}^n \rightarrow {\mathbb {R}}\), \(\omega \in \varOmega \). Coupled with Assumption 2, we will show that we can bound \(|u^i| \le D_G^i\) for each \(i = 1, \dots , m\) (see Footnote 5), which allows us to rewrite \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) as

where \(| \cdot |\) denotes the element-wise absolute value. Denote the optimal value of \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) by \(p^\mathrm{CVaR}_\star \) in the sequel.
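The role of the auxiliary variable u in \(\psi ^h_\omega \) can be seen on a finite sample. The sketch below, with hypothetical function names, minimizes the sample average of \(\psi \) over u and recovers the tail average. Since the sample average is piecewise linear and convex in u with kinks only at the sample values, it suffices to search over those values; this brute-force search is for illustration and is not part of the algorithm.

```python
import numpy as np

def psi(h_values, u, delta):
    """psi(x, u; delta) = u + [h - u]^+ / (1 - delta), evaluated elementwise on samples of h."""
    return u + np.maximum(h_values - u, 0.0) / (1.0 - delta)

def cvar_variational(h_values, delta):
    """Minimize the sample mean of psi over u by checking every kink (each sample value)."""
    h_values = np.asarray(h_values, dtype=float)
    return min(psi(h_values, u, delta).mean() for u in h_values)

samples = np.arange(1.0, 11.0)                # the values 1, 2, ..., 10
cvar_80 = cvar_variational(samples, 0.8)      # average of the top 20%, i.e. of 9 and 10
cvar_0 = cvar_variational(samples, 0.0)       # delta = 0 recovers the plain mean
```

In Algorithm 1 applied to the reformulation, u is not found by such a search; it is simply treated as an extra primal variable and updated by subgradient steps alongside \(\varvec{x}\).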

Theorem 3.1

(Convergence result for \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\)) Suppose Assumption 2 holds. The iterates generated by Algorithm 1 on \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\) for \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) with parameters \(\alpha , {\varvec{\beta }}\) satisfy

for \(i = 1, \ldots , m\) with step sizes \(\gamma _k = \gamma / \sqrt{K}\) for \(k = 1, \dots , K\) with \(0< \gamma < {P_3^{-1/2}(\alpha , {\varvec{\beta }})}\), where \(\eta (\alpha , {\varvec{\beta }}) :=\frac{P_1 + \gamma ^2 P_2(\alpha , {\varvec{\beta }})}{4\gamma (1 - \gamma ^2 P_3(\alpha , {\varvec{\beta }}))}\) and

Proof

We prove the result in the following steps.

(a) Under Assumption 2, we revise \(P_2\) and \(P_3\) in Theorem 2.1 for \({{{{\mathcal {P}}}}^{\mathrm{E}}}\).

(b) We show that if \(f_\omega , {\varvec{g}}_\omega \) satisfy Assumption 2, then \(\psi ^f_\omega \) and \(\psi ^{g^i}_\omega , i=1,\ldots ,m\) satisfy Assumption 2, but with different bounds on the gradients and function values. Leveraging these bounds, we obtain \(P_2(\alpha , {\varvec{\beta }})\) and \(P_3(\alpha , {\varvec{\beta }})\) for \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\) using step (a).

(c) Using Assumption 2, we prove that the Lagrangian function \({{\mathcal {L}}}':{\mathbb {X}}\times {\mathbb {R}}\times {\mathbb {U}}\times {\mathbb {R}}^m_+ \rightarrow {\mathbb {R}}\) defined as

$$\begin{aligned} {{\mathcal {L}}}'(\varvec{x}, u^0, {\varvec{u}}, {\varvec{z}}) :=\mathbb {E} [ \psi ^f_\omega (\varvec{x}, u^0; \alpha )] + \sum _{i=1}^m z^i \mathbb {E} [ \psi ^{g^i}_\omega (\varvec{x}, u^i; \beta ^i)] \end{aligned}$$ (76)

admits a saddle point in \({\mathbb {X}}\times {\mathbb {R}}\times {\mathbb {U}}\times {\mathbb {R}}^m_+\), where \({\mathbb {U}}:=\left\{ {\varvec{u}} \in {\mathbb {R}}^m \mid | {\varvec{u}}| \le {\varvec{D}}_G\right\} \).

(d) We then apply Theorem 2.1 with \(P_2(\alpha , {\varvec{\beta }})\) and \(P_3(\alpha , {\varvec{\beta }})\) from step (b) on \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\) to derive the bounds in (73) and (74).

\(\bullet \) Step (a)—Revising Theorem 2.1 with Assumption 2: Recall that in the derivation of (33) in the proof of Theorem 2.1, Assumption 1 yields

Assumption 2 allows us to bound the same by \(4C_F^2\), yielding \(P_2 = 16 C_F^2 + 2\Vert {\varvec{D}}_G \Vert ^2\). Along the same lines, we get \(P_3 = 16 m \Vert {\varvec{C}}_G \Vert ^2\).
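The revised constants of step (a) are simple to evaluate once the bounds in Assumption 2 are known. The following sketch computes \(P_2 = 16 C_F^2 + 2\Vert {\varvec{D}}_G \Vert ^2\) and \(P_3 = 16 m \Vert {\varvec{C}}_G \Vert ^2\) as stated above; the function name and the input values are hypothetical, and the constants apply to \({{{{\mathcal {P}}}}^{\mathrm{E}}}\) before the \(\mathrm{CVaR}\) reformulation.

```python
import numpy as np

def revised_constants(C_F, C_G, D_G):
    """Return (P2, P3) from step (a): P2 = 16*C_F^2 + 2*||D_G||^2, P3 = 16*m*||C_G||^2."""
    C_G = np.atleast_1d(np.asarray(C_G, dtype=float))
    D_G = np.atleast_1d(np.asarray(D_G, dtype=float))
    m = C_G.size                                   # number of constraints
    P2 = 16.0 * C_F**2 + 2.0 * np.sum(D_G**2)
    P3 = 16.0 * m * np.sum(C_G**2)
    return float(P2), float(P3)

# Hypothetical bounds for a problem with m = 2 constraints.
P2, P3 = revised_constants(C_F=1.0, C_G=[1.0, 1.0], D_G=[1.0, 2.0])
```

Since \(P_3\) involves only the constraint subgradient bounds, the admissible range \(\gamma < P_3^{-1/2}\) can be fixed without any knowledge of the saddle point.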

\(\bullet \) Step (b)—Deriving properties of \(\psi _\omega \): Consider the stochastic subgradient of \(\psi ^f_\omega (\varvec{x}, t; \alpha )\) given by

where \({{\,\mathrm{{\mathbb {I}}}\,}}_{\{\cdot \}}\) is the indicator function. Recall that \(\Vert \nabla f_\omega (\varvec{x}) \Vert \le C_F\) for all \(\varvec{x} \in {\mathbb {X}}\) almost surely. Therefore, we have

Proceeding similarly, we obtain

We also have

Then, (75) follows from step (a) using (79), (80), and (81).
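For intuition on step (b), the sketch below returns one valid stochastic subgradient of \(\psi ^h_\omega (\varvec{x}, u; \delta ) = u + \frac{1}{1-\delta }[h_\omega (\varvec{x}) - u]^+\) with respect to \((\varvec{x}, u)\), using the indicator of the event \(h_\omega (\varvec{x}) > u\). The function name is hypothetical, and selecting the zero branch at the kink \(h_\omega (\varvec{x}) = u\) is one admissible choice among several.

```python
import numpy as np

def psi_subgradient(h_value, h_grad, u, delta):
    """One subgradient of psi(x, u) = u + [h(x) - u]^+ / (1 - delta) with respect to (x, u)."""
    active = 1.0 if h_value > u else 0.0            # indicator of {h(x) > u}
    grad_x = active * np.asarray(h_grad, dtype=float) / (1.0 - delta)
    grad_u = 1.0 - active / (1.0 - delta)
    return grad_x, grad_u

# Hypothetical scalar example: h(x) = x at x = 2, so h_value = 2 and h_grad = [1].
gx_active, gu_active = psi_subgradient(2.0, [1.0], u=1.0, delta=0.5)
gx_idle, gu_idle = psi_subgradient(2.0, [1.0], u=3.0, delta=0.5)
```

The factor \(1/(1-\delta )\) in both components is what drives the growth of the gradient bounds, and hence of \(P_2\) and \(P_3\), as the risk parameter approaches unity.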

\(\bullet \) Step (c)—Showing that \({{\mathcal {L}}}'\) admits a saddle point: According to [36, Theorem 10], the minimizers of \(\mathbb {E} [ \psi ^f_\omega (\varvec{x}, u^0; \alpha )]\) over \(u^0\) define a nonempty closed bounded interval (possibly a singleton). Thus, we have

for some \(u^0(\varvec{x})\in {\mathbb {R}}\) for each \(\varvec{x} \in {\mathbb {X}}\). Similarly, we infer

for some \(u^i(\varvec{x})\in {\mathbb {R}}\) for each \(\varvec{x} \in {\mathbb {X}}\). Moreover, for all \(u^i > D_G^i\), we have

and for \(u^i < -D_G^i\), we have

Thus, \(\mathbb {E} [ \psi ^{g^i}_\omega (\varvec{x}, u^i; \beta ^i)]\) is nonincreasing in \(u^i\) below \(-D_G^i\) and increasing in it beyond \(D_G^i\). Hence, at least one among the minimizers of \(\mathbb {E} [ \psi ^{g^i}_\omega (\varvec{x}, u^i; \beta ^i)]\) must lie in \([-D_G^i, D_G^i]\). In the sequel, let \(u^i(\varvec{x})\) refer to such a minimizer.

Consider a saddle point \((\varvec{x}_\star , {\varvec{z}}_\star ) \in {\mathbb {X}}\times {\mathbb {R}}^m_+\) of \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\). We argue that \((\varvec{x}_\star , u^0(\varvec{x}_\star ), {\varvec{u}}(\varvec{x}_\star ), {\varvec{z}}_\star )\) is a saddle point of \({{\mathcal {L}}}'\). From the definitions of \({{\mathcal {L}}}\), \({{\mathcal {L}}}'\), (82), (83), and the saddle point property of \((\varvec{x}_\star , {\varvec{z}}_\star )\), we obtain

for all \((\varvec{x}, u^0, {\varvec{u}}) \in {\mathbb {X}}\times {\mathbb {R}}\times {\mathbb {U}}\). Also, for all \({\varvec{z}} \in {\mathbb {R}}^m_+\), we have

\(\bullet \) Step (d)—Proof of (73) and (74): By the saddle point theorem and (86), we have \({{\mathcal {L}}}(\varvec{x}_\star , {\varvec{z}}_\star ) = p_\star ^\mathrm{CVaR}\) that also equals the optimal value of \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\). Applying Theorem 2.1 with revised \(P_2\) and \(P_3\) from step (b) to \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\) for which \(\varvec{x}_0, \dots , \varvec{x}_{K+1}\) and \(u^0_0, \dots , u^0_{K+1}\) are  -measurable, we obtain


Following a similar argument for \(i=1,\ldots ,m\), we get

completing the proof. \(\square \)

Our proof architecture generalizes to problems with other risk measures as long as that measure preserves convexity of \(f_\omega , {\varvec{g}}_\omega \), admits a variational characterization as in (2), and a subgradient for this modified objective can be easily computed and remains bounded. We restrict our attention to \(\mathrm{CVaR}\) to keep the exposition concrete.

In contrast to sample average approximation (SAA) algorithms, we neither compute nor estimate \(F(\varvec{x}) =\mathrm{CVaR}[f_\omega (\varvec{x})]\), \({\varvec{G}}(\varvec{x}) = \mathrm{CVaR}[{\varvec{g}}_\omega (\varvec{x})]\) for any given decision \(\varvec{x}\) to run the algorithm. Yet, our analysis provides guarantees on the same at \(\bar{\varvec{x}}_{K+1}\) in expectation. If one needs to compute F at any decision variable, e.g., at \(\bar{\varvec{x}}_{K+1}\), one can employ the variational characterization in (2). Such evaluation requires additional computational effort. Notice that Theorem 3.1 does not relate \(F(\bar{\varvec{x}}_{K+1})\) to \(p_\star ^\mathrm{CVaR}\) in an almost sure sense; it only relates the two in expectation according to (73), where the expectation is evaluated with respect to the stochastic sample path.

\(\mathrm{CVaR}\) of a random variable depends on the tail of its distribution. The higher the risk aversion, the further into the tail one needs to look, generally requiring more samples. Even if we do not explicitly compute the tail-dependent CVaR relevant to the objective or the constraints, it is natural to expect our sample complexity to grow with risk aversion, which the following result confirms.

Proposition 3.1

Suppose Assumption 2 holds. To compute an \(\varepsilon \)-approximately feasible and optimal solution of \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) with risk aversion parameters \(\alpha , {\varvec{\beta }}\) using Algorithm 1 on \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\), the optimized \(\gamma _\star (\alpha , {\varvec{\beta }})\) and \(K_\star (\alpha , {\varvec{\beta }})\) from Proposition 2.1 respectively decrease and increase with both \(\alpha \) and \({\varvec{\beta }}\).

Proof

We borrow the notation from Proposition 2.1 and tackle the variation with \(\alpha \) and \({\varvec{\beta }}\) separately.

\(\bullet \) Variation with \(\alpha \): \(P_2\) increases with \(\alpha \), implying \(\gamma _\star \) decreases with \(\alpha \) because \(\frac{d \gamma _\star ^2}{d y} \le 0\) and \(\frac{d y}{d P_2} \ge 0\). Furthermore, using \(\frac{\partial K_\star }{\partial \gamma _\star } < 0\) for \(\gamma < \gamma _\star \) and \(\frac{\partial K_\star }{\partial P_2} \ge 0\) in

we infer that \(K_\star \) increases with \(\alpha \).

\(\bullet \) Variation with \(\beta ^i\): Both \(P_2\) and \(P_3\) increase with \(\beta ^i\) and

Following an argument similar to that for the variation with \(\alpha \), the first term on the RHS of the above equation can be shown to be nonpositive. Next, we show that the second term is nonpositive to conclude that \(\gamma _\star \) decreases with \(\beta ^i\), where we use \(\frac{d P_3}{d {\beta ^i}} \ge 0\). Utilizing \(\frac{P_2}{P_1 P_3} = y - 1\), we infer

To characterize the variation of \(K_\star \), notice that

Again, the first term on the RHS of the above relation is nonnegative, owing to an argument similar to that used for the variation of \(K_\star \) with \(\alpha \). We show \(\frac{\partial K_\star }{\partial P_3} \ge 0\) to conclude the proof. Treating \(K_\star \) as a function of \(P_3\) and \(\gamma _\star \), we obtain

It is straightforward to verify that the first summand is nonnegative. We have already argued that \(\gamma _\star \) decreases with \(P_3\), and \(\frac{\partial K_\star }{\partial \gamma } < 0\) for \(\gamma < \gamma _\star \), implying that the second summand is nonnegative as well, completing the proof. \(\square \)

It is easy to compute the optimized iteration count \(K_\star (\alpha , {\varvec{\beta }})\) and the optimized constant step-size \(\gamma _\star (\alpha , {\varvec{\beta }})/\sqrt{K_\star (\alpha , {\varvec{\beta }})}\) from Proposition 2.1. The formula is omitted for brevity. Instead, we derive additional insight by fixing \({\varvec{\beta }}\) and driving \(\alpha \) towards unity. For such an \(\alpha , {\varvec{\beta }}\), we have

With \(\alpha \) approaching unity, notice that \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) approaches a robust optimization problem. Thus, Algorithm 1 for \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\) is aiming to solve a robust optimization problem via sampling. Not surprisingly, the sample complexity exhibits unbounded growth with such robustness requirements, since we do not assume \(\varOmega \) to be finite. Also, this growth matches that of solving the SAA problem within \(\varepsilon \)-tolerance on the unconstrained problem to minimize \({\widehat{F}}(\varvec{x}) := \frac{1}{K} \sum _{j=1}^K \psi _{\omega ^j}^f(\varvec{x}, u; \alpha )\). To see this, apply Theorem 2.1 on \({\widehat{F}}(\varvec{x})\) with optimized step size from Proposition 2.1, where \(P_2 \sim \Vert \nabla {\widehat{F}}(\varvec{x})\Vert ^2 \sim (1-\alpha )^{-2}\) and \(P_3=1\).

Parallelization can lead to stronger bounds. More precisely, run stochastic approximation in parallel on N machines, each with K samples and compute \( \langle \bar{\varvec{x}}\rangle _{K+1} := \frac{1}{N} \sum _{j=1}^N \bar{\varvec{x}}_{K+1}[j]\) using \(\bar{\varvec{x}}_{K+1} [1], \ldots , \bar{\varvec{x}}_{K+1} [N]\) obtained from the N separate runs. Then, we have

for \(i = 1,\ldots ,m\) and \(\tau > 0\). The steps combine coherence of \(\mathrm{CVaR}\), convexity and uniform boundedness of \(g^i_\omega \), Hoeffding’s inequality and Theorem 3.1. A similar bound can be derived for suboptimality. Thus, parallelized stochastic approximation produces a result whose \({{\mathcal {O}}}(1/\sqrt{K})\)-violation occurs with a probability that decays exponentially with the degree of parallelization N.

The bound in (96) reveals an interesting connection with results for chance constrained programs. To describe the link, notice that \(\mathrm{CVaR}_{\delta }[y_\omega ] \le 0\) implies \(\mathsf{Pr}\{ y_\omega \le 0\} \ge 1-\delta \) for any random variable \(y_\omega \) and \(\delta \in [0, 1)\). Therefore, (96) implies

for constants \(C, C'\). Said differently, our stochastic approximation algorithm requires \({{\mathcal {O}}}(\log (1/{\nu }))\) samples to produce a solution that satisfies an \({{\mathcal {O}}}( 1/\sqrt{\log (1/\sqrt{\nu })})\)-approximate chance-constraint with a violation probability bounded by \(\nu \). This result bears a striking similarity to that derived in [11], where the authors deterministically enforce \({{\mathcal {O}}}(\log (1/{\nu }))\) sampled constraints to produce a solution that satisfies the exact chance-constraint \(\mathsf{Pr}\left\{ g^i_\omega \left( \varvec{x} \right) \le 0 \right\} \ge 1-\beta ^i \) with a violation probability bounded by \(\nu \). This resemblance in order-wise sample complexity is intriguing, given the significant differences between the algorithms.

3.1 An Illustrative Example

We explore the use of our algorithm on the following example problem

Let \(\omega \sim \frac{1}{3}\mathsf{beta}(2, 2)\) and consider the specific choice of risk parameters \(\alpha = 0.3, \beta = 0.2\). To gain intuition into the optimal solution for this example, we numerically estimate F(x) and \(G^1(x)\) for each x and plot them in Fig. 1a. To that end, we first obtain a million samples of \(\omega \). Then, for each value of the decision variable x, we sort the objective function value \(f_\omega (x)\) and the constraint function value \(g_\omega (x)\) with these samples. We then estimate F and \(G^1\) as the average of the highest \(1-\alpha =70\%\) and \(1-\beta =80\%\) among \(f_\omega (x)\)’s and \(g_\omega (x)\)’s, respectively, at each x with those samples. The unique optimum for (98) is numerically evaluated as \(x_\star \approx -0.1929\) for which \(F(x_\star ) \approx 0.4042\) and \(G^1(x_\star ) \approx 0\).
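The sort-and-average estimation procedure described above can be sketched as follows. The function names are hypothetical, and `h` stands in for the objective or constraint function of (98), which is not reproduced here; the placeholder used below is only an assumption to make the sketch runnable.

```python
import numpy as np

def empirical_cvar(values, delta):
    """Average of the largest (1 - delta) fraction of the sampled values."""
    values = np.sort(np.asarray(values, dtype=float))
    n_tail = max(1, int(round((1.0 - delta) * values.size)))
    return values[-n_tail:].mean()

def estimate_cvar_on_grid(h, grid, delta, n_samples=100_000, seed=0):
    """Estimate CVaR_delta[h(x, omega)] at each x in grid, with omega ~ (1/3) * Beta(2, 2)."""
    rng = np.random.default_rng(seed)
    omega = rng.beta(2.0, 2.0, size=n_samples) / 3.0   # common samples reused for every x
    return np.array([empirical_cvar(h(x, omega), delta) for x in grid])

# Placeholder function (NOT the one in (98)): h(x, omega) = (x - omega)^2.
grid = np.linspace(-0.5, 0.5, 11)
F_hat = estimate_cvar_on_grid(lambda x, w: (x - w) ** 2, grid, delta=0.3)
```

Reusing one common batch of samples across all values of x keeps the estimated curves smooth in x, at the cost of a shared sampling error.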

For this example, it is easy to show that \(C_F = \frac{4}{3}\), \(C_G = 1\) and \(D_G = \frac{5}{6}\), which yield \(P_2(0.3, 0.2) = \frac{8276}{93}\) and \(P_3(0.3, 0.2) = 50\). To run Algorithm 1 on \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\) derived from (98), we can use the constant step-size \(\gamma _k = \gamma /\sqrt{K}\) with a pre-determined number of steps K for any \(0< \gamma < P_3^{-1/2}(0.3, 0.2) = \frac{1}{5\sqrt{2}}\). With any given K, Theorem 3.1 guarantees that the expected distance to \(F(x_\star )\) and the expected constraint violation evaluated at \(\bar{{x}}_{K+1}\) decay as \(1/\sqrt{K}\). For a given K and \(\gamma < \frac{1}{5\sqrt{2}}\), calculating the precise bound \(\eta (0.3, 0.2)/\sqrt{K}\) requires the knowledge of \(P_1\) or its overestimate. For this example, \(| x_\star | \le \frac{1}{2}\) and \(|u^1_\star | \le D_G = \frac{5}{6}\). Also, \( | u^0_\star |\) is bounded above by the maximum value that \(|f_\omega (x)|\) can take, which is \(\frac{8}{9}\). Since we cannot determine \(z_\star \) a priori, we assume \(| z_\star | \le 2\) (that will later be shown to be consistent with our result). Starting from \((x_0, u^0_0, u^1_0, z_0) = 0\), we then obtain \(P_1=\frac{3197}{81}\). To solve \({{{{\mathcal {P}}}}^\mathrm{CVaR}}\) (or equivalently \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\)) with a tolerance of \(\varepsilon =5 \times 10^{-3}\), we require \(\eta (0.3, 0.2)/\sqrt{K} \le 5 \times 10^{-3}\). With this tolerance and the values of \(P_1, P_2, P_3\), Proposition 2.1 yields an optimized \(\gamma _\star = 0.0808\) and \(K_\star \approx 1.35 \times 10^{9}\). We run Algorithm 1 on \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\) with the constant step-size \(\gamma _\star /\sqrt{K_\star }\) and plot F and \(G^1\) at the running ergodic mean of the iterates, i.e., at \({\bar{x}}_k := \frac{1}{k}\sum _{j=1}^k x_j\) for each k. Again, F and \(G^1\) are evaluated numerically using the \(\mathrm{CVaR}\)-estimation procedure we outlined above.
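The reported values of \(\gamma _\star \) and \(K_\star \) can be checked numerically from the expression for \(\eta \) in Theorem 3.1 and the requirement \(\eta /\sqrt{K} \le \varepsilon \). The sketch below does not use the closed form (55). Instead, it relies on a stationarity condition that we derive here by differentiating \(\eta \) in \(\gamma \), namely \(P_2 P_3 t^2 + (P_2 + 3 P_1 P_3) t - P_1 = 0\) with \(t = \gamma ^2\); this condition and the function names should be read as assumptions of the sketch.

```python
import math

def eta(gamma, P1, P2, P3):
    """eta = (P1 + gamma^2 P2) / (4 gamma (1 - gamma^2 P3)), as in Theorem 3.1."""
    return (P1 + gamma**2 * P2) / (4.0 * gamma * (1.0 - gamma**2 * P3))

def optimized_gamma(P1, P2, P3):
    """Positive root of P2*P3*t^2 + (P2 + 3*P1*P3)*t - P1 = 0 in t = gamma^2 (derived here)."""
    a, b, c = P2 * P3, P2 + 3.0 * P1 * P3, -P1
    t = (-b + math.sqrt(b * b - 4.0 * a * c)) / (2.0 * a)
    return math.sqrt(t)

P1, P2, P3 = 3197 / 81, 8276 / 93, 50.0            # values quoted in the example
eps = 5e-3
gamma_star = optimized_gamma(P1, P2, P3)
K_star = math.ceil((eta(gamma_star, P1, P2, P3) / eps) ** 2)

# Sanity check against a coarse grid over the admissible range (0, P3^{-1/2}).
grid = [i * 1e-4 for i in range(1, int(1e4 / math.sqrt(P3)))]
gamma_grid = min(grid, key=lambda g: eta(g, P1, P2, P3))
```

Under these assumptions, the computed step size and iteration count agree with the reported \(\gamma _\star = 0.0808\) and \(K_\star \approx 1.35 \times 10^{9}\) to the stated precision.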

Fig. 1 (a) Plots of numerically estimated F and \(G^1\) over \({\mathbb {X}}= [-\frac{1}{2}, \frac{1}{2}]\), and (b) convergence of the running ergodic mean and F, \(G^1\) evaluated at the mean for the example problem (98) with \(\alpha = 0.3\), \(\beta = 0.2\)

Notice that Theorem 3.1 only guarantees a bound on \(F ({\bar{x}}_{K_\star +1}) - F(x_\star )\) and \(G^1({\bar{x}}_{K_\star +1})\) in expectation. Thus, one would expect only the averages of F and \(G^1\) evaluated at \({\bar{x}}_{K_\star + 1}\) over multiple sample paths to respect the \(\varepsilon \)-bound. However, our simulation yielded \({\bar{x}}_{K_\star + 1} = -0.1926\) and \({\bar{z}}_{K_\star + 1} = 0.8976\), for which

i.e., the ergodic mean after \(K_\star \) iterations respects the \(\varepsilon \)-bound over the plotted sample path. The same behavior was observed over multiple sample paths. The ergodic mean of the dual iterate is indeed consistent with our assumption \(|z_\star | \le 2\) made in deriving \(\eta (0.3, 0.2)\). We point out that the ergodic mean in Fig. 1b moves much more smoothly than our evaluation of F and \(G^1\) at those means, especially for large k. The noise in F and \(G^1\) emanates from the finitely many samples we use to evaluate them. The errors appear much more pronounced at larger k, given the logarithmic scale of the plot.

The optimized iteration count \(K_\star (\alpha , \beta )\) from Proposition 2.1 with a modest \(\alpha =0.3, \beta =0.2\) is quite high even for this simple example. This iteration count only grows with increased risk aversion as Fig. 2 reveals. Figure 1b suggests that the \(\varepsilon =5\times 10^{-3}\) tolerance is met earlier than \(K_\star \) iterations. This is the downside of optimizing upper bounds to decide step-sizes for subgradient methods. Carefully designed termination criteria may prove useful in practical implementations. In Fig. 2, we calculate \(K_\star \) and \(\gamma _\star \) with \(P_1 = \frac{3197}{81}\) obtained using \(| z_\star | \le 2\); extensive simulations with various \((\alpha , \beta ) \in [0,0.99]^2\) suggest that this over-estimate indeed holds.

Fig. 2 Plot of the optimized number of iterations \(K_\star (\alpha , {\beta })\) on the left and the optimized step size \(\gamma _\star (\alpha , \beta )/\sqrt{K_\star (\alpha , \beta )}\) on the right to achieve a tolerance of \(\varepsilon =5 \times 10^{-3}\) for the example problem in (98)

We end the numerical example with a remark comparing Algorithm 1, which uses the Gauss–Seidel-type dual update in (5), with a variant that uses the popular Jacobi-type dual update on \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\) for (98) with \(\alpha =0.3, \beta =0.2\). This alternate dual update replaces \({\varvec{g}}_{\omega _{k +1/2}}(\varvec{x}_{k+1})\) in (5) by \({\varvec{g}}_{\omega _{k}}(\varvec{x}_{k})\). That is, the same sample \(\omega _k\) is used for both the primal and the dual update, and the primal iterate \(\varvec{x}_k\) is used instead of \(\varvec{x}_{k+1}\) to update the dual variable. We numerically compared this primal-dual algorithm with Algorithm 1 for various choices of step-sizes (consistent with the requirements of Theorem 3.1) and iteration counts for our example and its variations. In each run, we found that the iterates from both algorithms moved very similarly. The differences are too small to report. The Jacobi-type update requires half the number of samples compared to Algorithm 1. While the extra sample helps us in the theoretical analysis, our experience with this stylized example does not suggest any empirical advantage. A more thorough comparison between these algorithms, both theoretically and empirically, is left to future work.
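The difference between the two dual updates can be illustrated on a toy problem. The sketch below is not Algorithm 1 applied to \({{{{\mathcal {P}}}}^{\mathrm{E}}}'\). It assumes the standard projected primal-dual subgradient form, and applies it to a hypothetical one-dimensional problem: minimize \({\mathbb {E}}[(x-\omega )^2/2]\) subject to \({\mathbb {E}}[1 - x + \omega ] \le 0\) over \([-2, 2]\), with \(\omega \) uniform on \([-\frac{1}{2}, \frac{1}{2}]\), whose optimum is \(x_\star = 1\). The function name, step size and iteration count are illustrative choices.

```python
import random


def run_primal_dual(num_iters, step, gauss_seidel, seed=0):
    """Ergodic mean of x for a toy stochastic primal-dual subgradient method.

    Hypothetical toy problem (not problem (98)):
        minimize E[(x - w)^2 / 2]  s.t.  E[1 - x + w] <= 0,  x in [-2, 2],
    with w ~ Uniform(-0.5, 0.5).
    """
    rng = random.Random(seed)
    x, z = 0.0, 0.0
    x_sum = 0.0
    for _ in range(num_iters):
        w = rng.uniform(-0.5, 0.5)
        # Primal step: the x-subgradient of f_w(x) + z * g_w(x) is (x - w) - z.
        x_new = min(2.0, max(-2.0, x - step * ((x - w) - z)))
        if gauss_seidel:
            # Gauss-Seidel type: fresh sample, constraint evaluated at x_{k+1}.
            w_half = rng.uniform(-0.5, 0.5)
            g_val = 1.0 - x_new + w_half
        else:
            # Jacobi type: same sample, constraint evaluated at x_k.
            g_val = 1.0 - x + w
        # Dual step: projected ascent onto the nonnegative reals.
        z = max(0.0, z + step * g_val)
        x = x_new
        x_sum += x
    return x_sum / num_iters


x_bar_gs = run_primal_dual(20_000, 0.01, gauss_seidel=True)
x_bar_jacobi = run_primal_dual(20_000, 0.01, gauss_seidel=False)
print("Gauss-Seidel ergodic mean:", x_bar_gs)
print("Jacobi ergodic mean      :", x_bar_jacobi)
```

On this toy problem both variants should settle near \(x_\star = 1\). The Gauss–Seidel variant draws two samples per iteration and the Jacobi variant draws one, which mirrors the sample-count remark above.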

4 Conclusions and Future Work

In this paper, we study a stochastic approximation algorithm for \(\mathrm{CVaR}\)-sensitive optimization problems. Such problems are remarkably rich in their modeling power and encompass a plethora of stochastic programming problems with broad applications. The primal-dual algorithm we study processes samples in an online fashion, i.e., it obtains samples and updates decision variables in each iteration. Such algorithms are useful when sampling is easy and intermediate approximate solutions, albeit inexact, are useful. The convergence analysis allows us to optimize the number of iterations required to reach a solution within a prescribed tolerance on expected suboptimality and constraint violation. The sample and iteration complexities predictably grow with risk aversion. Our work affirms that a modeler must consider not only the attitude toward risk but also the computational burden of risk aversion in deciding the problem formulation.

Two possible extensions are of immediate interest to us. First, primal-dual algorithms find applications in multi-agent distributed optimization problems over a possibly time-varying communication network. We plan to extend our results to solve distributed risk-sensitive convex optimization problems over networks, borrowing techniques from [14, 29]. Second, the relationship to sample complexity for chance-constrained programs in [11] encourages us to pursue a possible exploration of stochastic approximation for such optimization problems.

Notes

requires that we sample \(\omega \) once more for the z-update.

The integrality of K is ignored for notational convenience.

Lemma 2.1 provides sufficient conditions for the existence of such a saddle point.

\(\mathrm{CVaR}\) of any random variable can only vary between the mean and the maximum value that random variable can take.

References

Ahmadi-Javid, A.: Entropic value-at-risk: a new coherent risk measure. J. Optim. Theory Appl. 155(3), 1105–1123 (2012)

Baes, M., Bürgisser, M., Nemirovski, A.: A randomized mirror-prox method for solving structured large-scale matrix saddle-point problems. SIAM J. Optim. 23(2), 934–962 (2013)

Bedi, A.S., Koppel, A., Rajawat, K.: Nonparametric compositional stochastic optimization. arXiv preprint arXiv:1902.06011 (2019)

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization, vol. 28. Princeton University Press, Princeton (2009)

Bertsekas, D.P.: Stochastic optimization problems with nondifferentiable cost functionals. J. Optim. Theory Appl. 12(2), 218–231 (1973)

Bonnans, J.F., Shapiro, A.: Perturbation Analysis of Optimization Problems. Springer, Berlin (2013)

Boob, D., Deng, Q., Lan, G.: Stochastic first-order methods for convex and nonconvex functional constrained optimization. arXiv preprint arXiv:1908.02734 (2019)

Borkar, V.S., Meyn, S.P.: The ODE method for convergence of stochastic approximation and reinforcement learning. SIAM J. Control Optim. 38(2), 447–469 (2000)

Boyd, S., Mutapcic, A.: Subgradient methods. Lecture notes of EE364b, Stanford University, Winter Quarter 2007 (2006)

Calafiore, G., Campi, M.C.: Uncertain convex programs: randomized solutions and confidence levels. Math. Program. 102(1), 25–46 (2005)

Campi, M.C., Garatti, S.: The exact feasibility of randomized solutions of uncertain convex programs. SIAM J. Optim. 19(3), 1211–1230 (2008)

Charnes, A., Cooper, W.W.: Chance-constrained programming. Manag. Sci. 6(1), 73–79 (1959)

Doan, T.T., Bose, S., Nguyen, D.H., Beck, C.L.: Convergence of the iterates in mirror descent methods. IEEE Control Syst. Lett. 3(1), 114–119 (2018)

Dominguez-Garcia, A.D., Hadjicostis, C.N.: Distributed matrix scaling and application to average consensus in directed graphs. IEEE Trans. Autom. Control 58(3), 667–681 (2013)

Ermoliev, Y.M.: Methods of stochastic programming (1976)

Hadjiyiannis, M.J., Goulart, P.J., Kuhn, D.: An efficient method to estimate the suboptimality of affine controllers. IEEE Trans. Autom. Control 56(12), 2841–2853 (2011)

Hanasusanto, G.A., Kuhn, D., Wiesemann, W.: A comment on “computational complexity of stochastic programming problems”. Math. Program. 159(1–2), 557–569 (2016)

Hiriart-Urruty, J.B., Lemaréchal, C.: Convex Analysis and Minimization Algorithms I: Fundamentals, vol. 305. Springer, Berlin (2013)

Johnson, R., Zhang, T.: Accelerating stochastic gradient descent using predictive variance reduction. In: Advances in Neural Information Processing Systems, pp. 315–323 (2013)

Kalogerias, D.S., Powell, W.B.: Recursive optimization of convex risk measures: mean-semideviation models. arXiv preprint arXiv:1804.00636 (2018)

Kiefer, J., Wolfowitz, J.: Stochastic estimation of the maximum of a regression function. Ann. Math. Stat. 23(3), 462–466 (1952)

Kisiala, J.: Conditional value-at-risk: theory and applications. arXiv preprint arXiv:1511.00140 (2015)

Koppel, A., Sadler, B.M., Ribeiro, A.: Proximity without consensus in online multiagent optimization. IEEE Trans. Signal Process. 65(12), 3062–3077 (2017)

Kushner, H., Yin, G.G.: Stochastic Approximation and Recursive Algorithms and Applications, vol. 35. Springer, Berlin (2003)

Mafusalov, A., Uryasev, S.: Buffered probability of exceedance: mathematical properties and optimization. SIAM J. Optim. 28(2), 1077–1103 (2018)

Mahdavi, M., Jin, R., Yang, T.: Trading regret for efficiency: online convex optimization with long term constraints. J. Mach. Learn. Res. 13(1), 2503–2528 (2012)

Miller, C.W., Yang, I.: Optimal control of conditional value-at-risk in continuous time. SIAM J. Control. Optim. 55(2), 856–884 (2017)

Nedić, A., Lee, S.: On stochastic subgradient mirror-descent algorithm with weighted averaging. SIAM J. Optim. 24(1), 84–107 (2014)

Nedić, A., Ozdaglar, A.: Distributed subgradient methods for multi-agent optimization. IEEE Trans. Autom. Control 54(1), 48–61 (2009)

Nedić, A., Ozdaglar, A.: Subgradient methods for saddle-point problems. J. Optim. Theory Appl. 142(1), 205–228 (2009)

Nemirovski, A., Juditsky, A., Lan, G., Shapiro, A.: Robust stochastic approximation approach to stochastic programming. SIAM J. Optim. 19(4), 1574–1609 (2009)

Nesterov, Y.: Introductory Lectures on Convex Optimization: A Basic Course. Springer (2004)

Ogryczak, W., Ruszczyński, A.: From stochastic dominance to mean-risk models: semideviations as risk measures. Eur. J. Oper. Res. 116(1), 33–50 (1999)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22, 400–407 (1951)

Robbins, H., Siegmund, D.: A convergence theorem for non negative almost supermartingales and some applications. In: Optimizing Methods in Statistics, pp. 233–257. Elsevier (1971)

Rockafellar, R.T., Uryasev, S.: Conditional value-at-risk for general loss distributions. J. Bank. Finance 26(7), 1443–1471 (2002)

Ruszczyński, A., Shapiro, A.: Optimization of convex risk functions. Math. Oper. Res. 31(3), 433–452 (2006)

Schmidt, M., Le Roux, N., Bach, F.: Minimizing finite sums with the stochastic average gradient. Math. Program. 162(1–2), 83–112 (2017)

Shapiro, A., Philpott, A.: A tutorial on stochastic programming. Manuscript. Available at www2.isye.gatech.edu/ashapiro/publications.html (2007)

Skaf, J., Boyd, S.P.: Design of affine controllers via convex optimization. IEEE Trans. Autom. Control 55(11), 2476–2487 (2010)

Sun, T., Sun, Y., Yin, W.: On Markov chain gradient descent. In: Advances in Neural Information Processing Systems, pp. 9896–9905 (2018)

Xu, Y.: Primal-dual stochastic gradient method for convex programs with many functional constraints. arXiv preprint arXiv:1802.02724v1 (2018)

Yamashita, S., Hatanaka, T., Yamauchi, J., Fujita, M.: Passivity-based generalization of primal-dual dynamics for non-strictly convex cost functions. Automatica 112, 108712 (2020)

Yu, H., Neely, M., Wei, X.: Online convex optimization with stochastic constraints. In: Advances in Neural Information Processing Systems, pp. 1428–1438 (2017)

Zhang, T., Uryasev, S., Guan, Y.: Derivatives and subderivatives of buffered probability of exceedance. Oper. Res. Lett. 47(2), 130–132 (2019)

Zinkevich, M.: Online convex programming and generalized infinitesimal gradient ascent. In: Proceedings of the 20th International Conference on Machine Learning (ICML-03), pp. 928–936 (2003)

Acknowledgements

We thank Eilyan Bitar, Rayadurgam Srikant, Tamer Başar, and Stan Uryasev for helpful discussions. This work was partially supported by the National Science Foundation under grant no. CAREER-2048065, the International Institute of Carbon-Neutral Energy Research (\(\hbox {I}^2\)CNER), and the Power System Engineering Research Center (PSERC).

Additional information

Communicated by Xiaolu Tan.

Cite this article

Madavan, A.N., Bose, S. A Stochastic Primal-Dual Method for Optimization with Conditional Value at Risk Constraints. J Optim Theory Appl 190, 428–460 (2021). https://doi.org/10.1007/s10957-021-01888-x

DOI: https://doi.org/10.1007/s10957-021-01888-x
