Abstract

In this paper, by virtue of image space analysis, we investigate general scalar robust optimization problems with uncertainties in both the objective and the constraints. Under mild assumptions, we characterize various robust solutions for different robustness concepts by introducing suitable images of the original uncertain problem, or of its counterpart problems, which provides a unified approach to tackling robustness for uncertain optimization problems. Several examples are employed to show the effectiveness of the results derived in this paper.


1 Introduction

In most real-world applications, optimization problems (OPs) contain uncertain data. The reasons for data uncertainty include, for example, measurement errors (induced by temperature, pressure or material properties), estimation errors (imprecision in evaluating system performance), implementation errors and unknown future developments. Sometimes, solutions to OPs can exhibit remarkable sensitivity to perturbations in the parameters of the problem, thus often rendering a computed solution that is highly infeasible, suboptimal or both (i.e., completely meaningless). Particularly, one cannot ignore this case from the practical point of view.

Nowadays, there are two prominent approaches to dealing with uncertain OPs: robust optimization and stochastic programming. The former is an effective methodology for handling practical OPs with uncertainties in which the uncertain parameters belong to a known set. The idea is to minimize the worst possible objective function value and to look for a solution that is immunized against the effect of data uncertainty. Unlike the former, the latter commonly seeks a feasible solution that optimizes the expected value of some objective or cost function. The uncertain data are assumed to be random and to obey a probability distribution known a priori. The two methodologies are complementary for handling data uncertainty in optimization, and each has its own advantages and drawbacks.

Robust optimization has become increasingly popular for solving scalar OPs with uncertain data in the last two decades. The first scholar to investigate robust linear OPs under ellipsoidal uncertainty sets was Soyster [1]. Above all, we refer to Ben-Tal and Nemirovski [2], who gave a seminal paper in the early stage of robustness exploration. Specifically, two detailed monographs [3, 4], which provide extensive collections of theoretical results and applications, play a significant role in the development of robust optimization. Regarding the computational attractiveness, modeling power and broad applicability of the robust optimization approach, some recent results, both theoretical and applied, were summarized in [5]. Additionally, Goerigk and Schöbel [6] revealed the close connections between algorithm engineering and robust optimization. They also pointed out some open research directions from a new perspective on algorithm engineering. By means of a nonlinear scalarization method, Klamroth et al. [7] unified many different concepts of robustness and stochastic programming. Subsequently, they developed a unified characterization of various concepts from the vector optimization, set-valued optimization and scalarization points of view in [8], where the uncertain scalar OPs contain infinite scenario sets. Recently, under various constraint qualifications and convexity assumptions, there have been many studies on characterizing robust duality and robust solutions for uncertain convex OPs. For more details, one can refer to [9,10,11,12,13] and the references therein. However, to the best of our knowledge, very few papers concentrate on deriving robust optimality conditions for general uncertain scalar OPs without any convexity or concavity assumptions. Besides, does there exist another tool that yields a unified approach to characterizing a variety of robust solutions? These questions prompt us to make further explorations and constitute the motivation of this paper.

Image space analysis (for short, ISA) was born with [14]. Its gestation was long. A trace can be found in the work of C. Carathéodory, in his 1935 book on the Calculus of Variations. Subsequently, several contributions [15,16,17] were made, but independently of each other. One of them, a very important one, is due to M. Hestenes in his book [16]. Such an analysis began to grow when separation arguments were introduced; in this sense, among others, we mention [17]. It has proven to be a powerful methodology for studying, from the geometrical point of view, different kinds of OPs, for example, constrained extremum problems, variational inequalities, nonconvex vector OPs and so on. By showing the impossibility of a parametric system, the disjunction of two suitable subsets of the image space, one being the image of the functions and the other a convex cone, can be obtained. This disjunction can also be established by showing that the two subsets lie in two disjoint level sets of a linear or nonlinear separation function, which is the main feature of the ISA approach. Thus, separation is vital in the process of implementation. By exploiting separation arguments in the image space, some theoretical aspects, such as optimality conditions, duality, penalty methods, variational principles, gap functions and error bounds, have been developed; see [14, 18,19,20,21,22,23,24,25,26,27,28]. However, the classical image problem of an uncertain OP is not equivalent to the problem itself because of the parameters. It therefore seems useless to consider the classical image problem directly, which causes the investigation of this kind of problem by virtue of ISA to fail. Furthermore, if we only consider the image of the robust counterpart problem, it may hide the structure of the uncertain problem; see [14, p. 7, lines 9–12]. Therefore, how to introduce an appropriate image problem, such that it is not only equivalent to the corresponding counterpart problem but also retains some properties of the original uncertain problem, is the key to success.

At present, by defining a suitable image, Wei et al. [29] established an equivalence relation between the uncertain OP and its image problem for strict robustness, and obtained some robust optimality conditions. Besides, based on separation, a unified characterization of several multiobjective robustness concepts [30,31,32] for uncertain multiobjective OPs was presented in [33, 34]. Some characterizations of multiobjective robustness on vectorization counterparts were also provided in [35]. Motivated greatly by the works reported in [7, 8, 14, 29, 33,34,35,36], this paper focuses on a unified approach to characterizing various robust solutions by virtue of ISA. By defining suitable images of the original problem, or of its counterpart problems, the corresponding equivalent problems for most known robustness concepts are described, which provides a unified approach to tackling robustness for uncertain OPs. Meanwhile, ISA is shown to be an effective and prominent tool for studying scalar robust OPs. It is worth noting that this paper provides a full answer to the open question (ii) posed in [29]: by virtue of ISA, it may be interesting to develop a unified approach to dealing with uncertain problems by defining new images for different robustness concepts (such as optimistic robustness, regret robustness, reliable robustness, light robustness, weighted robustness, adjustable robustness and \(\epsilon \)-constraint robustness, as well as Benson robustness and Elastic robustness recently proposed by Wei et al. [36]) to make two suitable subsets of the image space separable.

The remainder of this paper is organized as follows. In Sect. 2, we present some preliminaries and formulate the uncertain problem. By introducing suitable images of the original problem, or of its counterpart problems, Sect. 3 characterizes various robust solutions within the unified framework of ISA. Section 4 gives some interpretations of the ISA approach and proposes several open problems. A short conclusion of the paper is provided in Sect. 5.

2 Preliminaries

In this section, we recall some notation and definitions, which will be used in the sequel. Let \(\mathbb {R}^{n}\) be the n-dimensional Euclidean space, where n is a positive integer. Let \(\mathcal {X}\subseteq \mathbb {R}^{n}\) be a nonempty subset and Y be a normed linear space. The closure of a set \(M\subseteq Y\) is denoted by \(\text{ cl }\,M\). \(Y{\setminus } M\) denotes the set of all elements of Y that do not belong to M. A nonempty subset \(C\subseteq \mathbb {R}^{m}\) is said to be a cone iff \(tC\subseteq C\) for all \(t\ge 0\). A cone \(C\subseteq \mathbb {R}^{m}\) is said to be convex (resp. pointed) iff \(C+C\subseteq C\) (resp. \(C\cap (-C)=\{0_{\mathbb {R}^{m}}\}\)). The set \(\text{ cone }M:=\bigcup _{t\ge 0}tM\) is the cone generated by M.

Now, a general OP with uncertainties in both the objective and the constraints is considered. Assume that the uncertainty set U is a nonempty subset of a finite-dimensional space (convex or nonconvex, compact or noncompact, consisting of discrete scenarios or a continuous interval), where \(\xi \in U\) denotes an uncertain parameter, i.e., a real number, a real vector or a scenario. Let \(f{:}\,\mathcal {X}\times U\rightarrow \mathbb {R}\) and \(F_{i}{:}\,\mathcal {X}\times U\rightarrow \mathbb {R},\, i=1,\ldots ,m\). Then, an uncertain scalar OP is defined as a parametric OP

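$$\begin{aligned} (Q(\xi ),\,\xi \in U), \end{aligned}\tag{1}$$

where for a given \(\xi \in U\) the OP (\(Q(\xi )\)) is described by

$$\begin{aligned} (Q(\xi ))\qquad \min \;f(x,\xi )\quad \text{ s.t. }\quad F_{i}(x,\xi )\le 0,\;i=1,\ldots ,m,\quad x\in \mathcal {X}. \end{aligned}$$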

We denote by \(\hat{\xi }\in U\) the nominal value, i.e., the value of \(\xi \) that we believe is true today. (\(Q(\hat{\xi })\)) is called the nominal problem.

3 Unified Characterizations of Various Robust Solutions

As far as we know, there is no appropriate methodology to solve uncertain problems directly; thus, it is necessary to replace the uncertain OP by a deterministic version, called the robust counterpart of the uncertain problem. In this way, various robustness concepts, which describe the decision maker’s preferences, have been proposed on the basis of different robust counterparts. With the help of ISA, this section is dedicated to characterizing a variety of robust solutions for the different robustness concepts existing in the literature, which yields a unified approach to tackling uncertain OPs. In general, the equivalence of problem (1) and its image problem does not hold, due to the effect of the parameters; thus, it seems meaningless to investigate the image of problem (1) directly. Additionally, if we only consider the image of the robust counterpart problem, it may hide the structure of the uncertain problem. Therefore, it is vital to define a proper image problem that is not only equivalent to the corresponding counterpart problem, but also contains the uncertain parameter within the framework of ISA.

3.1 Strict Robustness

The first classical robustness concept is called strict robustness, which was originally proposed by Soyster [1] for investigating robust linear OPs under ellipsoidal uncertainty sets. Subsequently, this concept has been extensively researched and developed by Ben-Tal and Nemirovski [2] and Ben-Tal et al. [3]. The idea is to minimize the worst possible objective function value and to search for a solution that is good enough in the worst case. Meanwhile, the constraints should be satisfied for every parameter \(\xi \in U\). Strict robustness is a conservative concept and reveals the pessimistic attitude of a decision maker. Then, the strictly robust counterpart of problem (1) is given by

$$\begin{aligned} \min \;\sup _{\xi \in U}f(x,\xi )\quad \text{ s.t. }\quad F_{i}(x,\xi )\le 0,\;\forall \,\xi \in U,\;i=1,\ldots ,m,\quad x\in \mathcal {X}. \end{aligned}\tag{2}$$

Set \(D^{1}:=-\mathbb {R}^{m}_{+}\) and \(F(x,\xi ):=(F_{1}(x,\xi ),\ldots ,F_{m}(x,\xi ))\) for a given \(\xi \in U\). It is obvious that \(D^{1}\subseteq \mathbb {R}^{m}\) is a closed and convex cone, and \(F(x,\xi )\in D^{1}\) for feasible points of problem (\(Q(\xi )\)). The set of feasible solutions of problem (2) is denoted as \(X_{1}:=\{x\in \mathcal {X}{:}\,F(x,\xi )\in D^{1},\,\forall \xi \in U\}\). According to [3, pp. 9–10], if a feasible solution \(x\in X_{1}\) is optimal to problem (2), then it is called a strictly robust solution to problem (1). In Sects. 3.1 and 3.3, assume that \(\sup _{\xi \in U}f(x,\xi )<\infty \) and \(\sup _{\xi \in U}F_{i}(x,\xi )<\infty ,i=1,\ldots ,m\) for all \(x\in \mathcal {X}\). Some notation is introduced to describe the main features of the ISA for problem (1) under the strictly robust counterpart. Let \(\bar{x}\in \mathcal {X}\). Define the map

$$\begin{aligned} A^{1}_{\bar{x}}{:}\,\mathcal {X}\times U\rightarrow \mathbb {R}^{1+m},\quad A^{1}_{\bar{x}}(x,\xi ):=\bigg (f(\bar{x},\xi )-\sup _{\xi \in U}f(x,\xi ),\,F^{1}(x)\bigg ), \end{aligned}$$

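where \(F^{1}(x):=(\sup \nolimits _{\xi \in U}F_{1}(x,\xi ),\ldots ,\sup \nolimits _{\xi \in U}F_{m}(x,\xi ))\), and consider the following sets

$$\begin{aligned} \mathcal {K}^{1}_{\bar{x}}:=\left\{ (u,v)\in \mathbb {R}^{1+m}{:}\,(u,v)=A^{1}_{\bar{x}}(x,\xi ),\;x\in \mathcal {X},\,\xi \in U\right\} ,\quad \mathcal {H}^{1}:=\left\{ (u,v)\in \mathbb {R}^{1+m}{:}\,u>0,\,v\in D^{1}\right\} . \end{aligned}$$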

\(\mathcal {K}^{1}_{\bar{x}}\) is called the corrected image of problem (1) and \(\mathbb {R}^{1+m}\) is the image space. By means of the corrected image, an equivalent characterization of strictly robust solutions for the uncertain OP can be obtained. We should mention that this separation characterization for strict robustness was proposed by the authors of [29]. Herein, we discuss it further. In particular, separation characterizations for weighted robustness and regret robustness (also known as deviation robustness) will be deduced at the same time.

Theorem 3.1

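A feasible solution \(\bar{x}\in X_{1}\) is a strictly robust solution to problem (1) if and only if

$$\begin{aligned} \mathcal {K}^{1}_{\bar{x}}\cap \mathcal {H}^{1}=\emptyset . \end{aligned}\tag{3}$$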

Proof

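Necessity. Suppose that \(\bar{x}\in X_{1}\) is a strictly robust solution to problem (1). Then we have

$$\begin{aligned} \sup _{\xi \in U}f(\bar{x},\xi )\le \sup _{\xi \in U}f(x,\xi ),\quad \forall \,x\in X_{1}. \end{aligned}\tag{4}$$

The inequality (4) is equivalent to

$$\begin{aligned} f(\bar{x},\xi )-\sup _{\xi \in U}f(x,\xi )\le 0,\quad \forall \,x\in X_{1},\;\forall \,\xi \in U. \end{aligned}\tag{5}$$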

In addition, \(F^{1}(x)\not \in D^{1}\) holds for any \(x\in \mathcal {X}{\setminus } X_{1}\). Combining this with inequality (5), one can see that (3) is true.

Sufficiency. Assume that (3) holds. Since \(F^{1}(x)\in D^{1}\) for any \(x\in X_{1}\), we obtain inequality (5). It follows from the equivalence of (4) and (5) that \(\bar{x}\in X_{1}\) is a strictly robust solution to problem (1). \(\square \)
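Theorem 3.1 can be probed numerically on a small instance. The following Python sketch samples the corrected image on a grid and tests whether any sampled point lies in \(\mathcal {H}^{1}\). It assumes a finite scenario set, a single constraint (m = 1), the map \((f(\bar{x},\xi )-\max _{\xi }f(x,\xi ),\,\max _{\xi }F(x,\xi ))\) and \(\mathcal {H}^{1}=\{(u,v){:}\,u>0,\,v\le 0\}\), with the two-scenario data that reappear in Example 3.1. The function names, the grid and the tolerance are illustrative choices, and a grid check can only suggest, not prove, that the intersection is empty.

```python
import numpy as np

# Two-scenario data of Example 3.1 (index 0 stands for xi_1, index 1 for xi_2).
def f(x, xi):
    return x if xi == 0 else -x

def F(x, xi):
    return x - 1.0 if xi == 0 else x - 2.0

SCENARIOS = (0, 1)

def corrected_image_strict(x_bar, xs):
    """Sample K^1_{x_bar}: points (f(x_bar, xi) - max_xi f(x, xi), max_xi F(x, xi))."""
    points = []
    for x in xs:
        worst_f = max(f(x, xi) for xi in SCENARIOS)
        worst_F = max(F(x, xi) for xi in SCENARIOS)
        for xi in SCENARIOS:
            points.append((f(x_bar, xi) - worst_f, worst_F))
    return np.array(points)

def meets_H1(points, tol=1e-12):
    """True if some sampled point has u > 0 and v <= 0 (up to a small tolerance)."""
    u, v = points[:, 0], points[:, 1]
    return bool(np.any((u > tol) & (v <= tol)))

xs = np.linspace(-3.0, 3.0, 601)  # illustrative grid standing in for X = R
print(meets_H1(corrected_image_strict(0.0, xs)))  # candidate x_bar = 0
print(meets_H1(corrected_image_strict(1.0, xs)))  # candidate x_bar = 1
```

On this grid the first check reports no intersection and the second reports one, which agrees with \(\bar{x}=0\) being strictly robust and \(\bar{x}=1\) not.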

Generally, the image of a generalized system is not convex, even when the functions involved enjoy some convexity properties. To avoid this drawback, we introduce an extended image \(\mathcal {E}^{1}_{\bar{x}}\), which is a regularization of \(\mathcal {K}^{1}_{\bar{x}}\) with respect to the cone \(\text{ cl }\,\mathcal {H}^{1}\), i.e.,

As in [14], the following proposition can be derived by virtue of the extended image, and we state it without proof.

Proposition 3.1

A feasible solution \(\bar{x}\in X_{1}\) is a strictly robust solution to problem (1) if and only if

or equivalently, \(\text{ cone }\,\mathcal {E}^{1}_{\bar{x}}\cap \mathcal {H}^{1}=\emptyset \), or equivalently, \(\mathcal {E}^{1}_{\bar{x}}\cap \mathcal {H}^{1}_{0}=\emptyset \), where

Remark 3.1

(i) If we consider the classical definition of the image set of problem (1), and define the map

$$\begin{aligned} A_{\bar{x}}{:}\,\mathcal {X}\times U\rightarrow \mathbb {R}^{1+m},\quad A_{\bar{x}}(x,\xi ):=(f(\bar{x},\xi )-f(x,\xi ),\,F(x,\xi )), \end{aligned}$$

where \(F(x,\xi ):=(F_{1}(x,\xi ),\ldots ,F_{m}(x,\xi ))\), then the image of problem (1) is

$$\begin{aligned} \mathcal {K}_{\bar{x}}:=\left\{ (u,v)\in \mathbb {R}^{1+m}{:}\,(u,v)=A_{\bar{x}}(x,\xi ),\;x\in \mathcal {X},\,\xi \in U\right\} . \end{aligned}$$

However, \(\bar{x}\in X_{1}\) being a strictly robust solution to problem (1) does not ensure that \(\mathcal {K}_{\bar{x}}\cap \mathcal {H}^{1}=\emptyset \). This is because the inequality \(\sup _{\xi \in U}f(\bar{x},\xi )\le \sup _{\xi \in U}f(x,\xi )\) usually does not imply the inequality \(f(\bar{x},\xi )\le f(x,\xi )\) for all \(x\in X_{1}\) and \(\xi \in U\). Thus, the robust counterpart problem (2) and the classical image problem of (1) are not equivalent.

(ii) Another approach, taken from [14, p. 7, lines 9–12] for minimax problems, is to discuss the image of problem (2) directly. In this circumstance, the strictly robust counterpart can be viewed as a special case of constrained extremum problems. That is, define the map

$$\begin{aligned} \widetilde{A_{\bar{x}}}{:}\,\mathcal {X}\rightarrow \mathbb {R}^{1+m},\quad \widetilde{A_{\bar{x}}}(x):=\bigg (\sup _{\xi \in U}f(\bar{x},\xi )-\sup _{\xi \in U}f(x,\xi ),\,F^{1}(x)\bigg ), \end{aligned}$$

and the image of (2) is \(\widetilde{\mathcal {K}_{\bar{x}}}:=\{(u,v)\in \mathbb {R}^{1+m}{:}\,(u,v)=\widetilde{A_{\bar{x}}}(x),\;x\in \mathcal {X}\}\). This method hides the structure of problem (1), although \(\sup _{\xi \in U}f(\bar{x},\xi )\le \sup _{\xi \in U}f(x,\xi )\) is tantamount to \(f(\bar{x},\xi )\le \sup _{\xi \in U}f(x,\xi )\) for all \(x\in X_{1}\) and \(\xi \in U\).

Remark 3.2

Let \(U:=\{\xi _{1},\ldots ,\xi _{q}\}\) be a finite set of scenarios. If some scenarios are more likely to be realized than others, one can assign a weight \(\mathbf {w}_{j}>0\) to each scenario \(\xi _{j}\in U,j=1,\ldots ,q\). The weighted robust counterpart of the uncertain OP is then obtained by using \(\sup _{j\in \{1,\ldots ,q\}}\mathbf {w}_{j}f(x,\xi _{j})\) instead of the objective function \(\sup _{\xi \in U}f(x,\xi )\) in (2). If \(\mathbf {w}_{j}=1\) for every \(j=1,\ldots ,q\), the weighted robust counterpart reduces to (2). The concept of weighted robustness is thus a generalization of strict robustness and can be described by the weighted Tschebyscheff scalarization. Additionally, let \(f^{*}{:}\,U\rightarrow \mathbb {R}\) be the function given by \(f^{*}(\xi ):=\min \{f(x,\xi ){:}\,x\in X(\xi )\}\), where \(X(\xi ):=\{x\in \mathcal {X}{:}\,F_{i}(x,\xi )\le 0,\,i=1,\ldots ,m\}\). Here, we assume that an optimal solution exists for every fixed scenario \(\xi \). The optimal value of problem (\(Q(\xi )\)) for a given \(\xi \in U\) is \(f^{*}(\xi )\), and it can be interpreted as an ideal point. If we replace the objective function \(\sup _{\xi \in U}f(x,\xi )\) by \(\sup _{\xi \in U}(f(x,\xi )-f^{*}(\xi ))\) in (2), we obtain the regret robust counterpart of the uncertain OP. Regret robustness is also known as deviation robustness: one evaluates a solution by comparing it, in the worst case, with the best possible solution for the realized scenario. By making a proper modification of \(A^{1}_{\bar{x}}(x,\xi )\), i.e., letting \(A^{\mathbf {w}}_{\bar{x}}(x,\xi _{j})=(\mathbf {w}_{j}f(\bar{x},\xi _{j})-\sup _{j\in \{1,\ldots ,q\}}\mathbf {w}_{j}f(x,\xi _{j}),\,F^{1}(x))\) (or \(A^{r}_{\bar{x}}(x,\xi )=(f(\bar{x},\xi )-f^{*}(\xi )-\sup _{\xi \in U}(f(x,\xi )-f^{*}(\xi )),\,F^{1}(x))\)), the results corresponding to Theorem 3.1 and Proposition 3.1 for the weighted (regret) robust counterpart problem can be obtained.

Three examples are employed to illustrate the theoretical results obtained above for the (strictly/weighted/regret) robust counterpart. The uncertainty set contains finitely many scenarios in Examples 3.1 and 3.2, while it is a closed interval in Example 3.3. Consequently, weighted robustness, which is defined for a finite scenario set, is not applicable in the third example.

Example 3.1

For problem (1), take \(\mathcal {X}=\mathbb {R}\) and let \(U=\{\xi _{1},\xi _{2}\}\) be the parameter set. Let \(f(x,\xi _{1})=x\), \(f(x,\xi _{2})=-x\), \(F(x,\xi _{1})=x-1\) and \(F(x,\xi _{2})=x-2\). Thus, \(\max _{\xi \in U}f(x,\xi )=|x|\), \(\max _{\xi \in U}F(x,\xi )=x-1\) and \(X_{1}:=]-\infty ,1]\). For strict robustness, one can see that \(x^{*}=0\) is the unique strictly robust solution. (i) Set \(\bar{x}=0\). Since \(f(0,\xi _{1})=f(0,\xi _{2})=0\), it holds that \(A^{1}_{0}(x,\xi _{1})=A^{1}_{0}(x,\xi _{2})=(-|x|,x-1)\). Letting \(u=-|x|\) and \(v=x-1\), we derive \(v=-u-1,u\le 0\) (for \(x\ge 0\)) and \(v=u-1,u<0\) (for \(x<0\)). Then, the corrected image set is \(\mathcal {K}^{1}_{0}=\{(u,v)\in \mathbb {R}^{2}{:}\,v=-u-1,\;u\le 0\}\cup \{(u,v)\in \mathbb {R}^{2}{:}\,v=u-1,\;u<0\}\). (ii) Take \(\bar{x}=1\). For \(\xi =\xi _{1}\) and \(\xi =\xi _{2}\), we have \(A^{1}_{1}(x,\xi _{1})=(1-|x|,x-1)\) and \(A^{1}_{1}(x,\xi _{2})=(-1-|x|,x-1)\), respectively. Discussing the two cases, we obtain the corrected image set

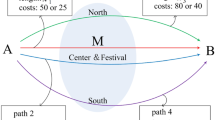

See Fig. 1; the red lines denote \(\mathcal {K}^{1}_{0}\) and the blue ones represent \(\mathcal {K}^{1}_{1}\).

Fig. 1 The corrected images for strict robustness in Example 3.1

For weighted robustness, let \(\mathbf {w}_{1}=1\) and \(\mathbf {w}_{2}=2\). One can see that

It is not difficult to see that \(x^{*}=0\) is the unique weighted robust solution. (i) Taking \(\bar{x}=0\), we obtain \(\mathbf {w}_{1}f(0,\xi _{1})=\mathbf {w}_{2}f(0,\xi _{2})=0\) and \(A^{\mathbf {w}}_{0}(x,\xi _{1})=A^{\mathbf {w}}_{0}(x,\xi _{2})=(-\max \{x,-2x\},x-1)\). Setting \(u=-\max \{x,-2x\}\) and \(v=x-1\), the relation between u and v can be formulated as \(v=-u-1,u\le 0\), \(v=\frac{u}{2}-1,u<0\). The corrected image set is

(ii) Set \(\bar{x}=1\). It is obvious that \(\mathbf {w}_{1}f(1,\xi _{1})=1\), \(A^{\mathbf {w}}_{1}(x,\xi _{1})=(1-\max \{x,-2x\},x-1)\), and \(\mathbf {w}_{2}f(1,\xi _{2})=-2\), \(A^{\mathbf {w}}_{1}(x,\xi _{2})=(-2-\max \{x,-2x\},x-1)\). By analyzing the two cases, the corresponding corrected image set is given by

In Fig. 2, the red lines and blue ones describe \(\mathcal {K}^{\mathbf {w}}_{0}\) and \(\mathcal {K}^{\mathbf {w}}_{1}\), respectively.

Fig. 2 \(\mathcal {K}^{\mathbf {w}}_{0}\) and \(\mathcal {K}^{\mathbf {w}}_{1}\) are corrected images for weighted robustness in Example 3.1

For regret robustness, let \(\mathcal {X}=[-\,2,1]\subset \mathbb {R}\); then the feasible set of problem (2) is \(X_{1}:=[-\,2,1]\). It follows from Remark 3.2 that \(f^{*}(\xi _{1})=\min _{x\in X(\xi _1)}f(x,\xi _{1})=-2\) and \(f^{*}(\xi _{2})=\min _{x\in X(\xi _2)}f(x,\xi _{2})=-1\). One can see that

and \(x^{*}=-\frac{1}{2}\) is the unique regret robust solution. (i) Set \(\bar{x}=-\frac{1}{2}\). For \(\xi =\xi _{1}\) or \(\xi =\xi _{2}\), it holds \(A^{r}_{-\frac{1}{2}}(x,\xi )=\Big (\frac{3}{2}-\max \{x+2,-x+1\},x-1\Big )\). Let \(u=\frac{3}{2}-\max \{x+2,-x+1\}\) and \(v=x-1\), the corrected image set can be given by

(ii) Take \(\bar{x}=0\). If \(\xi =\xi _{1}\) (or \(\xi _{2}\)), then \(f(0,\xi _{1})-f^{*}(\xi _{1})-\max \{x+2,-x+1\}=2-\max \{x+2,-x+1\}\) and \(f(0,\xi _{2})-f^{*}(\xi _{2})-\max \{x+2,-x+1\}=1-\max \{x+2,-x+1\}\). The corresponding corrected image set is

See Fig. 3; the red lines and blue ones denote \(\mathcal {K}^{r}_{-\frac{1}{2}}\) and \(\mathcal {K}^{r}_{0}\), respectively.

Fig. 3 For regret robustness, the corrected images \(\mathcal {K}^{r}_{-\frac{1}{2}}\) and \(\mathcal {K}^{r}_{0}\) in Example 3.1
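The weighted and regret robust solutions reported in Example 3.1 can be cross-checked by brute force. The sketch below, in Python, evaluates the weighted worst case \(\max _{j}\mathbf {w}_{j}f(x,\xi _{j})\) with \(\mathbf {w}=(1,2)\) and the worst-case regret \(\max _{\xi }(f(x,\xi )-f^{*}(\xi ))\) on a grid. The grid, the truncation of \(X_{1}=]-\infty ,1]\) to \([-2,1]\) in the weighted case and all function names are assumptions made for illustration only.

```python
import numpy as np

# Objective data of Example 3.1 (index 0 stands for xi_1, index 1 for xi_2).
def f(x, xi):
    return x if xi == 0 else -x

def weighted_worst_case(x, weights=(1.0, 2.0)):
    """max_j w_j * f(x, xi_j), the weighted robust objective."""
    return max(w * f(x, xi) for xi, w in enumerate(weights))

def worst_case_regret(x, f_star):
    """max_xi (f(x, xi) - f_star[xi]), the regret robust objective."""
    return max(f(x, xi) - f_star[xi] for xi in (0, 1))

# Weighted robustness: grid truncated to [-2, 1]; the objective grows as x decreases.
xs_w = np.linspace(-2.0, 1.0, 301)
x_weighted = xs_w[int(np.argmin([weighted_worst_case(x) for x in xs_w]))]

# Regret robustness: X = [-2, 1]; both scenario-wise feasible sets equal X here,
# so the ideal values f*(xi) are plain minima over the grid.
xs_r = np.linspace(-2.0, 1.0, 301)
f_star = [min(f(x, xi) for x in xs_r) for xi in (0, 1)]
regrets = [worst_case_regret(x, f_star) for x in xs_r]
x_regret = xs_r[int(np.argmin(regrets))]

print(x_weighted, x_regret, f_star)
```

The grid minimizers agree, up to rounding, with \(x^{*}=0\) for weighted robustness and \(x^{*}=-\frac{1}{2}\) for regret robustness, and the computed ideal values match \(f^{*}(\xi _{1})=-2\) and \(f^{*}(\xi _{2})=-1\).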

Example 3.2

For problem (1), let \(\mathcal {X}=[-\,1,1]\), \(U=\{\xi _{1},\xi _{2}\}\), \(f(x,\xi _{1})=\sqrt{1-x^{2}}\), \(f(x,\xi _{2})=1-\frac{1}{2}x\), \(F(x,\xi _{1})=x\) and \(F(x,\xi _{2})=x-1\). It is not difficult to see that

and \(\max _{\xi \in U}F(x,\xi )=x\), \(X_{1}=[-\,1,0]\). For strict robustness, a simple calculation shows that \(x^{*}=0\) is the unique strictly robust solution. (i) Take \(\bar{x}=0\). Analogously to the process in Example 3.1, the corrected image can be obtained, i.e.,

(ii) Let \(\bar{x}=-1\). By discussing two cases \(\xi =\xi _{1}\) or \(\xi =\xi _{2}\), we can achieve the corrected image set

See Fig. 4. Since \(\mathcal {H}^{1}=\{(u,v)\in \mathbb {R}^{2}{:}\,u>0,\,v\le 0\}\), we have \(\mathcal {K}^{1}_{0}\cap \mathcal {H}^{1}=\emptyset \).

For weighted robustness, let \(f(x,\xi _{2})=1-x,\mathbf {w}_{1}=2\) and \(\mathbf {w}_{2}=1\). Then, we get

Due to \(X_{1}=[-\,1,0]\), one can easily reach that \(x^{*}=-\frac{3}{5}\) is the unique weighted robust solution. (i) Taking \(\bar{x}=-\frac{3}{5}\), it follows from the process in Example 3.1 that the corrected image set is

(ii) Set \(\bar{x}=0\). We consider two cases \(\xi =\xi _{1}\) or \(\xi =\xi _{2}\) and derive the corrected image set

In Fig. 5, the red lines represent \(\mathcal {K}^{\mathbf {w}}_{-\frac{3}{5}}\), and \(\mathcal {K}^{\mathbf {w}}_{0}\) is presented by blue ones. It is not difficult to see that \(\mathcal {K}^{\mathbf {w}}_{-\frac{3}{5}}\cap \mathcal {H}^{1}=\emptyset \).

For regret robustness, let \(\mathcal {X}=[-\,1,0]\), and let the other data be the same as for weighted robustness. We obtain from Remark 3.2 that \(f^{*}(\xi _{1})=\min _{x\in X(\xi _1)}f(x,\xi _{1})=0\), \(f^{*}(\xi _{2})=\min _{x\in X(\xi _2)}f(x,\xi _{2})=1\) and

It is obvious that \(x^{*}=-\frac{\sqrt{2}}{2}\) is the unique regret robust solution. (i) Let \(\bar{x}=-\frac{\sqrt{2}}{2}\). Similar to the process in Example 3.1, the corrected image set can be given by

(ii) Set \(\bar{x}=0\). The corresponding corrected image set is

From Fig. 6, it can be seen that \(\mathcal {K}^{r}_{-\frac{\sqrt{2}}{2}}\cap \mathcal {H}^{1}=\emptyset \).

Example 3.3

For problem (1), let \(\mathcal {X}=\mathbb {R}\), \(U=[1,2]\), \(f(x,\xi )=x+\xi \) and \(F(x,\xi )=x^{2}-\xi \). Then, we obtain \(\max _{\xi \in U}f(x,\xi )=x+2\), \(\max _{\xi \in U}F(x,\xi )=x^{2}-1\) and \(X_{1}=[-\,1,1]\). One can see that \(x^{*}=-1\) is the unique strictly robust solution. Taking \(\bar{x}=-1\) (resp. \(\bar{x}=1\)), it follows from [29, Example 2.6] that \(\mathcal {K}^{1}_{-1}=\{(u,v)\in \mathbb {R}^{2}{:}\,v=(u+3-\xi )^{2}-1,\xi \in U\}\) and \(\mathcal {K}^{1}_{1}=\{(u,v)\in \mathbb {R}^{2}{:}\,v=(u+1-\xi )^{2}-1,\xi \in U\}\), respectively. Combined with Fig. 7, one can see that \(\mathcal {K}^{1}_{-1}\cap \mathcal {H}^{1}=\emptyset \).

For regret robustness, since \(f^{*}(\xi )=\min _{x\in X(\xi )}f(x,\xi )=-\sqrt{\xi }+\xi \), the objective function is \(\max _{\xi \in U}(f(x,\xi )-f^{*}(\xi ))=\max _{\xi \in U}(x+\sqrt{\xi })=x+\sqrt{2}\), and we obtain that \(x^{*}=-1\) is the unique regret robust solution. Setting \(\bar{x}=-1\) (resp. \(\bar{x}=0\)), the corrected image sets are given by

$$\begin{aligned} \mathcal {K}^{r}_{-1}=\left\{ (u,v)\in \mathbb {R}^{2}{:}\,v=(u+1+\sqrt{2}-\sqrt{\xi })^{2}-1,\;\xi \in U\right\} \end{aligned}$$

and \(\mathcal {K}^{r}_{0}=\{(u,v)\in \mathbb {R}^{2}{:}\,v=(u+\sqrt{2}-\sqrt{\xi })^{2}-1,\xi \in U\}\), respectively. Analogous to Fig. 7, it holds \(\mathcal {K}^{r}_{-1}\cap \mathcal {H}^{1}=\emptyset \).
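For the interval uncertainty set of Example 3.3, the suprema over \(U=[1,2]\) can be approximated by discretizing the interval. The Python sketch below does so and recovers the strictly robust and regret robust solutions on a grid; the worst-case regret at x is \(x+\max _{\xi \in U}\sqrt{\xi }\), which for \(U=[1,2]\) evaluates to \(x+\sqrt{2}\). The discretization sizes, the feasibility tolerance and the variable names are illustrative assumptions, and the closed form of \(f^{*}\) is taken from the text.

```python
import numpy as np

xis = np.linspace(1.0, 2.0, 101)   # discretization of U = [1, 2]
xs = np.linspace(-2.0, 2.0, 401)   # illustrative grid standing in for X = R

def f(x, xi):
    return x + xi

def F(x, xi):
    return x**2 - xi

def f_star(xi):
    # Closed form from the text: minimum of x + xi subject to x**2 <= xi.
    return xi - np.sqrt(xi)

tol = 1e-9
# Grid approximation of X_1: the constraint must hold for every sampled scenario.
feasible = np.array([x for x in xs if np.max(F(x, xis)) <= tol])

worst_f = np.array([np.max(f(x, xis)) for x in feasible])
x_strict = feasible[int(np.argmin(worst_f))]

worst_regret = np.array([np.max(f(x, xis) - f_star(xis)) for x in feasible])
x_regret = feasible[int(np.argmin(worst_regret))]

print(feasible.min(), feasible.max(), x_strict, x_regret, worst_regret.min())
```

Under these assumptions the sampled feasible set spans \([-1,1]\), both minimizers sit at \(x=-1\), and the minimal worst-case regret is \(\sqrt{2}-1\).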

3.2 Optimistic Robustness

While strict robustness concentrates on the worst case and can be seen as a pessimistic model, optimistic robustness aims at minimizing the best possible objective function value over \(X_{2}\), and reflects an optimistic attitude of a decision maker, where \(X_{2}:=\{x\in \mathcal {X}{:}\,F(x,\xi )\in D^{1}\;\text{ for } \text{ some }\;\xi \in U\}\) stands for the set of optimistic feasible solutions. The optimistic counterpart was proposed by Beck and Ben-Tal [12] in the context of studying robust duality theory. Then, we formulate the optimistic counterpart of problem (1) as follows

$$\begin{aligned} \min \;\inf _{\xi \in U}f(x,\xi )\quad \text{ s.t. }\quad x\in X_{2}. \end{aligned}\tag{7}$$

If a feasible solution \(x\in X_{2}\) is optimal to problem (7), then it is an optimistic robust solution of problem (1). To introduce a suitable corrected image of problem (1) under the optimistic robust counterpart, we assume that the values of \(\inf _{\xi \in U}f(x,\xi )\) and \(\inf _{\xi \in U}F_{i}(x,\xi ),\,i=1,\ldots ,m\) are finite for all \(x\in \mathcal {X}\), and that \(\inf _{\xi \in U}F_{i}(x,\xi ),\,i=1,\ldots ,m\) are attained for all \(x\in \mathcal {X}\). Let \(\bar{x}\in \mathcal {X}\) and \(F^{2}(x):=\Big (\min \nolimits _{\xi \in U}F_{1}(x,\xi ),\ldots ,\min \nolimits _{\xi \in U}F_{m}(x,\xi )\Big )\). Define the map

$$\begin{aligned} A^{2}_{\bar{x}}{:}\,\mathcal {X}\times U\rightarrow \mathbb {R}^{1+m},\quad A^{2}_{\bar{x}}(x,\xi ):=\bigg (\inf _{\xi \in U}f(\bar{x},\xi )-f(x,\xi ),\,F^{2}(x)\bigg ), \end{aligned}$$

and consider the following set

By virtue of the corrected image set \(\mathcal {K}^{2}_{\bar{x}}\), a necessary and sufficient condition of optimistic robust solutions for the uncertain OP can be derived.

Theorem 3.2

A feasible solution \(\bar{x}\in X_{2}\) is an optimistic robust solution to problem (1) if and only if

$$\begin{aligned} \mathcal {K}^{2}_{\bar{x}}\cap \mathcal {H}^{2}=\emptyset . \end{aligned}$$

Proof

Necessity. Suppose that \(\bar{x}\in X_{2}\) is an optimistic robust solution. Then it holds

Since \(\inf _{\xi \in U}f(x,\xi )\le f(x,\xi )\) for all \(x\in \mathcal {X}\) and \(\xi \in U\), together with inequality (8), it is obvious that

When \(x\in \mathcal {X}{\backslash }{X_{2}}\), there is at least one \(j\in \{1,\ldots ,m\}\) such that \(F_{j}(x,\xi )>0\) for all \(\xi \in U\). Then, we have \(\min _{\xi \in U}F_{j}(x,\xi )>0\) for \(x\in \mathcal {X}{\backslash }{X_{2}}\), which implies that \(F^{2}(x)\not \in D^{1}\). From above analysis, one can obtain that \(\mathcal {K}^{2}_{\bar{x}}\cap \mathcal {H}^{2}=\emptyset \).

Sufficiency. Since \(F^{2}(x)\in D^{1}\) for \(x\in X_{2}\), it follows from \(\mathcal {K}^{2}_{\bar{x}}\cap \mathcal {H}^{2}=\emptyset \) that inequality (9) holds. Based on the equivalence of (8) and (9), it is not difficult to see that \(\bar{x}\in X_{2}\) is an optimistic robust solution. \(\square \)

Remark 3.3

Another formulation of the corrected image is defined by

It is not difficult to see that a solution \(\bar{x}\in X_{2}\) is an optimistic robust solution to problem (1) if and only if \(\widetilde{\mathcal {K}^{2}_{\bar{x}}}\cap \mathcal {H}^{2}=\emptyset \). For all \(x\in \mathcal {X}\) and all \(\xi \in U\), the inequality \(f(\bar{x},\xi )-\inf \nolimits _{\xi \in U}f(x,\xi )\le 0\) implies \(\inf \nolimits _{\xi \in U}f(\bar{x},\xi )-f(x,\xi )\le 0\), but the converse is, in general, not true. Hence, the conditions of this conclusion seem to be stronger than those of Theorem 3.2. Moreover, the conclusion corresponding to Proposition 3.1 for the optimistic robust counterpart can be reached by introducing an extended image of \(\mathcal {K}^{2}_{\bar{x}}\). Three examples are given in the following to illustrate Theorem 3.2.

Example 3.4

For problem (1), take \(\mathcal {X}=\mathbb {R}\) and let \(U=\{\xi _{1},\xi _{2}\}\) be the parameter set. Consider two cases as follows:

Case 1 The OP with uncertainties only in the objective is introduced. Let \(f(x,\xi _{1})=x\), \(f(x,\xi _{2})=-x\) and \(F(x)=x^{2}-x-2\). It holds \(\min _{\xi \in U}f(x,\xi )=-|x|\) and \(X_{2}:=[-\,1,2]\). It is not difficult to see that \(x^{*}=2\) is the unique optimistic robust solution. Set \(\bar{x}=2\). Since \(\min _{\xi \in U}f(2,\xi )=-2\), then \(\min _{\xi \in U}f(2,\xi )-f(x,\xi _{1})=-2-x\) and \(\min _{\xi \in U}f(2,\xi )-f(x,\xi _{2})=-2+x\). Setting \(u=-2-x\) (or \(u=-2+x\)), \(v=x^{2}-x-2\), one can reach that \(v=u^{2}+5u+4\) (or \(v=u^{2}+3u\)). The corrected image set is \(\mathcal {K}^{2}_{2}=\{(u,v)\in \mathbb {R}^{2}{:}\,v=u^{2}+5u+4\}\cup \{(u,v)\in \mathbb {R}^{2}{:}\,v=u^{2}+3u\}\). It follows from Fig. 8 that \(\mathcal {K}^{2}_{2}\cap \mathcal {H}^{2}=\emptyset \).

Case 2 The OP with uncertainties both in the objective and constraints is discussed. Let \(f(x,\xi _{1})=x\), \(f(x,\xi _{2})=-x\), \(F(x,\xi _{1})=x^{2}+x\) and \(F(x,\xi _{2})=x^{2}-1\). Thus, \(\min _{\xi \in U}f(x,\xi )=-|x|\),

and \(X_{2}:=[-\,1,1]\). It is obvious that \(x^{*}=-1\) (resp. 1) is an optimistic robust solution. Taking \(\bar{x}=-1\), analogous to Case 1, the corrected image set can be calculated by

We see from Fig. 8 that \(\mathcal {K}^{2}_{-1}\cap \mathcal {H}^{2}=\emptyset \).
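The separation condition for optimistic robustness can be checked on a grid for Case 1 of Example 3.4. The Python sketch below assumes that the corrected image consists of the points \((\min _{\xi }f(\bar{x},\xi )-f(x,\xi ),\,F(x))\), as suggested by the computations in Case 1, and that \(\mathcal {H}^{2}\) has the same form as \(\mathcal {H}^{1}\), namely \(\{(u,v){:}\,u>0,\,v\le 0\}\); both are assumptions of this sketch, as are the grid, the tolerance and the function names.

```python
import numpy as np

# Case 1 of Example 3.4: uncertainty only in the objective.
def f(x, xi):
    return x if xi == 0 else -x

def F(x):
    return x**2 - x - 2.0

SCENARIOS = (0, 1)

def corrected_image_optimistic(x_bar, xs):
    """Sample points (min_xi f(x_bar, xi) - f(x, xi), F(x)) over the grid and scenarios."""
    best_at_x_bar = min(f(x_bar, xi) for xi in SCENARIOS)
    return np.array([(best_at_x_bar - f(x, xi), F(x))
                     for x in xs for xi in SCENARIOS])

def meets_H2(points, tol=1e-12):
    """True if some sampled point has u > 0 and v <= 0 (assumed form of H^2)."""
    u, v = points[:, 0], points[:, 1]
    return bool(np.any((u > tol) & (v <= tol)))

xs = np.linspace(-4.0, 4.0, 801)  # illustrative grid standing in for X = R
print(meets_H2(corrected_image_optimistic(2.0, xs)))  # candidate x_bar = 2
print(meets_H2(corrected_image_optimistic(0.0, xs)))  # candidate x_bar = 0
```

On this grid no sampled point falls in the assumed \(\mathcal {H}^{2}\) for \(\bar{x}=2\), whereas the feasible but non-optimal candidate \(\bar{x}=0\) produces points in it, for instance at \(x=1\) with \(\xi =\xi _{2}\).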

Example 3.5

For the data of Example 3.3, one can conclude that \(\min _{\xi \in U}f(x,\xi )=x+1\), \(\min _{\xi \in U}F(x,\xi )=x^{2}-2\) and \(X_{2}:=[-\,\sqrt{2},\sqrt{2}]\). By simple calculation, it can be seen that \(x^{*}=-\sqrt{2}\) is the unique optimistic robust solution. Setting \(\bar{x}=-\sqrt{2}\) (resp. \(\bar{x}=0\)), we obtain the corrected image sets

$$\begin{aligned} \mathcal {K}^{2}_{-\sqrt{2}}=\left\{ (u,v)\in \mathbb {R}^{2}{:}\,v=(u+\sqrt{2}-1+\xi )^{2}-2,\;\xi \in U\right\} \end{aligned}$$

and \(\mathcal {K}^{2}_{0}=\{(u,v)\in \mathbb {R}^{2}{:}\,v=(u-1+\xi )^{2}-2,\,\xi \in U\}\), respectively. From Fig. 9, one can reach that \(\mathcal {K}^{2}_{-\sqrt{2}}\cap \mathcal {H}^{2}=\emptyset \).

3.3 Reliable Robustness

For some practical problems, it may be hard to find a point \(x\in \mathcal {X}\) that satisfies constraints \(F_{i}(x,\xi )\le 0\), \(i=1,\ldots ,m\) for every realization of the uncertain parameter \(\xi \in U\), i.e., \(X_{1}=\emptyset \). To obtain an OP with feasible solutions, a less conservative approach called the concept of reliable robustness [11] is described. Here, the constraints \(F_{i}(x,\xi )\le 0\) are replaced by \(F_{i}(x,\xi )\le \delta _{i}\) for all \(\xi \in U\), where \(\delta _{i}\in \mathbb {R}\), \(i=1,\ldots ,m\) denote some infeasibility tolerances. But the constraints \(F_{i}(x,\hat{\xi })\le 0,i=1,\ldots ,m\) should be fulfilled for the nominal value \(\hat{\xi }\). Now, the reliable counterpart of problem (1) is given by

Let \(X_{3}:=\{x\in \mathcal {X}{:}\,F_{i}(x,\xi )\le \delta _{i},\;F_{i}(x,\hat{\xi })\le 0,\;\forall \,\xi \in U,\,i=1,\ldots ,m\}\) be the set of reliable feasible solutions. It is obvious that if a feasible solution \(x\in X_{3}\) is optimal to problem (10), then x is a reliable robust solution of problem (1). To achieve an equivalent characterization of reliable robust solutions, we need to introduce a proper corrected image of problem (1) under the reliable counterpart. Let \(\bar{x}\in \mathcal {X}\) and \(\delta =(\delta _{1},\ldots ,\delta _{m})\in \mathbb {R}^{m}\). Define the map

where \(F^{3}(x):=(F_{1}(x,\hat{\xi }),\ldots ,F_{m}(x,\hat{\xi }),\sup \nolimits _{\xi \in U}F_{1}(x,\xi )-\delta _{1},\ldots ,\sup \nolimits _{\xi \in U}F_{m}(x,\xi )-\delta _{m})\), and consider the following sets

where \(D^{3}:=-\mathbb {R}^{2m}_{+}\).

Theorem 3.3

A feasible solution \(\bar{x}\in X_{3}\) is a reliable robust solution to problem (1) if and only if

Proof

Analogous to the proof of Theorem 3.1, this conclusion can be verified easily, so we omit the proof here. \(\square \)

Remark 3.4

If \(\delta _{i}=0,i=1,\ldots ,m\), then reliable robustness reduces to strict robustness. Reliable robustness can be described by the Tschebyscheff scalarization with the origin as reference point, based on a relaxed feasible set. Moreover, by introducing an extended image of \(\mathcal {K}^{3}_{\bar{x}}\), the corresponding conclusion of Proposition 3.1 for reliable robustness can be reached.

Example 3.6

Consider problem (1) with \(\mathcal {X}=\mathbb {R}\) and the parameter set \(U=\{\xi _{1},\xi _{2}\}\). Let \(f(x,\xi _{1})=x\), \(f(x,\xi _{2})=-x\), \(F(x,\xi _{1})=x^{2}+x\) and \(F(x,\xi _{2})=x^{2}-\frac{3}{2}x+\frac{1}{2}\). Then, we obtain \(\max _{\xi \in U}f(x,\xi )=|x|\) and

In this case, \(X_{1}=\emptyset \). Setting \(\delta =\frac{3}{2}\) and \(\hat{\xi }=\xi _{1}\), the reliable feasible set is \(X_{3}=[-\,\frac{1}{2},0]\). It is obvious that \(x^{*}=0\) is the unique reliable robust solution. (i) Let \(\bar{x}=0\). Analogous to Example 3.1, one obtains \(\mathcal {K}^{3}_{0}=\{(u,v)\in \mathbb {R}^{1+2}{:}\,u=-|x|,\; v=(x^{2}+x,\max _{\xi \in U}F(x,\xi )-\frac{3}{2}),\; x\in \mathcal {X}\}\) and \(\mathcal {H}^{3}=\mathbb {R}_{++}\times (-\mathbb {R}^{2}_{+})\). Since \(-\,|x|\le 0\) for all \(x\in \mathcal {X}\), it holds \(\mathcal {K}^{3}_{0}\cap \mathcal {H}^{3}=\emptyset \). (ii) Taking \(\bar{x}=-\frac{1}{2}\), by analyzing the two cases \(\xi =\xi _{1}\) and \(\xi =\xi _{2}\), we obtain, as in part (i), that the corrected image is

If we take \(\tilde{x}=-\frac{1}{3}\), then \(\frac{1}{2}-|\tilde{x}|=\frac{1}{6}>0\) and \((\tilde{x}^{2}+\tilde{x},\max _{\xi \in U}F(\tilde{x},\xi )-\frac{3}{2})=(-\frac{2}{9},-\frac{7}{18})\in -\mathbb {R}^{2}_{+}\), i.e., there exists \(\tilde{x}=-\frac{1}{3}\) such that \(\mathcal {K}^{3}_{-\frac{1}{2}}\cap \mathcal {H}^{3}\ne \emptyset \). Hence, \(\bar{x}=-\frac{1}{2}\) is not a reliable robust solution.
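The numbers in Example 3.6 can be verified with exact rational arithmetic. The sketch below is an illustration under stated assumptions: it evaluates the data of the example on a grid (the window \([-3,3]\) is an assumption), uses \(\delta =0\) to recover strict feasibility as in Remark 3.4, and recomputes the witness point \(\tilde{x}=-\frac{1}{3}\). All names are illustrative.

```python
from fractions import Fraction

SCENARIOS = (1, 2)
NOMINAL = 1                      # nominal scenario, xi_hat = xi_1
DELTA = Fraction(3, 2)           # infeasibility tolerance from the example

def f(x, s):
    return x if s == 1 else -x

def F(x, s):
    if s == 1:
        return x * x + x
    return x * x - Fraction(3, 2) * x + Fraction(1, 2)

def worst_f(x):
    return max(f(x, s) for s in SCENARIOS)

def worst_F(x):
    return max(F(x, s) for s in SCENARIOS)

def reliable_feasible(x, delta):
    # Nominal constraint must hold; worst case may exceed 0 by at most delta.
    return F(x, NOMINAL) <= 0 and worst_F(x) <= delta

# Sampling window for x (an assumption of this sketch).
GRID = [Fraction(k, 100) for k in range(-300, 301)]

X1 = [x for x in GRID if reliable_feasible(x, 0)]       # delta = 0: strict
X3 = [x for x in GRID if reliable_feasible(x, DELTA)]   # reliable feasible
X_STAR = min(X3, key=worst_f)                           # minimizes |x| on X3

# Witness showing that x_bar = -1/2 is not reliable robust.
X_BAR = Fraction(-1, 2)
X_TILDE = Fraction(-1, 3)
WITNESS = (
    worst_f(X_BAR) - worst_f(X_TILDE),   # first component, must be > 0
    F(X_TILDE, NOMINAL),                 # nominal constraint, must be <= 0
    worst_F(X_TILDE) - DELTA,            # relaxed constraint, must be <= 0
)
```

On the grid, `X1` is empty, `X3` spans \([-\frac{1}{2},0]\), `X_STAR` equals 0, and `WITNESS` evaluates to \((\frac{1}{6},-\frac{2}{9},-\frac{7}{18})\), matching the values stated in the example.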

3.4 Light Robustness

It is difficult to determine the upper bounds \(\delta _{i},i=1,\ldots ,m\) for the constraints of reliable robustness. To avoid this difficulty, the concept of light robustness is described by minimizing these tolerances. Let \(z^{*}>0\) be the optimal value of the nominal problem (\(Q(\hat{\xi })\)), and let the nominal value \(f(x,\hat{\xi })\) be bounded by \((1+\gamma )z^{*}\), where \(\gamma \ge 0\). This approach was first discussed in [37] and extended to general robust OPs by Schöbel [38]; it can be interpreted as a weighted-sum scalarization on upper bounds. The light counterpart of problem (1) is defined by

where \(\mathbf {w}_{i}\ge 0,i=1,\ldots ,m,\sum ^{m}_{i=1}\mathbf {w}_{i}=1\).

Let \(\varXi :=\big \{(\delta ,x)\in \mathbb {R}^{m}\times \mathcal {X}{:}\,F_{i}(x,\hat{\xi })\le 0,\,f(x,\hat{\xi })\le (1+\gamma )z^{*},\, F_{i}(x,\xi )\le \delta _{i},\;\forall \,\xi \in U,\,i=1,\ldots ,m\big \}\ne \emptyset \) be the feasible set of problem (11). An optimal solution \((\delta ^{*},x^{*})\in \varXi \) of the light counterpart problem is called lightly robust. In Sects. 3.4 and 3.5, we assume that \(\sup _{\xi \in U}F_{i}(x,\xi )<\infty ,i=1,\ldots ,m\) for all \(x\in \mathcal {X}\). Then an equivalent condition for lightly robust solutions can be obtained by constructing two suitable subsets in the image space of problem (11). Let \(\bar{\delta }=(\bar{\delta }_{1},\ldots ,\bar{\delta }_{m})\in \mathbb {R}^{m},\bar{x}\in \mathcal {X}\). Define the map

where \(F^{4}(\delta ,x):=\Big (F_{1}(x,\hat{\xi }),\ldots ,F_{m}(x,\hat{\xi }),f(x,\hat{\xi })-(1+\gamma )z^{*},\sup \nolimits _{\xi \in U}F_{1}(x,\xi )-\delta _{1},\ldots ,\sup \nolimits _{\xi \in U}F_{m}(x,\xi )-\delta _{m}\Big )\), and consider the following sets

where \(D^{4}:=-\mathbb {R}_{+}^{2m+1}\).

Theorem 3.4

Let \((\bar{\delta },\bar{x})\in \varXi \). A feasible solution \((\bar{\delta },\bar{x})\) is a lightly robust solution to problem (1) if and only if

Proof

Necessity. Assume that \((\bar{\delta },\bar{x})\) is a lightly robust solution. For any \((\delta ,x)\in \varXi \), we can achieve \(F^{4}(\delta ,x)\in D^{4}\) and

Additionally, when \((\delta ,x)\in (\mathbb {R}^{m}\times \mathcal {X}){\setminus }\varXi \), it holds \(F^{4}(\delta ,x)\not \in D^{4}\). This, together with inequality (13), yields the equality (12).

Sufficiency. Since \(F^{4}(\delta ,x)\in D^{4}\) for any \((\delta ,x)\in \varXi \) and the equality (12) holds, inequality (13) can be derived. Combined with \((\bar{\delta },\bar{x})\in \varXi \), one sees that \((\bar{\delta },\bar{x})\) is an optimal solution to problem (11). \(\square \)
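To make the light counterpart concrete, the following sketch solves a small hypothetical instance by enumeration. It is not taken from the paper: the nominal objective \(f(x,\hat{\xi })=x+2\), the value \(\gamma =\frac{1}{2}\) and the grid are assumptions, while the two constraint functions are borrowed from Example 3.6. With a single constraint (\(m=1\)) the weight equals 1, so the objective of the light counterpart is \(\delta \) itself, and for fixed x the smallest admissible tolerance is \(\sup _{\xi \in U}F(x,\xi )\).

```python
from fractions import Fraction

SCENARIOS = (1, 2)
NOMINAL = 1                  # nominal scenario, xi_hat = xi_1
GAMMA = Fraction(1, 2)       # allowed relative loss in nominal quality (assumed)

def f_nominal(x):
    # Hypothetical nominal objective, chosen so that z* > 0.
    return x + 2

def F(x, s):
    if s == 1:
        return x * x + x
    return x * x - Fraction(3, 2) * x + Fraction(1, 2)

def worst_F(x):
    # Smallest tolerance delta that makes (delta, x) feasible for fixed x.
    return max(F(x, s) for s in SCENARIOS)

# Sampling window for x (an assumption of this sketch).
GRID = [Fraction(k, 100) for k in range(-200, 201)]

# Nominal problem: minimize f(x, xi_hat) subject to F(x, xi_hat) <= 0.
NOMINAL_FEASIBLE = [x for x in GRID if F(x, NOMINAL) <= 0]
Z_STAR = min(f_nominal(x) for x in NOMINAL_FEASIBLE)

# Light counterpart: keep nominal quality within (1 + gamma) z*,
# then minimize the tolerance.
CANDIDATES = [x for x in NOMINAL_FEASIBLE if f_nominal(x) <= (1 + GAMMA) * Z_STAR]
X_LIGHT = min(CANDIDATES, key=worst_F)
DELTA_LIGHT = worst_F(X_LIGHT)
```

For this toy instance, `Z_STAR` equals 1 and the lightly robust pair is \((\delta ^{*},x^{*})=(\frac{3}{2},-\frac{1}{2})\), although no strictly robust solution exists for these constraints. The enumeration is only meant to illustrate the structure of the problem; it is not an efficient solution method.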

Remark 3.5

The feasible set \(\varXi \) can be divided into two parts: one is about variable \(\delta \), and the other is about x. Let

and \(X_{\delta }:=\{x\in \mathcal {X}{:}\,F_{i}(x,\hat{\xi })\le 0,\,f(x,\hat{\xi })\le (1+\gamma )z^{*},\, F_{i}(x,\xi )\le \delta _{i},\;\forall \,\xi \in U,\,i=1,\ldots ,m\}\) for a given \(\delta \in \mathbb {R}^{m}\). One can see that \(X_{\delta }\ne \emptyset \) if \(\delta \) belongs to \(\Omega \); otherwise, \(X_{\delta }=\emptyset \). By introducing the sets \(\Omega \) and \(X_{\delta }\), and setting \(\delta \in \Omega \), the set \(\varXi \) can be described clearly. Take

If \(\varPhi \) is compact and U is finite, then the light counterpart problem (11) admits an optimal solution, even when the set of strictly robust solutions (or the set of reliable robust solutions) is empty; see [38, pp. 167–168].

Remark 3.6

In contrast to the other robustness concepts, \(\mathcal {K}^{4}_{(\bar{\delta },\bar{x})}\) is just the image of problem (11), not a corrected image of problem (1). This is because the objective function of problem (11) is the weighted sum of the tolerances \(\delta _{i}\). The structures of the images in both problems are completely different. Additionally, when \(U:=\{\xi _{1},\ldots ,\xi _{q}\}\) is a finite set of scenarios and some initial feasible solution \(x^{0}\in X_{1}\) is chosen, the Benson robustness was introduced in [36, Sect. 3.1]. By using Benson’s method (a scalarization technique for solving MOPs [39, §4.4]), the corresponding robust counterpart of (1) is given by

where \(\mathbf {w}_{j}>0,\,j=1,\ldots ,q,\,\sum _{j=1}^q \mathbf {w}_{j}=1\). Let

denote the feasible set of problem (14). An optimal solution \((l^{*},x^{*})\in \varPsi \) of (14) is called B-robust. Analogous to the descriptions of light robustness, take \((\bar{l},\bar{x})\in \mathbb {R}^{q}_{+}\times \mathcal {X}\). If we replace \(A^{4}_{(\bar{\delta },\bar{x})}\), \(A^{4}_{(\bar{\delta },\bar{x})}(\delta ,x)\) and \(D^{4}\) by

and \(D^{B}:=\big \{0_{\mathbb {R}^{q}}\big \}\times D^{1}\), respectively, where

an equivalent characterization of B-robust solutions would be achieved.

3.5 \(\epsilon \)-Constraint Robustness

When the preferences of a decision maker cannot be represented by the other robustness approaches, one can choose to minimize only one objective function for one particular scenario \(\xi _{j}\in U\), while the remaining objectives \(f(x,\xi _{l}),l\in \{1,\ldots ,q\},l\ne j\), bounded by some real values \(\epsilon _{l}\in \mathbb {R}\), are moved to the constraints. This is the description of \(\epsilon \)-constraint robustness. The idea comes from the \(\epsilon \)-constraint scalarization method [39] for multiobjective OPs. The \(\epsilon \)-constraint robustness was initially proposed by Klamroth et al. [7] for a finite uncertainty set, and has been generalized to an infinite compact uncertainty set in [8]. Here, we take \(U:=\{\xi _{1},\ldots ,\xi _{q}\}\) as a finite set of scenarios and \(\epsilon =(\epsilon _{1},\ldots ,\epsilon _{q})\) as a real vector. The \(\epsilon \)-constraint robust counterpart is then given by

Let \(X_{5}:=\{x\in X_{1}{:}\,f(x,\xi _{l})\le \epsilon _{l},\;l\in \{1,\ldots ,q\},\,l\ne j\}\) denote the set of \(\epsilon \)-constraint feasible solutions. If a feasible solution \(x\in X_{5}\) is optimal to problem (15), then x is an \(\epsilon \)-constraint robust solution of problem (1). With the purpose of deriving a necessary and sufficient condition for \(\epsilon \)-constraint robust solutions, some new sets in the image space of problem (15) are considered. Let \(\bar{x}\in \mathcal {X}\), \(\xi _{j}\in U\) and \(\epsilon ^{j}=(\epsilon _{1},\ldots ,\epsilon _{j-1},\epsilon _{j+1},\ldots ,\epsilon _{q})\). Define the map

and consider the following sets

where

Theorem 3.5

A feasible solution \(\bar{x}\in X_{5}\) is an \(\epsilon \)-constraint robust solution to problem (1) if and only if

Proof

Necessity. Suppose that \(\bar{x}\in X_{5}\) is an \(\epsilon \)-constraint robust solution. Then, we have

where \(\xi _{j}\in U\) is chosen beforehand. Additionally, it is obvious that \(F^{5}(x)\not \in D^{5}\) for any \(x\in \mathcal {X}{\setminus } X_{5}\). This, together with inequality (17), shows that the equality (16) holds.

Sufficiency. If the equality (16) holds, we can obtain the inequality (17) because \(F^{5}(x)\in D^{5}\) for any \(x\in X_{5}\), which implies that \(\bar{x}\in X_{5}\) is an optimal solution to problem (15). \(\square \)
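The \(\epsilon \)-constraint counterpart for a finite scenario set can be illustrated by enumeration on a small hypothetical instance. In this sketch, which is not from the paper, the objectives are \(f(x,\xi _{1})=x\) and \(f(x,\xi _{2})=-x\), the constraint \(F(x)=x^{2}-1\) is assumed scenario-independent so that \(X_{1}=[-1,1]\), and the scenario \(\xi _{1}\) is the one being minimized. The function name and the dictionary of bounds are illustrative.

```python
from fractions import Fraction

def f(x, s):
    return x if s == 1 else -x

def F(x):
    # Scenario-independent constraint (an assumption), so X_1 = [-1, 1].
    return x * x - 1

# Sampling window for x (an assumption of this sketch).
GRID = [Fraction(k, 100) for k in range(-200, 201)]
X1 = [x for x in GRID if F(x) <= 0]

def epsilon_constraint_solution(j, eps):
    """Minimize f(x, xi_j) over X_5; eps maps each l != j to its bound.

    Returns None when the feasible set X_5 is empty.
    """
    X5 = [
        x for x in X1
        if all(f(x, l) <= bound for l, bound in eps.items() if l != j)
    ]
    if not X5:
        return None
    return min(X5, key=lambda x: f(x, j))

SOL = epsilon_constraint_solution(1, {2: Fraction(1, 2)})    # moderate bound
SOL_TIGHT = epsilon_constraint_solution(1, {2: Fraction(-2)})  # bound too small
SOL_LOOSE = epsilon_constraint_solution(1, {2: Fraction(5)})   # bound too large
```

With the bound \(\epsilon _{2}=\frac{1}{2}\) the solution is \(x=-\frac{1}{2}\). With \(\epsilon _{2}=-2\) the feasible set \(X_{5}\) is empty and the function returns `None`. With \(\epsilon _{2}=5\) the bound is inactive and the solution \(x=-1\) attains the worst value of \(f(\cdot ,\xi _{2})\) on \(X_{1}\), which illustrates the sensitivity to the choice of bounds.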

Remark 3.7

The difficulty of \(\epsilon \)-constraint robustness lies in how to choose appropriate upper bounds \(\epsilon _{l}\) for the constraints \(f(x,\xi _{l})\le \epsilon _{l}\). If the bounds \(\epsilon _{l}\) are chosen too small, the problem (15) may be infeasible (i.e., the feasible set \(X_{5}\) is empty), or the objective function value of \(f(x,\xi _{j})\) may become very bad. In addition, if the bounds \(\epsilon _{l}\) are chosen too big, the quality for the other scenarios decreases, and the objective function values may not attain the expected levels. Meanwhile, the \(\epsilon \)-constraint robust counterpart may be hard to solve in practice, due to the added constraints \(f(x,\xi _{l})\le \epsilon _{l}\). To avoid these drawbacks, the elastic robustness was proposed in [36, Sect. 3.2], by virtue of the elastic constraint scalarization method [39, §4.3], and the elastic robust counterpart of (1) was defined by

where \(u_{l}\ge 0,\,l\ne j\). Combined with the discussions for light robustness and \(\epsilon \)-constraint robustness, an equivalent characterization for elastic robustness can be easily established, when U is a finite set of scenarios.

Remark 3.8

When U contains infinitely many elements, let \(\epsilon {:}\,U\rightarrow \mathbb {R}\) be a function and \(\bar{\xi }\in U\) be fixed. The \(\epsilon \)-constraint robust counterpart of problem (1) [8, Theorem 23] is given by

In this case, since \(F^{5}(x)\) and \(D^{5}\) are infinite-dimensional, the sets \(\mathcal {K}^{5}_{\bar{x}}\) and \(\mathcal {H}^{5}\) are not available. It would be interesting and meaningful to introduce suitable sets in the image space of problem (18), such that the equivalent characterization of \(\epsilon \)-constraint robust solutions holds.

3.6 Adjustable Robustness

The concept of adjustable robustness, which was introduced by Ben-Tal et al. [40] and extensively studied in [3], is significantly less conservative than the usual robustness concepts. It is a two-stage approach in robust optimization, in which there are two types of variables: “here-and-now” variables x and “wait-and-see” variables \(\zeta \). The former (nonadjustable variables) must be assigned specific numerical values before the realization of the uncertain parameters, while the latter (adjustable variables) can be decided after the uncertain parameters become known. The adjustable variables are chosen such that the objective function is minimized for given x and realized parameter \(\xi \). Then, an uncertain problem, which contains both “here-and-now” variables \(x\in \mathcal {X}\) and “wait-and-see” variables \(\zeta \in \mathbb {R}^{p}\), is formulated as

In order to solve problem (19), the adjustable robust counterpart needs to be provided. Let \(Q(x,\xi )=\inf _{\zeta \in G(x,\xi )}f(x,\zeta ,\xi )\) be the objective function of the second-stage OP, and

denote the set of variables \(\zeta \) for given x and \(\xi \). Thus, the adjustable robust counterpart is defined by

Let \(X_{6}:=\{x\in \mathcal {X}{:}\,\forall \;\xi \in U,\;\exists \;\zeta \in \mathbb {R}^{p}\,\,\text{ s.t. }\,\,F_{i}(x,\zeta ,\xi )\le 0,\;i=1,\ldots ,m\}\) be the adjustable robust feasible set. A feasible solution \(x\in X_{6}\) is called adjustable robust, if it is optimal to problem (20). To obtain an equivalent characterization of adjustable robust solutions, we consider the corrected image of problem (20) and introduce some notations. Assume that \(\sup _{\xi \in U}Q(x,\xi )<\infty \) for all \(x\in \mathcal {X}\). For any given \(\xi _{0}\in U\), we take \(\zeta ^{(x,\xi _{0})}\in \mathbb {R}^{p}\), where the choice of \(\zeta ^{(x,\xi _{0})}\) depends on the variable x and the fixed \(\xi _{0}\). If \(x\in X_{6}\), then there exists \(\zeta ^{(x,\xi _{0})}\in \mathbb {R}^{p}\) such that \(F_{i}(x,\zeta ^{(x,\xi _{0})},\xi _{0})\le 0,i=1,\ldots ,m\), and we choose such a \(\zeta ^{(x,\xi _{0})}\in G(x,\xi _{0})\); otherwise, we take \(\zeta ^{(x,\xi _{0})}\not \in G(x,\xi _{0})\). Let \(\bar{x}\in \mathcal {X}\) and define the map

where \(F^{6}(x,\xi ):=\Big (F_{1}\Big (x,\zeta ^{(x,\xi )},\xi \Big ),\ldots ,F_{m}\Big (x,\zeta ^{(x,\xi )},\xi \Big )\Big )\), and consider the following sets

Theorem 3.6

A feasible solution \(\bar{x}\in X_{6}\) is an adjustable robust solution to problem (1) if and only if

Proof

Analogous to the proof of Theorem 3.1, this conclusion can be verified with minor changes, so we omit the proof here. \(\square \)

Remark 3.9

It is worthwhile to notice the following three points: (i) For given x and \(\xi \), \(\zeta ^{(x,\xi )}\) can be determined, but \(\zeta ^{(x,\xi )}\) may vary with x and \(\xi \). When the parameter \(\xi \) is realized, \(\zeta ^{(x,\xi )}\) only depends on x. Particularly, we have \(F_{i}(x,\zeta ^{(x,\xi )},\xi )\le 0,i=1,\ldots ,m\) for all \(x\in X_{6}\) and all \(\xi \in U\); (ii) Compared with \(F^{i}(x),i=1,\ldots ,5\), \(F^{6}(x,\xi )\) is related to both x and \(\xi \), whereas the constraint part of the image set usually depends on x only. This dependence is needed to ensure the equivalence relation, and it is attributed to the specific constraints (i.e., a family of sets is nonempty) in problem (20); (iii) If we replace \(F^{6}(x,\xi )\) by \(\widetilde{F^{6}}(x):=(\sup _{\xi \in U}F_{1}(x,\zeta _{0},\xi ),\ldots ,\sup _{\xi \in U}F_{m}(x,\zeta _{0},\xi ))\), where \(\zeta _{0}\in \mathbb {R}^{p}\) is given, then the set \(\widetilde{X_{6}}:=\{x\in \mathcal {X}{:}\,\widetilde{F^{6}}(x)\in D^{1}\}\) is just a proper subset of \(X_{6}\), and the conclusion of Theorem 3.6 is not true.
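The two-stage structure and point (iii) of Remark 3.9 can be illustrated on a small hypothetical instance solved by enumeration. Everything in this sketch is an assumption made for illustration: the scenarios are two demand levels, the constraints require \(x+\zeta \) to cover the demand with \(0\le \zeta \le 1\), the cost is \(2x+\zeta \), both variables live on coarse grids, and the adjustable counterpart is taken to minimize \(\sup _{\xi \in U}Q(x,\xi )\) over \(X_{6}\).

```python
from fractions import Fraction

SCENARIOS = (1, 2)                                   # hypothetical demand levels
X_GRID = [Fraction(k, 4) for k in range(0, 13)]      # here-and-now x in [0, 3]
ZETA_GRID = [Fraction(k, 4) for k in range(-4, 9)]   # wait-and-see zeta in [-1, 2]

def constraints(x, zeta, xi):
    # F_1: demand is covered; F_2 and F_3: 0 <= zeta <= 1.
    return (xi - x - zeta, zeta - 1, -zeta)

def cost(x, zeta, xi):
    # Hypothetical objective; it does not depend on xi in this toy instance.
    return 2 * x + zeta

def G(x, xi):
    """Second-stage feasible set for given x and realized xi (on the grid)."""
    return [z for z in ZETA_GRID if all(c <= 0 for c in constraints(x, z, xi))]

def Q(x, xi):
    """Optimal second-stage value; None when G(x, xi) is empty."""
    feasible = G(x, xi)
    return min(cost(x, z, xi) for z in feasible) if feasible else None

# Adjustable robust feasible set: every scenario admits some recourse zeta.
X6 = [x for x in X_GRID if all(G(x, xi) for xi in SCENARIOS)]
X_ADJ = min(X6, key=lambda x: max(Q(x, xi) for xi in SCENARIOS))
VALUE_ADJ = max(Q(X_ADJ, xi) for xi in SCENARIOS)

# Remark 3.9 (iii): fixing zeta_0 in advance shrinks the feasible set.
ZETA_0 = Fraction(0)
X6_FIXED = [
    x for x in X_GRID
    if all(all(c <= 0 for c in constraints(x, ZETA_0, xi)) for xi in SCENARIOS)
]
```

In this instance \(X_{6}\) consists of the grid points with \(x\ge 1\), the adjustable robust solution is \(x=1\) with worst-case value 3, and fixing \(\zeta _{0}=0\) leaves only the points with \(x\ge 2\), a proper subset of \(X_{6}\).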

Remark 3.10

In most cases, the adjustable robust counterpart is computationally intractable (NP-hard), and this difficulty is addressed by restricting the adjustable variables to be affine functions of the uncertain data; see [40]. In this subsection, the difficulty lies in constructing the impossibility of a parametric system, when the constraints comprise a family of sets that should be nonempty. As far as we know, this is the first time that OPs with this kind of constraint have been treated by using ISA. By this means, it may be convenient to handle some practical problems.

4 Perspectives and Open Problems

In Sect. 3, equivalent characterizations of various robustness concepts for uncertain OPs have been established by introducing appropriate subsets in the image space. In what follows, we give some interpretations of the ISA approach used in this paper and list some further problems worth considering.

The assumptions for the different robustness concepts share a common feature, i.e., the values of the supremum or infimum of the functions involved are finite. This assumption was used to introduce the definition of the robust regularization in [41], and one can also refer to [42, Sect. 4.1] and [29]. Two reasons explain the validity of the assumption for each robustness concept: on the one hand, it is weak and is satisfied in most applications; on the other hand, it ensures that the definitions of \(A^{i}_{\bar{x}},i=1,2,3,5,6,\mathbf {w},r\) and \(A^{4}_{(\bar{\delta },\bar{x})}\) are reasonable, i.e., their corresponding functions do not take the values \(\pm \,\infty \) in their components. Furthermore, strict robustness and optimistic robustness reflect the preferences of a decision maker from the risk point of view; the former is risk-averse, while the latter is risk-seeking. Both corrected images may be a part of the original image, or a combination of a part of the original image and some newly added parts. They contain the variable x and the parameter \(\xi \) simultaneously. Two less conservative robustness concepts (reliable robustness and light robustness) have been analyzed, and their image sets were defined in different forms. In particular, the image of light robustness was obtained with the help of an additional variable \(\delta \); it is just the image of its counterpart problem. Analogous to light robustness, in \(\epsilon \)-constraint robustness the scenario \(\xi _{j}\) is arbitrary but chosen beforehand. For adjustable robustness, it is vital to introduce the notation \(\zeta ^{(x,\xi )}\), which eliminates the variable \(\zeta \) for every \(\xi \); by this means, the corrected image can be constructed successfully.

ISA, as a new tool to tackle uncertain problems, plays a significant role in unifying multifarious robustness concepts. It explains clearly the implications of various robust solutions from the geometrical point of view and provides a new method to solve OPs with uncertainties. Although a unified framework by virtue of ISA has been developed, there are still some problems worth discussing.

1. Referring to Remark 3.8, when U contains infinitely many elements (i.e., U is countably infinite or uncountable), the equivalent characterization of \(\epsilon \)-constraint robust solutions is invalid; so how to define suitable subsets is the key to overcoming the difficulty. Furthermore, if U is a subset of an infinite-dimensional space, the conclusions of this paper may not hold. In this case, it is necessary to develop new theories, building on existing research on infinite-dimensional images; see [26].

2. By means of some known linear and nonlinear (regular) weak separation functions, robust optimality conditions for various robustness concepts, especially saddle point sufficient optimality conditions, can be easily derived; see [29] for details. However, on the basis of ISA, there have been few recent works on necessary optimality conditions and robust duality for scalar robust OPs. Thus, it is of value to construct strong separation functions, and to use the tools of nonconvex optimization and nonsmooth analysis, to obtain (Lagrangian-type) necessary robust optimality and robust duality results.

3. As we know, unified characterizations of various concepts of robustness and stochastic programming, from the vector optimization, set-valued optimization and scalarization points of view, are shown in [7, 8]. Based on ISA, this paper provided another approach to characterizing different kinds of robustness. Then, it would be of interest to explore the relationships between the ISA approach and the ones addressed in [8], such as vector optimization, set-valued optimization and scalarization techniques.

5 Conclusions

By using ISA, this paper developed a unifying approach to tackling scalar robust OPs. Compared with the approaches based on tools from vector optimization, set-valued optimization and scalarization techniques [8], ISA mainly focuses on constructing two subsets in the image space such that they are disjoint, and on analyzing the problem from the geometrical point of view. It should therefore have some connections to the existing approaches. One possible way to explore them is to make use of some notations of ISA presented in Sect. 3, introduce the corresponding vector OPs and set-valued OPs, and then study their relations to robustness concepts. To the best of our knowledge, very few recent papers consider robust OPs in the framework of ISA, except for [29, 33,34,35]; this provides a good chance to study this kind of problem in both theoretical and practical aspects. It is noteworthy that this paper gives a full answer to the open question (ii) posed in [29].

References

Soyster, A.L.: Convex programming with set-inclusive constraints and applications to inexact linear programming. Oper. Res. 21, 1154–1157 (1973)

Ben-Tal, A., Nemirovski, A.: Robust convex optimization. Math. Oper. Res. 23, 769–805 (1998)

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization. Princeton University Press, Princeton (2009)

Kouvelis, P., Yu, G.: Robust Discrete Optimization and Its Applications. Kluwer, Amsterdam (1997)

Bertsimas, D., Brown, D.B., Caramanis, C.: Theory and applications of robust optimization. SIAM Rev. 53, 464–501 (2011)

Goerigk, M., Schöbel, A.: Algorithm engineering in robust optimization. In: Kliemann, L., Sanders, P. (eds.) Algorithm Engineering: Selected Results and Surveys. In LNCS State of the Art: vol. 9220. Springer. Final volume for DFG Priority Program 1307, arXiv:1505.04901 (2016)

Klamroth, K., Köbis, E., Schöbel, A., Tammer, C.: A unified approach for different kinds of robustness and stochastic programming via nonlinear scalarizing functionals. Optimization 62(5), 649–671 (2013)

Klamroth, K., Köbis, E., Schöbel, A., Tammer, C.: A unified approach to uncertain optimization. Eur. J. Oper. Res. 260, 403–420 (2017)

Jeyakumar, V., Li, G.Y.: Strong duality in robust convex programming: complete characterizations. SIAM J. Optim. 20, 3384–3407 (2010)

Jeyakumar, V., Lee, G.M., Li, G.Y.: Characterizing robust solution sets of convex programs under data uncertainty. J. Optim. Theory Appl. 164, 407–435 (2015)

Ben-Tal, A., Nemirovski, A.: Robust solutions of linear programming problems contaminated with uncertain data. Math. Program. Ser. A 88(3), 411–424 (2000)

Beck, A., Ben-Tal, A.: Duality in robust optimization: primal worst equals dual best. Oper. Res. Lett. 37(1), 1–6 (2009)

Gorissen, B.L., Blanc, H., den Hertog, D., Ben-Tal, A.: Technical note-deriving robust and globalized robust solutions of uncertain linear programs with general convex uncertainty sets. Oper. Res. 62, 672–679 (2014)

Giannessi, F.: Constrained Optimization and Image Space Analysis: Separation of Sets and Optimality Conditions, vol. 1. Springer, Berlin (2005)

Bellman, R.: Dynamic Programming. Princeton University Press, Princeton (1957)

Hestenes, M.R.: Optimization Theory: The Finite Dimensional Case. Wiley, New York (1975)

Castellani, G., Giannessi, F.: Decomposition of mathematical programs by means of theorems of alternative for linear and nonlinear systems. In: Proceedings of the Ninth International Mathematical Programming Symposium, Budapest. Survey of Mathematical Programming, pp. 423–439. North-Holland, Amsterdam (1979)

Giannessi, F., Mastroeni, G.: Separation of sets and Wolfe duality. J. Glob. Optim. 42, 401–412 (2008)

Li, J., Feng, S.Q., Zhang, Z.: A unified approach for constrained extremum problems: image space analysis. J. Optim. Theory Appl. 159, 69–92 (2013)

Mastroeni, G.: Some applications of the image space analysis to the duality theory for constrained extremum problems. J. Glob. Optim. 46, 603–614 (2010)

Li, S.J., Xu, Y.D., You, M.X., et al.: Constrained extremum problems and image space analysis—part I: optimality conditions. J. Optim. Theory Appl. 177, 609–636 (2018)

Li, S.J., Xu, Y.D., You, M.X., et al.: Constrained extremum problems and image space analysis—part II: duality and penalization. J. Optim. Theory Appl. 177, 637–659 (2018)

Mastroeni, G.: Nonlinear separation in the image space with applications to penalty methods. Appl. Anal. 91, 1901–1914 (2012)

Li, J., Huang, N.J.: Image space analysis for variational inequalities with cone constraints applications to traffic equilibria. Sci. China Math. 55, 851–868 (2012)

Zhu, S.K.: Image space analysis to Lagrange-type duality for constrained vector optimization problems with applications. J. Optim. Theory Appl. 177, 743–769 (2018)

Li, J., Mastroeni, G.: Image convexity of generalized systems with infinite-dimensional image and applications. J. Optim. Theory Appl. 169, 91–115 (2016)

Zhou, Z.A., Chen, W., Yang, X.M.: Scalarizations and optimality of constrained set-valued optimization using improvement sets and image space analysis. J. Optim. Theory Appl. (2019). https://doi.org/10.1007/s10957-019-01554-3

Xu, Y.D.: Nonlinear separation approach to inverse variational inequalities. Optimization 65(7), 1315–1335 (2016)

Wei, H.Z., Chen, C.R., Li, S.J.: Characterizations for optimality conditions of general robust optimization problems. J. Optim. Theory Appl. 177, 835–856 (2018)

Ehrgott, M., Ide, J., Schöbel, A.: Minmax robustness for multi-objective optimization problems. Eur. J. Oper. Res. 239, 17–31 (2014)

Khan, A.A., Tammer, C., Zălinescu, C.: Set-Valued Optimization: An Introduction with Applications. Springer, Berlin (2015)

Ide, J., Schöbel, A.: Robustness for uncertain multi-objective optimization: a survey and analysis of different concepts. OR Spectr. 38(1), 235–271 (2016)

Wei, H.Z., Chen, C.R., Li, S.J.: A unified characterization of multiobjective robustness via separation. J. Optim. Theory Appl. 179, 86–102 (2018)

Ansari, Q.H., Köbis, E., Sharma, P.K.: Characterizations of multiobjective robustness via oriented distance function and image space analysis. J. Optim. Theory Appl. 181, 817–839 (2019)

Wei, H.Z., Chen, C.R., Li, S.J.: Characterizations of multiobjective robustness on vectorization counterparts. Optimization (2019). https://doi.org/10.1080/02331934.2019.1625352

Wei, H.Z., Chen, C.R., Li, S.J.: Robustness to uncertain optimization using scalarization techniques and relations to multiobjective optimization. Appl. Anal. 98(5), 851–866 (2019)

Fischetti, M., Monaci, M.: Light robustness. In: Ahuja, R.K., Moehring, R., Zaroliagis, C. (eds.) Robust and Online Large-Scale Optimization, vol. 5868, pp. 61–84. Springer, Berlin (2009)

Schöbel, A.: Generalized light robustness and the trade-off between robustness and nominal quality. Math. Methods Oper. Res. 80(2), 161–191 (2014)

Ehrgott, M.: Multicriteria Optimization. Springer, New York (2005)

Ben-Tal, A., Goryashko, A., Guslitzer, E., Nemirovski, A.: Adjustable robust solutions of uncertain linear programs. Math. Program. Ser. A 99, 351–376 (2004)

Lewis, A., Pang, C.: Lipschitz behavior of the robust regularization. SIAM J. Control Optim. 48(5), 3080–3105 (2009)

Eichfelder, G., Krüger, C., Schöbel, A.: Decision uncertainty in multiobjective optimization. J. Glob. Optim. 69, 485–510 (2017)

Acknowledgements

The authors gratefully thank Professor Franco Giannessi, two anonymous referees and the associate editor for their constructive suggestions and detailed comments, which helped to improve the paper and, in particular, thank one referee for pointing out the proof of Theorem 3.2. They also thank Manxue You (Chongqing University) for helpful discussions on the image space analysis.

This research was supported by the National Natural Science Foundation of China (Grant Nos. 11301567, 11571055, 11971078), the Fundamental Research Funds for the Central Universities (Grant No. 106112017CDJZRPY0020) and the Project funded by China Postdoctoral Science Foundation (Grant No. 2019M660247).

Wei, HZ., Chen, CR. & Li, SJ. A Unified Approach Through Image Space Analysis to Robustness in Uncertain Optimization Problems. J Optim Theory Appl 184, 466–493 (2020). https://doi.org/10.1007/s10957-019-01609-5

DOI: https://doi.org/10.1007/s10957-019-01609-5