Abstract

This paper presents solutions to constrained finite-horizon optimal control problems with linear systems whose cost functional has a general form. According to Pontryagin's maximum principle, the extremal control of such a problem is an implicit function of the costate trajectory. We develop the canonical backward differential flow method and use it to express the extremal control explicitly in terms of the costate trajectory. Moreover, there exists an optimal control if and only if there exists a unique extremal control. We prove the existence of an optimal solution of this optimal control problem by means of Green's functions.


1 Introduction

It is well known that there is a close relationship between optimization theory and optimal control [1,2,3]. This paper studies a class of constrained finite-horizon optimal control problems, referred to as problem (P), by means of Pontryagin's maximum principle and backward differential flows. Both tools have been widely used in research on optimization and optimal control problems [4,5,6,7]. In problem (P), an integral cost functional is minimized over the set of controls and trajectories of a linear finite-dimensional dynamical system operating over a bounded time interval. Using standard notation of control theory, we formulate problem (P) with a cost function that contains a quadratic part in the control, a control constraint given by a ball, and a state equation given by a linear differential equation.

A piecewise continuous function mapping time into the constraint set, a ball, is called an admissible control, and the initial state is a given vector. In particular, the matrix of the quadratic part of the cost function is symmetric positive definite, and the remaining part of the cost function is twice continuously differentiable with a positive semidefinite second derivative with respect to the state.

By classical optimal control theory, problem (P) has an associated Hamiltonian function [8]. In general, it is difficult to obtain an analytic form of the optimal feedback control for problem (P). It is well known that, in the unconstrained case, if the part of the cost function other than the quadratic control term is a positive semidefinite quadratic form, then an optimal feedback control is obtained from the solution of a Riccati matrix differential equation. The primary goal of this paper is to present an analytic form of the optimal feedback control for problem (P).

By the Pontryagin principle, an optimal control is accompanied by a state trajectory and a costate trajectory that satisfy the state-costate system, and a control satisfying these necessary conditions is called an extremal control. Based on the Pontryagin principle and dynamic programming, many numerical algorithms have been proposed to approximate the solution of problem (P); such algorithms are needed because the integrand of the cost functional is nonlinear. In this paper, by combining backward differential flows with the Pontryagin principle, we solve problem (P), whose cost functional has an integrand that is nonlinear in the control variable, and express the optimal control in terms of the costate via canonical dual variables.

2 Problem Formulation

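Using standard notation of control theory, we state the optimal control problem (P) as follows:

$$\begin{aligned} (P):\quad &\min \; J(u) = \int _0^T \Big ( F(x(t)) + \frac{1}{2} u^\mathrm{T}(t)Ru(t) + b^\mathrm{T}u(t) \Big )\,\mathrm{d}t\\ &\text{s.t.}\quad {\dot{x}}(t) = Ax(t) + Bu(t),\quad x(0) = x_0,\quad u(t) \in U,\quad t\in [0,T], \end{aligned}$$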

where \(A \in {\mathbb {R}}^{n\times n}\) and \(B \in {\mathbb {R}}^{n\times m} \) are constant matrices, \(b\in {\mathbb {R}}^m\) is a constant vector, and \(R\in {\mathbb {R}} ^{m\times m} \) is a symmetric positive definite matrix. The initial point \(x_0\) is a given vector in \({\mathbb {R}}^n\). Let F(x) be twice continuously differentiable with \( F_{xx}(x)\ge 0\) for all \(x\in {\mathbb {R}}^n\). A piecewise continuous function \( u(\cdot ):[ 0 , T ] \rightarrow U\), where \(U:=\{u\in {\mathbb {R}}^m: u^\mathrm{T}u\le 1\}\), is called an admissible control. By classical optimal control theory, the Hamiltonian function of (P) is [8]

$$\begin{aligned} H(t,x,u,\lambda ) = F(x) + \frac{1}{2}u^\mathrm{T}Ru + b^\mathrm{T}u + \lambda ^\mathrm{T}(Ax + Bu). \end{aligned}$$

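The state and costate systems are

$$\begin{aligned} {\dot{x}}(t)&= Ax(t) + Bu(t),\quad x(0) = x_0,\\ {\dot{\lambda }}(t)&= -A^\mathrm{T}\lambda (t) - F_x(x(t)),\quad \lambda (T) = 0. \end{aligned}$$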

In general, it is difficult to obtain an analytic form of the optimal feedback control for problem (P). It is well known that, in the unconstrained case, if F(x(t)) is a positive semidefinite quadratic form, then an optimal feedback control is obtained from the solution of a Riccati matrix differential equation. The primary goal of this paper is to present an analytic form of the optimal feedback control for problem (P).
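As a point of reference for the unconstrained case just mentioned, the following sketch solves a hypothetical scalar instance (not taken from this paper) with \(F(x)=qx^2/2\), \(b=0\), no control constraint, dynamics \(\dot{x}=ax+gu\) and cost \(\int _0^T (qx^2+ru^2)/2\,\mathrm{d}t\). It integrates the scalar Riccati equation \(-\dot{P} = 2aP - (g^2/r)P^2 + q\), \(P(T)=0\), backward with classical RK4, applies the feedback \(u=-(g/r)P(t)x\), and checks the standard identity that the resulting cost equals \(P(0)x_0^2/2\). The names a, g, q, r and their values are assumptions chosen for illustration.

```python
def rk4(f, t, y, dt):
    """One classical Runge-Kutta step for the system y' = f(t, y), y a list of floats."""
    k1 = f(t, y)
    k2 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k1)])
    k3 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k2)])
    k4 = f(t + dt, [yi + dt * ki for yi, ki in zip(y, k3)])
    return [yi + dt / 6 * (c1 + 2 * c2 + 2 * c3 + c4)
            for yi, c1, c2, c3, c4 in zip(y, k1, k2, k3, k4)]


# Hypothetical scalar data: x' = a x + g u, cost = int_0^T (q x^2 + r u^2) / 2 dt, no constraint.
a, g, q, r, T, x0 = 1.0, 2.0, 1.0, 10.0, 1.0, 1.0
N = 1000                 # forward RK4 steps of size h
h = T / N
half = h / 2             # P is stored on a grid of spacing h/2 (RK4 stage times)


def riccati(t, y):
    """Scalar Riccati equation P' = -(2 a P - (g^2 / r) P^2 + q)."""
    P = y[0]
    return [-(2 * a * P - (g * g / r) * P * P + q)]


# Backward sweep from the terminal condition P(T) = 0.
P_grid = [0.0] * (2 * N + 1)
for j in range(2 * N, 0, -1):
    P_grid[j - 1] = rk4(riccati, j * half, [P_grid[j]], -half)[0]


def closed_loop(t, y):
    """State x and accumulated cost J under the feedback u = -(g / r) P(t) x."""
    x, P = y[0], P_grid[round(t / half)]
    u = -(g / r) * P * x
    return [a * x + g * u, 0.5 * q * x * x + 0.5 * r * u * u]


y = [x0, 0.0]
for k in range(N):
    y = rk4(closed_loop, k * h, y, h)

J_feedback = y[1]                       # cost achieved by the Riccati feedback
J_riccati = 0.5 * P_grid[0] * x0 ** 2   # value function P(0) x0^2 / 2
print(J_feedback, J_riccati)            # the two numbers should agree closely
```

The control constraint \(u^\mathrm{T}u\le 1\) is exactly what breaks this construction in problem (P), which is why the remaining sections work pointwise with the costate instead.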

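Let \({\hat{x}}(\cdot )\) and \({\hat{\lambda }}(\cdot )\) denote the state and costate corresponding to a control \({\hat{u}}(\cdot )\). By the Pontryagin principle, if \({\hat{u}}\) is an extremal control, then for almost every \(t\in [0,T]\),

$$\begin{aligned} H(t,{\hat{x}}(t),{\hat{u}}(t),{\hat{\lambda }}(t)) = \min _{u\in U} H(t,{\hat{x}}(t),u,{\hat{\lambda }}(t)). \end{aligned}$$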

Based on the Pontryagin principle and dynamic programming, many numerical algorithms have been proposed to approximate the solution of problem (P); such algorithms are needed because the integrand of the cost functional is nonlinear. In this paper, by combining backward differential flows with the Pontryagin principle, we solve problem (P), whose cost functional has an integrand that is nonlinear in the control variable, and express the optimal control in terms of the costate via canonical dual variables.

3 A Differential Flow with Lagrangian Function

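In this section, we present a differential flow for problem (P), which is used in the next sections to express the optimal control in terms of the costate. For problem (P) and a given costate value \(\lambda \), the Lagrangian function of the pointwise minimization of the Hamiltonian over U can be written as

$$\begin{aligned} L(u,\rho ) = \frac{1}{2}u^\mathrm{T}Ru + (B^\mathrm{T}\lambda + b)^\mathrm{T}u + \frac{\rho }{2}(u^\mathrm{T}u - 1), \end{aligned}$$

where \({\rho }\) is a Lagrange multiplier. The corresponding partial derivatives with respect to u are

$$\begin{aligned} L_u(u,\rho ) = (R+\rho I)u + B^\mathrm{T}\lambda + b \end{aligned}$$

and

$$\begin{aligned} L_{uu}(u,\rho ) = R + \rho I. \end{aligned}$$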

Let R be an \({m\times m} \) symmetric positive definite matrix. We define the set G as

$$\begin{aligned} G := \{\rho \ge 0 : R + \rho I > 0\}. \end{aligned}$$

Obviously, G is nonempty.

Then, we introduce a backward differential flow over G.

Definition 3.1

For each \(\rho \in G\), define

$$\begin{aligned} {\hat{u}}(\rho ) := -(R+\rho I)^{-1}(B^\mathrm{T}\lambda + b), \end{aligned}$$

which is called a differential flow.

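It can easily be verified that the differential flow satisfies the differential system

$$\begin{aligned} \frac{\mathrm{d}{\hat{u}}(\rho )}{\mathrm{d}\rho } = -(R+\rho I)^{-1}{\hat{u}}(\rho ),\quad \rho \in G. \end{aligned}$$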

Theorem 3.1

If \({\hat{u}}(\rho )\) is the differential flow over G, then, for given \(\rho \in G\), \({\hat{u}}(\rho )\) is the unique minimizer of \(L(u,\rho )\) over \({\mathbb {R}}^m\), i.e.,

$$\begin{aligned} {\hat{u}}(\rho ) = \arg \min _{u\in {\mathbb {R}}^m} L(u,\rho ). \end{aligned}$$

Proof

For given \(\rho \in G\), \(L_{uu}(u,\rho ) = R + \rho I > 0\) for every \(u \in {\mathbb {R}}^m\); in particular, \(L_{uu}({\hat{u}}(\rho ),\rho ) > 0\) for all \(\rho \in G\). On the other hand, by (9), it is clear that \(L_{u}({\hat{u}}(\rho ),\rho ) = - (R+\rho I)(R+\rho I)^{-1}(B^\mathrm{T} \lambda + b) + (B^\mathrm{T} \lambda + b) = 0\). Since \(L(\cdot ,\rho )\) is strictly convex, its unique stationary point is its unique global minimizer, and the conclusion of the theorem follows. \(\square \)
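A quick numerical check of Definition 3.1 and Theorem 3.1 may help. The sketch below uses a hypothetical 2×2 instance, writes w for the fixed vector \(B^\mathrm{T}\lambda + b\), evaluates \({\hat{u}}(\rho ) = -(R+\rho I)^{-1}w\) by Gaussian elimination in plain Python, confirms that \(L_u({\hat{u}}(\rho ),\rho ) = (R+\rho I){\hat{u}}(\rho ) + w\) vanishes, and compares a central difference of the flow with \(-(R+\rho I)^{-1}{\hat{u}}(\rho )\), the right-hand side obtained by differentiating the closed form. The values of R, w and \(\rho \) are assumptions.

```python
def solve(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    A = [[float(v) for v in row] + [float(c)] for row, c in zip(M, rhs)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(A[i][k]))
        A[k], A[p] = A[p], A[k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n + 1):
                A[i][j] -= f * A[k][j]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        z[i] = (A[i][n] - sum(A[i][j] * z[j] for j in range(i + 1, n))) / A[i][i]
    return z


def shift(R, rho):
    """Return R + rho * I."""
    return [[R[i][j] + (rho if i == j else 0.0) for j in range(len(R))] for i in range(len(R))]


def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]


def flow(R, w, rho):
    """Differential flow u_hat(rho) = -(R + rho I)^{-1} w, with w playing the role of B^T lambda + b."""
    return [-z for z in solve(shift(R, rho), w)]


R = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical symmetric positive definite R (m = 2)
w = [1.0, -2.0]                # hypothetical value of B^T lambda + b
rho = 0.7                      # hypothetical; R + rho I > 0 for every rho >= 0

u = flow(R, w, rho)
# Theorem 3.1: L_u(u_hat(rho), rho) = (R + rho I) u_hat(rho) + w should vanish.
residual = max(abs(v + c) for v, c in zip(matvec(shift(R, rho), u), w))

# Central difference of the flow versus the right-hand side -(R + rho I)^{-1} u_hat(rho).
h = 1e-6
fd = [(p - m) / (2 * h) for p, m in zip(flow(R, w, rho + h), flow(R, w, rho - h))]
rhs = [-z for z in solve(shift(R, rho), u)]
flow_error = max(abs(p - q) for p, q in zip(fd, rhs))
print(residual, flow_error)    # both should be close to zero
```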

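The dual function with respect to a given \({\hat{u}}(\rho )\) is defined as

$$\begin{aligned} P_d(\rho ) := L({\hat{u}}(\rho ),\rho ) = -\frac{1}{2}(B^\mathrm{T}\lambda + b)^\mathrm{T}(R+\rho I)^{-1}(B^\mathrm{T}\lambda + b) - \frac{\rho }{2}. \end{aligned}$$

The first derivative of \(P_d(\rho )\) with respect to \(\rho \) can be expressed as

$$\begin{aligned} \frac{\mathrm{d}P_d(\rho )}{\mathrm{d}\rho } = \frac{1}{2}\big ({\hat{u}}^\mathrm{T}(\rho ){\hat{u}}(\rho ) - 1\big ). \end{aligned}$$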

Furthermore, we have the following result.

Lemma 3.1

For all \(\rho \in G\), \(\frac{\mathrm{d} P_d(\rho )}{\mathrm{d} \rho } \le 0\) if and only if \({\hat{u}}(\rho ) \in D: = \{ u \in {\mathbb {R}}^m : u^\mathrm{T}u \le 1 \}\).

Proof

By the definition of the feasible set D, \({\hat{u}}(\rho ) \in D\) if and only if \({\hat{u}}^\mathrm{T}(\rho ) {\hat{u}}(\rho ) -1\le 0\). Hence, by (16), for \(\rho \in G\), \({{\hat{u}}}(\rho )\in D\) if and only if \(\frac{\mathrm{d}P_d(\rho )}{\mathrm{d} \rho } \le 0\). \(\square \)
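Lemma 3.1 can be observed numerically. The sketch below takes \(P_d(\rho ) = L({\hat{u}}(\rho ),\rho )\) with \(L(u,\rho ) = P(u) + \frac{\rho }{2}(u^\mathrm{T}u-1)\), differentiates it by central finite differences, and compares the result with \(\frac{1}{2}({\hat{u}}^\mathrm{T}(\rho ){\hat{u}}(\rho ) - 1)\), which follows from the chain rule because \(L_u({\hat{u}}(\rho ),\rho ) = 0\). The matrix R, the vector w standing for \(B^\mathrm{T}\lambda + b\), and the grid of \(\rho \) values are hypothetical; the printed table should show the derivative becoming nonpositive where \({\hat{u}}(\rho )\) enters D.

```python
def solve(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    A = [[float(v) for v in row] + [float(c)] for row, c in zip(M, rhs)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(A[i][k]))
        A[k], A[p] = A[p], A[k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n + 1):
                A[i][j] -= f * A[k][j]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        z[i] = (A[i][n] - sum(A[i][j] * z[j] for j in range(i + 1, n))) / A[i][i]
    return z


def shift(R, rho):
    """Return R + rho * I."""
    return [[R[i][j] + (rho if i == j else 0.0) for j in range(len(R))] for i in range(len(R))]


def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def flow(R, w, rho):
    """u_hat(rho) = -(R + rho I)^{-1} w."""
    return [-z for z in solve(shift(R, rho), w)]


R = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical R > 0
w = [3.0, -4.0]                # hypothetical value of B^T lambda + b


def P(u):
    """P(u) = u^T R u / 2 + w^T u."""
    return 0.5 * dot(u, matvec(R, u)) + dot(w, u)


def P_d(rho):
    """Dual function P_d(rho) = L(u_hat(rho), rho)."""
    u = flow(R, w, rho)
    return P(u) + 0.5 * rho * (dot(u, u) - 1.0)


h = 1e-6
rows = []
for rho in [0.0, 0.5, 1.0, 2.0, 4.0, 8.0]:
    u = flow(R, w, rho)
    fd = (P_d(rho + h) - P_d(rho - h)) / (2 * h)   # R + rho I is still positive definite at rho = -h
    rows.append((rho, fd, 0.5 * (dot(u, u) - 1.0), dot(u, u) <= 1.0))

for rho, fd, formula, in_D in rows:
    print(f"rho={rho:4.1f}  finite difference={fd:+.6f}  (|u|^2-1)/2={formula:+.6f}  in D: {in_D}")
```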

4 Solving Quadratically Constrained Quadratic Programming by Dual Problem

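For the quadratic function \(P(u): = \frac{1}{2} u^\mathrm{T}Ru + (B^\mathrm{T}\lambda +b)^\mathrm{T}u\), the differential flow \({\hat{u}}(\rho )\) of Definition 3.1 is associated with the quadratically constrained quadratic programming problem

$$\begin{aligned} \min _{u\in D} P(u). \end{aligned}$$

According to the dual function (15), the dual problem is

$$\begin{aligned} \max _{\rho \in G} P_d(\rho ). \end{aligned}$$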

Theorem 4.1

If \(\rho ^* \in G\) is an optimal solution to the dual problem (18), then the quadratic function P(u) with \(u\in D\) has a minimizer at \({\hat{u}}(\rho ^*)\).

Proof

First, the optimal solution \(\rho ^* \) of the dual problem (18) is also a local maximizer of \(P_d(\rho )\) over \(\rho \ge 0\). If this were not true, there would exist a sequence \((\rho ^{(k)})\), \(k\ge 1\), with \(\rho ^{(k)}\ge 0\), which tends to \(\rho ^* \) and satisfies \(P_d(\rho ^{(k)})>P_d(\rho ^*)\). Recall that \(\rho ^* \in G\), and therefore \(R+\rho ^* I>0\). For k sufficiently large, we have \(R+\rho ^{(k)} I> 0\), which implies that \(\rho ^{(k)} \in G\). This contradicts the assumption that \(\rho ^* =\arg {\max _{\rho \in G}\{P_d(\rho )}\}\).

The fact that \(\rho ^*\) is a local maximizer of \(P_d(\rho )\) over \(\rho \ge 0\) implies that it is a K-T point of the problem \(\max _{\rho \ge 0}\{P_d(\rho )\}\), i.e., \(\frac{\mathrm{d} P_d(\rho ^*)}{\mathrm{d} \rho }\le 0\) and \(\rho ^* \frac{\mathrm{d} P_d(\rho ^*)}{\mathrm{d}\rho }=0\). By (16) and Lemma 3.1, we have \({\hat{u}}^\mathrm{T}(\rho ^*) {\hat{u}}(\rho ^*) - 1 \le 0\), i.e., \({\hat{u}}(\rho ^*) \in D\), and

$$\begin{aligned} \rho ^*\big ({\hat{u}}^\mathrm{T}(\rho ^*){\hat{u}}(\rho ^*) - 1\big ) = 0. \end{aligned}$$

For any \(\rho \in G\) and \(u\in D\), by Theorem 3.1 we have

$$\begin{aligned} P(u) \ge P(u) + \frac{\rho }{2}(u^\mathrm{T}u - 1) = L(u,\rho ) \ge L({\hat{u}}(\rho ),\rho ) = P_d(\rho ). \end{aligned}$$

Since \(\rho ^*\in G\), it follows that

$$\begin{aligned} P(u) \ge P_d(\rho ^*) = P({\hat{u}}(\rho ^*)) + \frac{\rho ^*}{2}\big ({\hat{u}}^\mathrm{T}(\rho ^*){\hat{u}}(\rho ^*) - 1\big ) = P({\hat{u}}(\rho ^*)). \end{aligned}$$

Thus, \({\hat{u}}(\rho ^*)\) is a global minimizer of P(u) over D. \(\square \)
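Theorem 4.1 suggests a numerical route to the minimizer of P over D: maximize the one-dimensional dual function and read off \({\hat{u}}(\rho ^*)\). The sketch below does this with golden-section search, which is justified here because \(P_d\) is concave for \(\rho \ge 0\) (its second derivative is \(-w^\mathrm{T}(R+\rho I)^{-3}w\le 0\) with \(w = B^\mathrm{T}\lambda + b\)). It then checks complementarity, the zero duality gap \(P({\hat{u}}(\rho ^*)) = P_d(\rho ^*)\), and the weak-duality bound \(P(u)\ge P_d(\rho ^*)\) on random points of D. R, w, the bracket \([0,\Vert w\Vert +1]\) and the sample size are assumptions chosen for illustration.

```python
import random
from math import cos, pi, sin, sqrt


def solve(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    A = [[float(v) for v in row] + [float(c)] for row, c in zip(M, rhs)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(A[i][k]))
        A[k], A[p] = A[p], A[k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n + 1):
                A[i][j] -= f * A[k][j]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        z[i] = (A[i][n] - sum(A[i][j] * z[j] for j in range(i + 1, n))) / A[i][i]
    return z


def shift(R, rho):
    return [[R[i][j] + (rho if i == j else 0.0) for j in range(len(R))] for i in range(len(R))]


def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def flow(R, w, rho):
    """u_hat(rho) = -(R + rho I)^{-1} w."""
    return [-z for z in solve(shift(R, rho), w)]


R = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical R > 0
w = [3.0, -4.0]                # hypothetical value of B^T lambda + b


def P(u):
    """Primal objective P(u) = u^T R u / 2 + w^T u, minimized over D."""
    return 0.5 * dot(u, matvec(R, u)) + dot(w, u)


def P_d(rho):
    """Dual function P_d(rho) = L(u_hat(rho), rho), concave for rho >= 0."""
    u = flow(R, w, rho)
    return P(u) + 0.5 * rho * (dot(u, u) - 1.0)


def golden_max(f, lo, hi, iters=100):
    """Golden-section search for the maximizer of a unimodal function f on [lo, hi]."""
    g = (sqrt(5.0) - 1.0) / 2.0
    c, d = hi - g * (hi - lo), lo + g * (hi - lo)
    fc, fd = f(c), f(d)
    for _ in range(iters):
        if fc > fd:                      # maximizer lies in [lo, d]
            hi, d, fd = d, c, fc
            c = hi - g * (hi - lo)
            fc = f(c)
        else:                            # maximizer lies in [c, hi]
            lo, c, fc = c, d, fd
            d = lo + g * (hi - lo)
            fd = f(d)
    return 0.5 * (lo + hi)


# Bracket: for rho >= |w| we have |u_hat(rho)| < 1, so dP_d/drho < 0 there.
rho_star = golden_max(P_d, 0.0, sqrt(dot(w, w)) + 1.0)
u_star = flow(R, w, rho_star)
gap = P(u_star) - P_d(rho_star)                  # duality gap, expected near 0
comp = rho_star * (dot(u_star, u_star) - 1.0)    # complementarity, expected near 0

# Weak duality: P(u) >= P_d(rho_star) for every u in D (random points of the unit disk).
rng = random.Random(1)
worst = float("inf")
for _ in range(5000):
    rad, th = sqrt(rng.random()), 2.0 * pi * rng.random()
    worst = min(worst, P([rad * cos(th), rad * sin(th)]) - P_d(rho_star))

print(rho_star, gap, comp, worst)
```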

5 Pontryagin Extremal Control and the Canonical Backward Differential Flow

According to Pontryagin's maximum principle [8, 9], an optimal control is an extremal control. With the Hamiltonian (2) of (P), an extremal control \({\hat{u}}(\cdot )\), together with the associated state \({\hat{x}}(\cdot )\) and costate \({\hat{\lambda }}(\cdot )\), satisfies

and for almost every given \(t\in [0,T]\),

Here,

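Since the minimization in (23) is carried out for each fixed t, and the Hamiltonian is separable in the variables x and u, it is helpful to first consider the following optimization problem with a given parameter vector \(\lambda \):

$$\begin{aligned} (P_1):\quad \min _{u\in U} P(u) = \frac{1}{2}u^\mathrm{T}Ru + (B^\mathrm{T}\lambda + b)^\mathrm{T}u. \end{aligned}$$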

In what follows, we use the theory of canonical differential flows [6] to solve the optimization problem \((P_1)\). We show that the minimizer of problem \((P_1)\) lies on the backward differential flow and can be identified by a nonnegative parameter \(\rho \). The details are as follows. Since \(R > 0\), we have \(R+\rho I>0\) whenever \(\rho \ge 0\). If \( B^\mathrm{T}\lambda +b \ne 0 \), then there is a \( \rho ^* > 0\) satisfying

Let \(u^*=-(R+\rho ^*I)^{-1}(B^\mathrm{T}\lambda +b)\). Solving the backward differential equation

one may get the so-called canonical differential flow [6] for the optimization problem \((P_1)\)

It is easy to verify that, for \(\rho >0\),

Lemma 5.1

\(\parallel {\hat{u}} (\rho ) \parallel \) monotonically decreases when \( \rho \in [0,+\infty )\).

Proof

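Since

$$\begin{aligned} \frac{\mathrm{d}{\hat{u}}(\rho )}{\mathrm{d}\rho } = -(R+\rho I)^{-1}{\hat{u}}(\rho ) \end{aligned}$$

and \(R+\rho I > 0\) for \(\rho \ge 0\), it follows that

$$\begin{aligned} \frac{\mathrm{d}\Vert {\hat{u}}(\rho )\Vert ^2}{\mathrm{d}\rho } = 2{\hat{u}}^\mathrm{T}(\rho )\frac{\mathrm{d}{\hat{u}}(\rho )}{\mathrm{d}\rho } = -2{\hat{u}}^\mathrm{T}(\rho )(R+\rho I)^{-1}{\hat{u}}(\rho ) \le 0. \end{aligned}$$

Hence \(\Vert {\hat{u}}\Vert \) monotonically decreases when \(\rho \in [0,+\infty ) \). \(\square \)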
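The backward flow can be traced numerically. The sketch below assumes the backward differential equation is \(\mathrm{d}{\hat{u}}/\mathrm{d}\rho = -(R+\rho I)^{-1}{\hat{u}}(\rho )\), which is what differentiating \({\hat{u}}(\rho ) = -(R+\rho I)^{-1}(B^\mathrm{T}\lambda +b)\) gives. It starts from \(u^* = -(R+\rho ^*I)^{-1}(B^\mathrm{T}\lambda +b)\) at a hypothetical \(\rho ^* = 5\), integrates back to \(\rho = 0\) with classical RK4, and checks that the trajectory matches the closed form and that \(\Vert {\hat{u}}\Vert \) grows as \(\rho \) decreases, as Lemma 5.1 predicts. R and \(w = B^\mathrm{T}\lambda + b\) are hypothetical.

```python
from math import sqrt


def solve(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    A = [[float(v) for v in row] + [float(c)] for row, c in zip(M, rhs)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(A[i][k]))
        A[k], A[p] = A[p], A[k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n + 1):
                A[i][j] -= f * A[k][j]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        z[i] = (A[i][n] - sum(A[i][j] * z[j] for j in range(i + 1, n))) / A[i][i]
    return z


def shift(R, rho):
    return [[R[i][j] + (rho if i == j else 0.0) for j in range(len(R))] for i in range(len(R))]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def norm(x):
    return sqrt(dot(x, x))


def flow(R, w, rho):
    """Closed-form flow u_hat(rho) = -(R + rho I)^{-1} w, used for comparison."""
    return [-z for z in solve(shift(R, rho), w)]


R = [[2.0, 0.5], [0.5, 1.0]]   # hypothetical R > 0
w = [3.0, -4.0]                # hypothetical value of B^T lambda + b
rho_star, steps = 5.0, 1000    # hypothetical starting parameter rho* and number of RK4 steps


def rhs(rho, u):
    """Right-hand side of d u_hat / d rho = -(R + rho I)^{-1} u_hat."""
    return [-z for z in solve(shift(R, rho), u)]


def rk4_step(rho, u, d):
    """One RK4 step of size d (d < 0 integrates backward in rho)."""
    k1 = rhs(rho, u)
    k2 = rhs(rho + d / 2, [ui + d / 2 * ki for ui, ki in zip(u, k1)])
    k3 = rhs(rho + d / 2, [ui + d / 2 * ki for ui, ki in zip(u, k2)])
    k4 = rhs(rho + d, [ui + d * ki for ui, ki in zip(u, k3)])
    return [ui + d / 6 * (c1 + 2 * c2 + 2 * c3 + c4) for ui, c1, c2, c3, c4 in zip(u, k1, k2, k3, k4)]


d = -rho_star / steps
rho, u = rho_star, flow(R, w, rho_star)   # initial value u* at rho*
norms, max_err = [norm(u)], 0.0
for _ in range(steps):
    u = rk4_step(rho, u, d)
    rho += d
    max_err = max(max_err, max(abs(p - q) for p, q in zip(u, flow(R, w, rho))))
    norms.append(norm(u))

print(max_err, norms[0], norms[-1])   # deviation from closed form, |u_hat| at rho* and at 0
```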

6 Solution to the Optimization Problem \((P_1)\)

Theorem 6.1

(i) When \( \Vert R^{-1}(B^\mathrm{T}\lambda +b)\Vert >1 \), the optimization problem \((P_1)\) has a minimizer

$$\begin{aligned} {\hat{u}}_\lambda = -[R + \rho _\lambda I]^{-1}(B^\mathrm{T}\lambda +b), \end{aligned}$$(33)

where \( \rho _\lambda \) is the unique positive root of \( \Vert [R+\rho I ] ^{-1}(B^\mathrm{T}\lambda +b)\Vert =1 \).

(ii) When \( \Vert R^{-1}(B^\mathrm{T}\lambda +b)\Vert \le 1 \), the optimization problem \((P_1) \) has a minimizer

$$\begin{aligned} {\hat{u}}_\lambda =-R ^{-1}(B^\mathrm{T}\lambda +b). \end{aligned}$$(34)

Proof

(i) Let \( \Vert R^{-1}(B^\mathrm{T}\lambda +b)\Vert >1 \), so that \(\Vert {\hat{u}}(0)\Vert >1\). Since \(\Vert {\hat{u}}(\rho )\Vert \) is continuous in \(\rho \), \(\lim _{\rho \rightarrow \infty }\Vert {\hat{u}}(\rho )\Vert =0\), and \(\Vert {\hat{u}}(\rho )\Vert \) decreases monotonically by Lemma 5.1, there is a \(\rho _\lambda >0\) such that

$$\begin{aligned} \Vert {\hat{u}}(\rho _\lambda )\Vert =1. \end{aligned}$$(35)

Let \({\hat{u}}_\lambda = {\hat{u}}(\rho _\lambda )=-(R+\rho _\lambda I)^{-1}(B^\mathrm{T}\lambda + b) \). Then we have

$$\begin{aligned} \frac{\mathrm{d} (P({\hat{u}}_\lambda ) + \frac{\rho _\lambda }{2}({\hat{u}}^\mathrm{T}_\lambda {\hat{u}}_\lambda - 1))}{\mathrm{d} {\hat{u}}_\lambda }= \frac{\mathrm{d} P({\hat{u}}_\lambda )}{\mathrm{d} {\hat{u}}_\lambda } +\rho _\lambda {\hat{u}}_\lambda = (R + \rho _\lambda I){\hat{u}}_\lambda + B^\mathrm{T}\lambda + b =0. \end{aligned}$$(36)

Further,

$$\begin{aligned} \frac{\mathrm{d}^2 (P({\hat{u}}_\lambda ) + \frac{\rho _\lambda }{2}({\hat{u}}^\mathrm{T}_\lambda {\hat{u}}_\lambda - 1))}{\mathrm{d} {\hat{u}}^2 _\lambda }= R + \rho _\lambda I >0. \end{aligned}$$(37)

Hence \({\hat{u}}_\lambda \) is the global minimizer of the strictly convex function \(u\mapsto P(u) + \frac{\rho _\lambda }{2}(u^\mathrm{T}u - 1)\). Thus, since \(\rho _\lambda >0\), for any \(u\in U=\{u: u^\mathrm{T}u \le 1 \}\),

$$\begin{aligned} P(u) \ge P(u) + \frac{\rho _\lambda }{2} ( u^\mathrm{T}u - 1 ) \ge P({\hat{u}}_\lambda )+\frac{\rho _\lambda }{2} ( {\hat{u}}^\mathrm{T}_\lambda {\hat{u}}_\lambda - 1 ) = P({\hat{u}}_\lambda ). \end{aligned}$$(38)

This shows that, in case (i), the optimization problem (\(P_1\)) has the minimizer

$$\begin{aligned} {\hat{u}}_\lambda = -[R+\rho _\lambda I]^{-1}(B^\mathrm{T}\lambda +b). \end{aligned}$$(39)

(ii) Let \(\Vert R^{-1}(B^\mathrm{T}\lambda +b)\Vert \le 1\). By Lemma 5.1, in this case \(\Vert {\hat{u}}(\rho )\Vert \le 1\) for all \(\rho \in [0,+\infty )\). Noting (29) and (30), for any \(u\in U\) and \(\rho \ge 0\), we have

$$\begin{aligned} P(u) \ge P(u) + \frac{\rho }{2}(u^\mathrm{T}u-1) \ge P({\hat{u}}(\rho )) + \frac{\rho }{2}({\hat{u}}^\mathrm{T}(\rho ){\hat{u}}(\rho )-1). \end{aligned}$$(40)

Let \({\hat{u}}_\lambda = {\hat{u}}(0) = -R^{-1}(B^\mathrm{T}\lambda + b) \). Taking \(\rho =0\) in (40), we obtain

$$\begin{aligned} P(u) \ge P({\hat{u}}_\lambda ). \end{aligned}$$(41)

This shows that, in case (ii), the optimization problem (\(P_1\)) has the minimizer \({\hat{u}}_\lambda = -R^{-1}(B^\mathrm{T}\lambda +b)\). \(\square \)
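Theorem 6.1 translates directly into a small solver: return \(-R^{-1}w\) when it lies in the unit ball, otherwise locate the root \(\rho _\lambda \) of \(\Vert (R+\rho I)^{-1}w\Vert = 1\) by bisection, which Lemma 5.1 justifies because the norm decreases in \(\rho \). The sketch below (with \(w = B^\mathrm{T}\lambda +b\)) compares that answer with a brute-force polar grid over the unit disk in both cases. The helper names solve_p1 and brute_force, the matrix R, and the two test vectors are hypothetical.

```python
from math import cos, pi, sin, sqrt


def solve(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    A = [[float(v) for v in row] + [float(c)] for row, c in zip(M, rhs)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(A[i][k]))
        A[k], A[p] = A[p], A[k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n + 1):
                A[i][j] -= f * A[k][j]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        z[i] = (A[i][n] - sum(A[i][j] * z[j] for j in range(i + 1, n))) / A[i][i]
    return z


def shift(R, rho):
    return [[R[i][j] + (rho if i == j else 0.0) for j in range(len(R))] for i in range(len(R))]


def matvec(M, x):
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def norm(x):
    return sqrt(dot(x, x))


def flow(R, w, rho):
    return [-z for z in solve(shift(R, rho), w)]


def P(R, w, u):
    """Objective of (P_1): u^T R u / 2 + w^T u, with w = B^T lambda + b."""
    return 0.5 * dot(u, matvec(R, u)) + dot(w, u)


def solve_p1(R, w, iters=100):
    """Minimizer of P over U = {u : u^T u <= 1} following the two cases of Theorem 6.1."""
    u0 = flow(R, w, 0.0)                 # -R^{-1} w
    if norm(u0) <= 1.0:
        return u0, 0.0                   # case (ii)
    lo, hi = 0.0, norm(w)                # |(R + rho I)^{-1} w| < 1 at rho = |w| because R > 0
    for _ in range(iters):               # bisection on the decreasing map rho -> |u_hat(rho)|
        mid = 0.5 * (lo + hi)
        if norm(flow(R, w, mid)) > 1.0:
            lo = mid
        else:
            hi = mid
    return flow(R, w, hi), hi            # case (i): hi approximates rho_lambda from the feasible side


def brute_force(R, w, n_r=100, n_th=360):
    """Smallest value of P over a polar grid of the closed unit disk (for comparison only)."""
    best = float("inf")
    for i in range(n_r + 1):
        for j in range(n_th):
            th = 2.0 * pi * j / n_th
            best = min(best, P(R, w, [i / n_r * cos(th), i / n_r * sin(th)]))
    return best


R = [[2.0, 0.5], [0.5, 1.0]]                        # hypothetical R > 0
cases = {"(i)": [3.0, -4.0], "(ii)": [0.5, 0.3]}    # hypothetical values of B^T lambda + b
results = {}
for name, w in cases.items():
    u_hat, rho = solve_p1(R, w)
    results[name] = (u_hat, rho, P(R, w, u_hat), brute_force(R, w))
    print(name, "rho_lambda =", rho, " P(u_hat) =", results[name][2], " grid minimum =", results[name][3])
```

For a scalar control (m = 1) the same two cases collapse to clipping \(-(B^\mathrm{T}\lambda + b)/R\) to the interval \([-1,1]\).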

7 Solution to the Linear Optimal Control Problem (P)

In what follows, we use the notation \(\arg \{f(\rho )=0,\rho \ge 0\}\) to stand for the positive root of the equation \(f(\rho )=0\). For a given vector \(\lambda \) such that

we denote

For the optimal control problem (P), we define the control \(u(\lambda )\) as follows.

Definition 7.1

For a vector \( \lambda \), define

where

Lemma 7.1

\(u( {\hat{\lambda }} ) \in C( {\mathbb {R}}^n , {\mathbb {R}}^m)\).
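The continuity in Lemma 7.1 can be illustrated numerically, assuming \(u(\lambda )\) denotes the minimizer \({\hat{u}}_\lambda \) of Theorem 6.1: as \(\Vert R^{-1}(B^\mathrm{T}\lambda + b)\Vert \) decreases to 1, the root \(\rho _\lambda \) in (33) tends to 0, so (33) and (34) agree on the switching surface. Because \(\lambda \mapsto B^\mathrm{T}\lambda + b\) is affine, it suffices to move \(w = B^\mathrm{T}\lambda + b\) along a hypothetical ray \(s\,w_0\) across the surface and compare the controls on both sides. The helper solve_p1 (a bisection implementation of Theorem 6.1), the matrix R and the direction w0 are hypothetical.

```python
from math import sqrt


def solve(M, rhs):
    """Solve M z = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    A = [[float(v) for v in row] + [float(c)] for row, c in zip(M, rhs)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(A[i][k]))
        A[k], A[p] = A[p], A[k]
        for i in range(k + 1, n):
            f = A[i][k] / A[k][k]
            for j in range(k, n + 1):
                A[i][j] -= f * A[k][j]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):
        z[i] = (A[i][n] - sum(A[i][j] * z[j] for j in range(i + 1, n))) / A[i][i]
    return z


def shift(R, rho):
    return [[R[i][j] + (rho if i == j else 0.0) for j in range(len(R))] for i in range(len(R))]


def norm(x):
    return sqrt(sum(v * v for v in x))


def flow(R, w, rho):
    return [-z for z in solve(shift(R, rho), w)]


def solve_p1(R, w, iters=100):
    """Theorem 6.1: case (ii) if |R^{-1} w| <= 1, otherwise bisection for rho_lambda."""
    u0 = flow(R, w, 0.0)
    if norm(u0) <= 1.0:
        return u0, 0.0
    lo, hi = 0.0, norm(w)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if norm(flow(R, w, mid)) > 1.0:
            lo = mid
        else:
            hi = mid
    return flow(R, w, hi), hi


R = [[2.0, 0.5], [0.5, 1.0]]        # hypothetical R > 0
w0 = [3.0, -4.0]                    # hypothetical direction for w = B^T lambda + b
s_c = 1.0 / norm(flow(R, w0, 0.0))  # |R^{-1}(s w0)| = 1 exactly when s = s_c

jumps = []
for eps in [1e-2, 1e-4, 1e-6]:
    u_in, rho_in = solve_p1(R, [(s_c - eps) * c for c in w0])    # inside: case (ii), rho = 0
    u_out, rho_out = solve_p1(R, [(s_c + eps) * c for c in w0])  # outside: case (i), rho > 0
    jumps.append((eps, rho_in, rho_out, norm([p - q for p, q in zip(u_out, u_in)])))

for row in jumps:
    print("eps=%.0e  rho_in=%.1e  rho_out=%.3e  |jump|=%.3e" % row)
```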

Recall the linear system

It is not difficult to see that

Let \(\alpha :=\max \{C_1,Te^{\Vert A\Vert T}(\Vert B\Vert +1)\}\). Because \(F \in C^2({\mathbb {R}}^n)\), \(F_x\) is continuous and hence bounded on the compact set \(S:=\{x:\Vert x\Vert \le \alpha \}\); let \(C_2:=\sup _{x\in S} \Vert F_x(x)\Vert \). The original optimal control problem (P) is equivalent to the following optimal control problem

According to Theorem 6.1, the optimal control of problem (49) is

The Pontryagin boundary value problem can be rewritten as

Theorem 7.1

There is a solution to the problem (51).

Proof

Note that the matrix function \( \varPhi (t): = e^{{\hat{A}}t}\) satisfies

The solution to the corresponding homogeneous problem

is

The Green's function [10] of the homogeneous boundary value problem is

That is,

In what follows, we show that the Pontryagin boundary value problem (51) has a solution Y(t); this is equivalent to the solvability of the following integral equation

where \(f(Y): = \left( \begin{array}{c} Bv((0,I)Y)\\ -\frac{1}{C_2} F_x((I,0)Y) \end{array} \right) \).

Let \(X:=C([0,T],{\mathbb {R}}^{2n})\) and \(\varOmega :=\{Y\in X:\Vert Y(\cdot )\Vert \le \alpha \}\). Define an operator \(T:X\rightarrow X\), for given \(t\in [0,T]\), each \(Y\in X\),

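Note that \(\Vert v((0,I)Y)\Vert \le 1\) for every \(Y\in X\). Moreover, if \(Y(\cdot )\in \varOmega \), then \(\Vert (I,0)Y(t)\Vert \le \alpha \), so that \(\Vert -\frac{1}{C_2} F_x((I,0)Y)\Vert \le 1\) by the definition of \(C_2\).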

Consequently,

Since \(\Vert TY\Vert = \max _{t\in [0,T]} \Vert (TY)(t)\Vert \le \alpha \) for every \(Y\in \varOmega \), we have \(T\varOmega \subset \varOmega \). By Schauder's fixed-point theorem [11], there is a \({\hat{Y}}\in \varOmega \) such that \(T{\hat{Y}}={\hat{Y}}\), i.e.,

It follows that the Pontryagin boundary value problem (51) has a solution. \(\square \)

Theorem 7.2

Let \({\hat{Y}}(\cdot ):=({{\hat{y}}}(\cdot ),{{\hat{\omega }}}(\cdot ))^\mathrm{T}\) be a solution to the problem (51). Then, the control \({\hat{v}}(t) := v \big ( (0,I) {\hat{Y}}(t) \big )=v({{\hat{\omega }}}(t)) \) is a Pontryagin extremal control.

Proof

Note that \({\hat{y}}(\cdot )\) and \({\hat{\omega }}(\cdot )\) satisfy the equations

This implies that \({\hat{y}}(\cdot )\), \({\hat{v}}(\cdot )\) and \({\hat{\omega }}(\cdot )\) satisfy the Pontryagin boundary value equations. From Theorem 6.1 and definition (50), it follows that

where \(H^1(t,{\hat{y}}(t),v,{\hat{\omega }}(t))\) is the Hamiltonian function of the optimal control problem (\(P_2\)). Hence, \( {\hat{v}}(t) \) is a Pontryagin extremal control. \(\square \)

Theorem 7.3

The control \( {\hat{v}}(t) = v \big ( (0,I){\hat{Y}}(t) \big ) \) defined by (50) is an optimal control for problems \((P_2)\) and (P).

Proof

Based on Theorem 7.2 and definition (50), the extremal control \( {\hat{v}}(t) = v \big ( (0,I){\hat{Y}}(t) \big ) \) can be expressed as a function of the costate variable \({\hat{\omega }}\), i.e., \({\hat{v}} ({\hat{\omega }})\). Substituting it into the Hamiltonian function of problem (\(P_2\)), we get

Since \( {\hat{v}}({\hat{\omega }}) \) does not depend on the state variable \({\hat{y}}\) and \( F_{{\hat{y}}{\hat{y}}}({\hat{y}}) \ge 0 \), the function \( H ^1(t,{\hat{y}} ,{\hat{v}}({\hat{\omega }}),{\hat{\omega }} )\) is convex with respect to \( {\hat{y}} \). By classical optimal control theory [9, 12], \( {{\hat{v}}}(t) \) is an optimal control for the singular optimal control problem \( (P_2) \); that is, \( {{\hat{u}}}(t)= {\hat{v}}(t) \) is an optimal control for problem (P). \(\square \)

8 Example

Example 8.1

Consider the following optimal control problem

According to the Pontryagin principle and Theorem 6.1, the Pontryagin triple \(({\hat{x}}(t),{\hat{u}}(t),{\hat{\lambda }}(t))\) satisfies

By computation, we have

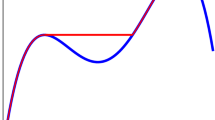

This equation can be solved by an iterative algorithm. For example, with \(r=10\), \(c=1\), \(a=1\), \(b=2\), \(T=1\) and \(x_0= 1\), we compute the optimal control \({\hat{u}}(\cdot )\) and the state trajectory \({\hat{x}}(\cdot )\) shown in Fig. 1; the optimal value is 130.3525.

Fig. 1 Optimal control and optimal trajectory of Example 8.1
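One standard iterative scheme for Pontryagin boundary value problems of this kind, shown here only as a sketch and not necessarily the algorithm behind Fig. 1, is a relaxed forward-backward sweep: integrate the state forward, the costate backward, and update the control pointwise with the minimizer of Theorem 6.1, which for a scalar control reduces to clipping \(-(B^\mathrm{T}\lambda + b)/R\) to \([-1,1]\). The problem data below (running cost \(qx^2/2 + ru^2/2 + su\), dynamics \(\dot{x} = ax + gu\), and the names a, g, q, r, s with their values) are hypothetical and are not those of Example 8.1. The sketch uses explicit Euler for the state and the matching discrete adjoint for the costate, so its fixed points are exactly the KKT points of the discretized (convex) problem.

```python
import random


def clip(v, lo=-1.0, hi=1.0):
    """Projection onto [lo, hi]; for m = 1 this is the minimizer given by Theorem 6.1."""
    return max(lo, min(hi, v))


def simulate(u, a, g, x0, h):
    """Explicit Euler for x' = a x + g u; returns x_0, ..., x_N."""
    x = [x0]
    for uk in u:
        x.append(x[-1] + h * (a * x[-1] + g * uk))
    return x


def costate(x, a, q, h):
    """Discrete adjoint of the Euler scheme: p_N = 0, p_k = (1 + h a) p_{k+1} + h q x_k for k >= 1."""
    N = len(x) - 1
    p = [0.0] * (N + 1)
    for k in range(N - 1, 0, -1):
        p[k] = (1.0 + h * a) * p[k + 1] + h * q * x[k]
    return p


def cost(u, a, g, q, r, s, x0, h):
    """Rectangle-rule cost h * sum_k (q x_k^2 / 2 + r u_k^2 / 2 + s u_k)."""
    x = simulate(u, a, g, x0, h)
    return h * sum(0.5 * q * xk * xk + 0.5 * r * uk * uk + s * uk for xk, uk in zip(x, u))


def sweep(a, g, q, r, s, x0, T, N=1000, theta=0.5, tol=1e-12, max_iter=10000):
    """Relaxed forward-backward sweep with the pointwise control update."""
    h = T / N
    u = [0.0] * N
    for _ in range(max_iter):
        x = simulate(u, a, g, x0, h)
        p = costate(x, a, q, h)
        # minimize r u^2 / 2 + (s + g p_{k+1}) u over |u| <= 1 at each time step
        u_new = [clip(-(s + g * p[k + 1]) / r) for k in range(N)]
        change = max(abs(v - z) for v, z in zip(u, u_new))
        u = [(1.0 - theta) * v + theta * z for v, z in zip(u, u_new)]
        if change < tol:
            break
    return u


# Hypothetical data (not the data of Example 8.1).
a, g, q, r, s, x0, T, N = 1.0, 2.0, 1.0, 10.0, 8.0, 1.0, 1.0, 1000
h = T / N
u_star = sweep(a, g, q, r, s, x0, T, N)
J_star = cost(u_star, a, g, q, r, s, x0, h)

# Fixed-point residual of the pointwise update at the returned control.
x = simulate(u_star, a, g, x0, h)
p = costate(x, a, q, h)
residual = max(abs(u_star[k] - clip(-(s + g * p[k + 1]) / r)) for k in range(N))

# The discretized problem is convex, so feasible perturbations should not lower the cost.
rng = random.Random(0)
worst = min(cost([clip(v + 0.05 * rng.uniform(-1.0, 1.0)) for v in u_star], a, g, q, r, s, x0, h)
            - J_star for _ in range(20))
print(J_star, residual, worst)
```

The relaxation factor theta = 0.5 is a conservative choice for these data; larger horizons or gains may require stronger damping.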

Example 8.2

Consider another example where \(m=2\), i.e., \(u\in {\mathbb {R}}^2\).

where \(T=1\), \(b=\left( \begin{array}{cc} 2 \\ 4 \end{array} \right) \), \(A=\left( \begin{array}{cc} 1&{}3\\ 2&{}5 \end{array} \right) \), \(B=\left( \begin{array}{cc} 2&{}2\\ 3&{}7 \end{array} \right) \) and \(x_0=\left( \begin{array}{cc} 1\\ 2 \end{array} \right) \).

As in Example 8.1, the Pontryagin triple \(({\hat{x}}(t),{\hat{u}}(t),{\hat{\lambda }}(t))\) satisfies

and

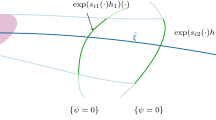

Figure 2 shows the optimal control and corresponding trajectory, and the optimal value is \(3.4688\times 10^5\).

Fig. 2 Optimal control and the optimal trajectory of Example 8.2

9 Conclusions

In this paper, a new approach to constrained finite-horizon optimal control problems with linear systems has been developed using the canonical backward differential flow method, which yields an analytic form of the optimal feedback control. In addition, the extremal control is given explicitly in terms of the costate trajectory by canonical backward differential flows. The existence of an optimal solution of this optimal control problem has been proved (see Theorems 7.1 and 7.3). Further research is needed to develop an applicable theory of canonical differential flows.

References

[1] Butenko, S., Murphey, R., Pardalos, P.M.: Recent Developments in Cooperative Control and Optimization. Springer, New York (2003)

[2] Hirsch, M.J., Commander, C.W., Pardalos, P.M., Murphey, R. (eds.): Optimization and Cooperative Control Strategies: Proceedings of the 8th International Conference on Cooperative Control and Optimization. Springer, New York (2009)

[3] Pardalos, P.M., Tseveendorj, I., Enkhbat, R.: Optimization and Optimal Control. World Scientific, Singapore (2003)

[4] Halkin, H.: Necessary conditions for optimal control problems with infinite horizons. Econometrica 42, 267–272 (1974)

[5] Kipka, R.J., Ledyaev, Y.S.: Pontryagin maximum principle for control systems on infinite dimensional manifolds. Set-Valued Var. Anal. 23, 1–15 (2014)

[6] Zhu, J.H., Wu, D., Gao, D.: Applying the canonical dual theory in optimal control problems. J. Glob. Optim. 54, 221–233 (2012)

[7] Zhu, J.H., Zhao, S.R., Liu, G.H.: Solution to global minimization of polynomials by backward differential flow. J. Optim. Theory Appl. 161, 828–836 (2014)

[8] Russell, D.L.: Mathematics of Finite-Dimensional Control Systems: Theory and Design. M. Dekker, New York (1979)

[9] Sontag, E.D.: Mathematical Control Theory: Deterministic Finite Dimensional Systems. Springer, New York (1998)

[10] Moylan, P.J., Moore, J.: Generalizations of singular optimal control theory. Automatica 7, 591–598 (1971)

[11] Ge, W., Li, C., Wang, H.: Ordinary Differential Equations and Boundary Valued Problems. Science Press, Beijing (2008)

[12] Pontryagin, L.S.: Mathematical Theory of Optimal Processes. CRC Press, Boca Raton (1987)


Additional information

Communicated by Panos M. Pardalos.


Cite this article

Zhao, S., Zhou, J. Solutions to Constrained Optimal Control Problems with Linear Systems. J Optim Theory Appl 178, 349–362 (2018). https://doi.org/10.1007/s10957-018-1308-3


DOI: https://doi.org/10.1007/s10957-018-1308-3

Keywords

- Optimal control problems

- The Pontryagin’s maximum principle

- Canonical backward differential flows

- Linear systems