Abstract

The methods currently available for designing a linear quadratic regulator for fractional-order systems are either based on sufficient-type conditions for the optimality of functionals or generate very complicated analytical solutions even for simple systems. It follows that the use of such methods is limited to very simple problems. The present paper proposes a practical method for designing a linear quadratic regulator (assuming linear state feedback), Kalman filter, and linear quadratic Gaussian regulator/controller for commensurate fractional-order systems (in Caputo sense). For this purpose, considering the fact that in dealing with fractional-order systems the cost function of linear quadratic regulator has only one extremum, the optimal state feedback gains of linear quadratic regulator and the gains of the Kalman filter are calculated using a gradient-based numerical optimization algorithm. Various fractional-order linear quadratic regulator and Kalman filter design problems are solved using the proposed approach. Specifically, a linear quadratic Gaussian controller capable of tracking step command is designed for a commensurate fractional-order system which is non-minimum phase and unstable and has seven (pseudo) states.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Fractional calculus and fractional-order systems (FOSs) have found various applications in modeling and controlling dynamic systems [1]. As for modeling, it is a well-known fact that many real-world systems can be modeled more accurately using fractional-order differential equations and transfer functions [2, 3]. In the field of control, the idea of using FOSs was first proposed by Bode [4], who employed the so-called Bode’s ideal transfer function to stabilize amplifiers. Later, Oustaloup et al. [5] developed a fractional-order controller (FOC) called CRONE on the basis of Bode’s ideal transfer function and showed that its performance excels the PID. However, these works did not draw much attention to the FOC until 1999, when Podlubny [6] introduced fractional-order PID (FOPID), also known as \(PI^\lambda D^\mu \), as an extension of the classical PID. After that innovation, many researchers developed methods for tuning the parameters of FOPID controllers [7,8,9,10,11]. What most of these methods have in common is the use of an optimization algorithm for this purpose. Among the numerous real-world applications of FOPID are control of a solar furnace [12], path tracking control of tractors [13], and unmanned aerial vehicle [14].

Optimal control of an FOS has also been the subject of some studies. For example, [15] obtained the necessary conditions for finite-horizon optimal control of a special FOS (in Caputo sense) containing one state using Euler–Lagrange equations. The so-called central difference numerical scheme is proposed in [16] for optimal control of a time-varying FOS which again has one state. In [17], the results of [16] are extended to FOSs which have several states and control variables. Analytical approaches to designing a fractional-order (in Caputo sense) linear quadratic regulator (LQR) are presented in [18, 19]. These works extend the method available for LQR design in the classical case to the FOS owing to some simplifying assumptions like using necessary-type equations. It is to be noted that the method proposed in [18] directly calculates the optimal control, whereas the approach propounded in [19] calculates the approximate optimal state feedback gains. The main drawback of [18] is that the required calculations are very complicated, and this is probably why the proposed method is used for designing an LQR for a simple FOS with only one state. The main limitation of [19] is that the results are obtained after applying some simplifications which make the results very approximate. In fact, the resultant state feedback gains in [19] are independent of the order of fractional state-space equations of the system. This is not consistent with numerical experiments.

The present paper studies the problem of designing LQR, Kalman filter, and linear quadratic Gaussian (LQG) regulator/controller for commensurate FOSs (the Caputo fractional derivative is considered). For this purpose, we make use of the fact that in dealing with FOSs the cost function of LQR has only one extremum, which can be calculated using gradient-based iterative algorithms. Using this approach, it is possible to design an LQG controller capable of tracking the step command for a non-minimum phase unstable FOS which has seven states.

2 Designing LQR for Commensurate Fractional-Order Systems

2.1 Problem Statement

In the classical (integer-order) case, the LQR problem is defined as calculating the control u(t) which minimizes the cost function:

subject to the state-space equations of the system as below:

where \(x(t)\in {\mathbb {R}}^{n\times 1}\) is the state vector, \(A\in {\mathbb {R}}^{n\times n}\), \(B\in {\mathbb {R}}^{n\times 1}\), \(C\in {\mathbb {R}}^{1\times n}\), and \(D\in {\mathbb {R}}\) are known matrices of the system, \(Q\in {\mathbb {R}}^{n\times n}\) is a positive-definite (PD) weight matrix of the states, and \(r>0\) is the weight of control (the system under consideration is assumed to be single-input single-output; i.e., u and y in (2) are considered to be scalar). As a well-known classical fact, assuming \(u(t)=-K x(t)\) (the so-called linear state feedback control), the vector of optimal state feedback gains \(K\in {\mathbb {R}}^{1\times n}\) is calculated as \(K=r^{-1}B^TP\), where P is the PD solution to the algebraic Riccati equation [20, Ch. 9]:

Considering the linear state feedback rule \(u(t)=-K x(t)\), all of the variables will be functions of K. With this in mind, we denote J, x(t), and u(t) as J(K), x(K, t), and u(K, t), respectively in this paper.

The problem under consideration in this paper is the same as (1) and (2) above except that the system is described through commensurate fractional-order state-space equations as follows:

where \(D^\alpha \) is the Caputo fractional derivative operator of order \(\alpha \) (\(0<\alpha <1\)) [21, 22]. The initial condition of system (4) is regarded as \(x(0)=x_0\), where \(x_0\in {\mathbb {R}}^{n\times 1}\) is a known vector. Since, in general, for the FOS (4), the knowledge of \(x_0\) is not sufficient to determine the future behavior of the system, some authors like [23] proposed using the term pseudo-state instead of state to refer to x in (4). For the sake of simplicity, in the rest of the present paper, we will refer to x in (4) as the state of the system. System (4) with \(0<\alpha <1\) is stable if and only if \(|\mathrm {Arg}(\mathrm {eig}(A))|>\pi \alpha /2\) for all eigenvalues of A [24, 25].

Intuitively, it is expected that J(K) as defined in (1) subject to (4) has only one extremum, which is actually the global minimum. To be more precise, let us consider the problem of finding the vector of the state feedback gain K, which minimizes

subject to (4). As a very classical result of the linear control theory, there is a trade-off between the speed of system response and the corresponding control effort [20, Ch. 9]. In other words, magnitudes of the first and the second integral in the right-hand side of (6) vary in opposite directions by changing K such that the first (second) integral is often a monotonically decreasing (increasing) function of K. Although the summation of a monotonically increasing and decreasing function of K is not guaranteed to have only one extremum, it is usually the case when minimizing (6) subject to (4). The fact that J(K) in (6) has only one extremum helps us find the global best solution by simply using nonlinear numerical optimization algorithms.

2.2 A Convex LQR Problem

This section introduces a special LQR for FOSs which is convex in K. This LQR is especially useful when the system under control has few states and the control effort is not important. The following lemma will be instrumental in the proof of Theorem 2.1, which represents the convex LQR problem for FOS.

Lemma 2.1

Let a and b be two real numbers. Then, for any \(0\le \lambda \le 1\), we have

Proof

The function \(f{:}\,{\mathbb {R}}\rightarrow {\mathbb {R}}\) defined as \(f(x)=x^2\) is convex. Hence, by definition, for any \(0\le \lambda \le 1\) and real numbers a and b, we have

where assuming \(f(x)=x^2\) implies (7). \(\square \)

Theorem 2.1

The cost function of state feedback LQR with \(r=0\) and \(Q=Q^T>0\) which is defined as

subject to (4), where \(x(K,t)=[x_1(K,t) \ldots x_n(K,t)]^T\), is convex in K if for any t and i (\(i=1,\ldots ,n\)), \(x_i(K,t)\) is either a convex function of K and \(x_i(K,t)\ge 0\) or a concave function of K and \(x_i(K,t)\le 0\) .

Proof

For the sake of simplicity and without loss of generality, the following proof is presented assuming \(Q=\mathrm {diag}\{q_1,\ldots ,q_n\}\). For the J(K) defined in (9), assuming \(x(K,t)=[x_1(K,t) \ldots x_n(K,t)]^T\), we have

Assuming that \(x_i(K_,t)\) is a nonnegative convex (or, equivalently, a non-positive concave) function of K, we can write

which yields

Now, applying Lemma 2.1 to the above inequality yields

which proves the convexity of J(K). \(\square \)

It should be noted that the restrictions put on \(x_i(K_,t)\) in Theorem 2.1 are not harsh in practice. For instance, the convexity of \(x_i(K,t)\) with respect to K is automatically achieved when the system under control has only one state or when all of the \(x_i(K,t)\) (\(i=1,\ldots ,n\)) behave like monotonically decreasing functions of t which decay faster by increasing K.

2.3 Designing LQR via Nonlinear Optimization

Let us consider the more general problem of calculating the vector of state feedback gains, K, which minimizes (6) subject to (4). In practice, it is usually observed that the corresponding J(K) has only one extremum. Clearly, in this case, any local optimum solution is actually the global best solution. Thus, in order to find the optimal state feedback gains, one can assign an initial value to K which stabilizes the feedback system and then utilize a gradient-based iterative algorithm to find the optimal solution (which is actually the global best solution) in the vicinity of the initial point. All of the optimizations in this paper are performed using this approach where the MATLAB function fminunc is used as the optimizer and the cost function is approximated through the use of the trapezoidal method via the MATLAB command trapz (the upper bound of the integral is considered a sufficiently big number when an infinite horizon problem is under consideration). In the following examples, the fractional-order state-space equations are solved numerically using the predictor–corrector method [26].

2.4 Numerical Examples

Example 2.1

Consider an FOS with the following transfer function:

The state-space representation of this system is as follows:

Applying the state feedback control law \(u(t)=-kx(t)\) to (16) and then taking the Laplace transform from the resulting equation assuming \(x(0)=x_0\) yield

Note that in order to arrive at (17), we used the fact that the Laplace transform of \(D^\alpha x(t)\), where \(0<\alpha <1\) and \(D^\alpha \) is the Caputo fractional derivative operator, is equal to \(s^\alpha X(s)-s^{\alpha -1}x_0\) [22, p. 106]. For any special value of t, the inverse Laplace transform of (17), denoted as \(x(k,t)=L^{-1}\{X(s)\}\), is a monotonically decreasing function of k \((k>0)\). More precisely, assuming \(\alpha =1/q\), where q is any positive integer, we have [21, p. 326]

where \(E_t\) is the Mittag-Leffler function defined as \(E_t(w,c):=t^w\sum _{n=0}^\infty (ct)^w/\varGamma (1+n+w)\) [21, p. 133]. Figure 1 shows x(k, t) for several values of k assuming \(x_0=1\), \(\alpha =0.5\), and \(a=b=1\). As it is observed, x(k, t) is a monotonically decreasing function of k for any \(t>0\).

Inverse Laplace transform of (17) for \(x_0=1\) and various values of k

The plot of J(k) versus k for Example 2.1

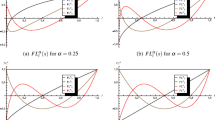

To find the optimal state feedback gain, it has to be noted that this state feedback system is stable for \(k>-b=-1\). Assuming \(r=Q=1\) and starting the optimization (using the MATLAB function fminunc) from the initial point \(k_0=0\), the optimal value of k and the corresponding value of J assuming \(x_0=1\) are obtained as 0.6646 and 1.0435, respectively (the upper bound of the integral in the cost function is considered 100, and the trapezoidal method with 1000 points is used for its numerical evaluation). Figure 2 shows J(k) versus k for various values of x(0), where the minimum point of each curve is shown by x. Note that, as it is expected, the optimal value of k is independent of \(x_0\). Figure 3 shows \(J_1(k)\) and \(J_2(k)\) for this example (see (6) for the definition of these two functions). It is observed that \(J_1(k)\) and \(J_2(k)\) are monotonically decreasing and increasing functions of k, respectively. It is to be noted that according to Theorem 2.1, \(J_1(k)\) is always a convex (and monotonically decreasing) function of k provided that x(k, t) is a monotonically decreasing function of k for any t, which is the case in this example. However, \(J_2(k)\) is often only a monotonically increasing (but, not necessarily, a convex) function of k. Nonetheless, considering the fact that \(J(k)=J_1(k)+J_2(k)\) has only one minimum, the fminunc command can easily find the global best solution. Figure 4 shows the state and control of the state feedback system when the proposed method and the classical LQR of [19] (both assuming \(x_0=1\)) are applied. As can be seen, the proposed method leads to a smaller value for the cost function compared to [19].

State and control of Example 2.1

Example 2.2

Consider the problem of designing an LQR for an FOS with the transfer function below:

where, without any loss of generality, it is assumed that \(\alpha =0.7\), \(b_1=1\), \(b_0=3\), \(a_1=a_2=2\), \(r=1\), and \(Q=I_{2\times 2}\). In order to approximate the cost function using the trapezoidal method, the upper bound of integrals in (6) is considered 100 and the integral is discretized using 1000 points. The state-space model of this system is as follows:

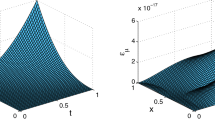

This system has two states, and the corresponding state feedback gains are denoted as \(k_1\) and \(k_2\). Figure 5 shows \(J(k_1,k_2)\) versus \(k_1\) and \(k_2\) assuming \(x_1(0)=x_2(0)=1\). Clearly, the cost function has only one extremum which can be calculated using fminunc. Starting the numerical optimization from the initial point (0, 0) (which stabilizes the state feedback system), we obtain the optimal values of state feedback gains as \(k_1=-0.2248\) and \(k_2=0.2584\) which lead to \(J=2.2580\). Figure 6 depicts the states and control for the proposed method and the classical LQR designed according to [19] for this system. As it is evident, the propounded method brings about a reduction of more than 20% in the cost function compared to [19].

The cost function of Example 2.2 (\(x_1(0)=x_2(0)=1\))

States and control of Example 2.2

3 Kalman Filter and LQG Controller Design for Commensurate Fractional-Order Systems

Figure 7 is the block diagram of a two-degree-of-freedom (2DOF) commensurate fractional-order LQG regulator. The only difference between this system and the classical 2DOF LQG regulator is in the application of the fractional-order derivative operator instead of the ordinary derivative operator. In Fig. 7, w and v are uncorrelated white Gaussian noises such that

It can be easily verified that the state-space representation of the fractional LQG regulator shown in Fig. 7 is as follows:

The fractional LQG has two unknown variables which must be calculated optimally: the state feedback gain \(K\in {\mathbb {R}}^{1\times n}\) and the Kalman filter gain \(L\in {\mathbb {R}}^{n\times 1}\), where n is equal to the number of states of the original system. According to the separation principle [20, Ch. 9], in order to design the fractional LQG regulator, one can first calculate K such that the cost function of the fractional LQR problem as presented in (6) is minimized and then design the Kalman filter gain, L, such that the cost function below is minimized:

Calculation of K is straightforward and can be treated in exactly the same way as that discussed in Sect. 2. Since designing Kalman filter and LQR are dual procedures, calculation of the Kalman filter gain can be performed in a manner similar to the method presented in Sect. 2. More precisely, in order to calculate the optimal L, first we write a MATLAB function which accepts L at its input and returns \(J_{rm kal}(L)\). Then, we utilize the MATLAB command fminunc to find L, which minimizes this cost function. More details on this are presented below.

Before creating a software function for \(J_\mathrm{kal}(L)\), first we generate the (sufficiently long-term) white Gaussian noise vectors v and w, whose covariance matrices are equal to V and W, respectively (v and V are scalar in our study since the system is assumed to have only one output). Next, assuming \(u=0\) and \(x(0)=x_0\), where \(x_0\in {\mathbb {R}}^{n\times 1}\) is the known vector of initial conditions, we calculate y for the “System” in Fig. 7. For this purpose, we first calculate x from \(D^\alpha x=Ax+w\) and then use \(y=Cx+v\). Note that generating w and v and then calculating x and y as described above are done only once prior to developing the software function for calculating \(J_\mathrm{kal}(L)\). The function written in MATLAB to calculate \(J_\mathrm{kal}(L)\) must first calculate the output of the “Kalman filter” in Fig. 7 assuming \(u=0\) and \(\hat{x}(0)=0\). For this purpose, the equation \(D^\alpha \hat{x}=(A-LC)\hat{x}+Ly\) must be solved numerically assuming \(\hat{x}(0)=0\) using the y which was already calculated (note that at this stage L is known since it is entered as the input to our MATLAB function). At the next step, in order to estimate the expected value in (23), the software function calculates the integral of \((x-\hat{x})^T(x-\hat{x})\) from 0 to T, where T is a sufficiently big number (this integral can be approximated by utilizing the trapezoidal method through the MATLAB command trapz).

When the MATLAB function fminunc calls the function we have developed for calculating \(J_\mathrm{kal}(L)\) as described above, an initial guess for L is required. This initial guess (which serves as the starting point for fminunc) must be chosen such that we have \(|\mathrm {Arg(eig(}A-LC))|>\pi \alpha /2\) for all eigenvalues of \(A-LC\). In order to find such an initial guess for the gain of the Kalman filter (or similarly, the gain of the state feedback in the LQR problem), we first solve the problem under consideration assuming \(\alpha =1\) and then consider the resultant solution as the starting point for fminunc. It has to be noted that any linear fractional-order state-space equation is stable for \(0<\alpha <1\) if it is stable for \(\alpha =1\).

3.1 Numerical Examples

Example 3.1

In this example, we design the Kalman filter and the LQG regulator for the system of Example 2.1. Assuming \(x(0)=1\), \(\hat{x}(0)=0\), \(W=V=0.1\), and \(T=100\) (the upper bound of the integral used for approximating the expected value in (23)), we obtain the optimal value of 2.1702 for L. Note that according to the discussion in Sect. 2, the optimal value of L is theoretically independent of the special value assigned to x(0) during numerical optimization. Figure 8 shows the state of the system and the output of the Kalman filter for the fractional LQG regulator shown in Fig. 7. The state of the system, when regulated using LQR (i.e., the same as Example 2.1 but with a noise added to the state and the measured output), is also shown in Fig. 8 for comparison.

Simulation results of Example 3.1

Example 3.2

In this example, a Kalman filter and LQG regulator are designed for the system of Example 2.2. Assuming \([x_1(0)~x_2(0)]=[1~1]\), \([\hat{x}_1(0)~\hat{x}_2(0)]=[0~0]\), \(W=V=0.01I_{2\times 2}\), and \(T=100\), the optimal value of \([0.6263 ~1.2221]^T\) is obtained for L. Figure 9 shows the states of the system and the outputs of the Kalman filter for the fractional LQG of Fig. 7. The states of the noisy system, when it is regulated using LQR, are also shown in Fig. 9 for comparison.

Simulation results of Example 3.2

The benchmark problem of Example 3.3

Example 3.3

In this example, we design an LQG compensator capable of tracking the step command for an inverted pendulum after the partial cancelation of its unstable pole and zero by a precompensator. Consider the inverted pendulum shown in Fig. 10. After the equations of the dynamic system are linearized around \((x,\theta )=(0,0)\), the transfer function of this system from u to x is obtained as follows:

where \(p=\sqrt{g/l+mg/(Ml)}\) and \(z=\sqrt{g/l}\) [27]. Note that for this system we have \(0<z<p\). The simulations of this example are preformed assuming \(g=9.8\) m/s, \(M=m=0.1\) kg, and \(l=1\) m.

It is a well-known fact that the right half-plane (RHP) poles and zeros of the open-loop system limit the performance of the resulting feedback system [20, Ch. 5]. It is especially known that when the RHP pole is larger than the RHP zero, any linear feedback control method will necessarily lead to very large overshoots in the response of the system. However, it is shown in [27] that the fractional-order cancelation of both the RHP pole and the RHP zero can reduce the undershoots and overshoots in the system response. Here, without getting involved in details, we only design an LGQ compensator with the ability of tracking step command for the FOS obtained after such a fractional-order zero-pole cancelation. Figure 11 shows the block diagram of the proposed 2DOF fractional LQG controller. In this figure, the transfer function of the series connection of the inverted pendulum and the precompensator is obtained as follows:

which, assuming \(g=9.8\) m/s, \(M=m=0.1\) kg, and \(l=1\) m yields

The state-space representation of G(s), taking into account the state and measurement noise, is \(D^{0.5} x=Ax+Bu+w\) and \(y=Cx+v\), where

The 2DOF fractional LQG controller of Example 3.3

and w and v are uncorrelated white Gaussian noises with the covariance matrices W and V, respectively (for the sake of the brevity of notations, the numbers in (27) are represented with two digits after the decimal point). In Fig. 11, in addition to the states of G(s) and the Kalman filter, a new state denoted as \(x_i\) is introduced at the output of the fractional-order integrator \(1/s^{0.5}\). This fractional integrator is applied to ensure that y tracks the step command r without steady-state error. Note that one could also use a full integrator instead of \(1/s^{0.5}\) for this purpose, but this fractional integrator has the advantage of simplifying the required calculations. The state-space representation of the fractional LQG system shown in Fig. 11 is as follows:

where \(L\in {\mathbb {R}}^{7\times 1}\), \(K_r\in {\mathbb {R}}^{1\times 7}\), and \(K_i\in {\mathbb {R}}\) are the unknown gains to be calculated. For this purpose, we first solve the equivalent LQR problem shown in Fig. 12 in a way similar to the previous examples. Assuming that \(Q=I_{8\times 8}\), \(r=1\), and the initial value of all states of G(s) is equal to unity, the optimal state feedback gains in Fig. 12 are obtained as below:

Next, assuming \(W=10^{-3}I_{7\times 7}\) and \(V=10^{-3}\), we calculate the gain of the Kalman filter in Fig. 11 as follows:

In order to simulate the fractional LQG controller of Fig. 11, one can numerically solve (28) and (29) for the given initial conditions. Figure 13 shows the y(t) of Fig. 11 assuming two different initial conditions for the states of (26) as modeled by (27) (the initial values of the states of the Kalman filter and the fractional-order integrator are considered zero).

The fractional LQR of Example 3.3

Simulation results of the fractional LQG controller of Example 3.3

4 Conclusions

The LQR, Kalman filter, and LQG compensator were designed for various commensurate fractional-order systems assuming the Caputo definition for the fractional derivative operator. The important result that simplified calculations is that the cost function of the LQR (or the Kalman filter) has only one extremum with respect to the state feedback (or Kalman filter) gains. Therefore, the optimal value of these parameters can be calculated using any gradient-based optimization algorithm. The MATLAB function fminunc was used for this purpose in the present study. Unlike similar methods which can only solve very simple nonrealistic fractional LQR problems, the proposed approach was shown to be capable of being used to design a fractional LQG control system (with the ability of tracking step command) which has a total of 15 (pseudo) states. The conjecture that the cost function of the fractional LQR is convex with respect to the state feedback gains was proposed and proven in a special case.

References

Caponetto, R., Dongola, G., Fortuna, L., Petras, I.: Fractional Order Systems: Modeling and Control Applications. World Scientific, London (2010)

Aghababa, M.P.: Fractional modeling and control of a complex nonlinear energy supply-demand system. Complexity 20, 74–86 (2015)

Lewandowski, R., Chorazyczewski, B.: Identification of the parameters of the Kelvin–Voigt and the Maxwell fractional models, used to modeling of viscoelastic dampers. Comput. Struct. 88, 1–17 (2010)

Bode, H.W.: Network Analysis and Feedback Amplifier Design. Van Nostrand, New York (1945)

Oustaloup, A., Mathieu, B., Lanusse, P.: The CRONE control of resonant plants: application to a flexible transmission. Eur. J. Control 1, 113–121 (1995)

Podlubny, I.: Fractional-order systems and \(PI^\lambda D^\mu \)-controllers. IEEE Trans. Automat. Contr. 44, 208–214 (1999)

Merrikh-Bayat, F.: General rules for optimal tuning the \(PI^\lambda D^\mu \) controllers with application to first-order plus time delay processes. Can. J. Chem. Eng. 90, 1400–1410 (2012)

El-Khazali, R.: Fractional-order \(PI^\lambda D^\mu \) controller design. Comput. Math. Appl. 66, 639–646 (2013)

Vu, T.N.L., Lee, M.: Analytical design of fractional-order proportional-integral controllers for time-delay processes. ISA Trans. 52, 583–591 (2013)

Luo, Y., Chen, Y.Q., Wang, C.Y., Pi, Y.G.: Tuning fractional order proportional integral controllers for fractional order systems. J. Process Control 20, 823–831 (2010)

Merrikh-Bayat, F., Karimi-Ghartemani, M.: Method for designing \(PI^\lambda D^\mu \) stabilisers for minimum-phase fractional-order systems. IET Control Theory Appl. 4, 61–70 (2010)

Beschi, M., Padula, F., Visioli, A.: Fractional robust PID control of a solar furnace. Control Eng. Pract. 56, 190–199 (2016)

Zhang, M., Lin, X., Yin, W.: An improved tuning method of fractional order proportional differentiation (FOPD) controller for the path tracking control of tractors. Biosyst. Eng. 116, 478–486 (2013)

Chao, H., Luo, Y., Di, L., Chen, Y.Q.: Roll-channel fractional order controller design for a small fixed-wing unmanned aerial vehicle. Control Eng. Pract. 18, 761–772 (2010)

Agrawal, O.P.: A formulation and numerical scheme for fractional optimal control problems. J. Vib. Control 14, 1291–1299 (2008)

Baleanu, D., Defterli, O., Agrawal, O.P.: A central difference numerical scheme for fractional optimal control problems. J. Vib. Control 15, 583–597 (2009)

Agrawal, O.P., Defterli, O., Baleanu, D.: Fractional optimal control problems with several state and control variables. J. Vib. Control 16, 1967–1976 (2010)

Li, Y., Chen, Y.Q.: Fractional order linear quadratic regulator. In: IEEE/ASME International Conference on Mechtronic and Embedded Systems and Applications, pp. 363–368. (2008). doi:10.1109/MESA.2008.4735696

Sierociuk, D., Vinagre, B.M.: Infinite horizon state-feedback LQR controller for fractional systems. In: 49th IEEE Conference on Decision and Control (CDC), pp. 6674–6679. (2010). doi:10.1109/CDC.2010.5717252

Skogestad, S., Postlethwaite, I.: Multivariable Feedback Control: Analysis and Design, 2nd edn. Wiley, Hoboken (2005)

Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

Podlubny, I.: Fractional Differential Equations. Academic Press, New York (1998)

Farges, C., Moze, M., Sabatier, J.: Pseudo-state feedback stabilization of commensurate fractional order systems. Automatica 46, 1730–1734 (2010)

Matignon, D.: Stability results on fractional differential equations with applications to control processing. In: Computational Engineering in Systems Applications. Vol. 2. Lille, pp. 963–968 (1996)

Merrikh-Bayat, F.: General formula for stability testing of linear systems with fractional-delay characteristic equation. Cent. Eur. J. Phys. 11, 855–862 (2013)

Diethelm, K., Ford, N.J., Freed, A.D.: A predictor–corrector approach for the numerical solution of fractional differential equations. Nonlinear Dyn. 29, 3–22 (2002)

Merrikh-Bayat, F.: Fractional-order unstable pole-zero cancellation in linear feedback systems. J. Process Control 23, 817–825 (2013)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Arabi, S.H., Merrikh-Bayat, F. A Practical Method for Designing Linear Quadratic Regulator for Commensurate Fractional-Order Systems. J Optim Theory Appl 174, 550–566 (2017). https://doi.org/10.1007/s10957-017-1125-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-017-1125-0

Keywords

- Linear quadratic regulator

- Linear quadratic Gaussian

- Kalman filter

- Commensurate fractional-order system