Abstract

This work develops an optimization method based on a new class of basis functions, namely the generalized Bernoulli polynomials (GBP), to solve a class of nonlinear 2-dim fractional optimal control problems. The problem is generated by nonlinear fractional dynamical systems with a fractional derivative of Caputo type and the Goursat–Darboux conditions. First, we use the GBP to approximate the state and control variables with unknown coefficients and parameters. Afterwards, we substitute these approximations into the objective function, the nonlinear fractional dynamical system and the Goursat–Darboux conditions. The 2-dim Gauss–Legendre quadrature rule together with a fractional operational matrix yields a constrained problem that is solved by the Lagrange multipliers method. The convergence of the GBP method is proved and its efficiency is demonstrated by several examples.


1 Introduction

Fractional calculus, a generalized form of the classical calculus, is an important tool for modeling physical problems in various branches of science [1,2,3]. The modeling in these fields requires the use of ordinary and partial fractional differential equations. It is well known that analytic and exact solutions of such equations are not available in most cases, motivating researchers to develop accurate approximate numerical methods [4, 5]. Fu et al. [6] implemented radial basis functions and a Müntz polynomial basis for solving multi-term variable order time fractional partial differential equations. Chen et al. [7] developed a discrete numerical scheme using the finite difference method for solving a multi-term time-space variable order fractional advection–diffusion model. Hajipour et al. [8] proposed a discretization technique using a compact finite difference operator to solve a class of variable order fractional reaction-diffusion problems. Hassani et al. [9] introduced an optimization method based on transcendental Bernstein series for solving nonlinear variable order fractional functional boundary value problems. In recent years, several approximate methods have been applied to solve this type of differential equation [10,11,12,13,14,15,16,17,18].

Optimal control problems (OCP) have a wide range of applications [19,20,21]. In the OCP, the dynamical system and/or the performance index (cost functional) may involve variable order fractional operators. Recently, some researchers have focused on numerical methods for this problem. Heydari [22] used the shifted Chebyshev polynomials and their operational matrices (OM) to solve 2-dim variable order fractional optimal control problems. Mohammadi and Hassani [23] applied generalized polynomials for solving the 2-dim variable order fractional optimal control problems. Li and Zhou [24] investigated the fractional spectral collocation discretization of OCP governed by a space-fractional diffusion equation. Salati et al. [25] presented three methods based on the Grünwald–Letnikov, trapezoidal and Simpson fractional integral formulas to solve fractional OCP. Zhang and Zhou [26] provided a spectral Galerkin approximation of OCP governed by a Riesz fractional differential equation with control integral constraint. Zaky and Machado [27] proposed the pseudo-spectral method and the Jacobi–Gauss–Lobatto integration algorithm for distributed fractional OCP. Further numerical approximation methods for solving the OCP are investigated in [28,29,30,31,32,33,34,35,36,37,38,39,40,41].

It is well known that the Bernoulli polynomials (BP) play a vital role as basis functions in numerical techniques for solving various types of differential equations. Zeghdane [42] proposed a computational method based on the stochastic OM for the integration of BP in the case of the nonlinear Volterra–Fredholm–Hammerstein stochastic integral equations. Singh et al. [43] used the OM of integration and differentiation of BP to find the approximate solution of second order two-dimensional telegraph equations with the Dirichlet boundary conditions. Ren and Tian [44] obtained an approximate solution of a boundary value problem for a fourth order nonlinear integro-differential equation of Kirchhoff type based on the BP approximation. Loh and Phang [45] derived a BP-based OM of the right-sided Caputo fractional derivative to solve fractional integro-differential equations of Fredholm type. Golbabai and Panjeh Ali Beik [46] presented the OM of integration and the product based on the shifted BP for solving a class of linear matrix differential equations. Several other studies on BP can be found in [47,48,49,50,51].

In this paper, we consider nonlinear 2-dim fractional OCP including a fractional derivative in the Caputo type of the form:

subject to the nonlinear fractional dynamical system

and the Goursat–Darboux conditions [52, 53]

In the above relations, \(\mu \) and y are the state and control variables, respectively, that are assumed to be continuous. It is supposed that the nonlinear operators \({\mathcal {Q}}\) and \({\mathcal {S}}\) are differentiable. Moreover, the given functions \(e_{0}\) and \(e_{1}\) are assumed to be continuous.

Here, \({}^{C}_{0}\!{D_{t}^{\eta }}\) denotes the fractional derivative operator of order \(\eta \in (0,1]\) in the Caputo type and is defined as [1, 2]

where \(\Gamma (\cdot )\) denotes the gamma function.

From the definitions of the fractional derivative of the Caputo type, it is straightforward to conclude that

where \(n-1<\eta \le n\).
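The displayed power rule did not survive in this copy of the text. As a minimal sketch, assuming the standard identity for \(\eta \in (0,1]\), namely that the Caputo derivative of a constant is zero and that of \(t^{k}\) with \(k>0\) is \(\frac{\Gamma (k+1)}{\Gamma (k+1-\eta )}t^{k-\eta }\), the rule can be evaluated in Python as follows. The function name `caputo_power` is illustrative and not taken from the paper.

```python
import math

def caputo_power(k, t, eta):
    """Caputo derivative of order eta in (0, 1] of t**k, evaluated at t > 0.

    Assumption: k == 0 (a constant) or k > 0; other exponents are rejected.
    """
    if not 0.0 < eta <= 1.0:
        raise ValueError("this sketch only covers 0 < eta <= 1")
    if k < 0:
        raise ValueError("exponent must be nonnegative")
    if k == 0:
        return 0.0  # the Caputo derivative of a constant vanishes
    # Power rule: Gamma(k + 1) / Gamma(k + 1 - eta) * t**(k - eta)
    return math.gamma(k + 1.0) / math.gamma(k + 1.0 - eta) * t ** (k - eta)
```

For \(\eta =1\) the ratio of gamma functions reduces to k, so the classical derivative \(k\,t^{k-1}\) is recovered.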

The proposed method transforms the solution of the above fractional model into an algebraic system of nonlinear equations. To this end, the functions \(\mu \) and y are approximated by the GBP with unknown coefficients and parameters. By inserting these approximations into the objective function and employing the Gauss–Legendre quadrature rule for computing the double integral, a nonlinear algebraic equation including the unknown coefficients and parameters is obtained. Besides, by substituting these approximations into the nonlinear fractional dynamical system and the Goursat–Darboux conditions, and by adopting the OM of fractional derivative of the GBP, a set of nonlinear algebraic equations is extracted. The method of constrained extremum is then employed: the algebraic equations extracted from the dynamical system and the Goursat–Darboux conditions are joined to the algebraic equation obtained from the objective function by using Lagrange multipliers. As an immediate result, the optimal solution of the problem is obtained by means of an algebraic system of nonlinear equations.

The rest of the paper is organized as follows. Section 2 introduces the BP, the GBP and the approximation of a given function. Section 3 describes the OM of fractional derivative and function approximation. Section 4 applies the fractional derivative of the GBP and the Lagrange multipliers method to obtain an approximate solution of (1.1). Section 5 is devoted to the convergence analysis and error estimation. Section 6 provides the numerical results. Section 7 outlines the key conclusions and discusses future works.

2 Bernoulli Polynomials and Generalized Bernoulli Polynomials

This section introduces the main concepts concerning the BP and GBP and derives the approximation of a given function by means of the BP and GBP.

2.1 Bernoulli Polynomials

The classical BP of degree w, \({\mathcal {B}}_{w}(\tau )\), is defined by [42,43,44]

where \({\mathcal {B}}_{i}:={\mathcal {B}}_{i}(0)\), \(i=0,1,\ldots ,w\), are rational numbers, called Bernoulli numbers, that are obtained by the expansion:

The first Bernoulli numbers are given by:

with \({\mathcal {B}}_{2i+1}=0,~i=1, 2,3,\ldots \).

The first BP can be written as:
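The displayed lists are missing from this copy, so the following is a minimal sketch for regenerating them exactly. It assumes the standard convention \({\mathcal {B}}_{1}=-\frac{1}{2}\) (generating function \(t/(e^{t}-1)\)) and the standard expansion \({\mathcal {B}}_{w}(\tau )=\sum _{i=0}^{w}\binom{w}{i}{\mathcal {B}}_{w-i}\tau ^{i}\); the function names are illustrative.

```python
from fractions import Fraction
from math import comb

def bernoulli_numbers(w):
    """Return [B_0, ..., B_w] as exact fractions (convention B_1 = -1/2)."""
    B = [Fraction(1)]
    for m in range(1, w + 1):
        # Recurrence: sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1
        s = sum(comb(m + 1, k) * B[k] for k in range(m))
        B.append(-s / (m + 1))
    return B

def bernoulli_poly_coeffs(w):
    """Coefficients [c_0, ..., c_w] with B_w(tau) = sum_i c_i * tau**i."""
    B = bernoulli_numbers(w)
    return [comb(w, i) * B[w - i] for i in range(w + 1)]

def bernoulli_poly(w, tau):
    """Evaluate the classical Bernoulli polynomial B_w at tau."""
    return sum(c * tau ** i for i, c in enumerate(bernoulli_poly_coeffs(w)))
```

Calling `bernoulli_poly_coeffs(2)` returns the coefficients of \(\tau ^{2}-\tau +\frac{1}{6}\) in increasing powers, and `bernoulli_poly(w, 0)` returns the Bernoulli number \({\mathcal {B}}_{w}\).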

Using the above polynomial basis functions, we can expand any function f(x, t) as follows

where

and

so that

2.2 Generalized Bernoulli Polynomials

The GBP, \({\mathscr {B}}_{m}(t)\), are constructed by change of variable \(t^{i}\) to \(t^{i+\varrho _{i}}\), \((i+\varrho _{i} > 0)\), on the BP and are defined by

where the symbols \(\varrho _{i}\) correspond to the control parameters. In particular, if \(\varrho _{i}=0\) then the GBP coincide with the classical BP.
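As an illustration of this construction, the worked case \(m=2\) is sketched below. It assumes that the substitution \(t^{i}\rightarrow t^{i+\varrho _{i}}\) is applied to every monomial of the classical expansion, including the constant term; the paper's own display is not reproduced in this copy, so the treatment of the \(i=0\) term in particular is an assumption.

```latex
% Assumed general form (substitution applied term by term to the classical BP)
\mathscr{B}_{m}(t)=\sum_{i=0}^{m}\binom{m}{i}\,\mathcal{B}_{m-i}\,t^{\,i+\varrho_{i}}

% Worked case m = 2, using B_0 = 1, B_1 = -1/2, B_2 = 1/6
\mathscr{B}_{2}(t)=\tfrac{1}{6}\,t^{\varrho_{0}}-t^{\,1+\varrho_{1}}+t^{\,2+\varrho_{2}}

% With all control parameters set to zero, the classical BP is recovered
\varrho_{0}=\varrho_{1}=\varrho_{2}=0
\;\Longrightarrow\;
\mathscr{B}_{2}(t)=t^{2}-t+\tfrac{1}{6}=\mathcal{B}_{2}(t)
```

The extra exponents \(\varrho _{i}\) are free parameters, which is why the later optimization treats them as unknowns alongside the expansion coefficients.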

The expansions of given functions \(\mu (x,t)\) and y(x, t) in terms of the GBP can be represented in the following matrix form

where

where \(k_{i}\), \(l_{j}\), \(r_{i}\) and \(s_{j}\) are the control parameters.

3 The Operational Matrices and Function Approximation

In this section, we derive the OM for the GBP.

The fractional derivative of order \(\eta \) in the Caputo type can be represented by

where \({\mathscr {D}}^{(\eta )}_{t}\) denotes the \((m_{2}+1)\times (m_{2}+1)\) OM of fractional derivative defined by:

The first and second order derivatives of \(\Theta _{m_{1}}(x)\) are given by:

where \({\mathscr {D}}^{(1)}_{x}\) and \({\mathscr {D}}^{(2)}_{x}\) denote the \((m_{1}+1)\times (m_{1}+1)\) OM of derivatives, respectively, so that we can write:

3.1 Function Approximation

Let \({\mathbb {X}}=L^{2}([0,1]\times [0,1])\) and \({\mathbb {Y}}=\left\langle x^{k_{i}}t^{l_{j}};\,\ 0\le i\le m_{1},\,\ 0\le j\le m_2\right\rangle \). Then, \({\mathbb {Y}}\) is a finite dimensional vector subspace of \({\mathbb {X}}\,\left( \dim {\mathbb {Y}} \le (m_1+1)(m_2+1)<\infty \right) \) and each \({\tilde{\mu }}={\tilde{\mu }}(x,t)\in {\mathbb {X}}\) has a unique best approximation \(\mu _0=\mu _0(x,t)\in {\mathbb {Y}}\), given by:

For more details, see Theorem 6.1-1 of [54]. Since \(\mu _0\in {\mathbb {Y}}\) and \({\mathbb {Y}}\) is a finite dimensional vector subspace of \({\mathbb {X}}\), by an elementary argument in linear algebra, there exist unique coefficients \(a_{ij} \in {\mathbb {R}}\) such that the dependent variable \(\mu _0(x,t)\) may be expanded in terms of the GBP as

where \({\mathcal {F}}_{m_{1}}(x)\) and \({\mathcal {G}}_{m_{2}}(t)\) are defined in Eq. (2.11).

4 Explanation of the Proposed Method

This section describes the implementation details of the GBP and OM of derivatives for the nonlinear 2-dim fractional OCP in Eqs. (1.1)–(1.3). Firstly, we approximate the state and control variables \(\mu (x,t)\) and y(x, t) by the GBP as

where \({\mathcal {A}}_{(m_{1}+1)\times (m_{2}+1)}\) and \({\mathcal {B}}_{(n_{1}+1)\times (n_{2}+1)}\) are unknown matrices to be found, and the vectors \({\mathcal {F}}_{m_{1}}(x)\), \({\mathcal {G}}_{m_{2}}(t)\), \({\mathcal {H}}_{n_{1}}(x)\) and \({\mathcal {Z}}_{n_{2}}(t)\) are formulated in (2.11). Then, we apply the OM of derivatives for \(\mu (x,t)\) in the following form:

The functions \(e_{0}(x)\) and \(e_{1}(t)\) are expressed by utilizing the GBP as follows

where \({\mathscr {E}}_{0}=[e_0^0\,\,e_1^0\,\,\cdots \,\,e_{m_{1}}^0]^T\) and \({\mathscr {E}}_{1}=[e_0^1\,\,e_1^1\,\,\cdots \,\,e_{m_{2}}^1]\) are unknown vectors to be found.

In view of relations (4.1), (4.3) and (1.3), we have

Substituting Eq. (4.1) into Eq. (1.1), the objective function can be expressed as follows

where \({\mathcal {K}}\), \({\mathcal {L}}\), \({\mathcal {R}}\) and \({\mathcal {S}}\) are unknown control vectors that can be represented as

The double integral in Eq. (4.5) can be approximately calculated by using an M-point Gauss–Legendre quadrature rule [55] with the nodal points \(\rho _{i}\) shifted on the unit interval and corresponding weights \(\varpi _{i}\). Therefore, we have
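As a minimal sketch of this quadrature step, the Python code below builds the M-point Gauss–Legendre nodes \(\rho _{i}\) and weights \(\varpi _{i}\) shifted to [0, 1] and applies them as a tensor-product rule, \(\int _{0}^{1}\!\int _{0}^{1}g(x,t)\,dx\,dt\approx \sum _{i=1}^{M}\sum _{j=1}^{M}\varpi _{i}\varpi _{j}\,g(\rho _{i},\rho _{j})\). The integrand `g` stands in for the integrand of the objective function, and the function names are illustrative. The paper's computations were carried out in Maple, so this is not the authors' implementation.

```python
import math

def legendre_pair(M, z):
    """Return (P_M(z), P_M'(z)) using the three-term recurrence, for |z| < 1."""
    p_prev, p = 1.0, z
    for n in range(2, M + 1):
        p_prev, p = p, ((2 * n - 1) * z * p - (n - 1) * p_prev) / n
    dp = M * (z * p - p_prev) / (z * z - 1.0)
    return p, dp

def gauss_legendre_unit(M):
    """Nodes rho_i and weights varpi_i of the M-point rule shifted to [0, 1]."""
    nodes, weights = [], []
    for k in range(1, M + 1):
        z = math.cos(math.pi * (k - 0.25) / (M + 0.5))  # initial guess for a root
        for _ in range(50):  # Newton iteration on P_M(z) = 0
            p, dp = legendre_pair(M, z)
            step = p / dp
            z -= step
            if abs(step) < 1e-15:
                break
        _, dp = legendre_pair(M, z)
        w = 2.0 / ((1.0 - z * z) * dp * dp)  # weight on [-1, 1]
        nodes.append(0.5 * (z + 1.0))        # map [-1, 1] -> [0, 1]
        weights.append(0.5 * w)              # Jacobian of the shift
    return nodes, weights

def integrate_unit_square(g, M):
    """Tensor-product M-point Gauss-Legendre approximation over [0,1] x [0,1]."""
    rho, varpi = gauss_legendre_unit(M)
    return sum(varpi[i] * varpi[j] * g(rho[i], rho[j])
               for i in range(M) for j in range(M))
```

The rule is exact for polynomials of degree up to \(2M-1\) in each variable, so for example `integrate_unit_square(lambda x, t: x**2 * t**3, 5)` reproduces \(1/12\) up to rounding.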

Furthermore, substituting Eqs. (4.1) and (4.2) into Eq. (1.2) yields

Taking the collocation points \((x_{i},t_{j})=(\frac{i}{{\hat{m}}},\frac{j}{{\hat{m}}})\) for \(i=1,2,\ldots , {\hat{m}},\,\,\,j=1,2,\ldots , {\hat{m}}\), where \(m=\min (m_{1},m_{2})\), \(n=\min (n_{1},n_{2})\) and \({\hat{m}}=\min (m,n),\) into Eq. (4.8), we obtain the algebraic equations

From Eq. (4.4) we obtain the following algebraic equations

Let

where

and

Recall that \(\Lambda \) is the vector of Lagrange multipliers. The necessary and sufficient conditions for optimality are obtained as follows [56, 57]

By solving the above system and computing \({\mathcal {A}}\), \({\mathcal {B}}\), \({\mathcal {K}}\), \({\mathcal {L}}\), \({\mathcal {R}}\) and \({\mathcal {S}}\), the approximate solutions \(\mu (x,t)\) and y(x, t) are obtained respectively from Eq. (4.1).
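The constrained-extremum step can be illustrated on a toy problem. The sketch below is not the paper's system, which is nonlinear and was solved in Maple. It minimizes the hypothetical objective \(J(a,b)=a^{2}+b^{2}\) subject to the single constraint \(a+b-1=0\). Forming \(J^{*}=J+\lambda (a+b-1)\) and setting its partial derivatives with respect to a, b and \(\lambda \) to zero gives a linear system, solved here exactly.

```python
from fractions import Fraction

def solve_linear(A, rhs):
    """Solve A z = rhs by Gauss-Jordan elimination with exact fractions.

    Assumes A is square and nonsingular (no singularity handling in this sketch).
    """
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(A, rhs)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        M[col] = [v / M[col][col] for v in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]

# Unknowns z = (a, b, lam). Rows: dJ*/da = 0, dJ*/db = 0, dJ*/dlam = 0.
stationarity_matrix = [[2, 0, 1],   # 2a + lam = 0
                       [0, 2, 1],   # 2b + lam = 0
                       [1, 1, 0]]   # a + b = 1
stationarity_rhs = [0, 0, 1]
a, b, lam = solve_linear(stationarity_matrix, stationarity_rhs)
```

The solution is \(a=b=\frac{1}{2}\) with \(\lambda =-1\). In the method of Sect. 4 the unknowns are instead the entries of \({\mathcal {A}}\), \({\mathcal {B}}\), the control parameters and \(\Lambda \), and the stationarity system is nonlinear, so an iterative nonlinear solver would take the place of `solve_linear`.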

5 Convergence Analysis and Error Estimate of the Proposed Method

In this section, we investigate the convergence of the proposed method. We first prove the following convergence theorem for approximating an arbitrary continuous function.

Theorem 1

Let \(f:[0,1]\times [0,1]\rightarrow {\mathbb {R}}\) be a continuous function. Then, for every \(x,t\in [0,1]\) and \(\epsilon >0\), there exists a GBP, \(\mu (x,t)\), such that

Proof

Let \(\epsilon >0\) be arbitrarily chosen. In view of Weierstrass theorem [54], there exists a polynomial \(P_{m_1,m_2}(x,t)=\sum ^{m_1}_{i=0}\sum ^{m_1}_{j=0}\sum ^{m_2}_{q=0}\sum ^{m_2}_{p=0}b_{j,i}e_{q,p}x^jt^p\), with \(x,t\in [0,1]\) and \(b_{j,i},e_{q,p}\in {\mathbb {R}}\) such that

We construct a GBP, \(\mu (x,t)\), as follows:

If \(x=0\) or \(t=0\), then by setting \(k_0=0\), \(l_0=0\) and \(\mu (x,t)=\sum ^{m_1}_{i=0}\sum ^{m_1}_{j=0}\sum ^{m_2}_{q=0}\sum ^{m_2}_{p=0}b_{j,i}e_{q,p}\), we get the desired conclusion. Assume now that \(x\ne 0\) and \(t\ne 0\). Letting

and

for all \(i=0,1,\ldots ,m_1\), \(j=0,1,2,\ldots ,m_1\), \(q=0,1,\ldots ,m_2\) and \(p=0,1,\ldots ,m_2\), we can find two sequences \(\{k_{i,j,q,n}\}_{n\in {\mathbb {N}}}\) and \(\{l_{q,p,n}\}_{n\in {\mathbb {N}}}\) of real numbers such that \(k_{i,j,q,n}\rightarrow \frac{-\ln (|c_{j,i}a_{i,q}|)}{\ln (x)}\) and \(l_{q,p,n}\rightarrow \frac{-\ln (|d_{q,p}|)}{\ln (t)}\), where \(\beta _{j-i}\) are Bernoulli numbers. This implies that \(x^{k_{i,j,q,n}}\rightarrow \frac{1}{|c_{j,i}a_{i,q}|}\) and \(t^{l_{q,p,n}}\rightarrow \frac{1}{|d_{q,p}|}\). Hence, for every \(\epsilon >0\), there exist \(N_{0,j},N_{1,j},\ldots ,N_{m_1,j}\) and \(N_{0,p},N_{1,p},\ldots ,N_{m_2,p}\) in \({\mathbb {N}}\), such that, for any \(n\ge N:=\max \{N_{i,j},N_{q,p}:i,j=0,1,\ldots , m_1,~q,p=0,1,\ldots ,m_2\}\), for all \(i,j=0,1,\ldots ,m_1\) and \(q,p=0,1,\ldots ,m_2\), we deduce that

By setting

we conclude that

This implies that

completing the proof. \(\square \)

We show below (Theorem 2) that, as the numbers of GBP basis functions \(m_1\) and \(m_2\) in (2.9) increase, the approximate optimum value in (4.5) tends to the exact value. We apply the fractional derivative OM to compute the fractional derivatives. Therefore, the error of the OM is defined by the following expression:

Let us discuss the convergence of the method for the variable t and the vector \({\mathcal {D}}\Xi _{m_2}\). A similar argument can be applied for the case of \({\mathcal {Q}}{\Omega }_{n_2}\) and, therefore, the details are omitted. If \(0\le j\le m_2\), then we obtain

This shows that \(^C_0D^{\eta }_{t}(\xi _j)\) is continuously differentiable. Employing (3.1), (3.3) and Theorem 1, for \(j=0,1,\dots , m_2\), we conclude that

Let \({GBP}_{m_2}([0,1])\) be the space of all GBP functions of degree less than or equal to \(m_1m_2\) on the interval [0, 1]. The following lemma shows that, for \(m_1,m_2\in \mathbb {N}\), as \(m_1\) increases, the two sides of (3.1), that is, the functional and the OM forms of the fractional Caputo derivative, which represent two operators on the space \({GBP}_{m_2}([0,1])\), get close to each other with respect to the operator norm.

Lemma 1

Let \(T_{m_2}\) and \(S_{m_1,m_2}\) be two linear operators defined by

and

Then, \(T_{m_2}\) and \(S_{m_1,m_2}\) are bounded and

where \(c_j\in \mathbb {R}\), \(j=0,1,\ldots ,m_2\).

Proof

By the definition of \(T_{m_2}\), we deduce that

If \(\Vert \sum _{j=0}^{m_2}c_j\xi _j \Vert _{\infty }\le 1\), then we obtain

Since \(m_1\) and \(m_2\) are fixed numbers and the Caputo derivative is a linear operator defined on the finite dimensional normed space \({GBP}_{m_2}([0,1])\), it is a bounded linear operator (for more details, see [54]) and, therefore, there exists a constant \(L_{{m_2,\eta }}\) such that

This implies that

This proves that \(T_{m_2}\) is a bounded linear operator. On the other hand, in view of (1.1), we conclude that

This entails

Now, if we suppose that \(\epsilon >0\), then we have

We may assume that \(|c_j|\le 1\). In view of (1.1) we can select \(m_1\) large enough such that

from which we conclude that

This completes the proof. \(\square \)

Let \(\Delta =[0,1]\times [0,1]\) and \((C^2(\Delta ),\Vert \cdot \Vert )\) be the normed space of all twice continuously differentiable functions on \(\Delta \), where \(\Vert \cdot \Vert \) is defined by

Now we prove the following lemma that plays an important role in the convergence analysis.

Lemma 2

Let \(\Delta =[0,1]\times [0,1]\). Then, the functional \(J:C^2(\Delta )\rightarrow \mathbb {R}\) defined by (1.1) is uniformly continuous on the space \((C^2(\Delta ),\Vert \cdot \Vert )\).

Proof

In view of the definition of Caputo derivative, we obtain

and, hence, we can write:

Let \(\epsilon >0\), \(\mu (x,t)\in C^2(\Delta )\) and \(\delta >0\) be arbitrarily chosen. Then, there exists \(h(x,t)\in C^2(\Delta )\) such that

This, together with (1.1)–(1.4), implies that

By the assumption on the nonlinear fractional dynamical system (1.2), \({\mathcal {S}}(x,t,\mu ,\mu _x,\mu _{xx},y)\) is a continuous function. Thus, for sufficiently small values of \(\delta >0\) with \(\Vert \mu -h\Vert <\delta \) we conclude that \(\Vert {\mathcal {Q}}\left( x,t,\mu ,y\right) -{\mathcal {Q}}\left( x,t,h,y\right) \Vert _{\infty }<\epsilon \) and

This ensures that

\(\square \)

Theorem 2

Let \(\sigma \) be the optimum of the functional J on \(C^2(\Delta )\). If \({\sigma }_{m_1,m_2}\) is the optimum of J on \(C^2(\Delta )\cap GBP_{m_1,m_2}([0,1])\), then

Proof

Let \(\epsilon >0\) be fixed. In view of the definition of J, there exists \(\mu _0\in C^2(\Delta )\) such that

Using Lemmas 1 and 2, and the continuity of J, for \(h(x,t)\in C^2(\Delta )\) with \(\Vert h-\mu _0\Vert <\delta \), we conclude that

Using Theorem 1, for \(m_1\) and \(m_2\) large enough, we can find \(\nu _{m_1,m_2}\in C^2(\Delta )\cap {GBP}_{m_1,m_2}([0,1])\) such that

Setting \(\sigma _{m_1,m_2}:=J[\nu _{m_1,m_2}]\), we obtain

If \(\epsilon \rightarrow 0\), then we arrive at

This completes the proof. \(\square \)

Now, we investigate the error estimate of the GBP expansion in two dimensions by means of the following theorem. We first mention the polynomial interpolation formula from [58].

Interpolation formula 1. Let P(x, t) be any polynomial in the two variables x and t. Then, we have

The remainder formula can be derived as follows. For any smooth function f, there exist values \(\zeta ,\zeta ',\gamma \) and \(\gamma '\) such that

where \(x_k\), \(k=0,1,2,\ldots , m_1\) and \(t_s\), \(s=0,1,2,\ldots , m_2\), are the roots of the \((m_1+1)\)-degree and \((m_2+1)\)-degree GBP, respectively.

Theorem 3

Let \(\mathbb {X}=L^2([0,1]\times [0,1])\) and \(\mathbb {Y}=\langle x^{k_i}t^{l_j}:0\le i\le m_1,0\le j\le m_2\rangle \). Suppose that \(\mu _0=\mu _0(x,t)\in \mathbb {Y}\) is the unique best approximation of \({\tilde{\mu }}={\tilde{\mu }}(x,t)\in \mathbb {X}\). Let \({\tilde{\mu }}:[0,1]\times [0,1]\rightarrow {\mathbb {R}}\) be a continuous function. Then, we have

Proof

We first notice that if \({\hat{\mu }}={\hat{\mu }}(x,t)\) is the interpolation polynomial for \({\tilde{\mu }}\) at the points \((x^i,t^j)\) and \((x^{i+k_i},t^{j+l_j})\), where \(x^i\), \(t^j\), \(x^{i+k_i}\) and \(t^{j+l_j}\) are defined in (2.16) and (2.17), respectively, then we get from the above inequality that

where \(\zeta ,\zeta '\in [0,1]\) and \(\gamma ,\gamma '\in [0,1]\). This implies that

Since \([0,1]\times [0,1]\) is a compact set and \({\tilde{\mu }}\) is continuous on \([0,1]\times [0,1]\), we have

Now, knowing that \((x^i,t^j),(x^{i+k_i},t^{j+l_j})\in [0,1]\times [0,1]\), we are led to

This completes the proof. \(\square \)

6 Numerical Examples

In this section, two test problems are solved with the method presented in the previous sections. To confirm the accuracy and efficiency of the method, the approximations are compared with the exact solutions. All numerical calculations were performed using Maple 17 with 30-digit precision. Also, a 30-point Gauss–Legendre quadrature rule is employed for the numerical integration. The absolute errors for the state variable (\(\varepsilon _{\mu }(x_{i},t_{i})\)) and the control variable (\(\varepsilon _y(x_{i},t_{i})\)) at various points \((x_{i},t_{i})\in [0,1]\times [0,1]\) are obtained as

and

The convergence order of the proposed scheme is computed as follows

where \(\varepsilon _{1}\) and \(\varepsilon _{2}\) are the first and the second values of the absolute error, respectively. For more details on the order of convergence, we refer the reader to [59, 60].

Example 1

Consider the objective function

subject to the nonlinear fractional dynamical system

and the Goursat–Darboux conditions

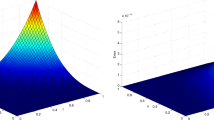

The analytical optimal solution of this example is \(\left<\mu (x,t),y(x,t)\right>=\left<x^{2.5}\,t^{2.1},x^{1.8}\,t^{2.3}\right>\). The method introduced in Sect. 4 is used for solving this example using the GBP and BP with some values of \(m_{1}\), \(m_{2}\), \(n_{1}\), \(n_{2}\) and \(\eta \). The approximate values of the objective function \({\mathcal {J}}\) are summarized in Tables 1 and 2, respectively. The resulting values of the absolute errors for the state and control variables and the value of \(\Xi \) for the case 5 with \(\eta =0.50\) are summarized in Table 3. The approximate solution and the absolute error for the state and control variables for the case 5 with \(\eta =0.50\) are shown in Figs. 1 and 2, respectively. The results depicted in Tables 1 and 2 imply that the proposed method is more accurate than the method based on the BP. The results confirm the presented method is highly efficient when solving this problem. Moreover, the results show that applying more terms of the GBP provides numerical results with high accuracy.

Fig. 1 The approximate solution \(\mu (x,t)\) (left side) and the absolute error function \(\varepsilon _{\mu }\) (right side) for case 5 (\(m_{1}=m_{2}=n_{1}=n_{2}=5\) and \(\eta =0.50\)) in Example 1

Fig. 2 The approximate solution y(x, t) (left side) and the absolute error function \(\varepsilon _y\) (right side) for case 5 (\(m_{1}=m_{2}=n_{1}=n_{2}=5\) and \(\eta =0.50\)) in Example 1

Example 2

Consider the objective function

subject to the nonlinear fractional dynamical system

and the Goursat–Darboux conditions

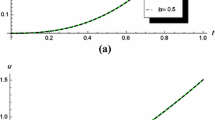

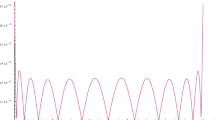

The analytical optimal solution of this example is \(\left<\mu (x,t),y(x,t)\right>=\left<t^{4}\sin (x),t^{3}\cos (x)\right>\). The presented and the standard BP methods with some values of \(m_{1}\), \(m_{2}\), \(n_{1}\), \(n_{2}\) and \(\eta \) are used for solving this example. The approximate values of the objective function \({\mathcal {J}}\) are summarized in Tables 4 and 5, respectively. The resulting values of the absolute error for the state and control variables and the value of \(\Xi \) for the case 5 with \(\eta =0.80\) are summarized in Table 6. The approximate solution and the absolute error for the state and control variables for the case 5 with \(\eta =0.80\) are shown in Figs. 3 and 4, respectively. The results confirm that the proposed method is convergent and performs very accurately for Problem (6.5). The results listed in Tables 4 and 5 imply that the method using the GBP is more accurate than the one based on BP. Moreover, from Tables 4 and 6 and also Figs. 3 and 4, we can conclude that our numerical solutions are in close agreement with the exact solution.

Fig. 3 The approximate solution \(\mu (x,t)\) (left side) and the absolute error function \(\varepsilon _{\mu }\) (right side) for case 5 (\(m_{1}=n_{1}=7\), \(m_{2}=n_{2}=5\) and \(\eta =0.80\)) in Example 2

Fig. 4 The approximate solution y(x, t) (left side) and the absolute error function \(\varepsilon _y\) (right side) for case 5 (\(m_{1}=n_{1}=7\), \(m_{2}=n_{2}=5\) and \(\eta =0.80\)) in Example 2

7 Conclusion

In this work, an optimization algorithm based on the OM of derivatives for GBP together with the Gauss–Legendre quadrature rule is used to solve nonlinear 2-dim fractional optimal control problems. The derived OM, the Gauss–Legendre quadrature rule and the Lagrange multipliers method reduce the problem to a system of nonlinear algebraic equations. The proposed method provides very accurate solutions even for a small number of basis functions. The error analysis of the method shows that the proposed technique is an effective approach to solve the problem under study. The method can also be extended to higher order nonlinear PDEs, which is a topic of our future study.

References

Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications, vol. 198. Academic Press, Cambridge (1998)

Samko, S.G., Kilbas, A.A., Marichev, O.I.: Fractional Integrals and Derivatives: Theory and Applications. Gordon and Breach, Langhorne (1993)

Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

Bhrawy, A.H., Alofi, A.S.: The operational matrix of fractional integration for shifted Chebyshev polynomials. Appl. Math. Lett. 26(1), 25–31 (2013)

Bhrawy, A.H., Tharwat, M.M., Yildirim, A.: A new formula for fractional integrals of Chebyshev polynomials: Application for solving multi-term fractional differential equations. Appl. Math. Model. 37(6), 4245–4252 (2013)

Fu, Z.J., Reutskiy, S., Sun, H.G., Ma, J., Ahmad Khan, M.: A robust kernel-based solver for variable-order time fractional PDEs under 2D/3D irregular domains. Appl. Math. Lett. 94, 105–111 (2019)

Chen, R., Liu, F., Anh, V.: Numerical methods and analysis for a multi-term time-space variable-order fractional advection-diffusion equations and application. J. Comput. Appl. Math. 352, 437–452 (2019)

Hajipour, M., Jajarmi, A., Baleanu, D., Sun, H.G.: On an accurate discretization of a variable-order fractional reaction-diffusion equation. Commun. Nonl. Sci. Num. Sim. 69, 119–133 (2019)

Hassani, H., Tenreiro Machado, J.A., Avazzadeh, Z.: An effective numerical method for solving nonlinear variable-order fractional functional boundary value problems through optimization technique. Nonlinear Dyn. 97(4), 2041–2054 (2019)

Meng, R., Yin, D., Drapaca, C.S.: Variable-order fractional description of compression deformation of amorphous glassy polymers. Comput. Mech. 64(1), 163–171 (2019)

Malesz, W., Macias, M., Sierociuk, D.: Analytical solution of fractional variable order differential equations. J. Comput. Appl. Math. 348, 214–236 (2019)

Liu, X.T., Su, H.G., Zhang, Y., Fu, Z.: A scale-dependent finite difference approximation for time fractional differential equation. Comput. Mech. 63(3), 429–442 (2019)

Zhao, T., Maob, Z., Karniadakis, G.E.: Multi-domain spectral collocation method for variable-order nonlinear fractional differential equations. Comput. Method. Appl. M. 348, 377–395 (2019)

Babaei, A., Moghaddam, B.P., Banihashemi, S., Machado, J.A.T.: Numerical solution of variable-order fractional integro-partial differential equations via Sinc collocation method based on single and double exponential transformations. Commun. Nonl. Sci. Num. Sim. 82, 104985 (2020)

Heydari, M.H., Avazzadeh, Z., Yang, Y.: A computational method for solving variable-order fractional nonlinear diffusion-wave equation. Appl. Math. Comput. 352, 235–248 (2019)

Hassani, H., Avazzadeh, Z., Tenreiro Machado, J.A.: Numerical approach for solving variable-order space-time fractional telegraph equation using transcendental Bernstein series. Eng. Comput. (2019). https://doi.org/10.1007/s00366-019-00736-x

Hosseininia, M., Heydari, M.H., Maalek Ghaini, F.M., Avazzadeh, Z.: A wavelet method to solve nonlinear variable-order time fractional 2D Klein-Gordon equation. Comput. Math. Appl 78(12), 3713–3730 (2019)

Bhrawy, A.H., Zaky, M.A.: Numerical algorithm for the variable-order Caputo fractional functional differential equation. Nonlinear Dyn. 85(3), 1815–1823 (2016)

Olivie, A., Pouchol, C.: Combination of direct methods and homotopy in numerical optimal control: application to the optimization of chemotherapy in cancer. J. Optim. Theory Appl. 181(2), 479–503 (2019)

Nemati, A., Mamehrashi, K.: The use of the Ritz method and Laplace transform for solving 2d fractional order optimal control problems described by the Roesser model. Asian J. Control. 21(3), 1189–1201 (2019)

Rosa, S., Torres, D.F.M.: Optimal control of a fractional order epidemic model with application to human respiratory syncytial virus infection. Chaos Soliton. Fract. 117, 142–149 (2018)

Heydari, M.H.: A direct method based on the Chebyshev polynomials for a new class of nonlinear variable-order fractional 2D optimal control problems. J. Frankl. I. 365(15), 8216–8236 (2019)

Mohammadi, F., Hassani, H.: Numerical solution of two-dimensional variable-order fractional optimal control problem by generalized polynomial basis. J. Optim. Theory Appl. 180(2), 536–555 (2019)

Li, S., Zhou, Z.: Fractional spectral collocation method for optimal control problem governed by space fractional diffusion equation. Appl. Math. Comput. 350, 331–347 (2019)

Salati, A.B., Shamsi, M., Torres, D.F.M.: Direct transcription methods based on fractional integral approximation formulas for solving nonlinear fractional optimal control problems. Commun. Nonlinear Sci. Numer. Simul. 67, 334–350 (2019)

Zhang, L., Zhou, Z.: Spectral Galerkin approximation of optimal control problem governed by Riesz fractional differential equation. Appl. Numer. Math. 143, 247–262 (2019)

Zaky, M.A., Tenreiro Machado, J.A.: On the formulation and numerical simulation of distributed-order fractional optimal control problems. Commun. Nonlinear Sci. Numer. Simul 52, 177–189 (2017)

Nemati, S., Lima, P.M., Torres, D.F.M.: A numerical approach for solving fractional optimal control problems using modified hat functions. Commun. Nonlinear Sci. Numer. Simul. (2019). https://doi.org/10.1016/j.cnsns.2019.104849

Heydari, M.H.: A new direct method based on the Chebyshev cardinal functions for variable-order fractional optimal control problems. J. Frankl. I. 355(12), 4970–4995 (2018)

Hassani, H., Tenreiro Machado, J.A., Naraghirad, E.: Generalized shifted Chebyshev polynomials for fractional optimal control problems. Commun. Nonlinear Sci. Numer. Simul 75, 50–61 (2019)

Hosseinpour, S., Nazemi, A., Tohidi, E.: Müntz-Legendre spectral collocation method for solving delay fractional optimal control problems. J. Comput. Appl. Math. 351, 344–363 (2019)

Zhou, Z., Tan, Z.: Finite Element Approximation of Optimal Control Problem Governed by Space Fractional Equation. J. Sci. Comput. 78(3), 1840–1861 (2019)

Lotfi, A.: Epsilon penalty method combined with an extension of the Ritz method for solving a class of fractional optimal control problems with mixed inequality constraints. Appl. Numer. Math. 135, 497–509 (2019)

Tang, X., Shi, Y., Wang, L.L.: A new framework for solving fractional optimal control problems using fractional pseudospectral methods. Automatica 78, 333–340 (2017)

Tohidi, E., Saberi Nik, H.: A Bessel collocation method for solving fractional optimal control problems. Appl. Math. Model. 39(2), 455–465 (2015)

Rahimkhani, P., Ordokhani, Y.: Numerical solution a class of 2D fractional optimal control problems by using 2D Müntz-Legendre wavelets. Optim. Contr. Appl. Met. 39(6), 1916–1934 (2018)

Heydari, M.H., Hooshmandasl, M.R., Maalek Ghaini, F.M., Cattani, C.: Wavelets method for solving fractional optimal control problems. Appl. Math. Comput. 286, 139–154 (2016)

Hassani, H., Avazzadeh, Z.: Transcendental Bernstein series for solving nonlinear variable order fractional optimal control problems. Appl. Math. Comput. (2019). https://doi.org/10.1016/j.amc.2019.124563

Hassani, H., Avazzadeh, Z., Tenreiro Machado, J.A.: Solving two-dimensional variable-order fractional optimal control problems with transcendental Bernstein series. J. Comput. Nonlin. Dyn. 14(6), 061001 (2019)

Zaky, M.A.: A Legendre collocation method for distributed-order fractional optimal control problems. Nonlinear Dyn. 91, 2667–2681 (2018)

Keshavarz, E., Ordokhani, Y., Razzaghi, M.: A numerical solution for fractional optimal control problems via Bernoulli polynomials. J. Vib. Control. 22(18), 3889–3903 (2015)

Zeghdane, R.: Numerical solution of stochastic integral equations by using Bernoulli operational matrix. Math. Comput. Simulat. 165, 238–254 (2019)

Singh, S., Patel, V.K., Singh, V.K., Tohidi, E.: Application of Bernoulli matrix method for solving two-dimensional hyperbolic telegraph equations with Dirichlet boundary conditions. Comput. Math. Appl. 75(7), 2280–2294 (2018)

Ren, Q., Tian, H.: Numerical solution of the static beam problem by Bernoulli collocation method. Appl. Math. Model. 40(21–22), 8886–8897 (2016)

Loh, J.R., Phang, C.: Numerical solution of Fredholm fractional integro-differential equation with right-sided Caputo’s derivative using Bernoulli polynomials operational matrix of fractional derivative. Mediterr. J. Math. 16, 28 (2019)

Golbabai, A., Panjeh Ali Beik, S.: An efficient method based on operational matrices of Bernoulli polynomials for solving matrix differential equations. Comput. Appl. Math. 34(1), 159–175 (2015)

Napoli, A.: Solutions of linear second order initial value problems by using Bernoulli polynomials. Appl. Numer. Math. 99, 109–120 (2016)

Rahimkhani, P., Ordokhani, Y., Babolian, E.: Fractional-order Bernoulli wavelets and their applications. Appl. Math. Model. 40(17–18), 8087–8107 (2016)

Rahimkhani, P., Ordokhani, Y., Babolian, E.: Numerical solution of fractional pantograph differential equations by using generalized fractional-order Bernoulli wavelet. J. Comput. Appl. Math. 309, 493–510 (2017)

Sahu, P.K., Saha Ray, S.: A new Bernoulli wavelet method for accurate solutions of nonlinear fuzzy Hammerstein-Volterra delay integral equations. Fuzzy Set. Syst. 309, 131–144 (2017)

Bhrawy, A.H., Tohidi, E., Soleymani, F.: A new Bernoulli matrix method for solving high-order linear and nonlinear Fredholm integro-differential equations with piecewise intervals. Appl. Math. Comput. 219(2), 482–497 (2012)

Chandhini, G., Prashanthi, K.S., Antony Vijesh, V.: A radial basis function method for fractional Darboux problems. Eng. Anal. Bound. Elem. 86, 1–18 (2017)

Belbas, S.A.: Optimal control of Goursat-Darboux systems with discontinuous co-state. Appl. Math. Comput. 186, 101–116 (2007)

Kreyszig, E.: Introductory Functional Analysis with Applications. Wiley, Hoboken (1978)

Canuto, C., Hussaini, M., Quarteroni, A., Zang, T.: Spectral Methods in Fluid Dynamics. Springer-Verlag, Berlin (1988)

Bertsekas, D.P.: Constrained Optimization and Lagrange Multiplier Methods. Academic Press Inc, Cambridge (1982)

Ito, K., Kunisch, K.: Lagrange Multiplier Approach to Variational Problems and Applications, Advances in Design and Control. SIAM, Philadelphia (2008)

Gasca, M., Sauer, T.: On the history of multivariate polynomial interpolation. J. Comput. Appl. Math. 122, 23–35 (2000)

Zaky, M.A., Hendy, A.S., Macías-Díaz, J.E.: Semi-implicit Galerkin-Legendre spectral schemes for nonlinear time-space fractional diffusion-reaction equations with smooth and nonsmooth solutions. J. Sci. Comput. (2020). https://doi.org/10.1007/s10915-019-01117-8

Mathews, J.H., Fink, K.D.: Numerical Methods Using MATLAB. Pearson, Upper Saddle River, NJ (2004)

About this article

Cite this article

Hassani, H., Machado, J.A.T., Avazzadeh, Z. et al. Generalized Bernoulli Polynomials: Solving Nonlinear 2D Fractional Optimal Control Problems. J Sci Comput 83, 30 (2020). https://doi.org/10.1007/s10915-020-01213-0

DOI: https://doi.org/10.1007/s10915-020-01213-0

Keywords

- Generalized Bernoulli polynomials

- Nonlinear 2-dim fractional optimal control problems

- Nonlinear fractional dynamical systems

- Goursat–Darboux conditions

- Fractional derivative

- Coefficients and parameters