Abstract

In this paper, an optimal feedback for a free vibrating, semi-active controlled plant is derived. The problem is formulated as a constrained optimal control problem for a single-input, free vibrating bilinear system with a quadratic performance index. It is solved using Krotov’s method; to this end, a novel sequence of Krotov functions suited to the addressed problem is derived. The solution is arranged as an algorithm that requires solving the state equation and a differential Lyapunov equation in each iteration. An outline of the proof of the algorithm’s convergence is provided. Emphasis is placed on semi-active control design for stable, free vibrating plants with a single control input. It is shown that a control force derived by the proposed technique obeys the physical constraint related to the semi-active actuator force without any arbitrary signal clipping. The control efficiency is demonstrated with a numerical example.



1 Introduction

Bilinear differential equations are simple and at the same time effective nonlinear dynamic models that appear in some linearizations of nonlinear systems at a common fixed point [1], and in many practical modern control problems [2]. Despite their nonlinearity, their properties are close to those of linear systems and therefore they can be treated by several techniques and procedures from linear systems theory [3].

Optimal control of bilinear models has been addressed by several researchers. In [4], a bilinear-quadratic optimal control problem was defined for a homogeneous bilinear system with unconstrained control forces and a quadratic performance index. An iterative scheme that produces a linear control law was derived using Pontryagin’s minimum principle and the successive approximations approach.

The use of the Adomian decomposition method to solve the time-varying, unconstrained bilinear-quadratic optimal control problem was suggested in [5]. In this method, the bilinear model is represented by a convergent sequence of linear models, so that the solution of the bilinear-quadratic problem is obtained as a convergent sequence of LQR solutions. The optimal tracking problem is considered to illustrate the theory.

An optimal control law for a tracking problem related to a bilinear system and a quadratic performance index was suggested in [6]. The theoretical framework was constructed using Lagrange multipliers, and an iterative algorithm was proposed for its solution.

In [7], the unconstrained bilinear-quadratic optimal control problem is solved by constructing the Hamiltonian equations, which lead to the usual two-point boundary value problem for the state and costate. The initial costate is computed by successively solving two first-order quasi-linear partial differential equations, which are derived in the paper.

The present paper introduces a method for solving a constrained optimal control problem for a single-input bilinear system with a quadratic performance index. Emphasis is placed on semi-active control of mechanical vibrations in structures. As a first step, it is shown that a bilinear model with a single constrained control input can describe a semi-active controlled plant. Next, Krotov’s method is used to derive an algorithm for computing an optimal feedback that obeys the constraints. As described below, the main novelty of this study is the formulation of a sequence of Krotov functions that suits the addressed problem and allows Krotov’s method to be applied to it. The required computational steps are arranged as an algorithm, and outlines of the proofs of convergence and of the optimality of the solution are given. The efficiency of the suggested method is demonstrated by a numerical example.

2 Bilinear Models for Semi-Active Structural Control

The state-space model of a free vibrating linear structure equipped with a set of actuators is governed by [8]:

where \({\mathbf {M}}>0\), \({\mathbf {C}}_d\ge 0\) and \({\mathbf {K}}>0\) are \(n_z\times n_z\) mass, damping and stiffness matrices, respectively (see Notes); \(n_z\) is the number of dynamic degrees of freedom (DOF); \(n_w\) is the number of control forces applied to the plant; \({\mathbf {z}}:[0,t_f]\rightarrow \mathbb {R}^{n_z}\) is a smooth vector function that represents the DOF displacements; \({\mathbf {w}}:[0,t_f]\rightarrow \mathbb {R}^{n_w}\) is a vector function of the control forces; and \({\varvec{\varPhi }}\in \mathbb {R}^{n_z\times n_w}\) is an input matrix that describes how the force inputs affect the structure’s DOF. This model is well known and widely used in structural control problems.
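For reference, the second-order model behind this description, together with one common first-order form, can be sketched as follows. The stacking \({\mathbf {x}}=[{\mathbf {z}}^T\;\dot{{\mathbf {z}}}^T]^T\) (so that \(n=2n_z\)) and the symbols \({\mathbf {A}}\) and \({\mathbf {B}}\) are assumptions introduced here for illustration; the stacking is consistent with \({\mathbf {c}}=\begin{bmatrix}{\mathbf {0}}&{\varvec{\phi }}^T\end{bmatrix}\) used in Section 5:

$$\begin{aligned} {\mathbf {M}}\ddot{{\mathbf {z}}}(t)+{\mathbf {C}}_d\dot{{\mathbf {z}}}(t)+{\mathbf {K}}{\mathbf {z}}(t)&={\varvec{\varPhi }}{\mathbf {w}}(t)\\ \dot{{\mathbf {x}}}(t)&={\mathbf {A}}{\mathbf {x}}(t)+{\mathbf {B}}{\mathbf {w}}(t),\quad {\mathbf {A}}=\begin{bmatrix}{\mathbf {0}}&{\mathbf {I}}\\ -{\mathbf {M}}^{-1}{\mathbf {K}}&-{\mathbf {M}}^{-1}{\mathbf {C}}_d\end{bmatrix},\quad {\mathbf {B}}=\begin{bmatrix}{\mathbf {0}}\\ {\mathbf {M}}^{-1}{\varvec{\varPhi }}\end{bmatrix} \end{aligned}$$

Since \({\mathbf {M}}>0\), \({\mathbf {M}}^{-1}\) exists, so this first-order form is always available.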

A proper control law, which is used to compute \({\mathbf {w}}\), must take into account the constraints that originate in the physical implementation of the control strategy. Structural control is commonly classified into four strategies. One of them is semi-active control [9], which uses inherently dissipative controllers to improve the stability of a dynamic system. It differs from active control in that it cannot add energy to the plant, i.e., it cannot excite the plant. It also differs from passive control in that the control law can adapt to the response in real time. While semi-active controllers are restricted to system-wide mechanical energy dissipation [10], semi-active actuators are subject to a more severe restriction: they are characterized by local energy dissipation; in other words, the work done by each actuator can never be positive.
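The local-dissipation restriction can be checked pointwise: the actuator force \(w\) and the damper velocity \(\dot{z}_d\) must satisfy \(w(t)\,\dot{z}_d(t)\le 0\) at all times. A minimal Python sketch of such a check on sampled histories; the function name and the tolerance are illustrative assumptions:

```python
def is_dissipative(forces, velocities, tol=1e-12):
    """Return True if the actuator power w(t) * v(t) never exceeds tol at any sample."""
    if len(forces) != len(velocities):
        raise ValueError("forces and velocities must have the same length")
    # A positive product means the actuator would be injecting energy into the plant.
    return all(w * v <= tol for w, v in zip(forces, velocities))


print(is_dissipative([-1.0, 0.5, 0.0], [2.0, -1.0, 3.0]))  # True
print(is_dissipative([1.0], [1.0]))  # False
```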

An intuitive semi-active control law is clipped optimal control. In this approach, the controller is usually designed by a known optimal control method while the actuator constraints are ignored. Later, when the control forces are applied, the control commands are arbitrarily clipped whenever the physical constraints are violated. This approach has been used for semi-active control by many researchers (e.g., [11,12,13,14,15,16]). However, although it is simple, clipped optimal control distorts the control signal, which raises questions about the theoretical justification of the clipped signal. Here, we suggest an alternative that is based on bilinear models and is designed to minimize a prescribed performance index.
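For comparison, a minimal Python sketch of one simple clipping rule, assumed here for illustration (published clipped-optimal schemes differ in detail, e.g., in how the command is mapped to a device input): the force requested by an unconstrained design is passed through only when it opposes the actuator velocity and is set to zero otherwise.

```python
def clip_force(desired_force, velocity):
    """Keep the desired force only if it opposes the actuator velocity; otherwise command zero."""
    return desired_force if desired_force * velocity < 0.0 else 0.0


def clip_history(desired_forces, velocities):
    """Apply the clipping rule sample by sample to two equally long histories."""
    return [clip_force(f, v) for f, v in zip(desired_forces, velocities)]


print(clip_history([-2.0, 3.0, 1.0], [1.0, 1.0, -0.5]))  # [-2.0, 0.0, 1.0]
```

The second sample shows the distortion discussed above: the requested force of 3.0 is replaced by zero, which produces a jump in the applied force.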

For several types of semi-active actuators, such as magneto-rheological dampers (MRD), pneumatic actuators [17], electromagnetic friction dampers [18], and semi-active viscous dampers [8], the generated force always opposes some linear combination of the plant states [19]. This constraint can be conveniently modeled in bilinear form.

For example, a variable viscous damper [8] is a type of semi-active actuator in which the damping gain is controlled by real-time adjustment of the mounting angle between two passive viscous dampers. The produced force is governed by

$$w(t) = -u(t)\,\dot{z}_d(t)$$

where the viscous damping gain \(u:[0,t_f]\rightarrow [0,\infty [\) is a nonnegative, continuous function, and \(\dot{z}_d:[0,t_f]\rightarrow \mathbb {R}\) is the damper velocity, which can be written as a linear combination of the states, \(\dot{z}_d(t)={\mathbf {c}}{\mathbf {x}}(t)\), where \({\mathbf {c}}\in \mathbb {R}^n\) is a row vector that produces the suitable linear combination.

Another example of a bilinear control force representation is the MRD. According to Bingham’s constitutive model, the MRD force is the sum of two components [20]: a viscous damping force and a controllable yield force, \(w^y\). While the damping coefficient can be designed by linear viscous design methods [21], a control law must be found for \(w^y\). Since \(w^y\) always opposes the damper velocity, it can also be represented in the form \(w^y(t) = -u(t) \dot{z}_d(t)\), except that here \(u:[0,t_f]\rightarrow [0,\infty [\) is nonnegative but not necessarily continuous. Note that, from the physical viewpoint, this representation can be interpreted as an equivalent variable viscous damper. Hence, both the variable viscous damper force and the MRD force can be represented as a bilinear feedback:

$$w({\mathbf {x}}(t),u(t)) = -u(t)\,{\mathbf {c}}{\mathbf {x}}(t)$$

Accordingly, the state-space equation of a plant equipped with \(n_w\) semi-active actuators can be written as:

where \({\mathbf {c}}_i\) are defined according to the type of each semi-active actuator, and \((u_i)_{i=1}^{n_u}\) is a vector of control input signals. Here, \(n_w=n_u\), based on the assumption that each actuator is controlled by a single control signal.
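As an illustration, if the linear part of the plant is written in first-order form \(\dot{{\mathbf {x}}}={\mathbf {A}}{\mathbf {x}}+{\mathbf {B}}{\mathbf {w}}\), with \({\mathbf {b}}_i\) denoting the ith column of \({\mathbf {B}}\) (notation assumed here), substituting \(w_i=-u_i{\mathbf {c}}_i{\mathbf {x}}\) for each actuator gives a homogeneous bilinear system:

$$\dot{{\mathbf {x}}}(t)={\mathbf {A}}{\mathbf {x}}(t)+\sum _{i=1}^{n_u}{\mathbf {b}}_i w_i(t)=\Big ({\mathbf {A}}-\sum _{i=1}^{n_u}u_i(t)\,{\mathbf {b}}_i{\mathbf {c}}_i\Big ){\mathbf {x}}(t),\qquad u_i(t)\ge 0$$

Each \({\mathbf {b}}_i{\mathbf {c}}_i\) is an \(n\times n\) outer product, and the power delivered by actuator i, \(w_i\,{\mathbf {c}}_i{\mathbf {x}}=-u_i({\mathbf {c}}_i{\mathbf {x}})^2\), is never positive.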

It should be noted that, unlike the optimal constrained control problem defined in [19], in the bilinear semi-active representation the constraint that requires \(w({\mathbf {x}}(t),u(t))=0\) when \({\mathbf {c}}{\mathbf {x}}(t)=0\) is satisfied intrinsically, since \(-u(t)\,{\mathbf {c}}{\mathbf {x}}(t)\) vanishes whenever \({\mathbf {c}}{\mathbf {x}}(t)=0\), whatever the value of \(u(t)\).
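A minimal Python sketch of the bilinear force law \(w=-u\,{\mathbf {c}}{\mathbf {x}}\), illustrating both properties: for any admissible gain \(u\ge 0\) the force never injects power, and it vanishes whenever the damper velocity \({\mathbf {c}}{\mathbf {x}}\) vanishes, whatever the gain. The function name, the list-based vectors and the 1-DOF data are illustrative assumptions:

```python
def bilinear_force(u, c, x):
    """Return (w, v): the semi-active force w = -u * (c x) and the damper velocity v = c x."""
    if u < 0.0:
        raise ValueError("the gain u must be nonnegative")
    velocity = sum(ci * xi for ci, xi in zip(c, x))  # row vector c times state x
    return -u * velocity, velocity


# Hypothetical 1-DOF state x = [z, dz/dt] with c selecting the velocity.
w, v = bilinear_force(2.0, [0.0, 1.0], [0.3, -0.5])
print(w, v, w * v)  # 1.0 -0.5 -0.5
w0, v0 = bilinear_force(10.0, [0.0, 1.0], [0.3, 0.0])
print(w0 == 0.0)  # True
```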

3 Krotov’s Method

While first-order variational calculus and Pontryagin’s minimum principle are commonly used in optimal control and its applications, for many problems these theorems provide merely necessary conditions [22]; as a result, the computed solution is at most a candidate local optimum. Starting in the 1960s, V. F. Krotov published new results on sufficient conditions for a global optimum of optimal control problems [23]. These results are used in this paper to solve the addressed problem.

Before starting with the main derivations of this paper, the following two theorems should be introduced. They are based on the theory provided by Krotov and are written in a form which is more suitable to the addressed problem.

Let \(\mathscr {U}\) be a collection of admissible control signals and \(\mathscr {X}\) a linear space of state vector functions. The term admissible process refers to a pair \(({\mathbf {x}},{\mathbf {u}})\), where \({\mathbf {u}}\in \mathscr {U}\), \({\mathbf {x}}\in \mathscr {X}\), and both satisfy the state equation

$$\dot{{\mathbf {x}}}(t)={\mathbf {f}}({\mathbf {x}}(t),{\mathbf {u}}(t),t),\quad t\in [0,t_f],\quad {\mathbf {x}}(0)\ \text{given}$$

The notation \(\mathscr {X}(t)=\{{\mathbf {x}}(t)|{\mathbf {x}}\in \mathscr {X}\}\subset \mathbb {R}^n\) denotes the cross-section of \(\mathscr {X}\) at a given time t. For instance, \(\mathscr {X}(t_f)\) is the set of all terminal states of the processes in \(\mathscr {X}\).

A piecewise smooth function \(q:\mathbb {R}^n\times [0,t_f]\rightarrow \mathbb {R}\) is called a Krotov function [2, 23, 24]. Each q yields an equivalent formulation of the performance index, as follows.

Theorem 3.1

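Consider a performance index:

$$J({\mathbf {x}},{\mathbf {u}})=l_f({\mathbf {x}}(t_f))+\int _0^{t_f}l({\mathbf {x}}(t),{\mathbf {u}}(t),t)\,dt$$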

where \(l_f:\mathbb {R}^n\rightarrow \mathbb {R}\) and \(l:\mathbb {R}^n\times \mathbb {R}^{n_u}\times [0,t_f]\rightarrow \mathbb {R}\) are continuous.

Let q be a Krotov function. Let \(q_t\) and \(q_{\mathbf {x}}\) denote its partial derivatives. For each q there is an equivalent representation of \(J({\mathbf {x}},{\mathbf {u}})\):

where

Proof

See section 2.3 in [24]. \(\square \)
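For orientation, a short check of why such an equivalent representation exists, written for one standard minimization form of Krotov’s construction (assumed here; the displays of Eqs. (4), (5a) and (5b) may differ in notation): take \(J_{eq}(q,{\mathbf {x}},{\mathbf {u}})=q({\mathbf {x}}(0),0)+s_f({\mathbf {x}}(t_f))+\int _0^{t_f}s({\mathbf {x}}(t),{\mathbf {u}}(t),t)\,dt\) with \(s=q_t+q_{\mathbf {x}}{\mathbf {f}}+l\) and \(s_f=l_f-q(\cdot ,t_f)\). Along any admissible process, \(\frac{d}{dt}q({\mathbf {x}}(t),t)=q_t+q_{\mathbf {x}}{\mathbf {f}}\), hence

$$\begin{aligned} J_{eq}(q,{\mathbf {x}},{\mathbf {u}})&=q({\mathbf {x}}(0),0)+l_f({\mathbf {x}}(t_f))-q({\mathbf {x}}(t_f),t_f)+\big [q({\mathbf {x}}(t_f),t_f)-q({\mathbf {x}}(0),0)\big ]+\int _0^{t_f}l\,dt\\ &=l_f({\mathbf {x}}(t_f))+\int _0^{t_f}l({\mathbf {x}}(t),{\mathbf {u}}(t),t)\,dt=J({\mathbf {x}},{\mathbf {u}}) \end{aligned}$$

The chain-rule step is exactly where the state equation is used, which is why the equivalence can fail for pairs that are not admissible processes. Because \(q({\mathbf {x}}(0),0)\) is common to all processes with the given initial state, pointwise minimality of s and \(s_f\) at \(({\mathbf {x}}^*,{\mathbf {u}}^*)\) is what Theorem 3.2 exploits.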

The equivalent formulation of J leads to the sufficient condition for a global optimal admissible process, which is given in the following theorem.

Theorem 3.2

Let s and \(s_f\) (Eqs. (5a) and (5b)) be associated with some Krotov function q. Let \(({\mathbf {x}}^*,{\mathbf {u}}^*)\) be an admissible process. If:

then \(({\mathbf {x}}^*,{\mathbf {u}}^*)\) is an optimal process.

Proof

Assume that Eq. (6) holds. Thus:

By the hypothesis, for any admissible process \(({\mathbf {x}},{\mathbf {u}})\) we have

which assures that \(J_{eq}({\mathbf {x}},{\mathbf {u}})-J_{eq}({\mathbf {x}}^*,{\mathbf {u}}^*) = J({\mathbf {x}},{\mathbf {u}})-J({\mathbf {x}}^*,{\mathbf {u}}^*) \ge 0\). Therefore \(({\mathbf {x}}^*,{\mathbf {u}}^*)\) is optimal. \(\square \)

Remark 3.1

- An optimum derived by this theorem is global, since the minimization problem defined in Eq. (6) is global [23].
- Although Theorem 3.2 points the way to a global optimum, the main difficulty remains: the existence and formulation of a suitable Krotov function. A similar difficulty arises in Lyapunov’s method of stability, where a suitable Lyapunov function must be found.
- The equivalence \(J({\mathbf {x}},{\mathbf {u}}) = J_{eq}(q,{\mathbf {x}},{\mathbf {u}})\) holds if \({\mathbf {x}}\) and \({\mathbf {u}}\) satisfy the state equation (Eq. (3)); otherwise, Eq. (4) might be false.
- Since q is not unique, the equivalent representation \(J_{eq}\) and the functions s and \(s_f\) are not unique either.
- In many publications, such as [23], s is written with the opposite sign before l, which turns some of the minimization problems into maximization problems. However, there is no intrinsic difference between the two formulations.

Theorems 3.1 and 3.2 not only provide a sufficient condition for global optimality but also lay the foundation for novel algorithms for the solution of optimal control problems [23]; one of them is known as Krotov’s method. In this method, the solution is obtained sequentially rather than directly: the method yields a convergent sequence of admissible processes whose limit is \(({\mathbf {x}}^*,{\mathbf {u}}^*)\) (Theorem 3.2) [24]. Such a sequence of processes is called an optimizing sequence. The approach has been used successfully for optimal control of bilinear systems in quantum mechanics [2] and for oscillation damping [25].

The method starts from some admissible process \(({\mathbf {x}}_0,{\mathbf {u}}_0)\). An improved admissible process \(({\mathbf {x}}_1,{\mathbf {u}}_1)\) is computed as follows:

1. Formulate \(q_0\) such that \(s_0\) and \(s_{f0}\) satisfy:

$$\begin{aligned} s_0({\mathbf {x}}_0(t),{\mathbf {u}}_0(t),t)&= \max _{{\mathbf {x}}\in \mathscr {X}(t)} s_0({\mathbf {x}},{\mathbf {u}}_0(t),t) \; \forall t\in [0,t_f[ \\ s_{f0}({\mathbf {x}}_0(t_f))&= \max _{{\mathbf {x}}\in \mathscr {X}(t_f)} s_{f0}({\mathbf {x}}) \end{aligned}$$

2. Formulate a mapping \(\hat{{\mathbf {u}}}_0:\mathbb {R}^n\times [0,t_f]\rightarrow \mathbb {R}^{n_u}\) such that

$$\begin{aligned} {\hat{{\mathbf {u}}}}_0({\mathbf {x}}(t),t) = \arg \min _{{\mathbf {u}}\in \mathscr {U}(t)} s_0({\mathbf {x}}(t),{\mathbf {u}},t) \; \forall {\mathbf {x}}\in \mathscr {X}, t\in [0,t_f] \end{aligned}$$

3. Solve \({\dot{{\mathbf {x}}}}_1(t) = {\mathbf {f}}({\mathbf {x}}_1(t),\hat{{\mathbf {u}}}_0({\mathbf {x}}_1(t),t),t)\) for the given \({\mathbf {x}}(0)\), and set \({\mathbf {u}}_1(t)={\hat{{\mathbf {u}}}}_0({\mathbf {x}}_1(t),t)\) for all \(t\in [0,t_f]\).

The next process, \(({\mathbf {x}}_2,{\mathbf {u}}_2)\), is computed by repeating these steps from \(({\mathbf {x}}_1,{\mathbf {u}}_1)\), i.e., by formulating \(q_1\) and \({\hat{{\mathbf {u}}}}_1\), and so on.

As in Lyapunov’s method, the use of Krotov’s method is not straightforward. It requires the formulation of a suitable sequence of Krotov functions, \(\{q_k\}\), such that \(s_k\) and \(s_{fk}\) will satisfy the aforementioned min/max problem. If a sequence of such functions can be found, it allows the computation of a global optimum for the given optimal control problem. However, the search for such a Krotov function is a significant challenge that should be overcome in order to use this method. The difficulty is that there is no known unified approach for formulating Krotov functions and they usually differ from one optimal control problem to another. In this work, a suitable sequence of Krotov functions was found for the following constrained bilinear quadratic regulator problem.

4 Optimal Control Problem and Solution—Main Results

The constrained bilinear quadratic regulator (CBQR) problem is defined as follows.

Definition 4.1

(CBQR) Let \(\mathscr {U}=\{u:[0,t_f]\rightarrow [0,\infty [\}\) be the collection of admissible control signals, and let the state space \(\mathscr {X}\) be a linear space of continuous, piecewise smooth vector functions \({\mathbf {x}}:[0,t_f]\rightarrow \mathbb {R}^n\).

The goal is to find an optimal admissible process \(({\mathbf {x}}^*,u^*)\) that satisfies the bilinear state equation:

and minimizes the quadratic performance index:

where \({\mathbf {A}}\in \mathbb {R}^{n\times n}\); \({\mathbf {c}},{\mathbf {b}}\in \mathbb {R}^n\) where \({\mathbf {b}}\) is a column vector and \({\mathbf {c}}\) is a row vector; \(0\le {\mathbf {Q}}\in \mathbb {R}^{n\times n}\); \(r>0\).

The following theorems define the optimal control law and the sequence of Krotov functions needed to apply Krotov’s method to the CBQR problem. To distinguish between the control signal and the control law, the former is denoted by u and the latter by \({\hat{u}}\).

Theorem 4.1

Let the Krotov function be

where \({\mathbf {P}}:[0,t_f]\rightarrow \mathbb {R}^{n\times n}\) is a symmetric matrix function, smooth on \(]0,t_f[\), and let \({\mathbf {x}}\) be a given process. Then there exists a unique control law that minimizes \(s({\mathbf {x}}(t),u(t),t)\), and it is defined by:

Proof

The partial derivatives of q are:

Substituting in Eqs. (5a) and (5b) yields:

Completing the square leads to:

where \(f_2\) is independent of u. Clearly, \(s({\mathbf {x}}(t),u(t),t)\) is minimized by choosing \(u(t)=v({\mathbf {x}}(t),t)\); however, this choice is admissible only if \(v({\mathbf {x}}(t),t)\ge 0\). For \(v({\mathbf {x}}(t),t) < 0\), s is a convex quadratic in u (its leading coefficient is \(r>0\)) whose vertex lies at a negative value, so it is strictly increasing on \([0,\infty [\) and the admissible minimizer is \(u(t)=0\). This result is summarized in Eq. (8). \(\square \)
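A worked version of this step, under illustrative assumptions about the forms of q, the state equation and the cost: \(q({\mathbf {x}},t)={\mathbf {x}}^T{\mathbf {P}}(t){\mathbf {x}}\), \(\dot{{\mathbf {x}}}={\mathbf {A}}{\mathbf {x}}+{\mathbf {b}}u{\mathbf {c}}{\mathbf {x}}\) and \(l={\mathbf {x}}^T{\mathbf {Q}}{\mathbf {x}}+ru^2\). Then \(q_t={\mathbf {x}}^T\dot{{\mathbf {P}}}{\mathbf {x}}\), \(q_{\mathbf {x}}=2{\mathbf {x}}^T{\mathbf {P}}\), and, using the symmetry of \({\mathbf {P}}\),

$$\begin{aligned} s({\mathbf {x}},u,t)&={\mathbf {x}}^T\big (\dot{{\mathbf {P}}}+{\mathbf {A}}^T{\mathbf {P}}+{\mathbf {P}}{\mathbf {A}}+{\mathbf {Q}}\big ){\mathbf {x}}+2u\,({\mathbf {x}}^T{\mathbf {P}}{\mathbf {b}})({\mathbf {c}}{\mathbf {x}})+ru^2\\ &=r\big (u-v({\mathbf {x}},t)\big )^2+f_2({\mathbf {x}},t),\qquad v({\mathbf {x}},t)=-\frac{({\mathbf {x}}^T{\mathbf {P}}{\mathbf {b}})({\mathbf {c}}{\mathbf {x}})}{r},\\ f_2({\mathbf {x}},t)&={\mathbf {x}}^T\big (\dot{{\mathbf {P}}}+{\mathbf {A}}^T{\mathbf {P}}+{\mathbf {P}}{\mathbf {A}}+{\mathbf {Q}}\big ){\mathbf {x}}-r\,v({\mathbf {x}},t)^2 \end{aligned}$$

so that, over \(u\ge 0\), the minimizer is \(\hat{u}({\mathbf {x}},t)=\max \{0,v({\mathbf {x}},t)\}\).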

Theorem 4.2

Let \(({\mathbf {x}}_k,u_k)\) be a given process and let \({\mathbf {P}}_k(t)\) be the solution of:

Then, the Krotov function

satisfies

Proof

According to Eq. (9), \(s_k({\mathbf {x}}(t),u_k(t),t)\) will be

Substitution of \({\dot{{\mathbf {P}}}}_k(t)\) from Eq. (10) yields

Since \(s_k({\mathbf {x}}(t),u_k(t),t) = s_k({\mathbf {x}}_k(t),u_k(t),t)\) for every \({\mathbf {x}}(t)\in \mathscr {X}(t)\), it follows that

\(\square \)
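Under the same kind of illustrative assumptions (\(q_k={\mathbf {x}}^T{\mathbf {P}}_k{\mathbf {x}}\), \(\dot{{\mathbf {x}}}={\mathbf {A}}{\mathbf {x}}+{\mathbf {b}}u{\mathbf {c}}{\mathbf {x}}\), \(l={\mathbf {x}}^T{\mathbf {Q}}{\mathbf {x}}+ru^2\), and no terminal cost), the mechanism of the proof can be seen directly. If \({\mathbf {P}}_k\) solves the differential Lyapunov equation

$$\dot{{\mathbf {P}}}_k+({\mathbf {A}}+u_k{\mathbf {b}}{\mathbf {c}})^T{\mathbf {P}}_k+{\mathbf {P}}_k({\mathbf {A}}+u_k{\mathbf {b}}{\mathbf {c}})+{\mathbf {Q}}={\mathbf {0}},\qquad {\mathbf {P}}_k(t_f)={\mathbf {0}}$$

then

$$s_k({\mathbf {x}},u_k(t),t)={\mathbf {x}}^T\big [\dot{{\mathbf {P}}}_k+({\mathbf {A}}+u_k{\mathbf {b}}{\mathbf {c}})^T{\mathbf {P}}_k+{\mathbf {P}}_k({\mathbf {A}}+u_k{\mathbf {b}}{\mathbf {c}})+{\mathbf {Q}}\big ]{\mathbf {x}}+ru_k(t)^2=ru_k(t)^2,\qquad s_{fk}({\mathbf {x}})=-{\mathbf {x}}^T{\mathbf {P}}_k(t_f){\mathbf {x}}=0$$

so both functions are constant in \({\mathbf {x}}\), and their maxima over \({\mathbf {x}}\) are attained at \({\mathbf {x}}_k(t)\), as required in step 1 of Krotov’s method.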

The dependence of Krotov’s method on a Krotov function makes it somewhat abstract. The last two theorems turn it into a concrete solution method for the CBQR problem: Theorem 4.2 provides a suitable Krotov function (step 1 of Krotov’s method), and Theorem 4.1 defines the corresponding control law (step 2). The resulting algorithm is summarized in Fig. 1. It computes two sequences, \(\{q_k\}\) and \(\{({\mathbf {x}}_k,u_k)\}\), where the latter is an optimizing sequence. Its output is an arbitrarily close approximation of the optimal \({\mathbf {P}}\), which defines the control law (Eq. (8)). As J has an infimum, the convergence of \(\{({\mathbf {x}}_k,u_k)\}\) to the optimal process \(({\mathbf {x}}^*,u^*)\) is guaranteed. The absolute value in line 8 of the algorithm is theoretically unnecessary; without it, however, numerical errors might cause the algorithm to lose its monotonicity as it approaches the optimum.
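A minimal Python sketch of the two-pass loop, written for illustration under assumptions that are not taken verbatim from the paper: the Krotov function is \(q_k={\mathbf {x}}^T{\mathbf {P}}_k(t){\mathbf {x}}\), the plant is \(\dot{{\mathbf {x}}}={\mathbf {A}}{\mathbf {x}}+{\mathbf {b}}u{\mathbf {c}}{\mathbf {x}}\), the cost is \(\int _0^{t_f}({\mathbf {x}}^T{\mathbf {Q}}{\mathbf {x}}+ru^2)\,dt\) with \({\mathbf {P}}_k(t_f)={\mathbf {0}}\), the initial control is \(u_0=0\), and both the state equation and the differential Lyapunov equation are integrated by explicit Euler steps. The function name `cbqr_sketch` and the 1-DOF data are hypothetical, and no safeguard corresponding to the absolute value in line 8 of the algorithm is included:

```python
# Hedged sketch of a CBQR-type Krotov iteration, NOT the authors' MATLAB routine.
# Assumptions: q_k = x^T P_k x, dx/dt = A x + b u (c x), J = int_0^tf (x^T Q x + r u^2) dt,
# P_k(t_f) = 0 (no terminal cost), explicit Euler steps, u_0 = 0.


def matvec(M, x):
    """Matrix-vector product for list-based data."""
    return [sum(M[i][j] * x[j] for j in range(len(x))) for i in range(len(M))]


def lyapunov_rhs(Ac, P, Q):
    """Return Ac^T P + P Ac + Q, i.e. -dP/dt in the differential Lyapunov equation."""
    n = len(P)
    return [[sum(Ac[k][i] * P[k][j] + P[i][k] * Ac[k][j] for k in range(n)) + Q[i][j]
             for j in range(n)] for i in range(n)]


def cbqr_sketch(A, b, c, Q, r, x0, t_f, dt, n_iter):
    """Return the costs J_0..J_{n_iter} and the last control history."""
    n, N = len(x0), int(round(t_f / dt))
    bc = [[b[i] * c[j] for j in range(n)] for i in range(n)]  # outer product b c
    u, P, costs = [0.0] * N, None, []
    for _ in range(n_iter + 1):
        # Forward pass: simulate with u_0 (first pass) or with the feedback built from P_k.
        x, new_u, J = list(x0), [], 0.0
        for i in range(N):
            cx = sum(ci * xi for ci, xi in zip(c, x))  # damper velocity c x
            if P is None:
                ui = u[i]
            else:
                xPb = sum(x[j] * P[i][j][k] * b[k] for j in range(n) for k in range(n))
                ui = max(0.0, -xPb * cx / r)  # unconstrained minimizer v, projected onto u >= 0
            Qx = matvec(Q, x)
            J += dt * (sum(xj * qj for xj, qj in zip(x, Qx)) + r * ui * ui)
            Ax = matvec(A, x)
            x = [xj + dt * (axj + bj * ui * cx) for xj, axj, bj in zip(x, Ax, b)]
            new_u.append(ui)
        u = new_u
        costs.append(J)
        # Backward pass: integrate dP/dt = -(Ac^T P + P Ac + Q) from P(t_f) = 0, Ac = A + u(t) b c.
        P = [None] * (N + 1)
        P[N] = [[0.0] * n for _ in range(n)]
        for i in range(N - 1, -1, -1):
            Ac = [[A[p][q] + u[i] * bc[p][q] for q in range(n)] for p in range(n)]
            R = lyapunov_rhs(Ac, P[i + 1], Q)
            P[i] = [[P[i + 1][p][q] + dt * R[p][q] for q in range(n)] for p in range(n)]
    return costs, u


# Hypothetical 1-DOF data: unit mass and stiffness, light damping, one semi-active damper.
A = [[0.0, 1.0], [-1.0, -0.05]]
b = [0.0, -1.0]  # the damper force -u * velocity enters the velocity equation
c = [0.0, 1.0]   # c x is the damper velocity
Q = [[1.0, 0.0], [0.0, 1.0]]
costs, u = cbqr_sketch(A, b, c, Q, r=1.0, x0=[0.0, 1.0], t_f=10.0, dt=0.01, n_iter=3)
print([round(J, 3) for J in costs])
```

Each forward pass applies \(\hat{u}=\max \{0,-({\mathbf {x}}^T{\mathbf {P}}_k{\mathbf {b}})({\mathbf {c}}{\mathbf {x}})/r\}\), and each backward pass solves one Lyapunov equation, so in continuous time the costs should not increase from one iteration to the next; with a coarse time step, small nonmonotonic variations can appear, as observed in Section 5.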

5 Numerical Example

To demonstrate the efficiency of the CBQR control design, a dynamic model of a typical three-story, free vibrating reinforced concrete frame was analyzed. The control force was applied through a single variable viscous damper installed on the first floor. The model is represented as a plane frame whose dynamic scheme is presented in Fig. 1. All computations were carried out using original routines written in MATLAB.

The mass, damping, and stiffness matrices as well as the control force distribution vector are:

\({\mathbf {K}}\), \({\mathbf {M}}\) and \({\varvec{\phi }}\) were computed by suitable Ritz transformations. \({\mathbf {C}}_d\) is a Rayleigh proportional damping matrix, and \({\mathbf {c}}=\begin{bmatrix}{\mathbf {0}}&{\varvec{\phi }}^T\end{bmatrix}\). The state and control weights are:

The initial state was chosen as \({\mathbf {x}}(0)=\begin{bmatrix}0\,&0\,&0\,&0.4\,&0.4\,&0.4\end{bmatrix}^T\).
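The paper does not state how the Rayleigh coefficients were chosen. A common choice, shown here only as an assumption, enforces the same damping ratio \(\zeta \) at two circular frequencies \(\omega _1,\omega _2\), which gives \(\alpha =2\zeta \omega _1\omega _2/(\omega _1+\omega _2)\) and \(\beta =2\zeta /(\omega _1+\omega _2)\) in \({\mathbf {C}}_d=\alpha {\mathbf {M}}+\beta {\mathbf {K}}\). The sketch also builds \({\mathbf {c}}=\begin{bmatrix}{\mathbf {0}}&{\varvec{\phi }}^T\end{bmatrix}\), which assumes that the state stacks displacements before velocities; all function names and numbers are hypothetical:

```python
def rayleigh_coefficients(omega1, omega2, zeta):
    """Return (alpha, beta) giving damping ratio zeta at circular frequencies omega1 and omega2."""
    alpha = 2.0 * zeta * omega1 * omega2 / (omega1 + omega2)
    beta = 2.0 * zeta / (omega1 + omega2)
    return alpha, beta


def modal_damping_ratio(omega, alpha, beta):
    """Damping ratio that C_d = alpha*M + beta*K produces in a mode with circular frequency omega."""
    return alpha / (2.0 * omega) + beta * omega / 2.0


def rayleigh_damping(M, K, alpha, beta):
    """Assemble C_d = alpha*M + beta*K entry by entry (list-based matrices)."""
    return [[alpha * m + beta * k for m, k in zip(m_row, k_row)] for m_row, k_row in zip(M, K)]


def damper_velocity_row(phi):
    """Row vector c = [0, phi^T] for a state x = [z; dz/dt], so that c x = phi^T dz/dt."""
    return [0.0] * len(phi) + list(phi)


alpha, beta = rayleigh_coefficients(10.0, 30.0, 0.05)  # hypothetical target modes
print(alpha, beta)
print(modal_damping_ratio(10.0, alpha, beta), modal_damping_ratio(30.0, alpha, beta))
print(damper_velocity_row([1.0, 0.0, 0.0]))  # [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
```

Between the two target frequencies the resulting modal damping ratio dips below \(\zeta \), and outside them it grows, which is the usual trade-off of this choice.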

The following cases were considered:

- Case A: Uncontrolled structure.
- Case B: LQR-controlled structure.
- Case C: CBQR-controlled structure.

The LQR control weights were chosen such that the mechanical energy dissipated by the actuator would be equal in cases B and C. Ten iterations were carried out. The improvement of the performance index in each iteration is illustrated in Fig. 2. As is evident from the figure, convergence was achieved after two iterations, and the change in J is monotonic. In fact, small nonmonotonic variations were observed after the fifth iteration; however, their magnitude decreased consistently, and monotonicity was restored whenever the sample time was reduced.
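The matching criterion for the LQR weights involves the energy dissipated by the actuator, \(E=-\int _0^{t_f}w(t)\,\dot{z}_d(t)\,dt\). A minimal Python sketch of this quantity from sampled histories, assuming a uniform time step and the trapezoidal rule (the quadrature actually used is not stated); the function name is hypothetical:

```python
def dissipated_energy(forces, velocities, dt):
    """Energy removed by the actuator, E = -integral of w(t) * v(t) dt (trapezoidal rule, uniform dt)."""
    power = [w * v for w, v in zip(forces, velocities)]  # instantaneous actuator power
    if len(power) < 2:
        return 0.0  # no interval to integrate over
    integral = dt * (sum(power) - 0.5 * (power[0] + power[-1]))
    return -integral


# A viscous force w = -2 v with constant unit velocity over 1 s dissipates 2 units of energy.
print(dissipated_energy([-2.0, -2.0, -2.0], [1.0, 1.0, 1.0], 0.5))  # 2.0
```

Evaluating this quantity for the LQR and CBQR force histories gives the two numbers that the weight selection is meant to equalize.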

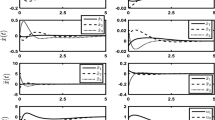

The CBQR control signal is given in Fig. 3. Note its continuous form. In many semi-active control design approaches, e.g., bang–bang control [3] or clipped optimal control [13], u is discontinuous and varies rapidly. From a practical viewpoint, smoothness is an advantage of the CBQR, since rapid changes might be unwanted, and sometimes impossible, for control realization. The roof displacements for cases A to C are presented in Fig. 4. Better performance was achieved in case B because, unlike case C, no constraints were imposed on the control signal, so additional improvement was gained. However, its realizability under semi-active constraints is questionable.

Figure 5 illustrates the correspondence between the actuator control force and velocity for case B. Figure 6 does the same for case C. It is observed that for case B there are intervals in which the computed control force is required to have the same sign as the actuator velocity. This is physically impossible for many semi-active actuators. On the other hand, the control force for case C is always opposed to the actuator velocity.

6 Conclusions

In this study, a CBQR problem was defined, i.e., a constrained optimal control problem for a single-input, free vibrating bilinear system with a quadratic performance index. It was shown that the CBQR problem is a mathematical formulation of a common semi-active control design problem that appears in structural control. The plant model used is general and suitable for many structural control problems. The problem was solved by Krotov’s method, also known as the “global method of successive improvements of control.” Since Krotov’s method requires a sequence of Krotov functions that corresponds to the properties of the addressed optimal control problem, a new type of Krotov function suited to the CBQR problem was formulated.

The computational steps were organized as an algorithm that requires the state equation and a differential Lyapunov equation to be solved in each iteration. An outline of the proof of the algorithm’s convergence is provided.

The method was used for semi-active control design of a stable, free vibrating plant with a single control input. It was shown that, by using CBQR as a control design tool, the physical constraint related to the semi-active actuator force is satisfied without any arbitrary signal clipping. The efficiency of the method was demonstrated in a numerical example, in which the CBQR control signal was continuous; this is an advantage since, from a practical viewpoint, rapid changes might be unwanted (and sometimes impossible) for control realization. The improvement in the controlled plant response is evident from the simulation results.

Notes

Recall that \({\mathbf {M}}>0\), \({\mathbf {K}}>0\) iff \({\mathbf {z}}^T{\mathbf {M}}{\mathbf {z}}>0\), \({\mathbf {z}}^T{\mathbf {K}}{\mathbf {z}}>0\) for all \({\mathbf {z}}\in \mathbb {R}^{n_z}\), \({\mathbf {z}}\ne {\mathbf {0}}\) and \({\mathbf {C}}_d\ge 0\) iff \({\mathbf {z}}^T{\mathbf {C}}_d{\mathbf {z}}\ge 0\) for all \({\mathbf {z}}\in \mathbb {R}^{n_z}\).

References

Wang, H.: Feedback stabilization of bilinear control systems. SIAM J. Control Optim. 36(5), 1669–1684 (1998). doi:10.1137/S0363012996305498

Krotov, V.F., Bulatov, A.V., Baturina, O.V.: Optimization of linear systems with controllable coefficients. Automat. Remote Control 72(6), 1199–1212 (2011). doi:10.1134/S0005117911060063

Bruni, C., DiPillo, G., Koch, G.: Bilinear systems: an appealing class of “nearly linear” systems in theory and applications. IEEE Trans. Autom. Control 19(4), 334–348 (1974)

Aganovic, Z., Gajic, Z.: The successive approximation procedure for finite-time optimal control of bilinear systems. IEEE Trans. Autom. Control 39(9), 1932–1935 (1994). doi:10.1109/9.317128

Chanane, B.: Bilinear quadratic optimal control: a recursive approach. Optim. Control Appl. Methods 18, 273–282 (1997)

Lee, S.H., Lee, K.: Bilinear systems controller design with approximation techniques. J. Chungcheong Math. Soc. 18(1), 101–116 (2005)

Costanza, V.: Finding initial costates in finite-horizon nonlinear-quadratic optimal control problems. Optim. Control Appl. Methods 29(3), 225–242 (2008)

Ribakov, Y., Gluck, J., Reinhorn, A.M.: Active viscous damping system for control of MDOF structures. Earthq. Eng. Struct. Dyn. 30(2), 195–212 (2001). doi:10.1002/1096-9845(200102)30:2<195::AID-EQE4>3.0.CO;2-X

Morales-Beltran, M., Paul, J.: Technical note: active and semi-active strategies to control building structures under large earthquake motion. J. Earthq. Eng. 19(7), 1086–1111 (2015). doi:10.1080/13632469.2015.1036326

Scruggs, J.T.: Structural control using regenerative force actuation networks. Ph.D. thesis, California Institute of Technology (2004)

Patten, W.N., Kuo, C.C., He, Q., Liu, L., Sack, R.L.: Seismic structural control via hydraulic semi-active vibration dampers (SAVD). In: Proceedings of the 1st World Conference on Structural Control (1994)

Dyke, S.J., Spencer Jr., B.F., Sain, M.K., Carlson, J.D.: Modeling and control of magnetorheological dampers for seismic response reduction. Smart Mater. Struct. 5(5), 565 (1996)

Sadek, F., Mohraz, B.: Semiactive control algorithms for structures with variable dampers. J. Eng. Mech. 124(9), 981–990 (1998)

Yuen, K.V., Shi, Y., Beck, J.L., Lam, H.F.: Structural protection using MR dampers with clipped robust reliability-based control. Struct. Multidiscip. Optim. 34(5), 431–443 (2007). doi:10.1007/s00158-007-0097-3

Aguirre, N., Ikhouane, F., Rodellar, J.: Proportional-plus-integral semiactive control using magnetorheological dampers. J. Sound Vib. 330(10), 2185–2200 (2011)

Robinson, W.D.: A pneumatic semi-active control methodology for vibration control of air spring based suspension systems. Ph.D. thesis, Iowa State University (2012)

Ribakov, Y.: Semi-active pneumatic devices for control of MDOF structures. Open Constr. Build Technol. J. 3, 141–145 (2009)

Agrawal, A., Yang, J.: A Semi-active Electromagnetic Friction Damper for Response Control of Structures, Chap. 5. American Society of Civil Engineers, Reston (2000). doi:10.1061/40492(2000)6

Halperin, I., Agranovich, G.: Optimal control with bilinear inequality constraints. Funct. Differ. Equ. 21(3–4), 119–136 (2014)

Wang, D.H., Liao, W.H.: Magnetorheological fluid dampers: a review of parametric modelling. Smart Mater. Struct. 20(2), 023001 (2011)

Halperin, I., Ribakov, Y., Agranovich, G.: Optimal viscous dampers gains for structures subjected to earthquakes. Struct. Control Health Monit. 23(3), 458–469 (2016). doi:10.1002/stc.1779

Seierstad, A., Sydsaeter, K.: Sufficient conditions in optimal control theory. Int. Econ. Rev. 18(2), 367–391 (1977)

Krotov, V.F.: A technique of global bounds in optimal control theory. Control Cybern. 17(2–3), 115–144 (1988)

Krotov, V.F.: Global Methods in Optimal Control Theory. Chapman & Hall/CRC Pure and Applied Mathematics. CRC Press (1995)

Khurshudyan, A.Z.: The Bubnov–Galerkin method in control problems for bilinear systems. Autom. Remote Control 76(8), 1361–1368 (2015). doi:10.1134/S0005117915080032

Acknowledgements

Ido Halperin is grateful for the support of The Irving and Cherna Moskowitz Foundation for his scholarship.


Cite this article

Halperin, I., Agranovich, G. & Ribakov, Y. Optimal Control of a Constrained Bilinear Dynamic System. J Optim Theory Appl 174, 803–817 (2017). https://doi.org/10.1007/s10957-017-1095-2
