Abstract

Quasi-Newton and truncated-Newton methods are popular methods in optimization and are traditionally seen as useful alternatives to the gradient and Newton methods. Throughout the literature, results are found that link quasi-Newton methods to certain first-order methods under various assumptions. We offer a simple proof to show that a range of quasi-Newton methods are first-order methods in the definition of Nesterov. Further, we define a class of generalized first-order methods and show that the truncated-Newton method is a generalized first-order method and that first-order methods and generalized first-order methods share the same worst-case convergence rates. We also extend the complexity analysis for smooth strongly convex problems to finite dimensions. An implication of these results is that, in a worst-case scenario, the local superlinear or faster convergence rates of quasi-Newton and truncated-Newton methods cannot take effect until the number of iterations exceeds half the size of the problem dimension.


1 Introduction

Quasi-Newton and truncated-Newton methods have been widely used since their origin. The arguments for their use are that they converge faster than the classical gradient method because they exploit curvature information, that they are simple to implement, and that, in their limited-memory form (and in the case of truncated-Newton methods), they are suitable for large-scale optimization. These features have made the methods a textbook must-have [1–3]. In this paper, we analyze some of the more common quasi-Newton methods, specifically those of the Broyden [4] and Huang [5] families, which include the well-known variants Broyden–Fletcher–Goldfarb–Shanno (BFGS) [6–9], symmetric rank 1 (SR1) [4, 10–13] and Davidon–Fletcher–Powell (DFP) [14, 15] (see also [16]). We also consider the limited-memory BFGS (L-BFGS) method [17, 18]. The local convergence of these algorithms is well studied; see [2, 3] for an overview.

Many connections exist between quasi-Newton methods and first-order methods. The DFP method with exact line search generates the same iterates as the conjugate gradient method for quadratic functions [19] (see also [20, p. 57] and [21, pp. 200–222]). A more general result is that the nonlinear Fletcher–Reeves conjugate gradient method is identical to the quasi-Newton Broyden method (with exact line search and initialized with the identity matrix) when applied to quadratic functions [2, The. 3.4.2]. These connections only apply to quadratic functions, but stronger properties and connections are also known. A memoryless BFGS method with exact line search is equivalent to the nonlinear Polak–Ribière conjugate gradient method with exact line search [22] (note that the Hestenes–Stiefel and Polak–Ribière nonlinear conjugate gradient methods are equivalent when exact line search is used [3, §7.2]). The DFP method with exact line search and initialized with the identity matrix is a nonlinear conjugate gradient method with exact line search [21, pp. 216–222]. Further, all quasi-Newton methods of the Broyden family generate identical iterates when equipped with exact line search [23]. Consequently, all the above statements extend to all methods of the Broyden family when exact line search is used (see also [2, p. 64]).

Even though quasi-Newton methods and first-order methods are clearly similar, it is not uncommon to encounter views on the subject along the lines of: “Two of the most popular methods for smooth large-scale optimization, the inexact- (or truncated-) Newton method and limited-memory BFGS method, are typically implemented so that the rate of convergence is only linear. They are good examples of algorithms that fall between first- and second-order methods” [24]. This statement raises two questions: (i) Why is a limited-memory BFGS method not considered a first-order method? (ii) Can we give a more informative classification of truncated-Newton methods?

The first question can already be partly answered, since it is addressed in the convex programming complexity theory of Nemirovsky and Yudin [25]. Since the limited-memory BFGS method only uses information from a first-order oracle, it is a first-order method in that theory, which implies certain worst-case convergence rates. The definition of first-order methods and the related worst-case convergence rates can be simplified for instructional purposes, as done by Nesterov [26]. However, whether quasi-Newton methods are covered by Nesterov's simplified and more accessible analysis of first-order methods is not addressed there.

The second question, on the classification of truncated-Newton methods, is open for discussion. The truncated-Newton method implicitly uses second-order information. Customary classification would then call the truncated-Newton method a second-order method, since it requires a second-order oracle. However, the standard Newton method is also a second-order method. The worst-case convergence rates of the Newton and truncated-Newton methods are not the same, and grouping these two substantially different methods into the same class is unsatisfactory.

Contributions This paper elaborates on previous results and offers a more straightforward and accessible proof that a range of quasi-Newton methods, including the limited-memory BFGS method, are first-order methods in the definition of Nesterov [26]. Further, by defining so-called generalized first-order methods, we extend the analysis to truncated-Newton methods as well. For completeness, we also consider the complexity analysis of a class of smooth and strongly convex problems in finite dimensions. In a worst-case scenario, quasi-Newton and truncated-Newton methods applied to this class of problems converge no faster than linearly for as many as half the problem dimension, \(k\le {\textstyle \frac{1}{2}}n\), iterations. Hence, problems exist for which the local superlinear or faster convergence of quasi-Newton and truncated-Newton methods cannot take effect unless \(k > {\textstyle \frac{1}{2}}n\).

The rest of the paper is organized as follows. Section 2 states the definition of first-order methods used in this paper. Section 3 describes quasi-Newton methods and shows that a range of quasi-Newton methods are first-order methods in the definition of Nesterov. Section 4 defines generalized first-order methods and shows that the truncated-Newton method belongs to this class and that the worst-case convergence rate of this class is the same as that of first-order methods. Section 5 contains the conclusions.

2 First-Order Methods

We denote by \({\mathbb {N}} := \{0,1,2,\ldots \}\) the natural numbers including 0. A vector is denoted \(x = [x^1,\ldots ,x^n]^T \in {\mathbb {R}}^n\). The vector \(e_i \in {\mathbb {R}}^n\) is the ith standard basis vector for \({\mathbb {R}}^n\). We define the span of a set \(X \subseteq {\mathbb {R}}^n\) as \(\mathrm {span}\, X := \{ \sum _{i=1}^{|X|} c_i x_i \, : \, x_1,\ldots ,x_{|X|} \in X; c_1,\ldots ,c_{|X|} \in {\mathbb {R}}\}\). Note that this means that \(\mathrm {span}\, \{x_1,\ldots , x_m\} = \{ \sum _{i=1}^m c_i x_i \, : \, c_1,\ldots ,c_m \in {\mathbb {R}}\}\). For two sets \(X \subseteq {\mathbb {R}}^n\) and \(Y \subseteq {\mathbb {R}}^n\), the (Minkowski) sum of sets is

\[
X + Y := \{ x + y \,:\, x \in X,\; y \in Y \}. \tag{1}
\]

We consider the convex, unconstrained optimization problem

\[
\min_{x \in {\mathbb {R}}^n} \; f(x) \tag{2}
\]

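with optimal objective \(f(x^\star ) = f^\star \). We assume that f is a convex function that is continuously differentiable with a Lipschitz continuous gradient with constant L:

\[
\|\nabla f(x) - \nabla f(y)\|_2 \le L \|x - y\|_2, \qquad \forall x, y \in {\mathbb {R}}^n.
\]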

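From time to time, we will strengthen our assumption on f and assume that f is also strongly convex with strong convexity parameter \(\mu > 0\), such that

\[
f(y) \ge f(x) + \nabla f(x)^T (y - x) + \frac{\mu}{2} \|y - x\|_2^2, \qquad \forall x, y \in {\mathbb {R}}^n.
\]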

For twice differentiable functions, the requirements on \(\mu \) and L are equivalent to the matrix inequality \(\mu I \preceq \nabla ^2 f(x) \preceq L I\). The condition number of a function is given by \(Q = \frac{L}{\mu }\). Following Nesterov [26], we will define a first-order (black-box) method for the problem (2) as follows.

Definition 2.1

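A first-order method for differentiable objectives is any iterative method that from an initial point \(x_0\) generates \((x_i)_{i=1,\ldots ,k+1}\) such that

\[
x_{i+1} \in x_0 + F_{i+1}, \qquad F_{i+1} = \mathrm{span}\,\big(F_i \cup \{\nabla f(x_i)\}\big), \qquad i = 0, \ldots, k,
\]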

with \(F_0 = \emptyset \) and \(k \in {\mathbb {N}}\).
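As a small numerical illustration of Definition 2.1, the sketch below runs the gradient method \(x_{i+1} = x_i - t_i \nabla f(x_i)\) with arbitrary step sizes on a random strongly convex quadratic and checks that every iterate satisfies \(x_{i+1} - x_0 \in \mathrm{span}\{\nabla f(x_0), \ldots, \nabla f(x_i)\}\). The helper `in_affine_span`, the test problem and the step sizes are hypothetical choices made only for this example.

```python
import numpy as np

def in_affine_span(x, x0, vectors, tol=1e-8):
    """True if x - x0 lies (numerically) in span(vectors), judged by a least-squares residual."""
    d = x - x0
    if not vectors:
        return bool(np.linalg.norm(d) <= tol)
    V = np.column_stack(vectors)
    coef, *_ = np.linalg.lstsq(V, d, rcond=None)
    return bool(np.linalg.norm(V @ coef - d) <= tol * max(1.0, np.linalg.norm(d)))

def gradient_method(grad, x0, steps):
    """Gradient method with a prescribed list of step sizes; returns the iterates and gradients."""
    x, xs, grads = x0, [x0], []
    for t in steps:
        g = grad(x)
        grads.append(g)
        x = x - t * g
        xs.append(x)
    return xs, grads

# Hypothetical test problem: f(x) = 0.5 x^T P x - c^T x with P symmetric positive definite.
rng = np.random.default_rng(0)
n = 8
M = rng.standard_normal((n, n))
P = M @ M.T + np.eye(n)
c = rng.standard_normal(n)
grad = lambda x: P @ x - c

x0 = rng.standard_normal(n)
xs, grads = gradient_method(grad, x0, steps=[0.01, 0.02, 0.005, 0.03])
for i in range(len(grads)):
    # Definition 2.1: x_{i+1} in x_0 + span{grad f(x_0), ..., grad f(x_i)}
    print(i + 1, in_affine_span(xs[i + 1], x0, grads[: i + 1]))
```

Any other update rule that only combines past gradients linearly (momentum, Nesterov's accelerated scheme) passes the same check.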

Strong lower complexity bounds exist for such first-order methods [26]. These results date back to [25], but methods achieving these bounds were first given in [27]. In Theorem 2.1, we reproduce an important result related to the first-order methods in Definition 2.1 as provided in [26].

Theorem 2.1

[26, The. 2.1.7 and The. 2.1.13] For any \(k \in {\mathbb {N}}\), \(1 \le k \le {\textstyle \frac{1}{2}}(n-1)\) and \(x_0\), there exists a (quadratic) function \(f: {\mathbb {R}}^n \mapsto {\mathbb {R}}\), which has a Lipschitz continuous gradient with constant L, such that any first-order method satisfies

\[
f(x_k) - f^\star \ge \frac{3L \|x_0 - x^\star\|_2^2}{32 (k+1)^2}, \qquad \|x_k - x^\star\|_2^2 \ge \frac{1}{8} \|x_0 - x^\star\|_2^2.
\]

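There exists a function \(f: {\mathbb {R}}^\infty \mapsto {\mathbb {R}}\) with a Lipschitz continuous gradient which is strongly convex with condition number Q, such that any first-order method satisfies

\[
f(x_k) - f^\star \ge \frac{\mu}{2} \left(\frac{\sqrt{Q}-1}{\sqrt{Q}+1}\right)^{2k} \|x_0 - x^\star\|_2^2, \qquad \|x_k - x^\star\|_2^2 \ge \left(\frac{\sqrt{Q}-1}{\sqrt{Q}+1}\right)^{2k} \|x_0 - x^\star\|_2^2.
\]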
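The lower bounds of [26, The. 2.1.7 and The. 2.1.13] take the forms \(f(x_k)-f^\star \ge 3L\|x_0-x^\star\|_2^2/(32(k+1)^2)\) and \(\|x_k-x^\star\|_2^2 \ge ((\sqrt{Q}-1)/(\sqrt{Q}+1))^{2k}\|x_0-x^\star\|_2^2\), and they are easy to evaluate. The sketch below (all function names are hypothetical) computes both and the smallest k for which the strongly convex bound still allows a relative reduction \(\varepsilon\) of the squared distance to the solution; no first-order method can reach that reduction in fewer iterations on the worst-case instance. It only evaluates the formulas and does not run any method.

```python
import math

def smooth_lower_bound(L, R2, k):
    """Lower bound 3 L R2 / (32 (k+1)^2) on f(x_k) - f*, with R2 = ||x_0 - x*||^2."""
    return 3.0 * L * R2 / (32.0 * (k + 1) ** 2)

def strongly_convex_factor(Q, k):
    """Factor ((sqrt(Q)-1)/(sqrt(Q)+1))^(2k) bounding ||x_k - x*||^2 / ||x_0 - x*||^2 from below."""
    r = (math.sqrt(Q) - 1.0) / (math.sqrt(Q) + 1.0)
    return r ** (2 * k)

def min_iterations(Q, eps):
    """Smallest k with strongly_convex_factor(Q, k) <= eps."""
    r = (math.sqrt(Q) - 1.0) / (math.sqrt(Q) + 1.0)
    return math.ceil(math.log(eps) / (2.0 * math.log(r)))

print(smooth_lower_bound(1.0, 1.0, 9))
print(min_iterations(100.0, 1e-6))   # 35
```

For Q = 100 the factor per iteration is \((9/11)^2 \approx 0.67\), so at least 35 iterations are needed for a \(10^{-6}\) reduction.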

We extend the above result for smooth and strongly convex problems from infinite-dimensional to finite-dimensional problems.

Theorem 2.2

For any \(k \in {\mathbb {N}}\), \(1\le k\le {\textstyle \frac{1}{2}}n\) and \(x_0\in {\mathbb {R}}^n\), there exists a function \(f: {\mathbb {R}}^n \mapsto {\mathbb {R}}\) with a Lipschitz continuous gradient which is strongly convex with condition number \(Q\ge 8\), such that any first-order method satisfies

with constant \(\beta = 1.1\).

Proof

See “Appendix 1”. \(\square \)

This means that for certain problems, the convergence rate of any first-order method cannot be faster than linear. Since some methods attain these bounds up to a constant, the bounds are known to be tight [26]. Several other variants of such optimal methods have been published [28–32]; see also the overview [33].

3 Quasi-Newton Methods

For line search quasi-Newton methods, the iterates are selected as

\[
x_{k+1} = x_k - t_k H_k \nabla f(x_k), \qquad k \in {\mathbb {N}},
\]

where \(x_0\) and \(H_0\) are given initializations. Several methods exist for selecting the step sizes \(t_k\) and the approximations \(H_k\) of the inverse Hessian. Often \(H_{k+1}\) is built as a function of \(H_k\) and the differences

\[
s_k = x_{k+1} - x_k, \qquad y_k = \nabla f(x_{k+1}) - \nabla f(x_k).
\]
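To make the quasi-Newton iteration concrete, the sketch below implements \(x_{k+1} = x_k - t_k H_k \nabla f(x_k)\) with the standard BFGS update of the inverse Hessian approximation, \(H_{k+1} = V_k^T H_k V_k + \rho_k s_k s_k^T\), \(V_k = I - \rho_k y_k s_k^T\), \(\rho_k = 1/(y_k^T s_k)\), where \(s_k = x_{k+1}-x_k\) and \(y_k = \nabla f(x_{k+1}) - \nabla f(x_k)\) [3]. The quadratic test problem, the exact line search and the function names are assumptions for this illustration. On a strictly convex quadratic with exact line search, BFGS is known to terminate in at most n iterations with \(H_n = P^{-1}\), which the example checks.

```python
import numpy as np

def bfgs_inverse_update(H, s, y):
    """BFGS update H_{k+1} = V^T H V + rho s s^T with V = I - rho y s^T (requires y^T s > 0)."""
    rho = 1.0 / (y @ s)
    V = np.eye(len(s)) - rho * np.outer(y, s)
    return V.T @ H @ V + rho * np.outer(s, s)

def bfgs_quadratic(P, c, x0, alpha=1.0, iters=None):
    """Quasi-Newton iteration on f(x) = 0.5 x^T P x - c^T x with H_0 = alpha I and exact line search."""
    n = len(x0)
    x, H = x0.copy(), alpha * np.eye(n)
    g = P @ x - c
    for _ in range(n if iters is None else iters):
        if np.linalg.norm(g) < 1e-14:
            break
        d = -H @ g                        # quasi-Newton direction -H_k grad f(x_k)
        t = -(g @ d) / (d @ P @ d)        # exact line search for a quadratic
        x_new = x + t * d
        g_new = P @ x_new - c
        H = bfgs_inverse_update(H, x_new - x, g_new - g)   # uses s_k and y_k
        x, g = x_new, g_new
    return x, H

rng = np.random.default_rng(1)
n = 5
M = rng.standard_normal((n, n))
P = M @ M.T / n + np.eye(n)
c = rng.standard_normal(n)
x_n, H_n = bfgs_quadratic(P, c, rng.standard_normal(n))
print(np.linalg.norm(x_n - np.linalg.solve(P, c)))   # roundoff level
```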

The following lemma connects the line search quasi-Newton methods to first-order methods in a straightforward manner.

Lemma 3.1

Any method of the form

where \(t_k \in {\mathbb {R}}\), \(H_0= \alpha I\), \(\alpha \in {\mathbb {R}}, \alpha \ne 0\) and

is a first-order method.

Proof

The first iteration \(k=0\) is a special case; this is verified by

The iterations \(k=1,2,\ldots \) will be treated by induction on the statement:

Induction start. For \(k=1\), we have

and

so X(1) holds. Induction step. Assume X(k) holds. We have

where we have used that X(k) holds. Equation (7) is the first part of \(X(k+1)\). For the iterates, we then have

where we have used X(k) and (7). Equation (8) is the second part of \(X(k+1)\); consequently, \(X(k+1)\) holds. The iterations then satisfy \(x_{k+1} \in x_0 + F_{k+1}\) and the method is a first-order method. \(\square \)
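Lemma 3.1 can be checked numerically for BFGS even away from quadratics and exact line searches. The sketch below assumes a hypothetical strongly convex, non-quadratic test function \(f(x) = \sum_i \log(1 + \exp(a_i^T x)) + \frac{1}{2}\|x\|_2^2\) (so that \(y_k^T s_k > 0\)), starts from \(H_0 = \alpha I\) and uses an arbitrary step-size schedule; at every iteration it tests whether \(x_{k+1} - x_0 \in \mathrm{span}\{\nabla f(x_0), \ldots, \nabla f(x_k)\}\). The helper names, the schedule and \(\alpha = 0.3\) are illustrative assumptions.

```python
import numpy as np

def bfgs_inverse_update(H, s, y):
    """BFGS update of the inverse-Hessian approximation (requires y^T s > 0)."""
    rho = 1.0 / (y @ s)
    V = np.eye(len(s)) - rho * np.outer(y, s)
    return V.T @ H @ V + rho * np.outer(s, s)

def in_span(v, vectors, tol=1e-8):
    """True if v lies (numerically) in span(vectors), judged by a least-squares residual."""
    W = np.column_stack(vectors)
    coef, *_ = np.linalg.lstsq(W, v, rcond=None)
    return bool(np.linalg.norm(W @ coef - v) <= tol * max(1.0, np.linalg.norm(v)))

# Hypothetical test function f(x) = sum_i log(1 + exp(a_i^T x)) + 0.5 ||x||^2 (Hessian >= I).
rng = np.random.default_rng(2)
n = 10
A = rng.standard_normal((15, n))

def grad(x):
    return A.T @ (1.0 / (1.0 + np.exp(-(A @ x)))) + x

alpha = 0.3                                   # H_0 = alpha I with alpha != 0
x0 = rng.standard_normal(n)
x, H, grads, ok = x0, alpha * np.eye(n), [], []
for k in range(5):
    g = grad(x)
    grads.append(g)
    x_new = x - (1.0 / (k + 1)) * (H @ g)     # arbitrary (assumed) step-size schedule
    ok.append(in_span(x_new - x0, grads))     # is x_{k+1} in x_0 + F_{k+1}?
    H = bfgs_inverse_update(H, x_new - x, grad(x_new) - g)
    x = x_new
print(ok)
```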

We will now show that the quasi-Newton methods in the Broyden and Huang families, and the L-BFGS method, are first-order methods. This implies that they share the worst-case complexity bounds of any first-order method given in Theorems 2.1 and 2.2. Note that these methods are not necessarily optimal, in the sense that (up to a constant) they achieve the bounds in Theorems 2.1 and 2.2. As described in the introduction, these are known results [25]. Our reason for conducting this analysis is twofold: we believe that (i) the provided analysis is more intuitive and insightful, and (ii) it provides a logical background for analyzing truncated-Newton methods with a similar approach.

3.1 The Broyden Family

The (one-parameter) Broyden family includes updates of the form

where \(\eta _k\) is the Broyden parameter and

The Broyden family includes the well-known BFGS, DFP, and SR1 quasi-Newton methods as special cases with certain settings of \(\eta _k\), specifically:

Corollary 3.1

Any quasi-Newton method of the Broyden family with \(\eta _k \in {\mathbb {R}}\), any step size rule and \(H_0 = \alpha I\), \(\alpha \in {\mathbb {R}}\), \(\alpha \ne 0\) is a first-order method.

Proof

Using Eq. (9), we have:

and the results then follow directly from Lemma 3.1. \(\square \)
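A common way to write the Broyden family for the inverse Hessian is \(H_{k+1} = H_k - \frac{H_k y_k y_k^T H_k}{y_k^T H_k y_k} + \frac{s_k s_k^T}{y_k^T s_k} + \eta_k (y_k^T H_k y_k) v_k v_k^T\) with \(v_k = \frac{s_k}{y_k^T s_k} - \frac{H_k y_k}{y_k^T H_k y_k}\); this particular parameterization is an assumption here and may differ in scaling from (9). In it, \(\eta_k = 0\) gives DFP, \(\eta_k = 1\) gives BFGS, and \(\eta_k = y_k^T s_k/(y_k^T s_k - y_k^T H_k y_k)\) gives SR1. The sketch checks these three special cases and the property behind Corollary 3.1: \(H_{k+1} - H_k\) has its range in \(\mathrm{span}\{s_k, H_k y_k\}\). The data and function names are hypothetical.

```python
import numpy as np

def broyden_inverse_update(H, s, y, eta):
    """Broyden-family update of a symmetric inverse-Hessian approximation H (assumed parameterization)."""
    Hy = H @ y
    a, b = y @ s, y @ Hy
    v = s / a - Hy / b
    return H - np.outer(Hy, Hy) / b + np.outer(s, s) / a + eta * b * np.outer(v, v)

def bfgs(H, s, y):
    rho = 1.0 / (y @ s)
    V = np.eye(len(s)) - rho * np.outer(y, s)
    return V.T @ H @ V + rho * np.outer(s, s)

def dfp(H, s, y):
    Hy = H @ y
    return H - np.outer(Hy, Hy) / (y @ Hy) + np.outer(s, s) / (y @ s)

def sr1(H, s, y):
    r = s - H @ y
    return H + np.outer(r, r) / (r @ y)

rng = np.random.default_rng(3)
n = 6
M = rng.standard_normal((n, n))
H = M @ M.T + np.eye(n)                  # symmetric positive definite H_k
B = rng.standard_normal((n, n))
s = rng.standard_normal(n)
y = (B @ B.T + np.eye(n)) @ s            # ensures y^T s > 0
a, b = y @ s, y @ H @ y
eta_sr1 = a / (a - b)

# The columns of H_{k+1} - H_k should lie in span{s, H y}; measure the least-squares residual.
D = broyden_inverse_update(H, s, y, eta=0.7) - H
W = np.column_stack([s, H @ y])
residual = D - W @ np.linalg.lstsq(W, D, rcond=None)[0]
print(np.linalg.norm(residual))          # roundoff level
```

Hence \(H_{k+1} w \in \mathrm{span}\{H_k w, s_k, H_k y_k\}\) for any vector w, which is the kind of recursion Lemma 3.1 exploits.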

3.2 The Huang Family

The Huang family includes updates of the form [5]:

where \(\psi _k,\, \phi _k,\,\varphi _k,\,\theta _k,\,\vartheta _k \in {\mathbb {R}}\) and

Note that the Huang family includes the DFP method with the selection \(\psi _k = 1, \phi _k= 1, \varphi _k=0, \theta _k = 0, \vartheta _k = 1\), but also a range of other methods, see e.g. [5], including non-symmetric forms.

Corollary 3.2

Any quasi-Newton method of the Huang family with any step size rule and \(H_0 = \alpha I\), \(\alpha \in {\mathbb {R}}\), \(\alpha \ne 0\) is a first-order method.

Proof

Using Eq. (13), we have:

and the results then follow directly from Lemma 3.1. \(\square \)

3.3 Limited-Memory Broyden–Fletcher–Goldfarb–Shanno (L-BFGS)

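For L-BFGS, we only build an approximation \({\bar{H}}_k\) of the inverse Hessian from the m most recent pairs \((s_i, y_i)\) [3]

\[
\begin{aligned}
{\bar{H}}_k ={}& \big(V_{k-1}^T \cdots V_{k-m}^T\big)\, {\bar{H}}_k^0\, \big(V_{k-m} \cdots V_{k-1}\big) \\
&+ \rho_{k-m} \big(V_{k-1}^T \cdots V_{k-m+1}^T\big)\, s_{k-m} s_{k-m}^T\, \big(V_{k-m+1} \cdots V_{k-1}\big) \\
&+ \rho_{k-m+1} \big(V_{k-1}^T \cdots V_{k-m+2}^T\big)\, s_{k-m+1} s_{k-m+1}^T\, \big(V_{k-m+2} \cdots V_{k-1}\big) \\
&+ \cdots + \rho_{k-1}\, s_{k-1} s_{k-1}^T,
\end{aligned}
\]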

where \(V_k = I - \rho _k y_k s_k^T\) and \(\rho_k = 1/(y_k^T s_k)\). From this, it is clear that for \(k \le m\) and \({\bar{H}}_{ k}^0 = H_0\), the inverse Hessian approximations of the BFGS and L-BFGS methods are identical, \({\bar{H}}_{k} = H_{k}\). This suggests that L-BFGS is also a first-order method.
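In practice the product \({\bar{H}}_k \nabla f(x_k)\) is computed without forming \({\bar{H}}_k\), via the two-loop recursion [3, Alg. 7.4] that Appendix 2 refers to as Algorithm 1. The sketch below implements that recursion with \({\bar{H}}_k^0 = \gamma I\) and checks the statement above: when all stored pairs are used (\(k \le m\)) and the same initial matrix is taken, the L-BFGS product coincides with the dense BFGS product. The random pairs, the value of \(\gamma\) and the function names are illustrative assumptions.

```python
import numpy as np

def two_loop(g, pairs, gamma):
    """L-BFGS two-loop recursion: returns H_bar g for H_bar^0 = gamma I.
    pairs: list of (s_i, y_i), oldest first."""
    q = g.copy()
    rhos = [1.0 / (y @ s) for s, y in pairs]
    alphas = []
    for (s, y), rho in zip(reversed(pairs), reversed(rhos)):   # newest to oldest
        a = rho * (s @ q)
        alphas.append(a)
        q = q - a * y
    r = gamma * q
    for (s, y), rho, a in zip(pairs, rhos, reversed(alphas)):  # oldest to newest
        beta = rho * (y @ r)
        r = r + s * (a - beta)
    return r

def dense_bfgs(pairs, gamma, n):
    """Dense BFGS inverse-Hessian approximation built from the same pairs and H_0 = gamma I."""
    H = gamma * np.eye(n)
    for s, y in pairs:
        rho = 1.0 / (y @ s)
        V = np.eye(n) - rho * np.outer(y, s)
        H = V.T @ H @ V + rho * np.outer(s, s)
    return H

rng = np.random.default_rng(4)
n, m, gamma = 7, 4, 0.5
A = rng.standard_normal((n, n))
A = A @ A.T + np.eye(n)                     # SPD matrix used to generate y_i = A s_i (y_i^T s_i > 0)
pairs = [(s, A @ s) for s in rng.standard_normal((m, n))]
g = rng.standard_normal(n)
print(np.linalg.norm(two_loop(g, pairs, gamma) - dense_bfgs(pairs, gamma, n) @ g))
```

The recursion only forms linear combinations of g, the \(s_i\) and the \(y_i\), which is why its output stays in the span used in the first-order argument.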

Corollary 3.3

The L-BFGS method with any step size rule and \({\bar{H}}_k^0 = \gamma _k I\), \(\gamma _k \in {\mathbb {R}}\), \(\gamma _k \ne 0\) is a first-order method.

Proof

See “Appendix 2”. \(\square \)

4 Generalized First-Order Methods and the Truncated-Newton Method

In this section, we show that the truncated-Newton method behaves very similarly to a first-order method, although it is not a first-order method in the sense of Definition 2.1. To compare a first-order method with a truncated-Newton method, we must make some assumptions about the iterative solver used for the Newton equation

\[
\nabla f(x_k) + \nabla^2 f(x_k)\,(x - x_k) = 0,
\]

which is usually solved approximately by a lower-level algorithm that is often itself a first-order method, e.g. the conjugate gradient method. For a fixed higher-level index k, these iterations start with \(x_{k,0}:=x_k\) (warm start) and \(F_{k,0}^\prime =\emptyset ,\) and subsequently iterate for \(i \in {\mathbb {N}}\)

After the lower level iterations have been completed, e.g. at iterate \(i^\star +1\), the new higher level iterate is set to

where \(t_k\) is obtained by a line search. By renumbering all iterates so that the lower and higher level iterations become part of the same sequence, we might express the last equation as

where the iterates and subspaces after \(x_k\) and \(F_k^\prime =\emptyset \) are generated following

We see that the main differences between a truncated-Newton method and a standard first-order method are (i) that the subspace is reset at the beginning of the lower-level iterations (here, at index k) and (ii) that the exact gradient evaluations are replaced by a first-order Taylor series (here evaluated at \(x_k\)). This raises the question of how to define a generalization of first-order methods that also covers the class of truncated-Newton methods. Such a definition should allow more freedom while still containing the truncated-Newton methods. One way to formulate the generalized first-order subspaces could be

This definition would include all truncated-Newton methods that use first-order methods in their lower-level iterations, together with standard first-order methods, and many more methods as well. Note that the dimensions of the subspaces do not only increase by one in each iteration, as in the standard first-order method, but possibly by \(k+1\). Here, we can disregard the fact that, in practice, we will not be able to generate such high-dimensional subspaces. In this paper, we generalize the subspace generation even further, as follows.

Definition 4.1

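A generalized first-order method for a problem with a twice continuously differentiable objective is any iterative algorithm that selects

\[
x_{k+1} \in x_0 + S_{k+1}, \qquad S_{k+1} = S_k + \mathrm{span}\,\big\{ \nabla f(p) + \nabla^2 f(p)\, d \;:\; p \in x_0 + S_k,\; d \in S_k \big\}, \tag{14}
\]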

with \(S_0 = \{ 0 \}\) and \(k \in {\mathbb {N}}\).

Remark 4.1

Note that here we use \(S_0 = \{0\}\) and not \(S_0 = \emptyset \) to ensure that the set-builder notation in (14) is well defined for the first iteration.

At this point, a short discussion of the term “generalized first-order methods” seems appropriate. The model in Definition 4.1 requires second-order information, and using standard terminology for such methods, see for instance [25], these should be called second-order methods. However, one motivation for using truncated-Newton methods is the implicit use of second-order information, in which the Hessian matrix \(\nabla ^2f(p)\in {\mathbb {R}}^{n \times n}\) is never formed but only accessed via the matrix-vector product \(\nabla ^2f(p) d \in {\mathbb {R}}^{n}\). Further, whether the Hessian matrix is used explicitly or implicitly, a truncated-Newton method is more similar to first-order methods than to second-order methods from a global convergence perspective, as we show in Section 4.1 below. Hence, we believe that the term “generalized first-order methods” provides a meaningful description of these methods.

This also motivates the following definition of a generalized first-order oracle (deterministic, black box):

\[
\mathcal{O}(x, z) = \big( f(x),\; \nabla f(x),\; \nabla^2 f(x)\, z \big).
\]

This means that with a two-tuple input (x, z), the oracle returns the three-tuple output \((f(x), \nabla f(x), \nabla ^2 f(x) z)\). In this case, the second-order information is not directly available as in a second-order oracle but only \(\nabla ^2 f(x) z\). This results in a stronger and more precise description of truncated-Newton methods than saying that they fall between first- and second-order methods as suggested in [24] and discussed in the introduction.
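A generalized first-order oracle can be implemented without ever forming \(\nabla^2 f(x)\). The sketch below assumes the hypothetical test function \(f(x) = \sum_i \exp(a_i^T x) + \frac{1}{2}\|x\|_2^2\), for which \(\nabla^2 f(x) z = A^T(\exp(Ax) \odot Az) + z\) costs two matrix-vector products. It then runs a truncated-Newton method that uses only the three oracle outputs: the inner loop is the conjugate gradient method applied to the Newton equation, started at d = 0 and stopped after a fixed number of iterations. The Armijo backtracking rule, the inner iteration count and all names are assumptions for this illustration, not the method analyzed in the text.

```python
import numpy as np

rng = np.random.default_rng(5)
A = rng.standard_normal((20, 10)) / np.sqrt(10)

def oracle(x, z):
    """Generalized first-order oracle: (f(x), grad f(x), Hess f(x) z) without forming the Hessian."""
    e = np.exp(A @ x)
    return e.sum() + 0.5 * x @ x, A.T @ e + x, A.T @ (e * (A @ z)) + z

def truncated_newton(x0, outer=30, inner=5):
    x = x0.copy()
    for _ in range(outer):
        fx, g, _ = oracle(x, np.zeros_like(x))
        if np.linalg.norm(g) < 1e-12:
            break
        # Inner loop: CG on Hess f(x) d = -grad f(x), started at d = 0, truncated after `inner` steps.
        d, r = np.zeros_like(g), -g
        p, rr = r.copy(), r @ r
        for _ in range(inner):
            Hp = oracle(x, p)[2]          # only a Hessian-vector product is requested
            step = rr / (p @ Hp)
            d, r = d + step * p, r - step * Hp
            rr_new = r @ r
            if np.sqrt(rr_new) < 1e-14:
                break
            p, rr = r + (rr_new / rr) * p, rr_new
        # Armijo backtracking along d with a bounded number of halvings.
        t = 1.0
        for _ in range(50):
            if oracle(x + t * d, d)[0] <= fx + 1e-4 * t * (g @ d):
                break
            t *= 0.5
        x = x + t * d
    return x

x_tn = truncated_newton(np.zeros(10))
print(np.linalg.norm(oracle(x_tn, np.zeros(10))[1]))   # small gradient norm
```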

4.1 Equivalence of Generalized and Standard First-Order Methods for Quadratic Functions

For a quadratic function, f(x), the spaces of the usual first-order method and the generalized first-order method coincide, \(F_k=S_k\).

Lemma 4.1

For quadratic functions f, \(S_k = F_k\), \(k\ge 1\).

Proof

As f is quadratic, \(\nabla f\) is linear, and thus coincides with its first-order Taylor series, i.e. \(\nabla f(p) + \nabla ^2f(p) \, d = \nabla f(p + d)\). To simplify the notation, denote the constant Hessian by \(\nabla ^2 f(x) = P\) for all \(x \in {\mathbb {R}}^n\). We have \(F_1 = \mathrm {span}\{\nabla f(x_0) \}\), and for quadratic problems \(S_1 = 0 \,+\, \mathrm {span}\{\nabla f(x_0) + P \cdot 0 \}= \mathrm {span}\{ \nabla f(x_0) \}\). For the standard first-order method and \(k\ge 1\), the following holds

Similarly, for the generalized first-order method applied to a quadratic function and \(k\ge 1\), it holds that

Since \(S_1 = F_1\) and (15)–(16) are the same recursions, \(S_k = F_k,\; \forall k\ge 1\). \(\square \)
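The two ingredients of the proof of Lemma 4.1 can be checked numerically. Writing the recursion of Definition 4.1 as \(S_{k+1} = S_k + \mathrm{span}\{\nabla f(p) + \nabla^2 f(p) d : p \in x_0 + S_k,\, d \in S_k\}\), the sketch below verifies on a hypothetical random quadratic that (i) the first-order Taylor model of the gradient is exact, and (ii) randomly sampled generators of \(S_2\) span the same two-dimensional space as \(F_2 = \mathrm{span}\{\nabla f(x_0), \nabla f(x_1)\}\) for one gradient step with an assumed step size of 0.1. The helper `numerical_rank` and its relative tolerance are illustrative choices.

```python
import numpy as np

def numerical_rank(X, rel_tol=1e-8):
    """Number of singular values above rel_tol times the largest one."""
    sv = np.linalg.svd(X, compute_uv=False)
    return int(np.sum(sv > rel_tol * sv[0]))

rng = np.random.default_rng(6)
n = 6
M = rng.standard_normal((n, n))
P = M @ M.T + np.eye(n)                 # constant Hessian of f(x) = 0.5 x^T P x - c^T x
c = rng.standard_normal(n)
grad = lambda x: P @ x - c
x0 = rng.standard_normal(n)
g0 = grad(x0)

# (i) For a quadratic, grad f(p) + P d equals grad f(p + d).
p, d = rng.standard_normal(n), rng.standard_normal(n)
taylor_gap = np.linalg.norm(grad(p) + P @ d - grad(p + d))

# (ii) Sampled generators of S_2, with S_1 = span{g0}: p = x0 + a g0 and d = b g0.
gens = [g0] + [grad(x0 + a * g0) + P @ (b * g0) for a, b in rng.standard_normal((5, 2))]
S2 = np.column_stack(gens)

# F_2 of a first-order method after one gradient step x1 = x0 - 0.1 g0.
F2 = np.column_stack([g0, grad(x0 - 0.1 * g0)])
print(taylor_gap, numerical_rank(S2), numerical_rank(np.hstack([S2, F2])))
```

Both subspaces equal \(\mathrm{span}\{\nabla f(x_0), P\nabla f(x_0)\}\), a Krylov subspace, which is the structure the lower bounds of Theorems 2.1 and 2.2 exploit.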

With Lemma 4.1 at hand, we can now present an important result which motivates the name “generalized first-order methods”.

Theorem 4.1

Theorems 2.1 and 2.2 hold for generalized first-order methods.

Proof

Generalized first-order methods and first-order methods are equivalent for quadratic problems following Lemma 4.1. Hence, the results in Theorems 2.1 and 2.2 follow directly (see also [26, The. 2.1.7 and The. 2.1.13]). \(\square \)

This means that the worst-case convergence of generalized first-order methods is the same as that of first-order methods, i.e., sublinear for smooth problems and linear for smooth and strongly convex problems, including the finite-dimensional case. Hence, from a global convergence perspective, a generalized first-order method has more in common with first-order methods than with second-order methods.

5 Conclusions

In this paper, we have tried to answer two important questions. First, why are limited-memory BFGS methods not considered first-order methods? We believe that this is connected to the accessibility of the analysis provided in [25]. To this end, we have given a more straightforward analysis, using the definition of first-order methods provided in [26]. The second question is whether a better classification of truncated-Newton methods can be given. We have described a class of methods, named generalized first-order methods, to which the truncated-Newton methods belong, and have shown that the worst-case global convergence rate of generalized first-order methods is the same as that of first-order methods. Thus, in a worst-case scenario, quasi-Newton and truncated-Newton methods converge no faster than linearly for \(k\le {\textstyle \frac{1}{2}}n\) according to Theorem 2.2 (see also the comment in [26, p. 41]). In a worst-case scenario, faster convergence, such as superlinear convergence, can only take effect for \(k>{\textstyle \frac{1}{2}}n\). Since the number of iterations k for this bound depends on the problem dimension n, this bound has the strongest implications for large-scale optimization.

References

[1] Conn, A.R., Gould, N.I.M., Toint, P.L.: Trust-Region Methods. SIAM, Philadelphia (2000)

[2] Fletcher, R.: Practical Methods of Optimization, 2nd edn. Wiley, Hoboken (2000)

[3] Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer Series in Operations Research, Berlin (2006)

[4] Broyden, C.G.: Quasi-Newton methods and their application to function minimization. Math. Comput. 21, 368–381 (1967)

[5] Huang, H.Y.: Unified approach to quadratically convergent algorithms for function minimization. J. Optim. Theory Appl. 5(6), 405–423 (1970)

[6] Broyden, C.G.: The convergence of a class of double-rank minimization algorithms: 2. The new algorithm. IMA J. Appl. Math. 6(3), 222–231 (1970)

[7] Fletcher, R.: A new approach to variable metric algorithms. Comput. J. 13(3), 317–322 (1970)

[8] Goldfarb, D.: A family of variable-metric methods derived by variational means. Math. Comput. 24(109), 23–26 (1970)

[9] Shanno, D.F.: Conditioning of quasi-Newton methods for function minimization. Math. Comput. 24(111), 647–656 (1970)

[10] Davidon, W.C.: Variance algorithm for minimization. Comput. J. 10(4), 406–410 (1968)

[11] Fiacco, A.V., McCormick, G.P.: Nonlinear Programming. Wiley, New York (1968)

[12] Murtagh, B.A., Sargent, R.W.H.: A constrained minimization method with quadratic convergence. In: Optimization. Academic Press, London (1969)

[13] Wolfe, P.: Another variable metric method. Working paper (1968)

[14] Davidon, W.C.: Variable metric method for minimization. Technical report. AEC Research and Development Report, ANL-5990 (revised) (1959)

[15] Fletcher, R., Powell, M.J.D.: A rapidly convergent descent method for minimization. Comput. J. 6(2), 163–168 (1963)

[16] Hull, D.: On the Huang class of variable metric methods. J. Optim. Theory Appl. 113(1), 1–4 (2002)

[17] Nocedal, J.: Updating quasi-Newton matrices with limited storage. Math. Comput. 35(151), 773–782 (1980)

[18] Liu, D.C., Nocedal, J.: On the limited-memory BFGS method for large scale optimization. Math. Program. 45, 503–528 (1989)

[19] Meyer, G.E.: Properties of the conjugate gradient and Davidon methods. J. Optim. Theory Appl. 2(4), 209–219 (1968)

[20] Polak, E.: Computational Method in Optimization. Academic Press, Cambridge (1971)

[21] Ben-Tal, A., Nemirovski, A.: Lecture notes: Optimization III: Convex analysis, nonlinear programming theory, nonlinear programming algorithms. Georgia Institute of Technology, H. Milton Stewart School of Industrial and Systems Engineering. http://www2.isye.gatech.edu/~nemirovs/OPTIII_LectureNotes.pdf (2013)

[22] Shanno, D.F.: Conjugate gradient methods with inexact searches. Math. Oper. Res. 3(3), 244–256 (1978)

[23] Dixon, L.C.W.: Quasi-Newton algorithms generate identical points. Math. Program. 2(1), 383–387 (1972)

[24] Nocedal, J.: Finding the middle ground between first and second-order methods. OPTIMA 79, Mathematical Programming Society Newsletter, discussion column (2009)

[25] Nemirovskii, A.S., Yudin, D.B.: Problem Complexity and Method Efficiency in Optimization. Wiley, New York (1983). First published in Russian (1979)

[26] Nesterov, Y.: Introductory Lectures on Convex Optimization, A Basic Course. Kluwer Academic Publishers, Berlin (2004)

[27] Nesterov, Y.: A method for unconstrained convex minimization problem with the rate of convergence \(O({1}/{k^2})\). Dokl. AN SSSR (translated as Soviet Math. Dokl.) 269, 543–547 (1983)

[28] Nesterov, Y.: On an approach to the construction of optimal methods of minimization of smooth convex functions. Ekonom. i Mat. Metody 24, 509–517 (1988)

[29] Nesterov, Y.: Smooth minimization of nonsmooth functions. Math. Program. Ser. A 103, 127–152 (2005)

[30] Nesterov, Y.: Gradient methods for minimizing composite objective function. Université Catholique de Louvain, Center for Operations Research and Econometrics (CORE). No 2007076, CORE discussion papers (2007)

[31] Bioucas-Dias, J.M., Figueiredo, M.A.T.: A new TwIST: two-step iterative shrinkage/thresholding algorithms for image restoration. IEEE Trans. Image Process. 16(12), 2992–3004 (2007)

[32] Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imag. Sci. 2, 183–202 (2009)

[33] Tseng, P.: On accelerated proximal gradient methods for convex-concave optimization. Unpublished manuscript (2008)

[34] Gregory, R.T., Karney, D.L.: A Collection of Matrices for Testing Computational Algorithms. Wiley, New York (1969)

Acknowledgments

The authors would like to thank the anonymous reviewers of an earlier submission of this manuscript. The first author thanks Lieven Vandenberghe for interesting discussions on the subject. The second author thanks Michel Baes and Mike Powell for an inspirational and controversial discussion on the merits of global iteration complexity versus locally fast convergence rates in October 2007 at OPTEC in Leuven. Grants The work of T. L. Jensen was supported by The Danish Council for Strategic Research under Grant No. 09-067056 and The Danish Council for Independent Research under Grant No. 4005-00122. The work of M. Diehl was supported by the EU via FP7-TEMPO (MCITN-607957), ERC HIGHWIND (259166), and H2020-ITN AWESCO (642682).


Additional information

Communicated by Ilio Galligani.

Appendices

Appendix 1: Proof of Theorem 2.2

Proof

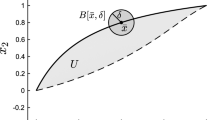

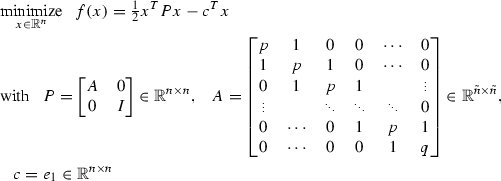

We follow the approach of [26, The. 2.1.13], but for finite-dimensional problems (this is more complicated, as indicated in [26, p. 66]). Consider the problem instance

where \({\tilde{n}}\le n\) and the initialization is \(x_0 = 0\). We could also have generated the problem \({\bar{f}}({\bar{x}}) = f({\bar{x}} - \bar{x}_0)\) and initialized it at \({\bar{x}}_0\), since this merely shifts the sequence generated by any first-order method. To see this, let \(k\ge 1\) and let \({\bar{x}} \in {\bar{x}}_0 + {\bar{F}}_k\) be an allowed point for a first-order method applied to a problem with objective \({\bar{f}}\) using \({\bar{x}}_0\) as initialization. Let \(x = {\bar{x}} - {\bar{x}}_0\); then

Consequently \(x \in F_k = {\bar{F}}_k\) and we can simply assume \(x_0 = 0\) in the following.

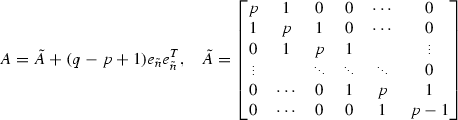

We select \(p = 2+\mu \) and \(q=(p+\sqrt{p^2-4})/2\) with the bounds \(1\le q \le p\). Then:

The value \(q-p+1\ge 0 \) for \(\mu \ge 0\). The eigenvalues of the matrix \({\tilde{A}}\) are given as [34]

The smallest and largest eigenvalues of A are then bounded as

With \(\mu \le 1\), we have \(\lambda _\mathrm{min}(P) = \lambda _\mathrm{min} (A)\) and \(\lambda _\mathrm{max}(P) = \lambda _\mathrm{max} (A)\). The condition number is given by \(Q=\frac{L}{\mu } = \frac{p+3}{p-2}=\frac{5+\mu }{\mu }\ge 6\), and the solution is given as \(x^\star = P^{-1} e_1\). The inverse \(A^{-1}\) can be found in [34], and the ith entry of the solution is then

where

Since q is a root of the second-order polynomial \(y^2-p y+1\), we have \(s_i = q^i,\forall i\ge 0\). Using \(Q = \frac{p+3}{p-2} \Leftrightarrow p = 2\frac{Q+\frac{3}{2}}{Q-1}\) and then

A simple choice of \(\beta \) in (17) is, for instance, \(\beta = \frac{\frac{3}{2} + \sqrt{{\textstyle \frac{1}{2}}+ 5 Q}}{\sqrt{Q}} \Big \vert _{Q=8} \simeq 2.78\), which is sufficient for any \(Q \ge 8\) since this expression decreases in Q. However, solving the nonlinear equation shows that \(\beta =1.1\) is also sufficient for any \(Q \ge 8\). Since \(\nabla f(x) = P x - c\), the set \(F_k\) expands as:
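The elementary relations used in this part of the proof can be checked numerically. The sketch below verifies, for a few hypothetically chosen values of \(\mu \in (0, 1]\), that \(q = (p + \sqrt{p^2 - 4})/2\) with \(p = 2 + \mu\) is a root of \(y^2 - py + 1\) with \(1 \le q \le p\), that \(Q = (p+3)/(p-2) = (5+\mu)/\mu \ge 6\) is inverted by \(p = 2(Q + \frac{3}{2})/(Q-1)\), and it evaluates the simple choice of \(\beta\) at \(Q = 8\). The function names are illustrative only.

```python
import math

def q_of_p(p):
    """Larger root of y^2 - p y + 1 = 0 (real for p >= 2)."""
    return (p + math.sqrt(p * p - 4.0)) / 2.0

def Q_of_mu(mu):
    """Condition number Q = (p + 3) / (p - 2) with p = 2 + mu."""
    p = 2.0 + mu
    return (p + 3.0) / (p - 2.0)

def p_of_Q(Q):
    """Inverse relation p = 2 (Q + 3/2) / (Q - 1)."""
    return 2.0 * (Q + 1.5) / (Q - 1.0)

def beta_simple(Q):
    """The simple (conservative) choice of beta, evaluated at a given Q."""
    return (1.5 + math.sqrt(0.5 + 5.0 * Q)) / math.sqrt(Q)

for mu in (0.1, 0.5, 1.0):
    p, Q = 2.0 + mu, Q_of_mu(mu)
    q = q_of_p(p)
    print(mu, q * q - p * q + 1.0, q - p + 1.0, Q, p_of_Q(Q) - p)
print(round(beta_simple(8.0), 2))   # 2.78
```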

Since P is tridiagonal and \(x_0 = 0\), we have

Considering the relative convergence

Fixing \({\tilde{n}}=2k\), we have for \(k = {\textstyle \frac{1}{2}}{\tilde{n}} \le {\textstyle \frac{1}{2}}n\)
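The mechanism behind the step “Since P is tridiagonal and \(x_0 = 0\)” is that a first-order method started at zero can fill at most one new coordinate per iteration. The sketch below illustrates this with a hypothetical tridiagonal matrix \(P = (2+\mu)I - (\text{sub- and superdiagonal ones})\) and \(c = e_1\), so that \(x^\star = P^{-1}e_1\); the matrix has the same structure as, but is not necessarily identical to, the one used in the proof. After k gradient steps the entries \(k+1, \ldots, n\) of \(x_k\) are exactly zero, so \(\|x_k - x^\star\|_2^2 \ge \sum_{i>k} (x^{\star,i})^2\).

```python
import numpy as np

n, mu = 12, 0.5
# Hypothetical tridiagonal SPD matrix (worst-case structure) and c = e_1.
P = (2.0 + mu) * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
c = np.zeros(n)
c[0] = 1.0
x_star = np.linalg.solve(P, c)

# Gradient method from x_0 = 0 with step size 1/(4 + mu); 4 + mu bounds the largest eigenvalue of P.
x = np.zeros(n)
iterates = [x]
for _ in range(n // 2):
    x = x - (P @ x - c) / (4.0 + mu)
    iterates.append(x)

for k, xk in enumerate(iterates):
    tail = np.sum(x_star[k:] ** 2)           # part of x* that x_k cannot reach
    print(k, np.count_nonzero(xk), np.sum((xk - x_star) ** 2) >= tail)
```

Any other first-order method, for example an accelerated one, obeys the same zero pattern, because each new gradient \(P x - c\) extends the nonzero pattern by at most one index.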

and inserting (17) yields

\(\square \)

Remark We note that it is possible to explicitly state a smaller \(\beta \) and hence a tighter bound, but we prefer to keep the explanation of \(\beta \) simple.

Appendix 2: Proof of Corollary 3.3

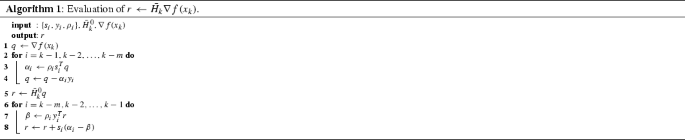

For this proof, we note that the multiplication \({\bar{H}}_k \nabla f(x_k)\) can be calculated efficiently via Algorithm 1 [3, Alg. 7.4]. From Algorithm 1, we obtain that with \({\bar{H}}_k^0 = \gamma _k I\) and using (6),

and then recursively inserting

The iterations are then given as

and L-BFGS is a first-order method. \(\square \)


Jensen, T.L., Diehl, M. An Approach for Analyzing the Global Rate of Convergence of Quasi-Newton and Truncated-Newton Methods. J Optim Theory Appl 172, 206–221 (2017). https://doi.org/10.1007/s10957-016-1013-z
