Abstract

We consider bootstrap percolation and diffusion in sparse random graphs with fixed degrees, constructed by configuration model. Every vertex has two states: it is either active or inactive. We assume that to each vertex is assigned a nonnegative (integer) threshold. The diffusion process is initiated by a subset of vertices with threshold zero which consists of initially activated vertices, whereas every other vertex is inactive. Subsequently, in each round, if an inactive vertex with threshold \(\theta \) has at least \(\theta \) of its neighbours activated, then it also becomes active and remains so forever. This is repeated until no more vertices become activated. The main result of this paper provides a central limit theorem for the final size of activated vertices. Namely, under suitable assumptions on the degree and threshold distributions, we show that the final size of activated vertices has asymptotically Gaussian fluctuations.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Threshold models of contagion have been used to describe many complex phenomena in diverse areas including epidemiology [7, 33, 35, 36, 41], neuronal activity [3, 39], viral marketing [24, 25, 27] and spread of defaults in economic networks [4, 8].

Consider a connected graph \(G=(V,E)\) with n vertices \(V=[n]:=\{1,2, \ldots , n\}\). Given two vertices \(i,j \in [n]\), we write \(i \sim j\) if \(\{i,j\} \in E\). Every vertex has two states: it is either active or inactive (sometimes also referred to as infected or uninfected). We assume that to each vertex is assigned a nonnegative (integer) threshold. Let \(\theta _i\) be the threshold of vertex i. The diffusion is then initiated by the (deterministic) subset of vertices with threshold zero which consists of initially activated vertices \({\mathcal {A}}_0:=\{i\in V: \theta _i=0\}.\) Subsequently, in each round, if an inactive vertex with threshold \(\theta \) has at least \(\theta \) of its neighbours activated, then it also becomes active and remains so forever. Namely, at time \(t\in {\mathbb {N}}\), the (deterministic) set of active vertices is given by

where  denotes the indicator of an event \({\mathcal {E}}\), i.e., this is 1 if \({\mathcal {E}}\) holds and 0 otherwise.

denotes the indicator of an event \({\mathcal {E}}\), i.e., this is 1 if \({\mathcal {E}}\) holds and 0 otherwise.

We study above threshold driven contagion model in sparse random graphs with fixed degrees, constructed by configuration model. The interest in this random graph model stems from its ability to mimic some of the empirical properties of real networks, while allowing for tractability (see e.g., [16, 32, 40]). We describe this random graph model next.

1.1 Configuration Model

For each integer \(n\in {\mathbb {N}}\), we consider a system of n vertices \([n]:=\{1,2, \ldots ,n\}\) endowed with a sequence of initial non-negative integer thresholds \((\theta _{n,i})_{i=1}^n\). We are also given a sequence \({\textbf{d}}_n = (d_{n,i})_{i=1}^n\) of nonnegative integers \(d_{n,1},\ldots ,d_{n,n}\) such that \(\sum _{i=1}^n d_{n,i}\) is even. By means of the configuration model we define a random multigraph with given degree sequence, denoted by \({\mathcal {G}}(n,{\textbf{d}}_n)\). It starts with n vertices and \(d_{n,i}\) half-edges corresponding to the i-th vertex. Then at each step two half-edges are selected uniformly at random, and a full edge is completed by joining them. The multigraph is constructed when there is no more half edges left. Although self-loops and multiple edges may occur, these become rare (under our regularity conditions below) as \(n \rightarrow \infty \). It is easy to see conditional on the multigraph being simple graph, we obtain a uniformly distributed random graph with these given degree sequences; see e.g. [40].

Let \(u_n(d, \theta )\) be the numer of vertices with degree d and threshold \(\theta \):

We assume the following regularity conditions on the degree sequence and thresholds.

Condition 1.1

For each \(n\in {\mathbb {N}}\), \({\textbf{d}}_n = (d_{n,i})_{i=1}^n\) and \((\theta _{n,i})_{i=1}^n\) are sequence of non-negative integers such that \(\sum _{i=1}^n d_{n,i}\) is even and, for some probability distribution \(p:{\mathbb {N}}^2\rightarrow [0,1]\) independent of n, with \(u_n(d, \theta )\) as defined above:

- \(\mathrm{(C_1)}\):

-

as \(n\rightarrow \infty \),

$$\begin{aligned} \frac{u_n(d,\theta )}{n}:=\frac{\#\{ i \in [n]: d_{n,i} = d, \ \theta _{n,i} = \theta \}}{n} \longrightarrow p(d,\theta ) \end{aligned}$$for every non-negative integers d and \(\theta \). We further assume \(\sum _{\theta =0}^\infty p(0,\theta )<1\).

- \(\mathrm{(C_2)}\):

-

for every \(A>1\), we have

$$\begin{aligned} \sum _{d= 0}^\infty \sum _{\theta =0}^\infty u_n(d,\theta )A^d = \sum _{i=1}^n A^{d_{n,i}} = O(n). \end{aligned}$$

Remark 1.2

Let \((D_n,\Theta _n)\) be random variables with joint distribution \({\mathbb {P}}(D_n=d, \Theta _n=\theta )=u_n(d, \theta )/n,\) which is the distribution of dergee and threshold of a random vertex in \({\mathcal {G}}(n,{\textbf{d}}_n)\). Moreover, let \((D,\Theta )\) be random variables (over nonnegative integers) with joint distribution \({\mathbb {P}}(D=d, \Theta =\theta )=p(d,\theta )\). Let

Then Condition 1.1 can be rewritten as \((D_n, \Theta _n) {\mathop {\longrightarrow }\limits ^{d}}(D, \Theta )\) as \(n\rightarrow \infty \) and \({\mathbb {E}}[A^{D_n}]=O(1)\) for each \(A>1\), which in particular implies the uniform integrability of \(D_n\), so (as \(n\rightarrow \infty \))

Similarly, all higher moments converge.

1.2 Diffusion Process in \({\mathcal {G}}(n,{\textbf{d}}_n)\)

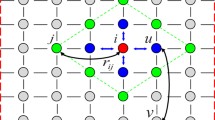

The aim of this section is to write the activation process described above as a diffusion process. We start with the graph \({\mathcal {G}}(n,{\textbf{d}}_n)\), and then remove (here the removal of a vertex is the same as activation described above) the initially activated vertex with threshold zero, say \({\mathcal {A}}_0\). Now the degree of each vertices in the graph induced by \([n]\setminus {\mathcal {A}}_0\) is less than or equal to the degree of that vertex in \({\mathcal {G}}(n,{\textbf{d}}_n)\). We denote the degree of vertex i at time t in the evolving graph by \(d_{i}^B(t)\) (here the time t is non-negative integer valued, later we will introduce a continuous time). In this process, we remove the vertex i at time t if \(d_i^B(t) \le d_i-\theta _i\) (if there are more than one such i, then we we select one arbitrarily). Note that at the end of this procedure all the vertices that are removed will be the set of active vertices, and the rest will remain inactive.

Let us describe the process by assigning a type A (active) or B (inactive) to each of these vertices. To begin with (at time \(t=0\)), the initially activated vertices (with threshold zero) are said to be of type A, and all vertices not in the initially activated vertices are of type B. At time \(t>0\), a vertex is said to be of type A if \(d_i^B(t) \le d_i-\theta _i\), otherwise, we call it of type B. In particular at the beginning of the diffusion process, all vertices in initially activated vertices are of type A, and all vertices not in the initially activated vertices are by definition of type B. As we proceed with the algorithm, \(d_i^B(t)\) might decrease and a type B vertices may become of type A. In terms of edges, a half-edge is of type A or B when its endpoint is. As long as there is any half-edge of type A, we choose one such half-edge uniformly at random and remove the edge it belongs to. Note that it may result in changing the other endpoint from B to A (by decreasing \(d_i^B(t)\)) and thus create new half-edges of type A. When there are no half-edges of type A left, we stop the process and the final set of activated vertices is the set of vertices of type A (which are all isolated).

The next step is to turn this process into a balls and bins problem. In this step we simultaneously run the activation process described above and construct the configuration model. We call a type A (or B) vertex as type A (or B) bins and similarly consider the half-edges as balls of type A (or B) if its end point is type A (or B). At each step, we remove first one random ball from the set of balls in A-bins and then another ball without restriction. We stop when there are no non-empty A-bins. Therefore we alternately remove an A-ball and a random ball. We may just as well say that we first remove a random A-ball. We then remove balls in pairs, first a ball without restriction, and then a random A-ball, and stop with the ball, leaving no A-ball to remove.

The next step is to run the above deletion process in continuous time. Each ball has an exponentially distributed random lifetime with mean 1, independent of the other balls. In other words balls die and are removed at rate 1, independent of each other. Also when a ball dies we remove a random A ball (in terms of configuration model these two half-edges form an edge). We stop when we should remove an A ball but there is no such a ball. Let \(H_A(t)\) and \(H_B(t)\) denote the number of A balls and B balls respectively, at time t. Note that these parameters depend on n, but we will omit the subscript as it is clear from the context. We also let \(A_n(t)\) and \(B_n(t)\) be the number of A bins and B bins at time t.

Let \(\tau _n\) be the stopping time of the above diffusion process. Note that there are no A balls left at \(\tau _n\). However we pretend that we delete a (nonexistent) A ball at \(\tau _n\)-th step and denote \(H_A(\tau ) = -1\). Therefore the stopping time \(\tau _n\) may be characterized by \(H_A(\tau ) = -1\), and \(H_A(t) \ge 0\) for all \(0\le t < \tau _n\). Denoting by \({\mathcal {A}}_n^*\) the final set of activated vertices, we observe that \(|{\mathcal {A}}^*_n|= n-B_n(\tau _n).\) Next we consider the balls only. The total number of balls at time t is \(H_A(t)+H_B(t)\). In the evolution of this process each ball dies with rate 1 and another ball is removed upon its death. Therefore, \(H_A(t)+H_B(t)\) is a death process with rate 2.

1.3 Main Theorems

The main result of this paper provides a central limit theorem for the final size of activated vertices. Namely, when the degree and threshold sequences satisfy Condition 1.1, we show that the final size of activated vertices \(|{\mathcal {A}}^*_n|\) has asymptotically Gaussian fluctuations.

Let

and \(\textsf{Bin}(d,z)\) denotes the binomial distribution with parameters d and z.

For the following theorems, we will use the notation

where \(u_n(d,\theta )\) is the number of vertices with degree d and threshold \(\theta \), and

Theorem 1.3

Assume that Condition 1.1 holds. Let \(\tau _n' \le \tau _n\) be a stopping time such that \(\tau _n' {\mathop {\longrightarrow }\limits ^{p}}t_0\) for some \(t_0 \ge 0\). Then in \({\mathcal {D}}[0,\infty )\), as \(n\rightarrow \infty \),

for all \(t\le t_0\), where \(Z_{A}\) is a continuous Gaussian process on \([0,t_0]\) with mean 0 and variance

where

The proof of above theorem is provided in Sect. 3.1.

Let us define

which also gives us the plausible candidate for the limit of our stopping time

Note that the solution \({\widehat{z}}\) always exists since \(h_A(0)=0\). Also, \(h_A(z)\) is continuous in z. We will further assume that if \({\widehat{z}}\ne 0\), then it is not a local minimum of \(h_A(z)\), i.e., \(\alpha :=h'_A({\widehat{z}})>0\).

Lemma 1.4

Let \(\tau _n\) be the stopping time of the diffusion process such that \(H_A(\tau _n) = -1\) for the first time. Then \(\tau _n {\mathop {\longrightarrow }\limits ^{p}}-\ln {\widehat{z}}\) as \(n\rightarrow \infty \), where \({\mathop {\longrightarrow }\limits ^{p}}\) denotes the convergence in probability.

The proof of lemma is provided in Sect. 3.3.

We are now interested in the final number of activated vertices (bins) which is given by \(\lim _{t \rightarrow \infty }A_n(t \wedge \tau _n)\). Note that from Theorem 1.3 with the stopping time \(\tau _n\) (as in Lemma 1.4), we have for \(t\ge -\ln {{\widehat{z}}}\),

Therefore for \(t\ge \ln {{\widehat{z}}}\),

The following theorem provides a central limit theorem for the final size of activated vertices. Let us denote by

and let \({\widehat{z}}_n\) be the largest \(z\in [0,1]\) such that \(h_A^n(z)=0\).

Theorem 1.5

Assume Condition 1.1. Let \({\widehat{\tau }}=-\ln {{\widehat{z}}}\) and \({\widehat{\tau }}_n=-\ln {{\widehat{z}}_n}\). We have:

-

If \({\widehat{z}}=0\) then asymptotically almost all nodes become active and \(|{\mathcal {A}}^*_n|=n-o_p(n)\).

-

If \({\widehat{z}}\ne 0\) and \({\widehat{z}}\) is a stable solution, i.e. \(\alpha :=h'_A({\widehat{z}})>0\), then

$$\begin{aligned} n^{-1/2}\left( |{\mathcal {A}}^*_n|- n{\widehat{a}}_n({\widehat{\tau }}_n)\right) {\mathop {\longrightarrow }\limits ^{d}}Z_{\text {Act}}, \end{aligned}$$where \(Z_{\text {Act}}= \frac{{{\widehat{a}}'}({\widehat{\tau }})}{\alpha } Z_{HA}({\widehat{\tau }})+ Z_A({\widehat{\tau }})\), where \((Z_{HA}({\widehat{\tau }}), Z_A({\widehat{\tau }}))\) jointly follow a Gaussian distribution that is described in Proposition 3.3.

The proof of above theorem is given in Sect. 3.4, where we first derive a joint functional central limit theorem for the processes \((A_n(t),H_A(t))\), from which we derive the theorem.

1.4 Relation to Bootstrap Percolation and k-Core

We now discuss our results with respect to related literature.

The diffusion model we consider in this paper can be seen as a generalization of bootstrap percolation and k-core in any graph \(G=(V,E)\).

In the case of equal thresholds (\(\theta _i=\theta \) over all vertices), our model is equivalent to bootstrap percolation (with deterministic initial activation). This process was introduced by Chalupa, Leath and Reich [13] in 1979 as a simplified model of some magnetic disordered systems. A short survey regarding applications of bootstrap percolation processes can be found in [1]. Recently, bootstrap percolation has been studied on varieties of random graphs models, see e.g., [22] for random graph \(G_{n,p}\), [5, 6, 18] for inhomogeneous random graphs and [2, 9, 26] for the configuration model; see also [12, 15, 28, 29, 34].

The k-core of a graph G is the largest induced subgraph of G with minimum vertex degree at least k. The k-core of an arbitrary finite graph can be found by removing vertices of degree less than k, in an arbitrary order, until no such vertices exist. By setting the threshold of vertex i as \(\theta _i=(d_i-k+1)_+=\max \{d_i-k+1,0\}\), we find that \(B_n(\tau _n)\) will be the size of k-core in the random graph \(G(n,{\textbf{d}}_n)\).

The questions concerning the existence, size and structure of the k-core in random graphs, have attracted a lot of attention over the last few decades, see e.g., [30, 37] for random graph \(G_{n,p}\), [10, 38] for inhomogeneous random graphs and [14, 17, 20, 21, 31] for the configuration model.

In particular, more closely related to our paper, [21] analyze the asymptotic normality of the k-core for sparse random graph \(G_{n,p}\) and for configuration model. We continue on the same line as [21] and generalize partly their results by allowing different threshold levels to each of vertices. Our proof technique is also inspired by [21]. In particular, we look at the spread of activation (or infection) and constructing the configuration model simultaneously. Then we express the number of inactive vertices at a particular time point in terms of a martingale. After that we appeal to a martingale limit theorem from [19] to derive the limiting distribution.

Notation We let \({\mathbb {N}}\) be the set of nonnegative integers. Let \(\{ X_n \}_{n \in {\mathbb {N}}}\) be a sequence of real-valued random variables on a probability space \( (\Omega , {\mathbb {P}})\). If \(c \in {\mathbb {R}}\) is a constant, we write \(X_n {\mathop {\longrightarrow }\limits ^{p}} c\) to denote that \(X_n\) converges in probability to c. That is, for any \(\epsilon >0\), we have \({\mathbb {P}} (|X_n - c|>\epsilon ) \rightarrow 0\) as \(n \rightarrow \infty \). We write \(X_n = o_{p} (a_n)\), if \(|X_n|/a_n\) converges to 0 in probability. We use \({\mathop {\longrightarrow }\limits ^{d}}\) for convergence in distribution. If \({\mathcal {E}}_n\) is a measurable subset of \(\Omega \), for any \(n \in {\mathbb {N}}\), we say that the sequence \(\{ {\mathcal {E}}_n \}_{n \in {\mathbb {U}}}\) occurs with high probability (w.h.p.) if \({\mathbb {P}} ({\mathcal {E}}_n) = 1-o(1)\), as \(n\rightarrow \infty \). Also, we denote by \(\textsf{Bin}(k,p)\) a binomial distribution corresponding to the number of successes of a sequence of k independent Bernoulli trials each having probability of success p. We will suppress the dependence of parameters on the size of the network n, if it is clear from the context. We use the notation  for the indicator of an event \({\mathcal {E}}\) which is 1 if \({\mathcal {E}}\) holds and 0 otherwise. We let \({\mathcal {D}}[0,\infty )\) be the standard space of right-continuous functions with left limits on \([0,\infty )\) equipped with the Skorohod topology (see e.g. [19, 23])

for the indicator of an event \({\mathcal {E}}\) which is 1 if \({\mathcal {E}}\) holds and 0 otherwise. We let \({\mathcal {D}}[0,\infty )\) be the standard space of right-continuous functions with left limits on \([0,\infty )\) equipped with the Skorohod topology (see e.g. [19, 23])

2 Preliminaries

In this section we provide some preliminary lemmas that will be used in our proofs.

2.1 Some Death Process Lemmas

Consider a pure death process with rate 1. This process starts with some number of balls whose lifetimes are i.i.d. rate 1 exponentials.

Lemma 2.1

(Death Process Lemma) Let \(N_n(t)\) be the number of balls alive at time t in a rate 1 death process with \(N_n(0)=n\). Then

Proof

\(1-N_n(t)/n\) is the empirical distribution function of the n lifetimes, which are i.i.d. random variables with the distribution function \(1-e^{-t}\). Therefore the result follows using Glivenko-Cantelli theorem (see e.g. [23, Proposition 4.24]). \(\square \)

Lemma 2.2

(Number of Balls Centrality Lemma) The number of balls \(H_A(t)+H_B(t)\) follow a pure death process, and

Proof

In the evolution of this process each ball dies with rate 1 and another ball is removed upon its death. Therefore \(A(t)+B(t)\) is a death process with rate 2. Therefore the lemma follows using Lemma 2.1. \(\square \)

2.2 Martingale Limit Theorems

We recall some martingale theory that are going to be useful in proving Theorem 1.3. Let X be a martingale defined on \([0,\infty )\), we denote its quadratic variation of X by \([X,X]_t\), and the bilinear extension of quadratic variation to two martingales X and Y by \([X,Y]_t\). If X and Y be two martingales with path-wise finite variation, then

where \(\Delta X(s):= X(s) -X(s-)\) is the jump of X at s. Similarly, \(\Delta Y(s):= Y(s) -Y(s-)\). In our context there will only be countable number of jumps for the martingales under consideration, and the sum in Eq. (2) will be finite. We will assume \([X,Y]_0 = 0\). For vector-valued martingales \(X = (X_i)_{i=1}^m\) and \(Y = (Y_i)_{i=1}^n\), we define the square bracket [X, Y] to be the matrix \(([X_i,Y_j])_{i,j}\). A real-valued martingale X(s) on [0, t] is an \(L^2\) if and only if \({\mathbb {E}}[X,X]_t <\infty \) and \({\mathbb {E}}|X(0)|^2 <\infty \), and then \({\mathbb {E}}|X(t)|^2 = {\mathbb {E}}[X,X]_t+{\mathbb {E}}|X(0)|^2\). We will use the following martingale limit theorem from [19], see also [21, Proposition 4.1].

Proposition 2.3

For each \(n\ge 1\), let \(M_{n}(t)=(M_{ni}(t))_{i=0}^q\) be a q-dimensional martingale on \([0,\infty )\) and \(M_n(0)=0\). Also \(\Sigma (t)\) be a continuous positive semi-definite function such that for every fixed \(t\ge 0\)

Then \(M_n{\mathop {\longrightarrow }\limits ^{d}}M\), in \({\mathcal {D}}[0,\infty )\) where M is continuous q-dimensional Gaussian martingale with \({\mathbb {E}} M(t)=0\) and covariances \(\textrm{Cov}(M(t))= \Sigma (t)\).

In the next section, we will apply Proposition 2.3 to stopped processes.

3 Proofs

In this section we present the proofs of Theorem 1.3, Lemma 1.4 and Theorem 3.4.

Remark 3.1

In the proof of Theorem 1.3, we always consider the processes up to time \(\tau _n'\le \tau _n\). Although, sometimes it will be possible to extend the process (for example by removing non-existent balls), that will not be relevant for us, and thus we will always stop the process at \(\tau _n'\).

3.1 Proof of Theorem 1.3

We denote the number of (alive) balls at time t by \(W_n(t)\). Clearly \(W_n(t)= H_A(t) +H_B(t)\). Let \(m_n:=\frac{1}{2} \sum _{i=1}^n d_{n,i}\) denotes the total number of edges in \({\mathcal {G}}(n,{\textbf{d}}_n)\). In our construction \(W_n(0)=2m_n-1\), and \(W_n\) decreases by 2 each time a ball dies. The death happens with rate 1, and therefore \(W_n(t)+\int _{0}^t 2W_n(s)\, ds\) is a martingale on \([0,\tau _n']\). In differential form

where \({\mathcal {M}}\) is a martingale. Now by Ito’s lemma and (3),

which implies that \({\widehat{W}}_n(t): = e^{2t} W_n(t)\) is another martingale. Note that distinct balls die at distinct time with probability one, also all jumps in \(W_n(t)\) equals \(-2\). The quadratic variation of \({\widehat{W}}_n(t)\) is given by

Using the fact that \(W_n(t)\) is a decreasing function in t and \(W_n(0) = 2m_n-1\) we get

Let the stopped martingale on \([0,\infty )\) be \(W_n^*(t):= \frac{1}{n}{\widehat{W}}(t \wedge \tau _n')\). Then \(W_n^*\) is a martingale on \([0,\infty )\) and, for every \(T<\infty \), the quadratic variation of this martingale is

as n goes to infinity. Also \(0\le W_n^*(t) \le 2m_n/n = O(1)\). Therefore by Proposition 2.3 on \({\mathcal {D}}[0,\infty )\), \(W_n^*(t) - W_n^*(0) {\mathop {\longrightarrow }\limits ^{p}}0\) uniformly on [0, T], and as \(n\rightarrow \infty \),

Now using (4), (5) and (6) we get for every \(t \in [0, T\wedge \tau _n']\),

Note that in the last display, (6) ensures that we can use the approximation \(W_n(t)= W_n(0)e^{-2t}+o_p(n)\) on \([0, T\wedge \tau _n']\). Let \(W_i(t)\) be the number of balls for vertex (bin) i and denote by (for \(d,\theta , \ell \in {\mathbb {N}}\))

the set of vertices (bins) with degree d, threshold \(\theta \) and \(\ell \) balls at time t. Let \(B_{d, \theta ,\ell }(t)=|{\mathcal {B}}_{d, \theta ,\ell }(t)|\). Therefore, the total number of (inactive) B bins at time t is given by

where \({\widetilde{B}}_{d,\theta ,\ell } = \sum _{r=\ell }^d B_{d, \theta ,r}(t)\) denotes the number of (inactive) B bins at time t with initial degree d and threshold \(\theta \) with at least \(\ell \) balls. In the rest of the proof we will derive a central limit theorem for the quantity \(B_n(t)\), which is the number of inactive vertices at time t. This will immediately give us our desired result since \(A_n(t)+B_n(t) =n\).

Note that \({\widetilde{B}}_{d,\theta ,\ell }\) decreases by one only when a ball dies in an uninfected (inactive) bin with initially d balls and threshold \(\theta \) that has exactly \(\ell \) balls, and there are precisely \(\ell B_{d,\theta ,\ell }\) many such balls, therefore

where \({\mathcal {M}}\) is a martingale.

Let us now define the following quantity

which gives

Plugging in (8) we get

where \(\, d\mathcal {M'}(t) = e^{\ell t} \,d {\mathcal {M}}(t)\). Since this is yet another martingale differential, we can define the following martingale for every fixed \(d\ge \ell \ge 0\)

The quadratic variation will be same as that of \({\widehat{B}}_{d,\theta ,\ell }(t)\), i.e.,

Let us now define the centered version of \(M_{d,\theta ,\ell }\) as follows

This is of course the centered martingale where for \(\ell \le d\),

The quadratic variation of \({\widetilde{M}}_{d,\theta ,\ell }(t)\) can be calculated using integration by parts as follows

Recall from (9) that \({\widehat{B}}_{d,\theta ,\ell }(t):= e^{\ell t} {\widetilde{B}}_{d,\theta ,\ell }(t),\) where \({\widetilde{B}}_{d,\theta ,\ell }(t)\) is the number of (uninfected) B bins with at least \(\ell \) balls at time t with initial number of balls (degree) d and threshold \(\theta \). Also, recall that balls die independently with rate 1. Let us denote

so that \(u_n(d,\theta ) = |{\mathcal {U}}_n(d,\theta )|\). This gives also \(u_{n}(d,\theta ) = \sum _{\ell }B_{d,\theta ,\ell }(0)\).

Recall that \(B_{d, \theta , \ell }(t) = |{\mathcal {B}}_{d,\theta ,\ell }(t)|\) where \({\mathcal {B}}_{d,\theta ,\ell }(t) = \{i\in [n]: d_i=d, \theta _i=\theta , W_i(t)=\ell \}\). Since each ball dies with rate one independent of each other and survival probability of a ball after time t is equal to \(e^{-t}\). A bin from \({\mathcal {U}}_n(d,\theta )\) has at least \(\ell \) balls at time t with probability

Hence we get

which gives

Now note that (for \(\theta \ge 1\))

Hence, by using Condition 1.1 and Glivenko-Cantelli’s lemma (since each bins are independent), we get

as \(n\rightarrow \infty \). Therefore, the quadratic variation of \({\widetilde{M}}_{d,\theta ,\ell }(t)\) satisfies (for \(\theta \ge 1\) and \(\ell \le d\)) (see also Eq. 31)

We will also use the following crude estimate. Using (11) we get that

Therefore we can apply Proposition 2.3 to the stopped process at \(\tau _n'\)

where \(Z_{d,\theta ,\ell }\) is a Gaussian process with \({\mathbb {E}}Z_{d,\theta ,\ell }(t) =0\) and covariance \(\Delta _{d,\theta ,\ell }(t)\).

Also note that \({\widetilde{B}}_{d,\theta ,\ell }(t)\) and \({\widetilde{B}}_{d',\theta ',\ell '}(t)\) can not change together almost surely for \((d,\theta ,\ell )\ne (d',\theta ',\ell ')\), therefore \([{\widetilde{M}}_{{d,\theta ,\ell }}, {\widetilde{M}}_{{d', \theta ', \ell '}}] = 0\). Thus for a finite set S, \(\bigl ({\widetilde{M}}_{d,\theta ,\ell }\bigr )_{(d,\theta ,\ell )\in S}\) converges to \((Z_{d,\theta ,\ell })_{(d,\theta ,\ell )\in S}\) in distribution, and \((Z_{d,\theta ,\ell })\) are independent.

Let us now express \({\widehat{B}}_{d,\theta ,\ell }\) in terms of \({M}_{d,\theta ,\ell }\) so that we can apply the limit theorems for \({\widetilde{M}}_{d,\theta ,\ell }\)’s to get limit theorems for \({\widehat{B}}_{d,\theta ,\ell }\).

Using (10) one can write

To see how to get (16) from (10), note that

Proceeding like this by repeatedly using (10), we can obtain (16). Since \(M_{d,\theta ,\ell }\) is a martingale \({\mathbb {E}}[M_{d,\theta ,\ell }(t)]= M_{d,\theta ,\ell }(0)\), and using (16) we get \( {\mathbb {E}}[{\widehat{B}}_{d,\theta ,\ell }(t)]={\widehat{b}}_{d,\theta ,\ell }(t)\), where we define for \(t\ge 0\),

Using (16), (17) and (12), it turns out that the centered version of \({\widehat{B}}_{d,\theta , \ell }(t)\) satisfies

Now using (14) we get (for \(\ell \le d\) and for any \(T\ge 0\)):

Then using Condition 1.1 and the simple fact that for \(A>1\),

we get for any \(T\ge 0\), by setting \(A= e^{4T}\), there is a constant \(C_A\) such that

Now using Doob’s \(L^2-\)inequality we get

Therefore using Cauchy–Schwarz inequality,

Therefore by (15) and Fatou’s lemma we get

Let us define

Then we have (since \((e^{-s}-e^{-t})^{r-\ell -1}\le (1-e^{-t})^{r-\ell -1}\) for \(s\in [0,t]\))

Therefore (19) yields

For fixed T, using the sum formula for negative binomial distribution with parameters \(\ell +1\) and probability of success \(1-e^{-T}(1-e^{-T})\),

which implies that (from (21))

Since \(1-e^{-T}(1-e^{-T})>e^{-T}\) (from \((1-e^{-T})^2>0\) for \(T>0\)), RHS of (21) converges to zero uniformly in n, as \(\ell \rightarrow \infty \). Now using (16), (17), (21) and applying [11, Theorem 4.2] we get

in \({\mathcal {D}}[0,\infty )\) for each \(\ell \), where

Again using (20) we obtain that the sum in (22) almost surely uniformly converges for \(t\le t_0\), and this gives \({\widetilde{Z}}_{d,\theta ,\ell }\) is continuous for each \((d,\theta ,\ell )\). Using (9), we can write

Now recall that we are interested in the case \(\ell =d-\theta +1\). More particularly, we will derive a central limit theorem of the following quantity

Note that from (15) we know the limiting distribution of \({\widetilde{M}}_{d, \theta , d-\theta }(t)\), and therefore, it will be sufficient to show that the contribution from the tail of (23) is negligible. To be precise, let us define two terms (we ignore the contribution from \(e^{-(d-\theta ) t}\), which will always be bounded above by 1)

and

In the following lemma we show that \({\mathbb {E}} \left[ \sup _{0\le t\le T} \left| {\widetilde{R}}_{\ell }(t)\right| \right] \), and \( {\mathbb {E}} \left[ \sup _{0\le t\le T} \left| {\widehat{R}}_{\ell }(t)\right| \right] \) converges to zero as \(\ell \rightarrow \infty \) uniformly in n.

Lemma 3.2

We have \({\mathbb {E}} \left[ \sup _{0\le t\le T} \left| {\widetilde{R}}_{\ell }(t)\right| \right] \rightarrow 0\) and \( {\mathbb {E}} \left[ \sup _{0\le t\le T} \left| {\widehat{R}}_{\ell }(t)\right| \right] \rightarrow 0\), as \(\ell \rightarrow \infty \), uniformly in n.

Proof

From Condition 1.1 and the fact that for all \(A>1\), \(A^d u_n(d,\theta ) \le \sum _{d \ge \ell }u_n(d,\theta ) A^d\) we find that for any \(A>1\) there is a constant \(C_A\) such that

Using Doob’s \(L^2-\)inequality we get

Therefore,

Using Cauchy–Schwarz inequality, and (24)

Using Condition 1.1, for all \(A>1\), \(A^d u_n(d,\theta ) =O(n) \). Therefore for any \(A>1\) there is a constant \(C_A\) such that

In particular, choosing \(A=e^{4T}\), we see that \({\mathbb {E}} \left[ \sup _{0\le t\le T} \left| {\widetilde{R}}_{\ell }(t)\right| \right] \) converges to zero as \(\ell \rightarrow \infty \) uniformly in n.

Now let us define

Observe that the index r is at most d, and therefore using Cauchy–Schwarz inequality, and (24)

We can now compute the following tail bound

Again we have \(u_n(d,\theta )\le C_A A^{-d}n\) for some constant \(C_A>0\). Plugging in \(A=e^{8T}\) we get

We can write

where in the second equality we used the expectation of negative binomial distribution. Now plugging this in (26) we have

which again goes to zero uniformly in n as \(\ell \rightarrow \infty \). This completes the proof of Lemma 3.2. \(\square \)

By using Lemma 3.2, (23) and [11, Theorem 4.2] we get

as \(n\rightarrow \infty \), that is

We are now done except for showing that \(Z_A(t)\) is continuous. Using (18) and (25) we get

Again using \(u_n(d,\theta )\le C_A A^{-d}n\) and writing the second term in terms of negative binomial distribution we get

Since \(\ell \le d\), by choosing \(A=e^{4T+2}\), we get

Using (30) and Fatou’s lemma we get

This in turn implies that \(\sum _{d=1}^{\infty }\sum _{\theta =1}^{d} e^{-(d-\theta ) t}{\widetilde{Z}}_{d,\theta ,d-\theta }(t)\), almost surely converges uniformly in \(t\le t_0\) and therefore \(\sum _{d=1}^{\infty } \sum _{\theta =1}^{d}{ e^{-(d-\theta ) t}} {\widetilde{Z}}_{d,\theta ,d-\theta }(t)\) is continuous almost surely. This completes the proof of Theorem 1.3.

3.2 The Stopping Time

Note that we can write

It is precisely the number of balls that were not initially infected (active), and remain non-infected at time t. First let us consider the term  . Consider those \(u_n(d,\theta )\) bins with degree d and threshold \(\theta \ge 1\) (not initially infected). For \(k=1,2\ldots ,u_n(d,\theta )\), let \(T_k\) be the time \(\ell \)-th ball is removed from the k-the such bin. Then

. Consider those \(u_n(d,\theta )\) bins with degree d and threshold \(\theta \ge 1\) (not initially infected). For \(k=1,2\ldots ,u_n(d,\theta )\), let \(T_k\) be the time \(\ell \)-th ball is removed from the k-the such bin. Then

For the k-th bin we get

out of the \(u_n(d,\theta )\) bins. Since all bins are independent of each other, using Glivenko-Cantelli lemma we get that

in probability as \(n \rightarrow \infty \). By Condition 1.1, \(u_n(d,\theta ) = p(d,\theta )n+o(n)\), therefore we get

in probability as \(n \rightarrow \infty \). Since (31) is true for all \(0\le \ell \le d\), taking difference of two consecutive terms we get for each \(0\le r\le d\),

and therefore taking a sum over r, and using Condition 1.1,

Also combining Condition 1.1 and (31) we get

in probability as \(n \rightarrow \infty \). Recall that the stopping time \(\tau \) was defined as \(H_A(\tau ) = -1\). Also note that from (32) and (6) we get that

in probability as \(n \rightarrow \infty \). We can then write \(h_B(z) =\sum _{d=1}^{\infty }\sum _{\theta =1}^{d}\sum _{\ell = d-\theta +1}^{d} \ell p(d,\theta )b(d,z,\ell )\) and \(h_A(z)=\lambda z^2 - h_B(z)\), as the limit of \(\frac{H_B(t)}{n}\) and \(\frac{H_A(t)}{n}\), by setting \(z=e^{-t}\). Also (33), gives us the plausible candidate for the limit of our stopping time \( {\widehat{z}}:=\sup \{z\in [0,1]: h_A(z)=0 \}. \) We further assume that if \({\widehat{z}}\) is a non-zero then it is not a local minimum of \(h_A(z)\).

3.3 Proof of Lemma 1.4

To show this let us take a constant \(t_1>0\) such that \(t_1 < -\ln {\widehat{z}}\). This means \({\widehat{z}}<1\), and hence \(\lambda > h_B(1)\). Therefore using the fact that \(\lambda z^2 - h_B(z)\) is continuous we get \(h_B(z) < \lambda z^2\) for \(z \in ({\widehat{z}},1]\). This again gives \(h_B(e^{-t}) < \lambda e^{-2t}\) for all \(t \le t_1\). Since \([0,t_1]\) is compact again using the continuity of \(h_B(z)\) we get \(h_B(e^{-t}) - \lambda e^{-2t}<-c\) for some \(c>0\). Therefore on the set \(\tau < t_1\) we will have \(h_B(e^{-\tau }) - \lambda e^{-2\tau }<-c\). On the other hand, since \(H_A(\tau ) =-1\), as \(n\rightarrow \infty \) in (33) \({H_A(\tau )}/{n} \rightarrow 0\), which gives a contradiction, and thus \({\mathbb {P}}(\tau \le t_1) \rightarrow 0\) as \(n \rightarrow \infty \). Now let us choose \(t_2 < \tau \) where \(t_2 \in ( -\ln {{\widehat{z}}}, -\ln {({\widehat{z}} -\varepsilon )})\). By our assumption \({\widehat{z}}\) is not a local minimum of \(h_A(z)=\lambda z^2 - h_B(z)\), therefore there is an \(\varepsilon >0\) such that \(\lambda e^{-2t_2} - h_B(e^{-t_2})=-c\) for some \(c>0\). Now by definition if \(\tau >t_2\), then \(H_B(t_2)\ge 0\). Plugging these in (33) we get \(\frac{H_B(t_2)}{n} - \lambda e^{-2t_2} + h_B(t_2) \ge c\). This gives \({\mathbb {P}}(\tau \ge t_2) \rightarrow 0\) as \(n \rightarrow \infty \). Since \(t_1\) and \(t_2\) can be arbitrary close to \(-\ln {\widehat{z}}\), the proof of the claim is complete.

3.4 Proof of Theorem 1.5

We first derive a joint functional central limit theorem for the processes \((A_n(t),H_A(t))\), from which we prove the theorem.

Proposition 3.3

Let \(\tau _n' \le \tau _n\) be a stopping time such that \(\tau _n' {\mathop {\longrightarrow }\limits ^{p}}t_0\) for some \(t_0 \ge 0\). Then in \({\mathcal {D}}[0,\infty )\), as \(n\rightarrow \infty \),

with \(Z_A\) as in Theorem 1.3, and \(Z_{HA}(t)\) is a Gaussian process that is described in the proof of this proposition. (The covariance could be calculated from (29) and (47).)

For the sake of readability, we postpone the proof of the proposition to the end of this section. We continue with the proof of Theorem 1.5. Part (i) follows form [2]. Consider now the case when \({\widehat{z}}\ne 0\) and \({\widehat{z}}\) is a stable solution, i.e. \(\alpha :=h'_A({\widehat{z}})>0\). Using similar arguments as in the proof of [21, Lemma 2.3], one can show that \({{\widehat{a}}_n}\) converges to \({{\widehat{a}}}\) and \({h}_A^n\) converges to \({h}_A\), together with their derivatives, i.e., \({{\widehat{a}}_n}'\) converges to \({{\widehat{a}}}'\) and \({h_A^n}'\) converges to \(h_A'\), uniformly on [0, 1].

Hence, since for small enough \(\delta >0\), \(h_A({\widehat{z}}+\delta )>0\), and \(h_A({\widehat{z}}-\delta )<0\), \(h_A^n\) has a zero at \({\widehat{z}}_n\) in \(({\widehat{z}}-\delta , {\widehat{z}}+\delta )\) for sufficiently large n. Further, since \(h_A^n \rightarrow h_A\) uniformly, we have \(h_A^n>0\) in the interval \([{\widehat{z}}+\delta ,1]\). Since \(\delta >0\) is arbitrary, we have \({\widehat{z}}_n \rightarrow {\widehat{z}}\) as \(n\rightarrow \infty \). Let us write \({\widehat{\tau }}_n= -\ln {{\widehat{z}}_n}\), and \({\widehat{\tau }}= -\ln {{\widehat{z}}}\), therefore \({\widehat{\tau }}_n\rightarrow {\widehat{\tau }}\) as \(n\rightarrow \infty \).

We now use the Skorohod coupling theorem so that we can consider all the random variables in (34) to be defined on the same probability space and the limit holds almost surely. Now we apply Proposition 3.3 with \(\tau _n\), and Lemma 1.4 gives us that \(\tau _n\rightarrow {\widehat{\tau }}=-\ln {{\widehat{z}}}\). Also, since the limits are continuous almost surely, we can say (34) holds uniformly on \([0,{\widehat{\tau }}+1]\). Therefore taking \(t = \tau _n\) (this is less than \({\widehat{\tau }}+1\) for large n almost surely), we get

where using continuity of \(Z_{HA}\), we absorb the error term in \(o(n^{1/2})\). Since \(H_A(\tau _n) =-1\), therefore

Using the Mean-Value theorem, we can write for some \(\xi _n \in [{\widehat{z}}_n,z_n ]\) or \([z_n,{\widehat{z}}_n ]\),

We have \(z_n\rightarrow {\widehat{z}}\) and \({\widehat{z}}_n\rightarrow {\widehat{z}}\), and therefore \(\xi _n \rightarrow {\widehat{z}}\). Now, from \({h_A^n}' \rightarrow {h_A}'\) uniformly, we get \({h_A^n}'(\xi _n)\rightarrow {h_A}'({\widehat{z}})=\alpha \). Therefore (36), and (37) we get

Now, we use the Mean value theorem on \(A_n\) for some \(\xi _n \rightarrow {\widehat{z}}\), and (38) to obtain

In the third equality we have used the fact that \({{\widehat{a}}_n}' \rightarrow {{\widehat{a}}}'\) uniformly in t. The proof is thus complete since \(|{\mathcal {A}}^*_n|= A_n(\tau _n)\). We end this section by presenting the proof of Proposition 3.3.

Proof of Proposition 3.3

First note that we can write the number of (uninfected) B balls at time t as

This gives

We wish to prove a joint central limit theorem for \((A_n(t), H_A(t))\). Let us first outline the procedure. We have already expressed \({\widehat{B}}_{d,\theta , \ell }(t)\) (which is the centered and scaled version of \({\widetilde{B}}_{d,\theta , \ell }(t)\)) in terms of \({\widetilde{M}}_{d,\theta ,\ell }(t)\)’s in (16), and therefore \(H_A(t)\) is also implicitly a linear combination of \(W_n(t)\), and \({\widetilde{M}}_{d,\theta ,\ell }(t)\)’s (since \({\widetilde{B}}_{d,\theta , \ell }(t)\)’s are also linear combinations of \({\widetilde{M}}_{d,\theta ,\ell }(t)\)). Therefore once we can prove that the joint distribution \((W_n, {\widetilde{M}}_{d,\theta ,\ell })\) is Gaussian, we can derive the joint distribution of \((A_n(t), H_A(t))\) (since both \(A_n(t)\), \(H_A(t)\) are linear combination of independent random variables which are jointly Gaussian). To do that, let us define \(\widetilde{{\widehat{W}}}_n(t)= n^{-1/2}\left( {\widehat{W}}_n(t)-{\widehat{W}}_n(0)\right) \), and note that using (7) we get for every \(t \in [0, T\wedge \tau _n]\),

Therefore using Proposition 2.3 on the stopped process

as \(n\rightarrow \infty \), where \({\widehat{Z}}_W\) is a continuous Gaussian process with mean zero, and variance \(2\lambda (e^{2t}-1)\). Now since \({\widehat{W}}_n(t): = e^{2t} W_n(t)\), we get that

as \(n\rightarrow \infty \), where \(Z_W\) is a continuous Gaussian process with mean zero, and variance \(2\lambda (e^{-2t}-e^{-4t})\). Moreover, for \(t\in [0, T\wedge \tau _n]\), \(\ell \ge d-\theta +1\), and \(\theta \ge 1\)

Now when \({\widetilde{B}}_{d,\theta ,\ell }\) jumps by 1 for some \(\ell \ge d-\theta +1\), the \(W_n\) jumps by \(-2\), therefore using (13), (43) yields

Therefore using Proposition 2.3 we get the joint convergence of \(({\widetilde{M}}_{d,\theta ,\ell }(t\wedge \tau _n'),\widetilde{{\widehat{W}}}_n(t\wedge \tau _n'))\) for \(\ell \ge d-\theta +1\). Thus combining (42), the joint convergence of \(({\widetilde{M}}_{d,\theta ,\ell }(t\wedge \tau _n'),\widetilde{{\widehat{W}}}_n(t\wedge \tau _n'))\) for \(\ell \ge d-\theta +1\), and (28) we get

as \(n\rightarrow \infty \), where \({\widehat{a}}_n(t) = 1-\frac{1}{n} \sum _{d=1}^{\infty }\sum _{\theta =1}^{d} u_n(d,\theta ) \beta (d,e^{-t},d-\theta +1)\),

and then

We can ignore the contribution of the tail in the centered and scaled version of (40) using an almost identical argument as Lemma 3.2. Similarly, we can also ensure that the limit \(Z_{HA}(t)\) is continuous. This completes the proof of Proposition 3.3. \(\square \)

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

Adler, J., Lev, U.: Bootstrap percolation: visualizations and applications. Braz. J. Phys. 33, 641–644 (2003)

Amini, H.: Bootstrap percolation and diffusion in random graphs with given vertex degrees. Electron. J. Comb. 17, R25 (2010)

Amini, H.: Bootstrap percolation in living neural networks. J. Stat. Phys. 141(3), 459–475 (2010)

Amini, H., Cont, R., Minca, A.: Resilience to contagion in financial networks. Math. Financ. 26(2), 329–365 (2016)

Amini, H., Fountoulakis, N.: Bootstrap percolation in power-law random graphs. J. Stat. Phys. 155(1), 72–92 (2014)

Amini, H., Fountoulakis, N., Panagiotou, K.: Bootstrap percolation in inhomogeneous random graphs. arXiv preprint arXiv:1402.2815, (2014)

Amini, H., Minca, A.: Epidemic spreading and equilibrium social distancing in heterogeneous networks. Dyn. Games Appl. 12(1), 258–287 (2022)

Amini, H., Minca, A., Sulem, A.: A dynamic contagion risk model with recovery features. Math. Oper. Res. 47(2), 1412–1442 (2022)

Balogh, J., Pittel, B.G.: Bootstrap percolation on the random regular graph. Random Struct. Algorithms 30(1–2), 257–286 (2007)

Bayraktar, E., Chakraborty, S., Zhang, X.: K-core in percolated dense graph sequences. arXiv preprint arXiv:2012.09730, (2020)

Billingsley, P.: Convergence of Probability Measures. Wiley, New York (2013)

Boccaletti, S., Latora, V., Moreno, Y., Chavez, M., Hwang, D.-U.: Complex networks: structure and dynamics. Phys. Rep. 424(4–5), 175–308 (2006)

Chalupa, J., Leath, P.L., Reich, G.R.: Bootstrap percolation on a Bethe lattice. J. Phys. C 12(1), L31 (1979)

Cooper, C.: The cores of random hypergraphs with a given degree sequence. Random Struct. Algorithms 25(4), 353–375 (2004)

Dorogovtsev, S.N., Goltsev, A.V., Mendes, J.F.F.: Critical phenomena in complex networks. Rev. Mod. Phys. 80(4), 1275 (2008)

Durrett, R.: Random Graph Dynamics. Cambridge University Press, Cambridge (2006)

Fernholz, D., Ramachandran, V.: Cores and Connectivity in Sparse Random Graphs. The University of Texas at Austin, technical report TR-04-13 (2004)

Fountoulakis, N., Kang, M., Koch, C., Makai, T., et al.: A phase transition regarding the evolution of bootstrap processes in inhomogeneous random graphs. Ann. Appl. Probab. 28(2), 990–1051 (2018)

Jacod, J., Shiryaev, A.: Limit Theorems for Stochastic Processes, vol. 288. Springer, New York (2013)

Janson, S., Luczak, M.J.: A simple solution to the k-core problem. Random Struct. Algorithms 30(1–2), 50–62 (2007)

Janson, S., Luczak, M.J.: Asymptotic normality of the k-core in random graphs. Ann. Appl. Probab. 18(3), 1085–1137 (2008)

Janson, S., Łuczak, T., Turova, T., Vallier, T.: Bootstrap percolation on the random graph \({G}_{n, p}\). Ann. Appl. Probab. 22(5), 1989–2047 (2012)

Kallenberg, O.: Foundations of Modern Probability. Springer, New York (1997)

Kempe, D., Kleinberg, J., Tardos, É.: Maximizing the spread of influence through a social network. In: Proceedings of the Ninth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 137–146 (2003)

Kleinberg, J.: Cascading behavior in networks: algorithmic and economic issues. Algorithmic Game Theory 24, 613–632 (2007)

Lelarge, M.: Diffusion and cascading behavior in random networks. Games Econ. Behav. 75(2), 752–775 (2012)

Leskovec, J., Adamic, L.A., Huberman, B.A.: The dynamics of viral marketing. ACM Trans. Web 1(1), 5 (2007)

Liu, Y.-Y., Barabási, A.-L.: Control principles of complex systems. Rev. Mod. Phys. 88(3), 035006 (2016)

Liu, Y.-Y., Csóka, E., Zhou, H., Pósfai, M.: Core percolation on complex networks. Phys. Rev. Lett. 109(20), 205703 (2012)

Łuczak, T.: Size and connectivity of the k-core of a random graph. Discret. Math. 91(1), 61–68 (1991)

Molloy, M.: Cores in random hypergraphs and boolean formulas. Random Struct. Algorithms 27(1), 124–135 (2005)

Molloy, M., Reed, B.: A critical point for random graphs with a given degree sequence. Random Struct. Algorithms 6(2–3), 161–179 (1995)

Morris, S.: Contagion. Rev. Econ. Stud. 67(1), 57–78 (2000)

Newman, M., Barabási, A.-L., Watts, D.J.: The Structure and Dynamics of Networks. Princeton University Press, Princeton (2006)

Newman, M.E.J.: Spread of epidemic disease on networks. Phys. Rev. E 66(1), 016128 (2002)

Pastor-Satorras, R., Castellano, C., Van Mieghem, P., Vespignani, A.: Epidemic processes in complex networks. Rev. Mod. Phys. 87, 925–979 (2015)

Pittel, B., Spencer, J., Wormald, N.: Sudden emergence of a giantk-core in a random graph. J. Comb. Theory Ser. B 67(1), 111–151 (1996)

Riordan, O.: The k-core and branching processes. Comb. Probab. Comput. 17(1), 111–136 (2008)

Tlusty, T., Eckmann, J.-P.: Remarks on bootstrap percolation in metric networks. J. Phys. A 42(20), 205004 (2009)

van der Hofstad, R.: Random Graphs and Complex Networks, vol. 43. Cambridge university Press, Cambridge (2016)

Watts, D.J.: A simple model of global cascades on random networks. Proc. Natl. Acad. Sci. 99(9), 5766–5771 (2002)

Acknowledgements

We thank the referee for a very detailed report which significantly improved the quality of this article.

Funding

Erhan Bayraktar is partially supported by the National Science Foundation under Grant DMS- 2106556 and by the Susan M. Smith chair. Suman Chakraborty is partially supported by the Netherlands Organisation for Scientific Research (NWO) through Gravitation-Grant NETWORKS- 024.002.003.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Communicated by Eric A. Carlen.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Amini, H., Bayraktar, E. & Chakraborty, S. A Central Limit Theorem for Diffusion in Sparse Random Graphs. J Stat Phys 190, 57 (2023). https://doi.org/10.1007/s10955-023-03068-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10955-023-03068-9