Abstract

In this paper, we study phase transitions of a class of time-inhomogeneous diffusion processes associated with the \(\varphi ^4\) model. We prove that the system has no phase transition when \(\gamma <0\) and has a phase transition when \(\gamma >0\), and we analyze this phase transition by both qualitative and quantitative methods. We further show that, as the strength of the mean field tends to 0, the solution and the stationary distribution of the system converge, locally uniformly in \(L^2\) and in Wasserstein distance respectively, to those of the corresponding system without mean field.


1 Introduction

In this paper, we study a class of time-inhomogeneous diffusion processes associated with the \(\varphi ^4\) model. These processes are solutions of the following stochastic differential equation defined on a complete filtered probability space \((\Omega ,\mathscr {F},\{\mathscr {F}_{t}\}_{t\ge 0}, \mathbb {P})\):

$$dX_t=-u'(X_t)\,dt+\gamma \mathbb {E}X_t\,dt+\sqrt{2}\,dW_t,\quad X_0=\xi , \tag{1}$$

where \(\gamma \ne 0\), \(\xi \) is an \(\mathscr {F}_{0}\)-measurable random variable, \(\{W_t\}_{t\ge 0}\) is a Brownian motion defined on \((\Omega ,\mathscr {F},\{\mathscr {F}_{t}\}_{t\ge 0}, \mathbb {P})\), \(u(x)=x^4-\beta x^2\), and \(\beta \ge 0\) is the inverse temperature. Due to the double-well potential u(x), there is a phase transition in this model. As shown in Fig. , it is difficult for the process to escape from a well of u(x) at low temperatures.

Phase transition is a central topic in statistical physics. Many methods have been developed to study phase transitions, including the coupling method [2, 4, 15, 22], the duality method [15, 22], the graph representation and percolation theory [7, 14], the Peierls method and Pirogov–Sinai theory [19], the cluster expansion method [16, 17], and the gap estimation method [3, 13, 15].

In statistical physics, one often studies mean field models as simplified approximations of the original ones. Mean field models typically exhibit phase transitions more readily, which is the motivation of this paper. Consider the following operator on \(\mathbb {R}\):

The first two terms represent the drift and diffusion terms of a diffusion process. The additional drift term \(\mathbb {E}X_t\), the mean of the process, represents an exchange of energy with the outside: energy is supplied from the outside according to the mean of the process. Intuitively, this model can be interpreted as follows. Fix a vessel \(u\in \mathbb {Z}^d\) in which the reaction takes place. Regard all \(v\in \mathbb {Z}^d\backslash \{u\}\) as the outside; the diffusions between u and \(v\in \mathbb {Z}^d\backslash \{u\}\) are then described simply by the new drift term \(\mathbb {E}X_t\). In this sense, the process represents a non-equilibrium model. Clearly, the new term makes the process time-inhomogeneous. For more information about mean field models, see Dawson and Zheng [8], Feng [9,10,11], Feng and Zheng [12].

In this paper, we restrict ourselves to the time-inhomogeneous \(\varphi ^4\) model (1), whose operator is

Here, \(\gamma \) represents the strength of the mean field, and we also study its effect on the phase transition. Because \(\mathbb {E}X_t\) is a function of the distribution of \(X_t\), Eq. (1) is a particular type of distribution dependent stochastic differential equation, or McKean–Vlasov stochastic differential equation. The existence and non-uniqueness of invariant probability measures for McKean–Vlasov SDEs were investigated in [23].
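To make the mean field term concrete, the following minimal sketch approximates the process by a system of interacting particles in which the empirical mean stands in for \(\mathbb {E}X_t\). It assumes that Eq. (1) has drift \(-u'(x)+\gamma \mathbb {E}X_t=-4x^3+2\beta x+\gamma \mathbb {E}X_t\) and diffusion coefficient \(\sqrt{2}\), a form inferred from the coefficients quoted in Remark 2 and from the stationary measures of Theorem 3. The function name, the Euler–Maruyama discretization, and all numerical parameters are illustrative choices, not part of the paper.

```python
import math
import random


def simulate_particles(beta, gamma, n_particles=200, n_steps=500, dt=0.005, seed=0):
    """Euler-Maruyama scheme for a particle approximation of the assumed Eq. (1).

    Assumed dynamics (not quoted from the paper):
        dX = (-4 X^3 + 2 beta X + gamma * mean) dt + sqrt(2) dW,
    where `mean` is the empirical mean of the particles, used in place of E[X_t].
    Returns the final particle positions and the history of empirical means.
    """
    rng = random.Random(seed)
    xs = [rng.gauss(0.0, 1.0) for _ in range(n_particles)]
    noise_scale = math.sqrt(2.0 * dt)
    means = []
    for _ in range(n_steps):
        mean = sum(xs) / n_particles  # empirical stand-in for E[X_t]
        means.append(mean)
        xs = [
            x + (-4.0 * x ** 3 + 2.0 * beta * x + gamma * mean) * dt
            + noise_scale * rng.gauss(0.0, 1.0)
            for x in xs
        ]
    means.append(sum(xs) / n_particles)
    return xs, means


if __name__ == "__main__":
    final_positions, mean_history = simulate_particles(beta=2.0, gamma=1.0)
    print("empirical mean at the final time:", mean_history[-1])
```

Running the sketch for several values of \(\beta \) and \(\gamma \) gives a rough picture of whether the empirical mean settles near 0 or near a nonzero value; the explicit scheme is only reliable for small time steps because of the cubic drift.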

The main purpose of this paper is to show that there is a phase transition for the number of invariant probability measures of Eq. (1). In Sect. 2, we give some preliminaries. In Sect. 3, we study the number of invariant probability measures. In the case of \(\gamma <0\), the system has no phase transition and in the case of \(\gamma >0\), we study the phase transition of the system through qualitative and quantitative methods. More specifically, we prove that there exists a critical value \(\beta _{c}\) such that the system admits three invariant probability measures for \(\beta >\beta _c\), while the system has a unique invariant probability measure for \(\beta \le \beta _c\). We also obtain an expression for \(\beta _{c}\) by quantitative analysis and this result is completely new. In the last section, we show that when \(\gamma \rightarrow 0\) the solution and invariant probability measure of the system converge to the solution and invariant probability measure in the case of \(\gamma =0\).

2 Preliminaries

Consider the following distribution dependent SDE, or McKean–Vlasov SDE, on \(\mathbb {R}\):

$$dX_t=b(t,X_t,\mathscr {L}_{X_t})\,dt+\sigma (t,X_t,\mathscr {L}_{X_t})\,dW_t, \tag{2}$$

where \(b:[0,\infty )\times \mathbb {R}\times \mathscr {P}(\mathbb {R})\rightarrow \mathbb {R}\), \(\sigma :[0,\infty )\times \mathbb {R}\times \mathscr {P}(\mathbb {R})\rightarrow \mathbb {R}\times \mathbb {R}\) are measurable, and \(\mathscr {L}_{X_t}\) is the distribution of \(X_t\).

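Let \(\mathscr {P}(\mathbb {R})\) be the family of all probability measures on \(\mathbb {R}\). For \(\theta \in [1,\infty )\), we define the following subspace of \(\mathscr {P}(\mathbb {R})\):

$$\mathscr {P}_\theta :=\Big \{\mu \in \mathscr {P}(\mathbb {R}):\int _{\mathbb {R}}{\vert x\vert }^\theta \mu (dx)<\infty \Big \},$$

which is a Polish space under the Wasserstein distance

$$W_\theta (\mu _1,\mu _2):=\inf _{\pi \in \mathscr {C}(\mu _1,\mu _2)}\Big (\int _{\mathbb {R}\times \mathbb {R}}{\vert x-y\vert }^\theta \pi (dx,dy)\Big )^{1/\theta },$$

where \(\mathscr {C}(\mu _1,\mu _2)\) is the set of all couplings of \(\mu _1\) and \(\mu _2\) (see [2] or [21]).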
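On the real line the Wasserstein distance between two empirical measures with the same number of equally weighted atoms can be computed by sorting, because the monotone coupling is optimal for \(\theta \ge 1\). The following sketch illustrates this special case only; the function name is ours and the equal-sample-size restriction is an assumption made for simplicity.

```python
def wasserstein_1d(xs, ys, theta=2.0):
    """W_theta between two empirical measures on R with equally many,
    equally weighted atoms, using the sorted (monotone) coupling."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("samples must be non-empty and of equal size")
    if theta < 1.0:
        raise ValueError("theta must be at least 1")
    # Pair the i-th smallest atom of xs with the i-th smallest atom of ys.
    total = sum(abs(a - b) ** theta for a, b in zip(sorted(xs), sorted(ys)))
    return (total / len(xs)) ** (1.0 / theta)


if __name__ == "__main__":
    # Shifting every atom by 3 moves the measure by exactly 3 in W_1.
    print(wasserstein_1d([0.0, 1.0], [3.0, 4.0], theta=1.0))
```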

When Eq. (2) has a unique strong solution (see [21]) in \(\mathscr {P}_\theta \), the solution \((X_t)_{t\ge 0}\) is a Markov process in the sense that for any \(s\ge 0\), \((X_t)_{t\ge s}\) is determined by solving the equation from time s with initial state \(X_s\). More precisely, letting \(\{X_{s,t}(\xi )\}_{t\ge s}\) denote the solution of the equation from time s with initial state \(X_{s,s}=\xi \), the existence and uniqueness imply

where \(\xi \) is \(\mathscr {F}_s\)-measurable with \(\mathbb {E}{\vert \xi \vert }^\theta <\infty \).

When Eq. (2) has \(\mathscr {P}_\theta \)-weak uniqueness, we may define a semigroup \((P^*_{s,t})_{t\ge s}\) on \(\mathscr {P}_\theta \) by letting \(P^*_{s,t}\mu :=\mathscr {L}_{X_{s,t}}\) for \(\mathscr {L}_{X_{s,s}}=\mu \in \mathscr {P}_\theta \). Indeed, by (3) we have

We can investigate the ergodicity in the time homogeneous case when \(\sigma \) and b do not depend on t. In this case we have \(P^*_{s,t}=P^*_{t-s}\), for \(t\ge s\ge 0\).

We call \(\mu \in \mathscr {P}_\theta \) an invariant probability measure of \(P^*_t\) if \(P^*_t\mu =\mu \) for all \(t\ge 0\), and we call the solution ergodic if there exists \(\mu \in \mathscr {P}_\theta \) such that \(\lim _{t\rightarrow \infty }P^*_t\nu =\mu \) weakly for any \(\nu \in \mathscr {P}_\theta .\)

Now, we consider the following one-dimensional SDE

$$dX_t=b(X_t)\,dt+\sigma (X_t)\,dW_t, \tag{4}$$

where \(\sigma (x)\ne 0\) for all \(x\in \mathbb {R}\), and \(\sigma \) and b satisfy the Lipschitz and growth conditions. Thus SDE (4) has a unique strong and non-explosive solution \((X_t)_{t\ge 0}\). The generator of the process \((X_t)_{t\ge 0}\) is

$$Lf(x)=a(x)f''(x)+b(x)f'(x),$$

where \(a(x)=\frac{1}{2}\sigma (x)^2\).

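Suppose that the function \(x\mapsto \frac{1}{a(x)}\exp \big [\int ^x_0\frac{b(y)}{a(y)}dy\big ]\) is Lebesgue integrable on \(\mathbb {R}\). We can verify that L is symmetric with respect to the following measure

$$\mu (dx)=\frac{1}{a(x)}\exp \Big [\int ^x_0\frac{b(y)}{a(y)}dy\Big ]dx. \tag{5}$$

That is, for any \(f,~g\in C_c^2(\mathbb {R})\),

$$\int _{\mathbb {R}}f(x)Lg(x)\mu (dx)=\int _{\mathbb {R}}g(x)Lf(x)\mu (dx). \tag{6}$$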

Let \(\pi =\mu (\mathbb {R})^{-1}\mu \). Then, (6) implies that \(\pi \) is an invariant probability measure of the process \((X_t)_{t\ge 0}\). The detailed proof is provided in Remark 1 at the end of this paper; see also the discussion around Eq. (7.29) on page 172 in [5]. On the other hand, since a(x) is continuous on \(\mathbb {R}\) and \(a(x)>0\) for all \(x\in \mathbb {R}\), it is well known that the process \((X_t)_{t\ge 0}\) is Lebesgue irreducible and has the strong Feller property. Hence the invariant measure is unique (see [6, Theorem 4.2.1]). Combining the two conclusions above, we find that \(\pi \) is the unique invariant probability measure of the process \((X_t)_{t\ge 0}\).
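The construction of \(\pi \) from a and b can be carried out numerically on a grid. The sketch below is an illustration under our own choices (trapezoid rule, a truncated interval, hypothetical function names): it integrates \(b/a\), exponentiates, divides by a, and normalizes. Starting the inner integral at the left endpoint instead of at 0 only changes \(\mu \) by a constant factor, which the normalization removes.

```python
import math


def invariant_density(a, b, lo, hi, n=4000):
    """Grid approximation of pi = mu / mu(R) with
    mu(dx) = exp(int b/a dy) / a(x) dx, truncated to [lo, hi]."""
    h = (hi - lo) / n
    xs = [lo + i * h for i in range(n + 1)]
    ratio = [b(x) / a(x) for x in xs]
    # Cumulative trapezoid rule for the integral of b/a from lo to x.
    cum = [0.0]
    for i in range(1, n + 1):
        cum.append(cum[-1] + 0.5 * h * (ratio[i - 1] + ratio[i]))
    shift = max(cum)  # subtract the maximum to avoid overflow in exp
    weights = [math.exp(c - shift) / a(x) for c, x in zip(cum, xs)]
    mass = h * (sum(weights) - 0.5 * (weights[0] + weights[-1]))
    return xs, [w / mass for w in weights]


if __name__ == "__main__":
    # Ornstein-Uhlenbeck check: sigma = sqrt(2), so a = 1, and b(x) = -x
    # give the standard Gaussian density.
    grid, dens = invariant_density(lambda x: 1.0, lambda x: -x, -8.0, 8.0)
    print(dens[len(dens) // 2], 1.0 / math.sqrt(2.0 * math.pi))
```

With \(a\equiv 1\) and \(b(x)=-4x^3+2\beta x\) the same routine returns a density proportional to \(\exp [-x^4+\beta x^2]\).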

Indeed, by the Girsanov transform for diffusion processes (see [18]), the transition probability kernels \(P_t(x,dy)\), \(x\in \mathbb {R}\), of the SDE (4) are mutually absolutely continuous, which in turn implies that the unique invariant probability measure is absolutely continuous with respect to the Lebesgue measure.

3 The Existence of Phase Transitions

In this section, we prove that there exists a phase transition for the number of invariant probability measures of Eq. (1). Equation (1) can be regarded as a McKean–Vlasov stochastic differential equation, and the existence and uniqueness of the solution follow from [21] (see Remark 2 for the verification).

We follow the approach of [1], which investigated phase transitions for a class of McKean–Vlasov SDEs. Since the first moment of the stationary solution coincides with that of the invariant probability measure, the question of phase transition reduces to counting the roots of an algebraic equation.

Assume \((X_t)_{t\ge 0}\) is the unique solution of Eq. (1) and \(\mu (t)=\mu (t,dx)\) is a measure on \(\mathbb {R}\) with finite first moment \(\lambda (t):=\int _\mathbb {R}x\mu (t,dx)\). Recall that a probability measure \(\pi \in \mathscr {P}(\mathbb {R})\) is stationary if \(\pi (B)=P^*_t\pi (B)=\int _BP^*_t(x,dy)\pi (dx)\) is not dependent on t, where \(B\in \mathscr {B}(\mathbb {R})\). If \(\pi =\pi (dx)\) is a stationary distribution of \((X_t)_{t\ge 0}\) with finite first moment \(m:=\int _\mathbb {R}x\pi (dx)\), then we get \(\mathbb {E}X_t=m\). Hence, Eq. (1) becomes

In fact, the stationary distribution \(\pi \) can be given by (5):

where

Note that Z is well-defined: the exponent tends to \(-\infty \) like \(-x^4\) as \(\vert x\vert \) tends to \(\infty \), so the integrand is integrable over the whole real line. Then, the finiteness of the first moment gives us a condition

We have

Qualitative Analysis

Lemma 1

where \(\sharp \) represents the number of elements of the set.

Theorem 2

For Eq. (7), we suppose \(\beta \ge 0\) and \(\gamma \ne 0\).

(1) In the case of \(\gamma >0\), there exists a critical value \(\beta _c>0\) such that

$$\begin{aligned} \vert \mathscr {I}\vert {\left\{ \begin{array}{ll} \ge 1 & \text{in any case},\\ =1 & \text{for all } 0<\beta \le \beta _c,\\ =3 & \text{for all } \beta >\beta _c. \end{array}\right. } \end{aligned} \tag{10}$$

This means that a phase transition occurs for the solution of Eq. (7) when \(\beta >\beta _c>0\).

(2) In the case of \(\gamma <0\), there is no phase transition.

Proof

The proof adopts the idea of [20, Theorem 2.1]. Obviously, \(f(0)=0\). Using the series expansion of \(e^{\gamma mx}\), we have

where

In the case of \(\gamma <0\), we have \(\frac{\gamma I_\beta (2n+2)}{(2n+1)I_\beta (2n)}-1<0\). From (11) we get that when \(m<0\), we have \(f(m)>0\); when \(m>0\), we have \(f(m)<0\). Then f(m) has a unique zero point \(m=0\), so there is no phase transition and the result of part (2) is proved.
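The series argument can be checked numerically. The sketch below assumes, as a reconstruction from the coefficient \(\frac{\gamma I_\beta (2n+2)}{(2n+1)I_\beta (2n)}-1\) appearing above, that \(I_\beta (k)=\int _\mathbb {R}x^k e^{-x^4+\beta x^2}dx\) and that \(f(m)=\int _\mathbb {R}(x-m)e^{-x^4+\beta x^2+\gamma mx}dx\), possibly up to a positive normalizing factor that does not affect signs. The function names, the truncation of the integral to \([-6,6]\), and the number of series terms are our own choices.

```python
import math


def moment(k, beta, half_width=6.0, n=4000):
    """Trapezoid approximation of I_beta(k) = int x^k exp(-x^4 + beta x^2) dx."""
    h = 2.0 * half_width / n
    total = 0.0
    for i in range(n + 1):
        x = -half_width + i * h
        w = 0.5 if i in (0, n) else 1.0
        total += w * x ** k * math.exp(-x ** 4 + beta * x ** 2)
    return total * h


def f_quad(m, beta, gamma, half_width=6.0, n=4000):
    """Assumed f(m) = int (x - m) exp(-x^4 + beta x^2 + gamma m x) dx."""
    h = 2.0 * half_width / n
    total = 0.0
    for i in range(n + 1):
        x = -half_width + i * h
        w = 0.5 if i in (0, n) else 1.0
        total += w * (x - m) * math.exp(-x ** 4 + beta * x ** 2 + gamma * m * x)
    return total * h


def f_series(m, beta, gamma, terms=30):
    """The same function via the expansion of exp(gamma m x) in powers of m."""
    total = 0.0
    for n in range(terms):
        i_low = moment(2 * n, beta)
        i_high = moment(2 * n + 2, beta)
        coeff = gamma ** (2 * n) / math.factorial(2 * n)
        total += m ** (2 * n + 1) * coeff * (gamma * i_high / (2 * n + 1) - i_low)
    return total


if __name__ == "__main__":
    print(f_quad(0.5, 1.0, 1.0), f_series(0.5, 1.0, 1.0))
    # For gamma < 0 every series coefficient is negative, so f(m) < 0 for m > 0.
    print(f_quad(0.5, 1.0, -1.0), f_quad(-0.5, 1.0, -1.0))
```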

Now we consider the case of \(\gamma >0\). Note that when \(\beta =0\), u(x) is not a double-well potential and there is no phase transition.

(a) We will prove that there exists an integer \(n_0=n_0(\gamma ,\beta )\) such that for all \(n\ge n_0\), \(f^{(2n+1)}(0)\le 0\) and for all \(n<n_0\), \(f^{(2n+1)}(0)>0\). We introduce the quantity

Integration by parts gives

so

First, we will prove that for all \(\beta >0\) and \(\gamma >0\) the sequence \(\{R_n(\gamma ,\beta )\}_{n\in \mathbb {N}}\) is nonincreasing. It is sufficient to prove that sequence \(\{I_\beta (2n+4)/I_\beta (2n+2)\}_{n\in \mathbb {N}}\) is nondecreasing. For this, we put \(T(x)=(I_\beta (x+2))/(I_\beta (x))\), \(x>0\), then

To prove that T(x) is nondecreasing, it suffices to show that \(\zeta (x):=I'_\beta (x)/I_\beta (x)\) is nondecreasing, since \(T(x)\ge 0\) for all \(x\in \mathbb {R}_+\). By the Cauchy–Schwarz inequality, we have

This means that \(\big (R_n(\gamma ,\beta )\big )_{n\in \mathbb {N}}\) is nonincreasing for all \(\beta >0\) and \(\gamma >0\).
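Both ingredients of step (a) can be sanity-checked numerically. The sketch below assumes \(I_\beta (k)=\int _\mathbb {R}x^k e^{-x^4+\beta x^2}dx\) and \(R_n(\gamma ,\beta )=\frac{\gamma I_\beta (2n+2)}{(2n+1)I_\beta (2n)}-1\), a reconstruction from the surrounding text rather than a quotation. Under this assumption, integrating the derivative of \(x^{2n+1}e^{-x^4+\beta x^2}\) over \(\mathbb {R}\) gives \((2n+1)I_\beta (2n)=4I_\beta (2n+4)-2\beta I_\beta (2n+2)\), which the code compares with quadrature values before listing \(R_n\).

```python
import math


def moment(k, beta, half_width=6.0, n=4000):
    """Trapezoid approximation of I_beta(k) = int x^k exp(-x^4 + beta x^2) dx."""
    h = 2.0 * half_width / n
    total = 0.0
    for i in range(n + 1):
        x = -half_width + i * h
        w = 0.5 if i in (0, n) else 1.0
        total += w * x ** k * math.exp(-x ** 4 + beta * x ** 2)
    return total * h


def parts_identity_gap(n, beta):
    """Relative gap in (2n+1) I(2n) = 4 I(2n+4) - 2 beta I(2n+2)."""
    lhs = (2 * n + 1) * moment(2 * n, beta)
    rhs = 4.0 * moment(2 * n + 4, beta) - 2.0 * beta * moment(2 * n + 2, beta)
    return abs(lhs - rhs) / abs(lhs)


def r_sequence(beta, gamma, count=10):
    """Assumed R_n = gamma I(2n+2) / ((2n+1) I(2n)) - 1 for n = 0, ..., count-1."""
    return [
        gamma * moment(2 * n + 2, beta) / ((2 * n + 1) * moment(2 * n, beta)) - 1.0
        for n in range(count)
    ]


if __name__ == "__main__":
    print([parts_identity_gap(n, 2.0) for n in range(4)])
    print(r_sequence(beta=2.0, gamma=3.0))
```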

Now we prove that \(R_n(\gamma ,\beta )\le 0\) for n large enough. To do this, it is sufficient to find a real a such that \(f(a)\le 0\). Put \(V(x):=x^4-(\beta +\frac{\gamma }{2})x^2\), take \(a=\sqrt{\beta +\frac{\gamma }{2}}\), \(y:=x-a\), it is easy to get that \(V(y+a)\ge V(y-a)\) for all \(y\ge 0\). So we have

In conclusion, there exists \(n\in \mathbb {N}\) such that \(R_n(\gamma ,\beta )\le 0\).

Finally, put \(n_0:=\min \big \{n:R_n(\gamma ,\beta )\le 0\big \}\). Since \(R_n(\gamma ,\beta )\) and \(f^{(2n+1)}(0)\) have the same sign, we deduce that \(f^{(2n+1)}(0)\le 0\) for all \(n\ge n_0\) and \(f^{(2n+1)}(0)>0\) for all \(n< n_0\).

(b) By the last step, we can write

Taking out a common factor \(m^{2n_0+1}\), we get

Since the function \(m\rightarrow m^{2(n-n_0)}\) (resp. \(m\rightarrow -m^{2(n-n_0)}\)) is decreasing for all \(n\le n_0-1\) (resp. \(n\ge n_0\)), we deduce that f(m) admits at most one positive zero point. The function f(m) is odd so it admits one or three zero points on \(\mathbb {R}\). This means that Eq. (7) admits one or three invariant probability measures.
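The "one or three zeros" dichotomy can be observed numerically by counting sign changes of f on a grid of positive m and using that f is odd. The sketch assumes \(f(m)=\int _\mathbb {R}(x-m)e^{-x^4+\beta x^2+\gamma mx}dx\), a form reconstructed from the proof; the grid, the search interval \((0,5]\), and the function names are illustrative, and a zero closer to the origin than the first grid point would be missed.

```python
import math


def self_consistency(m, beta, gamma, half_width=6.0, n=2400):
    """Assumed f(m) = int (x - m) exp(-x^4 + beta x^2 + gamma m x) dx (trapezoid rule)."""
    h = 2.0 * half_width / n
    total = 0.0
    for i in range(n + 1):
        x = -half_width + i * h
        w = 0.5 if i in (0, n) else 1.0
        total += w * (x - m) * math.exp(-x ** 4 + beta * x ** 2 + gamma * m * x)
    return total * h


def count_zeros(beta, gamma, m_max=5.0, grid=200):
    """Number of zeros of f on [-m_max, m_max]: the zero at 0 plus twice the
    number of sign changes detected on the positive grid (f is odd)."""
    step = m_max / grid
    values = [self_consistency(k * step, beta, gamma) for k in range(1, grid + 1)]
    positive_zeros = sum(1 for u, v in zip(values, values[1:]) if u * v < 0.0)
    return 1 + 2 * positive_zeros


if __name__ == "__main__":
    for beta, gamma in [(0.1, 1.0), (5.0, 1.0), (2.0, -1.0)]:
        print(beta, gamma, count_zeros(beta, gamma))
```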

(c) Here we prove that the uniqueness of the invariant probability measure is directly related to the sign of \(f'(0)\). Taking derivative in Eq. (12) and taking out a common factor \(m^{2n_0}\), we get

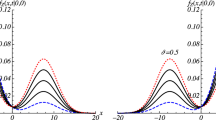

By an argument similar to that in the paragraph above, we deduce that \(f'(m)\) vanishes at at most one point on \(\mathbb {R_+}\). Because \(f'(m)\) tends to \(-\infty \) as m goes to infinity, \(f'(m)\) is either always nonpositive or first positive and then nonpositive. Hence the behavior of f(m) is determined by the sign of \(f'(0)\), as is shown in Figs. and .

- For \(f'(0)\le 0\), f(m) is nonincreasing on \(\mathbb {R_+}\). Since \(f(0)=0\), f(m) does not vanish on \(\mathbb {R_+}\), so Eq. (7) admits a unique invariant probability measure \(\pi (dx)\) such that \(\int _{\mathbb {R}}x\pi (dx)=0\).

- For \(f'(0)>0\), f(m) is first increasing and then nonincreasing on \(\mathbb {R_+}\), so it admits three zeros 0, \(m_0>0\) and \(-m_0\). Thus, we have three invariant probability measures \(\pi _0(dx),~\pi _+(dx)\), and \(\pi _-(dx)\) such that \(\int _{\mathbb {R}}x\pi _0(dx)=0\), \(\int _{\mathbb {R}}x\pi _+(dx)=m_0>0\), and \(\int _{\mathbb {R}}x\pi _-(dx)=-m_0<0\).

(d) Now we study the sign of \(f'(0)\). For all \(\beta >0\) and \(\gamma >0\), we put

It is easy to see that \(\gamma \) is a function of \(\beta \),

By the Cauchy–Schwarz inequality, we get

Therefore, \(\gamma (\beta )\) is decreasing for all \(\beta >0\). Thus, for any fixed \(\gamma _c>0\), there exists a unique \(\beta _c>0\) such that \(f'(0)=0\).
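The monotonicity of \(\gamma (\beta )\) makes the critical value easy to locate by bisection. The sketch below assumes that \(f'(0)=\gamma I_\beta (2)-I_\beta (0)\) with \(I_\beta (k)=\int _\mathbb {R}x^k e^{-x^4+\beta x^2}dx\), so that the critical relation \(f'(0)=0\) reads \(\gamma (\beta )=I_\beta (0)/I_\beta (2)\). This is our reconstruction from the proof, not a formula quoted from the paper, and the bracket \([0,20]\) for \(\beta \) and the function names are arbitrary choices.

```python
import math


def moment(k, beta, half_width=6.0, n=4000):
    """Trapezoid approximation of I_beta(k) = int x^k exp(-x^4 + beta x^2) dx."""
    h = 2.0 * half_width / n
    total = 0.0
    for i in range(n + 1):
        x = -half_width + i * h
        w = 0.5 if i in (0, n) else 1.0
        total += w * x ** k * math.exp(-x ** 4 + beta * x ** 2)
    return total * h


def gamma_of_beta(beta):
    """Assumed critical relation gamma(beta) = I_beta(0) / I_beta(2)."""
    return moment(0, beta) / moment(2, beta)


def critical_beta(gamma_c, beta_lo=0.0, beta_hi=20.0, iterations=50):
    """Bisection for the beta at which gamma_of_beta(beta) equals gamma_c."""
    if not gamma_of_beta(beta_hi) < gamma_c < gamma_of_beta(beta_lo):
        raise ValueError("gamma_c is outside the range covered by the bracket")
    for _ in range(iterations):
        mid = 0.5 * (beta_lo + beta_hi)
        if gamma_of_beta(mid) > gamma_c:
            beta_lo = mid  # gamma(beta) is decreasing, so the root lies to the right
        else:
            beta_hi = mid
    return 0.5 * (beta_lo + beta_hi)


if __name__ == "__main__":
    beta_c = critical_beta(1.0)
    print(beta_c, gamma_of_beta(beta_c))
```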

For all \(0<\beta \le \beta _c\), we have \(\gamma \ge \gamma _c\). This yields

we obtain that the solution of Eq. (7) has a unique invariant probability measure.

For all \(\beta >\beta _c\), we have \(\gamma <\gamma _c\) then

so there are three invariant probability measures. The proof is complete. \(\square \)

Quantitative Analysis

Theorem 2 implies that when \(\gamma <0\), there is no phase transition. So we only consider the case of \(\gamma >0\).

Theorem 3

For Eq. (7), we assume \(\beta >0\) and \(\gamma >0\). Then there exists a critical value \(\beta _{c}=\frac{12-\gamma ^2}{2\gamma }>0\) such that

(i) For \(0<\gamma <2\sqrt{3}\) and \(\beta >\beta _c\), or \(\gamma \ge 2\sqrt{3}\) and \(\beta >0\), there exist three invariant probability measures \(\{\pi _s:s=0,\pm \}\). In this case, a phase transition occurs for the solution of Eq. (7).

(ii) For \(0<\gamma <2\sqrt{3}\) and \(0<\beta \le \beta _c\), there exists a unique invariant probability measure \(\pi _0\).

Here,

$$\begin{aligned} m_0&=0,\quad m_\pm =\pm \frac{1}{2}\sqrt{(\gamma ^2+2\beta \gamma -12)/\gamma }, \\ \mu _s(dx)&=\exp [-x^4+\beta x^2+\gamma m_sx]dx, \\ \pi _s(dx)&=\mu _s(dx)/\mu _s(\mathbb {R}),\quad s=0,~\pm . \end{aligned}$$

As shown in Fig. , the shaded areas are phase transition areas and others are ergodic ones.
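The closed-form expressions in the statement of Theorem 3 are straightforward to evaluate. The sketch below only transcribes \(\beta _c\) and \(m_\pm \) as stated in the theorem; the function names and the variable name `discriminant` for \(\gamma ^2+2\beta \gamma -12\) are ours.

```python
import math


def beta_critical(gamma):
    """beta_c = (12 - gamma^2) / (2 gamma), as stated in Theorem 3 (gamma > 0)."""
    if gamma <= 0.0:
        raise ValueError("Theorem 3 assumes gamma > 0")
    return (12.0 - gamma ** 2) / (2.0 * gamma)


def stationary_means(beta, gamma):
    """Means m_s of the invariant measures listed in Theorem 3.

    Returns [0.0] in the uniqueness regime and [-m, 0.0, m] otherwise.
    """
    if beta <= 0.0 or gamma <= 0.0:
        raise ValueError("Theorem 3 assumes beta > 0 and gamma > 0")
    discriminant = gamma ** 2 + 2.0 * beta * gamma - 12.0
    if discriminant <= 0.0:
        return [0.0]
    m = 0.5 * math.sqrt(discriminant / gamma)
    return [-m, 0.0, m]


if __name__ == "__main__":
    print(beta_critical(2.0))          # (12 - 4) / 4
    print(stationary_means(4.0, 2.0))  # beta above beta_c for gamma = 2
    print(stationary_means(1.0, 2.0))  # beta below beta_c for gamma = 2
```

For \(\gamma >0\) the condition \(\gamma ^2+2\beta \gamma -12>0\) is equivalent to \(\beta >\beta _c\), which is why a single sign test separates cases (i) and (ii).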

Proof

Since

by Eq. (9) we have

Taking the derivative of both sides of the above equation with respect to m, we have

This and Eq. (15) imply

Taking the derivative again, we get

and from Eq. (15) we obtain

Thus, we have

that is

Taking the expectation of both sides of Eq. (14), we get

\(\mathbb {E}X_t=m\) and \(\mathbb {E}X_t^3=\frac{\gamma m^3+3m}{\gamma }\) imply

By Eq. (16), we have

Put

Setting \(g(\gamma ,\beta )=0\), we obtain the critical value of \(\beta \)

For \(g(\gamma ,\beta )>0\), Eq. (16) has three real solutions:

Otherwise Eq. (16) has a unique real solution: \(m_0=0\). The specific analysis is as follows.

- For \(0<\gamma <2\sqrt{3}\) and \(\beta >\beta _c\), or \(\gamma \ge 2\sqrt{3}\) and \(\beta >0\), we have \(g(\gamma ,\beta )>0\).

- For \(0<\gamma <2\sqrt{3}\) and \(0<\beta \le \beta _c\), we have \(g(\gamma ,\beta )\le 0\).

If \(g(\gamma ,\beta )>0\), then there exist three invariant probability measures:

Thus, a phase transition occurs for the solution of Eq. (7).

If \(g(\gamma ,\beta )\le 0\), then there exists a unique invariant probability measure:

The proof is complete. \(\square \)

4 Asymptotic Behavior

When \(\gamma =0\), Eq. (7) degenerates into the following stochastic differential equation:

The existence and uniqueness of the solution \(Y_t\) are obvious, and we have the unique invariant probability measure

To emphasize the dependence on \(\gamma \) in Eq. (7), we write \(X(t)=X_\gamma (t)\). Let \(\pi _\gamma \) denote the invariant probability measure of \(X_\gamma (t)\). We shall use the following lemma to study the convergence of \(\pi _\gamma \) in Wasserstein distance (see [2, Theorem 5.6]).

Lemma 4

\(\pi _\gamma {\mathop {\longrightarrow }\limits ^{W_\theta }}\pi _0\) as \(\gamma \rightarrow 0\) if and only if the following two conditions hold:

1. \(\pi _\gamma {\mathop {\longrightarrow }\limits ^{w}}\pi _0\) as \(\gamma \rightarrow 0\),

2. \(\int _\mathbb {R}{\vert x-x_0\vert }^\theta \pi _\gamma (dx)\rightarrow \int _\mathbb {R}{\vert x-x_0\vert }^\theta \pi _0(dx)\) for some (or any) \(x_0\in \mathbb {R}\) as \(\gamma \rightarrow 0\).

Given \(\xi \in L^2(\Omega ,\mathscr {F}_0,\mathbb {P};\mathbb {R})\), as \(\gamma \rightarrow 0\), we have the following theorem.

Theorem 5

We claim that

1. \(\lim _{\gamma \rightarrow 0}\mathbb {E}\sup _{t\le T}{\vert X_\gamma (t)-Y(t)\vert }^2=0,~~\forall ~T>0.\)

2. \(\pi _\gamma {\mathop {\longrightarrow }\limits ^{W_\theta }}\pi _0\) as \(\gamma \rightarrow 0\).

Proof

(1) By Itô’s formula, we obtain

For any \(T>0\), we have

Gronwall’s inequality yields

Using the fact \(\vert x-y\vert ^2\le 2(\vert x\vert ^2+\vert y\vert ^2)\) and putting \(x=X_\gamma -Y\), \(y=-Y\), we obtain

It follows that there exists \(\gamma _0>0\) such that we have

Here we used the fact that, due to \(\xi \in L^2(\Omega ,\mathscr {F}_0,\mathbb {P};\mathbb {R})\) there exists \(\alpha \ge 0\) such that \(\mathbb {E}{\vert Y(t)\vert }^2\le \alpha (1+\mathbb {E}\vert \xi \vert ^2)<\infty \) for any \(t\in [0,T].\) Thus,

Finally, by (18) we obtain

(2) (a) For any \(f\in C_b(\mathbb {R})\), assume \(\vert f(x)\vert \le M\) for all \(x\in \mathbb {R}\), then by dominated convergence theorem and

we have

Thus, we have \(\pi _\gamma {\mathop {\longrightarrow }\limits ^{w}}\pi _0\).

(b) We still need to prove that \(\int _\mathbb {R}{\vert x-x_0\vert }^\theta \pi _\gamma (dx)\rightarrow \int _\mathbb {R}{\vert x-x_0\vert }^\theta \pi _0(dx)\) as \(\gamma \rightarrow 0\). Choose \(x_0=0\) and put

The dominated convergence theorem yields that

By Lemma 4, we have \(\pi _\gamma {\mathop {\longrightarrow }\limits ^{W_\theta }}\pi _0\) as \(\gamma \rightarrow 0\). The proof is complete. \(\square \)
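The first claim of Theorem 5 can be illustrated by a synchronous coupling: \(X_\gamma \) and Y are simulated with the same initial values and the same Brownian increments, and the mean of \(\sup _{t\le T}{\vert X_\gamma (t)-Y(t)\vert }^2\) over the particles is reported. The sketch assumes the drift \(-4x^3+2\beta x+\gamma \mathbb {E}X_t\) with diffusion coefficient \(\sqrt{2}\) for \(X_\gamma \), and the same equation with \(\gamma =0\) for Y; the empirical mean replaces \(\mathbb {E}X_t\). The discretization, the parameters, and the function names are our own, and the output is a Monte Carlo estimate, not a proof.

```python
import math
import random


def euler_step(x, mean_field, beta, dt, dw):
    """One Euler-Maruyama step for the assumed drift -4x^3 + 2 beta x + mean_field."""
    return x + (-4.0 * x ** 3 + 2.0 * beta * x + mean_field) * dt + dw


def sup_gap(gamma, beta=1.0, n_particles=100, n_steps=400, dt=0.005, seed=1):
    """Estimate of E sup_{t<=T} |X_gamma(t) - Y(t)|^2 with T = n_steps * dt,
    where X_gamma and Y share initial values and Brownian increments."""
    rng = random.Random(seed)
    xs = [rng.gauss(0.0, 1.0) for _ in range(n_particles)]
    ys = list(xs)
    sup_sq = [0.0] * n_particles
    noise_scale = math.sqrt(2.0 * dt)
    for _ in range(n_steps):
        mean_x = sum(xs) / n_particles  # empirical stand-in for E[X_gamma(t)]
        for i in range(n_particles):
            dw = noise_scale * rng.gauss(0.0, 1.0)
            xs[i] = euler_step(xs[i], gamma * mean_x, beta, dt, dw)
            ys[i] = euler_step(ys[i], 0.0, beta, dt, dw)
            sup_sq[i] = max(sup_sq[i], (xs[i] - ys[i]) ** 2)
    return sum(sup_sq) / n_particles


if __name__ == "__main__":
    for gamma in (0.5, 0.1, 0.01, 0.0):
        print(gamma, sup_gap(gamma))
```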

Remark 1

In Eq. (6), for any \(f,~g\in C_c^2(\mathbb {R}),\)

Using integration by parts, we get

and

Therefore,

In the same way, we obtain that the left-hand side of Eq. (6) is equal to the same expression, and Eq. (6) is verified.

Let \((P_t)_{t\ge 0}\) denote the family of transition probability of the process \((X_t)_{t\ge 0}\). Since \((X_t)_{t\ge 0}\) is unique, Eq. (6) is equivalent to

Taking \(g(x)=\mathrm{{I}}_{\mathbb {R}}(x)\), one can get \(P_tg(x)=1\), and then

which means that \(\mu \) is an invariant measure. Thus, we get an invariant probability measure \(\pi =\mu (\mathbb {R})^{-1}\mu \).

Remark 2

First, for the existence and uniqueness of the solution of Eq. (1), we need to prove that the coefficients satisfy conditions \(({\textbf {H1}})-({\textbf {H3}})\) of Theorem 2.1 in [21]. Assumption \(({\textbf {H1}})\) holds obviously, so it is sufficient to prove that assumptions \(({\textbf {H2}})\) and \(({\textbf {H3}})\) hold.

Denote \(b(x,\mu )=-4x^3+2\beta x+\gamma \mu (\vert \cdot \vert )\), then for any \(x,y\in \mathbb {R},~\mu ,\nu \in \mathscr {P}_1\), we have \(({\textbf {H2}})\) (Monotonicity)

Note that for any coupling \(\pi \) of \(\mu \) and \(\nu \), it is easy to see that

Since the \(\pi \) is arbitrary, taking the infimum over all coupling \(\pi \), we obtain that

\(({\textbf {H3}})\) (Growth)

Next, for a strong solution we need joint continuity in the spatial and time variables, as in Theorem 2.2 in [21]. Thus it is enough to prove that, for any \(x,y\in \mathbb {R},~\mu ,\nu \in \mathscr {P}_3\), the following condition holds:

where \(K=\max \{\frac{4\beta +2\vert \gamma \vert }{3}, 4+\frac{2\beta }{3}, \frac{\vert \gamma \vert }{3}\}\), and here we have used Young's inequality.

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Change history

24 January 2023

A Correction to this paper has been published: https://doi.org/10.1007/s10955-023-03070-1

References

1. Ahmed, N.U., Ding, X.: On invariant measures of nonlinear Markov processes. J. Appl. Math. Stoch. Anal. 6, 385–406 (1993)

2. Chen, M.F.: From Markov Chains to Non-equilibrium Particle Systems, 2nd edn. World Scientific Publishing, Singapore (2004)

3. Chen, M.F.: Eigenvalues, Inequalities, and Ergodic Theory. Springer, London (2005)

4. Chen, M.F.: Jump Processes and Interacting Particle Systems. Beijing Normal Univ. Press, Beijing (1986). (in Chinese)

5. Chen, M.F., Mao, Y.H.: Introduction to Stochastic Processes. Higher Education Press and World Scientific, Singapore (2021)

6. Da Prato, G., Zabczyk, J.: Ergodicity for Infinite-dimensional Systems. Cambridge University Press, Cambridge (1996)

7. Durrett, R.: Lecture Notes on Particle Systems and Percolation. Wadsworth and Brooks/Cole, Pacific Grove, Belmont, Calif. (1988)

8. Dawson, D.A., Zheng, X.G.: Law of large numbers and central limit theorem for unbounded jump mean field models. Adv. Appl. Math. 12, 293–326 (1991)

9. Feng, S.: Large deviations for empirical process of interacting particle system with unbounded jumps. Ann. Probab. 22(4), 2122–2151 (1994)

10. Feng, S.: Large deviations for Markov processes with interaction and unbounded jumps. Probab. Theory Relat. Fields 100, 227–252 (1994)

11. Feng, S.: Nonlinear master equations of multitype particle systems. Stoch. Proc. Appl. 57, 247–271 (1995)

12. Feng, S., Zheng, X.G.: Solutions of a class of nonlinear master equations. Stoch. Proc. Appl. 43, 65–84 (1992)

13. Holley, R.A., Stroock, D.W.: \(L^2\) theory for the stochastic Ising model. Z. Wahrscheinlichkeitstheorie Verw. Gebiete 35, 87–101 (1976)

14. Kesten, H.: Percolation Theory for Mathematicians. Birkhäuser, Boston (1982)

15. Liggett, T.M.: Interacting Particle Systems. Springer, New York (1985)

16. Malyshev, V.A., Minlos, R.A.: Gibbs Random Fields: Cluster Expansions. Kluwer Academic Publishers, New York (1991)

17. Malyshev, V.A., Minlos, R.A.: Linear Infinite-Particle Operators. Transl. Math. Monographs, vol. 143. American Mathematical Society (1994)

18. Øksendal, B.: Stochastic Differential Equations: An Introduction with Applications, 6th edn. Springer, Singapore (2013)

19. Sinai, Y.G.: Theory of Phase Transitions: Rigorous Results. Pergamon Press, Oxford (1982)

20. Tugaut, J.: Phase transitions of McKean–Vlasov processes in double-wells landscape. Stoch. Int. J. Probab. Stoch. 86(2), 257–284 (2014)

21. Wang, F.Y.: Distribution dependent SDEs for Landau type equations. Stoch. Process. Appl. 128(2) (2018)

22. Yan, S.J.: Introduction to Infinite Particle Markov Processes. Beijing Normal University Press, Beijing (1989)

23. Zhang, S.Q.: Existence and Non-uniqueness of Stationary Distributions for Distribution Dependent SDEs. arXiv:2105.04226 (2021)

Acknowledgements

This work is supported by the National Natural Science Foundation of China (11931004, 12090011) and the Priority Academic Program Development of Jiangsu Higher Education Institutions.


Ethics declarations

Conflict of interest

The authors have no financial or proprietary interests in any material discussed in this article.

Additional information

Communicated by Li-Cheng Tsai.


The original online version of this article was revised: In this article the affiliation details for Yingchao Xie were incorrect and it has been updated.


About this article

Cite this article

Liu, Y., Xie, Y. & Zhang, M. Phase Transitions for a Class of Time-Inhomogeneous Diffusion Processes. J Stat Phys 190, 42 (2023). https://doi.org/10.1007/s10955-022-03054-7


DOI: https://doi.org/10.1007/s10955-022-03054-7