Abstract

We study a stochastic process which describes the dynamics of a particle performing a finite-velocity random motion whose velocities alternate cyclically. We consider two cases, in which the state-space of the process is (i) \({\mathbb {R}} \times \{\vec {v}_1, \vec {v}_2\}\), with velocities \(v_1 > v_2\), and (ii) \({\mathbb {R}}^2 \times \{\vec {v}_1, \vec {v}_2, \vec {v}_3\}\), where the particle moves along three different directions with possibly unequal velocities. Assuming that the random intertimes between consecutive changes of directions are governed by geometric counting processes, we first construct the stochastic models of the particle motion. Then, we investigate various features of the considered processes and obtain the formal expression of their probability laws. In the case (ii) we study a planar random motion with three specific directions and determine the exact transition probability density functions of the process when the initial velocity is fixed. In both cases we also investigate the behavior of the probability distributions of the motion when the intensities of the underlying geometric counting processes tend to infinity. The asymptotic probability law of the particle is found to be a uniform distribution in case (i) and a three-peaked distribution in case (ii).

1 Introduction

Stochastic processes for the description of finite-velocity random motions have been widely studied during the last decades. Typically, they refer to the motion of a particle moving with finite speed on the real line, or on more general domains, and alternating between various possible velocities or directions at random times. The basic model is the so-called (integrated) telegraph process, in which the switches between the two possible velocities are governed by a Poisson process (see, for instance, Orsingher [29] and Beghin et al. [2]). Various generalizations of the basic model have been proposed in the past. See, for instance, the one-dimensional random evolution where the new velocity is determined by the outcome of a random trial, cf. Crimaldi et al. [6]. Recent developments in this area are devoted to determining the exact distributions of the maximum of the telegraph process (see Cinque and Orsingher [4]), to analyzing telegraph random evolutions on a circle (cf. De Gregorio and Iafrate [8]), to investigating the telegraph process driven by gamma components (cf. Martinucci et al. [28]), and to studying the squared telegraph process (see Ratanov et al. [38] and Martinucci and Meoli [27]). Further investigations have addressed generalized telegraph equations and random flights in higher dimensions (cf. Pogorui and Rodríguez-Dagnino [33,34,35] and De Gregorio [7]), telegraph-type reinforced random-walk models leading to superdiffusion (cf. Fedotov et al. [14]), and the Ornstein-Uhlenbeck process of bounded variation driven by an underlying integrated telegraph process (cf. Ratanov [37]).

Several studies on the telegraph and related processes have been developed in the physics community, starting from the very first contributions in the area of finite-velocity random motions due to Goldstein [16] and Kac [19]. Recently, there has been a resurgence of the study of the telegraph process in the physics literature in the context of active matter, where the process is also known as the run-and-tumble particle motion. As a recent contribution in this area we recall the paper by Malakar et al. [26], which is devoted to the determination of the exact probability distribution of a run-and-tumble particle perturbed by Gaussian white noise in one dimension. Here, the authors also focus on the analysis of the relaxation to the steady state and on certain first-passage-time problems. Moreover, similar problems have been addressed in Dhar et al. [9] for a run-and-tumble particle subjected to confining potentials of the type \(V (x) = \alpha \vert x\vert ^p\), with \(p > 0\). An extension of the analysis performed in the previous articles can be found in Santra et al. [40], where a run-and-tumble particle running in two spatial dimensions is considered.

A fruitful research line related to finite-velocity random evolution has been stimulated by applications to insurance and mathematical finance (cf. the books by Rolsky et al. [39], Kolesnik and Ratanov [20], and Swishchuk et al. [42]), since the alternating random behaviour of the relevant stochastic processes is especially suitable to describe the fluctuating behaviour of the prices of risky assets observed in financial markets. See, for instance, the model based on the geometric telegraph process by Di Crescenzo and Pellerey [12], the refinement characterized by alternating velocities and jumps introduced in Lopez and Ratanov [25], and the transformed telegraph process for the pricing of European call and put options (cf. Pogorui et al. [36]).

Several real-world applications involve inter-arrival time statistics analogous to those of the counting processes governing the finite-velocity random motions of interest. An example related to the nonhomogeneous Poisson process for earthquake occurrences is given in Shcherbakov et al. [41]. Another application in geoscience is related to the Brownian motion process driven by a generalized telegraph process for the description of the vertical motions in the Campi Flegrei volcanic region (see Travaglino et al. [43]).

Moreover, stochastic processes for finite-velocity motions are widely used in biomathematics, motivated by the need to describe a variety of random movements performed by cells, micro-organisms and animals. For instance, Garcia et al. [15] investigate random motions of Daphnia where the animal, while foraging for food, performs a correlated random walk in two dimensions formed by a sequence of straight line hops, each followed by a pause, and a change of direction through a turning angle. See also Hu et al. [17] for a Brownian motion governed by a telegraph process adopted as a moving-resting model for the movements of predators with long inactive periods.

In this area, finite-velocity planar random motions are suitable to model the alternation of particle movements and changes of direction at random times. Accordingly, considerable effort has been devoted to developing mathematical tools for the determination of the related exact probability laws. For instance, we recall the study of cyclic motions in \({\mathbb {R}}^2\) (cf. Orsingher [30, 31] for the case of exponential times between changes of direction, and Di Crescenzo et al. [10] for the case of Erlang times). Moreover, symmetry properties related to the particle’s motion in \({\mathbb {R}}^2\) are investigated in Kolesnik and Turbin [21]. The probability law of the motion of a particle performing a cyclic random motion in \({\mathbb {R}}^n\) is determined in Lachal [22], while a minimal cyclic random motion is studied in Lachal et al. [23]. The evaluation of the conditional probabilities in terms of order statistics has been successfully applied in the case of planar cyclic random motions with orthogonal directions by Orsingher et al. [32]. A particular case involving orthogonal directions switching at times regulated by a Poisson process is assessed by Leorato et al. [24], whereas a similar model governed by a non-homogeneous Poisson process is treated in Cinque et al. [5].

Several attempts to extend the basic variants of the telegraph process have been made in recent years by generalizing or modifying the underlying Poisson process. However, only a few instances allow one to construct solvable and tractable models of random motions. For instance, see Iacus [18] where the number of velocity switches follows a suitable non-homogeneous Poisson process whose time-varying intensity is the hyperbolic tangent function.

Stimulated by the previous results and motivated by possible applications, in this paper we propose a new paradigm for the underlying point process in suitable instances of the telegraph process. Specifically, we refer to finite-velocity random motions in \({\mathbb {R}}\) and \({\mathbb {R}}^2\), with 2 and 3 velocities alternating cyclically, respectively, where the number of displacements of the motion along each possible direction follows a Geometric Counting Process (GCP) (see, for instance, Cha and Finkelstein [3]).

This new scheme is motivated by the fact that the memoryless property of the times separating successive events in real phenomena represents an exceptional situation, while in many concrete cases their distributions are characterized by heavy tails. Accordingly, the proposed study aims to extend the analysis of the classical telegraph process to cases in which the intertimes between velocity changes possess a heavy-tailed distribution (instead of the typical exponential distribution), such as the modified Pareto distribution concerning the GCP. Moreover, the proposed stochastic processes provide new models for the description of phenomena that are no longer governed by hyperbolic PDE’s such as the classical telegraph equation. Another strength of the present study is the construction of new solvable models of random motion, whose probability laws are obtained in closed and tractable form.

Unlike the classical telegraph process, whose probability density under Kac’s limiting conditions tends to the Gaussian transition function of Brownian motion, the processes under investigation exhibit a different behaviour. In particular, a noteworthy result of this paper shows that when the parameters of the underlying GCP tend to infinity, the probability density of the process tends to (i) a uniform distribution in the one-dimensional case, and (ii) a three-peaked distribution in the two-dimensional case.

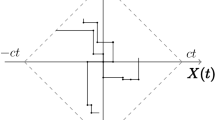

In detail, we first study the process \(\{(X(t),V(t)), \; t\in {\mathbb {R}}_0^+\}\), with state space \({\mathbb {R}} \times \{\vec {v}_1, \vec {v}_2\}\), which represents the motion of a particle on the real line, with alternating velocities \(v_1 > v_2\). As customary, the law of such a process has two components: a singular component, corresponding to the case in which there are no velocity switches, and an absolutely continuous component, related to the motion of the particle when the velocity changes at least once. Secondly, we analyze the process \(\{(X(t),Y(t),V(t)), \; t \in {\mathbb {R}}_0^+\}\), with state-space \({\mathbb {R}}^2 \times \{\vec {v}_1, \vec {v}_2, \vec {v}_3\}\), which describes a particle performing a planar motion with three specific directions. Once the region \({\mathcal {R}}(t)\) representing all the possible positions of the particle at a given time has been defined, the probability law shows that the distribution of this process is a mixture of two discrete components, describing the situations in which the particle is found on the boundary of the region, and an absolutely continuous part, related to the motion of the particle in the interior of \({\mathcal {R}}(t)\). This type of two-dimensional process is well suited to describing the motion of a particle in a turbulent medium, for example, in the presence of a vortex.

This is the plan of the paper. In Sect. 2, we formally describe the process X(t) and illustrate some preliminary results on the transition densities in a more general case. In Sect. 3, assuming that the random intertimes between consecutive changes of directions are governed by a geometric counting process (GCP), after the construction of the stochastic model we obtain the formal expression of the probability laws and the moments of the process conditional on the initial velocity \(\vec {v}_1\). In particular, we determine the probability distribution of X(t) and study some limit results when the initial velocity is random. We also show that, when the parameters of the intertimes between velocity changes tend to infinity, the distribution of X(t) tends to be uniform over the relevant diffusion interval. Finally, in Sect. 4, we study a planar random motion and determine the exact transition probability density functions of the process when the initial velocity is \(\vec {v}_1\). We investigate the stochastic process and its probability laws when the underlying counting process is a GCP. As an example, we analyze a special case with three fixed cyclic directions. Also in this case we discuss the limiting distribution of the process when the parameters of the intertimes tend to infinity. Unlike the one-dimensional case, the distribution of the planar process tends to a non-uniform distribution characterized by three peaks.

Throughout the paper we assume that \(\sum _{i=1}^0a_i=0\) and \(\prod _{i=1}^0a_i=1\), as customary.

2 A Finite Two-Velocities Random Motion

We consider a particle that starts at the origin of the real line and that proceeds alternately with two velocities \(\vec {v}_1\) and \(\vec {v}_2\). The magnitude of the vector \(\vec {v}_j\) is denoted as \(|\vec {v}_j|=v_j\), with \(j=1,2\) and \(v_1 > v_2\). The direction of the particle motion is determined at each instant by the sign of the velocity, so that it is forward, stationary or backward if \(v_j>0\), \(v_j=0\) or \(v_j<0\), respectively.

Let \(D_{j,k}\), with \(j=1,2\), be the duration of the k-th time interval during which the motion proceeds with velocity \(\vec {v}_j\). Let \({\mathbb {N}}=\{1,2,\ldots \}\). We assume that \(\{D_{j,k}, \; k\in {\mathbb {N}}\}_{j=1,2}\) are mutually independent sequences of nonnegative and possibly dependent absolutely continuous random variables. With reference to the intertimes \(D_{j,k}\), for all \(x\in {\mathbb {R}}\) we denote the distribution function by \(F_{D_{j,k}}(x)={\mathbb {P}}(D_{j,k}\le x)\), the survival function by \({\overline{F}}_{D_{j,k}}(x)=1-F_{D_{j,k}}(x)\) and the p.d.f. by \(f_{D_{j,k}}(x)\). Let us set

where, for fixed \(j\in \{1,2\}\), the r.v.’s \(D_{j,k}\) are possibly dependent.

We denote by \(T_n\), \(n\in {\mathbb {N}}\), the n-th random instant in which the motion changes velocity. Let \(\{N(t), \; t\in {\mathbb {R}}_0^{+}\}\) be the alternating counting process, with two independent subprocesses, having inter-renewal times \(T_1,T_2,\ldots \), so that N(t) counts the number of velocity reversals of the particle in [0, t], i.e.

Now we introduce the stochastic process \(\{(X(t),V(t)), \; t\in {\mathbb {R}}_0^{+}\}\), having state-space \({\mathbb {R}}\times \{{v}_1,{v}_2\}\), that describes the motion of the particle, with initial conditions

The position X(t) and the velocity V(t) of the particle at time t are expressed respectively as follows:

with \(\ell \) defined as

Hence, recalling Eq. (1), the n-th velocity change satisfies

From Eq. (3) we have that the particle at every instant \(t \in {\mathbb {R}}^+\) is confined in \([v_2t,v_1t]\). Indeed, if the particle does not change velocity in [0, t], then it occupies one of the extremes of \([v_2t,v_1t]\) according to the initial velocity V(0). Otherwise, if the particle changes velocity at least once in [0, t], then it occupies a state belonging to \((v_2t,v_1t)\). Therefore, the conditional law of \(\{(X(t),V(t));t\ge 0\}\) is characterized by two components, for \(t \in {\mathbb {R}}^+\) and \(v\in \{{v}_1,{v}_2\}\):

- (i) a discrete component

  $$\begin{aligned} {\mathbb {P}}\{X(t)=vt, V(t)=v\, \vert \,X(0)=0, V(0)=v\}, \end{aligned} \tag{6}$$

- (ii) an absolutely continuous component

  $$\begin{aligned} p(x,t\,\vert \,v)&= {\mathbb {P}}\{X(t)\in \textrm{d}x \,\vert \,X(0)=0, V(0)=v\} / \textrm{d}x\\&=p_1(x,t\,\vert \,v)+p_2(x,t\,\vert \,v), \end{aligned} \tag{7}$$

  where, for \(j=1,2\) and \(v_2t<x<v_1t\),

  $$\begin{aligned} p_j(x,t\,\vert \,v)\,\textrm{d}x= {\mathbb {P}}\{X(t)\in \textrm{d}x, V(t)=v_j\, \vert \,X(0)=0, V(0)=v\}. \end{aligned} \tag{8}$$

We remark that the case \(v_2<0<v_1\) has been often treated as a typical instance of the (integrated) telegraph process (see [2], for instance), that goes along forward and backward directions alternately. In this case, the functions \(p_1\) and \(p_2\) are respectively the forward and backward p.d.f.’s of the motion given the initial velocity \(V(0)=v\in \{v_1,v_2\}\).

3 Intertimes Distributed as a Geometric Counting Process

The general assumptions considered in Sect. 2 can be specialized to the case in which the alternating counting process defined in Eq. (2) arises from the alternation of two independent geometric counting processes.

3.1 Background on the Geometric Counting Process

Let us now recall some key results concerning the geometric counting process. We consider a mixed Poisson process \(\{{\tilde{N}}_\lambda (t), \; t\in {\mathbb {R}}_0^{+}\}\), whose marginal distribution is expressed as the following mixture:

where \(N^{(\alpha )}(t)\) is a Poisson process with intensity \(\alpha \) and where \(U_{\lambda }\) is an exponential distribution with mean \(\lambda \in {\mathbb {R}}^{+}\). According to the terminology adopted in [3], the process \({\tilde{N}}_\lambda (t)\) is called a GCP with intensity \(\lambda \), since its probability distribution is

See, for instance, Di Crescenzo and Pellerey [13] for some results and applications of the GCP. We denote by \({\tilde{T}}_{n,\lambda }\), \(n \in {\mathbb {N}}\), the random times denoting the arrival instants of the process \({\tilde{N}}_\lambda (t)\), with \({\tilde{T}}_{0,\lambda } = 0\). Recalling [13], \({\tilde{T}}_{n,\lambda }\) has a modified Pareto (Type I) distribution, with p.d.f.

The process \({\tilde{N}}_{\lambda }(t)\) has dependent increments \({\tilde{D}}_{n,\lambda }={\tilde{T}}_{n, \lambda }-{\tilde{T}}_{n-1,\lambda }, \; n \in {\mathbb {N}}\). Moreover, the conditional survival function of \({\tilde{D}}_{n,\lambda }\) conditional on \({\tilde{T}}_{n-1,\lambda }=t\), for \(n \in {\mathbb {N}}\), can be written as (see, e.g. [1])

The corresponding p.d.f. of \({\tilde{D}}_{n,\lambda }\) conditional on \({\tilde{T}}_{n-1,\lambda }=t\) is:

Moreover, the instantaneous jump rate of \({\tilde{N}}_\lambda (t)\) depends on time and on the number of occurred jumps, being

as \(h \rightarrow 0^{+}\). With reference to the stochastic process \(\{(X(t),V(t)), \; t \in {\mathbb {R}}_0^+\}\) defined in Sect. 2, hereafter we study the random motion in the special case when the alternating phases of the motion are governed by two independent GCP’s with possibly different intensities.
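The formulas recalled in this subsection can be cross-checked directly from the mixed-Poisson definition. The following is only a sketch, not taken from the source: it assumes that the mixing law has density \(\lambda ^{-1}e^{-\alpha /\lambda }\) on \((0,\infty )\), and the symbols \(k\), \(s\) and the Gamma posterior argument are notation introduced here for illustration.

```latex
% (a) Marginal law: Poisson probabilities mixed over an exponential rate with mean \lambda
\mathbb{P}\{\tilde N_\lambda(t)=k\}
  = \int_0^\infty e^{-\alpha t}\,\frac{(\alpha t)^k}{k!}\,
    \frac{1}{\lambda}\,e^{-\alpha/\lambda}\,\mathrm{d}\alpha
  = \frac{t^k}{\lambda\,(t+1/\lambda)^{k+1}}
  = \frac{1}{1+\lambda t}\left(\frac{\lambda t}{1+\lambda t}\right)^{k},
  \qquad k=0,1,2,\ldots

% (b) Given the first n-1 arrivals, with the last one at time t, the rate \alpha has
%     posterior density proportional to \alpha^{n-1} e^{-\alpha (t+1/\lambda)},
%     i.e. a Gamma law with shape n and rate t+1/\lambda. Hence, for s \ge 0,
\mathbb{P}\{\tilde D_{n,\lambda}>s \mid \tilde T_{n-1,\lambda}=t\}
  = \mathbb{E}\bigl[e^{-\alpha s}\bigr]
  = \left(\frac{t+1/\lambda}{t+s+1/\lambda}\right)^{n}
  = \left(\frac{1+\lambda t}{1+\lambda (t+s)}\right)^{n}.

% (c) Differentiating (b) at s=0 gives the jump rate after n-1 = k events:
\left.-\frac{\mathrm{d}}{\mathrm{d}s}
  \left(\frac{1+\lambda t}{1+\lambda (t+s)}\right)^{k+1}\right|_{s=0}
  = \frac{\lambda\,(k+1)}{1+\lambda t}.
```

Under these assumptions the marginal law in (a) is geometric, which is consistent with the name of the process, and the polynomial decay in (b) reflects the heavy-tailed (Pareto-type) intertimes mentioned in the Introduction.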

3.2 The Stochastic Process and Its Probability Laws

In this section, we investigate the probability law of the process \(\{(X(t),V(t)), t\in {\mathbb {R}}_0^{+}\}\) when the subprocesses of the alternating counting process (2) are two independent GCP’s. Specifically, we assume that for \(j=1,2\) the sequences of intertimes \(D_{j,k}\), \(k\in {\mathbb {N}}\), during which the motion has velocity \(v_j\), are distributed as the intertimes of a GCP with intensity \(\lambda _j\).

In the remainder of this section, \({\mathbb {P}}_i\) will denote the probability conditional on \(\{X(0)=0, V(0)=v_i\}\), for \(i=1,2\).

Let us now introduce the following conditional sub-densities of the process \(\{(X(t),V(t)), \; t\in {\mathbb {R}}_0^{+}\}\) for \(t>0\), \(v_2 t< x < v_1 t\), \(n \in {\mathbb {N}}\) and \(j=1,2\):

recalling that N(t) gives the number of velocity changes in [0, t]. Hence, from Eqs. (8) and (15) we have, for \(v\in \{v_1,v_2\}\),

For the analysis of finite-velocity random motions one is often led to constructing the PDE’s for the related sub-densities (see, for instance, Di Crescenzo et al. [11] for a finite-velocity damped motion on the real line). In our case, for the p.d.f.’s introduced in (15) a system of hyperbolic PDE’s can be obtained. Details are omitted for brevity. Unfortunately, solving such a system is a very hard task. Thus, in order to obtain the conditional probability law of \(\{(X(t),V(t)), \; t\in {\mathbb {R}}_0^{+}\}\) we develop an approach based on the analysis of the intertimes between consecutive velocity changes. We first consider the case \(V(0)=v_1\).

Theorem 1

Let \(\{(X(t),V(t)), \; t\in {\mathbb {R}}_0^{+}\}\) be the process defined in (3), where N(t) is the alternating counting process determined by two independent GCP’s with intensities \(\lambda _1\) and \(\lambda _2\). Then, for all \(t>0\) we have

Moreover, for \(v_2t<x<v_1t\) one has

where

Proof

Eq. (17) follows from the p.d.f. of \(D_{1,1}\), given in (11) for \(n=1\). To obtain Eq. (18), we first analyze the conditional sub-density \(p_{1,n}(x,t\,\vert \,v_1)\). Recalling the first of (15), and conditioning on the last instant s preceding t at which the particle changes velocity from \(\vec {v}_2\) to \(\vec {v}_1\), we have

for \(t>0\), \(v_2t<x<v_1t\) and \(n \in {\mathbb {N}}\). Since \(V(0) = v_1\), one has \(T_{2n}= D_1^{(n)} + D_2^{(n)} = s\) and \(X(s)= v_1D_1^{(n)} + v_2D_2^{(n)}\), so that

Furthermore, the relation \(v_2s< X(s) = x-v_1(t - s)\) yields \(s > t-\tau \), due to (20). Hence, denoting by \(h(\cdot , \cdot )\) the joint p.d.f. of

we obtain

Since the sequences \(\{D_{1,n}\}\) and \(\{D_{2,n}\}\) are mutually independent by assumption, making use of Eq. (13) we get

Moreover, from the conditional survival function given in Eq. (12) it follows that

Therefore, from the latter three equations, after some calculations we obtain:

Similarly, the first sub-density introduced in (15) for \(j=2\) is given by

Finally, by making use of Eq. (16) and

we obtain the p.d.f.’s (18) and (19). \(\square \)

As an example, a sample path for the particle motion when \(V(0)=v_1\) is shown in Fig. 1.
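Sample paths of this kind can also be generated numerically. The sketch below is not the authors' code: it assumes the mixed-Poisson representation recalled in Sect. 3.1, namely that, for each velocity \(v_j\), a rate is drawn once from an exponential law with mean \(\lambda _j\) and the sojourn times at \(v_j\) are then i.i.d. exponential with that rate. The function name `simulate_position` and all parameter values are hypothetical choices made for illustration.

```python
import random


def simulate_position(t, v1, v2, lam1, lam2, rng):
    """Return (X(t), number of velocity switches in [0, t]) when V(0) = v1.

    Assumption (not from the source): GCP intertimes with intensity lam are,
    given a rate alpha drawn once from an exponential law with mean lam,
    i.i.d. exponential with rate alpha.
    """
    # One random rate per velocity phase; the floor avoids a zero rate
    # in the (practically impossible) case that the draw returns 0.0.
    alphas = tuple(max(rng.expovariate(1.0 / lam), 1e-300) for lam in (lam1, lam2))
    velocities = (v1, v2)
    clock, x, phase, switches = 0.0, 0.0, 0, 0
    while True:
        duration = rng.expovariate(alphas[phase])
        if clock + duration >= t:
            # The current phase is still running at time t: truncate it.
            x += velocities[phase] * (t - clock)
            return x, switches
        x += velocities[phase] * duration
        clock += duration
        phase = 1 - phase  # alternate between the two velocities
        switches += 1


# Illustrative (hypothetical) parameters.
rng = random.Random(2024)
t, v1, v2, lam1, lam2 = 1.0, 1.0, -1.0, 1.0, 2.0
samples = [simulate_position(t, v1, v2, lam1, lam2, rng) for _ in range(20000)]

# Empirical probability of no velocity change in [0, t], to be compared with
# 1 / (1 + lam1 * t), the survival function of the first GCP arrival time.
no_switch = sum(1 for _, n in samples if n == 0) / len(samples)
print(no_switch, 1.0 / (1.0 + lam1 * t))
```

Every simulated position lies in \([v_2t, v_1t]\), and the paths without switches sit exactly at \(v_1t\), in agreement with the discrete component of the law described in Sect. 2.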

Remark 1

It is worth mentioning that the term \(v_1-v_2\) in the right-hand-side of Eqs. (18) and (19) can be viewed as the measure of the diffusion interval at time \(t=1\).

Remark 2

Due to symmetry, if \(V(0)=v_2\), the probability law of the process \(\{(X(t),V(t)), \; t \in {\mathbb {R}}_0^+\}\) can be obtained from Theorem 1 by interchanging \(p_1\) with \(p_2\), \(\lambda _1\) with \(\lambda _2\), and x with \((v_1+v_2)t-x\). Therefore, we have

Moreover, the corresponding p.d.f.’s for \(t>0\) and \(v_2t<x<v_1t\) are given by

and analogously to (17) we have

Remark 3

Under the assumptions of Theorem 1, it is not hard to see that, for \(t\in {\mathbb {R}}^+\) and \(j=1,2\),

Let us now focus on the marginal distribution of \(\{X(t), \; t\in {\mathbb {R}}_0^{+}\}\) conditional on \(\{X(0)=0, V(0)=v_j\}\), \(j=1,2\). In this case, the absolutely continuous components for \(t\in {\mathbb {R}}^+\) and \(x\in (v_2t, v_1t)\) are described by

Since \(p(x,t\vert v_j)=p_1(x,t\vert v_j)+p_2(x,t\vert v_j)\), making use of (18)–(19) and (30)–(31) it is not hard to obtain the following result.

Corollary 1

Under the assumptions of Theorem 1, the probability distribution of \(\{X(t), \; t\in {\mathbb {R}}_0^{+}\}\) conditional on \(\{X(0)=0, V(0)=v_j\}\), \(j=1,2\), is given by a discrete component identical to (17) and (32), respectively, and by

for all \(t\in {\mathbb {R}}^+\) and \(v_2t< x < v_1t\), with \(\tau =\tau (x,t)\) given in (20).

3.3 Moments

In this section, let \({\mathbb {E}}_i\) and \(\text {Var}_i\) denote respectively the expectation and the variance conditional on \(\{X(0)=0, V(0)=v_i\}\), \(i=1,2\).

Making use of Eqs. (17) and (35) for \(j=1\), we can now obtain the first and second conditional moments of X(t).

Theorem 2

Under the assumptions of Theorem 1, for all \(t \in {\mathbb {R}}_0^{+}\), we have that, for \(\lambda _1\ne \lambda _2\),

and

Moreover, for \(\lambda _1= \lambda _2 \equiv \lambda \) we get

Remark 4

The results from Eqs. (39) and (40) can be generalized in order to obtain the corresponding k-th moment of X(t). Indeed, in the case \(\lambda _1= \lambda _2 \equiv \lambda \), for \(t\in {\mathbb {R}}_0^+\), one has

In the case \(\lambda _1\ne \lambda _2\), similarly we have

where \(\Gamma \) and \({}_2F_1\) denote respectively the well-known gamma and Gaussian hypergeometric functions.

Remark 5

Using Eqs. (36)–(38), we can obtain the expression of the variance of X(t) conditional on \(\{X(0)=0,V(0)=v_1\}\). When \(\lambda _1=\lambda _2\), from Eqs. (39)–(40) one has

In both cases \(\lambda _1 \ne \lambda _2\) and \(\lambda _1=\lambda _2\), we can state that the mean and the variance of X(t) are both linear when t tends to infinity, as shown hereafter.

Corollary 2

Under the assumptions of Theorem 1, the following limits hold:

Remark 6

The results obtained in Corollary 2 can be compared with those for the telegraph processes in which the random times between consecutive velocity changes have exponential distribution (i) with constant rates, and (ii) with linearly increasing rates (cf. Di Crescenzo and Martinucci [11]). Indeed, for the process under investigation and for the two above mentioned processes the conditional mean is asymptotically linear as \(t\rightarrow \infty \), so that the following limit holds for all such cases when \(\lambda _1=\lambda _2\):

This analogy refers to the means of the mentioned processes, and does not extend to other moments. Indeed, for instance, we recall (cf. Section 1 of [13]) that the underlying Poisson and geometric processes have the same mean but different variance. Moreover, the Poisson process over time tends to a constant as time tends to \(\infty \), whereas in the same limit the geometric process over time converges in distribution to an exponential random variable.

Remark 7

Under the assumptions of Theorem 1, due to symmetry, for \(t\in {\mathbb {R}}_0^+\) one has:

3.4 Random Initial Velocity

Hereafter we analyze the probability distribution of X(t) when the initial velocity is random, according to the following rule, for \(0\le q\le 1\),

To this aim, denoting by \({\mathbb {P}}_0\) the probability conditional on \(X(0)=0\) and initial velocity specified as in (48), similarly to Eq. (8) we define

for \(v_2t<x<v_1t\), \(t\in {\mathbb {R}}_0^+\). In analogy with (17), in this case we have

For \(v_2t<x<v_1t\), \(t\in {\mathbb {R}}_0^+\), we now focus on the p.d.f.

and on the so-called flow function

We remark that w(x, t) measures, at each time t, the excess of particles moving with velocity \(v_1\) with respect to the ones moving with velocity \(v_2\) near x in a large ensemble of particles moving according to the stated rules of X(t) (see, e.g. Orsingher [29]). Making use of Eqs. (18), (19), (29), (30) and Corollary 1, now we can state the following results on the distribution and the flow function of X(t) when the initial velocity is random.

Proposition 1

Let the initial velocity of the process \(\{(X(t),V(t)),\; t \in {\mathbb {R}}_{0}^{+}\}\) be distributed as in Eq. (48). Then, for \(t \in {\mathbb {R}}^+\) and \(v_2t< x < v_1t\) we have

and therefore, due to Eqs. (51) and (52),

where \(\tau =\tau (x,t)\) is defined in (20).

Remark 8

Under the assumptions of Proposition 1, from Remark 2 one has the following symmetry relations:

valid for \(v_2t<x<v_1 t\), \(t\in {\mathbb {R}}^+\). These properties are confirmed by the plots of the functions (54) and (55) shown in Fig. 2.

Remark 9

Making use of Eq. (55) we are now able to discuss the sign of the flow function of X(t). Under the assumptions of Proposition 1, recalling (20) we have that

where

Clearly, \(m_t(\textbf{v})\) is the middle point of the diffusion interval \((v_2t, v_1t)\). Moreover, one has \(\beta (q,\textbf{v},{\varvec{\lambda }})\ge 0\) when \(q\ge \displaystyle \frac{\lambda _2}{\lambda _1+\lambda _2}\).

Remark 10

(i) Under the assumptions of Proposition 1, recalling that \(\tau (x,t)\) is increasing in x, from (54) we immediately have that p(x, t) is strictly increasing (decreasing) in x when \(\lambda _1<\lambda _2\) (\(\lambda _1>\lambda _2\)), for fixed t.

(ii) Moreover, if \(\lambda _1=\lambda _2\equiv \lambda \) then the probability law does not depend on q, and for \(t \in {\mathbb {R}}^+\) it is expressed by

In this case, due to (55) the flow function of X(t), \(t \in {\mathbb {R}}^+\), is given by

Let us now analyze the functions (51) and (52) when x tends to the border of the diffusion interval at a fixed time.

Corollary 3

Let the assumptions of Proposition 1 hold. For fixed \(t\in {\mathbb {R}}^+\) we have

and

Making use of Theorem 2 and Remark 7 we can now obtain the mean of X(t) when the initial velocity is random, henceforth denoted by \({\mathbb {E}}_0[X(t)]\).

Corollary 4

Let the initial velocity of the process \(\{(X(t),V(t)),\; t \in {\mathbb {R}}_{0}^{+}\}\) be distributed as in (48). If \(\lambda _1\ne \lambda _2\) then for \(t \in {\mathbb {R}}^+\) one has

whereas, if \(\lambda _1=\lambda _2\equiv \lambda \) then for \(t \in {\mathbb {R}}^+\)

Similarly to the classical telegraph process driven by the Poisson process, it is not hard to see that the process X(t) does not admit a stationary state. Indeed, due to Eq. (54), for all \(x\in {\mathbb {R}}\) one has

Moreover, for the classical telegraph process driven by the Poisson process it is well known that an asymptotic limit holds under Kac’s conditions, which involve both intensity and velocity, leading to the Gaussian transition function of Brownian motion (see, e.g., Lemma 2 of Orsingher [29]). We conclude this section with a different result for the stochastic process under investigation. This can be seen in the next corollary, where we let the intensities tend to \(+\infty \) in Eqs. (53) and (54). In this case the limit condition is meaningful even if it does not involve the velocities \(v_i\).

Corollary 5

Let the assumptions of Proposition 1 hold. For \(t\in {\mathbb {R}}^+\) and \(v_2t< x < v_1t\) one has, with \(\tau \) defined in (20),

and

The right-hand side of (69) shows that the distribution of the process X(t) tends to be uniform over the diffusion interval \((v_2t,v_1t)\) when the intensities \(\lambda _1\) and \(\lambda _2\) tend to infinity, with \(\lambda _1/\lambda _2\rightarrow 1\), whereas the p.d.f.’s \(p_j(x,t)\) are not asymptotically uniformly distributed.
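A heuristic reading of the uniform limit may help intuition. It is offered here as a sketch under assumptions, not as the argument used in the paper. Suppose, as in the mixed-Poisson representation of Sect. 3.1, that the sojourn times at velocity \(v_j\) are exponential with a random rate \(\alpha _j=\lambda _jE_j\), where \(E_1, E_2\) are independent unit-mean exponential random variables (\(\alpha _j\) and \(E_j\) are notation introduced here). For large intensities the particle switches very often, so the fraction of \([0,t]\) spent at velocity \(v_1\) should be close to the ratio of the mean sojourn times.

```latex
% Fraction of time spent at velocity v_1, written in terms of X(t):
\frac{X(t)-v_2 t}{(v_1-v_2)\,t}
  \;\approx\; \frac{1/\alpha_1}{1/\alpha_1+1/\alpha_2}
  \;=\; \frac{\lambda_2 E_2}{\lambda_1 E_1+\lambda_2 E_2}
  \;\longrightarrow\; \frac{E_2}{E_1+E_2}
  \;\sim\; \mathrm{Uniform}(0,1),
  \qquad \lambda_1,\lambda_2\to\infty,\quad \lambda_1/\lambda_2\to 1.
```

The last step uses the standard fact that, for independent exponential variables with the same mean, the ratio \(E_2/(E_1+E_2)\) is uniformly distributed on \((0,1)\). Under these assumptions X(t) behaves like \(v_2t+(v_1-v_2)\,t\,U\) with U uniform on \((0,1)\), which is consistent with the uniform law over \((v_2t,v_1t)\) and with the role of the condition \(\lambda _1/\lambda _2\rightarrow 1\).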

In the following section we extend the analysis of the finite-velocity motion governed by the GCP to the case when the motion evolves in \({\mathbb {R}}^2\) along three different directions attained cyclically.

4 Planar Random Motion with Underlying Geometric Counting Process

We consider a planar random motion of a particle that moves along three directions. The motion is oriented toward the cyclically alternating directions described by the vector

where \(\vec {h}\) and \(\vec {k}\) are the unit vectors along the Cartesian coordinate axes. Moreover, the angles \(\theta _j\) satisfy the conditions

The particle starts from the origin at time \(t=0\), running with constant velocity \(c>0\). Initially, it moves along the direction \(\vec {v}_1\). Then, after a random duration denoted \(D_{1,1}\), the particle instantaneously changes direction, moving along \(\vec {v}_2\) for a random duration \(D_{2,1}\). Subsequently, it moves along \(\vec {v}_3\) for a random duration \(D_{3,1}\), and so on by attaining cyclically the directions \(\vec {v}_1, \vec {v}_2, \vec {v}_3\) for the random periods \(D_{1,2},D_{2,2},D_{3,2},D_{1,3},D_{2,3},D_{3,3},\ldots \). Hence, during the n-th cycle the particle moves along directions \(\vec {v}_1,\vec {v}_2, \vec {v}_3\) in sequence for the random lengths \(D_{1,n},D_{2,n},D_{3,n}\), for \(n \in {\mathbb {N}}\), respectively. Denoting by \(T_{k}\) the k-th random instant at which the motion changes its direction, for \(n \in {\mathbb {N}}_0\) we have

where

is the total duration of the motion along direction \(\vec {v}_j\) until the n-th cycle, with

In agreement with (72) we set \(T_0=0\). In the following, we assume that the sequences \(\{ D_{j,k}; \; k \in {\mathbb {N}}\}_{j=1,2,3}\) are mutually independent. Moreover, for \(j=1,2,3\) the durations \(D_{j,1}, D_{j,2}, \ldots \) are nonnegative absolutely continuous possibly dependent random variables.

It is worth mentioning that the conditions (71) ensure that the moving particle can reach any state in \({\mathbb {R}}^2\) in a sufficiently large time t. Moreover, the considered motion provides a simple scheme, with the minimal number of possible directions, for the description of a vorticity motion whose intertimes are characterized by heavy-tailed distributions.

Hereafter we introduce the stochastic process that describes the planar random motion considered so far.

4.1 The Stochastic Process and Its Probability Laws

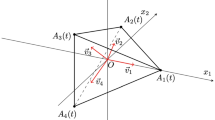

Let \(\{(X(t), Y(t), V(t)), t \in {\mathbb {R}}_0^{+}\}\) be a stochastic process with state-space \({\mathbb {R}}^2 \times \{\vec {v}_1, \vec {v}_2, \vec {v}_3\}\), where (X(t), Y(t)) gives the location of the particle and V(t) the direction of the motion at time \(t \in {\mathbb {R}}_0^{+}\), conditional on \(X(0)=0, Y(0)=0, V(0)=\vec {v}_1\). In order to specify the diffusion region of the particle at time \(t\in {\mathbb {R}}^{+}\), let us now define a time-dependent triangle \({\mathcal {R}}(t)\) whose edges are denoted by \(E_{ij}(t)\), with \(i,j=1,2,3\) and \(i < j\), whereas the vertices are given by

for fixed \(c\in {\mathbb {R}}^{+}\). Hence, the angle \(\theta _j\) describes the direction of the vertex corresponding to \(\vec {v}_j\), for \(j=1,2,3\). Therefore, under conditions (71), the equations linking two adjacent vertices are expressed as

where

Since the particle moves with velocity \(c\in {\mathbb {R}}^{+}\), and the motion is oriented alternately along the directions determined by vectors (70), the particle at time \(t\in {\mathbb {R}}^{+}\) is located inside the region delimited by the triangle

where the defining conditions arise from Eqs. (76). In particular, the particle motion involves three different, mutually exclusive cases based on the assumption that \(X(0)=0, Y(0)=0,V(0)=\vec {v}_1\):

- (i) if the direction of the motion does not change in (0, t), then at time \(t\in {\mathbb {R}}^+\) the particle is placed in the vertex \(A_1(t)\);
- (ii) if the direction of the motion changes once in (0, t), then at time \(t\in {\mathbb {R}}^+\) the particle is situated somewhere on the edge \(E_{12}(t)\);
- (iii) if more than one direction change occurs in (0, t), then at time \(t\in {\mathbb {R}}^+\) the particle is located in the interior of the region \({\mathcal {R}}(t)\), that will be denoted \(\mathring{{\mathcal {R}}}(t)\).
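The three cases can be made concrete with a short sketch that follows a path with prescribed durations and reports where the particle sits at time t. It assumes that the particle moves with speed c along the angle \(\theta _j\) while in direction \(\vec {v}_j\); the angle values, the function names `position` and `location_type`, and the flat ordering of the durations are illustrative assumptions, not part of the original model specification.

```python
import math

# Illustrative angles only; any admissible triple could be used instead.
THETAS = (math.pi / 3, math.pi, 5 * math.pi / 3)

def position(t, durations, thetas=THETAS, c=1.0):
    """Return (x, y, switches) at time t for a path started at the origin.

    `durations` lists D_{1,1}, D_{2,1}, D_{3,1}, D_{1,2}, ... in cyclic order;
    `switches` counts the direction changes in the open interval (0, t).
    """
    x = y = 0.0
    remaining = t
    for k, d in enumerate(durations):
        theta = thetas[k % 3]          # directions are attained cyclically
        run = min(d, remaining)        # time actually spent in this direction
        x += c * run * math.cos(theta)
        y += c * run * math.sin(theta)
        if d >= remaining:             # time t is reached during this run
            return x, y, k
        remaining -= d
    raise ValueError("durations do not cover the interval [0, t]")

def location_type(switches):
    """Map the number of switches to the cases (i), (ii), (iii)."""
    if switches == 0:
        return "vertex"
    if switches == 1:
        return "edge"
    return "interior"

x, y, k = position(2.0, [0.5, 0.5, 0.5, 5.0])
kind = location_type(k)
```

A path with no switch ends at the point reached by moving for the whole time along the first direction, while a single switch leaves the particle on the segment joining the endpoints associated with the first two directions.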

Let us now denote \(B_1:=\{X(0)=0, Y(0)=0, V(0)=\vec {v}_1\}\). To determine the probability laws of the process \(\{(X(t), Y(t), V(t)), t \in {\mathbb {R}}_0^{+}\}\) conditional on \(B_1\), hereafter we express the discrete components concerning events (i) and (ii) considered above. As usual, we denote by \({F}_{D}\) and \({\overline{F}}_{D}\) the distribution function and the survival function of a generic random variable D, respectively.

Theorem 3

(Discrete components) Let \(\{(X(t), Y(t), V(t)), t \in {\mathbb {R}}_0^{+}\}\) be the stochastic process defined in Sect. 4.1. For all \(t \in {\mathbb {R}}^+\) we have

and

Proof

Equations (79) and (80) are a consequence of the conditions (i) and (ii) when the initial velocity is \(V(0)=\vec {v}_1\). \(\square \)

Using condition (iii), for \(t\in {\mathbb {R}}^+\) and \(j=1,2,3\) the absolutely continuous component of the probability law can be expressed by

Clearly, the right-hand-side of Eq. (81) represents the probability that the particle at time \(t\in {\mathbb {R}}^+\) is located in a neighborhood of (x, y) and moves along direction \(\vec {v}_j\), given the initial condition expressed by \(B_1\). Moreover, we can introduce the p.d.f. of the particle location at time \(t \in {\mathbb {R}}^+\), i.e.

Due to Eqs. (81) and (82), one immediately has

In order to determine \(p_{1j}(x,y,t)\), we first introduce the function \(\psi : {\mathbb {R}}^3 \rightarrow {\mathbb {R}}^3\), which is defined as follows:

Here \(x_{\vec {v}_j}\) and \(y_{\vec {v}_j}\), for \(j=1,2,3\), denote respectively the components of each vector \(\vec {v}_j\) along the x and y axes. For a cycle of the motion with durations \(t_1\), \(t_2\) and \(t_3\), the function introduced in (84) gives a vector containing the displacements performed along x and y axes during the cycle, as well as its whole duration. We also consider the transformation matrix \({\varvec{A}}\) of function \(\psi (t_1,t_2,t_3)\), that is

Remark 11

Recalling the vertices (75), it is easy to see that \(\det (t {\varvec{A}})\) represents the area of the region \({\mathcal {R}}(t)\) defined in (78).

For a given sample path of the process \(\{(X(t), Y(t), V(t)), t \in {\mathbb {R}}_0^{+}\}\) we denote by \(\xi _j=\xi _j(x,y,t)\) the value attained by the residence times of the motion in each direction \(\vec {v}_j\) during [0, t] such that \((X(t), Y(t))=(x,y)\), given by

where \(\mathbbm {1}_{\{V(s)=\vec {v}_j\}}\) is the indicator function

so that \(\sum _{j=1}^3\xi _j=t\). It is not hard to see that \(\varvec{\xi }=(\xi _1,\xi _2,\xi _3)^T\) is solution of the system \({\varvec{A}}\, \varvec{\xi }= (x,y,t)^T\). Therefore, recalling (85) and (75), we can express \(\xi _j\) in terms of \(\varvec{\theta }=(\theta _1,\theta _2,\theta _3)\) as follows, for \(j=1,2,3\),

where \(a\hat{+}b\) denotes \((a + b)\mod 3\), and

We are now able to formulate the following result about the absolutely continuous component of the process (X(t), Y(t), V(t)), which concerns case (iii) considered above. To this aim, we denote by \(f_{D_{j}^{(n)}}\) the p.d.f. of \(D^{(n)}_{j}\), cf. (73). Hence, due to (74), \(f_{D_{j}^{(0)}}\) are delta-Dirac functions, for \(j=1,2,3\). Moreover, we denote by \({\overline{F}}_{D_{j ,k+1}\vert D_j^{(k)}}\) the conditional survival function of \(D_{j,k+1}\) given \(D_j^{(k)}\).
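Returning to the linear system \({\varvec{A}}\, \varvec{\xi }= (x,y,t)^T\) introduced above, the residence times can also be obtained numerically. The sketch below assumes that the velocity vectors carry the speed, i.e. \(\vec {v}_j=(c\cos \theta _j, c\sin \theta _j)\), so that the first two rows of \({\varvec{A}}\) contain the velocity components and the third row is made of ones; the function name `residence_times` is hypothetical.

```python
import numpy as np

def residence_times(x, y, t, thetas, c=1.0):
    """Solve A xi = (x, y, t)^T for xi = (xi_1, xi_2, xi_3).

    Assumed form of A: row 1 = c*cos(theta_j), row 2 = c*sin(theta_j),
    row 3 = ones, so that the rows encode the displacement along x,
    the displacement along y and the total elapsed time.
    """
    th = np.asarray(thetas, dtype=float)
    A = np.vstack((c * np.cos(th), c * np.sin(th), np.ones(3)))
    return np.linalg.solve(A, np.array([x, y, t], dtype=float))

# Illustrative angles; the origin at time t = 3 is used as a sample point.
thetas = (np.pi / 3, np.pi, 5 * np.pi / 3)
xi = residence_times(0.0, 0.0, 3.0, thetas)
```

For these symmetric angles the cosines and the sines each sum to zero, so the origin corresponds to equal residence times \(t/3\) in each direction. A point yields three positive residence times only if it lies inside the region reachable at time t.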

Theorem 4

(Absolutely continuous components) For the stochastic process \(\{(X(t), Y(t), V(t)), t \in {\mathbb {R}}_0^{+}\}\) defined in Sect. 4.1, under the initial condition \(B_1\), the absolutely continuous components of the probability law are expressed as follows, for all \((x,y) \in \mathring{{\mathcal {R}}}(t)\) and \(t \in {\mathbb {R}}^+\):

where \(\xi _j=\xi _j(x,y,t)\), for \(j=1,2,3\), is defined as in Eq. (88), and where \(\det ({\varvec{A}})\) can be recovered from (89).

Proof

By conditioning on the number of direction switches in (0, t), say k, and on the last instant s previous to t in which the particle changes its direction to restart a cycle, we can rewrite Eq. (81) as follows (with \(j=1,2,3\))

where \(T_{3k+j-1}\) is a switching instant occurring at time s such that the particle’s direction becomes \(\vec {v}_j\). Thus, (X(s), Y(s)) is the position of the particle when such a direction change occurs. Therefore, due to Eq. (72), one has

Hence, for \(s=T_{3k+j-1}\) it follows that (X(s), Y(s)) can be expressed as suitable combinations of the sums (73) as

Recalling Eq. (78), the condition \((X(s), Y(s)) \in {\mathcal {R}}(s)\) implies that \(s > t-\xi _j\). Furthermore, using (85) and substituting Eqs. (92) and (93) in Eq. (91), we have

where \(\phi _{j,k}\) is the joint p.d.f. of \((T_{3k+j-1}, X(T_{3k+j-1}), Y(T_{3k+j-1}))\), with

According to (84), we have

Due to the mutual independence of the variables \(\{D_{j,k}; \; k \in {\mathbb {N}}\}_{j=1,2,3}\), we get

due to Eq. (88). Therefore, Eq. (90) is directly obtained replacing (99) in Eq. (94). \(\square \)

Remark 12

In analogy with the one-dimensional case, cf. Remark 1, we note that the term \(\det ({\varvec{A}})\) in the right-hand-side of Eq. (90) can be viewed as the measure of the state-space at time \(t=1\), i.e. \(\mathcal{R}(1)\) (cf. Remark 11).

We point out that the cyclic update condition is regulated by the switching times considered in Eq. (92) and by the particle position expressed in Eq. (93). Since the sequence of directions of the motion is fixed by a non-random rule, those equations can be expressed in a tractable way. By contrast, more complex dynamics involving random choices of directions would introduce an additional element of randomness into those equations, leading to less tractable expressions. For instance, some results concerning the case when the directions are picked randomly with constant intensities are given in Santra et al. [40].

Clearly, the results given in Theorems 3 and 4 for the discrete and absolutely continuous component of the process can be obtained in a similar way also when the initial velocity is \(V(0)=\vec {v}_2\) and \(V(0)=\vec {v}_3\).

4.2 An Analysis of a Special Case

In this section we analyze an instance of the process considered above, under the initial condition \(B_1\).

Assumptions 1

We consider the following conditions:

- (i) The motion is characterized by the three directions led by the following angles:

$$\theta _1=\frac{\pi }{3}, \qquad \theta _2=\pi , \qquad \theta _3=\frac{5}{3}\pi . \tag{100}$$

- (ii) The durations \(D_{j,1}, D_{j,2}, \ldots \) constitute the intertimes of a GCP with intensity \(\lambda _j\in {\mathbb {R}}^+\), where \(D_{j,n}\) is the j-th duration of the motion within the n-th cycle, for \(j=1,2,3\) and \(n\in {\mathbb {N}}\).

In Fig. 3 we show an example of projections onto the state-space of suitable paths of the process \(\{(X(t), Y(t)), t \in {\mathbb {R}}_0^{+}\}\) under the Assumptions 1.

The given assumptions differ from those of similar two-dimensional finite-velocity processes studied in the recent literature. Indeed, apart from the cyclic motions in \({\mathbb {R}}^2\) described in Sect. 1 (see e.g. [10, 30, 31]), Santra et al. [40] considered run-and-tumble particle dynamics regulated by constant switching rates among the possible orientations of the particle, which either take values in a discrete set or are governed by a continuous random variable.

According to (78), condition (i) of Assumptions 1 implies that the particle at every instant \(t \in {\mathbb {R}}^+\) is confined in the triangle

whose vertices are

Figure 4 shows a sample of the set \({\mathcal {R}}(t)\) defined in (101), with directions \(\vec {v}_1\), \(\vec {v}_2\) and \(\vec {v}_3\) led by the angles given in (100). Since the angles (100) satisfy conditions (71), it is ensured that the particle can reach any state, i.e. \({\mathcal {R}}(t)\rightarrow {\mathbb {R}}^2\) as \(t\rightarrow \infty \).

Fig. 4 A sample of the set \({\mathcal {R}}(t)\) with velocities \(\vec {v}_1\), \(\vec {v}_2\), \(\vec {v}_3\) and fixed angles as in (100)
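As a worked check of the region \({\mathcal {R}}(t)\) for the angles (100), the following sketch evaluates its vertices and edges. It assumes that the vertices have the form \(A_j(t)=(ct\cos \theta _j, ct\sin \theta _j)\), in line with the statement that \(\theta _j\) gives the direction of the vertex associated with \(\vec {v}_j\). The edge equations are derived here from that assumption and are not copied from the source formulas.

```latex
\begin{aligned}
A_1(t) &= \Big(\tfrac{ct}{2},\ \tfrac{\sqrt{3}}{2}\,ct\Big), \qquad
A_2(t) = (-ct,\ 0), \qquad
A_3(t) = \Big(\tfrac{ct}{2},\ -\tfrac{\sqrt{3}}{2}\,ct\Big), \\
E_{12}(t):\ & y = \frac{x+ct}{\sqrt{3}}, \qquad
E_{23}(t):\ y = -\frac{x+ct}{\sqrt{3}}, \qquad
E_{13}(t):\ x = \frac{ct}{2}, \\
{\mathcal R}(t) &= \Big\{(x,y)\in {\mathbb R}^2 :\ -ct \le x \le \tfrac{ct}{2},\ |y| \le \tfrac{x+ct}{\sqrt{3}} \Big\}.
\end{aligned}
```

Under this reading \({\mathcal {R}}(t)\) is an equilateral triangle centered at the origin with side \(\sqrt{3}\,ct\), so it grows linearly in t and eventually covers any fixed point of the plane.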

We recall that the sequences \(\{D_{j,k}; \; k \in {\mathbb {N}}\}_{j=1,2,3}\) are mutually independent. Moreover, under condition (ii) of Assumptions 1, for \(j=1,2,3\) the durations \(D_{j,n}\), \(n\in {\mathbb {N}}\), are dependent random variables having marginal p.d.f. (cf. Eq. (11) of [13])

Hereafter we determine the explicit probability laws of the process under the assumptions considered so far, i.e. when the random intertimes of the motion along the possible directions follow three independent GCPs with intensities \(\lambda _1\), \(\lambda _2\) and \(\lambda _3\).
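To give a feeling for condition (ii) of Assumptions 1, the intertimes of a GCP can be simulated as follows. This is a minimal sketch that assumes the representation of the GCP as a mixed Poisson process with exponentially distributed random rate of mean \(\lambda \): given the rate, the intertimes are i.i.d. exponential, whereas unconditionally they are dependent with Pareto-type marginal survival function \(1/(1+\lambda t)\). The function name `gcp_intertimes` and its signature are hypothetical.

```python
import numpy as np

def gcp_intertimes(lam, n, paths, rng):
    """Sample the first n intertimes of `paths` independent GCPs.

    Assumed construction: each path draws a random rate U, exponential
    with mean lam, and then n i.i.d. exponential intertimes with rate U.
    Returns an array of shape (paths, n).
    """
    u = rng.exponential(scale=lam, size=(paths, 1))     # one random rate per path
    return rng.exponential(scale=1.0, size=(paths, n)) / u

rng = np.random.default_rng(0)
d = gcp_intertimes(lam=2.0, n=3, paths=200_000, rng=rng)

# Empirical survival of the first intertime at t = 1/lam, to be compared
# with the value 1/(1 + lam * t) = 1/2 implied by the assumed construction.
surv = (d[:, 0] > 0.5).mean()
```

Under the same assumption, the empirical frequency `surv` estimates the probability that no direction change occurs in \((0,t)\), which is the weight of the discrete component located at the vertex \(A_1(t)\). Three independent calls with intensities \(\lambda _1\), \(\lambda _2\), \(\lambda _3\) would provide the durations along the three directions.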

Theorem 5

Let \(\{(X(t), Y(t), V(t)), t \in {\mathbb {R}}_0^{+}\}\) be the stochastic process defined in Sect. 4.1, under initial condition \(B_1\). If the Assumptions 1 hold, then for all \(t \in {\mathbb {R}}^+\) we have

and

Moreover, for all \((x,y) \in \mathring{{\mathcal {R}}}(t)\), with reference to the region \({{\mathcal {R}}}(t)\) in (101), we have

with

and where the terms \(\xi _j=\xi _j(x,y,t)\), \(j=1,2,3\), are given by

Proof

Recalling Theorem 3, the discrete components of the process are obtained from Eqs. (79) and (80). The absolutely continuous components in Eq. (106) are obtained in closed form as an immediate consequence of Theorem 4 after straightforward but tedious calculations. The right-hand-side of Eq. (90) is made explicit by using the finite sum of the resulting series as seen in Eq. (29) of Theorem 1. \(\square \)

We remark that the values of \(\xi _j\) in (108) are obtained directly from Eq. (88) given the angles in (100). Moreover, due to (83), under the assumptions of Theorem 5 the p.d.f. \(p_{1}(x,y,t)\) can be immediately obtained from Eq. (106). Similarly to the one-dimensional case treated in Corollary 5, we are now able to study the asymptotic behaviour of the p.d.f. of the particle location defined in (82) when the intensities \(\lambda _j\) tend to infinity.
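The dependence of the residence times on the angles (100) can be worked out explicitly. The sketch below assumes, as in the system \({\varvec{A}}\, \varvec{\xi }= (x,y,t)^T\), that the displacement along each direction equals c times the residence time multiplied by \((\cos \theta _j, \sin \theta _j)\). It is a direct solution of that system and is offered as a consistency check rather than as a transcription of the source formulas.

```latex
\begin{aligned}
x &= c\Big(\tfrac{1}{2}\,\xi_1 - \xi_2 + \tfrac{1}{2}\,\xi_3\Big), \qquad
y = \tfrac{\sqrt{3}}{2}\,c\,(\xi_1 - \xi_3), \qquad
t = \xi_1 + \xi_2 + \xi_3, \\
\xi_1 &= \frac{ct + x + \sqrt{3}\,y}{3c}, \qquad
\xi_2 = \frac{ct - 2x}{3c}, \qquad
\xi_3 = \frac{ct + x - \sqrt{3}\,y}{3c}.
\end{aligned}
```

The three values sum to t, and they are all positive exactly when \(x<ct/2\) and \(|y|<(x+ct)/\sqrt{3}\), that is, when (x, y) lies in the interior of the triangle.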

Corollary 6

Under the assumptions of Theorem 5, for \(t\in {\mathbb {R}}^+\) and \((x,y) \in \mathring{{\mathcal {R}}}(t)\) one has

where \(\eta (x,y,t)\) is the following p.d.f.

with \(\xi _j\)’s introduced in (108).

Figure 5 shows a plot of the limiting three-peaked density obtained in Corollary 6.

The results expressed so far in this section for \(V(0)=\vec {v}_1\) can be extended to the cases \(V(0)=\vec {v}_2\) and \(V(0)=\vec {v}_3\) by adopting a similar procedure.

5 Conclusions

This paper has been centered on the analysis of telegraph processes in one and two dimensions, with cyclically alternating directions, when the changes of direction follow a GCP. The presence of intertimes possessing Pareto-type distributions yields results that are quite different from those concerning the classical telegraph process with exponential intertimes.

In view of potential applications in engineering, financial and actuarial sciences, we note that possible future developments can be oriented to

- the study of first-passage-time problems for the considered processes,
- the extension of the stochastic model to the case of a non-minimal number of directions, along the research line exploited by Lachal [22],
- the analysis of other dynamics governed by differently distributed inter-arrival times and more general counting processes, even non-homogeneous processes as treated, for instance, in Yakovlev et al. [44].

Further research areas in which finite-velocity random motions can be applied include (i) mathematical geology, for the description of alternating trends in volcanic areas, (ii) mathematical biology, for the modelling of the random motions of microorganisms, and (iii) mathematical physics, for the construction of simple stochastic models of vorticity motions in two or more dimensions.

Data Availability

All data generated or analysed during this study are included in this published article.

References

Albrecht, P.: Mixed Poisson processes. In: Kotz, S., Johnson, N.L., Read, C.B. (eds.) Encyclopedia of Statistical Sciences. Wiley, New York (1985)

Beghin, L., Nieddu, L., Orsingher, E.: Probabilistic analysis of the telegrapher’s process with drift by means of relativistic transformations. J. Appl. Math. Stoch. Anal. 14(1), 11–25 (2001)

Cha, J.H., Finkelstein, M.: A note on the class of geometric counting processes. Probab. Eng. Inform. Sci. 27(2), 177–185 (2013)

Cinque, F., Orsingher, E.: On the exact distributions of the maximum of the asymmetric telegraph process. Stoch. Process. Appl. 142, 601–633 (2021)

Cinque, F., Orsingher, E.: Stochastic dynamics of generalized planar random motions with orthogonal directions. arXiv:2108.10027 (2021)

Crimaldi, I., Di Crescenzo, A., Iuliano, A., Martinucci, B.: A generalized telegraph process with velocity driven by random trials. Adv. Appl. Probab. 45(4), 1111–1136 (2013)

De Gregorio, A.: On random flights with non-uniformly distributed directions. J. Stat. Phys. 147, 382–411 (2012)

De Gregorio, A., Iafrate, F.: Telegraph random evolutions on a circle. Stoch. Process. Appl. 141, 79–108 (2021)

Dhar, A., Kundu, A., Majumdar, S.N., Sabhapandit, S., Schehr, G.: Run-and-tumble particle in one-dimensional confining potentials: steady-state, relaxation, and first-passage properties. Phys. Rev. E 99(3), 032132 (2019)

Di Crescenzo, A.: Exact transient analysis of a planar random motion with three directions. Stoch. Stoch. Rep. 72(3–4), 175–189 (2002)

Di Crescenzo, A., Martinucci, B.: A damped telegraph random process with logistic stationary distribution. J. Appl. Probab. 47(1), 84–96 (2010)

Di Crescenzo, A., Pellerey, F.: On prices’ evolutions based on geometric telegrapher’s process. Appl. Stoch. Models Bus. Ind. 18(2), 171–184 (2002)

Di Crescenzo, A., Pellerey, F.: Some results and applications of geometric counting processes. Methodol. Comput. Appl. Probab. 21(1), 203–233 (2019)

Fedotov, S., Han, D., Ivanov, A.O., da Silva, M.A.A.: Superdiffusion in self-reinforcing run-and-tumble model with rests. Phys. Rev. E 105, 014126 (2022)

Garcia, R., Moss, F., Nihongi, A., Strickler, J.R., Göller, S., Erdmann, U., Schimansky-Geier, L., Sokolov, I.M.: Optimal foraging by zooplankton within patches: the case of Daphnia. Math. Biosci. 207(2), 165–188 (2007)

Goldstein, S.: On diffusion by discontinuous movements, and on the telegraph equation. Q. J. Mech. Appl. Math. 4(2), 129–156 (1951)

Hu, C., Elbroch, M., Meyer, T., Pozdnyakov, V., Yan, J.: Moving-resting process with measurement error in animal movement modeling. Methods Ecol. Evolut. 12(11), 2221–2233 (2021)

Iacus, S.M.: Statistical analysis of the inhomogeneous telegrapher’s process. Stat. Probab. Lett. 55(1), 83–88 (2001)

Kac, M.: A stochastic model related to the telegrapher’s equation. Rocky Mountain J. Math. 4(3), 497–509 (1974)

Kolesnik, A.D., Ratanov, N.: Telegraph Processes and Option Pricing, vol. 204, (2013)

Kolesnik, A.D., Turbin, A.F.: The equation of symmetric Markovian random evolution in a plane. Stoch. Process. Appl. 75(1), 67–87 (1998)

Lachal, A.: Cyclic random motions in \(\mathbb{R}^d\)-space with \(n\) directions. ESAIM 10, 277–316 (2006)

Lachal, A., Leorato, S., Orsingher, E.: Minimal cyclic random motion in \(\mathbb{R} ^n\) and hyper-Bessel functions. Ann. l’IHP Probab. Stat. 42(6), 753–772 (2006)

Leorato, S., Orsingher, E., Scavino, M.: An alternating motion with stops and the related planar, cyclic motion with four directions. Adv. Appl. Probab. 35(4), 1153–1168 (2003)

López, O., Ratanov, N.: Option pricing driven by a telegraph process with random jumps. J. Appl. Probab. 49(3), 838–849 (2012)

Malakar, K., Jemseena, V., Kundu, A., Kumar, K.V., Sabhapandit, S., Majumdar, S.N., Redner, S., Dhar, A.: Steady state, relaxation and first-passage properties of a run-and-tumble particle in one-dimension. J. Stat. Mech. 2018(4), 043215 (2018)

Martinucci, B., Meoli, A.: Certain functionals of squared telegraph processes. Stoch. Dyn. 20(1), 2050005 (2020)

Martinucci, B., Meoli, A., Zacks, S.: Some results on the telegraph process driven by gamma components. Adv. Appl. Probab., online first, (2022). https://doi.org/10.1017/apr.2021.54

Orsingher, E.: Probability law, flow function, maximum distribution of wave-governed random motions and their connections with Kirchoff’s laws. Stoch. Process. Appl. 34(1), 49–66 (1990)

Orsingher, E.: Exact joint distribution in a model of planar random motion. Stoch. Stoch. Rep. 69(1–2), 1–10 (2000)

Orsingher, E.: Bessel functions of third order and the distribution of cyclic planar motions with three directions. Stoch. Stoch. Rep. 74(3–4), 617–631 (2002)

Orsingher, E., Garra, R., Zeifman, A.I.: Cyclic random motions with orthogonal directions. Markov Process. Relat. Fields 26(3), 381–402 (2020)

Pogorui, A.A., Rodríguez-Dagnino, R.M.: Isotropic random motion at finite speed with \(K\)-Erlang distributed direction alternations. J. Stat. Phys. 145, 102–112 (2011)

Pogorui, A.A., Rodríguez-Dagnino, R.M.: Random motion with uniformly distributed directions and random velocity. J. Stat. Phys. 147, 1216–1225 (2012)

Pogorui, A.A., Rodríguez-Dagnino, R.M.: Goldstein-Kac telegraph equations and random flights in higher dimensions. Appl. Math. Comput. 361, 617–629 (2019)

Pogorui, A.A., Swishchuk, A., Rodríguez-Dagnino, R.M.: Transformations of telegraph processes and their financial applications. Risks 9(8), 147 (2021)

Ratanov, N.: Ornstein–Uhlenbeck processes of bounded variation. Methodol. Comput. Appl. Probab. 23, 925–946 (2021)

Ratanov, N., Di Crescenzo, A., Martinucci, B.: Piecewise deterministic processes following two alternating patterns. J. Appl. Probab. 56(4), 1006–1019 (2019)

Rolski, T., Schmidli, H., Schmidt, V., Teugels, J.L.: Stochastic Processes for Insurance and Finance. Wiley, New York (2009)

Santra, I., Basu, U., Sabhapandit, S.: Run-and-tumble particles in two dimensions: marginal position distributions. Phys. Rev. E 101(6), 062120 (2020)

Shcherbakov, R., Yakovlev, G., Turcotte, D.L., Rundle, J.B.: Model for the distribution of aftershock interoccurrence times. Phys. Rev. Lett. 95(21), 218501 (2005)

Swishchuk, A., Pogorui, A., Rodriguez-Dagnino, R.M.: Random Motions in Markov and Semi-Markov Random Environments 2: High-dimensional Random Motions and Financial Applications. Wiley, New York (2020)

Travaglino, F., Di Crescenzo, A., Martinucci, B., Scarpa, R.: A new model of Campi Flegrei inflation and deflation episodes based on Brownian motion driven by the telegraph process. Math. Geosci. 50(8), 961–975 (2018)

Yakovlev, G., Rundle, J.B., Shcherbakov, R., Turcotte, D.L.: Inter-arrival time distribution for the non-homogeneous Poisson process. arXiv:cond-mat/0507657 (2005)

Acknowledgements

The authors are members of the group GNCS of INdAM (Istituto Nazionale di Alta Matematica). This work is partially supported by MIUR–PRIN 2017, Project “Stochastic Models for Complex Systems” (no. 2017JFFHSH) and by INdAM-GNCS (project “Modelli di shock basati sul processo di conteggio geometrico e applicazioni alla sopravvivenza” (CUP E55F22000270001)).

Ethics declarations

Conflict of interest

All authors contributed equally to this paper. The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Communicated by Abhishek Dhar.

About this article

Cite this article

Di Crescenzo, A., Iuliano, A. & Mustaro, V. On Some Finite-Velocity Random Motions Driven by the Geometric Counting Process. J Stat Phys 190, 44 (2023). https://doi.org/10.1007/s10955-022-03045-8

DOI: https://doi.org/10.1007/s10955-022-03045-8