Abstract

We study a model of random colliding particles interacting with an infinite reservoir at fixed temperature and chemical potential. Interaction between the particles is modeled via a Kac master equation (in: Kac, Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability, 1954–1955, University of California Press, Berkeley and Los Angeles, 1956). Moreover, particles can leave the system toward the reservoir or enter the system from the reservoir. The system admits a unique steady state given by the Grand Canonical Ensemble at temperature \(T=\beta ^{-1}\) and chemical potential \(\chi \). We show that any initial state converges exponentially fast to equilibrium by computing the spectral gap of the generator in a suitable \(L^2\) space and by showing exponential decrease of the relative entropy with respect to the steady state. We also show propagation of chaos and thus the validity of a Boltzmann-Kac type equation for the particle density in the infinite system limit.


1 Introduction

In 1955, Mark Kac [14] introduced a simple model to study the evolution of a dilute gas of N particles with unit mass undergoing pairwise collisions. Instead of following the deterministic evolution of the particles until a collision takes place, he considered particles that collide at random times, with every particle undergoing, on average, a given number of collisions per unit time. Moreover, when a collision takes place, the energy of the two particles is randomly redistributed between them. In such a situation, one can neglect the positions of the particles and focus on their velocities. To obtain a model as simple as possible, he considered particles that move in one spatial dimension. This leads to an evolution governed by a master equation for the probability distribution \(f(\underline{v}_N)\), where \(\underline{v}_N\in {\mathbb {R}}^N\) are the velocities of the particles. Since collisions preserve the kinetic energy of the system, to obtain ergodicity one has to restrict the evolution to \(\underline{v}_N\in {\mathbb {S}}^{N-1}(\sqrt{2eN})\), that is, to the surface of constant kinetic energy, with e the kinetic energy per particle. To further simplify the model, he neglected the dependence of a particle’s collision rate on its speed, a situation sometimes referred to as Maxwellian particles. In this setting, the dynamical properties of the evolution do not depend on e and it is thus natural to set \(e=1/2\), see [14,15,16] for more details.

The study of the Kac master equation has been very useful to clarify and investigate notions and conjectures arising from the kinetic theory of dilute gases. We refer the reader to Kac’s original works [14] and [15] for extensive discussion.

Kac’s master equation also provides a natural setting to study approach to equilibrium. In the case of the standard Kac model [14], equilibrium is represented by the uniform distribution on the surface of given kinetic energy. Kac conjectured that the spectral gap of the generator in \(L^2\) is bounded below uniformly in N; this was established in [13], and the gap was explicitly computed in [5].

A more natural way to define approach to equilibrium is via the relative entropy. This provides a better setting since the relative entropy, in general, grows only linearly with the number of particles. There is no result of exponential decay of relative entropy with a rate that is uniform in N for the original Kac model. Moreover, estimates of the entropy production rate seem to point to a slow decay, at least for short times, see [7, 19].

In [4], the authors studied the evolution of a dilute gas of N particles brought to equilibrium via a Maxwellian thermostat, i.e. an infinite heat reservoir at fixed temperature \(T=\beta ^{-1}\). The velocities of the particles in the system evolve according to the standard Kac collision process described above. On top of this, particles in the system collide with particles in the thermostat at randomly distributed times. In this way, the system and the reservoir exchange energy, but there is no exchange of particles. In particular, the kinetic energy of the system is no longer preserved. They proved that the system admits the Canonical Ensemble as its unique steady state, i.e. in the steady state the probability distribution \(f(\underline{v}_N)\) is the Maxwellian distribution at temperature T. Moreover, the steady state is approached exponentially fast and uniformly in N, both in the sense of the spectral gap, in a suitable \(L^2\) space, and in the sense of the relative entropy. In both cases, the rate of approach is determined by the interaction with the thermostat while the rate of collision between particles in the system appears only in the second spectral gap. They also adapted McKean’s proof [16] of propagation of chaos and obtained a Boltzmann-Kac type effective equation for the evolution of the one particle marginal in the limit \(N\rightarrow \infty \).

In the present work, we study a different way to bring the system to equilibrium. As in [4], we study a system of N particles evolving through pair collisions and interacting with an infinite reservoir at given temperature T; however, the system and the reservoir are now allowed to exchange particles. The evolution of the velocities of the particles in the system is again described by a standard Kac collision process. On top of this, at random times a particle in the system can leave it while, also at random times, a particle can enter the system from the reservoir with its velocity distributed according to the Maxwellian at temperature T. Since the reservoir is infinite, no particle can enter or leave the system more than once. Clearly, in this new setting, neither the energy nor the number of particles is preserved. We show that this new evolution admits the Grand Canonical Ensemble as its unique steady state. This means that, in the steady state, the probability that the system contains N particles is given by a Poisson distribution while the probability distribution of the velocities, given the number of particles, is the Maxwellian at temperature T.

We also study the approach to equilibrium in a suitable \(L^2\) space and in relative entropy. In both cases, we show that the rate of approach is uniform in the average number of particles. As in [4], the approach to equilibrium, both in \(L^2\) and in relative entropy, is driven by the thermostat alone while the second spectral gap depends on the rate of binary particle collisions. Finally, we look at the emergence of an effective evolution for the particle density in the limit of a large system, that is when the average number of particles goes to infinity. This requires some adaptation of the concept of propagation of chaos since the number of particles in the system is not constant. Adapting the proof in [16], we show that the relative particle density, defined in (19) and (22) below, satisfies a Boltzmann-Kac type of equation.

The rest of the paper is organized as follows. In Sect. 2, we present the model and state our main results. Section 3 contains the proofs of our main results, while in Sect. 4 we report some open problems and present possible areas of future work. Finally, the appendix contains the proofs of some technical lemmas used in Sect. 3.

2 Model and Results

Since we want to describe a dilute gas with uniform density exchanging particles with an infinite reservoir, it is natural to assume that, in a given time, each particle in the system has the same probability of leaving it, independently of the total number N of particles in the system. This implies that the flow of particles from the system to the reservoir is proportional to N. On the other hand, the probability of a particle entering the system from the reservoir depends only on the characteristics of the reservoir, and not on N, so that the flow of particles into the system is independent of N. Finally, since the gas is dilute, the probability that two given particles in the system collide in a given time does not depend on the total number of particles in the system. Thus we expect the number of binary collisions in the system, in a given time, to be proportional to \(\left( {\begin{array}{c}N\\ 2\end{array}}\right) \). These are the main heuristic considerations that lead to the formulation of our model, introduced formally below.

We consider a system of particles in one space dimension interacting with an infinite reservoir with which it exchanges particles. Since the number of particles in the system is not constant, the phase space is given by \({\mathcal {R}}=\bigcup _{N=0}^\infty {\mathbb {R}}^N\), where \({\mathbb {R}}^0=\{\emptyset \}\) represents the state where no particle is in the system.

The evolution of the system is governed by three separate random processes. First, at exponentially distributed times a particle is added to the system with a velocity randomly chosen from a Maxwellian distribution at temperature T. To simplify notation we choose \(T^{-1}=2\pi \). Second, also at exponentially distributed times, a particle is chosen at random to exit the system and disappear forever with no chance of reentry. Finally, a pair of particles in the system is selected at random to undergo a standard Kac collision.

More precisely, let \(L^1_s({{\mathcal {R}}})=\bigoplus _{N=0}^\infty L^1_s({\mathbb {R}}^N)\) be the Banach space of all states \(\mathbf {f}=(f_N)_{N=0}^\infty \), with \(f_N(\underline{v}_N)\) symmetric under permutation of the \(v_i\), defined by the norm \(\Vert \mathbf {f}\Vert _1:=\sum _N\Vert f_N\Vert _{1,N}\), where \(\Vert f_N\Vert _{1,N}=\int d\underline{v}_N|f_N(\underline{v}_N)|\). We say that \(\mathbf {f}\) is positive if \(f_N(\underline{v}_N)\ge 0\) for every N and almost every \(\underline{v}_N\). If \(\mathbf {f}\) is positive and \(\Vert \mathbf {f}\Vert _1=1\) then \(\mathbf {f}\) is a probability distribution on \({\mathcal {R}}\). In this case, for \(N>0\), \(f_N(\underline{v}_N)\) represents the probability of finding N particles in the system with velocities \({\underline{v}}_N=(v_1,\dots ,v_N)\) while \(f_0\in {\mathbb {R}}\) is the probability that the system contains no particles.

The master equation for the evolution is given by

where \({\mathcal {I}}\) is the in operator that represents the effect of introducing a particle into the system and, after symmetrization, is given by

while \({\mathcal {O}}\) is the out operator that represents the effect of a random particle leaving the system

and

Observe that, due to the symmetry of \(f_{N+1}\), we can write

We also define the thermostat operator \({\mathcal {T}}\) as

These definitions imply that, in every time interval dt, there is a probability \(\mu dt\) of a particle being added to the system. This probability is independent of the number of particles already in the system. In the same time interval, every particle in the system has a probability \(\rho dt\) of leaving the system, which is, again, independent of the number of particles in the system. Thus, as discussed at the beginning of this section, the outflow of particles is proportional to N while the inflow does not depend on N.

Finally, \({\mathcal {K}}\) represents the effect of the collisions among particles. It acts independently on each N-particle subspace, that is, \(({\mathcal {K}}\mathbf {f})_N=K_N f_N\) with

where \(R_{i,j}\) represents the effect of a collision between particles i and j:

that is, \(R_{i,j}f_N\) is the average of \(f_N\) over all rotations in the plane \((v_i,v_j)\). In this way, the probability that two given particles undergo a collision in an interval dt is proportional to \(\tilde{\lambda }\) and does not depend on the number of particles in the system.
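As a minimal numerical sketch (not the authors' code; the function names are hypothetical), \(R_{i,j}\) can be approximated by averaging over a uniform grid of rotation angles in the \((v_i,v_j)\) plane, while a single random collision rotates the pair by a uniformly distributed angle. For a pair with \(v_i^2+v_j^2=e\), the checks below confirm that the pair energy is unchanged, that \(R_{i,j}v_i^2=e/2\) and that \(R_{i,j}v_i^4=3e^2/8\).

```python
import math
import random


def rotation_average(f, vi, vj, n_angles=512):
    """Approximate (R_{i,j} f)(vi, vj): the mean of f over all rotations of
    the pair (vi, vj). A uniform angle grid integrates low-degree
    trigonometric polynomials exactly, up to rounding."""
    total = 0.0
    for k in range(n_angles):
        theta = 2.0 * math.pi * k / n_angles
        c, s = math.cos(theta), math.sin(theta)
        total += f(c * vi - s * vj, s * vi + c * vj)
    return total / n_angles


def kac_collision(v, i, j, rng=None):
    """One random Kac collision: rotate (v[i], v[j]) in place by a uniform angle."""
    if rng is None:
        rng = random.Random()
    theta = rng.uniform(0.0, 2.0 * math.pi)
    c, s = math.cos(theta), math.sin(theta)
    # The right-hand side is evaluated with the old values before assignment.
    v[i], v[j] = c * v[i] - s * v[j], s * v[i] + c * v[j]
```

Since \(R_{i,j}\) averages over rotations, any function of \(v_i^2+v_j^2\) alone is left invariant, which is why quadratic energy-like quantities drop out of the collision dynamics.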

Since \({\mathcal {L}}\) is a sum of unbounded operators that do not commute, we first need to show that (1) defines an evolution on \(L^1_s({\mathcal {R}})\) and that such an evolution preserves probability distributions. Observe that, although \({\mathcal {L}}\) is unbounded, the operator \({\mathcal {L}}_N\), defined by \({\mathcal {L}}_N\mathbf {f}:=({\mathcal {L}}\mathbf {f})_N\), is bounded as an operator from \(L_s^1({\mathcal {R}})\) to \(L^1_s({\mathbb {R}}^N)\) with \(\Vert {\mathcal {L}}_N\Vert _{1,N}\le 2\mu +(2N+1)\rho +\tilde{\lambda } N^2\). Thus we will take \(D^1=\{\mathbf {f}\;|\,\sum _N N^2\Vert f_N\Vert _{1,N}<\infty \}\) as the domain of \({\mathcal {L}}\). It is easy to see that \(D^1\) is dense in \(L_s^1({\mathcal {R}})\).

In Sect. 3.1 we will build a semigroup of continuous operators \(e^{t{\mathcal {L}}}\) that solves (1) for initial data \(\mathbf {f}\in D^1\) and show that \(e^{t{\mathcal {L}}}\) preserves probability distributions.

Lemma 1

There exists a semigroup of continuous operators \(e^{t{\mathcal {L}}}\) such that if \(\mathbf {f}\in D^1\) then \(\mathbf {f}(t)=e^{t{\mathcal {L}}}\mathbf {f}\) solves (1). For every \(\mathbf {f}\in L^1_s({\mathcal {R}})\) we have

Moreover, if \(\mathbf {f}\) is positive then so is \(e^{t{\mathcal {L}}}\mathbf {f}\) and \(\Vert e^{t{\mathcal {L}}}\mathbf {f}\Vert _1=\Vert \mathbf {f}\Vert _1\). Thus (1) generates an evolution that preserves probability distributions.

Proof

See Sect. 3.1. \(\square \)

It is not hard to see that the evolution generated by (1) admits the steady state \(\varvec{\varGamma }\) given by

where \(\gamma _N(\underline{v}_N)=\prod _{i=1}^N\gamma (v_i)\), with \(\gamma (v)=e^{-\pi v^2}\), is the Maxwellian distribution with \(\beta =2\pi \) in dimension N while \(a_N=\left( \frac{\mu }{\rho }\right) ^N\frac{e^{-\frac{\mu }{\rho }}}{N!}\) is a Poisson distribution on \({\mathbb {N}}\). We observe that \(\varvec{\varGamma }\) is a Grand Canonical Ensemble with temperature \(T=\beta ^{-1}=1/2\pi \), chemical potential \(\chi =(2\pi )^{-1} \log (\rho /\mu )\), and average number of particles \(\langle {\mathcal {N}}\varvec{\varGamma }\rangle =\mu /\rho \) where

In Sect. 3.1 we show that \(\varvec{\varGamma }\) is the unique steady state of the evolution generated by (1). Finally, from a physical point of view, it is natural to consider only initial states with finite average number of particles and average kinetic energy, that is probability distributions \(\mathbf {f}\) such that

Since the Kac collision operator \({\mathcal {K}}\) preserves energy and number of particles, we can derive autonomous equations for the evolutions of \(N(t)=\langle {\mathcal {N}}\mathbf {f}(t)\rangle \) and \(E(t)=\langle {\mathcal {E}} \mathbf {f}(t)\rangle \). Indeed, if \(\mathbf {f}\) is a probability distribution, we obtain

so that, if (8) holds at time \(t=0\) it holds for every time \(t>0\). See Sect. 3.1 for a derivation of these equations. Letting \(e(t)=E(t)/N(t)\), we get

Equation (10) resembles Newton’s law of cooling for a system like ours. Nevertheless, e(t) is not the natural definition of temperature since it is a ratio of averages rather than the average kinetic energy per particle. A more interesting quantity is \(\tilde{e}(t)=\langle v_1^2\mathbf {f}\rangle \), but we were not able to obtain a closed form expression for its evolution.
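For intuition, the following sketch uses our own reading of the rates rather than a transcription of (9) and (10): particles enter at rate \(\mu \) with mean kinetic energy \(1/(4\pi )\) (the second moment of \(\gamma \) is \(1/(2\pi )\), and we assume kinetic energy \(v^2/2\) with unit mass), each particle leaves at rate \(\rho \) taking its energy with it, and collisions conserve energy. This gives \(\dot{N}=\mu -\rho N\) and \(\dot{E}=\mu /(4\pi )-\rho E\), hence \(\dot{e}=\frac{\mu }{N(t)}\left( \frac{1}{4\pi }-e\right) \), a cooling law with the time-dependent rate \(\mu /N(t)\). The function names are hypothetical.

```python
import math


def moments(t, n0, energy0, mu, rho):
    """Closed-form solution of dN/dt = mu - rho N and dE/dt = mu/(4 pi) - rho E.

    Assumption: E is the mean of sum_i v_i^2 / 2 (unit mass), so a particle
    drawn from gamma(v) = exp(-pi v^2) carries mean energy 1/(4 pi).
    """
    decay = math.exp(-rho * t)
    n_inf = mu / rho                         # steady-state mean particle number
    e_inf = mu / (4.0 * math.pi * rho)       # steady-state mean total energy
    return n_inf + (n0 - n_inf) * decay, e_inf + (energy0 - e_inf) * decay


def energy_per_particle(t, n0, energy0, mu, rho):
    """e(t) = E(t) / N(t)."""
    n, energy = moments(t, n0, energy0, mu, rho)
    return energy / n
```

A central finite difference of \(e(t)\) can then be compared with the right-hand side of the cooling law; for large t, \(e(t)\) approaches \(1/(4\pi )\) under the stated energy convention.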

As discussed in the introduction, we are interested in properties that are uniform in the average number of particles in the steady state \(\langle {\mathcal {N}}\varvec{\varGamma }\rangle =\mu /\rho \) and eventually we want to consider the situation where the average number of particles goes to infinity, that is \(\mu /\rho \rightarrow \infty \). A classical way to take such a limit is to require that the collision rate between particles decreases as the average number of particles increases, in such a way that the average number of collisions a given particle undergoes in a given time is independent of \(\mu /\rho \), at least when \(\mu /\rho \) is large. This is achieved by setting

Observe that in this way, the scaling in N of \(K_N\) in (5) differs from the scaling in the standard Kac model. Nevertheless, both can be thought of as implementations of the Grad-Boltzmann limit in the two different situations, see [11].

One way to study the approach of an initial state \(\mathbf {f}\) toward \(\varvec{\varGamma }\) is by computing the spectral gap of \({\mathcal {L}}\). Since \({\mathcal {L}}\) is not self adjoint on \(L^2_s({\mathcal {R}})\) we perform a ground state transformation setting

We will express (11) as \(\mathbf {f}=\varvec{\varGamma } \mathbf {h}\). Inserting the above definition in (1) we get

where we have set

In this representation, the steady state is given by the vector \(\mathbf {e}^0\) such that \((\mathbf {e}^0)_N\equiv 1\) for every N. Thus \(\widetilde{{\mathcal {L}}}\) is an unbounded operator on the Hilbert space

of all states \(\mathbf {h}=(h_0,h_1,h_2,\ldots )\) with \(h_N(\underline{v}_N)\) symmetric under permutations of the \(v_i\) and defined by the scalar product

As for \({\mathcal {L}}\), defining \(\widetilde{{\mathcal {L}}}_N\mathbf {h}=(\widetilde{{\mathcal {L}}}\mathbf {h})_N\) we get a bounded operator from \(L^2_s({\mathcal {R}},\varvec{\varGamma })\) to \(L^2_s({\mathbb {R}}^N,\gamma _N(\underline{v}_N))\) so that, calling \(\Vert h_N\Vert _{2,N}=(h_{N},h_{N})_N\), we can take

as the domain of \(\widetilde{{\mathcal {L}}}\). The following Theorem shows that \(\widetilde{{\mathcal {L}}}\) defines an evolution on \(L^2_s({\mathcal {R}},\varvec{\varGamma })\).

Theorem 2

The generator \(\widetilde{{\mathcal {L}}}\) is self adjoint and non-positive definite on \(L^2_s({\mathcal {R}},\varvec{\varGamma })\). Furthermore, if we define

where \(\Vert \mathbf {h}\Vert _2=(\mathbf {h},\mathbf {h})\) and \(\mathbf {E}_0=\mathrm {span}\{\mathbf {e}^0\}\), we get

Moreover \(\varDelta \) is an eigenvalue and the associated eigenspace is \(\mathbf {E}_1=\mathrm {span}\{\mathbf {e}_1,\mathbf {e}_{(0,0,1)}\}\) with \(\mathbf {e}_1=\sqrt{\frac{\rho }{\mu }}{\mathcal {P}}^+\mathbf {e}^0-\sqrt{\frac{\mu }{\rho }}\mathbf {e}^0\) while

Proof

See Sect. 3.2. \(\square \)

Due to the invariance of even, second degree polynomials under the Kac collision operator \({\mathcal {K}}\), Theorem 2 shows that the spectral gap of the generator \(\widetilde{{\mathcal {L}}}\) is completely determined by the presence of the reservoir. This is not surprising since all states \(\mathbf {h}\) such that \(h_N\) is rotationally invariant for every N are in the null space of \({\mathcal {K}}\).
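To see concretely how the reservoir alone sets a relaxation rate, one can restrict to states whose components \(h_N\) do not depend on the velocities. Such \(h_N\) are rotationally invariant, so \({\mathcal {K}}\) annihilates them. By our own computation from the in and out rates (assuming \(({\mathcal {P}}^+\mathbf {e}^0)_N=N\)), \(\widetilde{{\mathcal {L}}}\) then acts as \((\widetilde{{\mathcal {L}}}\mathbf {h})_N=\mu (h_{N+1}-h_N)+\rho N(h_{N-1}-h_N)\). The sketch below (helper names hypothetical) checks that \((\mathbf {e}_1)_N=\sqrt{\rho /\mu }\,N-\sqrt{\mu /\rho }\) is normalized, orthogonal to \(\mathbf {e}^0\), and satisfies \(\widetilde{{\mathcal {L}}}\mathbf {e}_1=-\rho \mathbf {e}_1\), with no dependence on \(\tilde{\lambda }\).

```python
import math


def count_weights(m, n_max):
    """Poisson weights a_N with mean m, for N = 0..n_max."""
    a = [math.exp(-m)]
    for n in range(1, n_max + 1):
        a.append(a[-1] * m / n)
    return a


def generator_on_counts(h, mu, rho):
    """(L h)_N = mu (h_{N+1} - h_N) + rho N (h_{N-1} - h_N), for N = 0..len(h)-2.

    Acts on velocity-independent states only, where the collision part
    vanishes. The last entry of h is used only as h_{N+1}.
    """
    out = []
    for n in range(len(h) - 1):
        down = h[n - 1] if n > 0 else 0.0  # multiplied by rho * 0 when n == 0
        out.append(mu * (h[n + 1] - h[n]) + rho * n * (down - h[n]))
    return out
```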

As in [4], to see the effect of the Kac collision operator \({\mathcal {K}}\), we have to look at the second gap, defined as

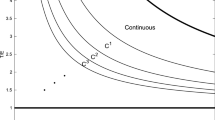

Theorem 3

If

we have

Moreover \(\varDelta _2\) is an eigenvalue and the associated eigenspace is contained in the space of all states \(\mathbf {h}\) such that \(h_N\) is an even, fourth degree polynomial.

Proof

See Sect. 3.3. \(\square \)

Since \(\mu /\rho \) is the average number of particles in the steady state, the conditions in (13) are not too restrictive.

It is possible to see that, as in the case of the standard Kac evolution, the \(L^2\) norm discussed above does not scale well with the average number of particles in the system and thus it is not a good measure of distance from the steady state if \(\mu /\rho \) is large. A better measure is the entropy of a probability distribution \(\mathbf {f}\) relative to the steady state \(\varvec{\varGamma }\) defined as

where, as before, \(\mathbf {f}=\varvec{\varGamma }\mathbf {h}\) and \(a_N\) and \(\gamma _N\) are defined in (11).

As usual, it is easy to show using convexity that \({\mathcal {S}}(\mathbf {f}\,|\,\varvec{\varGamma })\ge 0\) and that \({\mathcal {S}}(\mathbf {f}\,|\,\varvec{\varGamma })=0\) if and only if \(\mathbf {f}=\varvec{\varGamma }\). Moreover, from Lemma 1 and convexity, it follows that \({\mathcal {S}}(\mathbf {f}(t)\,|\,\varvec{\varGamma })\le {\mathcal {S}}(\mathbf {f}\,|\,\varvec{\varGamma })\) where \(\mathbf {f}(t)=e^{t{\mathcal {L}}}\mathbf {f}\). In Sect. 3.4, we show that, thanks to the presence of the reservoir, the entropy production rate is strictly negative. More precisely, assuming that \(\mathbf {f}=\varvec{\varGamma } \mathbf {h}\in D^1\) and \(\varvec{\varGamma } \mathbf {h}\log \mathbf {h}\in D^1\) we essentially obtain that

See Lemmas 19 and 20 in Sect. 3.4 below for a precise statement. From (14) we obtain the following Theorem.

Theorem 4

If \(\mathbf {f}=\mathbf {h}\varvec{\varGamma }\in D^1\) is a probability distribution such that \(\varvec{\varGamma }\mathbf {h}\log \mathbf {h}\in D^1\) then

Proof

See Sect. 3.4. \(\square \)

As in the case of Theorem 2, convergence to equilibrium in entropy is completely dominated by the presence of the thermostat, that is, Theorem 4 remains valid in the case \(\tilde{\lambda }=0\) where there is no collision among the particles.
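As an illustration of the relative entropy, consider a product state \(f_N=e^{-\eta }\frac{\eta ^N}{N!}\prod _{i=1}^N g(v_i)\) (our reading of the form (16) introduced below) and assume \({\mathcal {S}}(\mathbf {f}\,|\,\varvec{\varGamma })=\sum _N\int f_N\log (f_N/\varGamma _N)\,d\underline{v}_N\). Then \({\mathcal {S}}\) splits into a particle-number part, the relative entropy of a Poisson distribution with mean \(\eta \) with respect to one with mean \(m=\mu /\rho \), equal to \(\eta \log (\eta /m)-\eta +m\), plus \(\eta \) times the one-particle relative entropy of g with respect to \(\gamma \). For a centered Gaussian g with variance \(s^2\), the latter is \(\frac{1}{2}\log \frac{1}{2\pi s^2}+\pi s^2-\frac{1}{2}\), since \(\gamma \) has variance \(1/(2\pi )\). The helper names are hypothetical.

```python
import math


def number_entropy_series(eta, m, n_max=200):
    """Sum over N of p_N log(p_N / a_N), p = Poisson(eta), a = Poisson(m).
    Works in log space to avoid underflow of the Poisson weights."""
    total = 0.0
    for n in range(n_max + 1):
        log_p = -eta + n * math.log(eta) - math.lgamma(n + 1)
        log_ratio = (m - eta) + n * math.log(eta / m)  # log(p_N / a_N)
        total += math.exp(log_p) * log_ratio
    return total


def number_entropy(eta, m):
    """Closed form of the same sum: eta log(eta/m) - eta + m."""
    return eta * math.log(eta / m) - eta + m


def gaussian_vs_maxwellian(s2):
    """Relative entropy of N(0, s2) with respect to gamma(v) = exp(-pi v^2)."""
    return 0.5 * math.log(1.0 / (2.0 * math.pi * s2)) + math.pi * s2 - 0.5


def product_state_entropy(eta, s2, mu, rho):
    """Relative entropy of the assumed product state with respect to Gamma."""
    return number_entropy(eta, mu / rho) + eta * gaussian_vs_maxwellian(s2)
```

For \(\eta =\mu /\rho \) the number part vanishes and \({\mathcal {S}}\) is proportional to the average number of particles, in line with the linear growth of the relative entropy mentioned in the introduction.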

We can now discuss the validity of a Boltzmann-Kac type equation when the average number of particles in the system goes to infinity. To follow the standard analysis in [16], we first have to define what a chaotic sequence is in the present situation. It is natural to call \(\mathbf {f}=(f_0,f_1,f_2,\ldots )\) a product state if it has the form

where g(v) is a probability density on \({\mathbb {R}}\) and \(\eta >0\) is the average number of particles. We observe that for the state \(\mathbf {f}\) in (16), we have

where \({\mathcal {T}}\) is defined in (4) and, calling \(l(v,t)=\frac{\rho }{\mu }\eta (t)g(v,t)\), we get

This implies that the thermostat preserves the product structure exactly. See Sect. 3.5 for a derivation of (17) and (18).

Thus we call a sequence of states \(\mathbf {f}_n=(f_{n,0},f_{n,1},f_{n,2},\ldots )\) chaotic if it approaches the structure (16) while the average number of particles \(\langle {\mathcal {N}}\mathbf {f}_n\rangle \) goes to infinity. More precisely, let \(\mu _n\) be a sequence such that \(\lim _{n\rightarrow \infty }\mu _n=\infty \) and define

where the factor \(\frac{N!}{(N-k)!}\) accounts for the possible ways to choose the k particles with velocities \(\underline{v}_k\). We also define

so that \(\Vert F_n^{(k)}\Vert _{1,k}\le \left( \frac{\rho }{\mu _n}\right) ^k\Vert \mathbf {f}_n\Vert _1^{(k)}\).

Observe that, if \(\mathbf {f}_n\) is a product state of the form (16) with average number of particles \(\eta _n\), that is if

we get

Thus the factor \(\left( \frac{\rho }{\mu _n}\right) ^{k}\) in (19) ensures that, at least in this case, if \(\lim _{n\rightarrow \infty }\eta _n/\mu _n\) exists then \(\lim _{n\rightarrow \infty } F_n^{(k)}\) also exists.
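The product-state computation rests on the factorial-moment identity \(\sum _N e^{-\eta }\frac{\eta ^N}{N!}\frac{N!}{(N-k)!}=\eta ^k\) for the Poisson distribution. Reading (19) as \(F_n^{(k)}=\left( \frac{\rho }{\mu _n}\right) ^k\sum _N\frac{N!}{(N-k)!}\int f_{n,N}\,dv_{k+1}\cdots dv_N\), a product state with average number of particles \(\eta _n\) then gives \(F_n^{(k)}=\left( \frac{\rho \eta _n}{\mu _n}\right) ^k g^{\otimes k}\). A quick numerical check of the identity (helper name hypothetical):

```python
import math


def poisson_factorial_moment(eta, k, n_max=200):
    """Sum over N of Poisson(eta) weights times N!/(N-k)!, truncated at n_max."""
    total = 0.0
    p = math.exp(-eta)  # Poisson weight of N = 0
    for n in range(n_max + 1):
        total += p * math.perm(n, k)  # math.perm(n, k) is 0 when k > n
        p *= eta / (n + 1)            # weight of N = n + 1
    return total
```

Multiplying by \((\rho /\mu _n)^k\) turns \(\eta _n^k\) into \((\rho \eta _n/\mu _n)^k\), which has a limit whenever \(\eta _n/\mu _n\) does.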

To generalize these observations, we say that \(F_n^{(k)}\) converges weakly to \(F^{(k)}\) if, for any continuous and bounded test function \(\phi _k:{\mathbb {R}}^k\rightarrow {\mathbb {R}}\), we have

and we write \(\text {w-lim}_{n\rightarrow \infty }F_n^{(k)}=F^{(k)}\). Given a sequence \(\mathbf {f}_n\) of probability distributions such that

for some \(M>0\) and every n and r, we say that \(\mathbf {f}_n\) is chaotic (w.r.t. \(\mu _n\)) if, for some F

while for every \(k>1\) we have

where \(F^{\otimes k}(\underline{v}_k)=\prod _{i=1}^k F(v_i)\). Observe that

so that we can see F(v) as the relative particle density.

In [14, 16] a sequence of probability distributions \(f_n:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is said to be chaotic if, calling

we have

If we consider the sequence of states \(\mathbf {f}_n\) defined as

with the natural choice \(\mu _n=n\rho \), since the number of particles in \(\mathbf {f}_n\) is exactly n, from (19) we get \(F=\widetilde{F}\) and thus \(F^{(k)}=\widetilde{F}^{(k)}\). In this sense, (19) and (23) can be considered as a generalization of the classical definition in [14].

Let now

where \({\mathcal {L}}_n\) is given by (1) with \(\mu =\mu _n\) and

In Sect. 3.6, we prove that \(e^{{\mathcal {L}}_n t}\) propagates chaos in the sense that, if \(\mathbf {f}_n(0)\) forms a chaotic sequence, then \(\mathbf {f}_n(t)\) also forms a chaotic sequence for every t. This gives the following theorem.

Theorem 5

If \(\mathbf {f}_n(0)\) forms a chaotic sequence w.r.t. \(\mu _n\), with \(\lim _{n\rightarrow \infty }\mu _n=\infty \), then also \(\mathbf {f}_n(t)\) forms a chaotic sequence for every \(t\ge 0\). Moreover the relative particle density

satisfies the Boltzmann-Kac type equation

Proof

See Sect. 3.6. \(\square \)

3 Proofs

3.1 Proof of Lemma 1

The results in this section are based on two observations. The first is that the collision operator \({\mathcal {K}}\) acts independently on each \(L^1_s({\mathbb {R}}^N)\) and thus preserves positivity and probability. The second is that, due to the different scaling in N of the in and out operators, see (2) and (3), for large N the outflow of particles dominates the inflow. Thus even if the initial probability of having a number of particles much larger than the steady state average \(\mu /\rho \) is high, this probability will rapidly decrease toward its steady state value, see (34) and (46) below. In particular this prevents probability from “leaking out at infinity”.

We will now construct a solution of (1) in three steps: starting from \({\mathcal {K}}\) alone, using a partial power series expansion, see (27) below, then adding the out operator \({\mathcal {O}}\), and finally the in operator \({\mathcal {I}}\), using a Duhamel style expansion, see (33) and (40) below. These expansions are strongly inspired by the stochastic nature of the evolution studied, see Remark 8 below for more details.

It is natural to define \(\left( e^{t\tilde{\lambda } {\mathcal {K}}}\mathbf {f}\right) _N=e^{t\tilde{\lambda } K_N}f_N\) where we can write

Observing that

and using that, by Dominated Convergence, we get

we obtain that \(\lim _{t\rightarrow 0^+}e^{t\tilde{\lambda } {\mathcal {K}}}\mathbf {f}=\mathbf {f}\). Similarly, we get

so that, if \(\mathbf {f}\in D^1\) then \(\lim _{t\rightarrow 0^+}\left( e^{t\tilde{\lambda } {\mathcal {K}}}\mathbf {f}-\mathbf {f}\right) /t =\tilde{\lambda }{\mathcal {K}}\mathbf {f}\). Since \(\Vert e^{t\tilde{\lambda } K_N}f_N\Vert _{1,N}\le \Vert f_N\Vert _{1,N}\) we get \(\Vert e^{t\tilde{\lambda }{\mathcal {K}}}\mathbf {f}\Vert _1\le \Vert \mathbf {f}\Vert _1\). Moreover if \(f_N\) is positive then also \(e^{t\tilde{\lambda } K_N}f_N\) is positive and \(\Vert e^{t\tilde{\lambda } K_N}f_N\Vert _{1,N}= \Vert f_N\Vert _{1,N}\). Thus if \(\mathbf {f}\) is positive then \(e^{t\tilde{\lambda }{\mathcal {K}}}\mathbf {f}\) is positive and \(\Vert e^{t\tilde{\lambda }{\mathcal {K}}}\mathbf {f}\Vert _1= \Vert \mathbf {f}\Vert _1\).

Let now \(\mathbf {f}(t)\) be a solution of

with \(\mathbf {f}(0)=\mathbf {f}\in D^1\). If such a solution exists, it satisfies the Duhamel formula

where the construction of \(e^{(\tilde{\lambda } {\mathcal {K}}-\rho {\mathcal {N}})t}\) is analogous to that of \(e^{\tilde{\lambda } {\mathcal {K}}t}\). From (30) we get

where we have used that

Observe that, in (32), equality holds if and only if \(f_{N+1}\) does not change sign, that is, if it is almost everywhere non-negative or almost everywhere non-positive. To construct a solution of (29) we iterate (30) to define

and then show that \({\mathcal {Q}}(t)\) is a semigroup of bounded operators and that \(\mathbf {f}(t)={\mathcal {Q}}(t)\mathbf {f}\) solves (29) if \(\mathbf {f}\in D^1\). Using (31) iteratively we get

where, in the last identity, we have used that

After summing over N we get

so that \(\Vert {\mathcal {Q}}(t)\Vert _1\le 1\). Observe also that, if \(\mathbf {f}\) is positive then \({\mathcal {Q}}(t)\mathbf {f}\) is positive and \(\Vert {\mathcal {Q}}(t)\mathbf {f}\Vert _1=\Vert \mathbf {f}\Vert _{1}\), see comment below (32). Conversely, if for some N, \(f_N\) takes both positive and negative values then \(\Vert {\mathcal {Q}}(t)\mathbf {f}\Vert _1<\Vert \mathbf {f}\Vert _{1}\).

From (33), we see that \({\mathcal {Q}}(t_1){\mathcal {Q}}(t_2)= {\mathcal {Q}}(t_1+t_2)\) while, using (34) and (36), and the fact that

we get

so that \({\mathcal {Q}}(t)\mathbf {f}\in D^1\) if \(\mathbf {f}\in D^1\). Moreover observe that

so that \(\lim _{t\rightarrow 0^+}{\mathcal {Q}}(t)\mathbf {f}=\mathbf {f}\). Similarly we have

If \(\mathbf {f}\in D^1\), proceeding as in (37) we see that the second and third lines of the right hand side of (38) vanish as \(t\rightarrow 0^+\) while writing

and using (28) we see that the last line of (38) also vanishes as \(t\rightarrow 0^+\). This implies that, for \(\mathbf {f}\in D^1\), we have \(\lim _{t\rightarrow 0^+} \left( {\mathcal {Q}}(t)\mathbf {f}-\mathbf {f}\right) /t =\tilde{\lambda }{\mathcal {K}}\mathbf {f}+\rho ({\mathcal {O}}-{\mathcal {N}})\mathbf {f}\) and we can write \({\mathcal {Q}}(t)=e^{t(\tilde{\lambda } {\mathcal {K}}+\rho ({\mathcal {O}}-{\mathcal {N}}))}\).

We can now use a Duhamel style expansion once more to obtain

that, thanks to the fact that \({\mathcal {I}}\) is bounded, converges for every \(\mathbf {f}\in L^1({\mathcal {R}})\) to a solution of \(\frac{d}{dt}\mathbf {f}(t)={\mathcal {L}}\mathbf {f}(t)\). Lemma 1 follows easily observing that \(\Vert {\mathcal {I}}\mathbf {f}\Vert _1= \Vert \mathbf {f}\Vert _1\). \(\square \)

Remark 6

The proof of Lemma 1 above also shows that given \(\mathbf {f}\in L^1({\mathcal {R}})\), if for some N, \(f_N\) takes both positive and negative values, then \(\Vert e^{t{\mathcal {L}}}\mathbf {f}\Vert _1<\Vert \mathbf {f}\Vert _{1}\).

Remark 7

From (30) it is not hard to see that, if \(\mathbf {f}_i(t)\in D^1\), \(i=1,2\), are two solutions of (1) with \(\mathbf {f}_1(0)=\mathbf {f}_2(0)\) then \(\mathbf {f}_1(t)=\mathbf {f}_2(t)\).

Remark 8

Observe that (1) is the master equation of a jump process where jumps occur when two particles collide, a particle enters the system or a particle leaves it. Moreover, these jumps arrive according to a Poisson process. The expansions (27), (33) and (40) combined can be seen as a representation of the evolution of \(\mathbf {f}\) as an integral over all possible realizations of the jump process, sometimes called jump or collision histories. A similar representation was used in [2] to study the interaction of a Kac system with a large reservoir. Clearly, such a representation is much more complex in the present situation than for the model studied in [2]. Here the arrival rate for the jumps depends on the state of the system via the number of particles N and goes to infinity as N increases.
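The jump-process picture of Remark 8 translates directly into a Gillespie-type simulation. The sketch below is ours and rests on stated assumptions: a particle enters at rate \(\mu \) with velocity drawn from \(\gamma \) (a centered normal with variance \(1/(2\pi )\)), each particle leaves at rate \(\rho \), and each unordered pair collides at rate \(\tilde{\lambda }\) (the exact normalization of \({\mathcal {K}}\) is not reproduced here), a collision rotating the pair by a uniform angle. The total jump rate \(\mu +\rho N+\tilde{\lambda }N(N-1)/2\) grows with N, as noted in the remark. Over a long run, the time averages of N and of \(\sum _i v_i^2\) should approach the values \(\mu /\rho \) and \((\mu /\rho )/(2\pi )\) given by \(\varvec{\varGamma }\).

```python
import math
import random


def simulate(mu, rho, lam, t_end, rng=None, v0=()):
    """Gillespie simulation of the in/out/collision jump process (a sketch).

    Assumed rates: entry at rate mu with velocity from gamma(v) = exp(-pi v^2);
    each particle leaves at rate rho; each unordered pair collides at rate lam
    and is rotated by a uniformly distributed angle.
    Returns the time averages of N and of sum_i v_i^2 over [0, t_end].
    """
    if rng is None:
        rng = random.Random()
    sigma = math.sqrt(1.0 / (2.0 * math.pi))  # standard deviation of gamma
    v = list(v0)
    t, int_n, int_v2 = 0.0, 0.0, 0.0
    while True:
        n = len(v)
        r_in, r_out, r_col = mu, rho * n, lam * n * (n - 1) / 2.0
        total = r_in + r_out + r_col
        dt = rng.expovariate(total)
        if t + dt >= t_end:
            # Accumulate the last piece of the trajectory and stop.
            dt = t_end - t
            int_n += n * dt
            int_v2 += sum(x * x for x in v) * dt
            return int_n / t_end, int_v2 / t_end
        int_n += n * dt
        int_v2 += sum(x * x for x in v) * dt
        t += dt
        u = rng.random() * total
        if u < r_in:                 # a particle enters from the reservoir
            v.append(rng.gauss(0.0, sigma))
        elif u < r_in + r_out:       # a uniformly chosen particle leaves
            v.pop(rng.randrange(n))
        else:                        # a uniformly chosen pair collides
            i, j = rng.sample(range(n), 2)
            theta = rng.uniform(0.0, 2.0 * math.pi)
            c, s = math.cos(theta), math.sin(theta)
            v[i], v[j] = c * v[i] - s * v[j], s * v[i] + c * v[j]
```

Because collisions preserve both N and \(\sum _i v_i^2\), the long-run averages should not depend on the collision rate; only the reservoir rates enter.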

Given a state \(\mathbf {f}=(f_0,f_1,\ldots )\) we set \(\bar{f}_N=\int f_N(\underline{v}_N)d\underline{v}_N\). It is easy to see that

while

so that we get

If \(\varvec{\varGamma }\) is a steady state, writing

we see from (42) that \(c_N=c_0\) for every N. Since \(\sum _N\overline{\varGamma }_N=1\) we get \(\overline{\varGamma }_N=a_N\), see (7). This implies that if \(\varvec{\varGamma }\) and \(\varvec{\varGamma }'\) are two steady states then

for every N. From Remark 6 it follows that, if \(\varvec{\varGamma }\not =\varvec{\varGamma }'\) then \(\Vert e^{t{\mathcal {L}}}(\varvec{\varGamma }-\varvec{\varGamma }')\Vert _1 <\Vert \varvec{\varGamma }-\varvec{\varGamma }'\Vert _{1}\). Uniqueness of the steady state follows immediately.
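The identification \(\overline{\varGamma }_N=a_N\) reflects a balance between the rate \(\mu \) of the transition \(N\rightarrow N+1\) and the rate \(\rho (N+1)\) of the transition \(N+1\rightarrow N\). The sketch below (hypothetical helper names, not from the paper) builds the Poisson weights recursively and checks that they are normalized, have mean \(\mu /\rho \), and satisfy \(\mu a_N=\rho (N+1)a_{N+1}\); it also checks that \(\gamma (v)=e^{-\pi v^2}\) is normalized with \(\int v^2\gamma (v)\,dv=1/(2\pi )=T\).

```python
import math


def poisson_weights(mu, rho, n_max):
    """a_N = (mu/rho)^N exp(-mu/rho) / N! for N = 0..n_max, built recursively."""
    m = mu / rho
    a = [math.exp(-m)]
    for n in range(1, n_max + 1):
        a.append(a[-1] * m / n)
    return a


def maxwellian_moment(power, half_width=8.0, steps=4000):
    """Midpoint rule for the integral of v**power * exp(-pi v^2) over the real line.
    The integrand is negligible beyond |v| = half_width."""
    h = 2.0 * half_width / steps
    total = 0.0
    for k in range(steps):
        v = -half_width + (k + 0.5) * h
        total += v**power * math.exp(-math.pi * v * v)
    return total * h
```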

We now prove a more general version of (9). For \(r\ge 0\) we define

and, using (42), we get

that, for \(r=1\), would imply the first of (9) since for a probability distribution we have \(N_0(\mathbf {f})=1\). This argument is suggestive but only formal since we need to show that we can exchange the sum with the derivative in the above derivation. Nevertheless, it shows that for \(r=0\), if \(\mathbf {f}\in D^1\) then

To prove (9) we proceed more directly using the expansions derived previously. Indeed from (34) and (36) we get

Furthermore, using that \(N_r({\mathcal {I}}\mathbf {f})= N_r(\mathbf {f})+r N_{r-1}(\mathbf {f})\), we get

that gives

For \(r=1\), if \(\mathbf {f}(0)\) is a probability distribution, we get

that proves the first of (9). We will need the following corollary in Sect. 3.6 below.

Corollary 9

Given a probability distribution \(\mathbf {f}\), assume that there exists M such that \(|N_r(\mathbf {f}(0))|\le M^r\) for every \(r\ge 0\). Then we have

for every \(t\ge 0\).

Proof

Clearly (49) holds for \(r=0\) since \(N_0(\mathbf {f}(t))=1\) for every \(t\ge 0\). Calling \(M_1=\max \left\{ M,\frac{\mu }{\rho }\right\} \), assume that \(|N_{r-1}(\mathbf {f}(t))|\le M_1^{r-1}\). From (48) we get

The corollary follows by induction on r. \(\square \)
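Reading \(N_r\) as the r-th factorial moment of the particle number (which is what the identity \(N_r({\mathcal {I}}\mathbf {f})=N_r(\mathbf {f})+rN_{r-1}(\mathbf {f})\) suggests), and assuming the particle number evolves as a birth and death chain with entry rate \(\mu \) and exit rate \(\rho N\), the moment hierarchy takes the form \(\frac{d}{dt}N_r=r\mu N_{r-1}-r\rho N_r\); this is our own derivation under that assumption, not a quotation of (48). For an initial state with exactly \(n_0\) particles it can be solved in closed form: the survivors are Binomial\((n_0,e^{-\rho t})\) and the newcomers still present are an independent Poisson variable with mean \(\frac{\mu }{\rho }(1-e^{-\rho t})\). The sketch checks the hierarchy by finite differences and the bound of Corollary 9 with \(M=n_0\).

```python
import math

def falling(n, j):
    """Falling factorial (n)_j = n (n-1) ... (n-j+1); (n)_0 = 1."""
    out = 1
    for i in range(j):
        out *= n - i
    return out

def factorial_moment(r, t, n0, mu, rho):
    """N_r(t) = E[(N(t))_r] for the number chain started from n0 particles.
    Survivors ~ Binomial(n0, p), newcomers ~ Poisson(m), independent."""
    p = math.exp(-rho * t)
    m = (mu / rho) * (1.0 - p)
    return sum(math.comb(r, j) * falling(n0, j) * p**j * m**(r - j)
               for j in range(r + 1))

def hierarchy_residual(r, t, n0, mu, rho, h=1e-6):
    """Central-difference dN_r/dt minus (r*mu*N_{r-1} - r*rho*N_r)."""
    lhs = (factorial_moment(r, t + h, n0, mu, rho)
           - factorial_moment(r, t - h, n0, mu, rho)) / (2 * h)
    rhs = (r * mu * factorial_moment(r - 1, t, n0, mu, rho)
           - r * rho * factorial_moment(r, t, n0, mu, rho))
    return lhs - rhs

mu, rho, n0 = 2.0, 0.5, 7
m1 = max(n0, mu / rho)          # M_1 = max{M, mu/rho}, with M = n0
for r in range(1, 6):
    for t in (0.0, 0.3, 1.0, 5.0):
        assert abs(hierarchy_residual(r, t, n0, mu, rho)) < 1e-3
        assert factorial_moment(r, t, n0, mu, rho) <= m1**r + 1e-9  # Corollary 9
```

The bound is also visible directly in the closed form: since \((n_0)_j\le n_0^j\), one gets \(N_r(t)\le (n_0e^{-\rho t}+\frac{\mu }{\rho }(1-e^{-\rho t}))^r\le M_1^r\).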

Let now

so that \(E(t)=\sum _{N=1}^\infty \tilde{f}_N\) and observe that

while

Again proceeding formally we get

It is not hard to adapt this argument, together with (46) and (47), to prove the second of (9).

3.2 Proof of Theorem 2

To prove Theorems 2 and 3, we will construct a basis of eigenvectors for the generator

of the evolution due to the thermostat on \(L^2_s({\mathcal {R}},\varvec{\varGamma })\). We start by defining

with \(\underline{v}_{N,i}(w)=(v_1,\ldots ,v_{i-1},w,v_i,\ldots , v_N)\) and \(g\in L^2({\mathbb {R}},\gamma )\). Moreover, we use the convention that the sum over an empty set is 0 so that \(({\mathcal {P}}^+(g)\mathbf {h})_{0}=0\) for every \(\mathbf {h}\). With this notation, \({\mathcal {P}}^+\) and \({\mathcal {P}}^-\) from the introduction are \({\mathcal {P}}^+(1)\) and \({\mathcal {P}}^-(1)\), respectively.

Lemma 10

We have

so that \({\mathcal {G}}\) is self-adjoint.

Proof

Proceeding as in the definition of \(D^2\), we take as domain of \({\mathcal {P}}^\pm (g)\) the subspaces

It is easy to see that \(D^\pm \) are dense in \(L^2({\mathcal {R}},\varvec{\varGamma })\).

Calling \(\underline{v}_N^i=(v_1,\dots ,v_{i-1},v_{i+1},\dots ,v_N)\) we get

Assume now that \(\mathbf {h}\) is in the domain of \({\mathcal {P}}^+(g)^*\). This means that for every \(\mathbf {j}\) in \(D^+\) we have

Given M, choose \(\mathbf {j}\) such that \(j_N\equiv 0\) if \(N\not =M\). For such a \(\mathbf {j}\) we have \(\mathbf {j}\in D^+\) and

where the last equality follows from (52) and the fact that

This implies that \(\rho ({\mathcal {P}}^+(g)^*\mathbf {h})_M=\mu ({\mathcal {P}}^-(g)\mathbf {h})_M\) for every M, thus proving (51). This also implies that \({\mathcal {G}}\) is self-adjoint. \(\square \)

To obtain convergence toward \(\mathbf {e}^0\), we first need to show that \({\mathcal {G}}\) is non positive. This is the content of the following Lemma.

Lemma 11

\({{\mathcal {G}}}\) is non positive and \({{\mathcal {G}}}\mathbf {h}=0\) if and only if \(\mathbf {h}=c\mathbf {e}^0\), where \(\mathbf {e}^0\) is given by \(e_N^0(\underline{v}_N)=1\) for every N and \(\underline{v}_N\).

Proof

From (51), we get \(\rho (\mathbf {h},{\mathcal {P}}^+\mathbf {h})= \mu ({\mathcal {P}}^-\mathbf {h},\mathbf {h})\) so that

Moreover we have

where we have used (53) to obtain the second line and that \(ab\le (a^2+b^2)/2\) in going from the second to the third line of (55). Non positivity follows immediately from (54) and (55). Furthermore, we see that the inequality at the end of the second line of (55) becomes an equality if and only if:

that is, \(h_N(\underline{v}_N)=h_{N-1}(\underline{v}_N^i)\) for every i and N, which implies that \(h_N\equiv h_0\). \(\square \)

Our construction of the eigenvalues and eigenvectors of \({\mathcal {G}}\) is inspired by the construction of the Fock space for a bosonic quantum field theory, see for example Chap. 6 of [17]. The main observation is that the operators \({\mathcal {P}}^\pm (g)\) defined in (50) have the form of creation and annihilation operators. Since the “ground state” of \({\mathcal {G}}\) is \(\mathbf {e}^0\), as opposed to the state with no particles \(\mathbf {n}\), see (64) below, we will introduce the operators \({\mathcal {R}}^\pm (g)\), see (57) below, which can be thought of as quasi-particle operators, that is, operators that create and destroy excitations above the ground state, see for example [1]. The proofs of the Lemmas in the remaining part of this section should be familiar to readers with a background in QFT.
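The quasi-particle picture is easiest to see in the one-velocity reduction (the case K = 1 in the language of Remark 21), where a state is just a sequence \((h_0,h_1,\ldots )\). Assuming that in this reduction \({\mathcal {G}}\) acts as \(({\mathcal {G}}\mathbf {h})_N=\mu (h_{N+1}-h_N)+\rho N(h_{N-1}-h_N)\) and that \(a_N\) is Poisson with mean \(\mu /\rho \) (both are our reading, consistent with Lemma 11 and with \({\mathcal {G}}\mathbf {h}=-\rho \mathbf {h}\) for \(\mathbf {h}={\mathcal {R}}^+_0\mathbf {e}^0\)), the spectrum is \(\{0,-\rho ,-2\rho ,\ldots \}\): each excitation costs \(\rho \). The sketch symmetrizes the truncated operator with the weights \(a_N\) and checks the lowest eigenvalues, together with the eigenvector \(h_N=N-\mu /\rho \), which is proportional to \(({\mathcal {R}}^+_0\mathbf {e}^0)_N\).

```python
import math
import numpy as np

def symmetrized_thermostat(mu, rho, n_max):
    """S = D^{1/2} G D^{-1/2}, D = diag(a_N), for the number-only generator
    (G h)_N = mu (h_{N+1} - h_N) + rho N (h_{N-1} - h_N), truncated at n_max.
    With a_N Poisson(mu/rho): S[N-1, N] = S[N, N-1] = sqrt(mu * rho * N)."""
    n = np.arange(n_max + 1)
    diag = -(mu * (n < n_max) + rho * n)   # no entries from the top level
    off = np.sqrt(mu * rho * n[1:])
    return np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)

mu, rho, n_max = 2.0, 1.0, 80
s = symmetrized_thermostat(mu, rho, n_max)
eig = np.sort(np.linalg.eigvalsh(s))[::-1]      # 0 > -rho > -2 rho > ...

# First excitation h_N = N - mu/rho, mapped to S-coordinates x = D^{1/2} h.
n = np.arange(n_max + 1)
log_a = (-mu / rho + n * math.log(mu / rho)
         - np.array([math.lgamma(k + 1) for k in range(n_max + 1)]))
x = np.exp(0.5 * log_a) * (n - mu / rho)
print(eig[:5], np.max(np.abs(s @ x + rho * x)))
```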

We start with the commutation relations of the operators \({\mathcal {P}}^\pm (g)\) and \({\mathcal {N}}\). Setting \(\{{\mathcal {A}},{\mathcal {B}}\}={\mathcal {A}} {\mathcal {B}}-{\mathcal {B}}{\mathcal {A}}\), we obtain the following Lemma.

Lemma 12

We have

where

Proof

We first observe that, due to the symmetry of \(h_N\), we have

while

where

Thus we get

Using again that \(h_N\) is symmetric we get \(\{{\mathcal {P}}^-(g_1),{\mathcal {P}}^-(g_2)\}=0\). Moreover, we have

so that

Summing over i and j it follows that \(\{{\mathcal {P}}^+(g_1),{\mathcal {P}}^+(g_2)\}=0\).

Similarly we have

while for \(i\le N\) we get

Summing over i we get \(\{{{\mathcal {P}}}^+(g_1),{{\mathcal {P}}}^-(g_2)\} =-(g_1,g_2)\mathrm {Id}\).

Finally we observe that

so that \(\{{\mathcal {N}} ,{\mathcal {P}}^-(g)\}=-{\mathcal {P}}^-(g)\). The commutation relation for \({\mathcal {P}}^+\) follows taking the adjoint. \(\square \)

Observe that \({\mathcal {P}}^-(g)\mathbf {e}^0=(g,1)\mathbf {e}^0\) while from Lemma 12 it follows that

which makes it natural to define the new creation and annihilation operators

The following Corollary collects the relevant properties of \({{\mathcal {R}}}^\pm (g)\).

Corollary 13

We have \({\mathcal {R}}^+(g)^*={\mathcal {R}}^-(g)\), \({\mathcal {R}} ^-(g)\mathbf {e}^0=0\), and

Moreover we also have

Proof

It is easy to verify that \({\mathcal {R}}^-(g)\mathbf {e}^0=0\). Moreover we only need to prove (58) since the other relations are immediate consequences of Lemma 12. From (56) we get

The second equation of (58) follows by taking the adjoint of the first. \(\square \)
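In the same one-velocity reduction, the operators of Lemma 12 and Corollary 13 become explicit matrices, and the relations can be checked on truncated sequences. We assume that \({\mathcal {P}}^-(1)\) acts as \(({\mathcal {P}}^-\mathbf {h})_N=h_{N+1}\) (consistent with \({\mathcal {P}}^-(g)\mathbf {e}^0=(g,1)\mathbf {e}^0\)) and that \(a_N\) is Poisson with mean \(\mu /\rho \); we read \({\mathcal {R}}^+_0\) off the relation \({\mathcal {P}}^+_0=\sqrt{\frac{\mu }{\rho }}{\mathcal {R}}^+_0+\frac{\mu }{\rho }\mathrm {Id}\) used in the proof of Lemma 14, and take \({\mathcal {R}}^-_0=({\mathcal {R}}^+_0)^*\). Relations involving \({\mathcal {P}}^-\) are only tested away from the truncation edge. All variable names are illustrative.

```python
import math
import numpy as np

mu, rho, n_max = 3.0, 2.0, 30
m = mu / rho
a = np.array([math.exp(-m) * m**n / math.factorial(n) for n in range(n_max + 1)])

# One-velocity reduction: a state is a sequence h = (h_0, ..., h_{n_max}).
P_plus = np.diag(np.arange(1, n_max + 1, dtype=float), -1)   # (P^+ h)_N = N h_{N-1}
P_minus = np.diag(np.ones(n_max), 1)                         # (P^- h)_N = h_{N+1}
Id = np.eye(n_max + 1)
Num = np.diag(np.arange(n_max + 1, dtype=float))             # number operator

def adjoint(A):
    """Adjoint in L^2(a): (A x, y)_a = (x, A^* y)_a, i.e. A^* = D^{-1} A^T D."""
    return A.T * a[np.newaxis, :] / a[:, np.newaxis]

def comm(A, B):
    """Commutator AB - BA (written {A, B} in the text)."""
    return A @ B - B @ A

R_plus = math.sqrt(rho / mu) * P_plus - math.sqrt(mu / rho) * Id
R_minus = adjoint(R_plus)
e0 = np.ones(n_max + 1)

print("rho P+^* == mu P-:", np.allclose(rho * adjoint(P_plus), mu * P_minus))
print("[R-, R+] == Id (rows < n_max):",
      np.allclose(comm(R_minus, R_plus)[:n_max], Id[:n_max]))
```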

Since \(K_N\) preserves the space of polynomials of a given degree, see [4], we choose as an orthonormal basis for \(L^2({\mathbb {R}},\gamma (v))\) the polynomials

where

are the standard Hermite polynomials. For every sequence \(\underline{\alpha }=(\alpha _0,\alpha _1,\alpha _2,\ldots )\) such that \(\alpha _i\in {\mathbb {N}}\) and \(\lambda (\underline{\alpha }) :=\sum _{i=0}^\infty \alpha _i<\infty \), we define

where \({\mathcal {R}}^\pm _n={\mathcal {R}}^\pm (L_n)\).
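A quick check of the one-particle basis: assuming \(\gamma \) is the standard Gaussian (unit temperature) and \(L_n=H_n/\sqrt{n!}\), with \(H_n\) the monic (probabilists') Hermite polynomials, the Gram matrix of the \(L_n\) in \(L^2({\mathbb {R}},\gamma )\) is the identity. NumPy's `numpy.polynomial.hermite_e` module implements these monic polynomials, and `hermegauss` returns Gauss quadrature nodes and weights for the weight \(e^{-v^2/2}\), which makes the integrals exact up to rounding. The normalization used in the paper is not reproduced in this extract, so treat it as an assumption.

```python
import math
import numpy as np
from numpy.polynomial import hermite_e as He

def L(n, v):
    """Normalized Hermite polynomial H_n(v)/sqrt(n!), H_n monic (probabilists')."""
    c = np.zeros(n + 1)
    c[n] = 1.0
    return He.hermeval(v, c) / math.sqrt(math.factorial(n))

nodes, weights = He.hermegauss(40)          # exact for polynomials of degree <= 79
weights = weights / math.sqrt(2 * math.pi)  # integrate against the standard Gaussian
n_max = 10
gram = np.array([[np.sum(weights * L(i, nodes) * L(j, nodes))
                  for j in range(n_max + 1)] for i in range(n_max + 1)])
print(np.max(np.abs(gram - np.eye(n_max + 1))))
```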

Lemma 14

The vectors \(\mathbf {e}_{\underline{\alpha }}\) form an orthonormal basis in \(L^2_s({\mathcal {R}},\varvec{\varGamma })\). Moreover, we have

Finally we have \(\Vert {\mathcal {K}}\mathbf {e}_{\underline{\alpha }}\Vert _2<\infty \), so that \(\mathbf {e}_{\underline{\alpha }}\in D^2\), for every \(\underline{\alpha }\).

Proof

If \(n_1\not =n_2\) and \(\alpha _1\alpha _2\not =0\), using Corollary 13 we get

while

Assuming \(\alpha _1\ge \alpha _2\) we get

so that

from which orthonormality follows easily. Observe now that

so that we can write

where \(\mathbf {n}=(1,0,0,\ldots )\). Since \({\mathcal {P}}^+(1) =\sqrt{\frac{\mu }{\rho }}{\mathcal {R}}_0^++\frac{\mu }{\rho }\mathrm{Id}\), we see that \(\mathbf {n}\) is in the closure of the span of the \(\mathbf {e}_{\underline{\alpha }}\). Calling \({\mathcal {P}}^+_i={\mathcal {P}}^+(L_i)\), we observe that \(({\mathcal {P}}^+_i\mathbf {n})_N=0\) for \(N\not =1\) while \(({\mathcal {P}}^+_i\mathbf {n})_1=L_i\). Since the \(L_i\) form a basis for \(L^2({\mathbb {R}},\gamma _1)\), we see that the closure of the span of \(\{\mathbf {n}; {\mathcal {P}}^+_i\mathbf {n}, i\ge 0\}\) contains a basis for \(L^2_s({\mathbb {R}}^0,a_0)\oplus L^2_s({\mathbb {R}},a_1\gamma _1)\). Observe now that \({\mathcal {P}}^+_i=\sqrt{\frac{\mu }{\rho }}{\mathcal {R}}^+_i+\delta _{i,0} \frac{\mu }{\rho }\mathrm {Id}\) and that \({\mathcal {R}}^+_i\mathbf {e}_{\underline{\alpha }} =\sqrt{\alpha _i+1}\mathbf {e}_{\underline{\alpha }'}\), where \(\alpha '_j=\alpha _j\) for \(j\not =i\) while \(\alpha '_i=\alpha _i+1\). Combining this with (64) we get that the closure of the span of the \(\mathbf {e}_{\underline{\alpha }}\) contains \({\mathcal {P}}^+_i\mathbf {n}\) and thus it contains a basis for \(L^2_s({\mathbb {R}}^0,a_0)\oplus L^2_s({\mathbb {R}},a_1\gamma _1)\). Iterating this construction we obtain completeness. Equation (61) follows easily from (58).

Finally, since \((h_N,R_{i,j}h_N)_N\le \Vert h_N\Vert _{2,N}^2\), from (5) we get

Using the commutation relations in Corollary 13 as in the derivation of (62) we get

that, together with \({\mathcal {N}}\mathbf {e}^0=\sqrt{\frac{\mu }{\rho }} {\mathcal {R}}^+_0\mathbf {e}^0+\frac{\mu }{\rho }\mathbf {e}^0\), gives

where \(\alpha ^\pm _i=\alpha _i\), for \(i>0\), while \(\alpha _0^\pm =\alpha _0\pm 1\). Thus we have \(\Vert {\mathcal {N}}^2\mathbf {e}_{\underline{\alpha }}\Vert _2<\infty \) and the proof is complete. \(\square \)

In Sect. 3.3 we will need a more explicit representation of the \(\mathbf {e}_{\underline{\alpha }}\). To this end observe that, if \(n\not =0\), \(({\mathcal {R}}^+_n\mathbf {e}^0)_N(\underline{v}_N)=\sqrt{\frac{\rho }{\mu }} \sum _{i=1}^N L_n(v_i)\) while for \(n_1,n_2\not =0\) and \(N\ge 2\) we can write

where \(\mathrm{Sym}(N)\) is the group of permutations on \(\{1,\ldots ,N\}\). More generally, given \(n_i\not =0\), \(i=1,\ldots ,M\), we get, for \(N\ge M\),

while \(\left( \prod _{i=1}^M{\mathcal {R}}^+_{n_i}\mathbf {e}^0\right) _N\equiv 0\) for \(N<M\). Given \(\underline{\alpha }\) with \(\lambda (\underline{\alpha })<\infty \), define

where \(L_{i}^{\otimes 0}=1\) and observe that \(L_{\underline{\alpha }}\) is a polynomial in \(\lambda _0(\underline{\alpha }):=\sum _{i=1}^\infty \alpha _i\) variables with degree \(d(\underline{\alpha }) :=\sum _{i=1}^\infty i\alpha _i\). Also, for \(\pi \in \mathrm{Sym}(N)\), define \(\pi (\underline{v}_N)=(v_{\pi (1)},v_{\pi (2)},\ldots ,v_{\pi (N)})\). Using these definitions, together with (60) and the fact that \({\mathcal {R}}_0^+=\sqrt{\frac{\rho }{\mu }}{\mathcal {P}}^+(1)-\sqrt{\frac{\mu }{\rho }} \mathrm {Id}\), we can write, for \(N\ge \lambda _0(\underline{\alpha })\),

for suitable coefficients \(c_{\underline{\alpha },N}\), while \((\mathbf {e}_{\underline{\alpha }})_N(\underline{v}_N)=0\) for \(N<\lambda _0(\underline{\alpha })\).

We now come back to the full operator \(\widetilde{{\mathcal {L}}}\).

Corollary 15

The operator \(\widetilde{{\mathcal {L}}}\) is self-adjoint, non positive and \(\widetilde{{\mathcal {L}}}\mathbf {h}=0\) if and only if \(\mathbf {h}=c\mathbf {e}^0\).

Proof

We can proceed exactly as in the proof of Lemma 10. Assume that \(\mathbf {h}\) is in the domain of \(\widetilde{{\mathcal {L}}}^*\). This means that for every \(\mathbf {j}\) in \(D^2\) we have

Given M, choose \(\mathbf {j}\) such that \(j_N\equiv 0\) if \(N\not =M\). Clearly \(\mathbf {j}\in D^2\) because \((\widetilde{{\mathcal {L}}}\mathbf {j})_N \not =0\) only for \(N=M-1\), M, and \(M+1\). Moreover \(({\mathcal {K}}\mathbf {h},\mathbf {j})=a_M(K_Mh_M,j_M)_M\) is well defined for every \(\mathbf {h}\in L_s^2({\mathcal {R}},\varvec{\varGamma })\). Finally, we know that \(K_M\) is non positive and self-adjoint for every M. Thus we get

This implies that \((\widetilde{{\mathcal {L}}}^*\mathbf {h})_M =(\widetilde{{\mathcal {L}}}\mathbf {h})_M\) for every M. This proves that \(\widetilde{{\mathcal {L}}}\) is self-adjoint. Observe also that \({\mathcal {G}}\mathbf {h}=0\) if and only if \(\mathbf {h}=c\mathbf {e}^0\), see Lemma 11, while \({\mathcal {K}}\) is non positive and \({\mathcal {K}}\mathbf {e}^0=0\). This completes the proof. \(\square \)

Let \(\mathbf {W}_1=\mathrm {span}\{\mathbf {e}_{\underline{\alpha }}\,|\, \lambda (\underline{\alpha })=1\}=\mathrm {span}\{{\mathcal {R}}^+_n\mathbf {e}^0\,|\,n\ge 0\}\). Observe that \({\mathcal {G}}\mathbf {h}=-\rho \mathbf {h}\) if \(\mathbf {h}\in \mathbf {W}_1\) while \((\mathbf {h},{\mathcal {G}}\mathbf {h})<-\rho (\mathbf {h},\mathbf {h})\) if \(\mathbf {h}\in D^2\), \(\mathbf {h}\perp \mathbf {e}^0\) but \(\mathbf {h}\not \in \mathbf {W}_1\). Thus we get

From [4] we know that \((f_N,K_Nf_N)\le 0\) for every \(f_N\) while \((f_N,K_Nf_N)= 0\) if and only if \(f_N\) is rotationally invariant. Since \(({\mathcal {R}}^+_n \mathbf {e}^0)_N=\sqrt{\rho /\mu }\sum _{i=1}^N L_{n}(v_i)\), for \(n>0\), while \(({\mathcal {R}}^+_0 \mathbf {e}^0)_N=\sqrt{\rho /\mu }N-\sqrt{\mu /\rho }\), we have that \({\mathcal {R}}^+_n\mathbf {e}^0\) is rotationally invariant if and only if \(n=0\) or \(n=2\). This implies that \((\mathbf {h},\widetilde{{\mathcal {L}}}\mathbf {h})=-\rho \Vert \mathbf {h}\Vert _2^2\) if and only if \(\mathbf {h}\in \mathrm {span}\{{\mathcal {R}}_0^+\mathbf {e}^0,{\mathcal {R}}_2^+\mathbf {e}^0\}\). Since \({\mathcal {R}}_0^+\mathbf {e}^0=\mathbf {e}_{(1,0,\ldots )}\) and \({\mathcal {R}}_2^+\mathbf {e}^0=\mathbf {e}_{(0,0,1,0,\ldots )}\), this completes the proof of Theorem 2. \(\square \)

3.3 Proof of Theorem 3

To prove Theorem 3, we need more information on the action of \({\mathcal {K}}\) on the basis vectors \(\mathbf {e}_{\underline{\alpha }}\).

As a basic step, we compute the action of \(R_{1,2}\), see (6), on the product of two Hermite polynomials in \(v_1\) and \(v_2\). A simple calculation, see e.g. [4], shows that \((R_{1,2}F)(v_1,v_2)=0\) for every F odd in \(v_1\) or \(v_2\). Thus, calling \(H_{(m_1,m_2)}(v_1,v_2)=H_{m_1}(v_1)H_{m_2}(v_2)\), it follows that \(R_{1,2}H_{(m_1,m_2)}\not =0\) if and only if \(m_1\) and \(m_2\) are both even while \(R_{1,2}H_{(2n_1,2n_2)}\) is a rotationally invariant polynomial of degree \(2(n_1+n_2)\) in \(v_1\) and \(v_2\). Moreover, if \(m_1+m_2<2n_1+2n_2\), we get

where we have used that \(H_{(2n_1,2n_2)}\) is orthogonal to any polynomial of degree less than \(2(n_1+n_2)\). Thus we have \(R_{1,2}H_{(2n_1,2n_2)}\in \mathrm {span}\{H_{(p_1,p_2)}\,|\, p_1+p_2=2n_1+2n_2\}\) and, since \(H_n\) is a monic polynomial of degree n, we can write

for suitable coefficients \(a_{k,n_1,n_2}\) and a polynomial \(Q(v_1,v_2)\) of degree strictly less than \(2(n_1+n_2)\). This, together with rotational invariance, implies that

for suitable coefficients \(\tilde{\tau }_{n,m}\). Using (67), together with (66), it is possible to give an explicit representation of \({\mathcal {K}}\) on the basis of the \(\mathbf {e}_{\underline{\alpha }}\). For the purpose of this paper, we will only need some particular cases discussed in detail below.

Let now \(\mathbf {V}_m=\mathrm {span}\{\mathbf {e}_{\underline{\alpha }}| \sum _{i=1}^\infty i\alpha _i=m\}=\mathrm {span}\{\prod _i ({\mathcal {R}}_i^+)^{\alpha _i}\mathbf {e}^0|\sum _{i=1}^\infty i\alpha _i=m\}\), that is \(\mathbf {V}_m\) is the subspace of all states \(\mathbf {h}\) such that \(h_N\) is a polynomial of degree m orthogonal to all polynomials of degree less than m. From the above considerations and (66) it follows that \({\mathcal {K}}\mathbf {V}_m\subset \mathbf {V}_m\) so that defining

and observing that \(L^2_s({\mathcal {R}},\varvec{\varGamma }) =\bigoplus _{m=0}^{\infty } \mathbf {V}_m\), we get \(\varDelta _2=-\inf _m\delta _m\).

Since \(\mathbf {E}_1=\mathrm {span}\{{\mathcal {R}}_0^+\mathbf {e}^0,{\mathcal {R}}_2^+\mathbf {e}^0\}\), we get

Observing that \({\mathcal {K}}({\mathcal {R}}^+_0)^n\mathbf {e}^0={\mathcal {K}}({\mathcal {R}}^+_0)^n {\mathcal {R}}_2^+ \mathbf {e}^0=0\), due to rotational invariance, while \({\mathcal {K}}({\mathcal {R}}^+_0)^m ({\mathcal {R}}^+_1)^2\mathbf {e}^0=0\), due to parity, we obtain \(\delta _0=\delta _2=2\rho \). Moreover we have that, for \(m\not =0,2\), \(\mathbf {V}_m\perp \mathbf {E}_1 \oplus \mathbf {E}_0\). Thus we need a lower bound on \(\delta _m\) for m odd and for m even and greater than 2.

Observe that \(({\mathcal {R}}^+_{m}\mathbf {e}^0,{\mathcal {G}}{\mathcal {R}}^+_{m}\mathbf {e}^0)=-\rho \) while \((\mathbf {h},{\mathcal {G}}\mathbf {h})\le -2\rho (\mathbf {h},\mathbf {h})\) if \(\mathbf {h}\in \mathbf {V}_m\) and \(\mathbf {h}\perp {\mathcal {R}}^+_{m}\mathbf {e}^0\). Thus, if \(\lambda \) is not too big, it is natural to search for the infimum of \((\mathbf {h},-\widetilde{{\mathcal {L}}}\mathbf {h})\) on \(\mathbf {V}_m\) looking at states \(\mathbf {h}\) close to \({\mathcal {R}}^+_{m}\mathbf {e}^0\). To do this, we need the representation of \({\mathcal {K}}{\mathcal {R}}^+_m\mathbf {e}^0\) on the basis formed by the \(\mathbf {e}_{\underline{\alpha }}\). If \(m=2n\), using (67) for \(n_2=0\) we get

where \(\tau _n=\tilde{\tau }_{n,0}\). To compute \(\tau _n\) we compare the coefficients of \(v_1^{2n}\) on the left- and right-hand sides of (69). On the left-hand side the only contribution comes from \(R_{1,2}v_1^{2n}\) since \(R_{1,2}\) preserves the degree. On the right-hand side only the term with \(k=n\) contains the monomial \(v_1^{2n}\). Since the \(H_n\) are monic and

we obtain

Combining with (59) we get

Since for \(n>0\) we have \(({\mathcal {R}}^+_{2n}\mathbf {e}^0)_N =\sqrt{\rho /\mu }\sum _{i=1}^N L_{2n}(v_i)\), a direct computation shows that

where

This gives us

where we have used that \({\mathcal {N}}\mathbf {e}^0=\sqrt{\frac{\mu }{\rho }} {\mathcal {R}}^+_0\mathbf {e}^0+\frac{\mu }{\rho }\mathbf {e}^0\).

If \(m=2n+1\), \(R_{1,2}H_{2n+1}(v_1)=0\) gives

so that \(\delta _{2n}\le \rho +\lambda (1-2\tau _n)\) and \(\delta _{2n+1}\le \rho +\lambda \).
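The values \(\tau _2=3/8\) and \(\tau _3=5/16\) used below are consistent with \(R_{1,2}\) being, as in Kac's model, the uniform average over rotations in the \((v_1,v_2)\) plane. Under that assumption \(R_{1,2}v_1^{2n}=\tau _n(v_1^2+v_2^2)^n\) with \(\tau _n=\frac{1}{2\pi }\int _0^{2\pi }\cos ^{2n}\theta \,d\theta =\binom{2n}{n}4^{-n}\), and comparing coefficients of \(v_1^{2n}\) as above identifies this constant with \(\tau _n\). The sketch computes \(\tau _n\) exactly and by a grid average, and evaluates the upper bounds on \(\delta _{2n}\) and \(\delta _{2n+1}\) just obtained.

```python
import math
from fractions import Fraction

def tau_exact(n):
    """tau_n = (1/2pi) int_0^{2pi} cos^{2n}(theta) dtheta = C(2n, n) / 4^n."""
    return Fraction(math.comb(2 * n, n), 4**n)

def tau_grid(n, points=64):
    """Same average on a uniform grid; exact up to rounding when points > 2n,
    because cos^{2n} is a trigonometric polynomial of degree 2n."""
    return sum(math.cos(2 * math.pi * k / points) ** (2 * n)
               for k in range(points)) / points

def upper_bounds(n, rho, lam):
    """Upper bounds on delta_{2n} and delta_{2n+1} from the trial states R^+_m e^0."""
    return rho + lam * (1 - 2 * float(tau_exact(n))), rho + lam

for n in range(1, 6):
    print(n, tau_exact(n), upper_bounds(n, rho=1.0, lam=0.5))
```

Note that \(\tau _1=1/2\) gives \(\rho +\lambda (1-2\tau _1)=\rho \), in agreement with the rotational invariance of \({\mathcal {R}}^+_2\mathbf {e}^0\).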

The following Lemma shows that, if the average number of particles in the steady state is large enough and \(\lambda \) is not too large, one can find a lower bound for \(\delta _m\) close to the upper bound derived above.

Lemma 16

For \(m=2n+1\) we have

while for \(m=2n\), \(n>1\), we have

Proof

See Appendix A.1. \(\square \)

Since \(\tau _2=3/8\) and \(({\mathcal {R}}^+_4\mathbf {e}^0, -\widetilde{{\mathcal {L}}}{\mathcal {R}}^+_4\mathbf {e}^0)=\rho +\lambda /4\), we get

Moreover, thanks to (13),

so that \(\delta _{2n+1}>\delta _4\) for every n. Finally we observe that \(\tau _{n+1}<\tau _n\) and \(\tau _3=5/16\). Using (13) again it follows that, for \(n\ge 3\),

so that \(\varDelta _2=-\delta _4\).

To show that \(\varDelta _2\) is an eigenvalue, we need to construct an eigenstate, that is we need to find \(\hat{\mathbf {h}}\in \mathbf {V}_4\) such that \(\widetilde{{\mathcal {L}}}\hat{\mathbf {h}}=-\delta _4 \hat{\mathbf {h}}\). To this end, it is enough to show that there exists \(\hat{\mathbf {h}}\in \mathbf {V}_4\) such that \((\hat{\mathbf {h}},\widetilde{{\mathcal {L}}} \hat{\mathbf {h}})=-\delta _4 (\hat{\mathbf {h}},\hat{\mathbf {h}})\). Observe that if \(\mathbf {h}\in \mathbf {V}_4\) then \({\mathcal {K}}\mathbf {h}\) is even. We thus restrict our search to \(\hat{\mathbf {h}}\in \mathbf {V}^e_4=\mathrm {span}\{({\mathcal {R}}^+_0)^k{\mathcal {R}}^+_4\mathbf {e}^0,\, ({\mathcal {R}}^+_0)^k({\mathcal {R}}^+_2)^2\mathbf {e}^0;\,k\ge 0 \}\).

Consider a sequence \(\mathbf {h}_n\in \mathbf {V}^e_4\) such that \(\Vert \mathbf {h}_n\Vert _2=1\) and \(\lim _{n\rightarrow \infty }(\mathbf {h}_n, -\widetilde{{\mathcal {L}}}\mathbf {h}_n)=\delta _4\). Calling \(\mathbf {V}^e_{4,0}=\mathrm {span}\{{\mathcal {R}}^+_4\mathbf {e}^0\}\) and \(\mathbf {V}^e_{4,k}=\mathrm {span}\{({\mathcal {R}}^+_0)^{k}{\mathcal {R}}^+_4\mathbf {e}^0, ({\mathcal {R}}^+_0)^{k-1}({\mathcal {R}}^+_2)^2\mathbf {e}^0 \}\) for \(k\ge 1\), we can write \(\mathbf {h}_n=\sum _{k=0}^\infty \mathbf {h}_{n,k}\) with \(\mathbf {h}_{n,k}\in \mathbf {V}^e_{4,k}\). Since each \(\mathbf {V}^e_{4,k}\) is finite dimensional and \(\Vert \mathbf {h}_{n,k}\Vert _2\le 1\), we can find a subsequence \(\mathbf {h}^0_n\) of \(\mathbf {h}_n\) such that \(\lim _{n\rightarrow \infty }\mathbf {h}_{n,0}=\hat{\mathbf {h}}_0\). Similarly, we can find a new subsequence \(\mathbf {h}^1_n\) of \(\mathbf {h}^0_n\) such that \(\lim _{n\rightarrow \infty }\mathbf {h}_{n,1}=\hat{\mathbf {h}}_1\). Proceeding like this, we find a sequence \(\mathbf {h}_n^\infty \) such that \(\lim _{n\rightarrow \infty }\mathbf {h}^\infty _{n,k}=\hat{\mathbf {h}}_k\), for every k. Analogously, since \(h_{n,N}\) is an even polynomial of degree 4 in \(\underline{v}_N\), we can assume, possibly at the cost of further extracting a subsequence, that \(\lim _{n\rightarrow \infty } h^\infty _{n,N}=\hat{h}_N\) for every N. From Fatou’s Lemma we get that \(\lim _{n\rightarrow \infty }\mathbf {h}^\infty _n=\hat{\mathbf {h}}\) with \(\Vert \hat{\mathbf {h}}\Vert _2\le 1\) while

and analogously, since \(K_N\) is non positive,

so that

while \((\hat{\mathbf {h}},-\widetilde{{\mathcal {L}}}\hat{\mathbf {h}})\ge \delta _4 \Vert \hat{\mathbf {h}}\Vert _2^2\) since \(\hat{\mathbf {h}}\in \mathbf {V}_4^e\). Thus we need to show that \(\Vert \hat{\mathbf {h}}\Vert _2=1\).

To this end observe that for every \(M>0\) we have

eventually in n. Thus, for every \(\epsilon \) there exists M such that \(\sum _{k=0}^M\Vert \mathbf {h}_{n,k}\Vert _2^2\ge 1-\epsilon \) eventually in n. Taking the limit, this implies that for every \(\epsilon \) there exists M such that \(\sum _{k=0}^M\Vert \hat{\mathbf {h}}_{k}\Vert _2^2 \ge 1-\epsilon \) and thus we get \(\Vert \hat{\mathbf {h}}\Vert _2=1\). This concludes the proof of Theorem 3. \(\square \)

3.4 Proof of Theorem 4

To simplify notation, given \(\mathbf {f}=\mathbf {h}\varvec{\varGamma }\), we set \(S(\mathbf {h})={{\mathcal {S}}}(\mathbf {f}\,|\,\varvec{\varGamma }) \) and we define

Finally we observe that if \(\mathbf {f}\in L^1_s({\mathcal {R}})\) then \(\mathbf {h}\in L^1_s({\mathcal {R}},\varvec{\varGamma })\) and \(e^{{\mathcal {L}}t}\mathbf {f}=(e^{\widetilde{{\mathcal {L}}}t}\mathbf {h})\varvec{\varGamma }\) with \(\widetilde{{\mathcal {L}}}={\mathcal {G}}+\tilde{\lambda }{\mathcal {K}}\) defined in Sect. 3.2 but now considered as an operator on \(L^1_s({\mathcal {R}},\varvec{\varGamma })\).

To obtain an explicit expression for \(\frac{d}{dt}S(\mathbf {h}(t))\), where \(\mathbf {h}(t)=e^{\widetilde{{\mathcal {L}}}t}\mathbf {h}\), we need to interchange the order of the derivative in t with the sum over N and the integral over \(\underline{v}_N\). To do this, we will use the following two Lemmas, which allow us to use Fatou’s Lemma to exchange the derivative with the sum and the integrals.

Lemma 17

Given \(\mathbf {f}\in L^1({\mathcal {R}})\) we have

for every N and almost every \(\underline{v}_N\).

Proof

See Appendix A.2. \(\square \)

Lemma 18

If \(\mathbf {h}\varvec{\varGamma }\in L^1_s({\mathcal {R}})\) then

Proof

See Appendix A.3. \(\square \)

After setting

we are ready to estimate the variation in time of \(S(\mathbf {h})\).

Lemma 19

Let \(\mathbf {h}\) be such that \(\mathbf {h}\varvec{\varGamma }\in D^1\) and \(\mathbf {h}\log \mathbf {h}\varvec{\varGamma }\in D^1\). Then we have

Proof

From Lemma 18 we get

Since \(\mathbf {h}\log \mathbf {h}\varvec{\varGamma }\in L^1({\mathcal {R}})\), conservation of probability gives

so that by Fatou’s Lemma

and, using Lemma 17, we get

Since \(\varvec{\varGamma } \mathbf {h}\in D^1\) and \(\varvec{\varGamma } \mathbf {h}\log \mathbf {h}\in D^1\), (45) gives

where we have used that \(\int d\underline{v}_N\gamma _N (K_Nh_N)\log h_N\le 0\). Observe finally that

from which we get

The claim follows by reindexing the first sum and using (53). \(\square \)

Thus to show that \(S(\mathbf {h}(t))\) decays exponentially we need a lower bound for \(\varPsi (\mathbf {h})\) in terms of \(S(\mathbf {h})\). This is the content of the following Lemma that is the main result of this section.

Lemma 20

If \(\varvec{\varGamma }\mathbf {h}\in L^1_s({\mathcal {R}})\) with \(S(\mathbf {h})<\infty \), then

Remark 21

The idea behind the proof of (75) is to think of the entry and exit processes defined by the thermostat as a continuous family of independent entry processes, one for each possible velocity v, with entry rates \(\mu \gamma (v)dv\), while each particle in the system leaves with rate \(\rho \) independent of its velocity. Clearly, such a description makes little mathematical sense and, as a first step, one may think of approximating the original process by restricting the velocity of each particle to assume only a finite number of values \(\bar{v}_k\), \(k=1,\ldots ,K\), characterized by suitable entry rates \(\omega _k\). After this, using convexity, we reduce the proof of (75) to the case with \(K=1\), essentially equivalent to the case in which all particles in the thermostat have the same velocity. In this situation, we further approximate the infinite reservoir by a large finite reservoir containing M particles that enter and leave the system, independently of each other, at a suitable rate. Convexity will allow us to reduce this situation to that of a single particle jumping from the system to the reservoir and back. The final step is thus Lemma 25 below, which deals with this situation. This argument is inspired by the proof of the Logarithmic Sobolev Inequality in [12].

Remark 22

In the proof of Lemma 19 we required that \(\mathbf {h}\varvec{\varGamma }\in D^1\) and \(\mathbf {h}\log \mathbf {h}\varvec{\varGamma }\in D^1\) only to differentiate \(e^{t\widetilde{{\mathcal {L}}}}\mathbf {h}\) and to show that \(\sum _{N=0}^\infty a_N\int (\widetilde{{\mathcal {L}}}\mathbf {h})_N \gamma _Nd\underline{v}_N=0\), and similarly for \(\mathbf {h}\log \mathbf {h}\). We believe it is possible to apply the strategy outlined in Remark 21, and developed in the proof below, directly to \(S(\mathbf {h})\), thanks to the representation of the evolution described in Remark 8. This would eliminate the need for these conditions on \(\mathbf {h}\), but it would make the proof below unnecessarily involved.

Proof of Lemma 20

A way to make the first step of the discussion in Remark 21 rigorous is to coarse grain, that is, to approximate each \(h_N\) by a simple function obtained by averaging it over the elements of a partition of \({\mathbb {R}}^N\) into rectangles, each of which is a Cartesian product of sets taken from a finite family of measurable subsets of \({\mathbb {R}}\).

More precisely, we call \({\mathcal {B}}=\{B_k\}_{k=1}^K\) a (measurable) partition of \({\mathbb {R}}^N\) if the \(B_k\subset {\mathbb {R}}^N\) are measurable and \(\bigcup _k B_k={\mathbb {R}}^N\) while \(B_k\cap B_{k'}=\emptyset \) if \(k\not =k'\). Given a measurable partition \({\mathcal {B}}\), let \(I_k(\underline{v}_N,\underline{w}_N)\) be the indicator function of \(B_k\times B_k\subset {\mathbb {R}}^{2N}\) and define the coarse graining kernel:

Clearly, for every \(\underline{w}_N\) we have

while \(C_{\mathcal {B}}(\underline{v}_N,\underline{w}_N)=C_{\mathcal {B}} (\underline{w}_N,\underline{v}_N)\). Given a function \(h_N\) in \(L^1({\mathbb {R}}^N)\), we can define its coarse grained version as

Observe that, if \(\underline{v}_N\in B_k\) then

This means that \(h_{N,{\mathcal {B}}}(\underline{v}_N)\) is a simple function that assumes only K possible values. Finally we have \(\int _{{\mathbb {R}}^N}h_{N,{\mathcal {B}}}(\underline{v}_N)\gamma (\underline{v}_N)d\underline{v}_N =\int _{{\mathbb {R}}^N}h_N(\underline{v}_N) \gamma (\underline{v}_N) d\underline{v}_N\).
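The coarse graining is a \(\gamma \)-weighted cell average, so its two key properties, conservation of \(\int h\gamma \) and decrease of \(\int h\log h\,\gamma \) (Jensen's inequality for the convex function \(x\log x\)), can be illustrated in one dimension. The sketch assumes \(\gamma \) is the standard Gaussian and replaces it by an empirical measure of samples; for that measure both properties hold exactly. The cut points and the test function are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)
v = rng.standard_normal(200_000)          # samples from gamma (standard Gaussian assumed)
h = np.exp(np.sin(3 * v) + 0.5 * v)       # an arbitrary positive test function

cuts = np.array([-1.5, -0.5, 0.0, 0.7, 2.0])  # K = 6 intervals B_1, ..., B_6
cell = np.digitize(v, cuts)                    # index of the interval containing each sample
K = len(cuts) + 1
counts = np.bincount(cell, minlength=K)
omega = counts / v.size                                   # omega_k = gamma(B_k)
cell_mean = np.bincount(cell, weights=h, minlength=K) / counts
h_cg = cell_mean[cell]                                    # simple function with K values

def entropy_like(f):
    """Empirical version of int f log f dgamma."""
    return np.mean(f * np.log(f))

print(np.mean(h), np.mean(h_cg), entropy_like(h), entropy_like(h_cg))
```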

Given measurable partitions \({\mathcal {B}}=\{B_k\}_{k=1}^K\) and \({\mathcal {B}}'=\{B'_j\}_{j=1}^J\) of \({\mathbb {R}}^N\) and \({\mathbb {R}}^M\) respectively, we can define the product partition \({\mathcal {B}}\times {\mathcal {B}}'=\{B_k\times B'_j\,|k=1,\ldots ,K\,\,\, j=1,\ldots ,J\}\) of \({\mathbb {R}}^{N+M}\). Observe that the coarse graining kernel of \({\mathcal {B}}\times {\mathcal {B}}'\) satisfies

Finally, given a partition \({\mathcal {B}}=\{B_k\}_{k=1}^K\) of \({\mathbb {R}}\), and \(\underline{k}=(k_1,\ldots ,k_N)\in \{1,\ldots ,K\}^N\) we consider the set \(B_{\underline{k}}=\times _i B_{k_i}\subset {\mathbb {R}}^N\). Clearly the \(B_{\underline{k}}\) form a measurable partition of \({\mathbb {R}}^N\) that we will denote as \({\mathcal {B}}^N\). As before, we can define the coarse graining kernel for \({\mathcal {B}}^N\) as

where \(\omega _{\underline{k}}=\prod _{i=1}^{N}\omega _{k_i}\) and \(I_{\underline{k}}(\underline{v}_N,\underline{w}_N)\) is the characteristic function of \(B_{\underline{k}}\times B_{\underline{k}}\subset {\mathbb {R}}^{2N}\). Moreover, the coarse grained version of \(h_N\in L^1({\mathbb {R}}^N,\gamma _N)\) is

Again, if \(\underline{v}_N\in B_{\underline{k}}\) we have

and \(h_{N,{\mathcal {B}}^N}(\underline{v}_N)\) assumes only the \(K^N\) possible values \(\bar{h}_{N,{\mathcal {B}}^N}(\underline{k})\). Observe finally that, since

we can write

Given a state \(\mathbf {h}\) and a partition \({\mathcal {B}}\) of \({\mathbb {R}}\), we define the coarse grained version \(\mathbf {h}_{{\mathcal {B}}}\) of \(\mathbf {h}\) over \({\mathcal {B}}\) by setting \(h_{{\mathcal {B}},N}=h_{N,{\mathcal {B}}^N}\). Since \(x\log (x)\) is convex in x and \((x-y)(\log (x)-\log (y))\) is jointly convex in x and y, for every partition \({\mathcal {B}}\) of \({\mathbb {R}}\), we get

where in the inequality for \(\varPsi \) we used (76). On the other hand, we have the following Lemma.

Lemma 23

Given \(\mathbf {h}\), for every \(\epsilon \) we can find a finite measurable partition \({\mathcal {B}}\) of \({\mathbb {R}}\) such that

Proof

See Appendix A.4. \(\square \)

We claim that, to prove Lemma 20, it is enough to show that, for every finite partition \({\mathcal {B}}\) of \({\mathbb {R}}\) and every state \(\mathbf {h}\), we have

To see this observe that Lemma 23, together with (77) and (78), implies that for every \(\epsilon \) we can find a partition \({\mathcal {B}}\) such that

Thus we consider a given finite partition \({\mathcal {B}}=\{B_k\}_{k=1}^K\) and a given state \(\mathbf {h}\). Since \(h_{{\mathcal {B}}, N}\) takes only finitely many values, we can transform the integrals defining \(E(\mathbf {h}_{{\mathcal {B}}})\), \(S(\mathbf {h}_{{\mathcal {B}}})\) and \(\varPsi (\mathbf {h}_{{\mathcal {B}}})\) into summations. To do this, given \(\underline{k}\in \{1,\ldots , K\}^N\), we define the occupation numbers \(\underline{n}(\underline{k})=(n_1(\underline{k}),\dots ,n_K(\underline{k}))\in {\mathbb {N}}^K\) as

That is, \(n_q(\underline{k})\) is the number of i such that \(k_i=q\). In other words, if \(\underline{v}_N\in B_{\underline{k}}\) then there are \(n_q(\underline{k})\) particles with velocity in \(B_q\).
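The reduction to occupation numbers rests on a Poisson colouring identity: if the number of particles is Poisson with mean \(\mu /\rho \) (our assumption for \(a_N\)) and each particle independently falls in cell \(B_q\) with probability \(\omega _q\), then \(\underline{n}\) has the law \(\prod _q\pi _{\alpha _q}(n_q)\) with \(\alpha _q=\mu \omega _q/\rho \), because \(\sum _q\omega _q=1\). The sketch checks this identity on a small grid of occupation numbers; the cell masses and the mean are arbitrary illustrative values.

```python
import math
from itertools import product

def coloured_weight(ns, omega, mean):
    """Probability that a Poisson(mean) number of particles, each independently in
    cell q with probability omega[q], produces the occupation numbers ns."""
    total = sum(ns)
    a_n = math.exp(-mean) * mean**total / math.factorial(total)
    multinom = math.factorial(total)
    for n in ns:
        multinom //= math.factorial(n)     # exact integer multinomial coefficient
    return a_n * multinom * math.prod(w**n for w, n in zip(omega, ns))

def product_poisson(ns, omega, mean):
    """prod_q pi_{alpha_q}(n_q) with alpha_q = mean * omega_q."""
    return math.prod(math.exp(-mean * w) * (mean * w)**n / math.factorial(n)
                     for w, n in zip(omega, ns))

omega = (0.2, 0.5, 0.3)        # cell masses omega_q, summing to 1
mean = 2.5                     # plays the role of mu / rho
worst = max(abs(coloured_weight(ns, omega, mean) / product_poisson(ns, omega, mean) - 1.0)
            for ns in product(range(6), repeat=3))
print("largest relative discrepancy:", worst)
```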

The fact that \(h_N\) is invariant under permutation of its arguments implies that \(\bar{h}_{N,{\mathcal {B}}^N}(\underline{k})\) depends only on \(\underline{n}(\underline{k})\) or, more precisely, if \(\underline{n}(\underline{k})=\underline{n}(\underline{k}')\) then \(\bar{h}_{N,{\mathcal {B}}^N}(\underline{k})=\bar{h}_{N,{\mathcal {B}}^N}(\underline{k}')\). This allows us to define the function \(F:{\mathbb {N}}^K\rightarrow {\mathbb {R}}\) given by

Using this definition and the fact that \(\sum _{k=1}^K\omega _k=1\), we can now write

where \(\underline{\alpha }_K=(\alpha _1,\ldots ,\alpha _K)\) with \(\alpha _k=\mu \omega _k/\rho \) and

that is \(\pi _{\alpha _k}\) is the Poisson distribution with expected value \(\alpha _k\). Similarly we have

Finally setting \(\underline{n}^{q}=(n_1,\ldots ,n_q+1,\ldots ,n_K)\) we get

so that, to prove (78), we need to show that, for every \(F:{\mathbb {N}}^K\rightarrow {\mathbb {R}}_+\) and for every K and \(\underline{\alpha }_K\in {\mathbb {R}}_+^K\), if \(\widetilde{S}_K(F)<\infty \) then

We will prove (82) by induction over K. Assume that (82) is valid for every index less than K for some \(K>1\) and write

and similar expressions for \(E_{\underline{\alpha }_{K-1}}(F(\cdot ,n_K))\) and \(\varPsi _{\underline{\alpha }_{K-1}}(F(\cdot ,n_K))\).

Using the inductive hypothesis we obtain

Calling \(F_1(n_K)=\widetilde{E}_{\underline{\alpha }_{K-1}} (F(\cdot ,n_K))\) and using the inductive hypothesis again we get

so that

Observing that \(\widetilde{E}_{\alpha _K}(F_1) =\widetilde{E}_{\underline{\alpha }_{K}}(F)\) and that, by convexity,

we get (82) for K. Thus, by induction, to prove (82) for every K we just need to prove it for \(K=1\). This is the content of the following Lemma.

Lemma 24

Let \(\pi _\alpha \) be the Poisson distribution on \({\mathbb {N}}\) with expected value \(\alpha >0\) and \(f:{\mathbb {N}}\rightarrow {\mathbb {R}}^+\) be such that

then we have

Proof

Observe first that since \(\alpha \pi _\alpha (n)=(n+1)\pi _\alpha (n+1)\) we get

Let now \(\pi _{\alpha ,N}(n)\) be the binomial distribution with parameters N and \(\alpha /N\), that is

We will prove by induction that for every N and every \(\alpha \le N\) we have

so that, taking the limit \(N\rightarrow \infty \), we will obtain (84). The base case \(N=1\) is covered by the following Lemma.
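The two ingredients just introduced, the recursion \(\alpha \pi _\alpha (n)=(n+1)\pi _\alpha (n+1)\) and the binomial distribution \(\pi _{\alpha ,N}\) with parameters N and \(\alpha /N\), can be checked numerically. The binomial analogue of the recursion, \(\alpha (1-n/N)\pi _{\alpha ,N}(n)=(n+1)(1-\alpha /N)\pi _{\alpha ,N}(n+1)\), is included only for comparison and is not claimed to appear in the proof; the values of \(\alpha \) and N are arbitrary.

```python
import math

def poisson_pmf(n, alpha):
    """Poisson distribution pi_alpha(n) with mean alpha."""
    return math.exp(-alpha) * alpha**n / math.factorial(n)

def binomial_pmf(n, N, alpha):
    """pi_{alpha,N}(n): binomial with N trials and success probability alpha / N."""
    if not 0 <= n <= N:
        return 0.0
    p = alpha / N
    return math.comb(N, n) * p**n * (1 - p) ** (N - n)

alpha, N = 2.5, 12

# Poisson recursion: alpha * pi(n) == (n + 1) * pi(n + 1)
poisson_ok = all(
    math.isclose(alpha * poisson_pmf(n, alpha), (n + 1) * poisson_pmf(n + 1, alpha))
    for n in range(40))

# Binomial analogue (illustration only)
binomial_ok = all(
    math.isclose(alpha * (1 - n / N) * binomial_pmf(n, N, alpha),
                 (n + 1) * (1 - alpha / N) * binomial_pmf(n + 1, N, alpha),
                 abs_tol=1e-15)
    for n in range(N + 1))

print(poisson_ok, binomial_ok)
```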

Lemma 25

Let \(\mu _x\ge 0\), \(x\in \{0,1\}\), be such that \(\mu _0+\mu _1=1\). Then for every function \(f:\{0,1\}\rightarrow {\mathbb {R}}^+\) we have

Proof

Calling \(h(0)=f(0)/(\mu _0f(0)+\mu _1 f(1))\) and \(h(1)=f(1)/(\mu _0f(0)+\mu _1 f(1))\), (86) becomes

Since \(\mu _0 h(0)+\mu _1 h(1)=1\) we can write \(h(0)=1+\delta \mu _1\) and \(h(1)=1-\delta \mu _0\) and we get

where we have used concavity of the logarithm. \(\square \)
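The substitution in the proof of Lemma 25 is forced by the normalization: once \(\mu _0h(0)+\mu _1h(1)=1\), the single parameter \(\delta =h(0)-h(1)\) determines both values, \(h(0)=1+\delta \mu _1\) and \(h(1)=1-\delta \mu _0\). The sketch below, with hypothetical helper names, verifies this on random positive f and \(\mu _0\in (0,1)\).

```python
import random

def lemma25_parametrization(f0, f1, mu0):
    """Return (h0, h1, delta) with h = f / (mu0 f0 + mu1 f1) and delta = h0 - h1."""
    mu1 = 1.0 - mu0
    mean = mu0 * f0 + mu1 * f1
    h0, h1 = f0 / mean, f1 / mean
    return h0, h1, h0 - h1

def check(f0, f1, mu0, tol=1e-10):
    mu1 = 1.0 - mu0
    h0, h1, delta = lemma25_parametrization(f0, f1, mu0)
    return (abs(mu0 * h0 + mu1 * h1 - 1.0) < tol        # normalization
            and abs(h0 - (1.0 + delta * mu1)) < tol     # h(0) = 1 + delta mu_1
            and abs(h1 - (1.0 - delta * mu0)) < tol)    # h(1) = 1 - delta mu_0

rng = random.Random(0)
cases = [(rng.uniform(0.01, 5.0), rng.uniform(0.01, 5.0), rng.uniform(0.01, 0.99))
         for _ in range(1000)]
print(all(check(*c) for c in cases))
```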

Assume now that (85) holds for every index less than N. Given \(\alpha \le N\), set \(\beta =(N-1)\alpha /N\), so that \(\beta \le N-1\). Define also \(\mu _0=1-\alpha /N\), \(\mu _1=\alpha /N\), and observe that, for every \(J:{\mathbb {N}}\rightarrow {\mathbb {R}}\),

Calling

and using (87) and the inductive hypothesis for index \(N-1\), we get

while applying Lemma 25 to the first term in the second line gives

Finally, using the joint convexity in (x, y) of the function \((x-y)(\log x - \log y)\) and the fact that \(\mu _0<1\), we can write

which, inserted in (88), gives

Changing summation variables from (x, n) to \((x,n+x)\) and using (87) we obtain (85) for index N. Thus (85) is valid for every \(N\ge 1\) and every \(\alpha \le N\).
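Two facts drive the inductive step. The first is the decomposition of a binomial variable with parameters N and \(\alpha /N\) into one with parameters \(N-1\) and \(\beta /(N-1)=\alpha /N\) plus an independent Bernoulli variable with law \((\mu _0,\mu _1)\); we take this to be the content of (87), which is our reading since the display is not reproduced here. The second is the joint convexity of \((x-y)(\log x-\log y)\) on \((0,\infty )^2\). The sketch checks the first exactly for an arbitrary test function J and the second by sampling midpoints; J and all numerical values are hypothetical.

```python
import math
import random

def binomial_pmf(n, N, alpha):
    """pi_{alpha,N}(n): binomial with N trials and success probability alpha / N."""
    if N == 0:
        return 1.0 if n == 0 else 0.0     # degenerate law at 0
    if not 0 <= n <= N:
        return 0.0
    p = alpha / N
    return math.comb(N, n) * p**n * (1 - p) ** (N - n)

def expect_N(J, N, alpha):
    # sum_n J(n) pi_{alpha,N}(n)
    return sum(J(n) * binomial_pmf(n, N, alpha) for n in range(N + 1))

def expect_split(J, N, alpha):
    # sum_x mu_x sum_n J(n + x) pi_{beta,N-1}(n), with beta = (N - 1) alpha / N
    beta = (N - 1) * alpha / N
    mu = (1.0 - alpha / N, alpha / N)
    return sum(mu[x] * J(n + x) * binomial_pmf(n, N - 1, beta)
               for x in (0, 1) for n in range(N))

def phi(x, y):
    # (x - y)(log x - log y)
    return (x - y) * (math.log(x) - math.log(y))

def midpoint_convex(samples=2000, seed=1, tol=1e-9):
    rng = random.Random(seed)
    for _ in range(samples):
        x1, y1, x2, y2 = (rng.uniform(0.01, 10.0) for _ in range(4))
        if phi((x1 + x2) / 2, (y1 + y2) / 2) > (phi(x1, y1) + phi(x2, y2)) / 2 + tol:
            return False
    return True

J = lambda n: math.sqrt(n + 1.0) * (-1) ** n   # arbitrary test function
print(expect_N(J, 7, 2.0), expect_split(J, 7, 2.0), midpoint_convex())
```

Since \(\phi \) is positively homogeneous of degree one, midpoint convexity is tight along rays \(x/y=\) const, which is why a small tolerance is used.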

To complete the proof of Lemma 24 we need to show that we can take the limit for \(N\rightarrow \infty \) in (85). To this end observe that given \(\alpha \), for N large enough we have \(0<\left( 1-\frac{\alpha }{N}\right) ^{N}\le 2e^{-\alpha }\). Thus for large N and \(\alpha <n\le N\) we get

Using Dominated Convergence, (89) implies that, if f(n) is bounded below and \(\sum _{n=0}^\infty f(n)\pi _{\alpha }(n)<\infty \), then

We can now let \(N\rightarrow \infty \) in (85) to obtain (84). This concludes the proof of Lemma 24. \(\square \)
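The passage to the limit rests on the convergence of \(\pi _{\alpha ,N}\) to \(\pi _\alpha \) and on the elementary bound \((1-\alpha /N)^N\le e^{-\alpha }\) for \(N\ge \alpha \), which is stronger than the factor \(2e^{-\alpha }\) used above. As a quantitative illustration we add Le Cam's classical estimate, which gives a total variation distance of at most \(\alpha ^2/N\); this estimate is not used in the proof. Probabilities are computed in log space to avoid overflow for large N.

```python
import math

def log_poisson(n, a):
    return -a + n * math.log(a) - math.lgamma(n + 1)

def log_binomial(n, N, a):
    # Binomial(N, a/N), requires N > a
    p = a / N
    return (math.lgamma(N + 1) - math.lgamma(n + 1) - math.lgamma(N - n + 1)
            + n * math.log(p) + (N - n) * math.log1p(-p))

def tv_distance(N, a, n_max=200):
    """Total variation distance between Binomial(N, a/N) and Poisson(a), truncated at n_max."""
    total = 0.0
    for n in range(n_max + 1):
        b = math.exp(log_binomial(n, N, a)) if n <= N else 0.0
        total += abs(b - math.exp(log_poisson(n, a)))
    return total / 2

alpha = 3.0
Ns = (10, 100, 1000)
tvs = [tv_distance(N, alpha) for N in Ns]
for N, tv in zip(Ns, tvs):
    print(N, tv, alpha**2 / N)   # distance vs Le Cam bound
```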

To sum up, the validity of (84) together with the inductive argument in (83) shows that (82) is valid for every K and \(\alpha _k\), \(k=1,\ldots , K\). This in turn, together with (79), (80) and (81), establishes the validity of (78) for every state \(\mathbf {h}\) and every partition \({\mathcal {B}}\) of \({\mathbb {R}}\). This, together with Lemma 24, completes the proof of Lemma 20. \(\square \)

Observe now that if \(\mathbf {f}\) is a probability distribution then \(E(\mathbf {h})=1\), so that Lemma 20, together with Lemma 19, gives

To complete the proof of Theorem 4 we have to show that (90) implies (15). To this end, take \(\rho '<\rho \), assume that there exists t such that \(S(\mathbf {h}(t))> e^{-\rho ' t} S(\mathbf {h}(0))\) and let

By continuity we get \(S(\mathbf {h}(T))= e^{-\rho ' T} S(\mathbf {h}(0))\). From (90), for every \(\epsilon \) we can find \(\delta \) such that

for every \(h\le \delta \). Choosing \(\epsilon =(\rho -\rho ')e^{-T\rho '}S(\mathbf {h}(0))\) we get

which implies that \(S(\mathbf {h}(t))\le e^{-t\rho '}S(\mathbf {h}(0))\) for every \(t\ge 0\) and every \(\rho '<\rho \). \(\square \)
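The argument above is a Gronwall-type step: a differential inequality of the form \(\frac{d}{dt}S\le -\rho S\) forces \(S(t)\le e^{-\rho t}S(0)\). The sketch below illustrates this with an explicit Euler scheme for \(S'=-(\rho +c(t))S\), where \(c(t)\ge 0\) is a hypothetical extra dissipation rate. Reading (90) as such a differential inequality is our assumption; the discrete bound holds because \(1-x\le e^{-x}\).

```python
import math

def simulate_decay(S0, rho, extra, dt, n_steps):
    """Explicit Euler for S' = -(rho + extra(t)) S with a hypothetical extra(t) >= 0."""
    S = [S0]
    for k in range(n_steps):
        t = k * dt
        S.append(S[-1] * (1.0 - dt * (rho + extra(t))))   # stays positive if dt*(rho+extra) < 1
    return S

rho, dt, n_steps = 0.8, 1e-3, 10_000
extra = lambda t: 0.5 * (1.0 + math.sin(3.0 * t))   # any nonnegative rate
S = simulate_decay(2.0, rho, extra, dt, n_steps)

# Compare with the exponential envelope e^{-rho t} S(0)
print(S[-1], S[0] * math.exp(-rho * n_steps * dt))
```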

3.5 Derivation of (17)

To prove (17), we observe that \(\eta (t)\) and g(v, t) in (18) satisfy the equations

Setting \(\mathbf {f}(t)=(f_0(t),f_1(t),f_2(t),\ldots )\) with

we get

Thus \(\mathbf {f}(t)\) solves (1) with \(\tilde{\lambda }=0\). Clearly \(\mathbf {f}(t)\in D^1\) for every \(t\ge 0\) so that, by Remark 7, \(\mathbf {f}(t)=e^{t{\mathcal {T}}}\mathbf {f}(0)\).

3.6 Proof of Theorem 5

Given a continuous and bounded test function \(\phi _k:{\mathbb {R}}^k\rightarrow {\mathbb {R}}\), symmetric with respect to the permutation of its variables, we define

What we need to show is that, if \(\mathbf {f}_n\) forms a chaotic sequence and \(\phi :{\mathbb {R}}\rightarrow {\mathbb {R}}\) is a test function then

which implies propagation of chaos.

The argument to prove propagation of chaos introduced in [16] is based on the power series expansion of \(e^{\lambda K_N t}\), which converges since \(K_N\) is a bounded operator. After this, one can exploit a cancellation between \(Q_N\) and \(\left( {\begin{array}{c}N\\ 2\end{array}}\right) \mathrm {Id}\), see (5), when they act on a function \(\phi _k\) depending only on \(k<N\) variables, see Sect. 3 of [16]. In the present case the analogue of such an argument formally works, but it cannot be applied directly: since \({\mathcal {K}}\) is unbounded, the power series expansion of \(e^{\tilde{\lambda }_n {\mathcal {K}}t}\) does not converge. To avoid this problem, one may try to use the convergent expansion (27) introduced in Sect. 3.1. However, the different treatment of \(Q_N\) and \(\left( {\begin{array}{c}N\\ 2\end{array}}\right) \mathrm {Id}\) in (27) would make the needed cancellation very hard to see.

Thus we will introduce a partial expansion of \(e^{\tilde{\lambda }_n {\mathcal {K}}t}\) and combine it with (33) and (40). The idea is to expand this exponential as little as possible while still exploiting the central cancellations of McKean’s argument. We first decompose \(K_N\) as
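For a bounded operator A the remainder of the exponential series after M terms is at most \(\sum _{m>M}(t\Vert A\Vert )^m/m!\), which is what makes McKean's expansion legitimate for \(K_N\). The toy sketch below uses a finite matrix in place of \(K_N\): the generator of plane rotations, for which \(\Vert A\Vert =1\) and \(e^{tA}\) is the rotation by angle t, so the exact answer is known. It illustrates only the bounded case; no analogous bound is available for the unbounded \({\mathcal {K}}\).

```python
import math

def matmul(A, B):
    # Plain-Python matrix product
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def exp_series(A, t, M):
    """Partial sum sum_{m=0}^{M} (tA)^m / m! of the exponential series."""
    n = len(A)
    term = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]   # identity
    total = [row[:] for row in term]
    for m in range(1, M + 1):
        term = [[x * t / m for x in row] for row in matmul(term, A)]      # (tA)^m / m!
        total = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(total, term)]
    return total

def tail_bound(norm_A, t, M, n_terms=100):
    # Remainder bound sum_{m > M} (t ||A||)^m / m!
    return sum((t * norm_A) ** m / math.factorial(m) for m in range(M + 1, M + 1 + n_terms))

def max_entry_error(X, Y):
    return max(abs(x - y) for rx, ry in zip(X, Y) for x, y in zip(rx, ry))

A = [[0.0, -1.0], [1.0, 0.0]]          # rotation generator, ||A|| = 1
t = 3.0
exact = [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]
errors = {M: max_entry_error(exp_series(A, t, M), exact) for M in (5, 10, 20, 40)}
print(errors)
```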

with

and obtain

where we used that \(K_N\) is a bounded operator on \(C^0({\mathbb {R}}^N)\) and that \(\widetilde{K}_{N-k}\phi _k=0\). Since we are interested in integrating (91) against a symmetric function \(f_N\) we can write

To iterate we need to apply (91) to the factor \(e^{\tilde{\lambda }_n K_N(t-s)}\) inside the integral in (91) itself. Since \(G_ke^{\tilde{\lambda }_n K_k s}\phi _k\) is a function of \(k+1\) variables we now have to write

Iterating this procedure we get

so that

where the factor \(\lambda ^p\) in the second line of (92) comes from (25) and (19).

Observe now that the \(R_{i,j}\) are averaging operators, so that \(\Vert R_{i,j}\Vert _\infty \le 1\), which gives
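The bound \(\Vert R_{i,j}\Vert _\infty \le 1\) invoked in the next step holds for any operator that replaces \(\phi (\underline{v})\) by an average of \(\phi \) over transformed arguments. The sketch assumes the standard Kac collision average, in which the pair \((v_i,v_j)\) is rotated by a uniform angle \(\theta \in [0,2\pi )\); this specific form of \(R_{i,j}\) and the test function are assumptions for illustration. A midpoint quadrature is itself an average, so the bound holds exactly for the discretized operator too.

```python
import math
import random

def R_ij(phi, v, i, j, n_theta=256):
    """Hypothetical Kac-type averaging: mean of phi over rotations of (v_i, v_j)."""
    total = 0.0
    for m in range(n_theta):
        theta = 2 * math.pi * (m + 0.5) / n_theta     # midpoint rule on [0, 2 pi)
        w = list(v)
        w[i] = v[i] * math.cos(theta) + v[j] * math.sin(theta)
        w[j] = -v[i] * math.sin(theta) + v[j] * math.cos(theta)
        total += phi(w)
    return total / n_theta

# Test function with sup norm at most 1
phi = lambda w: math.cos(w[0]) * math.exp(-w[1] ** 2) * math.sin(w[2] + 1.0)

rng = random.Random(2)
points = [[rng.uniform(-4.0, 4.0) for _ in range(3)] for _ in range(200)]
print(max(abs(R_ij(phi, v, 0, 1)) for v in points))
```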

For the same reason we have

Using (21) we get

Observe that the series in the last line converges for \(\lambda K t<1/2\). On the other hand, since \(\lim _{n\rightarrow \infty }\tilde{\lambda }_n=0\), for every t we have

and similarly, calling \(G_k^{*p}=\prod _{i=0}^p G_{k+i}\),

so that we finally get

Observe now that \(G_k\) acts as a derivation in the sense of [16], that is, for every \(\phi _{k_1}\) and \(\psi _{k_2}\) with \(k_1+k_2=k\), we have

This implies that

Observing that if \(\mathbf {f}_n\) forms a chaotic sequence then

we get

which implies that \(e^{\tilde{\lambda }_n {\mathcal {K}}t}\) propagates chaos, at least for \(t\le t_0=\frac{1}{2\lambda K}\). Finally, we need to verify that (21) still holds at later times. Since the \(\mathbf {f}_n\) are positive, \(\Vert \mathbf {f}_n\Vert _1^{(r)}=N_r(\mathbf {f})\), see (43). Thus Corollary 9 implies that for every \(t\ge 0\) we have \(\Vert \mathbf {f}_n(t)\Vert ^{(r)}\le K_1^r\left( \frac{\mu _n}{\rho }\right) ^r\) with \(K_1=\max \{K,1\}\). Thus \(\mathbf {f}_n(t_0)=e^{\tilde{\lambda }_n {\mathcal {K}}t_0}\mathbf {f}_n\) forms a chaotic sequence that satisfies (21) with \(K_1\) in place of K. Using \(\mathbf {f}_n(t_0)\) as initial condition, we get that propagation of chaos holds up to time \(t_1=\frac{1}{2\lambda K}+\frac{1}{2\lambda K_1}\). Iterating this argument, and noting that every further step has the same length \(\frac{1}{2\lambda K_1}\), we see that \(e^{\tilde{\lambda }_n {\mathcal {K}}t}\) propagates chaos for every \(t\ge 0\).
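The iteration covers all times because the step length does not shrink: after the first step of length \(\frac{1}{2\lambda K}\), each restart uses the uniform bound with \(K_1\), so every further step has length \(\frac{1}{2\lambda K_1}\). The small sketch below, with hypothetical helper names and parameter values, counts how many restarts are needed to reach a given time T.

```python
import math

def restart_times(lam, K, n_steps):
    """Times t_0, t_1, ... up to which propagation of chaos is obtained."""
    K1 = max(K, 1.0)
    t = 1.0 / (2 * lam * K)            # first step uses K
    times = [t]
    for _ in range(n_steps - 1):
        t += 1.0 / (2 * lam * K1)       # later steps use K1 = max(K, 1)
        times.append(t)
    return times

def steps_needed(lam, K, T):
    """Smallest number of steps whose final time reaches T."""
    K1 = max(K, 1.0)
    first = 1.0 / (2 * lam * K)
    if T <= first:
        return 1
    return 1 + math.ceil((T - first) * 2 * lam * K1)

lam, K = 0.5, 3.0
print(steps_needed(lam, K, 10.0), restart_times(lam, K, 3))
```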

To add the out operator \({\mathcal {O}}\), we observe that from (93) we get

Inserting (97) into (33), after some long algebra that we report in Appendix A.5, we obtain

It is not hard to see that (98) implies that \(e^{(\tilde{\lambda }_n {\mathcal {K}}+\rho ({\mathcal {O}}-{\mathcal {N}}))t}\) propagates chaos.

Finally we consider the in operator \({\mathcal {I}}\). Observe that

so that

where

which clearly act as derivations in the sense of [16]. We can now use an expansion similar to (34)

which, combined with (99) and (98), gives

where \(|p|_{i}=\sum _{j=0}^{i-1} p_j\) and \(t_0=t\), \(t_{q+1}=0\) and the order of the \(t_i\) in the integral is inverted due to the inversion of the order of the operators when taking the adjoint. From (101) it follows, after more long algebra reported in Appendix A.5, that, if \(k_1+k_2=k\), then

that is, \(e^{{\mathcal {L}}_n t}\) propagates chaos. The validity of the Boltzmann-Kac type equation (26) follows exactly as in [16]. \(\square \)

4 Conclusions

The central aim of this work is the extension of the analysis in [4], in which a thermostat idealizes the interaction with a large reservoir of particles kept at constant temperature and chemical potential. While in [4] the reservoir and the system could not exchange particles, here the main interaction is the continuous exchange of particles between the two.

However, the same line of work that we set out to extend also points to possible extensions of the present results. In the case of the standard Kac model, approach to equilibrium in the sense of the GTW metric \(d_2\) was shown in [18], while for a Kac system interacting with one or more Maxwellian thermostats it was shown in [8]. In the present situation, though, it is not clear how to define an analogue of the GTW metric, since the components \(f_N\) of a state \(\mathbf {f}\) are not, in general, probability distributions on \({\mathbb {R}}^N\).

Furthermore, in [3] the authors show that, in a strong and uniform sense, the evolution of the Kac system with a Maxwellian thermostat can be thought of as an idealization of the interaction with a large heat reservoir, itself described as a Kac system. We think it is possible to replicate such an analysis in the present context and hope to come back to this issue in a forthcoming paper.

We based our proof of propagation of chaos on the work in [16]; therefore, as in [16], it is neither quantitative nor uniform in time. Recently, a quantitative and uniform in time result was obtained for the Kac system with a Maxwellian thermostat [6]. It is unclear to us whether the methods of that work extend to the present model.

Finally, the assumption that the rates \(\rho \) and \(\mu \) are independent of the number of particles is clearly unrealistic, since it allows an unbounded number of particles in the system. However, in the steady state (and in a chaotic state) the probability of having a number of particles much larger than the average is extremely small, so we do not consider this a serious problem. In any case, it would be interesting to investigate what happens if one imposes a maximum number of particles in the system.
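To make "extremely small" concrete, assume that in the steady state the number of particles is Poisson distributed with mean \(m=\mu /\rho \), consistent with the product of Poisson laws with means \(\alpha _k=\mu \omega _k/\rho \) in Sect. 3.4. The Chernoff bound \(P(N\ge k)\le e^{k-m-k\log (k/m)}\) for \(k>m\) then decays exponentially in m at any fixed multiple \(k=cm\), \(c>1\). The mean used below is hypothetical.

```python
import math

def poisson_tail(m, k, n_terms=5000):
    """P(N >= k) for N ~ Poisson(m), summed in log space to avoid overflow."""
    return sum(math.exp(-m + n * math.log(m) - math.lgamma(n + 1))
               for n in range(k, k + n_terms))

def chernoff_bound(m, k):
    """Chernoff bound P(N >= k) <= exp(k - m - k log(k / m)), valid for k > m."""
    return math.exp(k - m - k * math.log(k / m))

m = 20.0   # hypothetical mean number of particles mu / rho
for c in (1.5, 2.0, 3.0):
    k = math.ceil(c * m)
    print(c, poisson_tail(m, k), chernoff_bound(m, k))
```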

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

Notes

Observe that for our purpose it is enough to work with a partition \(mod\, 0\), that is, a family of sets \(B_k\) such that \(m({\mathbb {R}}\backslash \bigcup _k B_k)=0\) and \(m(B_k\cap B_{k'})=0\) for \(k\not = k'\). For this reason, the boundaries of the rectangles defined in this proof are irrelevant.

The partition \({\mathcal {D}}\) can be constructed by taking intersections of the \(C_{\underline{k}}\) and their complements.

References

Benfatto, G., Gallavotti, G.: Perturbation theory of the Fermi surface in a quantum liquid. A general quasiparticle formalism and one-dimensional systems. J. Stat. Phys. 59, 541–664 (1990)

Bonetto, F., Geisinger, A., Loss, M., Ried, T.: Entropy decay for the Kac evolution. Commun. Math. Phys. 363(3), 847–875 (2018). https://doi.org/10.1007/s00220-018-3263-0

Bonetto, F., Loss, M., Tossounian, H., Vaidyanathan, R.: Uniform approximation of a Maxwellian thermostat by finite reservoirs. Commun. Math. Phys. 351(1), 311–339 (2017). https://doi.org/10.1007/s00220-016-2803-8

Bonetto, F., Loss, M., Vaidyanathan, R.: The Kac model coupled to a thermostat. J. Stat. Phys. 156(4), 647–667 (2014). https://doi.org/10.1007/s10955-014-0999-6

Carlen, E., Carvalho, M.C., Loss, M.: Many-body aspects of approach to equilibrium. In: Journées “Équations aux Dérivées Partielles” (La Chapelle sur Erdre, 2000), pp. Exp. No. XI, 12. Univ. Nantes, Nantes (2000)

Cortez, R., Tossounian, H.: Uniform propagation of chaos for the thermostated Kac model. J. Stat. Phys. 183, 28 (2021)

Einav, A.: On Villani’s conjecture concerning entropy production for the Kac master equation. Kinet. Relat. Models 4(2), 479–497 (2011). https://doi.org/10.3934/krm.2011.4.479

Evans, J.: Non-equilibrium steady states in Kac’s model coupled to a thermostat. J. Stat. Phys. 164, 1103–1121 (2016)

Folland, G.B.: Real Analysis: Modern Techniques and Their Applications. Wiley, New York (1999)

Golub, G.H., Van Loan, C.F.: Matrix Computations. Johns Hopkins University Press, Baltimore (1996)

Grad, H.: On the kinetic theory of rarefied gases. Commun. Pure Appl. Math. 2, 331–407 (1949)

Gross, L.: Logarithmic Sobolev inequalities. Am. J. Math. 97(4), 1061–1083 (1975)

Janvresse, E.: Spectral gap for Kac’s model of Boltzmann equation. Ann. Probab. 29(1), 288–304 (2001). https://doi.org/10.1214/aop/1008956330

Kac, M.: Foundations of kinetic theory. In: Proceedings of the Third Berkeley Symposium on Mathematical Statistics and Probability, 1954–1955, vol. III, pp. 171–197. University of California Press, Berkeley and Los Angeles (1956)

Kac, M.: Probability and related topics in physical sciences, with special lectures by G. E. Uhlenbeck, A. R. Hibbs, and B. van der Pol. Lectures in Applied Mathematics. In: Proceedings of the Summer Seminar, Boulder, Colo., 1957, vol. I. Interscience Publishers, London (1959)

McKean, H.P., Jr.: Speed of approach to equilibrium for Kac’s caricature of a Maxwellian gas. Arch. Ration. Mech. Anal. 21, 343–367 (1966)

Schweber, S.: An Introduction to Relativistic Quantum Field Theory. Dover Publications, Mineola (2011)

Tossounian, H.: Equilibration in the Kac model using the GTW metric d2. J. Stat. Phys. 169, 168–186 (2017)