Abstract

The paper treats an agent-based model with averaging dynamics to which we refer as the K-averaging model. Broadly speaking, our model can be added to the growing list of dynamics exhibiting self-organization such as the well-known Vicsek-type models (Aldana et al. in: Phys Rev Lett 98(9):095702, 2007; Aldana and Huepe in: J Stat Phys 112(1–2):135–153, 2003; Pimentel in: Phys. Rev. E 77(6):061138, 2008). In the K-averaging model, each of the N particles updates their position by averaging over K randomly selected particles with additional noise. To make the K-averaging dynamics more tractable, we first establish a propagation of chaos type result in the limit of infinite particle number (i.e. \(N \rightarrow \infty \)) using a martingale technique. Then, we prove the convergence of the limit equation toward a suitable Gaussian distribution in the sense of Wasserstein distance as well as relative entropy. We provide additional numerical simulations to illustrate both results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The collective behavior of various particle systems is a subject of intensive research that has potential applications in biology, physics, economics, and engineering [7, 15, 28]. Different models are proposed to study the emergence of flocking of birds, formation of consensus in opinion dynamics, and phase transitions in network models [5, 14, 27, 33]. Broadly speaking, all of the aforementioned models are instances of interacting particle systems, under various interaction rules among the particles. We refer the readers to [23] for a general introduction to this branch of applied mathematics.

In this work, we investigate a simple model to describe the collective alignment of a group of particles. The model we examine here can be classified in general as an averaging dynamics and will be referred to as the K-averaging model. The readers are encouraged to consult [9, 10, 12, 13] for a variety of models in biology and physics in which averaging plays a key role in the model definition. One important inspiration for the present work is a paper of Maurizio Porfiri and Gil Ariel [33], which can be thought as a K-averaging model on the unit circle. In the K-averaging model considered in this manuscript, at each time step, we update the position of each particle (viewed as an element of \({\mathbb {R}}^d\)) according to the average position of its K randomly chosen neighbors while being simultaneously subjected to additive noise [see Eq. (1)]. Thus, we give the following definition.

Definition 1

(K-averaging model) Consider a collection of stochastic processes \(\{X^n_i\}_{1\le i\le N}\) evolving on \({\mathbb {R}}^d\), where n is the index for time. At each time step, each particle updates its value to the average of K randomly selected neighbors, subject to an independent noise term:

where \(S^n_i(j)\) are indices taken randomly from the set \(\{1,2,\ldots ,N\}\) (i.e., \(S^n_i(j) \sim \mathrm {Uniform}(\{1,2,\ldots ,N\})\) and is independent of i, j and n), and \(W^n_i \sim \mathcal {N}(\varvec{0},\sigma ^2\mathbb {1}_d)\) is independent of i and n, in which \(\varvec{0}\) and \(\mathbb {1}_d\) stands for the zero vector and the identity matrix in dimension d, respectively (see Fig. 1 for a illustration).

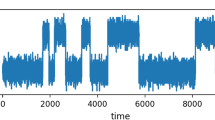

We illustrate the dynamics in Fig. 1. The key question of interest is the exploration of the limiting particle distribution as the total number of particles and the number of time steps become large. We illustrate numerically (see Fig. 2) the evolution of the dynamics in dimension \(d = 1\) using \(N = 5000\) particles after \(n=1000\) time steps.

Sketch illustration of the K-averaging dynamics (1). At each time step, a particle updates its position taking the averaging of K randomly selected particles and add some (Gaussian) noise

Simulation of the K-averaging dynamics in dimension \(d = 1\) with \(K = 5\) and \(N = 5000\) particles after 1000 time steps, in which we used \(\sigma = 0.1\) and initially each \(X_i \sim \mathrm {Uniform}(-1,1)\). As to be shown later, the distribution of particles will be asymptotically Gaussian under the large N and large time limits

One of the main difficulty in the rigorous mathematical treatment of models involving large number of interacting particles or agents lies in the general fact that interaction will build up correlation over time. Fortunately the framework of kinetic theories allows possible simplification of the analysis of certain such models via suitable asymptotic analysis, see for instance [20, 21, 25, 26, 29, 35]. For the model at hand, our main contribution is two fold: we first prove a result of propagation-of-chaos type under the large N limit (see Theorem 1 for a precise statement), in which interactions among particles are eliminated in finite time and a mean-field dynamics emerges. After the large population limit is carried out and the simplified dynamics [see Eq. (6)] is obtained, we then show that the law of the limiting dynamics defined by (6) is asymptotically Gaussian under the large time limit, and such convergence of distribution occurs both in the Waasserstein distance (see Theorem 2) and in the sense of relative entropy (see Theorem 3). A schematic illustration of the strategy used in this manuscript is presented in Fig. 3.

Schematic illustration of the limiting procedure carried out for the study the K-averaging dynamics (1). The empirical measure \(\rho ^n_{\mathrm {emp}}(\varvec{x})\) of the system [see Eq. (16)] will be shown to converge as \(N \rightarrow \infty \) to its limit law  described by the evolution equation (11), and then the relaxation of

described by the evolution equation (11), and then the relaxation of  to its Gaussian equilibrium will be established

to its Gaussian equilibrium will be established

We briefly explain the possible motivation for study such a model (at least in dimension \(d=1\)). In the context of a opinion dynamics model (see for instance [6]), \(X^n_i\) may represent an evolving opinion of agent i at time step n. For a given event, agent i has a opinion \(X_i\) (which can be positive or negative) with strength \(|X_i|\), and agents update their opinions based on the Eq. (1).

There remain some open questions related to our current work. First, our analysis of the model is restricted to \(K\ge 2\), under which we are able to identify the equilibrium distribution and prove various results, we speculate that the propagation of chaos property will be lost if \(K=1\), yet we have not been able to find a perfect analytical justification. We also remark here that the case of \(K = 1\) can be seen as a variant of the "Choose the Leader" (CL) dynamics introduced in [13], in which each of the N particles decides to jump to the location of the other particle chosen independently and uniformly at random at every time step, though noise is injected in such a jump. Second, we think similar results can be obtained if the noise is no longer Gaussian, except that the equilibrium will not be explicit in general.

The remainder of the paper is organized as follows: In Sect. 2.1, we present several preliminaries related to random probability measures and the concept of propagation of chaos. Sections 2.2 and 2.3 are concerned with the intuitive derivation of the simplified model (6) and related properties. We give a full proof of the propagation of chaos result in Sect. 3 and the large time asymptotic of (6) is investigated in 4. We devote Sect. 5 to the continuous-time counterpart of the K-averaging model studied in previous sections, and finish the paper with a conclusion in Sect. 6.

2 K-Averaging Model

In Sect. 2.1, we perform a brief review on convergence of random probability measures and the notion of propagation of chaos. Section 2.2 encapsulated a heuristic argument for the large N limit, and we prove a Lipschitz continuity property of the key operator T arising naturally from the K-averaging dynamics in Sect. 2.3, which will be leveraged in the proof of Theorem 1.

2.1 Review Propagation of Chaos and Convergence of Random (Probability) Measures

We devote this section to a quick review on propagation of chaos and convergence of random probability measures. First, we intend to briefly discuss propagation of chaos, but we need to carefully define what propagation of chaos means. With this aim, we consider a (stochastic) N-particle system denoted by \((X_1,\ldots ,X_N)\) in which particles are indistinguishable. In other words, the particle system enjoys a property known as permutation invariance, i.e. for any test function \(\varphi \) and permutation \(\eta \in \mathcal {S}_N\):

In particular, all the single processes \(X_i\) have the same law for \(1\le i\le N\) (although they are in general correlated). Let \(\rho ^{(N)}(x_1,\ldots ,x_N)\) to be the density distribution of the N-particle process and denote \(\rho ^{(N)}_k\) its k-particle marginal density, i.e., the law of the process \((X_1,\ldots ,X_k)\):

Consider now a (potential) limit stochastic process  where

where  are i.i.d. Denote by

are i.i.d. Denote by  the law of a single process, thus by independence assumption the law of all the process is given by:

the law of a single process, thus by independence assumption the law of all the process is given by:

The following definition is classical and can be found for instance in [13, 35].

Definition 2

We say that the stochastic process \((X_1,\ldots ,X_N)\) satisfies the propagation of chaos if for any fixed k:

which is equivalent to the validity of the following relation for any test function \(\varphi \):

Next, we shift to a review on convergence of random probability measures. Such topic can be found for instance in a classical book by Billingsley [11]. However, we prefer to give a more practical treatment on convergence of random probability measures, based on [8]. Consider a sequence of random probability measures \(\mu _n(\omega )\), i.e., for a given \(\omega \in \Omega \), \(\mu _n(\omega ) \in \mathcal {P}({\mathbb {R}}^d)\). We shall define the mode of convergence as follows:

Definition 3

We say that \(\mu _n\) converges to \(\mu \in \mathcal {P}({\mathbb {R}}^d)\) in probability, denoted by \(\mu _n \xrightarrow []{{\mathbb {P}}} \mu \), if

We record here a simple criteria to test the convergence in probability of random measures.

Lemma 1

Suppose that the sequence of random measures \(\{\mu _n(\omega )\}_n\) satisfies

Then \(\mu _n \xrightarrow []{{\mathbb {P}}} \mu \).

Proof

It is a direct application of the Markov’s inequality. Fixing \(\varphi \in C_b({\mathbb {R}}^d)\) and let \(\varepsilon >0\), we have

Therefore, the random variables \(X_n(\omega ):=\langle \mu _n(\omega ),\varphi \rangle \) converges in probability to \(X(\omega ):= \langle \mu (\omega ),\varphi \rangle \). Since it is true for any \(\varphi \in C_b({\mathbb {R}}^d)\), we deduce that \(\mu _n \xrightarrow []{{\mathbb {P}}} \mu \). \(\square \)

2.2 Formal Limit as \(N \rightarrow \infty \)

We would like to investigate formally the limit as \(N \rightarrow \infty \) of the dynamics, and we will provide the rigorous derivation in the next section. Motivated by the famous molecular chaos assumption (also known as propagation of chaos), which suggests that we have the statistical independence among the particle systems defined by (1) under the large \(N \rightarrow \infty \) limit, we henceforth give the following definition of the limiting dynamics of  as \(N \rightarrow \infty \) from the process point of view.

as \(N \rightarrow \infty \) from the process point of view.

Definition 4

(Asymptotic K-averaging model) We define a collection of random variables  by setting

by setting  and

and

where  are K i.i.d copies of

are K i.i.d copies of  and \(W^n \sim \mathcal {N}(\varvec{0},\sigma ^2\mathbb {1}_d)\) is independent of n.

and \(W^n \sim \mathcal {N}(\varvec{0},\sigma ^2\mathbb {1}_d)\) is independent of n.

If we denote  to be the law of

to be the law of  , then it is possible to determine the evolution of

, then it is possible to determine the evolution of  with respect to time n. For this purpose, We will first collect some definitions to be used throughout the manuscript.

with respect to time n. For this purpose, We will first collect some definitions to be used throughout the manuscript.

Definition 5

We use \(\mathcal {P}({\mathbb {R}}^d)\) to represent the space of probability measures on \({\mathbb {R}}^d\). We will denote by \(\phi \) the probability density of a d-dimensional Gaussian random variable \(\mathcal {E} \sim \mathcal {N}(\varvec{0},\sigma ^2\mathbb {1}_d)\). For \(\rho \in \mathcal {P}({\mathbb {R}}^d)\), we define \(T:\mathcal {P}({\mathbb {R}}^d) \rightarrow \mathcal {P}({\mathbb {R}}^d)\) through

in which the \(C_K\) is the K-fold repeated self-convolution defined via

and \(S_K\) is the scaling (renormalization) operator given by

Remark 1

We emphasize here that the operator T given in Definition 5 fully encodes the update rule (6) for the asymptotic K-averaging model. Indeed, for each valid test function \(\varphi \), we have

where the last equality follows because the random variable  has law

has law  . Thus, from the density point of view, as

. Thus, from the density point of view, as  is the law of

is the law of  at time n, then

at time n, then  represents exactly the law of

represents exactly the law of  at time \(n+1\).

at time \(n+1\).

Equipped with Definition 5, we can write the evolution of the limit equation as

Notice that the mean value is preserved by the dynamics (6), we will make a harmless assumption throughout this paper that

Remark 2

In dimension 1, we derive from (6) that

leading to  . A similar consideration demonstrates that the covariance matrix associated with

. A similar consideration demonstrates that the covariance matrix associated with  converges to \(\frac{K\sigma ^2}{K-1}\cdot \mathbb {1}_d\).

converges to \(\frac{K\sigma ^2}{K-1}\cdot \mathbb {1}_d\).

Now we can verify that a suitable Gaussian profile is a fixed point of the iteration process described by (11) as long as \(K\ge 2\).

Lemma 2

Fixing \(K\ge 2\). Let

with \(\sigma ^2_\infty := \frac{K}{K-1}\sigma ^2\), then  is a fixed point of T. i.e,

is a fixed point of T. i.e,  satisfies

satisfies  .

.

Proof

It is readily seen that the operator T maps a Gaussian density to another (possibly different) Gaussian density. We investigate the effect of each operator appearing in the definition of T on  . Indeed, since \(Z_1 + \cdots + Z_K \sim \mathcal {N}(\varvec{0},K\sigma ^2_\infty \mathbb {1}_d)\) when \((Z_i)_{1\le i\le K}\) are i.i.d. with law \(\mathcal {N}(\varvec{0},\sigma ^2_\infty \mathbb {1}_d)\), we have

. Indeed, since \(Z_1 + \cdots + Z_K \sim \mathcal {N}(\varvec{0},K\sigma ^2_\infty \mathbb {1}_d)\) when \((Z_i)_{1\le i\le K}\) are i.i.d. with law \(\mathcal {N}(\varvec{0},\sigma ^2_\infty \mathbb {1}_d)\), we have

Next, notice that \(\frac{Z}{K} \sim \mathcal {N}(\varvec{0},\frac{\sigma ^2_\infty }{K}\mathbb {1}_d)\) if \(Z \sim \mathcal {N}(\varvec{0},K\sigma ^2_\infty \mathbb {1}_d)\), from which we deduce that

Finally, we conclude that

which completes the proof. \(\square \)

We end this subsection with a numerical experiment demonstrating the relaxation of the solution of (11) to its Gaussian equilibrium  , as is shown in Fig. 4.

, as is shown in Fig. 4.

Simulation of the discrete evolution equation (11) in dimension \(d = 1\) with \(K = 5\) after 3 time steps, in which we used \(\sigma = 0.1\) and a uniform distribution over \([-1,1]\) initially  (the green curve). The blue and red curve represent

(the green curve). The blue and red curve represent  and

and  , respectively. We also remark that in this example

, respectively. We also remark that in this example  and

and  are almost indistinguishable

are almost indistinguishable

2.3 Lipschitz Continuity of the Operator T

To conclude Sect. 2, we demonstrate a useful property of the operator T introduced in (7). First, we start with the following definition.

Definition 6

For each \(\mu \in \mathcal {P}({\mathbb {R}}^d)\), we define the (strong) norm of \(\mu \), denoted by \({\left| \left| \left| \mu \right| \right| \right| }\), via

The main result in this section lies in the Lipschitz continuity of T, to which we now turn.

Proposition 1

For each \(\mu ,\nu \in \mathcal {P}({\mathbb {R}}^d)\), we have

Proof

We recall that for each \(g \in \mathcal {P}({\mathbb {R}}^d)\) we have

Moreover, we have

Also, for \(\mu , \nu \in \mathcal {P}({\mathbb {R}}^d)\) and \(\varphi \in C_b({\mathbb {R}}^d)\), there holds

where \(\widehat{\mu }\) is defined via \(\widehat{\mu }(\varvec{x}) := \mu (-\varvec{x})\). Fixing \(\varphi \) with \(\Vert \varphi \Vert _\infty \le 1\), for each pair of probability measures \(\mu ,\nu \in \mathcal {P}({\mathbb {R}}^d)\), we have

Setting \(\psi _j = \widehat{\kappa _j}*S_{\frac{1}{K}}[\phi *\varphi ]\) for each \(1\le j\le K-1\), then we have

Thus, if we define \(\varphi ^{(1)} = K^d \sum _{j=0}^{K-1} \psi _j\), then \(\Vert \varphi ^{(1)}\Vert _\infty \le \frac{K^{d+1}}{K^d} = K\). Now taking the supremum over all \(\varphi \) with \(\Vert \varphi \Vert _\infty \le 1\), we deduce from (15) that

and the proof is completed. \(\square \)

3 Propagation of Chaos

This section is devoted to the rigorous proof of propagation of chaos for the K-averaging dynamics, by employing a martingale-based technique introduced recently in [25]. We will need the following definition.

Definition 7

Let \(\{X^n_i\}_{1\le i\le N}\) be as in Definition 1, we define

to be the empirical distribution of the system at time n. In particular, \(\rho ^n_{\mathrm {emp}}\) is s stochastic measure.

Thanks to a classical result (see for instance Proposition 1 in [17] or Proposition 2.2 in [35]), to justify the propagation of chaos, it suffices to show that

i.e.,

In fact, one can prove our first theorem.

Theorem 1

Under the settings of the K-averaging model with \(K\ge 2\), if

then for each fixed \(n \in {\mathbb {N}}\) we have

where \(\rho ^n_{\mathrm {emp}}\) and  are defined in (16) and (11), respectively.

are defined in (16) and (11), respectively.

Proof

We adopt a martingale-based technique developed recently in [25]. We have for each test function \(\varphi \) that

where \(\{Y^n_{i,j}\}\) are i.i.d. with law \(\rho ^n_{\mathrm {emp}}\). Denoting

for each \(1\le i\le N\), since the law of \(Z^n_i\) is \(T[\rho ^n_{\mathrm {emp}}]\) for each \(1\le i\le n\) and \(\{Z^n_i\}_{1\le i\le N}\) are i.i.d., following the reasoning behind (10) we have

Now if we set

for each \(n \ge 0\), then \((M^n)_{n\ge 0}\) defines a martingale. Moreover, thanks to the fact that \(\{\varphi (Z^n_i)\}\) are i.i.d. bounded random variables, and using the convention that the variance operation \({\text {Var}}(X)\) is interpreted as \({\text {Var}}(X) := \sum _{k=1}^d {\text {Var}}(X_k)\) when X is a d-dimensional vector-valued random variable, we have

where we have employed Popoviciu’s inequality (see for instance [31]) for upper bounding the variance of a bounded random variable. Comparing (20) with (11) yields

Now if \(\Vert \varphi \Vert _\infty \le 1\), we can recall the computations carried out in (15), which ensures the existence of some \(\varphi ^{(1)}\) with \(\Vert \varphi ^{(1)}\Vert _\infty \le K\) such that

Then we can deduce from (21) and (22) that

in which \(\varphi ^{(1)}\) satisfies \(\Vert \varphi ^{(1)}\Vert _\infty \le K\). We can iterate (23) to arrive at

in which \(\varphi ^{(n)}\) satisfies \(\Vert \varphi ^{(n)}\Vert _\infty \le K^n\). Finally, combining (17) with (24) allows us to complete the proof of Theorem 1. \(\square \)

Remark 3

As we do not have a uniform-in-time propagation of chaos, we would like to know whether the convergence declared in Theorem 1 still holds if we do not fix n (i.e., if \(n \rightarrow \infty \)). We speculate such a uniform in time convergence can no longer be hoped for by looking at the evolution of the center of mass of the particle systems. Indeed, define

to be the location of the center of mass, and denote by \(\mathcal {F}_n\) the natural filtration generated by \((X^n_1,\cdots ,X^n_N)\), then in dimension \(d = 1\) we have

where the last equality comes from the fact that \(X^{n+1}_i\) and \(X^{n+1}_j\) are i.i.d. given \(\mathcal {F}_n\). Thus, loosely speaking, at least in dimension \(d = 1\), the center of mass of the particle systems behaves like a discrete time Brownian motion with intensity of order at least \(\mathcal {O}(1/\sqrt{N})\), such variation can accumulate in time which will eventually ruin the chaos propagation property in the long run.

4 Large Time Behavior

The long time behavior of the limit equation, resulted from the simplified mean-field dynamics, is treated in this section. In Sect. 4.1, by employing a coupling technique and equipping the space of probability measures on \({\mathbb {R}}^d\) with the Wasserstein distance, we will justify the asymptotic Gaussianity of the distribution of each particle. Then we will strengthen the convergence result shown in the previous section in Sect. 4.2, and numerical simulations are also performed in support of our theoretical discoveries in Sect. 4.3. We emphasize here that coupling techniques will be at the core of our proof in Sect. 4.1, and the technique used in Sect. 4.2 depends heavily on several classical results from information theory.

4.1 Convergence in Wasserstein Distance

After we have achieved the transition from the interacting particle system (1) to the simplified de-coupled dynamics (6) under the limit \(N\rightarrow \infty \), in this section we will analyze (6) and its associated evolution of its law (governed by (11)), with the intention of proving the convergence of  to a suitable Gaussian density. The main ingredient underlying our proof lies in a coupling technique. First, we recall the following classical definition.

to a suitable Gaussian density. The main ingredient underlying our proof lies in a coupling technique. First, we recall the following classical definition.

Definition 8

The Wasserstein distance (of order 2) is defined via

where both \(\mu \) and \(\nu \) are probability measures on \({\mathbb {R}}^d\).

We can now state and prove our main result in this section.

Theorem 2

Assume that the innocent-looking normalization (12) holds and \(K\ge 2\), then for the dynamics (11), we have

In particular, if  is chosen such that

is chosen such that  , then

, then

Proof

We first show that

for each \(\mu ,\nu \in \mathcal {P}({\mathbb {R}}^d)\). In other words, if we equip the space \(\mathcal {P}({\mathbb {R}}^d)\) with the Wasserstein distance of order 2, T is a strict contraction as long as \(K\ge 2\). Now we fix \(\mu ,\nu \in \mathcal {P}({\mathbb {R}}^d)\). It is recalled that \(T(\mu )\) is the law of the random variable

where \(\{X_i\}_{1\le i\le K}\) are i.i.d. with law \(\mu \) and \(\mathcal {E} \sim \mathcal {N}(\varvec{0},\sigma ^2\mathbb {1}_d)\). Thus, if we also introduce

in which \(\{Y_i\}_{1\le i\le K}\) are i.i.d. with law \(\nu \) and \(\widetilde{\mathcal {E}} \sim \mathcal {N}(\varvec{0},\sigma ^2\mathbb {1}_d)\), then we can write

We can couple \((X_1,\cdots ,X_K,\mathcal {E})\) and \((Y_1,\cdots ,Y_k,\tilde{\mathcal {E}})\) as we want. First, we take \(\mathcal {E}=\widetilde{\mathcal {E}}\), meaning we have a common source of noise. Second, fixing \(\eta >0\), we take \((X_1,Y_1)\) such that

(i.e. almost best coupling). Finally, we perform similarly for the other \((X_i,Y_i)\) with \((X_i,Y_i)\) independent of \((X_j,Y_j)\) if \(i\ne j\). These procedures lead us to

Since this is true for any \(\eta >0\), (26) is verified. Now we can deduce from (26) that

whence (25) is proved. \(\square \)

4.2 Convergence in Relative Entropy

In this subsection we will show that the evolution of the discrete equation (11) relaxes to its Gaussian equilibrium  in the sense of relative entropy, as long as \(K\ge 2\). Before stating our result, we first clarify some definitions. We refer the reader to [16] for a comprehensive account of modern information theory.

in the sense of relative entropy, as long as \(K\ge 2\). Before stating our result, we first clarify some definitions. We refer the reader to [16] for a comprehensive account of modern information theory.

Definition 9

We use

to represent the differential entropy of a \({\mathbb {R}}^d\)-valued random variable X with law g. Moreover,

denotes the relative entropy from \(h \in {\mathcal {P}}({\mathbb {R}}^d)\) to \(g \in {\mathcal {P}}({\mathbb {R}}^d)\), in which

is the cross-entropy from g to h (or equivalently, from X to Y where the laws of X and Y are g and h, respectively).

For the reader’s convenience, we explicitly state two fundamental results from information theory that we shall reply on.

Lemma 3

(Shannon–Stam) Under the set-up of Definition 9, we have

for each \(\lambda \in [0,1]\).

Lemma 3 is one of the three equivalent formulations of the well-known Shannon-Stam inequality, see for instance Sect. 1.3.2 of [34]. The next lemma (see for instance Theorem 1 in [3] or Eq. (7) in [24]) demonstrates the monotonicity of the differential entropy along re-scaled sum of i.i.d. square-integrable random variables.

Lemma 4

Let \(X_1,X_2,\ldots \) be i.i.d. square-integrable random variables. Then

for each \(n \ge 2\).

Theorem 3

Assume that  is a solution to (11), then for each fixed \(K \ge 2\) we have

is a solution to (11), then for each fixed \(K \ge 2\) we have

In particular, for each \(K \ge 2\) we have  as \(n \rightarrow \infty \).

as \(n \rightarrow \infty \).

Proof

Let  be as in Definition 4. If we introduce a random variable

be as in Definition 4. If we introduce a random variable  with law

with law  , i.e.,

, i.e., , then for each \(n \in {\mathbb {N}}\), we can rewrite (6) as

, then for each \(n \in {\mathbb {N}}\), we can rewrite (6) as

since  in law. Setting \(\gamma = \frac{1}{\sqrt{K}}\), we obtain

in law. Setting \(\gamma = \frac{1}{\sqrt{K}}\), we obtain

Consequently, the Shannon-Stam inequality (see Lemma 3) together with the monotonicity of differential entropy along normalized sum of i.i.d. random variables (see Lemma 4) yields

Next, we observe that the cross-entropy from each \(f \in \mathcal {P}({\mathbb {R}}^d)\) with mean \(\varvec{0}\) to the equilibrium distribution  is essentially the variance of f, meaning that

is essentially the variance of f, meaning that

In particular, if X and Y are independent random variables with mean \(\varvec{0}\) and \(a^2+b^2 = 1\), then

Thus, using this formulation with \(\sqrt{\gamma }\) and \(\sqrt{1-\gamma }\), we find

Combining this with (28) leads to

and the proof is completed. \(\square \)

Remark 4

By Talagrand’s inequality (see for instance Theorem 9.2.1 in [4]), the convergence  implies the convergence

implies the convergence  .

.

4.3 Numerical Illustration of Decay in Relatively Entropy

We investigate numerically the convergence of the solution  of (11) to its equilibrium

of (11) to its equilibrium  in support of our Theorem 3, see Fig. 5. We use \(d = 1\) (dimension), \(K = 5\) (number of neighbors to be averaged over), \(\sigma = 0.1\) (the intensity of a centered Gaussian noise) in the simulation of the evolution equation (11). To discretize (11), we employ the step-size \(\Delta x = 0.001\) and a cutoff threshold \(M = 100,000\) so that the support of

in support of our Theorem 3, see Fig. 5. We use \(d = 1\) (dimension), \(K = 5\) (number of neighbors to be averaged over), \(\sigma = 0.1\) (the intensity of a centered Gaussian noise) in the simulation of the evolution equation (11). To discretize (11), we employ the step-size \(\Delta x = 0.001\) and a cutoff threshold \(M = 100,000\) so that the support of  is contained in \(\{j\Delta x\}_{-M\le j\le M}\) for all n, and the total number of simulation steps is set to 15. As initial condition, we use the Laplace distribution

is contained in \(\{j\Delta x\}_{-M\le j\le M}\) for all n, and the total number of simulation steps is set to 15. As initial condition, we use the Laplace distribution  . Moreover, the simulation result is displayed in the semi-logarithmic scale, which clearly indicates a geometrically fast convergence.

. Moreover, the simulation result is displayed in the semi-logarithmic scale, which clearly indicates a geometrically fast convergence.

Simulation of the relative entropy from  to

to  in dimension \(d = 1\) with \(K = 5\) after 15 time steps, in which we used \(\sigma = 0.1\) and a Laplace distribution

in dimension \(d = 1\) with \(K = 5\) after 15 time steps, in which we used \(\sigma = 0.1\) and a Laplace distribution  initially. The blue and orange curves represent the numerical error and the analytical upper bound on the error, respectively. We also noticed that the numerical error can not really go below \(10^{-12}\), but this is presumably due to the floating-point precision error

initially. The blue and orange curves represent the numerical error and the analytical upper bound on the error, respectively. We also noticed that the numerical error can not really go below \(10^{-12}\), but this is presumably due to the floating-point precision error

5 Continuous-Time K-averaging Dynamics

With suitable modifications, the argument used in the discrete-time applies in continuous-time as well, so in this section we briefly consider the continuous version of the K-averaging model studied in previous sections, i.e., the K-averaging occurs according to a Poisson process. First, we give a formal definition of the model.

Definition 10

(Continuous-time K-averaging model) Consider a collection of stochastic processes \(\{X_i(t)\}_{1\le i\le N}\) evolving on \({\mathbb {R}}^d\). At each time a Poisson clock with rate \(\lambda \) rings, we pick a particle \(i \in \{1,\ldots ,N\}\) uniformly at random and update the position of \(X_i\) according to the average position of K randomly selected neighbors, subject to an independent noise term, i.e., for each test function \(\varphi \) the process must satisfy

where \(Z_i(t) := \frac{1}{K}\sum _{j=1}^K X_{S_i(j)}(t) + W_i(t)\), \(S_i(j)\) are indices taken randomly from the set \(\{1,2,\ldots ,N\}\) (i.e., \(S_i(j) \sim \mathrm {Uniform}(\{1,2,\ldots ,N\})\) and is independent of i, j and t), and \(W_i(t) \sim \mathcal {N}(\varvec{0},\sigma ^2\mathbb {1}_d)\) is independent of i and t.

In the large N limit, we expect an emergence of a simplified dynamics, which motivates the following definition.

Definition 11

(Asymptotic continuous-time K-averaging model) Consider a \({\mathbb {R}}^d\)-valued stochastic process  which satisfies the following relation for each test function \(\varphi \):

which satisfies the following relation for each test function \(\varphi \):

in which  , where

, where  are K i.i.d. copies of

are K i.i.d. copies of  and \(W(t) \sim \mathcal {N}(\varvec{0},\sigma ^2\mathbb {1}_d)\) is independent of t.

and \(W(t) \sim \mathcal {N}(\varvec{0},\sigma ^2\mathbb {1}_d)\) is independent of t.

If we define  to be the law of

to be the law of  at time t, one can readily see that the evolution of

at time t, one can readily see that the evolution of  is governed by

is governed by

Moreover, one can show the continuous-time analog of Theorem 1 and Theorem 3.

Theorem 4

Let \(\rho _{\mathrm {emp}}(t) := \frac{1}{N}\sum _{i=1}^N \delta _{X_i(t)}\) to be the empirical distribution of the system determined by (29) at time t and  the solution of (31) with the Gaussian equilibrium

the solution of (31) with the Gaussian equilibrium  defined in (13), then

defined in (13), then

-

(i)

under the set-up of the continuous-time K-averaging model with \(K \ge 2\), if

(32)

(32)then we have

holding for all \(0\le t \le T\) with any prefixed \(T >0\).

-

(ii)

for each fixed \(K \ge 2\) we have

(33)

(33)where \(\gamma = \frac{1}{K}\) as before. In particular, we have

(34)

(34)

Proof

We assume without loss of generality that \(\lambda =1\). For (i), mimic the argument in the discrete-time setting we obtain for each test function \(\varphi \) that

in which \(Z_i(t) = \frac{1}{K}\sum _{j=1}^K Y_{i,j}(t) + W_i(t)\) and \(\{Y_{i,j}(t)\}\) are i.i.d. with law \(\rho _{\mathrm {emp}}(t)\). Then by Dynkin’s formula, the compensated process

defines a martingale. Comparing with (31) yields

We then take the supremum over all \(\varphi \) with \(\Vert \varphi \Vert _\infty \le 1\) to deduce from Proposition 1 and (35) that

where we have set

By Gronwall’s inequality, we obtain

In order to justify our claim (i) for \(t\le T\), it therefore suffices to show that

To show (36), we address each term appearing in the definition of \(\eta (t)\) separately. The second one vanishes due to our assumption (32). For the first one, i.e., the martingale term, we note that the i-th coordinate of \(M_\varphi \) is a continuous time martingale with jumps of size \(\frac{1}{N}\varphi (Z_i) - \frac{1}{N}\varphi (X_i)\) whose rates of occurrence are \(\lambda \cdot \mathrm {d}t = \mathrm {d}t\). Therefore,

whence the convergence

follows readily from Doob’s martingale inequality. For (ii), we recall that in the discrete-time case (with \(\gamma = \frac{1}{K}\)), (27) can be rewritten as

This can be translated immediately to its continuous-time analog (33), whence the proof is completed. \(\square \)

6 Conclusion

In this manuscript, we have investigated a model (which we call the K-averaging model) for a system of self-propelled particles on \({\mathbb {R}}^d\), in both discrete-time and continuous-time settings. We also provided a rigorous proof on the convergence of the distribution of a typical particle towards a suitable Gaussian equilibrium under the large particle size \(N \rightarrow \infty \) and large time \(n\rightarrow \infty \) limit. Even though the majority of the work is done in discrete-time, the relevant results carry over easily to continuous-time. It would also be interesting to examine variants of this model. For instance, the K-averaging dynamics on \({\mathbb {S}}^1\) is closely related to several models in the literature [1, 2, 30, 32, 33], and it is reasonable to expect a rigorous proof of the corresponding mean-field limit. Unfortunately, the situation on \({\mathbb {S}}^1\) is inevitably much more complicated due to the lack of a vector-space structure. More generally, averaging is not a straightforward operation over a manifold [18]. Other extensions of the model in the present manuscript are also possible. As of now, every agent communicates with each other. Thus, what would happen if only agents are only interacting through a pre-defined graph of neighboring few chosen neighbors? We would lose the invariance by permutation, thus the notion of limit is more challenging. This would also link the model to certain "consensus models" [19, 22]. One can also explore different laws of communication between the particles (especially of the non-symmetric and non-all-to-all variety), and investigate the role of noise introduced into the system.

References

Aldana, M., Dossetti, V., Huepe, C., Kenkre, V.M., Larralde, H.: Phase transitions in systems of self-propelled agents and related network models. Phys. Rev. Lett. 98(9), 095702 (2007)

Aldana, M., Huepe, C.: Phase transitions in self-driven many-particle systems and related non-equilibrium models: a network approach. J. Stat. Phys. 112(1–2), 135–153 (2003)

Artstein, S., Ball, K., Barthe, F., Naor, A.: Solution of Shannon’s problem on the monotonicity of entropy. J. Am. Math. Soc. 17(4), 975–982 (2004)

Bakry, D., Gentil, I., Ledoux, M.: Analysis and Geometry of Markov Diffusion Operators, vol. 348. Springer, New York (2013)

Barbaro, A.B.T., Degond, P.: Phase transition and diffusion among socially interacting self-propelled agents. arXiv:1207.1926 (2012)

Baumann, F., Lorenz-Spreen, P., Sokolov, I.M., Starnini, M.: Modeling echo chambers and polarization dynamics in social networks. Phys. Rev. Lett. 124(4), 048301 (2020)

Belmonte, J.M., Thomas, G.L., Brunnet, L.G., de Almeida, R.M.C., Chaté, H.: Self-propelled particle model for cell-sorting phenomena. Phys. Rev. Lett. 100(24), 248702 (2008)

Berti, P., Pratelli, L., Rigo, P.: Almost sure weak convergence of random probability measures. Stoch. Stoch. Rep 78(2), 91–97 (2006)

Bertin, E., Droz, M., Grégoire, G.: Boltzmann and hydrodynamic description for self-propelled particles. Phys. Rev. E 74(2), 022101 (2006)

Bertin, E., Droz, M., Grégoire, G.: Hydrodynamic equations for self-propelled particles: microscopic derivation and stability analysis. J. Phys. A 42(44), 445001 (2009)

Billingsley, P.: Convergence of Probability Measures. Wiley, New York (2013)

Boissard, E., Degond, P., Motsch, S.: Trail formation based on directed pheromone deposition. J. Math. Biol. 66(6), 1267–1301 (2013)

Carlen, E., Chatelin, R., Degond, P., Wennberg, B.: Kinetic hierarchy and propagation of chaos in biological swarm models. Physica D 260, 90–111 (2013)

Chaté, H., Ginelli, F., Grégoire, G., Peruani, F., Raynaud, F.: Modeling collective motion: variations on the Vicsek model. Eur. Phys. J. B 64(3), 451–456 (2008)

Chuang, Y.-L., Dorsogna, M.R., Marthaler, D., Bertozzi, A.L., Chayes, L.S.: State transitions and the continuum limit for a 2D interacting, self-propelled particle system. Physica D 232(1), 33–47 (2007)

Cover, T.M.: Elements of Information Theory. Wiley, New York (1999)

Dai Pra, P.: Stochastic mean-field dynamics and applications to life sciences. In: International Workshop on Stochastic Dynamics Out of Equilibrium, pp. 3–27. Springer, New York (2017)

Degond, P., Frouvelle, A., Raoul, G.: Local stability of perfect alignment for a spatially homogeneous kinetic model. J. Stat. Phys. 157(1), 84–112 (2014)

Hardin, M., Lanchier, N.: Probability of consensus in spatial opinion models with confidence threshold. arXiv:1912.06746 (2019)

Hauray, M., Jabin, P.-E.: N-particles approximation of the Vlasov equations with singular potential. Arch. Ratl. Mech. Anal. 183(3), 489–524 (2007)

Jabin, P.-E., Wang, Z.: Quantitative estimates of propagation of chaos for stochastic systems with \(W^{-1,\infty }\) kernels. Invent. Math. 214(1), 523–591 (2018)

Lanchier, N., Li, H.-L.: Probability of consensus in the multivariate Deffuant model on finite connected graphs. Electr. Commun. Probab. 25, 1–12 (2020)

Liggett, T.M.: Interacting Particle Systems, vol. 276. Springer, New York (2012)

Madiman, M., Barron, A.: Generalized entropy power inequalities and monotonicity properties of information. IEEE Trans. Inform. Theory 53(7), 2317–2329 (2007)

Merle, M., Salez, J.: Cutoff for the mean-field zero-range process. Ann. Probab. 47(5), 3170–3201 (2019)

Méléard, S., Roelly-Coppoletta, S.: A propagation of chaos result for a system of particles with moderate interaction. Stoch. Process. Appl. 26, 317–332 (1987)

Motsch, S., Tadmor, E.: Heterophilious dynamics enhances consensus. SIAM Rev. 56(4), 577–621 (2014)

Naldi, G., Pareschi, L., Toscani, G.: Mathematical Modeling of Collective Behavior in Socio-Economic and Life Sciences. Springer, New York (2010)

Oelschlager, K.: A martingale approach to the law of large numbers for weakly interacting stochastic processes. Ann. Probab. 12, 458–479 (1984)

Pimentel, J.A., Aldana, M., Huepe, C., Larralde, H.: Intrinsic and extrinsic noise effects on phase transitions of network models with applications to swarming systems. Phys. Rev. E 77(6), 061138 (2008)

Popoviciu, T.: Sur les équations algébriques ayant toutes leurs racines réelles. Mathematica 9, 129–145 (1935)

Porfiri, M.: Linear analysis of the vectorial network model. IEEE Trans. Circ. Syst. II 61(1), 44–48 (2013)

Porfiri, M., Ariel, G.: On effective temperature in network models of collective behavior. Chaos 26(4), 043109 (2016)

Rezakhanlou, F., Villani, C., Golse, F.: Entropy methods for the Boltzmann equation: lectures from a special semester at the Centre Émile Borel, Institut H. Poincaré, Paris, 2001, No. 1916. Springer, New York (2008)

Sznitman, A.-S.: Topics in propagation of chaos. In: Ecole d’été de Probabilités de Saint-Flour XIX–1989, pp. 165–251. Springer, Berlin (1991)

Acknowledgements

It is a pleasure to thank my Ph.D. advisor Sébastien Motsch for his tremendous help on various portions of this manuscript, and I thank the anonymous reviewer for providing helpful comments on earlier drafts of the manuscript.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Irene Giardina.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Cao, F. K-Averaging Agent-Based Model: Propagation of Chaos and Convergence to Equilibrium. J Stat Phys 184, 18 (2021). https://doi.org/10.1007/s10955-021-02807-0

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10955-021-02807-0