Abstract

We study the 2d stationary fluctuations of the interface in the SOS approximation of the non equilibrium stationary state found in De Masi et al. (J Stat Phys 175:203–221, 2019). We prove that the interface fluctuations are of order \(N^{1/4}\), where N is the size of the system. We also prove that the scaling limit is a stationary Ornstein–Uhlenbeck process.

1 Introduction

The non equilibrium stationary states (NESS) for diffusive systems in contact with reservoirs have been extensively studied, one of the main targets being to understand how the presence of a current affects what is seen in thermal equilibrium. In particular it has been shown that fluctuations in NESS have a non local structure, as opposed to what happens in thermal equilibrium. The theory of such phenomena is well developed [1, 5], but mathematical proofs are restricted to very special systems (SEP [6], KMP [8], chain of oscillators [2], \(\ldots \)).

The general structure of the NESS in the presence of phase transitions is a very difficult and open problem: not only are mathematical results missing, but a theoretical understanding is lacking as well. However, a breakthrough came recently from a paper by De Masi et al. [4], where they prove that the NESS can be computed explicitly for a quite general class of Ginzburg–Landau stochastic models which include phase transitions.

The main point in [4] is that the NESS is still a Gibbs state, but with the original Hamiltonian modified by adding a slowly varying chemical potential. Thus for boundary driven Ginzburg–Landau stochastic models the analysis of the NESS is reduced to an equilibrium Gibbsian problem and, at least in principle, very fine properties of its structure can be investigated, which is unthinkable for general models.

In particular we can study cases where there are phase transitions, and the purpose of this paper is to give an indication that the 2d NESS interface is much more rigid than in thermal equilibrium.

The analysis in [4] includes a system where the Ising model is coupled to a Ginzburg–Landau process. In the corresponding NESS the distribution of the Ising spins is a Gibbs measure with the usual nearest neighbour ferromagnetic interaction plus a slowly varying external magnetic field.

In particular in the 2d square \(\Lambda _N:=[0,N]\times [-N,N]\cap {\mathbb {Z}}^2\) the NESS \(\mu _N(\sigma )\) is

where \(b>0\) is fixed by the chemical potentials at the boundaries.

We assume \(\beta >\beta _c\). Since the slowly varying external magnetic field \(\displaystyle {\frac{bx\cdot e_2}{N}}\) is positive in the upper half plane and negative in the lower half plane, we expect the existence of an interface, namely a connected “open line” \(\lambda \) in the dual lattice which goes from left to right and which separates the region with the majority of spins equal to 1 from the one with the majority of spins equal to \(-1\).

The problem of the microscopic location of the interface has been much studied in equilibrium without external magnetic field and when the interface is determined by the boundary conditions: \(+\) boundary conditions on \(\Lambda _N^c \cap \{x\cdot e_2 \geqslant 0\}\) and − boundary conditions on \(\Lambda _N^c \cap \{x\cdot e_2 < 0\}\).

It is well known, since the work initiated by Gallavotti [7], that in the 2d Ising model at thermal equilibrium the interface fluctuates by the order of \(\sqrt{N}\), where N is the size of the system.

In this paper we argue that at low temperature (much below the critical value) and in the presence of a stationary current produced by reservoirs at the boundaries the interface is much more rigid as it fluctuates only by the order \(N^{1/4}\).

We study the problem with a drastic simplification by considering the SOS approximation of the interface. Namely we consider the simplest case where the interface \(\lambda \) is a graph, that is, \(\lambda \) is described by a function \(s_x\), \(x\in \{0,\ldots ,N\}\), with integer values in \({\mathbb {Z}}\). The corresponding Ising configurations are spins equal to \(-1\) below \(s_x\) and \(+1\) above \(s_x\): \(\sigma (x,i)=1\) if \(i\geqslant s_x\) and \(\sigma (x,i)=-1\) if \(i< s_x\).

The interface is then made by a sequence of horizontal and vertical segments and the Ising energy of such configurations is \(|\lambda |\). We normalise the energy by subtracting the energy of the flat interface so that the normalised energy is

i.e. the sum of the lengths of the vertical segments.

The energy due to the external magnetic field is normalised by subtracting the energy of the configuration when all \(s_x\) are equal to 0. This is (below we set \(b=1\))

Thus we get the SOS Hamiltonian

We prove that the stationary fluctuations of the interface in this SOS approximation, scaled by \(N^{1/4}\), converge to a stationary Ornstein–Uhlenbeck process.

The problem addressed in this article is the behavior of the interface in the NESS and the aim is to argue that its fluctuations are more rigid than in thermal equilibrium as indicated by the SOS approximation. Thus in the SOS approximation we prove the \(N^{1/4}\) behavior in the simplest setting of Sect. 2.

More general results similar to those in [9] presumably apply. We cannot directly use the results in [9] because their SOS models have an additional constraint (the interface is in the upper half plane). Our proofs have several points in common with [9], but since we work in a more specific setup with fewer constraints, they are considerably simpler and somewhat more intuitive.

2 Model and Results

We consider \(\Lambda _N= \{0,\ldots ,N\}\times {\mathbb {Z}}\) and denote the configuration of the interface with \({\mathbf {s}}= \{s_x\in {\mathbb {Z}}, x= 0,\ldots , N\}\). The interface increments are denoted by \(\eta _x=s_x-s_{x-1} \in {\mathbb {Z}}, x= 1, \ldots , N\).

Let \(\pi \) be a symmetric probability distribution on \({\mathbb {Z}}\), aperiodic and such that

We denote by \(\sigma ^2\) the variance of \(\pi \); as we shall see, the result does not depend on the particular choice of \(\pi \) but only on the variance \(\sigma ^2\).

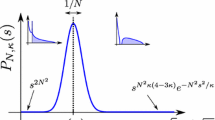

For \(s, {\overline{s}} \in {\mathbb {Z}}\) define the positive kernel

Call \(T_Nf(s)\) the integral operator with kernel \(T_N\). \(T_N\) is a symmetric positive operator in \(\ell _2({\mathbb {Z}})\), and it can be checked immediately that it is Hilbert–Schmidt, consequently compact. Then the Krein–Rutman theorem [11] applies, thus there is a strictly positive eigenfunction \(h_N\in \ell _2({\mathbb {Z}})\) and a strictly positive eigenvalue \(\lambda _N>0\):

The eigenvalue \(\lambda _N<1\), and \(\lambda _N \rightarrow 1\) as \(N\rightarrow \infty \), see Theorem 3.1.

We then observe that the Gibbs distribution \(\nu _N\) with the Hamiltonian given in (1.1) and with the values at the boundaries distributed according to the measure \(h_N(s)e^{\frac{s^2}{2N}}\) can be expressed in terms of the kernel \(T_N\) and the double-geometric distribution

In fact

with \(Z_N\) the partition function.

Call

\(p_N\) defines an irreducible positive-recurrent Markov chain on \({\mathbb {Z}}\) with reversible measure given by \(h_N^2(s)\). We call \( P_N\) the law of the Markov chain starting from the invariant measure \(h_N^2(s)\).
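The Markov chain structure described above can be illustrated numerically. The following Python sketch is only an illustration under stated assumptions, not the construction used in the proofs. It assumes that the kernel has the form \(T_N(s,{\overline{s}})=e^{-s^2/4N}\pi (s-{\overline{s}})e^{-{\overline{s}}^2/4N}\) and that the transition probabilities are the Doob transform \(p_N(s,{\overline{s}})=T_N(s,{\overline{s}})h_N({\overline{s}})/(\lambda _N h_N(s))\). It also uses a hypothetical lazy-walk \(\pi \) and truncates \({\mathbb {Z}}\) to a finite window. The names `PI` and `build_chain` are introduced here for illustration only.

```python
import math

# Hypothetical increment law (an assumption, not from the paper):
# lazy simple random walk, symmetric and aperiodic.
PI = {-1: 0.25, 0: 0.5, 1: 0.25}


def build_chain(N, iters=1500):
    """Return (sites, h, lam, p) for the truncated, ASSUMED kernel
    T_N(s, t) = exp(-s^2/(4N)) * pi(s - t) * exp(-t^2/(4N)).

    h and lam approximate the top eigenpair (power iteration);
    p[i][j] is the assumed Doob transform T(i, j) h(j) / (lam h(i)).
    """
    cutoff = int(12 * N ** 0.25)          # window |s| <= cutoff
    sites = list(range(-cutoff, cutoff + 1))
    n = len(sites)
    w = [math.exp(-s * s / (4.0 * N)) for s in sites]

    # Sparse rows of the truncated kernel: rows[i][j] = T(s_i, s_j).
    rows = []
    for i in range(n):
        row = {}
        for step, prob in PI.items():
            j = i - step
            if 0 <= j < n:
                row[j] = w[i] * prob * w[j]
        rows.append(row)

    # Power iteration from a strictly positive starting vector.
    norm = math.sqrt(sum(x * x for x in w))
    h = [x / norm for x in w]
    lam = 0.0
    for _ in range(iters):
        Th = [sum(val * h[j] for j, val in row.items()) for row in rows]
        lam = sum(a * b for a, b in zip(h, Th))   # Rayleigh quotient
        norm = math.sqrt(sum(x * x for x in Th))
        h = [x / norm for x in Th]

    # Doob transform of the kernel by the positive eigenvector.
    p = [{j: val * h[j] / (lam * h[i]) for j, val in rows[i].items()}
         for i in range(n)]
    return sites, h, lam, p
```

In this sketch each row of `p` sums to one up to the accuracy of the power iteration, and the detailed balance relation \(h^2(s)\,p(s,{\overline{s}})=h^2({\overline{s}})\,p({\overline{s}},s)\) holds because the kernel is symmetric, which is the reversibility with respect to \(h_N^2\) stated above.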

Observe that \(\nu _N({\mathbf {s}})\) in (2.4) is the \( P_N\)-probability of the trajectory \({\mathbf {s}}\), indeed from (2.5) we get

which proves that \(Z_N=\lambda ^N_N\) and that \( \nu _N({\mathbf {s}}) = P_N({\mathbf {s}})\).

We define the rescaled variables

Then \({\widetilde{S}}^N(t) \) is extended to \(t\in [0,1]\) by linear interpolation. In this way we can consider the induced distribution \({\mathcal {P}}_N\) on the space of continuous functions C([0, 1]).

We denote by \({\mathcal {E}}_N\) the expectation with respect to \({\mathcal {P}}_N\).

Our main result is the following Theorem.

Theorem 2.1

The process \(\{{\widetilde{S}}^N(t), t\in [0,1]\}\) converges in law to the stationary Ornstein–Uhlenbeck process with variance \(\sigma /2\). Moreover \(\displaystyle {\lim _{N\rightarrow \infty } \lambda _N^{\sqrt{N}}= e^{-\sigma /2}}\).
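The limit \(\lambda _N^{\sqrt{N}}\rightarrow e^{-\sigma /2}\) can be explored numerically. The following Python sketch is a rough illustration under stated assumptions and plays no role in the proofs. It assumes that the kernel has the form \(T_N(s,{\overline{s}})=e^{-s^2/4N}\pi (s-{\overline{s}})e^{-{\overline{s}}^2/4N}\), uses a hypothetical lazy-walk \(\pi \) with \(\pi (0)=1/2\), \(\pi (\pm 1)=1/4\) (so \(\sigma ^2=1/2\)), truncates \({\mathbb {Z}}\) to a finite window, and estimates \(\lambda _N\) by power iteration. The names `PI`, `SIGMA`, `apply_kernel` and `top_eigenpair` are introduced here for illustration only.

```python
import math

# Hypothetical increment law (an assumption, not from the paper):
# lazy simple random walk, symmetric and aperiodic, variance 1/2.
PI = {-1: 0.25, 0: 0.5, 1: 0.25}
SIGMA = math.sqrt(sum(k * k * p for k, p in PI.items()))


def apply_kernel(h, weights):
    """Apply the ASSUMED kernel T_N(s, t) = w(s) pi(s - t) w(t),
    with w(s) = exp(-s^2/(4N)), to the vector h on the truncated window."""
    n = len(h)
    out = [0.0] * n
    for i in range(n):
        acc = 0.0
        for step, prob in PI.items():
            j = i - step                  # site t = s - step
            if 0 <= j < n:
                acc += prob * weights[j] * h[j]
        out[i] = weights[i] * acc
    return out


def top_eigenpair(N, iters=2000):
    """Power iteration for the largest eigenvalue of the truncated kernel."""
    cutoff = int(12 * N ** 0.25)          # window |s| <= cutoff
    sites = range(-cutoff, cutoff + 1)
    weights = [math.exp(-s * s / (4.0 * N)) for s in sites]
    # Gaussian starting vector of width N^{1/4}.
    h = [math.exp(-s * s / (4.0 * math.sqrt(N))) for s in sites]
    lam = 0.0
    for _ in range(iters):
        norm = math.sqrt(sum(x * x for x in h))
        h = [x / norm for x in h]
        Th = apply_kernel(h, weights)
        lam = sum(a * b for a, b in zip(h, Th))   # Rayleigh quotient
        h = Th
    norm = math.sqrt(sum(x * x for x in h))
    return lam, [x / norm for x in h]


if __name__ == "__main__":
    for N in (100, 400, 1600):
        lam, _ = top_eigenpair(N)
        # Compare lam^{sqrt(N)} with the predicted limit exp(-sigma/2).
        print(N, lam, lam ** math.sqrt(N), math.exp(-SIGMA / 2))
```

Power iteration is used here only because it needs nothing beyond the standard library; any symmetric eigenvalue solver applied to the truncated matrix would serve the same purpose.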

The paper is organized as follows: in Sect. 3 we give a priori estimates on the eigenfunctions \(h_N\) and on the eigenvalues \(\lambda _N\), in Sect. 4 we prove convergence of the eigenfunctions \(h_N\) and identify the limit, in Sect. 5 we prove Theorem 2.1.

3 Estimates on the Eigenfunctions and the Eigenvalues

Theorem 3.1

The operator \(T_N\) defined in (2.2) has a maximal positive eigenvalue \(\lambda _N\) and a positive normalized eigenvector \(h_N(s)\in \ell ^{2}({\mathbb {Z}})\) as in (2.3) with the following properties:

(i) \(h_N\) is a symmetric function.

(ii) \(\Vert h_N\Vert _\infty \leqslant 1\) for all N.

(iii) There exists c so that \(\displaystyle {1- \frac{c}{\sqrt{N}} \leqslant \lambda _N < 1}\).

Proof

That \(h_N(s)\) is positive follows from the Krein–Rutman theorem [11]; moreover \(\lambda _N\) is not degenerate, i.e. its eigenspace is one-dimensional. The symmetry of \(h_N\) follows from the symmetry of \(T_N\), since \(h_N(-s)\) is also an eigenfunction for \(\lambda _N\).

The \(\ell _\infty \) bound follows from

The upper bound in (iii) easily follows from

having used that \(\sum _{s} h_N(s)^2 = 1\).

To prove the lower bound in (iii) we use the variational formula

By choosing h with \(\sum _{s} h(s)^2 = 1\), and using the inequality \(e^{-x} \geqslant 1 - x\), we have a lower bound

Observe that, since \(\sum _{s} h(s)^2 = 1\),

Thus

For \(\alpha >0\), we choose \(h(s) = h_\alpha (s) := C_{\alpha } e^{- \alpha s^2/4}\), with \(C_\alpha = \left( \sum _s e^{-\alpha s^2/2}\right) ^{-1/2} \). Observe that for \(\alpha \rightarrow 0\)

Thus

We next prove that

To prove (3.6) observe that \(h_\alpha (s) h_\alpha (s+\tau ) = h_\alpha (s)^2 e^{-\alpha \tau ^2/4 - \alpha s \tau /2}\), then

Using again that \(e^{-z} \geqslant 1 - z\) and the parity of \(h_\alpha \) and of \(\pi \) we get

which proves (3.6).

We choose \(\alpha = N^{-1/2}\) and from (3.4), (3.5) and (3.6) we then get

which gives the lower bound. \(\square \)
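The variational lower bound with the Gaussian trial function \(h_\alpha \), \(\alpha =N^{-1/2}\), can be checked numerically. The following Python sketch is an illustration under stated assumptions: the kernel is taken to be \(T_N(s,{\overline{s}})=e^{-s^2/4N}\pi (s-{\overline{s}})e^{-{\overline{s}}^2/4N}\), and \(\pi \) is a hypothetical lazy walk with \(\pi (0)=1/2\), \(\pi (\pm 1)=1/4\). The names `PI` and `rayleigh_quotient` are introduced here for illustration only.

```python
import math

# Hypothetical increment law (an assumption, not from the paper).
PI = {-1: 0.25, 0: 0.5, 1: 0.25}


def rayleigh_quotient(N, alpha):
    """Return <h_alpha, T_N h_alpha> / <h_alpha, h_alpha> for the trial
    function h_alpha(s) = exp(-alpha s^2 / 4) and the ASSUMED kernel
    T_N(s, t) = exp(-s^2/(4N)) * pi(s - t) * exp(-t^2/(4N)).
    """
    # h_alpha^2 decays like exp(-alpha s^2 / 2); beyond the cutoff the
    # contribution is below exp(-72) and is neglected.
    cutoff = int(12 / math.sqrt(alpha)) + 1
    sites = range(-cutoff, cutoff + 1)
    h = {s: math.exp(-alpha * s * s / 4.0) for s in sites}
    norm2 = sum(v * v for v in h.values())

    total = 0.0
    for s in sites:
        ws = math.exp(-s * s / (4.0 * N))
        for tau, prob in PI.items():
            t = s + tau
            if t in h:
                wt = math.exp(-t * t / (4.0 * N))
                total += h[s] * ws * prob * wt * h[t]
    return total / norm2


if __name__ == "__main__":
    for N in (100, 2500, 10000):
        q = rayleigh_quotient(N, N ** -0.5)
        # q is a lower bound for lambda_N; compare with 1 - 1/sqrt(N).
        print(N, q, 1 - 1 / math.sqrt(N))
```

Since the quotient is a lower bound for \(\lambda _N\), values of the form \(1-O(N^{-1/2})\) are what part (iii) of Theorem 3.1 predicts; the constant observed depends on the hypothetical \(\pi \) chosen here.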

Given s let \(s_x\) be the position at x of the random walk starting at s, namely \(\displaystyle {s_x=s+\sum _{k=1}^x \eta _k}\) where \(\{\eta _k\}_k\) are i.i.d. random variables with distribution \(\pi \). By an abuse of notation we will denote by \(\pi \) also the probability distribution of the trajectories of the corresponding random walk and by \({\mathbb {E}}_{s}\) the expectation with respect to the law of the random walk which starts from s.

We will use the local central limit theorem as stated in Theorem (2.1.1) in [12] [see in particular formula (2.5)]. There exists a constant c not depending on n such that for any s:

where

By iterating (2.3) n times we get

Theorem 3.2

There exist positive constants c, C (independent of N) such that

Proof

Below we will write h(s) for the eigenfunction \(h_N(s)\), and \(\lambda \) for \(\lambda _N\).

Because of the symmetry of h, it is enough to consider \(s>0\). From (3.9) we get

To estimate \({\mathbb {E}}_{s}( h^2(s_n))\) we use (3.8),

where K is a constant independent of N.

Thus for \(n=\sqrt{N}\) we get

For \(\alpha \in (0,1)\) we consider

and we split the expectation on the right hand side of (3.13)

Calling \(M_x:= s_x-s\), and \(\Lambda (a)=\log {\mathbb {E}}(e^{a\eta })\) for \(|a|\leqslant a_0\), see (2.1), we get that \( e^{aM_x-x\Lambda (a)}\) is a martingale, so that

Also \(M_{z} \leqslant -\alpha s\) and thus, choosing \(a<0\), we have \(aM_{z} \geqslant -a\alpha s\), so that:

Since \(\Lambda (a)= \frac{1}{2} \sigma ^2 a^2+O(a^4)\) choosing \(a=-\frac{\sqrt{2} (1-\alpha )s}{\sigma N^{1/2}}\) we get

Recalling (3.15), we have

For \(n = \sqrt{N}\) we thus get that there is a constant b so that

From (iii) of Theorem 3.1 there is \(B>0\) so that \(\lambda ^{\sqrt{N}} \geqslant B\), thus from (3.13) and (3.17) we get (3.10). \(\square \)

4 Convergence and Identification of the Limit

We start the section with a preliminary lemma.

Lemma 4.1

There is \(b>0\) so that

Proof

Using that \(\sum _sh_N(s)^2=1\) we have

By (iii) of Theorem 3.1, \(2(1-\lambda _N)\leqslant \frac{2c}{\sqrt{N}} \). By using that \(1-e^x<x\) and that \(\sum _s s^2 h_N(s)\leqslant c'\), by Theorem 3.2 we have

From this (4.1) follows. \(\square \)

Define for \(r\in {\mathbb {R}}\)

Proposition 4.2

The following holds.

(1) The sequence of measures \( {\widetilde{h}}_N^2(r)dr\) in \({\mathbb {R}}\) is tight and any limit measure is absolutely continuous with respect to the Lebesgue measure.

(2) The sequence of functions \({\widetilde{h}}_N(r):=N^{1/8} h_N([r N^{1/4}])\) is sequentially compact in \(L^2({\mathbb {R}})\).

Proof

As a straightforward consequence of Theorem 3.2, we have that

It follows that for any \(\epsilon \) there is k so that \(\displaystyle {\int _{|r|\leqslant k} {\widetilde{h}}_N^2(r)dr\geqslant 1-\epsilon }\), which proves tightness of the sequence of probability measures \( {\widetilde{h}}_N^2(r) dr\) on \({\mathbb {R}}\). From (4.4) we also get that any limit measure must be absolutely continuous.

To prove that the sequence \(( {\widetilde{h}}_N(r))_{N\geqslant 1}\) is sequentially compact in \(L^2({\mathbb {R}})\) we prove below that there exists a constant C such that for any N and any \(\delta >0\):

Assume that \(\pi (1) >0\), then

The condition \(\pi (1) >0\) can be relaxed easily by a slight modification of the above argument.

From (4.4) and (4.5), applying the Kolmogorov–Riesz compactness theorem (see e.g. [10]), we get that \({\widetilde{h}}_N\) is sequentially compact in \(L^2({\mathbb {R}})\). \(\square \)

We next identify the limit.

Proposition 4.3

Any limit point u(r) of \({\widetilde{h}}_N(r)\) in \(L^2\) satisfies in weak form

where \(B_s\) is a Brownian motion with variance \(\sigma ^2\) and with \(B_0=r\). Furthermore, the limit \(\displaystyle {\lambda =\lim _{N\rightarrow \infty } \lambda _N^{\sqrt{N}}}\) exists.

The unique solution of (4.6) (up to a multiplicative constant) is \(u(r)=\exp \{- r^2/2\sigma \}\) and \(\lambda = e^{-\sigma /2}\).

Proof

Given r call \(r_N =[r N^{1/4}]\), iterating (2.3) \(\sqrt{N}\) times (assuming that \(\sqrt{N}\) is an integer) we get

where \({\mathbb {E}}^N_{r_N}\) is the expectation w.r.t. the random walk which starts from \(r_N\).

By the invariance principle,

in law, where \(B_t\) is a standard Brownian motion which starts from 0.

Take a subsequence along which \({\widetilde{h}}_N\) converges strongly in \(L^2({\mathbb {R}})\) and call u(r) the limit point. Choosing a test function \(\varphi \in L^2({\mathbb {R}})\), and denoting \(\pi _n(s) = \pi \left( \sum _{k=1}^{n} \eta _k = s\right) \), we get along that sequence

Since the exponential on the right hand side of (4.7) is a bounded functional of the random walk, from (4.9) we get (along the chosen sequence),

where \({\mathbb {E}}_0 \) is the expectation w.r.t. the law of a standard Brownian motion starting at 0 and the limits are intended in the weak \(L^2\) sense.

Since \({\widetilde{h}}_N\) converges strongly in \(L^2\) (along the subsequence we have chosen) and the expectation on the right hand side of (4.7) has a finite limit, we get that the limit of \(\lambda _N^{\sqrt{N}}\) must exist.

Observe that for a standard Brownian motion \(\{B_s\}_{s\in [0,1]}\) we have that

Furthermore, by Itô’s formula

Thus

that implies

Comparing with (4.6) we identify u(r) and \(\lambda \). \(\square \)
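As a consistency check of the values of u and \(\lambda \) stated in Proposition 4.3, one can verify them directly at the level of the generator. The computation below is a sketch under an assumption: it takes (4.6) to be the Feynman–Kac fixed point equation \(\lambda u(r)={\mathbb {E}}_r\big [e^{-\frac{1}{2}\int _0^1 B_s^2ds}\,u(B_1)\big ]\) for a Brownian motion with variance \(\sigma ^2\), so that the right hand side is \(e^{-L}u\) with \(L\) the operator written in the first line. The symbol \(L\) is introduced here only for this check.

\[
\begin{aligned}
L u(r) &:= -\frac{\sigma ^2}{2}\,u''(r)+\frac{r^2}{2}\,u(r), \qquad u(r)=e^{-r^2/2\sigma },\\
u'(r) &= -\frac{r}{\sigma }\,u(r), \qquad u''(r)=\Big (\frac{r^2}{\sigma ^2}-\frac{1}{\sigma }\Big )u(r),\\
L u(r) &= -\frac{\sigma ^2}{2}\Big (\frac{r^2}{\sigma ^2}-\frac{1}{\sigma }\Big )u(r)+\frac{r^2}{2}\,u(r)=\frac{\sigma }{2}\,u(r),\\
e^{-L}u &= e^{-\sigma /2}\,u .
\end{aligned}
\]

Under this assumption u is a positive eigenfunction of \(e^{-L}\) with eigenvalue \(e^{-\sigma /2}\), in agreement with the values of u and \(\lambda \) given in the proposition.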

We thus have the following corollary of Proposition 4.2 and Proposition 4.3.

Corollary 4.4

The sequence of measures \( {\widetilde{h}}_N^2(r)dr\) in \({\mathbb {R}}\) converges weakly to the Gaussian measure \(g^2(r)dr\) where \(g(r)= (\pi \sigma )^{-1/4} e^{-r^2/2\sigma }\).

Moreover for any \(\psi , \varphi \in C_b({\mathbb {R}})\) and any \(t\in [0,1]\)

where \({\mathbb {E}}_r \) is the expectation w.r.t. the law of the Brownian motion starting at r.

Proof

From Proposition 4.3 we have that any convergent subsequence of \( {\widetilde{h}}_N(r)\) converges in \(L^2({\mathbb {R}})\) to \(c e^{-r^2/2\sigma }\), and since \(\Vert {\widetilde{h}}_N\Vert _{L^2}=1\) we get that c must be equal to \( (\pi \sigma )^{-1/4} \). This together with (1) of Proposition 4.2 concludes the proof.

The proof of (4.12) is an adaptation of (4.10) and (4.11). \(\square \)
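The normalisation constant in Corollary 4.4 and the variance appearing in Theorem 2.1 can be checked with two elementary Gaussian integrals. The following lines are a short worked computation using only the formula for \(g\) given in the corollary.

\[
\begin{aligned}
\int _{{\mathbb {R}}} g^2(r)\,dr &= (\pi \sigma )^{-1/2}\int _{{\mathbb {R}}} e^{-r^2/\sigma }\,dr=(\pi \sigma )^{-1/2}\,(\pi \sigma )^{1/2}=1,\\
\int _{{\mathbb {R}}} r^2 g^2(r)\,dr &= (\pi \sigma )^{-1/2}\int _{{\mathbb {R}}} r^2 e^{-r^2/\sigma }\,dr=(\pi \sigma )^{-1/2}\,\frac{\sigma }{2}\,(\pi \sigma )^{1/2}=\frac{\sigma }{2}.
\end{aligned}
\]

Thus \(g^2(r)dr\) is the centered Gaussian probability measure with variance \(\sigma /2\), which is the stationary variance stated in Theorem 2.1.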

5 Proof of Theorem 2.1

Recall that \({\mathcal {P}}_N\) and \({\mathcal {E}}_N\) denote respectively the law and the expectation in C([0, 1]) of the process \( {\widetilde{S}}_N(t) =N^{-1/4} s_{[tN^{1/2}]} \) induced by the law of the Markov chain with transition probabilities given in (2.5) and initial distribution the invariant measure \({\widetilde{h}}^2_N(r)dr\).

Proposition 5.1

The finite dimensional distributions of \({\widetilde{S}}_N(t)\), \(t\in [0,1]\), converge in law to those of the stationary Ornstein–Uhlenbeck process.

Proof

For any k, any \(0\leqslant \tau _1<\cdots <\tau _k\leqslant 1\) and any collection of continuous bounded functions with compact support \(\varphi _0,\varphi _1, \ldots \varphi _k\), setting \(t_i=\tau _i-\tau _{i-1}\), \(i=1,\ldots ,k\), \(\tau _0=0\) we have

where \(r_i=N^{-1/4}\Big [r_{i-1}+\sum _{x=1}^{[t_i\sqrt{N}]} \eta _x\Big ]\). Then from a repeated use of (4.12) we get

\(\square \)

To conclude the proof of Theorem 2.1 we need to show tightness of \({\mathcal {P}}_N\) in C([0, 1]); this is a consequence of Proposition 5.2 below, see Theorem 12.3, Eq. (12.51) of [3].

Proposition 5.2

There is C so that for all N,

Proof

where \(\pi _n(s) = \pi \left( \sum _{k=1}^{n} \eta _k = s\right) \). By Proposition 2.4.6 in [12], if \(\pi \) is aperiodic with finite 4th moments, as in our case, we have the bound

From this estimate it follows that the right hand side of (5.2) is bounded by

By (3.10) we have that \(\sum _{s} h_N(s) \leqslant N^{1/8}\), and the bound follows. \(\square \)

References

Bertini, L., De Sole, A., Gabrielli, D., Jona-Lasinio, G., Landim, C.: Macroscopic fluctuation theory. Rev. Mod. Phys. 87, 593 (2015)

Bernardin, C., Olla, S.: Fourier law for a microscopic model of heat conduction. J. Stat. Phys. 121, 271–289 (2005)

Billingsley, P.: Convergence of Probability Measures. Wiley, New York (1968)

De Masi, A., Olla, S., Presutti, E.: A note on Fick’s law with phase transitions. J. Stat. Phys. 175, 203–221 (2019)

Derrida, B., Lebowitz, J.L., Speer, E.R.: Free energy functional for nonequilibrium systems: an exactly solvable case. Phys. Rev. Lett. 87, 150601 (2001)

Derrida, B., Lebowitz, J.L., Speer, E.R.: Large deviation of the density profile in the steady state of the open symmetric simple exclusion process. J. Stat. Phys. 107(3/4), 599–634 (2002)

Gallavotti, G.: The phase separation line in the two-dimensional Ising model. Commun. Math. Phys. 27, 103–136 (1972)

Kipnis, C., Marchioro, C., Presutti, E.: Heat flow in an exactly solvable model. J. Stat. Phys. 27, 65–74 (1982)

Ioffe, D., Shlosman, S., Velenik, Y.: An invariance principle to Ferrari–Spohn diffusions. Commun. Math. Phys. 336, 905–932 (2015)

Hanche-Olsen, H., Holden, H.: The Kolmogorov–Riesz compactness theorem. Exp. Math. 28(4), 385–394 (2010). https://doi.org/10.1016/j.exmath.2010.03.001

Krein, M.G., Rutman, M.A.: Linear operators leaving invariant a cone in a Banach space. Am. Math. Soc. Transl. 26, 128 (1950)

Lawler, G.F., Limic, V.: Random Walk: A Modern Introduction. Cambridge Studies in Advanced Mathematics, vol. 123. Cambridge University Press, Cambridge (2010)

Acknowledgements

We thank S. Shlosman for helpful discussions. A.DM acknowledges the very warm hospitality of the University of Paris-Dauphine, where part of this work was performed. This work was partially supported by ANR-15-CE40-0020-01 grant LSD.

Additional information

Communicated by Michael Aizenman.

Dedicated to Joel for his important contributions to the theory of phase transition and interfaces.

Cite this article

De Masi, A., Merola, I. & Olla, S. Interface Fluctuations in Non Equilibrium Stationary States: The SOS Approximation. J Stat Phys 180, 414–426 (2020). https://doi.org/10.1007/s10955-019-02450-w

DOI: https://doi.org/10.1007/s10955-019-02450-w