Abstract

We study the hydrodynamic scaling limit for the Glauber–Kawasaki dynamics. It is known that, if the Kawasaki part is sped up under a diffusive space-time scaling, one can derive in the limit the Allen–Cahn equation, which is a type of reaction–diffusion equation. This paper concerns the scaling in which the Glauber part, which governs the creation and annihilation of particles, is also sped up, but more slowly than the Kawasaki part. Under such a scaling, we derive directly from the particle system the motion by mean curvature for the interfaces separating sparse and dense regions of particles, as a combination of the hydrodynamic and sharp interface limits.


1 Introduction

In this paper, we consider the Glauber–Kawasaki dynamics, that is, the simple exclusion process with an additional effect of creation and annihilation of particles, on a d-dimensional periodic square lattice of size N with \(d\ge 2\), and study its hydrodynamic behavior. We introduce the diffusive space-time scaling for the Kawasaki part. Namely, the time scale of particles performing random walks under the exclusion rule is sped up by \(N^2\). It is known that, if the time scale of the Glauber part stays at O(1), one can derive a reaction–diffusion equation in the limit as \(N\rightarrow \infty \).

This paper discusses the scaling under which the Glauber part is also sped up, by a factor \(K=K(N)\) which is at the mesoscopic level. More precisely, we take K such that \(K\rightarrow \infty \) and \(K \le \text {const}\times (\log N)^{1/2}\), and show that the system exhibits phase separation. In other words, if we choose the rates of creation and annihilation in a proper way, then microscopically the whole region is separated into two regions occupied by different phases called sparse and dense phases, and the macroscopic interface separating these two phases evolves according to the motion by mean curvature.

1.1 Known Result on Hydrodynamic Limit

Before introducing our model, we explain a classical result on the hydrodynamic limit for the Glauber–Kawasaki dynamics in a scaling different from ours. Let \({\mathbb {T}}_N^d :=({\mathbb {Z}}/N{\mathbb {Z}})^d = \{1,2,\ldots ,N\}^d\) be the d-dimensional square lattice of size N with periodic boundary condition. The configuration space is denoted by \(\mathcal {X}_N = \{0,1\}^{{\mathbb {T}}_N^d}\) and its element is described by \(\eta =\{\eta _x\}_{x\in {\mathbb {T}}_N^d}\). In this subsection, we discuss the dynamics with the generator given by \(L_N = N^2L_K+L_G\), where

$$\begin{aligned} L_Kf(\eta )&= \frac{1}{2} \sum _{x,y\in {\mathbb {T}}_N^d: |x-y|=1} \left\{ f\left( \eta ^{x,y}\right) -f(\eta )\right\} , \\ L_Gf(\eta )&= \sum _{x\in {\mathbb {T}}_N^d} c_x(\eta ) \left\{ f\left( \eta ^x\right) -f(\eta )\right\} , \end{aligned}$$

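for a function f on \(\mathcal {X}_N\). The configurations \(\eta ^{x,y}\) and \(\eta ^x\in \mathcal {X}_N\) are defined from \(\eta \in \mathcal {X}_N\) as

$$\begin{aligned} (\eta ^{x,y})_z = {\left\{ \begin{array}{ll} \eta _y &{} \text {if } z=x, \\ \eta _x &{} \text {if } z=y, \\ \eta _z &{} \text {otherwise}, \end{array}\right. } \qquad (\eta ^x)_z = {\left\{ \begin{array}{ll} 1-\eta _z &{} \text {if } z=x, \\ \eta _z &{} \text {otherwise}. \end{array}\right. } \end{aligned}$$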

The flip rate \(c(\eta )\equiv c_0(\eta )\) in the Glauber part is a nonnegative local function on \(\mathcal {X}:=\{0,1\}^{{\mathbb {Z}}^d}\) (regarded as a function on \({\mathcal {X}}_N\) for N large enough), \(c_x(\eta ) = c(\tau _x\eta )\) and \(\tau _x\) is the translation acting on \({\mathcal {X}}\) or \({\mathcal {X}}_N\) defined by \((\tau _x\eta )_z =\eta _{z+x}, z\in {\mathbb {Z}}^d\) or \({\mathbb {T}}_N^d\). In fact, \(c(\eta )\) has the following form:

$$\begin{aligned} c(\eta ) = c^+(\eta )(1-\eta _0) + c^-(\eta )\eta _0, \end{aligned} \tag{1.1}$$

where \(c^+(\eta )\) and \(c^-(\eta )\) represent the rates of creation and annihilation of a particle at \(x=0\), respectively, and both are local functions which do not depend on the occupation variable \(\eta _0\).

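Let \(\eta ^N(t)=\{\eta _x^N(t)\}_{x\in {\mathbb {T}}_N^d}\) be the Markov process on \(\mathcal {X}_N\) generated by \(L_N\). The macroscopically scaled empirical measure on \({\mathbb {T}}^d\), that is \([0,1)^d\) with the periodic boundary, associated with a configuration \(\eta \in \mathcal {X}_N\) is defined by

$$\begin{aligned} \alpha ^N(dv;\eta ) = \frac{1}{N^d} \sum _{x\in {\mathbb {T}}_N^d} \eta _x \delta _{x/N}(dv), \quad v\in {\mathbb {T}}^d, \end{aligned}$$

and we set

$$\begin{aligned} \alpha ^N(t,dv) = \alpha ^N(dv;\eta ^N(t)), \quad t\ge 0. \end{aligned} \tag{1.2}$$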

Then, it is known that the empirical measure \(\alpha ^N(t,dv)\) converges to \(\rho (t,v)dv\) as \(N\rightarrow \infty \) in probability (when tested against a test function on \({\mathbb {T}}^d\)) if this holds at \(t=0\). Here, \(\rho (t,v)\) is the unique weak solution of the reaction–diffusion equation

$$\begin{aligned} \partial _t\rho = \Delta \rho + f(\rho ), \quad v\in {\mathbb {T}}^d, \end{aligned} \tag{1.3}$$

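with the given initial value \(\rho (0)\), where dv is the Lebesgue measure on \({\mathbb {T}}^d\) and

$$\begin{aligned} f(\rho ) = E^{\nu _\rho }\big [(1-2\eta _0)c(\eta )\big ], \end{aligned} \tag{1.4}$$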

where \(\nu _\rho \) is the Bernoulli measure on \({\mathbb {Z}}^d\) with mean \(\rho \in [0,1]\). This was shown by De Masi et al. [8]; see also [7] and [13] for further developments in the Glauber–Kawasaki dynamics. We use the same letter f for functions on \(\mathcal {X}_N\) and the reaction term defined by (1.4), but these should be clearly distinguished.

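From (1.1), the reaction term can be rewritten as

$$\begin{aligned} f(\rho ) = E^{\nu _\rho }\big [c^+(\eta )(1-\eta _0) - c^-(\eta )\eta _0\big ] = c^+(\rho )(1-\rho ) - c^-(\rho )\rho , \end{aligned}$$

if \(c^\pm \) are given as the finite sum of the form:

$$\begin{aligned} c^\pm (\eta ) = \sum _{0\notin \Lambda \Subset {\mathbb {Z}}^d} c_\Lambda ^\pm \prod _{x\in \Lambda } \eta _x, \end{aligned} \tag{1.5}$$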

with some constants \(c_\Lambda ^\pm \in {\mathbb {R}}\). Note that \(c^\pm (\rho ) := E^{\nu _\rho }[c^\pm (\eta )]\) are equal to (1.5) with \(\eta _x\) replaced by \(\rho \). We give an example of the flip rate \(c(\eta )\) and the corresponding reaction term \(f(\rho )\) determined by (1.4).

Example 1.1

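Consider \(c^\pm (\eta )\) in (1.1) of the form

$$\begin{aligned} c^\pm (\eta ) = a^\pm \eta _{n_1}\eta _{n_2} + b^\pm \eta _{n_1} + c^\pm , \end{aligned} \tag{1.6}$$

with \(a^\pm , b^\pm , c^\pm \in {\mathbb {R}}\) and \(n_1, n_2 \in {\mathbb {Z}}^d\) such that the three points \(\{n_1, n_2, 0\}\) are distinct. Then,

$$\begin{aligned} f(\rho ) = -(a^++a^-)\rho ^3 + (a^+-b^+-b^-)\rho ^2 + (b^+-c^+-c^-)\rho + c^+. \end{aligned} \tag{1.7}$$

In particular, under a suitable choice of the six constants \(a^\pm , b^\pm , c^\pm \), one can have

$$\begin{aligned} f(\rho ) = -C(\rho -\alpha _1)(\rho -\alpha _*)(\rho -\alpha _2), \end{aligned} \tag{1.8}$$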

with some \(C>0\), \(0<\alpha _1<\alpha _*< \alpha _2<1\) satisfying \(\alpha _1+\alpha _2=2\alpha _*\); see the example in Sect. 8 of [13] with \(\alpha _*=1/2\), given in a one-dimensional setting. Namely, \(f(\rho )\) is bistable with stable points \(\rho = \alpha _1, \alpha _2\) and unstable point \(\alpha _*\), and satisfies the balance condition \(\int _{\alpha _1}^{\alpha _2}f(\rho )d\rho =0\).

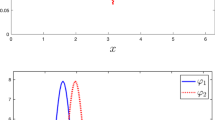

For the reaction term f of the form (1.8), the Eq. (1.3) considered on \({\mathbb {R}}\) instead of \({\mathbb {T}}^d\) admits a traveling wave solution which connects two different stable points \(\alpha _1, \alpha _2\), and its speed is 0 due to the balance condition. The traveling wave solution with speed 0 is called a standing wave. See Sect. 4.2 for details.
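To make the standing wave concrete, the following minimal sketch checks numerically that a tanh profile solves \(U''+f(U)=0\) for a balanced cubic nonlinearity. The closed form \(U(z)=\alpha _*+a\tanh (a\sqrt{C/2}\,z)\) with \(a=(\alpha _2-\alpha _1)/2\) is the classical profile for \(f(\rho )=-C(\rho -\alpha _1)(\rho -\alpha _*)(\rho -\alpha _2)\) and is not taken from the text; the sketch also assumes that the diffusion coefficient is 1 and uses the illustrative values \(C=32\), \(\alpha _1=1/4\), \(\alpha _*=1/2\), \(\alpha _2=3/4\).

```python
import math

# Illustrative constants (assumption): balanced cubic with alpha_1 + alpha_2 = 2 * alpha_*
C, A1, AS, A2 = 32.0, 0.25, 0.5, 0.75


def f(rho):
    """Bistable cubic reaction term, assumed to be of the factored form."""
    return -C * (rho - A1) * (rho - AS) * (rho - A2)


def standing_wave(z):
    """Candidate speed-zero traveling wave connecting alpha_1 (z -> -inf) and alpha_2 (z -> +inf)."""
    a = (A2 - A1) / 2.0
    k = a * math.sqrt(C / 2.0)
    return AS + a * math.tanh(k * z)


def residual(z, h=1e-3):
    """U''(z) + f(U(z)), with U'' approximated by a central second difference."""
    second = (standing_wave(z + h) - 2.0 * standing_wave(z) + standing_wave(z - h)) / (h * h)
    return second + f(standing_wave(z))


# Largest residual on a grid of points in [-5, 5]; it should be at the level of the
# finite-difference error only.
max_residual = max(abs(residual(0.1 * i)) for i in range(-50, 51))
```

The residual is nonzero only because of the finite-difference approximation; shrinking `h` moderately reduces it further until rounding errors dominate.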

1.2 Our Model and Main Result

The model we are concerned with in this paper is the Glauber–Kawasaki dynamics \(\eta ^N(t)=\{\eta _x^N(t)\}_{x\in {\mathbb {T}}_N^d}\) on \(\mathcal X_N\) with the generator \(L_N = N^2 L_K + K L_G\) with another scaling parameter \(K>0\). The parameter K depends on N as \(K=K(N)\) and tends to \(\infty \) as \(N\rightarrow \infty \).
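For readers who want to experiment with the dynamics, here is a minimal simulation sketch on a two-dimensional torus. It is not the construction used in the paper: it assumes that each nearest-neighbour bond exchanges its occupation variables at rate \(N^2\), that the flip rate at x is \(K c_x(\eta )\) with the illustrative rates \(c^+=32\eta _{n_1}\eta _{n_2}+3\) and \(c^-=-16\eta _{n_1}+19\), and that \(n_1=(1,0)\), \(n_2=(0,1)\). The function name `simulate` and the thinning bound `C_MAX` are hypothetical helpers introduced only for this example.

```python
import random


def creation_rate(e1, e2):
    """c^+ evaluated at (eta_{x+n1}, eta_{x+n2}); illustrative choice."""
    return 32 * e1 * e2 + 3


def annihilation_rate(e1):
    """c^- evaluated at eta_{x+n1}; illustrative choice."""
    return -16 * e1 + 19


C_MAX = 35  # upper bound for both rates above, used for thinning


def simulate(N, K, t_end, eta, rng, n1=(1, 0), n2=(0, 1)):
    """Run the dynamics on the N x N torus up to time t_end.

    eta is a dict {(i, j): 0 or 1}; it is updated in place and returned.
    """
    sites = N * N
    bond_rate = N * N * 2 * sites   # rate N^2 per bond, 2 * N^2 bonds in d = 2
    flip_bound = K * C_MAX * sites  # dominating rate for the Glauber part
    total = bond_rate + flip_bound
    t = rng.expovariate(total)
    while t < t_end:
        x = (rng.randrange(N), rng.randrange(N))
        if rng.random() * total < bond_rate:
            # Kawasaki step: exchange along a uniformly chosen bond starting at x
            if rng.random() < 0.5:
                y = ((x[0] + 1) % N, x[1])
            else:
                y = (x[0], (x[1] + 1) % N)
            eta[x], eta[y] = eta[y], eta[x]
        else:
            # Glauber step: propose a flip at x and accept with probability rate / C_MAX
            e1 = eta[((x[0] + n1[0]) % N, (x[1] + n1[1]) % N)]
            e2 = eta[((x[0] + n2[0]) % N, (x[1] + n2[1]) % N)]
            rate = annihilation_rate(e1) if eta[x] else creation_rate(e1, e2)
            if rng.random() * C_MAX < rate:
                eta[x] = 1 - eta[x]
        t += rng.expovariate(total)
    return eta
```

With `K = 0` only exchanges occur, so the number of particles is conserved, which gives a simple consistency check of the sketch.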

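If we fix K independently of N, then, as we saw in Sect. 1.1, we obtain the reaction–diffusion equation for \(\rho \equiv \rho ^K(t,v)\) in the hydrodynamic limit:

$$\begin{aligned} \partial _t\rho = \Delta \rho + K f(\rho ), \quad v\in {\mathbb {T}}^d. \end{aligned} \tag{1.9}$$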

The partial differential equation (PDE) (1.9) with the large reaction term Kf, which is bistable and satisfies the balance condition as in Example 1.1, is called the Allen–Cahn equation. It is known that as \(K\rightarrow \infty \) the Allen–Cahn equation leads to the motion by mean curvature; see Sect. 4. Our goal is to derive it directly from the particle system.

For our main theorem, we assume the following five conditions on the creation and annihilation rates \(c^\pm (\eta )\) and the mean \(u^N(0,x) = E[\eta _x^N(0)]\), \(x\in {\mathbb {T}}_N^d\) of the initial distribution of our process.

- (A1) \(c^\pm (\eta )\) have the form (1.6) with \(n_1, n_2 \in {\mathbb {Z}}^d\), both of which have at least one positive component, and the three points \(\{n_1,n_2,0\}\) are distinct.

- (A2) The corresponding f defined by (1.4) or equivalently by (1.7) is bistable, that is, f has exactly three zeros \(0<\alpha _1<\alpha _*<\alpha _2<1\) and \(f'(\alpha _1)<0\), \(f'(\alpha _2)<0\) hold, and it satisfies the balance condition \(\int _{\alpha _1}^{\alpha _2}f(\rho ) d\rho =0\).

- (A3) \(c^+(u)\) is increasing and \(c^-(u)\) is decreasing in \(u=\{u_{n_k}\}_{k=1}^2 \in [0,1]^2\) under the partial order \(u\ge v\) defined by \(u_{n_k}\ge v_{n_k}\) for \(k=1,2\), where \(c^\pm (u)\) are defined by (1.6) with \(\eta _{n_k}\) replaced by \(u_{n_k}\).

- (A4) \(\Vert \nabla ^N u^N(0,x)\Vert \le C_0 K \, \big (\!=C_0K(N)\big )\) for some \(C_0>0\), where \(\nabla ^N u(x) := \{N(u(x+e_i)-u(x))\}_{i=1}^d\) with the unit vectors \(e_i\in {\mathbb {Z}}^d\) of the direction i and \(\Vert \cdot \Vert \) stands for the standard Euclidean norm of \({\mathbb {R}}^d\).

- (A5) \(u^N(0,v), v\in {\mathbb {T}}^d\) defined by (2.5) from \(u^N(0,x)\) satisfies the bound (4.3) at \(t=0\).

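The condition (A5) implies that a smooth hypersurface \(\Gamma _0\) in \({\mathbb {T}}^d\) without boundary exists and \(u^N(0,v)\) defined by (2.5) converges to \(\chi _{\Gamma _0}(v)\) weakly in \(L^2({\mathbb {T}}^d)\) as \(N\rightarrow \infty \); see (4.7) with \(t=0\). For a closed hypersurface \(\Gamma \) which separates \({\mathbb {T}}^d\) into two disjoint regions, we denote

$$\begin{aligned} \chi _\Gamma (v) = {\left\{ \begin{array}{ll} \alpha _1 &{} \text {for } v \text { on one side of } \Gamma , \\ \alpha _2 &{} \text {for } v \text { on the other side of } \Gamma . \end{array}\right. } \end{aligned} \tag{1.10}$$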

It is known that a smooth family of closed hypersurfaces \(\{\Gamma _t\}_{t\in [0,T]}\) in \({\mathbb {T}}^d\), which starts from \(\Gamma _0\) and evolves according to the motion by mean curvature (4.1), exists until some time \(T>0\); recall \(d \ge 2\) and see the beginning of Sect. 4 for details. Note that the sides of \(\Gamma _t\) in (1.10) with \(\Gamma =\Gamma _t\) are preserved under the time evolution and determined continuously from those of \(\Gamma _0\). We need the smoothness of \(\Gamma _t\) to construct super and sub solutions of the discretized hydrodynamic equation in Theorem 4.6.

Let \(\mu _0^N\) be the distribution of \(\eta ^N(0)\) on \(\mathcal {X}_N\) and let \(\nu _0^N\) be the Bernoulli measure on \(\mathcal {X}_N\) with mean \(u^N(0) = \{u^N(0,x)\}_{x\in {\mathbb {T}}_N^d}\). Recall that \(u^N(0,x)\) is the mean of \(\eta _x\) under \(\mu _0^N\) for each \( x\in {\mathbb {T}}_N^d\). Our remaining condition, which depends on a parameter \(\delta >0\), is the following.

- (A6)\(_\delta \): The relative entropy at \(t=0\) defined by (2.4) behaves as \(H(\mu _0^N|\nu _0^N) = O(N^{d-\delta _0})\) as \(N\rightarrow \infty \) with some \(\delta _0>0\) and \(K=K(N) \rightarrow \infty \) satisfies \(1\le K(N) \le \delta (\log N)^{1/2}\).

The main result of this paper is now stated as follows. Recall that \(\alpha ^N(t)\) is defined by (1.2).

Theorem 1.1

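Assume the six conditions (A1)–(A6)\(_\delta \) with \(\delta >0\) chosen small enough depending on T. Then, we have

$$\begin{aligned} \lim _{N\rightarrow \infty } P\left( \left| \langle \alpha ^N(t),\varphi \rangle - \langle \chi _{\Gamma _t},\varphi \rangle \right| >\varepsilon \right) =0, \quad t\in [0,T], \end{aligned} \tag{1.11}$$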

for every \(\varepsilon >0\) and \(\varphi \in C^\infty ({\mathbb {T}}^d)\), where \(\langle \alpha ,\varphi \rangle \) or \(\langle \chi _\Gamma ,\varphi \rangle \) denote the integrals on \({\mathbb {T}}^d\) of \(\varphi \) with respect to the measures \(\alpha \) or \(\chi _\Gamma (v)dv\), respectively.

The proof of Theorem 1.1 consists of two parts, that is, the probabilistic part in Sects. 2 and 3, and the PDE part in Sect. 4. In the probabilistic part, we apply the relative entropy method of Jara and Menezes [24, 25], which is in a sense a combination of the methods due to Guo et al. [22] and Yau [31]. In the PDE part, we show the convergence of solutions of the discretized hydrodynamic equation (2.2) with the limit governed by the motion by mean curvature. A more precise rate of convergence in (1.11) is given in Remark 3.1.

We give some explanation of our conditions. If we take \(a^+=32, b^+=0, c^+=3, a^-=0, b^-=-16, c^-=19\) in Example 1.1, we have \(c^+(u) =32u_{n_1}u_{n_2}+3\), \(c^-(u) = -16u_{n_1}+19\) and \(f(\rho )\) has the form (1.8) with \(C=32\), \(\alpha _1=1/4\), \(\alpha _*=1/2\) and \(\alpha _2=3/4\), so that the conditions (A1)–(A3) are satisfied. For simplicity, we discuss in this paper \(c^\pm (\eta )\) of the form (1.6) only; however, one can generalize our result to more general \(c^\pm (\eta )\) given as in (1.5). The corresponding f may have several zeros, but we may restrict our arguments in the PDE part to a subinterval of [0, 1] on which the conditions (A2) and (A3) are satisfied. The entropy condition in (A6)\(_\delta \) is satisfied, for example, if \(d\mu _0^N = g_Nd\nu _0^N\) and \(\log \Vert g_N\Vert _\infty \le C N^{d-\delta _0}\) holds for some \(C>0\).
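The claims about this specific choice of constants can be checked by exact rational arithmetic. The sketch below evaluates \(f(\rho )=(1-\rho )c^+(\rho )-\rho c^-(\rho )\), where \(c^\pm (\rho )\) are obtained by replacing every occupation variable with \(\rho \), compares it with \(-32(\rho -1/4)(\rho -1/2)(\rho -3/4)\), verifies the balance condition through an explicit antiderivative, and tests the monotonicity required in (A3) on a grid. The function names and the grid are illustrative choices, not part of the paper.

```python
from fractions import Fraction as Fr

# Constants of the example in the text
A_PLUS, B_PLUS, C_PLUS = 32, 0, 3
A_MINUS, B_MINUS, C_MINUS = 0, -16, 19


def c_plus(u1, u2):
    """Creation rate with eta_{n_1}, eta_{n_2} replaced by u1, u2."""
    return A_PLUS * u1 * u2 + B_PLUS * u1 + C_PLUS


def c_minus(u1, u2):
    """Annihilation rate with eta_{n_1}, eta_{n_2} replaced by u1, u2."""
    return A_MINUS * u1 * u2 + B_MINUS * u1 + C_MINUS


def f(rho):
    """Reaction term (1 - rho) c^+(rho) - rho c^-(rho)."""
    return (1 - rho) * c_plus(rho, rho) - rho * c_minus(rho, rho)


def factored(rho):
    """Claimed factored form with C = 32 and zeros 1/4, 1/2, 3/4."""
    return -32 * (rho - Fr(1, 4)) * (rho - Fr(1, 2)) * (rho - Fr(3, 4))


def antiderivative(rho):
    """Antiderivative of -32 rho^3 + 48 rho^2 - 22 rho + 3."""
    return -8 * rho**4 + 16 * rho**3 - 11 * rho**2 + 3 * rho


grid = [Fr(k, 20) for k in range(21)]

# Two cubics agreeing at 21 points are identical
same_cubic = all(f(r) == factored(r) for r in grid)

# Balance condition: integral of f from alpha_1 to alpha_2
balance = antiderivative(Fr(3, 4)) - antiderivative(Fr(1, 4))

# (A3): c^+ nondecreasing and c^- nonincreasing in each argument on [0, 1]^2
monotone = all(
    c_plus(grid[i + 1], v) >= c_plus(grid[i], v)
    and c_plus(v, grid[i + 1]) >= c_plus(v, grid[i])
    and c_minus(grid[i + 1], v) <= c_minus(grid[i], v)
    and c_minus(v, grid[i + 1]) <= c_minus(v, grid[i])
    for i in range(20)
    for v in grid
)
```

Using `Fraction` avoids rounding, so the equalities are tested exactly rather than up to a tolerance.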

In the probabilistic part, we only need the following condition weaker than (A5).

- (A7) \(u_- \le u^N(0,x) \le u_+\) for some \(0<u_-<u_+<1\).

For convenience, we take \(u_\pm \) such that \(0<u_-<\alpha _1<\alpha _2<u_+<1\) by making \(u_-\) smaller and \(u_+\) larger if necessary; see the comments given below Theorem 4.1. The condition (A7) with this choice of \(u_\pm \) is called (A7)\('\). Under this choice of \(u_\pm \), the condition (A3) can be weakened and it is sufficient if it holds for \(u\in [u_-,u_+]^2\). The conditions (A1), (A4), (A6)\(_\delta \), (A7) are used in the probabilistic part, while (A2), (A3), (A5) are used in the PDE part. To be precise, (A2), (A3) are used also in the probabilistic part but in a less important way; see the comments below Theorem 2.1.

The derivation of the motion by mean curvature and the related problems of pattern formation in interacting particle systems were discussed by Spohn [29] rather heuristically, and by De Masi et al. [11], Katsoulakis and Souganidis [26], Giacomin [21] for Glauber–Kawasaki dynamics. De Masi et al. [9, 10], Katsoulakis and Souganidis [27] studied Glauber dynamics with Kac type long range mean field interaction. Related problems are discussed by Caputo et al. [3, 4]. A similar idea is used in Hernández et al. [23] to derive the fast diffusion equation from zero-range processes. Bertini et al. [2] discussed the problem from the viewpoint of large deviation functionals.

In particular, the results of [26] are close to ours. They consider the Glauber–Kawasaki dynamics with generator \(\lambda ^{-2}(\varepsilon ^{-2}L_K+L_G)\) under the spatial scaling \((\lambda \varepsilon )^{-1}\), where \(\lambda =\lambda (\varepsilon ) \, (\downarrow 0)\) should satisfy the condition \(\lim _{\varepsilon \downarrow 0} \varepsilon ^{-\zeta ^*}\lambda (\varepsilon ) = \infty \) with some \(\zeta ^*>0\). If we write \(N=(\lambda \varepsilon )^{-1}\) as in our case, the generator becomes \(N^2L_K+\lambda ^{-2}L_G\) so that \(\lambda ^{-2}\) plays a role similar to our \(K=K(N)\). They analyze the limit of correlation functions.

On the other hand, our analysis makes it possible to study the limit of the empirical measures, which is more natural in the study of the hydrodynamic limit, under a milder assumption on the initial distribution \(\mu _0^N\). Moreover, we believe that our relative entropy method has the advantage of working for a wide class of models in parallel. Furthermore, this method is applicable to the study of the fast-reaction limit for two-component Kawasaki dynamics, which leads to the two-phase Stefan problem; see [12].

Finally, we make a brief comment on the case where f is unbalanced: \(\int _{\alpha _1}^{\alpha _2}f(\rho )d\rho \not =0\). For such f, the proper time scale is shorter and turns out to be \(K^{-1/2}t\), so that Eq. (1.9) is rescaled as

$$\begin{aligned} \partial _t\rho = K^{-1/2}\Delta \rho + K^{1/2} f(\rho ), \quad v\in {\mathbb {T}}^d. \end{aligned}$$

It is known that this equation exhibits a different behavior in the sharp interface limit as \(K\rightarrow \infty \), see p. 95 of [16]. The present paper does not discuss this case.

2 Relative Entropy Method

We start the probabilistic part by formulating Theorem 2.1. This gives an estimate on the relative entropy of our system with respect to the local equilibria and implies the weak law of large numbers (2.6) as its consequence. We compute the time derivative of the relative entropy to give the proof of Theorem 2.1. In Sects. 2 and 3, it is unnecessary to assume \(d\ge 2\), so the discussion there covers all \(d\ge 1\), including \(d=1\).

2.1 The Entropy Estimate

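From (1.1), the flip rate \(c_x(\eta ) \equiv c(\tau _x\eta )\) of the Glauber part has the form

$$\begin{aligned} c_x(\eta ) = c_x^+(\eta )(1-\eta _x) + c_x^-(\eta )\eta _x, \end{aligned} \tag{2.1}$$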

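where \(c_x^\pm (\eta )= c^\pm (\tau _x\eta )\) with \(c^\pm (\eta )\) of the form (1.5). Let \(u^N(t) = \{u^N(t,x)\in [0,1]\}_{x\in {\mathbb {T}}_N^d}\) be the solution of the discretized hydrodynamic equation:

$$\begin{aligned} \partial _t u^N(t,x) = \Delta ^N u^N(t,x) + K f^N(x,u^N(t)), \quad x\in {\mathbb {T}}_N^d, \end{aligned} \tag{2.2}$$

where \(f^N(x,u)\) is defined by

$$\begin{aligned} f^N(x,u) = (1-u_x) c_x^+(u) - u_x c_x^-(u) \end{aligned} \tag{2.3}$$

for \(u\equiv \{u_x = u(x)\}_{x\in {\mathbb {T}}_N^d}\) and

$$\begin{aligned} \Delta ^N u(x) := N^2 \sum _{y\in {\mathbb {T}}_N^d: |y-x|=1} \big ( u(y)-u(x)\big ). \end{aligned}$$

Note that \(c_x^\pm (u):= E^{\nu _u}[c^\pm _x(\eta )]\) are given by (1.5) with \(\eta _x\) replaced by \(u_x\) and \(\nu _u\) is the Bernoulli measure with non-constant mean \(u=u(\cdot )\). In the following, we assume that \(c^\pm (\eta )\) have the form (1.6) and, in this case, we have

$$\begin{aligned} f^N(x,u) = (1-u_x)\big ( a^+u_{x+n_1}u_{x+n_2} + b^+u_{x+n_1} + c^+\big ) - u_x \big ( a^-u_{x+n_1}u_{x+n_2} + b^-u_{x+n_1} + c^-\big ). \end{aligned}$$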

Let \(\mu \) and \(\nu \) be two probability measures on \(\mathcal {X}_N\). We define the relative entropy of \(\mu \) with respect to \(\nu \) by

$$\begin{aligned} H(\mu |\nu ) := \int _{\mathcal {X}_N} \frac{d\mu }{d\nu } \log \frac{d\mu }{d\nu } \, d\nu , \end{aligned} \tag{2.4}$$

if \(\mu \) is absolutely continuous with respect to \(\nu \), and \(H(\mu |\nu ) :=\infty \) otherwise. Let \(\mu _t^N\) be the distribution of \(\eta ^N(t)\) on \(\mathcal {X}_N\) and let \(\nu _t^N\) be the Bernoulli measure on \(\mathcal {X}_N\) with mean \(u^N(t) = \{u^N(t,x)\}_{x\in {\mathbb {T}}_N^d}\). The following result plays an essential role in proving Theorem 1.1.

Theorem 2.1

We assume the conditions (A1)–(A4) and (A7)\('\). Then, if (A6)\(_\delta \) holds with small enough \(\delta >0\), we have

$$\begin{aligned} H(\mu _t^N|\nu _t^N) = o(N^d), \quad t\in [0,T], \end{aligned}$$

as \(N\rightarrow \infty \). The constant \(\delta >0\) depends on \(T>0\).

Note that the condition (A7)\('\), i.e. (A7) with an additional condition on the choice of \(u_\pm \), combined with the comparison theorem implies that the solution \(u^N(t,x)\) of the discretized hydrodynamic equation (2.2) satisfies that \(u_- \le u^N(t,x) \le u_+\) for all \(t\in [0,T]\) and \(x\in {\mathbb {T}}_N^d\); see the comments given below Theorem 4.1. The conditions (A2) and (A3) are used only to show this bound for \(u^N(t,x)\).
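The invariance of the interval \([u_-,u_+]\) can be observed numerically. The sketch below integrates an equation of the form \(\partial _t u = N^2\Delta u + K f^N(x,u)\) by the explicit Euler method on a one-dimensional torus, with \((\Delta u)_x=u_{x+1}+u_{x-1}-2u_x\), \(f^N(x,u)=(1-u_x)c^+ - u_xc^-\), the illustrative rates \(c^+=32u_{x+n_1}u_{x+n_2}+3\), \(c^-=-16u_{x+n_1}+19\) and the assumed shifts \(n_1=1\), \(n_2=2\). Explicit Euler and the step-size rule are choices made for this example, not the method of the paper; the step is taken small enough that each update is nondecreasing in every entry of u, which is the discrete analogue of the comparison argument.

```python
def c_plus(u1, u2):
    """Illustrative creation rate evaluated on mean values."""
    return 32.0 * u1 * u2 + 3.0


def c_minus(u1):
    """Illustrative annihilation rate evaluated on mean values."""
    return -16.0 * u1 + 19.0


def euler_step(u, N, K, dt, n1=1, n2=2):
    """One explicit Euler step of du/dt = N^2 * (discrete Laplacian) + K * f^N on the 1-d torus."""
    new = []
    for x in range(N):
        lap = u[(x + 1) % N] + u[(x - 1) % N] - 2.0 * u[x]
        u1, u2 = u[(x + n1) % N], u[(x + n2) % N]
        reaction = (1.0 - u[x]) * c_plus(u1, u2) - u[x] * c_minus(u1)
        new.append(u[x] + dt * (N * N * lap + K * reaction))
    return new


def solve(u0, N, K, T):
    """Integrate up to (at least) time T and return the final profile."""
    # c^+ + c^- <= 54 on [0, 1]^2, so this dt keeps the scheme monotone
    dt = 0.5 / (2.0 * N * N + 54.0 * K)
    u, t = list(u0), 0.0
    while t < T:
        u = euler_step(u, N, K, dt)
        t += dt
    return u
```

For instance, starting from a step profile with values 0.1 and 0.9 (so that \(u_-=0.1<1/4\) and \(u_+=0.9>3/4\)), the computed solution stays in \([0.1,0.9]\) up to rounding.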

2.2 Consequence of Theorem 2.1

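We define the macroscopic function \(u^N(t,v), v\in {\mathbb {T}}^d\) associated with the microscopic function \(u^N(t,x), x\in {\mathbb {T}}_N^d\) as a step function

$$\begin{aligned} u^N(t,v) = \sum _{x\in {\mathbb {T}}_N^d} u^N(t,x) \mathbf{1}_{B(\frac{x}{N},\frac{1}{N})}(v), \quad v\in {\mathbb {T}}^d, \end{aligned} \tag{2.5}$$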

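where \(B(\frac{x}{N},\frac{1}{N}) = \prod _{i=1}^d [\frac{x_i}{N}-\frac{1}{2N}, \frac{x_i}{N}+\frac{1}{2N})\) is the box with center \(x/N\) and side length \(1/N\). Then the entropy inequality (see Proposition A1.8.2 of [28] or Sect. 3.2.3 of [17])

$$\begin{aligned} \mu _t^N(\mathcal A) \le \frac{\log 2 + H(\mu _t^N|\nu _t^N)}{\log \{1+1/\nu _t^N(\mathcal A)\}}, \quad \mathcal A\subset \mathcal X_N, \end{aligned}$$

combined with Theorem 2.1 and Proposition 2.2 stated below shows that

$$\begin{aligned} \lim _{N\rightarrow \infty } \mu _t^N(\mathcal A_{N,t}^\varepsilon ) =0 \end{aligned} \tag{2.6}$$

for every \(\varepsilon >0\), where

$$\begin{aligned} \mathcal A_{N,t}^\varepsilon := \left\{ \eta \in \mathcal X_N; \left| \langle \alpha ^N,\varphi \rangle - \langle u^N(t,\cdot ),\varphi \rangle \right| >\varepsilon \right\} , \quad \varphi \in C^\infty ({\mathbb {T}}^d). \end{aligned}$$

Proposition 2.2

There exists \(C=C_\varepsilon >0\) such that

$$\begin{aligned} \nu _t^N(\mathcal A_{N,t}^\varepsilon ) \le e^{-CN^d}. \end{aligned}$$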

Proof

Set and observe

as \(N\rightarrow \infty \). Then, we have

for every \(\gamma >0\), where we used the elementary inequality \(e^{|x|}\le e^x+e^{-x}\) to obtain the second inequality. By the independence of \(\{\eta _x\}_{x\in {\mathbb {T}}_N^d}\) under \(\nu _t^N\), the expectations inside the last braces can be written as

where \(u_x = u^N(t,x)\) and \(\varphi _x = \varphi (x/N)\). Applying Taylor's formula at \(\gamma =0\), we see

for \(0<\gamma \le 1\). Thus we obtain

for \(\gamma >0\) sufficiently small. This shows the conclusion. \(\square \)

2.3 Time Derivative of the Relative Entropy

For a function f on \(\mathcal {X}_N\) and a measure \(\nu \) on \(\mathcal {X}_N\), set

where

Take a family of probability measures \(\{\nu _t\}_{t\ge 0}\) on \(\mathcal {X}_N\) differentiable in t and a probability measure m on \(\mathcal {X}_N\) as a reference measure, and set \(\psi _t(\eta ) := (d\nu _t/dm)(\eta )\). Assume that these measures have full supports in \(\mathcal {X}_N\). We denote the adjoint of an operator L on \(L^2(m)\) by \(L^{*,m}\) in general. Then we have the following proposition called Yau’s inequality; see Theorem 4.2 of [17] or Lemma A.1 of [25] for the proof.

Proposition 2.3

where \(\mathbf{1}\) stands for the constant function \(\mathbf{1}(\eta )\equiv 1\), \(\eta \in \mathcal X_N\).

We apply Proposition 2.3 with \(\nu _t=\nu _t^N\) to prove Theorem 2.1.

2.4 Computation of \(L_N^{*,\nu _t^N}{} \mathbf{1} - \partial _t \log \psi _t\)

We compute the integrand of the second term in the right hand side of (2.8). Similar computations are made in the proofs of Lemma 3.1 of [20], Appendix A.3 of [25] and Lemmas 4.4–4.6 of [17]. We introduce the centered variable \(\bar{\eta }_x\) and the normalized centered variable \(\omega _x\) of \(\eta _x\) under the Bernoulli measure with mean \(u(\cdot ) = \{u_x\}_{x\in {\mathbb {T}}_N^d}\) as follows:

$$\begin{aligned} \bar{\eta }_x = \eta _x - u_x \quad \text {and} \quad \omega _x = \frac{\bar{\eta }_x}{\chi (u_x)}, \end{aligned}$$

where \(\chi (\rho ) = \rho (1-\rho )\), \(\rho \in [0,1]\). We first compute the contribution of the Kawasaki part.

Lemma 2.4

Let \(\nu = \nu _{u(\cdot )}\) be a Bernoulli measure on \(\mathcal {X}_N\) with mean \(u(\cdot ) = \{u_x\}_{x\in {\mathbb {T}}_N^d}\). Then, we have

where \((\Delta u)_x = \sum _{y\in {\mathbb {T}}_N^d:|y-x|=1} (u_y-u_x)\).

Proof

Take a test function f on \(\mathcal {X}_N\) and compute

where \(\mathbf{1}_{\mathcal A}\) denotes the indicator function of a set \(\mathcal A\subset \mathcal X_N\). To obtain the second line, we have applied the change of variables \(\eta ^{x,y}\mapsto \eta \), and then the identity

and finally the symmetry in x and y. Since one can rewrite \(\mathbf{1}_{\{\eta _x=1,\eta _y=0\}}\) as \(\bar{\eta }_x(1-\bar{\eta }_y) -\bar{\eta }_x u_y - \bar{\eta }_y u_x + u_x(1 -u_y)\), we have

However, the sum of the last term vanishes, while the sum of the second and third terms is computed by exchanging the role of x and y in the third term and in the end we obtain

The right hand side in (2.10) can be further rewritten as

by computing the coefficient of \(\bar{\eta }_x\) in (2.10) as

which gives \((\Delta u)_x\) by taking the sum in y. Finally, the first term in (2.11) can be symmetrized in x and y and we obtain (2.9). \(\square \)
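The rewriting of the indicator \(\mathbf{1}_{\{\eta _x=1,\eta _y=0\}}\) in terms of the centered variables, used in the proof above, is a purely algebraic identity and can be confirmed by enumerating the four local configurations. The following sketch does this with exact rationals, taking \(\bar{\eta }_x=\eta _x-u_x\); the sample means are arbitrary illustrative values.

```python
from fractions import Fraction as Fr
from itertools import product


def indicator(eta_x, eta_y):
    """Indicator of the event {eta_x = 1, eta_y = 0}."""
    return 1 if (eta_x, eta_y) == (1, 0) else 0


def expansion(eta_x, eta_y, u_x, u_y):
    """Right-hand side of the identity, written in the centered variables."""
    bx, by = eta_x - u_x, eta_y - u_y  # centered variables bar(eta)_x, bar(eta)_y
    return bx * (1 - by) - bx * u_y - by * u_x + u_x * (1 - u_y)


# Arbitrary illustrative means in (0, 1)
means = [Fr(1, 5), Fr(1, 2), Fr(7, 10)]

# Check the identity for all four values of (eta_x, eta_y) and all pairs of means
identity_holds = all(
    indicator(ex, ey) == expansion(ex, ey, ux, uy)
    for ex, ey in product((0, 1), repeat=2)
    for ux, uy in product(means, repeat=2)
)
```

The identity is just the expansion of \(\eta _x(1-\eta _y)\) after substituting \(\eta =\bar{\eta }+u\), so it holds for every choice of means, not only the sampled ones.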

The following lemma is for the Glauber part. Recall that the flip rate \(c_x(\eta )\) is given by (2.1) with \(c^\pm (\eta )\) of the form (1.5) in general.

Lemma 2.5

The Bernoulli measure \(\nu =\nu _{u(\cdot )}\) is the same as in Lemma 2.4. Then, we have

where \(f^N(x,u)\) is given by (2.3) and

with a finite sum in \(\Lambda \) with \(|\Lambda |\ge 2\) and some local functions \(c_\Lambda (u)\) of \(u\, (=\{u_x\}_{x\in {\mathbb {Z}}^d})\) for each \(\Lambda \). In particular, if \(c^\pm (\eta )\) have the form (1.6), we have

where a, b, c are shift-invariant bounded functions of u defined by

respectively.

Proof

The first identity in (2.12) is shown by computing \(\int _{\mathcal {X}_N} L_G^{*,\nu }{} \mathbf{1}\cdot f d\nu \) for a test function f and applying the change of variables \(\eta ^x\mapsto \eta \) as in the proof of Lemma 2.4; note that

and

To see the second identity in (2.12), we recall (1.5) and note that

for \(0\notin \Lambda \Subset {\mathbb {Z}}^d\). Therefore, we have

Since the last term is equal to \(f^N(x,u) \omega _x\), this shows the second identity with

In particular, for \(c^\pm (\eta )=c_0^\pm (\eta )\) of the form (1.6), we have

This leads to the desired formula (2.13). \(\square \)

We have the following lemma for the last term in (2.8).

Lemma 2.6

Recalling that \(\nu _t = \nu _{u(t,\cdot )}\), \(u(t,\cdot )=\{u_x(t)\}_{x\in {\mathbb {T}}_N^d}\), is Bernoulli, we have

where \(\omega _{x,t} = \bar{\eta }_x/\chi (u_x(t))\).

Proof

The proof is straightforward. In fact, we have by definition

and therefore,

This shows the conclusion. \(\square \)

The results obtained in Lemmas 2.4, 2.5 and 2.6 are summarized in the following corollary. Note that the discretized hydrodynamic equation (2.2) exactly cancels the first order term in \(\omega \). Therefore only quadratic or higher order terms in \(\omega \) survive. We denote the solution \(u^N(t) = \{u^N(t,x)\}_{x\in {\mathbb {T}}_N^d}\) of (2.2) simply by \(u(t)=\{u_x(t)\}_{x\in {\mathbb {T}}_N^d}\).

Corollary 2.7

We have

where \(\omega _t = (\omega _{x,t})\). In particular, when \(c^\pm (\eta )\) are given by (1.6), omitting to write the dependence on t, this is equal to

where \(\tilde{\omega }_x^{(a)}\) stands for \(a(u_x,u_{x+n_1},u_{x+n_2})\omega _x\), and \(\tilde{\omega }_x^{(b)}\) and \(\tilde{\omega }_x^{(c)}\) are defined similarly.

3 Proof of Theorem 2.1

In this section we prove Theorem 2.1. In view of Proposition 2.3 and Corollary 2.7, our goal is to estimate the following expectation under \(\mu _t\) by the Dirichlet form \(N^2\mathcal {D}_K(\sqrt{f_t};\nu _t)\) and the relative entropy \(H(\mu _t|\nu _t)\) itself, where \(f_t = d\mu _t/d\nu _t\) and \(\mu _t = \mu _t^N\), \(\nu _t = \nu _t^N\):

Note that the condition (A7)\('\) implies that \(\chi (u_x(t))^{-1}=\chi (u^N(t,x))^{-1}\) appearing in the definition of \(\omega _{x,t}\) is bounded; see the comments given below Theorem 4.1. From the condition (A4) combined with Proposition 4.3 stated below, the first term in (3.1) can be treated similarly to the second, but with the front factor K replaced by \(K^2\); see Sect. 3.3 for details.

3.1 Replacement by Local Sample Average

Recall that we assume \(c^\pm (\eta )\) have the form (1.6) by the condition (A1) so that \(F(\omega ,u)\) has the form (2.13). With this in mind, recall the definition of \(V_a\) defined in (2.15):

where \(\tilde{\omega }_x^{(a)}\) is defined in Corollary 2.7. The first step is to replace \(V_a\) by its local sample average \(V_a^\ell \) defined by

where

for functions \(g=\{g_x(\eta )\}_{x\in {\mathbb {T}}_N^d}\) and \(\Lambda _\ell = [0,\ell -1]^d\cap {\mathbb {Z}}^d\). Since \(\ell \) will be smaller than N, one can regard \(\Lambda _\ell \) as a subset of \({\mathbb {T}}_N^d\). The reason that we consider both \(\overrightarrow{g}_{x,\ell }\) and \(\overleftarrow{g}_{x,\ell }\) is to make \(h_x^{\ell ,j}\) defined by (3.4) satisfy the condition \(h_x^{\ell ,j}(\eta ^{x,x+e_j})=h_x^{\ell ,j}(\eta )\) for any \(\eta \in \mathcal X_N\).

Proposition 3.1

We assume the conditions of Theorem 2.1 and write \(\nu =\nu _{u(\cdot )}\) and \(d\mu =fd\nu \) by omitting t. For \(\kappa >0\) small enough, we choose \(\ell = N^{\frac{1}{d}(1-\kappa )}\) when \(d\ge 2\) and \(\ell =N^{\frac{1}{2}-\kappa }\) when \(d=1\). Then, the cost of this replacement is estimated as

for every \(\varepsilon _0>0\) with some \(C_{\varepsilon _0,\kappa }>0\) when \(d\ge 2\) and the last \(N^{d-1+\kappa }\) is replaced by \(N^{\frac{1}{2}+\kappa }\) when \(d=1\).

The first step for the proof of this proposition is the flow lemma for the telescopic sum. We call \(\Phi = \{\Phi (x,y)\}_{x\sim y: x, y\in G}\) a flow on a finite graph G connecting two probability measures p and q on G if \(\Phi (x,y) = -\Phi (y,x)\) and \(\sum _{z\sim x}\Phi (x,z) = p(x)-q(x)\) hold for all \(x, y \in G: x\sim y\). We define a cost of a flow \(\Phi \) by

The following lemma has been proved in Appendix G of [25].

Lemma 3.2

(Flow lemma) For each \(\ell \in {\mathbb {N}}\), let \(p_\ell \) be the uniform distribution on \(\Lambda _\ell \) and set \(q_\ell :=p_\ell * p_\ell \). Then, there exists a flow \(\Phi ^\ell \) on \(\Lambda _{2\ell -1}\) connecting the Dirac measure \(\delta _0\) and \(q_\ell \) such that \(\Vert \Phi ^\ell \Vert ^2 \le C_d g_d(\ell )\) with some constant \(C_d>0\), independent of \(\ell \), where

The flow stated in Lemma 3.2 is constructed step by step as follows. For each \(k=0,\dots ,\ell -1\), we first construct a flow \(\Psi ^\ell _k\) connecting \(p_k\) and \(p_{k+1}\) such that \(\sup _{x,j}|\Psi ^\ell _k(x,x+e_j)|\le c k^{-d}\) with some \(c>0\). Then we can obtain the flow \(\Psi ^\ell \) connecting \(\delta _0\) and \(p_\ell \) by simply summing up \(\Psi ^\ell _k\): \(\Psi ^\ell :=\sum _{k=0}^{\ell -1} \Psi _k^\ell \). It is not difficult to see that the cost of \(\Psi ^\ell \) is bounded by \(Cg_d(\ell )\). Finally, we define the flow \(\Phi ^\ell \) connecting \(\delta _0\) and \(q_\ell \) by

whose cost is bounded by \(C_dg_d(\ell )\); see [25] for more details.
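In one dimension the flow is easy to write down explicitly, which may help to fix ideas. On the path \(\{0,\ldots ,2\ell -2\}\) the flow connecting \(\delta _0\) and \(q_\ell =p_\ell *p_\ell \) is forced by the divergence condition: \(\Phi (x,x+1)=\sum _{z\le x}(\delta _0(z)-q_\ell (z))\). The sketch below builds it with exact rationals and checks the two defining properties. The cost is taken here, as an assumption, to be the sum of \(\Phi (x,x+1)^2\) over nearest-neighbour bonds; the function names are hypothetical, and this direct construction is not the step-by-step one of [25].

```python
from fractions import Fraction as Fr


def uniform(ell):
    """p_ell: uniform distribution on {0, ..., ell - 1}."""
    return [Fr(1, ell)] * ell


def convolve(p, q):
    """Convolution of two distributions supported on nonnegative integers."""
    out = [Fr(0)] * (len(p) + len(q) - 1)
    for i, pi in enumerate(p):
        for j, qj in enumerate(q):
            out[i + j] += pi * qj
    return out


def path_flow(ell):
    """Flow on the path {0, ..., 2*ell - 2} connecting delta_0 and q_ell.

    Returns (q, delta, flow) with flow[x] = Phi(x, x + 1).
    """
    q = convolve(uniform(ell), uniform(ell))
    delta = [Fr(1)] + [Fr(0)] * (len(q) - 1)
    flow, running = [], Fr(0)
    for x in range(len(q) - 1):
        running += delta[x] - q[x]  # partial sum of delta_0 - q_ell up to x
        flow.append(running)
    return q, delta, flow


def divergence(flow, x):
    """Sum of Phi(x, z) over the neighbours z of x, using Phi(x, x-1) = -Phi(x-1, x)."""
    out = flow[x] if x < len(flow) else Fr(0)
    back = -flow[x - 1] if x > 0 else Fr(0)
    return out + back


def cost(flow):
    """Assumed cost: sum over bonds of the squared flow."""
    return sum(phi * phi for phi in flow)
```

In this one-dimensional sketch each \(\Phi (x,x+1)\) equals the tail probability \(q_\ell (\{x+1,x+2,\ldots \})\in [0,1]\), so the cost is at most the mean of \(q_\ell \), namely \(\ell -1\); in other words it grows at most linearly in \(\ell \).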

Recall \(p_\ell (y)\) defined in Lemma 3.2 and note that \(p_\ell \) can be regarded as a probability distribution on \({\mathbb {T}}_N^d\). Set \(\hat{p}_\ell (y) = p_\ell (-y)\), then we have

and similarly \(g*\hat{p}_\ell = \overrightarrow{g}_{x,\ell }\). Therefore,

where \(q_\ell \) is defined in Lemma 3.2 and \(\hat{q}_\ell (y) := q_\ell (-y)\). Note that supp\(\,q_\ell \subset \Lambda _{2\ell -1} = [0,2\ell -2]^d \cap {\mathbb {Z}}^d\). Let \(\Phi ^\ell \) be a flow given in Lemma 3.2. Accordingly, since \(\Phi ^\ell \) is a flow connecting \(\delta _0\) and \(q_\ell \), one can rewrite

For the last line, we introduced the change of variables \(x+y+n_1 \mapsto x\) for the sum in x. Thus, we have shown

where

Note that \(h_x^{\ell ,j}\) satisfies \(h_x^{\ell ,j}(\eta ^{x,x+e_j}) = h_x^{\ell ,j}(\eta )\) for any \(\eta \in \mathcal X_N\). Indeed, in (3.4), \(\tilde{\omega }_{x-y-n_1}^{(a)}(\eta ^{x,x+e_j}) \not = \tilde{\omega }_{x-y-n_1}^{(a)}(\eta )\) only if \(x-y-n_1=x\) or \(x+e_j\), namely, \(y=-n_1\) or \(y=-n_1-e_j\), but these y are not in \(\Lambda _{2\ell -1}\) due to the condition (A1) for \(n_1\).

Another lemma we use is the integration by parts formula under the Bernoulli measure \(\nu _{u(\cdot )}\) with a spatially dependent mean. We will apply this formula for the function \(h=h_x^{\ell ,j}\).

Lemma 3.3

(Integration by parts) Let \(\nu =\nu _{u(\cdot )}\) and assume \(u_-\le u_x, u_y\le u_+\) holds for \(x, y\in {\mathbb {T}}_N^d: |x-y|=1\) with some \(0<u_-<u_+<1\). Let \(h=h(\eta )\) be a function satisfying \(h(\eta ^{x,y})=h(\eta )\) for any \(\eta \in \mathcal X_N\). Then, for a probability density f with respect to \(\nu \), we have

and the error term \(R_1=R_{1,x,y}\) is bounded as

with some \(C=C_{u_-,u_+}>0\), where \(\nabla _{x,y}^1 u =u_x-u_y\).

Proof

First we write

Then, by the change of variables \(\eta ^{x,y}\mapsto \eta \) and noting the invariance of h under this change, we have

To replace the last \(\nu (\eta ^{x,y})\) by \(\nu (\eta )\), we observe

with

By the condition on u, this error is bounded as

These computations are summarized as

For the second term denoted by \(R_1\), applying the change of variables \(\eta ^{x,y} \mapsto \eta \) again, we have

since \(|\eta _y|\le 1\) and \( |\nabla _{x,y}^1 u|\le 2\). This completes the proof. \(\square \)

We apply Lemma 3.3 to \(V_a-V_a^\ell \) given in (3.3). However, \(\omega _x= (\eta _x-u_x)/\chi (u_x)\) in (3.3) depends on \(u_x\) which varies in space. We need to estimate the error caused by this spatial dependence.

Lemma 3.4

- (1) Assume that \(\nu =\nu _{u(\cdot )}\) and \(h=h(\eta )\) satisfy the same conditions as in Lemma 3.3. Then, we have

$$\begin{aligned} \int h(\omega _y-\omega _x) f d\nu =\int h(\eta ) \frac{\eta _x}{\chi (u_x)} \big ( f(\eta ^{x,y})-f(\eta )\big ) d\nu +R_2, \end{aligned}$$

and the error term \(R_2=R_{2,x,y}\) is bounded as

$$\begin{aligned} |R_2| \le C |\nabla _{x,y}^1 u| \int |h(\eta )| f d\nu , \end{aligned}$$

with some \(C=C_{u_-,u_+}>0\).

- (2) In particular, for \(h_x^{\ell ,j}\) defined in (3.4), we have

$$\begin{aligned} \int h_x^{\ell ,j}(\omega _x- \omega _{x+e_j}) f d\nu =-\int h_x^{\ell ,j}\frac{\eta _x}{\chi (u_x)} \big ( f(\eta ^{x,x+e_j})-f(\eta )\big ) d\nu +R_{2,x,j}, \end{aligned} \tag{3.5}$$

and

$$\begin{aligned} |R_{2,x,j}| \le C |\nabla _{x,x+e_j}^1 u| \int |h_x^{\ell ,j}(\eta )| f d\nu . \end{aligned}$$

Proof

By the definition of \(\omega _x\), we have

For \(I_2\), we have

On the other hand, \(I_1\) can be rewritten as

For \(I_{1,1}\), one can apply Lemma 3.3 to obtain

Finally for \(I_{1,2}\), observe that

Therefore, we obtain (1). Since \(h_x^{\ell ,j}(\eta ^{x,x+e_j}) = h_x^{\ell ,j}(\eta )\) for any \(\eta \in \mathcal X_N\), taking \(y=x+e_j\) and changing the sign of both sides, (2) is immediate from (1). \(\square \)

We can estimate the first term in the right hand side of (3.5) by the Dirichlet form of the Kawasaki part and obtain the next lemma.

Lemma 3.5

Let \(\nu =\nu _{u(\cdot )}\) satisfy the condition in Lemma 3.3 with \(y=x+e_j\). Then, for every \(\beta >0\), we have

with some \(C=C_{u_-,u_+}>0\), where

is a piece of the Dirichlet form \(\mathcal {D}_{K}(f;\nu )\) corresponding to the Kawasaki part considered on the bond \(\{x,y\}: |x-y|=1\) and the error term \(R_{2,x,j}\) is given by Lemma 3.4.

Proof

For simplicity, we write y for \(x+e_j\). By decomposing \(f(\eta ^{x,y})-f(\eta ) = \big ( \sqrt{f(\eta ^{x,y})}+\sqrt{f(\eta )}\big ) \big ( \sqrt{f(\eta ^{x,y})}-\sqrt{f(\eta )}\big )\), the first term in the right hand side of (3.5) is bounded by

for every \(\beta >0\). Applying the change of variables \(\eta ^{x,y}\mapsto \eta \), the second term of the last expression is equal to and bounded by

This shows the conclusion. \(\square \)

We now give the proof of Proposition 3.1.

Proof of Proposition 3.1

By Lemma 3.5, choosing \(\beta = \varepsilon _0 N^2/K\) with \(\varepsilon _0>0\), we have

For \(R_{2,x,j}\), since \(|\nabla _{x,x+e_j}^1u| \le CK/N\) from the condition (A4) combined with Proposition 4.3 stated below, estimating \(|h_x^{\ell ,j}| \le 1+(h_x^{\ell ,j})^2\), we have

Thus, estimating \(1/N\le 1\) for the second term of (3.6) (though this term has a better constant \(CK^2/N^2\), the same term with \(CK^2/N\) arises from \(K|R_{2,x,j}|\)), we obtain

\(\square \)

We assume without loss of generality that \(N/2\ell \) is an integer and that \(n_1=(1,\dots , 1)\) for notational simplicity. Then, for the second term of the right hand side in (3.7), we first decompose the sum \(\sum _{x\in {\mathbb {T}}_N^d}\) as \(\sum _{y\in \Lambda _{2\ell }}\sum _{z\in (2\ell ) {\mathbb {T}}_N^d}\) regarding \(x=z+y\). Note that the random variables \(\{h_{z+y}^{\ell , j}\}_{z\in (2\ell ) {\mathbb {T}}_N^d}\) are independent for each \(y\in \Lambda _{2\ell }\). Recall that \(d\mu =fd\nu \). Then, applying the entropy inequality, we have

Now we apply the concentration inequality (see Appendix B of [24]) for the last term:

Lemma 3.6

(Concentration inequality) Let \(\{X_i\}_{i=1}^n\) be independent random variables with values in the intervals \([a_i,b_i]\). Set \(\bar{X}_i = X_i - E[X_i]\) and \(\bar{\sigma }^2 = \sum _{i=1}^n (b_i-a_i)^2\). Then, for every \(\gamma \in [0,(\bar{\sigma }^2)^{-1}]\), we have

In fact, since \(h_x^{\ell ,j}\) is a weighted sum of independent random variables, from this lemma, we have

for every \(\gamma \le C_0/\sigma ^2\), where \(C_0\) is a universal constant and \(\sigma ^2\) is the variance of \(h_x^{\ell , j}\). On the other hand, it follows from the flow lemma that \(\sigma ^2 \le C_d g_d(\ell )\). Therefore, we have

Thus, taking \(\gamma ^{-1}= (C_dg_d(\ell ))/C_0\), we have shown

with some \(C=C_{\varepsilon _0}>0\). For \(\kappa >0\) small enough, choose \(\ell =N^{\frac{1}{d}(1-\kappa )}\) when \(d\ge 2\) and \(\ell =N^{\frac{1}{2}-\kappa }\) when \(d=1\). Then, recalling \(1\le K \le \delta (\log N)^{1/2}\) in the condition (A6)\(_\delta \), when \(d\ge 2\), we have

which shows (3.2). When \(d=1\),

This shows the conclusion for \(d=1\) and the proof of Proposition 3.1 is complete. \(\square \)

3.2 Estimate on \(\int V_a^\ell f d\nu _{u(\cdot )}\)

The next step is to estimate the integral \(\int V_a^\ell f d\nu \). We assume the same conditions as in Proposition 3.1 and therefore Theorem 2.1. We again decompose the sum \(\sum _{x\in {\mathbb {T}}_N^d}\) as \(\sum _{y\in \Lambda _{2\ell }}\sum _{z\in (2\ell ) {\mathbb {T}}_N^d}\) and then, noting the \((2\ell )\)-dependence of \(\overleftarrow{(\tilde{\omega }_\cdot ^{(a)})}_{x,\ell } \overrightarrow{(\omega _{\cdot +e})}_{x,\ell }\), use the entropy inequality, the elementary inequality \(ab\le (a^2+b^2)/2\) and the concentration inequality to show

for \(\gamma =c\ell ^d\) with \(c>0\) small enough. Roughly speaking, by the central limit theorem, both \(\overleftarrow{(\tilde{\omega }_\cdot ^{(a)})}_{x,\ell }\) and \(\overrightarrow{(\omega _{\cdot +e})}_{x,\ell }\) behave as \(C_2\ell ^{-d/2}N(0,1)\) in law for large \(\ell \), where \(N(0,1)\) denotes a Gaussian random variable with mean 0 and variance 1. This effect is controlled by the concentration inequality. When \(d\ge 2\), we chose \(\ell = N^{\frac{1}{d}(1-\kappa )}\) so that we obtain

When \(d=1\), we chose \(\ell = N^{\frac{1}{2}-\kappa }\) so that we obtain (3.11) with \(N^{d-1+\kappa }\) replaced by \(N^{\frac{1}{2}+\kappa }\).

3.3 Estimates on Three Other Terms \(V_b, V_c, V_1\)

The two terms \(V_b\) and \(V_c\) defined in (2.15) can be treated in exactly the same way as \(V_a\), and we obtain results similar to Proposition 3.1 and (3.11) for these two terms.

The term \(V_1\) requires more careful study. As we pointed out at the beginning of this section, the condition (A4) combined with Proposition 4.3 shows that

Therefore, the front factor behaves like \(K^2\) instead of K. Noting this, for the replacement of \(V_1\) with \(V_1^\ell \), we have a bound similar to (3.8) with K replaced by \(K^2\). However, since \(K\le \delta (\log N)^{1/2}\), one can absorb even \(K^2\) into the factor \(N^\kappa \) with \(\kappa >0\) as in (3.9) and (3.10) (with K replaced by \(K^2\)). Thus, the bound (3.2) in Proposition 3.1 also holds for \(V_1-V_1^\ell \) in place of \(V_a-V_a^\ell \).

On the other hand, (3.11) should be modified as

Note that (3.12) holds with K instead of \(K^2\) in an averaged sense in (t, x) as we will see in Lemma 4.4. But this is not enough to improve (3.13) with \(K^2\) to K.

3.4 Completion of the Proof of Theorem 2.1

Finally, from Propositions 2.3, 3.1 (for \(V_a, V_b, V_c, V_1\)) and (3.11) (for \(V_a, V_b, V_c\)), (3.13) (for \(V_1\)), choosing \(\varepsilon _0>0\) small enough such that \(4\varepsilon _0<2\), we obtain

with some \(0<\alpha <1\) (\(\alpha =1-\kappa \)) when \(d\ge 2\) and \(0<\alpha <1/2\) (\(\alpha =1/2-\kappa \)) when \(d=1\). Thus, Gronwall’s inequality shows

Noting \(H(\mu _0|\nu _0) = O(N^{d-\delta _0})\) with \(\delta _0>0\) and \(e^{CK^2t} \le N^{Ct\delta ^2}\) from \(1\le K\le \delta (\log N)^{1/2}\) in the condition (A6)\(_\delta \), this concludes the proof of Theorem 2.1, if we choose \(\delta >0\) small enough.
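The bound on the Gronwall factor quoted here can be spelled out in one line. The following is a sketch that uses only the constraint \(1\le K\le \delta (\log N)^{1/2}\) from (A6)\(_\delta \), with C denoting the constant in the exponent \(e^{CK^2t}\):

$$\begin{aligned} K^2 \le \delta ^2 \log N \quad \Longrightarrow \quad e^{CK^2t} \le e^{Ct\delta ^2 \log N} = N^{Ct\delta ^2}. \end{aligned}$$

In particular, the contribution of the initial entropy is at most of order \(N^{d-\delta _0+CT\delta ^2}\) for \(t\in [0,T]\), and its exponent stays strictly below d once \(CT\delta ^2<\delta _0\). This is one way to read the requirement that \(\delta >0\) be small.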

Remark 3.1

The above argument actually implies \(H(\mu _t^N|\nu _t^N)=O(N^{d-\delta _*})\) for some \(\delta _*>0\). From Theorem 4.1, the probability in the left hand side of (1.11) is bounded above by \(\mu _t^N(\mathcal A_{N,t}^{\varepsilon /2})\) for N sufficiently large; recall that \(\mathcal A_{N,t}^{\varepsilon }\) is defined below (2.6). On the other hand, from the proof of Proposition 2.2, there exists a constant \(C_0\), which depends only on \(\Vert \varphi \Vert _\infty \), such that \(\nu _t^N(\mathcal A_{N,t}^{\varepsilon /2})\le e^{-C_0\varepsilon ^2N^d}\). These estimates together with the entropy inequality show that

for N sufficiently large. This gives the rate of convergence in the limit (1.11).

4 Motion by Mean Curvature from Glauber–Kawasaki Dynamics

It remains to study the asymptotic behavior as \(N\rightarrow \infty \) of the solution \(u^N(t)\) of the discretized hydrodynamic equation (2.2), which appears in (2.6). We also give a few estimates on \(u^N(t)\) which were already used in Sect. 3.

Theorem 4.1 formulated below is purely a PDE type result, which establishes the sharp interface limit for \(u^N(t)\) and leads to the motion by mean curvature. Recall that we assume \(d\ge 2\). A smooth family of closed hypersurfaces \(\{\Gamma _t\}_{t\in [0,T]}\) in \({\mathbb {T}}^d\) is called the motion by mean curvature flow starting from \(\Gamma _0\), if it satisfies

$$\begin{aligned} V=\kappa \quad \text {on } \Gamma _t, \end{aligned} \tag{4.1}$$

where V is the inward normal velocity of \(\Gamma _t\) and \(\kappa \) is the mean curvature of \(\Gamma _t\) multiplied by \((d-1)\). It is known that if \(\Gamma _0\) is a smooth hypersurface without boundary, then there exists a unique smooth solution of (4.1) starting from \(\Gamma _0\) on [0, T] with some \(T>0\); cf. Theorem 2.1 of [5] and see Sect. 4 of [16] for related references. In fact, by using the local coordinate (d, s) for x in a tubular neighborhood of \(\Gamma _0\), where \(d=d(x)\) is the signed distance from x to \(\Gamma _0\) and \(s=s(x)\) is the projection of x on \(\Gamma _0\), \(\Gamma _t\) is expressed as a graph over \(\Gamma _0\) and represented by \(d=d(s,t)\), \(s\in \Gamma _0\), \(t\in [0,T]\), and Eq. (4.1) for \(\Gamma _t\) can be rewritten as a quasilinear parabolic equation for \(d=d(s,t)\). The standard theory of quasilinear parabolic equations shows the existence and uniqueness of a smooth solution locally in t. We cite [1] as an expository reference for the definitions of mean curvature, motion by mean curvature flow and the Allen–Cahn equation. As we mentioned above, Sect. 4 of [16] also gives a brief review of these topics.
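As a concrete illustration of the flow, consider a round sphere of radius R, for which the mean curvature multiplied by \((d-1)\) equals \((d-1)/R\). Under the assumption that the sphere is small enough to sit inside the torus and that the inward normal velocity equals this curvature, the radius solves \(dR/dt=-(d-1)/R\), so \(R(t)=\sqrt{R_0^2-2(d-1)t}\). The sketch below compares this closed form with a Runge–Kutta integration; the function names are hypothetical and not taken from the paper.

```python
import math


def shrink_radius_exact(r0, t, d=2):
    """Closed-form radius of a sphere shrinking under dR/dt = -(d-1)/R."""
    s = r0 * r0 - 2.0 * (d - 1) * t
    if s < 0.0:
        raise ValueError("the sphere has already collapsed at this time")
    return math.sqrt(s)


def shrink_radius_rk4(r0, t, d=2, steps=1000):
    """Integrate dR/dt = -(d-1)/R with the classical fourth-order Runge-Kutta scheme."""
    def rhs(r):
        return -(d - 1) / r

    h = t / steps
    r = r0
    for _ in range(steps):
        k1 = rhs(r)
        k2 = rhs(r + 0.5 * h * k1)
        k3 = rhs(r + 0.5 * h * k2)
        k4 = rhs(r + h * k3)
        r += h * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return r


def extinction_time(r0, d=2):
    """Time at which the closed-form radius reaches zero."""
    return r0 * r0 / (2.0 * (d - 1))
```

The extinction time \(R_0^2/(2(d-1))\) makes explicit why smooth solutions of (4.1) are in general only available on a finite interval [0, T].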

The limiting behavior of \(u^N(t)\) as \(N\rightarrow \infty \) is given by the following theorem. Recall that the solution \(\{u^N(t,x), x\in {\mathbb {T}}_N^d\}\) is extended as a step function \(\{u^N(t,v), v\in {\mathbb {T}}^d\}\) on \({\mathbb {T}}^d\) as in (2.5).

Theorem 4.1

Under the conditions (A2), (A3) and (A5), for \(t\in [0,T]\) and \(v\notin \Gamma _t\), \(u^N(t,v), v\in {\mathbb {T}}^d\) converges as \(N\rightarrow \infty \) to \(\chi _{\Gamma _t}(v)\) defined by (1.10) from the hypersurface \(\Gamma _t\) in \({\mathbb {T}}^d\) moving according to the motion by mean curvature (4.1).

Combining the probabilistic result (2.6) with this PDE type result, we have proved that \(\alpha ^N(t)\) converges to \(\chi _{\Gamma _t}\) in probability when multiplied by a test function \(\varphi \in C^\infty ({\mathbb {T}}^d)\). This completes the proof of our main Theorem 1.1.

Under the condition (A7)\('\), especially with \(u_\pm \) chosen as \(0<u_-<\alpha _1< \alpha _2<u_+<1\), by the comparison theorem (Proposition 4.5 below) for the discretized hydrodynamic equation (2.2) and noting that, if \(u^N(0,x)\equiv u_-\) (or \(u_+\)), the solution \(u^N(t,x)\equiv u^N(t)\) of (2.2) increases in t toward \(\alpha _1\) (or decreases to \(\alpha _2\)) by the condition (A2), the condition \(u^N(0,x)\in [u_-,u_+]\) implies the same for \(u^N(t,x)\). In particular, this shows \(\chi (u^N(t,x))\ge c>0\) with some \(c>0\) for all \(t\in [0,T]\) and \(x\in {\mathbb {T}}_N^d\).

4.1 Estimates on the Solutions of the Discretized Hydrodynamic Equation

We give estimates on the gradients of the solutions \(u(t)\equiv u^N(t) =\{u_x(t)\}_{x\in {\mathbb {T}}_N^d}\) of the discretized hydrodynamic equation (2.2). These were used to estimate the contribution of the first term in (3.1) and also \(R_{2,x,j}\) in (3.6) as we already mentioned. Let \(p^N(t,x,y)\) be the discrete heat kernel corresponding to \(\Delta ^N\) on \({\mathbb {T}}_N^d\). Then, we have the following global estimate in t.

Lemma 4.2

There exist \(C, c>0\) such that

where \( \nabla ^N u(x) = \left\{ N(u(x+e_i)-u(x))\right\} _{i=1}^d \) and \(\Vert \cdot \Vert \) stands for the standard Euclidean norm of \({\mathbb {R}}^d\) as we defined before.

Proof

Let p(t, x, y) be the heat kernel corresponding to the discrete Laplacian \(\Delta \) on \({\mathbb {Z}}^d\). Then, we have the estimate

with some constants \(C, c>0\), independent of t and x, y, where \(\nabla =\nabla ^1\). For example, see (1.4) in Theorem 1.1 of [6], which discusses a more general case with random coefficients; see also [30]. Then, since

the conclusion follows. \(\square \)

We have the following \(L^\infty \)-estimate on the gradients of \(u^N\).

Proposition 4.3

For the solution \(u^N(t,x)\) of (2.2), we have the estimate

if \(\Vert \nabla ^N u^N(0,x)\Vert \le C_0 K\) holds.

Proof

From Duhamel’s formula, we have

By noting \(f^N(x,u)\) is bounded and applying Lemma 4.2, we obtain the conclusion. \(\square \)

It is expected that \(\nabla ^N u^N\) behaves as \(\sqrt{K}\) near the interface by the scaling property (see Sect. 4.2 of [17]; Theorem 4.6 below also suggests this) and decays rapidly in K far from the interface, where \(u^N(t,x)\) would be almost flat. In this sense, the estimate obtained in Proposition 4.3 may not be the best possible. In a weak sense, one can prove the behavior \(u_x(t)-u_y(t) \sim \sqrt{K}/N\) (instead of \(K/N\)) for \(x,y: |x-y|=1\) as in the next lemma.

Lemma 4.4

We have

Proof

Multiplying both sides of (2.2) by \(u_x(t) \, \big (= u^N(t,x)\big )\) and summing over x, we have

Here, we have used the bound \(u_x f^N(x, u) \le C\). Since \(\sum _{x\in {\mathbb {T}}_N^d} u_x(0)^2 \le N^d\), we have the conclusion. \(\square \)

4.2 Proof of Theorem 4.1

For the proof of Theorem 4.1, we rely on the comparison argument for the discretized hydrodynamic equation (2.2); cf. [14], Proposition 4.1 of [15], Lemma 2.2 of [18] and Lemma 4.3 of [19].

Assume that \(f^N(x,u), u=(u_x)_{x\in {\mathbb {T}}_N^d}\in [0,1]^{{\mathbb {T}}_N^d}\) has the following property: If \(u=(u_x)_{x\in {\mathbb {T}}_N^d}, v=(v_x)_{x\in {\mathbb {T}}_N^d}\) satisfy \(u\ge v\) (i.e., \(u_y\ge v_y\) for all \(y\in {\mathbb {T}}_N^d\)) and \(u_x = v_x\) for some x, then \(f^N(x,u)\ge f^N(x,v)\) holds. Note that \(f^N(x,u)\) given in (2.3) with \(c^\pm (\eta )\) of the form (1.6) has this property, since \(c_x^+(u)\) is increasing and \(c_x^-(u)\) is decreasing in u in this partial order by the condition (A3).

Proposition 4.5

Let \(u^\pm (t,x)\) be super and sub solutions of

Namely, \(u^+\) satisfies (4.2) with “\(\ge \)”, while \(u^-\) satisfies it with “\(\le \)” instead of the equality. If \(u^-(0) \le u^+(0)\), then \(u^-(t) \le u^+(t)\) holds for all \(t>0\). In particular, one can take the solution of (4.2) as \(u^+(t,x)\) or \(u^-(t,x)\).

Proof

Assume that \(u^+(t)\ge u^-(t)\) and \(u^-(t,x)=u^+(t,x)\) hold at some (t, x). Since \(u^\pm \) are super and sub solutions of (4.2), we have

On the other hand, noting that

and that \(f^N(x,u^+)- f^N(x,u^-)\ge 0\) by the assumption, we have \(\partial _t (u^+-u^-)(t,x) \ge 0\). This shows that \(u^-(t)\) cannot exceed \(u^+(t)\) for any \(t>0\). \(\square \)
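The comparison principle can be observed numerically. The following is a minimal sketch under several assumptions that are not taken from the paper: the lattice is the one-dimensional torus, the reaction term is a purely local cubic `reaction` with stable zeros 0.25 and 0.75, and time is discretized by explicit Euler. The time step is chosen so that the Euler map is monotone in u on \([0,1]\), which is the discrete analogue of the argument above.

```python
import numpy as np


def reaction(u, a1=0.25, a_mid=0.5, a2=0.75):
    """Hypothetical bistable reaction term with stable zeros a1 < a2 (an assumption)."""
    return -(u - a1) * (u - a_mid) * (u - a2)


def step(u, N, K, dt):
    """One explicit Euler step of du/dt = N^2 * (lattice Laplacian) u + K * f(u) on a 1D torus."""
    lap = np.roll(u, 1) + np.roll(u, -1) - 2.0 * u
    return u + dt * (N * N * lap + K * reaction(u))


def evolve(u0, N, K, T):
    """Evolve u0 up to time T with a step small enough to keep the Euler map monotone."""
    lip = 1.0  # crude bound on |f'(u)| for u in [0, 1] with the cubic above
    dt = 0.5 / (2.0 * N * N + K * lip)
    n_steps = int(np.ceil(T / dt))
    dt = T / n_steps  # shrink slightly so that n_steps * dt == T
    u = np.array(u0, dtype=float)
    for _ in range(n_steps):
        u = step(u, N, K, dt)
    return u
```

Starting from two profiles with \(u^-(0)\le u^+(0)\) that touch at one site, the evolved profiles remain ordered up to rounding error, in line with Proposition 4.5.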

For \(\delta \in {\mathbb {R}}\) with \(|\delta |\) sufficiently small, one can find a traveling wave solution \(U=U(z;\delta ), z\in {\mathbb {R}}\), which is increasing in z, together with its speed \(c=c(\delta )\), by solving an ordinary differential equation:

where \(U_-^*(\delta )<U_+^*(\delta )\) are two stable solutions of \(f(U)+\delta =0\). Note that \(U_-^*(0)=\alpha _1, U_+^*(0)=\alpha _2\) and \(c(0)=0\). The solution \(U(z;\delta )\) is unique up to a translation and one can choose \(U(z;\delta )\) satisfying \(U_\delta (z;\delta )\ge 0\); see [5], p. 1288. Note also that the traveling wave solution U is associated with the one-dimensional version of the reaction–diffusion equation and not with the discrete equation (4.2). Indeed, \(u(t,z) := U(z-ct)\) solves the equation

which is a one-dimensional version of (1.3) or (1.9) with \(K=1\) considered on the whole line \({\mathbb {R}}\) in place of \({\mathbb {T}}^d\) with f replaced by \(f+\delta \) and connecting two stable solutions \(U_\pm ^*(\delta )\) at \(z=\pm \infty \).
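For a concrete feel of the balanced case \(\delta =0\), \(c(0)=0\), one can check a closed-form standing wave numerically. The sketch below assumes that the wave equation takes the standard form \(U''+cU'+f(U)+\delta =0\) and uses a hypothetical symmetric cubic f with zeros \(\alpha _1=0.25\), \(\alpha _2=0.75\) and midpoint 0.5; the reaction term of the paper is determined by the flip rates and is in general different. For this cubic, \(U(z)=\alpha _*+h\tanh (hz/\sqrt{2})\) with \(\alpha _*=(\alpha _1+\alpha _2)/2\) and \(h=(\alpha _2-\alpha _1)/2\) solves \(U''+f(U)=0\).

```python
import math

A1, A2 = 0.25, 0.75          # assumed stable zeros of the cubic
A_MID = 0.5 * (A1 + A2)      # unstable zero, placed at the midpoint (balanced case)
H = 0.5 * (A2 - A1)          # half the distance between the stable zeros


def f(u):
    """Hypothetical balanced cubic reaction term."""
    return -(u - A1) * (u - A_MID) * (u - A2)


def U(z):
    """Closed-form standing wave connecting A1 at -infinity to A2 at +infinity."""
    return A_MID + H * math.tanh(H * z / math.sqrt(2.0))


def residual(z, h=1e-3):
    """Centered finite-difference approximation of U''(z) + f(U(z))."""
    second_derivative = (U(z + h) - 2.0 * U(z) + U(z - h)) / (h * h)
    return second_derivative + f(U(z))
```

The residual vanishes up to discretization and rounding error, and the profile is increasing in z, matching the qualitative properties of \(U(z;\delta )\) listed above.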

Let \(\Gamma _t, t\in [0,T]\) be the motion of smooth hypersurfaces in \({\mathbb {T}}^d\) determined by (4.1). Let \(\tilde{d}(t,v), t\in [0,T], v\in {\mathbb {T}}^d\) be the signed distance function from v to \(\Gamma _t\), and similarly to [5], p.1289, let d(t, v) be a smooth modification of \(\tilde{d}\) such that

where \(d_0>0\) is taken such that \(\tilde{d}(t,v)\) is smooth in the domain \(\{(t,v); |\tilde{d}(t,v) | < 2 d_0, t\in [0,T]\}\). We define two functions \(\rho _{\pm }(t,v) \equiv \rho _{\pm }^K(t,v)\) by

Applying Proposition 4.5 and repeating computations of Lemma 3.4 in [5], we have the following theorem for \(u^N(t,v)\) defined by (2.5) from the solution \(u^N(t,x)\) of (2.2). The functions \(\rho _\pm (t,v)\) describe the sharp transition of \(u^N(t,\cdot )\) and change their values quickly from one side to the other crossing the interface \(\Gamma _t\) to the normal direction.

Theorem 4.6

We assume the conditions (A2), (A3) and (A5), in particular \(\{\Gamma _t\}_{t\in [0,T]}\) is smooth and the following inequality (4.3) holds at \(t=0\). The condition on K can be relaxed and we assume \(K \le CN^{2/3}\) for \(K=K(N) \rightarrow \infty \). Then, taking \(m_2, m_3>0\) large enough, there exists \(N_0\in {\mathbb {N}}\) such that

holds for every \(a>1/2\), \(t\in [0,T]\), \(v= x/N, x \in {\mathbb {T}}_N^d\) and \(N\ge N_0\).

Proof

Let us show that

for every \(N\ge N_0\) with some \(N_0\in {\mathbb {N}}\). We decompose

where \(\Delta \) is the continuous Laplacian on \({\mathbb {T}}^d\) and

The term \(L^K \rho _+\) can be treated as in [5], from the bottom of p. 1291 to p. 1293. Note that \(\varepsilon ^{-2}\) in their paper corresponds to K here, and they treated the case with a non-local term, which we do not have. Since we can extend \(m_1 \varepsilon e^{m_2 t}\) in the definition of super and sub solutions in their paper to \(K^{-a}e^{m_2t}\) (i.e., we can take \(K^{-a}\) instead of \(m_1\varepsilon \)) for every \(a>0\), we briefly repeat their argument by adjusting it to our setting. The case with a noise term is discussed in [14], pp. 412–413.

In fact, \(L^K \rho _+\) can be decomposed as

where

by just writing \(K^{-a}\) instead of \(m_1\varepsilon \) (i.e., here \(m_1 = K^{1/2-a} \searrow 0\)) in [5], p. 1292 noting that \(W(\nu ,\delta ) = c(\delta )\), \(U(z;\nu ,\delta ) = U(z;\delta )\) and \(C_2=0\) in our setting. Repeating their arguments, one can show that, if \(m_2\) is large enough compared with \(m_3\), \(T_1, T_2 \ge -C\) hold for some \(C>0\), and \(T_3\ge 0\) since \(U_\delta \ge 0\). Therefore, we obtain

For the rest in (4.4), since d(t, v) and U(z), and hence \(\rho _\pm \), are smooth in v, we have

The first one follows from Taylor expansion for \(\Delta ^N\rho _+\) up to the third order term, while the second one follows by taking the expansion up to the first order term. Therefore, if \(K=O(N^{2/3})\), these terms stay bounded in N and are absorbed by \(L^K\rho _+\) estimated in (4.6) with \(m_3\) chosen large enough. Thus, we obtain \(L^{N,K}\rho _+\ge 0\). The lower bound by \(\rho _-(t,v)\) is shown similarly. \(\square \)

Theorem 4.1 readily follows from Theorem 4.6 by noting that we have from the definitions of \(\rho _\pm (t,v)=\rho ^K_\pm (t,v)\),

for \(t\in [0,T]\) and \(v\notin \Gamma _t\).

Remark 4.1

The choice \(\pm K^{-1}m_3e^{m_2t}\) in the definition of the super and sub solutions is the best. In fact, instead of \(K^{-1}\), if we take \(K^{\beta -1}\) with \(\beta >0\), then we may consider \(m_3=K^\beta \) but this diverges so that \(m_2\) also must diverge. On the other hand, if \(\beta <0\), as the above proof shows, we do not have a good control.

References

[1] Bellettini, G.: Lecture Notes on Mean Curvature Flow, Barriers and Singular Perturbations, Lecture Notes. Scuola Normale Superiore di Pisa, Pisa (2013)

[2] Bertini, L., Buttà, P., Pisante, A.: On large deviations of interface motions for statistical mechanics models. Ann. Henri Poincaré 20, 1785–1821 (2019)

[3] Caputo, P., Martinelli, F., Simenhaus, F., Toninelli, F.L.: “Zero” temperature stochastic 3D Ising model and dimer covering fluctuations: a first step towards interface mean curvature motion. Commun. Pure Appl. Math. 64, 778–831 (2011)

[4] Caputo, P., Martinelli, F., Toninelli, F.L.: Mixing times of monotone surfaces and SOS interfaces: a mean curvature approach. Commun. Math. Phys. 311, 157–189 (2012)

[5] Chen, X., Hilhorst, D., Logak, E.: Asymptotic behavior of solutions of an Allen–Cahn equation with a nonlocal term. Nonlinear Anal. 28, 1283–1298 (1997)

[6] Delmotte, T., Deuschel, J.-D.: On estimating the derivatives of symmetric diffusions in stationary random environment, with applications to \(\nabla \phi \) interface model. Probab. Theory Relat. Fields 133, 358–390 (2005)

[7] De Masi, A., Presutti, E.: Mathematical Methods for Hydrodynamic Limits, Lecture Notes Math. Springer, New York (1991)

[8] De Masi, A., Ferrari, P., Lebowitz, J.: Reaction diffusion equations for interacting particle systems. J. Stat. Phys. 44, 589–644 (1986)

[9] De Masi, A., Orlandi, E., Presutti, E., Triolo, L.: Motion by curvature by scaling nonlocal evolution equations. J. Stat. Phys. 73, 543–570 (1993)

[10] De Masi, A., Orlandi, E., Presutti, E., Triolo, L.: Glauber evolution with Kac potentials, I. Mesoscopic and macroscopic limits, interface dynamics. Nonlinearity 7, 633–696 (1994)

[11] De Masi, A., Pellegrinotti, A., Presutti, E., Vares, M.E.: Spatial patterns when phases separate in an interacting particle system. Ann. Probab. 22, 334–371 (1994)

[12] De Masi, A., Funaki, T., Presutti, E., Vares, M.E.: Fast-reaction limit for Glauber–Kawasaki dynamics with two components. ALEA Lat. Am. J. Probab. Math. Stat. 16, 957–976 (2019)

[13] Farfan, J., Landim, C., Tsunoda, K.: Static large deviations for a reaction–diffusion model. Probab. Theory Relat. Fields 174, 49–101 (2019)

[14] Funaki, T.: Singular limit for stochastic reaction–diffusion equation and generation of random interfaces. Acta Math. Sinica 15, 407–438 (1999)

[15] Funaki, T.: Hydrodynamic limit for \(\nabla \phi \) interface model on a wall. Probab. Theory Relat. Fields 126, 155–183 (2003)

[16] Funaki, T.: Lectures on Random Interfaces. Springer Briefs in Probability and Mathematical Statistics. Springer, New York (2016)

[17] Funaki, T.: Hydrodynamic limit for exclusion processes. Commun. Math. Stat. 6, 417–480 (2018)

[18] Funaki, T., Ishitani, K.: Integration by parts formulae for Wiener measures on a path space between two curves. Probab. Theory Relat. Fields 137, 289–321 (2007)

[19] Funaki, T., Olla, S.: Fluctuations for \(\nabla \phi \) interface model on a wall. Stoch. Proc. Appl. 94, 1–27 (2001)

[20] Funaki, T., Uchiyama, K., Yau, H.-T.: Hydrodynamic Limit for Lattice Gas Reversible Under Bernoulli Measures. In: Woyczynski, W. (ed.) Nonlinear Stochastic PDE’s: Hydrodynamic Limit and Burgers’ Turbulence, pp. 1–40. IMA volume (University of Minnesota), Springer, Minneapolis (1996)

[21] Giacomin, G.: Onset and structure of interfaces in a Kawasaki–Glauber interacting particle system. Probab. Theory Relat. Fields 103, 1–24 (1995)

[22] Guo, M.Z., Papanicolaou, G.C., Varadhan, S.R.S.: Nonlinear diffusion limit for a system with nearest neighbor interactions. Commun. Math. Phys. 118, 31–59 (1988)

[23] Hernández, F., Jara, M., Valentim, F.: Lattice model for fast diffusion equation. Stoch. Proc. Appl. (to appear)

[24] Jara, M., Menezes, O.: Non-equilibrium fluctuations for a reaction-diffusion model via relative entropy, arXiv:1810.03418

[25] Jara, M., Menezes, O.: Non-equilibrium fluctuations of interacting particle systems, arXiv:1810.09526

[26] Katsoulakis, M.A., Souganidis, P.E.: Interacting particle systems and generalized evolution of fronts. Arch. Ration. Mech. Anal. 127, 133–157 (1994)

[27] Katsoulakis, M.A., Souganidis, P.E.: Generalized motion by mean curvature as a macroscopic limit of stochastic Ising models with long range interactions and Glauber dynamics. Commun. Math. Phys. 169, 61–97 (1995)

[28] Kipnis, C., Landim, C.: Scaling Limits of Interacting Particle Systems. Springer, Boston (1999)

[29] Spohn, H.: Interface motion in models with stochastic dynamics. J. Stat. Phys. 71, 1081–1132 (1993)

[30] Stroock, D.W., Zheng, W.: Markov chain approximations to symmetric diffusions. Ann. Inst. H. Poincaré Probab. Stat. 33, 619–649 (1997)

[31] Yau, H.-T.: Relative entropy and hydrodynamics of Ginzburg–Landau models. Lett. Math. Phys. 22, 63–80 (1991)

Additional information

Communicated by Abhishek Dhar.

Tadahisa Funaki is supported in part by JSPS KAKENHI, Grant-in-Aid for Scientific Researches (S) 16H06338, (A) 18H03672, 17H01093, 17H01097 and (B) 16KT0024, 26287014. Kenkichi Tsunoda is supported in part by JSPS KAKENHI, Grant-in-Aid for Early-Career Scientists 18K13426.

Cite this article

Funaki, T., Tsunoda, K. Motion by Mean Curvature from Glauber–Kawasaki Dynamics. J Stat Phys 177, 183–208 (2019). https://doi.org/10.1007/s10955-019-02364-7

Keywords

- Hydrodynamic limit

- Motion by mean curvature

- Glauber–Kawasaki dynamics

- Allen–Cahn equation

- Sharp interface limit