Abstract

We consider the cardinality of supercritical oriented bond percolation in two dimensions. We show that, whenever the origin is conditioned to percolate, the process, appropriately normalized, converges in distribution to the standard normal law. This resolves a longstanding open problem pointed out in several instances in the literature. The result also applies to the continuous-time analog of the process, viz. the basic one-dimensional contact process. As byproducts of our proof, we also derive general random-index central limit theorems for associated random variables.

1 Introduction

We consider oriented bond percolation on the two-dimensional integer lattice. For background on this process, we refer to the review [15]. We show that the process exhibits classic central limit theorem (CLT) behavior throughout the supercritical phase, meaning that the law of the diffusively rescaled cardinality of the process, started from a site conditioned to percolate, converges in distribution to the standard normal law. The continuous-time analog of two-dimensional oriented percolation is the basic contact process in one (spatial) dimension, and our result and approach carry over analogously to this process. The contact process on integer lattices was introduced in [33]. The corresponding strong law of large numbers (SLLN) was shown in [21]. The CLT was posed as an open problem originally in [15], later in [16], and more recently in [17]. The contact process belongs to the subject of interacting particle systems (IPS), for background on which we refer to the classic accounts [16, 26, 39]; we also refer to the later, more comprehensive accounts [18, 41], as the subject received additional attention afterward in the literature. Percolation theory originates in [9]; for more on it, we refer to the classic accounts [8, 28].

Harris’ Growth Theorem [35] states that the rate of growth of the highly supercritical contact process conditioned to percolate is almost surely linear in all dimensions.Footnote 1 The corresponding \(L^{1}\)-LLN for the supercritical process in one dimension was shown by means of subadditivity and coupling arguments in [14].Footnote 2 We note that this result is considered a precursor of the general subadditive ergodic theorem shown in [40]. The range of parameter values for which Harris’ Growth Theorem holds was extended in [27] by means of improvements of Peierls’ argument in continuous time.Footnote 3 The SLLN for the process in all dimensions, for parameter values larger than the critical value of the one-sided process in dimension one, was derived in [20] as a corollary of the general shape theorem. The SLLN valid throughout the supercritical phase in dimension one was shown by means of renormalization group techniques in [21].Footnote 4 The important property that the invariant measure possesses exponentially decaying correlations, together with other exponential estimates, was also shown there.Footnote 5 This key property, together with the SLLN for the position of the endpoints, a result shown earlier in [14], enabled the proof of the SLLN in [21]. Among other landmark results, the shape theorem, and hence the SLLN, valid throughout the supercritical phase and in all dimensions, was shown by means of renormalization techniques in [5]; see also the review [17]. To date, the following CLT’s for other functionals of the contact process have been derived in the literature. The CLT for time-averages of finite-support functions of the infinite one-dimensional supercritical contact process was shown in [52], following an approach of [12], using an exponential decay property from [20], and applying general results of [44, 45].
We further mention that the CLT for the endpoints of the process was shown by means of mixing techniques in [25], and later by means of elementary arguments in [37]. For a detailed account of the literature on known CLT’s in classic percolation, we refer to \(\S \) 11.6 in [28]; see also the later [48, 49].

Furthermore, as byproducts of our proof technique, we derive certain CLT’s for randomly-indexed partial sums of non-stationary, associated r.v.’s (random variables). To the best of our knowledge, randomly-indexed CLT’s for families of associated r.v.’s have not been considered elsewhere in the literature. The introduction of association in percolation, and the appreciation of its usefulness there, date back to Harris’ Lemma [32],Footnote 6 whose most prominent extensions to non-product measures are the FKG inequality [23], the Holley inequality [36],Footnote 7 and the Ahlswede–Daykin inequality [1].Footnote 8 The systematic study of this concept as a general dependence structure was initiated in [22]. The observation that asymptotics of the correlation structure are useful in studying approximate independence of associated r.v.’s originates in [38], where necessary and sufficient conditions of this sort were shown for the ergodic theorem to extend to this case. The first corresponding CLT was derived in [43], whereas the key notion of demimartingales was introduced in [44]. Other notable CLT’s, which replace the stationarity assumption with moment conditions, are due to [13] and [7]. For background and comprehensive expositions on association, on classic limit theorems for associated r.v.’s in particular, and on recent advances on the subject, we refer to the reviews [10, 46, 47, 50].

Our main result is the CLT for supercritical oriented percolation in two dimensions, which we state explicitly in Sect. 2.1, Theorem 2.1. For the CLT’s for randomly-indexed associated r.v.’s, see Sect. 2.2.

1.1 Definition of the Process

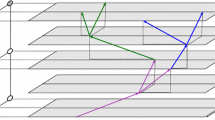

We let \({\mathscr {L}}= {\mathscr {L}}( {\mathbb {L}}, {\mathbb {B}})\) be the usual two-dimensional oriented percolation lattice graph, for which the set of sites is \({\mathbb {L}}= \{ (x,n) \in {\mathbb {Z}}^{2}: x+n \in 2{\mathbb {Z}} \text{ and } n \ge 0\}\), \(2{\mathbb {Z}}= \{2k: k \in {\mathbb {Z}}\}\), and the set of bonds is \({\mathbb {B}}= \{[(x,n), (y, n+1)\rangle : |x-y| =1\}\), where \([s, u\rangle \) denotes the arrow (or bond) directed from site s to site u; see Fig. 1, p. 1001 in [15], for this and other representations of \({\mathscr {L}}\) in the plane. We consider independent bond percolation on \({\mathscr {L}}\) with open (or retaining) probability parameter \(p \in [0,1]\), defined as follows. We consider the configuration space \(\Omega = \{0,1\}^{{\mathbb {B}}} = \{ \omega : {\mathbb {B}}\rightarrow \{0,1\} \}\). We let \({\mathbb {P}}(= {\mathbb {P}}_{p})\) denote the joint distribution of \((\omega (b): b \in {\mathbb {B}})\), an ensemble of i.i.d. p-Bernoulli r.v.’s, that is, \({\mathbb {P}}(\omega (b)= 1) = 1- {\mathbb {P}}(\omega (b)= 0) = p\). We note that \({\mathbb {P}}\) yields a probability measure on \(\Omega \), equipped as usual with \({\mathcal {F}}\), the \(\sigma \)-field of subsets of \(\Omega \) generated by finite-dimensional cylinders. We further let \({\mathscr {G}}= {\mathscr {G}}( {\mathbb {L}}, {\mathbb {B}}_{1})\), \({\mathbb {B}}_{1} = \{b : \omega (b) =1\}\), be the subgraph of \({\mathscr {L}}\) in which b is retained if and only if \(\omega (b) = 1\).

For given \(\omega \in \Omega \), bonds b such that \(\omega (b)= 1\) are thought of as open (or retained), whereas if \(\omega (b)= 0\), we consider b closed (or removed), which may be thought of as flow through b being disallowed. If \(s_{m}, s_{n} \in {\mathbb {L}}\), \(s_{m}= (x_{m}, m)\), \(s_{n}= (x_{n},n)\), \(m \le n\), then, given \(\omega \in \Omega \), we write \( s_{m} \rightarrow s_{n}\) whenever there is a directed path from \( s_{m}\) to \(s_{n}\) in \({\mathscr {G}}(\omega )\), that is, there exist \(s_{m+1} = (x_{m+1}, m+1), \dots , s_{n-1} = (x_{n-1}, n-1)\) such that \( \omega ([s_{k}, s_{k+1}\rangle ) = 1\) for all \(m \le k \le n-1\).

We let \(\xi _{n}^{\eta } = \{x: \left( y,0 \right) \rightarrow (x,n), \text{ for } \text{ some } y \in \eta \}\), \(\eta \subseteq 2{\mathbb {Z}}\). We note also that, when convenient, we shall use the coordinate-wise notation \(\xi _{n}^{\eta }(x) = 1(x \in \xi _{n}^{\eta })\), where we denote by 1(A) the indicator random variable of an event A. We note that \((\xi _{n}^{\eta }, \eta \subset 2{\mathbb {Z}})\) furthermore admits a Markovian definition, and hence, we may think of the vertices’ first and second coordinates as space and time, respectively. We also note that, by definition of \({\mathbb {L}}\), \(\xi _{n}^{\eta }\subset 2{\mathbb {Z}}\), for \(n \in 2{\mathbb {Z}}_{+}\), and \(\xi _{n}^{\eta }\subset 2{\mathbb {Z}}+1\), for \(n \in 2{\mathbb {Z}}_{+} +1\).

We will denote simply by \((\xi _{n})\) the process started from \(O = \{0\}\) and, in general, we will drop superscripts associated to the starting set in our notation when referring to \(O = \{0\}\).

1.2 The Critical Value and the Upper-Invariant Measure

We state here some basic definitions and facts; for a more detailed exposition, see, for instance, [15, 29, 42]. We let \(\Omega _{\infty }^{\eta }\) be the percolation event for initial configurations \(\eta \), \(|\eta |< \infty \), that is, we let

where \(| \cdot |\) denotes cardinality; we also note that \(\Omega _{n}^{\eta } \supseteq \Omega _{n+1}^{\eta }\), \({\mathbb {P}}\)-a.s. Further, we let \(\rho = \rho (p)\) be the so-called asymptotic density, defined as follows

where the name is justified by (5), together with the resulting identity for \(\rho (p)\) obtained by applying (6) below. We also let \(p_{c}\) be the critical value, defined as follows

We recall that it is elementary that \(p_{c} \in (0,1)\), and that \(p_{c}\) is well defined because \(\rho (p)\) is non-decreasing in p, an elementary consequence of constructing the Bernoulli r.v.’s for all values of p simultaneously by superposition (monotone coupling). We also note that the assumption \(p>p_{c}\) required here may be replaced by the a priori weaker assumption \(\rho (p)>0\); the two assumptions are equivalent because \(\rho (p_{c})=0\), as shown for all dimensions in [5] (see also [17], and [4] for the extension of this result to general attractive spin systems).
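As a purely illustrative aside, \(\rho _{n} = {\mathbb {P}}(\Omega _{n})\), the probability that the origin is connected to level n, can be estimated by direct simulation. The sketch below is ours, not part of the paper's argument, and the function names `survives` and `estimate_rho_n` are hypothetical. It samples only the bonds leaving occupied sites; since each such bond is examined exactly once, this does not change the law of \(\xi _{n}\). Because \(\Omega _{n}\) decreases in n, \(\rho _{n}\) decreases to \(\rho \), so for large n the estimates approximate \(\rho (p)\); numerical estimates in the literature place \(p_{c}\) for this lattice near 0.6447.

```python
import random


def survives(p, n, rng):
    """Return True iff the origin is connected to level n (the event Omega_n).

    Each bond [(x, k), (x + d, k + 1)>, d = -1 or +1, is open independently
    with probability p. Only bonds leaving occupied sites are sampled; each
    such bond is examined exactly once, so the law of xi_n is unchanged.
    """
    current = {0}                      # xi_0 = {0}
    for _ in range(n):
        current = {x + d for x in current for d in (-1, 1) if rng.random() < p}
        if not current:                # extinct before reaching level n
            return False
    return True


def estimate_rho_n(p, n, trials=2000, seed=0):
    """Monte Carlo estimate of rho_n = P(Omega_n)."""
    rng = random.Random(seed)
    hits = sum(survives(p, n, rng) for _ in range(trials))
    return hits / trials


if __name__ == "__main__":
    for p in (0.55, 0.75):
        print(p, estimate_rho_n(p, n=100, trials=500))
```

At p = 1 every bond is open and the estimate is exactly 1; at p = 0 it is exactly 0 for n ≥ 1.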

Let further,

We recall also that, if \(\mu _{n}\) denotes the distribution of \(\xi _{2n}^{2{\mathbb {Z}}}\), then we have that

where \({\bar{\nu }}\) is the so-called upper-invariant measure, defined on \(\Sigma \) and uniquely determined by its cylinders, which, in view of the so-called self-duality property (see, for example, (34) below), is such that

\(B \in \Sigma _{0}\), and where ‘\(\Rightarrow \)’ denotes weak convergence, which we define as convergence of the finite-dimensional distributions

as \(n \rightarrow \infty \). To see why we refer to \(\rho (p)\) as the asymptotic density, note that by (6) we have that \({\bar{\nu }}(\eta : \eta \cap O \not = \emptyset ) = \rho \). Further, (5) is abbreviated below as follows,

where \({\bar{\xi }}\) is a random field distributed according to \({\bar{\nu }}\), denoted as \({\bar{\xi }} \sim {\bar{\nu }}\) below.

Finally, before turning to our main statement in the section below, we introduce some additional notation. The shorthands \({\mathcal {L}}(X_{n}) \xrightarrow {w} {\mathcal {N}}(0,\sigma ^{2})\), as \(n \rightarrow \infty \), \(n \in {\mathbb {N}}\), and \(X_{n} \xrightarrow {w} {\mathcal {N}}(0,\sigma ^{2})\), as \(n \rightarrow \infty \), will be used in the sequel to denote weak convergence to a normal distribution with mean 0 and variance \(\sigma ^{2}\); that is, if \(F_{n}(x)\) is the cumulative distribution function of \(X_{n}\), then \(F_{n}(x) \rightarrow \int _{- \infty }^{x} (2\pi \sigma ^{2})^{- 1/2} e^{- u^{2}/(2\sigma ^{2})} \text{ d } u\), as \(n \rightarrow \infty \), for all \(x \in {\mathbb {R}}\).

2 Results

2.1 The CLT

To state our main result, we let \(p>p_{c}\) and \({\bar{\xi }} \sim {\bar{\nu }}\). We also let

Furthermore, we let \({\bar{{\mathbb {P}}}}\) be the probability measure obtained from \({\mathbb {P}}\) by conditioning on \(\Omega _{\infty }\), that is, \({\bar{{\mathbb {P}}}}(\cdot ) = {\mathbb {P}}(\cdot | \Omega _{\infty })\). We denote by \({\mathcal {L}}(X| \Omega _{\infty })\) the law of a r.v. X under \({\bar{{\mathbb {P}}}}\). In addition, we let \(r_{n} = \sup \xi _{n}\) and \(l_{n} = \inf \xi _{n}\), and we further let \(d_{n} = \frac{1}{2} (r_{n} - l_{n}) +1\). We also recall that \(\rho _{n} = {\mathbb {P}}(\Omega _{n})\).

Theorem 2.1

Let \(p > p_{c}\). We have that, as \(n \rightarrow \infty \),

From a technical perspective, the main novelty of our proof approach is the use of the (non-stopping) time of the last intersection of the two infinite endpoint processes. To briefly elaborate on this coupling observation (see the Lemmas following its definition in (44) for the exact statements), we let

where \(2{\mathbb {Z}}_{-} = \{ \dots ,-2, 0\}\), and \(2{\mathbb {Z}}_{+} = \{ 0, 2, \dots \}\). As will be seen from the proof, the distribution of \(|\xi _{n}| = \sum _{x=l_{n}}^{r_{n}} \xi _{n}(x)\) conditioned on \(\Omega _{\infty }\) is equal to that of \(\sum _{x=l_{n}^{+}}^{r^{-}_{n}} \xi _{n}^{2 {\mathbb {Z}}}(x)\) for all n after this random time occurs; therefore, asymptotics for the latter process allow us to infer the same asymptotics for the former one. In this manner, we circumvent the effects of conditioning on \(\Omega _{\infty }\) on the distribution of \(\xi _{n}\), and we are thus able to deduce Theorem 2.1 by working on the whole probability space and dealing instead with the partial sums of the infinite processes involved in \(\sum _{x=l_{n}^{+}}^{r^{-}_{n}} \xi _{n}^{2 {\mathbb {Z}}}(x)\). To deal with the fact that this is a non-stopping time, our proof relies on the independence inherited from the independence of the underlying Bernoulli r.v.’s in disjoint parts of \({\mathscr {L}}\), an observation applied in a different context in [37]. We also note that this coupling is intrinsic to two dimensions, since it relies on path intersection properties; hence, we expect that new methods will be required to extend this result to higher dimensions. On the other hand, we believe, and pursue in forthcoming work [54], that the techniques developed here can be used to prove the law of the iterated logarithm corresponding to Theorem 2.1. Furthermore, the proof of Theorem 2.1 relies on Proposition 2.3, stated in Sect. 2.3 below, and also incorporates an earlier observation due to [15]. A key ingredient for Proposition 2.3 to apply in the context of supercritical oriented percolation is Harris’ correlation inequality [34]; see also Theorem B.17 in [41] and the references therein.
In addition, our proof approach and the techniques involved differ from those used in known CLT’s for percolation processes, because we consider partial sums that are indexed randomly, by quantities depending on the state of the process itself.

2.2 Random-Indices CLT’s

We recall here the following definition.

Association A collection of r.v.’s \((X_{i}: i \in I)\), \(|I| = \infty \), is associated if for all finite sub-collections \(X_{1}, \dots , X_{m}\) and all coordinate-wise non-decreasing \(f_{1}, f_{2}: {\mathbb {R}}^{m} \rightarrow {\mathbb {R}}\) we have that \(\mathrm {Cov}({\tilde{f}}_{1}, {\tilde{f}}_{2}) \ge 0\), \({\tilde{f}}_{j} := f_{j}(X_{1}, \dots , X_{m})\), \(j=1,2\), whenever this covariance exists.
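For independent bond variables, association is Harris’ inequality: functions of \(\omega \) that are non-decreasing in each coordinate are positively correlated. The following brute-force sketch (our own illustration; the helper names are hypothetical) checks this exactly on a tiny piece of the lattice: it enumerates all bond configurations up to depth 3, weights them with exact rational product-measure probabilities, and computes covariances of functions of \(\xi _{3}\) that are non-decreasing in \(\omega \), such as the survival indicator \(1(\xi _{3} \ne \emptyset )\) and \(|\xi _{3}|\). Enumeration is only feasible for very small depths (\(2^{12}\) configurations at depth 3).

```python
from fractions import Fraction
from itertools import product


def lattice_bonds(depth):
    """Bonds (x, k, d), from (x, k) to (x + d, k + 1), reachable from the
    origin within `depth` steps (sites at time k are -k, -k + 2, ..., k)."""
    return [(x, k, d)
            for k in range(depth)
            for x in range(-k, k + 1, 2)
            for d in (-1, 1)]


def xi_at(config, bonds, depth):
    """xi_depth for the process started at the origin, for a 0/1 config."""
    is_open = dict(zip(bonds, config))
    current = {0}
    for k in range(depth):
        current = {x + d for x in current for d in (-1, 1) if is_open[(x, k, d)]}
    return current


def exact_covariance(f, g, p, depth):
    """Exact Cov(f(xi_depth), g(xi_depth)) under i.i.d. Bernoulli(p) bonds,
    computed by enumerating all 2 ** len(bonds) configurations."""
    bonds = lattice_bonds(depth)
    m = len(bonds)
    ef = eg = efg = Fraction(0)
    for config in product((0, 1), repeat=m):
        k = sum(config)
        weight = p ** k * (1 - p) ** (m - k)   # product-measure probability
        xi = xi_at(config, bonds, depth)
        fv, gv = f(xi), g(xi)
        ef += weight * fv
        eg += weight * gv
        efg += weight * fv * gv
    return efg - ef * eg


if __name__ == "__main__":
    p = Fraction(1, 2)
    survival = lambda xi: int(bool(xi))        # non-decreasing in omega
    print(exact_covariance(survival, len, p, 3))
```

Any pair of non-decreasing functions may be substituted for `survival` and `len`; Harris’ inequality predicts a nonnegative exact covariance in every case.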

Combined with known results, our proof approach yields certain random-index central limit theorems for associated triangular arrays of r.v.’s, which we obtain as direct byproducts. An important aspect of these statements is that nothing is assumed regarding independence between the summands and the family of random indices. In particular, Corollary 2.2 below is a random-index extension of the CLT in Theorem 1 of [13], under the additional proviso (11) below. Furthermore, in a directly analogous manner, which is thus omitted, one may also obtain the corresponding random-index extensions of Theorem 3 in [7], or of Theorem 3 in [44], under the same additional proviso.

Corollary 2.2

Let \(\{X_{n}(j): 0 \le j \le n\}\) be such that \({\mathbb {E}}(X_{n}(j))= 0\), \(\forall \) n, j, and that, for each n,

Suppose also that

Furthermore, suppose that \(u(r) = \sup _{j, n}\sum _{|k - j| \ge r} \mathrm {Cov}(X_{n}(j), X_{n}(k))\), \(r \ge 0\), is such that

Let \(S_{n}(i) = \sum _{j=0}^{i} X_{n}(j)\), and assume in addition that

Let \((N_{n}, n \in {\mathbb {N}})\) be integer-valued and positive r.v.’s, such that

for some \(0<\theta \le 1\).

We then have that

and also that

where \(\sigma ^{2}:= \lim _{n \rightarrow \infty } \text{ Var } (S_{[ \theta n]} / \sqrt{[\theta n]})\), \(0<\sigma ^{2}<\infty \).
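To illustrate the kind of statement in Corollary 2.2, here is a small Monte Carlo sketch on a toy model of our own choosing; it does not verify the proviso (11) or the other displayed hypotheses, which are not reproduced here. We take \(X_{j} = Z_{j} + Z_{j+1}\) with \(Z_{j}\) i.i.d. standard normal: these are non-decreasing functions of independent variables, hence associated, they have covariances vanishing beyond lag 1, and their long-run variance is \(\mathrm {Var}(X_{0}) + 2\mathrm {Cov}(X_{0}, X_{1}) = 4\). The random index \(N_{n} = [\theta n] + \#\{j < \sqrt{n}: X_{j} > 0\}\) depends on the summands but satisfies \(N_{n}/n \rightarrow \theta \). The function name is hypothetical.

```python
import math
import random
import statistics


def randomly_indexed_sums(n, theta=0.5, reps=1000, seed=0):
    """Samples of S(N_n) / sqrt(N_n), where S(m) = X_0 + ... + X_{m-1}.

    Toy model (an assumption, not taken from the paper): X_j = Z_j + Z_{j+1}
    with Z_j i.i.d. N(0, 1). The X_j are non-decreasing functions of
    independent variables, hence associated, with long-run variance
    Var(X_0) + 2 Cov(X_0, X_1) = 2 + 2 = 4.
    The random index N_n = [theta n] + #{j < sqrt(n): X_j > 0} depends on
    the summands, and N_n / n -> theta.
    """
    rng = random.Random(seed)
    base = int(theta * n)
    window = int(math.sqrt(n))
    length = base + window                      # N_n never exceeds this
    samples = []
    for _ in range(reps):
        z = [rng.gauss(0.0, 1.0) for _ in range(length + 1)]
        x = [z[j] + z[j + 1] for j in range(length)]
        n_rand = base + sum(1 for j in range(window) if x[j] > 0)
        samples.append(sum(x[:n_rand]) / math.sqrt(n_rand))
    return samples


samples = randomly_indexed_sums(2000)
print(statistics.mean(samples), statistics.variance(samples))  # roughly 0 and 4
```

The sample mean and variance should be close to 0 and 4, consistent with a normal limit with \(\sigma ^{2} = 4\) despite the dependence between \(N_{n}\) and the summands.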

In addition to Theorem 1 in [13], the proof of Corollary 2.2 relies on an application of Lemma 4.2, which we derive on the way to the proof of Proposition 2.3 below.

2.3 Anscombe’s Condition

Proposition 2.3 below concerns a condition on the deviations of randomly-indexed partial sums from deterministically indexed ones. In the i.i.d. case, this condition was shown in [3].Footnote 9 That its validity extends to the generality of Proposition 2.3 had not been anticipated; see Remark 3.3. To state it, we write \(X_{n} \xrightarrow {p} X\), as \(n \rightarrow \infty \), to denote convergence in probability. Further, we let \(\{X_{t}(j): (j, t) \in {\mathbb {L}}\}\) and let \(S_{t}(u, v) = \sum _{j =u}^{v} X_{t}(j)\). We introduce the following assumptions, which we invoke in Proposition 2.3.

furthermore, let \(S_{t}^{+}(v) = \sum _{j = 0}^{v} X_{t}(j)\), \(S_{t}^{-}(u) = \sum _{j = 0}^{u} X'_{t}(j)\), \(X'_{t}(j) = X_{t}(-j-1)\), \(u, v \ge 0\), \(j\ge 0\) and assume that

We further let \((M_{t}: t\ge 0)\) and \((m_{t}: t\ge 0)\) be such that \((M_{t}, t) \in {\mathbb {L}}\) and that \((m_{t}, t) \in {\mathbb {L}}\); we assume that, for some \(0<\theta <\infty \),

Proposition 2.3

We let \(\{X_{t}(j): (j, t) \in {\mathbb {L}}\}\) and let \(S_{t}(u, v) = \sum _{j =u}^{v} X_{t}(j)\). Let us assume that conditions (13), (14), (15), and (16) are fulfilled. We let \((M_{t}: t\ge 0)\) and \((m_{t}: t\ge 0)\) be such that \((M_{t}, t) \in {\mathbb {L}}\) and that \((m_{t}, t) \in {\mathbb {L}}\) and, further, assume that (17) is fulfilled. We then have that

Here and throughout, and in the above statement in particular, we write \(\sum _{x = -c}^{C}\) for \(\sum _{x = -[c] -1 }^{ [C] }\), where \([\cdot ]\) denotes the largest integer not exceeding the argument, and we also use the notational convention \(\sum _{0}^{-1} := 0\).

The proof of Proposition 2.3 extends the direct approach of [51] to showing the Anscombe condition in the case of i.i.d. summands. Our proof invokes the so-called Hájek–Rényi inequality for associated r.v.’s, due to [11]. The proof of Theorem 2.1 given here relies on Proposition 2.3 and thus follows an elementary approach; see also Remark 3.12 for a different approach. As noted above, the random-index CLT’s in Sect. 2.2 are also consequences of Proposition 2.3, which we find of independent interest.
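For orientation, the classical (i.i.d.-style) form of Anscombe's condition requires that for every \(\varepsilon , \eta > 0\) there exist \(\delta > 0\) and \(n_{0}\) such that \({\mathbb {P}}(\max _{|m-n| \le \delta n} |S_{m} - S_{n}| > \varepsilon \sqrt{n}) < \eta \) for all \(n \ge n_{0}\). The sketch below estimates this exceedance probability by simulation; it is our own illustration, not the form of Proposition 2.3, and uses the hypothetical toy associated sequence \(X_{j} = Z_{j} + Z_{j+1}\), \(Z_{j}\) i.i.d. standard normal. The same paths are reused for every \(\delta \), so the estimates are non-decreasing in \(\delta \) by construction.

```python
import math
import random


def anscombe_exceedance(n, deltas, eps=0.5, reps=300, seed=1):
    """Estimate P(max_{|m - n| <= delta n} |S_m - S_n| > eps sqrt(n)) for each
    delta in `deltas` (all deltas must lie in [0, 1]), for the toy associated
    sequence X_j = Z_j + Z_{j+1}, Z_j i.i.d. N(0, 1); the same simulated paths
    are reused for every delta."""
    rng = random.Random(seed)
    hi = n + int(max(deltas) * n)               # largest index needed
    counts = [0] * len(deltas)
    for _ in range(reps):
        z = [rng.gauss(0.0, 1.0) for _ in range(hi + 1)]
        partial = [0.0]                          # partial[m] = X_0 + ... + X_{m-1}
        for j in range(hi):
            partial.append(partial[-1] + z[j] + z[j + 1])
        for i, delta in enumerate(deltas):
            w = int(delta * n)
            dev = max(abs(partial[m] - partial[n]) for m in range(n - w, n + w + 1))
            if dev > eps * math.sqrt(n):
                counts[i] += 1
    return [c / reps for c in counts]


if __name__ == "__main__":
    print(anscombe_exceedance(1000, [0.001, 0.01, 0.2]))
```

Shrinking \(\delta \) drives the estimated exceedance probability toward 0, which is the behavior Anscombe's condition formalizes.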

Outline of Proofs The remainder of this paper is organized as follows. The proof of Theorem 2.1, by means of Proposition 2.3, is given in Sect. 3. The preliminaries used in this proof are stated separately first, in Sect. 3.1, and an alternative proof of Proposition 3.2, stated there, is given in the Appendix for completeness. Sect. 4 contains the proof of Proposition 2.3, see Sect. 4.1, and that of Corollary 2.2, see Sect. 4.2.

3 Theorem 2.1

3.1 Preliminaries

We briefly state certain facts on oriented percolation that we use later on.

Some Notation We introduce the following definitions, which will also simplify notation below. Recall that we let \(r^{-}_{n} = \sup \xi _{n}^{2{\mathbb {Z}}_{-}}\) and \(l_{n}^{+}= \inf \xi _{n}^{2{\mathbb {Z}}_{+}}\), where \(2{\mathbb {Z}}_{-} = \{ \dots ,-2, 0\}\), and \(2{\mathbb {Z}}_{+} = \{ 0, 2, \dots \}\). We let

if \(l_{n} \le r_{n}\), and \({\mathcal {I}}_{n} = O\), otherwise. Similarly, we let

if \(l_{n}^{+} \le r_{n}^{-}\), and \({\mathcal {J}}_{n} = O\), otherwise. To see our motivation for considering \({\mathcal {J}}_{n}\) (and, analogously, \({\mathcal {I}}_{n}\)), note that

and \(|{\mathcal {J}}_{n}| = 1\), otherwise. We also define the family of centered r.v.’s \(({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x): x \in 2{\mathbb {Z}})\), which will play a central rôle in our analysis below, as follows. We let

where \(\rho _{n} = {\mathbb {P}}(\Omega _{n})\) is defined in (2). We note that \(({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x): x \in 2{\mathbb {Z}})\) are zero-mean, since by (34) below, \({\mathbb {E}}(\xi _{n}^{2{\mathbb {Z}}}(x)) = \rho _{n}\).

The Basic Coupling We state an important observation due to [14], which is a consequence of path intersection properties. We have that

\({\mathbb {P}}\text{-a.s. }\) and, in particular,

\({\mathbb {P}}\text{-a.s. }\) and further, (22) gives that

\({\mathbb {P}}\text{-a.s. }\). Furthermore, since \(\Omega _{n} = \{r_{k} \ge l_{k}, \forall k \le n\}\), we also have that

Further, we note that (25) is in fact a special case of the following statement regarding general initial configurations. Let \(\eta ^{-}\) and \(\eta ^{+}\) be such that \(\eta ^{-}(O) =\eta ^{+}(O) =1\), \(\eta ^{-}(x)=0\) for \( x\ge 2\), and \(\eta ^{+}(x) = 0\) for \(x \le -2\), but otherwise arbitrary. Letting \(r_{n}^{\eta ^{-}} = \sup \{x: \xi _{n}^{\eta ^{-}}(x) =1 \}\) and \(l_{n}^{\eta ^{+}}= \inf \{x: \xi _{n}^{\eta ^{+}}(x) = 1\}\), we have that, for all \(n \ge 1\), on \(\Omega _{n}\), \(r_{n} = r^{\eta ^{-}}_{n}\) and \(l_{n} = l_{n}^{\eta ^{+}}\), and, further, that

For proofs of these statements, see, for instance, \(\S \)3 in [15]; see also [14, 27].
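The basic coupling can be checked on simulated realizations. The sketch below (our own; helper names hypothetical) tests the statements in the form in which they are usually quoted, e.g., in \(\S \)3 of [15]: on \(\Omega _{n}\), \(\xi _{n} = \xi _{n}^{2{\mathbb {Z}}} \cap [l_{n}, r_{n}]\), \(r_{n} = r_{n}^{-}\) and \(l_{n} = l_{n}^{+}\). A single realization \(\omega \) is shared by all processes by caching bond states. The infinite initial sets are replaced by a finite window of even sites; since a site (x, n) with \(|x| \le n\) can only be reached from (y, 0) with \(|y| \le 2n\), the window reproduces the infinite processes exactly on \([-n, n]\), which contains \([l_{n}, r_{n}]\) and, on \(\Omega _{n}\), also \(r_{n}^{-}\) and \(l_{n}^{+}\).

```python
import random


def make_bonds(p, seed):
    """Fixed realization omega of i.i.d. Bernoulli(p) bonds, sampled lazily:
    open_bond(x, k, d) is the state of the bond from (x, k) to (x + d, k + 1)."""
    rng = random.Random(seed)
    cache = {}

    def open_bond(x, k, d):
        if (x, k, d) not in cache:
            cache[(x, k, d)] = rng.random() < p
        return cache[(x, k, d)]

    return open_bond


def evolve(eta, n, open_bond):
    """xi_n^eta = {x : (y, 0) -> (x, n) for some y in eta}, for the given omega."""
    current = set(eta)
    for k in range(n):
        current = {x + d for x in current for d in (-1, 1) if open_bond(x, k, d)}
    return current


def check_coupling(p, n, seed):
    """On Omega_n, check xi_n = xi_n^{2Z} on [l_n, r_n], r_n = r_n^-, l_n = l_n^+."""
    open_bond = make_bonds(p, seed)
    xi = evolve({0}, n, open_bond)
    if not xi:                                   # not on Omega_n: nothing to check
        return True
    # Sites (x, n) with |x| <= n are reached only from |y| <= 2n, so this even
    # window reproduces xi_n^{2Z} (resp. 2Z_-, 2Z_+) exactly on [-n, n].
    window = range(-2 * n - 2, 2 * n + 3, 2)
    xi_all = evolve(window, n, open_bond)
    xi_minus = evolve([y for y in window if y <= 0], n, open_bond)
    xi_plus = evolve([y for y in window if y >= 0], n, open_bond)
    l, r = min(xi), max(xi)
    same_set = xi == {x for x in xi_all if l <= x <= r}
    return same_set and r == max(xi_minus) and l == min(xi_plus)


if __name__ == "__main__":
    print(all(check_coupling(0.7, 40, seed) for seed in range(100)))
```

Because the identities hold for every realization on \(\Omega _{n}\), the check should never fail, whatever p, n, and seed are used.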

The Asymptotic Velocity For all \(p > p_{c}\), there is \(\alpha = \alpha (p)>0\), such that

\({\mathbb {P}}\)-a.s. Further, (27) together with (23) yields that

where we refer to \(\alpha := \alpha (p)\) as the asymptotic velocity. For a proof of (27) we refer to Theorem 1.4 in [14], and also (7) in \(\S \) 3 in [15].

The SLLN Let \(p > p_{c}\). Let \(\rho \) and \(\alpha \) be the asymptotic density and velocity, as defined in (2) and in (27), respectively. We have that

For a proof of (29) we refer to Theorem 9 in [21]; see also (2) in \(\S \) 13 in [15]. We mention here that, from (22) and (28), since \(|{\mathcal {I}}_{n}| = \frac{r_{n}-l_{n}}{2}+1\), we have that, as \(n \rightarrow \infty \), \(\displaystyle {\frac{|{\mathcal {I}}_{n}|}{n} \rightarrow \alpha ,\, {\bar{{\mathbb {P}}}} \text{ a.s. }}\). Thus, (29) yields that, as \(n \rightarrow \infty \), \(\displaystyle {\frac{\sum _{x \in {\mathcal {I}}_{n}} \xi _{n}(x)}{|{\mathcal {I}}_{n}|} \rightarrow \rho , \quad {\bar{{\mathbb {P}}}} \text{ a.s. }}\).
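A single long trajectory gives a rough feel for these laws of large numbers. The following sketch (ours; the function name is hypothetical) runs the process from the origin and, on survival up to time n, returns \(r_{n}/n\), which should approach \(\alpha (p)\), and \(|\xi _{n}|/|{\mathcal {I}}_{n}|\) with \(|{\mathcal {I}}_{n}| = \frac{r_{n}-l_{n}}{2}+1\), which should approach \(\rho (p)\). It is a finite-n illustration only; convergence can be slow near \(p_{c}\).

```python
import random


def growth_statistics(p, n, seed=0):
    """Run the process from the origin for n steps; return (r_n / n,
    |xi_n| / |I_n|) with |I_n| = (r_n - l_n) / 2 + 1, or None if xi_n is empty."""
    rng = random.Random(seed)
    current = {0}
    for _ in range(n):
        current = {x + d for x in current for d in (-1, 1) if rng.random() < p}
        if not current:
            return None
    r, l = max(current), min(current)
    interval_size = (r - l) // 2 + 1      # sites of the right parity in [l_n, r_n]
    return r / n, len(current) / interval_size


if __name__ == "__main__":
    print(growth_statistics(0.8, 1000, seed=1))
```

As a sanity check, at p = 1 every site of the right parity in \([-n, n]\) is occupied, so both ratios equal 1.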

Large Deviations Let \(p > p_{c}\) and let \(a< \alpha (p)\). Then the following limit exists and is strictly negative,

Below, we also require the following known elementary consequence of (30): there are \(C, \gamma \in (0, \infty )\), such that

\(n \ge 1\); see, for instance, [15], p. 1031, \(\S \) 12, first display in the proof of (1).

Monotonicity and Self-Duality An immediate consequence of the construction is that

\({\mathbb {P}}\)-a.s. Further, we have that, for all n even,

\(A, B \subset 2{\mathbb {Z}}\), and analogously for n odd. The proof of (33) (see \(\S \) 8, (2), p. 1021 in [15]) relies on the observation that, after reversing the direction of all arrows in any realization, the law of the process started from (B, 2n), defined analogously by these new paths and going backwards in time, is the same as that of the process started from (B, 0); and, moreover, that a path connecting (A, 0) to (B, 2n) exists in the original sample point if and only if there is a backwards-in-time path connecting (B, 2n) to (A, 0) in the corresponding sample point. By an application of (33) and the definition of the upper invariant measure \({\bar{\nu }}\), see (5), we note that,

\(A \in \Sigma _{0}\). Furthermore, (34), together with the definition of \(\rho \) in (2), gives that

CLT for the Upper Invariant Measure: Decay of Correlations Whenever \(p > p_{c}\), the upper invariant measure \({\bar{\nu }}\) possesses positive, and exponentially decaying, correlations. That is, if \({\bar{\xi }} \sim {\bar{\nu }}\), we have that there are \(C, \gamma \in (0, \infty )\), such that

\(x \in 2{\mathbb {Z}}\). As pointed out in [21], p. 2 (see also the final Remark in \(\S \) 6 in [27]), property (36) implies the following Lemma by general results for random fields; see, for instance, Theorem 12 in [46], or the list of references before Proposition 4.18 in Chpt. I of [39].

Lemma 3.1

\(\displaystyle {{\mathcal {L}}\left( \frac{\sum _{x = -\alpha n}^{\alpha n} ({\bar{\xi }}(x) - \rho )}{\sqrt{\alpha n}}\right) \xrightarrow {w} {\mathcal {N}}(0, \sigma ^{2})}\), as \(n \rightarrow \infty \), \(\sigma ^{2} < \infty \),

where, since \({\bar{\xi }}\) is strictly stationary (translation invariant), \(\sigma ^{2} = \mathrm {Var}({\bar{\xi }}(0)) + 2 \sum _{x \ge 2,\, x \in 2{\mathbb {Z}}} \mathrm {Cov}({\bar{\xi }}(0), {\bar{\xi }}(x))\), with \(\mathrm {Var}({\bar{\xi }}(0)) = \rho - \rho ^{2}\), and \(\sigma ^{2} < \infty \) by (36).
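The finiteness of \(\sigma ^{2}\) can be made explicit by a geometric-series bound. Since (36) is not reproduced here, the following worked bound assumes that it takes the form \(0 \le \mathrm {Cov}({\bar{\xi }}(0), {\bar{\xi }}(x)) \le C e^{-\gamma |x|}\), \(x \in 2{\mathbb {Z}}\); if (36) is stated differently, the constants change but the argument is the same.

\[
\sigma^{2} = (\rho - \rho^{2}) + 2\sum_{k \ge 1} \mathrm{Cov}\big(\bar{\xi}(0), \bar{\xi}(2k)\big)
\le \rho - \rho^{2} + 2C \sum_{k \ge 1} e^{-2\gamma k}
= \rho - \rho^{2} + \frac{2C e^{-2\gamma}}{1 - e^{-2\gamma}} < \infty .
\]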

Furthermore, the following property, stronger than (36), is shown in [21]. To state it, consider \(({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x): x \in 2{\mathbb {Z}})\), as defined in (21). For all \(p >p_{c}\), there are \(C, \gamma \in (0, \infty )\), such that, for any n and \((x_{i} \in 2{\mathbb {Z}}: i =1, \dots , k)\), \(k < \infty \), \(|x_{i}- x_{j}| > 2m\), we have that

and we refer to Theorem 8 in [21], see also (1), p. 1033, [15], for a proof of (37). Finally, we mention two other routes to Lemma 3.1. One of them is given in the discussion prior to Theorem 3.23 in Chpt. VI of [39]. This approach relies on deriving, by means of (30), that the convergence in (5) occurs exponentially fast, which, as shown there by general results, implies that \({\bar{\nu }}\) has exponentially decaying correlations, from which the conclusion follows as noted above. The other route is given in \(\S \) 6 of [27], where Lemma 3.1 is derived under the condition that there exist \(C, \gamma \in (0, \infty )\) such that \({\mathbb {P}}({\bar{\Omega }}_{\infty }^{\{0, \dots , 2n\}}) \le C e ^{-\gamma n}\) for all \(n \ge 1\), where \({\bar{E}}\) denotes the complement of an event E; this condition is shown there to be valid for sufficiently large values of p, and was later shown for all \(p> p_{c}\) in [21].

CLT for the Infinite Process in a Cone We state here an observation pointed out in [15], see \(\S \) 13, (4); see also p. 286 in [16]. Property (37), together with the corresponding extension of Theorem 3 in [44] to triangular arrays, yields that \(\xi _{n}^{2 {\mathbb {Z}}}\) obeys classic CLT behavior. To state this explicitly, recall the definition of \({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x) = \xi _{n}^{2{\mathbb {Z}}}(x) - \rho _{n}\) in (21). We let \(p >p_{c}\), let \(\alpha >0\) be the associated asymptotic velocity, let \({\bar{\xi }} \sim {\bar{\nu }}\), and let \(\sigma ^{2}:= \sum _{x \in 2{\mathbb {Z}}} \mathrm {Cov}({\bar{\xi }}(O), {\bar{\xi }}(x))\), as in (7).

Proposition 3.2

\(\displaystyle {{\mathcal {L}}\left( \frac{\sum _{x = - \alpha n}^{\alpha n} {\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x)}{\sqrt{\alpha n}}\right) \xrightarrow {w} {\mathcal {N}}(0, \sigma ^{2}), \text{ as } n \rightarrow \infty ,}\) and \(\sigma ^{2} < \infty \).

Remark 3.3

The following heuristic, suggested by properties of the basic coupling above, is stated in (5) there:

Note that, as expected there, and proved later in [25] and [37], each term of the RHS, when diffusively normalized, converges in distribution to a normal law, which suggests that the diffusively rescaled fluctuations of the RHS would not converge in distribution to zero; see also the form of the variance conjectured in the latter reference for Theorem 2.1. Proposition 2.3 will allow us to show that, when normalized, the LHS converges in law to zero.

An Exponential Estimate We require below the following known estimate. Recall that \(\rho _{n} ={\mathbb {P}}(\Omega _{n})\) and \(\rho = {\mathbb {P}}(\Omega _{\infty })\). Let \(p > p_{c}\). There are \(C, \gamma \in (0, \infty )\), such that

\(n \ge 1\). To see that (38) follows from known facts, note that, if \(p > p_{c}\), then there are \(C, \gamma \in (0, \infty )\), such that

\(n \ge 1\); for a proof of the above display, see [21], and also [15, (1), p. 1031]. By the law of total probability, and because \(\Omega _{n} \supseteq \Omega _{\infty }\), we have that

Combining the two displays above, and noting that by definition, if \(m \le n\), then \(\Omega _{n} \subseteq \Omega _{m}\), and therefore \({\mathbb {P}}(\Omega _{n}) - {\mathbb {P}}(\Omega _{\infty }) \ge 0\), we arrive at (38).
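The argument can be written out in two lines. Since the displays are not reproduced here, this sketch assumes that the first display above reads \({\mathbb {P}}(\Omega _{n} \cap {\bar{\Omega }}_{\infty }) \le C e^{-\gamma n}\) (survival up to time n without percolation) and that (38) is the bound \(0 \le \rho _{n} - \rho \le C e^{-\gamma n}\). Using \(\Omega _{\infty } \subseteq \Omega _{n}\),

\[
\rho_{n} = \mathbb{P}(\Omega_{n}) = \mathbb{P}(\Omega_{n} \cap \Omega_{\infty}) + \mathbb{P}(\Omega_{n} \cap \bar{\Omega}_{\infty}) = \rho + \mathbb{P}(\Omega_{n} \cap \bar{\Omega}_{\infty}),
\]

and hence

\[
0 \le \rho_{n} - \rho = \mathbb{P}(\Omega_{n} \cap \bar{\Omega}_{\infty}) \le C e^{-\gamma n}, \qquad n \ge 1 .
\]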

Elementary Facts We next give certain elementary probability statements. To this end, we let \((X_{n}: n\ge 0)\) and \((Y_{n}: n \ge 0)\) be collections of r.v.’s. For a proof of Lemma 3.4 stated next, see, for instance, 5.11.4 in [31]. Lemma 3.5 concerns the basic fact that almost sure convergence implies convergence in distribution; see, for instance, Theorem 5.3.1 in [31]. Finally, Lemma 3.6 follows by noting that, for every \(k \ge 0\), \(X_{n}(\omega ) - X_{k + n}(\omega ) \rightarrow 0\), as \(n \rightarrow \infty \), for all \(\omega \in \{\tau = k\}\), and then using the partition \(\cup _{k \ge 0} \{\tau = k\}\) to conclude that \(X_{n} - X_{\tau + n} \rightarrow 0\) a.s.

Lemma 3.4

We have that

as \(n \rightarrow \infty \). Furthermore,

\(\gamma \in {\mathbb {R}}\backslash \{0\}\), as \(n \rightarrow \infty \).

Lemma 3.5

We have that, as \(n \rightarrow \infty \),

Lemma 3.6

Let \(\tau < \infty \) a.s., but otherwise arbitrary. We have that \(X_{\tau + n} - X_{n} \rightarrow 0\) a.s..

3.2 Proof of Theorem 2.1

This section mainly contains the proof of Theorem 2.1, organized as follows. The proof is given first; it relies on Propositions 3.7 and 3.9, which are stated within it and proved immediately afterward, in the order stated. To avoid interrupting its course, the proofs of the various auxiliary Lemmas required along the way are postponed to the end of this section. In Remark 3.12 we briefly discuss the modifications required to obtain the invariance principles (IP’s) associated with Theorem 2.1.

Some remarks on alternative approaches are given at the end of these proofs.

Proof of Theorem 2.1

Note that, since \({\mathcal {I}}_{n} = \{x: l_{n} \le x \le r_{n}, (x,n) \in {\mathbb {L}}\}\), the result amounts to the statement

Taking also into account that \(\sum _{x \in {\mathcal {I}}_{n}}( \xi _{n}(x) - \rho _{n}) = |\xi _{n}| - | {\mathcal {I}}_{n}|\rho _{n}\) on \(\Omega _{\infty }\), if we let \(A_{n} = \frac{\sum _{x \in {\mathcal {I}}_{n}}(\xi _{n}^{O}(x) - \rho _{n})}{\sqrt{|{\mathcal {I}}_{n}|}}\), then, to complete this proof, it suffices to show that

We next state a key proposition, followed by an auxiliary lemma that we require; as announced, the proofs of these propositions and lemmas are given afterward. Recall first that \(r^{-}_{n} = \sup \xi _{n}^{2{\mathbb {Z}}_{-}}\) and \(l_{n}^{+}= \inf \xi _{n}^{2 {\mathbb {Z}}^{+}}\), where \(2{\mathbb {Z}}_{-}= \{ \dots ,-2, 0\}\) and \(2 {\mathbb {Z}}^{+} = \{ 0, 2, \dots \}\). Recall further that \(({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x): (x,n) \in {\mathbb {L}})\) is given by \({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x) = \xi _{n}^{2{\mathbb {Z}}}(x) - \rho _{n}\), where \(\rho _{n} = {\mathbb {P}}(\Omega _{n})\), as defined in (21). Recall also that \({\mathcal {J}}_{n} = \{x: l_{n}^{+} \le x \le r_{n}^{-}, (x,n) \in {\mathbb {L}}\}\) whenever \(l_{n}^{+} \le r_{n}^{-}\), and \({\mathcal {J}}_{n} = O\) otherwise, as in (20).
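The quantities \(r_{n}^{-}\), \(l_{n}^{+}\) and \({\mathcal {J}}_{n}\) recalled above can be made concrete with a small simulation. The Python sketch below is not from the paper, and its function names (sample_bonds, evolve, check_coupling) are hypothetical. It samples one bond configuration, runs \(\xi ^{O}\), \(\xi ^{2{\mathbb {Z}}_{-}}\), \(\xi ^{2{\mathbb {Z}}^{+}}\) and \(\xi ^{2{\mathbb {Z}}}\) on it, and checks the standard path-crossing identity: on \(\{\xi _{n}^{O} \ne \emptyset \}\), \(r_{n}^{O} = r_{n}^{-}\), \(l_{n}^{O} = l_{n}^{+}\), and \(\xi _{n}^{O}\) coincides with \(\xi _{n}^{2{\mathbb {Z}}}\) on \({\mathcal {J}}_{n}\). This is the identity that makes \({\mathcal {J}}_{n} = {\mathcal {I}}_{n}\) and \({\bar{A}}_{n} = A_{n}\) on survival. Infinite initial sets are truncated to a window of half-width \(2N\); by the light-cone property, this leaves every site \(|y| \le N\) exact up to time \(N\).

```python
import random


def sample_bonds(n_steps, half_width, p, seed=0):
    """bonds[n][x] = (bond (x,n)->(x-1,n+1) open, bond (x,n)->(x+1,n+1) open).

    Bonds are i.i.d. Bernoulli(p) and are only sampled for |x| <= half_width;
    bonds outside this window are treated as closed.
    """
    rng = random.Random(seed)
    bonds = []
    for n in range(n_steps):
        level = {}
        for x in range(-half_width, half_width + 1):
            if (x + n) % 2 == 0:  # (x, n) belongs to the lattice L
                level[x] = (rng.random() < p, rng.random() < p)
        bonds.append(level)
    return bonds


def evolve(initial, bonds):
    """Occupied sets xi_0, ..., xi_N, all driven by the same bond configuration."""
    current = frozenset(initial)
    history = [current]
    for level in bonds:
        nxt = set()
        for x in current:
            to_left, to_right = level.get(x, (False, False))
            if to_left:
                nxt.add(x - 1)
            if to_right:
                nxt.add(x + 1)
        current = frozenset(nxt)
        history.append(current)
    return history


def check_coupling(n_steps, p, seed):
    """On {xi_n^O nonempty}: r_n^O = r_n^-, l_n^O = l_n^+, xi_n^O = xi_n^{2Z} on J_n."""
    w = 2 * n_steps  # light cone: sites |y| <= n_steps stay exact up to time n_steps
    bonds = sample_bonds(n_steps, w, p, seed)
    xi_o = evolve({0}, bonds)
    xi_minus = evolve(range(-w, 1, 2), bonds)    # 2Z_-, truncated to [-w, 0]
    xi_plus = evolve(range(0, w + 1, 2), bonds)  # 2Z^+, truncated to [0, w]
    xi_all = evolve(range(-w, w + 1, 2), bonds)  # 2Z, truncated to [-w, w]
    for n in range(n_steps + 1):
        if not xi_o[n]:
            continue  # the identities are only claimed on survival up to time n
        r_minus, l_plus = max(xi_minus[n]), min(xi_plus[n])
        if (max(xi_o[n]), min(xi_o[n])) != (r_minus, l_plus):
            return False
        on_j = {x for x in xi_all[n] if l_plus <= x <= r_minus}
        if on_j != set(xi_o[n]):
            return False
    return True
```

Because the identity is deterministic given the bonds, check_coupling should return True for every seed and every value of p, not merely with high probability.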

Proposition 3.7

Let \(\displaystyle {{\bar{A}}_{n} = \frac{\sum _{x \in {\mathcal {J}}_{n}} {\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x)}{\sqrt{|{\mathcal {J}}_{n}|}}}\). We have that

Before stating the second key proposition, we need the following auxiliary lemma.

Lemma 3.8

Let

We have that \(\tau < \infty \), a.s.

We can now state the second key proposition.

Proposition 3.9

\({\mathcal {L}}({\bar{A}}_{n + \tau }) = {\mathcal {L}}(A_{n}| \Omega _{\infty })\), for all \(n \ge 0\).

By Lemma 3.8, an application of Lemma 3.6 gives that, as \(n \rightarrow \infty \),

Moreover, by Lemma 3.5, (45) gives that \({\mathcal {L}}({\bar{A}}_{n + \tau } - {\bar{A}}_{n}) \rightarrow 0\) as \(n \rightarrow \infty \), and hence Proposition 3.7, combined with (39), yields that

Proposition 3.9 and (46) yield (42), which completes the proof. \(\square \)

Proof of Proposition 3.7

From Proposition 3.2 we have that

where we recall that \(\alpha := \alpha (p)>0\), \(p > p_{c}\), is the asymptotic velocity defined in (27). Moreover, (27) gives that \(\sqrt{\frac{|{\mathcal {J}}_{n}|}{[\alpha n]}} \rightarrow 1\) a.s. as \(n \rightarrow \infty \); therefore, by Lemma 3.4, (40), we have that

Hence, provided that

then (47) together with Lemma 3.4, (39), yields (43), which proves the result.

It remains to prove (48). To do so, we show that the hypotheses of the general Proposition 2.3 are fulfilled when \(({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x), l_{n}^{+}, r_{n}^{-})\) plays the role of \((X_{t}(j), m_{t}, M_{t})\) there.

a) Recall that \({\mathbb {E}}(\xi _{n}^{2{\mathbb {Z}}}(x)) = \rho _{n}\), an equality that comes from self-duality, see (33). Hence the r.v.’s \(({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x))\) are centered, and assumption (13) holds.

b) Assumption (14) holds for \(({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x))\), as follows. By a corollary to Harris’ correlation inequality [34], see [39, Thm. 2.14, Chpt. II], which applies since every deterministic configuration is positively correlated, \((\xi _{n}^{2 {\mathbb {Z}}})\) has positive correlations for all n. Since \(\xi _{n}^{2 {\mathbb {Z}}}\) takes values in a partially ordered set, this gives that the r.v.’s \(\{\xi _{n}^{2 {\mathbb {Z}}}(x)\}\) are associated. Hence \(({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x))\) are also associated, because, directly from the definition, increasing functions of associated r.v.’s are associated.

c) Since \(\xi _{n}^{2 {\mathbb {Z}}}(x) \in \{0,1\}\), we have \({\mathbb {E}}|\xi _{n}^{2 {\mathbb {Z}}}(x)| ^{2} \le 1\), and therefore assumption (15) is also fulfilled.

d) (37) implies (16): since the covariance is linear in each argument when the other is fixed, we obtain \(C^{-} = C^{+} = \frac{C}{1-\gamma } < \infty \).

e) Finally, (17) holds for \(m_{t} = l_{n}^{+}\) and \(M_{t} = r^{-}_{n}\) by (27).

Hence (48) holds, and the proof is complete. \(\square \)
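Items a) and b) can be checked by hand on small lattices. The Python sketch below is not part of the paper (all function names are hypothetical). It computes exact laws with rational arithmetic by enumerating bond configurations, and it confirms, for small n, the self-duality identity \({\mathbb {E}}(\xi _{n}^{2{\mathbb {Z}}}(x)) = \rho _{n}\) used in a), and the nonnegativity of \(\mathrm{Cov}(\xi _{n}^{2{\mathbb {Z}}}(x), \xi _{n}^{2{\mathbb {Z}}}(x+2))\) behind b). This check rests on the elementary Harris–FKG inequality for increasing functions of independent bonds, not on the Markov-process version [34] cited in b). The initial set \(2{\mathbb {Z}}\) is truncated to the backward light cone of the target sites, which does not change the computed probabilities.

```python
from fractions import Fraction
from itertools import product


def step_law(law, p, keep):
    """Advance an exact law on finite occupied sets by one time step.

    Every occupied site x opens its bonds to x - 1 and x + 1 independently
    with probability p; sites y with keep(y) False are discarded because they
    can no longer influence the sites we look at.
    """
    new_law = {}
    for occupied, prob in law.items():
        sites = sorted(occupied)
        for bonds in product((False, True), repeat=2 * len(sites)):
            weight = prob
            nxt = set()
            for i, x in enumerate(sites):
                for is_open, y in ((bonds[2 * i], x - 1), (bonds[2 * i + 1], x + 1)):
                    weight *= p if is_open else 1 - p
                    if is_open and keep(y):
                        nxt.add(y)
            key = frozenset(nxt)
            new_law[key] = new_law.get(key, 0) + weight
    return new_law


def law_from_2z(n, p, targets):
    """Exact law at time n of xi_n^{2Z}, restricted to the sites in `targets`."""
    def near(y, r):
        return any(abs(y - t) <= r for t in targets)

    # Only even starting sites within distance n of a target can reach it.
    start = frozenset(y for y in range(min(targets) - n, max(targets) + n + 1)
                      if y % 2 == 0 and near(y, n))
    law = {start: Fraction(1)}
    for k in range(n):
        law = step_law(law, p, keep=lambda y, r=n - k - 1: near(y, r))
    return law


def survival_probability(n, p):
    """rho_n = P(xi_n^O is nonempty), by exact enumeration."""
    law = {frozenset({0}): Fraction(1)}
    for _ in range(n):
        law = step_law(law, p, keep=lambda y: True)
    return sum(prob for occupied, prob in law.items() if occupied)


def dual_density(n, p):
    """E xi_n^{2Z}(x) at the lattice site x = n % 2, by exact enumeration."""
    x = n % 2
    law = law_from_2z(n, p, [x])
    return sum(prob for occupied, prob in law.items() if x in occupied)


def covariance(n, p):
    """Cov(xi_n^{2Z}(x), xi_n^{2Z}(x + 2)) at x = n % 2, computed exactly."""
    x, y = n % 2, n % 2 + 2
    law = law_from_2z(n, p, [x, y])
    p_x = sum(q for occ, q in law.items() if x in occ)
    p_y = sum(q for occ, q in law.items() if y in occ)
    p_xy = sum(q for occ, q in law.items() if x in occ and y in occ)
    return p_xy - p_x * p_y
```

For example, survival_probability(1, Fraction(1, 2)) equals Fraction(3, 4), that is, \(1 - (1-p)^{2}\) at \(p = 1/2\).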

Proof of Proposition 3.9

We will show that

for all \(k \ge 0, a\in {\mathbb {R}}\). To see that the above display suffices to complete the proof, note that, by Lemma 3.8, the law of total probability, and (49), we have that

To state the next auxiliary lemma, we let \({\mathcal {F}}_{n}\) denote the \(\sigma \)-algebra generated by the part of the construction consisting of the bonds whose end-vertices have time coordinate no greater than n.

Lemma 3.10

We let

\((x,n) \in {\mathbb {L}}\), \(m \ge 0\). We have the following representation

for some \(F \in {\mathcal {F}}_{n-1}\).

We next state another general auxiliary statement that we use below.

Lemma 3.11

Let \(\eta ^{-}, \eta ^{+}\) be such that \(\eta ^{-}(O) =\eta ^{+}(O) =1\) and \(\eta ^{-}(x)=0\), \( x\ge 2\), \(\eta ^{+}(x) = 0\), \(x \le -2\). Let \(r_{n}^{\eta ^{-}} = \sup \{x: \xi _{n}^{\eta ^{-}}(x) =1 \}\) and \(l_{n}^{\eta ^{+}}= \inf \{x: \xi _{n}^{\eta ^{+}}(x) = 1\}\). We have that \(\Omega _{\infty } =\{r_{n}^{\eta ^{-}} \ge l_{n}^{\eta ^{+}}, \text{ for } \text{ all } n \ge 1\}\).

We now have that, for any \((x,k) \in {\mathbb {L}}\),

where in (52) we substitute (51) from Lemma 3.10; in (53) we use that, by construction, events measurable with respect to disjoint parts of \({\mathscr {L}}\) are independent; in (54) we use translation invariance with respect to (x, k); and finally, in (55) we use that, by (22), \({\bar{A}}_{n} = A_{n}\) a.s. on \(\Omega _{\infty }\).

The law of total probability gives

where (56) follows from (55), and (57) follows from the fact that \({\mathbb {P}}(|r_{k}^{-}| < \infty | \tau = k) = 1\) for all k, since \({\mathbb {P}}(|r_{n}^{-}| < \infty ) = 1\), which follows from (27) by considering the contrapositive statement. This completes the proof. \(\square \)

It remains to prove Lemmas 3.8, 3.10, and 3.11, which were stated and used above.

Proof of Lemma 3.8

We derive the estimate that there exist \(C, \gamma \in (0, \infty )\) such that

for all \(n \ge 1\).

Let \(E^{r}_{n} = \{\exists m\ge n: r^{-}_{m} < 0\} \text{ and } E^{l}_{n} = \{\exists m\ge n: l^{+}_{m} >0\}.\) From (31), we have that

\( n \ge 1\). Further, note that

where (60) follows from (44) by considering the contrapositive relation, i.e., that

Hence, by subadditivity and since, by symmetry, \({\mathbb {P}}(E^{r}_{n}) = {\mathbb {P}}(E^{l}_{n})\), we obtain

which, together with (59), completes the proof of (58). \(\square \)
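For orientation, the subadditivity step can be made explicit under an assumption we add here: suppose that the estimate from (31) takes the exponential form \({\mathbb {P}}(r^{-}_{m} < 0) \le C e^{-\gamma m}\), \(m \ge 1\), with \(C, \gamma > 0\) (the precise form of (31) is not reproduced in this section). A union bound over \(m \ge n\), the symmetry \({\mathbb {P}}(E^{r}_{n}) = {\mathbb {P}}(E^{l}_{n})\), and a geometric sum then give a bound of the shape required in (58):

```latex
\[
  \mathbb{P}\bigl(E^{r}_{n}\cup E^{l}_{n}\bigr)
  \le 2\,\mathbb{P}\bigl(E^{r}_{n}\bigr)
  \le 2\sum_{m\ge n} C e^{-\gamma m}
  = \frac{2C}{1-e^{-\gamma}}\, e^{-\gamma n},
  \qquad n \ge 1 .
\]
```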

Proof of Lemma 3.10

To prove (51), note that it suffices to show that

However, Lemma 3.11 and translation invariance give that, for any \((x, n) \in {\mathbb {L}}\),

hence (61) is identical to (44), and (51) follows. \(\square \)

Proof of Lemma 3.11

Note that, since \(\Omega _{\infty } = \cap _{n \ge 1} \Omega _{n}\), this statement follows directly from (26). \(\square \)

Remark 3.12

Consider the IP corresponding to Proposition 3.2: letting \(V_{t}^{n} = \frac{1}{\sigma \sqrt{\alpha n}}\sum _{x = - \alpha n}^{\alpha n} {\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x)\), it states that

which means that the laws of the random functions \(V^{n}\) converge weakly to the Wiener measure W on the space D; see Section 13 in [6] for definitions and background. To derive (62), one may either modify the proof of Theorem 3 in [44] to cover arrays of r.v.’s in which the length of each row grows linearly with the row number, or, alternatively, modify the proof of Proposition 3.2 in the Appendix by invoking the IP extension of Lemma 3.1 there instead. Letting \(U_{t}^{n} = \frac{1}{\sigma \sqrt{\alpha n}}\sum _{x \in {\mathcal {J}}_{n}} {\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x)\), by (62) and (27) the assumptions of Theorem 14.4 in [6] are fulfilled, which yields

By suitably modifying the argument from (44) onward in the proof above, the IP corresponding to Theorem 2.1 can also be derived from (63). Our proof of Theorem 2.1 differs from the approach sketched here in that it does not require invoking any general statements. Moreover, the latter approach does not go through Proposition 2.3 and hence does not yield the random-indices CLTs given in Sect. 2.2.

4 Proposition 2.3 and Corollary 2.2

4.1 Proof of Proposition 2.3

The proof of Proposition 2.3 is divided into two parts. We first derive Proposition 2.3 from Lemma 4.2, which is stated next and proved immediately afterward in this section. Before that, we state the Hájek–Rényi inequality for associated r.v.’s, due to [11]; see also [53]. Recall the definition of association given in Sect. 2.2.

Lemma 4.1

Let \((X_{j}: j = 1, \dots , n)\) be associated r.v.’s such that \({\mathbb {E}}(X_{j})= 0\) for all j, and let \((c_{j}: j = 1, \dots , n)\) be a non-increasing sequence of positive numbers. Let \(S_{k} = \sum _{j=1}^{k} X_{j}\). Then we have that

We can now state the following lemma.

Lemma 4.2

Let \(\{X_{t}(j): j, t \ge 0 \}\) be such that \({\mathbb {E}}(X_{t}(j))= 0\), \(\forall \,t, j\), and let \({S_{t}(i) = \sum _{j =0}^{i} X_{t}(j)}\). We assume the following:

Furthermore, let \((N_{t}: t\ge 0)\) be non-negative integer-valued r.v.’s such that, for some \(0<\theta <\infty \),

Then, we have that

Proof of Proposition 2.3

Let \(M'_{t}= M_{t} \cdot 1\{M_{t} \ge 0\}\) and \(m_{t}' = m_{t}\cdot 1\{m_{t} \le 0\}\), where \(M_{t}\) and \(m_{t}\) are as in (17). In this notation, we have that

and thus, by the triangle inequality, we have that

Moreover, the assumptions of Lemma 4.2 are satisfied, which yields that

as \(t \rightarrow \infty \). Hence, from (68) and the display above, we have that

To conclude the proof of (18), note that, again by the triangle inequality,

so that in view of (69), it suffices to show that

which follows simply by noting that, for all \(\epsilon >0\),

and hence (70) follows, since from (17) we have that \(\lim _{t \rightarrow \infty } {\mathbb {P}}(M_{t} \le -1) = 0\) and \(\lim _{t \rightarrow \infty } {\mathbb {P}}(m_{t} \ge 1) = 0\). The proof is thus complete. \(\square \)

Proof of Lemma 4.2

Without loss of generality, we may take \(\theta = 1\). Let \(\epsilon \in (0,1)\), and let \(m(t) = [t(1-\epsilon ^{3})]+1\) and \(n(t) = [t(1+\epsilon ^{3})]\). Let \(Y_{i}(t) = X_{t}(t+i)\), \(i = 1, \dots , n(t)\), and denote their partial sums by \(Z_{k}(t) = \sum _{i=1}^{k} Y_{i}(t)\); then we have that

By (64), Lemma 4.1 applies, and choosing \(c_{k} = \frac{1}{\sqrt{t}}\) there gives that

where C is independent of t, and (72) comes from (65) and (66). Similarly, letting \(Y'_{i}(t) =X_{t}(t+1-i)\), for \(i = 1, \dots , t - m(t) +1\), and \(Z'_{k}(t) = \sum _{i=1}^{k}Y'_{i}(t)\), we have that

By (64), we can again apply Lemma 4.1 with \(c_{k} = \frac{1}{\sqrt{t}}\), so that

where again we use (65) and (66) in (74).

Partitioning according to the event \(\{N_{t} \in [m(t), n(t)]\}\) and its complement gives that

where in the last inequality we invoke (72) and (74). Moreover, (67) gives

and hence, from (75), we get that

which, since \(\epsilon \) is arbitrary, completes the proof. \(\square \)
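The mechanism behind Lemma 4.2, namely that replacing a deterministic index by a random index \(N_{t}\) with \(N_{t}/t \rightarrow \theta \) does not change the Gaussian limit, goes back to Anscombe [3] and Rényi [51]. The Python sketch below, which is not from the paper, illustrates it in the simplest associated case: i.i.d. \(\pm 1\) steps, which are associated because independent r.v.’s are. The random index is a hypothetical choice that depends on the path, \(N_{t} = t + \lfloor \sqrt{t} \rfloor \) on \(\{S_{t} > 0\}\) and \(N_{t} = t\) otherwise, so that \(N_{t}/t \rightarrow 1\). The sample mean and variance of \(S_{N_{t}}/\sqrt{N_{t}}\) should then be close to 0 and 1.

```python
import math
import random


def random_index_samples(t, n_runs, seed=0):
    """Samples of S_{N_t} / sqrt(N_t) for i.i.d. +-1 steps.

    Hypothetical random index: N_t = t + isqrt(t) if S_t > 0, else N_t = t.
    N_t depends on the path, yet N_t / t -> 1 as t grows.
    """
    rng = random.Random(seed)
    extra = math.isqrt(t)
    samples = []
    for _ in range(n_runs):
        steps = [1 if rng.random() < 0.5 else -1 for _ in range(t + extra)]
        s_t = sum(steps[:t])
        n_t = t + extra if s_t > 0 else t
        samples.append(sum(steps[:n_t]) / math.sqrt(n_t))
    return samples


def mean_and_variance(xs):
    """Sample mean and unbiased sample variance."""
    m = sum(xs) / len(xs)
    v = sum((x - m) ** 2 for x in xs) / (len(xs) - 1)
    return m, v


if __name__ == "__main__":
    m, v = mean_and_variance(random_index_samples(400, 2000, seed=1))
    print(f"mean ~ {m:.3f}, variance ~ {v:.3f}")  # should be near 0 and 1
```

The random index is deliberately chosen to depend on \(S_{t}\), since that is the situation in which a random-index statement is more than a triviality.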

4.2 Proof of Corollary 2.2

The following theorem, due to [13], is used in the proof of the corollary that follows.

Theorem 4.3

Let \(\{X_{n}(j): 0 \le j \le n\}\) be such that \({\mathbb {E}}(X_{n}(j))= 0\), \(\forall \,n, j\), and suppose that (8), (9), and (10) hold. Letting \(S_{n}(n) = \sum _{j =0}^{n} X_{n}(j)\), we then have that

where \(\sigma ^{2}:= \lim _{n \rightarrow \infty } {\text{ Var }} (S_{n}(n) / \sqrt{n})\), \(0<\sigma ^{2}<\infty \).

Proof of Corollary 2.2

It suffices to show only the first conclusion of Corollary 2.2, since the second follows from it by an application of Lemma 3.4. There is also no loss of generality in assuming \(\theta =1\). The hypotheses of Theorem 4.3 are then met, and hence (76) holds. Combining (76) with Lemma 4.2, an application of Lemma 3.4 completes the proof. \(\square \)

Notes

References

Ahlswede, R., Daykin, D.E.: An inequality for the weights of two families of sets, their unions and intersections. Probab. Theory Relat. Fields 43(3), 183–185 (1978)

Alon, N., Spencer, J.H.: The Probabilistic Method. Wiley, New York (2016)

Anscombe, F.: Large-sample theory of sequential estimation. Math. Proc. Camb. Philos. Soc. 48(4), 600–607 (1952)

Bezuidenhout, C., Gray, L.: Critical attractive spin systems. Ann. Probab. 22, 1160–1194 (1994)

Bezuidenhout, C., Grimmett, G.: The critical contact process dies out. Ann. Probab. 18, 1462–1482 (1990)

Billingsley, P.: Convergence of Probability Measures. Wiley, New York (1995)

Birkel, T.: The invariance principle for associated processes. Stoch. Process. Appl. 27, 57–71 (1987)

Bollobás, B., Riordan, O.: Percolation. Cambridge University Press, Cambridge (2006)

Broadbent, S.R., Hammersley, J.M.: Percolation processes: I. Crystals and mazes. Math. Proc. Camb. Philos. Soc. 53(3), 629–641 (1957)

Bulinski, A., Shashkin, A.: Limit Theorems for Associated Random Fields and Related Systems, vol. 10. World Scientific, Hackensack (2007)

Christofides, T.: Maximal inequalities for demimartingales and a strong law of large numbers. Stat. Probab. Lett. 50(4), 357–363 (2000)

Cox, J., Griffeath, D.: Occupation time limit theorems for the voter model. Ann. Probab. 11, 876–893 (1983)

Cox, T., Grimmett, G.: Central limit theorems for associated random variables and the percolation model. Ann. Probab. 12, 514–528 (1984)

Durrett, R.: On the growth of one dimensional contact processes. Ann. Probab. 8, 890–907 (1980)

Durrett, R.: Oriented percolation in two dimensions. Ann. Probab. 12, 999–1040 (1984)

Durrett, R.: Lecture Notes on Particle Systems and Percolation. Wadsworth, Belmont (1988)

Durrett, R.: The contact process, 1974-1989. Cornell University, Mathematical Sciences Institute (1991)

Durrett, R.: Ten Lectures on Particle Systems. Lecture Notes in Math, vol. 1608. Springer, New York (1995)

Durrett, R.: Probability: Theory and Examples. Cambridge University Press, Cambridge (2010)

Durrett, R., Griffeath, D.: Contact processes in several dimensions. Probab. Theory Relat. Fields 59(4), 535–552 (1982)

Durrett, R., Griffeath, D.: Supercritical contact processes on \({\mathbb{Z}}\). Ann. Probab. 11, 1–5 (1983)

Esary, J.D., Proschan, F., Walkup, D.W.: Association of random variables, with applications. Ann. Math. Stat. 38(5), 1466–1474 (1967)

Fortuin, C.M., Kasteleyn, P.W., Ginibre, J.: Correlation inequalities on some partially ordered sets. Commun. Math. Phys. 22(2), 89–103 (1971)

Georgii, H.O., Häggström, O., Maes, C.: The random geometry of equilibrium phases. Phase Transit. Crit. Phenom. 18, 1–142 (2001)

Galves, A., Presutti, E.: Edge fluctuations for the one-dimensional supercritical contact process. Ann. Probab. 15, 1131–1145 (1987)

Griffeath, D.: Additive and Cancellative Interacting Particle Systems. Springer, New York (1979)

Griffeath, D.: The basic contact processes. Stoch. Proc. Appl. 11, 151–185 (1981)

Grimmett, G.: Percolation, 2nd edn. Springer, Berlin (1999)

Grimmett, G.: Probability on Graphs: Random Processes on Graphs and Lattices. Cambridge University Press, Cambridge (2012)

Gut, A.: Stopped Random Walks. Springer, New York (2009)

Gut, A.: Probability: A Graduate Course, 2nd edn. Springer, New York (2012)

Harris, T.E.: A lower bound for the critical probability in a certain percolation process. Proc. Camb. Philos. Soc. 56, 13–20 (1960)

Harris, T.E.: Contact interactions on a lattice. Ann. Probab. 2, 969–988 (1974)

Harris, T.E.: A correlation inequality for Markov processes in partially ordered state spaces. Ann. Probab. 5, 451–454 (1977)

Harris, T.E.: Additive set-valued Markov processes and graphical methods. Ann. Probab. 6, 355–378 (1978)

Holley, R.: Remarks on the FKG inequalities. Commun. Math. Phys. 36(3), 227–231 (1974)

Kuczek, T.: The central limit theorem for the right edge of supercritical oriented percolation. Ann. Probab. 17, 1322–1332 (1989)

Lebowitz, J.L.: Bounds on the correlations and analyticity properties of ferromagnetic Ising spin systems. Commun. Math. Phys. 28(4), 313–321 (1972)

Liggett, T.M.: Interacting Particle Systems. Springer, New York (1985)

Liggett, T.M.: An improved subadditive ergodic theorem. Ann. Probab. 13, 1279–1285 (1985)

Liggett, T.M.: Stochastic Interacting Systems: Contact, Voter and Exclusion Processes. Springer, New York (1999)

Liggett, T.M.: Continuous Time Markov Processes: An Introduction, vol. 113. American Mathematical Soc, Providence, RI (2010)

Newman, C.M.: Normal fluctuations and the FKG inequalities. Commun. Math. Phys. 74(2), 119–128 (1980)

Newman, C.M., Wright, L.: An invariance principle for certain dependent sequences. Ann. Probab. 9, 671–675 (1981)

Newman, C.M.: A general central limit theorem for FKG systems. Commun. Math. Phys. 91(1), 75–80 (1983)

Newman, C.M.: Asymptotic Independence and Limit Theorems for Positively and Negatively Dependent Random Variables. Lecture Notes-Monograph Series, pp. 127–140 (1984)

Oliveira, P.E.: Asymptotics for Associated Random Variables. Springer, New York (2012)

Penrose, M.D.: A central limit theorem with applications to percolation, epidemics and Boolean models. Ann. Probab. 29, 1515–1546 (2001)

Penrose, M.D.: Multivariate spatial central limit theorems with applications to percolation and spatial graphs. Ann. Probab. 33(5), 1945–1991 (2005)

Rao, B.P.: Associated Sequences, Demimartingales and Nonparametric Inference. Springer, New York (2012)

Rényi, A.: On the central limit theorem for the sum of a random number of independent random variables. Acta Math. Hung. 11, 97–102 (1960)

Schonmann, R.: Central limit theorem for the contact process. Ann. Probab. 14, 1291–1295 (1986)

Sung, S.: A note on the Hajek-Renyi inequality for associated random variables. Stat. Probab. Lett. 78(7), 885–889 (2008)

Tzioufas, A.: The law of the iterated logarithm for supercritical 2D oriented percolation (in preparation)

Williams, D.: Probability with Martingales. Cambridge University Press, Cambridge (1991)

Acknowledgements

This work has been supported during non-overlapping periods of time by CONICET, by FAPESP grant 2016/03988-5, and, currently, by PNPD/CAPES.

Appendix

Proof of Proposition 3.2

We let \((\xi _{n}^{2{\mathbb {Z}}})\) and \(({\bar{\xi }}_{n})\) be the processes with starting sets \(2{\mathbb {Z}}\) and \({\bar{\xi }}_{0}\sim {\bar{\nu }}\), respectively, where we enlarge our probability space to support an independent \({\bar{\nu }}\)-distributed random set \(\mathbf{S}\), setting \({\bar{\xi }}_{0} = S\) on \(\{\mathbf{S} = S\}\). We also let \(K_{n}(a)\) be the set of points of \({\mathbb {L}}\) at time n inside the cone of slope \(a>0\) with apex (O, 0), that is, \(K_{n}(a) = \{x: -an \le x \le an \text{ and } (x,n) \in {\mathbb {L}}\}\), \(n \ge 0\). We have the following lemma.

Lemma 5.1

\(\{\forall x \in K_{n}(a), \xi _{n}^{2 {\mathbb {Z}}}(x) = {\bar{\xi }}_{n}(x)\}, \,\, \forall n \text{ large, } {\mathbb {P}}\text{-a.s. }\)

Proof

By the Borel–Cantelli lemma and the union bound, since \(|K_{n}(a)|\) grows linearly in n, it suffices to show that, for all \(a>0\), there are \(C, \gamma \) such that, for any \(x \in K_{n}(a)\),

\(n \ge 1\). However, we have that

\(n \ge 1\), where in the first line we used that \({\bar{\xi }}_{n} \supseteq \xi ^{2 {\mathbb {Z}}}_{n}\), by (32); in the second, this inclusion together with the law of total probability; in the third, (33), and (35) together with stationarity; and the last inequality comes from (38). This completes the proof. \(\square \)
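The Borel–Cantelli step invoked at the start of this proof can be spelled out as follows, assuming (our reading of the display that did not survive) that the estimate to be shown is \({\mathbb {P}}({\bar{\xi }}_{n}(x) \ne \xi ^{2{\mathbb {Z}}}_{n}(x)) \le C e^{-\gamma n}\) for \(x \in K_{n}(a)\), with \(C, \gamma > 0\). Since \(K_{n}(a)\) contains only sites of one parity in \([-an, an]\), we have \(|K_{n}(a)| \le an + 1\), and the union bound gives

```latex
\[
  \sum_{n\ge 1}\mathbb{P}\bigl(\exists x\in K_{n}(a):\ \bar{\xi}_{n}(x)\neq \xi^{2\mathbb{Z}}_{n}(x)\bigr)
  \le \sum_{n\ge 1}(an+1)\,C e^{-\gamma n} < \infty .
\]
```

Hence, by the Borel–Cantelli lemma, \({\mathbb {P}}\)-a.s. only finitely many n have a discrepancy inside \(K_{n}(a)\), which is the statement of Lemma 5.1.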

We also note here the following consequence of Lemma 3.1 above.

Corollary 5.2

\(\displaystyle {{\mathcal {L}}\left( \frac{\sum _{x \in K_{n}(\alpha )} ({\bar{\xi }}_{n}(x) - \rho )}{\sqrt{\alpha n}}\right) \xrightarrow {w} {\mathcal {N}}(0, \sigma ^{2})}\), as \(n \rightarrow \infty \).

Proof

Let \(\xi _{n}^{'}\) be such that

and note that, for all n, \(\xi _{n}^{'} \sim {\bar{\nu }}\). Applying Lemma 3.1 completes the proof. \(\square \)

Lemma 5.1 gives that \(\sum _{x \in K_{n}(\alpha )} \xi ^{2 {\mathbb {Z}}}_{n}(x) = \sum _{x \in K_{n}(\alpha )}{\bar{\xi }}_{n}(x), \forall n \text{ large, } {\mathbb {P}}\text{-a.s. }\), so that, by Corollary 5.2 and Lemma 3.4, (39), we have that

By the last display, since \({\hat{\xi }}_{n}^{2{\mathbb {Z}}}(x) = \xi _{n}^{2{\mathbb {Z}}}(x) - \rho _{n}\), another application of Lemma 3.4, (39), shows that it suffices to prove that \( \displaystyle {\sqrt{\alpha n} (\rho _{n} - \rho ) \rightarrow 0}\) as \(n \rightarrow \infty \), which holds by (38). Hence the proof is complete. \(\square \)
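To see why this condition is the right one, note that changing the centering from \(\rho \) to \(\rho _{n}\) shifts the normalized sum over \(K_{n}(\alpha )\) by \(|K_{n}(\alpha )|(\rho _{n} - \rho )/\sqrt{\alpha n}\), where \(|K_{n}(\alpha )| \le \alpha n + 1\). As an illustration, assuming that (38) supplies an exponential bound of the form \(|\rho _{n} - \rho | \le C e^{-\gamma n}\) with \(C, \gamma > 0\) (an assumption on our part), this shift vanishes:

```latex
\[
  \frac{|K_{n}(\alpha)|\,\lvert\rho_{n}-\rho\rvert}{\sqrt{\alpha n}}
  \le \frac{\alpha n+1}{\sqrt{\alpha n}}\, C e^{-\gamma n}
  \xrightarrow[n\to\infty]{} 0 .
\]
```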

Cite this article

Tzioufas, A. The Central Limit Theorem for Supercritical Oriented Percolation in Two Dimensions. J Stat Phys 171, 802–821 (2018). https://doi.org/10.1007/s10955-018-2040-y

DOI: https://doi.org/10.1007/s10955-018-2040-y