Abstract

The classical fluctuation-dissipation theorem predicts the average response of a dynamical system to an external deterministic perturbation via time-lagged statistical correlation functions of the corresponding unperturbed system. In this work we develop a fluctuation-response theory and test a computational framework for the leading order response of statistical averages of a deterministic or stochastic dynamical system to an external stochastic perturbation. In the case of a stochastic unperturbed dynamical system, we compute the leading order fluctuation-response formulas for two different cases: when the existing stochastic term is perturbed, and when a new, statistically independent, stochastic perturbation is introduced. We numerically investigate the effectiveness of the new response formulas for an appropriately rescaled Lorenz 96 system, in both the deterministic and stochastic unperturbed dynamical regimes.


1 Introduction

Under suitable conditions, the fluctuation-dissipation theorem (FDT) [28, 29, 41] furnishes an approximation to the statistical response of a dynamical system to a deterministic external perturbation via statistical correlations of the unperturbed dynamics. The FDT offers more insight into statistical properties of dynamical processes near equilibrium in various scientific applications [13, 15, 16, 18, 21,22,23,24,25, 30, 32, 37, 38]. In past works [1,2,3, 7, 8, 10,11,12], a computational framework predicting the average response of both deterministic and stochastic dynamical systems to a small deterministic external perturbation was developed and extensively studied.

In the current work, we develop the fluctuation-response theory and numerically test the computational framework of the response of statistical averages to a stochastic external perturbation, for both the deterministic and stochastic unperturbed dynamics. For the deterministic unperturbed dynamics, our set-up is similar to the one used in [35]; however, the formula we arrive at differs from the one in [35], as we comment below. For the stochastic unperturbed dynamics, we consider two different types of perturbations: first, where the existing stochastic term is perturbed, and, second, where a new, statistically independent, stochastic term is introduced. We test the computational framework of the stochastic response on the Lorenz 96 system [33, 34], which we have used in the past as a test-bed nonlinear chaotic system with forcing and dissipation for various purposes [1,2,3,4,5,6,7,8,9,10,11, 38].

Before going into the details, here we start by explaining the basic idea of the average response and how it can be expressed via the statistical properties of the underlying unperturbed dynamical system, and also what problems one runs into while considering what otherwise seems to be a rather simple dynamical set-up.

1.1 Deterministic Dynamics

We start by considering a system of ordinary differential equations of the form

\[\frac{\mathrm {d}\varvec{x}_t}{\mathrm {d}t}=\varvec{f}(\varvec{x}_t),\tag{1.1}\]

where t is a scalar time variable, \(\varvec{x}_t\) is an N-dimensional vector in the Euclidean space \({\mathbb R^N}\), and \(\varvec{f}:{\mathbb R^N}\rightarrow {\mathbb R^N}\) is a smooth vector field. Observe that the solution \(\varvec{x}_t\) can be specified in the form of a semigroup \(\phi _t\),

\[\varvec{x}_t=\phi _t\varvec{x},\tag{1.2}\]

where \(\varvec{x}\) is the initial condition. We assume that any solution \(\varvec{x}_t=\phi _t\varvec{x}\) of (1.1) is attracted, as \(t\rightarrow \infty \), to a compact set \(\mathcal M\subset {\mathbb R^N}\), on which it possesses a unique invariant ergodic measure \(\mu \). We will say that \(\mathcal M\) is the global attractor of (1.1). Here we assume that the system in (1.1) is chaotic and mixing, that is, it has positive first Lyapunov exponent and decaying time autocorrelation functions.

Let \(A(\varvec{x})\) be a twice-differentiable function on \({\mathbb R^N}\). We denote its \(\mu \)-average as

\[\langle A\rangle =\int _{\mathcal M}A(\varvec{x})\,\mathrm {d}\mu (\varvec{x}).\tag{1.3}\]

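Observe that even though \(A(\varvec{x}_t)\) changes with time t, its \(\mu \)-average \(\langle A(\varvec{x}_t)\rangle \) is fixed in t, due to the fact that \(\mu \) is invariant on \(\mathcal M\) under \(\phi _t\):

\[\langle A(\varvec{x}_t)\rangle =\int _{\mathcal M}A(\phi _t\varvec{x})\,\mathrm {d}\mu (\varvec{x})=\int _{\mathcal M}A(\varvec{x})\,\mathrm {d}\mu (\varvec{x})=\langle A\rangle .\tag{1.4}\]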

1.2 The Concept of the Average Response to a Stochastic Perturbation

Consider the situation where the average \(\langle A\rangle \) is computed across a statistical ensemble of solutions of (1.1), which is distributed according to the invariant measure \(\mu \) above. As we already pointed out, this average \(\langle A\rangle \) is constant in time. However, assume that, at \(t=0\), an external perturbation (that is, a stochastic modification of the right-hand side) is introduced into (1.1). Clearly, since the right-hand side is different, the measure \(\mu \) is no longer invariant for the new, modified dynamics. Because of that, the statistical average \(\langle A\rangle \) with respect to \(\mu \) becomes time-dependent for the perturbed dynamics. Here we do not assume that \(\mu \) is necessarily the Sinai–Ruelle–Bowen measure [45] as the finite time response to an external perturbation does not require it; an SRB measure is, however, necessary for the infinite time response to be differentiable with respect to a deterministic external perturbation [42, 43].

The difference between the new time-dependent ensemble average of A and its previous stationary (for the unperturbed dynamics) value is then called the “response”:

Observe that above the average is taken not only with respect to the statistical ensemble of solutions, but also over all possible realizations of the external stochastic perturbation. Our goal here is to derive the leading order term in the response, which depends only on statistics of the unperturbed dynamical system, under the assumption that the perturbation is sufficiently small.

The more obvious “brute force” approach would be to do the following:

1. Start with a point \(\varvec{x}\) on \(\mathcal M\), and emit two trajectories out of \(\varvec{x}\): the unperturbed one, given by \(\phi _t\varvec{x}\), and the perturbed one, given by the corresponding solution of the perturbed system.

2. Clearly, both trajectories, perturbed and unperturbed, are generally nonlinear functions of elapsed time t and initial condition \(\varvec{x}\). So, assuming that the unperturbed solution \(\phi _t\varvec{x}\) is “known”, figure out a suitable way to “expand” the perturbed solution around the unperturbed one in small increments, and keep only the leading order term.

3. Recall that this has to be done for every \(\varvec{x}\in \mathcal M\), so, average the above result out with respect to the invariant measure \(\mu \), and over all possible realizations of the external stochastic perturbation. Provided that the leading order response from the previous item is somehow expressed in terms of trajectories of the unperturbed system, the \(\mu \)-average can be replaced with the long-term time average, with help of Birkhoff’s theorem [14].
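The three steps above can be illustrated numerically on a toy problem. The sketch below is not the system studied in this work: it assumes a hypothetical scalar Ornstein-Uhlenbeck process \(\mathrm{d}x=-x\,\mathrm{d}t+\sigma \,\mathrm{d}W_t\) as the unperturbed dynamics, an independent noise term \(\varepsilon \,\mathrm{d}W_t'\) switched on at \(t=0\), and the observable \(A(x)=x^2\). The function name and all parameter names are illustrative. Each ensemble member emits an unperturbed and a perturbed trajectory from the same initial state, and the difference of A is averaged over the ensemble and over the realizations of the perturbation.

```python
import numpy as np

def brute_force_response(eps, sigma=1.0, t_end=1.0, dt=0.01,
                         n_ens=20000, seed=0):
    """Ensemble estimate of the response of A(x) = x**2 at time t_end.

    Toy model (an assumption of this sketch): dx = -x dt + sigma dW,
    perturbed from t = 0 by an independent noise term eps dW'.
    """
    rng = np.random.default_rng(seed)
    n_steps = int(round(t_end / dt))
    # Step 1: initial states drawn from the stationary density N(0, sigma^2/2);
    # each state emits an unperturbed and a perturbed trajectory.
    x = rng.normal(0.0, sigma / np.sqrt(2.0), size=n_ens)
    x_eps = x.copy()
    for _ in range(n_steps):
        dw = np.sqrt(dt) * rng.standard_normal(n_ens)      # unperturbed noise
        dw_new = np.sqrt(dt) * rng.standard_normal(n_ens)  # new, independent noise
        # Euler-Maruyama steps; both trajectories share the same dw.
        x = x - x * dt + sigma * dw
        x_eps = x_eps - x_eps * dt + sigma * dw + eps * dw_new
    # Step 3: average the difference of A over the ensemble and the noise.
    return np.mean(x_eps**2 - x**2)

eps = 0.3
estimate = brute_force_response(eps)
# Exact response of this linear toy model at t = 1.
exact = 0.5 * eps**2 * (1.0 - np.exp(-2.0))
print(estimate, exact)
```

For this linear toy model the exact response is \(\tfrac{1}{2}\varepsilon ^2(1-e^{-2t})\), so the ensemble estimate can be checked directly. For a nonlinear chaotic system no such closed form is available, which is what motivates the expansion in step 2.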

With the exception of averaging across realizations of stochastic perturbations, this is what was previously done for the deterministic perturbations of chaotic and stochastic dynamical systems [1,2,3, 7, 10,11,12]. It is a long and cumbersome way of deriving the response, and the result involves the (computationally expensive) tangent map of the unperturbed flow, that is, the derivative of the solution \(\phi _t\varvec{x}\) with respect to its initial condition \(\varvec{x}\):

\[\frac{\partial }{\partial \varvec{x}}\,\phi _t\varvec{x}.\tag{1.6}\]

The situation is further complicated by the fact that, for chaotic dynamical systems, the tangent map grows exponentially fast in t, which causes a numerical instability for moderate and long response times. Thus, this approach can only be practically used for rather short response times (although it is usually quite precise, provided that the response time is sufficiently short [1,2,3, 7]).
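The exponential growth of the tangent map is easy to observe numerically. The following sketch integrates the standard (unrescaled) Lorenz 96 model together with the tangent linear equation, in which the time derivative of the tangent map equals the Jacobian of \(\varvec{f}\) along the trajectory times the tangent map itself, starting from the identity matrix. The helper names, the RK4 time step and the spin-up length are assumptions of this sketch, not the settings used later in this work.

```python
import numpy as np

def l96(x, forcing=8.0):
    """Standard (unrescaled) Lorenz 96 right-hand side, periodic in the index."""
    return np.roll(x, 1) * (np.roll(x, -1) - np.roll(x, 2)) - x + forcing

def l96_jacobian(x):
    """Jacobian of l96 with respect to x (requires at least 4 variables)."""
    n = x.size
    i = np.arange(n)
    jac = -np.eye(n)
    jac[i, (i - 1) % n] += np.roll(x, -1) - np.roll(x, 2)  # d f_i / d x_{i-1}
    jac[i, (i + 1) % n] += np.roll(x, 1)                   # d f_i / d x_{i+1}
    jac[i, (i - 2) % n] -= np.roll(x, 1)                   # d f_i / d x_{i-2}
    return jac

def rk4_step(x, tmap, dt):
    """One RK4 step for the state and for d(tmap)/dt = Df(x_t) tmap."""
    k1x, k1t = l96(x), l96_jacobian(x) @ tmap
    x2 = x + 0.5 * dt * k1x
    k2x, k2t = l96(x2), l96_jacobian(x2) @ (tmap + 0.5 * dt * k1t)
    x3 = x + 0.5 * dt * k2x
    k3x, k3t = l96(x3), l96_jacobian(x3) @ (tmap + 0.5 * dt * k2t)
    x4 = x + dt * k3x
    k4x, k4t = l96(x4), l96_jacobian(x4) @ (tmap + dt * k3t)
    x_new = x + dt / 6.0 * (k1x + 2.0 * k2x + 2.0 * k3x + k4x)
    t_new = tmap + dt / 6.0 * (k1t + 2.0 * k2t + 2.0 * k3t + k4t)
    return x_new, t_new

def tangent_map_norms(n=40, dt=0.01, t_spinup=10.0, t_end=10.0, seed=0):
    """Spectral norm of the tangent map at each step after a spin-up."""
    rng = np.random.default_rng(seed)
    x = 8.0 + rng.standard_normal(n)
    for _ in range(int(round(t_spinup / dt))):
        x, _ = rk4_step(x, np.eye(n), dt)   # tangent map discarded in spin-up
    tmap = np.eye(n)
    norms = []
    for _ in range(int(round(t_end / dt))):
        x, tmap = rk4_step(x, tmap, dt)
        norms.append(np.linalg.norm(tmap, 2))
    return np.array(norms)
```

Plotting the logarithm of `tangent_map_norms()` against time should show a roughly linear trend, with a slope comparable to the largest Lyapunov exponent of the system.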

1.3 The Forward Kolmogorov Equation

Another way to compute the average response is to employ the concept of the probability density p of a statistical ensemble distribution. The key idea here is to use the fact that, while \(\varvec{x}_t\) is governed by nonlinear dynamics, the partial differential equation for p (called the forward Kolmogorov equation [19], and also the Fokker–Planck equation [41]) is linear. In particular, for the deterministic dynamical system in (1.1), the forward Kolmogorov equation for p is given by

\[\frac{\partial p}{\partial t}=-D\cdot (p\varvec{f}),\tag{1.7}\]

where D is the differentiation operator with respect to the vector-argument of the function it acts upon. Above, the dot-product of D with a vector-function \(\varvec{a}(\varvec{x})\) refers to

\[D\cdot \varvec{a}=\frac{\partial a_i}{\partial x_i},\tag{1.8}\]

with the usual summation convention. Observe that in order for the solution p of (1.7) to remain a probability density, its integral over \({\mathbb R^N}\) must remain equal to 1 (which, together with the non-negativity of p, implies that p must vanish at infinity), even if p itself changes with time. Indeed, one can verify that the integral of p over \({\mathbb R^N}\) is preserved by (1.7), which is necessary for p to remain a probability density.
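The conservation of the total probability can be checked in one line. The sketch below assumes that (1.7) has the standard continuity-equation form \(\partial p/\partial t=-D\cdot (p\varvec{f})\), and that the flux \(p\varvec{f}\) decays at infinity fast enough for the boundary term (written with the outward unit normal \(\varvec{n}\)) to vanish:

```latex
\frac{\mathrm{d}}{\mathrm{d}t}\int_{\mathbb R^N} p\,\mathrm{d}\varvec{x}
  = -\int_{\mathbb R^N} D\cdot(p\varvec{f})\,\mathrm{d}\varvec{x}
  = -\lim_{r\to\infty}\oint_{|\varvec{x}|=r} p\,\varvec{f}\cdot\varvec{n}\,\mathrm{d}S
  = 0 .
```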

The Kolmogorov equation above is an extremely useful tool for working with the statistical properties of the system in (1.1), since it describes the statistical distribution of the system in a direct fashion. Unfortunately, it cannot be used directly to compute the response of the deterministic system in (1.1), for the following reason.

Since, as stated earlier, any solution of (1.1) attracts to \(\mathcal M\) as t becomes infinite, it would be natural to think that, in the limit as \(t\rightarrow \infty \), p becomes the density of the ergodic invariant measure \(\mu \) on \(\mathcal M\). However, here lies the fundamental “incompatibility” of the Kolmogorov equation in (1.7), and the limiting dynamics of (1.1) on its global attractor \(\mathcal M\): for many applied dynamical systems, especially those with dissipation and forcing [17, 42, 43, 45], the invariant measure \(\mu \) on \(\mathcal M\) is not differentiable in \(\varvec{x}\) (it is also said that it is not continuous with respect to the Lebesgue measure on \(\mathcal M\)). In this situation, the (non-stationary) solution \(p(t,\varvec{x})\) of (1.7) contracts exponentially rapidly along certain directions of the phase space (while appropriately expanding transversally, so that its integral over \({\mathbb R^N}\) remains 1), becoming singular in the infinite time limit.

Observe that above we considered arguably the most simple setup for a dynamical system, which describes a wide class of applied problems. Yet, we cannot make use of the Kolmogorov equation (1.7) to statistically describe dynamics near the attractor of the system in (1.1), which is necessary for understanding of how the system responds to an external perturbation. Therefore, in order to be able to use the Kolmogorov equation in (1.7), we must be willing to consider a suitable modification of (1.1), which renders its invariant measure \(\mu \) continuous with respect to the Lebesgue measure on \({\mathbb R^N}\). Arguably, the simplest such modification is achieved via a stochastic noise added into the otherwise deterministic dynamical system in (1.1).

1.4 Stochastic Dynamics

Here we are going to consider a stochastic modification of (1.1), achieved via introducing an additional noise term via a Wiener process \(\varvec{W}_t\) of dimension K:

where the diffusion coefficient multiplying \(\mathrm {d}\varvec{W}_t\) is a smooth \(N\times K\) matrix-valued function of \(\varvec{x}\). For convenience, here we interpret the resulting integral of the solution

in the sense of Itô [26, 27]. The forward Kolmogorov equation for the differential equation in (1.9) is given by [19, 39]

where “ : ” denotes the Frobenius product of two matrices, so that

To ensure the smoothness of solutions of (1.11), here we follow [40] and assume that both \(\varvec{f}\) and the diffusion matrix have bounded derivatives of all orders, and that the product of the diffusion matrix with its transpose is uniformly positive definite in \({\mathbb R^N}\). The latter automatically means that the columns of the diffusion matrix span \({\mathbb R^N}\) for any \(\varvec{x}\in {\mathbb R^N}\), implying \(K\ge N\). Additionally, we will assume that there is a unique smooth stationary probability density \(p_0\) which sets the right-hand side of (1.11) to zero.

Observe that the solution \(\varvec{x}_t\) of (1.9) cannot be represented by a t-semigroup like in (1.2), since \(\varvec{W}_t\) depends on t explicitly. However, instead a similar representation can be done for the Kolmogorov equation in (1.11) with help of the transitional probability density \(p^*\). Indeed, let \(p^*(t,\varvec{x},\varvec{x}_0)\) denote the solution of (1.11), for which the initial condition at \(t=0\) is the delta-function \(\delta (\varvec{x}-\varvec{x}_0)\). Then, assuming that at time t the solution is \(p(t,\varvec{x})\), its extension \(p(t+s,\varvec{x})\) for \(s\ge 0\) can be expressed as a convolution with \(p^*\) as follows:

Now, let \(p_0\) denote the stationary smooth probability density of (1.11), such that

and, therefore,

Then, the statistical average of a function \(A(\varvec{x})\) is given by

where we assume that \(A(\varvec{x})\) is such that the integral above is finite. As before, if a statistical ensemble of solutions \(\varvec{x}_t\) of (1.9) is distributed according to \(p_0\), then the ensemble average of A is constant in time, even though each individual solution in such an ensemble is in general not stationary.

1.5 The Layout of the Paper

In what is to follow, we arrange the presentation in the reverse order (relative to what was presented above), due to the fact that, as was mentioned previously, it turns out to be easier to start with a stochastic differential equation of the form in (1.9) and derive the leading order response via the Kolmogorov equation (1.11), which we do in Sect. 2. Then, in Sect. 3 we return to the deterministic unperturbed dynamics, and derive the response formula in the “brute force” fashion, sketched above. In Sect. 4 we show that, if one formally replaces the invariant measure \(\mu \) of the deterministic system in (1.1) with a smooth density approximation, then the response formulas for the deterministic and stochastic unperturbed dynamics become equivalent. In Sect. 5 we derive simplified response formulas for both the deterministic and stochastic unperturbed dynamics under the assumption that the probability density of the unperturbed state is Gaussian, as was previously done in [9,10,11,12, 38] for deterministic perturbations. In Sect. 6 we present the numerical experiments with both the deterministic and stochastically forced Lorenz 96 models to verify the computed response formulas. Section 7 summarizes the results of the work.

2 Leading Order Response of a Stochastic Dynamics to a Stochastic Perturbation

We start by considering the response of the stochastic dynamics in (1.9). Since the invariant state \(p_0\) of the unperturbed dynamics in (1.9) is a smooth stationary solution of the Kolmogorov equation in (1.11), it is technically much easier to derive the corresponding leading order response formula here than for the deterministic unperturbed dynamics. We will consider two different types of perturbation: first, when the existing stochastic matrix is perturbed, and, second, when a new, statistically independent stochastic perturbation is added.

2.1 Perturbing the Existing Stochastic Term

First, we assume that the stochastic diffusion matrix already present in (1.9) is perturbed by a small time-dependent term starting at \(t=0\):

where \(0<\varepsilon \ll 1\) is a small constant parameter signifying that the perturbation is small, the perturbation matrix has the same dimension and smoothness properties as the diffusion matrix, and \(\eta (t)\) is a bounded, piecewise continuous and square-integrable function, which is zero for negative values of t. Then, the corresponding perturbed Kolmogorov equation is obtained from (1.11) by replacing the diffusion matrix with its perturbed counterpart:

We assume that the solution \(p^\varepsilon \) of the perturbed Kolmogorov equation above depends smoothly on \(\varepsilon \) for sufficiently small \(\varepsilon \), and admits the expansion

where \(p_0\) is the stationary solution of the unperturbed Kolmogorov equation, while the expansion terms \(p_i\), \(i>0\), are independent of \(\varepsilon \) and have zero initial conditions. We seek the perturbed solution in the leading order of \(\varepsilon \), which leads directly to

One can verify directly that the solution for \(p_1\) is given by

where \(\mathcal P^t\) is defined in (1.13). The response of A in the leading order of \(\varepsilon \) is thus given by

with

At this point, we use the definition of \(\mathcal P^t\) in (1.13) to obtain \(R_1(t)\) in terms of the time-correlation function [40, 41]

with

Above, the division by \(p_0\) is allowed since it is the solution to an elliptic equation which vanishes at infinity, and thus is never zero for finite \(\varvec{y}\).

For the practical computation of the time correlation function in (2.8), we use the Birkhoff–Khinchin theorem [20] and replace the spatial integrals in (2.8) with the time average along a long-term trajectory as

which results, after substituting the expression for \(B(\varvec{x}_s)\), in

The long-term trajectory \(\varvec{x}_s\) above is computed via a numerical simulation of (1.9), from an arbitrary initial condition.
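In practice, the time average above is a discrete sum over a stored trajectory. The following is a minimal sketch of that step only, assuming that the values \(A(\varvec{x}_s)\) and \(B(\varvec{x}_s)\) have already been evaluated along the trajectory at a fixed sampling interval; the function and argument names are hypothetical.

```python
import numpy as np

def lagged_correlation(a_series, b_series, max_lag):
    """Time-average estimate of <A(x_{s+t}) B(x_s)> for lags 0..max_lag steps.

    a_series, b_series: samples A(x_s) and B(x_s) along one long trajectory,
    stored at a fixed sampling interval (1-D arrays of equal length).
    """
    a = np.asarray(a_series, dtype=float)
    b = np.asarray(b_series, dtype=float)
    n = a.size
    corr = np.empty(max_lag + 1)
    for lag in range(max_lag + 1):
        # Pair A at time s + lag with B at time s and average over s.
        corr[lag] = np.mean(a[lag:] * b[:n - lag])
    return corr
```

The lag index converts to response time by multiplying with the sampling interval. The maximum lag should stay much smaller than the length of the series, so that every lag is averaged over a comparable number of samples.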

2.2 Adding a New Stochastic Term

Here we assume that a new small stochastic term is added to (1.9),

where the Wiener process \(\varvec{W}_t'\) is independent of \(\varvec{W}_t\). In order to derive the corresponding Kolmogorov equation, we rewrite the above equation in the form

where

The corresponding perturbed Kolmogorov equation is, obviously, given by

Further observing that

we arrive at

Observe that there is no first-order term in \(\varepsilon \), so we can expand \(p^\varepsilon \) near \(p_0\) in even powers of \(\varepsilon \) as

where, as before, \(p_{2i}\) for \(i>0\) are independent of \(\varepsilon \) and have zero initial condition, and similarly obtain the equation for \(p_2\) as

Again, one can verify that \(p_2\) is given by

The response of A in the leading order of \(\varepsilon \) (which is now \(\varepsilon ^2\)) is thus given by

with

Following the same steps as above for \(R_1(t)\), we express \(R_2(t)\) via the time average as

Observe that in this case the response is quadratic in \(\varepsilon \eta (t)\) (which is unlike the previous case, where the existing diffusion matrix was perturbed).
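The absence of a first-order term can be traced to the independence of the two Wiener processes. As a sketch, write \(\varvec{\sigma }\) for the existing diffusion matrix and \(\varvec{h}\) for the new perturbation matrix; these are placeholder symbols assumed here, not necessarily the notation of the formulas above. Stacking the two noise terms into a single block matrix acting on the combined Wiener process \((\varvec{W}_t,\varvec{W}_t')\), and comparing with the case of Sect. 2.1, gives

```latex
\begin{pmatrix}\varvec{\sigma} & \varepsilon\eta\,\varvec{h}\end{pmatrix}
\begin{pmatrix}\varvec{\sigma} & \varepsilon\eta\,\varvec{h}\end{pmatrix}^{T}
  = \varvec{\sigma}\varvec{\sigma}^{T}
  + \varepsilon^{2}\eta^{2}\,\varvec{h}\varvec{h}^{T},
\qquad\text{whereas}\qquad
(\varvec{\sigma}+\varepsilon\eta\,\varvec{h})
(\varvec{\sigma}+\varepsilon\eta\,\varvec{h})^{T}
  = \varvec{\sigma}\varvec{\sigma}^{T}
  + \varepsilon\eta\,(\varvec{\sigma}\varvec{h}^{T}+\varvec{h}\varvec{\sigma}^{T})
  + \varepsilon^{2}\eta^{2}\,\varvec{h}\varvec{h}^{T}.
```

The cross terms, which are linear in \(\varepsilon \eta \) and appear when the existing term is perturbed, are absent for an independent perturbation, so the leading correction to the Kolmogorov operator is of order \(\varepsilon ^2\).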

3 Leading Order Response of a Deterministic Dynamics to a Stochastic Perturbation

Now, we consider a small external stochastic perturbation of the deterministic system in (1.1) of the form

where the perturbation term has the same properties as in the previous section, while, for the purposes of the derivation, we additionally require \(\varvec{f}\) to be uniformly Lipschitz in \({\mathbb R^N}\) [19, 40] to ensure the existence of solutions to (3.1) (recall that the unperturbed system back in (1.1) does not necessarily require it for global existence). Here, however, we cannot use an expansion of Kolmogorov equation near the stationary unperturbed state, since this state may not necessarily be continuous with respect to the Lebesgue measure. Instead, we will have to employ the differentiability of the resulting stochastic flow with respect to the perturbation [31].

We denote the solution to the perturbed system in (3.1) by \(\varvec{x}_t^\varepsilon =\phi _t^\varepsilon \varvec{x}\), and rewrite (3.1) in the integral form as

where the stochastic integral is computed in the sense of Itô. Let \(A(\varvec{x})\) be a twice differentiable function, then one can write its second-order Taylor expansion in \(\varepsilon \) as

where “\(\otimes \)” is the outer product of two vectors, that is,

Also, the following notation is used above:

For the \(\varepsilon \)-derivative of \(\phi _t^\varepsilon \varvec{x}\) (which we assume to exist almost surely for finite t according to [31]) we compute

which results, by setting \(\varepsilon =0\), in

At this point, we need to solve the Itô integral equation above. Applying the Itô differentiation formula to both sides of (3.7) results in

At the same time, it is easy to verify that the tangent map from (1.6) satisfies

which further yields

Now we multiply both sides of the above identity by the inverse of the tangent map on the left, which results, after taking into account the identity

for an arbitrary matrix, in

Pulling the first term in the right-hand side above to the left, combining the terms and integrating from 0 to t, we arrive at

where the last term is the quadratic covariation of the inverse of the tangent map and \(\partial _\varepsilon \phi _t\varvec{x}\):

However, since we have assumed above that \(\partial _\varepsilon \phi _t\varvec{x}= \int _0^t\mathrm {d}\left( \partial _\varepsilon \phi _s\varvec{x}\right) \) is almost surely finite for finite t, the quadratic covariation above in (3.13) is almost surely zero. Further multiplying (3.12) by the tangent map on the left and taking into account its cocycle property, we finally arrive at

For the second \(\varepsilon \)-derivative we further obtain by the differentiation of (3.6)

which becomes, upon setting \(\varepsilon =0\),

Now recall that, according to the definition of the average response in (1.5), we should compute the expectation (that is, the average) of the second-order Taylor expansion in (3.3) over all realizations of \(\varvec{W}_t\), which leads to

One immediately observes that

where in the first identity the integrand is not a random variable, while in the second the integrand is adapted to the natural filtration of \(\varvec{W}_t\). After some computation while remembering Duhamel’s principle and Itô’s isometry, we arrive at

Now we recall that the expectations above are taken under the condition that the stochastic flows start at \(\varvec{x}\), which, in turn, belongs to the attractor of (1.1). Therefore, we further need to average the result above over the invariant measure \(\mu \) of the unperturbed system. The result is, after discarding the higher-order terms,

4 The Equivalence of the Response Formulas for the Deterministic and Stochastic Dynamics

While the response formula in (3.20b) is rather complicated for a practical use, one can actually show that the response operator R(t) in (3.20b) can be written in a more concise way:

Indeed, observe that, first,

where the combination of the Frobenius and outer product for matrices denotes

Next, differentiating (3.9) with respect to \(\varvec{x}\) yields

Duhamel’s principle then yields

Combining the results, we obtain

which leads to the above claim.

It is interesting that a different response formula was obtained in [35] for a stochastic perturbation of a deterministic dynamics. The derivation in [35] was also different: instead, a stochastic perturbation was used directly in the second-order response formula for deterministic perturbations [44], which was further scaled by a factor of one-half.

As we mentioned above, generally one cannot assume that the invariant measure of the deterministic dynamics of the form (1.1) possesses a density, since most often the compact set on which the solution of (1.1) lives has a complicated structure. However, let us assume that there exists a smooth probability density \(p_0(\varvec{x})>0\), such that \(p_0\mathrm {d}\varvec{x}\) is a suitable approximation, in a sense appropriate for our purposes, for the invariant measure \(\mathrm {d}\mu \). Under such a hypothetical assumption, one writes (4.1) in the form

Now that \(\mu \) is replaced by the density \(p_0(\varvec{x})\), one can integrate the above expression by parts, obtaining

Replacing the measure averages with the time averages with help of Birkhoff’s theorem, we obtain the same formula as in (2.23):

In other words, under the assumption of a differentiable approximation to the invariant state, the time-averaged response formula for the deterministic unperturbed dynamics is identical to the response formula for the stochastic dynamics in (2.23), where the external stochastic perturbation is statistically independent of the unperturbed noise term.

Below we will see that this approach allows one to obtain a sensible approximation to the response operator even in a situation where the unperturbed dynamics is purely deterministic, similar to what was observed for the deterministic perturbations in [10,11,12, 38].

5 The Quasi-Gaussian Approximation for the Response Operator

Observe that the response formulas for the stochastic unperturbed dynamics in (2.11) and (2.23) are not computable directly, since the equilibrium density \(p_0(\varvec{x})\) of (1.9) is not generally known explicitly. It is, theoretically, possible to compute the response in (4.1) by computing the tangent map in parallel with \(\varvec{x}_t\) (for more details, see [1,2,3, 7, 10,11,12]) and using (3.20b) expressed as a time-lagged autocorrelation function over the time-series average. However, the latter option is computationally very expensive, and, for a chaotic unperturbed dynamical system, it remains computationally stable only for short response times.

Instead, we are going to use a simplified method to compute the response, called the quasi-Gaussian FDT (qG-FDT) approximation [11]. The main idea of the qG-FDT approximation is that \(p_0(\varvec{x})\) in (2.11), (2.23) and (4.9) is replaced with its Gaussian approximation, which has the same mean state and covariance matrix as does \(p_0(\varvec{x})\). For that, first observe that (2.11) and (2.23) (which is identical to (4.9)) can be written as

Now, let us denote the mean state of \(p_0(\varvec{x})\) as \(\varvec{m}\), and define its covariance matrix in the usual way:

Then, the Gaussian approximation \(p_0^G(\varvec{x})\) for \(p_0(\varvec{x})\) is given by the explicit formula

which results in

The approximations above are then inserted directly into (5.1a) and (5.1b), resulting in explicit time-lagged autocorrelation functions, computed along the long-term time series of solutions of the unperturbed system in (1.9). Observe that the autocorrelations in (5.1a) and (5.1b), computed via the Gaussian approximations in (5.4), simplify somewhat further if the diffusion and perturbation matrices are constant, as only one term out of the three remains in such a case.
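The main ingredient of the Gaussian approximation is simple to compute from a time series. For a Gaussian density with mean \(\varvec{m}\) and covariance matrix \(\varvec{C}\) (the symbol \(\varvec{C}\) is an assumption of this sketch), the gradient of the logarithm of the density is \(-\varvec{C}^{-1}(\varvec{x}-\varvec{m})\). The sketch below estimates the mean and covariance from stored states and returns this gradient as a function; the names are illustrative, and the second derivatives that enter the response formulas are not included.

```python
import numpy as np

def gaussian_score(series):
    """Fit a Gaussian to a time series and return (m, C, score).

    series: array of shape (n_samples, N) with states along a long trajectory.
    score(x) evaluates the gradient of log p_0^G at x, that is,
    -C^{-1} (x - m), for a single state or a batch of states.
    """
    series = np.asarray(series, dtype=float)
    m = series.mean(axis=0)
    c = np.atleast_2d(np.cov(series, rowvar=False))
    c_inv = np.linalg.inv(c)

    def score(x):
        # C is symmetric, so (x - m) @ C^{-1} equals C^{-1} (x - m).
        return -(np.asarray(x, dtype=float) - m) @ c_inv

    return m, c, score
```

Since the score is linear in \(\varvec{x}\), the resulting correlation functions involve only low-order moments of the time series, which is what makes the approximation cheap to evaluate.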

6 Computational Experiments

In this section we investigate the validity of the qG-FDT response formulas in (5.1a) and (5.1b) for both the deterministic and stochastic unperturbed dynamics. For the test-bed dynamical system, we choose the well-known Lorenz 96 model.

6.1 The Rescaled Lorenz 96 Model

The test system used to study the new method of stochastic parameterization here is the rescaled Lorenz 96 system with stochastic forcing. Without the stochastic forcing, it was previously used in [4,5,6,7,8,9, 36] to study the deterministic reduced model parameterization, as well as the average response to deterministic external perturbations. The rescaled Lorenz 96 system with stochastic forcing is given by

with \(1\le i\le N\). Above, \(W_t^i\) denotes the family of N mutually independent Wiener processes, indexed by i, with G being a constant stochastic forcing parameter, so that, in terms of the notations in (1.9), the diffusion matrix is a constant multiple of the identity matrix,

The model has periodic boundary conditions: \(x_{i+N}=x_i\). The parameters \(\bar{x}\) and \(\beta \) are the statistical mean and the standard deviation, respectively, for the corresponding unrescaled Lorenz 96 model [33, 34]

with the same periodic boundary conditions. The rescaling above ensures that, in the absence of the stochastic forcing (that is, \(G=0\)) the Lorenz 96 model in (6.1) has zero mean state and unit standard deviation, and that the time scale of evolution of its solution is roughly the same for different values of F. In the current work, we test the response theory, developed above, for two values of F, \(F=24\) and \(F=8\), and two values of G, \(G=0.5\) and \(G=0\), with the latter corresponding to the purely deterministic unperturbed dynamics. As in the original paper [34], we set \(N=40\).
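The relation between the two models can be sketched in code. The unrescaled right-hand side below is the standard Lorenz 96 model. The rescaled form is an assumption of this sketch: it is what one obtains by substituting \(\bar{x}+\beta x_i\) for the unrescaled variable and rescaling time by \(\beta \), which divides the right-hand side by \(\beta ^2\). Only the deterministic part is shown, and the function names are illustrative.

```python
import numpy as np

def l96_unrescaled(x, forcing):
    """Standard Lorenz 96 right-hand side with periodic boundary conditions."""
    return np.roll(x, 1) * (np.roll(x, -1) - np.roll(x, 2)) - x + forcing

def l96_rescaled(x, forcing, x_bar, beta):
    """Deterministic part of the rescaled model (assumed form, see lead-in).

    x_bar and beta are the mean and standard deviation of the unrescaled
    model for the given forcing; they must be measured beforehand.
    """
    return ((np.roll(x, 1) + x_bar / beta) * (np.roll(x, -1) - np.roll(x, 2))
            - x / beta + (forcing - x_bar) / beta**2)
```

With this form, `l96_rescaled(x, F, x_bar, beta)` equals `l96_unrescaled(x_bar + beta * x, F) / beta**2` for any state, which gives a quick consistency check of an implementation.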

We must note that the right-hand side of (6.1) does not satisfy the requirements imposed on (1.9) in Sect. 1; observe that the deterministic part of the right-hand side of (6.1) is neither bounded nor even uniformly Lipschitz in \({\mathbb R^N}\), which means that the existence of strong solutions to (6.1) is not guaranteed [19, 40]. Nonetheless, below we demonstrate via the numerical simulations that, for the chosen parameters of the system, numerical solutions exist for a long enough time to allow the reliable time-averaging for the response computation.

6.2 Long-Term Statistics of the Unperturbed Dynamics

The computational settings for the numerical simulations were chosen as follows:

- Forward integration scheme: Runge-Kutta 4th order for the deterministic part of the time step, forward Euler for the stochastic part of the time step;

- Time discretization step: \(\Delta t=1/64\);

- Time averaging window: \(T_{av}=\)200,000 time units;

- Spin-up time window (time skipped between the initial condition and the beginning of the time averaging window): \(T_{skip}=\)10,000 time units;

- Initial condition: each initial state \(x_i\), \(1\le i\le N\), is generated at random using normal distribution with zero mean and unit standard deviation.
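The settings listed above can be sketched as follows. This is a minimal illustration, assuming that the stochastic increment is added after the deterministic Runge-Kutta update and that the noise amplitude is a scalar constant; the function names are hypothetical, and the default window lengths are deliberately much shorter than the values listed above so that the example runs quickly.

```python
import numpy as np

def rk4_euler_step(x, drift, noise_amp, dt, rng):
    """Advance dx = drift(x) dt + noise_amp dW by one time step dt.

    Deterministic part: classical 4th order Runge-Kutta.
    Stochastic part: forward Euler increment with variance noise_amp^2 dt.
    """
    k1 = drift(x)
    k2 = drift(x + 0.5 * dt * k1)
    k3 = drift(x + 0.5 * dt * k2)
    k4 = drift(x + dt * k3)
    x_det = x + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)
    return x_det + noise_amp * np.sqrt(dt) * rng.standard_normal(x.shape)

def time_average(observable, drift, noise_amp, n=40, dt=1.0 / 64.0,
                 t_skip=10.0, t_av=50.0, seed=0):
    """Time average of observable(x) after discarding a spin-up window."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(n)  # random initial state, zero mean, unit std
    for _ in range(int(round(t_skip / dt))):
        x = rk4_euler_step(x, drift, noise_amp, dt, rng)
    n_av = int(round(t_av / dt))
    total = 0.0
    for _ in range(n_av):
        x = rk4_euler_step(x, drift, noise_amp, dt, rng)
        total = total + observable(x)
    return total / n_av
```

For example, `time_average(lambda x: np.mean(x**2), lambda x: -x, 1.0)` estimates the stationary variance of a linear test process, which should be close to one-half.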

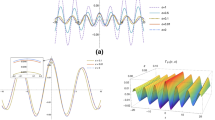

In Fig. 1 we show the histograms of the probability density functions (PDFs), computed by the standard bin-counting, as well as the simplest time-lag autocorrelation functions of the solution with itself, the latter computed numerically as

where x(t) denotes one of the N variables of (6.1). Obviously, due to the translational invariance of (6.1), both the PDFs and correlation functions are identical across different variables. Observe that the PDFs look close to Gaussian, and the time autocorrelation functions decay rather rapidly within the first five units of time. There are two reasons why we need to check the decay of the time autocorrelation functions: first, we need to ensure that the time averaging window \(T_{av}\) is much longer than the decay time scale of C(t) for the adequate statistical averaging; and, second, we need to estimate the time scale of development of the response, since it is directly connected to the time scale of the autocorrelation functions according to (5.1a) and (5.1b).

For a more precise estimate of how close the PDFs in Fig. 1 are to Gaussian, in Table 1 we show the skewness (third moment) and kurtosis (fourth moment) of the PDFs, nondimensionalized by the appropriate powers of the variance. For a purely Gaussian distribution, the skewness is zero, and the kurtosis is 3. Observe that, in this respect, the PDFs for the dynamical regimes with greater F and greater G are closer to Gaussian, and thus we may expect generally better performance of the qG-FDT approximations in (5.1a) and (5.1b) for those regimes.
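The two nondimensional moments can be estimated from a sample as in the following sketch, which assumes that the moments are taken about the sample mean and that the variance powers are 3/2 for the skewness and 2 for the kurtosis (the usual convention, consistent with the Gaussian values quoted above). The function name `skewness_kurtosis` is hypothetical.

```python
import numpy as np

def skewness_kurtosis(samples):
    """Third and fourth central moments, scaled by variance**1.5 and variance**2."""
    x = np.asarray(samples, dtype=float)
    fluct = x - x.mean()
    variance = np.mean(fluct**2)
    skewness = np.mean(fluct**3) / variance**1.5
    kurtosis = np.mean(fluct**4) / variance**2
    return skewness, kurtosis
```

For a large Gaussian sample the returned pair should be close to (0, 3), while a symmetric two-valued sample such as `[-1, 1, -1, 1]` gives exactly (0, 1).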

6.3 The External Perturbations and the Response Function

For testing the response theory developed above, we use a rather simple set-up. We set the stochastic perturbation matrix entirely to zero, except for its single upper-left corner element, which is set to 1:

The product \(\varepsilon \eta (t)\) is set to a small constant \(\eta \) at zero response time:

where we choose two values for \(\eta \): \(\eta =0.05\), and \(\eta =0.1\). Thus, the stochastic perturbation is applied to the first variable of the model, \(x_1\), and constitutes a scalar Wiener noise \(\eta W_t\).

As far as the choice of the response function \(A(\varvec{x})\) is concerned, it is obvious that monitoring a single scalar quantity (as presented in the theory above) is not sufficient to evaluate the detailed impact of the external stochastic perturbation, even of the simplest type we chose above, on the model. Thus, we choose to monitor the response to the stochastic perturbation of each model variable instead. More precisely, instead of monitoring one response function for the whole system, we monitor N of those, separately for each model variable:

\[ A_i(\varvec{x})=x_i^2,\qquad 1\le i\le N, \tag{6.7} \]

where the square of a variable \(x_i\) is chosen (rather than the variable itself) because it is likely to respond more substantially to a stochastic perturbation. If we denote the set of all \(A_i(\varvec{x})\) as the vector \(\varvec{A}(\varvec{x})\), the latter can be expressed concisely as the Hadamard product of \(\varvec{x}\) with itself:

\[ \varvec{A}(\varvec{x})=\varvec{x}\circ \varvec{x}. \tag{6.8} \]

Note that in our previous works [1,2,3, 7, 9,10,11,12, 38], where deterministic perturbations were studied, the typical choice of \(A(\varvec{x})\) was \(\varvec{x}\) itself. Here, however, we prefer (6.8) because \(\varvec{x}\) by itself is unlikely to respond to a stochastic perturbation in a well-articulated fashion.

The set-up above allows us to compute the response of all \(x_i^2\), \(1\le i\le N\), separately, to a small constant stochastic forcing at the first variable, \(x_1\), which is switched on at zero time. Due to the constant nature of the stochastic perturbation, the response formulas are simplified as

or

depending on the type of stochastic forcing, where \(\varvec{R}_1(s)\) and \(\varvec{R}_2(s)\) are the corresponding vectorized leading order qG-FDT response operators from (5.1a) and (5.1b) for the vector response function in (6.8). In what follows, we display the operators \(\mathcal R_1(t)\) or \(\mathcal R_2(t)\) (again, depending on the type of stochastic perturbation) computed at the times \(t=0.5,1,2,4\). We compare these operators with the responses measured directly via ensemble simulations, normalized by either \(\eta \) (for comparison with \(\mathcal R_1\)) or \(\eta ^2\) (for comparison with \(\mathcal R_2\)). The ensemble simulations are performed with an ensemble size of 20,000 members (which were sampled from the same long-term trajectory of the unperturbed system as was used to compute the statistics and the qG-FDT response), with 1000 realizations of the Wiener process carried out for each member. Each such ensemble simulation took several hours on a 16-processor Intel Xeon server, running fully in parallel. In contrast, each qG-FDT response computation took only a few minutes using a single CPU core.

In addition to the plots of the response at different times, we show the relative errors and collinearity correlations between the actually measured response, and the response predicted by the qG-FDT formulas in (5.1a) or (5.1b), depending on the type of noise perturbation. The relative error is defined as the ratio of the Euclidean norm of the difference between the qG-FDT response and the normalized measured response, over the Euclidean norm of the qG-FDT response:

The collinearity correlation is defined as the Euclidean inner product of the qG-FDT response with one of the measured responses, normalized by the product of their corresponding Euclidean norms:

It is easy to see that the collinearity correlation achieves its maximum value of 1 if and only if one response is an exact positive multiple of the other.
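The two measures defined above in words translate directly into the following sketch, where `predicted` stands for the qG-FDT response vector and `measured` for the normalized directly measured response vector at a given response time. The function names are hypothetical.

```python
import numpy as np

def relative_error(predicted, measured):
    """Norm of (predicted - measured) divided by the norm of predicted."""
    predicted = np.asarray(predicted, dtype=float)
    measured = np.asarray(measured, dtype=float)
    return np.linalg.norm(predicted - measured) / np.linalg.norm(predicted)

def collinearity(predicted, measured):
    """Euclidean inner product normalized by the product of the two norms."""
    predicted = np.asarray(predicted, dtype=float)
    measured = np.asarray(measured, dtype=float)
    norms = np.linalg.norm(predicted) * np.linalg.norm(measured)
    return np.dot(predicted, measured) / norms
```

Note that the two measures are complementary: a measured response equal to twice the predicted one has a relative error of 1 but a collinearity correlation of 1, since the collinearity is insensitive to the magnitude of the response and detects only its direction.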

6.4 Perturbing the Existing Noise Term

In this section we study the response of two dynamical regimes of the stochastically forced (\(G=0.5\)) rescaled Lorenz 96 model in (6.1). The first regime corresponds to the constant forcing \(F=24\), and is, according to Fig. 1 and Table 1, the closest to Gaussian of all regimes examined in Sect. 6.2. In Fig. 2 we show the response of the function (6.8) when the existing stochastic matrix is perturbed as described above in (6.5)–(6.6), with \(\eta \) set to 0.05 and 0.1. With the help of the periodicity of (6.1), the response variables in Fig. 2, as well as in all subsequent figures, are displayed so that the variable \(x_1\) (on which the perturbation occurs) is at the center of the plot, with \(x_2\) immediately to the right, and \(x_N\) to the left.

Observe that the precision of the qG-FDT response prediction in this case is truly striking: there is hardly any visual difference between the qG-FDT prediction and the directly measured responses for both \(\eta =0.05\) and \(\eta =0.1\). Additionally, in Table 2 we show the relative errors and collinearity correlations for the qG-FDT prediction and the two directly measured responses for the regime with \(F=24\) and \(G=0.5\). Observe that the relative errors are about 10–15% for all displayed response times, and the collinearity correlations exceed 99%.

In Fig. 3 we show the results for the second regime where we perturb the existing stochastic forcing. In this regime, the stochastic diffusion matrix is set to the same value as before, but the constant deterministic forcing F is set to \(F=8\). According to Fig. 1 and Table 1, this regime is the second closest to Gaussian, which is clearly manifested in the difference between the qG-FDT prediction and the directly measured responses with \(\eta =0.05\) and \(\eta =0.1\), shown in Fig. 3. Namely, this time there is a visible discrepancy between the qG-FDT response prediction and both directly measured responses in the second variable to the right of the one where the perturbation is applied. Table 3 reinforces this visual perception, indicating relative errors between 15 and 26%. Surprisingly, the collinearity correlations between the qG-FDT prediction and the directly measured responses do not suffer much, still remaining at about 97–98% of the maximum possible.

6.5 Perturbing with a New Noise

Here we show the results of numerical simulations where the external stochastic perturbation is introduced into the system via a separate noise realization. In this situation, we can study both the fully deterministic and the stochastically forced dynamics of the rescaled Lorenz 96 model in (6.1); together with two different values of the forcing F, this constitutes four different combinations of parameters: (\(F=24\), \(G=0\)), (\(F=8\), \(G=0\)), (\(F=24\), \(G=0.5\)), and (\(F=8\), \(G=0.5\)).

As we mentioned before, the qG-FDT approximation in (5.1b) should not formally be valid for the deterministic unperturbed dynamics with \(G=0\), since there is no guarantee that the invariant measure \(\mu \) of the unperturbed dynamics is even continuous with respect to the Lebesgue measure, let alone possesses a Gaussian density. However, practice showed in the past with deterministic perturbations [9,10,11,12, 38] that the quasi-Gaussian formula for the deterministic invariant measure can in fact be quite a reasonable approximation, especially if the unperturbed dynamics is strongly chaotic and rapidly mixing. This is what we confirm here for stochastic external perturbations as well.

To model the ideal response in the stochastically forced unperturbed regimes with \(G=0.5\), we apply an independent realization of the unperturbed stochastic forcing, with its diffusion matrix, to each of the 20,000 ensemble members, while modeling the stochastic perturbation via another 1000 independent realizations of the Wiener noise for each ensemble simulation as before. This is done to retain the same computational expense as for the other studied cases, which, of course, leads to statistical undersampling of the expectation over the noise realizations. Indeed, in the case of stochastic perturbations independent of the unperturbed noise, the expectation must be computed over a comparable number of realizations separately for the unperturbed noise and for the stochastic perturbation to retain comparable averaging fidelity. Still, we find that even in this simplified set-up the qG-FDT approximation shows good agreement with the measured response, at least for sufficiently short response times.

In Fig. 4 and Table 4 we show the qG-FDT prediction together with the directly measured response with perturbations \(\eta =0.05\) and \(\eta =0.1\), for the dynamical regime of (6.1) with \(F=24\) and \(G=0\) (fully deterministic). Observe that, in comparison with the previously studied regimes, the quality of the qG-FDT prediction tends to deteriorate rather substantially, as the relative errors increase to 20–33%, and the collinearity correlations drop to 95–98% for the response times \(t\le 2\). However, what we can also observe is the large discrepancy between the two directly measured responses for different perturbation magnitudes, which develops at \(t=4\). Note that no such discrepancy was observed for the stochastic unperturbed dynamics—both directly measured responses, for \(\eta =0.05\) and \(\eta =0.1\), were virtually identical in Figs. 2 and 3. This could be a manifestation of the developing structural instability of the attractor of the system as the dynamics transition from purely deterministic to stochastic.

In Fig. 5 and Table 5 we show the results for the regime with \(F=8\), and all other parameters set as above. Here, observe that the quality of the qG-FDT prediction deteriorates even further, as the relative errors increase to 36–53%, and the collinearity correlations drop to 87–93% for the response times \(t\le 2\). This is to be expected, however, since this regime is the farthest from Gaussian, according to Fig. 1 and Table 1. The discrepancy between the directly measured responses for perturbations of different magnitudes, which could be attributed to the developing structural instability of the attractor, also manifests itself at time \(t=4\), as for the previously studied deterministic regime with \(F=24\).

In Fig. 6 and Table 6 we show the qG-FDT prediction together with the directly measured response with perturbations \(\eta =0.05\) and \(\eta =0.1\), for the dynamical regime of (6.1) with \(F=24\) and \(G=0.5\), where the stochastic perturbation is independent of the unperturbed noise. It is interesting that, in comparison with the previously studied regimes, the quality of the qG-FDT prediction tends to be good for short response times, \(t=0.5\) and \(t=1\), with relative errors about 8–16%, and collinearity correlations about 98–99%. However, for longer response times the errors appear to grow faster than in any of the previously considered cases.

Just as for the deterministic unperturbed dynamics above, here we can also observe the large discrepancy between the two directly measured responses for different perturbation magnitudes, which develops at \(t=2\). This is likely the manifestation of statistical undersampling mentioned above, since the size of the statistical ensemble is unchanged even though an additional independent stochastic forcing is introduced into the dynamics.

In Fig. 7 and Table 7 we show the results for the regime with \(F=8\), \(G=0.5\), and a statistically independent stochastic perturbation. Here, the observations appear to be consistent with the previous results. Namely, the relative errors (15–33%) for the initial times \(t=0.5\) and \(t=1\) are worse than those for the previously considered regime, \(F=24\), \(G=0.5\), likely because the qG-FDT approximation is less accurate for the present regime, whose unperturbed statistics are more strongly non-Gaussian. However, the errors for longer times, \(t=2\) and \(t=4\), are smaller than those for the regime with \(F=24\), again likely because the statistical undersampling does not manifest as strongly in a less chaotic regime. The collinearity correlations appear to follow the same trend as the relative errors.

7 Summary

In this work we develop a fluctuation-response theory and test a computational algorithm for the leading order response of chaotic and stochastic dynamical systems to stochastic perturbations. The key property of this approach is that it allows one to estimate the average response to an external stochastic perturbation from a certain combination of the time-lagged averages of the unperturbed system. For dynamical systems that are already stochastic, we consider two cases: first, where the existing stochastic term is perturbed; and, second, where a new stochastic perturbation is introduced. These two cases lead to different leading order average response formulas. We also show that, under appropriate assumptions, the resulting formulas for the leading order response to a stochastic perturbation for the deterministic and stochastic unperturbed dynamics are equivalent. For the practical computation of the leading order response approximation, we derive the approximate quasi-Gaussian response formulas, where the probability density of the unperturbed statistical state is assumed to be Gaussian. We numerically investigate the validity of the quasi-Gaussian response formulas for stochastic perturbations of both the deterministic and the stochastically forced Lorenz 96 system. We find that the quasi-Gaussian response formulas appear to be more effective for the regimes where the unperturbed dynamics is already stochastic. Additionally, for the stochastic perturbations of the deterministic Lorenz 96 model, we observe what seems to be a manifestation of structural instability of the system’s attractor under a stochastic perturbation.

Notes

The author privately communicated that his response formula applies to a perturbation noise in the Stratonovich form.

References

Abramov, R.V.: Short-time linear response with reduced-rank tangent map. Chin. Ann. Math. 30B(5), 447–462 (2009)

Abramov, R.V.: Approximate linear response for slow variables of deterministic or stochastic dynamics with time scale separation. J. Comput. Phys. 229(20), 7739–7746 (2010)

Abramov, R.V.: Improved linear response for stochastically driven systems. Front. Math. China 7(2), 199–216 (2012)

Abramov, R.V.: A simple linear response closure approximation for slow dynamics of a multiscale system with linear coupling. Multiscale Model. Simul. 10(1), 28–47 (2012)

Abramov, R.V.: Suppression of chaos at slow variables by rapidly mixing fast dynamics through linear energy-preserving coupling. Commun. Math. Sci. 10(2), 595–624 (2012)

Abramov, R.V.: A simple closure approximation for slow dynamics of a multiscale system: nonlinear and multiplicative coupling. Multiscale Model. Simul. 11(1), 134–151 (2013)

Abramov, R.V.: Linear response of the Lyapunov exponent to a small constant perturbation. Commun. Math. Sci. 14(4), 1155–1167 (2016)

Abramov, R.V.: A simple stochastic parameterization for reduced models of multiscale dynamics. Fluids 1(1), 2 (2016)

Abramov, R.V., Kjerland, M.: The response of reduced models of multiscale dynamics to small external perturbations. Commun. Math. Sci. 14(3), 831–855 (2016)

Abramov, R.V., Majda, A.J.: Blended response algorithms for linear fluctuation-dissipation for complex nonlinear dynamical systems. Nonlinearity 20, 2793–2821 (2007)

Abramov, R.V., Majda, A.J.: New approximations and tests of linear fluctuation-response for chaotic nonlinear forced-dissipative dynamical systems. J. Nonlinear Sci. 18(3), 303–341 (2008)

Abramov, R.V., Majda, A.J.: New algorithms for low frequency climate response. J. Atmos. Sci. 66, 286–309 (2009)

Bell, T.: Climate sensitivity from fluctuation dissipation: some simple model tests. J. Atmos. Sci. 37(8), 1700–1708 (1980)

Birkhoff, G.D.: Proof of the ergodic theorem. Proc. Natl. Acad. Sci. USA 17(12), 656–660 (1931)

Carnevale, G., Falcioni, M., Isola, S., Purini, R., Vulpiani, A.: Fluctuation-response in systems with chaotic behavior. Phys. Fluids A 3(9), 2247–2254 (1991)

Cohen, B., Craig, G.: The response time of a convective cloud ensemble to a change in forcing. Q. J. R. Meteorol. Soc. 130(598), 933–944 (2004)

Eckmann, J.-P., Ruelle, D.: Ergodic theory of chaos and strange attractors. Rev. Mod. Phys. 57(3), 617–656 (1985)

Evans, D., Morriss, G.: Statistical Mechanics of Nonequilibrium Liquids. Academic Press, New York (1990)

Gikhman, I.I., Skorokhod, A.V.: Introduction to the Theory of Random Processes. Courier Dover Publications, New York (1969)

Gikhman, I.I., Skorokhod, A.V.: The Theory of Stochastic Processes I. Classics in Mathematics. Springer, New York (2004)

Gritsun, A.: Fluctuation-dissipation theorem on attractors of atmospheric models. Russ. J. Numer. Math. Model. 16(2), 115–133 (2001)

Gritsun, A., Branstator, G.: Climate response using a three-dimensional operator based on the fluctuation-dissipation theorem. J. Atmos. Sci. 64, 2558–2575 (2007)

Gritsun, A., Branstator, G., Dymnikov, V.: Construction of the linear response operator of an atmospheric general circulation model to small external forcing. Numer. Anal. Math. Model. 17, 399–416 (2002)

Gritsun, A., Branstator, G., Majda, A.J.: Climate response of linear and quadratic functionals using the fluctuation dissipation theorem. J. Atmos. Sci. 65, 2824–2841 (2008)

Gritsun, A., Dymnikov, V.: Barotropic atmosphere response to small external actions. Theory and numerical experiments. Atmos. Ocean Phys. 35(5), 511–525 (1999)

Itô, K.: Stochastic integral. Proc. Imp. Acad. Tokyo 20, 519–524 (1944)

Itô, K.: On stochastic differential equations. Mem. Am. Math. Soc. 4, 1–51 (1951)

Kubo, R.: Statistical mechanical theory of irreversible processes I: general theory and simple applications to magnetic and conduction problems. J. Phys. Soc. Jpn. 12, 507–586 (1957)

Kubo, R.: The fluctuation-dissipation theorem. Rep. Prog. Phys. 29, 255–284 (1966)

Kubo, R., Toda, M., Hashitsume, N.: Statistical Physics II: Nonequilibrium Statistical Mechanics. Springer, New York (1985)

Kunita, H.: Stochastic Flows and Stochastic Differential Equations. Cambridge University Press, Cambridge (1997)

Leith, C.: Climate response and fluctuation-dissipation. J. Atmos. Sci. 32, 2022–2025 (1975)

Lorenz, E.: Predictability: a problem partly solved. In: Proceedings of the Seminar on Predictability, ECMWF, Shinfield Park, Reading, England (1996)

Lorenz, E., Emanuel, K.: Optimal sites for supplementary weather observations. J. Atmos. Sci. 55, 399–414 (1998)

Lucarini, V.: Stochastic perturbations to dynamical systems: a response theory approach. J. Stat. Phys. 146, 774–786 (2012)

Lucarini, V., Sarno, S.: A statistical mechanical approach for the computation of the climatic response to general forcings. Nonlinear Process. Geophys. 18, 7–28 (2011)

Majda, A.J., Abramov, R.V., Gershgorin, B.: High skill in low frequency climate response through fluctuation dissipation theorems despite structural instability. Proc. Natl. Acad. Sci. USA 107(2), 581–586 (2010)

Majda, A.J., Abramov, R.V., Grote, M.J.: Information theory and stochastics for multiscale nonlinear systems, vol 25 of CRM Monograph Series of Centre de Recherches Mathématiques, Université de Montréal. American Mathematical Society (2005). ISBN 0-8218-3843-1

Øksendal, B.: Stochastic Differential Equations: An Introduction with Applications. Universitext, 6th edn. Springer, New York (2010)

Pavliotis, G.: Stochastic Processes and Applications. Texts in Applied Mathematics, vol. 60. Springer, New York (2014)

Risken, H.: The Fokker-Planck Equation, 2nd edn. Springer, New York (1989)

Ruelle, D.: Differentiation of SRB states. Commun. Math. Phys. 187, 227–241 (1997)

Ruelle, D.: General linear response formula in statistical mechanics, and the fluctuation-dissipation theorem far from equilibrium. Phys. Lett. A 245, 220–224 (1998)

Ruelle, D.: Nonequilibrium statistical mechanics near equilibrium: computing higher order terms. Nonlinearity 11, 5–18 (1998)

Young, L.-S.: What are SRB measures, and which dynamical systems have them? J. Stat. Phys. 108(5–6), 733–754 (2002)

Acknowledgements

The work was supported by the Office of Naval Research Grant N00014-15-1-2036.

Cite this article

Abramov, R.V. Leading Order Response of Statistical Averages of a Dynamical System to Small Stochastic Perturbations. J Stat Phys 166, 1483–1508 (2017). https://doi.org/10.1007/s10955-017-1721-2
