Abstract

We consider asymptotics of the correlation functions of characteristic polynomials corresponding to random weighted \(G(n, \frac{p}{n})\) Erdős–Rényi graphs with Gaussian weights in the case of finite p and also when \(p \rightarrow \infty \). It is shown that for finite p the second correlation function demonstrates a kind of transition: when \(p < 2\) it factorizes in the limit \(n \rightarrow \infty \), while for \(p > 2\) there appears an interval \((-\lambda _*(p), \lambda _*(p))\) such that for \(\lambda _0 \in (-\lambda _*(p), \lambda _*(p))\) the second correlation function behaves like that for the Gaussian unitary ensemble (GUE), while for \(\lambda _0\) outside the interval the second correlation function still factorizes. For \(p \rightarrow \infty \) there is also a threshold in the behavior of the second correlation function near \(\lambda _0 = \pm 2\): for \(p \ll n^{2/3}\) the second correlation function factorizes, whereas for \(p \gg n^{2/3}\) it behaves like that for GUE. For any rate of \(p \rightarrow \infty \) the asymptotics of correlation functions of any even order for \(\lambda _0 \in (-2, 2)\) coincide with those for GUE.


1 Introduction and Main Results

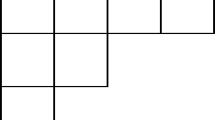

Consider an ensemble of hermitian \(n \times n\) random matrices of the form

where

and \(\{w_{jk}^{(1)}, w_{jk}^{(2)}, w_{ll} : 1 \le j < k \le n, 1 \le l \le n\}\) are i.i.d. random variables with zero mean such that

Here and everywhere below \(\mathbf {E}\) denotes the expectation with respect to all random variables. \(\{d_{jk} : j \le k\}\) are also independent of each other and of \(w_{jk}^{(1)}, w_{jk}^{(2)}, w_{ll}\).
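For numerical experiments it may help to sample a single matrix of this type. The sketch below is an illustration only and rests on assumptions that are not visible in the text above: \(d_{jk} = p^{-1/2}\) with probability p/n and 0 otherwise, and \(w_{jk} = (w_{jk}^{(1)} + i w_{jk}^{(2)})/\sqrt{2}\) with standard Gaussian \(w_{jk}^{(1)}, w_{jk}^{(2)}, w_{ll}\). This normalization is chosen so that \(\mathbf {E}|M_{jk}|^2 = 1/n\). The function name `sample_sparse_hermitian` is hypothetical.

```python
import random


def sample_sparse_hermitian(n, p, seed=None):
    """Sample one n x n Hermitian matrix with entries d_jk * w_jk.

    Assumed normalization (not taken from the paper's formulas):
      d_jk = p**-0.5 with probability p/n, else 0;
      w_jk = (w1 + i*w2)/sqrt(2) off the diagonal, real w_ll on it,
      with w1, w2, w_ll standard Gaussian.
    """
    rng = random.Random(seed)
    scale = p ** -0.5
    M = [[0j] * n for _ in range(n)]
    for j in range(n):
        for k in range(j, n):
            # Bernoulli(p/n) indicator of the edge {j, k}
            if rng.random() >= p / n:
                continue
            if j == k:
                M[j][j] = complex(scale * rng.gauss(0.0, 1.0), 0.0)
            else:
                w = complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) / 2 ** 0.5
                M[j][k] = scale * w
                M[k][j] = (scale * w).conjugate()  # enforce M = M^*
    return M
```

With `p = n` every entry is kept, so under the stated assumptions the sample is a GUE matrix with entry variance 1/n; with finite `p` each row has on average p nonzero entries.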

These matrices are known as “weighted” adjacency matrices of random Erdős–Rényi \(G(n, \frac{p}{n})\) graphs, with \(\{d_{jk}\}\) corresponding to the standard adjacency matrix and \(\{w_{jk}\}\) being the set of independent weights, which we take to be Gaussian. These matrices have been widely discussed in recent years since they demonstrate a kind of interpolation between a “sparse” matrix with finite p, when there is only a finite number of nonzero elements in each row, and the matrix with \(p = n\), which coincides with the Gaussian unitary ensemble (GUE). The results on the convergence of normalized eigenvalue counting measure

of these matrices in the case of finite p were obtained in [20, 21] on the physical level of rigour, then in [1] for \(w_{jk} = 1\) and in [14] for arbitrary \(\{w_{jk}\}\) independent of \(\{d_{jk}\}\) and having four moments.

It was also shown that for \(p \rightarrow \infty \) the limiting eigenvalue distribution coincides with GUE

while for finite p the limiting measure is a solution of a rather complicated nonlinear integral equation which is difficult to analyze. It is known that the support of the limiting measure (spectrum) is the whole real line. The Central Limit Theorem for linear eigenvalue statistics was proven in [26] for finite p and in [27] for \(p \rightarrow \infty \). For the local regime, the existence of a critical value \(p_c > 1\) was conjectured (see [5]) such that for \(p > p_c\) the eigenvalues are strongly correlated and are characterized by GUE matrix statistics, whereas for \(p < p_c\) the eigenvalues are uncorrelated and follow Poisson statistics. The conjecture was confirmed by numerical calculations [15] and by the supersymmetry approach (SUSY) [8, 18] on the physical level of rigour. Notice that the results of the present paper confirm the existence of a similar threshold for the second correlation function of characteristic polynomials. Rigorous results for the local eigenvalue statistics were obtained recently in [4, 10]. First for \(p \gg n^{2/3}\) and then for \(p \gg n^\varepsilon \) with any \(\varepsilon > 0\) it was shown that the spectral correlation functions of sparse hermitian random matrices in the bulk of the spectrum converge in the weak sense to those of GUE. For the edge of the spectrum, it was proved in [12] that for \(p \gg n^{2/3}\) the limiting probability \(P\{\max \limits _j\lambda _j^{(n)} > 2 + x/n^{2/3}\}\) admits a certain universal upper bound, whereas the result of [13] implies that for \(p \ll n^{1/5}\) the limiting probability \(P\{\max \limits _j\lambda _j^{(n)} > 2 + x/p\}\) is zero. Note that more advanced results for the edge eigenvalue statistics were obtained in [28] for so-called random d-regular graphs. It was shown that if \(3 \le d \ll n^{2/3}\) and \(w_{jk} = \pm 1\) then the scaled largest eigenvalue of (1.1) converges in distribution to the Tracy–Widom law.

The correlation functions of characteristic polynomials formally do not characterize the local eigenvalue statistics. However, from the SUSY point of view, their analysis is similar to that for spectral correlation functions. Since the analysis of correlation functions of characteristic polynomials is usually simpler than that of spectral correlation functions, it is often the first step in studies of local regimes.

The moments of the characteristic polynomials have been studied for many random matrix ensembles: for the Gaussian orthogonal ensemble in [6], for the circular unitary ensemble in [7, 11], for \(\beta \)-models with \(\beta = 2\) in [2, 29] and with \(\beta = 1,\,2,\,4\) in [3, 17]. Götze and Kösters in [9] studied the second order correlation function of the characteristic polynomials of the Wigner matrix with arbitrarily distributed entries possessing fourth moments, by the method of generating functions. The result was soon generalized to the correlation functions of any even order by T. Shcherbina in [22], where a method was proposed that allows one to apply the SUSY technique (or the Grassmann integration technique) to the correlation functions of characteristic polynomials of random matrices with non-Gaussian entries. The proposed method proved to be rather powerful and has since been successfully applied to characteristic polynomials of sample covariance matrices (see [23]) and band matrices [24, 25].

In the present paper we apply the method of [22] to study characteristic polynomials of sparse matrices. To be more precise, let us introduce our main definitions. The mixed moments or the correlation functions of the characteristic polynomials have the form

where \(\Lambda = {\text {diag}}\{\lambda _1, \ldots , \lambda _{2m}\}\) are real or complex parameters which may depend on n.

We are interested in the asymptotic behavior of (1.5) for matrices (1.1), as \(n \rightarrow \infty \), for

where \(\lambda _0\), \(\{x_j\}_{j = 1}^{2m}\) are real numbers and notation \(j = \overline{1, 2m}\) means that j varies from 1 to 2m.

Set also

Theorem 1

Let an ensemble of sparse random matrices be defined by (1.1)–(1.3) for finite p and let \(w_{jk}^{(1)},\, w_{jk}^{(2)}\) be Gaussian random variables. Then the correlation function of two characteristic polynomials (1.5) for \(m = 1\) satisfies the asymptotic relations

(i) for \(\lambda _0 \in (-\lambda _*(p), \lambda _*(p))\)

$$\begin{aligned} \lim _{n \rightarrow \infty } D_2\left( \Lambda \right) = \frac{\sin ((x_1-x_2)\sqrt{\lambda _*(p)^2-\lambda _0^2}/2)}{(x_1-x_2)\sqrt{\lambda _*(p)^2-\lambda _0^2}/2}; \end{aligned}$$

(ii) for \(\lambda _0 \notin (-\lambda _*(p), \lambda _*(p))\)

$$\begin{aligned} \lim _{n \rightarrow \infty } D_2\left( \Lambda \right) = 1, \end{aligned}$$

where \(D_2\) and \(\lambda _*(p)\) are defined in (1.6).

Remarks

1. The theorem shows that the second order correlation function has a threshold \(p = 2\): if \(p > 2\) there are two types of asymptotic behavior, cases (i) and (ii), whereas if \(p \le 2\) there is only one type, case (ii).

2. If \(\lambda _0\) is allowed to depend on n, the asymptotic regimes (i) and (ii) agree with each other (see Lemma 4).

3. Note that \(\lambda _*(p) \rightarrow 2\) as \(p \rightarrow \infty \). Since for \(p \rightarrow \infty \) the limiting spectrum is \([-2, 2]\) (see (1.4)), one expects GUE behavior for all \(\lambda _0\) in its interior \((-2, 2)\); Theorem 2 confirms this.
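The two regimes of Theorem 1 can be tabulated with a short sketch. The formula used for \(\lambda _*(p)\) is an assumption: it is inferred from \(b_2 = -\sqrt{2(n-p)/(pn)} \rightarrow -\sqrt{2/p}\) and from the condition \(\lambda _0^2 > 4 - 4b_2^2\) of Lemma 3, which suggest \(\lambda _*(p)^2 = 4 - 8/p\) for \(p > 2\); definition (1.6) remains authoritative. The function names are hypothetical.

```python
import math


def lambda_star(p):
    """Assumed half-width of the GUE-like interval: sqrt(4 - 8/p) for p > 2, else 0."""
    return math.sqrt(4.0 - 8.0 / p) if p > 2 else 0.0


def d2_limit(lam0, x1, x2, p):
    """Limit of D_2 according to Theorem 1 (cases (i) and (ii))."""
    ls = lambda_star(p)
    if abs(lam0) >= ls:
        return 1.0  # case (ii): factorization
    u = (x1 - x2) * math.sqrt(ls ** 2 - lam0 ** 2) / 2.0
    return 1.0 if u == 0 else math.sin(u) / u  # case (i): sine kernel
```

Under this assumption `lambda_star(p)` vanishes for \(p \le 2\), so only case (ii) occurs there, and it tends to 2 as p grows, in line with Remarks 1 and 3.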

Theorem 2

Let an ensemble of diluted random matrices be defined by (1.1)–(1.3), \(p \rightarrow \infty \) and let \(w_{jk}^{(1)},\, w_{jk}^{(2)}\) be Gaussian random variables. Then the correlation function of characteristic polynomials (1.5) for \(\lambda _0 \in (-2, 2)\) satisfies the asymptotic relation

with \(X = {{\mathrm{diag}}}\{x_1, \ldots , x_{2m}\}\) and

where \(\Delta (y_1, \ldots , y_m)\) is the Vandermonde determinant of \(y_1, \ldots , y_m\).

Notice that \(\hat{S}_{2m}(I)\) is well defined because the difference of the rows \(j_1\) and \(j_2\) in the determinant in (1.7) is of order \(O(x_{j_1} - x_{j_2})\), as \(x_{j_1} \rightarrow x_{j_2}\). The same is true for columns.

To formulate our last result we introduce the Airy kernel

where Ai(x) is the Airy function.

Theorem 3

Let an ensemble of diluted random matrices be defined by (1.1)–(1.3), \(p \rightarrow \infty \), and let \(w_{jk}^{(1)},\, w_{jk}^{(2)}\) be Gaussian random variables. Then the correlation function of two characteristic polynomials (1.5) for \(m = 1\) satisfies the asymptotic relations

(i) if \(\frac{n^{2/3}}{p} \rightarrow \infty \)

$$\begin{aligned} \lim _{n \rightarrow \infty } D_2(2I + n^{-2/3}X) = 1; \end{aligned}$$

(ii) if \(\frac{n^{2/3}}{p} \rightarrow c\)

$$\begin{aligned} \lim _{n \rightarrow \infty } D_2(2I + n^{-2/3}X) = \frac{\mathbb {A}(x_1 + 2c, x_2 + 2c)}{\sqrt{\mathbb {A}(x_1 + 2c, x_1 + 2c) \mathbb {A}(x_2 + 2c, x_2 + 2c)}}, \end{aligned}$$

where \(D_2\) is defined in (1.6) and \(\mathbb {A}\) is defined in (1.8). For \(\lambda _0 = -2\) similar assertions are also valid.

Remarks

1. Notice that the case \(p \gg n^{2/3}\) corresponds to the case \(c = 0\) in (ii).

2. The results of Theorem 3 are in good agreement with the results of [12, 13] in the sense that the asymptotic behavior changes when p crosses the rate \(n^{2/3}\). However, in [13] it is argued that in the case \(p \ll n^{2/3}\) the appropriate scale is \(p^{-1}\) instead of \(n^{-2/3}\). We postpone the study of \(F_2\) with the scaling \(p^{-1}\), as well as the related study of \(F_2\) near \(\lambda _*(p)\) for finite p, to subsequent publications.

The paper is organized as follows. In Sect. 2 we obtain a convenient integral representation for \(F_{2m}\) using integration over the Grassmann variables and the Harish–Chandra/Itzykson–Zuber formula for integrals over the unitary group. Sections 3, 4 and 5 deal with the proofs of Theorems 1, 2 and 3, respectively. The proofs are based on the steepest descent method applied to the integral representation.

Notice also that everywhere below we denote by C various n-independent constants, which can be different in different formulas.

2 Integral Representation

To formulate the result of this section, we introduce the following notation.

- $$\begin{aligned} \Delta ({{\mathrm{diag}}}\{y_j\}_{j = 1}^{k}) = \Delta (\{y_j\}_{j = 1}^{k})\; \text {is the Vandermonde determinant of } \{y_j\}_{j = 1}^{k}; \end{aligned}$$(2.1)

- \({{\mathrm{End}}}V\) is the set of linear operators on a linear space V;

- $$\begin{aligned} I_{n, k} = \Big \{\alpha \in \mathbb {Z}^k | 1 \le \alpha _1 < \ldots < \alpha _k \le n\Big \}. \end{aligned}$$(2.2)

  The lexicographical order on \(I_{n, k}\) is denoted by \(\prec \);

- \(\mathcal {H}_{2m, l}\) is the space of self-adjoint operators in \({\text {End}} \Lambda ^l \mathbb {C}^{2m}\) (see [30, Chapter 8.4] for definition of \(\Lambda ^q V\));

- $$\begin{aligned} dB = dP_l(B) = \prod \limits _{\alpha \in I_{2m, l}} dB_{\alpha \alpha } \prod \limits _{\alpha \prec \beta } d\mathfrak {R}B_{\alpha \beta } d\mathfrak {I}B_{\alpha \beta } \end{aligned}$$(2.3)

  is a measure on \(\mathcal {H}_{2m, l}\). \(B_{\alpha \beta }\) denotes the corresponding entry of the matrix of B in some basis. It is easy to see that \(dP_l(UBU^*) = dP_l(B)\) for any unitary matrix U, so the definition is correct.

- $$\begin{aligned} H_m = \prod \limits _{l = 2}^{2m} \mathcal {H}_{2m, l}; \end{aligned}$$(2.4)

- Set also

  $$\begin{aligned} A_{2m}(G_1, G) = \sum \limits _{\begin{array}{c} k_1 + 2k_2 + \ldots + 2mk_{2m} = 2m \\ k_j \in \mathbb {Z}_+ \end{array}}(2m)!\prod \limits _{q = 1}^{2m} \frac{1}{(q!)^{k_q}k_q!} \bigwedge \limits _{s = 1}^{2m} (b_{s}G_{s} - \tilde{b}_sI)^{\wedge k_{s}} \end{aligned}$$(2.5)

  where \(\{b_s\}_{s = 1}^\infty \), \(\{\tilde{b}_s\}_{s = 1}^\infty \) are the sequences of certain n, p-dependent numbers and

  $$\begin{aligned} G = (G_2, \ldots , G_{2m}), \quad G_l \in {{\mathrm{End}}}\Lambda ^l \mathbb {C}^{2m}, \quad l = \overline{1,2m}. \end{aligned}$$

  The exterior product \(A \wedge B\) of operators is defined in Sect. 1 in Appendix. Since \(\dim \Lambda ^{2m} \mathbb {C}^{2m} = 1\), the space \({\text {End}} \Lambda ^{2m} \mathbb {C}^{2m}\) may be identified with \(\mathbb {C}\). In (2.5) \(\bigwedge \limits _{s = 1}^{2m} (b_{s}G_{s} - \tilde{b}_sI)^{\wedge k_{s}}\) is understood as \(\Big \{\bigwedge \limits _{s = 1}^{2m} (b_{s}G_{s} - \tilde{b}_sI)^{\wedge k_{s}}\Big \}_{1\ldots n;1\ldots n}\).

- $$\begin{aligned} C_n^{(2m)}(X) = \pi ^m \left( \frac{1}{2} \right) ^{\frac{1}{2}(2^{2m} - 1)} \left( \frac{n}{\pi } \right) ^{\frac{1}{2}\left( \left( {\begin{array}{c}4m\\ 2m\end{array}}\right) - 1\right) } \exp \Big \{\frac{1}{2n}\sum \limits _{j = 1}^{2m} x_j^2\Big \}. \end{aligned}$$(2.6)
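The index set \(I_{n, k}\) of (2.2) and the size of the spaces \(\mathcal {H}_{2m, l}\) are easy to enumerate, which may help when reading the formulas below. The following is a minimal sketch with hypothetical function names; it only uses the facts that \(\dim \Lambda ^l \mathbb {C}^{2m} = \left( {\begin{array}{c}2m\\ l\end{array}}\right) \) and that self-adjoint operators on a d-dimensional space form a real space of dimension \(d^2\), which matches the number of differentials in (2.3).

```python
from itertools import combinations
from math import comb


def index_set(n, k):
    """I_{n,k}: strictly increasing k-tuples from {1, ..., n}.

    itertools.combinations emits tuples in lexicographic order
    when its input is sorted, which is the order denoted by the
    symbol "prec" in the text.
    """
    return list(combinations(range(1, n + 1), k))


def real_dim_H(m, l):
    """Real dimension of H_{2m,l}, self-adjoint operators on Lambda^l C^{2m}."""
    d = comb(2 * m, l)  # dimension of Lambda^l C^{2m} = |I_{2m,l}|
    return d * d        # d diagonal entries + 2 * d(d-1)/2 off-diagonal real parameters
```

For example, `index_set(4, 2)` lists the six multi-indices labelling a basis of \(\Lambda ^2 \mathbb {C}^{4}\).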

We prove the following integral representation for the correlation function \(F_{2m}.\)

Proposition 1

Let \(M_n\) be a random matrix of the form (1.1)–(1.3), where \(w_{jk}^{(1)}\), \(w_{jk}^{(2)}\), \(j < k\), \(w_{ll}\) have Gaussian distribution. Then the correlation function (1.5) admits the representation

where \(R = (R_2, \ldots , R_{2m})\), \(R_l \in \mathcal {H}_{2m, l}\), \(dR = \prod \limits _{j = 2}^{2m} dR_j\), \(T = {{\mathrm{diag}}}\{t_j\}_{j = 1}^{2m}\), \(dT = \prod \limits _{j = 1}^{2m} dt_j\),

and all other notation is defined at the beginning of the section.

Remark 1

In the special case \(m = 1\), the representation (2.7) simplifies to

where \(C_n(X) = C_n^{(2)}(X)\) and

The proof of Proposition 1 is based on the method of integration over the Grassmann variables, the required properties of which are reviewed in Sect. 1 in Appendix. The proof of the proposition is given in Sect. 2.1.
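To make the Grassmann integration concrete, the sketch below implements a tiny Grassmann algebra and checks the standard identity \(\det A = \int \exp \{-\sum _{j,k} A_{jk}\overline{\psi }_j\psi _k\}\prod _j d\overline{\psi }_j d\psi _j\) on small matrices. This is an illustration under stated assumptions: the exact form and sign conventions of formula (6.1) are given in the Appendix and may differ, and all names (`mono_mul`, `berezin_det`, and so on) are hypothetical.

```python
def mono_mul(a, b):
    """Multiply monomials given as sorted tuples of generator indices.

    Returns (sign, monomial); sign is 0 if a generator repeats (psi^2 = 0).
    """
    if set(a) & set(b):
        return 0, ()
    seq = list(a + b)
    sign = 1
    # Bubble sort; each transposition of anticommuting generators flips the sign.
    for i in range(len(seq)):
        for j in range(len(seq) - 1 - i):
            if seq[j] > seq[j + 1]:
                seq[j], seq[j + 1] = seq[j + 1], seq[j]
                sign = -sign
    return sign, tuple(seq)


def mul(x, y):
    """Product of two algebra elements stored as {monomial: coefficient}."""
    out = {}
    for ma, ca in x.items():
        for mb, cb in y.items():
            s, m = mono_mul(ma, mb)
            if s:
                out[m] = out.get(m, 0) + s * ca * cb
    return out


def exp_nilpotent(x, n_gen):
    """exp(x) for x without constant term; the series stops after n_gen terms."""
    result = {(): 1}
    term = {(): 1}
    for k in range(1, n_gen + 1):
        term = {m: c / k for m, c in mul(term, x).items()}
        for m, c in term.items():
            result[m] = result.get(m, 0) + c
    return result


def berezin_det(A):
    """Berezin integral of exp(-sum A_jk psibar_j psi_k); equals det A."""
    n = len(A)
    action = {}
    for j in range(n):
        for k in range(n):
            # generator 2j is psibar_j, generator 2k+1 is psi_k
            s, m = mono_mul((2 * j,), (2 * k + 1,))
            action[m] = action.get(m, 0) - A[j][k] * s
    e = exp_nilpotent(action, 2 * n)
    top = tuple(range(2 * n))  # psibar_1 psi_1 ... psibar_n psi_n
    # Convention: the integral of prod_j (psi_j psibar_j) is 1, hence the factor (-1)^n.
    return (-1) ** n * e.get(top, 0)
```

Only the coefficient of the monomial containing every generator survives the integration, which is the mechanism used repeatedly in the proof below.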

2.1 Proof of Proposition 1

Let us transform \(F_{2m}(\Lambda )\), using (6.1)

Averaging first with respect to \(\{w_{jk}\}\), we obtain

where, in order to simplify formulas below we denote

Since evidently \((\chi _{jk}\chi _{kj})^{2m + 1} = 0\), we get

Define the numbers \(\{a_l\}_{l=1}^{2m}\) by the identity

Observe that

and that

Then \(F_{2m}(\Lambda )\) can be represented as

To facilitate the reading, the remaining steps are first explained in the simpler case \(m = 1\) and only then in the general case.

2.1.1 Case \(m = 1\)

Let us transform the exponent of (2.12).

where \(I_{2, 1}\) is defined in (2.2). Since \(\psi _{jl}^2 = \overline{\psi }\phantom {\psi }_{jl}^2 = 0\), we have

Hubbard–Stratonovich transformation (6.2) applied to (2.12) yields

where \(\mathcal {H}_2\) is the space of self-adjoint operators in \({\text {End}} \mathbb {C}^{2}\) and

Set \(b_2 = -\sqrt{4na_2} = -\sqrt{\frac{2(n-p)}{pn}}\). Now we can integrate over the Grassmann variables

Change the variables \(t_j \rightarrow t_j + i\lambda _j\) and move the line of integration back to the real axis. Indeed, consider the rectangular contour with vertices at the points \((-R, 0)\), \((R, 0)\), \((R, -i\lambda _j)\) and \((-R, -i\lambda _j)\). Since the integrand is an entire function, the integral over this contour is zero. Because the integrand is a polynomial multiplied by an exponential, the integral over the vertical sides of the contour tends to 0 as \(R \rightarrow \infty \). So, recalling that \(\lambda _j = \lambda _0 + x_j/n\), we can write

where

Let us change the variables \(Q \rightarrow U^*TU\), where U is a unitary matrix and \(T = {\text {diag}}\{t_1, t_2\}\). Then dQ changes to \(\frac{\pi }{2}(t_1 - t_2)^2 dt_1 dt_2\) and

where \(U_2\) is the group of unitary \(2 \times 2\) matrices, \(dU_2(U)\) is the Haar measure normalized to unity, and \(dT = dt_1 dt_2\).
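The Jacobian factor \(\frac{\pi }{2}(t_1 - t_2)^2\) can be sanity-checked on a Gaussian weight. The worked example below is an addition and not part of the original argument; it assumes \(dQ = dQ_{11}\, dQ_{22}\, d\mathfrak {R}Q_{12}\, d\mathfrak {I}Q_{12}\), in line with (2.3), and uses \({\text {Tr}}\, Q^2 = Q_{11}^2 + Q_{22}^2 + 2|Q_{12}|^2\). Since the weight is unitarily invariant, the integral over \(U_2\) contributes a factor 1, and both sides can be computed in closed form:

$$\begin{aligned} \int e^{-\frac{1}{2}{\text {Tr}}\, Q^2} dQ&= \Big (\int e^{-q^2/2} dq\Big )^2 \Big (\int e^{-r^2} dr\Big )^2 = 2\pi \cdot \pi = 2\pi ^2, \\ \frac{\pi }{2}\int (t_1 - t_2)^2 e^{-(t_1^2 + t_2^2)/2} dt_1 dt_2&= \frac{\pi }{2} \cdot 2\pi \cdot \mathbf {E}\{(\xi _1 - \xi _2)^2\} = \frac{\pi }{2} \cdot 2\pi \cdot 2 = 2\pi ^2, \end{aligned}$$

where \(\xi _1\), \(\xi _2\) are independent standard Gaussian random variables. The same value is obtained from \(\pi ^m K_{2m}^{-1}\) with \(m = 1\) and \(K_2 = 1! \cdot 2! = 2\).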

The integration over the unitary group using the Harish–Chandra/Itzykson–Zuber formula (6.3) implies the assertion of Remark 1.
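For \(2 \times 2\) matrices the Harish–Chandra/Itzykson–Zuber formula can be verified numerically. The sketch below checks the classical form \(\int \exp \{{\text {Tr}}\, AU^*BU\} dU_2(U) = \det [e^{a_jb_k}]_{j,k=1}^2 / (\Delta (A)\Delta (B))\) for diagonal A and B; formula (6.3) in the Appendix may be stated in a different but equivalent form. It relies on the fact that for a Haar-distributed unitary \(2 \times 2\) matrix, \(|U_{11}|^2\) is uniformly distributed on [0, 1]. The function names are hypothetical.

```python
import math


def hciz_lhs(a, b, steps=20000):
    """Haar average of exp(Tr(A U* B U)) over U(2), via u = |U_11|^2 ~ Uniform[0, 1]."""
    a1, a2 = a
    b1, b2 = b
    h = 1.0 / steps
    total = 0.0
    for i in range(steps):
        u = (i + 0.5) * h  # midpoint rule
        # Tr(A U* B U) = sum_{j,k} a_j b_k |U_kj|^2, with |U_11|^2 = |U_22|^2 = u
        total += math.exp((a1 * b1 + a2 * b2) * u + (a1 * b2 + a2 * b1) * (1.0 - u))
    return total * h


def hciz_rhs(a, b):
    """det[exp(a_j b_k)] / (Delta(A) Delta(B)) for 2 x 2 diagonal A, B."""
    a1, a2 = a
    b1, b2 = b
    det = math.exp(a1 * b1 + a2 * b2) - math.exp(a1 * b2 + a2 * b1)
    return det / ((a1 - a2) * (b1 - b2))
```

For instance, `hciz_lhs((0.3, -1.1), (0.7, 0.2))` and `hciz_rhs((0.3, -1.1), (0.7, 0.2))` agree to quadrature accuracy.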

2.1.2 General Case \(m > 1\)

Let us transform the exponent of (2.12).

where \(I_{2m, l}\) is defined in (2.2).

Since \(\psi _{jl}^2 = \overline{\psi }_{jl}^2 = 0\), we have

where \(\prec \) is the lexicographical order.

Hubbard–Stratonovich transformation (6.2) yields

where

Combining the above computations, we obtain the following representation of the exponent of (2.12)

where \(\mathcal {H}_{2m, l}\) is the space of self-adjoint operators in \({\text {End}} \Lambda ^l \mathbb {C}^{2m}\) and \(dQ_l\) is defined in (2.3). Therefore, substitution of (2.15) into (2.12) gives us

where \(H_m\) is defined in (2.4) and

Now we can expand the exponents of (2.16) into the series

where

Only the terms containing all 4m Grassmann variables \(\{\psi _{js},\, \overline{\psi }_{js}\}_{s = 1}^{2m}\) contribute, because all other terms vanish after integration over the Grassmann variables. Thus, expansion of (2.17) with Lemma 6 implies

where only the contributing terms are kept. Integration over the Grassmann variables and substitution of the result into (2.16) gives us

where \(\hat{Q}_1 = Q_1 + \frac{1}{b_1}\Lambda = Q_1 - i\Lambda \), \(Q = (Q_2, \ldots , Q_{2m})\), \(dQ = \prod \limits _{l = 2}^{2m} dQ_l\) and \(A_{2m}\) is defined in (2.5).

Change the variables \((Q_1)_{jj} \rightarrow (Q_1)_{jj} + i\lambda _j\) and move the line of integration back to the real axis. Similarly to the case \(m = 1\), the Cauchy theorem yields

where \(C_n^{(2m)}(X)\) is defined in (2.6). Let us change the variables \(Q_1 = U^*TU\), where U is a unitary operator and \(T = {\text {diag}}\{t_j\}_{j = 1}^{2m}\). Then \(dQ_1\) changes to \(\pi ^m K_{2m}^{-1}\Delta (T)^2 dT\) and

where \(K_{2m} = \prod \limits _{j = 1}^{2m} j!\), \(\Delta (T)\) is defined in (2.1), \(U_{2m}\) is the group of unitary operators in \({{\mathrm{End}}}\mathbb {C}^{2m}\), \(dU_{2m}(U)\) is the Haar measure normalized to unity, and \(dT = \prod \limits _{j = 1}^{2m} dt_j\). Transform \(A_{2m}(Q_1, Q)\)

where I is the identity operator. The assertion (iv) of Proposition 2 implies

Change the variables \(Q_l = (U^*)^{\wedge l}R_l U^{\wedge l}\), \(l = \overline{2, 2m}\). Since \(U^{\wedge l}\) is a unitary operator, dQ changes to \(dR = \prod \limits _{l = 2}^{2m} dR_l\). Then (2.18) implies

where \(R = (R_2, \ldots , R_{2m})\).

is a symmetric function of \(\{t_j\}_{j = 1}^{2m}\). Indeed, after swapping \(t_{j_1}\) and \(t_{j_2}\) and changing the variables \(R_l \rightarrow (\mathcal {M}_{j_1j_2}^*)^{\wedge l}R_l\mathcal {M}_{j_1j_2}^{\wedge l}\), where \(\mathcal {M}_{j_1j_2}\) is the identity matrix with rows \(j_1\) and \(j_2\) swapped, the integrand in (2.19) remains unchanged. Hence, the integration over the unitary group using the Harish–Chandra/Itzykson–Zuber formula (6.3) can be performed, which yields the assertion of Proposition 1.

3 Proof of Theorem 1

To find the asymptotics of \(F_2(\Lambda )\), we apply the steepest descent method to the integral representation (2.9). As usual, the key technical point of the steepest descent method is to choose a good contour of integration (in our case it is three-dimensional, in the variables \((t_1, t_2, s)\)), which contains the stationary point \((t_1^*, t_2^*, s^*)\) of f, and then to prove that for any \((t_1, t_2, s)\) on our “contour”

Let us introduce the function \(h_\alpha : \mathbb {R}^5 \rightarrow \mathbb {R}\)

where

Then \(\mathfrak {R}f\) on our “contour” (which is defined below) has the form

for some \(\alpha \). To prove (3.1), we use the following lemma.

Lemma 1

Let \(h_\alpha \) be defined by (3.2) and (3.3). Then for every \(\alpha \in [1/2,\,1)\), \(t_1\), \(t_2\), s, \(b_2\), \(\lambda _0 \in \mathbb {R}\) the following inequality holds

Moreover, the equality holds if and only if at least one of the following conditions is satisfied

(a) \(\alpha = 1/2,\, t_1 = -t_2 = \pm \sqrt{4 - 4b_2^2 - \lambda _0^2}/2,\, s = b_2\);

(b) \(\alpha = 1/2,\, t_1 = t_2 = \pm \sqrt{4 - 4b_2^2 - \lambda _0^2}/2,\, s = -b_2,\, b_2\lambda _0 = 0\);

(c) \(t_1 = t_2 = 0,\, s = b_2\frac{1-\alpha }{\alpha },\, \alpha (1-\alpha )\lambda _0^2+\left( \frac{1-\alpha }{\alpha }\right) ^2b_2^2 = 1\);

(d) \(t_1 = t_2 = 0,\, s = -b_2\frac{1-\alpha }{\alpha },\, b_2 = \pm \frac{\alpha }{1 - \alpha },\, \lambda _0 = 0\).

Proof

Rewrite the inequality (3.4) in the form

where \(d = \frac{1 - \alpha }{\alpha }b_2\). Since

it is sufficient to prove

Recalling (3.3), we have

(3.6) is transformed into

The last inequality is the sum of the following obvious inequalities

It remains to determine the conditions under which the equality in (3.4) holds. It holds if and only if the equalities in (3.5), (3.7)–(3.11) hold. Let \((n')\) denote the equality corresponding to inequality (n). Then

Everywhere below until the end of the proof we assume that \(s^2 = d^2\) and \(t_1^2 = t_2^2\). Then

Let us consider the following cases.

1. \(t_1 = 0\).

1.1. \(\lambda _0 = 0\). Then (3.12) is transformed into \((b_2s)^2 = \big (\frac{\alpha }{1 - \alpha }\big )^2\). Since \(s^2 = d^2\), we get \(b_2 = \pm \frac{\alpha }{1 - \alpha }\), which implies (d).

1.2. \(sd \ge 0\). Then (3.12) is equivalent to \(\alpha ^2\lambda _0^2+\frac{1-\alpha }{\alpha }b_2^2 = \frac{\alpha }{1 - \alpha }\), which implies (c).

2. \(t_1 \ne 0 \Rightarrow \alpha = 1/2\).

2.1. \(s = 0\). Hence, (3.12) is transformed into \(\lambda _0^2/4 + t_1^2 = 1\), which implies (b).

2.2. \(s \ne 0 \Rightarrow dst_1t_2 < 0\).

Finally, it is easy to check that the values of \(h_{\alpha }\) at the points satisfying (a)–(d) are equal to the r.h.s. of (3.4). \(\square \)

Now we are ready to prove Theorem 1. We start with the following lemma.

Lemma 2

Let all conditions of Theorem 1 hold and \(\lambda _0 \in (-\lambda _*(p), \lambda _*(p))\). Then \(F_2(\Lambda )\) satisfies the asymptotic relation

where \(b_2\) is defined in (2.14).

Proof

Set

where \(\eta , \nu = 1, 2\).

Consider the contour \(\mathfrak {I}t_1 = \mathfrak {I}t_2 = -\lambda _0/2,\, s \in \mathbb {R}\). It contains the points \((t_1^* - i\lambda _0/2, t_2^* - i\lambda _0/2, b_2)\) and \((t_2^* - i\lambda _0/2, t_1^* - i\lambda _0/2, b_2)\), which are stationary points of f. The contour may contain other stationary points of f, but this does not affect the proof, except in the case \(\lambda _0 = 0\), for which the points \((t_1^*, t_1^*, -b_2)\) and \((t_2^*, t_2^*, -b_2)\) must also be taken into account. First, consider the case \(\lambda _0 \ne 0\).

Shift the variables \(t_\eta \rightarrow t_\eta - i\lambda _0/2\) in (2.9) and restrict the integration domain to

Then

where f is defined in (2.10) and

Then it is easy to see that for \(\eta \ne \nu \)

where \(T_*^{(\eta \nu )}\) is defined in (3.14).

Note that

where \(h_\alpha \) is defined in (3.2). According to Lemma 1, \(\mathfrak {R}f\left( T - \frac{i}{2}\Lambda _0, s\right) \), as a function of real variables \(t_1\), \(t_2\), s, attains its maximum at \((T_*^{(\eta \nu )}, b_2)\). Hence, \(\mathfrak {R}f''(T_*^{(\eta \nu )}, b_2)\) is negative semidefinite, and since \(\det \mathfrak {R}f''(T_*^{(\eta \nu )}, b_2) < 0\), it is in fact negative definite.

Let \(V_n^{(\eta \nu )}\) be a \(n^{-1/2} \log n\text {-neighborhood}\) of the point \((T_*^{(\eta \nu )}, b_2)\) and let \(V_n\) everywhere below denote the union of such neighborhoods of the stationary points under consideration, unless otherwise stated. Then for \(\left( T, s\right) \notin V_n\) and sufficiently large n we have

Thus we can restrict the integration domain to \(V_n\).
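The localization step can be illustrated on a one-dimensional toy integral \(\int g(t) e^{nf(t)} dt\). The sketch below is not the paper's f and g: the functions \(f(t) = -t^2/2 - t^4/4\) and \(g(t) = 1 + t\) are hypothetical stand-ins with a single nondegenerate maximum at 0. It compares the full integral, the integral over the \(n^{-1/2}\log n\)-neighborhood of the maximum, and the leading Gaussian term \(g(0)\sqrt{2\pi /(n|f''(0)|)}\).

```python
import math


def trapz(func, a, b, steps):
    """Composite trapezoid rule on [a, b]."""
    h = (b - a) / steps
    s = 0.5 * (func(a) + func(b))
    for i in range(1, steps):
        s += func(a + i * h)
    return s * h


def f(t):
    # toy phase: maximum at t = 0 with f''(0) = -1
    return -t * t / 2.0 - t ** 4 / 4.0


def g(t):
    # toy amplitude, nonzero at the maximum
    return 1.0 + t


def laplace_demo(n):
    """Return (full integral, integral over the log(n)/sqrt(n) neighborhood, leading term)."""
    integrand = lambda t: g(t) * math.exp(n * f(t))
    r = math.log(n) / math.sqrt(n)
    full = trapz(integrand, -3.0, 3.0, 60000)
    local = trapz(integrand, -r, r, 6000)
    leading = g(0.0) * math.sqrt(2.0 * math.pi / n)
    return full, local, leading
```

Already for moderate n the contribution from outside the neighborhood is negligible, and the ratio of the full integral to the leading term differs from 1 by a quantity of order 1/n.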

Set \(q = (t_1, t_2, s),\, q^{*} = (t_\eta ^{*}, t_\nu ^{*}, b_2).\) Then expanding f and g by the Taylor formula and changing the variables \(q \rightarrow n^{-1/2}q + q^{*}\), we get

This is true because \(g(\mathfrak {R}T_*^{(\eta \nu )}, b_2) \ne 0\). Performing the Gaussian integration, we obtain

Since

and \(C_n\) has the form (2.13), we have (3.13).

If \(\lambda _0 = 0\), then repeating the above steps, we obtain a formula similar to (3.16). The only difference is that there are two more terms in the sum (i.e. there are as many terms as stationary points). Since g is zero at the points with \(t_1= t_2\), we have exactly (3.16) and hence the asymptotic equality (3.13) is also valid. \(\square \)

The assertion (i) of the theorem follows immediately from Lemma 2.

Lemma 3

Let all conditions of Theorem 1 hold and \(\lambda _0^2 > 4 - 4b_2^2 + \varepsilon \) for some \(\varepsilon > 0\). Then \(F_2(\Lambda )\) satisfies the asymptotic relations

(i) for \(\lambda _0 \ne 0\)

$$\begin{aligned} F_2(\Lambda ) = \frac{\alpha ^2 \exp \Big \{n\widehat{A} + (1 - \alpha )\lambda _0(x_1 + x_2)\Big \}}{(2\alpha -1)^{3/2}(2-\alpha (1-\alpha )(3-2\alpha )\lambda _0^2)^{1/2}}(1 + o(1)), \end{aligned}$$(3.17)

where \(\alpha \) and \(\widehat{A}\) satisfy

$$\begin{aligned} \alpha \in (1/2, 1), \qquad&\alpha (1-\alpha )\lambda _0^2+\left( \frac{1-\alpha }{\alpha }\right) ^2b_2^2 - 1 = 0, \end{aligned}$$(3.18)

$$\begin{aligned}&\widehat{A} = f \left( - i\alpha \Lambda _0, \frac{1-\alpha }{\alpha }b_2\right) . \end{aligned}$$(3.19)

(ii) for \(\lambda _0 = 0\)

$$\begin{aligned} F_2(\Lambda ) = b_2^n e^{-n/2} \frac{b_2^2}{(b_2^2 - 1)^{3/2}}\left( b_2 + 1 + (-1)^n (b_2 - 1) \right) (1 + o(1)). \end{aligned}$$(3.20)

Proof

Choose \(\mathfrak {I}t_1 = \mathfrak {I}t_2 = -\alpha \lambda _0\), \(s \in \mathbb {R}\) as the good contour with the stationary point \(\left( -i\alpha \lambda _0, -i\alpha \lambda _0, \frac{1-\alpha }{\alpha }b_2\right) \), where \(\alpha \) satisfies (3.18). Existence and uniqueness of such \(\alpha \) follow from the fact that the l.h.s. of (3.18) is a monotone decreasing function of \(\alpha \) whose values at \(\alpha = 1/2\) and \(\alpha = 1\) have different signs. Everywhere below we assume that \(\alpha \) is a solution of (3.18).
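Since the l.h.s. of (3.18) is monotone on \([1/2, 1]\) and changes sign there, the root \(\alpha \) can be found by bisection. The sketch below is an illustration with hypothetical function names; it uses only equation (3.18) and the fact that the value of its l.h.s. at \(\alpha = 1/2\) is \(\lambda _0^2/4 + b_2^2 - 1\), which is positive exactly when \(\lambda _0^2 > 4 - 4b_2^2\).

```python
def lhs_318(alpha, lam0, b2):
    """Left-hand side of (3.18) as a function of alpha."""
    r = (1.0 - alpha) / alpha
    return alpha * (1.0 - alpha) * lam0 ** 2 + r * r * b2 ** 2 - 1.0


def solve_alpha(lam0, b2, tol=1e-14):
    """Unique root of (3.18) in (1/2, 1), found by bisection."""
    lo, hi = 0.5, 1.0
    if lhs_318(lo, lam0, b2) <= 0:
        raise ValueError("requires lam0**2 > 4 - 4*b2**2")
    # lhs is positive at lo and equals -1 at hi; it is decreasing in between
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if lhs_318(mid, lam0, b2) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

For \(\lambda _0 = 0\) the equation reduces to \(\left( \frac{1-\alpha }{\alpha }\right) ^2 b_2^2 = 1\), so `solve_alpha(0.0, 2.0)` returns approximately 2/3.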

If \(\lambda _0 = 0\), we have two stationary points on the contour, namely \((0, 0, \pm 1)\).

Consider the case \(\lambda _0 > \varepsilon \). Shifting the variables \(t_j \rightarrow t_j - i\alpha \lambda _0\), similarly to (3.15) we get

It is easy to check that

In addition,

with \(h_\alpha \) of (3.2). So, similarly to the proof of Lemma 2 one can write

where \(V_n = U_{n^{-1/2}\log n}\left( \left( 0, \frac{1-\alpha }{\alpha }b_2\right) \right) \) and \(\widehat{A}\) is defined in (3.19).

Repeating the argument of Lemma 2, we get

where \(\langle \cdot , \cdot \rangle \) denotes the scalar product in \(\mathbb {R}^3\). The first integral is zero, because f is symmetric with respect to \(t_1\) and \(t_2\) and \(\dfrac{\partial g}{\partial t_1}\left( 0, \frac{1-\alpha }{\alpha }b_2\right) = -\dfrac{\partial g}{\partial t_2}\left( 0, \frac{1-\alpha }{\alpha }b_2\right) = 1\). Expanding the exponent into the Taylor series, we obtain

It is easy to see that

Computing the integral in (3.25), we have (3.17).

If \(\lambda _0 = 0\), then \(\alpha = \frac{b_2}{b_2 + 1}\). As mentioned above, in this case there are two stationary points on the contour. The asymptotics of the integral (3.21) in the neighborhood of the second stationary point (i.e. \((0, 0, -1)\)) is computed in the same way as above. (3.17) is transformed into (3.20). \(\square \)

The assertion (ii) follows from (3.17) and (3.20).

Now we proceed to the proof of agreement between cases (i) and (ii) of Theorem 1.

Lemma 4

Let all conditions of Theorem 1 hold and \(\lambda _0^2 = 4 - 4b_2^2 - \delta _n\), \(\delta _n \rightarrow 0\). Then \(F_2(\Lambda )\) satisfies the asymptotic relation

where

Proof

Consider the case \(\delta _n \ge 0\). Choose the same contour as in the proof of Lemma 2. Stationary points are also the same.

Change of the variables \(\tau = t_1 + t_2\), \(\sigma = t_1 - t_2\) gives us

where \(\tilde{f}(\tau , \sigma , s) = f(T, s)\). Set

It is easy to see that

Let us choose \(V_n\) as a union of the products of the neighborhoods of \(\tau ^*\), \(\sigma _1^*\), \(b_2\) and \(\tau ^*\), \(\sigma _2^*\), \(b_2\) such that the radii of the neighborhoods corresponding to \(\tau \) and s are equal to \(\log n/\sqrt{n}\), whereas the radius of the neighborhood corresponding to \(\sigma \) is equal to \(\log n/\sqrt{n\delta _n}\), if \(n\delta _n^2 \rightarrow \infty \), and to \(\log n/n^{1/4}\) otherwise. Similarly to the proof of Lemma 2 it can be proved that for \(\left( \tau , \sigma , s\right) \notin V_n\) and sufficiently large n

Let \(n\delta _n^2 \rightarrow \infty \). Then in the same way as before, the only difference being that the change of the variable \(\sigma \) is \(\sigma \rightarrow (n\delta _n)^{-1/2}\sigma + \sigma _\eta ^*\), we obtain

where \(A_{\tilde{f}}^{(\eta )}\) is the quadratic form defined by the matrix obtained from \(\tilde{f}''(\tau ^*, \sigma _\eta ^*, b_2)\) by dividing all entries in the second row and the second column by \(\delta _n^{1/2}\), i.e.

Therefore we have (3.26).

Now let \(n\delta _n^2 \rightarrow 0\). Then, changing the variables \(\sigma ^2 = \tilde{\sigma }\), we get

Let \(\tilde{V}_n\) be a product of the \(\frac{\log n}{\sqrt{n}}\text {-neighborhoods}\) of 0 and \(b_2\). Then we can shift the variable \(\tau \rightarrow \tau - i\lambda _0\) and in view of (3.27) restrict the integration domain by \(\left[ 0, \frac{\log ^2 n}{\sqrt{n}}\right] \times \tilde{V}_n\)

Expanding \(\tilde{f}\) and sin by the Taylor formula near \((-i\lambda _0, 0, b_2)\) and 0 respectively and changing the variables \(\tau \rightarrow n^{-1/2}\tau \), \(\tilde{\sigma } \rightarrow n^{-1/2}\tilde{\sigma }\), \(s \rightarrow n^{-1/2}s + b_2\), we have

where \(B_{\tilde{f}}\) is a quadratic form defined by the matrix

where all entries of the matrix P are equal to one. Performing the Gaussian integration, we obtain

which implies (3.26).

If \(n\delta _n^2 \rightarrow \text {const}\), then there is a certain polynomial of degree three instead of \(B_{\tilde{f}}\) in the last exponent in (3.29). Thus, the asymptotics of \(F_2(\Lambda )\) differs from (3.26) only by a multiplicative n-independent constant, which is absorbed by C in \(Y_n\). For negative \(\delta _n\), if \(n\delta _n^2 \rightarrow 0\), all the changes in the equations amount to multiplication by factors equal to \(1 + o(1)\), so (3.26) is unchanged. If \(n\delta _n^2 \rightarrow \infty \), then combining the argument of the case \(\delta _n \ge 0\) with that of Lemma 3 causes some changes in (3.28) and implies \(Y_n = C(-\delta _n)^{-3/2}\) in (3.26). \(\square \)

4 Proof of Theorem 2

As in the case of Theorem 1, the proof of Theorem 2 is based on the application of the steepest descent method to the integral representation of \(F_{2m}\) obtained in Sect. 2. To this end a “good contour” and stationary points of \(f_{2m}\), defined in (2.8), have to be chosen. We start with the choice of stationary points.

If \(p = n\) and hence \(b_l = \tilde{b}_{2k} = 0\), \(l > 1\), the proper stationary points are

where

Set

Since

is nondegenerate for \(\lambda _0 \in (-2, 2)\), for sufficiently small b there exists a unique solution

of the equation

such that \(T(0) = \widetilde{T}\), \(R(0) = 0\), and the solution continuously depends on b and \(\lambda _0\). When \(p \rightarrow \infty \), (2.11) and (2.14) yield \(b \rightarrow 0\). Therefore, the solutions (4.2) are the required stationary points.

Lemma 5

The solution (4.2) also has the following properties:

(1) \(T_{j_1 j_1}(b) = T_{j_2 j_2}(b)\), \(T_{k_1 k_1}(b) = T_{k_2 k_2}(b)\) for all \(j_1, j_2 \in I_+\), \(k_1, k_2 \in I_-\), where \(I_+ = \{j,\, t_j^* = t^*\}\), \(I_- = \{j,\, t_j^* = -\overline{t^*}\}\);

(2) \((R_l)_{\alpha \beta }(b) = 0\), \(l = \overline{2, 2m}\), \(\alpha \ne \beta \).

Proof

Let

where \(\pi R_l\) is the matrix such that \((\pi R_l)_{\alpha \beta } = (R_l)_{\alpha _\pi \beta _\pi }\), the multi-index \(\pi (\alpha )\) consists of the numbers \(\pi (\alpha _1), \ldots , \pi (\alpha _l)\) sorted in increasing order, and \(S_{2m}\) is the group of permutations of length 2m. Then \(f_{2m}(\pi T, \pi R) = f_{2m}(T, R)\) for all \(\pi \in S_{2m}\). So it suffices to prove the lemma for those stationary points for which \(t_1^* = \ldots = t_k^* = -\overline{t_{k + 1}^*} = \ldots = -\overline{t_{2m}^*} = t^*\).

We are going to prove that there exists a solution of (4.3) that satisfies conditions (1) and (2) and \(T(0) = \widetilde{T}\), \(R(0) = 0\). This is equivalent to the existence of a solution of the system

where T and R satisfy (1) and (2), with respect to the variables \(t_1\), \(t_{2m}\), \((R_l)_{\alpha \alpha }\). Since the derivative of the l.h.s. of the system at \(T = \widetilde{T}\), \(R = 0\), \(b = 0\) is nondegenerate, such a solution exists. In view of the uniqueness of the solution of (4.3), the solution of (4.4) coincides with (4.2). \(\square \)

The next step is the choice of the “contour” (in this case it is a \(d_{2m}\)-dimensional manifold, \(d_{2m} = \binom{4m}{2m} - 4m^2 - 1 + 2m\)). For each variable we consider some contour, and the required manifold \(\hat{M}_{2m}\) will be the product of these contours. Fix some variable. Order the corresponding components of the stationary points by increasing real part (if the real parts are equal, order by increasing imaginary part). Then the contour is a polygonal chain that connects the points in the order described above. The infinite segments of the polygonal chain are parallel to the real axis and directed from the first point to the left and from the last point to the right.
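The ordering rule for one variable can be sketched in a few lines of Python. This is only an illustration of the rule stated above: the function names and the sample points are hypothetical, and coinciding components are merged so that the chain has no zero-length segments (an assumption, since the text does not discuss repeated components). The helper `manifold_dimension` simply evaluates the stated formula for \(d_{2m}\); for \(m = 1\) it gives 3.

```python
from math import comb


def manifold_dimension(m: int) -> int:
    """Evaluate d_{2m} = C(4m, 2m) - 4m^2 - 1 + 2m as stated in the text."""
    return comb(4 * m, 2 * m) - 4 * m * m - 1 + 2 * m


def polygonal_contour(points):
    """Build the polygonal chain for one variable.

    points: complex components of the stationary points (hypothetical input).
    Returns the ordered vertices, the finite segments between consecutive
    vertices, and the two infinite rays as (start point, direction along the
    real axis).
    """
    # Sort by real part; ties are broken by imaginary part.
    # Duplicates are merged first (assumption, see the lead-in).
    vertices = sorted(set(points), key=lambda z: (z.real, z.imag))
    segments = list(zip(vertices[:-1], vertices[1:]))
    left_ray = (vertices[0], -1.0)    # from the first point to the left
    right_ray = (vertices[-1], 1.0)   # from the last point to the right
    return vertices, segments, left_ray, right_ray


# Hypothetical components of stationary points for a single variable.
sample = [0.5 - 1j, -0.5 - 1j, 0.5 + 0.2j, 0.5 - 1j]
vertices, segments, left_ray, right_ray = polygonal_contour(sample)
```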

The Cauchy theorem and (2.7) imply

where

Moreover,

where

with \(\left\| y \right\| _\infty = \max \limits _j |y_j|\).

Let \(\varepsilon > 0\) be an arbitrary positive number. Then, since \((T(b), R(b)) \underset{n \rightarrow \infty }{\longrightarrow } (\widetilde{T}, 0)\), for sufficiently large n and for every \((T, R) \in \hat{M}_{2m}^N\) we have

where \(f_{2m}^0(T, R) = f_{2m}(T, R)|_{b = 0}\). Also, \(f_{2m}(T, R) \rightrightarrows f_{2m}^0(T, R)\), \(n \rightarrow \infty \), \((T, R) \in K\) for any compact set K. Hence, for sufficiently large n

Consider the point \((T^0, R^0) \in \hat{M}_{2m}^N\) such that \(\mathfrak {R}T^0 = \mathfrak {R}\widetilde{T}\), \(\mathfrak {R}R^0 = 0\). Then \(\mathfrak {R}f_{2m}(T^0, R^0) > \mathfrak {R}f_{2m}^0(\widetilde{T}, 0) - 2\varepsilon \). Thus,

Therefore, if \(\mathfrak {R}f_{2m}(T^1, R^1) = \max \limits _{(T, R) \in \hat{M}_{2m}^N} \mathfrak {R}f_{2m}(T, R)\), then

So it is evident that \((T^1, R^1) \rightarrow (\widetilde{T}, 0)\), \(n \rightarrow \infty \), for a certain \(\widetilde{T}\) of the form (4.1).

Let \(V_n(T(b), R(b))\) be a neighborhood of the stationary point (T(b), R(b)) which contains the corresponding maximum point of \(\mathfrak {R}f_{2m}\) together with its \(\frac{\log n}{\sqrt{n}}\)-neighborhood, and such that \({\text {diam}} V_n(T(b), R(b)) \rightarrow 0\). It can be assumed that the union of these neighborhoods is invariant under the map \((T, R) \rightarrow (\pi T, \pi R)\) for every \(\pi \in S_{2m}\). Then, for the same reasons as in the proof of Theorem 1, we can restrict the integration domain to the union of the neighborhoods \(V_n\). Shifting the variables \(T \rightarrow T + \mathfrak {I}T(b)\), \(R \rightarrow R + \mathfrak {I}R(b)\) in each neighborhood and expanding \(g_{2m}\) by the Taylor formula, we get

where the summation is over all stationary points under consideration and

The number of terms of the Taylor series in (4.5) is the minimal number that allows us to obtain nonzero asymptotics.

Fix some stationary point (T(b), R(b)) and some multi-index \(\alpha \). Let \(\beta \le \alpha \) be a multi-index with \(\beta _{j_1} = \beta _{j_2}\) for some \(j_1 \ne j_2\), \(j_1, j_2 \in I_+\) or \(j_1, j_2 \in I_-\), where \(\beta \le \alpha \Leftrightarrow \forall j\ \beta _j \le \alpha _j\). Then

where \(\hat{\alpha }\) is the multi-index, which is obtained by swapping \(\alpha _{j_1}\) and \(\alpha _{j_2}\) in \(\alpha \). Hence, in the sum in (4.5) only the summands with \(|\alpha | = 2m(m - 1)\) remain.

Changing the variables \(T \rightarrow n^{-1/2}T\), \(R \rightarrow n^{-1/2} R\), we get

where q is a vector which consists of all integration variables, \(dq = dTdR\), \(\mathcal {V}_n(T(b), R(b)) = \sqrt{n}\hat{V}_n(T(b), R(b))\) and \(|r_6(q)| \le C \sum \limits _{j} |q_j|^3\).

(4.4) implies that, as \(n \rightarrow \infty \),

where

Therefore,

Thus, the value of \(\mathfrak {R}f_{2m}\) at the stationary points of the form (4.2) with \(\#I_+ = m\) is greater than that at the other stationary points of such a form for \(\lambda _0 \in (-2, 2)\backslash \{0\}\). For \(\lambda _0 = 0\) the values of \(\mathfrak {R}f_{2m}\) at the stationary points are equal, because (4.2) continuously depends on \(\lambda _0\). This yields that the sum in (4.6) may be restricted to the sum only over the stationary points with \(\#I_+ = m\) (for \(\lambda _0 = 0\) the other summands are of lower order in n). We have

Consider the term with \(I_+ = \{1, 2, \ldots , m\}\). The Gaussian integration gives us

where C is some real n-independent constant.

On the other hand,

where \(\hat{S}_{2m}\) is defined in (1.7) and \(\rho _{sc}\) is defined in (1.4). The determinant in the l.h.s. is the sum of \(\exp \Big \{i\pi \rho _{sc}(\lambda _0)\sum \limits _{j = 1}^{2m} \varepsilon _j x_j\Big \}\) over all collections \(\{\varepsilon _j\}\), which consist of m elements \(+1\) and m elements \(-1\), with certain coefficients. Since (see [19, Problem 7.3])

the coefficient of \(\exp \Big \{-i\pi \rho _{sc}(\lambda _0)\sum \limits _{j = 1}^m (x_j - x_{m + j})\Big \}\) is

The other coefficients can be computed in the same way. Therefore,

The assertion of the theorem follows.

5 Proof of Theorem 3

In this section we consider the case \(p \rightarrow \infty \). As in the proof of Lemma 3, the good contour is \(\mathfrak {I}t_1 = \mathfrak {I}t_2 = -\alpha \lambda _0\), \(s \in \mathbb {R}\) with the stationary point \(\left( -i\alpha \lambda _0, -i\alpha \lambda _0, \frac{1-\alpha }{\alpha }b_2\right) \), where \(\alpha \) satisfies (3.18).

Set \(\beta = 2\alpha - 1\). Then (3.18) transforms to \(b_2 = \beta (1 + \beta )(1 - \beta )^{-1}\), and hence \(\beta = \sqrt{\frac{2}{p}}(1 + o(1))\). Substitution of \(\lambda _0 = \pm 2\), \(\alpha = \frac{1 + \beta }{2}\) and \(b_2 = \beta (1 + \beta )(1 - \beta )^{-1}\) in (3.22) and (3.23) yields

In addition,

If (i) \(\frac{n^{2/3}}{p} \rightarrow \infty \), then \(V_n\) is chosen as a product of the neighborhoods of 0, 0, and \(\frac{1 - \alpha }{\alpha } b_2\) such that the radius of the neighborhoods corresponding to \(t_1\) and \(t_2\) is \(\frac{\log n}{\sqrt{n\beta }}\), while the radius of the neighborhood corresponding to s is \(\frac{\log n}{\sqrt{n}}\). We have (3.24).
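The relation \(b_2 = \beta (1 + \beta )(1 - \beta )^{-1}\) introduced at the beginning of this section can be inverted explicitly, which makes it easy to see numerically that \(\beta = b_2(1 + o(1))\) as \(b_2 \rightarrow 0\). The sketch below is an illustration only: the function names are hypothetical, and the dependence of \(b_2\) on p (fixed by (2.11) and (2.14), which lie outside this section) is not modelled, so \(b_2\) is treated as a free small parameter.

```python
import math


def beta_from_b2(b2: float) -> float:
    """Root in (0, 1) of beta^2 + (1 + b2) * beta - b2 = 0 for b2 > 0.

    The quadratic is equivalent to b2 = beta * (1 + beta) / (1 - beta).
    The root is written in a form that avoids cancellation for small b2.
    """
    s = 1.0 + b2
    return 2.0 * b2 / (s + math.sqrt(s * s + 4.0 * b2))


def b2_from_beta(beta: float) -> float:
    """Right-hand side of the relation b2 = beta (1 + beta) / (1 - beta)."""
    return beta * (1.0 + beta) / (1.0 - beta)


# beta / b2 approaches 1 as b2 -> 0; alpha = (1 + beta) / 2 approaches 1/2.
table = []
for b2 in (1e-1, 1e-2, 1e-3, 1e-4):
    beta = beta_from_b2(b2)
    table.append((b2, beta, beta / b2, (1.0 + beta) / 2.0))
```

Each row of `table` holds \(b_2\), \(\beta \), the ratio \(\beta / b_2\) and \(\alpha = (1 + \beta )/2\).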

Changing the variables \(T \rightarrow (n\beta )^{-1/2}T\), \(s \rightarrow n^{-1/2}s + \frac{1 - \alpha }{\alpha } b_2\) and repeating the argument of the proof of Lemma 3 yield

where C is some absolute constant and \(\widehat{A} = f \left( - i\alpha \Lambda _0, \frac{1-\alpha }{\alpha }b_2\right) \).

The assertion (i) follows.

Let now (ii) \(\frac{n^{2/3}}{p} \rightarrow c\). Choose \(V_n\) the same as in the case (i), but with the radius of the neighborhoods corresponding to \(t_1\) and \(t_2\) equal to \(\frac{\log n}{\root 3 \of {n}}\). Then (3.24) is also valid. The Cauchy theorem implies

where the integration domain over s is not changed, but the ones over \(t_j\) become \(\{ |z| \le n^{-1/3}\log n\ |\ \arg z = -\pi /6 \text { or } \arg z = -5\pi /6 \}\).
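As a consistency check on the radius chosen in case (ii), one can combine \(\beta = \sqrt{\frac{2}{p}}(1 + o(1))\) with \(\frac{n^{2/3}}{p} \rightarrow c\). The following leading-order computation is a sketch under the additional assumption \(c > 0\), and is not part of the original argument:

\[
\beta = \sqrt{2c}\, n^{-1/3}(1 + o(1)), \qquad n\beta = \sqrt{2c}\, n^{2/3}(1 + o(1)), \qquad \frac{\log n}{\sqrt{n\beta }} = (2c)^{-1/4}\, \frac{\log n}{\root 3 \of {n}}\, (1 + o(1)).
\]

Thus at the threshold the radius used in case (i) and the radius \(\frac{\log n}{\root 3 \of {n}}\) differ only by an n-independent factor. Moreover \(n^{1/3}\beta \rightarrow \sqrt{2c}\), which is presumably the origin of the shift by \(i\sqrt{2c}\) in the change of variables used later in this proof.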

Changing the variables \(T \rightarrow n^{-1/3}T\), \(s \rightarrow n^{-1/2}s + \frac{1 - \alpha }{\alpha } b_2\), we obtain

where

Changing the variables \(\tau _j = t_j + i\sqrt{2c}\) and using the Cauchy theorem give us

where C is some absolute constant. Taking into account (1.8) and (2.13), we get

which completes the proof of the assertion (ii).

References

Bauer, M., Golinelli, O.: Random incidence matrices: moments and spectral density. J. Stat. Phys. 103, 301–337 (2001)

Brezin, E., Hikami, S.: Characteristic polynomials of random matrices. Commun. Math. Phys. 214, 111–135 (2000)

Brezin, E., Hikami, S.: Characteristic polynomials of real symmetric random matrices. Commun. Math. Phys. 223, 363–382 (2001)

Erdős, L., Knowles, A., Yau, H.-T., Yin, J.: Spectral statistics of Erdős–Rényi Graphs II: eigenvalue spacing and the extreme eigenvalues. Commun. Math. Phys. 314(3), 587–640 (2012)

Evangelou, S.N., Economou, E.N.: Spectral density singularities, level statistics, and localization in a sparse random matrix ensemble. Phys. Rev. Lett. 68, 361–364 (1992)

Fyodorov, Y.V., Keating, J.P.: Negative moments of characteristic polynomials of random GOE matrices and singularity-dominated strong fluctuations. J. Phys. A Math. Gen. 36, 4035–4046 (2003)

Fyodorov, Y.V., Khoruzhenko, B.A.: On absolute moments of characteristic polynomials of a certain class of complex random matrices. Commun. Math. Phys. 273, 561–599 (2007)

Fyodorov, Y.V., Mirlin, A.D.: Localization in ensemble of sparse random matrices. Phys. Rev. Lett. 67, 2049–2052 (1991)

Götze, F., Kösters, H.: On the second-order correlation function of the characteristic polynomial of a Hermitian Wigner matrix. Commun. Math. Phys. 285, 1183–1205 (2008)

Huang, J., Landon, B., Yau, H.-T.: Bulk universality of sparse random matrices, arXiv:1504.05170v2 [math.PR] (2015)

Keating, J.P., Snaith, N.C.: Random matrix theory and \(\zeta (1/2+ it)\). Commun. Math. Phys. 214, 57–89 (2000)

Khorunzhiy, O.: On high moments and the spectral norm of large dilute Wigner random matrices. Zh. Mat. Fiz. Anal. Geom. 10(1), 64–125 (2014)

Khorunzhiy, O.: On high moments of strongly diluted large Wigner random matrices, arXiv:1311.7021v4 [math.PH] (2015)

Khorunzhy, O., Shcherbina, M., Vengerovsky, V.: Eigenvalue distribution of large weighted random graphs. J. Math. Phys. 45(4), 1648–1672 (2004)

Kühn, R.: Spectra of sparse random matrices. J. Phys. A 41(29), 295002 (2008)

Mehta, M.L.: Random Matrices. Academic Press Inc., Boston (1991)

Mehta, M.L., Normand, J.-M.: Moments of the characteristic polynomial in the three ensembles of random matrices. J. Phys. A Math. Gen. 34, 4627–4639 (2001)

Mirlin, A.D., Fyodorov, Y.V.: Universality of level correlation function of sparse random matrices. J. Phys. A 24, 2273–2286 (1991)

Pólya, G., Szegő, G.: Problems and Theorems in Analysis, vol. II. Theory of Functions, Zeros, Polynomials, Determinants, Number Theory, Geometry. Die Grundlehren der Math. Springer, New York (1976)

Rodgers, G.J., Bray, A.J.: Density of states of a sparse random matrix. Phys. Rev. B 37, 3557–3562 (1988)

Rodgers, G.J., De Dominicis, C.: Density of states of sparse random matrices. J. Phys. A Math. Gen. 23, 1567–1573 (1990)

Shcherbina, T.: On the correlation function of the characteristic polynomials of the Hermitian Wigner ensemble. Commun. Math. Phys. 308, 1–21 (2011)

Shcherbina, T.: On the correlation functions of the characteristic polynomials of the Hermitian sample covariance matrices. Probab. Theory Relat. Fields 156, 449–482 (2013)

Shcherbina, T.: On the second mixed moment of the characteristic polynomials of 1D band matrices. Commun. Math. Phys. 328(1), 45–82 (2014)

Shcherbina, T.: Universality of the second mixed moment of the characteristic polynomials of the 1D band matrices: real symmetric case. J. Math. Phys. 56, 063303 (2015). doi:10.1063/1.4922621

Shcherbina, M., Tirozzi, B.: Central limit theorem for fluctuations of linear eigenvalue statistics of large random graphs. J. Math. Phys. 51(2), 023523 (2010)

Shcherbina, M., Tirozzi, B.: Central limit theorem for fluctuations of linear eigenvalue statistics of large random graphs: diluted regime. J. Math. Phys. 53(4), 043501 (2012)

Sodin, S.: The Tracy-Widom law for some sparse random matrices. J. Stat. Phys. 136, 834–841 (2009)

Strahov, E., Fyodorov, Y.V.: Universal results for correlations of characteristic polynomials: Riemann-Hilbert approach. Commun. Math. Phys. 241, 343–382 (2003)

Vinberg, E.B.: A Course in Algebra. American Mathematical Society, Providence (2003)

Acknowledgments

The author is grateful to Prof. M. Shcherbina for the statement of the problem and for fruitful discussions. The author is thankful to the Akhiezer Foundation for partial financial support. The author also thanks the reviewers for their careful reading of the paper and for useful comments.

Appendix

1.1 Grassmann Variables

Consider the set of formal variables \(\{\psi _j,\, \overline{\psi }_j\}_{j=1}^n\) which satisfy the anticommutation relations

This set generates a graded algebra \(\mathcal {A}\), which is called the Grassmann algebra. Taking into account that \(\psi _j^2 = \overline{\psi }_j^2 = 0\), we have that all elements of \(\mathcal {A}\) are polynomials in \(\{\psi _j,\, \overline{\psi }_j\}_{j=1}^n\). We can also define functions of Grassmann variables. Let \(\chi \) be an element of \(\mathcal {A}\) and f be any analytic function. By \(f(\chi )\) we mean the element of \(\mathcal {A}\) obtained by substituting \(\chi - z_0\) in the Taylor series of f near \(z_0\), where \(z_0\) is the free term of \(\chi \). Since \(\chi - z_0\) is a polynomial in \(\{\psi _j,\, \overline{\psi }_j\}_{j=1}^n\) with zero free term, there exists \(l \in \mathbb {N}\) such that \((\chi - z_0)^l = 0\), and hence the series terminates after a finite number of terms.

The integral over the Grassmann variables is a linear functional, defined on the basis by the relations

A multiple integral is defined to be the repeated integral. Moreover, the “differentials” \(\{d\psi _j,\, d\overline{\psi }_j\}_{j=1}^n\) anticommute with each other and with \(\{\psi _j,\, \overline{\psi }_j\}_{j=1}^n\). Hence for a function f

we have by definition

The use of Grassmann variables for computing averages of determinants rests on the following identity, valid for any \(n \times n\) matrix A:

One more identity is the Hubbard-Stratonovich transformation

which is valid for any complex numbers y, t and any positive number a. The identities (6.2) also hold when y, t are arbitrary even Grassmann variables (i.e. sums of products of an even number of Grassmann variables). For even Grassmann variables the formulas can be proved by Taylor-expanding \(e^{2axy}\) and \(e^{ay(u + iv) + at(u - iv)}\) into series and integrating each term.
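The two facts used above, that the Taylor series of a function of a nilpotent Grassmann element terminates and that the Grassmann integral of \(\exp \{\sum A_{jk}\overline{\psi }_j\psi _k\}\) gives \(\det A\), can be checked numerically with a very small model of the Grassmann algebra. The sketch below is an illustration, not the construction used in the paper. It makes the following assumptions: generator \(2j\) stands for \(\overline{\psi }_{j+1}\) and generator \(2j + 1\) for \(\psi _{j+1}\); the integral over all variables is modelled as the coefficient of the monomial \(\overline{\psi }_1\psi _1 \ldots \overline{\psi }_n\psi _n\) (a sign convention chosen here for illustration); all function names are hypothetical.

```python
import numpy as np


def mono_mul(a, b):
    """Multiply monomials given as sorted tuples of generator indices.

    Returns (sign, sorted tuple); the sign is 0 if a generator repeats.
    """
    if set(a) & set(b):
        return 0, ()
    merged = list(a + b)
    sign = 1
    # Bubble sort: every adjacent swap of two generators flips the sign.
    for i in range(len(merged)):
        for j in range(len(merged) - 1 - i):
            if merged[j] > merged[j + 1]:
                merged[j], merged[j + 1] = merged[j + 1], merged[j]
                sign = -sign
    return sign, tuple(merged)


def mul(x, y):
    """Product of two algebra elements stored as {monomial: coefficient}."""
    out = {}
    for ma, ca in x.items():
        for mb, cb in y.items():
            sign, m = mono_mul(ma, mb)
            if sign:
                out[m] = out.get(m, 0.0) + sign * ca * cb
    return out


def grassmann_exp(chi):
    """exp(chi) for chi with zero free term; stops once chi**k vanishes."""
    result = {(): 1.0}
    term = {(): 1.0}
    k = 0
    while term:
        k += 1
        term = {m: c / k for m, c in mul(term, chi).items()}
        for m, c in term.items():
            result[m] = result.get(m, 0.0) + c
    return result


def quadratic_form(A):
    """The element sum_{j,k} A[j, k] * psibar_j * psi_k."""
    chi = {}
    n = A.shape[0]
    for j in range(n):
        for k in range(n):
            sign, m = mono_mul((2 * j,), (2 * k + 1,))
            chi[m] = chi.get(m, 0.0) + sign * A[j, k]
    return chi


n = 3
A = np.random.default_rng(0).normal(size=(n, n))
expansion = grassmann_exp(quadratic_form(A))
# Coefficient of psibar_1 psi_1 ... psibar_n psi_n (assumed integral convention).
top_coefficient = expansion.get(tuple(range(2 * n)), 0.0)
```

With this convention `top_coefficient` agrees with `np.linalg.det(A)` up to rounding, and the loop in `grassmann_exp` stops after finitely many steps because every monomial of degree larger than \(2n\) vanishes.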

The properties explained so far suffice to obtain the integral representation for \(F_{2m}\), \(m = 1\), whereas the general case \(m > 1\) requires some additional preliminaries, pertaining to antisymmetric tensor products. Further details about antisymmetric tensor products may be found in [30, Chapter 8.4].

1.1.1 Grassmann Variables and the Exterior Product

The exterior product of vectors is well known, as is the exterior product of alternating multilinear forms (see [30]). However, to prove Proposition 1 we need the exterior product of alternating operators. Define it as follows. Let A be a linear operator on \(\Lambda ^q \mathbb {C}^n\) and B be a linear operator on \(\Lambda ^r \mathbb {C}^n\). Then the exterior product \(A \wedge B\) is the restriction of the linear operator \({\text {Alt}} \circ (A \otimes B)\) to \(\Lambda ^{q + r} \mathbb {C}^n\). Here \({\text {Alt}}\) is the alternation operator, i.e.,

where \(S_k\) is the group of permutations of length k; \({\text {sgn}} \pi \) is the sign of the permutation \(\pi \); \(f_\pi \) is the canonical automorphism of \(V^{\otimes k}\), which carries \(v_1 \otimes \ldots \otimes v_k\) to \(v_{\pi (1)} \otimes \ldots \otimes v_{\pi (k)}\), \(v_j \in V\); V is some finite-dimensional linear space. Note that for \(A \in {{\mathrm{End}}}V\) the exterior product \(A \wedge A\) coincides with the well-known second exterior power of the linear operator A.
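The last remark can be checked numerically for \(q = r = 1\). The sketch below assumes that \({\text {Alt}}\) is normalised as the projection \(\frac{1}{k!}\sum _{\pi } {\text {sgn}}\, \pi \, f_\pi \) (the usual convention; the normalisation is an assumption here) and identifies \(e_a \otimes e_b\) with the coordinate \(an + b\) of \(\mathbb {C}^{n^2}\). The function names are hypothetical. The matrix of \(A \wedge A\) in the basis \(e_a \wedge e_b\), \(a < b\), is compared with the matrix of \(2 \times 2\) minors of A, which is the matrix of the second exterior power.

```python
import numpy as np
from itertools import combinations


def swap_matrix(n):
    """Matrix of the map e_a (x) e_b -> e_b (x) e_a on C^n (x) C^n."""
    S = np.zeros((n * n, n * n))
    for a in range(n):
        for b in range(n):
            S[b * n + a, a * n + b] = 1.0
    return S


def wedge(A, B):
    """Matrix of Alt o (A (x) B) restricted to Lambda^2 C^n (case q = r = 1)."""
    n = A.shape[0]
    pairs = list(combinations(range(n), 2))
    alt = (np.eye(n * n) - swap_matrix(n)) / 2.0   # assumed 1/k! normalisation
    M = alt @ np.kron(A, B)
    out = np.zeros((len(pairs), len(pairs)))
    for col, (c, d) in enumerate(pairs):
        u = np.zeros(n * n)
        u[c * n + d], u[d * n + c] = 1.0, -1.0     # e_c ^ e_d up to a scalar
        image = M @ u
        for row, (a, b) in enumerate(pairs):
            out[row, col] = image[a * n + b]       # coefficient of e_a ^ e_b
    return out


def second_compound(A):
    """Matrix of 2x2 minors of A, rows and columns indexed by pairs a < b."""
    pairs = list(combinations(range(A.shape[0]), 2))
    return np.array([[A[a, c] * A[b, d] - A[a, d] * A[b, c]
                      for (c, d) in pairs] for (a, b) in pairs])


A = np.random.default_rng(1).normal(size=(4, 4))
difference = np.max(np.abs(wedge(A, A) - second_compound(A)))
```

Here `difference` is at the level of rounding error.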

Fix some basis \(\{ e_j \}_{j = 1}^n\) of \(\mathbb {C}^n\). Let \(A \in {{\mathrm{End}}}\Lambda ^k \mathbb {C}^n\) and \(\alpha , \beta \in I_{n,k}\), where \(I_{n, k}\) is defined in (2.2). By \(A_{\alpha \beta }\) we denote the corresponding entry of the matrix of A in the basis \(\{e_{\alpha _1} \wedge \ldots \wedge e_{\alpha _k},\, \alpha \in I_{n,k}\}\).

To obtain the integral representation for \(F_{2m}\) we use the lemma:

Lemma 6

Let A and B be linear operators on \(\Lambda ^q \mathbb {C}^n\) and \(\Lambda ^r \mathbb {C}^n\) respectively. Then

Proof

Let \(S_{q, r}\) be the set of those \(\pi \in S_{q + r}\) that satisfy the inequalities \(\pi (1) < \ldots < \pi (q)\) and \(\pi (q + 1) < \ldots < \pi (q + r)\). Then

where

On the other hand,

where \(e_\alpha = e_{\alpha _1} \wedge \ldots \wedge e_{\alpha _q}\), \(\alpha \in I_{n, q}\).

Hence,

Thus,

which completes the proof of the lemma. \(\square \)

We also need some properties of the exterior product of the operators.

Proposition 2

Let \(A_j \in {\text {End}} \Lambda ^{q_j} \mathbb {C}^n\), \(j = \overline{1, k}\), and \(B \in {\text {End}} \mathbb {C}^n\). Then

(i) \(A_1 \wedge A_2 = A_2 \wedge A_1\);

(ii) \((A_1 \wedge A_2) \wedge A_3 = A_1 \wedge (A_2 \wedge A_3)\);

(iii) \(\bigwedge \limits _{j = 1}^k A_j = \Big ( {\text {Alt}} \circ \bigotimes \limits _{j = 1}^k A_j \Big )\Big |_{\Lambda ^q \mathbb {C}^n}\);

(iv) \(\bigwedge \limits _{j = 1}^k A_jB^{\wedge q_j} = \Big ( \bigwedge \limits _{j = 1}^k A_j \Big )B^{\wedge q}\) and \(\bigwedge \limits _{j = 1}^k B^{\wedge q_j}A_j = B^{\wedge q}\Big ( \bigwedge \limits _{j = 1}^k A_j \Big )\);

where \(q = \sum \limits _{j = 1}^k q_j\), \(B^{\wedge q} = \underbrace{B \wedge \ldots \wedge B}_{q \text { times}}\).
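Properties (i) and (iv) can be sanity-checked numerically in the simplest case \(k = 2\), \(q_1 = q_2 = 1\). The sketch below is an illustration under assumptions, not a proof: \({\text {Alt}}\) is taken with the \(\frac{1}{k!}\) normalisation, \(e_a \otimes e_b\) is identified with the coordinate \(an + b\), and the function name `wedge` is hypothetical. Associativity (ii) and the general case are not covered.

```python
import numpy as np
from itertools import combinations


def wedge(A, B):
    """Matrix of Alt o (A (x) B) on Lambda^2 C^n in the basis e_a ^ e_b, a < b."""
    n = A.shape[0]
    pairs = list(combinations(range(n), 2))
    # Columns of U are the antisymmetric tensors e_c (x) e_d - e_d (x) e_c.
    U = np.zeros((n * n, len(pairs)))
    for col, (c, d) in enumerate(pairs):
        U[c * n + d, col], U[d * n + c, col] = 1.0, -1.0
    # Transposition of the two tensor factors.
    swap = np.zeros((n * n, n * n))
    for a in range(n):
        for b in range(n):
            swap[b * n + a, a * n + b] = 1.0
    alt = (np.eye(n * n) - swap) / 2.0          # assumed 1/k! normalisation
    image = alt @ np.kron(A, B) @ U
    rows = [a * n + b for (a, b) in pairs]      # read off coefficients of e_a ^ e_b
    return image[rows, :]


rng = np.random.default_rng(2)
A1, A2, B = (rng.normal(size=(3, 3)) for _ in range(3))

# (i): commutativity of the exterior product of operators.
check_i = np.allclose(wedge(A1, A2), wedge(A2, A1))
# (iv): multiplication by B on the right and on the left.
check_iv_right = np.allclose(wedge(A1 @ B, A2 @ B), wedge(A1, A2) @ wedge(B, B))
check_iv_left = np.allclose(wedge(B @ A1, B @ A2), wedge(B, B) @ wedge(A1, A2))
```

All three flags evaluate to `True` for the random matrices above.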

Proof

Assertions (i) and (ii) follow from Lemma 1 and from the anticommutativity and associativity of the multiplication of Grassmann variables.

(iii) Consider the case \(k = 3\).

where I is the identity operator.

The general case follows by induction.

(iv) By definition, we have

Consider \(({\text {Alt}} \circ B^{\otimes q_j})(v_1 \otimes \ldots \otimes v_{q_j})\), \(v_l \in \mathbb {C}^n\)

Therefore, \({\text {Alt}} \circ B^{\otimes q_j} = B^{\otimes q_j} \circ {\text {Alt}}\), in particular, \(B^{\wedge q_j} = \left. B^{\otimes q_j} \right| _{\Lambda ^{q_j} \mathbb {C}^n}\). Thus,

The proof of the second formula is similar. \(\square \)

1.2 The Harish-Chandra/Itzykson–Zuber formula

For computing the integral over the unitary group, the following Harish-Chandra/Itzykson–Zuber formula is used.

Proposition 3

Let A be a normal \(n \times n\) matrix with distinct eigenvalues \(\{a_j\}_{j = 1}^n\) and \(B = {{\mathrm{diag}}}\{b_j\}_{j = 1}^n\), \(b_j \in \mathbb {R}\). Then

where z is some constant, \(\Delta (A) = \Delta (\{a_j\}_{j = 1}^n)\), \(\Delta \) is defined in (2.1). Moreover, for any symmetric domain \(\Omega \) and any symmetric function f(B) of \(\{b_j\}_{j = 1}^n\)

where \(dB = \prod \nolimits _{j = 1}^{n} db_j\).

For the proof see, e.g., [16, Appendix 5].

Remark 2

Note that (6.3) is also valid if A has equal eigenvalues.

Indeed, if \(a_{j_1} = a_{j_2}\), \(j_1 \ne j_2\), the integrand in r.h.s. of (6.3) changes sign when \(b_{j_1}\) and \(b_{j_2}\) are swapped. Thus, the ratio of the integral and \(a_{j_1} - a_{j_2}\) is well defined.

Afanasiev, I. On the Correlation Functions of the Characteristic Polynomials of the Sparse Hermitian Random Matrices. J Stat Phys 163, 324–356 (2016). https://doi.org/10.1007/s10955-016-1486-z

DOI: https://doi.org/10.1007/s10955-016-1486-z