Abstract

Objectives

Longitudinal data offer many advantages to criminological research yet suffer from attrition, namely in the form of sample selection bias. Attrition may undermine reaching valid inferences by introducing systematic differences between the retained and attrited samples. We explored (1) if attrition biases correlates of recidivism, (2) the magnitude of bias, and (3) how well methods of correction account for such bias.

Methods

Using data from the LoneStar Project, a representative longitudinal sample of reentering men in Texas, we examined correlates of recidivism using official measures of recidivism under four sample conditions: full sample, listwise deleted sample, multiply imputed sample, and two-stage corrected sample. We compare and contrast the results regressing rearrest on a range of covariates derived from a pre-release baseline interview across the four sample conditions.

Results

Attrition bias was present in 44% of variables and null hypothesis significance tests differed for the correlates of recidivism in the full and retained samples. The bias was substantial, altering effect sizes for recidivism by a factor as large as 1.6. Neither the Heckman correction nor multiple imputation adequately corrected for bias. Instead, results from listwise deletion most closely mirrored the results of the full sample with 89% concordance.

Conclusions

It is vital that researchers examine attrition-based selection bias and recognize the implications it has on their data when generating evidence of theoretical, policy, or practical significance. We outline best practices for examining the magnitude of attrition and analyzing longitudinal data affected by sample selection.

Introduction

Panel studies rely on interviewing and re-interviewing the same respondents at multiple points in time. Such research designs offer incredible value to the social sciences, including the field of criminology (Liberman 2008). First, panel studies bolster causal inferences because they assure temporal ordering. Second, they yield more robust conclusions than cross-sectional analyses by isolating the relative and cumulative effects of multiple factors over time. Finally, since panel studies permit the study of dynamic change, they set the stage for the emergence of developmental and life-course criminology. In short, longitudinal research in criminology has not only been used to answer difficult questions of the past, but it is also critically important to advancing key aims of the field, including theory testing, evaluating policies and practices, and improving research methodology (for critiques of longitudinal research with respect to these aims, see Cartwright 2007; Cullen et al. 2019; Hirschi and Gottfredson 1983; Sampson 2010).

The focus of this study is on a core methodological limitation of longitudinal methods, which has implications for etiological and evaluation research alike: attrition. Attrition occurs when respondents drop out permanently from a study or temporarily skip an interview, ultimately introducing missing data. No longitudinal studies are immune to attrition. A loss of respondents could lead to lower statistical power and Type II errors in analyses. More importantly, even a moderate amount of attrition could alter the conclusions of a study by changing the composition or characteristics of the sample (Gustavson et al. 2012; Hansen et al. 1990). This is known as a form of sample selection bias (Campbell and Stanley 1963), which becomes problematic when the source of attrition is non-random, particularly due to variables relevant to outcomes of interest, such as recidivism (Clark et al. 2020). Missing data could have profound implications for research, policy, and practice, making it necessary for researchers to observe, assess, and correct any bias.

In this study, we examine approaches to address sample selection bias, including listwise deletion, multiple imputation, and the Heckman two-step correction.Footnote 1 Although the Heckman method has been used extensively in many disciplines, it maintains a rocky history in criminology (Bushway et al. 2007; Heckman 1979). This may be partially due to the complicated and sometimes problematic application of the Heckman two-step correction (Bushway et al. 2007). In other fields, such as econometrics, the Heckman selection model is widely used “often without questioning its validity” (Yu and Gastwirth 2010, p. 8). We apply this method—along with other popular correction methods—to an area of study that has long commanded the interest of researchers, policymakers, and practitioners: recidivism.

Using data from a longitudinal sample of imprisoned people with a baseline pre-release interview and two post-release interviews, our purpose is threefold. First, we explore if attrition biases the correlates of official records of arrest as a form of recidivism. Second, we examine the magnitude of bias for each correlate. Third, we test how well methods of correction account for sample selection bias. To do so, we combine survey and operational data (which we term “metadata”) from the LoneStar Project to create four conditions of sample selection: (1) a full sample condition, where we have the entire sample of respondents to illustrate what would happen in a perfect world without attrition (N = 791); (2) a retained condition, where we examine the correlates of recidivism with incomplete data, that is, only among the respondents retained in the wave 3 sample, the most common condition since attrition is unavoidable (n = 506); (3) a multiply imputed condition using multiple imputation by chained equations (N = 791); and (4) a corrected condition (N = 791), where we use a Heckman correction to examine statistical corrections for sample selection bias introduced by attrition. By using a measure of official arrest as the outcome variable, we have complete data for all respondents, which allows us to identify the consequences of sample selection bias on the correlates of recidivism.

Attrition and Sample Selection Bias in Longitudinal Research

Not all longitudinal studies are created equal. Confidence in the findings derived from longitudinal studies is undermined when respondents participate in earlier waves of data collection but fail to complete later or final waves (Asendorpf et al. 2014). Attrition tends to compound across waves. Once a respondent misses a wave, they are at a higher risk of missing subsequent waves (Davidov et al. 2006; Lugtig 2014). This is particularly the case for panel studies with repeated measures gleaned from the same set of individuals over two or more specific time points (Cordray and Polk 1983; Ribisl et al. 1996; Young et al. 2007). As the importance of such data grows in criminology, so too do concerns about attrition.

Attrition is “one of the most important sources of nonsampling error in panel surveys,” a problem all longitudinal data collection efforts must reckon with (Gustavson et al. 2012; Lugtig 2014, p. 700). If the presence of attrition is non-random, in that some people have a higher likelihood of dropping out than others, then the potential for bias heightens (Fitzgerald et al. 1998). The sources of attrition may be unavoidable in situations of respondent mortality or refusals, but other sources of bias may be affected by the research design and can be manipulated by researchers (Ribisl et al. 1996; Thornberry et al. 1993). Respondent tracking and retention strategies are commonly used to limit attrition in panel studies, including gathering a contact card, sending postcards, and offering incentives (Bolanos et al. 2012; McLaughlin et al. 2014; Mutti et al. 2014).

The consequences of attrition go beyond the loss of respondents and a corresponding reduction in statistical power. Sample selection bias reflects the fact that those who attrite from the study may have unique characteristics that other respondents do not share as well as reasoning for non-participation (Barry 2005; Claus et al. 2002; David et al. 2013; Fumagalli et al. 2013; Odierna and Schmidt 2009; Ribisl et al. 1996). Consequently, the remaining sample is no longer representative of the original sample, and thus not representative of the population to which inferences could be made (Carkin and Tracy 2015; Crisanti et al. 2014). Random sampling of respondents begins to lose its utility once non-random attrition occurs (Goodman and Blum 1996).

There is much evidence pointing to differences between retained and attrited samples. These include factors such as racial/ethnic minority status (Butler et al. 2013; Magruder et al. 2009; Thornberry et al. 1993), gender (Badawi et al. 1999; Snow et al. 2007; Young et al. 2006), employment status (Claus et al. 2002; Crisanti et al. 2014), educational attainment (Badawi et al. 1999; Lugtig 2014; Thornberry et al. 1993), disease severity and health status (Chatfield et al. 2005; Lugtig 2014; Schmidt et al. 2000; Young et al. 2006), substance use (Crisanti et al. 2014; Snow et al. 1992; Thornberry et al. 1993), and psychological distress (Chang et al. 2009; Claus et al. 2002). If these factors are not taken into account and corrected, the interpretability and credibility of the results may be undermined (Barry 2005; Chatfield et al. 2005), as both internal and external validity are compromised (Berk 1983; Boys et al. 2003; Crisanti et al. 2014). Overall, data that are missing non-randomly, especially when the missingness relates to the outcome of interest, present a skewed picture of the patterns in criminal justice research, an obstacle to yielding a more complete understanding of crime (Wadsworth and Roberts 2008).

Reentering Populations and Study Attrition

Some study populations of concern to criminologists are more difficult to retain than others. Formerly incarcerated people constitute a classic example of a high-risk, hard-to-reach population because of their transient lifestyles and erratic behavior (Crisanti et al. 2014; Curtis 2010; Western 2018). Many formerly incarcerated individuals recidivate because they are ill-equipped to handle the challenges of reentry (Shinkfield and Graffam 2007). Upon leaving prison, parole violations, arrests, and reincarceration make them much more difficult to track (Coen et al. 1996; Kinner 2006).Footnote 2 Moreover, they tend to distrust researchers whom they believe could be working with law enforcement (Eidson et al. 2016; Western et al. 2017). Panel studies of formerly incarcerated people often show high rates of study attrition and nonresponse (Western et al. 2017), even after an in-prison baseline assessment (e.g., Fahmy et al. 2019). As attrition rates in longitudinal panel studies of reentering individuals vary (e.g., Serious and Violent Offender Reentry Initiative (SVORI) or Returning Home Study), so too do their sample sizes, project infrastructure, and resources. Formerly incarcerated people may also be weakly attached to mainstream social institutions which only adds to inaccessibility in standard data collection procedures (Brame and Piquero 2003; Western et al. 2017). Moreover, study participation is likely a low priority for previously incarcerated persons owing to the various post-release stressors. If researchers are more likely to retain certain groups it could bias the results, and more importantly, the implications of the research. Given the difficulty of retaining a high-risk sample over time, it is necessary for social scientists to use methods for exploring and correcting systematic sample selection bias.

Estimation Methods for Correcting Attrition

Researchers have long understood that sample selection bias presents issues in sociological and criminological research (Berk 1983; Stolzenberg and Relles 1990). When the observations that remain are dependent upon the outcome of interest, sample selection may lead to biased inferences to the population and thus materialize into a critical data issue (Berk 1983; Bushway et al. 2007). This issue is ubiquitous in panel studies, yet researchers often fail to evaluate differences between the retained and attrited samples, even though a variety of methods are available to correct for such biases, depending on the study aims. We detail three commonly used methods, all of which we test in our data.Footnote 3

Listwise Deletion

Listwise deletion is the simplest and least computationally intensive way to address missing data; however, there is debate on whether it adequately addresses selection issues. In listwise deletion, cases which contain missing data on variables that are included in the model are dropped from analyses entirely. The benefits of this technique are that it is parsimonious and does not require the manipulation or creation of data the way more complicated methods require (Tabachnick and Fidell 2001). One concern about this technique is that, depending on the magnitude of missing data, sample sizes can decrease significantly and vary across models. Another concern is that if the data are not missing randomly, it culminates in biased results (Tabachnick and Fidell 2001). In this case, the results and implications of the analyses would be limited to the sample included in the models and not generalizable to the broader population.

In our study, listwise deletion would limit our analysis to include the 506 respondents in the LoneStar Project who completed the wave 3 interview. The 285 other respondents who have missing data on wave 3 would be dropped from the model. Our results would be applicable only to individuals who completed wave 3 of the study, or at best, the type of respondent who completes follow-up waves of longitudinal studies. However, this is not important for the purposes of this study because we are not attempting to draw conclusions about predictors of our outcome variable, arrest, but instead trying to determine which technique best approximates the full sample analysis. In theory, listwise deletion does not attempt to deal with issues of selection bias. However, we include it in our comparison to help determine whether more complicated techniques have any added value over the most parsimonious option.
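As a minimal sketch of what listwise deletion does to a sample, the snippet below drops every record with a missing value on any variable in the model. The records and field names (`age`, `rearrest`) are hypothetical stand-ins for illustration and are not the LoneStar Project variables; a missing outcome is represented as `None`.

```python
# Hypothetical records; field names are illustrative, not LoneStar variables.
respondents = [
    {"id": 1, "age": 34, "rearrest": 0},
    {"id": 2, "age": 27, "rearrest": None},  # treated as missing (no wave 3 interview)
    {"id": 3, "age": 41, "rearrest": 1},
    {"id": 4, "age": 52, "rearrest": None},  # treated as missing (no wave 3 interview)
]

def listwise_delete(records, model_vars):
    """Keep only records with observed values on every model variable."""
    return [
        r for r in records
        if all(r.get(var) is not None for var in model_vars)
    ]

retained = listwise_delete(respondents, ["age", "rearrest"])
dropped_count = len(respondents) - len(retained)
```

Nothing is estimated or filled in: the retained list is simply a subset of the original records, which is why the technique is parsimonious but cannot by itself address non-random attrition.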

Multiple Imputation

Imputation strategies vary in utility (Wadsworth and Roberts 2008), but criminologists commonly employ multiple imputation by chained equations (MICE). MICE uses one imputation model for each variable with missing values to generate a series of imputed datasets that are void of missing data and can be used for analysis. MICE is the preferred method for imputation because its algorithm allows each individual variable with missing data its own imputation model. This means that it can impute for different types of variables (i.e., continuous, binary, ordered/unordered categorical, etc.) while remaining within the logical bounds of potential outcomes for each variable (Royston and White 2011). It does this by regressing each individual variable with missing data on all other variables in the model while restricting the estimation to cases with observed values of the missing variable. MICE allows for the specification of variable type to ensure the estimation at this stage is conducted using the most appropriate method according to the variable type. The missing values are then replaced with the imputed values, which are determined using simulations from the predictive distribution that was estimated from the initial regression analysis. This process is repeated for each of the variables with missing data to create a full imputed dataset (Royston and White 2011). The final step includes repeating the entire process numerous times to create multiple imputed datasets; recommendations vary between 5 and 20 cycles (Van Buuren et al. 1999). In turn, estimates for the complete sample are generated by pooling across the imputed datasets.
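The pooling step can be sketched as follows. The text does not state which pooling rule was applied, so the use of Rubin's rules here is an assumption (it is the conventional choice), and the coefficients and squared standard errors are hypothetical values for illustration only.

```python
from statistics import mean, variance

def pool_estimates(estimates, squared_ses):
    """Pool one coefficient across m imputed datasets using Rubin's rules.

    estimates   : the coefficient estimated in each imputed dataset
    squared_ses : the squared standard error from each imputed dataset
    Returns (pooled estimate, total variance).
    """
    m = len(estimates)
    pooled = mean(estimates)         # average of the m point estimates
    within = mean(squared_ses)       # average within-imputation variance
    between = variance(estimates)    # sample variance across imputations
    total = within + (1 + 1 / m) * between
    return pooled, total

# Hypothetical results from five imputed datasets (illustration only).
coefs = [0.42, 0.38, 0.45, 0.40, 0.35]
sq_ses = [0.010, 0.012, 0.011, 0.009, 0.013]
pooled_coef, pooled_var = pool_estimates(coefs, sq_ses)
```

The between-imputation term is what distinguishes multiple from single imputation: it carries the uncertainty about the imputed values into the pooled standard error.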

In our models, we impose missingness on official arrest for our 285 participants who did not complete the wave 3 follow-up survey. In order to avoid losing 285 cases in our analysis because of attrition, as we did in the listwise deleted sample, we can utilize MICE to replace the missing data on our rearrest variable with imputed data. We replaced the missing values of rearrest (x1) in the 285 participants without wave 3 interviews by using the coefficients and regression equation to impute the missing value based on the variables which were observed for those with missing values for rearrest (x1). This process was repeated ten times to ensure stability in the imputed estimates.

When used appropriately (i.e., data are missing completely at random; Allison 2000), multiple imputation can help address selection bias by creating a complete data set, ultimately allowing every participant to be included in analyses. In the case of the LoneStar Project, although the data are missing respondents at wave 2 and/or wave 3, we have full data on all respondents from the in-prison, baseline interviews. Thus, we attempt to contrast multiple imputation techniques against the Heckman two-step correction with the full sample condition.

Heckman Two-Step Correction

Across many disciplines in the social sciences, the Heckman correction pioneered how selection issues were addressed in applied research (Bascle 2008; Certo et al. 2016; LaLonde 1986; Peel 2014; Tucker 2010). In fact, most estimation methods today are, in some form, an extension of Heckman’s original (1976, 1979) two-step approach (Yu and Gastwirth 2010). In criminological research, the use of Heckman’s two-step correction and related modelsFootnote 4 has not grown as much as in economics, accounting, and finance. Bushway et al. (2007) reviewed publications appearing in Criminology from 1986 to 2005 and found 25 studies that used the Heckman correction. We updated and extended their review by looking at the Heckman correction in leading generalist and specialized criminology outlets from 2006 to 2019.Footnote 5 Of the 21 articles (see Appendix 1) that used the Heckman correction, 17 were related to criminal justice case processing (there was no clear pattern for the remaining four articles). Moreover, no papers used Heckman to account for selection bias introduced via attrition, the focus of our paper.

The original conception of the Heckman two-step correction (Heckman 1976) involves estimating a probit model for selection (i.e., Stage 1 selection model), creating an inverse Mills ratio, and using the inverse Mills ratio as a covariate in the model estimating a continuous outcome (i.e., Stage 2 substantive model) to “correct” for sample selection bias. Specifically adapted for full-information maximum likelihood (FIML), this model simultaneously estimates a selection probit model and a substantive logit model for the dependent variable.
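To illustrate the correction term in the classic two-step version, the sketch below computes the inverse Mills ratio, that is, the standard normal density divided by the standard normal cumulative distribution, evaluated at each retained case's Stage 1 linear predictor. The linear predictor values are hypothetical, and the snippet shows only how the term is constructed; it does not reproduce the FIML estimation.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # mean 0, standard deviation 1

def inverse_mills_ratio(z):
    """Return phi(z) / Phi(z) for a selected (retained) case."""
    return _STD_NORMAL.pdf(z) / _STD_NORMAL.cdf(z)

# Hypothetical Stage 1 probit linear predictors for three retained cases.
linear_predictors = [-1.0, 0.0, 1.5]
imr = [inverse_mills_ratio(z) for z in linear_predictors]
```

Cases with a low predicted probability of retention receive a larger ratio, so the Stage 2 covariate carries more weight for respondents who were retained despite being unlikely to be.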

As with any post-data collection correction method, there are pitfalls if not utilized correctly. The Heckman estimator is only useful insofar as its assumptions and conditions are met and the data are appropriate (Bascle 2008; Bushway et al. 2007; Wolfolds and Siegel 2019). In short, the error terms must follow a bivariate normal distribution; the sample must be large; collinearity should be absent; and exclusion restrictions must be powerful and relevant—an issue that has not been suitably considered in past research (Bascle 2008). Otherwise, Heckman’s method is inappropriate. When the above criteria are not met, a simple ordinary least squares (OLS) regression performs more reliably and with less bias (Wolfolds and Siegel 2019). Indeed, pitfalls of the Heckman estimation method are essentially the counterfactuals of the conditions necessary for its “magic,” as Bushway and colleagues put it.

These Heckman-related pitfalls can be categorized in terms of criticisms brought about since the original formation of the method (Heckman 1976). Criticisms include (1) sensitivity to the deviations from the distributional assumptions of the error terms (Little and Rubin 1987; Puhani 2000; Stolzenberg and Relles 1990; Vella 1998); (2) a reliance on untestable assumptions (Duan et al. 1983; Puhani 2000); (3) predictive power and/or efficiency of a maximum likelihood (ML) or OLS estimation compared to the Heckman (Little and Rubin 1987; Puhani 1997, 2000; Stolzenberg and Relles 1990; Vella 1998); (4) failure in the presence of outliers (Yu and Gastwirth 2010); and lastly (5) collinearity in the presence of weak or nonexistent exclusion restrictions (Anderson 2017; Leung and Yu 1996; Little and Rubin 1987; Puhani 2000; Vella 1998). In terms of weak or absent exclusion restrictions, such variables are extremely difficult to find in practice (Leung and Yu 1996; Yu and Gastwirth 2010), but “the most important difference for the performance of the alternative estimators arises from the existence of exclusion restrictions” (Puhani 2000, p. 64), a point that is critical to the current study.

Current Study

We examine the effects of non-random sample selection as a function of attrition on recidivism in longitudinal research in the context of three key research questions:

1. How does attrition bias the correlates of recidivism?

2. What is the magnitude of bias for each correlate?

3. Given the bias within these correlates, how well do methods of correction account for the sampling selection bias introduced by attrition?

We tackle these questions using four sample selection conditions. The first is an “ideal world” where no attrition exists, which we term the full sample condition. Because we have complete data for this condition (i.e., data for all 791 respondents in the analytic sample), we have no attrition and thus no sample selection bias. This condition, and the associated parameters, serve as our benchmark for variables related to recidivism.

The second condition illustrates the “real world” of research where attrition exists, which we term the retained condition. This is the condition researchers most commonly rely upon. For this condition, we limit the sample to only those who were retained in the study after release from prison and reexamine the covariates of recidivism; thus, respondents are listwise deleted. Should the estimates vary in direction, significance, or effect size, it would lend credence to the assertion that a correction is needed.

The third condition, which we term the multiply imputed condition, is used to examine if bias in selection can be corrected using multiple imputation by chained equations. This condition serves as a complete dataset, although that completeness is achieved only via imputation.

The final condition, which we term the corrected condition, is used to explore correction for sample selection bias through the use of a Heckman correction. The corrected condition combines the complete and partial sample by accounting for non-random attrition through the use of robust exclusion restriction variables that we outline below.

It is rare for publicly available longitudinal datasets to have the capacity to examine outcomes based on the four sample conditions we presented above. In an earlier example, LaLonde (1986) set out to accomplish goals similar to our own. His aim was to determine how well econometric techniques replicated the estimates produced in a field experiment. Similar to LaLonde (1986), the unique circumstances of our data allow us to determine how well statistical methods account for selection bias and how they perform in comparison to analysis conducted on the full, unmanipulated sample. As such, this gives us a strong basis to explore the implications of sample selection bias on recidivism and test our ability to correct for such bias.

Methods

Data

We use data from the LoneStar Project, or the Texas Study of Trajectories, Associations, and Reentry. The study consisted of three waves of data collection: baseline (within one week prior to release), wave 2 (approximately one month post-release), and wave 3 (approximately ten months post-release). The sampling frame for the baseline interview included a disproportionate stratified random sample of all men scheduled for release from the largest Texas Department of Criminal Justice (TDCJ) regional release center. Because the primary focus of the LoneStar Project was on prison gangs and reentry, TDCJ-identified gang members were oversampled by a factor of five to support subgroup analyses by race, gang type, and gang status. The LoneStar Project’s final sample of 802 males includes 368 gang and 434 non-gang respondents, so sampling weights are used for all analyses. Our analytic sample consisted of 791 respondents.Footnote 6

The current study relies on baseline (in-prison) variables as predictors of retention and recidivism. The project’s design involved in-person computer-assisted personal interviews (CAPI). Wave 1 data collection began April 2016 and was completed by December 2016. Interviews with respondents in general population housing (95% of the sample) occurred daily in an enclosed, public area of the unit. Interviews with respondents in high custody housing (the remaining 5% of the sample) occurred in a secure visiting room in the administrative segregation wing of a prison (all of whom left prison from the aforementioned release center). Interviews were conducted by trained interviewers using laptop computers and lasted approximately two hours; few people refused to participate (< 5%). Respondents were not compensated for their involvement in the study for baseline interviews. Interview data were linked with administrative records from state agencies, including TDCJ and the Texas Department of Public Safety (DPS). Together, these data paint a detailed picture of self-reported beliefs and behaviors, coupled with official data on criminal justice system contact.

The interview and administrative-linked data are ideal to answer our research questions. First, the LoneStar Project data include unique study metadata, variables, and perceptions of participation that we use as exclusion restrictions (see below). Second, because we have a complete sample and recidivism data for all respondents, we can compare the retained, imputed, and corrected samples to determine the effect of bias on recidivism. Third, the interview data contain a wide range of measures, allowing us to take stock of potential bias across correlates that are not based on official data. Finally, we have two years of post-release measures of arrest, which allows for the exploration of criminal justice system contact over an extensive period of time.

Sample Selection Condition

Retention at wave 3 was used as our sample selection dependent variable. This variable was coded as 1 = “yes” for those who completed a wave 3 interview (n = 506). Otherwise, respondents were coded as 0 = “no” if they did not participate in wave 3.

Exclusion Restrictions

Regardless of the type of Heckman correction used, it is crucial to identify proper exclusion restrictions (Bushway et al. 2007; Certo et al. 2016). Exclusion restrictions are variables associated with the process of selection but not the substantive model of interest. For example, well-populated collateral contact cards are important for study retention (Clark et al. 2020), but there is no theoretical reason to expect that such information is associated with recidivism. Properly specified exclusion restrictions are critical for reducing the potential for collinearity (Bushway et al. 2007). Indeed, failure to include exclusion restrictions or the use of weak exclusion restrictions will inevitably lead to biased and inconsistent estimates (Anderson 2017). Researchers must consider theoretical and substantive justifications when considering which variables to use as exclusion restrictions in order to ensure the selection process has been accurately modeled (Berk 1983; Bushway et al. 2007; Stolzenberg and Relles 1990). When the same predictors are used to model both the selection process and the outcome, it is problematic because of the correlation between the correction term and the included variables and may inflate perceived explanatory power.
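The collinearity concern can be made concrete with the textbook form of the selection model for a continuous outcome. The notation below is illustrative and introduced only for this sketch: $z_i$ holds the selection-equation predictors, $x_i$ the outcome-equation predictors, and the errors $(u_i, \varepsilon_i)$ are assumed bivariate normal with $\operatorname{Var}(u_i) = 1$ and correlation $\rho$.

```latex
\begin{aligned}
s_i^{*} &= z_i'\gamma + u_i, \qquad s_i = \mathbf{1}\,[\,s_i^{*} > 0\,] \\
y_i &= x_i'\beta + \varepsilon_i \qquad \text{(observed only if } s_i = 1\text{)} \\
E[\,y_i \mid x_i, z_i, s_i = 1\,] &= x_i'\beta + \rho\,\sigma_{\varepsilon}\,\lambda(z_i'\gamma),
\qquad \lambda(c) = \frac{\phi(c)}{\Phi(c)}
\end{aligned}
```

An exclusion restriction is a variable that appears in $z_i$ but not in $x_i$. Without one, $\lambda(z_i'\gamma)$ is a function of the same predictors as $x_i'\beta$ and is distinguishable from them only through its nonlinearity, which is the source of the collinearity described above.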

Lennox et al. (2012) demonstrated that most studies fail to adequately report exclusion restrictions. In a review of accounting research, they found that a large number of studies failed to include exclusion restrictions (14 out of 75) or did not report the first stage model (7 out of 75). Moreover, 95% of studies failed to provide proper justification, whether theoretical or empirical, for their chosen restrictions. In a meta-analysis of research on strategy management using the Heckman correction, Wolfolds and Siegel (2019) revealed that fewer than one-third of the 165 papers reviewed claimed the use of a valid exclusion restriction, and using the correction without one may actually worsen rather than correct estimates. Data limitations typically result in researchers including only a single variable as an exclusion criterion. When estimating a Heckman correction, it is vital that at least one predictor in the selection equation is not included in the final estimation equation (Certo et al. 2016; Stevens et al. 2011).

The metadata from the LoneStar Project allow us to incorporate nine variables that are unique to the selection equation and not typically available in other datasets. We draw upon the work of Clark and colleagues (2020), who used data from the LoneStar Project to examine the correlates of retention. They identified a grouping of such correlates (what they termed “preventive measures”) related to retention/attrition yet unrelated to recidivism. We use the following variables as exclusion restrictions: (1) if the respondent received any reminders (sent via text, email, and phone calls) before their interview; (2) the total number of calls to that respondent and their collaterals; (3) whether a concerted retention effort was deployed in an attempt to retain them; (4) how many contacts they provided for themselves and collaterals; (5) whether they completed the wave 2 interview; (6) respondent carelessness when completing the interview; (7) whether or not the interviewer perceived the respondent was being honest in their responses; (8) respondent commitment to study participation; and (9) whether respondents participated in the interview for passive, internal, external, or unclassifiable reasons.Footnote 7

We relied on these exclusion restrictions because previous research has found that they are directly related to study retention, our selection condition (e.g., Clark et al. 2020; Barber et al. 2016; Deeg et al. 2002; Odierna and Bero 2014), but only indirectly related to recidivism, our prediction outcome, through the selection process. For example, prior research has found that many participants join studies for altruistic intentions and that positive inclinations as well as positive experiences with the study team are some of the strongest predictors of retention (Price et al. 2016). The LoneStar Project placed a premium on rapport-building, interview experiences, and study branding (Mitchell et al. 2018). In other words, respondents were invested in study participation. Although there may be reason to believe that there is overlap among those involved with the criminal justice system who also lack interest in study participation post-prison, to the best of our knowledge we have not observed theory or research in the extensive literature on prisoner reentry make a claim for study investment as a predictor of recidivism, as measured by rearrest (Gendreau et al. 1996; Mears and Cochran 2015; Visher and Travis 2003). These nine variables are valid and meet all the criteria necessary for their use, thus lending themselves as viable exclusion restrictions.

Dependent Variable

Recidivism in the form of rearrest after release from prison, as derived from official records, is our outcome. Arrest was dichotomously coded (1 = “yes”; 0 = “no”).Footnote 8 We right-censor the data at December 18, 2018, which represents two years following the conclusion of the baseline data collection. All models control for the number of days post-release through right-censoring.
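One plausible reading of the days post-release control is the number of days between a respondent's release and the censoring date. The sketch below computes that quantity; the function name and the release dates are hypothetical, and only the censoring date comes from the text.

```python
from datetime import date

CENSOR_DATE = date(2018, 12, 18)  # right-censoring date stated in the text

def days_at_risk(release_date, censor_date=CENSOR_DATE):
    """Days between release and the censoring date (never negative)."""
    return max((censor_date - release_date).days, 0)

# Hypothetical release dates, for illustration only.
release_dates = [date(2016, 5, 1), date(2016, 12, 18)]
exposure = [days_at_risk(d) for d in release_dates]
```

Because baseline interviews ran from April to December 2016, respondents released earlier would accumulate more days in the community before censoring, which is why exposure time needs to be held constant across respondents.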

Independent Variables

We organized the correlates of recidivism into four broad categories: personal attributes, criminal justice involvement, prison and reentry experiences, and attitudes. See Table 1 for variable descriptions and response options.

Personal Attributes

Prior research has found that age (Alper et al. 2018), race/ethnicity (Alper et al. 2018; McGovern et al. 2009), mental health issues (Barrett et al. 2014; Skeem et al. 2011), ties to social institutions (Berg and Huebner 2011; Uggen 2000), and days within the community post-release (Alper et al. 2018) are associated with recidivism. As such, we control for any association they may have with arrest rates.

Criminal Justice Involvement

Prior criminogenic behaviors are strongly correlated with future criminal justice involvement (Katsiyannis et al. 2018; Padfield and Maruna 2006). Hence, it was vital to control for prior criminal justice contact (i.e., total incarcerations, prior arrests) and characteristics of the current incarceration (i.e., incarceration length and incarcerating offense).

Prison and Reentry Experiences

Prison experiences and reintegration barriers can influence the likelihood of success upon reentry. Indeed, researchers have found that gang members (Huebner et al. 2007; Pyrooz et al. 2021) and those housed in restrictive housing (Butler et al. 2017; Clark and Duwe 2018; Mears and Bales 2009) may have greater challenges upon reentry. Prison visitation has been linked with decreases in recidivism post-release (Mitchell et al. 2016). Involvement in misconduct or experiences of victimization and violence may negatively affect a person’s ability to successfully reintegrate (Daquin et al. 2016; Hsieh et al. 2018; Lugo et al. 2017; Taylor 2015). Therefore, continuous indicators measuring involvement in violent misconduct or victimization and exposure to violence in the six months prior to release are used. Given the influence that peers can have on the reentry process (Taxman 2017), a mean scale of delinquent peers was created.

Having a plan for release or support during this transition can help to foster reintegration between prison and the free world (Clark 2016; Costanza et al. 2015; Wong et al. 2018). As such, three dichotomous controls were used to represent various supports and control forces designed to increase successful reintegration, including parole status, a reentry plan, and residing in a halfway house.

Attitudes

Respondent attitudes and beliefs can influence recidivism. For example, incarcerated people who were treated in a procedurally just way may be more likely to comply with the law based on their prior interactions (Beijersbergen et al. 2016) and low self-control has also been linked to criminal behavior post-release (Malouf et al. 2014). Hence, we used measures of procedural justice and low self-control. Stress upon reentry may influence recidivism rates (Spohr et al. 2016; Thoits 1995; Wimberly and Engstrom 2018) and social support has been found to aid in the reentry process and potentially decrease involvement in antisocial behavior (Berg and Huebner 2011). Consequently, a stress scale and family social support scale were created. Also, an indicator of social capital was created and included four items measuring readiness for change, three items measuring locus of control, and two items measuring self-efficacy.

Analytic Strategy

Multiple analyses were used to examine the extent of selection bias and determine the degree to which the models can account for such bias. To do this we specify four sampling conditions: (1) a complete sample, (2) a retained sample, (3) an imputed sample, and (4) a Heckman corrected sample.

We used two methods to evaluate our first research question, how attrition biases correlates of recidivism. First, we determined if there was a statistical difference between the characteristics of the full and retained samples using independent samples t-tests (LaLonde 1986). If no bias existed, we would expect the predictors of recidivism in the retained sample to be similar to the predictors in the full sample.
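The comparison of group means can be sketched in a few lines of Python. This is an illustrative, pooled-variance version of an independent samples t-test together with Cohen's d; the arrest counts below are invented, and the choice of a pooled (rather than Welch) variance is an assumption, since the text does not specify it.

```python
from math import sqrt
from statistics import mean, variance


def pooled_sd(a, b):
    """Pooled standard deviation of two independent samples."""
    na, nb = len(a), len(b)
    return sqrt(((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2))


def cohens_d(a, b):
    """Standardized mean difference between groups a and b."""
    return (mean(a) - mean(b)) / pooled_sd(a, b)


def t_statistic(a, b):
    """Pooled-variance t statistic for the difference in means."""
    na, nb = len(a), len(b)
    return (mean(a) - mean(b)) / (pooled_sd(a, b) * sqrt(1 / na + 1 / nb))


# Hypothetical arrest counts for retained and attrited respondents.
retained = [0, 0, 1, 0, 2, 1, 0, 0]
attrited = [1, 0, 2, 1, 3, 0]

d = cohens_d(retained, attrited)
t = t_statistic(retained, attrited)
```

The t statistic would be compared against a t distribution with `len(retained) + len(attrited) - 2` degrees of freedom; a negative value here means the retained group has the lower mean.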

Second, we estimated a Two-Part Model (TPM) to examine bias. A TPM uses a probit model to estimate selection (i.e., retention) and an ordinary least squares regression to estimate the outcome of interest (i.e., rearrest count, see footnote 8). This type of model provides estimates of recidivism conditional on selection into the retained subsample of the population. In the absence of selection or adequate exclusion restrictions, an uncorrected TPM is sufficient for modeling an outcome using a specific subsample of a population (Bushway et al. 2007; Duan et al. 1983). However, if the TPM is biased and the point estimate is wrong, efficiency is no longer relevant because the TPM may produce misleadingly small standard errors. Since it is not possible to test the independence of error terms between the selection and substantive models, Bushway et al. (2007) suggested an alternative approach. This involves comparing the results of the TPM to the results of other models which attempt to correct for selection bias to determine how much of an influence selection may have on model estimates. If the estimates do not vary from those of the TPM, selection is not an issue. Thus, we compared the results from a TPM to the results of models using Heckman’s two-step model and variations existed (see Appendix 3).

Since selection bias was determined to be an issue, we proceeded with our second research question: how much is each correlate affected by attrition bias? We used Stolzenberg and Relles’ (1997) modeling technique, which, once combined with the coefficients from the TPM, demonstrates the amount of bias that is present within each covariate. In short, Stolzenberg and Relles’ (1997) method offers an intuitive approach to understanding the severity of sample selection bias. Their technique allows researchers to investigate the level of selection bias in coefficients and then to bound the estimates. This is an important first step to understanding the size and underlying reasons for the selection problem in order to better grapple with the various approaches to address it (Bushway et al. 2007; Stolzenberg and Relles 1997). Bushway et al. (2007) note that this methodology is often underused, despite its ability to determine if a Heckman correction is necessary, and their hope was to reacquaint criminologists with this method for assessing selection bias prior to applying correction methods. To do so, Stolzenberg and Relles’ (1997) equation is used to estimate the predicted bias for each independent variable, represented by \(\aleph_{{\beta_{1} }}\), which depends on \(\rho_{\varepsilon \delta }\), the unknown correlation between the selection and substantive equation error terms. The independent variable of interest is represented by x1 and all other variables in the equation are identified as x2 … xp. Z represents the covariates of the selection equation and \(\hat{Z}\) is the predicted values from the selection equation.

To interpret bias estimates derived from Stolzenberg and Relles’ (1997) technique, the predicted bias is divided by the sampling error for each covariate, represented by \(\aleph_{{\beta_{1} }} /S_{{\beta_{1} }}\). Then \(\aleph_{{\beta_{1} }} /S_{{\beta_{1} }}\) is multiplied by the standard error for each covariate from a two-part regression model. The final estimate demonstrates the predicted bias in the dependent variable (i.e., arrest count) for each covariate if bias is not accounted for within the models.
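The rescaling step described above can be written compactly. The expression below is only a restatement of the verbal description; the symbols \(\widehat{B}_{x_{1}}\) (predicted bias in the arrest count for covariate x1) and \(SE^{TPM}_{\beta_{1}}\) (the standard error of that covariate in the two-part model) are labels introduced here for illustration and do not appear in the original notation.

```latex
% Predicted bias in the outcome for covariate x_1:
% the standardized bias ratio, rescaled by the TPM standard error.
\[
\widehat{B}_{x_{1}}
  \;=\;
  \frac{\aleph_{\beta_{1}}}{S_{\beta_{1}}}
  \times
  SE^{TPM}_{\beta_{1}}
\]
```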

The third research question examined how well each method of correction could account for sample selection bias as a result of attrition. To do so, Linear Probability Models (LPMs) were estimated for the full, retained, imputed, and corrected samples. Results from these models were compared for consistency of coefficients, and equality-of-coefficients tests were conducted. LPMs allow for simple interpretation and coefficient comparison by modeling the probability of a dichotomous outcome as a linear function of covariates (Breen et al. 2018; Greene 2011; see footnote 9).

For the imputed sample, a dichotomous variable for rearrest by wave 3 was generated, which was nearly identical to that used in the full sample model. The only difference was that this variable was coded as missing for those who did not complete wave 3. We used multiple imputation with chained equations (MICE) to impute data for the cases missing on wave 3 rearrest and ran an identical LPM to the full sample using the imputed datasets. In a sense, this simulated a scenario where we had missing data due to attrition that would allow us to compare how analyses with multiple imputation compared to those using the full sample data.
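Estimates from multiply imputed datasets are conventionally combined with Rubin's rules. The text does not state how the imputed LPM results were pooled, so the following Python sketch is an assumption about that step rather than a description of the authors' procedure; the coefficient values and standard errors are invented.

```python
from math import sqrt
from statistics import mean, variance


def pool_rubin(estimates, std_errors):
    """Combine one coefficient across m imputed datasets using Rubin's rules.

    Returns the pooled point estimate and its pooled standard error.
    """
    m = len(estimates)
    if m < 2 or m != len(std_errors):
        raise ValueError("need matching estimates and standard errors from at least 2 imputations")
    pooled_estimate = mean(estimates)
    within = mean([se ** 2 for se in std_errors])  # average sampling variance
    between = variance(estimates)                   # variance across imputations
    total = within + (1 + 1 / m) * between
    return pooled_estimate, sqrt(total)


# Hypothetical LPM coefficient for one covariate across five imputed datasets.
coefs = [0.10, 0.12, 0.08, 0.11, 0.09]
ses = [0.04, 0.04, 0.04, 0.04, 0.04]

pooled_b, pooled_se = pool_rubin(coefs, ses)
```

The pooled standard error is larger than any single-imputation standard error because the between-imputation variance carries the added uncertainty about the missing rearrest values.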

An LPM for a Heckman simultaneous full-information maximum likelihood (FIML) model was estimated for the dichotomous dependent variable, arrest (see footnote 10). The selection model included a probit regression for retention with controls and metadata correlates predictive of retention but not arrest (Van de Ven and Van Praag 1981). Then, the substantive model involved estimating a logit regression of the dependent variable while accounting for the independent variables. Two statistics are of particular importance: the Wald’s test of independent equations and rho. The Wald’s test of independent equations determines if the equations are conditional on retention. If it is significant, the null hypothesis is rejected, and we would conclude that the equations are not independent and selection bias from attrition is a problem. The rho estimate is a correlation that quantifies the dependence between the outcome and selection models. If it is equal to zero, the selection and prediction models are independent and can be analyzed separately, suggesting selection is not an issue. Conversely, if the rho estimate is not equal to zero then the use of a Heckman model is justified.
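For readers less familiar with the model, the role of rho can be seen in the textbook form of the Heckman selection model with a continuous outcome. The block below is a generic sketch under bivariate normal errors, not the exact specification estimated here; \(s_i\), \(\gamma\), \(\beta\), and \(\sigma_{\varepsilon}\) are notation introduced for illustration, while \(Z\), \(\varepsilon\), \(\delta\), and \(\rho_{\varepsilon \delta}\) follow the usage in the text.

```latex
% Selection (retention) equation: respondent i is retained when s_i = 1.
\[
s_i^{*} = Z_i \gamma + \delta_i, \qquad s_i = \mathbf{1}\,[\, s_i^{*} > 0 \,]
\]
% Substantive (outcome) equation, observed only for retained respondents.
\[
y_i = x_i \beta + \varepsilon_i, \qquad
\operatorname{corr}(\varepsilon_i, \delta_i) = \rho_{\varepsilon \delta}
\]
% Conditional mean of the outcome in the retained sample,
% with the inverse Mills ratio \lambda as the correction term.
\[
E\,[\, y_i \mid s_i = 1 \,] = x_i \beta
  + \rho_{\varepsilon \delta}\, \sigma_{\varepsilon}\, \lambda(Z_i \gamma),
\qquad
\lambda(u) = \frac{\phi(u)}{\Phi(u)}
\]
```

When \(\rho_{\varepsilon \delta} = 0\), the correction term vanishes and the outcome equation can be estimated on the retained sample alone, which is why rho and the Wald test of independent equations are the diagnostics of interest.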

Multiple concerns exist when estimating a Heckman correction. Strong exclusion restrictions are needed, but it is also important to examine the distribution of the error terms, the presence of multicollinearity, and concerns about endogeneity. The variance inflation factors (VIFs) were calculated by estimating a probit model for selection, creating an inverse Mills ratio, and estimating a regression to examine the VIFs. No multicollinearity issues existed; the average VIF was 1.37 (range: 1.05–1.99), which falls well below levels of concern (Field 2009; Menard 1995). Moreover, the error terms were normally distributed and endogeneity was examined using endogenous treatment effects (Cattaneo 2010; Cerulli 2014; Wooldridge 2010) and the Hausman Test (Davidson and MacKinnon 1993). The results from the treatment effects indicated that the average probability of arrest is 0.25 when no one is retained and is 0.04 higher when respondents are retained. This effect is not statistically different from zero (p = 0.366), suggesting that retention is independent of arrest outcomes. Similar results are found for the Hausman test (p = 0.160), indicating that retention is exogenous to arrest, and issues of endogeneity are limited.

Results

Descriptive and Predictive Differences Based on Sample Selection Conditions

Descriptive statistics and independent samples t-tests partitioned by the full, retained, and attrition samples are presented in Table 2. It is evident that there are differences between the respective samples. Arrest differs between those who were retained and those who were not by 13 percentage points (retained = 35 percent; attrited = 48 percent). The arrest count also differed. Whereas the retained group averaged 0.64 arrests, there were 0.82 arrests on average among the attrition group (Cohen’s d = 0.139). Differences between the groups extended from the dependent to the independent variables, including age, incarceration length, prison gang membership, delinquent peers, and having a reentry plan. All of these differences were statistically significant at p ≤ 0.05.

The results from the t-tests demonstrate that correlates of arrest vary between the full and retained sample, suggesting that attrition may bias results when not properly accounted for (see footnote 11). However, the substantive implications of those biases for recidivism are unclear. In other words, how much do these biases affect the magnitude of the correlates of recidivism?

The Magnitude of Sample Selection via Attrition on Correlates of Recidivism

Table 3 provides the results from the Stolzenberg and Relles (1997) technique to assess bias for all correlates of arrest. Column \(\aleph_{\beta }\) represents the estimate of selection bias, whereas the column labeled \(\aleph_{{\beta_{1} }} /S_{{\beta_{1} }}\) presents the selection bias divided by the sampling error of the covariate. Although the aforementioned columns are needed for computation, the third column is most important for interpretation because it illustrates the predicted bias in arrests for covariates. For illustrative purposes, an arrest statistic of 0.45 would indicate a one-unit increase in a given coefficient biasing the effect by 0.45 arrests. When modeling bias, the correlation between the error terms in the selection and substantive equations is unknown. Consistent with Bushway et al. (2007), we allow \(\rho_{\varepsilon \delta }\), the correlation of error terms, to vary between 0.25 and 1; when the correlation increases, so too does the amount of selection bias.

We use a threshold of 0.35 for bias because it represents a ±50% change in arrests (M = 0.70). The effects of selection bias for 19 of the 28 correlates were modest (that is, less than a 0.35 change in arrests), even when the error terms were perfectly correlated. The largest bias was observed for restrictive housing, which ranged from −0.06 with a correlation of 0.25 to −0.22 when the error terms are perfectly correlated. Once combined with the standard errors from the uncorrected TPM (see Appendix 3), the effect size for restrictive housing would be biased by −0.29 to −1.14 arrests. Other large effects were seen for prison gang membership (0.85 arrests), misconduct (0.73), pre-prison employment (0.57), and incarcerated for an “other” offense (0.53), when error terms were perfectly correlated. The implications of bias were smaller, albeit important, for “other” race (0.43) or Black (−0.39), incarcerated for a property offense (0.44), receiving prison visits (0.40), and having a release plan (−0.34). The results from these analyses demonstrate that there is a non-trivial amount of selection bias within the correlates of arrest. Not accounting for this could result in biased regression estimates and inaccurate implications, which is the focus of our second research question.
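The threshold rule can be illustrated with the values reported in the paragraph above for perfectly correlated error terms. In this Python sketch the dictionary keys are shorthand labels and the function name is hypothetical; the bias values, the mean of 0.70, and the 50% rule come from the text.

```python
# Mean arrest count and the 50% rule stated in the text give the 0.35 threshold.
MEAN_ARRESTS = 0.70
THRESHOLD = 0.5 * MEAN_ARRESTS

# Predicted bias in arrests when the error terms are perfectly correlated,
# as reported in the text (labels shortened for illustration).
predicted_bias = {
    "restrictive housing": -1.14,
    "prison gang membership": 0.85,
    "misconduct": 0.73,
    "pre-prison employment": 0.57,
    "other incarcerating offense": 0.53,
    "property incarcerating offense": 0.44,
    "other race": 0.43,
    "prison visits": 0.40,
    "Black": -0.39,
    "release plan": -0.34,
}


def flag_large_bias(bias_by_covariate, threshold=THRESHOLD):
    """Return covariates whose absolute predicted bias meets the threshold, largest first."""
    flagged = [name for name, bias in bias_by_covariate.items() if abs(bias) >= threshold]
    return sorted(flagged, key=lambda name: -abs(bias_by_covariate[name]))


flagged = flag_large_bias(predicted_bias)
```

Applied to these values, the rule flags every listed covariate except the release plan, whose predicted bias of −0.34 falls just short of the threshold.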

Comparisons of Corrections for Sample Selection Bias

If a technique was able to account for all bias within a model, the corrected model results would mirror those of the full sample. This is not the case for any of the correction techniques, although some models performed better than others.

We start by examining the consistency of statistically significant coefficients between sample conditions and the complete sample condition (see Table 4). The listwise deleted model of retained respondents and the multiply imputed model had four effects that were consistently significant (i.e., age, incarcerating property offense, incarcerating drug offense, and delinquent peers) with the full sample condition. Both the listwise deleted and the imputed models had two effects that were new compared to the full model (i.e., total incarcerations and parole) and prior arrest was no longer significant in either model. In addition, the imputed model predicted one additional significant effect for misconduct.

Before comparing the Heckman model, it is important to note that according to the Wald’s test of independence, Heckman equations are conditional on retention; therefore, it is appropriate to control for selection bias within the model (Wald = 7.37, p < 0.05). The rho statistic (0.42) also confirms the use of a Heckman correction, suggesting that the selection and substantive equations are not independent of one another. Although the Heckman correction appears to statistically account for bias and is necessary due to the dependence between the selection and substantive equations, the substantive effects of this correction tell a different story. Three effects (i.e., age, property incarcerating offense, and drug incarcerating offense) were consistently significant between the Heckman and the full models, whereas other race, total incarcerations, and parole were newly significant in the corrected model. Also, incarceration length and delinquent peers were no longer significant.

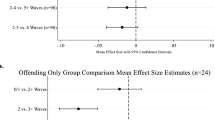

To further highlight the agreement between the full, retained, and corrected samples, we present concordance statistics at the bottom of Table 4 (see footnote 12). Between the full and corrected samples, 82% of the findings were consistent across models, the lowest of all estimates. In fact, the agreement between the full and retained sample was 7 percentage points higher at 89%, whereas the agreement between the full and the imputed sample was slightly lower at 85%. These estimates suggest that out of all corrections, listwise deletion is producing results that are most similar to the full sample.

Although the consistency of significant correlates varies across models, it is important to examine if those variations are substantively significant. To do so, we present the equality of coefficient comparisons across all models in Table 5. When comparing the coefficients between the full, listwise, imputed, and Heckman models, none of the coefficients were significantly different from one another. These findings suggest that all three correction samples approximate the full sample closely (see footnote 13).
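The equality-of-coefficients comparison in the style of Paternoster et al. (1998) is a z-test on the difference between two coefficients. The Python sketch below uses invented coefficients and standard errors; note that the test assumes independent samples, an assumption the notes acknowledge is not met by these overlapping samples.

```python
from math import erfc, sqrt


def coefficient_z(b1, se1, b2, se2):
    """z-test for the difference between two regression coefficients.

    Returns the z statistic and its two-sided p-value under a standard normal.
    """
    z = (b1 - b2) / sqrt(se1 ** 2 + se2 ** 2)
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical coefficients for one covariate in the full and retained models.
z, p = coefficient_z(b1=0.10, se1=0.03, b2=0.06, se2=0.04)
```

A p-value above the chosen alpha level, as in this invented case, would be read as no detectable difference between the two coefficients.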

Discussion

There are many data sources available to criminologists to examine the etiology of criminal behavior and evaluate responses to crime. Longitudinal research design is one that has proven to be beneficial to the field when seeking to understand leading issues in crime and justice. Yet, attrition could undermine the utility of such data, making it critical to determine the extent to which it undermines conclusions and whether there are viable solutions. In this study we used multiple techniques to explore and correct for potential selection bias, which leads to three main takeaway points.

First, attrition-induced selection bias exists within longitudinal data and produces bias in the correlates of recidivism. Our study design allowed us to examine the bias between the full and retained sample, which was noteworthy given we had complete data on arrests for all respondents. Our findings demonstrated that the retained sample was systematically different from the full sample. Selection bias was observed in bivariate associations and, sometimes, the bias would change the outcome variable by a factor as large as 1.6. The means between the retained and attrited sample were statistically different for six correlates (see Table 2). The error produced by not accounting for bias can be substantial, as demonstrated by the Stolzenberg and Relles (1997) technique. In some instances, selection bias did not affect the correlate, but for 36% of the correlates, bias could increase or decrease the rearrest count by 43% or more. Bias as a result of attrition is problematic and would go unrecognized if researchers fail to explore the implications of bias within their data. Though our findings rely on LoneStar Project data, it is likely that selection bias alters the conclusions reached about correlates covering a wide range of outcomes across longitudinal studies that experience attrition across waves.

Second, correcting for selection bias may be simpler than previously thought. Listwise deletion proved to be the most accurate and useful means of correcting for attrition. Correspondingly, Brame and Paternoster (2003) note that the most typical response for dealing with missing data in criminological literature is listwise deletion. Indeed, we observed 89% concordance in statistical significance for the coefficients across the full and listwise sample models and perfect concordance in the equality of coefficients. Although the imputed analysis fared better than the Heckman analysis in terms of concordance, imputed data are problematic because the chained equations are based on the cases which have partial data for that collection. Also, imputation does not account for those cases who may have missed an entire wave of data collection (Allison 2002). Therefore, imputation to address attrition would not be ideal, even had it produced results equivalent to the full sample.

The Heckman correction produced results that were less consistent with the full sample than did the retained sample. Although no coefficients were significantly different from one another, we observed the lowest levels of agreement across models in the Heckman correction model. Disparities in levels of agreement are problematic given most researchers typically interpret the findings based on statistically significant coefficients, of which only 82% matched between the two models. In other words, the Heckman correction performed worse than simply using the retained model, which is in line with Winship and Mare’s (1992) contention that the Heckman correction is not a panacea. Instead, this modeling technique should be used as a tool to examine if selection bias may be present, but it does not necessarily replicate the results that would be found if bias did not exist.

Our final takeaway highlights the role researchers play in advancing the utility of longitudinal research. Our results demonstrated that it is vital for researchers to more carefully consider attrition-induced selection bias when working with longitudinal data. Even basic sensitivity analyses can go a long way in critically examining the assumptions made by incomplete data (Brame and Paternoster 2003). Our review of studies in recent criminological literature using the Heckman correction illustrated that rarely, if ever, is the Heckman correction used to study recidivism or reentry experiences (see Appendix 1). Instead, most of the studies relying on the correction use court processing outcomes. Selection bias introduced through attrition should be explored and corrected (Wolfolds and Siegel 2019). As illustrated through this study, statistical methods exist that adequately determine the presence and magnitude of bias (i.e., Stolzenberg and Relles estimate) and these methods can lead to a better understanding of how bias via attrition may influence their covariates. Because researchers and practitioners rely heavily on longitudinal research for policy and practical implications, our hope is that researchers use these findings to establish a new standard of practice in longitudinal research that examines and accounts for attrition bias.

The steps researchers take moving forward will depend on the adequacy of exclusion restrictions. A Heckman correction model can only be used to confirm the presence of selection bias if the data allow for exclusion restrictions. Because of this, we highlight the importance of collecting metadata during longitudinal data collections so that these variables are available for analysis, should a correction be necessary (Brame and Paternoster 2003). In situations where exclusion restrictions are available the steps that should be taken are: (1) compare t-tests between correlates of the full and retained sample; (2) run a two-part model followed by Stolzenberg and Relles (1997) to determine the covariate-specific magnitude of bias (see footnote 14); (3) report results from models estimated on the retained sample with intentional transparency about which variables may or may not be accurately estimated based on the results of the Stolzenberg and Relles models; (4) compare the equality of coefficients; and (5) estimate a Heckman correction model, noting the bias estimates (e.g., rho, Wald’s statistic, or Mills ratio) and any variation from the retained model. We recommend proceeding in this manner even though the Heckman correction was inadequate. Transparency is needed to explore potential bias, the magnitude of such bias, and how it affects correlates using multiple methods (Wolfolds and Siegel 2019). Furthermore, exploring the utility of the Heckman correction using different data sets will increase the validity of our findings and increase our understanding of when and where the method may or may not be necessary.

In situations where exclusion restrictions are not available, researchers should still (1) compare t-tests between correlates of the full and retained sample; (2) report results from models estimated on the retained sample with transparency about which variables may or may not be accurately estimated based on the results of the t-tests; and (3) compare coefficients across models. Although t-tests and coefficient comparisons will demonstrate bias in each covariate independently rather than the set of covariates as a whole, this will allow researchers to identify potential variation enabling them to determine how seriously their results may be biased and make recommendations for policy and practice accordingly.

In closing, this research highlighted the importance of examining selection bias as a result of attrition in longitudinal studies. The results confirm that attrition biases covariates of recidivism outcomes and in some instances those biases are large in magnitude. The Heckman correction is no universal remedy. Paradoxically, listwise deletion produced the most consistent findings with the full sample. Indeed, in many cases, “more complicated methods [to examine missing data issues] would not be warranted” (Brame and Paternoster 2003, p. 75). Selection bias will continue to plague longitudinal research if investigators do not capitalize on the tools to examine the implications of attrition. Correcting for such bias is not only applicable to research on sentencing and court outcomes. Exploring and reporting the potential effects selection bias can have on results will increase transparency and certainty of research conclusions. We believe these conclusions will empower researchers to examine how their longitudinal data may be biased when retention rates are less than ideal—which is far too often the case.

Notes

Other approaches include Manski bounds, Cosslett’s selection model, Newey’s series estimator, Powell’s two-step semiparametric estimator, Robinson’s estimator, along with other statistical methods such as xtARGLS, support vector regression, cluster-based estimating, and kernel mean matching (Winship and Mare 1992). Many of these models with limited dependent variables are estimated using LIMDEP’s statistical software package.

There are circumstances, however, in which reincarceration may actually make someone easier to locate and study as they are in custody (Fahmy et al. 2019). But, overall, it is more difficult to make inferences about this group than those in the general population.

In some cases, researchers rely on propensity score matching (PSM) to correct for bias in their samples (Dehejia and Wahba 2002), though this method is not relevant here and is beyond the scope of the paper. PSM matches subjects based on relevant variables and should reduce the potential bias due to confounding variables (Rosenbaum and Rubin 1983). Although some researchers suggest that PSM holds promise over a listwise deleted sample (Dehejia and Wahba 1998; Lennox et al. 2012), the technique requires a strict set of assumptions that are difficult to meet in many cases (Dehejia 2005), it may not be appropriately estimated, and does not provide a universal correction for selection (Dehejia 2005; Smith and Todd 2005). For instance, PSM’s conditional independence assumption is predicated on the idea that assignment in the treatment group (e.g., retained versus attrited) is based on relevant observed characteristics (Campbell et al. 2020; Tucker 2010). Because of this assumption, however, PSM cannot account for the hidden bias created by unobserved characteristics (Tucker 2010; Wolfolds and Siegel 2019). When the goal is to control for endogeneity that arises from unobservable characteristics (such as in our case), the Heckman selection model is superior (Lennox et al. 2012).

Although the Heckman two-step correction can be completed in two stages, the preferred method involves a Full Information Maximum Likelihood (FIML) model which estimates the equations simultaneously to reduce model error.

We used the following search terms in a Boolean fashion: Heckman AND (“two-step” OR “two-stage” OR “Two-step correction” OR “Two-stage correction” OR “two step” OR “ two stage” OR “Two step correction” OR “Two stage correction” OR “selection” OR “correction” OR “Mills ratio” OR “Mill's ratio” OR “rho”) NOT "two-stage least squares" and searched the following journals: Criminology, Criminal Justice and Behavior, Journal of Developmental and Life-Course Criminology, Journal of Experimental Criminology, Justice Quarterly, Journal of Quantitative Criminology, and Journal of Research in Crime and Delinquency. The initial search resulted in 67 articles. After careful review of the articles for relevance and the use of a Heckman correction, 46 articles were removed, resulting in a final sample of 21 articles.

Although we understand that Little’s (1988) Missing Completely at Random (MCAR) test does not consider unobservable data and cannot determine whether missing data are truly MCAR, we ran the test to help justify our decision to listwise delete missing data. The test statistic was non-significant (p = 1.00), which provides support for our decision. Additionally, only 1.37% of cells were missing, which gives us confidence that the missing data were sparse enough to utilize listwise deletion. For variables that were missing more than one response, regressions were estimated with a dummy variable adjustment. Coefficients were compared between the mean/mode replaced models and the listwise deleted models and no statistically significant differences existed.

In response to a reviewer’s comment, we have run additional analyses and created a table explicating the use of our exclusion restrictions from the LoneStar Project’s metadata. We closely examined the findings of linear probability models comparing our exclusion restrictions’ ability to predict wave 3 retention versus rearrest from the Clark et al. (2020) paper and are confident that our exclusion restrictions are appropriately justified (see Appendix 2).

We also created a continuous measure, arrest count, representing the number of times a respondent was arrested after release from prison. This estimate was necessary for one modeling strategy—the TPM.

Although probit models are statistically available for the analysis of a binary outcome, in order for us to compare coefficients across models, as suggested by a reviewer, LPMs were the most appropriate.

Consistent with traditional Heckman models, we attempted to use a continuous measure of arrest. Due to the overdispersion of zeros indicating no arrests in our data, this measure was not normally distributed. A heckpoisson command exists in Stata, but our data did not meet Poisson distribution assumptions. Given the challenges with normality, a heckprobit was also assessed in our analyses; however, we ultimately decided to run a FIML LPM Heckman model with a binary outcome in order to compare coefficients across models. Therefore, we estimate a FIML LPM Heckman in order to compare equality of coefficients as well as a heckprobit to maintain the integrity of the Heckman correction using a binary outcome. As demonstrated by Tables 4 and 5, statistically significant coefficients did not vary between Heckman models.

It is possible that this variation is due to the loss of statistical power between the full and retained samples. It becomes more difficult to detect statistically significant differences when a sample shrinks from 791 to 506 people.
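To give a rough sense of the size of this power loss: under the simplifying assumption that standard errors scale with 1/sqrt(n) (that is, the same residual variance and covariate spread in both samples, which is an assumption made here for illustration rather than a result of the study), the drop from 791 to 506 respondents inflates standard errors by about a quarter. The helper name `se_inflation` is hypothetical.

```python
import math


def se_inflation(n_full, n_retained):
    """Approximate ratio of retained-sample to full-sample standard errors,
    assuming standard errors are proportional to 1 / sqrt(n)."""
    return math.sqrt(n_full / n_retained)


ratio = se_inflation(791, 506)
print(f"Standard errors are roughly {ratio:.2f} times larger in the retained sample")
```

Under this approximation, a coefficient that sits just above the significance threshold in the full sample could fall below it in the retained sample even if its point estimate were unchanged.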

These estimates were calculated in two steps. First, for each variable, we compared the statistical significance of its coefficient in the two models. If both coefficients were significant or both were non-significant, the variable was coded as 2 (i.e., “agreement”). If one coefficient was significant and the other was non-significant, it was coded as 0 (i.e., “disagreement”). This step was conducted at the variable level, so each variable within the model had its own agreement score. Second, we calculated the percent agreement between the models by summing the agreement scores across variables and dividing by the total number of coefficients across the two models. That equation was \(\text{Model Agreement}=\frac{\text{Sum of Agreement}}{2\times \text{Number of Variables}}\times 100\).
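The agreement calculation in this note can be sketched in a few lines. The example below is a minimal illustration, assuming significance is judged from p-values at a conventional 0.05 threshold (the note does not state the threshold); the function name `model_agreement` and the p-values are hypothetical and are not the study's estimates.

```python
def model_agreement(p_values_a, p_values_b, alpha=0.05):
    """Percent agreement in statistical significance between two models.

    Each variable scores 2 when both coefficients are significant or both are
    non-significant, and 0 otherwise. The summed scores are divided by
    2 x number of variables and multiplied by 100.
    """
    if len(p_values_a) != len(p_values_b):
        raise ValueError("Both models must contain the same variables")
    if not p_values_a:
        raise ValueError("At least one variable is required")

    total_score = 0
    for p_a, p_b in zip(p_values_a, p_values_b):
        same_decision = (p_a < alpha) == (p_b < alpha)
        total_score += 2 if same_decision else 0

    return total_score / (2 * len(p_values_a)) * 100


# Hypothetical p-values for five variables in two models.
full_sample = [0.01, 0.20, 0.03, 0.60, 0.04]
retained_sample = [0.02, 0.35, 0.08, 0.70, 0.01]

agreement = model_agreement(full_sample, retained_sample)
print(f"Model agreement: {agreement:.0f}%")
```

In this toy example the two models disagree only on the third variable, so four of the five variables agree.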

We are aware that coefficient comparison tests, such as that of Paternoster et al. (1998), are designed for and assume independent samples, which is not the case for our data. However, this was the most viable way of comparing coefficients across models because we were not able to use seemingly unrelated estimation (SUR). SUR was not feasible for three reasons: the equations must be balanced in their number of observations; the sureg command in Stata does not allow conditional statements (such as weights) or the use of the same dependent variable; and SUR models the parameters of all equations simultaneously, leaving no way of fitting different model types. Because of these limitations, the findings should be interpreted with caution.
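For readers unfamiliar with the test, the coefficient comparison commonly attributed to Paternoster et al. (1998) divides the difference between two coefficients by the square root of the sum of their squared standard errors. The sketch below illustrates that formula; it is not the authors' code, the function name `coefficient_difference_z` is hypothetical, the input values are invented, and the formula assumes independent samples, which is the limitation this note cautions about.

```python
import math


def coefficient_difference_z(b1, se1, b2, se2):
    """z-test for the equality of two regression coefficients,
    assuming the two estimates come from independent samples."""
    z = (b1 - b2) / math.sqrt(se1 ** 2 + se2 ** 2)
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical coefficients and standard errors from two models.
z_stat, p_val = coefficient_difference_z(b1=0.10, se1=0.03, b2=0.04, se2=0.04)
print(f"z = {z_stat:.2f}, two-sided p = {p_val:.3f}")
```

When the two estimates are positively correlated, as they would be for overlapping samples, the denominator overstates the standard error of the difference and the test tends to be conservative.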

The Stolzenberg and Relles (1997) approach requires a continuous outcome variable. Although this may preclude its use for some research questions, we encourage researchers to move away from binary outcomes, which limit variation and reduce the social world to a binary.

References

Allison PD (2000) Multiple imputation for missing data: a cautionary tale. Soc Methods Res 28(3):301–309

Allison PD (2002) Missing data. Sage, Thousand Oaks

Alper M, Durose MR, Markman J (2018) 2018 Update on prisoner recidivism: a 9-year follow-up period (2005–2014). U.S. Department of Justice, Bureau of Justice Statistics, Washington, DC

Anderson B (2017) Selection models and weak instruments. Musings. Retrieved from https://info.umkc.edu/drbanderson/selection-models-and-weak-instruments/

Asendorpf JB, Van De Schoot R, Denissen JJA, Hutteman R (2014) Reducing bias due to systematic attrition in longitudinal studies: the benefits of multiple imputation. Int J Behav Dev 38(5):453–460. https://doi.org/10.1177/0165025414542713

Badawi MA, Eaton WW, Myllyluoma J, Weimer LG, Gallo J (1999) Psychopathology and attrition in the Baltimore ECA 15-year follow-up 1981–1996. Soc Psychiatry Psychiatr Epidemiol 34(2):91–98. https://doi.org/10.1007/s001270050117

Barber J, Kusunoki Y, Gatny H, Schulz P (2016) Participation in an intensive longitudinal study with weekly web surveys over 2.5 years. J Med Internet Res 18(6):1–12. https://doi.org/10.2196/jmir.5422

Barrett DE, Katsiyannis A, Zhang D, Zhang D (2014) Delinquency and recidivism: a multicohort, matched-control study of the role of early adverse experiences, mental health problems, and disabilities. J Emot Behav Disord 22(1):3–15. https://doi.org/10.1177/1063426612470514

Barry AE (2005) How attrition impacts the internal and external validity of longitudinal research. J Sch Health 75(7):267–270. https://doi.org/10.1111/j.1746-1561.2005.00035.x

Bascle G (2008) Controlling for endogeneity with instrumental variables in strategic management research. Strateg Organ 6(3):285–327. https://doi.org/10.1177/1476127008094339

Beijersbergen KA, Dirkzwager AJE, Nieuwbeerta P (2016) Reoffending after release: Does procedural justice during imprisonment matter? Crim Justice Behav 43(1):63–82. https://doi.org/10.1177/0093854815609643

Berg MT, Huebner BM (2011) Reentry and the ties that bind: an examination of social ties, employment, and recidivism. Justice Q 28(2):382–410. https://doi.org/10.1080/07418825.2010.498383

Berk RA (1983) An introduction to sample selection bias in sociological data. Am Sociol Rev 48(3):386–398

Bolanos F, Herbeck D, Christou D, Lovinger K, Pham A, Raihan A et al (2012) Using Facebook to maximize follow-up response rates in a longitudinal study of adults who use methamphetamine. Subst Abuse Res Treat 6:1–11

Boys A, Marsden J, Stillwell G, Hatchings K, Griffiths P, Farrell M (2003) Minimizing respondent attrition in longitudinal research: practical implications from a cohort study of adolescent drinking. J Adolesc 26(3):363–373

Brame R, Paternoster R (2003) Missing data problems in criminological research: two case studies. J Quant Criminol 19(1):55–78. https://doi.org/10.4324/9781315089256-5

Brame R, Piquero AR (2003) Selective attrition and the age-crime relationship. J Quant Criminol 19(2):107–127

Breen R, Karlson KB, Holm A (2018) Interpreting and understanding logits, probits, and other nonlinear probability models. Ann Rev Sociol 44(1):39–54. https://doi.org/10.1146/annurev-soc-073117-041429

Bushway S, Johnson BD, Slocum LA (2007) Is the magic still there? The use of the Heckman two-step correction for selection bias in criminology. J Quant Criminol 23(2):151–178

Butler J, Quinn SC, Fryer CS, Garza MA, Kim KH, Thomas SB (2013) Characterizing researchers by strategies used for retaining minority participants: Results of a national survey. Contemp Clin Trials 36(1):61–67

Butler HD, Steiner B, Makarios MD, Travis LF (2017) Assessing the effects of exposure to supermax confinement on offender postrelease behaviors. Prison J 97(3):275–295. https://doi.org/10.1177/0032885517703925

Campbell DT, Stanley JC (1963) Experimental and quasi-experimental designs for research. Rand McNally, Chicago

Campbell CM, Labrecque RM, Weinerman M, Sanchagrin K (2020) Gauging detention dosage: assessing the impact of pretrial detention on sentencing outcomes using propensity score modeling. J Crim Just 70:1–14. https://doi.org/10.1016/j.jcrimjus.2020.101719

Carkin DM, Tracy PE (2015) Adjusting for unit non-response in surveys through weighting. Crime Delinq 61(1):143–158. https://doi.org/10.1177/0011128714556739

Cartwright N (2007) Are RCTs the gold standard? BioSocieties 2(1):11–20. https://doi.org/10.1017/s1745855207005029

Cattaneo MD (2010) Efficient semiparametric estimation of multi-valued treatment effects under ignorability. J Econom 155(2):138–154. https://doi.org/10.1016/j.jeconom.2009.09.023

Certo ST, Busenbark JR, Woo H-S, Semadeni M (2016) Sample selection bias and Heckman models in strategic management research. Strateg Manag J 37:2639–2657. https://doi.org/10.1002/smj

Cerulli G (2014) Ivtreatreg: a command for fitting binary treatment models with heterogeneous response to treatment and unobservable selection. Stata J 14(3):453–480. https://doi.org/10.1177/1536867x1401400301

Chang MW, Brown R, Nitzke S (2009) Participant recruitment and retention in a pilot program to prevent weight gain in low-income overweight and obese mothers. BMC Public Health 9:1–11. https://doi.org/10.1186/1471-2458-9-424

Chatfield MD, Brayne CE, Matthews FE (2005) A systematic literature review of attrition between waves in longitudinal studies in the elderly shows a consistent pattern of dropout between differing studies. J Clin Epidemiol 58(1):13–19. https://doi.org/10.1016/j.jclinepi.2004.05.006

Clark VA (2016) Predicting two types of recidivism among newly released prisoners: first addresses as “launch pads” for recidivism or reentry success. Crime Delinq 62(10):1364–1400. https://doi.org/10.1177/0011128714555760

Clark VA, Duwe G (2018) From solitary to the streets: the effect of restrictive housing on recidivism. Corrections. https://doi.org/10.1080/23774657.2017.1416318

Clark KJ, Mitchell MM, Fahmy C, Pyrooz DC, Decker SH (2020) What if they are all high-risk for attrition? Correlates of retention in a longitudinal study of reentry from prison. Int J Offender Ther Comp Criminol. https://doi.org/10.1177/0306624X20967934

Claus RE, Kindleberger LR, Dugan MC (2002) Predictors of attrition in a longitudinal study of substance abusers. J Psychoact Drugs 34(1):69–74

Coen AS, Patrick DC, Shern DL (1996) Minimizing attrition in longitudinal studies of special populations: an integrated management approach. Eval Program Plan 19(4):309–319

Cordray S, Polk K (1983) The implications of respondent loss in panel studies of deviant behavior. J Res Crime Delinq 20(2):214–242. https://doi.org/10.1177/002242788302000205

Costanza SE, Cox SM, Kilburn JC (2015) The impact of halfway houses on parole success and recidivism. J Sociol Res 6(2):39–55. https://doi.org/10.5296/jsr.v6i2.8038

Crisanti AS, Case BF, Isakson BL, Steadman HJ (2014) Understanding study attrition in the evaluation of jail diversion programs for persons with serious mental illness or co-occurring substance use disorders. Crim Justice Behav 41(6):772–790

Cullen FT, Pratt TC, Graham A (2019) Why longitudinal research is hurting criminology. The Criminologist 5:63

Curtis R (2010) Getting good data from people that do bad things: Effective methods and techniques for conducting research with hard-to-reach and hidden populations. In: Bernasco W (ed) Offenders on offending: learning about crime from criminals. Willan Publishing, London, pp 141–158

Daquin JC, Daigle LE, Listwan SJ (2016) Vicarious victimization in prison: examining the effects of witnessing victimization while incarcerated on offender reentry. Crim Justice Behav 43(8):1018–1033

David MC, Alati R, Ware RS, Kinner SA (2013) Attrition in a longitudinal study with hard-to-reach participants was reduced by ongoing contact. J Clin Epidemiol 66(5):575–581

Davidov E, Yang-Hansen K, Gustafsson JE, Schmidt P, Bamberg S (2006) Does money matter? A theory-driven growth mixture model to explain travel-mode choice with experimental data. Methodology 2(3):124–134. https://doi.org/10.1027/1614-2241.2.3.124

Davidson R, MacKinnon JG (1993) Estimation and inference in econometrics. Oxford University Press, New York

Deeg DJH, Van Tilburg T, Smit JH, De Leeuw ED (2002) Attrition in the longitudinal aging study Amsterdam: the effect of differential inclusion in side studies. J Clin Epidemiol 55(4):319–328. https://doi.org/10.1016/S0895-4356(01)00475-9

Dehejia RH (2005) Does matching overcome LaLonde’s critique of non-experimental estimators? A postscript. In: NBER working paper series

Dehejia RH, Wahba S (1998) Causal effects in non-experimental studies: re-evaluating the evaluation of training programs (No. 6586)

Dehejia RH, Wahba S (2002) Propensity score-matching methods for nonexperimental causal studies. Rev Econ Stat 84(1):151–161

Duan N, Manning WG, Morris CN, Newhouse JP (1983) A comparison of alternative models for the demand for medical care. J Bus Econ Stat 1(2):115–126. https://doi.org/10.1080/07350015.1983.10509330

Eidson JL, Roman CG, Cahill M (2016) Successes and challenges in recruiting and retaining gang members in longitudinal research: lessons learned from a multisite social network study. Youth Violence Juvenile Just. https://doi.org/10.1177/1541204016657395

Fahmy C, Clark K, Mitchell MM, Decker SH, Pyrooz DC (2019) Method to the madness: tracking and interviewing respondents in a longitudinal study of prisoner reentry. Sociol Methods Res. https://doi.org/10.1177/0049124119875962

Field A (2009) Discovering statistics using SPSS. Sage, Thousand Oaks

Fitzgerald J, Gottschalk P, Moffitt R (1998) An analysis of sample attrition in panel data: the Michigan Panel Study of Income Dynamics. J Hum Resour 33(2):251–299

Fumagalli L, Laurie H, Lynn P (2013) Experiments with methods to reduce attrition in longitudinal surveys. J R Stat Soc A Stat Soc 176(2):499–519

Gendreau P, Little T, Goggin C (1996) A meta-analysis of the predictors of adult offender recidivism: What works! Criminology 34(4):575–607

Goodman JS, Blum TC (1996) Assessing the non-random sampling effects of subject attrition in longitudinal research. J Manag 22(4):627–652. https://doi.org/10.1016/s0149-2063(96)90027-6

Greene WH (2011) Econometric analysis, 7th edn. Prentice Hall, Upper Saddle River

Gustavson K, Von Soest T, Karevold E, Roysamb E (2012) Attrition and generalizability in longitudinal studies: findings from a 15-year population-based study and a Monte Carlo simulation study. BMC Public Health 12:918–929. https://doi.org/10.1186/1471-2458-12-918

Hansen WB, Tobler NS, Graham JW (1990) Attrition in substance abuse prevention research: a meta-analysis of 85 longitudinally followed cohorts. Eval Rev 14(6):677–685

Heckman JJ (1976) The common structure of statistical models of truncation, sample selection and limited dependent variables and a simple estimator for such models. Ann Econ Soc Meas 5(4):475–492

Heckman JJ (1979) Sample selection bias as a specification error. Econometrica 47(1):153–161. https://doi.org/10.1007/S10021-01

Hirschi T, Gottfredson M (1983) Age and the explanation of crime. Am J Sociol 89(3):552–584

Hsieh M-L, Hamilton Z, Zgoba KM (2018) Prison experience and reoffending: exploring the relationship between prison terms, institutional treatment, infractions, and recidivism for sex offenders. Sex Abuse 30(5):556–575. https://doi.org/10.1177/1079063216681562

Huebner BM, Varano SP, Bynum TS (2007) Gangs, guns, and drugs: recidivism among serious, young offenders. Criminol Public Policy 6(2):187–222

Katsiyannis A, Whitford DK, Zhang D, Gage NA (2018) Adult recidivism in United States: a meta-analysis 1994–2015. J Child Fam Stud 27(3):686–696. https://doi.org/10.1007/s10826-017-0945-8

Kinner SA (2006) Continuity of health impairment and substance misuse among adult prisoners in Queensland, Australia. Int J Prison Health 2(2):101–113. https://doi.org/10.1080/17449200600935711

LaLonde RJ (1986) Evaluating the econometric evaluations of training programs with experimental data. Am Econ Rev 76(4):604–620

Lennox CS, Francis JR, Wang Z (2012) Selection models in accounting research. Account Rev 87(2):589–616. https://doi.org/10.2308/accr-10195

Leung SF, Yu S (1996) On the choice between sample selection and two-part models. J Econom 72:197–229

Liberman AM (2008) The long view of crime: a synthesis of longitudinal research. Springer, Washington, DC

Little RJA (1988) A test of missing completely at random for multivariate data with missing values. J Am Stat Assoc 83(404):1198–1202