Abstract

The emergence of composite materials has started a revolution in the aerospace industry. Composite materials make it possible to design larger and lighter components. However, due to their anisotropy, composite materials are usually difficult to inspect, and detecting internal defects is a challenge. Line scan thermography (LST) is a dynamic thermography technique used to inspect large metallic and composite components such as those commonly found in the aerospace industry. In this paper, the robotized LST technique is investigated on a large composite component which contains different types of internal defects located at a variety of depths. For the theoretical analysis, the LST inspection was simulated using a mathematical formulation based on the 3D heat conduction equation in the transient regime in order to determine the optimum inspection parameters. The model was solved using the finite element method. The LST parameters were adjusted to detect the deepest defects in the specimen. In order to validate the numerical results against experimental data, a robotized system in which the infrared camera and the heating source move in tandem was employed. The experimental tests revealed three sources of noise (non-uniform heating, a frame rate that is not synchronized with the scanning speed, and robot arm vibration) which affect the performance of the test. Image processing techniques that were originally developed for pulsed thermography were successfully applied to the LST data. Finally, the performance of each technique was evaluated using the probability of detection approach.

1 Introduction

Nowadays, composite materials, specifically carbon fiber reinforced polymers (CFRPs), play a dominant role in science as well as in the civil, nuclear, aerospace, renewable energy and automobile industries. These materials significantly improve mechanical properties, providing high stiffness, higher strength and improved fatigue resistance [1, 2]. Aerospace engineers prefer materials which are lighter and easier to shape. Advanced composites such as CFRPs have been increasingly utilized in aerospace structures such as flaps, slats, spoilers, elevators, etc. CFRPs offer valuable properties for manufacturing complex-shaped components with reduced manufacturing time [1, 3, 4]. Due to their interlaminar structure, CFRPs distribute the energy of an impact over a large area through the polymeric matrix. This characteristic makes them more resistant to low velocity impacts, but it may increase the probability of internal defects that cannot be observed from the surface [1]. Therefore, because composite materials are prone to such damage, engineers must inspect and evaluate the components during the different steps of manufacturing, service and maintenance. In order to detect subsurface defects, non-destructive testing (NDT) techniques are employed. A variety of NDT methods have been proposed in the literature to evaluate composite materials. Infrared thermography [5,6,7,8], ultrasonics [9] or thermosonics [10], SQUID magnetic response [1] and X-ray [11] are some of the methods used for the inspection of composite materials [12, 13]. The final selection of an NDT technique depends on the defect type, material characteristics, accessibility and sensitivity required, as well as the time available to perform the inspection [14].

In this paper, robotized line scan thermography (LST) is investigated for the inspection of a large CFRP specimen of the kind used in the aerospace industry. This technique consists of heating the component, line by line, while acquiring a series of thermograms with an infrared camera [15]. The robotic arm, which carries the infrared camera and the heating source, moves along the surface while the specimen is motionless [15, 16]. The robotized LST method provides advantages in comparison to static approaches. It provides heating uniformity and allows image processing which enhances the detection probability, so that a large-scale component can be inspected without loss of resolution. Using the LST approach, it is possible to inspect large areas at high scan speeds. Also, the inspection results are immediately available for analysis while the scanning process continues. In order to estimate the optimum inspection parameters, the heat transfer process that takes place during the LST inspection is simulated using the 3D-FEM approach.

COMSOL Multiphysics was the software used to model the problem and to solve the differential equations that govern the heat transfer process [17]. In this research, the CFRP specimen was modeled using approximately 200,000 elements to achieve accurate results. An experimental LST inspection was conducted in order to validate the numerical simulation and to verify the inspection parameters obtained through the finite element method (FEM) simulation. According to the results obtained, the main sources of noise that affect the LST inspection performance are non-uniform heating, a frame rate that is not synchronized with the scanning speed, and the vibration produced by the robotic arm mechanism.

To compensate for the effects of the noise, data processing algorithms such as thermographic signal reconstruction (TSR), principal component thermography (PCT, also referred to below as PCA) and partial least square thermography (PLST, also referred to below as PLS) were employed. This paper investigates the effect of these data processing algorithms on LST data. Finally, the performance of each data processing algorithm was evaluated using the probability of detection (PoD) criterion.

2 Robotized Line Scan Setup

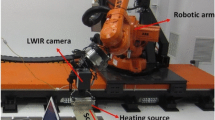

Robotized line scanning thermography was proposed as a dynamic approach for the inspection of large and complex shaped components. The infrared camera and heat source are installed on the robot arm. These components move in tandem, while the specimen remains fixed (see Fig. 1). Using a computer program which provides the commands for the robotic arm, it is possible to tune all inspection parameters, such as the speed of the inspection head (scanning velocity), the distance between the inspection head and the specimen, and the acquisition rate.

The specimen under study is a 900 mm \(\times \) 150 mm monolithic CFRP panel consisting of 10 sections (1–10 as indicated) with a variable number of CFRP layers (progressively increasing from 6 to 22 plies). Each section has 3 flat-bottomed holes of different diameters (6, 8 and 10 mm), for a total of 30 defects located at different depths (from 0.425 to 6.09 mm). The characteristics of the specimen under study are shown in Fig. 2. The depths and diameters of the defects are listed in Table 1, together with the diameter-to-depth ratios (D/z). A picture of the robotized line scanning setup is shown in Fig. 1. The reference panel was positioned on a fixed table, and the inspection head mounted on the robot, carrying the camera and the heat source, scanned the specimen surface. An uncooled microbolometer camera (Jenoptik IRTCM 384, LWIR 7.5–14 \(\mu \)m; 384 \(\times \) 288 pixels) was used during data acquisition and the specimen was heated using a low power line heating lamp.

3 Numerical Simulation of LST

Several models have been proposed to estimate the temperature distribution during a thermographic inspection. In the case of LST, analytical models with different levels of precision are available. The following equation was proposed for composite materials in 2008 [18].

where the term \(K_0 (x)\) is the modified Bessel function of the second kind of order zero, v is the line-source velocity, L is the specimen thickness and q is the rate of heat emitted per unit length. This equation gives the temperature on the specimen surface. It assumes that the material is homogeneous and that the input energy is identical for all points along the same line. In the case of CFRP materials, because of their porous structure, the analytical model is not accurate enough to detect small defects. Therefore, it is strongly suggested to employ the three-dimensional finite element approach to calculate the heat transfer in the material volume. It is more time consuming, but the result is closer to reality.

To simulate the LST inspection, the three-dimensional finite element method (3D-FEM) is employed to determine the thermal response of the composite specimen when a dynamic heat excitation is applied on its surface. The LST parameters must be adjusted to maximize the temperature variation on the material surface. COMSOL Multiphysics was employed to model and simulate the LST inspection of the CFRP specimen, using the heat transfer module and the multibody dynamics module. The heat transfer module allows the 3D transient energy equation to be modeled and solved in order to obtain the temperature distribution in the CFRP specimen that contains subsurface defects. It also provides different types of uniform and non-uniform time-dependent heat sources [15]. The model proposed in this work considers heat transfer by conduction within the specimen and heat transfer by convection and radiation between the sample surface and the ambient. Figure 3 shows the schematic of the heat fluxes considered in this work.

The 3D model geometry was defined as being the same as the experimental specimen. Figure 4 shows the 3D model which consists of 10 sections with various internal layers (progressively increasing from 6 to 22 plies). Also, the fiber orientations are shown in Fig. 5. The number of layers, the defect position and size and the composite fiber direction are the most important parameters when developing the 3D model.

One of the key steps in the simulation process consists in generating the appropriate mesh size. There is a trade-off between the accuracy of the results and the simulation time. A finer mesh size increases the accuracy; however, it also increases the simulation time and requires more computational resources. Figure 6 shows the generated mesh in COMSOL 3D. The simulation parameters as well as the thermophysical properties of the specimen are shown in Table 2.

4 Simulation Results

COMSOL solves the 3D heat conduction equation using the finite element method. To simulate the LST model using COMSOL, there are two procedures that lead to the same results: (1) moving the specimen under the fixed heat source and camera, or (2) moving the heat source and camera over a fixed specimen.

In this work, because mechanical constraints in the experimental setup prevent the specimen from being moved, the second strategy was chosen in the simulation. Figure 7 illustrates the three defect lines which contain all the internal defects. In the simulation results, a sudden temperature change along these lines indicates a defect position. To evaluate defect visibility, the thermal contrast, which is defined as the difference in temperature between a defective and a non-defective area of the specimen, is used. There are various thermal contrast definitions such as the absolute contrast, running contrast, normalized contrast and standard contrast [19]. The absolute thermal contrast is the variable adopted to analyze the detectability of defects and is given by [7]:
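Written out from the definition above, with the time dependence shown explicitly, the absolute contrast takes the usual form below. This is a reconstruction from the symbols \(T_d \) and \(T_s \) used in the text; the symbol \(\Delta T\) is an assumed name.

\[ \Delta T(t) = T_d (t) - T_s (t) \]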

where \(T_d \) is the temperature at time t of a pixel, or the average value of a group of pixels, in a defective area, and \(T_s \) is the temperature at the same time t in a non-defective area [20].

In this paper, three scanning speeds are considered: 10, 20 and 30 mm/s. The total power of the heating source is 500 W. Figure 8 shows the temperature variation for three defect sizes (A4, B4 and C4) at two different scanning speeds (10 and 30 mm/s) using a constant heating power (500 W).

It can be observed from Fig. 8 that the lower the scanning speed, the higher the thermal contrast (or the detectability level of the defects). This can be explained as follows: at lower speeds the specimen receives more energy from the line source, which produces a higher thermal contrast between defective and non-defective areas. The scanning speed affects both the observation time and the amount of energy that is delivered to the specimen. As the scanning speed increases, the observation time decreases and so does the amount of energy delivered to the specimen. The thermal contrasts of three defects (A4, B4 and C4) at the three scanning speeds are compared in Fig. 9.
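The link between scanning speed and delivered energy can be illustrated with a short calculation. The sketch below assumes, as a simplification that is not stated in the paper, that all of the lamp power reaches the surface and is spread evenly along the scan, so that the energy deposited per millimetre of travel is simply power divided by speed. The function name is hypothetical.

```python
def line_energy_j_per_mm(power_w: float, speed_mm_s: float) -> float:
    """Energy deposited per millimetre of travel, E = P / v.

    Simplifying assumption: every watt emitted by the line source reaches
    the surface (no reflection or convection losses are modelled).
    """
    if speed_mm_s <= 0:
        raise ValueError("scanning speed must be positive")
    return power_w / speed_mm_s


# The three scanning speeds and the 500 W source considered in the text.
for speed in (10.0, 20.0, 30.0):
    energy = line_energy_j_per_mm(500.0, speed)
    print(f"{speed:4.0f} mm/s -> {energy:5.1f} J per mm of travel")
```

Under this assumption, tripling the speed from 10 to 30 mm/s cuts the deposited energy per unit length to one third, which is consistent with the lower contrast reported at higher speeds.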

The maximum thermal contrast and its time of occurrence depend on the aspect ratio D/z of the defects (diameter-to-depth ratio) [21]. The results show that defects with a lower D/z require more energy (or a lower scanning speed) to reach a higher thermal contrast. Figure 10 illustrates the relationship between the maximum thermal contrast and the depth of the defects (for the three diameters) at a scanning speed of 10 mm/s.

The amount of energy has a significant effect on the detectability of the defects. To show the influence of the irradiation power density on the thermal contrast, a second heating power (1000 W) is applied. Figure 11 shows the influence of the heating power on the maximum thermal contrast at a constant velocity. It can be observed that the visibility of the defects, characterized in this work by the maximum thermal contrast, can be improved by increasing the irradiation power density delivered to the specimen. As also shown in Fig. 11, the simulated LST results indicate that, for the same inspection parameters, the detection of the defects is a function of their aspect ratio: defects with a higher D/z ratio have a higher detectability level.

Figure 12 shows four thermal maps of the surface of the material at four different times of the inspection using a scanning speed of 10 mm/s. It can be observed that the thermal contrasts of the subsurface defects, and the moment at which they become visible in the thermal map, are functions of the depth and the lateral size of the defects. Data processing methods could help to increase the detection probability.

According to the simulation results, it is possible to detect almost all of the defects, with different levels of visibility, using the LST approach. The simulation results at different scanning speeds indicate that a longer heating time (lower scanning speed) increases the PoD of the defects, because energy is delivered to the specimen surface for a longer time.

5 Experimental Setup

In the experimental setup, an uncooled microbolometer camera (Jenoptik IRTCM 384, LWIR 7.5–14 \(\mu \)m, 384 \(\times \) 288 pixels) was used during data acquisition and the specimen was heated using a 500 W line source. The linear speed of the source over the specimen was 10 mm/s. Because of the specimen length, the infrared camera covered only a section of the specimen at a time during the LST experiment. Therefore, the pseudo-static matrix reconstruction approach was used to produce a static image of the specimen, which allows a better analysis of the data and makes it possible to apply post-processing techniques to the acquired thermal images. The experimental parameters are shown in Table 3.

5.1 Validation of the Numerical Simulation

Figure 13 shows the thermal profiles of three defects (A1, B1 and C1) obtained from the simulation and from the experiment at a scanning speed of 10 mm/s. These profiles are used as the criterion to validate the simulation model. The comparison between the simulated and experimental profiles supports the validity of the simulation model, the composite parameters and the analysis approach. However, because of the low frame rate of the camera, the temporal resolution of the experimental profiles is lower than that of the simulated profiles. At the beginning of the heating process, the simulation and experimental results are in good agreement, whereas during cooling the cooling rate of the experimental data is higher. This difference could be related to the room temperature or to the data acquisition accuracy.

5.2 Data Reconstruction

Figure 14 illustrates the methodology adopted to produce the pseudo-static matrix. The infrared camera captures the original sequence \(P_{xi} (t)\), frame by frame (through time t). The first line of the image matrix at time \(t_1 \) is relocated as the first line of the reconstructed image matrix corresponding to a virtual time \(t_1^{\prime } \), that is \(P_{x^{\prime }1} (t_1^{\prime } )\). The first line at the same position \(x_1 \) but in the following frame (at time \(t_2 \)) is then relocated as the second line of the reconstructed matrix, and so on. At the end of this process, the sequence of lines at position \(x_1 \) in the original sequence, \(P_{x1} (t_1 ) \ldots P_{x1} (t_n )\), is rearranged into a single image representing the first frame at the virtual time \(t_1^{\prime } \), which is defined as the time at which a specified specimen line becomes visible to the camera. The same procedure is repeated for the remaining lines in the original sequence [15]. In order to construct an accurate pseudo-static matrix, the camera and heat source should move at a constant speed. In other words, the camera frame rate must be perfectly synchronized with the scanning speed, which is difficult to achieve. To address this issue, an additional calibration procedure was proposed by Oswald-Tranta and Sorger [22]; alternatively, one can use a shifting correction procedure based on the interpolation between the initial and final positions of a reference pixel. In both cases, it is assumed that the camera and source move at a constant speed [15]. The observation time \(t_{obs} \) (or time window), that is, the time during which a given point (line) of the inspected object is observed at a given scanning speed \(u_x \), can be calculated from the length of the FOV in the scanning direction X [15]:
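From the description above, the observation time is the FOV length divided by the scanning speed. Writing \(FOV_x \) for the length of the field of view along X (a symbol assumed here for illustration), this reads:

\[ t_{obs} = \frac{FOV_x }{u_x } \]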

The virtual acquisition rate of the reconstructed sequence \(f_{rate}^{\prime } \) can then be estimated from the known number of pixels \(p_x \) scanned during the observation time \(t_{obs} \) [15]:
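Following the sentence above, the virtual acquisition rate is the number of scanned pixel lines per unit of observation time. A form consistent with the symbols already defined would be:

\[ f_{rate}^{\prime } = \frac{p_x }{t_{obs} } \]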

Equations 3 and 4 are employed to reconstruct the pseudo-static sequence from the dynamic matrix and to determine the observation time and frame rate for every pixel in the new sequence [15].
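The reconstruction procedure and the two quantities above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation. It assumes ideal synchronization (the camera advances exactly one pixel row per frame) and that a specimen line enters the image at the last row and leaves at row 0. The function names, the 100 mm FOV length and the choice of 288 rows along the scan direction are hypothetical values chosen only for the example.

```python
def observation_time(fov_x_mm, speed_mm_s):
    """t_obs: time a specimen line stays inside the field of view."""
    return fov_x_mm / speed_mm_s


def virtual_frame_rate(pixels_x, t_obs_s):
    """f'_rate: number of pixel lines scanned per second of observation."""
    return pixels_x / t_obs_s


def pseudo_static(frames):
    """Rearrange a scanning sequence frames[t][row] into pseudo-static frames.

    Assumption: the camera advances exactly one pixel row per frame, so the
    specimen line seen at `row` in frame t has global index t + row.
    out[k][j] is specimen line (n_rows - 1 + j) after k frames in view.
    """
    n_frames, n_rows = len(frames), len(frames[0])
    n_lines = n_frames - n_rows + 1      # lines observed at every row position
    if n_lines < 1:
        raise ValueError("need at least as many frames as rows per frame")
    out = []
    for k in range(n_rows):
        row = n_rows - 1 - k             # a line enters at the last row
        out.append([frames[k + j][row] for j in range(n_lines)])
    return out


# Hypothetical numbers: 100 mm FOV along X, 10 mm/s scan, 288 rows along X.
t_obs = observation_time(100.0, 10.0)
print("t_obs =", t_obs, "s; f'_rate =", virtual_frame_rate(288, t_obs), "Hz")

# Synthetic check: a static scene viewed through a 3-row window over 6 frames.
scene = [[10 * g + c for c in range(2)] for g in range(8)]
frames = [[scene[t + r] for r in range(3)] for t in range(6)]
pseudo = pseudo_static(frames)
print(len(pseudo), "pseudo-static frames of", len(pseudo[0]), "lines each")
```

Because the synthetic scene does not change with time, every pseudo-static frame reproduces the same four specimen lines, which is a quick way to confirm that the lines are aligned. With real data, a frame rate that is not matched to the scanning speed would break the one-row-per-frame assumption and would call for the calibration or interpolation corrections mentioned above.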

Figure 15 shows the reconstructed thermograms obtained using the robotized LST inspection. The reconstructed thermograms correspond to the virtual times 2.17, 3.03, 4.17 and 6.5 s. As mentioned above, it is difficult to synchronize the mechanical motion speed and the acquisition frame rate of the IR camera. The resulting misalignment, caused by the shift of the object position from one frame to the next, is visible in the results. Several solutions have been proposed to reduce the effect of this problem, such as matching algorithms like iterative closest point (ICP), interpolation between the initial and final positions of a reference pixel [22], or increasing the field of view (FOV). Figure 15 shows that the shallower defects (line A) were easy to detect by robotized LST.

As time elapses, deeper defects become visible. In other words, deeper defects require more time to be detected, as in the static inspection. In the last frame (at virtual time 6.5 s), four defects in line B and the first defect in line C appear. Therefore, without data processing algorithms it is possible to detect the defects located close to the surface, at depths of 2 mm or less. However, signal processing techniques can reduce the effects of the different sources of noise and therefore improve the detectability of defects that are undetectable in the raw images. The next section discusses the implementation of some of the most commonly used techniques to process infrared thermal data.

6 Data Processing Algorithms

A wide selection of methods for processing thermographic images can be found in the literature. Some of the most commonly used techniques are thermographic signal reconstruction (TSR) [23], thermal tomography [24], pulsed phase thermography (PPT) [5], principal component thermography (PCT) [25, 26] and partial least square thermography (PLST) [27]. Data processing techniques in NDT enhance the defect detection probability, with the downside of increasing the computational time or requiring interaction with an operator to select algorithm parameters [26]. In this paper, several of these data processing algorithms have been used to increase the visibility of defects.

6.1 TSR

Thermographic signal reconstruction (TSR) is well known as an effective data processing technique for pulsed thermography (PT) data. The most important advantages of this method over raw data are the simplicity and accuracy of quantitative measurement, the increase in temporal and spatial resolution, the reduction of high frequency noise and the ability to produce time derivative images [5]. TSR uses a low order polynomial function to reconstruct the temperature evolution curve obtained from a PT inspection [28]. Figure 16a shows the result of the TSR approach. The TSR approach enhances the detection probability and makes it possible to locate deeper defects.
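The polynomial fitting step can be sketched as follows. The example assumes the common TSR formulation in which the logarithm of the temperature rise is fitted against the logarithm of time, pixel by pixel; the text above only states that a low order polynomial is used, so the log-log choice, the default degree and the function names are assumptions. It requires NumPy, strictly positive times and a strictly positive temperature rise.

```python
import numpy as np


def tsr_fit(t, temp, degree=5):
    """Fit ln(T) as a polynomial in ln(t) for every pixel.

    t    : 1D array of strictly positive times, length n_t
    temp : array (n_t, n_y, n_x) of strictly positive temperature rise
    Returns coefficients of shape (degree + 1, n_y, n_x), highest power first.
    """
    t = np.asarray(t, dtype=float)
    temp = np.asarray(temp, dtype=float)
    log_t = np.log(t)
    log_temp = np.log(temp.reshape(temp.shape[0], -1))   # (n_t, n_pixels)
    coeffs = np.polyfit(log_t, log_temp, degree)          # (degree+1, n_pixels)
    return coeffs.reshape((degree + 1,) + temp.shape[1:])


def tsr_reconstruct(t, coeffs):
    """Rebuild the smoothed sequence from the fitted coefficients."""
    log_t = np.log(np.asarray(t, dtype=float))
    n_coef = coeffs.shape[0]
    vander = np.vander(log_t, n_coef)                     # decreasing powers
    log_temp = vander @ coeffs.reshape(n_coef, -1)
    return np.exp(log_temp).reshape((log_t.size,) + coeffs.shape[1:])


# Synthetic check: an ideal t**-0.5 decay is a straight line in log-log space.
t = np.linspace(0.1, 5.0, 50)
temp = 2.0 * t[:, None, None] ** -0.5 * np.ones((1, 2, 3))
coeffs = tsr_fit(t, temp, degree=1)
print("fitted log-log slope:", float(coeffs[0, 0, 0]))
```

Storing only the coefficients compresses the sequence and filters high frequency noise; time derivative images can then be obtained analytically from the polynomial rather than by differencing noisy frames.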

6.2 PCA

Principal component thermography (PCT) is used to extract features and reduce the undesirable information in thermographic sequences. PCT is used in NDT for defect detection and for estimating the depth of the detected subsurface defects [25]. PCT is based on the singular value decomposition (SVD), which extracts the spatial (empirical orthogonal functions, EOFs) and temporal (principal components, PCs) information from thermal data. Each principal component is characterized by its variability level, or variance. Thus, the first component has the largest variance of all the components, followed by the second component, and so on. Using the first few (most important) principal components helps to reduce the dimensionality of the data [29, 30]. Figure 16b shows the results of using PCT on the robotized LST data. The PCT technique has a significant effect on the raw data and enhances the detection capability of the test. In Fig. 16c a combination of the TSR and PCA techniques was employed, thereby improving the performance.

In this combination, TSR was first used as a filter to suppress the noise, and PCT was then applied to the reconstructed sequence to improve defect detection. Combining these signal processing techniques (TSR and PCT) effectively improves the result by bringing together the advantages of each technique.
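The SVD step behind PCT can be illustrated with NumPy. The sketch below is a minimal version under stated assumptions: the sequence is unfolded into a pixels-by-time matrix and only the per-pixel temporal mean is removed before the decomposition. The exact standardization used by the authors is not given in the text, and the function name is hypothetical.

```python
import numpy as np


def pct(sequence, n_components=3):
    """Principal component thermography via singular value decomposition.

    sequence : array (n_t, n_y, n_x)
    Returns (eofs, pcs, explained):
      eofs      (n_components, n_y, n_x)  spatial patterns
      pcs       (n_components, n_t)       temporal principal components
      explained (n_components,)           fraction of variance per component
    """
    seq = np.asarray(sequence, dtype=float)
    n_t, n_y, n_x = seq.shape
    a = seq.reshape(n_t, -1).T                    # rows: pixels, columns: time
    a = a - a.mean(axis=1, keepdims=True)         # assumed: remove temporal mean
    u, s, vt = np.linalg.svd(a, full_matrices=False)
    eofs = u[:, :n_components].T.reshape(n_components, n_y, n_x)
    explained = (s ** 2 / np.sum(s ** 2))[:n_components]
    return eofs, vt[:n_components], explained


# Synthetic check: one spatial pattern scaled by one decay curve is rank one,
# so the first component should carry essentially all of the variance.
rng = np.random.default_rng(0)
pattern = rng.normal(size=(3, 4))
decay = np.exp(-np.linspace(0.0, 2.0, 10))
seq = decay[:, None, None] * pattern
eofs, pcs, explained = pct(seq, n_components=2)
print("variance explained by first component:", round(float(explained[0]), 6))
```

To mimic the TSR + PCT combination described above, the same function could be applied to a TSR-reconstructed sequence instead of the raw one.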

6.3 PPT

The application of pulsed phase thermography (PPT) to thermographic data obtained from the LST inspection is also investigated in this work. PPT is based on the fact that any waveform can be approximated by a sum of harmonic waves at different frequencies through the Fourier transform, which is used to extract a certain number of thermal waves from a thermal pulse [31], each one oscillating at a different frequency and having a different amplitude. The amplitude and phase maps obtained by applying PPT to the thermographic LST data are shown in Fig. 17a and b.
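The Fourier step can be sketched with NumPy's real FFT applied along the time axis of the sequence. This is a minimal illustration: the function name is hypothetical, and for LST data the frame rate passed in would presumably be the virtual acquisition rate of the pseudo-static sequence, which is an assumption rather than something the text states.

```python
import numpy as np


def ppt(sequence, frame_rate_hz):
    """Amplitude and phase maps of a thermogram sequence, one per frequency.

    sequence : array (n_t, n_y, n_x)
    Returns (freqs, amplitude, phase); amplitude and phase have shape
    (n_t // 2 + 1, n_y, n_x), and phase is in radians.
    """
    seq = np.asarray(sequence, dtype=float)
    spectrum = np.fft.rfft(seq, axis=0)
    freqs = np.fft.rfftfreq(seq.shape[0], d=1.0 / frame_rate_hz)
    return freqs, np.abs(spectrum), np.angle(spectrum)


# Synthetic check: a 2 Hz cosine sampled at 32 Hz with a known phase per pixel.
n_t, rate = 64, 32.0
time = np.arange(n_t) / rate
phi = np.array([[0.0, 0.3], [0.6, 0.9]])
seq = np.cos(2.0 * np.pi * 2.0 * time[:, None, None] + phi)
freqs, amplitude, phase = ppt(seq, rate)
print("frequency of bin 4:", freqs[4], "Hz")
```

In practice the low frequency phase maps are usually the ones examined, since they probe deeper into the material.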

6.4 PLST

Partial least squares (PLS) comprises a wide class of methods for modeling the relations between sets of observed variables by means of latent variables. It involves regression and classification tasks as well as dimension reduction techniques and modeling tools [32]. Using this method, irrelevant and unstable information is discarded and only the most relevant part of the thermal data is used for regression. Furthermore, since all variables are projected down to only a few linear combinations, simple plotting techniques can be used for analysis. As a regression method, PLS regression (PLSR) seeks to model a dependent variable Y (predicted) in terms of an independent variable X (predictor) [27, 33]. To achieve this aim, PLS generalizes and combines features of two techniques: principal component regression (PCR) and multivariate linear regression (MLR) [27, 33]. The result of the partial least square thermography (PLST) technique is shown in Fig. 17c. The PLS technique does not perform well on this data. In the next section, the performance of the different data processing approaches is compared.
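The idea of projecting the predictors onto a few latent variables can be illustrated with a small single-response PLS regression written with the NIPALS algorithm. This is a generic sketch on synthetic data, not the PLST procedure of [27]: how the thermal sequence is arranged into X and Y is not described in the text, and the function names are hypothetical.

```python
import numpy as np


def pls1_fit(x, y, n_components):
    """Single-response PLS regression (NIPALS).

    Returns (coef, x_mean, y_mean) such that y is approximated by
    (x - x_mean) @ coef + y_mean.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(axis=0), y.mean()
    xk, yk = x - x_mean, y - y_mean
    weights, loadings, y_loadings = [], [], []
    for _ in range(n_components):
        w = xk.T @ yk                    # direction of maximal covariance with y
        w = w / np.linalg.norm(w)
        t = xk @ w                       # latent variable (score)
        tt = t @ t
        p = xk.T @ t / tt                # X loading
        c = yk @ t / tt                  # y loading
        xk = xk - np.outer(t, p)         # deflate X and y
        yk = yk - c * t
        weights.append(w)
        loadings.append(p)
        y_loadings.append(c)
    w_mat = np.array(weights).T
    p_mat = np.array(loadings).T
    coef = w_mat @ np.linalg.solve(p_mat.T @ w_mat, np.array(y_loadings))
    return coef, x_mean, y_mean


def pls1_predict(x, coef, x_mean, y_mean):
    return (np.asarray(x, dtype=float) - x_mean) @ coef + y_mean


# Synthetic check: with as many components as predictors, PLS1 reproduces
# ordinary least squares on noise-free data.
rng = np.random.default_rng(0)
x = rng.normal(size=(30, 3))
true_coef = np.array([1.0, -2.0, 0.5])
y = x @ true_coef + 4.0
coef, x_mean, y_mean = pls1_fit(x, y, n_components=3)
print("recovered coefficients:", np.round(coef, 6))
```

Using fewer components than predictors is what discards the less relevant directions; the number of components is a tuning parameter that has to be chosen for each data set.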

7 Evaluation of Signal Processing Techniques

Concerning the signal processing algorithms, it is important to note that PPT and TSR were originally developed for static thermography, where the heat conduction regime follows the solution of the 1D differential equation. For this reason, their performance may not be as expected, since the thermal process in LST is no longer 1D. In fact, the decay curve obtained from LST is very different from the one obtained from static thermography. Therefore, it is important to investigate and evaluate the performance of the data processing algorithms in this new setting. The performance evaluation provides a criterion to determine the capability of each algorithm to eliminate the noise and detect the deeper defects. The PoD is a powerful tool which is employed to estimate the performance of data processing algorithms [34,35,36].

In this research, the performance of the processing techniques has been evaluated quantitatively using the PoD approach. The PoD analysis is a quantitative method used to evaluate the inspection quality and the reliability of an NDT&E technique. This criterion is widely used for traditional NDT&E techniques [30]. PoD seeks to identify the minimum flaw depth that can be reliably detected by the NDT technique. This is best done by plotting the accumulation of flaws detected against the flaw depth of all of the flaws “detected” or that produce a response over a threshold. Based on the PoD result, all defects which are deeper than a critical depth are not detected while the others are detected. The tool most commonly used for PoD description is the PoD curve [37]. The log-logistic distribution has been found to be the most acceptable [38]. The PoD curves can be produced from two types of data: (1) hit/miss data (the flaw is detected or not) and (2) signal response data. The mathematical expression to describe the PoD function from hit/miss data is written below:
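A form of the log-logistic hit/miss model that is consistent with the definitions of \(\mu \) and \(\sigma \) given in the text is shown here. It is the standard expression, offered as a reconstruction under that assumption:

\[ PoD(a) = \frac{\exp \left[ \frac{\pi }{\sqrt{3}}\left( \frac{\ln a-\mu }{\sigma } \right) \right] }{1+\exp \left[ \frac{\pi }{\sqrt{3}}\left( \frac{\ln a-\mu }{\sigma } \right) \right] } \]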

where a is the defect size, and \(\mu =-\frac{\alpha }{\beta }\) and \(\sigma =\frac{\pi }{\beta \sqrt{3}}\) are the median and standard deviation, respectively. From Eq. 6, a direct relationship can be demonstrated between the log-odds of PoD(a) and the defect size:
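Substituting \(\mu =-\frac{\alpha }{\beta }\) and \(\sigma =\frac{\pi }{\beta \sqrt{3}}\) into the log-logistic model makes the exponent equal to \(\alpha +\beta \ln a\), so the log-odds relation would read as follows (a sketch derived from those definitions):

\[ \ln \left( \frac{PoD(a)}{1-PoD(a)} \right) = \alpha +\beta \ln a \]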

For signal response data, the following formula is commonly used to model the relation between the flaw size (a) and the quantitative response (\({\hat{a}} \)) [30, 39]:
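The commonly used signal response model is linear in the logarithms of both quantities. Assuming that standard form, and writing \(\varepsilon \) for a zero-mean random error term (a symbol assumed here), the relation is:

\[ \ln {\hat{a}} = \beta _0 +\beta _1 \ln a+\varepsilon \]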

where \(\beta _0 \) and \(\beta _1 \) are respectively the intercept and slope which can be estimated by maximum likelihood. The PoD(a) function will be calculated as:
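In the standard signal response formulation, which is assumed here, a flaw counts as detected when its response exceeds the threshold, so the PoD is the probability of that event:

\[ PoD(a) = P\left( \ln {\hat{a}} > \ln {\hat{a}} _{dec} \right) \]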

where \({\hat{a}} _{dec} \) is the decision threshold. Finally, the PoD function is written based on the continuous cumulative distribution function.
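Under the same standard model, and writing \(\sigma _\varepsilon \) for the standard deviation of the error term in the signal response relation (an assumed symbol), the PoD can be expressed through the cumulative distribution function as:

\[ PoD(a) = 1-F\left[ \frac{\ln {\hat{a}} _{dec} -\left( \beta _0 +\beta _1 \ln a \right) }{\sigma _\varepsilon } \right] \]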

where F is the continuous cumulative distribution function, here the cumulative log-normal distribution. The values of \(\beta _0 \), \(\beta _1 \) and \(\sigma \) are calculated with the Minitab software and are used to obtain the 95% lower confidence bound [30, 39].
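Both PoD models can be evaluated with the Python standard library. The sketch below assumes the standard log-logistic form for hit/miss data and a normal distribution of the residual of the logarithm of the response for signal response data. The function names are hypothetical and all parameter values are illustrative; none of them are fitted values from this study.

```python
import math


def pod_hit_miss(a, alpha, beta):
    """Log-logistic PoD: the log-odds equal alpha + beta * ln(a)."""
    z = alpha + beta * math.log(a)
    return 1.0 / (1.0 + math.exp(-z))


def pod_signal_response(a, beta0, beta1, sigma_eps, a_hat_dec):
    """PoD = 1 - F(z), with F the standard normal CDF applied to ln(a_hat)."""
    z = (math.log(a_hat_dec) - (beta0 + beta1 * math.log(a))) / sigma_eps
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return 1.0 - cdf


# Illustrative parameters only. With alpha = -2 and beta = 4 the median is
# mu = -alpha / beta = 0.5, so PoD is 0.5 at a = exp(0.5).
median_size = math.exp(0.5)
print("hit/miss PoD at the median size:", pod_hit_miss(median_size, -2.0, 4.0))
print("signal response PoD at threshold:",
      pod_signal_response(2.0, 0.0, 1.0, 0.3, 2.0))
```

Both functions return 0.5 where the flaw size sits exactly at the median or where the mean response equals the decision threshold, which gives a simple sanity check on any fitted parameter set.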

Figure 18 shows the PoD curves of the various data processing algorithms, together with their 95% lower confidence bounds, which were calculated using Minitab. This result shows that PCA provides the highest probability of detecting the deeper defects. In order to allow a better comparison, the performance of each technique has been measured: the detection performance of each approach is the area under the PoD curve divided by the total area. Figure 19 compares the PoD value for the different techniques, which represents the capability to detect the deepest defects. PoD measures the performance of each data processing algorithm based on the number of visible defects and their depth, and therefore provides an appropriate criterion for ranking the algorithms. In this research, the results of raw data, TSR, PCA, PLS, TSR + PCA and PPT were evaluated and compared. The raw data have very low performance due to the low camera resolution, camera vibration and non-uniform motion of the camera and source. The TSR algorithm reduces the noise and significantly increases the number of visible defects. Unexpectedly, the PPT and PLS algorithms have lower performance. In contrast to their behavior in static thermography, these algorithms appear to be sensitive to the motion noise and are not suitable choices for LST applications. PCA and TSR + PCA provide the highest performance in comparison to the other techniques. This indicates that TSR, PCA and especially their combination are robust against the motion noise and are the best choices for LST.
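The performance score described above, the area under the PoD curve divided by the total area, can be computed with a trapezoidal rule. The sketch assumes that the total area is the rectangle spanned by the sampled abscissa range and a PoD of 1; the function name and the sample points are hypothetical.

```python
def pod_performance(x, pod):
    """Area under a sampled PoD curve divided by the area of the bounding
    rectangle (x range times a maximum PoD of 1)."""
    if len(x) != len(pod) or len(x) < 2:
        raise ValueError("x and pod need the same length, at least 2 points")
    area = sum(0.5 * (pod[i] + pod[i + 1]) * (x[i + 1] - x[i])
               for i in range(len(x) - 1))
    return area / (x[-1] - x[0])


# Hypothetical sample points: a PoD rising linearly from 0 to 1 scores 0.5,
# while a technique that detects everything over the range scores 1.0.
print(pod_performance([0.0, 1.0, 2.0], [0.0, 0.5, 1.0]))
print(pod_performance([0.0, 1.0, 2.0], [1.0, 1.0, 1.0]))
```

A single number of this kind makes it straightforward to rank techniques, at the cost of hiding where along the curve the differences occur.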

8 Conclusion

In this research, the application of robotized line scan thermography (LST) has been investigated for the non-destructive inspection of large and complex composite structures. All the experiments and theoretical analyses were conducted on a carbon fiber reinforced polymer (CFRP) specimen with defects of different diameters located at different depths. For the theoretical analysis, the heat transfer process that takes place within the material during the LST inspection was simulated using COMSOL Multiphysics. The developed thermal model also considered different parameters associated with the LST setup, such as the amount of energy delivered to the specimen and the speed at which the IR camera and heating source move. The developed thermal model can be used not only to study the heat transfer process in the LST inspection, but also as a technical tool for the training of technicians and specialists. Furthermore, the model can be used as a pre-screening tool to obtain inspection parameters and to verify the reliability of the LST before it is applied in real tests. A parametric study was conducted to analyze the influence of the irradiation density and the scanning speed on the thermal contrast (or the detectability level) of the defects. From this study, it was observed that the simulated thermal curves of the defects with respect to time behave similarly to those observed in pulsed thermography. Furthermore, the results indicate that the maximum thermal contrast of the defects decreases as the scanning speed increases, because less heat energy is delivered to the specimen. Following the numerical simulation, several image processing techniques (TSR, PCT, PCT + TSR, PPT and PLST) commonly used to improve the quality of PT data were applied to the thermal maps obtained from the inspection by the robotized line scan thermography system.
After processing, the results were evaluated in terms of the PoD criterion, leading to the conclusion that principal component thermography and thermographic signal reconstruction improved the depth probing capabilities of LST. In both cases, it was possible to detect defects up to a depth of 2.1 mm from the surface of the specimen. Further investigations will focus on the implementation of machine learning methods to go beyond the current limit of 2.1 mm depth.

References

Bonavolonta, C., Valentino, M., Peluso, G., Barone, A.: Nondestructive evaluation of advanced composite materials for aerospace application using HTS SQUIDs. IEEE Trans. Appl. Supercond. 17(2), 772–775 (2007). doi:10.1109/TASC.2007.897193

Hull, D., Clyne, T.: An Introduction to Composite Materials. Cambridge University Press, New York (1996)

Matthews, D.F., Rawlings, R.D.: Composite Materials: Engineering and Science. Elsevier (1999)

Soutis, C.: Fibre reinforced composites in aircraft construction. Prog. Aerosp. Sci. 41(2), 143–151 (2005)

Lopez, F., Ibarra-Castanedo, C., Maldague, X., Nicolau, V.: Pulsed thermography signal processing techniques based on the 1d solution of the heat equation applied to the inspection of laminated composites. Mater. Eval. 72, 91–102 (2014)

Ibarra-Castanedo, C., Gonzalez, D., Klein, M., Pilla, M., Vallerand, S., Maldague, X.: Infrared image processing and data analysis. Infrared. Phys. Technol. 46(1), 75–83 (2004)

Ibarra-Castanedo, C., Genest, M., Servais, P., Maldague, X., Bendada, A.: Qualitative and quantitative assessment of aerospace structures by pulsed thermography. Nondestruct. Test. Eval. 22(2–3), 199–215 (2007)

Ibarra-Castanedo, C., Avdelidis, N.P., Grinzato, E.G., Bison, P.G., Marinetti, S., Plescanu, C.C., Bendada, A., Maldague, X.P.: Delamination detection and impact damage assessment of glare by active thermography. Int. J. Mater. Prod. Technol. 41(1–4), 5–16 (2011)

Dillenz, A., Zweschper, T., Busse, G.: Progress in ultrasound phase thermography. In: Aerospace/Defense Sensing, Simulation, and Controls. International Society for Optics and Photonics, pp. 574–579 (2001)

Favro, L., Han, X., Ouyang, Z., Sun, G., Sui, H., Thomas, R.: Infrared imaging of defects heated by a sonic pulse. Rev. Sci. Instrum. 71(6), 2418–2421 (2000)

McCann, D., Forde, M.: Review of NDT methods in the assessment of concrete and masonry structures. NDT & E Int. 34(2), 71–84 (2001)

Ibrahim, M.: Nondestructive evaluation of thick-section composites and sandwich structures: a review. Compos. A Appl. Sci. Manuf. 64, 36–48 (2014)

Gholizadeh, S.: A review of non-destructive testing methods of composite materials. Procedia Struct. Integr. 1, 50–57 (2016)

Ley, O., Godinez-Azcuaga, V.: Line scanning thermography and its application inspecting aerospace composites. In: 5th International Symposium on NDT in Aerospace, Singapore

Ibarra-Castanedo, C., Servais, P., Ziadi, A., Klein, M., Maldague, X.: RITA-Robotized Inspection by Thermography and Advanced processing for the inspection of aeronautical components. In: 12th International Conference on Quantitative InfraRed Thermography (2014)

Woolard, D.F., Cramer, K.E.: Line scan versus flash thermography: comparative study on reinforced carbon-carbon. In: Defense and Security, International Society for Optics and Photonics, pp. 315–323 (2005)

Aieta, N.V., Das, P.K., Perdue, A., Bender, G., Herring, A.M., Weber, A.Z., Ulsh, M.J.: Applying infrared thermography as a quality-control tool for the rapid detection of polymer-electrolyte-membrane-fuel-cell catalyst-layer-thickness variations. J. Power Sources 211, 4–11 (2012)

Kaltmann, D.: Quantitative line-scan thermographic evaluation of composite structures, Masters by Research, Aerospace, Mechanical and Manufacturing Engineering, RMIT University

Benitez, H., Ibarra-Castanedo, C., Bendada, A., Maldague, X., Loaiza, H., Caicedo, E.: Modified differential absolute contrast using thermal quadrupoles for the nondestructive testing of finite thickness specimens by infrared thermography. In: Electrical and Computer Engineering, 2006. CCECE’06. Canadian Conference on, IEEE, 2006, pp. 1039–1042

Ibarra-Castanedo, C., Benitez, H., Maldague, X., Bendada, A.: Review of thermal-contrast based signal processing techniques for the nondestructive testing and evaluation of materials by infrared thermography. In: Proceedings of the International Workshop on Imaging NDE (Kalpakkam, India, 25–28 April 2007), pp. 1–6 (2007)

Lopez, F., de Paulo Nicolau, V., Ibarra-Castanedo, C., Maldague, X.: Thermal numerical model and computational simulation of pulsed thermography inspection of carbon fiber reinforced composites. Int. J. Therm. Sci. 86, 325–340 (2014)

Oswald-Tranta, B., Sorger, M.: Scanning pulse phase thermography with line heating. Quant. InfraRed Thermogr. J. 9(2), 103–122 (2012)

Ibarra-Castanedo, C., Bendada, A., Maldague, X.: Thermographic image processing for NDT. In: IV Conferencia Panamericana de END, vol. 79. Citeseer (2007)

Vavilov, V., Nesteruk, D., Shirayev, V., Ivanov, A.: Some novel approaches to thermal tomography of CFRP composites. In: Proceedings of the 10th International Conference on Quantitative Infrared Thermography, FIE du CAO, pp. 433–440 (2010)

Vahid, P.H., Hesabi, S., Maldague, X.: The effect of pre-processing techniques in detecting defects of thermal images

Fariba, K., Saeed, S., Maldague, X.: Infrared thermography and NDT: 2050 horizon, QIRT

Lopez, F., Nicolau, V., Maldague, X., Ibarra-Castanedo, C.: Multivariate infrared signal processing by partial least-squares thermography. In: ISEM Conference (2013)

Lopez, F., Ibarra-Castanedo, C., Maldague, X., de Paulo Nicolau, V.: Analysis of signal processing techniques in pulsed thermography. In: SPIE Defense, Security, and Sensing, International Society for Optics and Photonics, 2013, pp. 87050W–87050W

Ibarra-Castanedo, C., Avdelidis, N.P., Grenier, M., Maldague, X., Bendada, A.: Active thermography signal processing techniques for defect detection and characterization on composite materials. In: SPIE Defense, Security, and Sensing, International Society for Optics and Photonics, 2010, pp. 76610O–76610O

Duan, Y.: Probability of detection analysis for infrared nondestructive testing and evaluation with applications including a comparison with ultrasonic testing, Ph.D. thesis, Universite Laval (2014)

Castanedo, C.I.: Quantitative subsurface defect evaluation by pulsed phase thermography: depth retrieval with the phase, Ph.D. thesis, Universite Laval (2005)

Rosipal, R., Kramer, N.: Overview and recent advances in partial least squares. In: Subspace, Latent Structure and Feature Selection. Springer, pp. 34–51 (2006)

Lopez, F., Ibarra-Castanedo, C., de Paulo Nicolau, V., Maldague, X.: Optimization of pulsed thermography inspection by partial least-squares regression. NDT & E Int. 66, 128–138 (2014)

Duan, Y., Servais, P., Genest, M., Ibarra-Castanedo, C., Maldague, X.P.: Thermopod: A reliability study on active infrared thermography for the inspection of composite materials. J. Mech. Sci. Technol. 26(7), 1985–1991 (2012)

Junyan, L., Yang, L., Fei, W., Yang, W.: Study on probability of detection (POD) determination using lock-in thermography for nondestructive inspection (NDI) of CFRP composite materials. Infrared Phys. Technol. 71, 448–456 (2015)

Wehling, P., LaBudde, R.A., Brunelle, S.L., Nelson, M.T.: Probability of detection (POD) as a statistical model for the validation of qualitative methods. J. AOAC Int. 94(1), 335–347 (2011)

Duan, Y., Huebner, S., Hassler, U., Osman, A., Ibarra-Castanedo, C., Maldague, X.P.: Quantitative evaluation of optical lock-in and pulsed thermography for aluminum foam material. Infrared Phys. Technol. 60, 275–280 (2013)

Georgiou, G.A.: Probability of detection (POD) curves: derivation, applications and limitations. Jacobi Consulting Limited, Health and Safety Executive Research Report 454

Muller, C., Elaguine, M., Bellon, C., Ewert, U., Zscherpel, U., Scharmach, M., Redmer, B., Ryden, H., Ronneteg, U.: POD (probability of detection) evaluation of NDT techniques for Cu-canisters for risk assessment of nuclear waste encapsulation. In: Proceedings of the 9th European Conference on NDT, Berlin, Germany, Sept. 2006, pp. 25–29

Acknowledgements

The authors are thankful for the support of the following organizations, which help to fund our research activities: Natural Sciences and Engineering Research Council of Canada, Canada Research Chair Secretariat, Ministère des Relations Internationales du Québec and Québec-Wallonia/Brussels Program, Visioimage Ltd., Centre.

Cite this article

Khodayar, F., Lopez, F., Ibarra-Castanedo, C. et al. Optimization of the Inspection of Large Composite Materials Using Robotized Line Scan Thermography. J Nondestruct Eval 36, 32 (2017). https://doi.org/10.1007/s10921-017-0412-x
