Abstract

This paper introduces a digital process for automatically identifying and classifying flaws in weld joints. To this end, several algorithms for computer-aided detection (CAD) and classification are presented: a preprocessing algorithm based on the Gaussian pyramidal transform, a contrast enhancement algorithm based on contrast stretching and normalization, a noise reduction algorithm based on blind image separation (BIS), a measure of noise separation quality, and an image segmentation algorithm based on the expectation-maximization (EM) method. In addition, an algorithm for detecting and classifying welding defects in radiographic images is presented. It uses the multi-scale wavelet packet (MWP) technique for feature extraction, and features are also extracted from transform domains to help achieve a higher classification rate. A support vector machine (SVM) is then applied to match the extracted features, and the classification error is computed for both normal and defect images. The results show that the classification error is much higher for defect images, because such images may contain various objects. The accuracy of the considered algorithms is determined by statistical measurements. Because of their simplicity and encouraging results, these algorithms have potential for further improvement and may motivate real-time flaw detection and classification in many CAD applications.

1 Introduction

Gamma radiography was introduced as a supplement to X-ray radiography to provide a means of inspecting welded assemblies and other engineering structures for internal defects such as blowholes and cracks [1]. Welded structures often have to be tested nondestructively, for example by industrial radiography using X-rays or gamma rays, ultrasonic testing, liquid penetrant testing, or eddy current testing [2].

Radiographic testing is a commonly used non-destructive testing (NDT) method for detecting internal welding flaws. It is based on the ability of \(\gamma \)-rays to pass through metal and other materials that are opaque to ordinary light [3]. Radiographic films are generally very dark, i.e., their optical density is rather high, so an ordinary scanner cannot provide sufficient illumination through the radiogram [4]. Gamma rays produce photographic records through the transmitted radiant energy, and the film areas corresponding to defects absorb more energy. The penetrating \(\gamma \)-rays therefore show variations in intensity on the receiving film, and the defects appear darker in the image [5]. The limited range of intensities that the image-capture device can accommodate is one source of the background in the image. In addition, the gray-level values of noise pixels are much higher than those of their immediate neighbors [6], so this noise represents the high-frequency components of the image. Moreover, the gray-level distribution of the original radiographic image is highly skewed toward the darker side; these dark regions form the image background, and the defects can hardly be recognized [6]. Nevertheless, radiography provides a means to examine the internal structure of a weld [6].

Gamma radiography has several advantages over other technologies [1]: inspection can be performed thoroughly, non-invasively, rapidly, and cheaply [7]. Gamma radiography testing (GRT) uses gamma radioisotopes to record material defects of welds on radiographic films [7].

Several researchers have studied defect extraction, detection, and classification from radiographic images using various techniques and approaches, as reported in 2013 [7]. Inanc [8] provides a brief survey of these radiography efforts and discusses the approaches adopted by various simulation efforts. Abd Halim et al. [9] extract the weld defect and evaluate its geometrical features. The defect boundary is detected by identifying black pixels within the eight-pixel neighborhood of a 3×3 filter, and the coordinates of the boundary pixels are stored and used to calculate the defect features. This information can help an interpreter assess a defect. Sundaram et al. [10] proposed an automatic method for extracting the various welding defects in radiographic weld images: the welding region is extracted with the c-means segmentation method, and different features of the welded region are then calculated.

Moreover, Kasban et al. [11] presented a cepstral approach for flaw detection in radiography images. Saber et al. [12, 13] proposed a method for the automatic detection of weld defects in radiographic images, in which cepstral features are extracted from the higher-order spectra (bispectrum and trispectrum) and neural networks are then used for feature matching. Alaknanda et al. [14] present an approach for processing radiographic images of weld specimens that considers the morphological aspects of the image.

Hassan and Awan [15] present a novel technique for detecting and classifying weld defects by means of geometric features. They localize defects by maximizing the interclass variance and minimizing the intraclass variance, extract features describing the shape of the localized objects in the segmented images, and classify the defects with an artificial neural network (ANN) using these geometric features. Liao [16] proposed a fuzzy expert system approach for classifying different types of welding flaws; his results indicate that this approach outperforms the other approaches considered in terms of classification accuracy. Furthermore, Lim et al. [17] developed an effective weld defect classification algorithm using a large database of simulated defects. A multi-layer perceptron (MLP) neural network was trained using shape parameters extracted from simulated images of weld defects. The optimized set of nine shape descriptors gave the highest classification accuracy, 100 %, when tested on 60 unknown simulated defects, and classification of 49 real defects from digitized radiographs produced a maximum overall classification accuracy of 97.96 %.

Humans are very good at qualitatively extracting information from images [18]. However, this ability is limited when quantitative information has to be obtained from the image. It is therefore important to identify welding defects in gamma radiography images without human intervention, and the interpretation of these radiographic films can be performed automatically [7]. Computer-aided detection (CAD) systems can thus help radiographers interpret films for flaw detection and classification, and combining a CAD scheme with expert knowledge can greatly improve detection and classification accuracy. This paper focuses on developing a CAD system for automatic flaw detection and classification in gamma radiography welding images. The paper is organized as follows. Section 2 presents the proposed approach for identifying welding flaws in gamma radiography images. Section 3 discusses the proposed defect classification algorithm. Section 4 presents the experimental results and discussion, and Section 5 concludes the work.

2 The Proposed Approach

In this section, an approach is proposed in which several algorithms are used to accurately identify defects in gamma radiography images: a preprocessing algorithm based on the Gaussian pyramidal transform, a contrast enhancement algorithm based on contrast stretching and normalization, a noise reduction algorithm based on blind image separation (BIS), and an image segmentation algorithm based on the expectation-maximization (EM) method. The block diagram of the proposed automatic defect identification and classification approach is shown in Fig. 1, and the functionality of each stage is described in the following subsections.

2.1 Preprocessing Algorithm Using Gaussian Pyramidal Transform

The background problem in welding radiography images must be overcome, so image preprocessing is an essential step. Here, preprocessing is performed with the Gaussian pyramidal transform, whose main strength is its ability to remove the background without affecting the bright areas of the image. Each weld image contains some background information, and weld images span a wide range of intensity levels. Preprocessing is therefore needed to remove the background, enhance the image, and normalize all line images to the same maximum gray level, so that every image has the same maximal intensity [19].

A single threshold value cannot be chosen to remove the background from all images, because the overall gray levels of different weld images are not consistent: a threshold that correctly removes the background from a bright image may remove all the pixels from a dark image. The images must therefore be adjusted to enable the use of a single threshold [19], and the Gaussian pyramidal transform is used for this purpose; the resulting preprocessing algorithm is presented in Fig. 2. With the pyramid wavelet transform, the image is decomposed into two subimages, and the subimage produced by the low-pass filter can be further decomposed into two subimages. The subimages produced by the high-pass filters have reduced entropy, so they can be coded and compressed effectively [20]. The accuracy of this algorithm is assessed with the statistical measurements described in the Appendix.
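The background-correction step can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the background is the coarse low-pass level of a Gaussian pyramid (built with the common 5-tap binomial kernel and expanded back by pixel repetition plus blurring), subtracts it, clips negative values, and rescales so that every image has the same maximum intensity of 1. The function names and the default number of pyramid levels are hypothetical choices.

```python
import numpy as np

# 5-tap binomial kernel commonly used to build Gaussian pyramids
_KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def blur(img):
    """Separable 5x5 binomial blur with reflected borders."""
    padded = np.pad(img, 2, mode="reflect")
    # filter along rows, then along columns
    rows = sum(_KERNEL[i] * padded[:, i:i + img.shape[1]] for i in range(5))
    return sum(_KERNEL[i] * rows[i:i + img.shape[0], :] for i in range(5))


def pyr_reduce(img):
    """REDUCE step: blur, then keep every second row and column."""
    return blur(img)[::2, ::2]


def pyr_expand(img, shape):
    """EXPAND step: upsample by pixel repetition, blur, crop to `shape`."""
    up = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)
    return blur(up)[: shape[0], : shape[1]]


def remove_background(img, levels=4):
    """Subtract a coarse pyramid level (the background) and normalize to max 1."""
    img = np.asarray(img, dtype=float)
    shapes = []
    coarse = img
    for _ in range(levels):
        shapes.append(coarse.shape)
        coarse = pyr_reduce(coarse)
    background = coarse
    for shape in reversed(shapes):
        background = pyr_expand(background, shape)
    corrected = np.clip(img - background, 0.0, None)
    peak = corrected.max()
    # every corrected image ends up with the same maximal intensity (1.0)
    return corrected / peak if peak > 0 else corrected
```

Increasing `levels` makes the background estimate smoother, so larger bright structures are kept in the corrected image.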

2.2 Contrast Enhancement Algorithm

The signal-to-noise ratios (SNRs) of different line images differ significantly; in particular, the SNR of some dark images is smaller than that of bright images. Therefore, when the images are normalized, the noise in dark images is amplified to a larger degree than in bright images. A dark image is one whose average gray level is lower than the expected average. Dark images are enhanced with a contrast stretch and normalization algorithm that keeps the bell shape of the gray-level distribution similar to the original one [19]; the algorithm is depicted in Fig. 3. It stretches the contrast of the image and normalizes it to the range from 0 to 1. It differs from standard stretching methods, which find the global minimum and maximum of the image and then normalize it using low and high threshold values: values below the low threshold are set to the low threshold, and values above the high threshold are set to the high threshold.

In contrast, the contrast stretch and normalization method uses threshold values adjacent to the minimum and maximum. First, the minimum value of the image itself is determined, and the pixels whose normalized values equal zero are identified; these zero values represent the image background [21]. Because the background normally consists of zero values, it is excluded. The same consideration is applied to the high threshold, so the first global maximum is also excluded. The low and high thresholds are thus taken as the values next to the zero minimum and the first global maximum. If the maximum is an isolated spike, the next value gives a better threshold; otherwise, the next value is very close to the maximum anyway. Thus, the resulting error is reduced.
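One possible reading of this threshold rule is sketched below: the low threshold is the first gray level above the image minimum (the zero background) and the high threshold is the gray level just below the global maximum (a possible spike), after which the image is clipped and scaled to [0, 1]. This interpretation and the function name are assumptions, not the authors' code.

```python
import numpy as np


def stretch_normalize(img):
    """Contrast stretch to [0, 1] using thresholds next to the min and max.

    Assumed reading of the method: the background (pixels at the image
    minimum, normally zero) and the first global maximum (a possible spike)
    are skipped when choosing the thresholds.
    """
    img = np.asarray(img, dtype=float)
    levels = np.unique(img)  # sorted distinct gray levels
    if levels.size >= 4:
        lo, hi = levels[1], levels[-2]  # values next to the min and max
    else:
        # too few distinct levels to skip both extremes: plain min-max
        lo, hi = levels[0], levels[-1]
    if hi <= lo:
        return np.zeros_like(img)
    # background pixels are clipped to `lo` and therefore map to 0
    return (np.clip(img, lo, hi) - lo) / (hi - lo)
```

For example, in an image with gray levels {0, 10, 20, 30, 255}, the thresholds become 10 and 30, so the spike at 255 no longer compresses the useful range.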

2.3 Noise Reduction Algorithm

Blind image separation (BIS) is an important research field in image processing [22, 23]. Here, a deflation approach is used to implement blind source-factor separation, and the resulting BIS-based noise reduction algorithm is shown in Fig. 4. The purpose of this section is to separate the noise from the welding gamma radiography image using BIS with five different methods: kurtosis maximization [23, 24], quadratic backward compatibility (Quadsvd), quadratic gradient (Quadgrad), Cubihopm, and cyclo-stationary sources (Cyclostat). The multiple-input multiple-output finite impulse response (MIMO-FIR) channel is modeled as [25]

where x(t), s(t), \(H^{(k)}=[h_{ij}^{(k)}]\), and K denote the m-dimensional output vector (the observed signal), the n-dimensional input vector (the source signal), the m × n matrix of channel impulse-response coefficients at lag k, and the channel order, respectively. The last equation can be rewritten as [25]

where H(z) denotes the transfer function, defined as the z-transform of the impulse response, \(H(z)=\sum \nolimits _{k=0}^{K} H^{(k)} z^k\). All signals and channel impulse responses are assumed to be real-valued [25].
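For reference, the MIMO-FIR model is commonly written as \(x(t)=\sum _{k=0}^{K} H^{(k)} s(t-k)\), which matches \(x(t)=H(z)s(t)\) when z acts as a unit-delay operator. The sketch below simulates this assumed form; the function name and the array layout are hypothetical.

```python
import numpy as np


def mimo_fir_mix(H, s):
    """Observed signals x(t) = sum_{k=0}^{K} H^(k) s(t - k).

    H : array of shape (K + 1, m, n); H[k] is the m x n tap matrix H^(k)
    s : array of shape (n, T) holding the n source signals
    Returns x with shape (m, T); samples before t = 0 are taken as zero.
    """
    H = np.asarray(H, dtype=float)
    s = np.asarray(s, dtype=float)
    num_taps, m, _ = H.shape
    T = s.shape[1]
    x = np.zeros((m, T))
    for k in range(min(num_taps, T)):
        # delay the sources by k samples (the z^k operator), then apply H^(k)
        delayed = np.zeros_like(s)
        delayed[:, k:] = s[:, : T - k]
        x += H[k] @ delayed
    return x
```

With K = 0 the model reduces to an instantaneous mixture x(t) = H^(0) s(t).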

The blind source-factor separation problem is formulated as [25]

where \(h_{ij}(z)\) is the \((i,j)\)th element of H(z). A filtered version \(y_j(t)\) of the source \(s_j(t)\) is given by [25]

The filter value is given by

where \(w_{ji}(z)=\sum \nolimits _{k=0}^{L} w_{ji}^{(k)} z^k\) and L, the order of \(w_{ji}(z)\), is a sufficiently large positive integer. The filter \(g_j(z)\) is obtained by adjusting the parameters \(w_{ji}^{(k)}\) of the filters \(w_{ji}(z)\), and \(y_j(t)\) is then given by [25]

The filtered source signal \(y_j(t)\) is extracted from the observed signals \(x_i(t)\) using a deflation approach. Then, the contributions \(c_{ij}(t)\), \(i=1,\ldots ,m\), of the source signal \(s_j(t)\) to the observations are computed from the filtered signal \(y_j(t)\). Subsequently, the difference signals \(x_i(t)-c_{ij}(t)\), \(i=1,\ldots ,m\), are calculated, and the process is repeated on them until the last contribution has been extracted, which solves the blind source-factor separation problem. The contribution signals are obtained as follows [25]

where \(a_{ij}(z)=h_{ij}(z)/g_j(z)\). It follows from the above that [25]

where \(y(t)=[y_1(t),\ldots ,y_n(t)]^T\) and \(A(z)=[a_{ij}(z)]\). The contribution signals \(c_{ij}(t)\) can thus be expressed through the transfer function A(z) once the filtered versions \(y_j(t)\), \(j=1,\ldots ,n\), have been obtained. A(z) is assumed to have no pole on the unit circle \(\left| z \right| =1\), and since both x(t) and y(t) are known, identifying A(z) reduces to a conventional system-identification problem. Consequently, a second-order correlation technique is used to find A(z) [25].
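The deflation idea (extract one source, remove its contribution, repeat) can be illustrated with kurtosis maximization. The sketch below is a simplification, not the method of [25]: it assumes an instantaneous mixture (K = 0, so no FIR filtering), whitens the observations, and uses the well-known FastICA-style fixed-point update for the kurtosis contrast, with Gram-Schmidt deflation against the directions already extracted. Sources are recovered only up to sign, scale, and order; the function names are hypothetical.

```python
import numpy as np


def whiten(x):
    """Center and whiten the observations (rows are channels)."""
    x = x - x.mean(axis=1, keepdims=True)
    d, E = np.linalg.eigh(np.cov(x))
    return E @ np.diag(1.0 / np.sqrt(d)) @ E.T @ x


def deflation_kurtosis(x, n_sources, n_iter=200, seed=0):
    """Extract sources one at a time by kurtosis maximization (deflation).

    Simplified instantaneous model (K = 0); convolutive mixing is not handled.
    """
    z = whiten(np.asarray(x, dtype=float))
    rng = np.random.default_rng(seed)
    extracted = []
    for _ in range(n_sources):
        w = rng.standard_normal(z.shape[0])
        w /= np.linalg.norm(w)
        for _ in range(n_iter):
            y = w @ z
            # fixed-point update for the kurtosis contrast: E[z y^3] - 3 w
            w_new = (z * y**3).mean(axis=1) - 3.0 * w
            # deflation: remove the directions of already-extracted sources
            for v in extracted:
                w_new -= (w_new @ v) * v
            w_new /= np.linalg.norm(w_new)
            converged = abs(abs(w_new @ w) - 1.0) < 1e-10
            w = w_new
            if converged:
                break
        extracted.append(w)
    return np.array(extracted) @ z
```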

2.3.1 Measurement of Noise Separation Quality Algorithm

The quality of the estimates \(s_{ij}^{spat}\) of the spatial images of all sources j for a set of test mixtures was evaluated by comparing them with the true source images \(s_{ij}^{img}\) using four objective performance criteria [26]. These criteria can be computed for all types of separation algorithms and do not require knowledge of the separating filters or masks [26]. They derive from the following decomposition of an estimated source image [26]

where \(s_{ij}^{img}(t)\) is the true source image and \(e_{ij}^{spat}(t)\), \(e_{ij}^{interf}(t)\), and \(e_{ij}^{artif}(t)\) are distinct error components representing spatial (or filtering) distortion, interference, and artifacts, respectively. The spatial distortion and interference components can be expressed as filtered versions of the true source images; they are computed by least-squares projection of the estimated source image onto the corresponding subspaces.

We are interested in evaluating the blind image separation (BIS) algorithm shown in Fig. 5, using the true source image together with its spatial-distortion, interference, and artifact components. The relative amounts of spatial distortion, interference, and artifacts are measured by three energy-ratio criteria expressed in decibels (dB): the source image to spatial distortion ratio (ISR), the source to interference ratio (SIR), and the source to artifacts ratio (SAR), defined by [26, 27]

where \(s_{ij}^{img}\) and \(s_{ij}^{spat}\) denote the true source image and its spatial (or filtering) distortion component, respectively.

where \(s_{ij}^{interf}\) denotes the interference component.

where \(s_{ij}^{artif}\) denotes the artifacts component. The total error is measured by the signal to distortion ratio (SDR) [26]
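Given the decomposition estimate = \(s_{ij}^{img}+e_{ij}^{spat}+e_{ij}^{interf}+e_{ij}^{artif}\), the four criteria can be sketched as energy ratios in dB. The sketch assumes the usual BSS Eval-style definitions (ISR against spatial distortion, SIR against interference, SAR against artifacts, and SDR against the total error) and that the error components have already been obtained by the least-squares projections; the function names are hypothetical.

```python
import numpy as np


def _ratio_db(num, den):
    """10 log10 of the energy ratio ||num||^2 / ||den||^2."""
    return 10.0 * np.log10(np.sum(num**2) / np.sum(den**2))


def separation_ratios(s_img, e_spat, e_interf, e_artif):
    """SDR, ISR, SIR and SAR (dB) for one estimated source image."""
    s_img, e_spat, e_interf, e_artif = (
        np.asarray(a, dtype=float) for a in (s_img, e_spat, e_interf, e_artif)
    )
    return {
        "SDR": _ratio_db(s_img, e_spat + e_interf + e_artif),
        "ISR": _ratio_db(s_img, e_spat),
        "SIR": _ratio_db(s_img + e_spat, e_interf),
        "SAR": _ratio_db(s_img + e_spat + e_interf, e_artif),
    }
```

Higher values mean less distortion of the corresponding kind; an error component that is exactly zero makes its ratio infinite.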

2.4 Image Segmentation Algorithm

Accurate image segmentation is an important step in gamma radiography image analysis. Its purpose is to use the regions extracted by preprocessing and to extract the segments that correspond to defects [5]. Here, an EM algorithm for segmenting welding gamma radiography images was studied. This algorithm converges rapidly to a reasonable approximate solution in few iterations, and a mean-field approximation is used to simplify the computation [28]. In mathematical statistics, EM plays an important role in computing maximum-likelihood estimates from missing or hidden data. A Gaussian mixture model (GMM) models a statistical distribution as a linear combination of several Gaussian distributions, and EM is a natural method for estimating its parameters: it automatically satisfies the constraints, requires no learning-rate parameter, and monotonically converges to a local maximum or a saddle point [29].

Image segmentation with the EM algorithm using a Gaussian mixture model of a defect gamma radiography image is presented in Fig. 6. Let \(\chi \) denote a random variable with distribution \(pr(\chi \vert \Theta )\), where \(\Theta \) is its parameter, and let \(X=\{x_1 ,x_2 ,\ldots ,x_N \}\) be an observed data set consisting of N independent samples. Maximum-likelihood estimation seeks the distribution parameter \(\Theta \) that maximizes the log-likelihood function given by

Instead of maximizing the log-likelihood function directly, the iterative computation maximizes a conditional expectation under the current estimate \(\Theta ^n\), which yields a new estimate \(\Theta ^{n+1}\) as follows [29]
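For a Gaussian mixture, the log-likelihood is \(L(\Theta )=\sum _{i=1}^{N}\log pr(x_i \vert \Theta )\), and each EM iteration computes \(\Theta ^{n+1}=\arg \max _{\Theta } E[\log pr(X,Y\vert \Theta )\vert X,\Theta ^n]\), where Y denotes the hidden component labels. The sketch below applies the standard closed-form updates to one-dimensional gray levels. It is an illustration under these assumptions (no mean-field approximation, quantile-based initialization, hypothetical function name), not the authors' code.

```python
import numpy as np


def em_gmm_1d(x, k, n_iter=100, tol=1e-8):
    """Fit a k-component 1-D Gaussian mixture to gray levels x with EM.

    Returns (weights, means, variances, responsibilities from the last E-step).
    """
    x = np.asarray(x, dtype=float).ravel()
    # deterministic initialization: means spread over the gray-level quantiles
    means = np.quantile(x, (np.arange(k) + 0.5) / k)
    variances = np.full(k, x.var() / k + 1e-6)
    weights = np.full(k, 1.0 / k)
    prev_ll = -np.inf
    for _ in range(n_iter):
        # E-step: joint densities p(x_i, component j | Theta^n)
        dens = weights * np.exp(-0.5 * (x[:, None] - means) ** 2 / variances)
        dens /= np.sqrt(2.0 * np.pi * variances)
        total = dens.sum(axis=1, keepdims=True) + 1e-300
        resp = dens / total
        ll = np.log(total).sum()  # log-likelihood L(Theta^n)
        # M-step: closed-form maximizers of the expected complete log-likelihood
        nk = resp.sum(axis=0)
        weights = nk / x.size
        means = (resp * x[:, None]).sum(axis=0) / nk
        variances = (resp * (x[:, None] - means) ** 2).sum(axis=0) / nk + 1e-6
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    return weights, means, variances, resp
```

A segmentation is then obtained by assigning each pixel to its most probable component, for example `resp.argmax(axis=1).reshape(image.shape)`.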

3 Flaws Classification

In the flaw classification step, each object found in the welding radiography image is assigned to one of the following classes: non-defect (false alarm), worm holes, porosity, linear slag inclusion, gas pores, lack of fusion, and cracks [5]. A set of multi-scale wavelet packet (MWP) features is extracted and used as input to a multi-class classifier that classifies each of the obtained objects.

3.1 Feature Extraction

3.1.1 Multi-scale Wavelet Packet (MWP) Feature Extraction

Welding radiography images usually consist of brief high-frequency components closely spaced in time, accompanied by low-frequency components closely spaced in frequency. Wavelets are therefore appropriate for analyzing welding gamma radiography images, since they exhibit good frequency resolution, which localizes the low-frequency components, together with finite time resolution, which resolves the high-frequency components [30]. The wavelet-packet transform (WPT), introduced by Coifman et al. [30], generalizes the link between multiresolution approximations and wavelets. The WPT may be thought of as a tree of subspaces, with \(\Omega _{0,0}\) representing the original signal space (the root node of the tree). Each node \(\Omega _{j,k}\) (j denoting the scale and k the subband index within the scale) is decomposed into two orthogonal subspaces: an approximation space \(\Omega _{j,k} \rightarrow \Omega _{j+1,2k}\) and a detail space \(\Omega _{j,k} \rightarrow \Omega _{j+1,2k+1}\) [30]. This is done by dividing the orthogonal basis \(\{\phi _j (t-2^jk)\}_{k\in Z}\) of \(\Omega _{j,k}\) into two new orthogonal bases: \(\{\phi _{j+1} (t-2^{j+1}k)\}_{k\in Z}\) of \(\Omega _{j+1,2k}\) and \(\{\psi _{j+1} (t-2^{j+1}k)\}_{k\in Z}\) of \(\Omega _{j+1,2k+1}\). The scaling and wavelet functions are given, respectively, in [29] as

where \(2^j\) and \(2^jk\) denote the dilation factor and the location parameter, respectively. The former measures the degree of compression or scaling, and the latter determines the time location of the wavelet.

This process is repeated J times, where \(J\le \log _2 N\) and N is the number of samples in the original signal, which results in \(J \times N\) coefficients: at each resolution level \(j=1, 2,\ldots ,J\), the N coefficients are divided into \(2^j\) coefficient blocks. This iterative process generates a binary wavelet-packet tree whose nodes represent subspaces with different frequency-localization characteristics, as shown schematically in Fig. 7 for three decomposition levels.
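The tree construction can be sketched with the Haar filter pair, chosen here only because it is the simplest orthonormal wavelet; the paper does not state which wavelet is used. Each node \(\Omega _{j,k}\) splits into \(\Omega _{j+1,2k}\) (approximation) and \(\Omega _{j+1,2k+1}\) (detail), so level j holds \(2^j\) blocks whose sizes add up to N. Nodes are stored in natural (Paley) order rather than frequency order, and the function names are hypothetical.

```python
import numpy as np


def haar_split(c):
    """Split coefficients into orthonormal Haar approximation and detail parts."""
    c = np.asarray(c, dtype=float)
    even, odd = c[0::2], c[1::2]
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)


def wavelet_packet_tree(x, J):
    """Full wavelet-packet tree down to level J.

    Returns a dict mapping (j, k) to the coefficients of node Omega_{j,k};
    node (j, k) splits into (j + 1, 2k) approximation and (j + 1, 2k + 1) detail.
    """
    x = np.asarray(x, dtype=float)
    if J > np.log2(x.size) or x.size % 2**J:
        raise ValueError("need J <= log2(N) and N divisible by 2**J")
    tree = {(0, 0): x}
    for j in range(J):
        for k in range(2**j):
            approx, detail = haar_split(tree[(j, k)])
            tree[(j + 1, 2 * k)] = approx
            tree[(j + 1, 2 * k + 1)] = detail
    return tree
```

Because the Haar split is orthonormal, each level preserves the signal energy, so the J levels together hold the J × N coefficients mentioned above.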

The wavelet-packet decompositions of \(\Omega _{0,0} \) into tree structured subspaces [30]

3.1.2 Wavelet Packet Based Feature Extraction Method

The problem of WPT-based feature extraction can be decomposed into two main tasks: feature construction and basis selection. In feature construction, the goal is to use the WPT coefficients generated at each subspace of the WPT tree to construct variables that represent the classes of the signals at hand. Basis selection, in turn, is concerned with identifying the best bases, i.e., those on which the constructed features discriminate most strongly between signals belonging to different classes [30]. Features are usually generated from the energy of the wavelet coefficients in each subband, expressed as the normalized filter-bank energy S(l), given by [30, 31]

where \(w_x\), l, and \(N_l\) denote the wavelet packet transform of image x, the subband frequency index, and the number of wavelet coefficients in the \(l^{th}\) subband, respectively. A more powerful measure is required to identify the information content of the different features, since the classification error depends on the overlap between the class likelihoods.
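A minimal sketch of this feature, assuming \(S(l)=\frac{1}{N_l}\sum _{m} w_x (l,m)^2\), i.e. the mean squared coefficient in subband l; some formulations take the logarithm of this quantity, offered here as an option. The input is a list of subband coefficient arrays, such as the leaf nodes of a wavelet-packet tree, and the function name is hypothetical.

```python
import numpy as np


def subband_energy_features(subbands, log=False):
    """Normalized filter-bank energy S(l) for each subband.

    S(l) = (1 / N_l) * sum_m w_x(l, m)**2, optionally log-compressed.
    """
    feats = np.array([np.mean(np.asarray(w, dtype=float) ** 2) for w in subbands])
    return np.log(feats + 1e-12) if log else feats
```

Dividing by \(N_l\) keeps subbands of different lengths comparable, so the feature vector does not simply favor the largest subbands.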

3.1.3 Feature Extraction from the Transform Domain

Flaw classification becomes challenging in the presence of noise, which may mask the signal and make the features unusable for identification. Noise degradation also acts like a low-pass filter on the signal, removing most of its characteristic features. As a result, many more coefficients are required in the presence of degradations. Various transform domains are therefore used to obtain a large feature vector suitable for defect classification under degradation. The discrete cosine transform (DCT), discrete sine transform (DST), and discrete wavelet transform (DWT) can be useful tools for overcoming degradation problems: features extracted from these transforms of the WPT flaw signal are added to the feature vector extracted from the signal itself.
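The augmentation of the feature vector with transform-domain coefficients can be sketched as below. The specific choices are assumptions: an orthonormal DCT-II, a DST-II scaled by \(\sqrt{2/n}\), a one-level Haar DWT, and keeping only the first `n_coeffs` coefficients of each transform. In practice the input would be the WPT-based flaw signal; all function names are hypothetical.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix (rows are basis vectors)."""
    k = np.arange(n)[:, None]
    t = np.arange(n)[None, :]
    C = np.sqrt(2.0 / n) * np.cos(np.pi * (t + 0.5) * k / n)
    C[0] /= np.sqrt(2.0)
    return C


def dst_matrix(n):
    """DST-II basis sin(pi (t + 1/2)(k + 1) / n), scaled by sqrt(2/n)."""
    k = np.arange(n)[:, None]
    t = np.arange(n)[None, :]
    return np.sqrt(2.0 / n) * np.sin(np.pi * (t + 0.5) * (k + 1) / n)


def haar_dwt(x):
    """One-level orthonormal Haar DWT (approximation, detail); len(x) even."""
    x = np.asarray(x, dtype=float)
    return (x[0::2] + x[1::2]) / np.sqrt(2.0), (x[0::2] - x[1::2]) / np.sqrt(2.0)


def transform_features(signal, n_coeffs=8):
    """The signal itself followed by its leading DCT, DST and DWT coefficients."""
    x = np.asarray(signal, dtype=float)
    n = x.size
    dct = dct_matrix(n) @ x
    dst = dst_matrix(n) @ x
    approx, _ = haar_dwt(x)
    return np.concatenate([x, dct[:n_coeffs], dst[:n_coeffs], approx[:n_coeffs]])
```

Keeping only the leading coefficients of each transform concentrates on the low-frequency content, which is what survives a low-pass-like degradation.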

3.2 Classification Using Support Vector Machine (SVM)

Normal images must also be classified so that they can be compared with defect images. We are also interested in computing the classification error for normal images in order to design a complete computer-aided detection (CAD) system, that is, a complete embedded system that decides whether an image is normal (free of defects) and, otherwise, determines the defect class present in the image, as depicted in Fig. 8. The object of interest in the normal images used for training is the bright section corresponding to good (defect-free) welding.

The SVM, based on statistical learning theory, was introduced by Vapnik [32]. SVM-based techniques have proven powerful in classification and regression and often outperform traditional learning machines. An SVM seeks a hyperplane that separates the input samples; when the original data cannot be separated by a hyperplane, it maps them into a higher-dimensional feature space using a kernel function. Popular kernels include [32] the linear kernel, the polynomial kernel of degree d, the Gaussian radial-basis function, and neural-network (sigmoid) kernels.

Suppose a training set S contains n labeled samples \((\mathbf {x}_1 ,y_1 ),\ldots ,(\mathbf {x}_n ,y_n )\), where \(\mathbf {x}_i \in R^N\) and \(y_i \in \{-1,1\}\), \(i=1,\ldots ,n\), and let \(\mathbf {\Phi (x)}\) denote the mapping from \(R^N\) to the feature space Z. The goal is to find the hyperplane with the maximum margin as [32]

such that for each point \(({\mathbf z}_i ,y_i )\) , where \({\mathbf z_i =\Phi (x_i )}\) ,

Because the data set is not linearly separable, a soft margin is allowed by introducing n non-negative slack variables \(\xi =(\xi _1 ,\xi _2 ,\ldots ,\xi _n )\). The constraint for each sample in Eq. 20 is therefore rewritten as [32]

The optimal hyperplane is obtained by solving the following problem:

Minimize

where C is a constant that tunes the balance between maximizing the margin and minimizing the classification error. The first term in Eq. 21 controls the margin between the support vectors, and the second term measures the amount of misclassification. The classification errors were computed by training a linear SVM, where the classification error is the fraction of misclassified observations.
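With \(\mathbf{z}_i=\Phi (\mathbf{x}_i)\), the standard soft-margin problem is to minimize \(\frac{1}{2}\Vert \mathbf{w}\Vert ^2+C\sum _{i=1}^{n}\xi _i\) subject to \(y_i (\mathbf{w}\cdot \mathbf{z}_i +b)\ge 1-\xi _i\) and \(\xi _i \ge 0\). For the linear kernel, the sketch below minimizes the equivalent hinge-loss form by plain full-batch subgradient descent. This solver, its step size, the number of epochs, and the function names are illustrative assumptions; practical systems use dedicated SVM solvers.

```python
import numpy as np


def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=500):
    """Soft-margin linear SVM trained by full-batch subgradient descent.

    Minimizes 0.5 * ||w||^2 + C * sum_i max(0, 1 - y_i (w . x_i + b));
    at the optimum each slack xi_i equals the hinge term. Labels y in {-1, +1}.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        viol = margins < 1.0  # samples inside the margin or misclassified
        grad_w = w - C * (y[viol, None] * X[viol]).sum(axis=0)
        grad_b = -C * y[viol].sum()
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def predict(w, b, X):
    """Class labels (+1 / -1) from the sign of the decision function."""
    return np.where(np.asarray(X, dtype=float) @ w + b >= 0.0, 1, -1)


def classification_error(y_true, y_pred):
    """Fraction of misclassified observations."""
    return float(np.mean(np.asarray(y_true) != np.asarray(y_pred)))
```

A larger C penalizes slack more heavily and narrows the margin; a smaller C tolerates more margin violations.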

The classification errors of both normal and defect images were computed using features extracted with the multi-scale wavelet packet, and the SVM was then used to measure the error of the classified objects. These objects may be the bright section of a normal image or, for a defect image, one of the defect classes described below. Two methods are used to compute the classification error: the re-substitution error and the leave-one-out (loo) error. The classification error based on the re-substitution method is given by

where \(\xi _i\), k, and \(\sigma \) denote the m-by-1 vector of class labels, the length of the class-label vector, and the measure of the data classified by the SVM, respectively. The classification error for the loo method is given by

where \(C_p\) and \(E_R\) denote the classifier performance-evaluation parameter and the error rate used as the performance measure, respectively.

In these experiments, the loo error method achieved the lowest error; consequently, the reported results are based on the loo method. The percentage error is then given by

Furthermore, the classification rate percentage reported in the following tables is computed according to the following relation
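The difference between the two error estimates can be sketched as follows. Re-substitution trains and tests on the same samples, whereas leave-one-out (loo) trains n times, each time holding out one sample for testing. To keep the sketch self-contained, a hypothetical nearest-centroid classifier stands in for the SVM, and the classification rate is assumed to be 100 % minus the percentage error; all function names are hypothetical.

```python
import numpy as np


def fit_centroids(X, y):
    """Hypothetical stand-in classifier: one centroid per class."""
    classes = np.unique(y)
    return classes, np.array([X[y == c].mean(axis=0) for c in classes])


def predict_centroids(model, X):
    """Assign each sample to the class of its nearest centroid."""
    classes, centroids = model
    d = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[d.argmin(axis=1)]


def resubstitution_error(X, y, fit=fit_centroids, predict=predict_centroids):
    """Train and test on the same data (optimistically biased)."""
    return float(np.mean(predict(fit(X, y), X) != y))


def loo_error(X, y, fit=fit_centroids, predict=predict_centroids):
    """Leave-one-out: train on n - 1 samples, test on the held-out one."""
    n = len(y)
    mistakes = 0
    for i in range(n):
        keep = np.arange(n) != i
        model = fit(X[keep], y[keep])
        mistakes += int(predict(model, X[i:i + 1])[0] != y[i])
    return mistakes / n


def error_and_rate_percent(error):
    """Percentage error and classification rate (assumed 100 % - error %)."""
    return 100.0 * error, 100.0 * (1.0 - error)
```

Both functions accept NumPy arrays `X` (samples by features) and `y` (labels); any classifier with the same `fit`/`predict` shape can be passed in place of the centroid stand-in.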

4 Experimental Results and Discussion

4.1 Preprocessing Results

The background correction of normal and defect gamma radiography images using the Gaussian pyramidal transform is depicted in Fig. 9: the original normal image is shown in Fig. 9a and its background-corrected image in Fig. 9b, and the original defect image is shown in Fig. 9c and its background-corrected image in Fig. 9d. The accuracy of the algorithm is evaluated in terms of statistical measurements for both normal and defect images, as listed in Table 1; these measurements are discussed in the Appendix. The results confirm that the Gaussian pyramidal transform achieves effective background correction without degrading image quality. Moreover, the background correction results for the normal image are better than those for the defect image, because the normal image contains fewer objects.

4.2 Contrast Enhancement Results

The contrast enhancement of normal and defect gamma radiography images using the contrast stretch and normalization method is depicted in Fig. 10: the original normal image is shown in Fig. 10a and its enhanced image in Fig. 10b, and the original defect image is shown in Fig. 10c and its enhanced image in Fig. 10d. The accuracy of the algorithm is evaluated in terms of statistical measurements for both normal and defect images, as listed in Table 2. The results show that the contrast stretch and normalization method achieves a high peak signal-to-noise ratio (PSNR) and a low mean square error (MSE) in both cases, with a smaller error and a higher signal-to-noise ratio (SNR) for the normal image.
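The Appendix with the exact statistical measurements is not part of this section, so the sketch below assumes the usual definitions \(MSE=\frac{1}{MN}\sum (I-\hat{I})^2\) over an M × N image and \(PSNR=10\log _{10}(peak^2/MSE)\), with `peak` set to 255 for 8-bit images or 1 for images normalized to [0, 1]. The function names are hypothetical.

```python
import numpy as np


def mse(reference, test):
    """Mean square error between two images of the same shape."""
    r = np.asarray(reference, dtype=float)
    t = np.asarray(test, dtype=float)
    return float(np.mean((r - t) ** 2))


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB; `peak` is the maximum gray level."""
    err = mse(reference, test)
    return float("inf") if err == 0 else 10.0 * np.log10(peak**2 / err)
```

For example, two 8-bit images that differ by one gray level everywhere have an MSE of 1 and a PSNR of about 48.1 dB.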

4.3 Noise Reduction Results

The image is denoised using the blind image separation (BIS) algorithm with five different BIS methods: Kurtosis, Quadsvd, Quadgrad, Cubihopm, and Cyclostat. These methods are compared with respect to the number of estimated separators in Fig. 11, which shows that the Kurtosis method achieves the lowest estimated signal separation. The methods are also evaluated in terms of mean square error (MSE) in Table 3, which shows that the Kurtosis and Cyclostat methods introduce lower errors than the other methods.

4.3.1 Measurement Results of Noise Separation Quality

The ordering and separation quality of the source spatial images estimated by blind image separation (BIS) were evaluated in terms of the signal to distortion ratio (SDR), source image to spatial distortion ratio (ISR), source to interference ratio (SIR), source to artifacts ratio (SAR), and best ordering of estimated source images (BOES) for different classes of welding radiography images, as listed in Table 4. The results indicate that BIS is effective for denoising welding gamma radiography images.

4.4 Image Segmentation Results

The bright area of the image represents the flawed area that should be selected for feature extraction and classification, since a flaw in the weld usually lies in the bright section of the image; the background, on the other hand, is the dark area around the welding section. Image segmentation was performed automatically based on the selection order k, which denotes the pixel order of the image (the number of pixel groups). Hard segmentation methods assume that each element cannot belong to two clusters at the same time. However, an element in the transition area between two clusters is hard to assign, because it may belong to multiple clusters with different probabilities [33]. Here, the EM algorithm with Gaussian distribution functions was used to segment the defect image. The segmentation results for a defect radiography image are shown in Fig. 12: the original defect image is shown in Fig. 12a, and the segmented images with orders k = 11, 9, and 2 are shown in Figs. 12b, c, and d, respectively. The results confirm that the mask out M of the segmented image increases as the pixel order k decreases. The mask out is given by

where \(i \in \{1, 2, \ldots, k\}\) denotes the group of each pixel and k denotes the order (the number of groups). \(P_i\) denotes the probability that the pixel belongs to group i, and \(C_i\) denotes the center of group i in RGB space. A better region of interest is obtained with the Gaussian order k = 9, in which case 100 % of the objects are segmented. Hence, the number of objects selected from a welding gamma radiography image depends on the selected Gaussian order k.
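The EM step can be illustrated with a minimal sketch. It fits a one-dimensional Gaussian mixture of order k to the pixel intensities and labels each pixel with its most probable component. The sketch assumes a grayscale image and initialises the component means at evenly spaced intensity quantiles. The paper's implementation works with group centers \(C_i\) in RGB space and is not reproduced here, and `em_gmm_segment` is a hypothetical name. The per-pixel posteriors it returns play the role of the probabilities \(P_i\).

```python
import numpy as np


def _e_step(x, means, variances, weights):
    """Posterior of each component for each pixel, plus the log-likelihood."""
    log_joint = (-0.5 * ((x[:, None] - means) ** 2 / variances
                         + np.log(2.0 * np.pi * variances)) + np.log(weights))
    log_norm = np.logaddexp.reduce(log_joint, axis=1, keepdims=True)
    return np.exp(log_joint - log_norm), float(log_norm.sum())


def em_gmm_segment(image, k, n_iter=200, tol=1e-6):
    """Segment a grayscale image into k classes with a 1-D Gaussian mixture.

    Returns (labels, posteriors, means, variances, weights). Components are
    sorted by mean, so label k - 1 is the brightest class.
    """
    x = np.asarray(image, dtype=float).ravel()
    # Initialise means at evenly spaced quantiles, equal weights, global variance.
    means = np.quantile(x, (np.arange(k) + 0.5) / k)
    variances = np.full(k, x.var() + 1e-6)
    weights = np.full(k, 1.0 / k)
    prev_ll = -np.inf
    for _ in range(n_iter):
        resp, ll = _e_step(x, means, variances, weights)   # E-step
        if ll - prev_ll < tol:                              # converged
            break
        prev_ll = ll
        # M-step: re-estimate weights, means and variances from posteriors.
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / x.size
        means = (resp * x[:, None]).sum(axis=0) / nk
        variances = (resp * (x[:, None] - means) ** 2).sum(axis=0) / nk + 1e-6
    resp, _ = _e_step(x, means, variances, weights)
    order = np.argsort(means)
    means, variances, weights = means[order], variances[order], weights[order]
    resp = resp[:, order]
    labels = resp.argmax(axis=1).reshape(np.shape(image))
    return labels, resp.reshape(np.shape(image) + (k,)), means, variances, weights
```

Sorting the components by mean makes the highest label the bright region. In this document that is the area selected for feature extraction.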

The expectation-maximization algorithm was evaluated quantitatively with statistical measurements for the Gaussian orders k = 2, k = 9 and k = 11, as listed in Table 5. The results confirm that the best measurements are obtained with the Gaussian order k = 9.

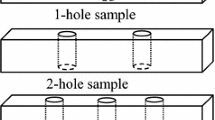

4.5 Defects Classification Results

The image segmentation algorithm extracts the region of interest (ROI), i.e. the bright area of the image, regardless of the type of weld. The classification algorithm, by contrast, is tested on both normal and defect images. One advantage of the current methodology is that it first detects whether a weld is normal or defective and then determines the defect class, using the classes listed in Table 6. Normal and defect gamma radiography images are therefore both used for classification. The defect types used to classify defective images are cracks, undercut, porosities, inclusions, lack of penetration, wormhole cavity defects and burn-through. The data sets for these defects are listed in Table 6. Normal images are included alongside defect images so that a complete embedded system can be implemented without relying on human visual interpretation. The system decides whether the tested image is defective or defect-free and, if it is defective, determines the defect class. The main object in a normal image is the bright weld section. In a defect image, the object of interest is one of the defect classes listed in Table 6. During the training phase, features are extracted from normal and defect images and saved in a database. These features come either from the original image or from one of its transform domains. They are then compared with the features extracted from the tested images, and the difference between the two represents the classification error. The classification error was computed for both normal and defect images at different numbers of extracted features, as shown in Figs. 13, 14, 15, and 16.

More than 40 normal and defect gamma radiography images are used for classification. A support vector machine (SVM) with a polynomial kernel function was used as the classifier. The parameters with the smallest generalization error were chosen, which gives the best parameter settings.
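The sketch below shows a polynomial-kernel SVM whose parameters are chosen by the smallest estimated generalization error. It rests on several assumptions:

- The classifier is a bias-free soft-margin SVM trained by dual coordinate ascent. The constant term of the kernel absorbs the bias.
- Generalization error is estimated by k-fold cross-validation.
- The grid of degrees and C values is only illustrative.

The paper does not state its solver or search grid, so this is not the authors' setup, and the function names are hypothetical. Standardise the features first, because polynomial kernels grow quickly with feature magnitude. The number of support vectors (compare Fig. 17) is `len(model["coef"])`.

```python
import itertools
import numpy as np


def poly_kernel(a, b, degree, coef0=1.0):
    """Polynomial kernel matrix K[i, j] = (a_i . b_j + coef0) ** degree."""
    return (a @ b.T + coef0) ** degree


def train_svm(x, y, degree, c, n_epochs=200, tol=1e-6):
    """Bias-free soft-margin SVM fitted by dual coordinate ascent.

    y holds +1/-1 labels. The constant coef0 inside the kernel acts as the
    bias, so only the box constraint 0 <= alpha_i <= c has to be enforced.
    """
    k = poly_kernel(x, x, degree)
    alpha = np.zeros(len(y))
    f = np.zeros(len(y))               # f[i] = sum_j alpha_j y_j K[j, i]
    for _ in range(n_epochs):
        largest_step = 0.0
        for i in range(len(y)):
            grad = y[i] * f[i] - 1.0   # gradient of the negated dual objective
            new = min(max(alpha[i] - grad / k[i, i], 0.0), c)
            step = new - alpha[i]
            if step != 0.0:
                f += step * y[i] * k[:, i]
                alpha[i] = new
                largest_step = max(largest_step, abs(step))
        if largest_step < tol:         # no coordinate moved noticeably
            break
    sv = alpha > 1e-8                  # support vectors have alpha > 0
    return {"x": x[sv], "coef": alpha[sv] * y[sv], "degree": degree}


def predict(model, x):
    """Sign of the kernel expansion over the support vectors."""
    scores = poly_kernel(x, model["x"], model["degree"]) @ model["coef"]
    return np.where(scores >= 0, 1, -1)


def select_parameters(x, y, degrees, cs, n_folds=5, seed=0):
    """Return (cv_error, degree, C) with the lowest k-fold validation error."""
    perm = np.random.default_rng(seed).permutation(len(y))
    folds = np.array_split(perm, n_folds)
    best = None
    for degree, c in itertools.product(degrees, cs):
        errors = []
        for fold in folds:
            train = np.setdiff1d(np.arange(len(y)), fold)
            model = train_svm(x[train], y[train], degree, c)
            errors.append(np.mean(predict(model, x[fold]) != y[fold]))
        err = float(np.mean(errors))
        if best is None or err < best[0]:
            best = (err, degree, c)
    return best
```

With the kernel's constant term standing in for the bias, each dual update is a clipped one-dimensional Newton step. This keeps the solver short enough to read alongside the text.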

A sampling frequency of 256 and a window spacing of 32 were used. The wavelet packet transform (WPT) with seven decomposition levels was applied to the gamma radiography images. A full wavelet packet tree of depth seven has \(2^7 = 128\) terminal nodes, so a total of 128 features are extracted from each gamma radiography image, and 486 feature vectors are obtained. These features are extracted from both normal and defect gamma radiography images with the wavelet packet based feature extraction method. The method is applied either to the image itself or to one of its transform domains: the discrete cosine transform (DCT), the discrete sine transform (DST), or the discrete wavelet transform (DWT). The results show that 64 of the features distinguish normal from defect gamma radiography images. The other 64 features take similar values for both classes and therefore cannot be used to distinguish between them.

Figs. 13, 14, 15, and 16 plot the classification error rate against the number of features extracted by WPT, for both normal and defect gamma radiography images. The features are taken, respectively, from the original images, from the DWT and the signal with DWT, from the DCT and the signal with DCT, and from the DST and the signal with DST. These figures show that the error decreases as the number of extracted features increases, and the number of extracted features increases with the selected decomposition level. The percentage error is therefore very large when only a few features are used. In that case the CAD system cannot discriminate the relevant object in the image. At the same number of extracted features, the classification error of the normal image is lower than that of the defect image, as illustrated in Fig. 13.
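The count of 128 features follows from the full wavelet packet tree: every node is split into approximation and detail bands at each of the seven levels. The minimal sketch below makes three assumptions, none of them stated in the paper:

- The wavelet is the orthonormal Haar filter pair.
- Each terminal node contributes one log-energy feature.
- "A sampling frequency of 256 and a window spacing of 32" means a 256-sample window moved in steps of 32 samples over a 1-D signal, for example a flattened image.

The function names are hypothetical.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def haar_split(x):
    """One orthonormal Haar analysis step: returns (approximation, detail)."""
    even, odd = x[0::2], x[1::2]
    return (even + odd) / SQRT2, (even - odd) / SQRT2


def wavelet_packet_nodes(signal, levels):
    """Split every node at every level; returns the 2**levels terminal nodes.

    Nodes come out in natural order (not frequency order), and the signal
    length must be divisible by 2**levels.
    """
    nodes = [np.asarray(signal, dtype=float)]
    for _ in range(levels):
        nodes = [band for node in nodes for band in haar_split(node)]
    return nodes


def wpt_energy_features(signal, levels=7):
    """Log-energy of each terminal node: 2**levels features per signal."""
    return np.array([np.log(np.sum(n ** 2) + 1e-12)
                     for n in wavelet_packet_nodes(signal, levels)])


def windowed_features(signal, window=256, hop=32, levels=7):
    """One feature vector per window of `window` samples, stepped by `hop`."""
    signal = np.asarray(signal, dtype=float)
    starts = range(0, len(signal) - window + 1, hop)
    return np.stack([wpt_energy_features(signal[s:s + window], levels)
                     for s in starts])
```

For an image array `img` with at least 256 pixels, `windowed_features(img.ravel())` gives one 128-dimensional vector per window. Because the Haar step is orthonormal, the node energies add up to the window's energy.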

Fig. 14 shows the classification error rate against the number of features extracted by WPT from the DWT and from the signal with DWT, for both normal and defect gamma radiography images. Features extracted from the DWT achieve a lower error for both normal and defect images. Fig. 15 shows the corresponding results for features extracted from the DCT and from the signal with DCT. Here too, features extracted from the DCT achieve a lower error for both normal and defect images. Fig. 16 shows the classification error rate for features extracted by WPT from the DST and from the signal with DST, for both normal and defect gamma radiography images.

Fig. 16 shows that features extracted from the DST achieve a lower error for both normal and defect images. The results also confirm that the signal with DCT achieves the lowest classification error for the defect image with a small number of extracted features. Fig. 17a and b show the number of support vectors (SVs) against the number of features and the decomposition level for normal and defect images, respectively. The number of SVs increases with both the number of features and the decomposition level.

Fig. 18 shows the classification error against the number of features extracted by WPT from seven inputs: the original signal, the DWT of the signal, the DWT with the signal, the DCT of the signal, the DCT with the signal, the DST of the signal and the DST with the signal. Fig. 18a gives the results for the normal image and Fig. 18b for the defect image. Features beyond the 64th are not included for classification purposes.

Tables 7, 8, 9, 10, 11, 12, and 13 give the classification rates at different decomposition levels for the defect and normal images. The features are extracted by WPT from, respectively, the original signal, DWT, signal with DWT, DCT, signal with DCT, DST, and signal with DST. These tables show that the highest classification rate is achieved at level 7 in all cases. Across domains, features extracted from the DCT give the highest classification rate, owing to the high energy compaction of the DCT.
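The energy compaction argument can be checked numerically. The sketch builds orthonormal DCT-II and DST-II matrices with NumPy. It then measures the fraction of energy held by the few largest coefficients of a smooth test profile. The paper does not state how it forms the "signal with DCT" and "signal with DST" inputs. Concatenating the signal with its transform, as `domain_signals` does, is only a hypothetical reading, and all names are illustrative.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix, so that X = C @ x."""
    k = np.arange(n)[:, None]
    m = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (m + 0.5) * k / n)
    c[0] /= np.sqrt(2.0)               # DC row needs the extra 1/sqrt(2)
    return c


def dst_matrix(n):
    """Orthonormal DST-II matrix, so that X = S @ x."""
    k = np.arange(1, n + 1)[:, None]
    m = np.arange(n)[None, :]
    s = np.sqrt(2.0 / n) * np.sin(np.pi * (m + 0.5) * k / n)
    s[-1] /= np.sqrt(2.0)              # highest-frequency row is rescaled
    return s


def energy_compaction(coeffs, m):
    """Fraction of total energy held by the m largest-magnitude coefficients."""
    e = np.sort(np.asarray(coeffs, dtype=float) ** 2)[::-1]
    return e[:m].sum() / e.sum()


def domain_signals(x):
    """Hypothetical 'of signal' and 'with signal' inputs for the WPT stage."""
    x = np.asarray(x, dtype=float)
    dct_x = dct_matrix(len(x)) @ x
    dst_x = dst_matrix(len(x)) @ x
    return {
        "DCT of signal": dct_x,
        "DCT with signal": np.concatenate([x, dct_x]),
        "DST of signal": dst_x,
        "DST with signal": np.concatenate([x, dst_x]),
    }
```

For a smooth profile such as a Gaussian bump, a handful of DCT coefficients hold nearly all the energy. The same number of raw samples holds only a small fraction of it.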

5 Conclusion

Automatic detection and classification of flaws in welding radiographic images is the main task of this paper. The approach introduced for this task comprises several algorithms: background correction, contrast enhancement, noise reduction, segmentation and classification. Background correction of the radiographic images is performed as a preprocessing step using Gaussian pyramidal transforms. It achieves better correction without changing the image quality. Contrast enhancement of the radiography images was carried out with the contrast stretch and normalization method, and statistical measurements were used to assess it. A noise reduction algorithm based on blind image separation with a deflation-based method was adopted using five different methods: kurtosis maximization, quadratic backward compatibility, quadratic grad, cubihopm, and cyclo-stationary sources. The results show that the kurtosis method achieves better mean square error (MSE) and signal separation than the other methods. The ordering and separation quality of the source spatial images estimated by BIS were also evaluated, and the results indicate that BIS is effective for de-noising the radiography images. Image segmentation based on expectation-maximization (EM) with a Gaussian mixture model was considered, and the results showed that it provides better segmentation with a Gaussian order of 9. Finally, an efficient classification algorithm for welding defects in gamma radiography images is proposed. It uses the multi-scale wavelet packet (MWP) technique to extract features from the defect images and their transform domains, and an SVM classifier to match the extracted features. The experimental results show that the multi-scale wavelet packet is highly sensitive to welding defects.
A classification rate of 99.5 % is achieved with WPT features extracted from the signal with DCT and the signal with DST at decomposition level 7. The results show that the signal with DCT is the most appropriate domain for WPT feature extraction from defect images. The achieved accuracy is satisfactory.

References

Mintern, R.A., Chaston, J.C.: Advantages of castings in the non-destructive testing welded structures. Platinum Metals Rev. 3(I), 12–16 (1959)

Thirugnanam, A., Santhosh, M.: Radiographic testing and ultrasonic testing in stainless steel weldment in tig welding. Middle East J. Sci. Res. 20(6), 749–751 (2014)

Wang, X., Wong, B.S., Tan, C.S.: Recognition of welding defects in radiographic images by using support vector machine classifier. Res. J. Appl. Sci. Eng. Technol. 2(3), 295–301 (2010)

Nafaa, N., Hamami, L., Ziou, D.: Image thresholding for weld defect extraction in industrial radiographic testing. Int. J. Comput. Control Quantum and Inf. Eng. 1(7) (2007)

Valavanis, I., Kosmopoulos, D.: Multiclass defect detection and classification in weld radiographic images using geometric and texture features. Expert Syst. Appl. 37, 7606–7614 (2010)

Wang, G., Warren Liao, T.: Automatic identification of different types of welding defects in radiographic images. NDT E Int. J. 35, 519–528 (2002)

Zahran, O., Kasban, H., El-Kordy, M., Abd El-Samie, F.E.: Automatic weld defect identification from radiographic images. NDT E Int. J. 57, 26–35 (2013)

Inanc, F.: Scattering and its role in radiography simulations. NDT E Int. J. 35, 581–593 (2002)

Abd Halim, S., Abd Hadi, N., Ibrahim, A., Manurung, Y.H.P.: The geometrical feature of weld defect in assessing digital radiographic image. In: IEEE International Conference on Imaging Systems and Techniques (IST), Penang, pp. 189–193 (2011)

Sundaram, M., Prabin Jose, J., Jaffino, G.: Welding defects extraction for radiographic images using c-means segmentation method. In: International Conference on Communication and Network Technologies (ICCNT), India, pp. 79–83 (2014)

Kasban, H., Zahran, O., Arafa, H., El-Kordy, M., Elaraby, S.M.S., Abd El-Samie, F.E.: Welding defect detection from radiography images with a cepstral approach. NDT E Int. J. 44(2), 226–231 (2011)

Saber, S., Selim, G.I.: Higher-order statistics for automatic weld defect detection. J. Softw. Eng. Appl. 6, 251–258 (2013)

Saber, S., Selim, G.I.: Bispectrum for welds defects detection from radiographic images. In: Proceeding of SPIE, Fifth International Conference on Digital Image Processing (ICDIP-2013), China, Vol. 8878 (2013)

Alaknanda, P.K., Anand, R.S.: Flaw detection in radiographic weld images using morphological approach. NDT E Int. J. 39, 29–33 (2006)

Hassan, J., Majid Awan, A.: Welding defect detection and classification using geometric features. In: The 10th International Conference on Frontiers of Information Technology, Islamabad, pp. 139–144 (2012)

Liao, T.W.: Classification of welding flaw types with fuzzy expert systems. Expert Syst. Appl. 25, 101–111 (2003)

Lim, T.Y., Ratnam, M.M., Khalid, M.A.: Automatic classification of weld defects using simulated data and an MLP neural network. Radiography 49(3) (2007)

Quackenbush, L.J.: A review of techniques for extracting linear features from imagery. Photogramm. Eng. Remote Sens. 70(12), 1383–1392 (2004)

Liao, T.W., Li, Y.: An automated radiographic NDT system for weld inspection: Part II-flaw detection. NDT E Int. J. 31(3), 183–192 (1998)

Olkkonen, H., Pesola, P.: Gaussian pyramid wavelet transform for multiresolution analysis of images. Graph. Models Image Process. 58(4), 394–398 (1996)

Loizou, C.P., Pantziaris, M., Seimenis, I., Pattichis, C.S.: Brain MR image normalization in texture analysis of multiple sclerosis. In: The 9th International Conference on Information Technology and Applications in Biomedicine (ITAB-2009), Larnaca, pp. 1–5 (2009)

Karray, E., Loghmari, M.A., Naceur, M.S.: Blind source separation of hyperspectral images in DCT-domain. In: The 5th Advanced satellite multimedia systems conference (ASMA) and the 11th signal processing for space communications workshop (SPSC). Tunis, Tunisia (2010)

Xiang, C., Chi, C.-Y., Wong, C.-W., Zhou, S., Yao, Y.: Non-cancellation multistage kurtosis maximization with prewhitening for blind source separation. In: Record of the Forty-First Asilomar Conference on Signals, Systems and Computers (ACSSC-2007), Pacific Grove, pp. 3–8 (2007)

Ning, C., De Leon, P.: Blind image separation through kurtosis maximization. In: Record of the Thirty-Fifth Asilomar Conference on Signals, Systems and Computers, Pacific Grove, pp. 318–322 (2001)

Kawamoto, M., Inouye, Y.: A deflation algorithm for the blind source-factor separation of MIMO-FIR channels driven by colored sources. IEEE Signal Process. Lett. 10(11), 343–346 (2003)

Vincent, E., Sawada, H., Bofill, P., Makino, S., Rosca, J.P.: First stereo audio source separation evaluation campaign: data, algorithms and results. Independent Component Analysis and Signal Separation, Lecture Notes in Computer Science 4666, 552–559 (2007)

Souidene, W., Aissa-El-Bey, A., Abed-Meraim, K., Beghdadi, A.: Blind image separation using sparse representation. In: Proceedings of IEEE International Conference on Image Processing (ICIP), San Antonio, Vol. 3, pp. 125–128 (2007)

Akira, H., Kudo, H.: Ordered-subsets EM algorithm for image segmentation with application to brain MRI. In: IEEE Conference on Nuclear Science, Lyon, Vol. 3, pp. 18-1–18-121 (2000)

Yueyang, T., Zhang, T.: The EM algorithm for generalized exponential mixture model. In: IEEE International Conference on Computational Intelligence and Software Engineering (CISE), Wuhan, pp. 1–4 (2010)

Khushaba, R.N., Kodagoda, S., Lal, S., Dissanayake, G.: Driver drowsiness classification using fuzzy wavelet packet based feature extraction algorithm. IEEE Trans. Biomed. Eng. 58(1), 121–131 (2011)

Khushaba, R.N., Al-Jumaily, A., Al-Ani, A.: Novel feature extraction method based on fuzzy entropy and wavelet packet transform for myoelectric control. In: International Symposium on Communications and Information Technologies (ISCIT-2007). Sydney, NSW (2007)

Wang, D., Shi, L., Heng, P.A.: Automatic detection of breast cancers in mammograms using structured support vector machines. Neurocomputing 72, 3296–3302 (2009)

http://www.mathworks.com/matlabcentral/fileexchange/34164-image-segmentation-with-em-algorithm

Pu, Y., Wei W., Xu, Q.: Image change detection based on the minimum mean square error. In: The 5th International Joint Conference on Computational Sciences and Optimization, Harbin, pp. 367–371 (2012)

Acknowledgments

Dr. H. Kasban provided database of welding radiography images.

Appendix

The proposed algorithms have been tested on multiple gamma radiographic welding images. Statistical measurements are used to evaluate the considered algorithms quantitatively and to compare them with one another. The following parameters have therefore been calculated for the corrected image: peak signal to noise ratio (PSNR), mean square error (MSE), entropy, signal to noise ratio (SNR), root mean square error (RMSE), mean absolute error (MAE), and Pearson correlation coefficient (PCC). The MSE is the average squared difference between a reference image and a distorted image. It is computed pixel by pixel by adding up the squared differences of all the pixels and dividing by the total pixel count. The sum of the MSE over each region is used as a cost value to segment the difference image computed by the absolute-valued log ratio [34].

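\[ MSE=\frac{1}{MN}\sum_{m=0}^{M-1}\sum_{n=0}^{N-1}\left[A(m,n)-B(m,n)\right]^2 \]

where A, B, M and N denote the corrected image, the input image, and the numbers of rows and columns of the input image, respectively. The PSNR is a measure of the peak error. It is given by

\[ PSNR=10\log_{10}\left(\frac{255^2}{MSE}\right) \]

where 255 is the maximum pixel value of an 8-bit image.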

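The entropy of the image, the SNR, the RMSE, the mean absolute error (MAE) and the Pearson correlation coefficient (PCC) are given by

\[ Entropy=-\sum_{j}P_j\log_2 P_j \]

where \(P_j\) denotes the probability of the j-th value of the difference between two adjacent pixels and \(\log_2\) denotes the base-2 logarithm.

\[ SNR=10\log_{10}\left(\frac{P_s}{P_n}\right) \]

where \(P_s\) and \(P_n\) denote the signal power and noise power, respectively.

\[ RMSE=\sqrt{MSE} \]

\[ MAE=\frac{1}{MN}\sum_{m=0}^{M-1}\sum_{n=0}^{N-1}\left|A(m,n)-B(m,n)\right| \]

\[ PCC=\frac{\sum_{m=0}^{M-1}\sum_{n=0}^{N-1}S_1 S_2}{MN\,\sigma_A\sigma_B} \]

where \(S_1 =A(m,n)-\frac{1}{MN}\sum_{m=0}^{M-1}\sum_{n=0}^{N-1} A(m,n)\) and \(S_2 =B(m,n)-\frac{1}{MN}\sum_{m=0}^{M-1}\sum_{n=0}^{N-1} B(m,n)\). \(\sigma_A\) and \(\sigma_B\) denote the standard deviations of A and B.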
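The measures listed in this appendix can be computed directly from their definitions. The minimal NumPy sketch below fills in choices the text leaves open, and all of them are assumptions:

- The images are 8-bit, so the peak value is 255.
- For the SNR, the corrected image A is the signal and the difference A − B is the noise.
- The entropy is taken from the histogram of horizontal neighbour differences.

The function names are hypothetical.

```python
import numpy as np


def quality_metrics(a, b, peak=255.0):
    """Full-reference measures between corrected image a and input image b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    err = a - b
    mse = np.mean(err ** 2)
    rmse = np.sqrt(mse)
    mae = np.mean(np.abs(err))
    psnr = np.inf if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)
    # Assumed SNR: signal power of a over the power of the difference a - b.
    snr = np.inf if mse == 0 else 10.0 * np.log10(np.mean(a ** 2) / mse)
    s1, s2 = a - a.mean(), b - b.mean()    # deviations from the mean (S_1, S_2)
    pcc = np.sum(s1 * s2) / np.sqrt(np.sum(s1 ** 2) * np.sum(s2 ** 2))
    return {"MSE": mse, "RMSE": rmse, "MAE": mae,
            "PSNR": psnr, "SNR": snr, "PCC": pcc}


def difference_entropy(image):
    """Entropy in bits of the histogram of horizontal neighbour differences."""
    img = np.asarray(image, dtype=np.int64)
    d = (img[:, 1:] - img[:, :-1]).ravel()
    _, counts = np.unique(d, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))
```

The PCC is computed as the normalised sum of products of deviations. This equals dividing by \(MN\,\sigma_A\sigma_B\) when population standard deviations are used.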

About this article

Cite this article

El-Tokhy, M.S., Mahmoud, I.I. Classification of Welding Flaws in Gamma Radiography Images Based on Multi-scale Wavelet Packet Feature Extraction Using Support Vector Machine. J Nondestruct Eval 34, 34 (2015). https://doi.org/10.1007/s10921-015-0305-9

DOI: https://doi.org/10.1007/s10921-015-0305-9