Abstract

A polar coordinate transformation is considered, which transforms the complex geometries into a unit disc. Some basic properties of the polar coordinate transformation are given. As applications, we consider the elliptic equation in two-dimensional complex geometries. The existence and uniqueness of the weak solution are proved, the Fourier–Legendre spectral-Galerkin scheme is constructed and the optimal convergence of numerical solutions under \(H^1\)-norm is analyzed. The proposed method is very effective and easy to implement for problems in 2D complex geometries. Numerical results are presented to demonstrate the high accuracy of our spectral-Galerkin method.

1 Introduction

Spectral methods employ global basis functions to approximate PDEs and have become increasingly popular in scientific computing and engineering applications, see, e.g., [2, 4, 5, 7, 10, 16, 19, 21, 23]. As long as the solutions are analytic, spectral methods possess exponential convergence.

However, classical spectral methods are directly applicable only to regular domains, such as rectangles or discs in the 2D case, which greatly limits their applications. To extend spectral methods to more complex geometries, domain decomposition spectral methods and spectral element methods have been developed, see, e.g., [12, 13, 17, 20, 22]. The basic idea is to partition the irregular region into smaller regular subdomains and to achieve global spectral approximations by constructing local basis functions on subdomains, interfaces and nodes. Although these methods can handle some problems on irregular regions with high-order accuracy, they greatly increase the programming complexity and the computational cost.

It is still a great challenge to construct direct spectral methods for solving problems in complex geometries. So far, there are basically two direct spectral methods: (i) embed the complex geometry into a larger regular domain, which belongs to the class of fictitious domain methods [3]; and (ii) map the complex geometry into a regular domain through an explicit and smooth mapping [15] or through the Gordon–Hall mapping [6].

There have been some attempts at using the fictitious domain approach to solve PDEs in complex geometries. Lui [14] proposed a spectral method with domain embedding to solve PDEs in complex geometries. The main idea is to embed the irregular domain into a regular one so that classical spectral methods can be applied. Gu and Shen [8, 9] developed efficient and well-posed spectral methods for elliptic PDEs in complex geometries using the fictitious domain approach, and provided a rigorous error analysis for the Poisson equation.

As for the mapping methods, Orszag [15] proposed a Fourier–Chebyshev spectral approach for solving the heat equation in the annular region by using an explicit mapping. Heinrichs [11] tested the diameter Fourier–Chebyshev spectral collocation approach for problems in complex geometries and focused on the clustering of collocation nodes and the improvement of the condition number. It should be pointed out that the authors in [11, 15] did not describe the algorithms in detail, nor did they prove the existence and uniqueness of the weak solutions and the convergence of the numerical solutions.

The aim of this paper is to develop a Fourier–Legendre spectral method for elliptic PDEs in two-dimensional complex geometries using the mapping method. To do this, we first consider a polar coordinate transformation that maps the complex geometry onto the unit disc. Then we present some basic properties of the polar coordinate transformation. As an application, we focus on the elliptic equation in two-dimensional complex geometries and construct a proper variational formulation. The existence and uniqueness of the weak solution are proved. We also propose a Fourier–Legendre spectral-Galerkin method with proper test and trial spaces and analyze the optimal convergence of the numerical solutions in the \(H^1\)-norm. The proposed method is effective and easy to implement for problems in complex geometries, whether or not the boundary curves can be represented explicitly. Ample numerical results demonstrate the high accuracy of our spectral-Galerkin method.

The remainder of this paper is organized as follows. In Sect. 2, we recall some basic results on the shifted Legendre polynomials. In Sect. 3, we first introduce the polar coordinate transformation and present its basic properties. Then we consider the elliptic equation in two-dimensional complex geometries and construct a proper variational formulation. The existence and uniqueness of the weak solution are proved. We also propose a Fourier–Legendre spectral-Galerkin method and describe its numerical implementation. In Sect. 4, we analyze the optimal convergence of the numerical solutions in the \(H^1\)-norm. Section 5 presents numerical results for elliptic equations defined in squircle-bounded domains, butterfly-bounded domains, cardioid-bounded domains, star-shaped domains and general domains, respectively. The final section contains some concluding remarks.

2 Preliminaries

For integer \(r\ge 0,\) we define the weighted Sobolev space \(H^{r}_{\chi }(I)\) as usual, with the inner product \((u,v)_{r,\chi ,I},\) the semi-norm \(|v|_{r,\chi ,I}\) and the norm \(\Vert v\Vert _{r,\chi ,I},\) respectively, where I is a certain interval. In cases where no confusion would arise, \(\chi \) (if \(\chi \equiv 1\)), r (if \(r=0\)) and I may be dropped from the notations. For simplicity, we denote \(v^{(k)}=\partial _{x}^k v=\frac{d^k v}{dx^k}.\) Moreover, let N be any positive integer and let \(\mathcal {P}_N(I)\) denote the set of all algebraic polynomials of degree at most N.

We next introduce the shifted Legendre polynomials. For \(\xi \in (-1,1),\) let \(P_n(\xi )\) be the Legendre polynomial of degree n, which satisfies the three-term recurrence relation (cf. [19]):

\[(n+1)P_{n+1}(\xi )=(2n+1)\xi P_n(\xi )-nP_{n-1}(\xi ),\quad n\ge 1, \tag{2.1}\]

with \(P_0(\xi )=1\) and \(P_1(\xi )=\xi .\)

By the coordinate transformation \(\xi =2r-1,\) we consider the shifted Legendre polynomial \(L_{n}(r):=P_{n}(2r-1)\) of degree n, which satisfies the three-term recurrence relation:

\[(n+1)L_{n+1}(r)=(2n+1)(2r-1)L_n(r)-nL_{n-1}(r),\quad n\ge 1, \tag{2.2}\]

with \(L_0(r)=1\) and \(L_1(r)=2r-1.\) Moreover, the shifted Legendre polynomials \(L_{n}(r)\) also satisfy the following relations:

The shifted Legendre polynomials \(L_{n}(r)\) possess the following orthogonality:

\[\int _0^1 L_m(r)L_n(r)\,dr=\frac{1}{2n+1}\delta _{mn}, \tag{2.4}\]

where \(\delta _{mn}\) is the Kronecker symbol.
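As a quick numerical check of the three-term recurrence and the orthogonality relation, the following Python sketch evaluates \(L_n(r)\) recursively and assembles the Gram matrix on \((0,1)\) with Gauss–Legendre quadrature. NumPy is assumed, and the function names `shifted_legendre` and `gram_matrix` are illustrative choices, not notation from this paper.

```python
import numpy as np

def shifted_legendre(n_max, r):
    """Return an array L with L[n] = L_n(r) for n = 0..n_max, r in [0, 1]."""
    r = np.asarray(r, dtype=float)
    L = np.empty((n_max + 1,) + r.shape)
    L[0] = 1.0
    if n_max >= 1:
        L[1] = 2.0 * r - 1.0
    for n in range(1, n_max):
        # (n+1) L_{n+1}(r) = (2n+1)(2r-1) L_n(r) - n L_{n-1}(r)
        L[n + 1] = ((2 * n + 1) * (2.0 * r - 1.0) * L[n] - n * L[n - 1]) / (n + 1)
    return L

def gram_matrix(n_max):
    """G[m, n] = int_0^1 L_m(r) L_n(r) dr, computed by Gauss-Legendre quadrature."""
    # n_max + 1 nodes integrate polynomials of degree <= 2*n_max + 1 exactly.
    xi, w = np.polynomial.legendre.leggauss(n_max + 1)
    r, w = 0.5 * (xi + 1.0), 0.5 * w   # map nodes and weights from (-1, 1) to (0, 1)
    L = shifted_legendre(n_max, r)
    return (L * w) @ L.T
```

Up to rounding, `gram_matrix(n_max)` should be the diagonal matrix with entries \(1/(2n+1)\), which is the standard Legendre orthogonality rescaled to \((0,1)\).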

3 Fourier–Legendre Spectral-Galerkin Method in Complex Geometries

In this section, we shall propose a Fourier–Legendre spectral-Galerkin method for problems in two-dimensional complex geometries.

3.1 A Polar Coordinate Transformation

In this subsection, we consider a polar coordinate transformation, which will be used to deal with PDEs in two-dimensional complex geometries.

For a simply-connected domain \(\Omega \) of complex geometry bounded by a simple closed curve \(\Gamma ,\) we define the polar coordinate transformation in the following form:

\[x=rR(\theta )\cos \theta ,\quad y=rR(\theta )\sin \theta , \tag{3.1}\]

where \(R(\theta )>0\) is only related to the angle \(\theta ,\) and represents the distance from the origin to the curve \(\Gamma \) (see Fig. 1). The coordinate transformation (3.1) converts the domain \(\Omega \) of complex geometry into the unit disc \(\Theta \). Particularly, the case \(R(\theta )\equiv 1\) is simplified to the classical polar transformation. Clearly, by the definition of \(R(\theta ),\) we have

Moreover, we always assume

Next, differentiating (3.1) with respect to x and y, respectively, we obtain

Accordingly, we have

where \(u(r,\theta ):=U(rR(\theta )\cos \theta ,rR(\theta )\sin \theta ).\)
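The map \((r,\theta )\mapsto (rR(\theta )\cos \theta ,rR(\theta )\sin \theta )\) is easy to experiment with numerically. The Python sketch below implements it and estimates its Jacobian determinant by central differences; a direct calculation gives \(rR^2(\theta )\) for this determinant, and the finite-difference value can be compared against that. The names `to_cartesian`, `jacobian_fd` and the sample radius `R_example` are hypothetical and serve only as an illustration.

```python
import numpy as np

def to_cartesian(r, theta, R):
    """Map (r, theta) in the unit disc to (x, y) = (r R cos(theta), r R sin(theta))."""
    rho = r * R(theta)
    return rho * np.cos(theta), rho * np.sin(theta)

def jacobian_fd(r, theta, R, h=1e-6):
    """Central finite-difference estimate of det d(x, y)/d(r, theta)."""
    x_rp, y_rp = to_cartesian(r + h, theta, R)
    x_rm, y_rm = to_cartesian(r - h, theta, R)
    x_tp, y_tp = to_cartesian(r, theta + h, R)
    x_tm, y_tm = to_cartesian(r, theta - h, R)
    x_r, y_r = (x_rp - x_rm) / (2 * h), (y_rp - y_rm) / (2 * h)
    x_t, y_t = (x_tp - x_tm) / (2 * h), (y_tp - y_tm) / (2 * h)
    return x_r * y_t - x_t * y_r

# Hypothetical smooth, positive, 2*pi-periodic boundary radius (illustration only).
R_example = lambda theta: 0.7 + 0.2 * np.sin(3 * theta)
```

For any interior point, `jacobian_fd(r, theta, R_example)` should agree with `r * R_example(theta) ** 2` to within the finite-difference error, and points with `r = 1` land on the boundary curve at distance \(R(\theta )\) from the origin.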

We are now ready to change to polar coordinates in the Laplacian. Differentiating (3.4) again with respect to x and y, respectively, we get

Applying the product rule for differentiation and the chain rule in two dimensions, we have

By (3.4), (3.6) and (3.7), a direct computation leads to the following results,

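Furthermore, differentiating (3.1) with respect to r and \(\theta \), respectively, we find that the Jacobian determinant of the polar transformation is

\[\frac{\partial (x,y)}{\partial (r,\theta )}=\begin{vmatrix} R(\theta )\cos \theta &amp; r\big (\partial _{\theta }R(\theta )\cos \theta -R(\theta )\sin \theta \big ) \\ R(\theta )\sin \theta &amp; r\big (\partial _{\theta }R(\theta )\sin \theta +R(\theta )\cos \theta \big ) \end{vmatrix}=rR^2(\theta ). \tag{3.9}\]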

Therefore

3.2 Fourier–Legendre Spectral-Galerkin Method

In order to more easily illustrate the Fourier–Legendre spectral-Galerkin method based on the polar coordinate transformation for problems in two-dimensional complex geometries, we consider the following elliptic equation with homogeneous boundary condition on \(\Omega \):

Applying the polar coordinate transformation (3.1)–(3.11), and denoting

we obtain

where \(u(r,\theta )\) satisfies the essential pole condition \(\partial _{\theta } u(0,\theta )=0.\)

By multiplying the first equation of (3.12) by \(rR^2(\theta )v\) and then integrating the result over \(\Theta \), we derive a weak formulation of (3.12). It is to find \(u\in {}_0H^1_p(\Theta )\) such that for any \(v\in {}_0H^1_p(\Theta )\),

and

equipped with the semi-norm \(|\cdot |_{1,p,\Theta }\) and norm \(\Vert \cdot \Vert _{1,p,\Theta }\) as follows:

Hereafter, \(v(r,\theta )=V(rR(\theta )\cos \theta ,rR(\theta )\sin \theta ).\)

Next, let

Clearly, we have \(a(u,v)=b(U,V)\). Since

and by the Poincaré inequality,

where \(C>0\) depends only on \(\Omega ,\) we deduce

This implies that \(a(\cdot ,\cdot )\) is continuous and coercive in \({}_0H^{1}_{p} (\Theta )\). Hence, by the Lax–Milgram lemma, (3.13) admits a unique solution as long as \(f\in ({}_0H^1_p(\Theta ))^\prime .\)

Remark 3.1

For problem (3.12) with nonhomogeneous boundary condition \(u(1,\theta ) = h(\theta ),\) we may easily make the variable transformation \(w(r,\theta )=u(r,\theta )-rh(\theta )\) to transform the original problem to a problem with homogeneous boundary condition.
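The lifting in Remark 3.1 can be sketched in a few lines of Python. The helper names `lift` and `homogenize` are hypothetical, and the sample functions used to exercise them are made up for illustration. The sketch only demonstrates that \(w=u-rh(\theta )\) vanishes on \(r=1\) and leaves the value at the pole unchanged; in an actual computation the right-hand side of the equation must also be adjusted by the contribution of \(rh(\theta )\).

```python
import numpy as np

def lift(r, theta, h):
    """Boundary lifting r*h(theta): equals h(theta) on r = 1 and vanishes at r = 0."""
    return r * h(theta)

def homogenize(u, h):
    """Return w(r, theta) = u(r, theta) - r*h(theta)."""
    return lambda r, theta: u(r, theta) - lift(r, theta, h)

# Made-up boundary data and a made-up function u with u(1, theta) = h(theta).
h_example = lambda theta: 2.0 + np.cos(theta)
u_example = lambda r, theta: r**2 * h_example(theta) + 3.0 * (1.0 - r**2)
w_example = homogenize(u_example, h_example)
```

Because the lifting carries the factor r, it is single-valued at the pole, so the transformed unknown keeps the same value there as the original one.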

We next propose a Fourier–Legendre spectral-Galerkin method for (3.13). To do this, let

Obviously, by choosing \(\varphi _k(r) = L_k(r) -L_{k+2}(r) \) and \(\psi _k(r) = L_k(r)-L_{k+1}(r),\) we have from (2.3) that

\[\varphi _k(0)=\varphi _k(1)=0,\qquad \psi _k(1)=0.\]
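The boundary values of the two families, \(\varphi _k(0)=\varphi _k(1)=0\) and \(\psi _k(1)=0,\) follow from \(L_n(0)=(-1)^n\) and \(L_n(1)=1\) and can be confirmed numerically. The following Python sketch builds both families with NumPy's `Legendre.basis` on the domain \([0,1]\); the helper names `L`, `phi` and `psi` are illustrative.

```python
import numpy as np
from numpy.polynomial.legendre import Legendre

def L(n):
    """Shifted Legendre polynomial L_n(r) = P_n(2r - 1) on [0, 1]."""
    return Legendre.basis(n, domain=[0, 1])

def phi(k):
    """phi_k = L_k - L_{k+2}: vanishes at both r = 0 and r = 1."""
    return L(k) - L(k + 2)

def psi(k):
    """psi_k = L_k - L_{k+1}: vanishes at r = 1 but not at r = 0."""
    return L(k) - L(k + 1)

for k in range(4):
    # phi_k is zero at both endpoints; psi_k is zero only at r = 1.
    print(k, phi(k)(0.0), phi(k)(1.0), psi(k)(0.0), psi(k)(1.0))
```

Note that \(\psi _k(0)=2(-1)^k\ne 0,\) so only the \(\varphi _k\) family vanishes at the pole \(r=0.\)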

Denote

Then, for any \(u(r,\theta ) \in {}_0X_{M,N}(\Theta ),\) \(u(1,\theta )=0\) and the pole condition \(\partial _{\theta }u(0,\theta )=0\) is satisfied. We expand the numerical solution \(u_{MN}(r,\theta )\) as

The Fourier–Legendre spectral-Galerkin approximation for (3.13) is to find \( u_{MN}(r,\theta ) \in {}_0X_{M,N}(\Theta )\) such that for any \(v\in {}_0X_{M,N}(\Theta ),\)

3.3 Numerical Implementation

We describe in this subsection how our method can be efficiently implemented. To this end, we first consider the r-direction. Let

and

Clearly, the matrices \(A^{q,m,n},\) \(B^{q,m,n}\) and \(C^{q,m,n}\) can be computed precisely according to the recurrence relations (2.2)–(2.4).

We next describe the implementation of the \(\theta \)-direction. Let \( 1\le k,j\le M \) and denote the matrices \(D^{1}=\big (d^{1}_{k,j}\big ),\) \(D^{2}=\big (d^{2}_{k,j}\big ),\) \(D^{3}=\big (d^{3}_{k,j}\big ),\) \(D^{4}=\big (d^{4}_{j}\big )\) and \(D^{5}=\big (d^{5}_{j}\big )\) with the elements

Moreover, set the matrix \(E=\big (e_{k,j}\big )\) with the elements \(e_{k,j}=k^2\pi \delta _{kj}.\) Similarly, denote the following matrices and their corresponding elements

and

The matrices mentioned above can be efficiently approximated by some suitable quadrature formulas. For deriving a compact matrix form of (3.22), we introduce the matrices

where \(\otimes \) represents the Kronecker product, i.e., \(A \otimes B=(a_{ij}B).\) We also denote

Then, the compact matrix form of (3.22) is as follows,

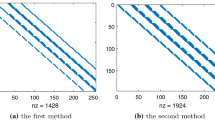

It should be pointed out that the matrix \(C^{1,1,1}\) is diagonal, the matrices \(A^{1,1,1}\), \(A^{-1,0,0}\), \(A^{0,1,0}\), \(B^{1,1,1}\) and \(B^{0,1,0}\) are tridiagonal, the matrix \(C^{1,0,0}\) is pentadiagonal, and the matrices \(A^{1,0,0}\) and \(B^{1,0,0}\) are heptadiagonal. Moreover, the matrix \(\textbf{W}\) in (3.23) is symmetric. The system (3.23) can be solved in the same way as the usual spectral-Galerkin methods for PDEs with variable coefficients in regular geometries (cf. [18]).
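To illustrate how such banded structure and the Kronecker product arise in practice, the Python sketch below assembles one r-direction matrix by quadrature and combines it with a small \(\theta \)-direction matrix. The matrix chosen here, \(\int _0^1 r\varphi _k\varphi _j\,dr\) with \(\varphi _k=L_k-L_{k+2},\) is an assumption made for illustration; whether it coincides with one of the matrices named above depends on their precise definitions. The names `weighted_mass` and `bandwidth` and the diagonal matrix `D` are hypothetical.

```python
import numpy as np
from numpy.polynomial.legendre import Legendre, leggauss

def phi(k):
    """phi_k = L_k - L_{k+2} with L_n the shifted Legendre polynomial on [0, 1]."""
    return Legendre.basis(k, domain=[0, 1]) - Legendre.basis(k + 2, domain=[0, 1])

def weighted_mass(N):
    """M[k, j] = int_0^1 r phi_k(r) phi_j(r) dr for k, j = 0..N-1 (Gauss quadrature)."""
    xi, w = leggauss(N + 3)              # exact for degree <= 2N + 5 >= 2N + 3
    r, w = 0.5 * (xi + 1.0), 0.5 * w     # map nodes and weights to (0, 1)
    P = np.array([phi(k)(r) for k in range(N)])
    return (P * (w * r)) @ P.T

def bandwidth(A, tol=1e-12):
    """Largest |k - j| for which |A[k, j]| exceeds tol."""
    k, j = np.nonzero(np.abs(A) > tol)
    return int(np.max(np.abs(k - j)))

M = weighted_mass(8)
# Kronecker product D (x) M = (d_ij M): each entry of D scales a full copy of M.
D = np.diag([1.0, 4.0])                  # hypothetical 2 x 2 theta-direction matrix
W = np.kron(D, M)                        # 16 x 16 block matrix
```

Here `bandwidth(M)` is 3, i.e. `M` is heptadiagonal, because multiplication by r shifts the polynomial degree by at most one while \(\varphi _k\) couples degrees k and k+2. In a production code one would store such matrices in a sparse or banded format rather than as dense arrays.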

4 Convergence Analysis

In this section, we shall analyze the numerical error of scheme (3.22).

We first consider the Legendre orthogonal approximation. Denote by \(\omega ^{\alpha ,\beta }:=\omega ^{\alpha ,\beta }(r)=(1-r)^{\alpha }r^{\beta }\) the Jacobi weight function of index \((\alpha ,\beta ),\) which is not necessarily in \(L^1(I).\) Define the \(L^2\)-orthogonal projection \(\pi ^{0,0}_{N}:L^2(I)\rightarrow \mathcal {P}_{N}(I)\) such that

Further, for \(k\ge 1,\) we define recursively the \(H^k\)-orthogonal projections \(\pi _N^{-k,-k}:H^k(I)\rightarrow \mathcal {P}_N(I)\) such that

Next, for any nonnegative integers \(s\ge k\ge 0,\) define the Sobolev space as follows:

We have the following error estimate on \(\pi _N^{-k,-k}u.\)

Lemma 4.1 (cf. [1]) \(\pi _N^{-k,-k}u\) is a Legendre tau approximation of u such that

Further suppose \(u\in H^{s,k}(I)\) with \(s\ge k.\) Then for \(N\ge k,\)

We next consider the Fourier orthogonal approximation. Let \(\Lambda =(0,2\pi )\) and \(H^m(\Lambda )\) be the Sobolev space with norm \(\Vert \cdot \Vert _{m,\Lambda }\) and semi-norm \(|\cdot |_{m,\Lambda }.\) For any non-negative integer m, \(H^m_{p}(\Lambda )\) denotes the subspace of \(H^m(\Lambda ),\) consisting of all functions whose derivatives of order up to \(m-1\) have the period \(2\pi .\) In particular, \(L^2(\Lambda )=H^0_{p}(\Lambda ).\)

Let M be any positive integer, and \(\widetilde{V}_{M}(\Lambda )=\hbox {span}\{~e^{il\theta }~|~|l|\le M\}.\) We denote by \(V_{M}(\Lambda )\) the subset of \(\widetilde{V}_{M}(\Lambda )\) consisting of all real-valued functions. The orthogonal projection \(P_{M}:\) \(L^2(\Lambda )\rightarrow V_{M}(\Lambda )\) is defined by

It was shown in [4] that for any \(v \in H^m_{p}(\Lambda )\), integer \(m\ge 0\) and \(\mu \le m\),

We now turn to the mixed Fourier–Legendre orthogonal approximation. We introduce the space

equipped with the following semi-norm and norm,

Since \(|\frac{\partial _{\theta }R(\theta )}{R(\theta )}|\) is bounded above, we deduce readily that \({}_{0}\widetilde{H}^1_{p}(\Theta )\) is the subspace of \({}_0H^{1}_{p} (\Theta ).\) Next, we define the orthogonal projection \({}_0P^1_{M,N}\): \({}_0\widetilde{H}^{1}_{p} (\Theta )\rightarrow {}_0X_{M,N}(\Theta )\) by

Theorem 4.1

For any \(v \in {}_{0}\widetilde{H}^1_{p}(\Theta )\cap H^{m,1}(I,L^2(\Lambda ))\cap L^2(I,H^s_p(\Lambda ))\), integers \(m\ge 1\) and \(s\ge 1\),

provided that the norms mentioned above are bounded.

Proof

By the projection theorem,

Take \(\phi =\pi _N^{-1,-1}P_Mv.\) Clearly, by Lemma 4.1 we know \(\phi \in {}_0X_{M,N}(\Theta ).\) With the aid of (4.1) and Lemma 4.1, we deduce that

Next, due to \(\partial _\theta P_M v=P_M \partial _\theta v\), we use (4.1) and Lemma 4.1 again to obtain that

In the same manner, we verify that

Finally, the desired result comes immediately from a combination of (4.4)–(4.6). \(\square \)

Let u and \(u_{MN}\) be the solutions of the problem (3.13) and the scheme (3.22), respectively, and let U and \(U_{MN}\) be the corresponding functions in the xy-plane. We have the following convergence results.

Theorem 4.2

If \(R(\theta )\) satisfies the conditions (3.2) and (3.3), then for any \(u \in {}_{0}\widetilde{H}^1_{p}(\Theta )\cap H^{m,1}(I,L^2(\Lambda ))\cap L^2(I,H^s_p(\Lambda ))\) with integers \(m\ge 1\) and \(s\ge 1\), we have

provided that the norms mentioned above are bounded.

Proof

Hence

Next, according to (3.17),

This means \(\Vert u-u_{MN}\Vert _{1,p,\Theta }\le c\Vert u-{}_0P^1_{M,N}u\Vert _{1,p,\Theta }.\) Moreover, since \(|\frac{\partial _{\theta }R(\theta )}{R(\theta )}|\) is bounded above, we use the Cauchy–Schwarz inequality to get

and

The above two inequalities imply \(\Vert v\Vert ^2_{1,p,\Theta }\le c \Vert v\Vert ^2_{\widetilde{H}^1(\Theta )},\) where c is a positive constant depending on \(|\frac{\partial _{\theta }R(\theta )}{R(\theta )}|.\) Therefore, by Theorem 4.1 we obtain

This ends the proof. \(\square \)

5 Numerical Results

In this section, we present some numerical results for problem (3.11) defined in a convex or concave domain. We first consider some special domains where the functions \(R(\theta )\) have exact expressions, and then consider general domains where the functions \(R(\theta )\) do not have specific expressions. We define the \(L^2\)- and \(L^\infty \)- errors by

5.1 Squircle-Bounded Domains

We consider the simply connected domain bounded by a squircle

A squircle, also known as a Lamé curve or Lamé oval, is a mathematical shape with properties between those of a square and those of a circle. It is a special case of the superellipse. As p increases, the curve becomes more and more like a square with slightly rounded corners, and the limit as \( p \rightarrow \infty \) is a square, which can be seen from Fig. 2.
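For a curve of this type the boundary radius is available in closed form. The Python sketch below assumes the unit squircle \(|x|^p+|y|^p=1\) (an assumption: the curve used in this section may carry an additional scaling) and substitutes \(x=R\cos \theta ,\) \(y=R\sin \theta \) to obtain \(R(\theta )=(|\cos \theta |^p+|\sin \theta |^p)^{-1/p}.\) The function name `squircle_radius` is illustrative.

```python
import numpy as np

def squircle_radius(theta, p):
    """R(theta) for the assumed unit squircle |x|^p + |y|^p = 1."""
    c, s = np.abs(np.cos(theta)), np.abs(np.sin(theta))
    return (c ** p + s ** p) ** (-1.0 / p)

theta = np.linspace(0.0, 2.0 * np.pi, 9)
for p in (2, 4, 7, 200):
    # Radius along the diagonal theta = pi/4; it approaches sqrt(2) as p grows.
    print(p, squircle_radius(np.pi / 4, p))
```

For \(p=2\) the radius is identically 1 (the unit circle), and along the diagonal \(\theta =\pi /4\) it equals \(2^{1/2-1/p},\) which tends to \(\sqrt{2},\) the corner of the limiting square.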

5.1.1 p is Even

When p is even, the squircle (5.1) becomes

from which we can easily know

We first take \(p=4\), \(\mu =2.5\) and test the smooth exact solution of the elliptic equation (3.11),

Clearly

The domain and the exact solution (5.4)

\(L^2\)- and \(L^{\infty }\)- errors of (5.4) versus N with different M

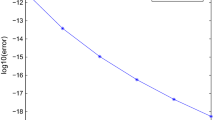

In Fig. 3, we show the domain \(\Omega \) and the exact solution in the xy-plane. In Fig. 4, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus N with different M. They indicate the errors decay exponentially as N increases. We see that for fixed N, the scheme with larger M produces better numerical results.

\(L^2\)- and \(L^{\infty }\)- errors of (5.6) versus N with different M

We next take \(p=4\), \(\mu =2.5\) and test the exact solution with low regularity,

Obviously

We first take \(h=\frac{9}{2}.\) In Fig. 5, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus N with different M. They indicate the errors decay exponentially as N increases. We also see that for fixed N, the scheme with larger M produces better numerical results. In Fig. 6 (left), we plot the exact solution with \(h=\frac{9}{2}\) in the xy-plane. In Fig. 6 (right), we plot the \(L^{\infty }\)-errors versus N with different h. They indicate the errors decay exponentially as N increases. We also observe that the errors decrease rapidly as h increases.

The exact solution with \(h=\frac{9}{2}\) and \(L^{\infty }\)- errors of (5.6) with \(M=24\)

To further illustrate the efficiency of the suggested approach, we also test the following exact solution,

Taking \(p=4\) and \(\mu =2.5\), we show the exact solution (5.7) in Fig. 7 (left) and the \(L^{\infty }\)- errors in Fig. 7 (right). The numerical results again meet expectations.

The exact solution and \(L^{\infty }\)- errors of (5.7)

5.1.2 p is Odd

When p is odd, the squircle (5.1) becomes

Accordingly,

\(L^2\)- and \(L^{\infty }\)- errors of (5.5) versus N with different M

We take \(p=7,\) \(\mu =2.5\) and test the exact solution (5.5). In Fig. 8, we show the domain \(\Omega \) and the exact solution (5.5) in the xy-plane. In Fig. 9, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus N with different M. They indicate the errors decay exponentially as N increases. We see that for fixed N, the scheme with larger M produces better numerical results.

5.2 Butterfly-Bounded Domains

We mainly consider butterflies that have no body, tail and middle wings, but only upper and lower wings. Lui [14] used the spectral domain embedding method to solve the elliptic partial differential equation defined in the simplest butterfly-bounded domain.

Let

In Fig. 10, we show the domain \(\Omega \) and the exact solution (5.5) in the xy-plane, by taking the parameters \(a=4\) and \(b=3\). In Fig. 11, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus N with \(\mu =2.5\) and different M. They indicate the errors decay exponentially as N increases. We also see that for fixed N, the scheme with larger M produces better numerical results.

\(L^2\)- and \(L^{\infty }\)- errors of (5.5) versus N with different M

We next consider variants of the butterfly curves. In Figs. 12 and 14, we show the domains and the exact solutions (5.5) in the xy-plane.

In Figs. 13 and 15, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus N with \(\mu =2.5\) and different M for two cases. It can be seen that for those cases, the exponential spectral accuracy can be reached.

Compared with the butterfly domain considered in [14], the three domains we consider are more complex. Particularly, we can easily carry out their numerical simulations and obtain high-order accuracy.

\(L^2\)- and \(L^{\infty }\)- errors of (5.5) versus N with different M

\(L^2\)- and \(L^{\infty }\)- errors of (5.5) versus N with different M

5.3 Cardioid-Bounded Domains

We consider the cardioid-bounded domain. Let

In Fig. 16, we show the domain \(\Omega \) and the exact solution (5.5) in the xy-plane, by taking the parameters \(a=10\) and \(b=9\). In Fig. 17, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus N with \(\mu =2.5\) and different M. Clearly, they indicate the errors decay exponentially as N increases. We see that for fixed N, the scheme with larger M produces better numerical results.

\(L^2\)- and \(L^{\infty }\)- errors of (5.5) versus N with different M

5.4 Star-Shaped Domains

We consider the star-shaped domains. Let

In Figs. 18 and 20, we show the domains \(\Omega \) and the exact solutions (5.5) in the xy-plane, by taking \(R(\theta )=0.7+0.2\sin ( 3\theta )\) and \(R(\theta )=0.8+0.1\sin ( 5\theta ),\) which were also considered in [9, 14]. In Figs. 19 and 21, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus N with \(\mu =2.5\) and different M. Clearly, they indicate the errors decay exponentially as N increases. We see that for fixed N, the scheme with larger M produces better numerical results.
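The two star-shaped radii quoted above give a simple way to sanity-check an implementation of the transformation. Since the Jacobian determinant of the map is \(rR^2(\theta ),\) the area of \(\Omega \) equals \(\int _0^{2\pi }\!\int _0^1 rR^2(\theta )\,dr\,d\theta =\frac{1}{2}\int _0^{2\pi }R^2(\theta )\,d\theta .\) The Python sketch below evaluates this with the periodic trapezoidal rule; the function names and the parameter names `a`, `b`, `k` are illustrative.

```python
import numpy as np

def star_radius(theta, a=0.7, b=0.2, k=3):
    """R(theta) = a + b*sin(k*theta); the defaults give the first example above."""
    return a + b * np.sin(k * theta)

def area(R, n=64):
    """Area of Omega = (1/2) * int_0^{2pi} R(theta)^2 dtheta (periodic trapezoid)."""
    theta = 2.0 * np.pi * np.arange(n) / n
    return 0.5 * np.sum(R(theta) ** 2) * (2.0 * np.pi / n)

area_3 = area(star_radius)                                   # 0.7 + 0.2 sin(3 theta)
area_5 = area(lambda t: star_radius(t, a=0.8, b=0.1, k=5))   # 0.8 + 0.1 sin(5 theta)
```

Integrating by hand gives \(\frac{1}{2}(2\pi a^2+\pi b^2),\) that is \(0.51\pi \) and \(0.645\pi \) for the two examples, and the trapezoidal rule reproduces these values to rounding error because the integrand is a trigonometric polynomial.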

\(L^2\)- and \(L^{\infty }\)- errors of (5.5) versus N with different M

\(L^2\)- and \(L^{\infty }\)- errors of (5.5) versus N with different M

5.5 General Domains

In many cases, we do not know explicit expressions or parametric equations of the boundary curves. For such general domains, our method is still applicable. To this end, we need to derive an approximation of \(R(\theta )\) as described below.

We first calculate the discrete values \(\big \{R(\theta _k)\big \}_{k=0}^{2K}\) by \(R(\theta _k)=\sqrt{x_k^2+y_k^2},\) where \(\theta _k=\frac{2k\pi }{2K+1}\) are the \(2K+1\) Fourier collocation points, and \((x_k,y_k)\) are the Cartesian coordinates of the point on the boundary curve \(\Gamma \) corresponding to \(\theta _k.\) Then we approximate \(R(\theta )\) using the trigonometric functions as basis functions, i.e.,

where

Accordingly, we obtain the approximation function of \(\partial _{\theta }R(\theta ).\)
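A minimal Python sketch of this step is given below. It assumes the standard trigonometric interpolant \(\frac{a_0}{2}+\sum _{j=1}^{K}(a_j\cos j\theta +b_j\sin j\theta )\) through the \(2K+1\) samples, with coefficients given by the usual discrete Fourier sums; the paper's own formula may be written differently but should be equivalent if it interpolates at the same points. The function name `fit_radius` is hypothetical.

```python
import numpy as np

def fit_radius(R_samples):
    """Trigonometric interpolant of R through samples at theta_k = 2*k*pi/(2K+1).

    Returns two callables: the interpolant R(theta) and its derivative dR(theta).
    """
    R_samples = np.asarray(R_samples, dtype=float)
    n = len(R_samples)                   # n = 2K + 1 collocation points
    K = (n - 1) // 2
    theta_k = 2.0 * np.pi * np.arange(n) / n
    j = np.arange(K + 1)
    a = 2.0 / n * np.cos(np.outer(j, theta_k)) @ R_samples   # cosine coefficients
    b = 2.0 / n * np.sin(np.outer(j, theta_k)) @ R_samples   # sine coefficients

    def R(theta):
        t = np.outer(np.atleast_1d(theta), j)[:, 1:]
        return 0.5 * a[0] + np.cos(t) @ a[1:] + np.sin(t) @ b[1:]

    def dR(theta):
        t = np.outer(np.atleast_1d(theta), j)[:, 1:]
        return (-np.sin(t) * j[1:]) @ a[1:] + (np.cos(t) * j[1:]) @ b[1:]

    return R, dR

# Usage: boundary points (x_k, y_k) sampled at the collocation angles (K = 4).
theta_k = 2.0 * np.pi * np.arange(9) / 9
x_k = (0.7 + 0.2 * np.sin(3 * theta_k)) * np.cos(theta_k)
y_k = (0.7 + 0.2 * np.sin(3 * theta_k)) * np.sin(theta_k)
R_fit, dR_fit = fit_radius(np.hypot(x_k, y_k))
```

In this usage example the underlying radius is itself a trigonometric polynomial of degree 3, so with \(K=4\) the interpolant and its derivative reproduce it exactly up to rounding. For a general smooth boundary the accuracy improves as K increases.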

As examples, we provide four representative domains. Throughout this subsection, we always take the exact solution

and use the suggested approach combined with the homogenization technique in Remark 3.1 to solve Eq. (3.11) numerically.

We first consider the smooth quasi-pentagonal domain. To apply our method to solve this kind of problem, we start by calculating the values of the function \(R(\theta )\) at \(\theta _k\) marked by six-pointed stars, and then use (5.13) to approximate the function \(R(\theta ).\)

\(L^2\)- and \(L^{\infty }\)- errors of (5.14) versus M with different N

In Fig. 22, we show the domain \(\Omega \) and the exact solution (5.14) in the xy-plane. In Fig. 23, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus M with \(\mu =2.5\) and different N. They indicate the errors decay exponentially as M increases. We also see that for fixed M, the scheme with larger N produces better numerical results.

We next consider the smooth quasi-hexagonal domain. Similarly, we use (5.13) to approximate the function \(R(\theta ).\)

\(L^2\)- and \(L^{\infty }\)- errors of (5.14) versus M with different N.

In Fig. 24, we show the domain \(\Omega \) and the exact solution (5.14) in the xy-plane. In Fig. 25, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus M with \(\mu =2.5\) and different N. They indicate the errors decay exponentially as M increases. We also see that for fixed M, the scheme with larger N produces better numerical results.

We then consider the teardrop domain, whose boundary curve satisfies the following Cartesian equation:

In Fig. 26, we show the domain \(\Omega \) and the exact solution (5.14) in the xy-plane. By calculating the values of the function \(R(\theta )\) at \(\theta _k\) marked by six-pointed stars, we can use the suggested approach to solve Eq. (3.11) numerically. In Fig. 27, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus M with \(\mu =2.5\) and different N. They indicate the errors decay exponentially as M increases. We also see that for fixed M, the scheme with larger N produces better numerical results.

\(L^2\)- and \(L^{\infty }\)- errors of (5.14) versus M with different N

\(L^2\)- and \(L^{\infty }\)- errors of (5.14) versus M with different N

We finally consider a more general smooth domain, whose boundary curve has an irregular smooth shape, as shown in Fig. 28 (left). This boundary curve has several irregular protrusions and depressions. Generally speaking, we cannot obtain an explicit Cartesian equation for this irregular curve.

As before, we can approximate the function \(R(\theta )\). In Fig. 28 (right), we plot the exact solution (5.14) in the xy-plane. In Fig. 29, we plot the \(L^2\)- and \(L^{\infty }\)- errors versus M with \(\mu =2.5\) and different N. Clearly, the numerical errors decay exponentially as M increases. We also see that for fixed M, the scheme with larger N produces better numerical results. From the above, it can be seen that for irregular convex and concave regions, our Fourier–Legendre spectral-Galerkin method remains feasible and highly accurate.

6 Concluding Remarks

We developed in this paper the Fourier–Legendre spectral-Galerkin method for solving two-dimensional elliptic PDEs in complex geometries using a polar coordinate transformation. This method is effective and easy to implement, and it is proved to be well-posed with spectral accuracy in the sense that the convergence rate increases with the smoothness of the solution. Although we only consider linear elliptic PDEs, the proposed method is also applicable to nonlinear and/or time-dependent problems in two-dimensional complex geometries. The main ideas and methods of this paper can also be extended to three-dimensional situations, and we will report on this work in the near future.

Data Availability

No data were used or generated in this manuscript.

References

1. An, J., Li, H.Y., Zhang, Z.M.: Spectral-Galerkin approximation and optimal error estimate for biharmonic eigenvalue problems in circular/spherical/elliptical domains. Numer. Algorithms 84, 427–455 (2020)

2. Boyd, J.P.: Chebyshev and Fourier Spectral Methods, 2nd edn. Springer, Berlin (2001)

3. Buzbee, B.L., Dorr, F.W., George, J.A., Golub, G.H.: The direct solution of the discrete Poisson equation on irregular regions. SIAM J. Numer. Anal. 8, 722–736 (1971)

4. Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods. Fundamentals in Single Domains. Springer, Berlin (2006)

5. Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods. Evolution to Complex Geometries and Applications to Fluid Dynamics. Springer, Berlin (2007)

6. Gordon, W.J., Hall, C.A.: Transfinite element methods: blending-function interpolation over arbitrary curved element domains. Numer. Math. 21, 109–129 (1973)

7. Gottlieb, D., Orszag, S.A.: Numerical Analysis of Spectral Methods: Theory and Applications. SIAM, Philadelphia (1977)

8. Gu, Y.Q., Shen, J.: Accurate and efficient spectral methods for elliptic PDEs in complex domains. J. Sci. Comput. 83, 42, 20 (2020)

9. Gu, Y.Q., Shen, J.: An efficient spectral method for elliptic PDEs in complex domains with circular embedding. SIAM J. Sci. Comput. 43, A309–A329 (2021)

10. Guo, B.Y.: Spectral Methods and Their Applications. World Scientific, Singapore (1998)

11. Heinrichs, W.: Spectral collocation schemes on the unit disc. J. Comput. Phys. 199, 66–86 (2004)

12. Karniadakis, G., Sherwin, S.: Spectral/hp Element Methods for Computational Fluid Dynamics, 2nd edn. Oxford University Press, Oxford (2005)

13. Korczak, K.Z., Patera, A.T.: An isoparametric spectral element method for solution of the Navier–Stokes equations in complex geometry. J. Comput. Phys. 62, 361–382 (1986)

14. Lui, S.H.: Spectral domain embedding for elliptic PDEs in complex domains. J. Comput. Appl. Math. 225, 541–557 (2009)

15. Orszag, S.A.: Spectral methods for problems in complex geometries. J. Comput. Phys. 37, 70–92 (1980)

16. Peyret, R.: Spectral Methods for Incompressible Viscous Flow. Springer, New York (2002)

17. Quarteroni, A., Valli, A.: Domain Decomposition Methods for Partial Differential Equations. Oxford University Press, Oxford (1999)

18. Shen, J.: Efficient spectral-Galerkin method. I. Direct solvers of second- and fourth-order equations using Legendre polynomials. SIAM J. Sci. Comput. 15, 1489–1505 (1994)

19. Shen, J., Tang, T., Wang, L.L.: Spectral Methods: Algorithms, Analysis and Applications. Springer, Berlin (2011)

20. Shen, J., Wang, L.L., Li, H.Y.: A triangular spectral element method using fully tensorial rational basis functions. SIAM J. Numer. Anal. 47, 1619–1650 (2009)

21. Song, F.Y., Xu, C.J., Karniadakis, G.E.: Computing fractional Laplacians on complex-geometry domains: algorithms and simulations. SIAM J. Sci. Comput. 39, A1320–A1344 (2017)

22. Toselli, A., Widlund, O.: Domain Decomposition Methods-Algorithms and Theory. Springer, Berlin (2005)

23. Yi, L.J., Guo, B.Q.: An h-p version of the continuous Petrov–Galerkin finite element method for Volterra integro-differential equations with smooth and nonsmooth kernels. SIAM J. Numer. Anal. 53, 2677–2704 (2015)

Funding

The work of these authors is supported by the National Natural Science Foundation of China (No. 12071294) and Natural Science Foundation of Shanghai (No. 22ZR1443800).

Author information

Contributions

Z. Wang, X. Wen and G. Yao all contributed to the conceptualization, methodology, writing and the numerical simulations.

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Wang, Z., Wen, X. & Yao, G. An Efficient Spectral-Galerkin Method for Elliptic Equations in 2D Complex Geometries. J Sci Comput 95, 89 (2023). https://doi.org/10.1007/s10915-023-02207-4

DOI: https://doi.org/10.1007/s10915-023-02207-4

Keywords

- PDEs in complex geometries

- Polar coordinate transformation

- Fourier–Legendre spectral-Galerkin methods

- Convergence

- Numerical results