Abstract

We prove that the superconvergence of \(C^0\)-\(Q^k\) finite element method at the Gauss–Lobatto quadrature points still holds if variable coefficients in an elliptic problem are replaced by their piecewise \(Q^k\) Lagrange interpolants at the Gauss–Lobatto points in each rectangular cell. In particular, a fourth order finite difference type scheme can be constructed using \(C^0\)-\(Q^2\) finite element method with \(Q^2\) approximated coefficients.


1 Introduction

1.1 Motivations

Consider solving a variable coefficient Poisson equation

$$\begin{aligned} -\nabla \cdot (a(x,y)\nabla u)=f \quad \text{in } \Omega \end{aligned}$$(1.1)

with homogeneous Dirichlet boundary conditions on a rectangular domain \(\Omega \). Assume that the coefficient a(x, y) and the solution u(x, y) are sufficiently smooth. Let \(\Vert u\Vert _{k,p,\Omega }\) be the norm of Sobolev space \(W^{k,p}(\Omega )\). For \(p=2\), let \(H^k(\Omega )=W^{k,2}(\Omega )\) and \(\Vert \cdot \Vert _{k,\Omega }=\Vert \cdot \Vert _{k,2,\Omega }\). The subindex \(\Omega \) will be omitted when there is no confusion, e.g., \(\Vert u\Vert _{0}\) denotes the \(L^2(\Omega )\)-norm and \(\Vert u\Vert _1\) denotes the \(H^1(\Omega )\)-norm. The variational form is to find \(u\in H_0^1(\Omega )=\{v\in H^1(\Omega ){:}\,v|_{\partial \Omega }=0\}\) satisfying

$$\begin{aligned} A(u,v)=(f,v),\quad \forall v\in H_0^1(\Omega ), \end{aligned}$$(1.2)

where \(A(u,v)=\iint _{\Omega } a\nabla u \cdot \nabla v dx dy\), \( (f,v)=\iint _{\Omega }fv dxdy.\) Consider a rectangular mesh with mesh size h. Let \(V_0^h\subseteq H^1_0(\Omega )\) be the continuous finite element space consisting of piecewise \(Q^k\) polynomials (i.e., tensor product of piecewise polynomials of degree k), then the \(C^0\)-\(Q^k\) finite element solution of (1.2) is defined as \(u_h\in V_0^h\) satisfying

$$\begin{aligned} A(u_h,v_h)=(f,v_h),\quad \forall v_h\in V_0^h. \end{aligned}$$(1.3)

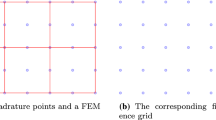

To implement the finite element method (1.3), either some quadrature is used or the coefficient a(x, y) is approximated by polynomials for computing \(\iint _{\Omega }a \nabla u_h\cdot \nabla v_h\,dx dy\). In this paper, we consider the implementation that approximates the smooth coefficient a(x, y) by its \(Q^k\) Lagrangian interpolation polynomial in each cell. For instance, consider the \(Q^2\) element in two dimensions: the tensor product of the 3-point Lobatto quadrature points forms nine uniformly spaced points on each cell, see Fig. 1. From the point values of a(x, y) at these nine points, we can obtain a \(Q^2\) Lagrange interpolation polynomial on each cell. Let \(a_I(x,y)\) and \(f_I(x,y)\) denote the piecewise \(Q^k\) interpolation of a(x, y) and f(x, y) respectively. For a smooth function \(a\ge C> 0\), the interpolation error on each cell e is \(\max _{{\mathbf {x}}\in e} |a_I(\mathbf x)-a({\mathbf {x}})|={\mathcal {O}}(h^{k+1})\), thus \(a_I>0\) if h is small enough. So, assuming the mesh is fine enough that \(a_I(x,y)\ge C>0,\) we consider the following scheme using the approximated coefficients \(a_I(x,y)\): find \({{\tilde{u}}}_h\in V_0^h\) satisfying

$$\begin{aligned} \iint _{\Omega } a_I\nabla {{\tilde{u}}}_h\cdot \nabla v_h\, dxdy=\langle f,v_h\rangle _h,\quad \forall v_h\in V_0^h, \end{aligned}$$(1.4)

where \(\langle f,v_h\rangle _h\) denotes using tensor product of \((k+1)\)-point Gauss–Lobatto quadrature for the integral \((f,v_h)\). One can also simplify the computation of the right hand side by using \(f_I(x,y)\), so we also consider the scheme to find \({\tilde{u}}_h\) satisfying

$$\begin{aligned} \iint _{\Omega } a_I\nabla {{\tilde{u}}}_h\cdot \nabla v_h\, dxdy=(f_I,v_h),\quad \forall v_h\in V_0^h. \end{aligned}$$(1.5)

The schemes (1.4) and (1.5) correspond to the equation

$$\begin{aligned} -\nabla \cdot (a_I(x,y)\nabla {{\tilde{u}}})=f \quad \text{in } \Omega . \end{aligned}$$(1.6)

At first glance, one might expect \((k+1)\)-th order accuracy for a numerical method applied to (1.6) due to the interpolation error \(a(x,y)-a_I(x,y)={\mathcal {O}}(h^{k+1})\). But as we will show in Sect. 4.1, the difference between the exact solutions u and \({{\tilde{u}}}\) of the two elliptic equations (1.1) and (1.6) is \({\mathcal {O}}(h^{k+2})\) in the \(L^2(\Omega )\)-norm under suitable assumptions. The main focus of this paper is to show that (1.4) and (1.5) are \((k+2)\)th order accurate finite difference type schemes via the superconvergence of the finite element method. Such a result is interesting because a fourth order accurate scheme can be constructed even if the coefficients in the equation are approximated by quadratic polynomials, which does not seem to have been considered before in the literature.

Since only grid point values of a(x, y) and f(x, y) are needed in the schemes (1.4) and (1.5), they can be regarded as finite difference type schemes. Consider a uniform \(n_x\times n_y\) grid for a rectangle \(\Omega \) with grid points \((x_i,y_j)\) and grid spacing h, where \(n_x\) and \(n_y\) are both odd numbers as shown in Fig. 1a. Then there is a mesh \(\Omega _h\) of \((n_x-1)/2\times (n_y-1)/2\) \(Q^2\) elements so that the Gauss–Lobatto points for all cells in \(\Omega _h\) are exactly the finite difference grid points. By using the scheme (1.4) or (1.5) on the finite element mesh \(\Omega _h\) shown in Fig. 1b, we obtain a fourth order finite difference scheme in the sense that \({{\tilde{u}}}_h\) is fourth order accurate in the discrete 2-norm at all grid points.
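
The finite difference viewpoint can be illustrated with a minimal sketch of a one-dimensional analogue of scheme (1.4), which is an assumption made here for brevity (the paper treats two dimensions). The function names, the test problem \(u=\sin (\pi x)\), \(a=e^x\) on \([0,1]\), and the dense linear solve are all illustrative choices, not taken from the original.

```python
import numpy as np

def solve_p2_interpolated_coeff(a, f, n_cells):
    """Hypothetical 1D analogue of (1.4): -(a u')' = f on [0, 1], u(0) = u(1) = 0."""
    x = np.linspace(0.0, 1.0, 2 * n_cells + 1)    # grid = Lobatto points of all cells
    hh = x[1] - x[0]                               # grid spacing = half cell width
    gq, gw = np.polynomial.legendre.leggauss(3)    # Gauss rule, exact to degree 5
    # P^2 Lagrange basis at t = -1, 0, 1 and its derivative, evaluated at gq
    phi = np.array([gq * (gq - 1) / 2, 1 - gq**2, gq * (gq + 1) / 2])
    dphi = np.array([gq - 0.5, -2 * gq, gq + 0.5])
    lob_w = np.array([1.0, 4.0, 1.0]) / 3.0        # 3-point Gauss-Lobatto weights
    n = x.size
    K = np.zeros((n, n))
    rhs = np.zeros(n)
    for e in range(n_cells):
        idx = np.array([2 * e, 2 * e + 1, 2 * e + 2])
        a_I = a(x[idx]) @ phi                      # quadratic interpolant a_I at gq
        # integrand a_I * phi_i' * phi_j' has degree 4, so this integral is exact
        K[np.ix_(idx, idx)] += (dphi * (gw * a_I)) @ dphi.T / hh
        rhs[idx] += hh * lob_w * f(x[idx])         # Lobatto quadrature <f, phi_i>_h
    u = np.zeros(n)
    u[1:-1] = np.linalg.solve(K[1:-1, 1:-1], rhs[1:-1])
    return x, u

def max_error(n_cells):
    a = lambda x: np.exp(x)
    f = lambda x: np.exp(x) * (np.pi**2 * np.sin(np.pi * x) - np.pi * np.cos(np.pi * x))
    x, u = solve_p2_interpolated_coeff(a, f, n_cells)
    return np.max(np.abs(u - np.sin(np.pi * x)))

rate = np.log2(max_error(16) / max_error(32))
print(f"observed order at the grid points: {rate:.2f}")
```

Only the point values of a and f at the grid points enter the assembly, which is what makes the method a finite difference type scheme; the observed order printed at the end is a numerical indication, not a proof.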

In practice the most convenient implementation is to use the tensor product of \((k+1)\)-point Gauss–Lobatto quadrature for the integrals in (1.2), since the standard \(L^2(\Omega )\) and \(H^1(\Omega )\) error estimates still hold [8, 10] and the Lagrangian \(Q^k\) basis functions are delta functions at these quadrature points. Such a quadrature scheme can be written as finding \(u_h\in V_0^h\) satisfying

$$\begin{aligned} A_h(u_h,v_h)=\langle f,v_h\rangle _h,\quad \forall v_h\in V_0^h, \end{aligned}$$(1.7)

where \(A_h(u_h,v_h)\) and \(\langle f,v_h\rangle _h\) denote using tensor product of \((k+1)\)-point Gauss–Lobatto quadrature for integrals \(A(u_h,v_h)\) and \((f,v_h)\) respectively. Numerical tests suggest that the approximated coefficient scheme (1.5) is more accurate and robust than the quadrature scheme (1.7) in some cases.

1.2 Superconvergence of \(C^0\)-\(Q^k\) Finite Element Method

Standard error estimates of (1.3) are \(\Vert u-u_h\Vert _{1}\le C h^{k}\Vert u\Vert _{k+1}\) and \(\Vert u-u_h\Vert _{0}\le C h^{k+1}\Vert u\Vert _{k+1}\) [8]. At certain quadrature or symmetry points the finite element solution or its derivatives have higher order accuracy, which is called superconvergence. Douglas and Dupont first proved that the continuous finite element method using piecewise polynomials of degree k has \(O(h^{2k})\) convergence at the knots of a one-dimensional mesh [11, 12]. In [12], \(O(h^{2k})\) was proven to be the best possible convergence rate. For \(k\ge 2\), \({\mathcal {O}} (h^{k+1})\) for the derivatives at Gauss quadrature points and \({\mathcal {O}} (h^{k+2})\) for function values at Gauss–Lobatto quadrature points were proven in [2, 4, 17].

For two-dimensional cases, the \((k+2)\)th order superconvergence for \(k\ge 2\) at the vertices of all rectangular cells in a two-dimensional rectangular mesh was first shown in [13]. Namely, the convergence rate at the knots is at least one order higher than the global rate. Later on, the 2kth order (for \(k\ge 2\)) convergence rate at the knots was proven for \(Q^k\) elements solving \(-\,\Delta u=f\), see [7, 15].

For the multi-dimensional variable coefficient case, when discussing the superconvergence of derivatives, it can be reduced to the Laplacian case. Superconvergence of tensor product elements for the Laplacian case can be established by extending one-dimensional results [13, 22]. See also [16] for the superconvergence of the gradient. The superconvergence of function values in rectangular elements for the variable coefficient case was studied in [6] by Chen with M-type projection polynomials and in [19] by Lin and Yan with the point-line-plane interpolation polynomials. In particular, let \(Z_0\) denote the set of tensor product \((k+1)\)-point Gauss–Lobatto quadrature points for all rectangular cells, then the following superconvergence of function values for \(Q^k\) elements was shown in [6]:

In general superconvergence of (1.3) has been well studied in the literature. Many superconvergence results are established for interior points away from the boundary for various domains. Our major motivation to study superconvergence is to use it for constructing a finite difference scheme, thus we only consider a rectangular domain for which all Lobatto points can form a finite difference grid.

We are interested in superconvergence of function values for the \(Q^k\) element when the computation of integrals is simplified. For one-dimensional problems, it was proven in [12] that \(O(h^{2k})\) at knots still holds if \((k+1)\)-point Gauss–Lobatto quadrature is used for \(P^2\) element. Superconvergence of the gradient when using quadrature was studied in [17]. For multidimensional problems, even though it is possible to show that (1.8) holds for (1.3) with accurate enough quadrature, it is nontrivial to extend the superconvergence proof to (1.7) with only \((k+1)\)-point Gauss–Lobatto quadrature. Superconvergence analysis of the scheme (1.7) is much more complicated and will thus be discussed in another paper [18].

1.3 Contributions of the Paper

The objective and main motivation of this paper is to construct a fourth order accurate finite difference type scheme based on the superconvergence of the \(C^0\)-\(Q^2\) finite element method using \(Q^2\) polynomial coefficients in elliptic equations, and to demonstrate its accuracy. The main result can be easily generalized to higher order cases, thus we keep the discussion general for \(Q^k\) (\(k\ge 2\)) and prove the \((k+2)\)th order superconvergence of function values when the PDE coefficients are replaced by their \(Q^k\) interpolants: (1.8) still holds for both schemes (1.4) and (1.5). Moreover, (1.4) and (1.5) have all the advantages of the finite element method, such as the symmetry of the stiffness matrix, which is desired in applications. The scheme (1.4) or (1.5) is also an efficient implementation of the \(C^0\)-\(Q^k\) finite element method since only \(Q^k\) coefficients are needed to retain the \((k+2)\)th order accuracy of function values at the Lobatto points.

The paper is organized as follows. In Sect. 2, we introduce the notations and review standard interpolation and quadrature estimates. In Sect. 3, we review the tools to establish superconvergence of function values in \(C^0\)-\(Q^k\) finite element method (1.3) with a complete proof. In Sect. 4, we prove the main result on the superconvergence of (1.4) and (1.5) in two dimensions with extensions to a general elliptic equation. All discussion in this paper can be easily extended to the three dimensional case. Numerical results are given in Sect. 5. Section 6 consists of concluding remarks.

2 Notations and Preliminaries

2.1 Notations

In addition to the notations mentioned in the introduction, the following notations will be used in the rest of the paper:

n denotes the dimension of the problem. Even though we discuss everything explicitly for \(n=2\), all key discussions can be easily extended to \(n=3\). The main purpose of keeping n is for readers to see independence/cancellation of the dimension n in the proof of some important estimates.

We only consider a rectangular domain \(\Omega \) with its boundary \(\partial \Omega \).

\(\Omega _h\) denotes a rectangular mesh with mesh size h. Only for convenience, we assume \(\Omega _h\) is a uniform mesh and \(e=[x_e-h,x_e+h]\times [y_e-h,y_e+h]\) denotes any cell in \(\Omega _h\) with cell center \((x_e,y_e)\). The assumption of a uniform mesh is not essential to the proof.

\(Q^k(e)=\left\{ p(x,y)=\sum \nolimits _{i=0}^k\sum \nolimits _{j=0}^k p_{ij} x^iy^j, (x,y)\in e\right\} \) is the set of tensor product of polynomials of degree k on a cell e.

\(V^h=\{p(x,y)\in C^0(\Omega _h){:}\,p|_e \in Q^k(e),\quad \forall e\in \Omega _h\}\) denotes the continuous piecewise \(Q^k\) finite element space on \(\Omega _h\).

\(V^h_0=\{v_h\in V^h{:}\,v_h=0 \quad \text{ on }\quad \partial \Omega \}.\)

The norm and seminorms for \(W^{k,p}(\Omega )\) and \(1\le p<+\infty \), with standard modification for \(p=+\infty \):

$$\begin{aligned}&\Vert u\Vert _{k,p,\Omega }=\left( \sum \limits _{i+j\le k}\iint _{\Omega }|\partial _x^i\partial _y^ju(x,y)|^pdxdy\right) ^{1/p}, \\&|u|_{k,p,\Omega }=\left( \sum \limits _{i+j= k}\iint _{\Omega }|\partial _x^i\partial _y^ju(x,y)|^pdxdy\right) ^{1/p}, \\&{[}u]_{k,p,\Omega }=\left( \iint _{\Omega }|\partial _x^k u(x,y)|^pdxdy+\iint _{\Omega }|\partial _y^k u(x,y)|^p dxdy\right) ^{1/p}. \end{aligned}$$

Notice that \([u]_{k+1,p,\Omega }=0\) if u is a \(Q^k\) polynomial.

\(\Vert u\Vert _{k,\Omega }\), \(|u|_{k,\Omega }\) and \([u]_{k,\Omega }\) denote norm and seminorms for \(H^k(\Omega )=W^{k,2}(\Omega )\).

When there is no confusion, \(\Omega \) may be dropped in the norm and seminorms.

For any \(v_h\in V^h\), \(1\le p<+\infty \) and \(k\ge 1\),

$$\begin{aligned} \Vert v_h\Vert _{k,p,\Omega }:= \left[ \sum _e\Vert v_h\Vert _{k,p,e}^p\right] ^{\frac{1}{p}}, \quad |v_h|_{k,p,\Omega }:= \left[ \sum _e|v_h|_{k,p,e}^p\right] ^{\frac{1}{p}}. \end{aligned}$$

Let \(Z_{0,e}\) denote the set of \((k+1)\times (k+1)\) Gauss–Lobatto points on a cell e.

\(Z_0=\bigcup _e Z_{0,e}\) denotes all Gauss–Lobatto points in the mesh \(\Omega _h\).

Let \(\Vert u\Vert _{2,Z_0}\) and \(\Vert u\Vert _{\infty ,Z_0}\) denote the discrete 2-norm and the maximum norm over \(Z_0\) respectively:

$$\begin{aligned} \Vert u\Vert _{2,Z_0}=\left[ h^2\sum _{(x,y)\in Z_0} |u(x,y)|^2\right] ^{\frac{1}{2}},\quad \Vert u\Vert _{\infty ,Z_0}=\max _{(x,y)\in Z_0} |u(x,y)|. \end{aligned}$$

For a smooth function a(x, y), let \(a_I(x,y)\) denote its piecewise \(Q^k\) Lagrange interpolant at \(Z_{0,e}\) on each cell e, i.e., \(a_I\in V^h\) satisfies:

$$\begin{aligned} a(x,y)=a_I(x,y), \quad \forall (x,y)\in Z_0. \end{aligned}$$

\(P^k(t)\) denotes the set of polynomials of degree k in the variable t.

(f, v) denotes the inner product in \(L^2(\Omega )\):

$$\begin{aligned} (f,v)=\iint _{\Omega } fv\, dxdy. \end{aligned}$$

\(\langle f,v\rangle _h\) denotes the approximation to (f, v) by using \((k+1)\times (k+1)\)-point Gauss–Lobatto quadrature for integration over each cell e.
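
As an illustration of the notation \(Z_0\), \(\Vert \cdot \Vert _{2,Z_0}\) and \(\Vert \cdot \Vert _{\infty ,Z_0}\), the following minimal sketch builds the Gauss–Lobatto points of a uniform \(Q^2\) mesh on a rectangle and evaluates the two discrete norms. It assumes \(k=2\), for which the Lobatto points are uniformly spaced with spacing h (the half cell width); the function names and the default unit square are hypothetical.

```python
import numpy as np

def lobatto_grid_q2(nx_cells, ny_cells, lx=1.0, ly=1.0):
    """Points of Z_0 for a uniform Q^2 mesh on [0, lx] x [0, ly] (assumed layout)."""
    x = np.linspace(0.0, lx, 2 * nx_cells + 1)   # 3 Lobatto points per cell, shared at edges
    y = np.linspace(0.0, ly, 2 * ny_cells + 1)
    return np.meshgrid(x, y, indexing="ij")

def discrete_norms(values, h):
    """Discrete 2-norm and maximum norm over Z_0, following the definitions above."""
    two_norm = np.sqrt(h**2 * np.sum(np.abs(values) ** 2))
    max_norm = np.max(np.abs(values))
    return two_norm, max_norm

X, Y = lobatto_grid_q2(2, 2)          # 2 x 2 cells give a 5 x 5 grid
h = X[1, 0] - X[0, 0]                 # grid spacing, here 0.25
two_norm, max_norm = discrete_norms(np.ones_like(X), h)
```

Each shared edge or vertex point is counted once here because the grid is stored as a single array.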

The following are commonly used tools and facts:

\({\hat{K}}=[-1,1]\times [-1,1]\) denotes a reference cell.

For v(x, y) defined on e, consider \({\hat{v}}(s, t)=v(sh+ x_e,t h+ y_e)\) defined on \({\hat{K}}\).

For n-dimensional problems, the following scaling argument will be used:

$$\begin{aligned} h^{k-n/p}|v|_{k,p,e}=|{\hat{v}}|_{k,p,{\hat{K}}},\quad h^{k-n/p}[v]_{k,p,e}=[{\hat{v}}]_{k,p,{\hat{K}}}, \quad 1\le p\le \infty . \end{aligned}$$(2.1)

Sobolev’s embedding in two and three dimensions: \(H^{2}({\hat{K}})\hookrightarrow C^0({\hat{K}})\).

The embedding implies

$$\begin{aligned}&\Vert {\hat{f}}\Vert _{0,\infty ,{\hat{K}}}\le C \Vert {\hat{f}}\Vert _{k,2, {\hat{K}}}, \forall {\hat{f}}\in H^{k}({\hat{K}}), k\ge 2,\\&\Vert {\hat{f}}\Vert _{1,\infty ,{\hat{K}}}\le C \Vert {\hat{f}}\Vert _{k+1,2, {\hat{K}}}, \forall {\hat{f}}\in H^{k+1}({\hat{K}}), k\ge 2. \end{aligned}$$

Cauchy–Schwarz inequalities:

$$\begin{aligned} \sum _e \Vert u\Vert _{k,e}\Vert v\Vert _{k,e}\le \left( \sum _e \Vert u\Vert ^2_{k,e}\right) ^{\frac{1}{2}}\left( \sum _e \Vert v\Vert ^2_{k,e}\right) ^{\frac{1}{2}}, \Vert u\Vert _{k,1,e}={\mathcal {O}}(h^{\frac{n}{2}}) \Vert u\Vert _{k,2,e}. \end{aligned}$$

Poincaré inequality: let \(\bar{{\hat{f}}}\) be the average of \({\hat{f}}\in H^1({\hat{K}})\) on \({\hat{K}}\), then

$$\begin{aligned} |{\hat{f}}-\bar{{\hat{f}}}|_{0,p,{\hat{K}}}\le C |\nabla {\hat{f}}|_{0,p,{\hat{K}}}, \quad p\ge 1. \end{aligned}$$

For \(k\ge 2\), the \((k+1)\times (k+1)\) Gauss–Lobatto quadrature is exact for integration of polynomials of degree \(2k-1\ge k+1\) on \({\hat{K}}\).

Any polynomial in \(Q^k({\hat{K}})\) can be uniquely represented by its point values at \((k+1)\times (k+1)\) Gauss–Lobatto points on \({\hat{K}}\), and it is straightforward to verify that the discrete 2-norm \(\Vert p\Vert _{2, Z_0}\) and \(L^2(\Omega )\)-norm \(\Vert p\Vert _{0, \Omega }\) are equivalent for a piecewise \(Q^k\) polynomial \(p\in V^h\).

Define the projection operator \(\hat{\Pi }_1: {\hat{u}} \in L^1({\hat{K}})\rightarrow {\hat{\Pi }}_1{\hat{u}}\in Q^1({\hat{K}})\) by

$$\begin{aligned} \iint _{{\hat{K}}} (\hat{\Pi }_1 \hat{u} ) w dxdy= \iint _{{\hat{K}}} \hat{u} w dxdy,\forall w\in Q^1({\hat{K}}). \end{aligned}$$(2.2)

Notice that \(\hat{\Pi }_1\) is a continuous linear mapping from \(L^2({\hat{K}})\) to \(H^1({\hat{K}})\) (or \(H^2({\hat{K}})\)) since all degrees of freedom of \(\hat{\Pi }_1 \hat{u}\) can be represented as linear combinations of \(\iint _{{\hat{K}}} {\hat{u}}(s,t) p(s,t)dsdt\) for \(p(s,t)=1,s,t,st\) and, by the Cauchy–Schwarz inequality, \(|\iint _{{\hat{K}}} {\hat{u}}(s,t) p(s,t)dsdt|\le \Vert {\hat{u}}\Vert _{0,2,{\hat{K}}}\Vert {\hat{p}}\Vert _{0,2,{\hat{K}}}\le C \Vert {\hat{u}}\Vert _{0,2,{\hat{K}}}\).
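
As a brief check of the scaling relation (2.1) for \(n=2\) and \(1\le p<\infty \) (a sketch of the standard change of variables argument, added here for illustration): with \({\hat{v}}(s,t)=v(sh+x_e,th+y_e)\), the chain rule gives \(\partial _s^i\partial _t^j{\hat{v}}=h^{i+j}\partial _x^i\partial _y^jv\), and \(dxdy=h^2dsdt\), so

$$\begin{aligned} |{\hat{v}}|^p_{k,p,{\hat{K}}}=\sum _{i+j=k}\iint _{{\hat{K}}}|\partial _s^i\partial _t^j{\hat{v}}|^p\,dsdt =\sum _{i+j=k}\iint _{e}h^{kp}|\partial _x^i\partial _y^jv|^p\,h^{-2}dxdy =h^{kp-2}|v|^p_{k,p,e}. \end{aligned}$$

Taking the p-th root gives \(|{\hat{v}}|_{k,p,{\hat{K}}}=h^{k-2/p}|v|_{k,p,e}\), which is (2.1) with \(n=2\); the case \(p=\infty \) follows from the chain rule alone, and the same computation applies to the seminorm \([\cdot ]_{k,p}\).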

2.2 The Bramble–Hilbert Lemma

By the abstract Bramble–Hilbert Lemma in [3], with the result \(\Vert v\Vert _{m,p,\Omega }\le C(|v|_{0,p,\Omega }+[v]_{m,p,\Omega })\) for any \(v\in W^{m, p}(\Omega )\) [1, 21], the Bramble–Hilbert Lemma for \(Q^k\) polynomials can be stated as (see Exercise 3.1.1 and Theorem 4.1.3 in [9]):

Theorem 2.1

If a continuous linear mapping \(\Pi {:}\,H^{k+1}({\hat{K}})\rightarrow H^{k+1}({\hat{K}})\) satisfies \(\Pi v=v\) for any \(v\in Q^k({\hat{K}})\), then

Thus if \(l(\cdot )\) is a continuous linear form on the space \(H^{k+1}({\hat{K}})\) satisfying \(l(v)=0,\forall v\in Q^k({\hat{K}}),\) then

where \( \Vert l\Vert '_{k+1, {\hat{K}}}\) is the norm in the dual space of \(H^{k+1}({\hat{K}})\).

2.3 Interpolation and Quadrature Errors

For \(Q^k\) element (\(k\ge 2\)), consider \((k+1)\times (k+1)\) Gauss–Lobatto quadrature, which is exact for integration of \(Q^{2k-1}\) polynomials.
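
The exactness claim can be checked numerically in one dimension. The sketch below builds the \((k+1)\)-point Gauss–Lobatto rule on \([-1,1]\) from the classical formulas (interior nodes are the roots of \(P_k'\), weights are \(2/(k(k+1)P_k(x_i)^2)\), with \(P_k\) the Legendre polynomial) and measures the quadrature error on monomials; the function names are hypothetical. Exactness of the tensor product rule on \(Q^{2k-1}\) then follows from the one-dimensional exactness up to degree \(2k-1\).

```python
import numpy as np
from numpy.polynomial import legendre as L

def gauss_lobatto(k):
    """Nodes and weights of the (k+1)-point Gauss-Lobatto rule on [-1, 1], k >= 2."""
    ck = np.zeros(k + 1)
    ck[k] = 1.0                                   # Legendre series of P_k
    interior = L.legroots(L.legder(ck))           # roots of P_k'
    nodes = np.concatenate(([-1.0], np.sort(interior.real), [1.0]))
    weights = 2.0 / (k * (k + 1) * L.legval(nodes, ck) ** 2)
    return nodes, weights

def monomial_error(k, m):
    """Absolute quadrature error for the integral of t^m over [-1, 1]."""
    nodes, weights = gauss_lobatto(k)
    exact = 2.0 / (m + 1) if m % 2 == 0 else 0.0
    return abs(weights @ nodes**m - exact)

for k in (2, 3, 4):
    errors = [monomial_error(k, m) for m in range(2 * k + 1)]
    print(k, ["%.1e" % e for e in errors])        # last entry (degree 2k) is not small
```

For \(k=2\) the rule reduces to Simpson's rule with nodes \(-1,0,1\) and weights \(1/3,4/3,1/3\).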

It is straightforward to establish the interpolation error:

Theorem 2.2

For a smooth function a, \(|a-a_I|_{0,\infty ,\Omega }={\mathcal {O}} (h^{k+1})|a|_{k+1,\infty ,\Omega }\).
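
The rate in Theorem 2.2 can be observed numerically for \(k=2\). The following minimal sketch, with hypothetical function names and an arbitrarily chosen smooth coefficient \(a=e^{x+y}\) on the unit square, samples the error of the piecewise \(Q^2\) Lobatto interpolant on each cell and compares two mesh sizes; it only estimates the maximum error on a finite set of sample points.

```python
import numpy as np

def q2_interp_max_error(a, n_cells, n_sample=7):
    """Sampled max error of the piecewise Q^2 Lobatto interpolant of a on [0, 1]^2."""
    hh = 0.5 / n_cells                              # half cell width
    t = np.linspace(-1.0, 1.0, n_sample)            # sample points on the reference interval
    # B[i, p] = i-th P^2 Lagrange basis function (nodes -1, 0, 1) at sample point t[p]
    B = np.array([t * (t - 1) / 2, 1 - t**2, t * (t + 1) / 2])
    nodes = np.array([-1.0, 0.0, 1.0])
    err = 0.0
    for i in range(n_cells):
        for j in range(n_cells):
            xc, yc = (2 * i + 1) * hh, (2 * j + 1) * hh
            X, Y = np.meshgrid(xc + hh * nodes, yc + hh * nodes, indexing="ij")
            a_I = B.T @ a(X, Y) @ B                 # tensor product interpolant at samples
            Xs, Ys = np.meshgrid(xc + hh * t, yc + hh * t, indexing="ij")
            err = max(err, np.max(np.abs(a_I - a(Xs, Ys))))
    return err

coeff = lambda x, y: np.exp(x + y)
interp_rate = np.log2(q2_interp_max_error(coeff, 8) / q2_interp_max_error(coeff, 16))
print(f"observed interpolation order: {interp_rate:.2f}")
```

An observed order close to \(k+1=3\) is consistent with the theorem; it is one order lower than the \((k+2)\)th order accuracy claimed for the resulting scheme.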

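Let \(s_{j}, t_j\) and \(w_j\) \((j=1,\cdots , k+1)\) be the Gauss–Lobatto quadrature points and weights for the interval \([-1,1]\). Since \({\hat{f}}\) coincides with its \(Q^k\) interpolant \({\hat{f}}_I\) at the quadrature points and the quadrature is exact for integration of \({\hat{f}}_I\), the quadrature can be expressed on \({\hat{K}}\) as

$$\begin{aligned} \sum _{i=1}^{k+1}\sum _{j=1}^{k+1} {\hat{f}}(s_i,t_j)w_iw_j=\iint _{{\hat{K}}}{\hat{f}}_I(s,t)\,dsdt, \end{aligned}$$

thus the quadrature error is related to the interpolation error:

$$\begin{aligned} \iint _{{\hat{K}}}{\hat{f}}(s,t)\,dsdt-\sum _{i=1}^{k+1}\sum _{j=1}^{k+1} {\hat{f}}(s_i,t_j)w_iw_j=\iint _{{\hat{K}}}\left( {\hat{f}}(s,t)-{\hat{f}}_I(s,t)\right) dsdt. \end{aligned}$$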

We have the following estimates on the quadrature error:

Theorem 2.3

For \(n=2\) and a sufficiently smooth function a(x, y), if \(k\ge 2\) and m is an integer satisfying \(k\le m\le 2k\), we have

Proof

Let E(a) denote the quadrature error for the function a(x, y) on e. Let \({\hat{E}}({\hat{a}})\) denote the quadrature error for the function \({\hat{a}}(s,t)=a(sh+x_e, th+y_e)\) on the reference cell \({\hat{K}}\). Then for any \({\hat{f}}\in H^{m}({\hat{K}})\) (\(m\ge k\ge 2\)), since the quadrature is represented by point values, by Sobolev’s embedding we have

Thus \({\hat{E}}(\cdot )\) is a continuous linear form on \(H^{m}({\hat{K}})\) and \({\hat{E}}({\hat{f}})=0\) if \({\hat{f}}\in Q^{m-1}({\hat{K}})\). With (2.1), the Bramble–Hilbert lemma implies

\(\square \)

Theorem 2.4

If \(k\ge 2\), \((f,v_h)-\langle f,v_h\rangle _h ={\mathcal {O}}(h^{k+2}) \Vert f\Vert _{k+2} \Vert v_h\Vert _2,\quad \forall v_h\in V^h.\)

Proof

This result is a special case of Theorem 5 in [10]. For completeness, we include a proof. Let \({\hat{E}}(\cdot )\) denote the quadrature error term on the reference cell \({\hat{K}}\). Consider the projection (2.2). Let \(\Pi _1\) denote the same projection on e. Since \({\hat{\Pi }}_1\) leaves \(Q^0({\hat{K}})\) invariant, by the Bramble–Hilbert lemma on \({\hat{\Pi }}_1\), we get \([{\hat{v}}_h-{\hat{\Pi }}_1 {\hat{v}}_h]_{1,{\hat{K}}}\le \Vert {\hat{v}}_h-{\hat{\Pi }}_1 {\hat{v}}_h\Vert _{1,{\hat{K}}} \le C [{\hat{v}}_h]_{1,{\hat{K}}}\), thus \([{\hat{\Pi }}_1 {\hat{v}}_h]_{1,{\hat{K}}}\le [{\hat{v}}_h]_{1,{\hat{K}}}+[{\hat{v}}_h-{\hat{\Pi }}_1 {\hat{v}}_h]_{1,{\hat{K}}}\le C [{\hat{v}}_h]_{1,{\hat{K}}}\). By setting \(w={\hat{\Pi }}_1{\hat{v}}_h\) in (2.2), we get \(|\hat{\Pi }_1 \hat{v}_h|_{0,{\hat{K}}}\le |{\hat{v}}_h|_{0,{\hat{K}}}\). For \(k\ge 2\), repeating the proof of Theorem 2.3, we get

where the fact \([{\hat{\Pi }}_1 {\hat{v}}_h]_{l,\infty ,{\hat{K}}}=0\) for \(l\ge 2\) is used. The equivalence of norms over \(Q^1({\hat{K}})\) implies

Next consider the linear form \({\hat{f}}\in H^k({\hat{K}})\rightarrow {\hat{E}}({\hat{f}} ({\hat{v}}_h-{\hat{\Pi }}_1 {\hat{v}}_h))\). Due to the embedding \(H^{k}({\hat{K}})\hookrightarrow C^0({\hat{K}})\), it is continuous with operator norm \(\le C\Vert {\hat{v}}_h-{\hat{\Pi }}_1 {\hat{v}}_h\Vert _{0,{\hat{K}}}\) since

For any \({\hat{f}}\in Q^{k-1}({\hat{K}})\), \({\hat{E}}({\hat{f}} {\hat{v}}_h)=0\). By the Bramble–Hilbert lemma, we get

So on a cell e, with (2.1), we get

Summing over e and using the Cauchy–Schwarz inequality, we get the desired result. \(\square \)

Theorem 2.5

For \(k\ge 2\), \((f,v_h)-(f_I,v_h) ={\mathcal {O}}(h^{k+2}) \Vert f\Vert _{k+2} \Vert v_h\Vert _2,\quad \forall v_h\in V^h.\)

Proof

Repeat the proof of Theorem 2.4 for the function \(f-f_I\) on a cell e, with the fact \([f_I]_{k+1,p,e}=[f_I]_{k+2,p,e}=0\), we get

By (2.3) on the Lagrange interpolation operator and the fact \([f-f_I]_{k,e}\le \Vert f-f_I\Vert _{k+1,e}\), we get \([f-f_I]_{k,e}\le Ch[f]_{k+1,e}\). Notice that \(\langle f-f_I, v_h\rangle _h=0\), with (2.1), we get

\(\square \)

3 The M-Type Projection

To establish the superconvergence of \(C^0\)-\(Q^k\) finite element method for multi-dimensional variable coefficient equations, it is necessary to use a special polynomial projection of the exact solution, which has two equivalent definitions. One is the M-type projection used in [5, 6]. The other one is the point-line-plane interpolation used in [19, 20].

For the sake of completeness, we review the relevant results regarding the M-type projection, which is a more convenient tool. Most results in this section were considered and established for more general rectangular elements in [6]. For simplicity, we use some simplified proofs and arguments for the \(Q^k\) element in this section. We only discuss the two-dimensional case; the extension to three dimensions is straightforward.

3.1 One Dimensional Case

The \(L^2\)-orthogonal Legendre polynomials on the reference interval \({\hat{K}}=[-1,1]\) are given as

$$\begin{aligned} l_k(t)=\frac{1}{2^kk!}\frac{d^k}{dt^k}(t^2-1)^k:\quad l_0(t)=1,\quad l_1(t)=t,\quad l_2(t)=\frac{1}{2}(3t^2-1),\quad \cdots \end{aligned}$$

Define their antiderivatives as M-type polynomials:

$$\begin{aligned} M_0(t)=1,\quad M_1(t)=t,\quad M_{k+1}(t)=\int _{-1}^t l_k(s)\,ds,\quad k\ge 1, \end{aligned}$$

which satisfy the following properties:

\(M_k(\pm 1)=0, \forall k\ge 2.\)

If \(j-i\ne 0, \pm 2\), then \(M_i(t)\perp M_j(t)\), i.e., \(\int _{-1}^1 M_i(t)M_j(t) dt=0.\)

Roots of \(M_k(t)\) are the k-point Gauss–Lobatto quadrature points for \([-1,1]\).
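
These three properties can be verified numerically. The sketch below assumes the normalization \(M_0=1\), \(M_1=t\) and \(M_j(t)=\int _{-1}^t l_{j-1}(s)ds\) for \(j\ge 2\), which is the choice consistent with \(M_k(\pm 1)=0\) for \(k\ge 2\); the helper names `m_type` and `inner` are hypothetical.

```python
import numpy as np
from numpy.polynomial import Legendre

def m_type(j):
    """M_j on [-1, 1] under the normalization assumed in the lead-in."""
    if j <= 1:
        return Legendre.basis(j)                     # M_0 = 1, M_1 = t
    return Legendre.basis(j - 1).integ(lbnd=-1)      # antiderivative of l_{j-1}, zero at -1

def inner(p, q):
    """L^2 inner product on [-1, 1] of two polynomials."""
    return (p * q).integ(lbnd=-1)(1.0)

# Property 1: M_k(+-1) = 0 for k >= 2
endpoint_values = [abs(m_type(k)(s)) for k in range(2, 7) for s in (-1.0, 1.0)]

# Property 2: M_i and M_j are orthogonal unless j - i is 0 or +-2
off_band = abs(inner(m_type(2), m_type(5)))          # j - i = 3, should vanish
in_band = abs(inner(m_type(2), m_type(4)))           # j - i = 2, need not vanish

# Property 3: roots of M_3 are the 3-point Gauss-Lobatto points -1, 0, 1
roots_m3 = np.sort(m_type(3).roots().real)
print(max(endpoint_values), off_band, in_band, roots_m3)
```

The banded orthogonality reflects the identity \((2j+1)\,l_j=l_{j+1}'-l_{j-1}'\), which expresses each \(M_{j+1}\) with \(j\ge 1\) as a combination of \(l_{j+1}\) and \(l_{j-1}\) only.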

Since Legendre polynomials form a complete orthogonal basis for \(L^{2}([-1,1])\), for any \(f(t)\in H^1([-1,1])\), its derivative \(f'(t)\) can be expressed as Fourier–Legendre series

$$\begin{aligned} f'(t)=\sum _{j=0}^{\infty }b_{j+1}l_j(t),\quad b_{j+1}=\left( j+\frac{1}{2}\right) \int _{-1}^1 f'(t)l_j(t)\,dt. \end{aligned}$$

Define the M-type projection

$$\begin{aligned} f_k(t)=\sum _{j=0}^{k}b_jM_j(t), \end{aligned}$$

where \(b_0=\frac{f(1)+f(-1)}{2}\) is determined by \(b_1=\frac{f(1)-f(-1)}{2}\) to make \(f_k(\pm 1)=f(\pm 1)\). Since the Fourier–Legendre series converges in \(L^2\), by Cauchy–Schwarz inequality,

We get the M-type expansion of f(t): \( f(t)=\lim \nolimits _{k\rightarrow \infty }f_k(t)=\sum \nolimits _{j=0}^{\infty }b_{j}M_j(t). \) The remainder \(R_k(t)\) of M-type projection is

$$\begin{aligned} R[f]_k(t)=f(t)-f_k(t)=\sum _{j=k+1}^{\infty }b_jM_j(t). \end{aligned}$$

The following properties are straightforward to verify:

\(f_k(\pm 1)=f(\pm 1)\) thus \(R_k(\pm 1)=0\) for \(k\ge 1\).

\(R[f]_k(t)\perp v(t)\) for any \(v(t)\in P^{k-2}(t)\) on \([-1,1]\), i.e., \(\int _{-1}^1 R[f]_k v dt=0\).

\(R[f]_k'(t)\perp v(t)\) for any \(v(t)\in P^{k-1}(t)\) on \([-1,1]\).

For \(j\ge 2\), \(b_j=(j-\frac{1}{2})\left[ \left. f(t)l_{j-1}(t)\right| _{-1}^1 -\int _{-1}^1 f(t)l'_{j-1}(t)dt\right] .\)

For \(j\le k\), \(|b_j|\le C_k \Vert f\Vert _{0, \infty , {\hat{K}}}.\)

\(\Vert R[f]_k(t)\Vert _{0, \infty , {\hat{K}}}\le C_k\Vert f\Vert _{0, \infty , {\hat{K}}}.\)
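
The first three properties can be checked numerically with a minimal sketch of the one-dimensional M-type projection. It assumes \(b_0=\frac{f(1)+f(-1)}{2}\), \(b_j=(j-\frac{1}{2})\int _{-1}^1f'(t)l_{j-1}(t)dt\) for \(j\ge 1\), and the normalization \(M_0=1\), \(M_1=t\), \(M_j(t)=\int _{-1}^tl_{j-1}(s)ds\) for \(j\ge 2\); the function names, the test function \(f=e^t\) and the use of a 40-point Gauss rule for the integrals are illustrative choices.

```python
import numpy as np
from numpy.polynomial import Legendre

def m_type(j):
    """M_j under the normalization assumed in the lead-in."""
    if j <= 1:
        return Legendre.basis(j)
    return Legendre.basis(j - 1).integ(lbnd=-1)

def m_projection(f, df, k, n_quad=40):
    """Return (f_k, b) with f_k = sum_{j <= k} b_j M_j on [-1, 1]; df is f'."""
    t, w = np.polynomial.legendre.leggauss(n_quad)
    b = [float(f(1.0) + f(-1.0)) / 2]
    for j in range(1, k + 1):
        b.append((j - 0.5) * float(np.sum(w * df(t) * Legendre.basis(j - 1)(t))))
    f_k = Legendre([0.0])
    for j, b_j in enumerate(b):
        f_k = f_k + m_type(j) * b_j
    return f_k, np.array(b)

def moment(values, m, t, w):
    """Quadrature approximation of the integral of values * t^m over [-1, 1]."""
    return float(np.sum(w * values * t**m))

k = 3
f_k, b = m_projection(np.exp, np.exp, k)
t, w = np.polynomial.legendre.leggauss(40)
R = np.exp(t) - f_k(t)                    # remainder R[f]_k at the quadrature points
dR = np.exp(t) - f_k.deriv()(t)           # its derivative (f' = exp for f = exp)
endpoint_gap = max(abs(f_k(1.0) - np.exp(1.0)), abs(f_k(-1.0) - np.exp(-1.0)))
max_moment_R = max(abs(moment(R, m, t, w)) for m in range(k - 1))   # degrees 0..k-2
max_moment_dR = max(abs(moment(dR, m, t, w)) for m in range(k))     # degrees 0..k-1
print(endpoint_gap, max_moment_R, max_moment_dR)
```

All three printed quantities should be at the level of rounding error, in line with the endpoint interpolation and the two orthogonality properties.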

3.2 Two Dimensional Case

Consider a function \({\hat{f}}(s,t)\in H^2({\hat{K}})\) on the reference cell \({\hat{K}}=[-\,1,1]\times [-\,1,1]\). It has the expansion

where

Define the \(Q^k\) M-type projection of \({\hat{f}}\) on \({\hat{K}}\) and its remainder as

For f(x, y) on \(e=[x_e-h, x_e+h]\times [y_e-h, y_e+h]\), let \({\hat{f}}(s,t)= f( sh+x_e, t h+y_e)\) then the \(Q^k\) M-type projection of f on e and its remainder are defined as

Theorem 3.1

The \(Q^k\) M-type projection is equivalent to the \(Q^k\) point-line-plane projection \(\Pi \) defined as follows:

1. \(\Pi {\hat{u}}={\hat{u}}\) at the four corners of \({\hat{K}}=[-1,1]\times [-1,1]\).

2. \(\Pi {\hat{u}}-{\hat{u}}\) is orthogonal to polynomials of degree \(k-2\) on each edge of \({\hat{K}}\).

3. \(\Pi {\hat{u}}-{\hat{u}}\) is orthogonal to any \(v\in Q^{k-2}({\hat{K}})\) on \({\hat{K}}\).

Proof

We only need to show that M-type projection \({\hat{f}}_{k,k}(s,t)\) satisfies the same three properties. By \(M_j(\pm 1)=0\) for \(j\ge 2\), we can derive that \({\hat{f}}_{k,k}={\hat{f}}\) at \((\pm 1,\pm 1)\). For instance, \({\hat{f}}_{k,k}(1,1)={\hat{b}}_{0,0}+{\hat{b}}_{1,0}+{\hat{b}}_{0,1}+{\hat{b}}_{1,1}={\hat{f}}(1,1)\).

The second property is implied by \(M_j(\pm 1)=0\) for \(j\ge 2\) and \(M_j(t)\perp P^{k-2}(t)\) for \(j\ge k+1\). For instance, at \(s=1\), \({\hat{f}}_{k,k}(1,t)-{\hat{f}}(1,t)=\sum \nolimits _{j=k+1}^\infty ({\hat{b}}_{0,j}+{\hat{b}}_{1,j}) M_j(t)\perp P^{k-2}(t)\) on \([-1,1]\).

The third property is implied by \(M_j(t)\perp P^{k-2}(t)\) for \(j\ge k+1\). \(\square \)

Lemma 3.1

Assume \({\hat{f}}\in H^{k+1}({\hat{K}})\) with \(k\ge 2\), then

1. \(|{\hat{b}}_{i,j}|\le C_k \Vert {\hat{f}}\Vert _{0,\infty , {\hat{K}}},\quad \forall i,j\le k\).

2. \(|{\hat{b}}_{i,j}|\le C_k |{\hat{f}}|_{i+j,2,{\hat{K}}},\quad \forall i,j\ge 1, i+j\le k+1.\)

3. \(|{\hat{b}}_{i,k+1}|\le C_k |{\hat{f}}|_{k+1,2,{\hat{K}}},\quad 0\le i\le k+1.\)

4. If \({\hat{f}}\in H^{k+2}({\hat{K}})\), then \(|{\hat{b}}_{i,k+1}|\le C_k |{\hat{f}}|_{k+2,2,{\hat{K}}},\quad 1\le i\le k+1.\)

Proof

First of all, similar to the one-dimensional case, through integration by parts, \({\hat{b}}_{i,j}\) can be represented as integrals of \({\hat{f}}\) thus \(|{\hat{b}}_{i,j}|\le C_k \Vert {\hat{f}}\Vert _{0,\infty , {\hat{K}}}\) for \(i,j\le k\).

By the fact that the antiderivatives (and higher order ones) of Legendre polynomials vanish at \(\pm \,1\), after integration by parts for both variables, we have

For the third estimate, by integration by parts only for the variable t, we get

For \({\hat{b}}_{0,k+1}\), from the first estimate, we have \(|{\hat{b}}_{0,k+1}|\le C_k\Vert {\hat{f}}\Vert _{0,\infty ,{\hat{K}}}\le C_k \Vert {\hat{f}}\Vert _{k+1,2,{\hat{K}}}\) thus \({\hat{b}}_{0,k+1}\) can be regarded as a continuous linear form on \(H^{k+1}({\hat{K}})\) and it vanishes if \({\hat{f}} \in Q^k({\hat{K}})\). So by the Bramble–Hilbert Lemma, \(|{\hat{b}}_{0,k+1}|\le C_k[{\hat{f}}]_{k+1,2,{\hat{K}}}\).

Finally, by integration by parts only for the variable t, we get

\(\square \)

Lemma 3.2

For \(k\ge 2\), we have

1. \(|{\hat{R}}[{\hat{f}}]_{k,k}|_{0,\infty ,{\hat{K}}}\le C_k [{\hat{f}}]_{k+1,{\hat{K}}}\), \(|{\hat{R}}[{\hat{f}}]_{k,k}|_{0,2, {\hat{K}}}\le C_k [{\hat{f}}]_{k+1,{\hat{K}}}\).

2. \(|\partial _s {\hat{R}}[{\hat{f}}]_{k,k}|_{0,\infty , {\hat{K}}}\le C_k [{\hat{f}}]_{k+1,{\hat{K}}}\), \(|\partial _s {\hat{R}}[{\hat{f}}]_{k,k}|_{0,2, {\hat{K}}}\le C_k [{\hat{f}}]_{k+1,{\hat{K}}}\).

3. \(\iint _{{\hat{K}}}\partial _s {\hat{R}}[{\hat{f}}]_{k,k} ds dt=0\).

Proof

Lemma 3.1 implies \(\Vert {\hat{f}}_{k,k}\Vert _{0,\infty , {\hat{K}}}\le C_k \Vert {\hat{f}}\Vert _{0,\infty , {\hat{K}}}\) and \(\Vert \partial _s {\hat{f}}_{k,k}\Vert _{0,\infty , {\hat{K}}}\le C_k \Vert {\hat{f}}\Vert _{0,\infty , {\hat{K}}}\). Thus

Notice that here \(C_k\) does not depend on (s, t). So \(R[{\hat{f}}]_{k,k}(s,t)\) is a continuous linear form on \(H^{k+1}({\hat{K}})\) and its operator norm is bounded by a constant independent of (s, t). Since it vanishes for any \({\hat{f}}\in Q^{k}({\hat{K}})\), by the Bramble–Hilbert Lemma, we get \(|R[{\hat{f}}]_{k,k}(s,t)|\le C_k [{\hat{f}}]_{k+1,{\hat{K}}}\) where \(C_k\) does not depend on (s, t). So the \(L^\infty \) estimate holds and it implies the \(L^2\) estimate.

The second estimate can be established similarly since we have

The third equation is implied by the fact that \(M_j(t)\perp 1\) for \(j\ge 3\) and \(M'_j(t)\perp 1\) for \(j\ge 2\). Another way to prove the third equation is to use integration by parts

which is zero by the second property in Theorem 3.1. \(\square \)

For the discussion in the next few subsections, it is useful to consider the lower order part of the remainder of \({\hat{R}}[{\hat{f}}]_{k,k}\):

Lemma 3.3

For \({\hat{f}}\in H^{k+2}({\hat{K}})\) with \(k\ge 2\), define \({\hat{R}}[{\hat{f}}]_{k+1,k+1}-{\hat{R}}[{\hat{f}}]_{k,k}={\hat{R}}_1+{\hat{R}}_2\) with

They have the following properties:

1. \(\iint _{{\hat{K}}} \partial _s {\hat{R}}_1 ds dt=0\).

2. \(|\partial _s {\hat{R}}_1|_{0,\infty ,{\hat{K}}}\le C_k|{\hat{f}} |_{k+2,2,{\hat{K}}}\), \(|\partial _s {\hat{R}}_1|_{0,2,{\hat{K}}}\le C_k|{\hat{f}} |_{k+2,2,{\hat{K}}}.\)

3. \(|{\hat{b}}_{k+1}(t)|\le C_k |{\hat{f}}|_{k+1,{\hat{K}}}\), \(|{\hat{b}}'_{k+1}(t)|\le C_k |{\hat{f}}|_{k+2,{\hat{K}}}\), \(\forall t\in [-1,1]\).

Proof

The first equation is due to the fact that \(M_{k+1}(t)\perp 1\) since \(k\ge 2.\)

Notice that \(M'_0(s)=0\), by Lemma 3.1, we have

So we get the \(L^\infty \) estimate for \(|\partial _s {\hat{R}}_1(s,t)|\) thus the \(L^2\) estimate.

Similar to the estimates in Lemma 3.1, we can show \(|{\hat{b}}_{k+1,j}|\le C_k |{\hat{f}}|_{k+1,{\hat{K}}}\) for \(j\le k+1\), thus \(|b_{k+1}(t)|\le C_k |{\hat{f}}|_{k+1,{\hat{K}}}\). Since \(b_{k+1}'(t)= \sum \nolimits _{j=1}^{k+1}{\hat{b}}_{k+1,j}M_j'(t)\), by the last estimate in Lemma 3.1, we get \(|{\hat{b}}'_{k+1}(t)|\le C_k |{\hat{f}}|_{k+2,{\hat{K}}}\). \(\square \)

3.3 The \(C^0\)-\(Q^k\) Projection

Now consider a function \(u(x,y)\in H^{k+2}(\Omega )\), let \(u_p(x,y)\) denote its piecewise \(Q^k\) M-type projection on each element e in the mesh \(\Omega _h\). The first two properties in Theorem 3.1 imply that \(u_p(x,y)\) on each edge is uniquely determined by u(x, y) along that edge. Thus \(u_p(x,y)\) is continuous on \(\Omega _h\). The approximation error \(u-u_p\) is one order higher at all Gauss–Lobatto points \(Z_0\):

Theorem 3.2

Proof

Consider any e with cell center \((x_e, y_e)\), define \({\hat{u}}(s,t)=u(x_e+sh, y_e+th)\). Since the \((k+1)\) Gauss–Lobatto points are roots of \(M_{k+1}(t)\), \({\hat{R}}_{k+1,k+1}[{\hat{u}}]-{\hat{R}}_{k,k}[{\hat{u}}]\) vanishes at \((k+1)\times (k+1)\) Gauss–Lobatto points on \({\hat{K}}\). By Lemma 3.2, we have \(|{\hat{R}}_{k+1,k+1}[{\hat{u}}](s,t)|\le C [{\hat{u}}]_{k+2,{\hat{K}}}\).

Mapping back to the cell e, with (2.1), at the \((k+1)\times (k+1)\) Gauss–Lobatto points on e, \(|u-u_p|\le C h^{k+2-\frac{n}{2}}[u]_{k+2, e}\). Summing over all elements e, we get

If further assuming \(u\in W^{k+2,\infty }(\Omega )\), then at the \((k+1)\times (k+1)\) Gauss–Lobatto points on e, \(|u-u_p|\le C h^{k+2-\frac{n}{2}}[u]_{k+2, e}\le C h^{k+2} [u]_{k+2,\infty ,\Omega }\), which implies the second estimate. \(\square \)
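
The mechanism of this proof can be observed in a one-dimensional analogue, sketched below under stated assumptions: the M-type projection on a cell \([x_c-h,x_c+h]\) uses \(b_0=\frac{{\hat{f}}(1)+{\hat{f}}(-1)}{2}\), \(b_j=(j-\frac{1}{2})\int _{-1}^1{\hat{f}}'(t)l_{j-1}(t)dt\) and \(M_j(t)=\int _{-1}^tl_{j-1}(s)ds\) for \(j\ge 2\) with \(M_1=t\). The function names, the test function \(e^x\), the cell center 0.3 and the two cell sizes are hypothetical choices for illustration.

```python
import numpy as np
from numpy.polynomial import Legendre

def remainder_at(f, df, xc, h, k, t_eval, n_quad=40):
    """Remainder f - f_k of the 1D M-type projection on [xc-h, xc+h], at reference point t_eval."""
    t, w = np.polynomial.legendre.leggauss(n_quad)
    fhat = lambda s: f(xc + h * s)
    dfhat = lambda s: h * df(xc + h * s)              # chain rule for the mapped function
    value = (fhat(1.0) + fhat(-1.0)) / 2              # b_0 * M_0
    for j in range(1, k + 1):
        b_j = (j - 0.5) * float(np.sum(w * dfhat(t) * Legendre.basis(j - 1)(t)))
        M_j = Legendre.basis(1) if j == 1 else Legendre.basis(j - 1).integ(lbnd=-1)
        value += b_j * M_j(t_eval)
    return fhat(t_eval) - value

def observed_rate(t_eval, k=2, xc=0.3, h=0.1):
    """Order of the remainder at t_eval when the cell half-width is halved."""
    r_coarse = abs(remainder_at(np.exp, np.exp, xc, h, k, t_eval))
    r_fine = abs(remainder_at(np.exp, np.exp, xc, h / 2, k, t_eval))
    return np.log2(r_coarse / r_fine)

rate_lobatto = observed_rate(0.0)     # t = 0 is an interior 3-point Gauss-Lobatto point
rate_generic = observed_rate(0.5)     # t = 0.5 is not a Gauss-Lobatto point
print(f"order at Lobatto point: {rate_lobatto:.2f}, at generic point: {rate_generic:.2f}")
```

At the Lobatto point the leading term \(b_{k+1}M_{k+1}\) of the remainder vanishes, so the observed order should be close to \(k+2=4\), while at a generic point it should be close to \(k+1=3\).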

3.4 Superconvergence of Bilinear Forms

For convenience, in this subsection, we drop the subscript h in a test function \(v_h\in V^h\). When there is no confusion, we may also drop dxdy or dsdt in a double integral.

Lemma 3.4

Assume \(a(x,y)\in W^{2,\infty }(\Omega ).\) For \(k\ge 2\),

Proof

For each cell e, we consider \(\iint _{e}a(u-u_p)_xv_x \,dxdy\). Let \(R[u]_{k,k}=u-u_p\) denote the M-type projection remainder on e. Then \(R[u]_{k,k}\) can be split into a lower order part \(R[u]_{k,k}-R[u]_{k+1,k+1}\) and a higher order part \(R[u]_{k+1,k+1}\).

We first consider the higher order part. Map everything to the reference cell \({\hat{K}}\) and let \(\overline{{\hat{a}}{\hat{v}}_s}\) denote the average of \({\hat{a}}{\hat{v}}_s\) on \({\hat{K}}\). By the last property in Lemma 3.2, we get

By Poincaré inequality and Cauchy–Schwarz inequality, we have

Mapping back to the cell e, with (2.1), by Lemma 3.2, the higher order part is bounded by \(C h^{k+2}[u]_{k+2,2,e}(|a|_{1,\infty , e} |v|_{1,2,e}+|a|_{0,\infty , e} |v|_{2,2,e})\) thus

Now we only need to discuss the lower order part of the remainder. Let \(R[u]_{k,k}-R[u]_{k+1,k+1}=R_1+R_2\) which is defined similarly as in (3.1). For \(R_1\), by the first two results in Lemma 3.3, we have

By similar discussions above, we get

For \(R_2\), let N(s) be the antiderivative of \(M_{k+1}(s)\), then \(N(\pm 1)=0\). Let \(\bar{{\hat{a}}}\) be the average of \({\hat{a}}\) on \({\hat{K}}\), then \(|{\hat{a}}-\bar{{\hat{a}}}|_{0,\infty , {\hat{K}}}\le C |{\hat{a}}|_{1,\infty ,{\hat{K}}}\). Since \(M_{k+1}(s)\perp P^{k-2}(s)\), we have \(\iint _{{\hat{K}}}{\hat{b}}_{k+1}(t) M_{k+1}(s){\hat{v}}_{ss}=0.\) After integration by parts, by Lemma 3.3 we have

Thus we can get

So we have \(\iint _{\Omega }a(u-u_p)_xv_x \,dxdy={\mathcal {O}} (h^{k+2})\Vert a\Vert _{2,\infty ,\Omega } \Vert u\Vert _{k+2}\Vert v\Vert _2,\quad \forall v\in V^h.\)\(\square \)
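Two facts used for the \(R_2\) term, namely \(M_{k+1}(s)\perp P^{k-2}(s)\) and \(N(\pm 1)=0\), can be verified with a short script. This is only a sketch under the assumed normalization \(M_{k+1}=\frac{1}{2k+1}(l_{k+1}-l_{k-1})\) with \(N(-1)=0\) fixing the antiderivative; the helper names `m_type` and `inner` are hypothetical.

```python
from numpy.polynomial import Legendre, Polynomial


def m_type(k):
    """M_{k+1} under the assumed normalization (l_{k+1} - l_{k-1}) / (2k+1)."""
    return (Legendre.basis(k + 1) - Legendre.basis(k - 1)) / (2 * k + 1)


def inner(p, q):
    """L^2(-1, 1) inner product of two polynomials via an antiderivative
    of their product in the monomial basis."""
    product = p.convert(kind=Polynomial) * q.convert(kind=Polynomial)
    antiderivative = product.integ()
    return antiderivative(1.0) - antiderivative(-1.0)


for k in range(2, 6):
    M = m_type(k)
    # Orthogonality to every monomial of degree at most k-2.
    for j in range(k - 1):
        assert abs(inner(M, Polynomial.basis(j))) < 1e-12
    # N is the antiderivative of M_{k+1} chosen so that N(-1) = 0.
    N = M.integ(lbnd=-1)
    assert abs(N(-1.0)) < 1e-12 and abs(N(1.0)) < 1e-12
```

The vanishing of \(N(1)\) is the special case of the orthogonality with the constant function, which is why it requires \(k\ge 2\).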

Lemma 3.5

Assume \(c(x,y)\in W^{1,\infty }(\Omega ).\) For \(k\ge 2\),

Proof

Let \(\overline{{\hat{c}}{\hat{v}}}\) be the average of \({\hat{c}}{\hat{v}}\) on \({\hat{K}}\). Following similar arguments as in the proof of Lemma 3.4,

So with (2.1) we have

which implies the estimate. \(\square \)

Lemma 3.6

Assume \(b(x,y)\in W^{2,\infty }(\Omega ).\) For \(k\ge 2\),

Proof

Let \(\overline{{\hat{b}}{\hat{v}}}\) be the average of \({\hat{b}}{\hat{v}}\) on \({\hat{K}}\). Following similar arguments as in the proof of Lemma 3.4, we have

Let N(s) be the antiderivative of \(M_{k+1}(s)\). After integration by parts, we have

After combining all the estimates, with (2.1), we have

\(\square \)

Lemma 3.7

Assume \(a(x,y)\in W^{2,\infty }(\Omega ).\) For \(k\ge 2\),

Proof

Similar to the proof of Lemma 3.4, we have

and

Following the proof of Lemma 3.4, with (2.1), we get

Let N(s) be the antiderivative of \(M_{k+1}(s)\). After integration by parts, we have

After integration by parts on the t-variable,

By Lemma 3.3, we have the estimate for the two double integral terms

which gives the estimate \(C h^{k+2}\Vert a\Vert _{2,\infty ,\Omega }\Vert u\Vert _{k+2,e}\Vert v\Vert _{k+2,e}\) after mapping back to e.

So we only need to discuss the line integral term now. After mapping back to e, we have

Notice that we have

and similarly we get \(b_{k+1}(y_e-h)={\hat{b}}_{k+1}(-1)=(k+\frac{1}{2})\int _{x_e-h}^{x_e+h} \partial _x u(x,y_e-h) l_k(\frac{x-x_e}{h})dx\). Thus the term \(b_{k+1}(y)M_{k+1}(\frac{x-x_e}{h})a v_{xx}\) is continuous across the top/bottom edges of cells. Therefore, after summing over all elements e, the line integrals on the inner edges cancel out. Let \(L_1\) and \(L_3\) denote the top and bottom boundaries of \(\Omega \). Then the line integral after summing over e consists of two line integrals along \(L_1\) and \(L_3\). We only need to discuss one of them.

Let \(l_1\) and \(l_3\) denote the top and bottom edge of e. First, after integration by parts k times, we get

thus by Cauchy–Schwarz inequality we get

Second, since \(v^2_{xx}\) is a polynomial of degree 2k w.r.t. y variable, by using \((k+2)\)-point Gauss–Lobatto quadrature for integration w.r.t. y-variable in \(\iint _e v^2_{xx} dx dy \), we get

So by Cauchy–Schwarz inequality, we have

Thus the line integral along \(L_1\) can be estimated by considering each e adjacent to \(L_1\) in the reference cell:

where the trace inequality \( \Vert u\Vert _{k+1,\partial \Omega } \le C \Vert u\Vert _{k+2, \Omega }\) is used.

Combining all the estimates above, we get (3.2). Since the \(\frac{1}{2}\) order loss is only due to the line integral along \(L_1\) and \(L_3\), on which \(v_{xx}=0\) if \(v\in V^h_0\), we get (3.3). \(\square \)

4 The Main Result

4.1 Superconvergence of Bilinear Forms with Approximated Coefficients

Even though the standard interpolation error is \(a-a_I={\mathcal {O}} (h^{k+1})\), as shown in the following discussion, the error in the bilinear forms is related to \(\iint _{e} (a- a_I)\, dxdy\) on each cell e, which is a quadrature error and is therefore of higher order. We have the following estimate on the bilinear forms with approximated coefficients:

Lemma 4.1

Assume \(a(x,y)\in W^{k+2,\infty }(\Omega )\) and \(u(x,y)\in H^2(\Omega )\), then \(\forall v\in V^h\) or \(\forall v\in H^2(\Omega ),\)

Proof

For every cell e in the mesh \(\Omega _h\), let \(\overline{u_xv_x}\) be the cell average of \({u_xv_x}\). By Theorems 2.2 and 2.3, we have

By Poincaré inequality and Cauchy–Schwarz inequality, we have

thus \(\iint _e (a_I-a)u_xv_x=\mathcal O(h^{k+2})\Vert a\Vert _{k+2,\infty ,\Omega }\Vert u\Vert _{2,e}\Vert v\Vert _{2,e}.\) Summing over all elements e, we have \(\iint _{\Omega } (a_I-a)u_xv_x={\mathcal {O}}(h^{k+2}) \Vert a\Vert _{k+2,\infty ,\Omega } \Vert u\Vert _2\Vert v\Vert _2.\) Similarly we can establish the other three estimates. \(\square \)
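The gap between the pointwise interpolation error and the cell-integrated error, which drives the estimate above, can be observed in a one-dimensional analogue with \(k=2\). In that setting the interpolant at the three Gauss–Lobatto points has pointwise error of order \(h^3\), while its integral over the cell is the Simpson's rule error of order \(h^5\). The code below is a sketch of that experiment only; the test function `np.exp`, the cell center 0.3 and the function names are arbitrary choices made for illustration, not taken from the paper.

```python
import numpy as np
from numpy.polynomial.legendre import leggauss


def q2_cell_errors(a, center, h):
    """Interpolate a at the 3 Gauss-Lobatto points of [center-h, center+h].
    Return (max pointwise error, |integral of the error over the cell|)."""
    t_nodes = np.array([-1.0, 0.0, 1.0])
    # Quadratic interpolant expressed in the reference coordinate t.
    coeffs = np.polyfit(t_nodes, a(center + h * t_nodes), 2)
    t_fine = np.linspace(-1.0, 1.0, 401)
    max_err = np.max(np.abs(a(center + h * t_fine) - np.polyval(coeffs, t_fine)))
    # Accurate reference integration of the error with a 10-point Gauss rule.
    t_g, w_g = leggauss(10)
    err_g = a(center + h * t_g) - np.polyval(coeffs, t_g)
    int_err = abs(h * np.dot(w_g, err_g))
    return max_err, int_err


def observed_rates(a, center, h):
    """Convergence rates estimated from cell half-widths h and h/2."""
    m1, i1 = q2_cell_errors(a, center, h)
    m2, i2 = q2_cell_errors(a, center, h / 2)
    return np.log2(m1 / m2), np.log2(i1 / i2)


rate_max, rate_int = observed_rates(np.exp, 0.3, 0.1)
print(f"pointwise rate ~ {rate_max:.2f}, integral rate ~ {rate_int:.2f}")
```

The two extra orders in the integrated error are specific to this one-dimensional single-cell setting; the rates in Lemma 4.1 concern the full bilinear form after summation over all cells.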

Lemma 4.1 implies that the difference in the solutions to (1.6) and (1.1) is \(\mathcal O(h^{k+2})\) in the \(L^2(\Omega )\)-norm:

Theorem 4.1

Assume \(a(x,y)\in W^{k+2,\infty }(\Omega )\) and \(a_I(x,y)\ge C>0\). Let \(u, {{\tilde{u}}}\in H_0^1(\Omega )\) be the solutions to

and

respectively, where \(f\in L^2(\Omega )\). Then \(\Vert u-\tilde{u}\Vert _0={\mathcal {O}}(h^{k+2}) \Vert a\Vert _{k+2,\infty ,\Omega } \Vert f\Vert _0.\)

Proof

By Lemma 4.1, for any \(v\in H^2 (\Omega )\) we have

Let \(w\in H_0^1(\Omega )\) be the solution to the dual problem

Since \(a_I\ge C>0\) and \(|a_I(x,y)|\le C|a(x,y)|\), the coercivity and boundedness of the bilinear form \(A_I\) hold [8]. Moreover, \(a_I\) is Lipschitz continuous because \(a(x,y)\in W^{k+2,\infty }(\Omega )\). Thus the solution w exists and the elliptic regularity \(\Vert w\Vert _2\le C \Vert u-{{\tilde{u}}}\Vert _0\) holds on a convex domain, e.g., a rectangular domain \(\Omega \), see [14]. Thus,

With elliptic regularity \(\Vert w\Vert _2\le C \Vert u-{{\tilde{u}}}\Vert _0\) and \(\Vert u\Vert _2\le C\Vert f\Vert _0\), we get

\(\square \)

Remark 1

For even number \(k\ge 4\), \((k+1)\)-point Newton-Cotes quadrature rule has the same error order as the \((k+1)\)-point Gauss–Lobatto quadrature rule. Thus Theorem 4.1 still holds if we redefine \(a_I(x,y)\) as the \(Q^k\) interpolant of a(x, y) at the uniform \((k+1)\times (k+1)\) Newton-Cotes points in each cell if \(k\ge 4\) is even.

4.2 The Variable Coefficient Poisson Equation

Let \(u(x,y)\in H^1_0(\Omega )\) be the exact solution to

Let \({{\tilde{u}}}_h\in V^h_0(\Omega )\) be the solution to

Theorem 4.2

For \(k\ge 2\), let \(u_p\) be the piecewise \(Q^k\) M-type projection of u(x, y) on each cell e in the mesh \(\Omega _h\). Assume \(a\in W^{k+2,\infty }(\Omega )\) and \(u, f\in H^{k+2}(\Omega )\), then

Proof

For any \(v_h\in V^h\), we have

Lemma 4.1 implies \(A(u,v_h)-A_I(u,v_h)=\mathcal O(h^{k+2})\Vert a\Vert _{k+2,\infty }\Vert u\Vert _2\Vert v_h\Vert _2\). Theorem 2.4 gives \(\langle f,v_h\rangle _h-(f,v_h)=\mathcal O(h^{k+2})\Vert f\Vert _{k+2}\Vert v_h\Vert _2\). By Lemma 3.4, \(A(u-u_p,v_h)=\mathcal O(h^{k+2})\Vert a\Vert _{2,\infty }\Vert u\Vert _{k+2}\Vert v_h\Vert _2\).

For the second term \(A_I(u-u_p,v_h)-A(u-u_p,v_h)=\iint _\Omega (a-a_I) \nabla (u-u_p)\nabla v_h\), by Theorem 2.2 and Lemma 3.2, we have

\(\square \)

Theorem 4.3

Assume \(a(x,y)\in W^{k+2,\infty }(\Omega )\) is positive and \(u(x,y), f(x,y)\in H^{k+2}(\Omega )\). Assume the mesh is fine enough so that the piecewise \(Q^k\) interpolant satisfies \(a_I(x,y)\ge C>0\). Then \({{\tilde{u}}}_h\) is a (\(k+2\))th order accurate approximation to u in the discrete 2-norm over all the \((k+1)\times (k+1)\) Gauss–Lobatto points:

Proof

Let \(\theta _h={\tilde{u}}_h-u_p\). By the definition of \(u_p\) and Theorem 3.1, it is straightforward to show \(\theta _h=0\) on \(\partial \Omega \). By the Aubin–Nitsche duality method, let \(w\in H_0^1(\Omega )\) be the solution to the dual problem

By the same discussion as in the proof of Theorem 4.1, the solution w exists and the regularity \(\Vert w\Vert _2\le C \Vert \theta _h\Vert _0\) holds.

Let \(w_h\) be the finite element projection of w, i.e., \(w_h\in V_0^h\) satisfies

Since \(w_h\in V^h_0\), by Theorem 4.2, we have

Let \(w_I=\Pi _1 w\) be the piecewise \(Q^1\) projection of w on \(\Omega _h\) as defined in (2.2). By the Bramble–Hilbert Lemma, we get \(\Vert w-w_I\Vert _{2,e}\le C [w]_{2,e}\le C\Vert w\Vert _{2,e}\) thus

By the inverse estimate on the piecewise polynomial \(w_h-w_I\), we have

With coercivity, Galerkin orthogonality and Cauchy–Schwarz inequality, we get

which implies

With (4.2), (4.3) and the elliptic regularity \(\Vert w\Vert _2\le C \Vert \theta _h\Vert _0\), we get

i.e.,

Finally, by the equivalence between the discrete 2-norm on \(Z_0\) and the \(L^2(\Omega )\) norm in the space \(V^h\), with Theorem 3.2, we obtain

\(\square \)

Remark 2

To extend Theorem 4.3 to homogeneous Neumann boundary conditions or mixed homogeneous Dirichlet and Neumann boundary conditions, dual problems with the same homogeneous boundary conditions as in primal problems should be used. Then all the estimates such as Theorem 4.2 hold not only for \(v\in V_0^h\) but also for any v in \(V^h\).

Remark 3

With Theorem 2.5, all the results hold for the scheme (1.5).

Remark 4

It is straightforward to verify that all results hold in three dimensions. Notice that in three dimensions the discrete 2-norm is

Remark 5

For discussing superconvergence of the scheme (1.7), we have to consider the dual problem of the bilinear form A instead, and the exact Galerkin orthogonality in (1.7) no longer holds. In order for the proof above to hold, we need to show that the Galerkin orthogonality in (1.7) holds up to \({\mathcal {O}}(h^{k+2})\Vert v_h\Vert _2\) for a test function \(v_h\in V_h\), which is very difficult to establish. This is the main difficulty in extending the proof of Theorem 4.3 to the Gauss–Lobatto quadrature scheme (1.7), which will be analyzed in [18] by different techniques.

4.3 General Elliptic Problems

In this section, we discuss extensions to more general elliptic problems. Consider an elliptic variational problem of finding \(u\in H_0^1(\Omega )\) to satisfy

where \({\mathbf {a}}(x,y)=\begin{pmatrix} a_{11} &{}\quad a_{12}\\ a_{21} &{}\quad a_{22} \end{pmatrix} \) is positive definite and \({\mathbf {b}}=[b_1 \quad b_2]\). Assume the coefficients \({\mathbf {a}}\), \({\mathbf {b}}\) and c are smooth, and A(u, v) satisfies coercivity \(A(v,v)\ge C \Vert v\Vert _1^2\) and boundedness \(|A(u,v)|\le C \Vert u\Vert _1 \Vert v\Vert _1\) for any \(u,v\in H^1_0(\Omega )\).

By the estimates in Sect. 3.4, we first have the following estimate on the \(Q^k\) M-type projection \(u_p\):

Lemma 4.2

Assume \(a_{ij}(x,y), b_i(x,y)\in W^{2,\infty }(\Omega )\), then

If \(a_{12}=a_{21}\equiv 0\), then

Let \({\mathbf {a}}_I\), \(b_I\) and \(c_I\) denote the corresponding piecewise \(Q^k\) Lagrange interpolation at Gauss–Lobatto points. We are interested in the solution \({{\tilde{u}}}_h\in V^h_0\) to

We need to assume that \(A_I\) still satisfies coercivity \(A_I(v,v)\ge C \Vert v\Vert _1^2\) and boundedness \(|A_I(u,v)|\le C \Vert u\Vert _1 \Vert v\Vert _1\) for any \(u,v\in H^1_0(\Omega )\), so that the solution \(u\in H^1_0(\Omega )\) of the following problem exists and is unique:

We also need the elliptic regularity to hold for the dual problem:

For instance, if \({\mathbf {b}}\equiv 0\), it suffices to require that the eigenvalues of \({\mathbf {a}}_I+c_I\begin{pmatrix} 1 &{}\quad 0\\ 0&{}\quad 1 \end{pmatrix} \) have a uniform positive lower bound on \(\Omega \), which is achievable on fine enough meshes if \({\mathbf {a}}+c\begin{pmatrix} 1 &{}\quad 0\\ 0&{}\quad 1 \end{pmatrix} \) is positive definite. This implies the coercivity of \(A_I\). The boundedness of \(A_I\) follows from the smoothness of coefficients. Since \({\mathbf {a}}_I\) and \(c_I\) are Lipschitz continuous, the elliptic regularity for \(A_I\) holds on a convex domain [14].

By Lemmas 4.1 and 4.2, it is straightforward to extend Theorem 4.2 to the general elliptic case:

Theorem 4.4

For \(k\ge 2\), assume \(a_{ij}, b_i, c\in W^{k+2,\infty }(\Omega )\) and \(u, f\in H^{k+2}(\Omega )\), then

And if \(a_{12}=a_{21}\equiv 0\), then

With suitable assumptions, it is straightforward to extend the proof of Theorem 4.3 to the general case:

Theorem 4.5

For \(k\ge 2\), assume \(a_{ij}, b_i, c\in W^{k+2,\infty }(\Omega )\) and \(u, f\in H^{k+2}(\Omega )\). Assume the approximated bilinear form \(A_I\) satisfies coercivity and boundedness and the elliptic regularity still holds for the dual problem of \(A_I\). Then \(\tilde{u}_h\) is a \((k+2)\)th order accurate approximation to u in the discrete 2-norm over all the \((k+1)\times (k+1)\) Gauss–Lobatto points:

Remark 6

With Neumann type boundary conditions, due to Lemma 3.7, we can only prove \((k+1.5)\)th order accuracy

unless there are no mixed second order derivatives in the elliptic equation, i.e., \(a_{12}=a_{21}\equiv 0.\) We emphasize that even for the full finite element scheme (1.3), only \((k+1.5)\)-th order accuracy at all Lobatto points can be proven for a general elliptic equation with Neumann type boundary conditions.

5 Numerical Results

In this section we show some numerical tests of \(C^0\)-\(Q^2\) finite element method on a uniform rectangular mesh and verify the order of accuracy at \(Z_0\), i.e., all Gauss–Lobatto points. The following four schemes will be considered:

1. Full \(Q^2\) finite element scheme (1.3) where integrals in the bilinear form are approximated by \(5\times 5\) Gauss quadrature rule, which is exact for \(Q^{9}\) polynomials thus exact for \(A(u_h,v_h)\) if the variable coefficient is a \(Q^5\) polynomial.

2. The Gauss–Lobatto quadrature scheme (1.7): all integrals are approximated by \(3\times 3\) Gauss–Lobatto quadrature.

3. The approximated coefficient scheme (1.4).

4. The approximated coefficient scheme (1.5).

The last three schemes are finite difference type since only grid point values of the coefficients are needed. In (1.4) and (1.5), \(A_I(u_h, v_h)\) can be exactly computed by \(4\times 4\) Gauss quadrature rule since coefficients are \(Q^2\) polynomials. An alternative finite difference type implementation of (1.4) and (1.5) is to precompute integrals of Lagrange basis functions and their derivatives to form a sparse tensor, then multiply the tensor by the vector consisting of point values of the coefficient to form the stiffness matrix. With either implementation, the computational cost to assemble stiffness matrices in schemes (1.4) and (1.5) is higher than the cost of assembling the stiffness matrix in the simpler scheme (1.7), since the Lagrangian \(Q^k\) basis functions are delta functions at Gauss–Lobatto points.
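The tensor-based assembly described above can be illustrated in a one-dimensional analogue. The sketch below is not the authors' implementation: it assumes a single quadratic element with half-width h, precomputes \(T_{ijm}=\int _{-1}^{1}\phi _m\phi _i'\phi _j'\,ds\) for the Lagrange basis at the three Gauss–Lobatto nodes, and contracts it with the nodal values of the coefficient. The names `reference_tensor` and `local_stiffness` are hypothetical.

```python
import numpy as np
from numpy.polynomial import Polynomial
from numpy.polynomial.legendre import leggauss

NODES = np.array([-1.0, 0.0, 1.0])  # 3-point Gauss-Lobatto nodes on [-1, 1]


def lagrange_basis(nodes):
    """Lagrange basis polynomials associated with the given nodes."""
    basis = []
    for i, xi in enumerate(nodes):
        p = Polynomial([1.0])
        for j, xj in enumerate(nodes):
            if j != i:
                p = p * Polynomial([-xj, 1.0]) / (xi - xj)
        basis.append(p)
    return basis


def reference_tensor():
    """T[i, j, m] = integral over [-1, 1] of phi_m * phi_i' * phi_j'.
    The integrand has degree 4, so a 4-point Gauss rule is exact."""
    phi = lagrange_basis(NODES)
    dphi = [p.deriv() for p in phi]
    s, w = leggauss(4)
    T = np.zeros((3, 3, 3))
    for i in range(3):
        for j in range(3):
            for m in range(3):
                T[i, j, m] = np.dot(w, phi[m](s) * dphi[i](s) * dphi[j](s))
    return T


def local_stiffness(T, a_values, h):
    """Local stiffness matrix for a_I = sum_m a_values[m] * phi_m on a cell
    of half-width h (d/dx = (1/h) d/ds and dx = h ds give the factor 1/h)."""
    return np.tensordot(T, a_values, axes=([2], [0])) / h


T = reference_tensor()
K = local_stiffness(T, np.ones(3), 1.0)
```

Since the tensor depends only on the reference element, it is computed once; each cell then costs one contraction with its nine (in two dimensions) or three (here) coefficient values.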

5.1 Accuracy

We consider the following example with either purely Dirichlet or purely Neumann boundary conditions:

where \(a(x,y)=1+0.1x^3y^5+\cos (x^3y^2+1)\) and \(u(x,y)=0.1(\sin (\pi x)+x^3)(\sin (\pi y)+y^3)+\cos (x^4+y^3)\). The nonhomogeneous boundary condition should be computed in a way consistent with the computation of integrals in the bilinear form. The errors at \(Z_0\) are shown in Tables 1 and 2. We can see that the four schemes are all fourth order in the discrete 2-norm on \(Z_0\). Even though we did not discuss the max norm error on \(Z_0\) in this paper, we should expect a \(|\ln h|\) factor in the order of \(l^\infty \) error over \(Z_0\) due to (1.9), which was proven using the discrete Green’s function.
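Orders of accuracy of this kind are typically read off from errors on successively refined meshes. A minimal helper for that computation is sketched below; the mesh sizes and errors in the usage lines are synthetic values generated from \(e=3h^4\) purely for illustration and are not the entries of Tables 1 and 2.

```python
import math


def observed_orders(mesh_sizes, errors):
    """Observed convergence order between consecutive refinements:
    log(e_i / e_{i+1}) / log(h_i / h_{i+1})."""
    return [
        math.log(errors[i] / errors[i + 1]) / math.log(mesh_sizes[i] / mesh_sizes[i + 1])
        for i in range(len(mesh_sizes) - 1)
    ]


# Synthetic data (assumption): errors that decay exactly like 3 * h**4.
hs = [0.2, 0.1, 0.05, 0.025]
synthetic_errors = [3.0 * h**4 for h in hs]
orders = observed_orders(hs, synthetic_errors)
```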

Next we consider an elliptic equation with purely Dirichlet or purely Neumann boundary conditions:

where \({\mathbf {a}}=\left( {\begin{array}{cc} a_{11} &{} a_{12} \\ a_{21} &{} a_{22} \\ \end{array} } \right) \), \(a_{11}=10+30y^5+x\cos {y}+y\), \(a_{12}=a_{21}=2+0.5(\sin (\pi x)+x^3)(\sin (\pi y)+y^3)+\cos (x^4+y^3)\), \(a_{22}=10+x^5\), \(c=1+x^4y^3\) and \(u(x,y)=0.1(\sin (\pi x)+x^3)(\sin (\pi y)+y^3)+\cos (x^4+y^3)\). The errors at \(Z_0\) are listed in Tables 3 and 4. Although only \({\mathcal {O}} (h^{3.5})\) can be proven for the Neumann boundary conditions due to the mixed second order derivatives, as discussed in Remark 6, we observe around fourth order accuracy for (1.4) and (1.5) with Neumann boundary conditions in this particular example.

5.2 Robustness

In Tables 1 and 2, the errors of approximated coefficient schemes (1.4), (1.5) and the Gauss–Lobatto quadrature scheme (1.7) are close to one another. We observe that the scheme (1.5) tends to be more accurate than (1.4) and (1.7) when the coefficient a(x, y) is closer to zero in the Poisson equation. See Table 5 for errors of solving \(\nabla \cdot (a\nabla u)=f\quad \text {on } [0,1]\times [0,2]\) with Dirichlet boundary conditions, \(a(x,y)=1+\varepsilon x^3y^5+\cos (x^3y^2+1)\) and \(u(x,y)=0.1(\sin (\pi x)+x^3)(\sin (\pi y)+y^3)+\cos (x^4+y^3)\) where \(\varepsilon =0.001\). Here the smallest value of a(x, y) is around \(\varepsilon =0.001\). We remark that the difference among three schemes is much smaller for larger \(\varepsilon \) such as \(\varepsilon =0.1\) as in Table 1.
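When the coefficient is close to zero, the standing assumption \(a_I(x,y)\ge C>0\) deserves a check on the mesh actually used. The sketch below samples the piecewise \(Q^2\) interpolant on each cell of a uniform mesh and returns the smallest sampled value. It is a heuristic only (a sampled minimum is not a proof of positivity), and the function names, the mesh of \(4\times 8\) cells and the number of sample points are assumptions made for illustration.

```python
import numpy as np


def q2_basis(t):
    """Quadratic Lagrange basis for the nodes -1, 0, 1 evaluated at t.
    Returns an array of shape (3, len(t))."""
    t = np.asarray(t, dtype=float)
    return np.stack([t * (t - 1) / 2, (1 - t) * (1 + t), t * (t + 1) / 2])


def min_of_q2_interpolant(a, xlim, ylim, nx, ny, samples=11):
    """Smallest sampled value of the piecewise Q^2 interpolant of a
    on a uniform mesh with nx-by-ny cells."""
    B = q2_basis(np.linspace(-1.0, 1.0, samples))
    x_edges = np.linspace(xlim[0], xlim[1], nx + 1)
    y_edges = np.linspace(ylim[0], ylim[1], ny + 1)
    lowest = np.inf
    for i in range(nx):
        x_nodes = np.array([x_edges[i], 0.5 * (x_edges[i] + x_edges[i + 1]), x_edges[i + 1]])
        for j in range(ny):
            y_nodes = np.array([y_edges[j], 0.5 * (y_edges[j] + y_edges[j + 1]), y_edges[j + 1]])
            nodal = a(x_nodes[:, None], y_nodes[None, :])  # 3x3 point values
            values = B.T @ nodal @ B  # interpolant on a samples-by-samples grid
            lowest = min(lowest, values.min())
    return lowest


def a_eps(x, y, eps=0.001):
    """Coefficient of the robustness test on [0, 1] x [0, 2]."""
    return 1 + eps * x**3 * y**5 + np.cos(x**3 * y**2 + 1)


coarse_min = min_of_q2_interpolant(a_eps, (0.0, 1.0), (0.0, 2.0), 4, 8)
print("sampled minimum of a_I on a 4 x 8 mesh:", coarse_min)
```

If the sampled minimum is not safely positive, refining the mesh and repeating the check is the natural remedy suggested by the interpolation error bound.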

6 Concluding Remarks

We have shown that the classical superconvergence of function values at Gauss–Lobatto points in \(C^0\)-\(Q^k\) finite element method for an elliptic problem still holds if the coefficients are replaced by their piecewise \(Q^k\) Lagrange interpolants at the Gauss–Lobatto points. Such a superconvergence result can be used for constructing a fourth order accurate finite difference type scheme by using \(Q^2\) approximated variable coefficients. Numerical tests suggest that this is an efficient and robust implementation of \(C^0\)-\(Q^2\) finite element method without affecting the superconvergence of function values.

References

Agmon, S.: Lectures on Elliptic Boundary Value Problems, vol. 369. American Mathematical Society, Providence (2010)

Bakker, M.: A note on \(C^0\) Galerkin methods for two-point boundary problems. Numer. Math. 38, 447–453 (1982)

Brezzi, F., Marini, L.: On the numerical solution of plate bending problems by hybrid methods, Revue française d’automatique, informatique, recherche opérationnelle. Anal. Numér. 9, 5–50 (1975)

Chen, C.: Superconvergent points of Galerkin’s method for two point boundary value problems. Numer. Math. A J. Chin. Univ. 1, 73–79 (1979)

Chen, C.: Superconvergence of finite element solutions and their derivatives. Numer. Math. A J. Chin. Univ. 3, 118–125 (1981)

Chen, C.: Structure Theory of Superconvergence of Finite Elements. Hunan Science and Technology Press, Changsha (2001). (in Chinese)

Chen, C., Hu, S.: The highest order superconvergence for bi-\(k\) degree rectangular elements at nodes: a proof of 2\(k\)-conjecture. Math. Comput. 82, 1337–1355 (2013)

Ciarlet, P.G.: Basic error estimates for elliptic problems. Handb. Numer. Anal. 2, 17–351 (1991)

Ciarlet, P.G.: The Finite Element Method for Elliptic Problems. Society for Industrial and Applied Mathematics, Philadelphia (2002)

Ciarlet, P.G., Raviart, P.-A.: The combined effect of curved boundaries and numerical integration in isoparametric finite element methods. In: Aziz, A.K. (ed.) The Mathematical Foundations of the Finite Element Method with Applications to Partial Differential Equations, pp. 409–474. Elsevier, Amsterdam (1972)

Douglas, J.: Some superconvergence results for Galerkin methods for the approximate solution of two-point boundary problems. In: Topics in Numerical Analysis, pp. 89–92 (1973)

Douglas, J., Dupont, T.: Galerkin approximations for the two point boundary problem using continuous, piecewise polynomial spaces. Numer. Math. 22, 99–109 (1974)

Douglas Jr., J., Dupont, T., Wheeler, M.F.: An \( l^{\infty }\) estimate and a superconvergence result for a Galerkin method for elliptic equations based on tensor products of piecewise polynomials. ESAIM Math. Model. Numer. Anal-Modélisation Mathématique et Analyse Numérique 8, 61–66 (1974)

Grisvard, P.: Elliptic Problems in Nonsmooth Domains, vol. 69. SIAM, Philadelphia (2011)

He, W., Zhang, Z.: \(2k\) superconvergence of \(Q_k\) finite elements by anisotropic mesh approximation in weighted Sobolev spaces. Math. Comput. 86, 1693–1718 (2017)

He, W., Zhang, Z., Zou, Q.: Ultraconvergence of high order FEMS for elliptic problems with variable coefficients. Numer. Math. 136, 215–248 (2017)

Lesaint, P., Zlamal, M.: Superconvergence of the gradient of finite element solutions. RAIRO Anal. Numér. 13, 139–166 (1979)

Li, H., Zhang, X.: Superconvergence of high order finite difference schemes based on variational formulation for elliptic equations, arXiv preprint arXiv:1904.01179 (2019)

Lin, Q., Yan, N.: Construction and Analysis for Efficient Finite Element Method. Hebei University Press, Baoding (1996). (in Chinese)

Lin, Q., Yan, N., Zhou, A.: A rectangle test for interpolated finite elements. In: Proceedings of the Systems Science and Systems Engineering (Hong Kong), Great Wall Culture Publication Co, pp. 217–229 (1991)

Smith, K.: Inequalities for formally positive integro-differential forms. Bull. Am. Math. Soci. 67, 368–370 (1961)

Wahlbin, L.: Superconvergence in Galerkin Finite Element Methods. Springer, Berlin (2006)

Acknowledgements

Research is supported by the NSF Grant DMS-1522593. The authors are grateful to Prof. Johnny Guzmán for discussions on Theorem 4.1.



Cite this article

Li, H., Zhang, X. Superconvergence of \(C^0\)-\(Q^k\) Finite Element Method for Elliptic Equations with Approximated Coefficients. J Sci Comput 82, 1 (2020). https://doi.org/10.1007/s10915-019-01102-1


DOI: https://doi.org/10.1007/s10915-019-01102-1

Keywords

- Superconvergence

- Fourth order finite difference

- Elliptic equations

- Gauss–Lobatto points

- Approximated coefficients