Abstract

Recently, the method called tensor completion by parallel matrix factorization via tensor train (TMac-TT) has achieved promising performance on estimating the missing information. TMac-TT, which borrows \(ket \ augmentation\) to transform a lower-order tensor into a higher-order tensor, suffers from serious block-artifacts. To tackle this issue, we build an optimization model combining low-rank matrix factorization based on tensor train (TT) rank and the total variation to retain the strength of TT rank and alleviate block-artifacts. We develop a block successive upper-bound minimization algorithm to solve the proposed model. Under some mild conditions, we theoretically prove that the proposed algorithm converges to the coordinatewise minimizers. Extensive numerical experiments illustrate the superiority of the proposed method over several existing state-of-the-art methods qualitatively and quantitatively.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Tensors, treated as high-dimensional generalizations of matrices and vectors, are powerful to express more complex interactions related to higher-order data. Recent years, the tensor analysis plays an important role in a wide range of real-world applications [3, 10,11,12, 14, 34]. Among them, one important problem is the tensor completion problem, which aims to estimate the missing values from the observed tensor data, e.g., color image or video inpainting [2, 4, 8, 19], hyperspectral images recovery [5, 15, 21], magnetic resonance image (MRI) recovery [6, 32], and higher-order web link analysis [18].

The low-rank constraint has become a powerful tool to recover the higher-order tensor with missing entries. Mathematically, the low-rank tensor completion (LRTC) problem can be formulated as

where \(\mathcal {M}\in \mathbb {R}^{n_{1}\times \cdots \times n_{j}}\) is the underlying tensor, \(\mathcal {T}\in \mathbb {R}^{n_{1}\times \cdots \times n_{j}}\) is the observed tensor, \(\Omega \) is the index of observed entries, and \(\mathcal {P}_{\Omega }(\cdot )\) is the projection operator that keeps entries in \(\Omega \) and zeros out others. However, the definition for the rank of tensors is not unique, such as CANDECOMP/PARAFAC rank and Tucker rank [17]. Both of the corresponding minimization problems are generally NP-hard [9, 26]. To tackle this problem, Liu et al. [22] firstly defined the nuclear norm of a tensor based on the mode-k unfolding [17]

where \(\mathbf M _{(k)}\in \mathbb {R}^{n_{k}\times \prod _{d\ne k} n_{d}}\) is the mode-k unfolding of \(\mathcal {M}\) and \(\alpha _{k}s\) are positive constants satisfying \(\sum _{k=1}^{j}\alpha _{k}=1\). The nuclear norm minimization methods for solving problem (2) have to calculate the singular value decomposition (SVD) for all matrices in every iteration, which suffer from high computational cost. To improve the capacity of solving large-scale problems, Xu et al. [35] performed the low-rank matrix factorization to all-mode unfolded matrices, i.e., factorize each mode matricization \(\mathbf M _{(k)}\) into the product of two smaller matrices \(\mathbf X _{k}\) and \(\mathbf Y _{k}\), and proposed the following model:

where \(\mathbf X _{k}\in \mathbb {R}^{n_{k}\times r_{k}}\), \(\mathbf Y _{k}\in \mathbb {R}^{r_{k}\times \prod _{d\ne k} n_{d}}\), and \(r_{k}\) is the estimated rank of the matrix \(\mathbf M _{(k)}\).

As the matrix \(\mathbf M _{(k)}\) is constructed based on an unbalanced matricization scheme (one mode versus the rest) [7, 22], the \(rank(\mathbf M _{(k)})\) only captures the correlation between a simple mode (not a few modes) and rest modes of the tensor. Therefore, the existing methods based on Tucker rank maybe not suitable for completing higher-order tensors (\(j>3\)) [16, 28].

Recently, the tensor train (TT) rank, which considers the global correlation of tensors thanks to a well-balanced matricization scheme, is proposed [27, 29] as

where \(\mathbf M _{[k]}\in \mathbb {R}^{(\prod _{d=1}^{k}n_{d}) \times (\prod _{d=k+1}^{j}n_{d})}\) is the mode-k canonical matricization of the tensor \(\mathcal {M}\). Bengua et al. [1] applied TT rank to the color image and video completion. They proposed two methods based on TT rank for LRTC. The first one, simple low-rank tensor completion via tensor train (SiLRTC-TT), minimizes the TT nuclear norm, i.e.,

where \(\alpha _{k}\) is the constant satisfying \(\alpha _{k} \ge 0\) and \(\sum _{k=1}^{j-1}\alpha _{k}=1\). Another one, tensor completion by parallel matrix factorization via tensor train (TMac-TT), uses matrix factorization to approximate the TT rank of a tensor, i.e.,

where \(\mathbf X _{k}\in \mathbb {R}^{(\prod _{d=1}^{k}n_{d})\times r_{k}}\), \(\mathbf Y _{k}\in \mathbb {R}^{r_{k}\times (\prod _{d=k+1}^{j}n_{d})}\), and \(r_{k}\) is the rank of the matrix \(\mathbf M _{[k]}\). TT rank is more suitable for higher-order tensors due to the ability of capturing the global correlation of a tensor. In order to handle the third-order tensor data, SiLRTC-TT and TMac-TT use a tensor augmented scheme known as \(ket \ augmentation\) (KA), but they cause block-artifacts [24] on restored images.

KA uses an appropriate block structured addressing scheme to cast a lower-order tensor into a higher-order tensor. However, this scheme does not consider the local smoothness between blocks and blocks, so the block-artifacts are caused on restored images. The block-artifacts can be seen in the results for completion of the Lena image with \(90\%\) missing entries in Fig. 1b, c. To reduce the block-artifacts, we need to smooth the edges between each block. Total variation (TV), one of the most famous functions to characterize the piecewise smoothness prior, has been shown to preserve edges well in image processing [13, 25, 31, 33, 36, 37]. Motivated by former works, we introduce TV into LRTC (6),

where \(\alpha _{k}\) satisfies \(\alpha _{k} \ge 0\) and \(\sum _{k=1}^{j-1}\alpha _{k}=1\), \(\lambda \) is a regularization parameter, and \(\text {TV}(\mathcal {M})\) is the total variation of \(\mathcal {M}\) in spatial dimensions (see details in Sect. 3.1). The proposed model is named tensor completion via matrix factorization based on tensor train rank and total variation (MF-TTTV). From Fig. 1, it is clear that our method can effectively alleviate block-artifacts compared with SiLRTC-TT and TMac-TT.

The contributions of this paper are mainly two folds: (1) we propose a new tensor completion model combining low-rank matrix factorization based on TT rank with the TV regularization, which simultaneously exploit the low-rankness and the piecewise smoothness prior of the underlying tensor; (2) we develop a block successive upper-bound minimization (BSUM) algorithm to solve the proposed model. Experiments demonstrate that our method performs better than the compared methods and effectively reduces the block-artifacts caused by using KA.

The outline of this paper is as follows. Section 2 reviews some preliminary knowledge about the tensor, the tensor train rank, the proximal operator, and KA. Section 3 describes the model formulation and an efficient BSUM-based algorithm with convergence analysis. Section 4 demonstrates the effectiveness of the proposed method based on abundant numerical experiments. Section 5 summarizes this paper.

2 Preliminary

2.1 Tensor Basics

A tensor is a high-dimensional array and its order (or mode) is the number of its dimensions. We denote scalars as lowercase letters, e.g., z, vectors as boldface lowercase letters, e.g., z, matrices as capital letters, e.g., \(\mathbf Z \), and tensors as calligraphic letters, e.g., \(\mathcal {Z}\). A jth-order tensor is defined as \(\mathcal {Z}\in \mathbb {R}^{n_{1}\times \cdots \times n_{j}}\) whose \((i_{1},\ldots ,i_{j})\)-th component is denoted as \(z_{i_{1},\ldots ,i_{j}}\).

The inner product of two tensors \(\mathcal {X}\) and \(\mathcal {Y}\) with same size is defined as

The Frobenius norm of a jth-order tensor \(\mathcal {Z}\) is \(\Vert \mathcal {Z}\Vert _{F} = \sqrt{\langle \mathcal {Z}, \mathcal {Z}\rangle }\).

The mode-k unfolding of a tensor \(\mathcal {Z}\) is denoted as \(\mathbf Z _{(k)}\in \mathbb {R}^{n_{k}\times \prod _{d\ne k} n_{d}}\), where the tensor element \((i_{1},\ldots ,i_{j})\) maps to the element \((i_{k}, b)\) of matrix \(\mathbf Z _{(k)}\) satisfying

We denote the mode-k unfolding of a tensor \(\mathcal {Z}\) as \(\mathbf Z _{(k)}=\text {unfold}_{(k)}(\mathcal {Z})\). The inverse operator of unfolding is denoted as “fold”, i.e., \(\mathcal {Z} = \text {fold}_{(k)} (\mathbf Z _{(k)})\).

The mode-k canonical matricization of a tensor \(\mathcal {Z}\) is defined as \(\mathbf Z _{[k]}\in \mathbb {R}^{(\prod _{d=1}^{k}n_{d}) \times (\prod _{d=k+1}^{j}n_{d})}\), where the tensor element \((i_{1},\ldots ,i_{j})\) maps to the element (a, b) of matrix \(\mathbf Z _{[k]}\) satisfying

In MATLAB, it can be implemented by the reshape function

We denote the mode-k canonical matricization of a tensor \(\mathcal {Z}\) as \(\mathbf Z _{[k]}=\text {reshape}_{[k]}(\mathcal {Z})\). The inverse operator of reshape is denoted as “unreshape”, i.e., \(\mathcal {Z}=\text {unreshape}_{[k]}(\mathbf Z _{[k]})\).

The TT rank is defined as the vector

The tensor \(\mathcal {Z}\) is low-rank, if \(\mathbf Z _{[k]}\) is low-rank for all k .

The notations are listed in Table 1.

2.2 Operators

Let \(\Omega \) be an index set, then the projection operator \(\mathcal {P}_{\Omega }(\mathcal {Z})\) denotes the tensor copying the entries from \(\mathcal {Z}\) in the set \(\Omega \) and letting the remaining entries be zeros, i.e.,

The proximal operator of a given convex function f(x) is defined as

where \(\rho \) is a positive proximal constant. The problem \(\min _{x}\{{f(x)}\}\) is equivalent to \(\min _{x,y}\{{f(x)}+\frac{\rho }{2}\Vert x-y\Vert ^{2}\}\). Thus one can obtain the minimization of f(x) by iteratively solving \(\text {prox}_{f}(x^{l})\), where \(x^{l}\) is the latest update of x. The proximal operator is very attractive in that the objective function (10) is strongly convex with respect to x so long as f(x) is convex.

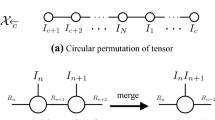

2.3 Ket Augmentation

In this subsection, we introduce ket augmentation (KA) to represent a lower-order tensor by a higher-order one without changing the number of entries in the tensor. The TT decomposition can effectively exploit the local structure of the data by considering the low-rankness of the augmented tensor. Even though the tensor is slightly correlated, its augmented tensor has low TT rank [20].

KA is firstly introduced in [20] to cast a grayscale image into \(real \ ket \ state\) of a Hilbert space by using an appropriate block structured addressing. In [1], the authors successfully extended KA to third-order tensors. Next, we introduce the details about how KA works on the color image. We define the third-order tensor \(\mathcal {M}\in \mathbb {R}^{n_{1}\times n_{2}\times n_{3}}\) that represents the color image, where \(n_{1}\times n_{2} = 2^{n}\times 2^{n}\) is the number of pixels in the image and \(n_{3}=3\) is the number of colors. Let us start with an initial block, labeled as \(i_{1}\) and containing \(2 \times 2\) pixels corresponding to a single color j (\(j = 1, 2, 3\) denotes red, green and blue colors, respectively). This block can be represented as

where \(c_{i_{1}j}\) is the pixel value corresponding to color j and \(\mathbf e _{i_{1}}\) is the orthonormal base \(\mathbf e _{1} = (1, 0, 0, 0)\), \(\mathbf e _{2} = (0, 1, 0, 0)\), \(\mathbf e _{3} = (0, 0, 1, 0)\), and \(\mathbf e _{4} = (0, 0, 0, 1)\). For all three color channels, the three blocks is represented as

where \(\mathbf u _{j}\) is the orthonormal base \(\mathbf u _{1} = (1, 0, 0)\), \(\mathbf u _{2} = (0, 1, 0)\), \(\mathbf u _{3} = (0, 0, 1)\), and \(\otimes \) denotes a tensor product [17]. Let us consider a larger block (labeled as \(i_{2}\)) which consists of four inner sub-blocks for each color j as shown in Fig. 2b. So we have the new block

The color image can be cast into an \((n+1)\)th-order tensor as follows:

Based on the KA method, TT-based methods can effectively exploit the local structure information of visual data and have a better low-rank representation. After the augmented data is processed by the completion method, the reverse operation of KA is performed to obtain the original image form.

3 The Proposed Model and Algorithm

3.1 The Proposed Model

The objective function of our model is:

where \(\mathbf X = (\mathbf X _{1},\ldots ,\mathbf X _{j-1})\), \(\mathbf Y = (\mathbf Y _{1},\ldots ,\mathbf Y _{j-1})\), and \(\lambda \) is the regularization parameter. In the proposed model, the first term is the fidelity term, the second term is the regularization term.

In the fidelity term, matrices \(\mathbf M _{[k]}\), obtained by a well-balanced matricization scheme, capture the global information of the underlying tensor \(\mathcal {M}\). This fidelity term enhances the low-rankness of the underlying tensor \(\mathcal {M}\).

In the regularization term, \(\text {TV}(\mathcal {M})\) denotes the isotropic TV in spatial dimensions. This term aims at enhancing the piecewise smoothness in spatial dimensions. According to the rules of unfolding a tensor (8), we find that the \(\text {mode}\)-1 unfolding matrix \(\mathbf M _{(1)}\) has a good structure:

where \(\hat{s}=\prod _{k=3}^{j}n_{k}\), \(s=n_{2}\times \hat{s}\), and \(\mathbf M ^{(i)}\in \mathbb {R}^{n_{1}\times n_{2}}\) is a matrix in spatial dimensions of the tensor \(\mathcal {M}\).

In our works, the isotropic TV is defined as follows:

where \(D_{m,n}^{1}{} \mathbf M ^{(i)}\) and \(D_{m,n}^{2}{} \mathbf M ^{(i)}\) are the horizontal and vertical gradient values at the \((\text {m},~ \text {n})\)-th pixel of matrix \(\mathbf M ^{(i)}\), respectively, and \(D_{m,n}^{1}\) and \(D_{m,n}^{2}\) are the discrete horizontal and vertical gradient operators, respectively.

3.2 The Proposed Algorithm

It is clear that the objective function (12) is not jointly convex for \((\mathbf X ,\mathbf Y ,\mathcal {M})\), but is convex with respect to \(\mathbf X \), \(\mathbf Y \), and \(\mathcal {M}\) independently. In order to solve the nonconvex problem effectively, we develop the BSUM-based algorithm.

Let \(\mathcal {Z}=(\mathbf X ,\mathbf Y ,\mathcal {M})\) and \(\mathcal {Z}^{l}=(\mathbf X ^{l},\mathbf Y ^{l},\mathcal {M}^{l})\), by utilizing the proximal operator, (7) can be updated through the following:

where \(\rho \) is the positive proximal parameter. Note that (15) can be written as

where \(\mathcal {Z}^{l}_{1}=(\mathbf X ^{l},\mathbf Y ^{l},\mathcal {M}^{l})\), \(\mathcal {Z}^{l}_{2}=(\mathbf X ^{l+1},\mathbf Y ^{l},\mathcal {M}^{l})\), \(\mathcal {Z}^{l}_{3}=(\mathbf X ^{l+1},\mathbf Y ^{l+1},\mathcal {M}^{l})\). It is easy to see that the \(\mathbf X \)- and \(\mathbf Y \)-subproblem can be decomposed into \(j-1\) independent problems. Then, the (16) can be written as

where \(\mathbf I \in \mathbb {R}^{r_{k}\times r_{k}}\) is an identify matrix.

The cost of computing \(\mathbf X _{k}\) is \(O(r_{k}\prod _{d=1}^{j}n_{d})\) and the cost of computing \(\mathbf Y _{k}\) is \(O(r_{k}\prod _{d=1}^{j}n_{d})\).

Since the \(\mathcal {M}\)-subproblem does not admit a close-form solution, we solve the \(\mathcal {M}\)-subproblem using the alternating direction method of multipliers (ADMM). By introducing auxiliary variables, we rewrite it as the following equivalent constrained problem:

where \(\mathbf E _{m,n}=[(\mathbf E _{1})_{m,n}, (\mathbf E _{2})_{m,n}]\in \mathbb {R}^{1 \times 2}\), \((\mathbf E _{1})_{m,n}\) and \((\mathbf E _{2})_{m,n}\) are the \(\text {(m,n)-th}\) entries of \(\mathbf E _{1}\) and \(\mathbf E _{2}\), respectively, and \(\mathbf D _{1}\) and \(\mathbf D _{2}\) are the first-order difference matrices in the horizontal and vertical directions based on \(D_{m,n}^{1}\) and \(D_{m,n}^{2}\), respectively.

The augmented Lagrangian function of (18) is defined as

where \(\mathcal {Q}\), \(\{\mathcal {B}_{k}\}_{k=1}^{j-1}\), and \(\{\mathbf{F }_{i}\}_{i=1}^{2}\) are Lagrangian multipliers of the linear constraint and \(\beta _{1}, \beta _{2}, \beta _{3}>0\) are penalty parameters. The iterative scheme for solving (18) is as follows:

We give the details for solving the first four subproblems in (20).

-

1.

\(\mathcal {M}\)-subproblem We fix all variables but \(\mathcal {M}\), the optimal \(\mathcal {M}\) is obtained as

$$\begin{aligned} \begin{aligned} \mathcal {M}^{l+1,p+1}&= \mathop {\mathrm{arg}\,\mathrm{min}}_{ \mathcal {P}_{\Omega }(\mathcal {M}) = \mathcal {T} } \sum _{k=1}^{j-1}\left( \frac{\beta _{1}}{2}\Vert \mathcal {M}-\mathcal {A}_{k}^{p}\Vert _{F}^{2}+\langle \mathcal {M}-\mathcal {A}_{k}^{p}, \mathcal {B}_{k}^{p}\rangle \right) \\&\quad +\,\frac{\beta _{2}}{2}\Vert \mathcal {M}-\mathcal {Z}^{p}\Vert _{F}^{2} + \langle \mathcal {M}-\mathcal {Z}^{p}, \mathcal {Q}^{p}\rangle +\frac{\rho }{2}\Vert \mathcal {M}-\mathcal {M}^{l}\Vert _{F}^{2}\\&=\mathop {\mathrm{arg}\,\mathrm{min}}_{ \mathcal {P}_{\Omega }(\mathcal {M}) = \mathcal {T} } \sum _{k=1}^{j-1}\frac{\beta _{1}}{2}\left\| \mathcal {M}-\mathcal {A}_{k}^{p}+\frac{\mathcal {B}_{k}^{p}}{\beta _{1}}\right\| _{F}^{2}+ \frac{\beta _{2}}{2}\left\| \mathcal {M}-\mathcal {Z}^{p}+\frac{\mathcal {Q}^{p}}{\beta _{2}}\right\| _{F}^{2}\\&\quad +\,\frac{\rho }{2}\Vert \mathcal {M}-\mathcal {M}^{l}\Vert _{F}^{2}. \end{aligned} \end{aligned}$$(21)The function is quadratic in terms of \(\mathcal {M}\). The optimal \(\mathcal {M}\) is

$$\begin{aligned} \mathcal {M}^{l+1,p+1} = \mathcal {P}_{\Omega ^{c}} \left( \frac{\sum _{k=1}^{j-1}(\beta _{1}\mathcal {A}_{k}^{p}-\mathcal {B}_{k}^{p})+\beta _{2}\mathcal {Z}^{p}-\mathcal {Q}^{p} +\rho \mathcal {M}^{l}}{(j-1)\beta _{1}+\beta _{2}+\rho } \right) +\mathcal {T}. \end{aligned}$$(22) -

2.

\(\{\mathcal {A}_{k}\}\)-subproblem We give the optimal \(\mathcal {A}_{k}\) by

$$\begin{aligned} \begin{aligned} \mathcal {A}_{k}^{p+1}&=\mathop {\mathrm{arg}\,\mathrm{min}}_{\mathcal {A}_{k}} \frac{\alpha _{k}}{2}\left\| \mathbf X _{k}^{l+1}{} \mathbf Y _{k}^{l+1}-\mathcal {A}_{k[k]}\right\| _{F}^{2}\\&\quad +\,\frac{\beta _{1}}{2}\Vert \mathcal {M}^{l+1,p+1}-\mathcal {A}_{k}\Vert _{F}^{2}+\langle \mathcal {M}^{l+1,p+1}-\mathcal {A}_{k}, \mathcal {B}_{k}^{p}\rangle \\&= \mathop {\mathrm{arg}\,\mathrm{min}}_{\mathcal {A}_{k}} \frac{\alpha _{k}}{2}\left\| \mathbf X _{k}^{l+1}{} \mathbf Y _{k}^{l+1}-\mathcal {A}_{k[k]}\right\| _{F}^{2}+\frac{\beta _{1}}{2}\left\| \mathcal {M}^{l+1,p+1}-\mathcal {A}_{k}+\frac{\mathcal {B}_{k}^{p}}{\beta _{1}}\right\| _{F}^{2}. \end{aligned} \end{aligned}$$(23)Utilizing the equation \(\Vert \mathbf M _{[k]}\Vert _{F} = \Vert \mathcal {M}\Vert _{F}\) and the definition of \(\text {unreshape}_{[k]}(\cdot )\) in Sect. 2.1, we rewrite the optimization problem (23) as the following problem:

$$\begin{aligned} \mathcal {A}_{k}^{p+1}= \mathop {\mathrm{arg}\,\mathrm{min}}_{\mathcal {A}_{k}} \frac{\alpha _{k}}{2}\left\| \text {unreshape}_{[k]}(\mathbf X _{k}^{l+1}{} \mathbf Y _{k}^{l+1}){-}\mathcal {A}_{k}\right\| _{F}^{2}+\frac{\beta _{1}}{2}\left\| \mathcal {M}^{l+1,p+1}{-}\mathcal {A}_{k}+\frac{\mathcal {B}_{k}^{p}}{\beta _{1}}\right\| _{F}^{2}.\nonumber \\ \end{aligned}$$(24)The function is quadratic in terms of \(\mathcal {A}_{k}\). The optimal \(\mathcal {A}_{k}\) is

$$\begin{aligned} \mathcal {A}_{k}^{p+1} = \frac{\alpha _{k}\text {unreshape}_{[k]}(\mathbf X _{k}^{l+1}{} \mathbf Y _{k}^{l+1})+\beta _{1}\mathcal {M}^{l+1,p+1}+\mathcal {B}_{k}^{p}}{\alpha _{k}+\beta _{1}}. \end{aligned}$$(25) -

3.

\(\mathcal {Z}\)-subproblem The \(\mathcal {Z}\)-subproblem is

$$\begin{aligned} \begin{aligned} \mathcal {Z}^{p+1}&=\mathop {\mathrm{arg}\,\mathrm{min}}_{\mathcal {Z}} \frac{\beta _{2}}{2}\left\| \mathcal {M}^{l+1,p+1}{-}\mathcal {Z}\right\| _{F}^{2}{+}\langle \mathcal {M}^{l+1,p+1}-\mathcal {Z}, \mathcal {Q}^{p}\rangle {+}\frac{\beta _{3}}{2}\left\| \mathbf{D }_{1}\mathbf{Z }_{(1)}-\mathbf{E }_{1}^{p}\right\| _{F}^{2}\\&\quad +\,\langle \mathbf{D }_{1}\mathbf{Z }_{(1)}-\mathbf{E }_{1}^{p}, \mathbf{F }_{1}^{p} \rangle +\frac{\beta _{3}}{2}\left\| \mathbf{D }_{2}\mathbf{Z }_{(1)}-\mathbf{E }_{2}^{p}\right\| _{F}^{2}+\langle \mathbf{D }_{2}\mathbf{Z }_{(1)}-\mathbf{E }_{2}^{p}, \mathbf{F }_{2}^{p} \rangle \\&=\mathop {\mathrm{arg}\,\mathrm{min}}_{\mathcal {Z}} \frac{\beta _{2}}{2}\left\| \mathcal {M}^{l+1,p+1}-\mathcal {Z}+\frac{\mathcal {Q}^{p}}{\beta _{2}}\right\| _{F}^{2} +\frac{\beta _{3}}{2}\left\| \mathbf{D }_{1}\mathbf{Z }_{(1)}-\mathbf{E }_{1}^{p}+\frac{\mathbf{F }_{1}^{p}}{\beta _{3}}\right\| _{F}^{2}\\&\quad +\,\frac{\beta _{3}}{2}\Vert \mathbf{D }_{2}\mathbf{Z }_{(1)}-\mathbf{E }_{2}^{p}+\frac{\mathbf{F }_{2}^{p}}{\beta _{3}}\Vert _{F}^{2}. \end{aligned} \end{aligned}$$(26)Using the equation \(\Vert \mathbf{Z }_{(1)}\Vert _{F} = \Vert \mathcal {Z}\Vert _{F}\), we solve its equivalent problem as

$$\begin{aligned} \begin{aligned} \mathbf{Z }^{p+1}_{(1)}&=\mathop {\mathrm{arg}\,\mathrm{min}}_{\mathbf{Z }_{(1)}} \frac{\beta _{2}}{2}\left\| \mathbf{M }^{l+1,p+1}_{(1)}-\mathbf{Z }_{(1)}+\frac{\mathbf{Q }^{p}_{(1)}}{\beta _{2}}\right\| _{F}^{2} +\frac{\beta _{3}}{2}\left\| \mathbf{D }_{1}\mathbf{Z }_{(1)}-\mathbf{E }_{1}^{p}+\frac{\mathbf{F }_{1}^{p}}{\beta _{3}}\right\| _{F}^{2}\\&\quad +\,\frac{\beta _{3}}{2}\left\| \mathbf{D }_{2}\mathbf{Z }_{(1)}-\mathbf{E }_{2}^{p}+\frac{\mathbf{F }_{2}^{p}}{\beta _{3}}\right\| _{F}^{2}. \end{aligned} \end{aligned}$$(27)Optimizing this problem can be treated as solving the following linear system:

$$\begin{aligned} \mathbf A \mathbf{Z }_{(1)}=\mathbf{B }, \end{aligned}$$(28)where \(\mathbf A = \beta _{2}+\beta _{3}{} \mathbf D _{1}^{T}{} \mathbf D _{1} + \beta _{3}{} \mathbf D _{2}^{T}{} \mathbf D _{2}\) and \(B = \beta _{2}{} \mathbf M _{(1)}^{l+1,p+1}+\mathbf Q _{(1)}^{p}+\beta _{3}{} \mathbf D _{1}^{T}{} \mathbf E _{1}^{p}-\mathbf D _{1}^{T}{} \mathbf F _{1}^{p}+\beta _{3}{} \mathbf D _{2}^{T}{} \mathbf E _{2}^{p} -\mathbf D _{2}^{T}{} \mathbf F _{2}^{p}\).

Assuming the periodic boundary condition, \(\mathbf D _{1}\) and \(\mathbf D _{2}\) have block circulant with circulating block (BCCB) structure. After applying 2D FFTs, the optimal \(\mathcal {Z}\) is formed directly

$$\begin{aligned} \mathcal {Z} = \text {fold}_{(1)}\Bigg (\mathcal {F}^{-1}\Bigg (\frac{\mathcal {F}(\mathbf B )}{\mathcal {F}(\mathbf A )}\Bigg ) \Bigg ). \end{aligned}$$(29) -

4.

\(\mathbf E \)-subproblem For the \(\mathbf E \)-subproblem, we solve the following optimization problem:

$$\begin{aligned} \begin{aligned} \mathbf{E }^{p+1}&=\mathop {\mathrm{arg}\,\mathrm{min}}_{\mathbf{E }} \lambda \sum _{m=1}^{n_{1}}\sum _{n=1}^{s}\left\| \mathbf{E }_{m,n}\right\| _{2} +\frac{\beta _{3}}{2}\left\| \mathbf{D }_{1}{} \mathbf Z _{(1)}^{p+1}-\mathbf{E }_{1}\right\| _{F}^{2}+\langle \mathbf{D }_{1}{} \mathbf Z _{(1)}^{p+1}-\mathbf{E }_{1}, \mathbf{F }_{1}^{p} \rangle \\&\quad +\,\frac{\beta _{3}}{2}\left\| \mathbf{D }_{2}{} \mathbf Z _{(1)}^{p+1}-\mathbf{E }_{2}\right\| _{F}^{2}+\langle \mathbf{D }_{2}{} \mathbf Z _{(1)}^{p+1}-\mathbf{E }_{2}, \mathbf{F }_{2}^{p} \rangle \\&=\mathop {\mathrm{arg}\,\mathrm{min}}_{E} \lambda \sum _{m=1}^{n_{1}}\sum _{n=1}^{s}\left\| \mathbf{E }_{m,n}\right\| _{2} +\frac{\beta _{3}}{2}\left\| \mathbf{D }_{1}{} \mathbf Z _{(1)}^{p+1}-\mathbf{E }_{1}+\frac{\mathbf{F }_{1}^{p}}{\beta _{3}}\right\| _{F}^{2}\\&\quad +\,\frac{\beta _{3}}{2}\left\| \mathbf{D }_{2}{} \mathbf Z _{(1)}^{p+1}-\mathbf{E }_{2}+\frac{\mathbf{F }_{2}^{p}}{\beta _{3}}\right\| _{F}^{2}. \end{aligned} \end{aligned}$$(30)Given fixed \(\mathbf Z _{(1)}\) and \(\mathbf F _{i}\), the optimal \(\mathbf E \) consists of solving \(n_{1}s\) independent two-variable minimization problems

$$\begin{aligned} \begin{aligned} \mathop {\mathrm{arg}\,\mathrm{min}}_{(\mathbf{E }_{1},\mathbf{E }_{2})_{m,n}}&\quad \lambda \sqrt{\left[ (\mathbf{E }_{1})_{m,n}\right] ^{2}{+}\left[ (\mathbf{E }_{2})_{m,n}\right] ^{2}} {+}\frac{\beta _{3}}{2}\left[ (\mathbf{E }_{1})_{m,n} - (\mathbf{D }_{1}{} \mathbf Z _{(1)}^{p+1})_{m,n}-\frac{1}{\beta _{3}}(\mathbf{F }_{1}^{p})_{m,n}\right] ^{2}\\&+\frac{\beta _{3}}{2}\left[ (\mathbf{E }_{2})_{m,n} - (\mathbf{D }_{2}{} \mathbf Z _{(1)}^{p+1})_{m,n}-\frac{1}{\beta _{3}}(\mathbf{F }_{2}^{p})_{m,n}\right] ^{2}. \end{aligned} \end{aligned}$$(31)And (31) can be solved by using the 2-D shrinkage formula as follows:

$$\begin{aligned} {[}(\mathbf{E }_{1})_{m,n},(\mathbf{E }_{2})_{m,n}]=\text {max}\left\{ \left\| \mathbf{W }_{m,n}\right\| _{2}-\frac{\lambda }{\beta _{3}},0\right\} \frac{\mathbf{W }_{m,n}}{\left\| \mathbf{W }_{m,n}\right\| _{2}}, 1\le m\le n_{1}, 1\le n\le s, \end{aligned}$$(32)where \(\mathbf W _{m,n}=[(\mathbf D _{1}{} \mathbf Z _{(1)}^{p+1})_{m,n}+\frac{1}{\beta _{3}}(\mathbf F _{1}^{p})_{m,n}, (D_{2}{} \mathbf Z _{(1)}^{p+1})_{m,n}+\frac{1}{\beta _{3}}(\mathbf F _{2}^{p})_{m,n}]\) for \(1\le m\le n_{1}, 1\le n\le s\), and set \(0\cdot (0/0)=0\).

Now we discuss the computational complexity of the \(\mathcal {M}\) problem. The \(\mathcal {A}_{k}\)-subproblem (25) involves the product of \(\mathbf X _{k}\) and \(\mathbf Y _{k}\) with sizes \( (\prod _{d=1}^{k}n_{d})\times r_{k}\) and \( r_{k}\times (\prod _{d=k+1}^{j}n_{d})\), whose complexity is \(O(r_{k}\prod _{d=1}^{j}n_{d})\). The main computation of the \(\mathcal {Z}_{k}\)-subproblem (29) is the fast Fourier transforms on the matrix with size \(\prod _{d=1}^{j}n_{d}\), whose complexity is \(O(\prod _{d=1}^{j}n_{d} \log \prod _{d=1}^{j}n_{d})\). The complexity of \([\mathbf E _{1},\mathbf E _{2}]\) (32) is \(O(\prod _{d=1}^{j}n_{d})\). The computational task of solving the \(\mathcal {M}\) problem involves a few inner iterations and its complexity is \(O(\sum _{k=1}^{j-1}r_{k}\prod _{d=1}^{j}n_{d} + \prod _{d=1}^{j}n_{d} \log \prod _{d=1}^{j}n_{d})\).

We summarize the proposed algorithm in Algorithm 1. In each iteration, the total cost of computing \(\mathbf X _{k}\), \(\mathbf Y _{k}\), and \(\mathcal {M}\) in Algorithm 1 is

3.3 Convergence Analysis

In the subsection, we study the convergence of the proposed algorithm. Recently, Razaviyayn et al. [30] proposed the BSUM algorithm for the non-smooth optimization problem. It is an alternative inexact block coordinate descent method. Following, we reviewed the convergence result in [30] for convenience.

Lemma 1

Given the problem \(\min f(x)\), and subject to \(x\in \mathcal {X}\), where \(\mathcal {X}\) is the feasible set. Assume \(u(x,x^{l-1})\) is an approximation of f(x) at the \((l-1)\)-th iteration, which satisfied the following conditions:

where \(u_{i}(x_{i},y)\) is the sub-problem with respect to the i-th block and \(f'(y;d)\) is the direction derivative of f at the point y in direction d. Suppose \(u_{i}(x_{i},y)\) is quasi-convex in \(x_{i}\) for \(i=1,\ldots ,n\). Furthermore, assume that each sub-problem \(\mathop {\mathrm{arg}\,\mathrm{min}}u_{i}(x_{i}, x^{l-1})\), \(s.t. x\in \mathcal {X}_{i}\) has a unique solution for any point \(x^{l-1}\in \mathcal {X}\). Then, the iterates generated by the BSUM algorithm converge to the set of coordinatewise minimum of f. In addition, if \(f(\cdot )\) is regular at z, then z is a stationary point.

Next, we will show that the convergence of the proposed algorithm for the model (7) is guaranteed, as it fits the framework of the BSUM method.

Theorem 1

The iterates generated by (16) converge to the set of coordinatewise minimizers.

Proof

It is easy to verify that \(h(\mathcal {Z}, \mathcal {Z}^{k})\) is an approximation and a global upper bound of \(f(\mathcal {Z})\) at the k-th iteration, which satisfies the following conditions:

where \(\mathcal {Z}=(\mathbf X ,\ \mathbf Y ,\ \mathcal {M})\), and \(\mathcal {Z}_{i}\) is equal to \(\mathbf X \), \(\mathbf Y \), \(\mathcal {M}\), for \(i = 1 , 2 , 3\) , respectively. In addition, the sub-problem \(h_{i}(i = 1 , 2 , 3)\) is strictly convex with respect to \(\mathbf X \), \(\mathbf Y \) and \(\mathcal {M}\) respectively and thus each sub-problem has a unique solution. Therefore, all assumptions in Lemma 1 are satisfied. \(\square \)

4 Numerical Experiments

In this section, we evaluate the performance of the proposed method for the reconstruction of color images, multispectral images (MSIs), MRI, and color videos. We compare the results with five well-known tensor completion methods, including SiLRTC [22], TMac [35], the method based on the tensor nuclear norm in [23] (“TNN” for short), SiLRTC-TT, and TMac-TT [1]. All test tensors are rescaled between [0, 255] to allow a fair quantitative evaluation. In color videos tests, as TNN is only applicable to third-order tensors, we perform it on each frame separately.

The quality of recovered images is measured by the peak signal-to-noise ratio (PSNR) and the structural similarity index (SSIM), which are defined as

and

where \(\mathbf M ^{*}\) is the true image, \(\mathbf M \) is the recovered image, \(\text {Max}_\mathbf{M , \mathbf M ^{*}}\) is the maximum pixel value of the images \(\mathbf M \) and \(\mathbf M ^{*}\), \(\mu _\mathbf{M }\) and \(\mu _\mathbf{M ^{*}}\) are the mean values of images \(\mathbf M \) and \(\mathbf M ^{*}\), \(\sigma _\mathbf{M }\) and \(\sigma _\mathbf{M ^{*}}\) are the standard variances of \(\mathbf M \) and \(\mathbf M ^{*}\), respectively, \(\sigma _\mathbf{M \mathbf M ^{*}}\) is the covariance of \(\mathbf M \) and \(\mathbf M ^{*}\), and \(c_{1}\) and \(c_{2}>0\) are constants. By calculating average PSNR and SSIM values for all bands, we obtain the PSNR and SSIM values of a higher-order tensor. Higher PSNR and SSIM values imply better image quality.

The convergence criterion of our proposed algorithm is defined by computing the relative error of the tensor \(\mathcal {M}\) between two successive iterations as follows:

In the experiments, there are two parameters that should be initialized: the weighting parameters \(\alpha \) and the initial ranks for TMac, TMac-TT, and MF-TTTV. Firstly, the weights \(\alpha _{k}\) for TMac-TT and MF-TTTV are defined as

where \(k=1, \ldots , j-1\). In this way, we assign the large weights to the more balanced matrices. Similarly, for TMac, the weights \(\alpha _{k}\) are defined as

where \(k=1, \ldots , j\). Secondly, to obtain the initial ranks for TMac, TMac-TT, and MF-TTTV, each rank \(r_{k}\) is approximated by the numbers of the singular values that satisfies the following inequality:

where \(q = 1,\ldots ,r_{k}\), th is the threshold, and \(\sigma _{q}^{[k]}\) is assumed to be in descending order. This condition is chosen such that the matricizations with lower-rank will have more singular values truncated. Parameters in competing methods SiLRTC, TNN, and SiLRTC-TT are automatically optimized according to the author’s suggestion.

In our method, we set the proximal parameter \(\rho = 10^{-3}\), penalty parameters \(\beta _{1} = 5*10^{-3}\), and \(\beta _{2} = 0.1\) for all experiments. And we empirically select the regularization parameter \(\lambda \) and the penalty parameter \(\beta _{3}\) from the candidate set: \(\{0.01, 0.03, 0.05, 0.1, 0.3\}\), to attain the highest PSNR value.

All numerical experiments are performed on Windows 10 64-bit and MATLAB R2012a running on a desktop equipped with an Intel(R) Core(TM) i7-6700M CPU with 3.40 GHz and 8 GB of RAM.

4.1 Color Images

In this subsection, we evaluate the performance of the proposed method on color images. The size of the testing image is \(256\times 256 \times 3\). By applying KA, we cast a third-order tensor \(\mathcal {M} \in \mathbb {R}^{256\times 256 \times 3}\) into a ninth-order tensor \(\mathcal {\tilde{M}} \in \mathbb {R}^{4\times 4 \times 4\times 4\times 4\times 4\times 4\times 4\times 3}\) and then apply the proposed method to restore color images. The sampling rate (SR) is tested from 0.05 to 0.5.

Figure 3 shows the visual results of color images with \(SR=0.1\) recovered by SiLRTC, TMac, TNN, SiLRTC-TT, TMac-TT, and MF-TTTV, respectively. Obviously, there are several vertical thorns in the recovered images by SiLRTC and TMac, the results by TNN appear dark and have horizontal thorns, and block-artifacts can be easily observed on the restored images by SiLRTC-TT and TMac-TT. While the recovered results by the proposed method are visually better than compared methods and keep the smoothness best. From the zoom-in regions of recovered images, the proposed method can effectively reduce block-artifacts compared with SiLRTC-TT and TMac-TT. Table 2 lists the numerical results by different methods, where the best PSNR and SSIM values are emphasized in bold font. Figure 4 shows the recovery PSNR and SSIM curves by different methods when applied to images Lena, Peppers, and House. We can see that for different SRs, the method performs better than the compared methods in terms of the PSNR and SSIM.

In Fig. 5, we show the results by applying different methods on images House and Lena with structural missing entries. The missing entries are selected as the black text. The outlines of the text can still be clearly seen on the recovered images by the compared methods, while the text is almost removed using MF-TTTV.

In Table 3, we show the PSNR, SSIM, and Time (in seconds) values of the restored images by different methods. We test the color images Lena and Barbara with \(SR = 0.1\) and 0.3. It can be seen that our method outperforms the compared ones in terms of both PSNR and SSIM, although the proposed method requires more time than compared methods. The reason is that for better recovery results, we introduce the TV regularization and several variables when solving the proposed model, which lead to a more complex numerical algorithm.

The results of one band of testing MSIs recovered by different methods. The first (third) and second (fourth) rows: the results of observed MSIs Washington DC (Flowers) with \(SR=0.05\) and 0.1, respectively. From left to right: the original data, the observed data, the reconstructed results by SiLRTC, TMac, TNN, SiLRTC-TT, TMac-TT, and MF-TTTV, respectively

4.2 MSIs Data

In this subsection, we compare the performance of different methods on MSIs Faces, Flowers, and Washington DC data. The testing images of size \(256\times 256 \times 11\) are selected from the original MSIs. The third-order tensor is converted to the ninth-order tensor of size \(4\times 4 \times 4\times 4\times 4\times 4\times 4\times 4\times 11\) by using KA. The SRs are set to be 0.05, 0.1, and 0.15, respectively. In Fig. 6, we display the recovered results by different compared methods. From the zoom-in regions of recovered images, it is clear that the proposed method performs best in preserving the edges and details of recovered images. The PSNR and SSIM values of each band of the reconstructed MSIs Washington DC and Faces with \(SR = 0.1\) are shown in Fig. 7. From this figure, we can see that the PSNR and SSIM values in all bands obtained by the proposed method are better than those obtained by the compared methods. In addition, Table 4 shows the average PSNR and SSIM values of the reconstructed tensors by different methods. We can note that our proposed method obtains the highest quality results for different MSIs with different sampling rates.

4.3 MRI Data

In this subsection, we use the MRI data of size \(256\times 256 \times 11\) to compare the performance of SiLRTC, TMac, TNN, SiLRTC-TT, TMac-TT, and MF-TTTV. The SRs are set to be 0.05, 0.1, and 0.2, respectively. Table 5 lists the average PSNR and SSIM of the recovered MRI data using different methods. Figure 8 shows the PSNR and SSIM values of every band with \(SR=0.1\) and 0.2. From Table 5, we see that the proposed method achieves higher quality results for different sampling rates. The better visual performance of the recovered image by our method are shown in Fig. 9. Compared the results recovered by TMac-TT, the block-artifacts produced by using KA are obviously reduced in the restored images by MF-TTTV.

The results of one band of testing MRI recovered by different methods. From top to bottom: the results of observed MRI with \(SR=0.05\), 0.1, and 0.2, respectively. From left to right: the original data, the observed data, the reconstructed results by SiLRTC, TMac, TNN, SiLRTC-TT, TMac-TT, and MF-TTTV, respectively

4.4 Color Videos

In this subsection, we test the proposed method on two color videos Bus and Mobile. The size of testing videos is \(243\times 256 \times 3 \times 27\). We reshape the tensor to a ninth-order tensor of size \(6\times 6 \times 6\times 6\times 6\times 6\times 6\times 6\times 3\). The ninth-order data is used for the proposed tensor completion algorithm. The SR is set as 0.1 in this task.

The results of two frames of testing color videos recovered by different methods. The first (third) and second (fourth) rows: the results of color videos Bus (Mobile), respectively. From left to right: the original data, the observed data, the reconstructed results by SiLRTC, TMac, TNN, SiLRTC-TT, TMac-TT, and MF-TTTV, respectively (Color figure online)

Results of using different methods are shown in Fig. 10. It is obvious that our method visually outperforms SiLRTC, TMac, TNN, SiLRTC-TT, and TMac-TT in keeping smoothness and details of recovered images. The PSNR and SSIM values of each frame of two reconstructed color videos are plotted in Fig. 11. We note that the PSNR and SSIM values of each frame recovered by the proposed method are higher than all compared methods.

5 Conclusion

In this paper, we propose a new model combining low-rank matrix factorization based on TT rank with the TV regularization for LRTC. An effective BSUM-based algorithm is developed to solve the proposed model with guaranteed convergence. Moreover, a tensor augmentation tool is introduced to improve the performance of our method. Numerical experiments demonstrate that the proposed method can effectively keep the piecewise smoothness of tensor data in spatial dimensions and distinctly reduce the block-artifacts compared with TMac-TT using KA. In the future work, we will try to speed up the proposed method or develop the numerical algorithm with a faster convergence rate.

References

Bengua, J.A., Phiem, H.N., Tuan, H.D., Do, M.N.: Efficient tensor completion for color image and video recovery: low-rank tensor train. IEEE Trans. Image Process. 26(5), 2466–2479 (2017)

Bertalmio, M., Sapiro, G., Caselles, V., Ballester, C.: Image inpainting. Siggraph 4(9), 417–424 (2000)

Cao, W.-F., Wang, Y., Sun, J., Meng, D.-Y., Yang, C., Cichocki, A., Xu, Z.-B.: Total variation regularized tensor RPCA for background subtraction from compressive measurements. IEEE Trans. Image Process. 25(9), 4075–4090 (2016)

Chan, S.H., Khoshabeh, R., Gibson, K.B., Gill, P.E., Nguyen, T.Q.: An augmented Lagrangian method for total variation video restoration. IEEE Trans. Image Process. 20(11), 3097–3111 (2011)

Chen, Y., Huang, T.-Z., Zhao, X.-L.: Destriping of multispectral remote sensing image using low-rank tensor decomposition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 11(12), 4950–4967 (2018)

Fu, Y., Dong, W.-S.: 3D magnetic resonance image denoising using low-rank tensor approximation. Neurocomputing 195, 30–39 (2016)

Gandy, S., Recht, B., Yamada, I.: Tensor completion and low-n-rank tensor recovery via convex optimization. Inverse Probl. 27(2), 025010 (2011)

Gao, S.-Q., Fan, Q.-B.: A mixture of nuclear norm and matrix factorization for tensor completion. J. Sci. Comput. 75, 43–64 (2018)

Hillar, C.J., Lim, L.H.: Most tensor problems are NP-hard. J. ACM 60(6), 45 (2013)

Iordache, M.D., Bioucas-Dias, J.M., Plaza, A.: Total variation spatial regularization for sparse hyperspectral unmixing. IEEE Trans. Geosci. Remote Sens. 50(11), 4484–4502 (2012)

Ji, H., Liu, C., Shen, Z., Xu, Y.: Robust video denoising using low rank matrix completion. In: IEEE Conference on Computer Vision and Pattern Recognition, pp. 1791–1798 (2010)

Ji, T.-Y., Huang, T.-Z., Zhao, X.-L., Ma, T.-H., Deng, L.-J.: A non-convex tensor rank approximation for tensor completion. Appl. Math. Model. 48, 410–422 (2017)

Ji, T.-Y., Huang, T.-Z., Zhao, X.-L., Ma, T.-H., Liu, G.: Tensor completion using total variation and low-rank matrix factorization. Inf. Sci. 326, 243–257 (2016)

Jiang, T.-X., Huang, T.-Z., Zhao, X.-L., Deng, L.-J., Wang, Y.: FastDeRain: a novel video rain streak removal method using directional gradient priors. IEEE Trans. Image Process. 28(4), 2089–2102 (2019)

Jiang, T.-X., Huang, T.-Z., Zhao, X.-L., Ji, T.-Y., Deng, L.-J.: Matrix factorization for low-rank tensor completion using framelet prior. Inf. Sci. 436–437, 403–417 (2018)

Khoromskij, B., Khoromskaia, V.: Multigrid accelerated tensor approximation of function related multidimensional arrays. SIAM J. Sci. Comput. 31(4), 3002–3026 (2009)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

Kolda, T.G., Bader, B.W., Kenny, J.P.: Higher-order web link analysis using multilinear algebra. In: IEEE International Conference on Data Mining, pp. 242–249 (2005)

Komodakis, N.: Image completion using global optimization. In: IEEE Conference on Computer Vision and Pattern Recognition, vol. 1, pp. 442–452 (2006)

Latorre, J.I.: Image compression and entanglement. (2005). arXiv:quant-ph/0510031

Li, F., Ng, M.K., Plemmons, R.J.: Coupled segmentation and denoising/deblurring models for hyperspectral material identification. Numer. Linear Algebra Appl. 19(1), 153–173 (2012)

Liu, J., Musialski, P., Wonka, P., Ye, J.: Tensor completion for estimating missing values in visual data. IEEE Trans. Pattern Anal. Mach. Intell. 35(1), 208–220 (2013)

Lu, C.-Y., Feng, J.-S., Lin, Z.-C., Yan, S.-C.: Exact low tubal rank tensor recovery from Gaussian measurements. In: International Joint Conference on Artificial Intelligence (2018)

Luo, Y., Ward, R.K.: Removing the blocking artifacts of block-based DCT compressed images. IEEE Trans. Image Process. 12(7), 838–842 (2003)

Mei, J.-J., Dong, Y.-Q., Huang, T.-Z., Yin, W.-T.: Cauchy noise removal by nonconvex admm with convergence guarantees. J. Sci. Comput. 74, 743–766 (2018)

Mu, C., Huang, B., Wright, J., Goldfarb, D.: Square deal: lower bounds and improved relaxations for tensor recovery. In: International Conference on Machine Learning, pp. 73–81 (2014)

Oseledets, I.V.: Tensor-train decomposition. SIAM J. Sci. Comput. 33(5), 2295–2317 (2011)

Oseledets, I.V., Savostianov, D.V., Tyrtyshnikov, E.E.: Tucker dimensionality reduction of three-dimensional arrays in linear time. SIAM J. Matrix Anal. Appl. 30(3), 939–956 (2008)

Oseledets, I.V., Tyrtyshnikov, E., Zamarashkin, N.: Tensor-train ranks for matrices and their inverses. Comput. Methods Appl. Math. 11(3), 394–403 (2011)

Razaviyayn, M., Hong, M., Luo, Z.-Q.: A unified convergence analysis of block successive minimization methods for nonsmooth optimization. SIAM J. Optim. 23(2), 1126–1153 (2012)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 60(1–4), 259–268 (1992)

Varghees, V.N., Manikandan, M.S., Gini, R.: Adaptive MRI image denoising using total-variation and local noise estimation. In: International Conference on Advances in Engineering, Science and Management, pp. 506–511 (2012)

Wang, Y., Peng, J.-J., Zhao, Q., Leung, Y., Zhao, X.-L., Meng, D.-Y.: Hyperspectral image restoration via total variation regularized low-rank tensor decomposition. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 11(4), 1227–1243 (2018)

Wang, Y.-T., Zhao, X.-L., Jiang, T.-X., Deng, L.-J., Ma, T.-H., Zhang, Y.-T., Huang, T.-Z.: A total variation and group sparsity based tensor optimization model for video rain streak removal. Signal Process. Image Commun. (2018). https://doi.org/10.1016/j.image.2018.11.008

Xu, Y.-Y., Hao, R.-R., Yin, W.-T., Su, Z.-X.: Parallel matrix factorization for low-rank tensor completion. Inverse Probl. Imaging 9(2), 601–624 (2017)

Zhao, X.-L., Wang, F., Ng, M.: A new convex optimization model for multiplicative noise and blur removal. SIAM J. Imaging Sci. 7(1), 456–475 (2014)

Zhao, X.-L., Wang, W., Zeng, T.-Y., Huang, T.-Z., Ng, M.K.: Total variation structured total least squares method for image restoration. SIAM J. Sci. Comput. 35(6), 1304–1320 (2013)

Acknowledgements

The authors would like to thank the anonymous referees and editor for their valuable remarks, questions, and comments that enabled the authors to improve this paper. This research is supported by the National Science Foundation of China (61772003, 61876203), the Fundamental Research Funds for the Central Universities (ZYGX2016J132, 31020180QD126), and Science Strength Promotion Programme of UESTC.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Ding, M., Huang, TZ., Ji, TY. et al. Low-Rank Tensor Completion Using Matrix Factorization Based on Tensor Train Rank and Total Variation. J Sci Comput 81, 941–964 (2019). https://doi.org/10.1007/s10915-019-01044-8

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-019-01044-8