Abstract

In this paper we aim to develop a highly accurate and stable method in the temporal direction for time-fractional diffusion equations with initial data \(v\in L^2(\varOmega )\). To this end we begin with a class of (time-)fractional ODEs and propose a hybrid multi-domain Petrov–Galerkin spectral method. To match the singularity at the initial time, we use a geometrically graded mesh together with fractional power Jacobi functions as the basis on the first interval. Jacobi polynomials are then used to approximate the solution in the temporal direction on the subsequent intervals. The algorithm is motivated by the observation that, on a special mesh, the solution possesses high regularity in a piecewise sense in suitable weighted Sobolev spaces. Combining this with the standard finite element method in space, we extend the method to time-fractional diffusion equations with initial data \(v\in L^2(\varOmega )\), where the temporal mesh is determined by the spatial mesh size and the final time T. Numerical tests show that the scheme is stable and converges exponentially in time.

1 Introduction

Let \(\varOmega \subset {\mathbb {R}}^d\) (\(d=1,2,3\)) be a bounded convex domain with a polygonal boundary \(\partial \varOmega \). In this paper we consider the following initial value problem for the unknown u(x, t):

where \({\mathcal {L}}u=-\nabla \cdot (a(x)\nabla u)+b(x) u\), with a(x) and b(x) such that \({\mathcal {L}}\) is positive definite and has full regularity. Here \(_0\partial _t^\alpha u\) denotes the left-sided Caputo fractional derivative of order \(\alpha \in (0,1)\) in t, cf. (2.2) below.

In fact, (1.1) has an equivalent form, which is obtained by applying \({}_0I_t^\alpha \) to both sides of (1.1):

1.1 Brief Overview of Existing Approaches

Convolution quadrature in time is a common choice for the numerical solution of evolution equations such as (1.2). To the best of our knowledge, early work in this direction was carried out in [16], building on the previous work of Lubich [13,14,15].

Many numerical approaches have been developed to deal with the fractional differential operator \( {{}_{\;0}}\partial _t^\alpha \). The L1 scheme is the most popular and widely used one to date because of its simple formulation. It was proven in [12, 28] to be of order \(2-\alpha \) under the assumption that \(u(x,t)\in C^2[0,T]\) for every \(x\in \varOmega \). However, such high regularity of u(x, t) with respect to t cannot be expected because of the so-called initial layer [26]: even when the initial data are very smooth, the solution can still be singular as t approaches zero [25]. Jin et al. revisited the L1 scheme in [10] and showed that it is only first-order accurate for \(v\in L^2(\varOmega )\). More recently, Yan et al. [31] presented a modified L1 scheme for (1.1) and proved that it is of order \({\mathcal {O}}(\tau ^{2-\alpha })\) even for non-smooth initial data v(x). Stynes et al. [26] considered the L1 method on a graded mesh of the form \(t_j=(j/N)^\gamma T\), \(j=0,1,\ldots ,N\). Under an assumption on the singular behavior of the solution, they proved that the method on such a mesh is of order \({\mathcal {O}}(N^{-\min \{2-\alpha ,\gamma \alpha \}})\). Furthermore, to reduce the computational cost, fast and parareal algorithms have been proposed to accelerate the temporal approximation of time-fractional diffusion equations, e.g., [8, 22, 29, 30].
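As a concrete reference point for the L1 scheme on a graded mesh, the following Python sketch applies it on \(t_j=T(j/N)^\gamma \) to the scalar model problem \(\partial _t^\alpha u+\lambda u=0\), \(u(0)=v\). It is our own illustrative O(N^2) implementation, not code from [26]; the function name `l1_graded` and the default grading \(\gamma =(2-\alpha )/\alpha \), which equalizes the two exponents in \(\min \{2-\alpha ,\gamma \alpha \}\), are assumptions.

```python
import math

import numpy as np


def l1_graded(alpha, lam, T=1.0, N=100, gamma=None, v=1.0):
    """L1 scheme for D_t^alpha u + lam * u = 0, u(0) = v, on t_j = T * (j/N)**gamma.

    Returns the mesh t and the discrete solution u (arrays of length N + 1).
    """
    if gamma is None:
        gamma = (2.0 - alpha) / alpha  # equalizes 2 - alpha and gamma * alpha
    t = T * (np.arange(N + 1) / N) ** gamma
    u = np.empty(N + 1)
    u[0] = v
    g = math.gamma(2.0 - alpha)
    for n in range(1, N + 1):
        k = np.arange(1, n + 1)
        tau = t[k] - t[k - 1]
        # L1 weights: D^alpha u(t_n) is approximated by sum_k d_k (u^k - u^{k-1})
        d = ((t[n] - t[k - 1]) ** (1 - alpha) - (t[n] - t[k]) ** (1 - alpha)) / (tau * g)
        history = np.dot(d[:-1], u[1:n] - u[:n - 1])
        # implicit step: d_n (u^n - u^{n-1}) + history + lam * u^n = 0
        u[n] = (d[-1] * u[n - 1] - history) / (d[-1] + lam)
    return t, u


t, u = l1_graded(alpha=0.5, lam=1.0, N=200)
```

For \(\alpha =1/2\) and \(\lambda =v=1\) the exact solution is \(e^{t}\,\mathrm {erfc}(\sqrt{t})\), which makes a convenient check of the sketch.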

It is known that, in frequency space, i.e., for each eigenpair of \({\mathcal {L}}\), (1.2) is a Volterra-type integral equation with the weakly singular kernel \((t-\tau )^{\alpha -1}\). This global behavior of the solution has motivated global approaches, e.g., [2, 3, 11], which approximate the solution numerically by Jacobi orthogonal polynomials.

Spectral methods have also been developed for fractional differential operators. Roughly speaking, there are two predominant approaches: using generalized Jacobi functions (GJFs, also called ‘polyfractonomials’) as basis functions [1, 5, 23, 24, 32,33,34], or employing fractional power Jacobi functions (FPJFs, also referred to as Müntz-type functions) as basis functions, see e.g. [6, 7]. FPJFs are usually obtained from Jacobi polynomials by a nonlinear change of variable. In the recent work [27], a new technique for constructing Müntz-type functions was introduced and used to solve singularly perturbed fractional differential equations.

1.2 The Reason for Reconsideration

The spectral methods mentioned above can handle the singularity of the solution at \(t=0\) to some degree, but still do not work well for long-time simulation, i.e., for problems with a large final time T. To illustrate this, we ignore the spatial direction and consider the model problem \({_0\partial _t^\alpha } {\hat{u}}(t) +\lambda {\hat{u}}(t) = 0\), \(t\in (0,T] \), with initial data \({\hat{v}}\). After rescaling the domain to (0, 1], we obtain an equivalent problem (we still use \({\hat{u}}(t)\) to denote the unknown)

with \({\lambda _T}=T^\alpha \lambda \). By the Laplace transform one obtains \({\hat{u}}(t)={\hat{v}}E_{\alpha ,1}(-{\lambda _T}t^\alpha )\), where \(E_{\alpha ,1}(z)=\sum _{k=0}^{\infty }\frac{z^k}{\varGamma (k\alpha +1)}\) is the Mittag–Leffler function and \(\varGamma (\cdot )\) is the Euler Gamma function.
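For reference, direct summation of the Mittag–Leffler series can be sketched in Python as below (the function name `mittag_leffler` is ours). It is only a sketch: for \(z=-{\lambda _T}t^\alpha \) with large \({\lambda _T}\) the terms grow enormously before they decay and cancellation destroys the result, so in that regime a dedicated algorithm (for instance one based on numerical inversion of the Laplace transform) would be needed. This is exactly the large-\({\lambda _T}\) regime discussed in this subsection.

```python
import math


def mittag_leffler(z, alpha, tol=1e-17, kmax=2000):
    """E_{alpha,1}(z) = sum_k z^k / Gamma(alpha*k + 1) by direct summation.

    Only reliable for moderate |z|: for large negative z the terms become
    huge before decaying, and cancellation ruins the sum.
    """
    if z == 0.0:
        return 1.0
    log_abs_z = math.log(abs(z))
    sign = -1.0 if z < 0 else 1.0
    peak = abs(z) ** (1.0 / alpha) / alpha  # terms grow roughly until k ~ peak
    total = 0.0
    for k in range(kmax):
        # compute |z|^k / Gamma(alpha k + 1) in log form to avoid overflow
        term = sign ** k * math.exp(k * log_abs_z - math.lgamma(alpha * k + 1.0))
        total += term
        if k > peak and abs(term) < tol:
            break
    return total
```

With it, the solution of the rescaled model problem is \({\hat{v}}\) times `mittag_leffler(-lam_T * t**alpha, alpha)`, as long as \({\lambda _T}t^\alpha \) stays moderate.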

Let \(\{{\tilde{J}}_n^{\delta ,\sigma }(t)\}_{n=0}^{\infty }\) denote the shifted Jacobi polynomials defined on [0, 1]. We apply Petrov–Galerkin (PG) spectral methods using, respectively, the GJFs \(\{t^\alpha {\tilde{J}}_n^{-\alpha ,\alpha }(t)\}_{n=0}^{N}\) and the FPJFs \(\{{\tilde{J}}_n^{0,0}(t^\alpha )\}_{n=0}^{N}\) (referred to as the fractional spectral method in [7] and the Müntz spectral method in [6]) as trial functions to solve (1.3) with \(\alpha =0.5\) and various values of \({\lambda _T}\) (Tables 1 and 2).

It can be seen that the method based on GJFs converges like \({\mathcal {O}}(N^{-1})\) and that, for large \({\lambda _T}\), i.e., for long-time simulation, more expansion terms are needed to achieve the desired accuracy. Thus, if we apply this scheme to (1.1) in the temporal direction, balancing the temporal and spatial errors makes the space-time problem too costly to solve.

The scheme based on FPJFs converges exponentially for small \({\lambda _T}\) but fails for large \({\lambda _T}\). This can be explained as follows: suppose the error satisfies the same bound as in [6, Theorem 3.7], i.e., it is bounded by \(N^{c-k}\Vert \partial _t^k {\hat{u}}^\alpha (t)\Vert _{L^2_{k,k}(I)}\) with \({\hat{u}}^\alpha (t)={\hat{u}}(t^{1/\alpha })\), \(I:=(0,1)\). After some manipulations we get (see Sect. 3 for details)

One can see that for large \({\lambda _T}\) the right-hand side grows very rapidly with k, which destroys the convergence rate \(N^{-k}\). Furthermore, the condition number also grows very rapidly, which makes the system hard to solve even for moderately large N.
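A quick way to see this growth, under our own simplification of looking only at \(t=0\) rather than at the weighted norm of [6, Theorem 3.7], is to note that \({\hat{u}}^\alpha (t)={\hat{u}}(t^{1/\alpha })={\hat{v}}E_{\alpha ,1}(-{\lambda _T}t)\), so termwise differentiation of the series gives \(|\partial _t^k{\hat{u}}^\alpha (0)|=|{\hat{v}}|\,{\lambda _T}^k\,k!/\varGamma (k\alpha +1)\). The Python sketch below (function name hypothetical) evaluates the base-10 logarithm of this quantity for \({\hat{v}}=1\).

```python
import math


def log10_deriv_at_zero(k, alpha, lam_T):
    """log10 of |d^k/dt^k E_{alpha,1}(-lam_T t)| at t = 0, i.e. of lam_T^k k! / Gamma(alpha k + 1)."""
    ln_val = k * math.log(lam_T) + math.lgamma(k + 1) - math.lgamma(alpha * k + 1)
    return ln_val / math.log(10.0)


# Compare a small and a large lambda_T for alpha = 0.5
for lam_T in (1.0, 1000.0):
    print(lam_T, [round(log10_deriv_at_zero(k, 0.5, lam_T), 1) for k in (1, 5, 10)])
```

The factor \({\lambda _T}^k\) is what turns a modest derivative growth for \({\lambda _T}=1\) into an astronomically large one for \({\lambda _T}=1000\).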

Besides a large final time T, another reason to consider (1.3) with a large input \({\lambda _T}\) is that it can be regarded as the equation for a single eigenmode of (1.1) (after rescaling (0, T] to (0, 1]), with \(\lambda \) an eigenvalue of \({\mathcal {L}}\). For non-smooth initial data, e.g., \(v\in L^2(\varOmega )\), the high-frequency part of the solution cannot be ignored, since it decays only slowly as \(\lambda \) increases.

1.3 Our Approach

In this paper we mainly aim to develop a highly accurate and stable scheme in the temporal direction for (1.1) with \(v\in L^2(\varOmega )\). Our scheme is a space-time formulation in which we apply the standard finite element method (FEM) in space and a hybrid multi-domain PG spectral method, described below, in time.

We first introduce some notation. Let \({\mathcal {A}}(\cdot ,\cdot )\) denote the bilinear form corresponding to \({\mathcal {L}}\), and let \(V_h\subset H^1_0(\varOmega )\) be the finite element space, with h the spatial mesh size. We use \({\mathcal {L}}_h\) to denote the discrete counterpart of the elliptic operator \({\mathcal {L}}\); it maps \(V_h\) to \(V_h\) and satisfies \(({\mathcal {L}}_h \phi ,\chi )={\mathcal {A}}(\phi , \chi )\) for all \(\phi ,\chi \in V_h\), where \((\cdot ,\cdot )\) is the inner product in \(L^2(\varOmega )\). Furthermore, we denote the eigenvalues of \({\mathcal {L}}_h\) by \(\lambda _{h,min}=\lambda _{1,h}\le \lambda _{2,h}\le \cdots \le \lambda _{M,h}=\lambda _{h,max}\). It is known that \(\lambda _{h,min}\) is bounded from below by a positive constant independent of h and that \(\lambda _{h,max}\le c h^{-2}\) with c a constant independent of h.

After applying the standard FEM to (1.1) in space and rescaling the time domain to (0, 1], we obtain the semidiscrete problem (see, e.g., [9, eq. (3.2)]): find \(u_h(t)\in V_h\) with \(u_h(0)=v_h \) such that

where \(v_h \in V_h\) is a suitable approximation of v. Instead of considering the space-time scheme directly, we begin with (1.5) in frequency space, i.e., with the fractional ODE (1.3), which was shown in [4] to be closely connected with (1.1). Owing to the tensor-product structure of the space-time domain, and following the idea of [4], it in fact suffices to show that the temporal scheme converges uniformly for (1.3) for every \(\lambda _T\in [ T^\alpha \lambda _{h,min}, T^\alpha \lambda _{h,max}]\).
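The eigenvalue bounds and the resulting range of \(\lambda _T\) can be checked numerically. The Python sketch below assumes the simplest setting, which is not the paper's general one: \(\varOmega =(0,1)\), \(a(x)\equiv 1\), \(b(x)\equiv 0\), a uniform mesh and piecewise linear elements. It solves the generalized eigenproblem \(Kx=\lambda Mx\) (stiffness K, mass M) with `scipy.linalg.eigh`; the helper names are ours.

```python
import numpy as np
from scipy.linalg import eigh


def p1_matrices(n_elem, a=1.0):
    """P1 stiffness K and mass M for -(a u')' on (0, 1), u(0) = u(1) = 0, uniform mesh."""
    h = 1.0 / n_elem
    n = n_elem - 1                                  # number of interior nodes
    e = np.ones(n - 1)
    K = a / h * (2.0 * np.eye(n) - np.diag(e, 1) - np.diag(e, -1))
    M = h / 6.0 * (4.0 * np.eye(n) + np.diag(e, 1) + np.diag(e, -1))
    return h, K, M


def lambda_T_range(n_elem, T, alpha, a=1.0):
    """Return h and the endpoints T^alpha * lam_{h,min}, T^alpha * lam_{h,max}."""
    h, K, M = p1_matrices(n_elem, a)
    lam = eigh(K, M, eigvals_only=True)             # ascending generalized eigenvalues
    return h, T ** alpha * lam[0], T ** alpha * lam[-1]


for n_elem in (16, 32, 64):
    h, lo, hi = lambda_T_range(n_elem, T=1.0, alpha=0.5)
    print(n_elem, lo, hi * h ** 2)
```

In this setting \(\lambda _{h,min}\approx \pi ^2\), while \(h^2\lambda _{h,max}\) stays below 12 and approaches it as h decreases, consistent with \(\lambda _{h,max}\le ch^{-2}\).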

Empirically, the more regularity of the solution one can exploit, the higher the accuracy one can achieve. We find that the solution of (1.3) in fact possesses high regularity in a piecewise sense on a special mesh. The mesh is geometrically graded, with the first interval \(I_0\) satisfying \(|I_0|^\alpha {\lambda _T}\le 1\). On the first interval, after the change of variable \((t/|I_0|)^\alpha \rightarrow t/|I_0|\), the solution belongs to the space \(B^n_{\delta ,\sigma }(I_0)\) \((\delta ,\sigma >-1)\) (see the next section for the definition) for any fixed n. On each subsequent interval the solution is in fact infinitely smooth, in the sense that it belongs to \(B^n_{\beta ,-1}(I_i)\) \((\beta >-1)\) for every n. Based on this we design a hybrid spectral method which uses FPJFs as the basis on the first interval and GJFs of the form \((t-t_i){\tilde{J}}_n^{\beta ,1,i}(t)\) on the subsequent intervals, where \({\tilde{J}}^{\beta ,1,i}_n(t)\) denotes the shifted Jacobi polynomial on \(I_i:=[t_i,t_{i+1}]\).

The remainder of this paper is organized as follows. In Sect. 2 we recall some basic definitions and properties of fractional calculus and Jacobi polynomials. In Sect. 3 we establish the regularity of the solution on the special mesh mentioned above and present the hybrid PG spectral method for (1.3). In Sect. 4 we extend the scheme to (1.1) by applying the FEM in space. We also present some error analysis of the scheme, but a rigorous error bound is beyond the scope of this paper. Numerical results are reported in Sect. 5 to verify the stability and accuracy of the scheme in the temporal direction. Finally, we draw some conclusions in Sect. 6.

2 Preliminaries

2.1 Fractional Calculus

Firstly we recall the definitions of fractional calculus. Denote \(\varLambda :=(a,b)\). For any \(\beta > 0\) and \( f \in L^1(\varLambda )\), the left-sided and right-sided Riemann–Liouville fractional integral operators, i.e., \({}_aI^{\beta }_t\) and \({}_tI_b^\beta \), of order \(\beta \) are defined respectively by

where \(\varGamma (\cdot )\) is the Euler Gamma function defined by \(\varGamma (z)=\int _0^\infty e^{-t}t^{z-1}dt\) for \(\mathfrak {R}z>0\). For any \(s>0\) with \(k-1 \le s < k\), \(k\in {\mathbb {N}}^+\), the (formal) left-sided and right-sided Riemann–Liouville fractional derivatives of order s are defined respectively by

Correspondingly, the left-sided and right-sided fractional derivatives in the Caputo sense are defined respectively by

These fractional-order derivatives are well defined for sufficiently smooth functions. Furthermore, it is easy to verify that

holds for any \(n\in {\mathbb {N}}^+\) with any \(s>0\), and it reduces to zero when \(n=0\). Hereafter we use the notation \({{}_{\;0}}\partial _t^\alpha \) to represent the Caputo fractional derivative operator \({{}^{\,C}_{\;0}}\partial _t^\alpha \) for notational simplicity.

2.2 Jacobi Polynomials and Fractional Power Jacobi Functions

Let \(\{J_n^{\beta ,\gamma }(x)\}_{n=0}^{\infty }\) denote the Jacobi polynomials on \([-1,1]\). They can be defined by

where \((q)_n\) is the (rising) Pochhammer symbol defined by

and \({}_2F_1(a,b,c,x)\) is the Gauss hypergeometric function given as follows

For a non-positive integer \(a=-\,n\), the series \({}_2F_1(a,b,c,x)\) terminates and reduces to a polynomial, so it can also be evaluated at \(|x|=1\).
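To make the definition concrete, here is a small Python sketch that evaluates the terminating hypergeometric series. We assume the standard normalization \(J_n^{\beta ,\gamma }(x)=\frac{(\beta +1)_n}{n!}\,{}_2F_1\big (-n,n+\beta +\gamma +1;\beta +1;\frac{1-x}{2}\big )\), which is the one used by `scipy.special.eval_jacobi`; the function name `jacobi_hyp` is ours. Because the series stops after n+1 terms, the endpoints \(x=\pm 1\) cause no difficulty.

```python
import math

from scipy.special import eval_jacobi, poch


def jacobi_hyp(n, beta, gamma, x):
    """J_n^{beta,gamma}(x) via the terminating series
    (beta+1)_n / n! * 2F1(-n, n+beta+gamma+1; beta+1; (1-x)/2)."""
    z = (1.0 - x) / 2.0
    total, term = 0.0, 1.0
    for k in range(n + 1):
        total += term
        # ratio of consecutive 2F1 terms; the factor (-n + k) vanishes at k = n
        term *= (-n + k) * (n + beta + gamma + 1 + k) / ((beta + 1 + k) * (k + 1)) * z
    return poch(beta + 1, n) / math.factorial(n) * total


print(jacobi_hyp(3, 0.4, -0.5, 1.0), eval_jacobi(3, 0.4, -0.5, 1.0))
```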

It is well known that \(\{J_n^{\beta ,\gamma }(x)\}_{n=0}^{\infty }\) are mutually orthogonal with respect to the Jacobi weight function \((1-x)^\beta (1+x)^\gamma \) for \(\beta ,\gamma >-1\). To use the Jacobi polynomials on a generic interval [a, b], we define the shifted Jacobi polynomials \(\{{\tilde{J}}_n^{\beta ,\gamma }(t)\}_{n=0}^{\infty }\) by \({\tilde{J}}_{n}^{\beta ,\gamma }(t)=J_{n}^{\beta ,\gamma }(x)\) with \(x=2\frac{t-a}{b-a}-1\). Then the following orthogonality relation holds

where \(\omega ^{\beta ,\gamma }(t)=(b-t)^\beta (t-a)^\gamma \) and (see e.g., [5, (2.7) and (2.8)])

Furthermore, the k-th order derivatives of \({\tilde{J}}^{\beta ,\gamma }_n(t)\) for \(n=k,k+1,\ldots \) satisfy

and are mutually orthogonal with respect to the weight function \({\omega ^{\beta +k ,\gamma +k}}(t)\):

with

Let \(\varLambda :=(a,b)\). We will use \({\mathcal {P}}_N(\varLambda )\) hereafter to denote the set of polynomials on \(\varLambda \) of degree at most N.
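The orthogonality of the shifted Jacobi polynomials can be checked with Gauss–Jacobi quadrature mapped from \([-1,1]\) to [a, b]. The Python sketch below uses `scipy.special.roots_jacobi` and `eval_jacobi`; the helper names and the explicit squared norm \(\frac{(b-a)^{\beta +\gamma +1}}{2n+\beta +\gamma +1}\frac{\varGamma (n+\beta +1)\varGamma (n+\gamma +1)}{n!\,\varGamma (n+\beta +\gamma +1)}\) (the standard closed form, which we assume agrees with the cited formula) are our additions.

```python
import math

import numpy as np
from scipy.special import eval_jacobi, roots_jacobi


def shifted_jacobi(n, beta, gamma, t, a, b):
    """Shifted Jacobi polynomial on [a, b]: J_n^{beta,gamma}(2(t-a)/(b-a) - 1)."""
    return eval_jacobi(n, beta, gamma, 2.0 * (t - a) / (b - a) - 1.0)


def gram_matrix(N, beta, gamma, a, b):
    """Inner products of the shifted J_0..J_N with weight (b-t)^beta (t-a)^gamma."""
    x, w = roots_jacobi(N + 1, beta, gamma)            # exact up to degree 2N+1
    t = a + (b - a) * (x + 1.0) / 2.0
    w_t = ((b - a) / 2.0) ** (beta + gamma + 1.0) * w  # Jacobian of [-1,1] -> [a,b]
    P = np.array([shifted_jacobi(n, beta, gamma, t, a, b) for n in range(N + 1)])
    return (P * w_t) @ P.T


def h_shifted(n, beta, gamma, a, b):
    """Standard squared weighted norm of the shifted Jacobi polynomial on [a, b]."""
    s = beta + gamma + 1.0
    return ((b - a) ** s / (2 * n + s)
            * math.gamma(n + beta + 1) * math.gamma(n + gamma + 1)
            / (math.factorial(n) * math.gamma(n + s)))
```

The Gram matrix should be diagonal with entries `h_shifted(n, ...)`, up to round-off.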

We define the fractional power Jacobi functions (FPJFs) \(\{J_{\alpha ,n}^{\beta ,\gamma }(t)\}_{n=0}^{\infty }\) for \(t\in [0,T]\) and \(\alpha \in (0,1]\) as follows

Since the series \({}_2F_1(a,b,c,x)\) terminates if either a or b is a non-positive integer, the FPJF \(J_{\alpha ,n}^{\beta ,\gamma }(t)\) defined by (2.12) is a finite linear combination of \(\{1,t^\alpha ,t^{2\alpha },\ldots ,t^{n\alpha }\}\). Hence the domain can be extended from \(t\in [0,T)\) to \(t\in [0,T]\).

Applying the change of integration variable \(t\rightarrow T^{1-1/\alpha }t^{1/\alpha }\), we obtain the following orthogonality relation for \(J_{\alpha ,n}^{\beta ,\gamma }(t)\):

where \(\omega ^{\beta ,\gamma }_\alpha (t)=t^{(\gamma +1)\alpha -1}(T^\alpha -t^\alpha )^\beta \) and

Applying (2.9) it is easy to verify the following formula holds

with \(k_{\alpha ,n}^{\beta ,\gamma }=\frac{\alpha }{T^\alpha }(n+\beta +\gamma +1)\). Denote \(I_T=(0,T)\) and

then for any \(\varphi _{\alpha }(t)\in {\mathcal {P}}_N^\alpha (I_T)\), we have \(_{0}\partial _t^{\alpha } \varphi _{\alpha }(t)\in {\mathcal {P}}_{N-1}^\alpha (I_T)\) and \({}_0I_t^\alpha \varphi _{\alpha }(t)\in {\mathcal {P}}_{N+1}^\alpha (I_T)\).
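Since \(\varphi _\alpha \in {\mathcal {P}}_N^\alpha (I_T)\) is a combination of \(t^{k\alpha }\), \(k=0,\ldots ,N\), both mapping properties follow from the standard power rules \({}_0\partial _t^\alpha t^{\mu }=\frac{\varGamma (\mu +1)}{\varGamma (\mu +1-\alpha )}t^{\mu -\alpha }\) (\(\mu >0\); zero for \(\mu =0\)) and \({}_0I_t^\alpha t^{\mu }=\frac{\varGamma (\mu +1)}{\varGamma (\mu +\alpha +1)}t^{\mu +\alpha }\). The Python sketch below (helper names are ours) acts on the coefficient vector of \(\varphi _\alpha \) in the basis \(\{t^{k\alpha }\}\).

```python
import math

import numpy as np


def caputo_coeffs(c, alpha):
    """Caputo derivative of sum_k c[k] t^(k alpha); returns coefficients in P^alpha_{N-1}."""
    k = np.arange(1, len(c))
    factor = np.array([math.gamma(j * alpha + 1) / math.gamma((j - 1) * alpha + 1) for j in k])
    return np.asarray(c, dtype=float)[1:] * factor   # the constant term is annihilated


def rl_integral_coeffs(c, alpha):
    """Riemann-Liouville integral of sum_k c[k] t^(k alpha); coefficients in P^alpha_{N+1}."""
    k = np.arange(len(c))
    factor = np.array([math.gamma(j * alpha + 1) / math.gamma((j + 1) * alpha + 1) for j in k])
    return np.concatenate(([0.0], np.asarray(c, dtype=float) * factor))


c = np.array([2.0, -1.0, 0.5, 3.0])   # phi = 2 - t^a + 0.5 t^(2a) + 3 t^(3a)
print(caputo_coeffs(c, 0.3), rl_integral_coeffs(c, 0.3))
```

One can check with it that \({}_0I_t^\alpha \,{}_0\partial _t^\alpha \varphi _\alpha =\varphi _\alpha -\varphi _\alpha (0)\) and \({}_0\partial _t^\alpha \,{}_0I_t^\alpha \varphi _\alpha =\varphi _\alpha \).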

Let \(\{t_j,\mathrm {w}^{\beta ,\gamma }_j\}_{j=0}^{N}\) denote the Jacobi–Gauss (JG), Jacobi–Gauss–Radau (JGR), or Jacobi–Gauss–Lobatto (JGL) nodes and weights relative to the Jacobi weight function \(\omega ^{\beta ,\gamma }(t)\). Denote \(t_{\alpha ,j}=T^{1-1/\alpha }t_j^{1/\alpha }\) and \(\mathrm {w}_{\alpha ,j}^{\beta ,\gamma }=\frac{T^{(\alpha -1)(\beta +\gamma +1)}}{\alpha }\mathrm {w}^{\beta ,\gamma }_j\). Using Jacobi–Gauss quadrature together with the change of variable \(t\rightarrow t^\alpha T^{1-\alpha }\), one can verify that for any \(\varphi _\alpha (t)\in {\mathcal {P}}^\alpha _{2N+l}(I_T)\) (\(l=1,0,-1\))

where \(l=1,0,-1\) for JG, JGR and JGL respectively.
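The mapped quadrature can be sketched in Python as follows, for the JG case (l = 1) only. The sketch takes the shifted JG nodes \(t_j\) on [0, T] for the weight \((T-t)^\beta t^\gamma \), maps them by \(t_{\alpha ,j}=T^{1-1/\alpha }t_j^{1/\alpha }\) and rescales the weights exactly as stated above; the function name `fpjf_gauss` is ours.

```python
import numpy as np
from scipy.special import roots_jacobi


def fpjf_gauss(N, alpha, beta, gamma, T=1.0):
    """Nodes/weights for the weight t^((gamma+1) alpha - 1) (T^alpha - t^alpha)^beta on (0, T)."""
    x, w = roots_jacobi(N + 1, beta, gamma)           # JG on [-1, 1]
    s = T * (x + 1.0) / 2.0                           # shifted JG nodes on [0, T]
    w_s = (T / 2.0) ** (beta + gamma + 1.0) * w       # weights for (T - s)^beta s^gamma
    t_alpha = T ** (1.0 - 1.0 / alpha) * s ** (1.0 / alpha)
    w_alpha = T ** ((alpha - 1.0) * (beta + gamma + 1.0)) / alpha * w_s
    return t_alpha, w_alpha


t, w = fpjf_gauss(4, alpha=0.4, beta=0.2, gamma=-0.3, T=2.0)
```

With N+1 nodes the rule integrates \(t^{k\alpha }\omega ^{\beta ,\gamma }_\alpha (t)\) exactly for \(k\le 2N+1\), up to round-off; the exact value is \(T^{\alpha (k+\beta +\gamma +1)}B(k+\gamma +1,\beta +1)/\alpha \).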

2.3 Differential Matrix for FPJFs

The definition of \(J_{\alpha ,n}^{\beta ,\gamma }(t)\), cf. (2.12), implies that the following expansion holds

Using (2.4), we obtain

for \(n\ge 0\). On the other hand, for \(l\in {\mathbb {N}}\), we expand \(t^{l\alpha }\) as

where

So if we denote

and

then there holds the following formula

where

and we use \({{}_{\;0}}\partial _t^\alpha {\mathcal {G}}(t)\) to denote
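In floating point, a differentiation matrix of this kind can be assembled through the power basis \(\{(t/T)^{k\alpha }\}\). The Python sketch below is one possible realization under our own choices and notation, not necessarily the matrices used later in the paper. It assumes \(J^{\beta ,\gamma }_{\alpha ,n}(t)={\tilde{J}}^{\beta ,\gamma }_n(T^{1-\alpha }t^\alpha )\) on [0, T], which is consistent with the weight \(\omega ^{\beta ,\gamma }_\alpha \) above. The rows of a lower-triangular matrix C hold the power-basis coefficients, obtained from \(J_n^{\beta ,\gamma }(x)=(-1)^n\frac{(\gamma +1)_n}{n!}{}_2F_1(-n,n+\beta +\gamma +1;\gamma +1;\frac{1+x}{2})\) with \(\frac{1+x}{2}=(t/T)^\alpha \). The Caputo derivative acts on the powers by the power rule, and the expansion of \(t^{l\alpha }\) back into FPJFs is obtained by inverting C rather than from a closed formula.

```python
import math

import numpy as np
from scipy.linalg import solve_triangular
from scipy.special import poch


def fpjf_power_matrix(N, beta, gamma):
    """C[n, k] = coefficient of (t/T)^(k alpha) in J_{alpha,n}^{beta,gamma}(t), 0 <= k <= n <= N.

    Uses J_n(x) = (-1)^n (gamma+1)_n / n! 2F1(-n, n+beta+gamma+1; gamma+1; (1+x)/2)
    with (1+x)/2 = (t/T)^alpha, so C depends on neither alpha nor T.
    """
    C = np.zeros((N + 1, N + 1))
    for n in range(N + 1):
        term = (-1) ** n * poch(gamma + 1, n) / math.factorial(n)
        for k in range(n + 1):
            C[n, k] = term
            term *= (-n + k) * (n + beta + gamma + 1 + k) / ((gamma + 1 + k) * (k + 1))
    return C


def fpjf_caputo_matrix(N, alpha, beta, gamma, T=1.0):
    """Matrix D with d^alpha G(t) = D G(t) for G = (J_{alpha,0}, ..., J_{alpha,N})^T."""
    C = fpjf_power_matrix(N, beta, gamma)
    # Caputo derivative on powers: (t/T)^(k a) -> T^(-a) G(k a+1)/G((k-1) a+1) (t/T)^((k-1) a)
    P = np.zeros((N + 1, N + 1))
    for k in range(1, N + 1):
        P[k, k - 1] = math.gamma(k * alpha + 1) / math.gamma((k - 1) * alpha + 1) / T ** alpha
    # G = C m  implies  d^alpha G = C P m = C P C^{-1} G  (C is lower triangular)
    C_inv = solve_triangular(C, np.eye(N + 1), lower=True)
    return C @ P @ C_inv
```

The entries of C grow quickly with N, so this route is only meant for moderate N.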

2.4 Some Useful Definitions and Lemmas

Definition 1

[21, p. 117] Let \(L_{\beta ,\gamma }^2(\varLambda )\) denote the space of all functions defined on the interval \(\varLambda =(a,b)\) with finite norm \({\left\| \cdot \right\| _{L^2_{\beta ,\gamma }(\varLambda )}}\). The inner product and norm are defined as follows

with \(\omega ^{\beta ,\gamma }(t)=(b-t)^\beta (t-a)^\gamma \). Furthermore, let \(B_{\beta ,\gamma }^m(\varLambda )\) denote the non-uniformly (or anisotropically) Jacobi-weighted Sobolev space:

equipped with the inner product and norm

Lemma 1

The following formula will be used in the analysis later

where \( {\tilde{J}}_n^{\beta ,\gamma }(t)\) represents the shifted Jacobi polynomials defined on \({\bar{\varLambda }}=[a,b]\) and \(\beta \in {\mathbb {R}}, \gamma >\alpha - 1\).

3 Multi-domain PG Spectral Scheme for Fractional ODEs

In this section we present the multi-domain PG spectral scheme for (1.3) under the assumption that \({\lambda _T}\in [\lambda _{min},\lambda _{max}]\) with \(\lambda _{min},\lambda _{max}\in {\mathbb {R}}^+\). To avoid heavy notation, in this section we use u(t) to denote the solution \({\hat{u}}(t)\) and v to denote \({\hat{v}}\).

Let \({\mathcal {T}}_\tau \) be the partition of the time interval \({\bar{I}}:=[0,1]\) into \({L}+1\) subintervals \(\left\{ I_n=[t_{n},t_{n+1}]\right\} _{n=0}^{{L}}\) with nodal points \(t_0=0\), \(t_n=2^{n-{L}-1}\) for \(n=1,2,\ldots , {L}+1\), where \({L}\) is the smallest integer greater than \(\log _2 \lambda ^{1/\alpha }_{{max}}\). This ensures that \(t_1^\alpha \lambda _{max}\le 1\). We assign to each interval \(I_n\) an approximation order \({\mathbf{r}}_n\) and collect these orders in the vector \({\mathbf{r}}\). Hereafter we denote \(u_j(t):=u(t)|_{t\in I_j}\).
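The construction of \({\mathcal {T}}_\tau \) takes only a few lines. In the Python sketch below (the function name `geometric_mesh` is ours), L is computed as \(\lfloor \alpha ^{-1}\log _2\lambda _{max}\rfloor +1\), which is the smallest integer strictly greater than \(\log _2\lambda _{max}^{1/\alpha }\).

```python
import math


def geometric_mesh(lam_max, alpha):
    """Return L and the nodes t_0 = 0, t_n = 2^(n-L-1), n = 1, ..., L+1.

    L is the smallest integer strictly greater than log2(lam_max^(1/alpha)).
    """
    L = math.floor(math.log2(lam_max) / alpha) + 1
    nodes = [0.0] + [2.0 ** (n - L - 1) for n in range(1, L + 2)]
    return L, nodes


L, t = geometric_mesh(1000.0, 0.5)
```

For \(\lambda _{max}=1000\) and \(\alpha =0.5\) this gives \(L=20\), i.e., 21 subintervals, with \(t_1^{1/2}\lambda _{max}=1000/1024\le 1\) and \(|I_j|=t_j\) for \(j\ge 1\).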

3.1 Regularity of \(u_j(t)\) and the Numerical Scheme

Let \(u^{\alpha }_0(t)=u_0(|I_0|^{1-1/\alpha }t^{1/\alpha })\); after straightforward manipulations we get

Since the Mittag–Leffler function \(E_{\alpha ,1}(-t)\) is completely monotonic for \(t\ge 0\) [19], we have

Then, using \(\int _{I_0}(t_1-t)^{n+\sigma }t^{n+\delta }dt=|I_0|^{2n+\delta +\sigma +1}B(n+\delta +1,n+\sigma +1)\), we obtain

with \(B(\cdot ,\cdot )\) the Euler Beta function. Note that for \( \delta ,\sigma >-1\)

and that the mesh ensures \(|I_0|^\alpha \lambda _{max}\approx 1\). We can then conclude:

Proposition 1

For any \(\delta , \sigma >-1\) and any nonnegative integer \(n\in {\mathbb {N}}\), we have \(u_0^\alpha (t)\in B_{\delta ,\sigma }^n(I_0)\). Furthermore the following estimate holds

where c is a constant independent of n and \(|I_0|\).

Denote \(z_j(t):=u_j(t)-u(t_j)\) for \(j=1,2,\ldots , {L}\); then clearly \(z_j(t)\in L^2_{\beta ,-1}(I_j)\) \((\beta >-1)\). For any positive integer \(n\in {\mathbb {N}}^{+}\), we apply [18, Theorem 1.6] and the following properties of the Mittag–Leffler function ([20, Lemma 3.2]): for \(t,q>0\) and \(n\in {\mathbb {N}}\)

then it follows

where c is a constant independent of n and \(|I_j|\). Taking into account that \(t_j=t_{j+1}-t_j=|I_j|\), we obtain the following proposition for \(u_j(t)\) (\(j=1,2,\ldots , {L}\)):

Proposition 2

For any \(\beta >-1\) and \(n\in {\mathbb {N}}\) we have \(z_j(t)\in B_{\beta ,-1}^n(I_j)\). Furthermore, for any \(n\in {\mathbb {N}}^+\) there holds

where c is a constant independent of n and \(|I_j|\).

The choice of basis on each interval is based on the following qualitative analysis. Let \(\pi _{N}^{\sigma ,\delta }\) denote the orthogonal projection from \(L^2_{\sigma ,\delta }(I_0)\) onto \({\mathcal {P}}_N(I_0)\), defined by \((f-\pi _{N}^{\sigma ,\delta }f,\varphi )_{L^2_{\sigma ,\delta }(I_0)}=0\) for all \(\varphi \in {\mathcal {P}}_N(I_0)\). Following the idea of [21, Lemma 3.10], noting that \(\max _{x\in [-1,1]}\left| J_m^{\sigma ,\delta }(x)\right| ={\mathcal {O}}(m^{\max (-1/2,\sigma ,\delta )})\) and using the affine map from \([-1,1]\) to \(I_0\), one obtains, for \(\max (\sigma ,\delta )+1<n\le N+1\),

By Proposition 1 with \(n=N+1\), the factor involving \(|I_0|\) cancels, and exponential convergence follows from the asymptotic equivalence \(N^{N+1/2}\sim N!e^{N}\) (up to a constant factor; see, e.g., [21, A.8]). That is, \(u_0^\alpha (t)\) can be approximated accurately by Jacobi polynomials, which implies, via the nonlinear map \(t\rightarrow |I_0|^{1-\alpha }t^\alpha \), that \(u_0(t)\) can be approximated accurately by FPJFs on \(I_0\) in the \(L^\infty \) sense. Similarly, [5, Theorem 3.6] together with Proposition 2 shows that the GJFs \(\{(t-t_j){\tilde{J}}_n^{\beta ,1}(t)\}_{n=0}^{N}\cup \{1\}\) (which are in fact polynomials) on \(I_j\) can be used to approximate \(u_j(t)\) with an exponential convergence rate in the \(L^\infty \) sense as well.
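This approximation property can be illustrated numerically. On \(I_0\) the solution of (1.3) is \(u_0(t)={\hat{v}}E_{\alpha ,1}(-{\lambda _T}|I_0|^\alpha y)\) with \(y=(t/|I_0|)^\alpha \in [0,1]\). Approximating \(u_0\) by FPJFs therefore amounts to approximating an entire function of y by polynomials, and the mesh keeps the argument \({\lambda _T}|I_0|^\alpha \) at most 1. The Python sketch below uses Chebyshev interpolation in y (via `numpy.polynomial.Chebyshev.interpolate`) as a stand-in for the Jacobi projection of the paper, with \({\hat{v}}=1\). The helper names are ours, and the short Mittag–Leffler series it uses is adequate only for arguments of modest size.

```python
import math

import numpy as np
from numpy.polynomial import Chebyshev


def ml_series(z, alpha, terms=80):
    """E_{alpha,1}(z) from its power series (vectorized); adequate only for |z| <= 1 or so."""
    z = np.asarray(z, dtype=float)
    return sum(z ** k / math.gamma(alpha * k + 1.0) for k in range(terms))


def interp_error(deg, alpha, z_max=1.0):
    """Max error of degree-`deg` Chebyshev interpolation of y -> E_alpha(-z_max y) on [0, 1].

    Here y = (t/|I_0|)^alpha and z_max = lam_T |I_0|^alpha <= 1 on the first interval.
    """
    f = lambda y: ml_series(-z_max * y, alpha)
    p = Chebyshev.interpolate(f, deg, domain=[0.0, 1.0])
    y = np.linspace(0.0, 1.0, 2001)
    return float(np.max(np.abs(p(y) - f(y))))


for deg in (4, 8, 16):
    print(deg, interp_error(deg, alpha=0.5))
```

The maximum error drops rapidly as the degree increases, in line with the exponential convergence argued above.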

For any interval \(\varLambda =(a,b)\), denote

The analysis above motivates the following multi-domain PG spectral method: find \(U(t)\in S_{0}^{{\mathbf{r}}}({\mathcal {T}}_\tau )\) with initial value \( U(0)=v\) such that

where

and

denote the trial and test spaces, respectively, and \((\cdot ,\cdot )_{\omega }\) denotes the weighted \(L^2\)-inner product with weight function

In fact, (3.8) is a time-stepping scheme. Denoting \(U_j:=U(t)|_{t\in I_j}\), we can write (3.8) in the following form:

where \(U_0(0)=v\), \(U_j(t_j)=U_{j-1}(t_j)\), \(G_0(t)=0\),

for \(j=1,2,\ldots ,{L}\) and \(\omega _j:=\omega _j(t)\) for \(j\ge 1\) with \(\omega _0:=\omega _\alpha ^{\delta ,\sigma }(I_0)\). Hereafter we always use \(\Vert \cdot \Vert _{\omega _j}\) to represent the weighted norm \(\Vert \cdot \Vert _{L^2_{\omega _j}(I_j)}\) in this section.

3.2 Linear Algebraic Problem

To reduce the computational cost, in practice we take \(\delta =0,\beta =-\,\alpha \). We expand U(t) on each interval as

where \(\{{\tilde{J}}_n^{\cdot ,\cdot ,j}\}_{n=0}^{{\mathbf{r}}_{j}}\) represent the shifted Jacobi polynomials defined on \(I_j=[t_j,t_{j+1}]\). For \(i\in {\mathbb {N}},j\in {\mathbb {N}}^+\), denote

then we can put the numerical solution in vector form

Step 1 Compute the solution on the first interval, \(U_0:=U(t)|_{t\in I_0}\). First consider the inner product \(({}_{0}\partial _t^\alpha U_0,w)_{\omega _0}\) on the left-hand side of (3.10). Recalling (2.21), we have

where the inner product \((matrix,scalar)_\omega \) is understood elementwise, i.e.,

Appealing to (2.13) and (2.14) with \(\beta =0,\gamma =\sigma \) we have

where

Secondly, consider \((U_0,w)_{\omega _0}\).

where \(S^0_{n,k}\) can be calculated exactly by (2.17). Let \(S^0=(S^0_{n,k})_{n,k=0}^{{\mathbf{r}}_0}\); by the orthogonality of FPJFs one can verify that \(S^0\) is tridiagonal. Set \(H^0=diag(h_{\alpha ,0}^{0,\sigma },h_{\alpha ,1}^{0,\sigma },\ldots ,h_{\alpha ,{\mathbf{r}}_0}^{0,\sigma })\) and \(M^0=C D H^0\); then the numerical solution on \(I_0\) can be obtained by

Step 2 Compute U(t) on the subsequent intervals, i.e., \(U_{j}:=U(t)|_{t\in I_j}\), \(j=1,2,\ldots ,{L}\). As before, we first consider

Recalling Lemma 1 and \(\omega _j\) in (3.9) it follows

where \(h_k^{0,1-\alpha }\) is given by (2.8) with \(b-a=|I_j|\). Next we consider

Recalling \(\omega _j\) in (3.9) we have

where \(S^j_{n,k}\) can be calculated accurately by Jacobi–Gauss quadrature with Jacobi weight function \((1+x)\).

Finally, consider the memory term \((G_j,(t-t_j)^{1-\alpha }{\tilde{J}}_k^{0,1-\alpha ,j}(t))_{\omega _j}\). Recalling the definition of \(G_j(t)\), we only need to calculate the following integrals

where \(i=1,2,\ldots ,{L}-1\), \(j=i+1,\ldots ,{L}\). Utilizing (2.15) it follows

By the change of variables \(\eta =\frac{\xi ^\alpha }{|I_0|^\alpha }\), \(\theta =\frac{t-t_j}{|I_j|}\), and noting that \(|I_j|=t_j=2^{j-1}|I_0|\), we have

where \(j=1,2,\ldots ,{L}\) and \(\{{\tilde{J}}_k^{\cdot ,\cdot }(\eta )\}_{k=0}^{\infty }\) are Jacobi polynomials defined on [0, 1]. Appealing to (3.9) and Lemma 1 it holds

Applying the affine maps \(\theta =\frac{t-t_j}{|I_j|}\), \(\eta =\frac{\xi -t_i}{|I_i|}\), we get

Since \(|I_j|=t_j\), \(|I_i|=t_i\) and hence \(|I_j|=2^{j-i}|I_i|\), we obtain

Denote

then \({\mathbf {a}}^j\), the coefficient vector of \(U_j(t)\), satisfies

where \(c(i,j)=|I_j|\) for \(i=0\) and \(c(i,j)=|I_i|^{1-\alpha }|I_j|\) for \(i=1,2,\ldots , {L}-1\).

Remark 1

The most time-consuming part of this scheme is the computation of \({\hat{\mathbf {I}}}^{j-i}\) for \(j-i=1,2,\ldots ,{L}\). However, once these matrices have been computed, they can be stored and reused when more basis functions are used on each interval or when simulations are run for larger \({\lambda _T}\), i.e., for larger T. For example, suppose \(\lambda '_T\) is a new input which yields a new mesh with nodes \(t_0,t_1,\ldots ,t_{{L}'+1}\). Furthermore, let

denote the new vector of approximation orders and let \({\hat{\mathbf {I}}}^{j-i}_{new}\) be the new matrix formed by (3.19). Then, for \(j-i\le {L}\),

For \(j-i={L}+1,\ldots ,{L}'\), we use (3.19) to compute the whole \(({\mathbf{r}}'_i+1)\times ({\mathbf{r}}'_j+1)\) matrix.

4 Multi-domain PG Spectral Scheme for Fractional PDEs

4.1 Semi-discretization with Respect to Space

Before introducing the fully discrete scheme, we first recall the Galerkin semidiscrete scheme for (1.1). Let \({\mathcal {T}}_h\) be a shape-regular, quasi-uniform triangulation of the domain \(\varOmega \) into d-simplices, with h denoting the maximum diameter, and let piecewise linear functions be employed as the basis. We use \(V_h\subset H_0^1(\varOmega )\) to denote the discrete finite element space. Furthermore, we use \((\cdot ,\cdot )\) to denote the duality pairing over \(\varOmega \) and \({\mathcal {A}}(\cdot ,\cdot )\) to denote the bilinear form corresponding to \({\mathcal {L}}\). After the change of variable \(t\rightarrow Tt\), the Galerkin semidiscrete scheme for (1.1) reads: for \(t \in (0,1]\), find \(u_h(t) \in V_h \) with \(u_h(0)=v_h\) such that

where \(v_h\in V_h\) is a proper approximation of v, and \({\mathcal {A}}_T(\cdot ,\cdot )=T^\alpha {\mathcal {A}}(\cdot ,\cdot )\).

To begin with, we introduce some notation and definitions that will be used later. Let \(\{\lambda _j,\psi _j\}_{j=1}^{\infty }\) denote the eigenpairs of \({\mathcal {L}}\) with Dirichlet boundary conditions on \(\varOmega \), and let \({\dot{H}}^s(\varOmega )\) \((s\in {\mathbb {R}})\) denote the Hilbert space induced by the norm \(\Vert \cdot \Vert _{s}\) with

where \(\langle v_1,v_2 \rangle :=\int _{\varOmega }v_1 v_2 dx\). For \(s=0\) it reduces to the \(L^2(\varOmega )\)-norm, which we denote by \(\Vert \cdot \Vert \) for simplicity. We also need the \(L^2\)-orthogonal projection \(P_h\), mapping \(\varphi \in {\dot{H}}^s(\varOmega )\) with \(s\ge -1\) to \(P_h\varphi \in V_h\), defined by

and the Ritz projection \(R_h: \, H_0^1\rightarrow V_h\), given by

Furthermore let \({\mathcal {L}}_h\) represent the discrete counterpart of the elliptic operator \({\mathcal {L}}\), mapping from \(V_h\) to \(V_h\) and satisfying for any \(\phi ,\chi \in V_h\)

Correspondingly let \(\{\psi _{j,h}\}_{j=1}^M\subset V_h\) denote the \(L^2(\varOmega )\)-orthonormal basis for \(V_h\) of generalized eigenfunctions of \({\mathcal {L}}_h\) and \(\{\lambda _{j,h}\}_{j=1}^{M}\) be the eigenvalues, i.e.,

Then we introduce the following norm for \(\phi \in V_h\)

It is known that \(\lambda _{h,min}\), the minimal eigenvalue of \({\mathcal {L}}_{h}\) is bounded from below by some constant independent of h and \(\lambda _{h,max}\), the maximum eigenvalue of \({\mathcal {L}}_{h}\) is bounded from above by \(ch^{-2}\) with c independent of h.

We are now in a position to present the error bound for the semidiscrete scheme (4.1). By [9, Theorem 3.5, Theorem 3.7, Remark 3.4], the following estimate holds

for \(p=0,1,2\) where \({\mathscr {C}}_h=|\ln h|\) for \(p=0,1\) with \(v_h=P_h v\), and \({\mathscr {C}}_h=1\) for \(p=1,2\) with \(v_h=R_h v\).

4.2 Full Discretization and Error Analysis

Next we explain how the temporal direction is treated. In fact, (4.1) can be reformulated as

where \({\mathcal {L}}_{T,h}:=T^\alpha {\mathcal {L}}_h\). We use the geometric mesh introduced at the beginning of Sect. 3 and choose \(\lambda _{T,max}:=T^\alpha \lambda _{h,max}\), the maximum eigenvalue of \({\mathcal {L}}_{T,h}\), as the parameter \(\lambda _{max}\) that determines the temporal mesh. That is, \(t_0=0\), and for \(n=1,2,\ldots , {L}+1\)

Let \(Q_0^{{\mathbf{r}},h}\) denote the tensor-product space \(S_{0}^{{\mathbf{r}}}({\mathcal {T}}_{\tau })\otimes V_h\) and, correspondingly, let \(Q_1^{{\mathbf{r}},h}\) denote \(S_{1}^{{\mathbf{r}}}({\mathcal {T}}_{\tau })\otimes V_h\). Then the PG spectral scheme reads: find \(U_{h}(x,t)\in Q_0^{{\mathbf{r}},h} \) with \(U_h(x,0)=v_h\) such that

where \(\omega (t)\) is defined by (3.9), \(I:=(0,1)\) and \(Q_I=\varOmega \times I\).

Linear Algebraic Problem Let \(\{\phi _m\}_{m=1}^{M}\) denote the piecewise linear basis functions in \(V_h\); then \(U_h(x,t)\) satisfies the following system

Furthermore suppose \(v_h=\sum _{l=1}^{M}v_h(x_l)\phi _l(x)\), \(U_h(x,t_j)=\sum _{l=1}^{M}U_h(x_l,t_j)\phi _l(x)\) and denote

then \(U_h(t)\) can be given by the following linear system:

where \({\mathcal {J}}(t),{\mathcal {J}}^j(t)\) are defined as in the previous section. Let \(M_h\) denote the mass matrix and \(S_h\) the stiffness matrix corresponding to \({\mathcal {L}}\) on the given triangulation of \(\varOmega \). Recalling (4.6), we obtain

where \(j=1,2,\ldots ,{L}\) and c(i, j) is the one in (3.20).

Remark 2

Note that the temporal mesh \({\mathcal {T}}_\tau \) is determined by \(\lambda _{max}=T^\alpha {\mathcal {O}}(h^{-2})\). Hence, if a finer spatial mesh is used, we obtain a finer temporal mesh \({\mathcal {T}}'_\tau \) with \({L}'>{L}\). We can then reuse the matrices \({\hat{\mathbf {I}}}^{j-i}\), as pointed out in Remark 1, which saves considerable computing time.
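The dependence of the number of temporal subintervals on h and T can be made explicit. Using the definition of L from Sect. 3 with \(\lambda _{max}=\lambda _{T,max}=T^\alpha \lambda _{h,max}\) and the bound \(\lambda _{h,max}\le ch^{-2}\), a short calculation gives

```latex
L=\Big\lfloor \tfrac{1}{\alpha}\log_2 \lambda_{T,max}\Big\rfloor+1
 \le \log_2 T+\tfrac{1}{\alpha}\log_2 \lambda_{h,max}+1
 \le \log_2 T+\tfrac{1}{\alpha}\log_2 c+\tfrac{2}{\alpha}\log_2 h^{-1}+1 .
```

So the bound grows by \(2/\alpha \) when h is halved and by 1 when T is doubled: the number of temporal subintervals grows only logarithmically in \(h^{-1}\) and T.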

Assumption A

We assume that (3.8), the hybrid multi-domain PG spectral method for ODEs, satisfies the following error bound

where \({\hat{v}}\) is the initial data and \({\mathcal {E}}^n(\alpha ,{\mathbf{r}})\) depends only on \(\alpha \) and \({\mathbf{r}}\).

Suppose Assumption A holds. Then we have the following space-time error estimate:

Theorem 1

(Error analysis) Suppose \(v(x)\in {\dot{H}}^p(\varOmega )\) with \(p=0,1,2\). Take \(v_h=P_h v\) for \(p=0,1\) and \(v_h=R_h v\) for \(p=1,2\). Denote \(U^n_h(x,t):=U_h(x,t)|_{t\in I_n}\) with \(U_h(x,t)\) the solution of (4.6). Then for \(n=0,1,\ldots ,{L}\) and any \(t\in I_n\)

where \({\mathcal {E}}^n(\alpha ,{\mathbf{r}})\) is defined as in Assumption A, and \({\mathscr {C}}_h\) is determined by \(v_h\), as specified below (4.4).

Proof

Using the basis \(\{\psi _{j,h}\}_{j=1}^M\), we expand the solutions \(u_h\) and \(U_{h}\) into

where \({\hat{u}}_{j,h}(t)=({u}_h(t),\psi _{j,h})\) and \({\hat{U}}_{j,h}(t)=(U_{h }(t),\psi _{j,h})\). Obviously, the function \({\hat{u}}_{j,h}(t)\) satisfies \({\hat{u}}_{j,h}(0)={\hat{v}}_{j,h}:=(v_h,\psi _{j,h})\) and

On the other hand, by taking \(\varphi =w(t)\psi _{j,h}\in Q_1^{{\mathbf{r}},h}\) in (4.6) it follows that the function \({\hat{U}}_{j,h}(t)\) satisfies

In other words, \({\hat{U}}_{j,h}(t)\) is the approximation of \({\hat{u}}_{j,h}\) by PG multi-domain scheme (3.8). Define

where \({\hat{u}}^{n}_{j,h}(t)={\hat{u}}_{j,h}(t)|_{t\in I_n}\) and \({\hat{U}}^n_{j,h}(t)={\hat{U}}_{j,h}(t)|_{t\in I_n}\). By Assumption A, \(\Vert {\mathcal {E}}^n_{j,h}(t) \Vert _{\infty }\) can be bounded as

Thanks to (4.8) we have for \(t\in I_n\) and \(s\in {\mathbb {R}}\)

Let q denote 0 or 1. Then, for \(v\in {\dot{H}}^p(\varOmega )\) with \(p=0,1\) and \(v_h=P_h v\), appealing to the inverse inequality (see, e.g., [9, Lemma 3.3]), we have

where the last step follows from the stability of \(P_h\) in \(L^2(\varOmega )\). For \(p=1,2\) and \(v_h=R_h v\), it follows that

where we have used the fact that \(R_h\) is stable in \({\dot{H}}^1(\varOmega )\). Recalling (4.4) and the fact that \({\dot{H}}^1(\varOmega )\) coincides with \({H}_0^1(\varOmega )\), we obtain the desired estimate. \(\square \)
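The proof rests on the fact that, in the eigenbasis \(\{\psi _{j,h}\}\), the semidiscrete problem decouples into scalar problems of the form (1.3) with \({\lambda _T}=T^\alpha \lambda _{j,h}\), so that \(u_h(t)=\sum _j {\hat{v}}_{j,h}E_{\alpha ,1}(-T^\alpha \lambda _{j,h}t^\alpha )\psi _{j,h}\). The Python sketch below evaluates this modal representation of the semidiscrete solution (not the fully discrete PG scheme) from given stiffness and mass matrices. The function name is ours, and `ml` stands for any routine returning \(E_{\alpha ,1}(z)\); such a routine must be reliable for large negative arguments when \(\lambda _{h,max}\) is large.

```python
import math

import numpy as np
from scipy.linalg import eigh


def modal_solution(K, M, v, t, T, alpha, ml):
    """Semidiscrete solution at rescaled time t in (0, 1] via the eigenbasis of (K, M).

    K, M : stiffness and mass matrices (symmetric, M positive definite)
    v    : nodal values of v_h
    ml   : any callable ml(z, alpha) returning E_{alpha,1}(z)
    """
    lam, V = eigh(K, M)                  # generalized eigenpairs, V^T M V = I
    v_hat = V.T @ (M @ v)                # modal coefficients (v_h, psi_{j,h})
    decay = np.array([ml(-(T ** alpha) * l * t ** alpha, alpha) for l in lam])
    return V @ (decay * v_hat)


# Example routine for alpha = 1, where E_{1,1}(z) = exp(z)
exp_ml = lambda z, a: math.exp(z)
```

For \(\alpha =1\) the result coincides with the matrix-exponential solution of \(M\dot{u}+TKu=0\), which is a convenient sanity check. The dense eigendecomposition costs cubic time in the number of spatial unknowns and is meant for illustration only.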

5 Numerical Examples

5.1 Numerical Tests for Fractional ODEs

In this subsection we plot the error for

with various \(\alpha \) and \({\lambda _T}\). We set \(\lambda _{max}=1000\) and use the same approximation order on every interval, i.e. \({\mathbf{r}}_0={\mathbf{r}}_1=\cdots ={\mathbf{r}}_{{L}}=N\).

Figure 1 presents the maximum absolute errors for \(\alpha =0.2,0.4,0.6,0.8\) and \(N=2,3,\ldots ,11\) with \({\lambda _T}=1000\); the errors decay exponentially in N. Figures 2, 3 and 4 plot \(|(u-U)(t)|\) on log-log axes (using the loglog function in MATLAB) for \({\lambda _T}=0.1\), \({\lambda _T}=10\) and \({\lambda _T}=\lambda _{max}=1000\), respectively. A red dashed line marks the position of \(t_1\), since a different approximation scheme is applied on the first interval.

The error behaves differently for different \({\lambda _T}\): for \({\lambda _T}=0.1\) it grows with time, whereas for \({\lambda _T}=1000\) it decreases from the second interval on. This is due to the behavior of the solution u(t) and the structure of the mesh. For large \({\lambda _T}\), the solution decays very rapidly near \(t=0\) and then becomes very flat, so a small N already achieves the desired accuracy on the intervals away from \(t=0\), even though the interval lengths grow exponentially. For small \({\lambda _T}\), however, u(t) remains nearly singular even on intervals far from \(t=0\), so it is better to increase the number of basis functions as the interval length grows.
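The contrast between large and small \({\lambda _T}\) can be made concrete with a short computation. The sketch below assumes, for illustration only, that the test problem is the scalar relaxation equation \({}_0\partial _t^\alpha u+\lambda u=0\), \(u(0)=1\), with \(\lambda \) playing the role of \({\lambda _T}\); its exact solution is \(u(t)=E_\alpha (-\lambda t^\alpha )\). The Mittag–Leffler function is summed from its power series, which is reliable only for small arguments, so the helper rejects \(|z|>1\). At \(t^*=\lambda ^{-1/\alpha }\) one has \(u(t^*)=E_\alpha (-1)\), about 0.43 for \(\alpha =1/2\): for \(\lambda =1000\) this happens already at \(t^*=10^{-6}\), while for \(\lambda =0.1\) it happens only at \(t^*=100\). Function names are hypothetical.

```python
import math


def mittag_leffler(alpha, z, tol=1e-17, kmax=5000):
    """E_alpha(z) = sum_{k>=0} z^k / Gamma(alpha*k + 1), summed directly.
    Restricted to |z| <= 1, where the series converges quickly without
    cancellation; large negative arguments need a different algorithm."""
    if abs(z) > 1.0 + 1e-12:  # small slack absorbs rounding in lam * t**alpha
        raise ValueError("power-series sketch is restricted to |z| <= 1")
    if z == 0.0:
        return 1.0
    log_abs_z = math.log(abs(z))
    total = 0.0
    for k in range(kmax):
        # |z|^k / Gamma(alpha*k + 1), formed in log space
        mag = math.exp(k * log_abs_z - math.lgamma(alpha * k + 1.0))
        total += mag if (z > 0.0 or k % 2 == 0) else -mag
        if k > 0 and mag < tol * max(1.0, abs(total)):
            break
    return total


def relaxation_solution(alpha, lam, t):
    """Exact solution u(t) = E_alpha(-lam * t^alpha) of the assumed test problem
    D^alpha u + lam * u = 0, u(0) = 1."""
    return mittag_leffler(alpha, -lam * t ** alpha)


def decay_time(alpha, lam):
    """Time t* with lam * t*^alpha = 1, so that u(t*) = E_alpha(-1)."""
    return lam ** (-1.0 / alpha)


for lam in (0.1, 1000.0):
    print(lam, decay_time(0.5, lam))
print(relaxation_solution(0.5, 1000.0, decay_time(0.5, 1000.0)))
```

The checks use the classical identities \(E_1(z)=e^z\), \(E_{1/2}(-x)=e^{x^2}\mathrm{erfc}(x)\) and \(E_2(-x^2)=\cos x\).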

5.2 Numerical Tests for 1-D Fractional PDEs

We shall first consider the following equation on \(\varOmega =(0,1)\):

with diffusion coefficient \(c_d=0.1\) and initial data

- (a) \(v=\begin{cases} 1, & x\in (0, 0.5],\\ 0, & x\in (0.5,1), \end{cases}\) so that \(v\in {\dot{H}}^{s}(\varOmega )\) for \(s<\frac{1}{2}\).

- (b) \(v=2\min (x,1-x)\), so that \(v\in {\dot{H}}^{s}(\varOmega )\) for \(s<\frac{3}{2}\).
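For \(\varOmega =(0,1)\) these regularity statements can be checked from sine coefficients, assuming the usual spectral definition of \({\dot{H}}^s(\varOmega )\) through the Dirichlet eigenpairs of \(-\varDelta \) (the factor \(c_d\) does not affect membership):

\[
\Vert v\Vert _{{\dot{H}}^s(\varOmega )}^2=\sum _{j=1}^\infty \lambda _j^{s}(v,\varphi _j)^2,\qquad \lambda _j=j^2\pi ^2,\quad \varphi _j(x)=\sqrt{2}\sin (j\pi x).
\]

For case (a),
\[
(v,\varphi _j)=\sqrt{2}\int _0^{1/2}\sin (j\pi x)\,dx=\frac{\sqrt{2}\,\bigl (1-\cos (j\pi /2)\bigr )}{j\pi },
\]
and \((1-\cos (j\pi /2))^2\ge 1\) whenever \(4\nmid j\). Hence \(\lambda _j^s(v,\varphi _j)^2\) behaves like \(j^{2s-2}\) on these indices, and the series converges if and only if \(s<\frac{1}{2}\).

For case (b), splitting the integral at \(x=1/2\) gives
\[
(v,\varphi _j)=\frac{4\sqrt{2}\,\sin (j\pi /2)}{(j\pi )^2},
\]
which vanishes for even j and has modulus \(4\sqrt{2}/(j\pi )^2\) for odd j. The terms then behave like \(j^{2s-4}\), so the series converges if and only if \(s<\frac{3}{2}\).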

We use the same approximation order on every interval in time, i.e. \({\mathbf{r}}_0={\mathbf{r}}_1=\cdots ={\mathbf{r}}_{{L}}=N\), and fix the spatial mesh size \(h=1{/}100\). Since the maximum eigenvalue \(\lambda _{h,max}\) of \(-\varDelta _h\) satisfies \(\lambda _{h,max}\le c h^{-2}\), and hence \(c_d\lambda _{h,\max }\le ch^{-2}\), we determine the temporal mesh by \(t_0=0\) and

with
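The bound \(\lambda _{h,max}\le ch^{-2}\) can be made concrete in the simplest 1-D setting, assumed here only for illustration: a uniform mesh on (0,1) with P1 elements and the lumped mass matrix (as used for case (a) below). Then \(M_L^{-1}K\) is the three-point stencil, its eigenvalues are known in closed form, and \(c=4\); with the consistent mass matrix the constant would be 12 instead. The sketch verifies the closed-form eigenpairs numerically; the function names are hypothetical.

```python
import math


def lumped_p1_eigenvalues(m):
    """Eigenvalues of -Delta_h on (0,1) with m interior nodes, h = 1/(m+1).
    P1 stiffness K = tridiag(-1, 2, -1)/h and lumped mass M_L = h*I give
    M_L^{-1} K = tridiag(-1, 2, -1)/h^2, whose eigenvalues are 4/h^2 * sin^2(j pi h/2)."""
    h = 1.0 / (m + 1)
    return [4.0 / h ** 2 * math.sin(j * math.pi * h / 2.0) ** 2 for j in range(1, m + 1)]


def eigenpair_residual(m, j):
    """Max |(M_L^{-1} K v)_k - lam_j v_k| for v_k = sin(j pi k h): checks the closed form."""
    h = 1.0 / (m + 1)
    lam = 4.0 / h ** 2 * math.sin(j * math.pi * h / 2.0) ** 2
    # v_0 = 0 and v_{m+1} = sin(j pi) ~ 0 encode the Dirichlet boundary
    v = [math.sin(j * math.pi * k * h) for k in range(m + 2)]
    return max(abs((2.0 * v[k] - v[k - 1] - v[k + 1]) / h ** 2 - lam * v[k])
               for k in range(1, m + 1))


m = 99                    # h = 1/100, as in the 1-D tests
h = 1.0 / (m + 1)
c_d = 0.1
lam = lumped_p1_eigenvalues(m)
print(max(lam) * h ** 2)  # slightly below 4, so lambda_{h,max} <= 4 h^{-2}
print(c_d * max(lam))     # hence c_d * lambda_{h,max} <= 0.4 h^{-2}
```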

We compute the maximum relative \(L^2\) error for different N

where \(\Vert \cdot \Vert \) represents \(\Vert \cdot \Vert _{L^2(\varOmega )}\) and \(U_{ref}\) is obtained by the same scheme with a higher approximation order \(N_{ref}\).
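A minimal way to evaluate such an error measure is sketched below. It assumes the natural reading \(\Vert e\Vert _{2,\infty }=\max _i \Vert U_h(t_i)-U_{ref}(t_i)\Vert /\Vert U_{ref}(t_i)\Vert \) over a finite set of sampled output times \(t_i\), with the \(L^2\) norm approximated by lumped quadrature on a uniform 1-D mesh. The sampling, the quadrature and the helper names are assumptions of this sketch.

```python
import math


def discrete_l2(h, u):
    """Lumped-quadrature approximation of the L2 norm: sqrt(h * sum_k u_k^2)."""
    return math.sqrt(h * sum(x * x for x in u))


def max_relative_l2_error(h, U, U_ref):
    """Assumed definition: max over sampled times t_i of
    ||U(t_i) - U_ref(t_i)|| / ||U_ref(t_i)||.
    U and U_ref are lists of nodal vectors, one vector per sampled time."""
    if len(U) != len(U_ref):
        raise ValueError("U and U_ref must be sampled at the same times")
    worst = 0.0
    for u, r in zip(U, U_ref):
        ref_norm = discrete_l2(h, r)
        if ref_norm == 0.0:
            raise ValueError("reference solution vanishes at a sampled time")
        err = discrete_l2(h, [a - b for a, b in zip(u, r)])
        worst = max(worst, err / ref_norm)
    return worst


U_ref = [[1.0, 1.0], [2.0, 0.0]]  # toy data at two sampled times
U = [[1.1, 1.0], [2.0, 0.0]]
print(max_relative_l2_error(0.5, U, U_ref))
```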

Figure 5 shows \(\Vert e\Vert _{2,\infty }\) for \(N=2,4,6,8\) and \(\alpha =0.2,0.4,0.6,0.8\) in cases (a) and (b). Since the \(L^2\)-projection of the initial data in case (a) is known to oscillate near the discontinuity and near the incompatible boundary point \(x=0\), we use the lumped mass matrix instead of the consistent mass matrix. The slope of each dashed line shows that the error decreases exponentially. Figures 6 and 7 show 3-D mesh plots of the numerical solutions \(U_h(t)\) (with N=8) and of the corresponding errors \(U_h(t)-U_{ref}(t)\) for case (a) and different \(\alpha \), illustrating how the solution and the error evolve in time. For case (b), the error \(U_h(t)-U_{ref}(t)\) with \(N=8\) is shown in Fig. 8. Figures 7 and 8 show that the maximum absolute error remains of the same order of magnitude at all times t, which indicates that the scheme is stable.
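If the errors are plotted on a semi-log scale, the slope of each line can be quantified by a least-squares fit of \(\log _{10}\) of the error against N: for \(e_N\approx C\rho ^N\) the fitted slope is \(\log _{10}\rho \), and \(\rho <1\) is consistent with exponential convergence. The helper name is hypothetical, and the data in the usage lines are synthetic, not those of Fig. 5.

```python
import math


def exponential_rate(orders, errors):
    """Least-squares fit log10(e_N) ~ a + b*N; returns (b, 10**b).
    b < 0 (rho = 10**b < 1) is consistent with e_N ~ C * rho^N."""
    if len(orders) != len(errors) or len(orders) < 2:
        raise ValueError("need at least two (N, error) pairs")
    ys = [math.log10(e) for e in errors]
    n = len(orders)
    mx = sum(orders) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in orders)
    if sxx == 0.0:
        raise ValueError("orders must not all be equal")
    sxy = sum((x - mx) * (y - my) for x, y in zip(orders, ys))
    b = sxy / sxx
    return b, 10.0 ** b


orders = [2, 4, 6, 8]
errors = [3.0 * 0.1 ** n for n in orders]  # synthetic, not Fig. 5 data
slope, rho = exponential_rate(orders, errors)
print(slope, rho)
```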

We also comment on the complexity of the scheme. On the one hand, (5.3) shows that the scheme requires only a few solves. For example, with \(\varOmega =(0,1)\) and \(h=1/100\), the scheme needs \({L}+1=68\) steps in total to reach \(t=1\) for \(\alpha =0.2\), and \({L}+1=18\) steps for \(\alpha =0.8\). Although a finer spatial mesh requires more solves, the total number of steps grows only like \(|\log _2 h|\) for any fixed \(\alpha \). On the other hand, in practice a large temporal approximation order N is not needed to balance the error arising from the spatial discretization. For instance, Fig. 5 shows that \(N=4\) suffices for \(h=1/100\), since the spatial error is \({\mathcal {O}}(h^2)\).
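The logarithmic growth of the number of steps can be illustrated with a hypothetical geometric mesh: \(t_0=0\), \(t_1=h^{2/\alpha }\), \(t_{n+1}=2t_n\), with the last node clipped to T. This rule is an assumption of the sketch and is not claimed to be (5.3). Halving h lowers \(\log _2 t_1\) by \(2/\alpha \), so the number of intervals grows by roughly \(2/\alpha \) per halving. For \(h=1/100\) this rule gives 68 intervals for \(\alpha =0.2\) and 18 for \(\alpha =0.8\), which agrees with the counts quoted above. The function names are hypothetical.

```python
def graded_mesh(alpha, h, T=1.0, ratio=2.0):
    """Hypothetical geometric mesh (not claimed to be (5.3)):
    t_0 = 0, t_1 = (h^2)^(1/alpha), t_{n+1} = ratio * t_n, last node clipped to T."""
    nodes = [0.0, (h * h) ** (1.0 / alpha)]
    while nodes[-1] < T:
        nodes.append(min(ratio * nodes[-1], T))
    return nodes


def num_intervals(alpha, h, T=1.0):
    """Number of time intervals I_0, ..., I_L, i.e. L + 1."""
    return len(graded_mesh(alpha, h, T)) - 1


for alpha in (0.2, 0.8):
    print(alpha, [num_intervals(alpha, h) for h in (1 / 100, 1 / 200, 1 / 400)])
```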

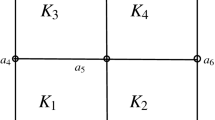

5.3 Numerical Tests for 2-D Fractional PDE

Next, to test the scheme on a more complex domain, we solve (5.2) with diffusion coefficient \(c_d=0.5\) on the domain \(\varOmega \) shown in Fig. 9, with initial data \(v=1\) on \(\varOmega \). We triangulate the domain with ‘distmesh2d’ by P.-O. Persson and G. Strang [17], using the following code:

This yields 10782 triangles, 5207 interior nodes and 368 boundary nodes. The time mesh is determined by \(\lambda _{max}=100^2\), and we use \(N=5\) as the approximation order on each time interval. Figure 10 presents the numerical solutions for \(\alpha =0.2\) and \(\alpha =0.8\), which indicate that the scheme remains stable and performs well on this domain.

6 Concluding Remarks

We proposed a hybrid multi-domain spectral method in time for fractional ODEs and then extended it to time-fractional diffusion equations. A geometrically graded mesh is used in the temporal direction, which greatly reduces the total number of solves compared with a uniform mesh. Numerical results show that the approach is stable and converges exponentially in the \(L^\infty \) sense. However, this exponential convergence has so far been verified only by numerical tests rather than by theoretical analysis, which requires further work.

References

[1] Chen, S., Shen, J., Wang, L.L.: Generalized Jacobi functions and their applications to fractional differential equations. Math. Comput. 85(300), 1603–1638 (2016)

[2] Chen, Y., Tang, T.: Spectral methods for weakly singular Volterra integral equations with smooth solutions. J. Comput. Appl. Math. 233(4), 938–950 (2009)

[3] Chen, Y., Tang, T.: Convergence analysis of the Jacobi spectral-collocation methods for Volterra integral equations with a weakly singular kernel. Math. Comput. 79(269), 147–167 (2010)

[4] Duan, B., Jin, B., Lazarov, R., Pasciak, J., Zhou, Z.: Space-time Petrov–Galerkin FEM for fractional diffusion problems. Comput. Methods Appl. Math. 18(1), 1–20 (2018)

[5] Duan, B., Zheng, Z., Cao, W.: Spectral approximation methods and error estimates for Caputo fractional derivative with applications to initial-value problems. J. Comput. Phys. 319, 108–128 (2016)

[6] Hou, D., Hasan, M.T., Xu, C.: Müntz spectral methods for the time-fractional diffusion equation. Comput. Methods Appl. Math. 18(1), 43–62 (2018)

[7] Hou, D., Xu, C.: A fractional spectral method with applications to some singular problems. Adv. Comput. Math. 43(5), 1–34 (2017)

[8] Jiang, S., Zhang, J., Zhang, Q., Zhang, Z.: Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations. Commun. Comput. Phys. 21(3), 650–678 (2017)

[9] Jin, B., Lazarov, R., Zhou, Z.: Error estimates for a semidiscrete finite element method for fractional order parabolic equations. SIAM J. Numer. Anal. 51(1), 445–466 (2013)

[10] Jin, B., Lazarov, R., Zhou, Z.: An analysis of the L1 scheme for the subdiffusion equation with nonsmooth data. IMA J. Numer. Anal. 36(2), 197–221 (2015)

[11] Li, X., Tang, T.: Convergence analysis of Jacobi spectral collocation methods for Abel–Volterra integral equations of second kind. Front. Math. China 7(1), 69–84 (2012)

[12] Lin, Y., Xu, C.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225(2), 1533–1552 (2007)

[13] Lubich, C.: Discretized fractional calculus. SIAM J. Math. Anal. 17(3), 704–719 (1986)

[14] Lubich, C.: Convolution quadrature and discretized operational calculus. I. Numer. Math. 52(2), 129–145 (1988)

[15] Lubich, C.: Convolution quadrature and discretized operational calculus. II. Numer. Math. 52(4), 413–425 (1988)

[16] Lubich, C., Sloan, I., Thomée, V.: Nonsmooth data error estimates for approximations of an evolution equation with a positive-type memory term. Math. Comput. 65(213), 1–17 (1996)

[17] Persson, P.O., Strang, G.: A simple mesh generator in MATLAB. SIAM Rev. 46(2), 329–345 (2004)

[18] Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications, vol. 198. Academic Press, Cambridge (1998)

[19] Pollard, H.: The completely monotonic character of the Mittag–Leffler function \({E}_{\alpha }(-x)\). Bull. Am. Math. Soc. 54(12), 1115–1116 (1948)

[20] Sakamoto, K., Yamamoto, M.: Initial value/boundary value problems for fractional diffusion-wave equations and applications to some inverse problems. J. Math. Anal. Appl. 382(1), 426–447 (2011)

[21] Shen, J., Tang, T., Wang, L.L.: Spectral Methods: Algorithms, Analysis and Applications, vol. 41. Springer, Berlin (2011)

[22] Shen, J.Y., Sun, Z.Z., Du, R.: Fast finite difference schemes for time-fractional diffusion equations with a weak singularity at initial time. East Asian J. Appl. Math. 8(4), 834–858 (2018)

[23] Sheng, C., Shen, J.: A hybrid spectral element method for fractional two-point boundary value problems. Numer. Math. Theory Methods Appl. 10(2), 437–464 (2017)

[24] Sheng, C., Shen, J.: A space-time Petrov–Galerkin spectral method for time fractional diffusion equation. Numer. Math. Theory Methods Appl. 11, 854–876 (2018)

[25] Stynes, M.: Too much regularity may force too much uniqueness. Fract. Calc. Appl. Anal. 19(6), 1554–1562 (2016)

[26] Stynes, M., O’Riordan, E., Gracia, J.L.: Error analysis of a finite difference method on graded meshes for a time-fractional diffusion equation. SIAM J. Numer. Anal. 55(2), 1057–1079 (2017)

[27] Sun, T., Liu, R.Q., Wang, L.L.: Generalised Müntz spectral Galerkin methods for singularly perturbed fractional differential equations. East Asian J. Appl. Math. 8(4), 611–633 (2018)

[28] Sun, Z.Z., Wu, X.: A fully discrete difference scheme for a diffusion-wave system. Appl. Numer. Math. 56(2), 193–209 (2006)

[29] Wu, S.L., Zhou, T.: Parareal algorithms with local time-integrators for time fractional differential equations. J. Comput. Phys. 358, 135–149 (2018)

[30] Yan, Y., Sun, Z.Z., Zhang, J.: Fast evaluation of the Caputo fractional derivative and its applications to fractional diffusion equations: a second-order scheme. Commun. Comput. Phys. 22(4), 1028–1048 (2017)

[31] Yan, Y., Khan, M., Ford, N.J.: An analysis of the modified L1 scheme for time-fractional partial differential equations with nonsmooth data. SIAM J. Numer. Anal. 56(1), 210–227 (2018)

[32] Zayernouri, M., Ainsworth, M., Karniadakis, G.E.: A unified Petrov–Galerkin spectral method for fractional PDEs. Comput. Methods Appl. Mech. Eng. 283, 1545–1569 (2015)

[33] Zayernouri, M., Karniadakis, G.E.: Fractional Sturm–Liouville eigen-problems: theory and numerical approximation. J. Comput. Phys. 252, 495–517 (2013)

[34] Zayernouri, M., Karniadakis, G.E.: Fractional spectral collocation method. SIAM J. Sci. Comput. 36(1), A40–A62 (2014)


This work is partially supported by the National Key Research and Development Program of China (Grant No. 2017YFB0701700). Beiping Duan gratefully acknowledges financial support from the Fundamental Research Funds for the Central Universities of Central South University (2016zzts015).


About this article

Cite this article

Duan, B., Zheng, Z. An Exponentially Convergent Scheme in Time for Time Fractional Diffusion Equations with Non-smooth Initial Data. J Sci Comput 80, 717–742 (2019). https://doi.org/10.1007/s10915-019-00953-y


DOI: https://doi.org/10.1007/s10915-019-00953-y

Keywords

- Multi-domain spectral method

- Jacobi polynomials

- Fractional power Jacobi functions

- Time-fractional diffusion equation