Abstract

Monotone finite difference methods provide stable convergent discretizations of a class of degenerate elliptic and parabolic partial differential equations (PDEs). These methods are best suited to regular rectangular grids, which leads to low accuracy near curved boundaries or singularities of solutions. In this article we combine monotone finite difference methods with an adaptive grid refinement technique to produce a PDE discretization and solver which is applied to a broad class of equations, in curved or unbounded domains which include free boundaries. The grid refinement is flexible and adaptive. The discretization is combined with a fast solution method, which incorporates asynchronous time stepping adapted to the spatial scale. The framework is validated on linear problems in curved and unbounded domains. Key applications include the obstacle problem and the one-phase Stefan free boundary problem.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this article we numerically approximate a class of nonlinear elliptic and parabolic PDEs using monotone finite difference methods. Finite difference methods are most easily implemented on regular, rectangular grids. In this article we combine the monotone finite difference methods with an adaptive quadtree grid, resulting in significantly improved accuracy near boundaries. The effectiveness of the method is demonstrated on the Laplace equation on curved and on unbounded domains. Key applications are the obstacle problem, and the Stefan Free Boundary problems.

Using the framework of nonlinear elliptic operators, we can combine the partial differential equation with the boundary conditions (or even free boundaries) into a single degenerate elliptic operator. This allows us to build adaptive discretizations and solvers using a unified framework, and to experiment with different grid adaptation strategies.

Adaptive finite difference methods have been used in a similar context in a variety of problems, but a not in a framework as general as this one. A review of data structures and implementation of sparse grids for Partial Differential Equations can be found in [2]. Many approaches using finite differences methods combine the popular level set method for tracking the boundary with a representation of the operator inside the boundary. A fourth order adaptive method for the heat equation and stefan equation can be found in [12]. Adaptive grids for the Stefan problem were used in [7]. Adaptive grid refinement combined with a level set representation of the free boundary was used for the Poisson–Boltzmann system in [15, 19].

An advantage of the finite difference implementation and the viscosity solution framework is that the conditioning of the solvers does not break down as the equation becomes degenerate. For example, fast solvers for the degenerate elliptic Monge-Ampere equation have been built, where the Newton’s method solver speed is (nearly) independent of the regularity of the solutions [10, 11]. These problems were solved on a uniform grid using wide stencil finite difference schemes, but the later article extended the problem to Optimal Transportation boundary conditions, where the source domain is irregular, and the target domain is convex [1]. However the anisotropy of the operator requires wide stencils for monotone discretizations, which are more challenging to implement on an adaptive grid.

In order to work with an adaptive grid, we need a refinement criteria. We take the point of view that the equation itself should provide this criteria. By writing the entire problem (including boundary conditions) as a single degenerate elliptic operator we are able to produce an effective refinement criteria.

The framework can be used for many purposes, including:

-

Artificial Boundary Conditions for problems in an unbounded domain. We use coarse grids in the exterior, and choose to adapt based on either the residual of the boundary conditions or the distance from a reference point in the domain.

-

Grid adaptation for PDEs on curved domains, using grid based discretizations.

-

Obstacle problems or one phase free boundary problems such as the Stefan problem.

-

Nonlinear iterative methods for stationary problems. On an adaptive grid, the iterations are asynchronous, so there the nonlinear CFL condition is locally determined.

1.1 The Framework of Degenerate Elliptic Operators

We consider the class of degenerate elliptic equations [6], which include first order equations, such as the eikonal equation, as well as fully nonlinear PDEs, such as the Monge-Ampere equation, and free boundary problems. Singularities can be present in the solutions to these equation, in particular at locations near the free boundary or where the equation changes types. For this reason, weak solutions, are needed, which are the viscosity solutions [6]. The theory of viscosity solutions is by now well-established. To prove convergence of the schemes, we require that that uniqueness hold for the underlying PDE. In most cases, this is covered by the standard theory. Classical solutions of the Stefan problem arise only under limited conditions [9]. For the one phase Stefan problem, uniqueness of viscosity solutions is established in [16].

Let \(\Omega \) be a domain in \({\mathbb {R}}^n,\) Du and \(D^2u\) denote the gradient and Hessian of u, respectively, and let F(X, p, r, x) be a continuous real valued function defined on \(\mathbb {S}^n\times {\mathbb {R}}^n\times {\mathbb {R}}\times \Omega \), \(\mathbb {S}^n\) being the space of symmetric \(n\times n\) matrices. Write

Definition 1.1

The operator F is degenerate elliptic if

where \(Y \le X\) means that \(Y - X\) is a nonnegative definite symmetric matrix.

If the operator F is degenerate elliptic, then we say the Partial Differential Equation on the domain \(\Omega \)

(along with, for example, Dirichlet boundary conditions, \( u(x) = g(x)\), or x on \(\partial \Omega \)) is as well. The initial-boundary value problem for the

is called degenerate parabolic, when the operator F is degenerate elliptic.

Example 1

(Examples of degenerate elliptic operators). The obstacle problem,

is degenerate elliptic. The Hamilton–Jacobi equation

is degenerate parabolic. The equation

is degenerate elliptic, provided \(c(x), d(x) \ge 0\).

1.2 Elliptic Finite Difference Methods

The class of finite difference methods (or equations) we focus on are called elliptic, [20]. They are a special class of monotone finite difference schemes which are automatically stable, and arise from a simple construction. Consistent elliptic schemes, since they are monotone and stable, converge, according to the theory presented in [5].

Finite difference equations can be defined on a general unstructured grid, regarded as a weighted, directed graph. In our case the adaptive finite difference grid has a natural data structure given by the quadtree, which is discussed below. But to define monotone schemes, we can consider the abstract setting. The unstructured grid on the domain \(\Omega \); is a directed graph consisting of a set of points, \(x_i \in \Omega , i = 1,\dots N\), each endowed with a list of neighbors, \(N(i) = (i_1, \dots , i_d)\). A grid function is a real-valued function defined on the grid, with values \(u_{i} = u(x_{i}).\) The finite difference operator is represented at each grid point by

where \({F}^i(x,y_1, \dots , y_d)\) is a specified, usually nonlinear, function of its arguments. The list of finite differences in the above expression can be regarded as the gradient of the function on the graph. The notation

was used in [18], so that we can write

This notation emphasizes the fact that a finite difference operator is local: it depends only on the value at the reference points, and the gradient of the function on the graph. (Second order finite differences come from combinations of first order differences; higher order differences are not needed). A solution is a grid function which satisfies \({F}[u] = 0\) (at all grid points). A boundary point can be identified as a grid point with no neighbors, so that Dirichlet boundary conditions can be imposed by setting \({F}^i[u] = u_i - g(x_i)\).

We now define degenerate elliptic operators.

Definition 1.2

The finite difference operator \({F}\) is degenerate elliptic if each component \({F}^i(x,y_1, \dots , y_d)\) is nondecreasing in each variable.

We emphasize that the scheme is a nondecreasing function of \(u_i\) and the differences \(u_i - u_j\) for neighbors j of i.

Remark 1.3

We now explain the reason for using degenerate elliptic schemes in this context. On a uniform grid, the standard discretization of the Laplacian operator is given, up to a constant, by the difference between u(x) and an average of the neighbors of u(x). On a non-uniform grid, the operator is given by a similar formula, except the average is replaced by a weighted average (see the discretizations in Sect. 3). Each of these discretizations are in the degenerate elliptic form. In addition, adding a constant term, which corresponds to the inclusion of a term f(x) (which does not depend on u maintains this form. Furthermore, we can take the maximum or minimum of two terms, and, since the max and min functions are non-decreasing in their arguments, this type of nonlinearity is still degenerate elliptic.

For the most of the discretizations we present below, we can use a clever combination of the Laplacian, and the maximum or minimum terms to produce a discretization which is degenerate elliptic. This means that we can appeal to the convergence theory for numerical schemes set out in the references above to ensure that the methods converge. The main new step in the discretization which has not be used in the preceding reference is the use of the irregular grids, which is explained in detail below.

1.3 Boundary Conditions and Far Field Boundary Conditions

Here we show how to include boundary conditions on more general (non-rectangular) domains, as well as far field boundary conditions. These boundary conditions are combined along with the elliptic PDE operator into a single (possibly discontinuous) elliptic operator, which is combined with a refinement criteria to perform the grid adaptation.

Example 2

Consider the Poisson equation \(-\Delta u = f\), with f supported on the unit ball and the far field boundary condition \(u \rightarrow 0\), as \(\Vert x\Vert \rightarrow \infty \). An adaptive grid allows us to capture fine details for x near 0 while reducing computational effort in the far field. The artificial boundary condition,

approximates the solution with accuracy \({\mathcal O}\left( \frac{1}{r^3}\right) \) [3].

Remark 1.4

(Characteristic functions on adaptive grids). Given a domain \(\Omega \subset {\mathbb {R}}^2\), defined the characteristic function of the set \(\Omega \) by

The characteristic function on a uniform grid was used in [20] to give a very coarse representation of a boundary (or free boundary). This representation leads to a piece-wise linear approximation of the boundary of the domain, by connecting the boundary grid points. On the adaptive grid, the we obtain a piece-wise linear approximation of the boundary, at difference grid scales, corresponding the spacing of the local grid points.

Example 3

Consider the Dirichlet problem for the domain \(\Omega \subset B = [0,1]^2\) in \({\mathbb {R}}^2\),

along with boundary conditions

Define the operator,

where \(\chi _S\) is the characteristic function of the set S. Note that since the characteristic functions are non-negative, this equation is of the form (1), so the operator is degenerate elliptic. Note also that the operator is discontinuous in x.

In Sect. 3, we present the discretization of the Laplace operator on the grid. In all cases, the approximation has the property that

where \(u(x_j)\) represents the neighbors of \(x_i\). (On a uniform grid, each \(w_j\) would be equal to \(1/h^2\), where h is the grid spacing.) This leads to a discretization of (3) in the form

with \(c(x), d(x) \ge 0\). It is degenerate elliptic according to Definition 1.2.

We can impose other (for example Neumann or Robin boundary conditions), by replacing the second term with

where H(Du(x), x) is itself a first order degenerate elliptic operator.

1.4 Including Free Boundaries in a Single Degenerate Elliptic Operator

The obstacle problem can be formulated as a variational inequality [14, 17], which is naturally discretized using finite element methods [4], and solved using a multigrid method [13].

Our approach of adaptive finite difference methods is natural for the obstacle problem, using a formulation of the problem as a degenerate elliptic PDE, however there are far fewer works which use this approach. Our framework leads to a simple, effective finite difference method which achieves good results using adaptive grids.

We describe here how to write a free boundary problem as a single degenerate elliptic operator.

Example 4

The obstacle problem, for a given obstacle function g(x), which requires that \(u(x) \ge g(x)\) and that

can be written as a single elliptic equation

As in the preceding example, we can discretization the Laplacian in the form (4) and obtain an equation of the form

which is degenerate elliptic according to Definition 1.2.

This example generalizes to double obstacle problems (using a maximum as well as a minimum), as well as obstacle problems involving nonlinear PDEs which replace the Laplacian [20].

Example 5

The evolution of the one-phase Stefan problem in two dimensions,

can be represented by the degenerate elliptic operator,

with

More general one phase free boundary problems can be represented as a single operator on the extended domain, as in [20]. Here we have extended from the free boundary to a larger domain, and we solve for the extended operator in the whole domain.

1.5 Comparison and Stability of Degenerate Elliptic Finite Differences Methods

Stability of degenerate elliptic equations is demonstrated in settings in the reference [20]. First, it is shown that there is an explicitly calculated time step so that the forward Euler method is a contraction in the maximum norm. Second, it is shown that the equation satisfies a nonlinear comparison principle. While the proof is detailed, some intuition for the results can come from the fact that we can regard the elliptic finite difference equation as expressing a nonlinear average, a point of view taken explicitly in [18]. We give a heuristic explanation of these ideas in this remark and refer the reader to the two cited references for more details.

First notice that it is too much to ask that our numerical schemes satisfy the maximum principle. Even for the equation \(-\Delta u = f\) in \(\Omega \) with Dirichlet boundary conditions \(u=g\) on \(\partial \Omega \), the maximum principle does not hold, unless we assume \(f \le 0\). The comparison principle takes the general form

where the notation \(h_1 \le h_2\) means \(h_1(x) \le h_2(x)\) for all x (the domain of definition of the functions is implicit). A more specific, and also more explicit form comes from writing \(u_1 = S(f_1, g_1)\) and \(u_2 = S(f_2, g_2)\) for the solutions of the equation with data \(f = f_1, g=g_1\) or \(f_2, g_2\), respectively. In this case, the comparison principle becomes

where \(u_1 = S(f_1,g_1), u_2 = S(f_2,g_2)\).

The discrete comparison principle holds for the numerical scheme, provided that the Laplacian is discretized using an elliptic scheme. This principle can be proved using the degenerate elliptic property for a general class of equations which satisfy mild analytical conditions on the operator [20] or directly from specific classes of equations without assuming analytical conditions [18]. Once the comparison principle is established, uniqueness of solutions of the schemes follows, since if \(f_1=f_2\) and \(g_1=g_2\), then \(u_1\le u_2\) and also \(u_1 \ge u_2\).

The actual proof of the comparison principle, as in the PDE setting, is a proof by contradiction. However, we include a plausibility argument which is gives an idea of the reason the local condition can lead to comparison, because it is instructive. Fix \(g_1= g_2\). Starting from (4) and solving for the reference variable, we obtain

where \(x_j\) represents neighbors of \(x_i\), and \(\sum _j w_j = \bar{w}\). This last equation expresses the fact that \(u(x_i)\) is a weighted average of its neighbors, plus a constant proportional to \(f(x_i)\). From this form of the equation, it is plausible that increasing \(f(x_i)\) does not decrease the value \(u(x_i)\), which leads to the comparison principle.

The argument which leads to a comparison principle does not depend on the fact that the equation is linear. So we make a parallel argument for a nonlinear elliptic PDE, with a elliptic finite difference discretization. Consider the example of the obstacle problem (5). Again the general comparison principle takes the form

which we write in the explicit form

where \(u_1 = S(g_1), u_2 = S(g_2)\).

The degenerate elliptic discretization was given in (6). Again, we will solve for the reference variable. Since we are looking for a solution, which corresponds to the right hand side equal to zero, we can divide each side of the equation by \(\bar{w} = \bar{w}_i\). Multiplying the second equation by \(\bar{w}\) also does not change the solution. Then we can pull out the term \(u(x_i)\) from the equation, and obtain the following equation

Whereas in the linear case, (7), \(u(x_i)\) was an affine function of an average of the values of u at the neighbors, and the data, now \(u(x_i)\) is the maximum of that same average and the data. In both cases, comparison is suggested by the fact that increasing any of the values of the neighbors or that data does not decrease \(u(x_i)\), and stability is suggested by the fact that increasing the values of just one of the neighbors of \(x_i\) can increase \(u(x_i)\) by at most that amount (and no more).

2 Adaptive Grid

Our adaptive grid is implemented using a quadtree representation [8, Chapter 14: Quadtrees]. Conceptually, the domain is divided into rectangular regions such that the side length of each neighboring rectangle is either twice, half, or the same as its neighbor. The collection of all vertices are the grid nodes for computing the unknown function. Internally the quadtree is represented as a sparse matrix where the indices of non-zero entries represent coordinates on a fixed ultra-fine grid.

Our implementation with sparse matrices in MATLAB. The tool is modular, and the inputs are simple: the discretization of the operator, F, and an additional operator, G, used as the refinement criteria, which can be intrinsic (simply setting \(G = F\)), or defined by the user. In addition, if Newton’s method is to be used as a solver, the formal Jacobian of the operator is needed, DF.

2.1 Quadtree Construction

A quadtree is uniquely determined by a list of coordinates and corresponding maximum length scales. Either there is a node at each coordinate with all neighbors within the specified distance, or the coordinate must lie within a rectangle no larger than indicated.

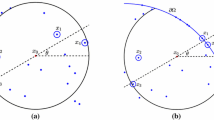

To discretize the Laplacian, we impose an additional ‘scale-padding’ constraints depending on the aspect ratio of the physical domain. Dangling nodes, vertices with three neighbors, occur midway along the shared edge of two equal-sized rectangles one of which is subdivided. The scale-padding constraint in x specifies the minimum number of equal-sized rectangles that must exist to both the left and right of a dangling node. Figure 1 illustrates a pair of quadtrees, the latter refined to observe the scale-padding constraints 2 in x and 1 in y.

To built a quadtree over a virtual ultra-fine grid of \(2^N+1\) by \(2^N+1\), we build a list of squares the quadtree must contain.

-

(1)

List all requested or required squares size \(2^k+1\) by \(2^k+1\).

-

(2)

Add siblings to the list. Fill to the edge, if needed to prevent a dangling node too close to the boundary.

-

(3)

List all parents. Expand list to satisfy scale-padding constraints.

-

(4)

Use grandparents to ensure the expanded list of parents includes all of their siblings. This is the list of all required squares size \(2^{k+1}+1\) by \(2^{k+1}+1\).

Given M requested coordinate-length scale pairs, this procedure is \({\mathcal O}\left( N M \log M\right) \).

2.2 Adaptivity

Any refinement scheme based on position and the local value of the function and its derivatives may be specified. A “refinement criteria” operator is computed at all current grid points and compared with a “refinement threshold”. The new grid must be refined to the finest available spacing at all nodes where the criteria exceeds the threshold.

For better control, the user may supply a non-decreasing array of refinement threshold values. Where the criteria exceeds the \(k^{th}\) threshold the new grid is refined to at least the \(k^{th}\)-finest scale.

The user may also supply a padding parameter, above that required for discretization of the Laplacian at dangling nodes. It is often convenient to give a simple refinement criteria and a large padding parameter.

All adaptive grids must include the fixed initial quadtree, which is determined by the placement of the initial data. The initial data is treated as scattered and linearly interpolated onto the smallest quadtree for which every supplied data-point appears as a node. We assume the placement of initial data implies the minimally acceptable spatial resolution.

3 Discretization on the Adaptive Grid

As mentioned above, degenerate elliptic schemes are easily built from the upwind schemes for derivatives and Laplacian. Our solvers expect the user to specify their operators in terms of these building blocks. For convenience, the discretization discussed here is kept in a black box.

We will consider regular nodes, dangling nodes, and boundary nodes separately. Dangling nodes are those with only three neighbors and occur midway along the edge of a rectangle that adjoins two half-size rectangles.

A regular node is the shared vertex of four rectangles. Consider the nearest neighbors \(u_E, u_W, u_N\) and \(u_S\) at distances \(\Delta _E, \Delta _W, \Delta _N\) and \(\Delta _S\) respectively, as in Fig. 2. The standard upwind discretizations are:

both accurate to first order. For the Laplacian operator we identify the nearest pairs of equidistant opposing nodes, \(u_{E'}\) and \(u_{W'}\), and \(u_{N'}\) and \(u_{S'}\), as in Fig. 2. The standard discretization for \(\partial _x^2u\),

is accurate to second order. Discretization using only nearest-neighbors is only accurate to first order.

For a dangling node, as in Fig. 3, we use the farther vertices of the larger square to interpolate a value for the unknown function directly opposite, then discretize as at a regular node. In the illustrated situation we would use,

This is a monotone discretization, accurate to first order since \(u_N\) and \(u_S\) are equidistant. Second derivatives are more difficult. There is no general upwind discretization for \(\partial _{x}^2u\) for the dangling node illustrated in Fig. 3.

At dangling nodes, we choose to discretize the laplacian using an I-shaped stencil as in Fig. 3. The following expansions are accurate to second-order:

Our discretized Laplacian is then:

This is a monotone discretization provided \(\Delta y \le \Delta x\). Should \(\Delta y > \Delta x\) we take a wider I-shaped stencil, as in Fig. 4. To ensure \(\Delta y \le {\text {pad}}_x\Delta x\), (or, \(\Delta x \le {\text {pad}}_y\Delta y\), for the other type of dangling node,) we choose,

These values reflect the aspect ratio of the domain in physical variables. The ‘scale-padding’ constraints when building the quadtree guarantee this wider stencil is available at all dangling nodes.

3.1 Boundary Nodes

At boundary nodes where Dirichlet boundary conditions are not provided, we implement generic Robin boundary conditions:

The functions A, B, C should be provided for each edge of the domain. User-friendly shortcuts for Neumann or Dirichlet conditions are provided.

Where \(A(x) = 0\), u(x) is specified. The node is considered inactive and is not updated by means of a logical mask. Where \(A(x) \ne 0\), we use the boundary conditions to determine the outward derivative and the regular discretization for the inward derivative. We discretize the second derivative by weighting the outward and inward derivatives equally, as in (9).

4 Numerical Solvers

In this section we discuss the approach to solve the nonlinear finite dimensional equations obtained by discretization of the elliptic or parabolic PDE on the adaptive grid.

In the case of a nonlinear parabolic (time-dependent) problem, \(u_t = F[u]\), it is natural to discretize using the Forward Euler method, which leads to

This discretization leads to the explicit iteration

For stability, there is a restriction on the time step dt, which is often proportional to \(dx^2\) for parabolic problems, where dx is the grid discretization parameter (for uniform grids). The theory developed in [20, Theorem 6] allows us to obtain an explicit value for the time step restriction which ensures stability in the maximum norm. In addition, we can use local values of this time step, which allows different time steps at different grid resolutions. In this manner, a global time step can be taken which corresponds to multiple iterations at small scales, and a single iteration at the largest scale.

When the Laplacian is discretized according to (4), where the weights \(w_i\) depend on the local grid, and are equal to \((\Delta _x)^2\) or \((\Delta _y)^2\) in the case of (9) and in the case of dangling nodes can be obtained from (11). In this case, the restriction on the time step at \(x_i\) is given by

The local dependence of the time step is encoded directly in the weight \(\bar{w}_i\). For nonlinear discretizations involving the Laplacian, such as the obstacle problem, given by (5), the local time step restriction is the same. In general, the local time step restriction is given by the local Lipschitz constant of the scheme, regarded as a function. So simply differentiating the scheme and taking an upper bound can given an acceptable time step. In some cases, this constant may depend on the initial data, as in the case of \(u_t = \vert u_x^2\vert \) where \(dt \le dx^2/\max _j {u^0_{j+1}-u^0_j}\) from [20, Section 4].

4.1 Details on the Time-Dependent Solver

Before time evolution, we apply the refinement scheme to the linear interpolation of the initial data and iterate to ensure infill of any coarse regions of the initial quadtree that are nevertheless of interest.

Nodes are separated according to the distance to their nearest neighbor. A nonlinear CFL condition is required to determine a characteristic time-scale for each group. The time-scales differ by powers of two. We list each group according to the inverse ratio of their characteristic time-scales and the coarsest time-scale. The result is randomly permuted before each time-step to produce a visitation schedule. To evolve by one time-step, all nodes in each group are simultaneously updated according to the visitation schedule.

This scheme is optimal in the sense that each group-update operation is of the order of the number of nodes in the group, and that no more updates occur than required by the nonlinear CFL condition.

4.2 Static Solver

In the case of elliptic equations, it is possible to find the solution by iterating the time dependent problem. This follows from the fact that the forward Euler method, with the restricted time step is a contraction, [20, Theorem 7]. However, this method is slow, since the time step can scale quadratically with the smallest spatial scale, \(dt = {\mathcal {O}}(dx^2)\), so the number of iterations to solve to a fixed time grows as the grid grows.

An effective alternative is to implement Newton’s method. We build the exact Jacobian of the discrete scheme, meaning the gradient of the scheme regarded as a function, which requires writing additional code to represent the Jacobian. In addition, since we are working in two dimensions, and the Jacobian is a sparse matrix, we can use direct solvers effectively. The Jacobian is sparse with the number of nonzero elements on the order of the number of nodes. We expect Gaussian elimination in \({\mathcal O}\left( M\log M\right) \) time, where M is the number of nodes. This results in a fast direct solver.

For further efficiency, we seek a solution at coarse scales before allowing refinement to finer scales. This corresponds to the first step in a V-cycle for a multigrid method. Starting with the initial quadtree we iterate Newton’s method until a stopping condition is reached. We then allow refinement up to the second-coarsest scale present in the initial quadtree as called for by the refinement criteria and threshold. We then seek another solution and repeat the process until all scales are allowed.

Facility for stopping criteria and thresholds are similar to those for refinement. When allowing refinement to the \(k^{th}\)-finest scale, iteration of Newton’s method continues until the stopping criteria is less than the \(k^{th}\) stopping threshold at all nodes. The default stopping criteria is the \(L^\infty \) norm of the elliptic operator.

Note that when seeking a solution over a fixed multi-scale grid it is more efficient to define the multiscale grid through the refinement criteria and provide initial data only on the coarse grid.

5 Computational Examples

In this section we present numerical results, which show the validity and performance of the method, and allow for us to demonstrate the effectiveness of different refinement strategies.

5.1 Artificial Boundary Conditions

We are in the setting of Example 2: the Poisson equation \(-\Delta u = f\), with f supported on the unit ball and \(u \rightarrow 0\), as \(\Vert x\Vert \rightarrow \infty \). Set

where \((1-r)^+ = \max (1-r,0)\), and impose the artificial boundary condition, \(\partial _r u + \frac{u}{r} = 0\) at the boundary of the computational domain. Set the domain to be a square domain with side length \(2\times 10^3\). The refinement criteria: the grid should be finest for \(r<1\).

A detailed view of the solution in the near field can be see in Fig. 5a. The layout of the grid at large scale can be seen in Fig. 5b. Table 1 outlines the allocation of computing resources and nodes by region. Broadly speaking, the adaptive grid allows us to compute on a very large domain, with a computational cost on the order of (within a couple multiples of) restricting to the unit square.

5.2 Irregular Domains

Consider a problem of the type Example 3, where the Dirichlet problem is posed on an irregular domain \(\Omega \), contained in a rectangle. For the Poisson equation with Dirichlet Boundary conditions we use the operator \(F^{bc}\).

The refinement criterion used was based on the combination of residual of the operator and the proximity to the boundary. An example of what can be accomplished is shown in Fig. 6. Notice that this leads to a maximal refinement in two blobs near the boundary, near local extreme points of the solution (red, where the solution value is near 60 and blue, where the solution value is near \(-\)100), while other areas near the boundary have a relatively coarse grid (yellow, where the solution is near 0).

To impose Neumann or Robin Boundary conditions, we apply the boundary conditions for grid points near the boundary, and further away, simply impose \(u=0\). As an example, Fig. 7 presents results for the Laplace equation with homogeneous Dirichlet boundary conditions on the boundary of the unit square, combined with inhomogeneous Neumann boundary conditions

The adaptive grid was determined by the slope of the solution combined with proximity to the interior boundary.

5.3 Obstacle Problems

We are in the setting of the obstacle problem, Example 4, represented by the operator \(F^{obs}\).

The obstacle \(g(r,\theta )=r^2\cos ^2(\theta )\), multiplied by a factor of \(2\sin ^2(\pi y)\) for \(x<0\), and by \(\exp (-r)\) for \(r>\frac{1}{4}\). The obstacle and solution are shown in Fig. 8.

The problem was solved using different refinement criteria, defined as follows. The contact contour determined in all three cases was virtually indistinguishable.

-

As a baseline method, we used a simple predetermined (non-adaptive) grid criteria. The finest grid resolution is specified by the distance to the local maxima \(x_1, x_2, x_3\) of the obstacle (since the contact set is unknown).

$$\begin{aligned} G(x) \text { determined by }( \text {dist}(x, \{ x_1, x_2, x_3 \} )) \end{aligned}$$In this case, the solution was found using nonlinear multigrid, allowing a progressively finer grid each time the residual drops below a threshold.

-

The free boundary-determined grid criteria specifies the finest grid resolution at nodes where both terms F[u] and \(u-g\) are close to zero.

$$\begin{aligned} G^T[u] \text { determined by } \min ( \vert \Delta u \vert , \vert u - g\vert ) \end{aligned}$$The resulting grid provides the most refinement near the boundary of the contact set, as seen in Fig. 9.

-

We also chose to refine the grid at nodes where the absolute value of operator \(F^{obs}[u] = \min (-\Delta [u], u-g(x) )\) exceeds a threshold,

$$\begin{aligned} G^F[u] \text { determined by } \vert F^{obs}[u] \vert . \end{aligned}$$Notice in this case that the scaling of the two terms are different: the Laplacian scales like \(1/h^2\) while the obstacle term has no scaling in h. The resulting grid is very similar to the previous one, but with more refinement inside the contact set (which corresponds to capturing details of g(x)), see Fig. 9. More Newton iterates were performed for the operator-determined grid, however these are often performed when the grid is still coarse resulting in overall slightly better performance.

The relative performance of the different refinement methods is given in Table 2.

5.4 Stefan Free Boundary Problems

We are now in the context of Example 5, where the Stefan problem is represented by the single operator \(F^{Stef}\).

We take initial data corresponding to a function with three local maxima. To test the effectiveness of different grid adaptation strategies we solve the equation using:

-

a uniform coarse grid,

-

a uniform fine grid,

-

adapting the grid according to the size of the operator, \(G^F[u] = \vert F^{Stef[u]}\vert \),

-

adapting the grid according to the size of both terms in the operator \(G^T[u] = \min (\vert \Delta u\vert , \vert \nabla u\vert ^2)\).

The reason for choosing the term adapted refinement \(G^T\) comes from assuming that most of the accuracy of the solution comes from the accuracy of the free boundary: the term \(G^T\) refines near the free boundary. The operator-adapted refinement is intrinsic, it also scales correctly in terms of the grid, since both terms are order \(1/h^2\).

Figure 10 shows the observed computational complexity and outlines the \(L^\infty \) proximity of the three methods to the fine-grid solution. By this metric, using \(G^F\), the operator-adaptive grid, is superior to using \(G^T\), the both terms adapted grid. On closer inspection, Fig. 11 shows the \(G^T\) grid is finest near \(\partial \{u=0\}\), as desired, but that the solution is evolving slowly in this region. The operator \(F^{Stef}\) is relatively small near the boundary. By comparing the implied boundary-curves, Fig. 12 demonstrates clearly that the operator-adapted grid is a better strategy, because the accuracy of the location of the free boundary is significantly better in this case.

6 Conclusions

We introduced a general framework for bringing adaptive grid and solvers to bear on a class of degenerate elliptic and parabolic Partial Differential Equations, which allows for the incorporation of free boundary problems, irregular and unbounded domains, along with adaptive grid refinement. We have demonstrated the significant improvement of solution accuracy and solution time, as compared to methods on regular grids.

The adaptive grid overcomes the low accuracy of the finite difference method where curved boundaries are involved. This includes the free boundaries which arise in the obstacle problem, or the Stefan problem. By incorporating the boundary conditions etc into the operator, we can define a global residual, which included errors from the geometry as well as form the error. Using this criteria we developed a grid refinement criteria which resulted in improvements over the other methods.

Other applications of the framework which are easily implemented include:

-

First order equations, such as the eikonal equation in either bounded or unbounded domains.

-

Visibility problems, with refinement near essential small features of the obstructions

-

Optimal or stochastic control problems with refinement at switching regions, so long as the second order operator can be discretized on the grid.

-

One phase free boundary problems, such as Hele-Shaw.

References

Benamou, J.-D., Froese, B.D., Oberman, A.M.: Numerical solution of the optimal transportation problem using the Monge–Ampère equation. J. Comput. Phys. 260, 107–126 (2014)

Bungartz, H.-J., Griebel, M.: Sparse grids. Acta Numer. 13, 147–269 (2004)

Bayliss, A., Gunzburger, M., Turkel, E.: Boundary conditions for the numerical solution of elliptic equations in exterior regions. SIAM J. Appl. Math. 42(2), 430–451 (1982)

Brezzi, F., Hager, W.W., Raviart, P.-A.: Error estimates for the finite element solution of variational inequalities. Numer. Math. 28(4), 431–443 (1977)

Barles, G., Souganidis, P.E.: Convergence of approximation schemes for fully nonlinear second order equations. Asymptot. Anal. 4(3), 271–283 (1991)

Crandall, M.G., Ishii, H., Lions, P.-L.: User’s guide to viscosity solutions of second order partial differential equations. Bull. Am. Math. Soc. (N.S.) 27(1), 1–67 (1992)

Chen, H., Min, C., Gibou, F.: A numerical scheme for the stefan problem on adaptive cartesian grids with supralinear convergence rate. J. Comput. Phys. 228(16), 5803–5818 (2009)

De Berg, M., Van Kreveld, M., Overmars, M., Schwarzkopf, O.C.: Computational Geometry. Springer, New York (2000)

Daskalopoulos, P., Lee, K.-A.: All time smooth solutions of the one-phase stefan problem and the Hele–Shaw flow. Commun. Partial Differ. Equ. 29(1–2), 71–89 (2005)

Froese, B.D., Oberman, A.M.: Fast finite difference solvers for singular solutions of the elliptic Monge–Ampère equation. J. Comput. Phys. 230(3), 818–834 (2011)

Froese, B.D., Oberman, A.M.: Convergent filtered schemes for the Monge–Ampère partial differential equation. SIAM J. Numer. Anal. 51(1), 423–444 (2013)

Gibou, F., Fedkiw, R.: A fourth order accurate discretization for the laplace and heat equations on arbitrary domains, with applications to the stefan problem. J. Comput. Phys. 202(2), 577–601 (2005)

Gräser, C., Kornhuber, R.: Multigrid methods for obstacle problems. J. Comput. Math. 27(1), 1–44 (2009)

Glowinski, R.: Numerical Methods for Nonlinear Variational Problems, vol. 4. Springer, New York (1984)

Helgadóttir, Á., Gibou, F.: A Poisson–Boltzmann solver on irregular domains with neumann or robin boundary conditions on non-graded adaptive grid. J. Comput. Phys. 230(10), 3830–3848 (2011)

Kim, I.C.: Uniqueness and existence results on the Hele–Shaw and the stefan problems. Arch. Ratl. Mech. Anal. 168(4), 299–328 (2003)

Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications, vol. 31. SIAM, Philadelphia (2000)

Manfredi, J.J., Oberman, A.M., Svirodov, A.P.: Nonlinear elliptic partial differential equations and p-harmonic functions on graphs. arXiv preprint arXiv:1212.0834 (2012)

Mirzadeh, M., Theillard, M., Gibou, F.: A second-order discretization of the nonlinear Poisson–Boltzmann equation over irregular geometries using non-graded adaptive cartesian grids. J. Comput. Phys. 230(5), 2125–2140 (2011)

Oberman, A.M.: Convergent difference schemes for degenerate elliptic and parabolic equations: Hamilton–Jacobi equations and free boundary problems. SIAM J. Numer. Anal. 44(2), 879–895 (2006). (electronic)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Oberman, A.M., Zwiers, I. Adaptive Finite Difference Methods for Nonlinear Elliptic and Parabolic Partial Differential Equations with Free Boundaries. J Sci Comput 68, 231–251 (2016). https://doi.org/10.1007/s10915-015-0137-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-015-0137-x