Abstract

The Genvar criterion, proposed by Steel, is one of the important generalizations of canonical correlation analysis. This paper deals with iterative methods for the Genvar criterion. An alternating variable method is analysed and an inexact version of it is proposed. Two starting point strategies are suggested to enhance these iterative algorithms. Numerical results show that, these starting point strategies not only can improve the rate of convergence, but also boost up the probability of finding a global solution.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Since Hotelling [1] proposed canonical correlation analysis (CCA) as the method for describing the relation between the scores of a set of observation units on two groups of variables, CCA has become an important method in numerous applications, including cluster analysis, principal component analysis, multidimensional scaling, pattern recognition, artificial intelligence, and bioinformatics. Several generalizations of canonical correlation analysis for multiple-sets of variables have been proposed by Steel [2], Kettenring [3], Horst [4], Van de Geer [5], Hanafi and Kiers [6], Via et al. [7, 8], and other scholars. Among the five CCA criteria in [3], the sum of correlation (SUMCOR) or its covariance-based version called Maxbet in [5] is the most popular one in practical applications and has been extensively studied. In this paper, we shall concern ourselves with the Genvar criterion originally proposed by Steel [2], which can be introduced briefly as follows.

Let \(y_i=\left( \begin{array}{c} y_{i,1}\\ \vdots \\ y_{i,n_i} \end{array} \right) \), \(i=1,\ldots ,m\) be m-sets of random variables. Suppose the covariance matrix A of \(y=\left( \begin{array}{c} y_1\\ \vdots \\ y_m \end{array} \right) \) exists and is given by

Here \(\varSigma _{ij}\) is the covariance between \(y_i\) and \(y_j\), and we suppose all of the covariance matrix \(\varSigma _{ii}\) of \(y_i\) are invertible. Then the correlation matrix of \(y=\left( \begin{array}{c} y_1\\ \vdots \\ y_m \end{array} \right) \) is given by

Here \(\varSigma _{ii}^{\frac{1}{2}}\) denotes the square root of \(\varSigma _{ii}\).

Let

The Genvar criterion proposed by Steel [2] is to find a minimizer of the following optimization problem

Because \(\varSigma _m\) is a bounded and closed set in \(\mathbb {R}^n\), \(\det (R(t))\) is a continuous function, we see that the solution of Genvar always exists. One important feature of the Genvar criterion is that it reduces to Hotelling’s CCA when the number of sets is only two. Another feature of Genvar is that it is related to the multi-normal negative log-likelihood [2, 9]. Its covariance-based version is closely related to the Box–Cox approach in regression [9]. In [10], some interesting statistical properties of Genvar are proved. In the recent paper [11], the authors point out that the multi-feature fusion method based on SUMCOR is difficult to reveal integral relation among multi-set variables, so they suggest applying Genvar to extract multi-set integral canonical correlation features. In the present paper, we mainly focus on the computational issue of Genvar. The Genvar criterion is a constrained nonlinear optimization problem and can only be solved by means of iteration. General-purpose iterative methods (see, e.g., [12]) can be employed. However, these methods stop at a stationary point, which serves only as a necessary condition for the global minima of Genvar. For statistical applications on the other hand, a global solution is quite desirable, which has substantial importance for the reliability of the statistical prediction. In fact, finding a global solution of the general Genvar is a very hard problem because a particular Genvar where \(m=n\) is equivalent to the so-called Boolean quadratic program, which is long-known to be computationally difficult and belongs to the class of NP-hard problems (see e.g., [13]). To tackle Genvar, Steel [2] using a compound matrix approach developed a system of nonlinear equations. These equations seem to be quite difficult to solve except in special cases. Kettenring [3] outlined an alternating variable method (AVM, see Algorithm 2.2 in Sect. 2), which is a descent-type iterative procedure. To our best knowledge, this is the state-of-art algorithm for Genvar. The AVM algorithm has several disadvantages. Numerical experiment indicates that AVM depends closely on the starting point, and unless it is specially selected, the AVM algorithm has a high probability to stop at a local but non-global minima of Genvar. In addition, for some cases, the AVM algorithm with random starting point converges very slowly. In the present paper, we will numerically demonstrate the performance of AVM and present some theoretical results. An inexact version of AVM is presented. Two starting point strategies are suggested to improve these iterative methods in both reducing the number of iterations and boosting up the probability of finding a global solution of (1.4).

This paper is organised as follows. Section 2 focuses on the optimality conditions for (1.4), and suggests the Gauss–Seidel method. We present upper and lower bounds of the optimal objective function value in Sect. 3. In Sect. 4, we suggest two starting point strategies. We present numerical test results in Sect. 5 and followed by some remarks in Sect. 6.

2 Optimality Conditions

Let \(R_i(t)\) denote the matrix obtained by deleting the i-th row and column of R(t). We note that \(R_i(t)\) is independent of \(t_i\).

Let

Theorem 2.1

Suppose \(t\in \varSigma _m\) is a solution of (1.4). Then there exist real numbers \(u_1,\ldots ,u_m\) such that

Proof

Consider the Lagrangian function of (1.4)

We see that

Therefore,

Consequently,

Here \(\varOmega \equiv diag\left( u_1I_{n_1},\ldots ,u_mI_{n_m}\right) .\) It follows that (2.1) holds. \(\square \)

We note that (2.1) can be rewritten as

and \(G_i\) and \(R_i(t)\) are independent of \(t_i\), but depend on \(t_j(j\ne i)\). Hence, naturally, we suggest the following Gauss–Seidel method to solve (2.3).

Algorithm 2.1

(Gauss–Seidel type method, G–S method)

-

(1)

Take \(t^{(0)}=\left( \begin{array}{c} t_1^{(0)}\\ \vdots \\ t_m^{(0)}\\ \end{array} \right) \in \varSigma _m.\)

-

(2)

Suppose that the current approximate point is \(t^{(k)}=\left( \begin{array}{c} t_1^{(k)}\\ \vdots \\ t_m^{(k)}\\ \end{array} \right) \in \varSigma _m\). Then compute \(t^{(k+1)}\) as follows.

$$\begin{aligned} y_i^{(k)}= & {} G_i\left( z^{(i)}\right) ^TR_i\left( z^{(i)}\right) ^{-1}G_i\left( z^{(i)}\right) t_i^{(k)},\nonumber \\ u_i^{(k)}= & {} \parallel y_i^{(k)}\parallel _2,\nonumber \\ t_i^{(k+1)}= & {} \frac{1}{u_i^{(k)}}y_i^{(k)}. \end{aligned}$$(2.4)Here

$$\begin{aligned} z^{(i)}=\left( t_1^{(k+1)^T},\ldots ,t_{i-1}^{(k+1)^T},t_i^{(k)^T},\ldots ,t_m^{(k)^T}\right) ^T,\quad i=1,2,\ldots ,m. \end{aligned}$$ -

(3)

If \(\mid \det (R(t^{(k+1)}))-\det (R(t^{(k)}))\mid \leqslant \varepsilon \), then stop the algorithm with the approximate solution \(t^{(k+1)}\).

The expression (2.2) of the objective function motivates us to employ an alternating variable method (AVM) to solve (1.4), which is a natural extension of the so-called coordinated descent method [12, p. 53] and is described in Algorithm 2.2.

Algorithm 2.2

(Alternating variable method [3], AVM)

-

(1)

Take \(t^{(0)}=\left( \begin{array}{c} t_1^{(0)}\\ \vdots \\ t_m^{(0)}\\ \end{array} \right) \in \varSigma _m.\)

-

(2)

Suppose that the current approximate point is \(t^{(k)}=\left( \begin{array}{c} t_1^{(k)}\\ \vdots \\ t_m^{(k)}\\ \end{array} \right) \in \varSigma _m\). Then compute \(t^{(k+1)}\) by

$$\begin{aligned} t^{(k+1)}_i=\arg \min \limits _{\parallel t_i\parallel _2=1}\det \left( R(t_1^{(k+1)},\ldots ,t_{i-1}^{(k+1)},t_i,t^{(k)}_{i+1},\ldots ,t_m^{(k)})\right) ,\quad i=1,2,\ldots ,m.\nonumber \\ \end{aligned}$$(2.5) -

(3)

If \(\mid \det (R(t^{(k+1)}))-\det (R(t^{(k)}))\mid \leqslant \varepsilon \), then stop the algorithm, and \(t^{(k+1)}\) is an approximate solution.

This algorithm was outlined by Kettenring [3]. We will present some analysis below and numerically demonstrate its performance in Sect. 5.

Let

We see that

Thus it is very clear that the sequence \(\{\rho (t^{(k)})\}\) is monotonically non-increasing and bounded. This directly implies the convergence of \(\{\rho (t^{(k)})\}\).

Let

Then (2.2) implies that (2.5) is equivalent to the following optimization problem:

and its solution \(t_i^{(k+1)}\) is the unit eigenvector according to the largest eigenvalue of \(G_i^{(k)T}R_i(z^{(i)})^{-1}G_i^{(k)}\). There are several efficient algorithms to compute \(t_i^{(k+1)}\), see e.g., [14].

Next, we will show Algorithm 2.1 is closely relating to Algorithm 2.2. Indeed, we will apply iterative majorization principle(see, e.g., [15]) to (2.7) to demonstrate that Algorithm 2.1 is an inexact version of Algorithm 2.2. To this end, let

Then, \(M_{ik}\) is a symmetric and positive semi-definite matrix, \(g(t_i)\) is a convex function. We note that

Noticing that \(g_i(t_i^{(k)})=h_i(t_i^{(k)})\), (2.7) can be approximately solved by

Here \(y_i^{(k)}=M_{ik}t_i^{(k)}\).

We see that (2.9) is same as (2.4). Therefore, Algorithm 2.1 can be seen as an inexact version of the AVM algorithm. From (2.8), we see that the sequence \(\{t^{(k)}\}\) generated by Algorithm 2.1 has the following property:

That is, Algorithm 2.1 is also a descent algorithm. Furthermore, we see that \(\rho (t^{(k)})=\rho (t^{(k+1)})\) if and only if there exists \(u_i^{(k)}\ge 0\) to satisfy

In other words, for Algorithm 2.1, \(\rho (t^{(k)})=\rho (t^{(k+1)})\) if and only if \(t^{(k)}\) is a stationary point of the Genvar criterion.

Algorithms 2.1 and 2.2 mainly deal with the first-stage of Genvar. That is, a first-stage canonical vector, \(s^{(1)}\), is obtained. We can expand these algorithms successively to later stages as follows. Suppose we have obtained the first \(p-1\) canonical vectors \(s^{(i)}=\left( \begin{array}{c}s^{(i)}_1\\ \vdots \\ s^{(i)}_{m}\end{array}\right) \in \varSigma _m,\quad i=1,2,\ldots ,p-1;\ \ p\le \min \limits _{1\le i\le m}n_i\). To compute the p-stage canonical vector \(s^{(p)}=\left( \begin{array}{c}s^{(p)}_1\\ \vdots \\ s^{(p)}_{m}\end{array}\right) \), we consider the following optimization problem

Let \(Q^{(1)}_i=[s^{(1)}_i,\ldots ,s^{(p-1)}_i]\) and let \(Q_i=[Q^{(1)}_i,X_i]\) be an \(n_i\times n_i\) orthogonal matrix. Let

The constraints in (2.11) mean that \(t_{i}\) can be expressed as \(t_i=X_i\tilde{t}_i\) and so we consider the following optimization problem

Suppose \(\tilde{t}=\left( \begin{array}{c}\tilde{t}^{(p)}_1\\ \vdots \\ \tilde{t}^{(p)}_{m}\end{array}\right) \) is one solution of (2.12), then we see that \(s^{(p)}=\left( \begin{array}{c}s^{(p)}_1\\ \vdots \\ s^{(p)}_{m}\end{array}\right) \) with \(s^{(p)}_i=X_i\tilde{t}_i^{(p)}(i=1,2,\ldots ,m)\) is the p-stage canonical vector of Genvar. Obviously, we can apply Algorithms 2.1 and 2.2 to solve problem (2.12).

3 Upper and lower bounds of the optimal objective function value

In this section, we present some upper and lower bounds of the optimal objective function value of (1.4). Throughout this section we suppose R is an invertible matrix (then symmetric and positive definite matrix), and therefore can be factorized as

Clearly, \(P_j^TP_j=I_{n_j}\quad \ (j=1,2,\ldots ,m)\).

Let

Then, we see that

Let

The following auxiliary result presents a least squares expression of \(1-a_m^TR_m(t)^{-1}a_m\).

Lemma 3.1

Proof

The solution of the least squares problem (3.3) is

Consequently,

\(\square \)

Now, we present a lower bound of \(\min \limits _{t\in \varSigma _m}det(R(t))\). Let

Then,

Theorem 3.1

Let \(H_k=[P_1,\dots ,P_k]\), then,

Proof

From (3.5), we see that

Recursively, it follows that (3.6) holds. \(\square \)

An upper bound of \(\min \limits _{t\in \varSigma _m}\ \det (R(t))\) is given below.Let

Theorem 3.2

Proof

Without loss of generality, we suppose that

Let \(t_{2s-1}\) and \(t_{2s}\) be respectively the unit right and left singular vectors corresponding to the largest singular value of \(R_{2s-1,2s}\). Then

The Hadamard inequality implies that

\(\square \)

From the view point of practical application, the covariance matrix A usually is unknown, we should replace A by a sample covariance matrix, and consequently a perturbed correlation matrix R is applied in Genvar.

If \(\rho _{min}\equiv \min \limits _{t\in \varSigma _m}\det \left( R(t)\right) \) is very small, then the perturbation of R may result in a large relative perturbation of \(\rho _{min}\). The inequality (3.7) means that if any among \(\rho ^{(s)}\) is close to 1 then \(\rho _{min}\) will be small. We note that \(\rho ^{(s)}\) is the canonical correlation between \(y_{2s-1}\) and \(y_{2s}\)[1]. Furthermore, by applying the interlacing property of eigenvalues for real symmetric matrix, it is easy to see that \(1-\rho ^{(s)}\) is an upper bound of the smallest eigenvalue of R.

4 Starting point strategies

Although enjoying the monotone convergence, neither of Algorithms 2.1 and 2.2 can guarantee convergence to a global solution of (1.4). So in this section we consider a preconditioning technique to improve the performance of Algorithms 2.1 and 2.2. The idea of the preconditioning technique is to choose a good approximate solution \(t^{(0)}\) of (1.4) as a starting point for both algorithms. Since Algorithms 2.1 and 2.2 are monotone, and

we see that if \(\rho (t^{(0)})\) has been close to the optimal objective function value of Genvar, then (4.1) means that the sequence \(\{\rho (t^{(i-1)})-\rho (t^{(i)})\}\) will quickly converges to zero, and therefore the special selection of \(t^{(0)}\) increases the rate of convergence. On the other hand, if \(t^{*}\) is an accumulation point of \(\{t^{(k)}\}\), then

This result implies that some stationary points of (1.4) can be ruled out from the limiting points of Algorithms 2.1 and 2.2. In this sense, a good starting point \(t^{(0)}\) can boost up the probability of finding a global solution.

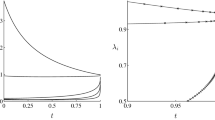

Let the eigenvalues of R(t) be \(\lambda _1(t)\ge \ldots \ge \lambda _m(t)\). Then

Noticing that the solution of the maximization problem \(\max \limits _{{s.t.s_1+\cdots +s_m=m}}\prod \limits _{j=1}^ms_j\) is \(s_1=\cdots =s_m=1\), we consider two starting point strategies as following, in which the aim is to make \(\lambda _1(t)\) as large as possible (Strategy 4.1) or to make \(\lambda _m(t)\) as small as possible (Strategy 4.2).

Strategy 4.1

Compute the eigenvector \(y=\left( \begin{array}{c} y_1\\ \vdots \\ y_m \end{array} \right) \) corresponding to the largest eigenvalue \(\lambda _{max}(R)\) of R, then

Here, \(u_i\in \mathbb {R}^{n_i}\), \(\parallel u_i\parallel _2=1\).

Strategy 4.2

Compute the eigenvector \(z=\left( \begin{array}{c} z_1\\ \vdots \\ z_m \end{array} \right) \) corresponding to the smallest eigenvalue \(\lambda _{min}(R)\) of R, then

Here, \(\nu _i\in \mathbb {R}^{n_i}\), \(\parallel \nu _i\parallel _2=1\).

We note that the following results have been proved by Kettenring [3].

These results imply that \(t^{(0)}\) generated by Strategy 4.1 is a solution of the so-called Maxvar, and \(t^{(0)}\) generated by Strategy 4.2 is a solution of the so-called Minvar. Since both Maxvar and Minvar are generalizations of CCA, and Genvar also is a generalization of CCA, intuitively, \(t^{(0)}\) should be a good approximate solution of the Genvar criterion.

If \(\lambda _{min}(R)\) is small, then employ Strategy 4.2, however, if \(\lambda _{max}(R)\) is large, then employ Strategy 4.1. we now present some estimates of \(\lambda _{max}(R)\) and \(\lambda _{min}(R)\).

Proposition 4.1

Let \(Rx=\lambda _{max}(R)x\), \(\parallel x\parallel _2=1\), \(x=\left( \begin{array}{c}x_1\\ \vdots \\ x_m\end{array}\right) \), \(x_j\in \mathbb {R}^{n_j}\). Then

Proof

Consider the decomposition of R (block Cholesky decomposition)

Then,

\(\square \)

The proof of Proposition 4.1 shows that, unless \(L_jx_j\approx L_1x_1,\ \ j=2,\ldots ,m\), \(\lambda _{max}(R)\) is not very close to m. This seems to imply that usually Strategy 4.2 is better than Strategy 4.1. The following result is a consequence of interlacing property of eigenvalues of real symmetric matrix.

Proposition 4.2

Here, \(\rho _{max}=\max \limits _{i\ne j}\parallel R_{ij}\parallel _2\).

5 Numerical Examples

In this section, we present our numerical experiment of Algorithms 2.1 and 2.2 with Strategies 4.1 and 4.2 to show their efficiency, and most importantly, the effectiveness of Strategies 4.1 and 4.2 both in reducing the number of iterations and in seeking the global minima of Genvar. All of our tests were conducted in MATLAB on a PC with Intel(R) Pentium(R)4 processor of 3.20 GHZ. The defaults value of \(\varepsilon \) is \(10^{-5}\).

For our comparison, we first create two small examples, Examples 5.1 and 5.2. Then, in Examples 5.3 and 5.4, the matrices are of size \(100\times 100\), which were randomly generated, and we ran both algorithms for each matrix starting from 100 random initial points. The test results are documented in Tables 1, 2 and 3, respectively. Under columns “\(Avg.Iter\sharp \)” are the average numbers of iterations needed to meet the stopping criterion. Under columns ”Avg.Time” are the average CPU times (in seconds, measured by the MATLAB function cputime) including the cost of computing initial point. Under columns “\(\bar{\rho }_{Genvar}\)” are the average objective function values. Under columns “\(Total\ Global\%\)” are the sample probabilities, out of all converged objective function values for fixed A, of hitting the best we’ve seen.

First, we list the related algorithms below:

Example 5.1

The matrix X is given by:

We centered each column of X and got a matrix \(\hat{X}\). Then, the matrix A was formed by \(A=\hat{X}^T\hat{X}\). Here, \(m=4, n_1=4, n_2=3, n_3=4, n_4=3\). This example, which is based on data from sensory evaluation of port wines, had been used by Hanafi and Kiers [6].

Example 5.2

The matrix A is given by

with \(m=3, n_1=3, n_2=3, n_3=4\).

The numerical results of Examples 5.1 and 5.2 are documented in Table 1. These results show that for these examples Strategies 4.1 and 4.2 improved the performance of Algorithms 2.1 and 2.2 in terms of iteration numbers and CPU time.

Example 5.3

In this example, we randomly generated 100 matrices of size \(100\times 100\), with \(m = 4,\ n_1=10,\ n_i=n_1+(i-1)10,\quad i=2,3,4\). These matrices were constructed by the following Matlab code.

For each matrix A, we ran Algorithms 2.1 and 2.2 starting from 100 randomly generated initial points. Thus for each A, we obtained 204 objective function values. The numerical results are documented in Table 2.

The results in Table 2 show that Strategy 4.2 significantly improved the performance of Algorithms 2.1 and 2.2 in terms of iterative steps, CPU time and “Total Global”. Strategy 4.2 is much better than Strategy 4.1. In addition, for this example, Algorithm 2.1 is faster than Algorithm 2.2.

Example 5.4

In this example, we randomly generated 100 matrices, with \(m = 4, n_1=10, n_i=n_1+(i-1)10,i=2,3,4\). These matrices were constructed by the following Matlab code.

The numerical results are documented in Table 3.

The results in Table 3 show that Strategy 4.1 significantly improved the performance of Algorithms 2.1 and 2.2 in terms of iterative steps, CPU time and “Total Global”. Strategy 4.1 is much better than Strategy 4.2.

Summarizing the results of Examples 5.1-5.4, we can make several observations as follows.

-

(i)

Generally, both AVM and Gauss–Seidel methods converge to the global minima with a low probability if the starting point is randomly selected.

-

(ii)

Strategies 4.1 and 4.2 can significantly improve these iterative methods in terms of iterative steps and CPU time.

-

(iii)

Strategy 4.1 and/or Strategy 4.2 can significantly boost up the probability of getting a “better” solution of Genvar.

-

(iv)

The Gauss–Seidel method frequently converges faster than the AVM in term of CPU time.

6 Final Remarks

In this paper, the AVM method proposed by Kettenring for the Genvar criterion is analysed. An inexact version of AVM, the Gauss–Seidel method, is presented. We suggest two starting point strategies to improve these iterative methods in terms of rate of convergence and the probability of finding a global solution of Genvar.

We ran Examples 5.3 and 5.4 for large m. The results show that, as m increases, the efficiency of Strategies 4.1 and 4.2 descend. Hence, how to construct other starting point strategy is a future topic. We hope the results in Sect. 3 can enlighten us on this topic.

References

Hotelling, H.: Relations between two sets of variables. Biometrika 28, 321–377 (1936)

Steel, R.G.D.: Minimum generalized variance for a set of linear functions. Ann. Math. Statist. 22, 456–460 (1951)

Kettenring, J.R.: Canonical analysis of several sets of variables. Biometrika 58, 433–451 (1971)

Horst, P.: Relations among m sets of measures. Psychometrika 26, 129–149 (1961)

Van De Geer, J.P.: Linear relations among k sets of variables. Psychometrika 49(1), 70–94 (1984)

Hanafi, M., Kiers, H.A.L.: Analysis of K sets of data, with differential emphasis on agreement between and within sets. Comput. Statist. Data Anal. 51, 1491–1508 (2006)

Vía, J., Santamaría, I., Pérez, J.: Canonical correlation analysis (CCA) algorithms for multiple data sets: an application to blind SIMO equalization. European Signal Processing Conference, EUPSIPCO, Antalya, Turkey (2005)

Vía, J., Santamaría, I., Pérez, J.: A learning algorithm for adaptive canonical correlation analysis of several data sets. Neural Netw. 20, 139–152 (2007)

De leeuw, J.: Multivariate analysis with optimal scaling. In: Das Gupta, S., Ghosh, J.K.( eds.) Proceedings of the International Conference on Advances in Multivariate Statistical Analysis, Calcutta, pp. 127–160 (1988)

Zhang, Y.T., Zhu, X.D.: Correlation of K groups of random variables. Chin. J. Appl. Probab. Statist. 4(1), 27–34 (1988)

Yuan, Y.H., et al.: A novel multiset integrated canonical correlation analysis framework and its application in feature fusion. Pattern Recognit. 44, 1031–1040 (2011)

Nocedal, J., Wright, S.J.: Numerical Optimization. Springer, New York (1999)

Geomans, M.Z., Williamson, D.P.: Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. J. ACM 42, 1115–1145 (1995)

Golub, G.H., Van Loan, C.F.: Matrix Computations, 3rd edn. Johns Hopkins University Press, Baltimore (1996)

Kiers, H.A.L.: Setting up alternating least squares and iterative majorization algorithms for solving various matrix optimization problems. Comput. Statist. Data Anal. 41, 157–170 (2002)

Acknowledgments

We are very grateful to both referees for their constructive comments and suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

This work was partially supported by NSF of China, Grant 11371333.

Rights and permissions

About this article

Cite this article

Liu, X., You, J. Numerical Methods for the Genvar Criterion of Multiple-Sets Canonical Analysis. J Sci Comput 67, 821–835 (2016). https://doi.org/10.1007/s10915-015-0103-7

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-015-0103-7

Keywords

- Multiple-sets canonical correlation analysis

- Genvar criterion

- Alternating variable method

- Starting point strategy