Abstract

The order conditions for modified Runge–Kutta methods are derived via the rooted trees. Symmetry and symplecticity conditions and exponential fitting conditions for modified diagonally implicit Runge–Kutta (DIRK) are considered. Three new exponentially fitted symmetric and symplectic diagonally implicit Runge–Kutta (EFSSDIRK) methods of respective second order and fourth order are constructed. Phase properties of the new methods are analyzed. The new EFSSDIRK methods are applied to several Hamiltonian problems and compared to the results obtained by the existing symplectic DIRK methods in the literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we are concerned with effective integration of initial value problems of the system of first order differential equations in the form

where \(y \in \mathbb {R}^d\), \(f:\mathbb {R} \times \mathbb {R}^d\rightarrow \mathbb {R}^d\) is a smooth function. Such problems arise frequently in applied sciences such as celestial mechanics, chemistry, molecular dynamics and systems biology. More than often, the exact solution to the problem (1) is not available, therefore highly accurate numerical solution is of great importance. Runge–Kutta type methods constitute a category of most widely used integrators for the numerical solution of the problem (1) (see [1,2,3,4]).

Implicit RK methods have been designed for stiff differential equations [1, 5]. Besides, implicit methods usually possess large stability regions, which means less limitation in stepsizes. Unfortunately, to integrate a system of d differential equations, an s-stage implicit method with a full coefficient matrix requires the solution of \(d\times s\) simultaneous implicit (in general nonlinear) equations at each time step. But for an s-stage diagonally implicit RK (DIRK) method with a lower triangular matrix, each internal stages can be computed by solving an d-dimensional system (see [6]). This saves computational cost dramatically. Al-Rabeh [7] examined the optimal order of non-confluent DIRK methods with non-zero weights. Faragó et al. [8] investigated the convergence of the combination of any of the diagonally implicit (including also the explicit) Runge–Kutta methods with active Richardson extrapolation and showed that the numerical solution obtained converges under rather natural conditions.

In many cases, the problem (1) takes the form of a Hamiltonian system with the differential equations (see Hairer et al. [9], Feng and Qin [10])

where \(y=(p^T,q^T)^T\), \(p=(p_1,\ldots ,p_N)^T\) is the generalized momenta, \(q=(q_1,\ldots ,q_N))^T\) is the generalized coordinates, \(J=\left( \begin{array}{cc} {\mathbf {0}}&{}I_{N}\\ -I_{N}&{}{\mathbf {0}}\end{array}\right) \) and \(H=H(p,q)\) is the Hamiltonian energy. As typical examples of Hamiltonian systems, we mention the Kepler problem, the mathematical pendulum, the Fermi-Pasta-Ulam and the Lotka–Volterra oscillator (see [9, 11, 12]).

The problem (2) calls for some special class of numerical integrators, namely, symplectic integrators. Many numerical analysts in this area attest to the fact that symplectic integrators for the numerical solution of (2) have great advantages because of the preservation of the qualitative (geometric) properties of the flow over classical integrators. The symplecticity conditions for Runge–Kutta were discovered by Sanz-Serna [13]. Among pioneers in this area are Forest and Ruth [14], Yoshida [15], Feng and Qin [16] and McLachlan and Atela [17]. Some symplectic diagonally implicit methods (DIRK) were proposed by Cooper [18], Sanz-Serna and Calvo [19] and Feng and Qin [10].

On the other hand, as Hairer et al. [9] pointed out, symmetric methods show better long time behaviour than non-symmetric methods when applied to reversible systems. Earlier work on the numerical solution of the system (2) with the Runge–Kutta type method seldom considered the symmetry property of the method. Sanz-Serna and Abia [20] proposed to construct symplecitc and symmetric DIRK methods. J.M. Franco and I. Gómez [21] derived fourth-order symmetric DIRK methods for periodic stiff problems.

In applications, the solution to the IVP (1) can often be expressed primarily as a linear combination of functions \(\{\exp (\pm \lambda x)\}\) for some complex number \(\lambda \) with perhaps a small perturbation. This motives the attempt to design RK methods that can integrate without truncation error differential equations with solution of the form \(\{\exp (\pm \lambda x)\}\). These methods are named exponentially fitted methods. Calvo et al. [22] investigated the structure (linear and quadratic invariants) preservation of exponentially fitted Runge–Kutta methods and derived a family of symplectic two-stage and fourth order EFRK methods.

In the particular case that \(\lambda = \mathrm{i} \omega \) where \(\omega >0, \mathrm{i}^2=-1\), the previous exponentially fitted methods are called trigonometrically fitted methods. Gautschi [23] and Bettis [24] constructed linear multistep methods and Runge–Kutta methods respectively which are exact if the solution is a trigonometric polynomial in \(\omega t\) of a prescribed degree. A survey of exponentially fitted methods can be found in the monograph by Ixaru and Vanden Berghe [25] and the monograph by Wu et al. [26].

The combination of symplecticity with the exponential/trigonometric fitting was first proposed by Simos and Vigo-Aguiar [27] where a two-stage Runge–Kutta–Nyström method was derived for second order differential equations. Recently, Vanden Berghe and Van Daele [28] have derived trigonometrically fitted RK methods. Unfortunately explicit Runge–Kutta methods cannot be symplectic [10] or symmetric. Calvo et al. [29] constructed sixth-order symmetric and symplectic exponentially fitted Runge–Kutta methods of the Gauss type. Very recently, Kalogiratou [30] derived a three-stage trigonometrically fitted DIRK method which is shown to be very effective when applied to oscillatory Hamiltonian systems.

The purpose of this paper is to investigate the symmetry and symplecticity conditions for modified exponentially fitted DIRK methods when they are applied to Hamiltonian systems in the form (1) or (2). The remainder of this paper is organized as follows. In Sect. 2 we introduce a new type of imaginary trees to derive the order conditions for modified DIRK methods followed by symmetry, symplecticity and exponentially fitting conditions. In Sect. 3, we derive two practical symmetric and symplectic exponentially fitted RK methods. Phase properties of the new EFSSDIRK methods are analyzed in Sect. 4. Numerical experiments are carried out in Sect. 5 to illustrate the effectiveness of the new methods. Conclusive remarks are given in Sect. 6.

2 Modified RK methods with order conditions, symmetry, symplecticity and exponential fitting conditions

Suppose the IVP (1) has oscillatory solution of the principal frequency \(\omega \). The idea of a fitted type Runge–Kutta method is to introduce coefficients depending on \(z=\mathrm{i}\omega h\) and the method is referred to as a modified Runge–Kutta method.

Definition 2.1

An s-stage modified Runge–Kutta (RK) method for solving the system (1) has the scheme

where h is the stepsize, \(\eta _i(z),b_i(z),a_{ij}(z),c_i(z)\), \(1\le j\le i,\ i=1,\ldots ,s\) are assumed to be even functions of \(z=\omega h\).

It is usually assumed that \(\lim \limits _{z\rightarrow 0}\eta _i(z)=1\) so that as \(z\rightarrow 0\), the scheme (3) reduces to a traditional Runge–Kutta method.

The scheme (3) can be briefly expressed by the Butcher tableau of coefficients

The purpose of this section is to present the algebraic order conditions, symmetric conditions and exponential fitting conditions for the modified RK method (3).

2.1 Order conditions

A modified Runge–Kutta method of the form (3) has order p if for any sufficiently smooth function f(x, y) in Problem (1), under the assumption that \(y(x_0)=y_0\), the local truncation error of the solution satisfies

The order conditions can be obtained by comparing the series expansion of the numerical solution with that of the exact solution. The exact solution can be expanded into series of the time step in terms of the rooted tree (see Butcher [4], Hairer et al. [9])

where \(\mathcal {T}\) is the set of (modified) rooted trees, \(\mathcal {F}(\tau )(y_n)\) is the elementary differential of f associated to \(\tau \in \mathcal {T}\) at \(y_n\), \(\rho (\tau )\) is the order of the tree \(\tau \), \(\gamma (\tau )\) is the density of \(\tau \), \(\alpha (\tau )\) is the number of monotonic labellings of \(\tau \) and \(\Phi (\tau )\)is the elementary weight coefficient which depends on A(z).

On the other hand, the numerical solution of the modifed RK method (3)

Further expanding \(f(Y_j)\) at \(y_n\) we have

where \(\widetilde{\mathcal {T}}\) is the set of complex rooted trees (also called imaginary rooted trees, or imaginary trees), for \(\tau \in \widetilde{\mathcal {T}}\), the order \(\rho (\tau )\), the function \(\widetilde{\alpha }(\tau )\) and the density \(\widetilde{\gamma }(\tau )\) are defined in the same way as those for traditional trees \(\tau \in \mathcal {T}\), \(\widetilde{\mathcal {F}}(\tau )(y_n)\) is the elementary differential of f associated to \(\tau \) at \(y_n\) and \(\widetilde{\Phi }(\tau )\) is the vector of elementary weight coefficients which depends on A(z) and \(\eta (z)\). The first imaginary trees with the corresponding vector of elementary weight coefficients and elementary differentials are presented in Table 1.

Thus the local truncation error of the modified RK method (3) can be expressed as

By the independence of the elementary differentials, it follows from (4) that the method (3) is of order p if

and

To be specific, the p-th order conditions are listed in two groups as follows:

-

(i)

For traditional trees

$$\begin{aligned}&b(z)^Te=1+\mathcal {O}(z^p), \quad b(z)^TA(z)e=\dfrac{1}{2}+\mathcal {O}\big (z^{p-1}\big ),\nonumber \\&b(z)^T (A(z)e)^2=\dfrac{1}{3}+\mathcal {O}\big (z^{p-2}\big ), \quad b(z)^TA^2(z)e=\dfrac{1}{6}+\mathcal {O}\big (z^{p-2}\big ),\nonumber \\&b(z)^T(A(z)e)^3=\dfrac{1}{4}+\mathcal {O}\big (z^{p-3}\big ),\quad b(z)^T\big (A(z)e\cdot \big (A(z)^2e\big )\big )=\dfrac{1}{12}+\mathcal {O}(z),\nonumber \\&b(z)^TA(z)\big (A(z)e\big )^2=\dfrac{1}{8}+\mathcal {O}\big (z^{p-3}\big ),\quad b(z)^TA^3(z)e=\dfrac{1}{24}+\mathcal {O}\big (z^{p-3}\big ),\nonumber \\&\ldots \end{aligned}$$(5) -

(ii)

For imaginary trees

$$\begin{aligned}&b(z)^T\big (\eta (z)-e\big )^k=\mathcal {O}(z^p), \quad 1\le k< p/2,\nonumber \\&b(z)^T\Big (\big (\eta (z)-e\big )^k\cdot A(z)\big (\eta (z)-e\big )^l\Big )=\mathcal {O}\big (z^{p-1}\big ), \quad 1< k+l< p/2,\nonumber \\&b(z)^T\Big (\big (\eta (z)-e\big )^k\cdot A(z)\big (\eta (z)-e\big )^l\cdot A(z)\big (\eta (z)-e\big )^m\Big )=\mathcal {O}\big (z^{p-2}\big ),\nonumber \\&\quad 1\le k+l+m< p/2,\nonumber \\&b(z)^T\Big ((\eta (z)-e)^k\cdot A(z)\big ((\eta (z)-e)^l\cdot A(z)(\eta (z)-e)^m\big )\Big )=\mathcal {O}\big (z^{p-2}\big ),\nonumber \\&\quad 1\le k+l+m<p/2,\nonumber \\&b(z)^T\Big (\eta (z)-e)^k\cdot (A(z)(\eta (z)-e)^l\cdot A(z)(\eta (z)-e)^m \cdot A(z)(\eta (z)-e)^n\Big )\nonumber \\&\quad =\mathcal {O}\big (z^{p-3}\big ),\quad 1\le k+l+m+n< p/2,\nonumber \\&b(z)^T\Big ((\eta (z)-e)^k\cdot A(z)(\eta (z)-e)^l\cdot A(z)\big ((\eta (z)-e)^m\cdot A(z)(\eta (z)-e)^n\big )\Big )\nonumber \\&\quad =\mathcal {O}\big (z^{p-3}\big ),\quad 1\le k+l+m+n< p/2,\nonumber \\&b(z)^T\Big ((\eta (z)-e)^k\cdot A(z)(\eta (z)-e)^l\cdot \big (A(z)(\eta (z)-e)^m\cdot A(z)(\eta (z)-e)^n\big )\Big )\nonumber \\&\quad =\mathcal {O}\big (z^{p-3}\big ),\quad 1\le k+l+m+n< p/2,\nonumber \\&b(z)^T\Big ((\eta (z)-e)^k\cdot A(z)\big ((\eta (z)-e)^l\cdot A(z)((\eta (z)-e)^m\cdot A(z)(\eta (z)-e)^n)\big )\Big )\nonumber \\&\quad =\mathcal {O}\big (z^{p-3}\big ),\quad 1\le k+l+m+n< p/2,\nonumber \\&\ldots \end{aligned}$$(6)

where \(e=(1,\ldots ,1)^{T}\), k, l, m, n are non-negative integers and a dot “\(\cdot \)” between two vectors indicates componentwise multiplication. The first set of conditions (5) can be used to simplify successively the second set (6). For example, for \(k=2\), \(l=1\) and \(p=7\), if the conditions

have been confirmed, then the second condition in (5) implies that

which can be equivalently replaced by a simpler one

Keeping \(\eta (z)-e=\mathcal {O}(z^2)\) in mind, the first to fourth conditions are listed as follows:

-

(i)

First order conditions:

$$\begin{aligned} b(z)^Te=1+\mathcal {O}(z). \end{aligned}$$(7) -

(ii)

Second order conditions:

$$\begin{aligned} b(z)^Te=1+\mathcal {O}(z^2), \quad b(z)^TA(z)e=\dfrac{1}{2}+\mathcal {O}(z). \end{aligned}$$(8) -

(iii)

Third order conditions:

$$\begin{aligned}&b(z)^Te=1+\mathcal {O}\big (z^3\big ), \quad b(z)^T\eta (z)=1+\mathcal {O}\big (z^3\big ), \quad b(z)^TA(z)e=\dfrac{1}{2}+\mathcal {O}\big (z^2\big ),\nonumber \\&b(z)^T(A(z)e)^2=\dfrac{1}{3}+\mathcal {O}(z),\quad b(z)^TA(z)^2e=\dfrac{1}{6}+\mathcal {O}(z). \end{aligned}$$(9) -

(iv)

Fourth order conditions:

$$\begin{aligned}&b(z)^Te=1+\mathcal {O}\big (z^4\big ),\quad b(z)^T\eta (z)=1+\mathcal {O}\big (z^4\big ), \quad b(z)^TA(z)e=\dfrac{1}{2}+\mathcal {O}\big (z^3\big ),\nonumber \\&b(z)^TA(z)\eta (z)=\dfrac{1}{2}+\mathcal {O}\big (z^3\big ), \quad b(z)^T\big (\eta (z)\cdot A(z)e\big )=\dfrac{1}{2}+\mathcal {O}\big (z^3\big ), \nonumber \\&b(z)^T (A(z)e)^2=\dfrac{1}{3}+\mathcal {O}\big (z^2\big ),\quad b(z)^TA^2(z)e=\dfrac{1}{6}+\mathcal {O}\big (z^2\big ),\nonumber \\&b(z)^T(A(z)e)^3=\dfrac{1}{4}+\mathcal {O}(z),\quad b(z)^TA(z)(A(z)e)^2=\dfrac{1}{12}+\mathcal {O}(z),\nonumber \\&b(z)^T\big (A(z)e \cdot A^2(z)e\big )=\dfrac{1}{8}+\mathcal {O}(z),\quad b(z)^TA^3(z)e=\dfrac{1}{24}+\mathcal {O}(z). \end{aligned}$$(10) -

(iv)

Fifth order conditions:

$$\begin{aligned}&b(z)^Te=1+\mathcal {O}\big (z^5\big ),\quad b(z)^T\eta (z) =1+\mathcal {O}\big (z^5\big ), \quad b(z)^T\eta (z)^2 =1+\mathcal {O}\big (z^5\big ), \\&b(z)^TA(z)e=\dfrac{1}{2}+\mathcal {O}\big (z^4\big ), b(z)^TA(z)\eta (z)=\dfrac{1}{2}+\mathcal {O}\big (z^4\big ), \\&b(z)^T\big (\eta (z)\cdot A(z)e\big ) =\dfrac{1}{2}+\mathcal {O}\big (z^4\big ),\quad b(z)^T (A(z)e)^2=\dfrac{1}{3}+\mathcal {O}\big (z^3\big ),\\&b(z)^T\big (\eta (z)\cdot (A(z)e)^2\big )=\dfrac{1}{3}+\mathcal {O}\big (z^3\big ),\quad b(z)^T\big (A(z)\eta (z)\cdot (A(z)e)\big ) =\dfrac{1}{3}+\mathcal {O}\big (z^3\big ),\\&b(z)^TA^2(z)e=\dfrac{1}{6}+\mathcal {O}\big (z^3\big ),\quad b(z)^T\big (\eta (z)\cdot A^2(z)e\big ) =\dfrac{1}{6}+\mathcal {O}\big (z^3\big ),\\&b(z)^TA(z)\big (\eta (z)\cdot A(z)e\big )=\dfrac{1}{6}+\mathcal {O}\big (z^3\big ),\quad b(z)^TA^2(z)\eta (z)=\dfrac{1}{6}+\mathcal {O}\big (z^3\big ),\\&b(z)^T(A(z)e)^3=\dfrac{1}{4}+\mathcal {O}\big (z^2\big ),\quad b(z)^TA(z)(A(z)e)^2 =\dfrac{1}{12}+\mathcal {O}\big (z^2\big ),\\&b(z)^T\big (A(z)e \cdot A^2(z)e\big )=\dfrac{1}{8}+\mathcal {O}\big (z^2\big ),\quad b(z)^TA^3(z)e =\dfrac{1}{24}+\mathcal {O}\big (z^2\big ),\\&b(z)^T(A(z)e)^4=\dfrac{1}{5}+\mathcal {O}(z),\\&b(z)^T\big ((A(z)e)^2 \cdot A(z)^2e\big )=\dfrac{1}{10}+\mathcal {O}(z),\quad b(z)^T\big (A(z)^2e \cdot A(z)^2e\big ) =\dfrac{1}{20}+\mathcal {O}(z),\\&b(z)^T\big (A(z)e \cdot A(z) (A(z)e)^2\big )=\dfrac{1}{15}+\mathcal {O}(z),\quad b(z)^T\big (A(z)e \cdot A(z)^3e\big ) =\dfrac{1}{15}+\mathcal {O}(z),\\&b(z)^TA(z)\big ( A(z)e\big )^3=\dfrac{1}{20}+\mathcal {O}(z),\quad b(z)^TA(z)\big (A(z)e \cdot A(z)^2e\big ) =\dfrac{1}{10}+\mathcal {O}(z),\\&b(z)^TA(z)^2\big ( A(z)e\big )^2=\dfrac{1}{40}+\mathcal {O}(z),\quad b(z)^TA(z)^4e=\dfrac{1}{60}+\mathcal {O}(z). \end{aligned}$$

2.2 Symmetry conditions

Definition 2.2

[9] The adjoint method \(\Phi ^*_h\) of a modified RK method \(\Phi _h\) is the inverse map of the original method with reversed time step \(-h\), i.e., \(\Phi ^*_h:=\Phi ^*_{-h}\). A method for which \(\Phi ^*_h:=\Phi ^*_{-h}\) is called symmetric.

Theorem 2.1

The adjoint method of a modified RK method (3) is again an s-stage modified RK method and its coefficients are given by

If

then the modified RK method (3) is symmetric.

Proof

Interchanging \(y_{n+1}\leftrightarrow y_n\) and \(h \leftrightarrow -h\) in (3) yields

Replacing i by \(s+1-i\) and j by \(s+1-j\) and denoting \(Y_{s+1-i}\) as \(Y^*_{i}\) gives

The system (13) resprents a modified RK methods with coefficients given by (11). Comparing (11) and (12) implies that the adjoint coincides the original method if \(c_i^*(z)=c_{i}(z),\ a^*_{ij}(z)=a_{ij}(z)\) and \(b^*_i(z)=b_i(z)\) and the result follows. The proof is complete. \(\square \)

Remark 2.1

As \(z\rightarrow 0\), the conditions (12) reduces to the symmetry conditions for traditional RK methods with constant coefficients.

In this paper we are mainly interested in modified diagonally implicit RK (DIRK) methods, that is, in the scheme (3), \(a_{ij}(z)=0\) for \(i<j\).

Corollary 2.1

Under the assumptions of (11), the modified DIRK is symmetric if

2.3 Symplecticity conditions

The flow of the Hamiltonian system (2) is the map \(\varphi _t:U\subset \mathbb {R}^{2N}\rightarrow \mathbb {R}^{2N}\), \(\varphi _t(p_0, q_0) = \big (p(t, p_0, q_0), q(t, p_0, q_0)\big )\), where \(p(t, p_0, q_0), q(t, p_0, q_0)\) is the solution of the system with the initial values \(p(0) = p_0, q(0) = q_0\). It is well-known that the flow \(\varphi _t\) satisfies

Definition 2.3

A one-step method \(\Phi _h: y_n\rightarrow y_{n+1}\) is symplectic if its Jacobian matrix \(\dfrac{\partial y_{n+1}}{\partial y_n}\) is symplectic, that is, \(\left( \dfrac{\partial y_{n+1}}{\partial y_n}\right) ^TJ\left( \dfrac{\partial y_{n+1}}{\partial y_n}\right) =J\).

Theorem 2.2

[31] The modified RK method (3) is symplectic if its coefficients satisfy the following conditions

The proof is essentially the same as that of Theorem 2 in Sanz-Serna and Calvo [19].

For a DIRK method, it follows from the symplecticity condition (15) that

assuming that \(b_i(z) \ne 0\ (i=1,\ldots ,s)\).

Combining (15) with (14), the scheme of a symmetric and symplectic modified DIRK can written as

2.4 Exponentially fitting conditions

The basic idea of exponential fitting is to introduce fitting coefficients to the scheme of a traditional Runge–Kutta method so that the method can integrate without truncation error differential equations with solution of a exponential function \(\exp (\lambda x),\ \lambda \in \mathbb {C}\). Exponentially fitted (EF) algorithms have been systematically studied by Ixaru and Vanden Berghe [25]. Vanden Berghe et al. [32] presented the exponential fitting conditions for the Runge–Kutta methods and derived some practical explicit EFRK methods. We follow the approach of Albrecht [33] and view each internal stage of the scheme (3) as a linear multistep method on a non-equidistant grid. In what follows,

-

to the internal stages of (3), we associate the linear operators

$$\begin{aligned} \mathcal {L}_i[A(z),h]y(x)= & {} y(x+c_i(z)h)-\eta _i(z)y(x)\\&-\,h\sum \limits _{j=1}^sa_{ij}(z)y'(x+c_jh),\quad i=1,\ldots ,s; \end{aligned}$$ -

to the update of (3), we associate a linear operator

$$\begin{aligned} \mathcal {L}[b(z),h]y(x)=y(x+h)-y(x)-h\sum \limits _{i=1}^sb_i(z)y'(x+c_ih). \end{aligned}$$

Requiring that the operators \(\mathcal {L}_i\) and \(\mathcal {L}\) to vanish for the functions \(\{\exp (\pm \lambda x)\}\) leads to the following equations:

where \(z=\lambda h\). Equations (17) are called the exponential fitting conditions.

If the coefficients of a modified diagonally implicit Runge–Kutta method satisfy the conditions (14), (15) and (17), then the method is referred to as an EFSSDIRK method.

3 Construction of new EFSSDIRK methods

The purpose of this section is to construct one to three-stage practical EFSSDIRK methods of order two and order four, respectively.

3.1 A one-stage EFSSDIRK method of order two

For \(s=1\), the scheme (16) becomes

For this scheme, the exponentially fitting conditions (17) imply that

Solving the system (19) with \(c_1(z)=1/2,\ a_{11}(z)=\frac{1}{2}\eta _1(z)b_1(z)\), we obtain

It is easy to check that

Thus the method defined by (18) and (20) has order two and we denote the method as EFSSDIRK1s2. Note that as the frequency \(\omega \rightarrow 0\) (\(z\rightarrow 0\)), this method reduces to a one-stage symmetric and symplectic DIRK method of order two

which is denoted as SSDIRK1s2 (Alexander [6]).

3.2 A two-stage EFSSDIRK method of order two

For \(s=2\), the scheme (16) becomes

For this scheme, the exponentially fitting conditions (17) imply that

Taking \(c_1(z)\) as constant we solve the system (22) and obtain

It is easily checked that the method defined by (21) and (23) has order two and we denote the method as EFSSDIRK2s2.

We note that as \(z\rightarrow 0\), the EFSSDIRK2 method reduces to a classical two-stage SSDIRK, which first appeared in Cooper [18] and we denote the method by SSDIRK2s2.

3.3 A three-stage EFSSDIRK method

Now we proceed to construct a three-stage method. For \(s=3\), the scheme (16) becomes

The exponentially fitting conditions for a three-stage modified DIRK method are given by

where \(c_2(z)=1/2, c_3(z)=1-c_1(z), \eta _{3}(z)=\eta _{1}(z), a_{11}(z)=\frac{1}{2}\eta _1(z)b_1(z), a_{21}(z)=\eta _2(z)b_1(z), a_{22}(z)=\frac{1}{2}\eta _2(z)b_2(z), a_{31}(z)=\eta _1(z)b_1(z), a_{31}(z)=\eta _1(z)b_2(z), a_{33}=\frac{1}{2}\eta _1(z)b_1(z), b_{3}(z)=b_1(z).\)

We solve the system (25) for \(\eta _{1}(z), \eta _{2}(z), b_1(z)\) and \(b_{2}(z)\) expressed in terms of \(c_{1}(z)\) as follows

Substituting the above coefficients into the order four conditions (10) with the node \(c_1(z)\) expanded as

we obtain

The fourth-order method by defined (24), (26), (27) and (28) is denoted by EFSSDIRK3s4. We note that as \(z\rightarrow 0\), the EFSSDIRK3s4 method reduces to a classical three-stage symmetric and symplectic DIRK with constant coefficients. This method first appeared in Sans-Serna and Abia [20] and was also presented in Feng and Qin [10]. We denote the method by SSDIRK3s4S.

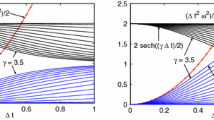

4 Phase properties of the new EFSSDIRK methods

The dispersion and dissipation are important properties which give an idea of the numerical behaviour of methods constructed for oscillatory problems. This section is devoted to the phase-lag and the amplification errors of the methods derived in Sect. 3. Applying the modified RK method (3) to the linear scalar test equation

gives the recurrence

where \(\mu =h\lambda \) and

is the stability function. The definitions of dispersion and dissipation are given as follows.

Definition 4.1

The quantities

are respectively called the dispersion (phase-lag) and the dissipation (amplification error), where \(R((\mathrm{i}\mu ;z)\) is given by (29).

Definition 4.2

The dispersion order is p if

and the dissipation order is q if

where \(r=\dfrac{z}{\mu }\). The method is called zero-dispersive or zero-dissipative if \(P(\mu )=0\) and \(D(\mu )=0\) respectively.

The dispersion and dissipation for the methods derived in Sect. 3 and their limit methods are displayed in Table 2. The table shows that EFSSDIRK3s4 has the lowest phase lag. All the methods have zero dissipation.

5 Numerical experiments

To examine the numerical effectiveness of the newly constructed exponentially fitted symmetric and symplectic diagonally implicit Runge–Kutta methods, we carry out experiments on four test problems. Some highly efficient codes are selected from the recent literature. The codes used for comparison are listed as follows:

-

EFSSDIRK1s2: the one-stage exponentially fitted symmetric and symplectic DIRK method of order two constructed in this paper.

-

EFSSDIRK2s2: the two-stage exponentially fitted symmetric and symplectic DIRK method of order two constructed in this paper.

-

EFSSDIRK3s4: the three-stage exponentially fitted symmetric and symplectic DIRK method of order four constructed in this paper.

-

EFSDIRK2s2: the two-stage exponentially fitted symmetric DIRK method of order two constructed by R. D’Ambrosio and B. Paternoster in [34].

-

SSDIRK1s2: the one-stage symmetric and symplectic DIRK method of order two given by Cash [35], which is the limit method of the EFSSDIRK1s2 method as \(z\rightarrow 0\).

-

SSDIRK2s2: the two-stage symmetric and symplectic DIRK method of order two proposed by Cooper [18], which is the limit method of the EFSSDIRK2s2 method as \(z\rightarrow 0\).

-

SSDIRK3s4: the three-stage symmetric and symplectic DIRK method of order four derived by Sanz-Serna and Abia [20], which is the limit method of the EFSSDIRK3s4 method as \(z\rightarrow 0\).

-

SDIRK4s4: the four-stage symplectic DIRK method of order four derived by Feng and Qin [10].

-

TFSSDIRK3s4: A three-stage trigonometrically fitted symplectic DIRK method of order four given by Kalogiratou [30].

The effectiveness of the methods is tested by measuring the error in the numerical solution and preservation of Hamiltonian. For each problem, we first integrate the problem on a time interval and give the maximal global error of the solution for different stepsizes of integration. We then integrate the problem on a long time interval with a fixed stepsize and plot the time evolution of the maximal global error of the Hamiltonian.

Problem 1

The two-body problem We consider the two-body problem studied in Hairer et al. [3] which involves finding the positions and velocities of two massive bodies that attract each other gravitationally. The first body is located at the origin while the second body moves around the first body in a plane. Denote the position and the velocity of the second body by \((q_1,q_2)\) and \((p_1,p_2)\), respectively. The motion of the second body is governed by the systems of differential equations

This is a Hamiltonian system with the Hamiltonian

For initial values

the exact solution is \(q_1(x)=\cos (x)\), \(q_2(x)=\sin (x)\). We integrate the problem on the interval \([0, 10 \pi ]\) with different stepsizes \(h=\pi /2^{{j}},\ j=3,4,5,6\). Then the problem is integrated with stepsize \(h=\pi /15\) on the interval \([0,1000\pi ]\). The numerical results are presented in Fig. 1.

Problem 1. a Accuracy comparison, b preservation of Hamiltonian

Problem 2

A nonlinear Hamiltonian system Consider the Hamiltonian system studied by Franco [36]

with the initial data

where \(\alpha =\epsilon (2w+\epsilon )\). The analytic solution is given by

representing a periodic motion. In this experiment, we have chosen the parameter values \(\epsilon =10^{-2}\), \(w=5\), and the integration carried out on the interval \([0,10\pi ]\) with stepsizes \(h=1/2^i\), \(j=5,6,7,8\). Then we integrate the problem on the interval \([0,100\pi ]\) with stepsize \(h=1/24\). The numerical results are presented in Fig. 2.

Problem 2. a Accuracy comparison, b preservation of Hamiltonian

Problem 3

The standard pendulum Consider the differential equation describing the standard pendulum given by

The Hamiltonian of the this problem is

We integrate the problem in the interval [0, 100] with stepsizes \(h=1/2^{j},\ i=3,4,5,6\). Then the problem is integrated on the interval [0, 1000] with the stepsize \(h=1/16\). Numerical results obtained are presented in Fig. 3.

Problem 3. a Accuracy comparison, b preservation of Hamiltonian

Problem 4

The Lotka–Volterra model for chemical oscillators Consider the chemical reaction system consisting three reactions between two reactants \(S_1\) and \(S_2\) [9, 11, 37, 38]:

The reaction rates of the three reactions are r, k and d, respectively. Let u(t) and v(t) denote the concentrations of \(S_1\) and \(S_2\) at time t. By the mass-action law, the evolution of the reactants is governed by the following Lotka–Volterra system

By the logarithmic transformation \(p=\log u\), \(q=\log v\), the system (30) becomes a Hamiltonian problem

with the Hamiltonian

In this experiment, we take the values for the parameters \(k=d=1, \ r=2.\) The system (5) is integrated with initial values \(p(0)=\log 2, q(0)=\log 2\) on the interval [0, 100] with fitting frequency \(\omega =1.46\) and stepsizes \(h=1/2^j, j=4,5,6,7\). Then we integrate the problem on the time interval [0, 2000] with initial values \(p(0)=\log 0.1, q(0)=\log 5\) and stepsize \(h=1/32\). The numerical results are presented in Fig. 4.

Problem 4. a Accuracy comparison, b preservation of Hamiltonian

It can be observed from Figs. 1, 2, 3 and 4 that in comparison of accuracy and Hamiltonian preservation, the new methods EFSSDIRK1s2, EFSSDIRK2s2, EFSSDIRK3s4 outperform their limit methods SSDIRK1s2, SSDIRK2s2, SSDIRK, respectively. The fourth-order method EFSSDIRK3s4 is comparable to or even more accurate than Kalogiratou’s TFSSDIRK3s4 method. In Problem 1, the second-order method EFSSDIRK2s2 works as well as the fourth-order method EFSSDIRK3s4 while in Problem 2, EFSSDIRK2s2 even outperforms EFSSDIRK4s4. Among the four fourth-order methods, the four-stage method SDIRK4s4 does the most poorly. It is not surprising since SDIRK4s4 is not a symmetric method.

6 Conclusions and discussions

In this paper, we have adapted the symmetric and symplectic conditions given in [9, 13] respectively to the modified DIRK methods for autonomous Hamiltonian systems. Order conditions for modified DIRK methods are obtained through imaginary rooted trees. The exponentially fitting conditions are given following the line of [25]. Three practical symmetric and symplectic methods of algebraic order two and four were constructed respectively using the symmetric, symplectic and exponentially fitted conditions presented in Sect. 2 of this paper. These methods are reduced to classical DIRK methods with constant coefficients which have appeared in the literatures [10, 18, 20], when the frequency used in the fitting process is set to zero. Phase lags of the methods are analyzed. Although the new three-stage method EFSSDIRK3s4 has the same phase lag order as Kalogiratou’s TFSSDIRK3s4 method [30], the leading term of the phase lag is smaller. All these methods are zero-dissipative due to their sympleticity.

Numerical experiments considered in this paper shows that the exponential fitted methods produces more accurate results on Hamiltonian problems than their classical counterparts. We note that in implementation of exponentially fitted or trigonometrically fitted methods, a fitting frequency \(\omega \) must be prescribed in advance. This fitting frequency is an estimate of the frequency of the solution. If the solution has been given as in Problems 1 and 2, then the frequency of the solution is of course known and the numerical solution is not necessary. However, in most cases, the true solution and its frequency are not available and the choice of a fitting frequency becomes a challenge. More than often, the true frequency, if available, is not suitable for fitting. For instance, in Problem 4 of Sect. 5, the Lotka–Volterra model for chemical oscillators, for the initial value \((p(0),q(0))=(\log 2,\log 2)\), the exact frequency is known to be \( 2\pi /4.61487051945103 = 1.361508471515472\), but it can not serve as the fitting frequency for our new EFSSDIRK methods (and for TFSSDIRK3s4). The fact is, as mentioned in Zhang et al. [37], each method has its own best fitting frequency which depend on the differential equation, initial data and the integration interval. In Problems 1 and 2, the fitting frequency is taken as the frequency of the true solution while in Problems 3 and 4 the frequency utilized is obtained with a searching algorithm. For other techniques of determining the fitting frequency, the reader is referred to Ixaru and Vanden Berghe [39], Ramos and Vigo-Aguiar [40] and Vigo-Aguiar and Ramos [41].

Finally, it should be noted that an RK method having more stages does not necessarily lead to higher effectiveness. In Problem 1, for example, the one-stage method EFSSDIRK1s2 and the two-stage method EFSSDIRK2s2 outperform all the other fourth-order methods with three or four stages. Relative effectiveness of different integrators depends to some extent on the problem they solve.

References

J.C. Butcher, A history of Runge–Kutta method. Appl. Numer. Math. 20, 247–260 (1996)

J.H.E. Cartwright, O. Piro, The dynamics of Runge–Kutta method. Int. J. Bifurc. Chaos 2, 427–449 (1992)

E. Hairer, S.P. Nørsett, G. Wanner, Solving Ordinary Differential Equations I, Non Stiff Problems, 2nd edn. (Springer, Berlin, 1993)

J. Butcher, Numerical Methods for Ordinary Differential Equations, 2nd edn. (Wiley, New York, 2008)

J.M. Franco, I. Gómez, L. Rández, SDIRK methods for stiff ODES with oscillating solutions. J. Comput. Appl. Math. 81, 197–209 (1997)

R. Alexander, Diagonally implicit Runge–Kutta methods for stiff ODEs. SIAM J. Numer. Anal. 14, 1006–1021 (1997)

A.H. Al-Rabeh, Optimal order diagonally implicit Runge–Kutta methods. BIT Numer. Math. 33(4), 619–633 (1993)

I. Faragó, Á. Havasi, Z. Zlatev, The convergence of diagonally implicit Runge–Kutta methods combined with Richardson extrapolation. Comput. Math. Appl. 65(3), 395–401 (2013)

E. Hairer, Ch. Lubich, G. Wanner, Geometric Numerical Integration—Structure Preserving Algorithms for Ordinary Differential Equations, 2nd edn. (Springer, Berlin, 2006)

K. Feng, M. Qin, Symplectic Geometric Algorithms for Hamiltonian Systems (Springer, Berlin, 2010)

R.H. Hering, Oscillations in Lotka–Volterra systems of chemical reactions. J. Math. Chem. 5, 197–202 (1990)

A.J. Lotka, Undamped oscillation derived from the law of mass action. J. Am. Chem. Soc. 42, 201–205 (1920)

J.M. Sanz-Serna, Runge–Kutta schemes for Hamiltonian systems. BIT 28, 877–883 (1988)

E. Forest, R. Ruth, Fourth-order symplectic integration. Physica D 43, 105–17 (1990)

H. Yoshida, Construction of higher order symplectic integrators. Phys. Lett. 150A, 262–269 (1990)

K. Feng, M. Qin, Hamiltonian algorithms for Hamiltonian systems and a comparative numerical study. Comput. Phys. Commun. 65, 173–187 (1991)

R. McLachlan, P. Atela, The accuracy of symplectic integrators. Nonlinearity 5, 541–562 (1992)

G.J. Cooper, Stability of Runge–Kutta methods for trajectory problems. IMA J. Numer. Anal. 7, 1–13 (1987)

J.M. Sanz-Serna, M.P. Calvo, Numerical Hamiltonian Systems (Chapman and Hall, London, 1994)

J.M. Sanz-Serna, L. Abia, Order conditions for canonical Runge–Kutta schemes. SIAM J. Numer. Anal. 28, 1081–1096 (1991)

J.M. Franco, I. Gómez, Fourth-order symmetric DIRK methods for periodic stiff problems. Numer. Algorithms 32, 317–336 (2003)

M. Calvo, J.M. Franco, J.I. Montijano, L. Rández, Structure preservation of exponentially fitted Runge–Kutta methods. J. Comput. Appl. Math. 218, 421–434 (2008)

W. Gautschi, Numerical integration of ordinary differential equations based on trigonometric polynomials. Numer. Math. 3, 381–397 (1961)

D.G. Bettis, Runge–Kutta algorithms for oscillatory problems. J. Appl. Math. Phys. (ZAMP) 30, 699–704 (1979)

LGr Ixaru, G. Vanden Berghe, Exponential Fitting (Kluwer Academic Publishers, Dordrecht, 2004)

X. Wu, X. You, B. Wang, Structure Preserving Algorithms for Oscillatory Differential Equations (Springer, Berlin, 2013)

T.E. Simos, J. Vigo-Aguiar, Exponentially fitted symplectic integrator. Phys. Rev. E 67, 1–7 (2003)

G. Vanden Berghe, M. Van Daele, Fourth-order symplectic exponentially-fitted modified Runge–Kutta methods of the Gauss type: a review. Monografias De La Real Academia De Ciencias De Zaragoza 33, 55–70 (2010)

M. Calvo, J.M. Franco, J.I. Montijano, L. Rández, Sixth-order symmetric and symplectic exponentially fitted Runge–Kutta methods of the Gauss type. J. Comput. Appl. Math. 223, 387–98 (2009)

Z. Kalogiratou, Diagonally implicit trigonometrically fitted symplectic Runge–Kutta methods. Appl. Math. Comput. 219, 7406–7412 (2013)

H. Van de Vyver, A fourth-order symplectic exponentially fitted integrator. Comput. Phys. Commun. 174, 255–262 (2006)

G. Vanden Berghe, H. De Meyer, M. Van Daele, T. Van Hecke, Exponentially-fitted explicit Runge–Kutta methods. Comput. Phys. Commun. 123, 7–15 (1999)

P. Albrecht, A new theoretical approach to RK methods. SIAM J. Numer. Anal. 24, 391–406 (1987)

R. D’Ambrosio, B. Paternoster, Exponentially fitted singly diagonally implicit Runge–Kutta methods. J. Comput. Appl. Math. 263, 277–287 (2014)

J.R. Cash, Diagonally implicit Runge–Kutta formulae for the numerical integration of nonlinear two-point boundary value problems. Comput. Math. Appl. 10, 123–137 (1984)

J.M. Franco, I. Gómez, Symplectic explicit methods of Runge–Kutta–Nyström type for solving perturbed oscillators. J. Comput. Appl. Math. 260, 482–493 (2014)

R. Zhang, W. Jiang, J.O. Ehigie, Y. Fang, X. You, Novel phase-fitted symmetric splitting methods for chemical oscillators. J. Math. Chem. 55, 238–258 (2017)

Y.V. Bibik, The second Hamiltonian structure for a special case of the Lotka–Volterra equations. Comput. Math. Math. Phys. 47, 1285–1294 (2007)

L.Gr. Ixaru, G. Vanden Berghe, H. De Meyer, Frequency evaluation in exponential fitting multistep algorithms for ODEs. J. Comput. Appl. Math. 140, 423–434 (2002)

H. Ramos, J. Vigo-Aguiar, On the frequency choice in trigonometrically fitted methods. Appl. Math. Lett. 23, 1378–1381 (2010)

J. Vigo-Aguiar, H. Ramos, On the choice of the frequency in trigonometrically-fitted methods for periodic problems. J. Comput. Appl. Math. 277, 94–105 (2015)

Acknowledgements

We are very grateful to the anonymous referees for their invaluable comments and suggestions which helped much to improve the quality of the paper. This work was partially supported by the Natural Science Foundation of Jiangsu Province under Grant No. BK20171370, the National Natural Science Foundation of China (NSFC) under Grant Nos. 11171155 and 11571302, the State Key Program of Natural Science Foundation of China under Grant No. 31330067 and the Foundation of Scientific Research Project of Shandong Universities under Grant No. J17KA190.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ehigie, J.O., Diao, D., Zhang, R. et al. Exponentially fitted symmetric and symplectic DIRK methods for oscillatory Hamiltonian systems. J Math Chem 56, 1130–1152 (2018). https://doi.org/10.1007/s10910-017-0841-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10910-017-0841-x