Abstract

Decision trees have been a very popular class of predictive models for decades due to their interpretability and good performance on categorical features. However, they are not always robust and tend to overfit the data. Additionally, if allowed to grow large, they lose interpretability. In this paper, we present a mixed integer programming formulation to construct optimal decision trees of a prespecified size. We take the special structure of categorical features into account and allow combinatorial decisions (based on subsets of values of features) at each node. Our approach can also handle numerical features via thresholding. We show that very good accuracy can be achieved with small trees using moderately-sized training sets. The optimization problems we solve are tractable with modern solvers.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Interpretability has become a well-recognized goal for machine learning models as they push further into domains such as medicine, criminal justice, and business. In many of these applications machine learning models complement domain experts and for human decision-makers to trust these models, interpretability is crucial. Decision trees have been a very popular class of predictive models for decades due to their interpretability and good performance on categorical features. Decision trees (DTs, for short) are similar to flow-charts as they apply a sequence of binary tests or decisions to predict the output label of the input data. As they can be easily interpreted and applied by non-experts, DTs are considered as one of the most widely used tools of machine learning and data analysis (see the recent survey [11] and references therein). Another advantage of DTs is that they often naturally result in feature selection, as only a part of the input is typically used in the decision-making process. Furthermore, DTs can work with both numerical and categorical data directly, which is not the case for numerical classifiers such as linear classifiers or neural networks, as these methods require the data to be real-valued (and ordinal). For example, if a categorical feature can take three values such as (i) red, (ii) blue, or, (iii) yellow, it is often represented by a group of three binary features such that one of these features takes the value 1 while the other two are 0. A numerical classifier would treat this group of three features independently where any combination of 0/1 values are possible—ignoring the valuable information that only three values for the triplet are possible. Numerical classifiers typically recover this lost information by observing enough data and fitting the model accordingly. However, this is not a trivial task, and may require a more complex model than what is really necessary. In comparison, DTs can explicitly deal with categorical features.

There are also known disadvantages to DT predictors. For example, they are not always robust, as they might result in poor prediction on out-of-sample data when the tree is grown too large. Hence, small trees are often desirable to avoid overfitting and also for the sake of interpretability. Assuming that for a given data distribution there exists a small DT that can achieve good accuracy, the small DTs that are computed by a typical recursive DT algorithm (such as CART [5, 16]) may not achieve such accuracy, due to the heuristic nature of the algorithm. Moreover, it is usually impossible to establish a bound on the difference between the expected accuracy of the DT produced by a heuristic algorithm and the best possible DT.

Currently, popular algorithms used for constructing DTs (such as CART or C4.5) are sequential heuristics that first construct a tree and then trim (prune) it to reduce its size, see [11]. When building the tree, these heuristics use various criteria to choose a feature and a condition on that feature to branch on. As the tree is built gradually, the resulting DT is not necessarily “the best” for any particular global criterion. One recent example of this fact is the winning entry [8] in the FICO interpretable machine learning competition [9]. The authors of [8] construct a simple classifier in conjunctive normal form which in fact can also be seen as a small depth decision tree. The authors show that their classifier is both simpler and more accurate (on test data) than the trees constructed by CART.

In this paper, we aim to find optimal small DTs for binary classification problems that produce interpretable and accurate classifiers for the data for which such classifiers exist. We call a DT optimal if it has the best possible classification accuracy on a given training dataset. We allow complex branching rules using subsets of values of categorical features. For example, if a categorical feature represents a person’s marital status and can take the values “single”, “married”,“divorced”, “widowed”, or “has domestic partner”, a simple branching rule, which looks at numerical representation of the features, will make decisions based on a feature being “single” or not, while a more appropriate decision may be “either married or has a domestic partner” or not. Such combinatorial branching rules are considered desirable and in the case of binary classification using CART, branching on the best subset values of a categorical feature can be done again according to a sequential local heuristic. On the other hand, combinatorial branching may lead to overfitting when a categorical variable can take a large number of values. If the categorical variable can take \(\ell \) values, then, there are \(2^\ell -2\) possible subsets of values of this feature that can be used for branching. To avoid overfitting, our model allows bounding the size of the subset used for branching.

While finding an optimal DT (even without the combinatorial decisions) is known to be an NP-hard problem [10], we show that with careful modeling, the resulting integer programs can be solved to optimality in a reasonable amount of time using commercial solvers such as Cplex. Moreover, since we directly optimize the empirical loss of a DT in our model, even suboptimal feasible solutions tend to yield classifiers that outperform those learned by other DT algorithms. In particular, we consider a binary classification problem, which means that the output nodes (leaves) of our DTs generate binary output. Our problem formulation takes particular advantage of this fact. Also, while our formulation can be generalized to real-valued data, it is designed for the case when the input data is binary. Hence, we will consider input data as being a binary vector with the property that features are grouped so that only one feature can take the value 1 in each group for each data sample. Our formulation explicitly takes this structure into account as we allow branching on any subset of the values of that feature. To our knowledge such generalized rules have not been addressed by any algorithm aiming at constructing optimal trees, such as a recent method proposed in [3], which we will discuss in the next section.

In this paper, we focus on constructing small DTs with up to four levels of decisions, which makes the resulting model clearly interpretable and easily usable by humans. Our formulation, in principle, can work for binary trees of any topology; however, as we will show in our computational results, trees of more complex topologies are much more time consuming to train and require larger training sets to avoid overfitting. The purpose of this paper is to show that if an accurate small (interpretable) tree exists for a given data set, it can be obtained in a reasonable time by our proposed model, while popular heuristic methods such as C4.5 [16] and random forests [6] tend to produce less accurate and less interpretable trees. We note that even though we mostly focus on categorical features, our approach can easily handle numerical features via tresholding. We discuss how to do this later and also present numerical experiments with data sets with both categorical and numerical features.

The key approach we pursue is to formulate the DT training problem as a mixed-integer optimization problem that is specially designed to handle categorical variables. We then propose several modifications that are intended to aid a branch-and-bound solver, e.g. symmetry breaking. We also consider an extension to a formulation that directly constrains either training sensitivity or training specificity and then maximizes the other measure.

The rest of the paper is organized as follows: First, in Sect. 2, we discuss related work in using integer formulations for practical machine learning. Then, in Sect. 3, we describe the main ideas of our approach and the structure of the data for which the model is developed. In Sect. 4 we describe an initial IP model and several techniques for strengthening this formulation. We present some computational results and comparisons in Sect. 5.

2 Related work

The idea of solving decision trees to optimality given a fixed topology is hardly new. In [5] from 1984, the authors discuss the “one-step optimality” of inductive (greedy) tree algorithms, and how one would ideally prefer an “overall optimal” method wherein the tree is learned in one step (such as the one we explore in this paper). The authors remark that this is analogous to a “best subset selection” procedure of linear regression, and continue to say that “At the current stage of computer technology, an overall optimal tree growing procedure does not appear feasible for any reasonably sized dataset”. In [14], the authors detail what they call the “look-ahead pathology” of greedy tree learning algorithms, lending further evidence of possible failures of greedy one-step methods.

In the 1990s several papers considered optimization formulations for optimal decision tree learning, but deliberately relaxed the inherently integer nature of the problem. In particular, in [1], a large-scale linear optimization problem, which can be viewed as a relaxation, is solved to global optimality via a specialized tabu search method over the extreme points of the linear polytope. In [2], a similar formulation is used, but this time combined with the use of support-vector machine techniques such as generalized kernels for multivariate decisions, yielding a convex nonlinear optimization problem which admits a favorable dual structure. More recent work [15] has employed a stochastic gradient method to minimize a continuous upper bound on misclassification error made by a deep decision tree. None of these methods, though, guarantee optimal decision trees, since they do not consider the exact (integer) formulations, such as the one discussed in this paper.

Recently, in [3], an integer model for optimal decision trees has been proposed. The key difference with the model in this paper is that [3] does not target categorical variables and, hence, does not exploit the resulting combinatorial structure. Moreover, all features are treated as real-valued ones, hence a categorical feature is replaced by several binary features, and two possible models are proposed. The first uses arbitrary linear combinations of features, and, in principal, is more general than what we propose here, but results in a loss of interpretability. The second uses the value of one feature in each branching decision, and hence is less general than the model in this paper. Additionally, we focus on binary classification problems whereas [3] presents a formulation for multi-class classification. Rather than fixing a tree topology, as we do, they propose tuning a regularization parameter in the objective; as the parameter magnitude increases, more leaf nodes may have no samples routed to them, effectively yielding shallower trees. We note that this does not simplify the underlying optimization problem, and moreover requires tuning parameters in a setting where the training of models is computationally non-negligible, and the effect of the choice of regularization parameter on the tree topology cannot be known a priori. In fact, in the computational results of [3], the depth is often fixed. Finally, unlike the work in [3], we not only propose a basic model that specifically exploits the categorical nature of the features, but we also propose several modifications of the model that produce stronger formulations and improve the efficiency of the branch-and-bound solver.

We would now like to remark on other relevant uses of integer optimization in classification settings. In particular, [18] considered the problem of learning optimal “or’s of and’s”, which fits into the problem of learning optimal disjunctive normal forms (DNFs), where optimality is measured by a trade-off between the misclassification rate and the number of literals that appear in the “or of and’s”. The work in [18] remarks on the relationship between this problem and learning optimal decision trees. In [18], for the sake of computational efficiency, the authors ultimately resort to optimally selecting from a subset of candidate suboptimal DNFs learned by heuristic means rather than solving their proposed mixed-integer optimization problem. Similarly, [13] proposes learning DNF-like rules via integer optimization, and propose a formulation that can be viewed as boolean compressed sensing, lending theoretical credibility to solving a linear programming relaxation of their integer problem. Another integer model that minimizes misclassification error by choosing general partitions in feature space was proposed in [4], but when solving the model, global optimality certificates were not easily obtained on moderately-sized classification datasets, and the learned partition classifiers rarely outperformed CART, according to the overlapping author in [3]. Finally, a column generation based mixed-integer programming approach to construct optimal DNFs was recently proposed in [8]. This approach seems to work quite well on several binary classification datasets including the FICO challenge data [9].

3 Setting

In this paper we consider datasets of the form \(\{(g^i_1,\ldots ,g^i_t,y^i):i\in 1,2,\ldots , N\}\) where \(g^i_j\in G_j\) for some finite set \(G_j\) for \(j=1,\ldots ,t\), and \(y^i\in \{-1,+1\}\) is the class label associated with a negative or positive class, respectively. For example, if the data is associated with a manufacturing process with t steps, then each \(G_j\) may correspond to a collection of different tools that can perform the jth step of the production process and the label may denote whether the resulting product meets certain quality standards or not. The classification problem associated with such an example is to estimate the label of a new item based on the particular different step-tool choices used in its manufacturing. Alternatively, the classification problem can involve estimating whether a student will succeed in graduating from high school based on features involving gender, race, parents marital status, zip-code and similar information.

Any (categorical) data of this form can alternatively be represented by a binary vector so that \(g^i_j\in G_j\) is replaced by a unit vector of size \(|G_j|\) where the only non-zero entry in this vector indicates the particular member of \(G_j\) that the data item contains. In addition, a real-valued (numerical) feature can be, when appropriate, made into a categorical one by “binning”—that is breaking up the range of the feature into segments and considering segment membership as a categorical feature. This is commonly done with features such as income or age of an individual. For example, for advertising purposes websites typically represent users by age groups such as “teens”, “young adults”, “middle aged”, and “seniors” instead of actual age.

The non-leaf nodes in a decision tree are called the decision nodes where a binary test is applied to data items. Depending on the results of these tests, the data item is routed to one of the leaf nodes. Each leaf node is given a binary label that determines the label assigned to the data by the DT. The binary tests we consider are of the form “does the jth feature of the data item belong to set \(\bar{G}_j\)?”, where \(\bar{G}_j\subseteq G_j \). If the categorical data is represented by a binary vector, then the test becomes checking if at least one of the indices from a given collection contains a 1 or not. We do not consider more general tests that might check different conditions on multiple features.

As a concrete example, consider the tree in Fig. 1 applied to binary vectors \(a\in \{0,1\}^6\) whose elements are divided into two groups: \(\{a_1, a_2, a_3, a_4\}\) and \(\{a_5, a_6\}\) corresponding to two categorical features in the original data representation. The branching decision at node 1 (the root), is based on whether one of \(a_1\) or \(a_2\) is equal to 1. If true, a given data sample is routed to the left, otherwise (that is, if both \(a_1\) and \(a_2\) are 0), the sample is routed to the right. The branching at nodes 2 and 3 (the two children of node 1) are analogous and are shown in the picture. We can now see that data samples \(s^1=(1, 0, 0, 0, 0, 1)\) and \(s^2=(0,1, 0, 0, 0, 1)\) are routed to leaf node 1, sample \(s^3=(0,0,1, 0, 1,0)\) is routed to leaf node 3, and samples \(s^4=( 0, 0, 0, 1,1,0)\) and \(s^5=(0,0,1, 0, 1,0)\) are routed to leaf node 4. The labels of the leaf nodes are denoted by the colors white and gray in Fig. 1.

Formally, a DT is defined by (i) the topology of the tree, (ii) binary tests applied at each decision node, and, (iii) labels assigned to each leaf node. Throughout the paper we consider tree topologies where a decision node either has two leaf nodes or else has two other decision nodes as children. Note that decision trees defined this way are inherently symmetric objects, in the sense that the same DT can be produced by different numberings of the decision and leaf nodes as well as different labeling of the leaf nodes and the binary tests applied at the decision nodes. For example, reversing the binary test from \((a_6)\) to \((\lnot a_6)\) in decision node 2, and at the same time flipping the labels of the leaf nodes 1 and 2, results in an identical DT. More generally, it is possible to reverse the binary test at any decision node and “flip” the subtrees rooted at that node to obtain the same tree.

The optimization problem we consider in the next section starts with a given tree topology and finds the best binary tests (and labels for the leaf nodes) to classify the test data at hand with minimum error. Due to the symmetry discussed above, we can fix the labeling of the leaf nodes at the beginning of the process and the problem reduces to finding the best binary tests, or equivalently, choosing a categorical feature and a subset of its realizations at each decision node. Therefore, the optimization problem consists of assigning a binary test to each decision node so as to maximize the number of correctly classified samples in the training set. We say that the classification of the ith sample is correct provided the path the ith sample takes through the tree starting from the root node ends at a leaf corresponding to the correct label. The ultimate goal of the process, however, is to obtain a DT that will classify new data well, i.e., we are actually concerned with the generalization ability of the resulting DT.

Notice that given two tree topologies such that one is a minor of the other (i.e. it can be obtained from the other by deleting nodes and contracting edges), the larger tree would always be able to classify at least as many samples correctly as the smaller one on the training data. Consequently, for optimization purposes, larger trees always perform better than any of its minors. However, larger trees generally result in more computationally challenging optimization problems. In addition, smaller trees are often more desirable for classification purposes as they are more robust and are easier to interpret.

4 Integer programming formulation

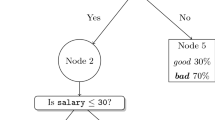

In this section, we first present the basic integer programming formulation and then describe some enhancements to improve its computational efficiency. We initially assume that the topology of the binary tree is given (see Fig. 2) and therefore the number of decision and leaf nodes as well as how these nodes are connected is known. We will then describe how to pick a good topology. The formulation below models how the partitioning of the samples is done at the decision nodes, and which leaf node each sample is routed to as a result.

We begin by introducing the notation. Let the set of all samples be indexed by \(I = \{1,2,\ldots ,{|I|}\}\), let \(I_{+}\subset I\) denote the indices of samples with positive labels and let \(I_{-}=I\setminus I_{+}\) denote the indices of the samples with negative labels. Henceforth, we assume that for each sample the input data is transformed into a binary vector where each categorical feature is represented by a unit vector that indicates the realization of the categorical feature. With some abuse of terminology, we will now refer to the entries of this binary vector as “features”, and the collection of these 0/1 features that are associated with the same categorical feature as “groups”. Let the set of groups be indexed by \(G=\{1,2,\ldots ,|G|\}\) and the set of the 0/1 features be indexed by \( J =\{1,2,\ldots ,{|J|}\}\). In addition, let J(g) denote the set of features that are contained in group g. In the example associated with Fig. 1 above, we have \(G=\{1,2\}\), \(J=\{1,2,3,4,5,6\}\), and \(J(1)=\{1,2,3,4\}\), \(J(2)=\{5,6\}.\) For sample i, we denote the value of its jth feature by \(a^i_j\).

Let the set of decision nodes be indexed by \(K=\{1,2,\ldots ,|K|\}\) and the set of leaf nodes be indexed by \(B=\{1,2,\ldots ,|B|\}\). We denote the indices of leaf nodes with positive labels by \(B_{+}\subset B\) and the indices of leaf nodes with negative labels by \(B_{-} = B\setminus B_{+}\). For convenience, we let \(B_{+}\) contain even indices, and \(B_{-}\) contain the odd ones.

4.1 The basic formulation

We now describe our key decision variables and the constraints on these variables. We use binary variables \(v_g^k\in \{0,1\}\) for \(g\in G\) and \( k\in K\) to denote if group g is selected for branching at decision node k. As discussed in Sect. 3, exactly one group has to be selected for branching at a decision node; consequently, we have the following set of constraints:

The second set of binary variables \( z_j^k\in \{0,1\}\) for \(j\in J\) and \( k\in K\) are used to denote if feature j is one of the selected features for branching at a decision node k. Clearly, feature \(j\in J\) can be selected only if the group containing it is selected at that node. Therefore,we have the following set of constraints:

in the formulation. Without loss of generality, we use the convention that if a sample has one of the selected features at a given node, it follows the left branch at that node; otherwise it follows the right branch.

Let

and note that for any \((v,z)\in S\) one can construct a corresponding decision tree in a unique way and vice versa. In other words, for any given \((v,z)\in S\) one can easily decide which leaf node each sample is routed to. We next describe how to relate these variables (and therefore the corresponding decision tree) to the samples.

We use binary variables \(c_b^i\in \{0,1\}\) for \(b\in B\) and \(i\in I\) to denote if sample i is routed to leaf node b. This means that variable \(c_b^i\) should take the value 1 only when sample i exactly follows the unique path in the decision tree that leads to leaf node b. With this in mind, we define the expression

and make the following observation:

Proposition 1

Let \((z,v)\in S\). Then, for all \(i\in I\) and \(k\in K\) we have \(L(i,k)\in \{0,1\}\) . Furthermore, \(L(i,k)=1\) if and only if there exists some \(j\in J\) such that \(a_{j}^i=1\) and \(z_{j}^k=1\).

Proof

For any \((z,v)\in S\) and \(k\in K\), exactly one of the \(v_g^k\) variables, say \(v_{g'}^k\), takes value 1 and \(v_g^k=0\) for all \(g\not = g'\). Therefore, \(z_{j}^k=0\) for all \(j\not \in J(g).\) Consequently, the first part of the claim follows for all \(i\in I\) as \(L(i,k) = \sum _{j\in J} a_j^iz_j^k=\sum _{j\in J(g')} a_j^iz_j^k= z_{j_i}^k\in \{0,1\}\) where \(j_i\in J(g')\) is the index of the unique feature for which \(a_{j_i}^i=1\). In addition, \(L(i,k)=1\) if and only if \(z_{j_i}^k=1\) which proves the second part of the claim. \(\square \)

Consequently, the expression L(i, k) indicates if sample \(i\in I\) branches left at node \(k\in K\). Similarly, we define the expression

to indicate if sample i branches right at node k.

To complete the model, we relate the expressions L(i, k) and R(i, k) to the \(c_b^i\) variables. Given that the topology of the tree is fixed, there is a unique path leading to each leaf node \(b\in B\) from the root of the tree. This path visits a subset of the nodes \(K(b)\subset K\) and for each \(k\in K(b)\) either the left branch or the right branch is followed. Let \(K^L(b)\subseteq K(b)\) denote the decision nodes where the left branch is followed to reach leaf node b and let \(K^R(b)=K(b)\setminus K^L(b)\) denote the decision nodes where the right branch is followed. Sample i is routed to b only if it satisfies all the conditions at the nodes leading to that leaf node. Consequently, we define the constraints

for all \(i\in I\) and \(b\in B\). Combining these with the equations

gives a complete formulation. Let

We next formally show that combining the constraints in S and Q(z, v) gives a correct formulation.

Proposition 2

Let \((z,v)\in S\), and let \(c\in Q(z,v)\). Then, \(c^i_b\in \{0,1\}\) for all \(i\in I\) and \(b\in B\). Furthermore, if \(c^i_b=1\) for some \(i\in I\) and \(b\in B\), then sample i is routed to leaf node b.

Proof

Given \((z,v)\in S\) and \(i\in I\), assume that the correct leaf node sample i should be routed to in the decision tree defined by (z, v) is the leaf node \(b'\).

For all other leaf nodes \(b\in B\setminus \{b'\}\), sample i either has \(L(i,k)=0\) for some \( k\in K^L(b)\) or \(R(i,k)=0\) for some \( k\in K^R(b)\). Consequently, \(c^i_b=0\) for all \(b\not =b'\). Equation (7) then implies that \(c^i_{b'}=1\) and therefore \(c^i_b\in \{0,1\}\) for all \(b\in B\). Conversely, if \(c^i_{b'}=1\) for some \(b'\in B\), then \(L(i,k)=1\) for all \( k\in K^L(b)\) and \(R(i,k)=1\) for all \( k\in K^R(b)\). \(\square \)

We therefore have the following integer programming (IP) formulation:

where C in the objective (8a) is a constant weight chosen in case of class imbalance. For instance, if a training set has twice as many good examples as bad examples, it may be worth considering setting \(C=2\), so that every correct classification of a bad data point is equal to two correct classifications of good data points.

Notice that formulation (8) allows solutions where all samples follow the same branch. For example, it is possible to have a solution where a branching variable \( v^k_{g}=1\) for some \(k\in K\) and \(g\in G\), and at the same time \(z_j^k=0\) for all \(j\in J(g)\). In this case \(L(i,k)=0\) for all \(i\in I\) and all samples follow the right branch. It is possible to exclude such solutions using the following pair of constraints:

for all \(k\in K\) and \(g\in G\). These constraints enforce that if a group is selected for branching, then at least one, but not all, of its features should be selected. We should note that in our experiments we have not seen any benefit from using these inequalities and decided not to include them in the formulation.

4.2 Choosing the tree topology

The IP model (8) finds the optimal decision tree for a given tree topology which is an input to the model. It is possible to build a more complicated IP model that can also build the tree topology (within some restricted class) but for computational efficiency, we decided against it. Instead, for a given dataset, we use several fixed candidate topologies and build a different DTs for each one of them. We then pick the most promising one using cross-validation. The four tree topologies we use are the balanced depth-3 tree shown in Fig. 2 and the additional trees shown in Fig. 3.

Note that the first two trees presented in Fig. 3 can be obtained as a minor of the balanced depth-3 tree shown in Fig. 2 and therefore, the optimal value of the model using the balanced depth-3 tree will be at least as good as that of the smaller trees. Similarly, these two trees can also be obtained as a subtree of the last tree in Fig. 3. However, due to possible overfitting, the larger trees might perform worse than the smaller ones on new data (in testing). As we will show via computational experiments, training smaller trees take fraction of the time compared to training larger trees, hence training a collections of trees of increasing topologies is comparable to training one large tree.

4.3 Computational tractability

While (8) is a correct formulation, it can be improved to enhance computational performance. We next discuss some ideas that help reduce the size of the problem, break symmetry and strengthen the linear programming relaxation. We first observe that the LP relaxation of (8), presented explicitly below, is rather weak.

Note that we do not need an explicit upper bound of 1 on the variables as it is implied by other constraints. Also note that as \(\sum _{b\in B} c_b^i \le 1\), for all \(i\in I\), the optimal value of the LP relaxation is at most \(| I_{+}|+C| I_{-}|\). Assuming that the decision tree has at least two levels, we will next construct a solution to the LP that attains this bound. Moreover, this solution would also satisfy \(v^k_{g}\in \{0,1\}\) for all \(k\in K\) and \(g\in G\).

As the decision tree has at least two levels, both the left and right branches of the root node contain a leaf node in \( B_{+}\) as well as a leaf node in \( B_{-}\). Let \(b_-^L,b_-^R\in B_-\) and \(b_+^L,b_+^R\in B_+\) where \(b_-^L\) and \(b_+^L\) belong to the left branch and \(b_-^R\) and \(b_+^R\) belong to the right branch. For an arbitrary \(\bar{g}\in G\), we construct the solution (z, v, c) as follows: First we set \(v^k_{\bar{g}}=1\) for all \(k\in K\) and \( z_j^k=1/2\) for all \(k\in K\) and \(j\in J(\bar{g})\). We then set \(c_b^i=1/2\) for \(b\in \{b_+^L,b_+^R\}\) for all \(i\in I_{+}\) and set \(c_b^i=1/2\) for \(b\in \{b_-^L,b_-^R\}\) for all \(i\in I_{-}\). We set all the remaining variables to zero. Notice that \(\sum _{b\in B_{-}} c_b^i = 1\) for \(i\in I_{-}\) and \(\sum _{b\in B_{+}} c_b^i = 1\) for \(i\in I_{+}\) and therefore the value of this solution is indeed \(| I_{+}|+C| I_{-}|\). To see that the this solution is feasible for the LP relaxation of (8), first note that \( \sum _{g\in G} v^k_g = 1 \) for all \(k\in K\) and \( z_j^k\le v^k_{g} \) for all \(j\in J(g),~g\in G,\) and \( k\in K \). Also notice that \(L(i,k)=R(i,k)=1/2\) for all \( i\in I\) and \(k\in K\), which implies that (11) and (12) are also satisfied for all \( i\in I\) and \(k\in K\).

4.3.1 Relaxing some binary variables

The computational difficulty of a MILP typically increases with the number of integer variables in the formulation and therefore it is desirable to impose integrality on as few variables as possible. We next show that all of the v variables and most of the z variables take value \(\{0,1\}\) in an optimal solution even when they are not explicitly constrained to be integral.

Proposition 3

Every extreme point solution to (8) is integral even if (i) variables \(v^k_g\) are not declared integral for all \(g\in G\) and decision nodes \(k\in K\), and, (ii) variables \(z^k_j\) are not declared integral for \(j\in J\) and decision nodes \(k\in K\) that are adjacent to a leaf node.

Proof

Assume the claim does not hold and let \(\bar{p} = (\bar{v},\bar{z},\bar{c})\) be an extreme point solution that is fractional. Let \(K^L\subset K\) denote the decision nodes that are adjacent to leaf nodes and consider node \(a\not \in K^L\). First note that if \(\bar{v}^{a}_{b}\) is fractional, that is, if \(1>\bar{v}^{a}_{b}>0\) for some feature group \(b\in G\), then \(1>\bar{v}^a_g\) for all groups \(g\in G\) as \(\sum _{g\in G}\bar{v}^a_g=1\). Consequently, for this decision node we have all \(\bar{z}^a_j=0\) as \(\bar{z}^a_j\in \{0,1\}\) for \(j\in J\). This also implies that \(L(i,a)=0\) for all \(i\in I\). In this case, for any \( g\in G\), the point \(\bar{p}\) can be perturbed by setting the \( v^{a}_{ g}\) variable to 1 and setting the remaining \( v^{a}_{*}\) variables to 0 to obtain a point that satisfies the remaining constraints. A convex combination of these perturbed points (with weights equal to \(\bar{v}^{a}_{g}\) ) gives the point \(\bar{p}\), a contradiction. Therefore all \(\bar{v}^k_g\) are integral for \(g\in G\) and \(k\in K\setminus K^L\).

Therefore, if \(\bar{p}\) is fractional, then at least one of the following must hold: either (i) \(1>\bar{v}^{k}_{g}>0\) for some \(k\in K^L\) and \(g\in G\), or, (ii) \(1>\bar{z}^k_j>0\) for some \(k\in K^L\) and \(j\in J\), or, (iii) \(1>c^i_b>0\) for some \(b\in B\) and \(i\in I\). As all these variables are associated with some decision node \(k\in K^L\), we conclude that there exists a decision node \(a\in K^L\) for which either \(1>\bar{v}^{a}_{g}>0\) for some \(g\in G\), or, \(1>\bar{z}^a_j>0\) for some \(j\in J\), or, \(1>c^i_b>0\) for some \(i\in I\) and \(b\in \{b_+,b_-\}\) where \(b_+\in B_+\) and \(b_-\in B_-\) are the two leaf nodes attached to decision node a on the left branch and on the right branch, respectively.

Let \( I_a^+\) denote the set of samples in \(I^+\) such that \(\bar{c}^i_{b_+}>0\) and similarly, let \( I_a^-\) denote the set of samples in \(I^-\) such that \(\bar{c}^i_{b_-}>0\). If \(\bar{c}^i_{b_+}\not = L(i,a)\), for some \(i\in I_a^+\), then point \(\bar{p}\) can be perturbed by increasing and decreasing \(\bar{c}^i_{b_+}\) to obtain two new points that contain \(\bar{p}\) in their convex hull, a contradiction. Note that \(L(i,k) \in \{0,1\}\) for all \(i\in I\) and \(k\in K\setminus K^L\) and therefore these two points indeed satisfy all the constraints. Consequently, we conclude that \(\bar{c}^i_{b_+} = L(i,a)\) for all \(i\in I_a^+\). Similarly, \(\bar{c}^i_{b_-} = 1-L(i,a)\) for all \(i\in I_a^-\). Notice that this observation also implies that, if \(\bar{c}^i_{b_+}\) is fractional for some \(i\in I_a^+\) or \(\bar{c}^i_{b_-}\) is fractional for some \(i\in I_a^-\), then L(i, a) is also fractional, which in turn implies that for some feature \(h\in J\) we must have \(\bar{z}^a_h>0\) fractional as well.

Now assume there exists a feature \(h\in J(g)\) such that \(v^a_{g}>\bar{z}^a_h>0\). In this case increasing and decreasing \(\bar{z}^a_h\) by a small amount and simultaneously updating the values of \(\bar{c}^i_{b_+}\) for \(i\in I_a^+\) and \(\bar{c}^i_{b_-}\) for \(i\in I_a^-\) to satisfy \(\bar{c}^i_{b_+} = L(i,a)\) and \(\bar{c}^i_{b_-} = 1-L(i,a)\) after the update, leads to two new points that contain \(\bar{p}\) in their convex hull. Therefore, we conclude that \(\bar{z}^a_h\) is either zero, or \(\bar{z}^a_h = \bar{v}^a_{g}\).

So far, we have established that if \(\bar{c}^i_b\) is fractional for some \(i\in I_a^-\cup I_a^+\) and \(b\in \{b_+,b_-\}\), then there is a fractional \(\bar{z}^a_j\) variable for some feature \(j\in J\). In addition, we observed that if there is a fractional \(\bar{z}^a_j\) for some \(j\in J\) then there is a fractional \(\bar{v}^a_g\) for some \(g\in G\). Therefore, if \(\bar{p}\) is not integral, there exists a feature group \(d\in G\) such that \(1>\bar{v}^a_d>0\). As \(\sum _{g\in G}\bar{v}^a_g=1\), this implies that there also exists a different group \(e\in G\setminus \{d\}\) such that \(1>\bar{v}^a_e>0\).

We can now construct two new points that contain \(\bar{p}\) in their convex hull as follows: For the first point we increase \(\bar{v}^a_d\) and decrease \(\bar{v}^a_e\) by a small amount and for the second point we do the opposite perturbation. In addition, for both points we first update the values of \(\bar{z}^a_j\) for all \(j\in J(d)\cup J(e)\) and \(\bar{z}^a_j>0\) so that \(\bar{z}^a_j = \bar{v}^a_{d}\) for all \(j\in J(d)\) and \(\bar{z}^a_j = \bar{v}^a_{e}\) for all \(j\in J(e)\). Finally, we perturb the associated \(\bar{c}^i_b\) variables for \(i\in I_a^-\cup I_a^+\) and \(b\in \{b_+,b_-\}\) so that \(\bar{c}^i_{b_+} = L(i,a)\), for \(i\in I_a^+\), and \(\bar{c}^i_{b_-} = 1-L(i,a)\) for all \(i\in I_a^-\). Both points are feasible and therefore we can conclude that \(\bar{p}\) is not an extreme point, which is a contradiction. Hence \(\bar{p}\) cannot be fractional. \(\square \)

We have therefore established that the v variables do not need to be declared integral and the only z variables that need to be declared integral in the formulation (8) are the feature selection variables \(z^k_j\) for all features \(j\in J\) and decision nodes \(k\in K\) that are not adjacent to a leaf node.

4.3.2 Deleting unnecessary variables

Notice that the objective function (8a) uses variables \(c_b^i\) only if it corresponds to a correct classification of the sample (i.e., \({i\in I_{+}}\) and \({b\in B_{+}}\), or \({i\in I_{-}}\) and \( {b\in B_{-}}\)). Consequently, the remaining \(c_b^i\) variables can be projected out of the formulation without changing the value of the optimal solution. We therefore only define \(c_b^i\) variables for

and write constraints (5) and (6) for these variables only. In addition, This reduces the number of c variables and the associated constraints in the formulation by a factor of one half. In this projected formulation equation (7) becomes

4.3.3 Relaxing more binary variables

Also note that the objective function (8a) is maximizing a (weighted) sum of \(c_b^i\) variables and the only constraints that restrict the values of these variables are inequalities (5), (6) and (7) which all have a right hand side of 0 or 1. Consequently, replacing the integrality constraints \(c_b^i\in \{0,1\}\) with simple bound constraints \(1\ge c_b^i\ge 0\), still yields optimal solutions that satisfy \(c_b^i\in \{0,1\}\). Hence, we do not require \(c_b^i\) to be integral in the formulation and therefore significantly reduce the number of integer variables. Thus, we have a formulation for training optimal decision trees, where the number of integer variables is independent of the number of samples.

4.3.4 Strengthening the model

We next present valid inequalities for (8) that can be used to strengthen its LP relaxation. Consider inequalities (5)

for \(i\in I\), \(b\in B\) and \(k\in K^L(b)\) where \(K^L(b)\) denotes the decision nodes where the left branch is followed to reach the leaf node b. Also remember that \(\sum _{b\in B}c^i_b=1\) for \(i\in I\) due to Eq. (7).

Now consider a fixed \(i\in I\) and \(k\in K\). If \( L(i,k)=0\), then \(c^i_b=0\) for all b such that \(k\in K^L(b)\). On the other hand, if \( L(i,k)=1\) then at most one \(c^i_b=1\) for b such that \(k\in K^L(b)\). Therefore,

is a valid inequality for all \({i\in I}\) and \(k\in K\). While this inequality is satisfied by all integral solutions to the set Q(z, v), it is violated by some of the solutions to its continuous relaxation. We replace the inequalities (5) in the formulation with (11) to obtain a tighter formulation. We also replace inequalities (6) in the formulation with the following valid inequality:

for all \({i\in I}\) and \(k\in K\). Note that, by definition, \( L(i,k)+ R(i,k)=1\) for all \(i\in I\) and \(k\in K\), and consequently, adding inequalities (11) and (12) for the root node of the decision tree implies that \(\sum _{b\in B} c_b^i \le 1\) for all \(i\in I\).

When using inequalities (11) and (12) in the projected formulation described in Sect. 4.3.2, we write these inequalities with the \(c^i_b\) variables whose indices are contained in the set described in (10). Moreover, in this case adding the inequalities associated with the root node of the decision tree yields \( \sum _{b\in B^+}c^i_b\le 1\) for \(i\in I_{+}\) and \( \sum _{b\in B^-}c^i_b\le 1\) for \(i\in I_{-}\). Therefore, the projected version of (7) described in Sect. 4.3.2 becomes redundant.

4.3.5 Breaking symmetry: anchor features

If the variables of an integer program can be permuted without changing the structure of the problem, the integer program is called symmetric. This poses a problem for MILP solvers (such as Cplex) since the search space increases exponentially, see Margot (2009). The formulation (8) falls into this category as there may be multiple alternative solutions that represent the same decision tree. In particular, as we have discussed earlier in the paper, we consider a decision node that is not adjacent to leaf nodes and assume that the subtrees associated with the left and right branches of this node are symmetric (i.e. they have the same topology). In this case, if the branching condition is reversed at this decision node (in the sense that the values of the v variables associated with the chosen group are flipped), and, at the same time, the subtrees associated with the left and right branches of this node are switched, one obtains an alternative solution to the formulation corresponding to the same decision tree. To avoid this, we designate one particular feature \(j(g)\in J(g)\) of each group \(g\in G\) to be the anchor feature of that group and enforce that if a group is selected for branching at such a node, samples with the anchor feature follow the left branch. More precisely, we turn one of the inequalities in (2) to an equation and add the following to the formulation:

for all \(g\in G\), and all \(k\in K\) that is not adjacent to a leaf node and has symmetric subtrees hanging on the right and left branches. While Eq. (13) lead to better computational performance, they do not exclude any decision trees from the feasible set of solutions.

4.4 Controlling overfitting due to combinatorial branching

As mentioned earlier, combinatorial branching may lead to overfitting when |J(g)| is large for a categorical feature \(g\in G\) as there are \(2^{|J(g)|}\) possible ways to branch using this feature. To avoid overfitting, we require the size of the subset used for branching to be either at most max.card or at least \((|J(g)|-max.card)\) for some input parameter max.card. To this end, for each node \(k\in K\) and for each group \(g\in G\) that corresponds to a categorical feature with \(|J(g)|>max.card\), we create an additional variable \(x_g^k\) and include the following constraints in the formulation,

We note that these new variables can also be used to break symmetry in the problem. Instead of using anchor features, one can simply set all \(x_g^k\) variables to 1 for \(g\in G\) whenever \(k\in K\) is not adjacent to a leaf node and has symmetric subtrees hanging on the right and left branches. Similar to using anchor features, this restriction would exclude one of the solutions obtained by reversing the branching condition at a decision node (i.e. flipping the values of the v variables associated with the chosen group), and, switching the subtrees associated with the left and right branches of this node.

4.5 Handling numerical features

To handle numerical features, we simply turn them into categorical features by binning them into intervals using deciles as thresholds. Consequently, each numerical feature becomes a categorical feature with (up to) 10 possible values, depending on the decile it belongs to. Therefore, one can use the model described above without any further changes. However, this might lead to decision trees that branch on, for example, whether or not a numerical feature belongs to the second or seveth quantiles, which of course is not a very interpretable condition. It is therefore desirable to branch on these features in a way that captures their ordinal nature. To this end, we add additional constraints for these features to ensure that the branching decisions correspond to “less than or equal to” or “greater than or equal to” conditions.

More precisely, for each node \(k\in K\) and for each group \(g\in G\) that corresponds to a numerical feature, we create an additional variable \(w_g^k\) to denote if the branching condition is of “greater than or equal to” or “less than or equal to” form. We then require the associated \(z^k_j\) variables for \(j\in J(g)\) to take either increasing (when \(w_g^k=1\)) or decreasing values (when \(w_g^k=0\)). The additional constraints are,

We note that it is possible to enforce “less than or equal to” or “greater than or equal to” form without using the additional variables w, by binarizing numerical features differently, see [8, 19]. However in this case the LP formulation becomes more dense and overall solution times are significantly slower.

We also note that an alternative way to break symmetry in this case is to set all \(w^k_{g}\) variables to 1 for \(g\in G\) (without loss of generality) whenever \(k\in K\) is not adjacent to a leaf node and has symmetric subtrees hanging on the right and left branches. For balanced trees this property is satisfied for all non-leaf nodes. Fixing w variables this way enforces that the left branch of a decision node will check if the greater than or equal to condition holds for the associated numerical feature. Clearly, if this symmetry breaking rule is used, one should not use anchor features described in Sect. 4.3.5.

4.6 Maximizing sensitivity/specificity

In many practical applications, especially those involving imbalanced datasets, the user’s goal is to maximize sensitivity (the true positive rate, or TPR), while guaranteeing a certain level of specificity (the true negative rate, or TNR), or vice versa, instead of optimizing the total accuracy. While such problems cannot be addressed with heuristics such as CART (except by a trial-and-error approach to reweighting samples), our model (8) readily lends itself to such a modified task. For example, if we intend to train a classifier with a guaranteed specificity (on the training set) of 0.95, then we simply add the following constraint to (8)

and change the objective function (8a) to

Likewise, we can produce a model that maximizes specificity while guaranteeing a certain level of sensitivity by switching the expressions in the constraint (14) and objective (15).

5 Computational results

We now turn to computational experiments for which we used a collection of 10 binary (two-class) classification datasets. We obtained two of these datasets (a1a and breast-cancer-wisconsin) from LIBSVM [7], one from FICO Explainable Machine Learning Challenge [9] and the remaining 7 from the UCI Machine Learning repository [12]. These datasets were selected because they fit into our framework as the majority of their variables are either binary or categorical. Each dataset was preprocessed to have the binary form assumed by the formulation, with identified groups of binary variables. A summary description of the problems is given in Table 1.

Each dataset/tree topology pair results in a MILP instance, which we implemented in Python 2.7 and then solved with Cplex version 12.6.1 on a computational cluster, giving each instance access to 8 cores of an AMD Opteron 2.0 GHz processor. Throughout this section, we will refer to our method as ODT (Optimal Decision Trees).

5.1 Tuning the IP model

We begin with some computational tests to illustrate the benefit of various improvements to the IP model that were discussed in \(\S \)4.3. We only show results for five of the datasets: a1a, bc, krkp, mush and ttt, since for the other datasets, aside from heloc, the IP is solved quickly and the effect of improvements is less notable, while for heloc the time limit was reached in all cases.

We note that the deletion of unnecessary variables discussed in \(\S \)4.3.2 seems to be performed automatically by Cplex in preprocessing, and so we do not report results relevant to this modeling choice. However, we experiment with anchoring adding (13) (\(\S \)4.3.5), relaxing appropriate z variables and c variables (\(\S \)4.3.1 and \(\S \)4.3.1), and strengthening the model using additional constraints (11) and (12) (\(\S \)4.3.4). In particular, we compare the model where none of the above techniques are applied and using the formulation (8) (Nothing), only relaxation and strengthening (11) and (12) are applied (No Anchor), only anchoring (13) and strengthening (11) and (12) are applied (No Relax), only anchoring (13) and relaxation are applied (No Strength) and finally when all of the techniques are applied (All).

In Table 2 we show the results for symmetric DTs of depths 3, while using reduced datasets of 200 randomly subsampled data instances. In each column we list the total time in seconds it took Cplex to close the optimality gap to below the default tolerance and the total number of LPs solved in the process. In the case when Cplex exceeded 3 h, the solve is terminated and a ”*” is reported instead of the time.

As we see from Table 2, the data set with 200 data points make the IP difficult to solve for some data sets, such as a1a, student and heloc but is easy to some others, such as bc and mush. Hence in Table 3 we show results for various sizes of data, selected so that the corresponding IP is not trivial but is still solvable within three hours.

We can conclude from Tables 2 and 3 that our proposed strategies provide significant improvement in terms of computational time. In some cases, turning off an option may outperform using all options; for example, turning off variable strengthening improves computational time for a1a and mush compared to the All option in Table 2 and for krkp and student in Table 3 However, the All option consistently dominates other options in the majority of the cases, hence we conclude that using all proposed improvements is the best overall strategy.

Next we show the dependence of computational time on the tree topology and the size of the data set. In Table 4 we report these results for the krkp, a1a, and bc data set each averaged over five runs with random sample selection. Here, by depth-2.5 we refer to the topology shown in the upper right corner of Fig. 3, and by imbalanced, we refer to the topology shown in the bottom of Fig. 3. In these experiments we terminated each Cplex run after 3 h and when this happens on all five runs we report ”*” in the tables instead of the time. In the case when some runs terminated in less than two hours and some did not, we averaged the times of the finished runs and reported the time in the able, followed by ”(*)”.

As one would expect, Table 4 shows that solving the IP to optimality becomes increasingly more difficult when the sample size increases and when the tree topology becomes more complicated. However, the increase in solution time as sample size increases differs significantly among different datasets for the same tree topology depending on the number of features and groups of the dataset as well as how well the data can be classified using a decision tree. Note that even though the imbalanced trees and depth-3 trees have the same number of nodes, solving the IP for imbalanced trees is more challenging. We believe that this is at least partly due to the fact that symmetry breaking using anchor features has to be disabled at the root node of imbalanced trees, as the tree is not symmetric. To confirm this we switched off symmetry breaking for depth-3 trees and the solutions time general increased dramatically. For example for a1a the corresponding row of the table became \([3469.1(*), \ 6430.8(*), \ *, \ *, \ *, \ * ]\) which means that some of the instances with 100 and 200 samples did not solve to optimality and for solved instances the average time for 100 samples increased from 364.4 to 3469.1 s and time for 200 samples increased from 1975.6 to 6430 s. Moreover, none of the larger instances finished solving. For bc the corresponding row of the table became \([16.5,\ 2538.7,\ 4477.4(*),\ 6721.6(*),\ 6729.9(*),\ *]\).

Restricting the number of features in the data can significantly reduce computational time. To demonstrate this, we run the following experiments: we first repeatedly apply the CART algorithm to each data set, using 90% of the data and default setting and thus not applying any particular restriction of the size of the tree. We then select groups that have been used for branching decision at least once in the CART tree. We then remove all other feature groups from the IP formulation (by setting the corresponding v variables to 0) and apply our ODT model to the reduced problem. On average this procedure reduced the number of original features (groups) in a1a from 14 to 7.2, in bc from 9 to 2.4, and in krkp from 36 to 8. The effect of this reduction on the solution time is illustrated in Table 5. We can see that in many cases significant improvement in terms of time is achieved over results reported in Table 4. We will discuss the effect of the feature selection on the prediction accuracy later in Sect. 5.4.

5.2 Effect of combinatorial branching

We next make a comparison to see the effect of the constraint on combinatorial branching for categorical data which is discussed in Sect. 4.4. When using this constraint with \(max.card=1\) we recover “simple” branching rules where branching is performed using only one possible value of the feature, as is done in [3]. We compare simple branching denoted as simple, constrained branching using \(max.card=2\), denoted by comb-con and unconstrained branching, denoted as comb-unc. We have also tried \(max.card=3\) and \(max.card=4\), but \(max.card=2\) consistently gave better testing accuracy than the other values. We only show the results for two data sets, a1a and mush because for the other data sets combinatorial branching did not produce different results as most of the categorical features had only 2 or 3 possible values. We compare decision trees of depths 2 and 3 trained using data sets of size 600. Results averaged over five runs are shown in Table 6.

We see that for mush using combinatorial branching makes a significant improvement. In particular, for depth-3 trees and even without max cardinality constraint, it achieves a 99.3% out-of-sample accuracy compared to 97.7% for simple branching. We show the optimal depth-3 tree for mush dataset in Fig. 4. However, for a1a—even though unconstrained combinatorial branching achieves good training accuracy they do not generalize as well as simple branching rules. In particular, the a1a dataset contains one group (occupation) with many different possible values. Branching on this group results in combinatorially many possible decisions which leads to overfitting. Adding a constraint with \(max.card=2\) remedies the situation, while still providing a small improvement over simple branching.

5.3 Effect of constraints for numerical features

Here we compare the effect of special constraints introduced for the numerical features in Sect. 4.5. The results of this comparison are shown in Table 7. When the constraint is imposed, the feature group is treated as numerical, and this formulation is label with ”n”, for numerical. When the constraint is not imposed, then the group is treated as if the original feature is categorical, and the formulation is labeled with ”c”, for categorical. We compare both accuracy and time averaged from 5 runs with 30 mins limit.

We observe that overall adding the special constraint to impose the numerical nature of the group improves the testing accuracy and saves computational time.

5.4 Comparison with CART depth-3 trees

We next focus on comparing the accuracy of ODTs with CART. We consider 4 different tree topologies for ODTs: depth-2, depth-2.5, depth-3 and imbalanced. We use CART as implemented in the package rpart for R [17]. We compare the performance of ODT to CART by restricting the maximum depth of the learned CART trees to 3, thus allowing at most 8 leaf nodes, which is the maximum that our trees can have. We note that this does not mean the learned CARTs have the same topology as our ODTs. In fact, we found that due to various pruning heuristics, the topologies of the trees learned by CART vary erratically and in most cases the tree has much fewer that 8 leaves, as is shown in Table 8. On the other hand, in a later section we show that when CART is not restricted to maximum depth-3 the resulting trees are much larger.

We also investigate the effect of feature selection by running CART first and considering only the features used by CART in constructing ODTs. For each dataset, we generate five random training/testing splits of the dataset by sampling without replacement and report the averages. We use \(90\%\) of the data for training CART and we use \(\min \{90\%,600\}\) data points for training ODTs.

In Tables 8 and 9 we show the results for ODTs trained for up to 30 min with and without feature selection, respectively, and compare with CART trees of depth-3. In both tables we list the average training and testing accuracy, in percentages, over the five runs. We highlight in bold the best testing accuracy achieved by the ODTs if it is more than \(1\%\) larger than that achieved by CART, and reversely, highlight accuracy of CART when it is more than \(1\%\) larger than best accuracy of ODT. The standard deviation in all cases is fairly small, typically around \(0.2-0.3\%\).

In Table 8 we see that testing accuracy achieved by ODTs after 30 min of training is significant better than that of depth-3 CART. Comparing Tables 8 and 9, we see that on average the feature selection typically degrades training accuracy but results in better testing accuracy. This can be explained by the fact that reducing the number of features prevents the ODTs from overfitting. This observation suggests that using feature selection, especially for larger trees could be beneficial not only for computational speedup but for better accuracy.

We next repeat the same experiments from Tables 8 and 9 with a 5 min time limit on Cplex and report the results in Tables 10 and 11. Note that the time for feature selection is negligible.

Comparing Tables 8 and 10, we do not see a significant difference in accuracy for depth-2 and depth-2.5 ODTs due to the reduction of the time limit from 30 to 5 min. For depth-3 ODTs, and the imbalanced trees however, both training and testing performance gets noticeably worse due to the reduction of the time limit. Comparing Tables 10 and 11, we see that in most cases feature selection helps in terms of both training and testing accuracy.

Overall the testing accuracy degrades between Tables 8 and 11, but not very significantly, thus we conclude that feature selection helps for larger trees independent of the time limit. Moreover, average testing accuracy of ODTs obtained only after 5 min of computation using feature selection seems to be similar to testing accuracy with 30 min time limit (with or without feature selection) and thus still outperforms CART. We should also note that when the IPs are terminated earlier, the optimality gap is usually larger but it often happens that an optimal or a near optimal integral solution is already obtained by Cplex.

5.5 Effect of training set size

To demonstrate the effect of the training set size on the resulting testing accuracy we present the appropriate comparison in Table 12. In these experiments we run Cplex with a 30 min time.

We observe that in most cases increasing the size of the training data narrows the gap between training and testing accuracy. This can happen for two reasons—because optimization progress slows down and training accuracy drops and/or because there is less overfitting. For example, for a1a it appears to be harder to find the better tree and so both the training and the testing accuracy drops, while for mush testing accuracy gets better, as the gap between training and testing accuracy closes. We also see, for example in the case of mush and krkp, the effect of the increase of the data set tends to diminish as the gap between training and testing accuracy. This is a common behavior for machine learning models, as larger training data tends to be more representative with respect to the entire data set. However, in our case, we utilize the larger data set to perform prior feature selection and as a result relatively small training sets are often sufficient for training of the ODTs. Hence, the computational burden of solving IPs to train the ODTs is balanced by the lack of need to use large training sets.

5.6 Choosing the tree topology

In this section we discuss how to chose the best tree topology via cross-validation and compare the accuracy obtained by the chosen topology to the accuracy of trees obtained by CART with cross-validation.

For each dataset we randomly selected \(90\%\) of the data points to use for training and validation, leaving the remaining data for final testing. For the smaller data sets, we select the best topology using standard 5-fold cross validation. For large data sets such as a1a, bc, krkp, mush and ttt, we instead repeat the following experiment 5 times: we randomly select 600 data points as the training set and train a tree of each topology on this set. The remaining data is used as a validation set and we compute the accuracy of each trained tree on this set. After 5 experiments, we select the topology that has the best average validation accuracy. We then retrain the tree with this topology and report the testing accuracy using the hold-out \(10\%\). We train CART with \(90\%\) of the data points, allowing it to choose the tree depth using its default setting and then report the testing accuracy using the hold-out set. We summarize the results in Table 13 where for each method we list the average testing accuracy and the average number of leaves in the tree chosen via cross-validation. We set ODT time limit to 30 mins and used feature selection from CART trained on \(90\%\) of each dataset.

We can summarize the results in Table 13 as follows: in most cases, either ODTs outperform CARTs in terms of accuracy or else they tend to have a significantly simpler structure than the CART trees. In particular, for data sets a1a, student and bc that contain interpretable human-relatable data, ODTs perform better in terms of accuracy and better or comparably in interpretability, undoubtedly because there exist simple shallow trees that make good predictors for such data sets, and the exact optimization method such as ours can find such trees, while a heuristic, such as CART may not. On the other hand, on the dataset ttt (which describes various positions in a combinatorial game), simple two or three levels of decision are simply not enough to predict the game outcome. In this case, we see that CART can achieve better accuracy, but at the cost of using much deeper trees. A similar situation holds for krkp, but to a lesser extent. Finally, monks-1 data set is an artificial data set, classifying robots using simple features describing parts of each robot. Classification in monks-1 is based on simple rules that can be modeled using shallow trees and ODT performance is much better on that data set than that of CART. In conclusion, our results clearly demonstrate that when classification can be achieved by a small interpretable tree, ODT outperforms CART in accuracy and interpretability.

5.7 Training depth-2 tree on full heloc data.

We performed a more detailed study of the heloc data set which was introduced in the FICO interpretable machine learning competition [9]. The authors of the winning approach [8] produced a model for this data set which can be represented as a depth-2 decision tree achieving 71.7 testing accuracy. Here we show how we are able to obtain comparable results with our approach. First we applied feature selection using CART, making sure that at least 4 features are selected. Then we trained a depth-2 tree using our ODT model and \(90\%\) of the data points (8884 points). The optimal solution was obtained within 405 s and the resulting testing accuracy is 71.6. The corresponding CART model gives 71.0 testing accuracy.

5.8 Results of maximizing sensitivity/specificity

We now present computational results related to the maximization of sensitivity or specificity, as discussed in Sect. 4.6. We will focus on the bc dataset, which contains various measurements of breast tumors. The positive examples in this data sets are the individuals with malignant tumors in the breast. Clearly, it is vitally important to correctly identify all (or almost all) positive examples, since missing a positive example may result in sending a individual who may need cancer treatment home without recommending further tests or treatment. On the other hand, placing a healthy individual into the malignant group, while undesirable, is less damaging, since further tests will simply correct the error. Hence, the goal should be maximizing specificity, while constraining sensitivity. Of course, the constraint on the sensitivity is only guaranteed on the training set. In Table 14 we present the results of solving such model using \(\min (\lceil .9n\rceil , 600)\) samples and the resulting testing sensitivity (TPR) and specificity (TNR). We report average and variance over 30 runs.

We observe that, while depth-2 trees deliver worse specificity in training than depth-3 trees, they have better generalization and hence closely maintain the desired true positive rate. This is also illustrated in Fig. 5.

6 Concluding remarks

We have proposed an integer programming formulation for constructing optimal binary classification trees for data consisting of categorical features. This integer programming formulation takes problem structure into account and, as a result, the number of integer variables in the formulation is independent of the size of the training set. We show that the resulting MILP can be solved to optimality in the case of small decision trees; in the case of larger topologies, a good solution can be obtained within a set time limit. We show that our decision trees tend to outperform those produced by CART, in accuracy and/or interpretability. Moreover, our formulation can be extended to optimize specificity or sensitivity instead of accuracy, which CART cannot do.

Our formulation is more specialized than that proposed recently in [3] and is hence is easier to solve by an MILP solver. However, our model allows flexible branching rules for categorical variables, as those allowed by CART. In addition the formulations proposed in [3] are not particularly aimed at interpretability.

Several extensions and improvements should be considered in future work. For example, while the number of integer variables does not depend on the size of the training set, the number of continuous variables and the problem difficulty increases with the training set size. Hence, we plan to consider various improvements to the solution technique which may considerably reduce this dependence.

References

Bennett, K.P., Blue, J.: Optimal decision trees. Technical Report 214, Rensselaer Polytechnic Institute Math Report (1996)

Bennett, K.P., Blue, J.A.: A support vector machine approach to decision trees. Neural Netw. Proc. IEEE World Congr. Comput. Intell. 3, 2396–2401 (1998)

Bertsimas, D., Dunn, J.: Optimal classification trees. Mach. Learn. 106(7), 1039–1082 (2017)

Bertsimas, D., Shioda, R.: Classification and regression via integer optimization. Oper. Res. 55(2), 252–271 (2017)

Breiman, L., Friedman, J.H., Olshen, R.A., Stone, C.J.: Classification and Regression Trees. Chapman and Hall, New York (1984)

Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

Chang, C.C., Lin, C.J.: LIBSVM: a library for support vector machines. ACM Trans. Intell. Syst. Technol. 2, 27:1–27:27 (2011)

Dash, S., Günlük, O., Wei, D.: Boolean Decision Rules via Column Generation. Advances in Neural Information Processing Systems. Montreal, Canada (2018)

FICO Explainable Machine Learning Challenge https://community.fico.com/s/explainable-machine-learning-challenge

Hyafil, L., Rivest, R.L.: Constructing optimal binary decision trees is np-complete. Inform. Process. Lett. 5(1), 15–17 (1976)

Kotsiantis, S.B.: Decision trees: a recent overview. Artif. Intell. Rev. 39(4), 261–283 (2013)

Lichman, M.: UCI machine learning repository (2013)

Malioutov, D.M., Varshney, K.R.: Exact rule learning via boolean compressed sensing. In: Proceedings of the 30th International Conference on Machine Learning, volume 3, pp. 765–773 (2013)

Murthy, S., Salzberg, S.: Lookahead and pathology in decision tree induction. In: Proceedings of the 14th International Joint Conference on Artificial Intelligence, volume 2, pp. 1025–1031, San Francisco, CA, USA, (1995). Morgan Kaufmann Publishers Inc

Norouzi, M., Collins, M., Johnson, M.A., Fleet, D.J., Kohli, P.: Efficient non-greedy optimization of decision trees. In: Advances in Neural Information Processing Systems, pp. 1720–1728, (2015)

Ross, J.: Quinlan. C4.5: Programs for Machine Learning. Morgan Kaufmann Publishers Inc., San Francisco (1993)

Therneau, T., Atkinson, B., Ripley, B.: rpart: Recursive partitioning and regression trees. Technical Report (2017). R package version 4.1-11

Wang, T., Rudin, C.: Learning optimized or’s of and’s. Technical report, (2015). arxiv:1511.02210

Wang, T., Rudin, C., Doshi-Velez, F., Liu, Y., Klampfl, E., MacNeille, P.: A Bayesian framework for learning rule sets for interpretable classification. J. Mach. Learn. Res. 18(70), 1–37 (2017)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The work of Katya Scheinberg was partially supported by NSF Grant CCF-1320137. Part of this work was performed while Katya Scheinberg was on sabbatical leave at IBM Research, Google, and University of Oxford, partially supported by the Leverhulme Trust.

Rights and permissions

About this article

Cite this article

Günlük, O., Kalagnanam, J., Li, M. et al. Optimal decision trees for categorical data via integer programming. J Glob Optim 81, 233–260 (2021). https://doi.org/10.1007/s10898-021-01009-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-021-01009-y