Abstract

The reconstruction of the parameters characterizing the plasma shape in a tokamak device is of paramount importance both for present day experiments and for future reactors. The plasma shape can only be evaluated from diagnostic data, such as the poloidal flux and magnetic field measured, respectively, by the flux loops and magnetic probes located on the vacuum vessel outside the plasma. The aim of the present paper is to take a step forward in applying the neural network approach to the identification of non-circular plasma equilibria and to data analysis for the problem of the optimal location of a limited number of magnetic sensors. We have adopted a machine learning method, the back-propagation neural network, to analyze the magnetic diagnostic data. The database has been generated by means of a specially adapted version of the MHD equilibrium code EFIT with reference to the EAST geometry and stored in the EAST MDSplus database. The network is trained and tested using external magnetic measurements as input data and the selected plasma parameters as output data. A novel strategy, based on data analysis of the network, is then implemented for selecting the optimum location of a limited number of magnetic probes. The average accuracy of the identification procedure is quite good when compared with the EFIT results taken as the desired output (e.g., the maximum relative error of the internal inductance is 0.260 %). The degradation of the performance is shown to be rather small (e.g., the RMS error of the minor radius varies from 4.307 to 4.765 %) when the number of magnetic probes is reduced by nearly half.

Introduction

Thermonuclear plasmas are nonlinear, open systems characterized by a very high level of complexity, which in practice prevents the formulation of theories from basic principles. This complexity hampers physical interpretation, because thermonuclear plasmas are very difficult to access for measurement, especially in a hostile environment. The consequences are typically a limited experimental characterization of many phenomena and the presence of high noise levels in the data. In future reactors, the need for the physical interpretation of the measurements will probably be much less important, whereas the problem of estimating the set of plasma parameters required for the control of the radial and vertical positions of the plasma column in the vacuum chamber, as well as for the control of its shape, becomes crucial [1–4]. The previous challenges are compounded by the difficulties inherent in interpreting the large amounts of data produced by present day diagnostics. It is probably impossible to develop a ‘standard model’ for such a complex system. Indeed, the capability to generate enormous amounts of information is one of the distinguishing characteristics of modern tokamak experiments. In JET, more than 45 gigabytes of data can be produced in a well diagnosed discharge and the whole database now exceeds 200 terabytes [1, 6]. Moreover, the amount of experimental data produced is expected to increase significantly in the future generation of devices. Given the lack of a unifying theory, the limited experimental description of many phenomena, the presence of significant noise in the measurements and the large amounts of data available, important information sometimes remains hidden in the databases and it can be arduous to perform sound inference with traditional methods. To tackle these challenges, new data analysis tools are required to increase the physics output that can be derived from the measurements.

These new methods comprise innovative data analysis techniques for the exploration of databases, for the interpretation of the physical content of the data and for the development of more effective real time control techniques [1]. In the past, several neural network approaches have been applied to nonlinear problems such as the classification of plasma configurations and plasma equilibrium reconstruction [2, 3].

An application of neural network models to ASDEX Upgrade data generated during the operational phases of the experiment was presented by Francesco Morabito in [2, 3]. The performance of the neural network models was compared with that obtained using the real time version of the Function Parameterization (FP) technique, a statistical method based on Principal Component Analysis and Function Parameterization (PCA + FP) implemented on the plasma control computer of the machine. An 18-10-4 multilayered architecture was used to solve the problem, once a training database of 1000 cases (250 per category) had been selected. The NN method, used for classification on the preprocessed data, outperformed the PCA + FP technique in terms of computation time in the online evaluation phase. However, the classification results presented concerned only four shots: #940, 942, 1162 and 3513.

In [4], the application of artificial neural networks to the characterization and classification of measurements of plasma columns was proposed. That paper focused on the ITER configuration, for which a neural network (NN) approach was used to classify measurements (inner, outer and divertor). The approach showed a strong adaptability of NNs with respect to the original database, which included 4848 lower single null equilibria.

The neural network technique has been successfully applied to the extraction of equilibrium parameters from measurements of single-null diverted plasmas in the DIII-D tokamak by Lister in [5]. That work discussed three previous approaches to approximating the non-linear mapping: ad hoc trial functions used in DIII-D; reduction of the data by principal component analysis (PCA) followed by an expansion for the extracted parameters; and the linear method developed for the control of TCV and Alcator C-Mod. It used 22 tangential magnetic field probes and 20 flux loops as inputs, and the seven parameters used in DIII-D for shape control as outputs. However, the data set used in that study was restricted to single-null diverted discharges.

A single hidden layer back-propagation neural network is described in [6] to establish a nonlinear mapping between magnetic flux measurements and some shaping parameters of non-circular plasmas. The database was generated by means of a specially adapted version of an MHD equilibrium code on ASDEX Upgrade. The input parameters were 31 flux values furnished by 31 sensors located around the plasma. The plasma quantities selected as output parameters were the major and minor radius, elongation and triangularity, internal inductance, poloidal beta, and the R and Z co-ordinates of the X point. The comparison between the neural network method and the statistical method led to the conclusion that the simultaneous analysis of the behavior of the neural network can solve the problem of the optimal location of a limited number of sensors. However, flux measurements alone are in general not sufficient as input parameters for the analysis of the plasma parameters.

In this paper, we use an artificial neural network (ANN) method, fed by signals from 38 magnetic probes and 35 flux loops, to establish a neural network mapping between magnetic measurements and some shaping parameters of the EAST tokamak plasma. The data for network training and validation were selected from the EAST_PCS and EFIT_EAST databases. In particular, this study focuses on magnetic measurements from the flattop phase. Flattop scenarios are very important because they will be the normal operating conditions in next step tokamaks such as the International Thermonuclear Experimental Reactor (ITER) and CFETR. These extrapolations in size and physics performance provide major constraints on the design of ITER and CFETR. This paper shows that the neural network can be exploited for selecting the optimal location of a limited number of sensors. This may even become a critical issue for future reactors, in which the number of sensors will be limited to the minimum required for the identification and control of the plasma position and shape in the vacuum chamber.

This paper is organized as follows: the “Principles of Back-Propagation Neural Network” section describes the neural network topology adopted for the case studied, including the basic principles of neural networks, while the “Generation of the Dataset” section describes the details of the database that has been built to generate the computed equilibrium dataset. The “Application to Experimental Data from EAST” section discusses the results obtained when applying the approach to the EAST geometry and demonstrates how the proposed approach is relevant to the problem of the optimum choice of the number and location of probes. Finally, the “Summary and Conclusions” section summarizes the results and conclusions.

Principles of Back-Propagation Neural Network

Among the wide variety of artificial neural networks [7, 9], the BP neural network, which adjusts connection weights in accordance with the error gradient descent rule, is one of the most mature. Today, ANNs are applied to solve an increasing number of real world problems of considerable complexity. They offer ideal solutions to a variety of classification problems such as pattern recognition, speech, character and signal recognition, as well as functional prediction and system modelling. The BP neural network has been used in an E-business credit risk early-warning system, a movie box office forecasting system, rainfall prediction, population prediction [8, 9], and so on. Its field of application is broad, and the network is capable of nonlinear mapping, self-organization, error feedback adjustment, generalization and fault tolerance.

An artificial neural network is defined by the activation functions of its neurons, the network topology, the connection weights and the thresholds of the neurons. Generally speaking, when the network topology is fixed, the output is affected by changes in the connection weights. The learning procedure is generally based on the definition of an error function, which has to be minimized with respect to the weights and biases in the network [9]. If the error function is a differentiable function of the network weights, it is possible to estimate the derivative of the error with respect to the weights and to modify the weights in order to minimize the error function. The back-propagation algorithm is based on the evaluation of the derivatives of the error function.

The multi-layer BP neural network used in our study is presented in Fig. 1; it has 73 inputs, 10 neurons in the first hidden layer, 5 neurons in the second hidden layer and 8 neurons in the output layer. The activation function must be differentiable, and several such functions are in common use. An activation function is applied to the weighted input of a neuron to generate a nonlinear output, so that the neural network implements a nonlinear function mapping one multidimensional space into another.

An artificial neuron has inputs \(\{ x_{1} ,x_{2} , \ldots ,x_{n} \}\), an output \(y\), connection weights \(\omega_{i}\) and a threshold \(\theta\). \(f\) is an activation function and \(\xi\) is an intermediate variable. The relationship between input and output is described by Eq. (1).
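Assuming the standard threshold-neuron model (an assumption, since only the symbol definitions are given here), the relationship between input and output takes the form

\[
\xi = \sum_{i=1}^{n} \omega_{i} x_{i} - \theta , \qquad y = f(\xi ). \tag{1}
\]

In this form the threshold \(\theta\) plays the role of a negative bias, and \(f\) may be any differentiable activation such as the logistic sigmoid \(f(\xi ) = 1/(1 + e^{ - \xi } )\).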

The learning approach works as follows: input data are propagated forward from the input layer to the hidden layers and then to the output layer, while error information is propagated backward from the output layer to the hidden layers and then to the input layer. The BP learning steps are:

1. Select a pattern from the training set and present it to the network.
2. Compute the activations of the input, hidden and output neurons, in that sequence.
3. Compute the error over the output neurons by comparing the generated outputs with the desired outputs.
4. Use the calculated error to update all weights in the network, such that a global error measure is reduced.
5. Repeat steps 1 through 4 until the global error falls below a predefined threshold.
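The five steps above can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used in this work: the sigmoid hidden units, linear output layer, weight initialization, learning rate and all function names are assumptions. The bias `b` corresponds to the negative of the neuron threshold.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_network(sizes, rng):
    """Return one (weights, biases) pair per layer, e.g. sizes=[73, 10, 5, 8]."""
    return [(rng.normal(0.0, 0.1, size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def forward(params, x):
    """Step 2: activations layer by layer (assumed: sigmoid hidden, linear output)."""
    activations = [x]
    for i, (w, b) in enumerate(params):
        z = activations[-1] @ w + b
        is_output = i == len(params) - 1
        activations.append(z if is_output else sigmoid(z))
    return activations

def train_step(params, x, d, lr):
    """Steps 2-4 for one pattern; returns the squared error before the update."""
    acts = forward(params, x)
    delta = acts[-1] - d                      # step 3: output error (linear output)
    error = 0.5 * float(delta @ delta)
    for i in reversed(range(len(params))):    # step 4: propagate backward and update
        w, b = params[i]
        grad_w, grad_b = np.outer(acts[i], delta), delta
        if i > 0:
            # error term of the previous (sigmoid) layer, using the old weights
            delta = (w @ delta) * acts[i] * (1.0 - acts[i])
        params[i] = (w - lr * grad_w, b - lr * grad_b)
    return error

def train(params, X, D, lr=0.05, tol=1e-3, max_epochs=500, seed=0):
    """Steps 1 and 5: present patterns in random order until the mean error < tol."""
    rng = np.random.default_rng(seed)
    for _ in range(max_epochs):
        total = 0.0
        for k in rng.permutation(len(X)):     # step 1: select a pattern
            total += train_step(params, X[k], D[k], lr)
        mean_error = total / len(X)
        if mean_error < tol:                  # step 5: stopping criterion
            break
    return mean_error
```

A call such as `train(init_network([73, 10, 5, 8], np.random.default_rng(0)), X, D)` would train the 73-10-5-8 topology on arrays `X` (patterns by 73 signals) and `D` (patterns by 8 parameters); in practice the inputs and targets would normally be normalized first.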

The training of the network continues until minimization of the sum of the squares of errors given by the relationship:
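Consistent with the symbols used in this section, and assuming the conventional factor of 1/2 (which is not confirmed by the text), the error function can be written as

\[
e(\omega ) = \frac{1}{2}\sum\limits_{i} {\left( {d_{i} - y_{i} } \right)^{2} } ,
\]

where the sum runs over the output neurons (and, for batch training, over the training patterns as well).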

Here \(e(\omega )\) is the squared error, \(d_{i}\) is the desired output and \(y_{i}\) is the generated output. The objective is to minimize the squared error, i.e. to reach the Minimum Squared Error (MSE). Gradient descent is an optimization method for finding the weight vector that leads to the MSE. In vector form, the update is \(\omega = \omega + \eta [ - \nabla e(\omega )]\), where \(\eta\) is the learning rate and \(- \nabla e(\omega )\) is the negative gradient.

For the output layer, the weight update rule works like this:
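A standard form of the output-layer rule, assuming the squared error \(e(\omega ) = \tfrac{1}{2}\sum_{k} (d_{k} - y_{k} )^{2}\) and the neuron model \(y_{k} = f(\xi_{k} )\) (the indices \(j\), \(k\) and the symbols \(\delta_{k}\), \(o_{j}\) are introduced here for illustration), is

\[
\Delta \omega_{jk} = - \eta \frac{\partial e}{{\partial \omega_{jk} }} = \eta \,\delta_{k} \,o_{j} , \qquad \delta_{k} = \left( {d_{k} - y_{k} } \right)f^{\prime}(\xi_{k} ),
\]

where \(o_{j}\) is the output of hidden neuron \(j\) feeding output neuron \(k\) through the weight \(\omega_{jk}\).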

For a hidden layer, the weight update rule works like this:
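A standard form of the hidden-layer rule, under the same assumptions (squared error with a factor of 1/2, differentiable activation \(f\); the indices and the symbols \(\delta\), \(o\) are illustrative), is

\[
\Delta \omega_{ij} = \eta \,\delta_{j} \,o_{i} , \qquad \delta_{j} = f^{\prime}(\xi_{j} )\sum\limits_{k} {\delta_{k} \,\omega_{jk} } ,
\]

where \(o_{i}\) is the output of neuron \(i\) in the preceding layer and \(\delta_{k} = (d_{k} - y_{k} )f^{\prime}(\xi_{k} )\) is the error term of neuron \(k\) in the following layer. The sum over \(k\) is what propagates the output error backward through the network.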

An ANN is composed of simple processing elements operating in parallel. The processing ability of the network is stored in the inter-unit connection strengths (weights), obtained by a process of adaptation to a set of training patterns (learning).

The neural network on EAST takes two categories of diagnostic signals as input: 35 flux loops and 38 magnetic probes. From the EAST tokamak experimental database, we selected representative discharge data as training and testing samples, all of which are lower divertor configuration discharges. The input variables and the network topology are chosen essentially by experience or through trial and error. The network includes two hidden layers, whose optimal numbers of neurons are 10 and 5, respectively.

Generation of the Dataset

The database used for the NNs has been generated by means of an equilibrium code which represents a numerical model of the experiment, using the EAST configuration and mechanical structures. Each record of this database includes both the values of the physical quantities of interest, namely, some global parameters which are supposed to completely describe the state of the system, and the related measurements.

The code used to generate the dataset for both the training and testing phases is the EFIT code, which was first developed by L. L. Lao to perform magnetic analyses for the DIII tokamak [10, 11]. The main task of the EFIT reconstruction algorithm is to compute the distributions in the (R, Z) plane of the poloidal flux \(\psi\) and the toroidal current density \(J_{T}\) that provide a least squares best fit to the diagnostic data and satisfy the model given by the Grad–Shafranov equation.
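For reference, a common textbook form of the two ingredients named above is given below. The notation is an assumption (normalization conventions for \(\psi\) and \(F\) differ between codes): \(p\) is the plasma pressure, \(F = RB_{T}\) the poloidal current function, \(M_{i}\) a measured signal, \(C_{i}\) its computed value and \(\sigma_{i}\) its uncertainty.

\[
R\frac{\partial }{\partial R}\left( {\frac{1}{R}\frac{\partial \psi }{\partial R}} \right) + \frac{{\partial^{2} \psi }}{{\partial Z^{2} }} = - \mu_{0} RJ_{T} , \qquad J_{T} = R\,p^{\prime}(\psi ) + \frac{{F(\psi )F^{\prime}(\psi )}}{{\mu_{0} R}}
\]

\[
\chi^{2} = \sum\limits_{i} {\left( {\frac{{M_{i} - C_{i} }}{{\sigma_{i} }}} \right)^{2} }
\]

The reconstruction seeks the \(\psi\) and \(J_{T}\) that satisfy the first relation while minimizing \(\chi^{2}\) over the magnetic measurements.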

The reconstruction code can provide plasma shape parameters which can be used as neural network output parameters. In fact, the dataset is composed of 38 magnetic probes and 35 flux loops measurement data, used as input parameters of NNs, and the corresponding plasma parameters, used as output parameters.

EAST is an advanced device with fully superconducting magnets, designed to demonstrate high performance and steady state operation with an ITER-like shape. EAST is normally operated at major radius R = 1.8–1.9 m, minor radius a = 0.45–0.5 m, toroidal field \(B_{t}\) = 1.53–3.5 T, and plasma current \(I_{p}\) = 0.2–1 MA [12–14]. On the EAST tokamak, 38 poloidal magnetic probes are mounted inside the vessel and 35 poloidal flux loops are installed inside the torus. The magnetic probes are two-dimensional, with one probe measuring \(B_{\theta }\) and the other measuring \(B_{r}\); together with the flux loops, they are used to detect the poloidal magnetic field and for equilibrium reconstruction.

For the magnetic sensors presented here [15], only unprocessed data are used in the NNs (i.e. the processed magnetic measurement data used for the equilibrium reconstruction computation are not used).

The dataset, selected from four shots (#51800, 51802, 51804 and 51806), is relatively large, so we randomly sampled 1900 time slices as training examples and 100 time slices as testing examples. Based on the above discussion, the input parameters initially considered for the present analysis are the 35 flux values and 38 magnetic field values furnished by the 35 + 38 magnetic sensors located around the plasma, as shown in Fig. 2.

As output parameters, the following plasma quantities have been selected:

- Minor radius
- Elongation and triangularity
- Internal inductance
- R and Z co-ordinates of the lower X point
- R and Z co-ordinates of the upper X point

All the diagnostic data are stored in the MDSplus data server. EAST plasma equilibrium reconstruction is carried out offline and manually after the discharge, on a sparse set of time slices.

Based on the above discussion, the available data is divided into two subsets:

1. The first subset is the training set, which is used for computing the gradient and updating the network weights and biases. It includes 1900 time slices of discharge data, and every time slice includes the magnetic probe and flux loop input data and the plasma parameters calculated by EFIT.
2. The second subset is the test set, which is not used during training but is used to obtain the plasma parameters and to compare the predicted output of the NNs with the desired output calculated by EFIT. It includes 100 time slices of discharge data.
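The random division into 1900 training and 100 test time slices can be sketched as follows. The function name, the array layout (one row per time slice, 73 input columns, 8 output columns) and the fixed seed are assumptions made for illustration; loading the signals from the data server is not shown.

```python
import numpy as np

def split_time_slices(inputs, targets, n_test=100, seed=0):
    """Randomly split time slices into a training set and a held-out test set."""
    if len(inputs) != len(targets):
        raise ValueError("inputs and targets must have the same number of time slices")
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(inputs))          # shuffle the time-slice indices
    test_idx, train_idx = order[:n_test], order[n_test:]
    train = (inputs[train_idx], targets[train_idx])
    test = (inputs[test_idx], targets[test_idx])
    return train, test

# Placeholder arrays standing in for 2000 time slices of sensor and EFIT data.
inputs = np.zeros((2000, 73))    # 35 flux loops + 38 magnetic probes
targets = np.zeros((2000, 8))    # eight shape parameters
(train_x, train_d), (test_x, test_d) = split_time_slices(inputs, targets)
print(train_x.shape, test_x.shape)
```

Keeping the test indices disjoint from the training indices is what allows the test error to be read as a measure of generalization rather than of memorization.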

From a practical point of view, we have selected three different input datasets for testing the NN performance and for data analysis. The dataset of 2000 time slices is tested in three steps:

1. Use 35 flux loops and 38 magnetic probes as input parameters; the eight output parameters are: minor radius, triangularity, elongation, internal inductance, X point below R, X point up R, X point below Z, X point up Z.
2. Use 35 flux loops as input parameters; the eight output parameters are: minor radius, triangularity, elongation, internal inductance, X point below R, X point up R, X point below Z, X point up Z.
3. Use 38 magnetic probes as input parameters; the eight output parameters are: minor radius, triangularity, elongation, internal inductance, X point below R, X point up R, X point below Z, X point up Z.

Note that classification by neural networks, i.e. the ability to distinguish between limiter, divertor and ITER-like configurations on the basis of magnetic measurements alone, is not discussed in this paper.

Application to Experimental Data from EAST

The NN Performance Analysis of Different Input Data

The results obtained by applying the above mentioned neural network to the generated dataset of plasma equilibria are shown below. Networks with several different sets of diagnostic measurement inputs, with varying numbers of magnetic sensors, were trained on the problem under study. For the divertor configuration we have also analyzed the performance of the network for different input magnetic measurement data. For input variables we chose: (1) a set of magnetic probes (38 coils); (2) a set of magnetic flux loops (35 loops); (3) both 38 magnetic probes and 35 flux loops.

The results achieved are satisfactory: good accuracy is obtained, as shown in Table 1, when the data from the 38 magnetic probes are used as input parameters and the neural network is retrained until optimized. In this paper, the minor radius, X point below R, X point up R, X point below Z and X point up Z are all given in centimeters.

From the prediction results of the neural network, we see that the relative errors of the different output parameters are acceptably small, as shown in Fig. 3a–c and Fig. 4. (Here we show the relative errors of the minor radius, triangularity and elongation; the results for the other parameters are similar.)

The results achieved are satisfactory: good accuracy is obtained, as shown in Table 2, when the data from the 35 flux loops are used as input parameters and the neural network is retrained until optimized. However, when proceeding in this way, it should be noted that the retrained network deals with a different problem.

The results achieved are satisfactory: a good accuracy has been achieved as shown in Table 3 when we used 38 magnetic probes and 35 flux loops data as input parameters.

In the statistics of the output parameters, every parameter has 100 predicted outputs from the neural network and 100 desired outputs from the EFIT computation. We chose 100 time slices as the test dataset, obtained the predicted results from the trained neural network, and compared the predicted output value with the desired output value for every time slice.

The procedure for reducing the input variables starts from inspection of Table 4. Using the same dataset, we trained new networks with different input parameters and obtained the results in Table 4, which reports the RMS error of all output plasma parameters for the optimized neural network with each set of input parameters. It can be seen that the degradation of the performance is rather small. For most parameters, the accuracy obtained using both the 38 magnetic probes and the 35 flux loops as input parameters is better than that obtained using only the 38 magnetic probes or only the 35 flux loops. In addition, the flux loops have a stronger influence on the minor radius measurement, since the RMS error is 3.741 %, which is lower than 4.307 and 4.661 %. At the same time, the magnetic probes play a more important role than the flux loops in identifying the X point position.
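The exact formula behind the percentage RMS errors is not spelled out here. One plausible definition, assumed purely for illustration, is the root mean square of the relative difference between the network output and the EFIT value over the test time slices, computed separately for each output parameter:

```python
import numpy as np

def relative_rms_error_percent(predicted, desired):
    """Per-parameter relative RMS error in percent (assumed definition).

    predicted, desired: arrays of shape (n_time_slices, n_parameters).
    The desired values must be non-zero, since they appear in the denominator.
    """
    predicted = np.asarray(predicted, dtype=float)
    desired = np.asarray(desired, dtype=float)
    relative = (predicted - desired) / desired
    return 100.0 * np.sqrt(np.mean(relative ** 2, axis=0))

# Illustrative numbers only, not data from the experiment.
desired = np.array([[45.0, 1.70], [46.0, 1.80], [44.5, 1.75]])
predicted = desired * np.array([[1.02, 0.99], [0.98, 1.01], [1.01, 1.00]])
print(relative_rms_error_percent(predicted, desired))
```

With this definition each column of the result corresponds to one output parameter, so the values for different input sets can be compared parameter by parameter, as in Table 4.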

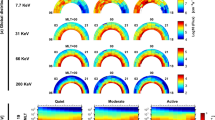

Minimization of the Magnetic Probes

For EAST and next generation ITER-like reactors, it is very important to minimize and optimize the number of magnetic sensors to be mounted inside the device. The neural network approach is particularly well suited to this problem, and can be used to rank the various magnetic probes. Our approach is to start from a relatively high number of magnetic probes, almost evenly distributed around the vessel, and then to devise a procedure capable of ranking them in decreasing order of importance. One possible procedure is to exclude each signal in turn from the set of inputs and retrain the network. However, when proceeding in this way, it is quite difficult to establish a clear rule to rank the probes, since the retrained network deals with a different problem. For this reason, we have preferred to force to zero the signal corresponding to a certain probe throughout the whole test dataset. Naturally, the RMS error on the output parameters generally increases with respect to the reference case. By iterating this procedure over all probes, we have established a possible ranking rule for them. The results achieved when applying this simple technique are shown in Table 5. It has been found that, in spite of the completely different method, the results are in very good agreement with the results from EFIT. In this paper, the minor radius, X point below R, X point up R, X point below Z and X point up Z are all given in centimeters.
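The zeroing procedure described above can be sketched as follows. This is an illustration under stated assumptions, not the code used for Table 5: `predict` stands for any already-trained network mapping an input array to an output array, the ranking score is taken to be the RMS error averaged over the output parameters, and all names are hypothetical.

```python
import numpy as np

def mean_rms_error(predict, X, D):
    """RMS error per output parameter, averaged over the parameters."""
    return float(np.sqrt(np.mean((predict(X) - D) ** 2, axis=0)).mean())

def rank_probes_by_zeroing(predict, X_test, D_test, probe_columns):
    """Rank input channels by the error increase when each one is forced to zero.

    The network is NOT retrained; only the test inputs are modified.
    Returns (columns sorted from most to least important, error increase per column).
    """
    baseline = mean_rms_error(predict, X_test, D_test)
    increase = {}
    for col in probe_columns:
        X_zeroed = X_test.copy()
        X_zeroed[:, col] = 0.0                    # force this probe signal to zero
        increase[col] = mean_rms_error(predict, X_zeroed, D_test) - baseline
    ranking = sorted(increase, key=increase.get, reverse=True)
    return ranking, increase

# Toy stand-in for a trained network: a fixed linear map with three inputs.
W = np.array([[3.0], [1.0], [0.0]])
toy_predict = lambda X: X @ W
X_demo = np.random.default_rng(0).normal(size=(200, 3))
ranking, increase = rank_probes_by_zeroing(toy_predict, X_demo, X_demo @ W, [0, 1, 2])
print(ranking)
```

Channels at the end of the ranking, whose removal barely changes the error, are the natural first candidates for elimination; channels at the front are the most critical ones.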

The procedure for reducing the number of probes starts from inspection of Table 5. If one assumes that the ranking parameter is the error averaged over the output parameters, we come to the conclusion that probes Nos. 9, 22, 30, 32, 35, 36, 37 and 38 are the first candidates for elimination. These eight probes have the weakest influence on the output result, since the minimum RMS errors appear when their signals are forced to zero one at a time. Indeed, to verify the signal levels from all magnetic sensors, a series of vacuum shots was carefully designed and performed before each campaign. The measurement signals used for the EFIT computation are selected during these test shots; that is to say, probes Nos. 9, 22, 30, 32, 35, 36, 37 and 38 are not used as EFIT magnetic measurement input data. However, they were used as noise signals to verify the robustness of the neural network.

In this respect, Table 5 can be used to assess how critical the measurement from a certain magnetic probe is with respect to a specific shape parameter. For example, probe No. 21 is the most critical for a good identification of the Z co-ordinate of the lower X point, while probes Nos. 23 and 24 are the second and third most critical for this co-ordinate. Probes Nos. 5 and 6 are very important for a good identification of the internal inductance.

As already pointed out above, it is not always possible to isolate and assess the contribution of each individual magnetic probe, since the information on the field components actually comes from a pair of sensors. We remark that the problem of an optimal and well balanced distribution of flux and field measurements around the plasma deserves a careful and deeper investigation. For this purpose, it is necessary to analyze the real experimental measurements, which is definitely beyond the scope of this paper.

Table 6 indicates that very good results can also be obtained with a much smaller number of sensors. Using the same dataset, we retrained a new network with 30 magnetic probes and obtained the results in Table 6. It can be seen that the degradation of performance is rather small when the number of magnetic probes is reduced to 20. This result suggests that the 38 probes are redundant for the identification of the selected shape parameters and therefore that a reduction procedure makes sense.

Summary and Conclusions

In this paper, NNs for data analysis of the magnetic measurement database on the EAST tokamak are presented. Focusing in particular on EAST single-null diverted discharges, we have exploited a machine learning method to analyze the optimum selection of the magnetic probes. It has been shown that the performance of the method is very good compared with the calculation results of EFIT, in terms of precision and duration of the training phase. It has also been shown that, by exploiting the good fault tolerance of the method, one can derive interesting information about the relative importance of the various magnetic probes. The above considerations lead to the conclusion that the simultaneous analysis of the behaviour of the neural network during training and testing can give interesting guidelines for the problem of the optimal location of a limited number of sensors.

However, the iteration of the above procedure can be rather cumbersome if one aims for a strong reduction of the number of sensors, as would be the case for the selection of the optimal location of a very limited number of probes. In fact, at each step, one has first to produce a table similar to Table 5 and then train and test a new network.

In conclusion, the method looks very promising, especially for the real time control of the plasma shape parameters in ITER-like devices. In fact, if we assume that the quality factor of a method for this task is given by its speed, accuracy and robustness, we believe that the neural network approach can achieve a very good score.

Future work will analyse in detail the theoretical feasibility of BP neural network prediction. In any case, the present results are encouraging and strengthen our confidence. In the future, more diagnostic signals (including ECE, MSE and soft X-ray information) and more efficient machine learning methods will be required and developed for data analysis based on different tokamak shape configurations [16–22].

References

1. A. Murari, J. Vega, Physics-based optimization of plasma diagnostic information. Plasma Phys. Control. Fusion 56, 110301 (2014)

2. B.Ph. van Milligen, V. Tribaldos, J.A. Jimenez, Neural network differential equation and plasma equilibrium solver. Phys. Rev. Lett. 75(20), 13 (1995)

3. F.C. Morabito, Equilibrium parameters recovery for experimental data in ASDEX upgrade elongated plasmas, in Neural Networks, 1995. Proceedings, IEEE International Conference on, Vol 2 (Perth, 1995)

4. A. Greco, N. Mammone, F.C. Morabito, M. Versaci, Artificial neural networks for classifying magnetic measurements in tokamak reactors. Int. J. Math. Comput. Phys. Quantum Eng. 1(7), 1 (2007)

5. J.B. Lister, H. Schnurrenberger, Fast non-linear extraction of plasma equilibrium parameters using a neural network mapping. Nucl. Fusion 31(7), 1–3 (1991)

6. E. Coccorese, C. Morabito, R. Martone, Identification of non-circular plasma equilibria using a neural network approach. Nucl. Fusion 34(10), 1–4 (1994)

7. D. Yonghua et al., Neural network prediction of disruptions caused by locked modes on J-TEXT tokamak. Plasma Sci. Technol. 15(11), 1–3 (2013)

8. S. Lek et al., Application of neural networks to modelling nonlinear relationships in ecology. Ecol. Model. 90, 39–52 (1996)

9. M. Camplani, Data analysis techniques for nonlinear dynamical systems [Ph.D thesis] (Department of Electrical and Electronic Engineering, University of Cagliari, Italy, 2010)

10. L.L. Lao et al., Reconstruction of current profile parameters and plasma shapes in tokamaks. Nucl. Fusion 25, 1611 (1985)

11. Z.P. Luo et al., Online equilibrium reconstruction for EAST plasma discharge, in 16th IEEE-NPSS Real Time Conference (2009)

12. Y. Wan, J. Li, P. Weng, in 21st IAEA Fusion Energy Conference (Chengdu, 2009)

13. B. Wan, Nucl. Fusion 49, 104011 (2009)

14. J.P. Qian, X.Z. Gong et al., Plasma Sci. Technol. 13, 1 (2011)

15. W.B. Xi et al., Nucl. Fusion Plasma Phys. 1, 014 (2008)

16. D. Rastovic, J. Fusion Energ. 26(4), 337–342 (2007)

17. D. Rastovic, J. Fusion Energ. 27(3), 182–187 (2008)

18. D. Rastovic, J. Fusion Energ. 34(2), 1 (2015)

19. D. Rastovic, Neural Comput. Appl. 21(5), 1–5 (2012)

20. D.E. Rumelhart, J.L. McClelland, Parallel Distributed Processing, vol. 2 (MIT Press, Cambridge, 1986)

21. C. Bishop, Neural Networks for Pattern Recognition (Clarendon Press, Oxford, 1995)

22. D. Fisher, H. Lenz, A comparative evaluation of sequential feature selection algorithms, in Proceedings of the 5th International Workshop on Artificial Intelligence and Statistics (Ft. Lauderdale, 1995), pp. 1–7

Acknowledgments

The authors gratefully acknowledge the helpful discussions with Xing Zhe, Robert Granetz, Shaoyong Liang, and Dalong Chen. In addition, this work was supported by the National Magnetic Confinement Fusion Research Program of China under Grant No. 2014GB103000 and the National Natural Science Foundation of China under Grant No. 11305216.


Cite this article

Wang, B., Xiao, B., Li, J. et al. Artificial Neural Networks for Data Analysis of Magnetic Measurements on East. J Fusion Energ 35, 390–400 (2016). https://doi.org/10.1007/s10894-015-0044-z
