Abstract

Following Part I, we consider a class of reversible systems and study bifurcations of homoclinic orbits to hyperbolic saddle equilibria. Here we concentrate on the case in which homoclinic orbits are symmetric, so that only one control parameter is enough to treat their bifurcations, as in Hamiltonian systems. First, we modify and extend arguments of Part I to show in a form applicable to general systems discussed there that if such bifurcations occur in four-dimensional systems, then variational equations around the homoclinic orbits are integrable in the meaning of differential Galois theory under some conditions. We next extend the Melnikov method of Part I to reversible systems and obtain some theorems on saddle-node, transcritical and pitchfork bifurcations of symmetric homoclinic orbits. We illustrate our theory for a four-dimensional system, and demonstrate the theoretical results by numerical ones.

1 Introduction

In the companion paper [1], which we refer to as Part I here, we studied bifurcations of homoclinic orbits to hyperbolic saddle equilibria in a class of systems including Hamiltonian systems. They also arise as bifurcations of solitons or pulses in partial differential equations (PDEs), and have attracted much attention even in the fields of PDEs and nonlinear waves (see, e.g., Section 2 of [9]). Only one control parameter is enough to treat these bifurcations in Hamiltonian systems but two control parameters are needed in general. We applied a version of Melnikov’s method due to Gruendler [4] to obtain some theorems on saddle-node and pitchfork types of bifurcations for homoclinic orbits in systems of dimension four or more. Furthermore, we proved that if these bifurcations occur in four-dimensional systems, then variational equations (VEs) around the homoclinic orbits are integrable in the meaning of differential Galois theory [2, 8] when there exist analytic invariant manifolds on which the homoclinic orbits lie. In [14], spectral stability of solitary waves in coupled nonlinear Schrödinger equations, which correspond to such homoclinic orbits in a two-degree-of-freedom Hamiltonian system, was also studied.

In this part, we consider reversible systems of the form

$$\begin{aligned} \dot{x}=f(x;\mu ),\quad x\in \mathbb {R}^{2n}, \end{aligned}$$(1.1)

where \(f:\mathbb {R}^{2n}\times \mathbb {R}\rightarrow \mathbb {R}^{2n}\) is analytic, \(\mu \) is a parameter and \(n\ge 2\) is an integer, and continue to discuss bifurcations of homoclinic orbits to hyperbolic saddles. A rough sketch of the results was briefly stated in [11]. Our precise assumptions are as follows:

- (R1): The system (1.1) is reversible, i.e., there exists a linear involution R such that \(f(Rx;\mu )+Rf(x;\mu )=0\) for any \((x,\mu )\in \mathbb {R}^{2n}\times \mathbb {R}\). Moreover, \(\dim \mathrm {Fix}(R)=n\), where \(\mathrm {Fix}(R)=\{x\in \mathbb {R}^{2n}\,|\,Rx=x\}\).

- (R2): The origin \(x=0\), denoted by O, is an equilibrium in (1.1) for all \(\mu \in \mathbb {R}\), i.e., \(f(0;\mu )=0\).

Note that \(O\in \mathrm {Fix}(R)\) since \(RO=O\). By abuse of notation, we write x(t) for a solution to (1.1). We use this notation throughout the paper.

A fundamental characteristic of reversible systems is that if x(t) is a solution, then so is \(Rx(-t)\). We call a solution (and the corresponding orbit) symmetric if \(x(t)=Rx(-t)\). It is a well-known fact that an orbit is symmetric if and only if it intersects the space \(\mathrm {Fix}(R)\) [10]. Moreover, if \(\lambda \in \mathbb {C}\) is an eigenvalue of \(\mathrm {D}_x f(0;\mu )\), then so are \(-\lambda \) and \(\overline{\lambda }\), where the overline represents the complex conjugate. See also [7] for general properties of reversible systems. We also assume the following.

- (R3): The Jacobian matrix \(\mathrm {D}_x f(0;0)\) has 2n eigenvalues \(\pm \lambda _1,\ldots ,\pm \lambda _n\) such that \(0<\mathrm {Re}\lambda _1\le \cdots \le \mathrm {Re}\lambda _n\) (i.e., the origin is a hyperbolic saddle).

- (R4): The equilibrium \(x=0\) has a symmetric homoclinic orbit \(x^\mathrm {h}(t)\) with \(x^\mathrm {h}(0)\in \mathrm {Fix}(R)\) at \(\mu =0\). Let \(\Gamma _0=\{x^\mathrm {h}(t)|t\in \mathbb {R}\}\cup \{0\}\).
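Assumptions (R1)–(R3) can be checked numerically for a concrete vector field. The following is a minimal sketch for a hypothetical four-dimensional system (two coupled oscillators with \(R=\mathrm {diag}(1,1,-1,-1)\)); the vector field `f`, the involution `R` and all numerical values are assumptions made for illustration and are not taken from this paper.

```python
import numpy as np

# Hypothetical linear involution: reverses the two "velocity" coordinates.
R = np.diag([1.0, 1.0, -1.0, -1.0])


def f(x, mu):
    """Hypothetical reversible vector field on R^4 (illustration only)."""
    x1, x2, x3, x4 = x
    r2 = x1**2 + x2**2
    return np.array([x3, x4, x1 - r2 * x1, (4.0 + mu) * x2 - r2 * x2])


def jacobian_at_origin(mu):
    """D_x f(0; mu) for the hypothetical field above."""
    return np.array([
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
        [1.0, 0.0, 0.0, 0.0],
        [0.0, 4.0 + mu, 0.0, 0.0],
    ])


# (R1): f(Rx; mu) + R f(x; mu) = 0 at a few random points.
rng = np.random.default_rng(0)
max_residual = max(
    np.linalg.norm(f(R @ x, 0.3) + R @ f(x, 0.3))
    for x in rng.normal(size=(5, 4))
)

# (R1): I + R is twice the projection onto Fix(R), so its rank is dim Fix(R).
dim_fix = int(np.linalg.matrix_rank(np.eye(4) + R))

# (R3): eigenvalues of D_x f(0; 0) should come in pairs +/- lambda_j.
eigs = np.sort(np.linalg.eigvals(jacobian_at_origin(0.0)).real)

print(max_residual, dim_fix, eigs)
```

For this choice of `f` the origin is an equilibrium for every \(\mu \), as in (R2), and the spectrum at the origin is symmetric about zero, as (R3) requires.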

Assumptions similar to (R3) and (R4) were made in (M1) and (M2) for general multi-dimensional systems and in (A1) and (A2) for four-dimensional systems in Part I:

- (A1): The origin \(x=0\) is a hyperbolic saddle equilibrium (in (1.1) with \(n=2\)) at \(\mu =0\), such that \(\mathrm {D}_x f(0; 0)\) has four real eigenvalues, \(\tilde{\lambda }_1\le \tilde{\lambda }_2< 0<\tilde{\lambda }_3\le \tilde{\lambda }_4\).

- (A2): At \(\mu =0\) the hyperbolic saddle \(x=0\) has a homoclinic orbit \(x^\mathrm {h}(t)\). Moreover, there exists a two-dimensional analytic invariant manifold \(\mathscr {M}\) containing \(x=0\) and \(x^\mathrm {h}(t)\).

In particular, in (R1)–(R4), we do not assume that there exists such an invariant manifold as \(\mathscr {M}\) in (A2). When \(f(0;0)=0\), it follows only from (R3) that the origin \(x=0\) is still a hyperbolic saddle near \(\mu =0\) under some change of coordinates if necessary, as in (R2).

Reversible systems are frequently encountered in applications such as mechanics, fluids and optics, and have attracted much attention in the literature [7]. One of the characteristic properties of reversible systems is that homoclinic orbits to hyperbolic saddles are typically symmetric and, when they are, continue to exist as the parameters are varied, in contrast to the fact that such orbits do not persist in general systems. In [5] saddle-node bifurcations of homoclinic orbits to hyperbolic saddles in reversible systems were discussed and shown to be of codimension one or two depending on whether the homoclinic orbits are symmetric or not. Here we concentrate on the case in which homoclinic orbits are symmetric, so that only one control parameter is enough to treat their bifurcations, as in Hamiltonian systems (see Part I). For asymmetric homoclinic orbits, the arguments of Part I for non-Hamiltonian systems can apply.

The object of this paper is twofold. First, we consider the case of \(n=2\), and modify and extend arguments given in Part I to show in a form applicable to general systems discussed there that if a bifurcation of the homoclinic orbit \(x^\mathrm {h}(t)\) occurs at \(\mu =0\), then the VE of (1.1) around \(x^\mathrm {h}(t)\) at \(\mu =0\),

$$\begin{aligned} \dot{\xi }=\mathrm {D}_x f(x^\mathrm {h}(t);0)\xi , \end{aligned}$$(1.2)

is integrable in the meaning of differential Galois theory under some conditions even if there does not exist such an invariant manifold as \(\mathscr {M}\) in (A2). Here the domain on which Eq. (1.2) is defined has been extended to a neighborhood of \(\mathbb {R}\) in \(\mathbb {C}\). Such an extension is possible since \(f(x;\mu )\) and \(\mathrm {D}_xf(x;\mu )\) are analytic. We assume the following three conditions:

- (B1): The origin \(x=0\) is a hyperbolic saddle equilibrium and has a homoclinic orbit \(x^\mathrm {h}(t)\) in (1.1) with \(n=2\) at \(\mu =0\), such that \(\mathrm {D}_x f(0; 0)\) has four real eigenvalues, \(\tilde{\lambda }_1\le \tilde{\lambda }_2< 0<\tilde{\lambda }_3\le \tilde{\lambda }_4\).

- (B2): The homoclinic orbit \(x^\mathrm {h}(t)\) is expressed as

  $$\begin{aligned} x^\mathrm {h}(t)= {\left\{ \begin{array}{ll} h_+(e^{\lambda _- t}) &{} \text{ for } \mathrm {Re}\,t>0;\\ h_-(e^{\lambda _+ t}) &{} \text{ for } \mathrm {Re}\,t<0, \end{array}\right. } \end{aligned}$$(1.3)

  in a neighborhood U of \(t=0\) in \(\mathbb {C}\), where \(h_\pm :U\rightarrow \mathbb {C}^4\) are certain analytic functions with their derivatives satisfying \(h_\pm '(0)\ne 0\), \(\lambda _+=\tilde{\lambda }_3\) or \(\tilde{\lambda }_4\) and \(\lambda _-=\tilde{\lambda }_1\) or \(\tilde{\lambda }_2\). When the system (1.1) is reversible and \(x^\mathrm {h}(t)\) is symmetric as in (R1)–(R4), we have \(\lambda _\pm =\mp \tilde{\lambda }_j\) for \(j=1\) or 2.

- (B3): The VE (1.2) has a solution \(\xi =\varphi (t)\) such that

  $$\begin{aligned} \begin{aligned}&\qquad \varphi (\lambda _-^{-1}\log z) =a_+(z)z^{\lambda _+'/\lambda _-}+b_{1+}(z)z^{\tilde{\lambda }_1/\lambda _-} +b_{2+}(z)z^{\tilde{\lambda }_2/\lambda _-},\\&\qquad \text{ or }\quad \varphi (\lambda _-^{-1}\log z) =a_+(z)z^{\lambda _+'/\lambda _-}+b_{1+}(z)z+b_{2+}(z)z\log z \end{aligned} \end{aligned}$$(1.4)

  and

  $$\begin{aligned} \begin{aligned}&\varphi (\lambda _+^{-1}\log z) =a_-(z)z^{\lambda _-'/\lambda _+}+b_{1-}(z)z^{\tilde{\lambda }_3/\lambda _+} +b_{2-}(z)z^{\tilde{\lambda }_4/\lambda _+},\\&\text{ or }\quad \varphi (\lambda _+^{-1}\log z) =a_-(z)z^{\lambda _-'/\lambda _+}+b_{1-}(z)z+b_{2-}(z)z\log z \end{aligned} \end{aligned}$$(1.5)

  in \(|z|\ll 1\), where \(a_{\pm }(z)\) and \(b_{j\pm }(z)\), \(j=1,2\), are certain analytic functions in U with \(a_\pm (0)\ne 0\) and \(\lambda _+'=\tilde{\lambda }_3\) or \(\tilde{\lambda }_4\) and \(\lambda _-'=\tilde{\lambda }_1\) or \(\tilde{\lambda }_2\).

Note that if assumptions (A1) and (A2) hold, then so does (B1). In (B3), we have \(\varphi (t)e^{-\lambda _+' t}=\mathscr {O}(1)\) as \(t\rightarrow +\infty \) and \(\varphi (t)e^{\lambda _-' t}=\mathscr {O}(1)\) as \(t\rightarrow -\infty \). Moreover, the second equations in (1.4) and (1.5) hold only if \(\tilde{\lambda }_1=\tilde{\lambda }_2\) and \(\tilde{\lambda }_3=\tilde{\lambda }_4\), respectively. Note that the existence of such an invariant manifold as \(\mathscr {M}\) in (A2) is not assumed. We prove the following theorem.

Theorem 1.1

Let \(n=2\) and suppose that the following condition holds along with (B1)–(B3):

- (C): The VE (1.2) has another linearly independent bounded solution.

Then the VE (1.2) has a triangularizable differential Galois group, when regarded as a complex differential equation with meromorphic coefficients in a desingularized neighborhood \(\Gamma _\mathrm {loc}\) of the homoclinic orbit \(x^\mathrm {h}(t)\) in \(\mathbb {C}^4\).

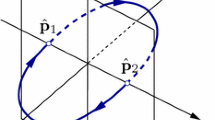

Note that \(\xi =\dot{x}^\mathrm {h}(t)\) is a bounded solution to (1.2). A proof of Theorem 1.1 is given in Sect. 2. Here \(\Gamma _\mathrm {loc}\) is a local Riemann surface that is given by \(\Sigma _-\cup \Sigma _t\cup \Sigma _+\) and taken sufficiently narrow, where \(\Sigma _+\) and \(\Sigma _-\) are, respectively, neighborhoods of \(0_+\) and \(0_-\), which are represented in the temporal parameterization of \(x^\mathrm {h}(t)\) by \(t=+\infty \) and \(-\infty \), and \(\Sigma _t\) is a neighborhood of \(\Gamma _0\setminus (\Sigma _+\cup \Sigma _-)\) (see Fig. 1). See Sect. 2 for more details. This theorem means that under conditions (B1)–(B3) the VE (1.2) is integrable in the meaning of differential Galois theory if condition (C) holds. The statement of Theorem 1.1 holds for general systems, in particular even if the homoclinic orbit is asymmetric in (1.1). It was proved in Part I, where instead of such conditions as (B2) and (B3) the existence of a two-dimensional invariant manifold containing \(x=0\) and \(x^\mathrm {h}(t)\) was assumed. Conditions (B2) and (B3) with appropriate modifications also hold under that more restrictive assumption. We give simple conditions guaranteeing (B2) and (B3) under condition (B1) in the general setting; see Proposition 2.4. As stated below (see also Part I), condition (C) is a necessary and sufficient condition for the occurrence of some bifurcations of the homoclinic orbit under certain nondegeneracy conditions.
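The fact that \(\xi =\dot{x}^\mathrm {h}(t)\) is a bounded solution to the VE can be verified directly on an explicit orbit. The following is a minimal sketch, assuming a hypothetical reversible system on \(\mathbb {R}^4\) whose homoclinic orbit at \(\mu =0\) is \(x^\mathrm {h}(t)=(\sqrt{2}\,\mathrm {sech}\,t,0,-\sqrt{2}\,\mathrm {sech}\,t\tanh t,0)\); the vector field `f`, its Jacobian `Df` and the orbit `xh` are assumptions for illustration, not the example treated in this paper.

```python
import numpy as np


def xh(t):
    """Explicit homoclinic orbit of the hypothetical system at mu = 0."""
    s, th = 1.0 / np.cosh(t), np.tanh(t)
    return np.array([np.sqrt(2.0) * s, 0.0, -np.sqrt(2.0) * s * th, 0.0])


def f(x):
    """Hypothetical reversible vector field at mu = 0 (illustration only)."""
    x1, x2, x3, x4 = x
    r2 = x1**2 + x2**2
    return np.array([x3, x4, x1 - r2 * x1, 4.0 * x2 - r2 * x2])


def Df(x):
    """Jacobian matrix D_x f of the hypothetical field."""
    x1, x2, _, _ = x
    return np.array([
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
        [1.0 - 3.0 * x1**2 - x2**2, -2.0 * x1 * x2, 0.0, 0.0],
        [-2.0 * x1 * x2, 4.0 - x1**2 - 3.0 * x2**2, 0.0, 0.0],
    ])


def xi(t):
    """Candidate VE solution: the time derivative of xh, equal to f(xh(t))."""
    return f(xh(t))


h = 1e-5  # step for central finite differences
ts = np.linspace(-5.0, 5.0, 21)

# xh solves the nonlinear system: d/dt xh = f(xh).
orbit_residual = max(
    np.linalg.norm((xh(t + h) - xh(t - h)) / (2 * h) - f(xh(t))) for t in ts
)
# xi solves the variational equation: d/dt xi = Df(xh(t)) xi.
ve_residual = max(
    np.linalg.norm((xi(t + h) - xi(t - h)) / (2 * h) - Df(xh(t)) @ xi(t))
    for t in ts
)
# Boundedness: xi decays in both time directions.
tail_norm = max(np.linalg.norm(xi(-20.0)), np.linalg.norm(xi(20.0)))

print(orbit_residual, ve_residual, tail_norm)
```

Both residuals are at the level of the finite-difference error, and the tails of \(\dot{x}^\mathrm {h}(t)\) decay exponentially, which is the boundedness used in condition (C).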

Secondly, we extend the Melnikov method of Part I to reversible systems and obtain some theorems on saddle-node, transcritical and pitchfork bifurcations of symmetric homoclinic orbits. In particular, it is shown without the restriction of \(n=2\) that saddle-node, transcritical or pitchfork bifurcations of symmetric homoclinic orbits occur under some nondegeneracy conditions if and only if condition (C) holds and no further linearly independent bounded solution to the VE (1.2) exists. We emphasize that our result is not an immediate extension of Part I: the reversibility of the systems as well as their symmetry is fully exploited to detect codimension-one bifurcations of symmetric homoclinic orbits. Thus, bifurcations of symmetric homoclinic orbits in reversible systems are again shown to be of codimension one. We also illustrate our theory for a four-dimensional system. We perform numerical computations using the computer tool AUTO [3], and demonstrate the usefulness and validity of the theoretical results by comparing them with the numerical ones.

The outline of the paper is as follows: In Sect. 2 we give a proof of Theorem 1.1 in a form applicable to general four-dimensional systems discussed in Part I. In Sect. 3 we extend the Melnikov method and obtain analytic conditions for bifurcations of symmetric homoclinic orbits. Finally, our theory is illustrated for the example along with numerical results in Sect. 4.

2 Algebraic Condition

In this section we restrict ourselves to the case of \(n=2\) and give a proof of Theorem 1.1 in a form applicable to general systems stated above, i.e., without assumptions (R1)–(R4). We first recall Lemma 2.1 of Part I in our setting.

Lemma 2.1

Under condition (B1), there exist a fundamental matrix \(\Phi (t)=(\varphi _1(t),\ldots ,\varphi _4(t))\) of (1.2) and a permutation \(\sigma \) on four symbols \(\{1,2,3,4\}\) such that

where \(k_j=0\) or 1, \(j=1,\ldots ,4\).

We now assume that the hypotheses of Theorem 1.1 hold along with condition (C) and that \(\lambda _-=\tilde{\lambda }_1(<0)\) and \(\lambda _+=\tilde{\lambda }_4(>0)\) for simplicity since the other cases can be treated similarly. We regard the VE (1.2) as a differential equation defined on a neighborhood of \(\mathbb {R}\) in \(\mathbb {C}\), as stated in Sect. 1. From (1.3) in (B2) we easily obtain the following since \(\xi =\dot{x}^\mathrm {h}(t)\) is a solution to (1.2).

Lemma 2.2

We have

in U, where \(h_\pm '(z)\) represent the derivatives of \(h_\pm (z)\).

Let \(U_\mathbb {R}\) denote a neighborhood of \(\mathbb {R}\cup \{\infty \}\) in the Riemann sphere \(\mathbb {P}^1=\mathbb {C}\cup \{\infty \}\) and let \(\Gamma =\{x^\mathrm {h}(t)\mid t\in U_\mathbb {R}\}\). Introducing two points \(0_+\) and \(0_-\) for the double point \(x=0\), we desingularize the curve \(\Gamma _0=\{x=x^\mathrm {h}(t)\mid t\in \mathbb {R}\}\cup \{0\}\) on \(\Gamma \). Here the points \(0_+\) and \(0_-\) are represented in the temporal parameterization by \(t=+\infty \) and \(-\infty \), respectively. Let \(\Sigma _\pm \) be neighborhoods of \(0_\pm \) on \(\Gamma \), and take a sufficiently narrow, simply connected neighborhood \(\Sigma _t\) of \(\Gamma _0\setminus (\Sigma _+\cup \Sigma _-)\). We set \(\Gamma _\mathrm {loc}=\Sigma _-\cup \Sigma _t\cup \Sigma _+\). See Fig. 1. Using the expression (1.3), we introduce three charts on the Riemann surface \(\Gamma _\mathrm {loc}\): a chart \(\Sigma _+\) (resp. \(\Sigma _-\)) near \(0_+\) (resp. near \(0_-\)) with coordinates \(z=\mathrm {e}^{\lambda _- t}\) (resp. \(z=\mathrm {e}^{\lambda _+ t}\)), and a chart \(\Sigma _t\) bridging them with a coordinate t. The transformed VE becomes

in the charts \(\Sigma _{\pm }\) while it has the same form in the chart \(\Sigma _t\) as the original one. We take a sufficiently small surface as \(\Gamma _{\mathrm {loc}}\) such that it contains no other singularity except \(z=0\) in \(\Sigma _{\pm }\). We easily see that \(\mathrm {D}_x f(h_\pm (z);0)\) are analytic in \(\Sigma _\pm \) to obtain the following lemma.

Lemma 2.3

The singularities of the transformed VE for (1.2) at \(z=0\) in \(\Sigma _{\pm }\) are regular. Thus, the transformed VE is Fuchsian on \(\Gamma _{\mathrm {loc}}\).

We are now in a position to prove Theorem 1.1.

Proof of Theorem 1.1

We first see that \(\Phi (\lambda _\mp ^{-1}\log z)\) in \(\Sigma _{\pm }\) is a fundamental matrix of the transformed VE. So the \(4\times 4\) monodromy matrices \(M_\pm \) along small circles of radius \(\epsilon >0\) centered at \(z=0\) in \(\Sigma _{\pm }\) are computed as

Let \(e_j\) denote the vector of which the j-th component is one and the others are zero for \(j=1,\ldots ,4\). Since \(\varphi _1(t)=\Phi (t)e_1\), it follows from Lemma 2.2 that

To prove the theorem, we only have to show that \(M_\pm \) are simultaneously triangularizable, since by Corollary 3.5 of Part I the differential Galois group is triangularizable if so.

Suppose that \(\tilde{\lambda }_1\ne \tilde{\lambda }_2\) and \(\tilde{\lambda }_3\ne \tilde{\lambda }_4\). Then the Jacobian matrix \(\mathrm {D}_xf(0;0)\) is diagonalizable, so that by Lemma 2.1 and condition (C)

for \(|z|\ll 1\), where \(a_{j\pm }\), \(j=1,2\), are certain analytic functions. Hence,

Moreover, since the first equations of (1.4) and (1.5) in (B3) hold, there exists \(v\in \mathbb {C}^4\) such that

Thus, \(M_\pm \) are simultaneously triangularizable.

Next assume that \(\tilde{\lambda }_1=\tilde{\lambda }_2\) but \(\tilde{\lambda }_3\ne \tilde{\lambda }_4\). If the eigenvalue \(\tilde{\lambda }_1=\tilde{\lambda }_2\) is of geometric multiplicity two, then we can prove that \(M_\pm \) are simultaneously triangularizable as in the above case. So we assume that it is of geometric multiplicity one and algebraic multiplicity two. Instead of the first equation of (2.1) we have

for \(|z|\ll 1\), so that Eq. (2.2) holds. Moreover, even if not the first but second equation in (1.4) holds, there exists \(v\in \mathbb {C}^4\) satisfying (2.3) as above. Hence, \(M_\pm \) are simultaneously triangularizable. Similarly, we can show that \(M_\pm \) are simultaneously triangularizable when \(\tilde{\lambda }_3=\tilde{\lambda }_4\) but \(\tilde{\lambda }_1\ne \tilde{\lambda }_2\).

Finally, assume that \(\tilde{\lambda }_1=\tilde{\lambda }_2\) and \(\tilde{\lambda }_3=\tilde{\lambda }_4\). If the eigenvalue \(\tilde{\lambda }_1=\tilde{\lambda }_2\) and/or \(\tilde{\lambda }_3=\tilde{\lambda }_4\) is of geometric multiplicity two, then we can prove that \(M_\pm \) are simultaneously triangularizable as in the above two cases. So we assume that they are of geometric multiplicity one and algebraic multiplicity two. Instead of (2.1) we have

for \(|z|\ll 1\), so that Eq. (2.2) holds. Even if not the first but second equation in (1.4) holds, then there exists \(v\in \mathbb {C}^4\) satisfying (2.3) as above. Hence, \(M_\pm \) are simultaneously triangularizable. \(\square \)

At the end of this section we give simple conditions guaranteeing (B2) and (B3) under condition (B1).

Proposition 2.4

Under condition (B1), conditions (B2) and (B3) hold if one of the following conditions holds:

- (i) There exists a two-dimensional analytic invariant manifold \(\mathscr {M}\) containing \(x = 0\) and \(x^\mathrm {h}(t);\)

- (ii) As well as \(\sigma (3)=2\) and \(k_2,k_3=0\), we can take \(\varphi _1(t)=\dot{x}^\mathrm {h}(t)\) with \(\sigma (1)=4\) and \(k_1,k_4=0\) or condition (B2) holds.

Proof

First, assume condition (i). We recall from Part I that the VE (1.2) can be rewritten in certain coordinates \((\chi ,\eta )\in \mathbb {R}^2\times \mathbb {R}^2\) as

where \(A_\chi (x),A_c(x),A_\eta (x)\) are analytic \(2\times 2\) matrix functions of \(x\in \mathbb {R}^4\), and that the tangent space of \(\mathscr {M}\) is given by \(\eta =0\). See Section 4.1 (especially, Eq. (32)) of Part I. In particular, \(A_\chi (0)\) and \(A_\eta (0)\) have positive and negative eigenvalues, say \(\tilde{\lambda }_1<0<\tilde{\lambda }_3\) and \(\tilde{\lambda }_2<0<\tilde{\lambda }_4\). Hence, \(\dot{x}^\mathrm {h}(t)\) corresponds to a solution to

so that condition (B2) holds. Equation (2.4) also has a solution satisfying

which means that

for \(|z|\ll 1\), where \(a_{\pm }(z)\) and \(b_{\pm }(z)\) are certain analytic functions with \(a_\pm (0)\ne 0\). This implies condition (B3). Similar arguments can apply even if the eigenvalues of \(A_\chi (0)\) and \(A_\eta (0)\) are not \(\tilde{\lambda }_1,\tilde{\lambda }_3\) and \(\tilde{\lambda }_2,\tilde{\lambda }_4\), respectively.

Next, assume condition (ii). If condition (B2) holds, then there exists a solution to the VE (1.2) such that

which implies condition (B3). On the other hand, if \(\varphi _1(t)=\dot{x}^\mathrm {h}(t)\) with \(\sigma (1)=4\) and \(k_1,k_4=0\), then

which implies condition (B2) with \(\lambda _-=\tilde{\lambda }_1\) and \(\lambda _+=\tilde{\lambda }_4\). Thus, we complete the proof. \(\square \)

3 Analytic Conditions

In this section we consider the general case of \(n\ge 2\) and extend the Melnikov method of Part I to reversible systems under assumptions (R1)–(R4). Here we restrict to \(\mathbb {R}\) the domain on which the VE (1.2) is defined.

3.1 Extension of Melnikov’s Method

Consider the general case of \(n\ge 2\) and assume (R1)–(R4). By assumption (R1) there exists a splitting \(\mathbb {R}^{2n}=\mathrm {Fix}(R)\oplus \mathrm {Fix}(-R)\). So we choose a scalar product \(\langle \cdot ,\cdot \rangle \) in \(\mathbb {R}^{2n}\) such that

$$\begin{aligned} \mathrm {Fix}(-R)=\mathrm {Fix}(R)^\bot . \end{aligned}$$

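Since \(f(Rx;0)+Rf(x;0)=0\) and \(x^\mathrm {h}(t)=Rx^\mathrm {h}(-t)\), we have

$$\begin{aligned} \mathrm {D}_x f(x^\mathrm {h}(t);0)R+R\,\mathrm {D}_x f(x^\mathrm {h}(-t);0)=0. \end{aligned}$$(3.1)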

It follows from (3.1) that if \(\xi (t)\) is a solution to (1.2), then so are \(\pm R\xi (-t)\) as well as \(-\xi (t)\). For (1.2), we also say that a solution \(\xi (t)\) is symmetric (resp. antisymmetric) if \(\xi (t)=R\xi (-t)\) (resp. \(\xi (t)=-R\xi (-t)\)), and one can show that it is symmetric (resp. antisymmetric) if and only if it intersects the space \(\mathrm {Fix}(R)\) (resp. \(\mathrm {Fix}(-R)=\mathrm {Fix}(R)^\bot \)) at \(t=0\). We easily see that \(\xi =\dot{x}^\mathrm {h}(t)\) is antisymmetric since \(x^\mathrm {h}(t)=Rx^\mathrm {h}(-t)\) so that

$$\begin{aligned} \dot{x}^\mathrm {h}(t)=-R\dot{x}^\mathrm {h}(-t). \end{aligned}$$

Here we also assume the following.

- (R5): Let \(n_0<2n\) be a positive integer. The VE (1.2) has just \(n_0\) linearly independent bounded solutions, \(\xi =\varphi _1(t)\,(=\dot{x}^\mathrm {h}(t)),\varphi _2(t),\ldots ,\varphi _{n_0}(t)\), such that \(\varphi _j(0)\in \mathrm {Fix}(R)\) for \(j=2,\ldots ,n_0\). If \(n_0=1\), then there is no bounded solution that is linearly independent of \(\xi =\dot{x}^\mathrm {h}(t)\).
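The splitting \(\mathbb {R}^{2n}=\mathrm {Fix}(R)\oplus \mathrm {Fix}(-R)\) and the antisymmetry of \(\dot{x}^\mathrm {h}(t)\) used above can be illustrated with projections. The following is a minimal sketch, assuming \(n=2\), the hypothetical involution \(R=\mathrm {diag}(1,1,-1,-1)\) with the Euclidean scalar product, and the hypothetical orbit \(x^\mathrm {h}(t)=(\sqrt{2}\,\mathrm {sech}\,t,0,-\sqrt{2}\,\mathrm {sech}\,t\tanh t,0)\); none of these choices is taken from this paper.

```python
import numpy as np

# Hypothetical involution; it is orthogonal, so Fix(-R) = Fix(R)^perp
# holds for the Euclidean scalar product.
R = np.diag([1.0, 1.0, -1.0, -1.0])
P_fix = 0.5 * (np.eye(4) + R)   # projection onto Fix(R)
P_anti = 0.5 * (np.eye(4) - R)  # projection onto Fix(-R)


def xh_dot(t):
    """Time derivative of the hypothetical symmetric homoclinic orbit."""
    s, th = 1.0 / np.cosh(t), np.tanh(t)
    r2 = np.sqrt(2.0)
    return np.array([-r2 * s * th, 0.0, r2 * s - 2.0 * r2 * s**3, 0.0])


# Any vector splits uniquely into a Fix(R) part and a Fix(-R) part.
v = np.array([0.3, -1.2, 0.7, 2.0])
v_sym, v_anti = P_fix @ v, P_anti @ v

# Antisymmetry of xh_dot: xh_dot(t) = -R xh_dot(-t) for all t.
antisym_defect = max(
    np.linalg.norm(xh_dot(t) + R @ xh_dot(-t)) for t in np.linspace(-3.0, 3.0, 13)
)

# Consequently xh_dot(0) has no component in Fix(R).
fix_component_at_zero = np.linalg.norm(P_fix @ xh_dot(0.0))

print(v_sym, v_anti, antisym_defect, fix_component_at_zero)
```

The same projections turn an arbitrary solution \(\varphi (t)\) of the VE into the symmetric and antisymmetric combinations \(\varphi (t)\pm R\varphi (-t)\), whose values at \(t=0\) are \(2P\varphi (0)\) with \(P\) the corresponding projection.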

Here by abuse of notation \(\varphi _j(t)\), \(j=1,\ldots ,2n\), are different from those of Lemma 2.1 (such abuse of notation was used in Part I without mention). Note that \(\varphi _1(0)=\dot{x}^\mathrm {h}(0)\in \mathrm {Fix}(-R)=\mathrm {Fix}(R)^\bot \). Thus, \(\varphi _2(t),\ldots ,\varphi _{n_0}(t)\) are symmetric but \(\varphi _1(t)\) is antisymmetric. Using Lemma 2.1 of Part I, under assumptions (R1)–(R5), we can take linearly independent solutions \(\varphi _j(t)\), \(j=n_0+1,\ldots ,2n\), to the VE (1.2) other than those given in (R5) as follows.

Lemma 3.1

There exist linearly independent solutions \(\varphi _j(t)\), \(j=1,\ldots ,2n\), to (1.2) such that they satisfy the following conditions:

Here \(\varphi _j(t)\), \(j=1,\ldots ,n_0\), are given in (R5).

Proof

It follows from Lemma 2.1 of Part I that there are linearly independent solutions \(\varphi _j(t)\), \(j=n_0+1,\ldots ,n+n_0\), to (1.2) such that they are linearly independent of \(\varphi _j(t)\), \(j=1,\ldots ,n_0\), and satisfy the first, second and third conditions in (3.2) except that \(\varphi _j(0)\in \mathrm {Fix}(R)\) or \(\mathrm {Fix}(-R)\) for \(j=n+1,\ldots ,n+n_0\). Note that other linearly independent solutions with \(\xi (0)\in \mathrm {Fix}(R)\) than \(\varphi _j(t)\), \(j=2,\ldots ,n_0\), do not converge to 0 as \(t\rightarrow +\infty \) or \(-\infty \). Let

We easily see that they satisfy the fourth condition in (3.2) and \(\varphi _j(t)\), \(j=1,\ldots ,2n\) are linearly independent.

Let \(\xi =\varphi (t)\) be a solution to (1.2). If \(\varphi (0)\not \in \mathrm {Fix}(-R)\) and \(\varphi (0)\not \in \mathrm {Fix}(R)\), then \(\xi (t)=\varphi (t)+R\varphi (-t)\) and \(\xi (t)=\varphi (t)-R\varphi (-t)\) satisfy \(\xi (0)\in \mathrm {Fix}(R)\) and \(\xi (0)\in \mathrm {Fix}(-R)\), respectively. Hence, we choose \(\varphi _j(t)\), \(j=n+1,\ldots ,n+n_0\), such that \(\varphi _j(0)\in \mathrm {Fix}(R)\cup \mathrm {Fix}(-R)\). Moreover, the subspace spanned by \(\varphi _j(0)\) and \(\varphi _{n+j}(0)\), \(j=n_0+1,\ldots ,n\), intersects each of \(\mathrm {Fix}(R)\) and \(\mathrm {Fix}(-R)\) in an \((n-n_0)\)-dimensional subspace. Thus, one of \(\varphi _j(t)\), \(j=n+1,\ldots ,n+n_0\), is contained in \(\mathrm {Fix}(R)\), and the others are contained in \(\mathrm {Fix}(-R)\) since \(\varphi _1(0)\in \mathrm {Fix}(-R)\), \(\varphi _j(0)\in \mathrm {Fix}(R)\), \(j=2,\ldots ,n_0\), and \(\dim \mathrm {Fix}(R)=\dim \mathrm {Fix}(-R)=n\). This completes the proof. \(\square \)

Let \(\Phi (t)=(\varphi _1(t),\ldots ,\varphi _{2n}(t))\). Then \(\Phi (t)\) is a fundamental matrix to (1.2). Define \(\psi _j(t)\), \(j=1,\ldots ,2n\), by

$$\begin{aligned} \langle \psi _j(t),\varphi _k(t)\rangle =\delta _{jk},\quad j,k=1,\ldots ,2n, \end{aligned}$$(3.4)

where \(\delta _{jk}\) is Kronecker’s delta. The functions \(\psi _j(t)\), \(j=1,\ldots ,2n\), can be obtained by the formula \(\Psi (t)=(\Phi ^*(t))^{-1}\), where \(\Psi (t)=(\psi _1(t),\ldots ,\psi _{2n}(t))\) and \(\Phi ^*(t)\) is the transpose matrix of \(\Phi (t)\). It immediately follows from (R5) and (3.2)–(3.4) that

and

Moreover, \(\Psi (t)\) is a fundamental matrix to the adjoint equation

$$\begin{aligned} \dot{\xi }=-\mathrm {D}_x f(x^\mathrm {h}(t);0)^*\xi . \end{aligned}$$(3.6)

See Section 2.1 of Part I. Note that if \(\xi (t)\) is a solution to (3.6), then so are \(\pm R^*\xi (-t)\) as well as \(-\xi (t)\).
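The relation between \(\Phi (t)\) and \(\Psi (t)=(\Phi ^*(t))^{-1}\) can be checked numerically. The following is a minimal sketch, assuming the Euclidean scalar product and a hypothetical coefficient matrix `A(t)` (the linearization of an illustrative reversible system along \(x_1=\sqrt{2}\,\mathrm {sech}\,t\)); the matrix, the integrator and the step size are assumptions for illustration and do not come from this paper.

```python
import numpy as np


def A(t):
    """Hypothetical coefficient matrix of the VE (illustration only)."""
    s2 = 1.0 / np.cosh(t) ** 2
    return np.array([
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
        [1.0 - 6.0 * s2, 0.0, 0.0, 0.0],
        [0.0, 4.0 - 2.0 * s2, 0.0, 0.0],
    ])


def rk4(rhs, y, t0, t1, steps):
    """Classical fourth-order Runge-Kutta integration of y' = rhs(t, y)."""
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = rhs(t, y)
        k2 = rhs(t + h / 2, y + h / 2 * k1)
        k3 = rhs(t + h / 2, y + h / 2 * k2)
        k4 = rhs(t + h, y + h * k3)
        y = y + h / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += h
    return y


# Fundamental matrix of the VE and of the adjoint equation, both with
# identity initial data so that <psi_j(0), phi_k(0)> = delta_jk.
Phi = rk4(lambda t, Y: A(t) @ Y, np.eye(4), 0.0, 1.0, 1000)
Psi = rk4(lambda t, Y: -A(t).T @ Y, np.eye(4), 0.0, 1.0, 1000)

pairing = Psi.T @ Phi  # entries are <psi_j(t), phi_k(t)> at t = 1
inverse_transpose = np.linalg.inv(Phi).T

print(np.max(np.abs(pairing - np.eye(4))))
```

The pairing stays equal to the identity up to integration error, so the columns of the adjoint fundamental matrix coincide with those of \((\Phi ^*(t))^{-1}\).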

As in Part I, we look for a symmetric homoclinic orbit of the form

satisfying \(x(0)\in \mathrm {Fix}(R)\) in (1.1) when \(\mu \ne 0\), where \(\alpha =(\alpha _1,\ldots ,\alpha _{n_0-1})\). Here the \(\mathscr {O}(\alpha )\)-terms are eliminated in (3.7) if \(n_0=1\). Let \(\kappa \) be a positive real number such that \(\kappa <\frac{1}{4}\lambda _1\), and define two Banach spaces as

where the maximum of the suprema is taken as a norm of each space. We have the following result as in Lemma 2.3 of Part I.

Lemma 3.2

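The nonhomogeneous VE,

$$\begin{aligned} \dot{\xi }=\mathrm {D}_x f(x^\mathrm {h}(t);0)\xi +\eta (t), \end{aligned}$$(3.8)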

with \(\eta \in \hat{\mathscr {Z}}^0\), has a solution in \(\hat{\mathscr {Z}}^1\) if and only if

Moreover, if condition (3.9) holds, then there exists a unique solution to (3.8) satisfying \(\langle \psi _j(0),\xi (0)\rangle =0\), \(j=1,\ldots ,n_0\), in \(\hat{\mathscr {Z}}^1\).

Proof

As in Lemma 2.2 of Part I, we see that if \(z\in \hat{\mathscr {Z}}^1\), then

Hence, if Eq. (3.8) has a solution \(\xi \in \hat{\mathscr {Z}}^1\), then

for \(j=2,\ldots ,n_0\). Thus, the necessity of the first part is proven.

Assume that condition (3.9) holds. We easily see that for \(\eta \in \hat{\mathscr {Z}}^0\)

while

Hence, by variation of constants we obtain a solution to (3.8) as

which is contained in \(\hat{\mathscr {Z}}^1\) since by (3.3) and (3.5)

Note that \(\hat{\xi }(0)\in \mathrm {Fix}(R)\) yields \(\hat{\xi }\in \hat{\mathscr {Z}}^1\) since if \(\xi (t)\) is a solution to (3.8), then so is \(R\xi (-t)\). Thus the sufficiency of the first part is proven.

We turn to the second part. Obviously, \(\langle \psi _j(0),\hat{\xi }(0)\rangle =0\), \(j=1,\ldots ,n_0\). In addition, if \(\xi =\xi (t)\) is a solution to (3.8) in \(\hat{\mathscr {Z}}^1\), then \(\xi (0)\in \mathrm {Fix}(R)\) so that \(\langle \psi _1(0),\xi (0)\rangle =0\) by \(\mathrm {Fix}(-R)^\bot =\mathrm {Fix}(R)\). Moreover, any solution to (3.8) is represented as \(\xi (t)=\hat{\xi }(t)+\sum _{j=1}^{2n}d_j\varphi _j(t)\), where \(d_j\in \mathbb {R}\), \(j=1,\ldots ,2n\), are constants, but one has \(d_j=0\), \(j=1,\ldots ,2n\), if it is contained in \(\hat{\mathscr {Z}}^1\) and satisfies \(\langle \psi _j(0),\xi (0)\rangle =0\), \(j=2,\ldots ,n_0\). This completes the proof. \(\square \)

Let

which is also a Banach space. Define a differentiable function \(F:\hat{\mathscr {Z}}_0^1\times \mathbb {R}^{n_0-1}\times \mathbb {R}\rightarrow \hat{\mathscr {Z}}^0\) as

Note that for \(z\in \hat{\mathscr {Z}}_0^1\)

A solution \(z\in \hat{\mathscr {Z}}_0^1\) to

for \((\alpha ,\mu )\) fixed gives a symmetric homoclinic orbit to \(x=0\).

We now proceed as in Section 2.1 of Part I with taking the reversibility of (1.1) into account. Define a projection \(\Pi :\hat{\mathscr {Z}}^0\rightarrow \hat{\mathscr {Z}}^1\) by

where \(q:\mathbb {R}\rightarrow \mathbb {R}\) is a continuous function satisfying

Note that for \(z\in \hat{\mathscr {Z}}^0\)

Using Lemma 3.2 and the implicit function theorem, we can show that there are a neighborhood U of \((\alpha ,\mu )=(0,0)\) and a differentiable function \(\bar{z}:U\rightarrow \hat{\mathscr {Z}}_0^1\) such that \(\bar{z}(0,0)=0\) and

for \((\alpha ,\mu )\in U\), where “\(\mathrm {id}\)” represents the identity.

Let

We can prove the following theorem as in Theorem 2.4 of Part I (see also Theorem 5 of [4]).

Theorem 3.3

Under assumptions (R1)–(R5) with \(n_0\ge 1\), suppose that \(\bar{F}(0;0)=0\). Then for each \((\alpha ,\mu )\) sufficiently close to (0, 0) Eq. (1.1) admits a unique symmetric homoclinic orbit to the origin of the form (3.7).

Henceforth we apply Theorem 3.3 to obtain persistence and bifurcation theorems for symmetric homoclinic orbits in (1.1) with \(n\ge 2\), as in Sections 2.2 and 2.3 of Part I.

3.2 Persistence and Bifurcations of Symmetric Homoclinic Orbits

We first assume that \(n_0=1\), which means that condition (C) does not hold for \(n\ge 2\). Since \(\Pi \,z=0\) for \(z\in \hat{\mathscr {Z}}^0\) and Eq. (3.13) has a solution \(\bar{z}(\mu )\) on a neighborhood U of \(\mu =0\), we immediately obtain the following result from the above argument, as in Theorem 2.5 of Part I.

Theorem 3.4

Under assumptions (R1)–(R5) with \(n_0=1\), there exists a symmetric homoclinic orbit on some open interval \(I\subset \mathbb {R}\) including \(\mu =0\).

Remark 3.5

Theorem 3.4 implies that if condition (C) does not hold for \(n\ge 2\), then the homoclinic orbit \(x^\mathrm {h}(t)\) persists, i.e., no bifurcation occurs, as stated in Sect. 1.

We now assume that \(n_0=2\), which means that condition (C) holds for \(n\ge 2\) and no further linearly independent bounded solution to the VE (1.2) exists. Define two constants \(a_2,b_2\) as

(cf. Eq. (19) of Part I). We obtain the following result as in Theorem 2.7 of Part I.

Theorem 3.6

Under assumptions (R1)–(R5) with \(n_0=2\), suppose that \(a_2,b_2\ne 0\). Then for some open interval I including \(\mu =0\) there exists a differentiable function \(\phi :I\rightarrow \mathbb {R}\) with \(\phi (0)=0\), \(\phi '(0)=0\) and \(\phi ''(0)\ne 0\), such that a symmetric homoclinic orbit of the form (3.7) exists for \(\mu =\phi (\alpha )\), i.e., a saddle-node bifurcation of symmetric homoclinic orbits occurs at \(\mu =0\). Moreover, it is supercritical and subcritical if \(a_2b_2<0\) and \(>0\), respectively. See Fig. 2.
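The sign criterion in Theorem 3.6 can be illustrated with a truncated bifurcation equation. The following is a minimal sketch, assuming that the reduced equation has the leading-order form \(a_2\mu +b_2\alpha ^2=0\); this form is an assumption chosen to be consistent with the stated criterion, not a formula quoted from the text, and the function name and sample coefficients are hypothetical.

```python
import math


def saddle_node_branches(a2, b2, mu):
    """Real roots alpha of the truncated equation a2*mu + b2*alpha**2 = 0.

    Assumed leading-order model only; higher-order terms are neglected.
    """
    if a2 == 0 or b2 == 0:
        raise ValueError("nondegeneracy requires a2 != 0 and b2 != 0")
    alpha_sq = -a2 * mu / b2
    if alpha_sq < 0:
        return []      # no symmetric homoclinic orbit in this model
    if alpha_sq == 0:
        return [0.0]   # the bifurcation point itself
    root = math.sqrt(alpha_sq)
    return [-root, root]


mus = (-0.04, 0.0, 0.04)
# a2*b2 < 0: two orbits appear for mu > 0.
supercritical = [len(saddle_node_branches(1.0, -1.0, mu)) for mu in mus]
# a2*b2 > 0: two orbits appear for mu < 0.
subcritical = [len(saddle_node_branches(1.0, 1.0, mu)) for mu in mus]

print(supercritical, subcritical)
```

In this model the pair of orbits exists on the side \(\mu >0\) when \(a_2b_2<0\) and on the side \(\mu <0\) when \(a_2b_2>0\), matching the distinction between the supercritical and subcritical cases.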

We next assume the following instead of (R4).

- (R4’): The equilibrium \(x=0\) has a symmetric homoclinic orbit \(x^\mathrm {h}(t;\mu )\) in an open interval \(I_{0}\ni \mu =0\). Moreover, \(\langle \psi _{n+2}(t),\dot{x}^\mathrm {h}(t;\mu )\rangle =0\) for any \(t\in \mathbb {R}\) and \(\mu \in I_{0}\).

Under assumption (R4’) we have

so that

In this situation we cannot apply Theorem 3.6. Let \(\xi =\xi ^\mu (t)\) be the unique solution to

in \(\hat{\mathscr {Z}}_0^1\), and define

where \(x^\mathrm {h}(t)=x^\mathrm {h}(t;0)\) (cf. Eq. (20) of Part I).

Theorem 3.7

Under assumptions (R1)–(R3), (R4’) and (R5) with \(n_0=2\), suppose that \(\bar{a}_2,b_2\ne 0\). Then for some open interval \(I\,{(\subset I_0)}\) including \(\mu =0\) there exists a differentiable function \(\phi :I\rightarrow \mathbb {R}\) with \(\phi (0)=0\) and \(\phi '(0)\ne 0\), such that a symmetric homoclinic orbit of the form (3.7) different from \(x^\mathrm {h}(t;\mu )\) exists for \(\alpha =\phi (\mu )\) with \(\mu \ne 0\), i.e., a transcritical bifurcation of symmetric homoclinic orbits occurs at \(\mu =0\). See Fig. 3.

Proof

Differentiating (3.13) with respect to \(\alpha \) and using (3.11), we have

at \((\alpha ,\mu )=(0,0)\), i.e., \(\mathrm {D}_\alpha \bar{z}(0;0)(t)\) is a solution of (1.2), so that \(\mathrm {D}_\alpha \bar{z}(0;0)(t)=0\) by Lemma 3.2. Using this fact, (3.10) and (3.16), we compute (3.14) as

as in the proof of Theorem 2.7 of Part I. Since \(\mathrm {D}_\alpha \bar{F}_1(0,0)=0\) and \(\mathrm {D}_\alpha \mathrm {D}_\mu \bar{F}_1(0,0)\ne 0\), we apply the implicit function theorem to show that there exist an open interval \(I\,(\ni 0)\,{(\subset I_0)}\) and a differentiable function \(\bar{\phi }:I\rightarrow \mathbb {R}\) such that \(\bar{F}(\bar{\phi }(\alpha ),\alpha )=0\) for \(\alpha \in I\) with \(\bar{\phi }(0)=0\) and \(\bar{\phi }'(0)\ne 0\). This implies the result along with Theorem 3.3. \(\square \)

Remark 3.8

For the class of systems discussed in Part I, including Hamiltonian systems, we can prove a result similar to Theorem 3.7.

Finally we consider the \(\mathbb {Z}_2\)-equivalent or equivariant case for \(n_0=2\), and assume the following.

- (R6): Eq. (1.1) is \(\mathbb {Z}_2\)-equivalent or equivariant, i.e., there exists a \(2n\times 2n\) matrix S such that \(S^2=\mathrm {id}_{2n}\) and \(Sf(x;\mu )=f(Sx;\mu )\).

See Section 2.3 of Part I or Section 7.4 of [6] for more details on \(\mathbb {Z}_2\)-equivalent or equivariant systems. In particular, if \(x=\bar{x}(t)\) is a solution to (1.1), then so is \(x=S\bar{x}(t)\). We say that the pair \(\bar{x}(t)\) and \(S\bar{x}(t)\) are S-conjugate if \(\bar{x}(t)\ne S\bar{x}(t)\). The space \(\mathbb {R}^{2n}\) can be decomposed into a direct sum as

\(\mathbb {R}^{2n}=X^+\oplus X^-,\)

where \(Sx=x\) for \(x\in X^+\) and \(Sx=-x\) for \(x\in X^-\). We also need the following assumption.

- (R7): We have \(X^-=(X^+)^\bot \). For every \(t\in \mathbb {R}\), \(x^\mathrm {h}(t),\psi _{n+1}(t)\in X^+\) and \(\varphi _2(t),\psi _{n+2}(t)\in X^-\).

In Part I, we implicitly assumed that \(X^-=(X^+)^\bot \). Recall that the scalar product in \(\mathbb {R}^{2n}\) was already chosen such that \(\mathrm {Fix}(-R)=\mathrm {Fix}(R)^\bot \).
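The splitting of the phase space into \(X^+\) and \(X^-\) can be made concrete with the projections \((\mathrm {id}\pm S)/2\). The Python sketch below takes, as an assumed example in \(\mathbb {R}^4\), the diagonal involution \(S(x_1,x_2,x_3,x_4)=(x_1,-x_2,x_3,-x_4)\), which is consistent with the subspaces \(X^+=\{x_2,x_4=0\}\) and \(X^-=\{x_1,x_3=0\}\) used in Section 4. The helper names are hypothetical.

```python
# Diagonal entries of the assumed involution S on R^4.
S_DIAG = (1.0, -1.0, 1.0, -1.0)


def apply_S(x):
    """Apply the diagonal involution S to a vector x in R^4."""
    return [s * xi for s, xi in zip(S_DIAG, x)]


def split(x):
    """Return (x_plus, x_minus) with S x_plus = x_plus and S x_minus = -x_minus."""
    Sx = apply_S(x)
    x_plus = [(xi + sxi) / 2 for xi, sxi in zip(x, Sx)]    # projection (id + S)/2
    x_minus = [(xi - sxi) / 2 for xi, sxi in zip(x, Sx)]   # projection (id - S)/2
    return x_plus, x_minus


def dot(u, v):
    """Standard scalar product on R^4."""
    return sum(ui * vi for ui, vi in zip(u, v))


x = [1.0, 2.0, 3.0, 4.0]
x_plus, x_minus = split(x)
```

Because this S is symmetric with respect to the standard scalar product, the two components are orthogonal, which is the condition \(X^-=(X^+)^\bot \) required in (R7).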

Assumption (R7) also means that \(\varphi _1(t)\in X^+\). Moreover, a symmetric homoclinic orbit of the form (3.7) has an S-conjugate counterpart for \(\alpha \ne 0\) since it is not included in \(X^+\). In this situation, we have \(a_2,b_2=0\) in Theorems 3.6 and 3.7, as in Lemma 2.8 of Part I, and cannot apply these theorems.

Let \(\xi =\xi ^\alpha (t)\) be the unique solution to

in \(\hat{\mathscr {Z}}_0^1\), and define

(cf. Eq. (20) of Part I). We obtain the following result as in Theorem 2.9 of Part I.

Theorem 3.9

Under assumptions (R1)–(R7) with \(n_0=2\), suppose that \(\bar{a}_2,\bar{b}_2\ne 0\). Then for \(j=1,2\) there exist an open interval \(I_j\ni 0\) and a differentiable function \(\phi _j:I_j\rightarrow \mathbb {R}\) with \(\phi _j(0)=0\), \(\phi _2'(0)=0\), \(\phi _2''(0)\ne 0\) and \(\phi _2(\alpha )=\phi _2(-\alpha )\) for \(\alpha \in I_2\), such that a symmetric homoclinic orbit exists on \(X^+\) for \(\mu =\phi _1(\mu _2)\) and an S-conjugate pair of symmetric homoclinic orbits exist for \(\mu =\phi _2(\alpha )\): a pitchfork bifurcation of homoclinic orbits occurs. Moreover, it is supercritical and subcritical if \(\bar{a}_2\bar{b}_2<0\) and \(>0\), respectively. See Fig. 4.
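To visualize the count of orbits on either side of the pitchfork, the following Python sketch assumes that the reduced equation is, at leading order, \(\alpha (\bar{a}_2\mu +\bar{b}_2\alpha ^2)=0\). This truncation is an assumption made for illustration and is not quoted from the theorem; the function names are hypothetical. The root \(\alpha =0\) stands for the orbit on \(X^+\) and the roots \(\pm \alpha \ne 0\) for the S-conjugate pair.

```python
import math


def conjugate_pair(abar2, bbar2, mu):
    """Nonzero roots alpha of alpha*(abar2*mu + bbar2*alpha**2) = 0 (truncated model)."""
    if abar2 == 0 or bbar2 == 0:
        raise ValueError("Theorem 3.9 requires abar2 != 0 and bbar2 != 0")
    ratio = -abar2 * mu / bbar2
    if ratio <= 0:
        return []              # only the orbit on X^+ exists
    root = math.sqrt(ratio)
    return [-root, root]       # the pair is symmetric under alpha -> -alpha


def count_orbits(abar2, bbar2, mu):
    """One orbit on X^+ (alpha = 0) plus the S-conjugate pair, if present."""
    return 1 + len(conjugate_pair(abar2, bbar2, mu))
```

In this model the pair exists for \(\mu >0\) when \(\bar{a}_2\bar{b}_2<0\) and for \(\mu <0\) when \(\bar{a}_2\bar{b}_2>0\), matching the supercritical and subcritical cases of the theorem.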

From Theorems 3.6, 3.7 and 3.9 we see that if condition (C) holds for \(n\ge 2\), then a saddle-node, transcritical or pitchfork bifurcation occurs under suitable nondegeneracy conditions, as stated in Sect. 1.

4 Example

We now illustrate our theory for the four-dimensional system

where \(s>0\) and \(\beta _j\), \(j=1,\ldots ,4\), are constants. Similar systems were treated in Part I and [12,13,14] (although \(s<0\) in [13]). Eq. (4.1) is reversible with the involution

for which \(\mathrm {Fix}(R)=\{(x_1,x_2,x_3,x_4)\in \mathbb {R}^4\mid x_3,x_4=0\}\), and has an equilibrium at the origin \(x=0\). Thus, assumptions (R1) and (R2) hold. The Jacobian matrix of the right-hand side of (4.1) at \(x=0\) has two pairs of positive and negative eigenvalues with the same absolute values so that the origin \(x=0\) is a hyperbolic saddle. Thus, assumption (R3) holds.
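Reversibility of a candidate vector field can be checked numerically through the standard identity \(f(Rx)+Rf(x)=0\). The Python sketch below assumes the linear involution \(R(x_1,x_2,x_3,x_4)=(x_1,x_2,-x_3,-x_4)\), whose fixed set is the \(\mathrm {Fix}(R)\) given above. The vector field `toy_field` is a hypothetical stand-in chosen for illustration and is not Eq. (4.1); `damped_field` is a hypothetical non-reversible variant.

```python
import random


def R(x):
    """Assumed involution with Fix(R) = {x3 = x4 = 0}."""
    x1, x2, x3, x4 = x
    return (x1, x2, -x3, -x4)


def toy_field(x, s=2.0):
    """Hypothetical reversible field (a stand-in, NOT Eq. (4.1))."""
    x1, x2, x3, x4 = x
    return (x3, x4, x1 - x1 ** 3, s * x2 - x2 ** 3)


def damped_field(x, s=2.0):
    """Same field with a damping term, which breaks reversibility."""
    x1, x2, x3, x4 = x
    return (x3, x4, x1 - x1 ** 3 - 0.1 * x3, s * x2 - x2 ** 3)


def reversibility_defect(f, x):
    """Largest component of f(Rx) + R f(x); zero for a reversible field."""
    return max(abs(a + b) for a, b in zip(f(R(x)), R(f(x))))


def is_reversible(f, trials=100, tol=1e-12, seed=0):
    """Test the identity f(Rx) + R f(x) = 0 at random sample points."""
    rng = random.Random(seed)
    points = [tuple(rng.uniform(-2.0, 2.0) for _ in range(4)) for _ in range(trials)]
    return all(reversibility_defect(f, p) <= tol for p in points)
```

A sampling test of this kind can only refute reversibility, not prove it; for a polynomial field the identity is better verified symbolically.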

Suppose that \(\beta _2=0\). The \((x_1,x_3)\)-plane is invariant under the flow of (4.1) and there exists a pair of symmetric homoclinic orbits

to \(x=0\). Thus, assumption (R4) holds as well as conditions (B2) and (B3) by Proposition 2.4. Henceforth we only treat the homoclinic orbit \(x_+^\mathrm {h}(t)\) for simplicity and denote it by \(x^\mathrm {h}(t)\). Note that a pair of symmetric homoclinic orbits also exists on the \((x_2,x_4)\)-plane. The VE (1.2) around \(x=x^\mathrm {h}(t)\) for (4.1) is given by

As discussed in Section 5 of Part I (see also [12]), Eq. (4.3b) has a bounded symmetric solution, so that assumption (R5) holds with \(n_0=2\), if and only if

while Eq. (4.3a) always has a bounded solution corresponding to \(\xi =\dot{x}^\mathrm {h}(t)\). The bounded symmetric solution \((\bar{\xi }_2(t),\bar{\xi }_4(t))\) to (4.3b) is given by

for \(\ell =0\),

for \(\ell =1\),

for \(\ell =2\) and \(\bar{\xi }_4(t)=\dot{\bar{\xi }}_2(t)\) (see Appendix A of Part I). Note that Eq. (4.3b) has an asymmetric bounded solution if the first equation (4.4) holds for \(\ell \in \frac{1}{2}\mathbb {N}\setminus \mathbb {N}\). Moreover, if condition (4.4) holds, then the differential Galois group of the VE given by (4.3a) and (4.3b) is triangularizable. See Fig. 7 of Part I for the dependence of \(\beta _1\) satisfying (4.4) on s (the definition of \(\ell \) there is different from here: \(\ell \) is replaced with \(2\ell \)). When condition (4.4) holds, we have

and

Fix the values of \(\beta _1\) and \(\beta _3\ne 0\) such that Eq. (4.4) holds. Take \(\mu =\beta _2\) as a control parameter. Eq. (3.15) becomes

See Appendix A of Part I for analytic expressions of these integrals for \(\ell =0,1,2\), which correspond to \(\ell =0,2,4\) there. Applying Theorem 3.6, we see that a saddle-node bifurcation of symmetric homoclinic orbits occurs at \(\beta _2=0\) if \(a_2b_2\ne 0\), which holds for almost all values of s when \(\beta _3\ne 0\) and \(0\le \ell \le 2\).

We next assume that \(\beta _2=0\). Then assumption (R4’) holds. Take \(\mu =\beta _1\) as a control parameter. Since \(\mathrm {D}_\mu f(x^\mathrm {h}(t);0)=0\), the solution to (3.17) in \(\hat{\mathscr {Z}}_0^1\) is \(\xi ^{\beta _1}(t)=0\). Eq. (3.18) becomes

Applying Theorem 3.7, we see that a transcritical bifurcation of symmetric homoclinic orbits occurs at the values of \(\beta _1\) given by (4.4) if \(b_2\ne 0\).

We next assume that \(\beta _2,\beta _3=0\). Then Eq. (4.1) is \(\mathbb {Z}_2\)-equivariant with the involution

and assumptions (R6) and (R7) hold. In particular, \(X^+=\{x_2,x_4=0\}\) and \(X^-=\{x_1,x_3=0\}\). Since

we write (3.20) as

where \(\xi _1^\alpha (t)\) is the first component of the solution to (3.19) in \(\hat{\mathscr {Z}}_0^1\) and given by

and \(\varphi _{jk}(t)\) and \(\psi _{jk}(t)\) are the kth components of \(\varphi _j(t)\) and \(\psi _j(t)\), respectively (the corresponding formula in Part I had a small error). We compute (4.5) as

where

and

See Section 7 and Appendix B of [14] for derivation of these expressions. Here condition (4.4) has been substituted for each \(\ell \ge 0\). In particular, we see that \(\bar{b}_2\ne 0\) except for a finite number of values of \(s>0\), for each \(\ell \ge 0\). Applying Theorem 3.9, we see that a pitchfork bifurcation of symmetric homoclinic orbits occurs at the values of \(\beta _1\) given by (4.4) if \(\bar{b}_2\ne 0\).

Bifurcation diagrams for \(s=2\) and \(\beta _3=4\): a \(\ell =0\); b \(\ell =1\); c \(\ell =2\). Here \(\beta _2\) is taken as a control parameter and \(\beta _1\) satisfies (4.4) for each value of \(\ell \)

Profiles of symmetric homoclinic orbits on the branches for \(s=2\), \(\beta _2=0\) and \(\beta _3=4\): a1 \(\beta _1=1.5\) and \(\ell =0\); a2 \(\beta _1=2\) and \(\ell =0\); b1 \(\beta _1=7.7\) and \(\ell =1\); b2 \(\beta _1=7.3\) and \(\ell =1\); c1 \(\beta _1=17.3\) and \(\ell =2\); c2 \(\beta _1=17.4\) and \(\ell =2\)

Finally we give numerical computations for (4.1). We take \(s=2\) so that Eq. (4.4) gives \(\beta _1=1.70710678\ldots \) for \(\ell =0\), \(\beta _1=7.5355339\ldots \) for \(\ell =1\) and \(\beta _1=17.36396103\ldots \) for \(\ell =2\) as the value of \(\beta _1\) for which assumption (R5) holds with \(n_0=2\). To numerically compute symmetric homoclinic orbits, we used the computer tool AUTO [3] to solve the boundary value problem of (4.1) with the boundary conditions

where \(T=20\) and \(L_\mathrm {s}\) is the \(2\times 4\) matrix consisting of two row eigenvectors with negative eigenvalues for the Jacobian matrix of (4.1) at the origin,

Figure 5 shows bifurcation diagrams for \(\beta _3=4\) when \(\beta _1\) is fixed and satisfies (4.4) for \(\ell =0,1,2\) and \(\beta _2\) is taken as a control parameter. In Fig. 5(c) the maximum and minimum of the \(x_2\)-component are plotted as the ordinate when \(x_2(0)\) is positive and negative, respectively. We observe that a saddle-node bifurcation occurs at \(\beta _2=0\) while another saddle-node bifurcation occurs at a different value of \(\beta _2\). The \(x_2\)-components of symmetric homoclinic orbits born at the bifurcation point \(\beta _2=0\) in Fig. 5 are plotted in Fig. 6. We also see that they have \(\ell +1\) extreme points like the corresponding bounded solutions to (4.3b) when \(\beta _1\) satisfies (4.4) with \(\ell =0,1,2\).
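The three values of \(\beta _1\) quoted above for \(s=2\) can be reproduced with a short Python check. The closed form used below, \(\beta _1=(\sqrt{s}+2\ell )(\sqrt{s}+2\ell +1)/2\), is an assumption inferred here from those quoted numbers; it is not taken from the surviving text of condition (4.4), and the function name is hypothetical.

```python
import math


def beta1_critical(s, ell):
    """Candidate value of beta_1 for a given s > 0 and integer ell >= 0.

    ASSUMED closed form, inferred from the three values quoted for s = 2;
    it is not a formula copied from the paper.
    """
    if s <= 0 or ell < 0:
        raise ValueError("expected s > 0 and ell >= 0")
    root = math.sqrt(s)
    return (root + 2 * ell) * (root + 2 * ell + 1) / 2


# Values quoted in the text for s = 2 and ell = 0, 1, 2.
QUOTED = {0: 1.70710678, 1: 7.5355339, 2: 17.36396103}

# Difference between the assumed closed form and each quoted value.
deviations = {ell: abs(beta1_critical(2.0, ell) - value) for ell, value in QUOTED.items()}
```

Under this assumption the deviations stay within the rounding of the quoted digits, so the formula can serve as a convenient way to generate starting values of \(\beta _1\) for other values of s, subject to checking against condition (4.4) itself.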

Figure 7 shows bifurcation diagrams for \(\beta _2=0\) and \(\beta _3=4\) when \(\beta _1\) is taken as a control parameter. Note that there exists a branch of \(x_2(= x_4)=0\), which corresponds to the symmetric homoclinic orbit (4.2), for all values of \(\beta _1\). We observe that a transcritical bifurcation occurs at the values of \(\beta _1\) satisfying (4.4) for \(\ell =0,1,2\) while another bifurcation occurs at a value of \(\beta _1\) in Fig. 7(a): Eq. (4.1) is \(\mathbb {Z}_2\)-equivariant with the involution

and has a symmetric homoclinic orbit with \((x_1,x_3)=(0,0)\) for \(\beta _2=0\), and a pitchfork bifurcation at which a pair of symmetric homoclinic orbits with \((x_1,x_3)\ne (0,0)\) are born occurs there. The \(x_2\)-components of symmetric homoclinic orbits born at the bifurcation points in Fig. 7 are plotted in Fig. 8.

Figure 9 shows bifurcation diagrams for \(\beta _2,\beta _3=0\) when \(\beta _1\) is taken as a control parameter. Note that there exists a branch of \(x_2(= x_4)=0\) for all values of \(\beta _1\), and a pair of branches of solutions which are symmetric about \(x_2=0\). We observe that a pitchfork bifurcation occurs at values of \(\beta _1\) satisfying (4.4) for \(\ell =0,1,2\). The \(x_2\)-components of symmetric homoclinic orbits born at the bifurcation in Fig. 9 are also plotted in Fig. 10.

References

1. Blázquez-Sanz, D., Yagasaki, K.: Analytic and algebraic conditions for bifurcations of homoclinic orbits I: Saddle equilibria. J. Differ. Equ. 253, 2916–2950 (2012)

2. Crespo, T., Hajto, Z.: Algebraic Groups and Differential Galois Theory. American Mathematical Society, Providence, RI (2011)

3. Doedel, E., Oldeman, B.E.: AUTO-07P: Continuation and Bifurcation Software for Ordinary Differential Equations (2012). http://cmvl.cs.concordia.ca/auto

4. Gruendler, J.: Homoclinic solutions for autonomous dynamical systems in arbitrary dimension. SIAM J. Math. Anal. 23, 702–721 (1992)

5. Knobloch, J.: Bifurcation of degenerate homoclinic orbits in reversible and conservative systems. J. Dynam. Differ. Equ. 9, 427–444 (1997)

6. Kuznetsov, Y.A.: Elements of Applied Bifurcation Theory, 3rd edn. Springer, New York (2004)

7. Lamb, J.S.W., Roberts, J.A.G.: Time-reversal symmetry in dynamical systems: a survey. Phys. D 112, 1–39 (1998)

8. Van der Put, M., Singer, M.F.: Galois Theory of Linear Differential Equations. Springer, New York (2003)

9. Sandstede, B.: Stability of travelling waves. In: Fiedler, B. (ed.) Handbook of Dynamical Systems, vol. 2, Chapter 18, pp. 983–1055. North-Holland, Amsterdam (2002)

10. Vanderbauwhede, A., Fiedler, B.: Homoclinic period blow-up in reversible and conservative systems. Z. Angew. Math. Phys. 43, 292–318 (1992)

11. Yagasaki, K.: Analytic and algebraic conditions for bifurcations of homoclinic orbits in reversible systems. In: Ei, S.-I., Kawashima, S., Kimura, M., Mizumachi, T. (eds.) Nonlinear Dynamics in Partial Differential Equations, pp. 229–234. Mathematical Society of Japan, Tokyo (2015)

12. Yagasaki, K., Stachowiak, T.: Bifurcations of radially symmetric solutions to a coupled elliptic system with critical growth in \({\mathbb{R}}^d\) for \(d=3,4\). J. Math. Anal. Appl. 484, 123726 (2020)

13. Yagasaki, K., Wagenknecht, T.: Detection of symmetric homoclinic orbits to saddle-centres in reversible systems. Phys. D 214, 169–181 (2006)

14. Yagasaki, K., Yamazoe, S.: Bifurcations and spectral stability of solitary waves in coupled nonlinear Schrödinger equations (2021). arXiv:2005.10317

Acknowledgements

This work was partially supported by the JSPS KAKENHI Grant Numbers 25400168 and 17H02859.


Cite this article

Yagasaki, K. Analytic and Algebraic Conditions for Bifurcations of Homoclinic Orbits II: Reversible Systems. J Dyn Diff Equat 35, 1863–1884 (2023). https://doi.org/10.1007/s10884-021-10091-5


DOI: https://doi.org/10.1007/s10884-021-10091-5