Abstract

We consider the semi-linear beam equation on the d-dimensional irrational torus with smooth nonlinearity of order \(n-1\), with \(n\ge 3\) and \(d\ge 2\). If \(\varepsilon \ll 1\) is the size of the initial datum, we prove that the lifespan \(T_\varepsilon \) of solutions is \(O(\varepsilon ^{-A(n-2)^-})\) where \(A\equiv A(d,n)= 1+\frac{3}{d-1}\) when n is even and \(A= 1+\frac{3}{d-1}+\max (\frac{4-d}{d-1},0)\) when n is odd. For instance, for \(d=2\) and \(n=3\) (quadratic nonlinearity) we obtain \(T_\varepsilon =O(\varepsilon ^{-6^-})\), much better than \(O(\varepsilon ^{-1})\), the time given by the local existence theory. The irrationality of the torus makes the set of differences between pairs of eigenvalues of \(\sqrt{\Delta ^2+1}\) accumulate at zero. This facilitates energy exchanges between high Fourier modes and complicates the control of solutions over long times. Our result is obtained by combining a Birkhoff normal form step and a modified energy step.


1 Introduction

In this article we consider the beam equation on an irrational torus

where \(f\in C^{\infty }({\mathbb {R}},{\mathbb {R}})\), \(\psi =\psi (t,y)\), \(y\in {\mathbb {T}}^{d}_{{\nu }}\), with \({\nu }=(\nu _1,\ldots ,\nu _{d})\in [1,2]^{d}\) and

The initial data \((\psi _0, \psi _1)\) have small size \(\varepsilon \) in the standard Sobolev space \(H^{s+1}({\mathbb {T}}_\nu ^{d})\times H^{s-1}({\mathbb {T}}_\nu ^{d})\) for some \(s\gg 1\). The nonlinearity \(f(\psi )\) has the form

for some smooth function \(F\in C^{\infty }({\mathbb {R}},{\mathbb {R}})\) having a zero of order at least \(n\ge 3\) at the origin. Local existence theory implies that (1.1) admits, for small \(\varepsilon >0\), a unique smooth solution defined on an interval of length \(O(\varepsilon ^{-n+2})\). Our goal is to prove that, generically with respect to the irrationality of the torus (i.e. generically with respect to the parameter \(\nu \)), the solution actually extends to a larger interval.

Our main theorem is the following.

Theorem 1

Let \(d\ge 2\). There exists \(s_0\equiv s_0(n,d)\in {\mathbb {R}}\) such that for almost all \(\nu \in [1,{2}]^{d}\), for any \(\delta >0\) and for any \(s\ge s_0\) there exists \(\varepsilon _0>0\) such that for any \(0<\varepsilon \le \varepsilon _0\) we have the following. For any initial data \((\psi _0,\psi _1)\in H^{s+1}({\mathbb {T}}_{\nu }^{d})\times H^{s-1}({\mathbb {T}}_{\nu }^{d})\) such that

there exists a unique solution of the Cauchy problem (1.1) such that

where \(\mathtt {a}=\mathtt {a}(d,n)\) has the form

Originally, the beam equation was introduced in physics to model the oscillations of a uniform beam, hence in a one-dimensional context. In dimension 2, similar equations can be used to model the motion of a clamped plate (see for instance the introduction of [28]). In higher dimensions (\(d\ge 3\)) we do not claim that the beam Eq. (1.1) has a physical interpretation, but it nevertheless remains an interesting mathematical model of a dispersive PDE. We note that when the equation is posed on a torus, there is no physical reason to assume the torus to be rational.

This problem of extending solutions of semi-linear PDEs beyond the time given by local existence theory has been considered many times in the past, starting with Bourgain [11], Bambusi [1] and Bambusi–Grébert [2], where the authors prove almost global existence for the Klein–Gordon equation:

on a one-dimensional torus. Precisely, they proved that, given \(N\ge 1\), if the initial datum has size \(\varepsilon \) small enough in \(H^{s}({\mathbb {T}})\times H^{s-1}({\mathbb {T}})\), and if the mass lies outside an exceptional subset of zero measure, the solution of (1.7) exists at least on an interval of length \(O(\varepsilon ^{-N})\). This result has been extended to Eq. (1.7) on Zoll manifolds (in particular spheres) by Bambusi–Delort–Grébert–Szeftel [3], and also to the nonlinear Schrödinger equation posed on \({\mathbb {T}}^{d}\) (the square torus of dimension d) [2, 19] or on \({\mathbb {R}}^d\) with a harmonic potential [24]. What all these examples have in common is that the spectrum of the linear part of the equation can be divided into clusters that are well separated from each other. Actually, if one considers (1.1) with a generic mass m on the square torus \({\mathbb {T}}^d\), then the spectrum of \(\sqrt{\Delta ^2+m}\) (the square root comes from the fact that the equation is of order two in time) is given by \(\{\sqrt{|j|^4+m}\mid j\in {\mathbb {Z}}^d\}\), which can be divided into clusters around each integer n whose diameter decreases with |n|. Thus, for n large enough, these clusters are separated by 1/2. So in this case too one could easily prove, following [2], almost global existence of the solution.

On the contrary, when the equation is posed on an irrational torus, the nature of the spectrum changes drastically: the differences between pairs of eigenvalues accumulate at zero. Even for the Klein–Gordon Eq. (1.7) posed on \(\mathbb T^d\) for \(d\ge 2\) the linear spectrum is not well separated. In both cases one may expect exchanges of energy between high Fourier modes, and thus almost global existence in the sense described above is out of reach (at least up to now!). Nevertheless it is possible to go beyond the time given by the local existence theory. In the case of (1.7) on \({\mathbb {T}}^d\) for \(d\ge 2\), this local time has been extended by Delort [13] and then improved in different ways by Fang and Zhang [18], Zhang [29] and Feola et al. [20] (in this last case a quasi-linear Klein–Gordon equation is considered). We also quote the remarkable work on multidimensional periodic water waves by Ionescu and Pusateri [26].
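To make the contrast between rational and irrational tori concrete, the following Python sketch (an illustration, not part of the proof) computes the smallest gap between distinct frequencies \(\sqrt{1+|j|_a^4}\), with \(|j|_a^2=\sum _i a_i j_i^2\) as in Proposition 2.2 below. It works in \(d=2\) with modes \(0\le j_i\le K\). The choices \(a=(1,1)\) (square torus, \(m=1\)), \(a=(\sqrt{2},\sqrt{3})\), \(K=10\) and the helper names are arbitrary assumptions made for illustration. On the square torus the smallest gap is \(\sqrt{2}-1\), attained at the bottom of the spectrum. For the irrational choice, a gap of about 0.03 already appears, for instance between \(j=(5,5)\) and \(j=(6,4)\).

```python
import itertools
import math


def frequencies(a, K):
    """Sorted values omega_j = sqrt(1 + |j|_a^4), |j|_a^2 = sum_i a_i j_i^2, for 0 <= j_i <= K.

    Only nonnegative j_i are enumerated, since omega_j depends on the squares j_i^2 only.
    """
    vals = []
    for j in itertools.product(range(K + 1), repeat=len(a)):
        norm2 = sum(ai * ji * ji for ai, ji in zip(a, j))
        vals.append(math.sqrt(1.0 + norm2 * norm2))
    return sorted(vals)


def min_gap(vals, tol=1e-12):
    """Smallest positive distance between consecutive distinct values of a sorted list."""
    return min(b - x for x, b in zip(vals, vals[1:]) if b - x > tol)


K = 10
square = min_gap(frequencies((1.0, 1.0), K))                          # a = (1, 1)
irrational = min_gap(frequencies((math.sqrt(2.0), math.sqrt(3.0)), K))
print(f"square torus:     min gap = {square:.4f}")
print(f"irrational torus: min gap = {irrational:.4f}")
```

Increasing K can only make the irrational gap smaller, because new frequencies split existing gaps. On the square torus the distinct values \(\sqrt{1+n^2}\) stay at distance at least \(\sqrt{2}-1\).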

The beam equation has already been considered on irrational tori in dimension 2 by Imekraz [25]. In the case he considered, the irrationality parameter \(\nu \) was Diophantine and fixed, but a mass m was added to the game (for us m is fixed and for convenience we choose \(m=1\)). For almost all masses, Imekraz obtained a lifespan \(T_\varepsilon =O(\varepsilon ^{-\frac{5}{4}(n-2)^+})\) while we obtain, for almost all \(\nu \), \(T_\varepsilon =O(\varepsilon ^{-4(n-2)^+})\) when n is even and \(T_\varepsilon =O(\varepsilon ^{-4(n-2)-2^+})\) when n is odd.

We notice that applying Theorem 3 of [6] (and its Corollary 1) we obtain almost global existence for (1.1) on irrational tori, up to a large but finite loss of derivatives.

Let us also mention some recent results about long-time existence for periodic water waves [7,8,9,10]. In the same spirit we quote long-time existence for a general class of quasi-linear Hamiltonian equations [21] and for quasi-linear reversible Schrödinger equations [22] on the circle. The main theorem in [21] also applies to quasi-linear perturbations of the beam equation. We also mention [16], where the authors study the lifespan of small solutions of the semi-linear Klein–Gordon equation posed on a general compact boundaryless Riemannian manifold.

All previous results [13, 18, 20, 25, 29] have been obtained by a modified energy procedure. Such a procedure partially destroys the algebraic structure of the equation and thus makes it harder to iterate (Footnote 1). In this paper, on the contrary, we begin with a Birkhoff normal form procedure (when \(d=2,3\)) before applying a modified energy step. Furthermore, in dimension 2 we can iterate two steps of Birkhoff normal form and therefore we get a much better time. The other key tool that allows us to go further in time is an estimate of small divisors that we have tried to optimize as much as possible. Essentially, small divisors make us lose \((d-1)\) derivatives (see Proposition 2.2). This explains the strong dependence of our result on the dimension d of the torus, and also why we obtain a better result than [25]. In Sect. 1.2 we detail the scheme of the proof of Theorem 1.

1.1 Hamiltonian Formalism

We denote by \(H^{s}({\mathbb {T}}^{d};{\mathbb {C}})\) the usual Sobolev space of functions \({\mathbb {T}}^{d}\ni x \mapsto u(x)\in {\mathbb {C}}\). We expand a function u(x) , \(x\in {\mathbb {T}}^{d}\), in Fourier series as

We also use the notation

We set \(\langle j \rangle :=\sqrt{1+|j|^{2}}\) for \(j\in {\mathbb {Z}}^{d}\). We endow \(H^{s}({\mathbb {T}}^{d};{\mathbb {C}})\) with the norm

Moreover, for \(r\in {\mathbb {R}}^{+}\), we denote by \(B_{r}(H^{s}({\mathbb {T}}^{d};{\mathbb {C}}))\) the ball of \(H^{s}({\mathbb {T}}^{d};{\mathbb {C}})\) with radius r centered at the origin. We shall also write the norm in (1.10) as \(\Vert u\Vert ^{2}_{H^{s}}= (\langle D\rangle ^{s}u,\langle D\rangle ^{s} u)_{L^{2}}\), where \(\langle D\rangle e^{\mathrm{i} j\cdot x}=\langle j\rangle e^{\mathrm{i} j\cdot x}\), for any \(j\in {\mathbb {Z}}^{d}\).
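A minimal Python sketch of the norm (1.10) follows. It assumes that the \(L^2\) product is normalized so that (1.10) reads \(\Vert u\Vert _{H^s}^2=\sum _j\langle j\rangle ^{2s}|u_j|^2\); the display itself is not reproduced here. A function is represented, hypothetically, by a finite dictionary of Fourier coefficients, and `sobolev_norm` is an illustrative name.

```python
import math


def sobolev_norm(coeffs, s):
    """H^s norm (sum_j <j>^{2s} |u_j|^2)^{1/2}, with <j> = sqrt(1 + |j|^2).

    coeffs: dict mapping integer tuples j in Z^d to complex Fourier coefficients u_j.
    """
    total = 0.0
    for j, uj in coeffs.items():
        bracket_sq = 1 + sum(ji * ji for ji in j)   # <j>^2
        total += bracket_sq ** s * abs(uj) ** 2
    return math.sqrt(total)


# a trigonometric polynomial on T^2 with three Fourier modes
u = {(0, 0): 1.0, (1, 0): 0.5j, (-2, 1): 0.25}
print(sobolev_norm(u, 0), sobolev_norm(u, 2))
```

Higher modes are weighted more heavily as s grows, which is why the \(H^s\) norm with \(s\gg 1\) detects transfers of energy to high frequencies.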

In the following it will be more convenient to rescale Eq. (1.1) and work on the square torus \({\mathbb {T}}^{d}\). For any \(y\in {\mathbb {T}}_\nu ^{d}\) we write \(\psi (y)=\phi (x)\) with \(y=(x_1\nu _1,\ldots , x_d\nu _d)\) and \(x=(x_1,\ldots ,x_d)\in {\mathbb {T}}^{d}\). The beam equation in (1.1) reads

where \(\Omega \) is the Fourier multiplier defined by linearity as

Introducing the variable \(v={\dot{\phi }}=\partial _{t}\phi \) we can rewrite Eq. (1.11) as

By (1.3) we note that (1.13) can be written in the Hamiltonian form

where \(\partial \) denotes the \(L^{2}\)-gradient of the Hamiltonian function

on the phase space \(H^{2}({\mathbb {T}}^{d};{\mathbb {R}})\times L^{2}({\mathbb {T}}^{d};{\mathbb {R}})\). Indeed we have

for any \((\phi ,v), ({\hat{\phi }},{\hat{v}})\) in \(H^{2}({\mathbb {T}}^{d};{\mathbb {R}})\times L^{2}({\mathbb {T}}^{d};{\mathbb {R}})\), where \(\lambda _{{\mathbb {R}}}\) is the non-degenerate symplectic form

The Poisson bracket between two Hamiltonians \(H_{{\mathbb {R}}}, G_{{\mathbb {R}}}: H^{2}({\mathbb {T}}^{d};{\mathbb {R}})\times L^{2}({\mathbb {T}}^{d};{\mathbb {R}})\rightarrow {\mathbb {R}}\) is defined as

We define the complex variables

where \(\Omega \) is the Fourier multiplier defined in (1.12). Then the system (1.13) reads

Notice that (1.18) can be written in the Hamiltonian form

with Hamiltonian function (see (1.14))

and where \(\partial _{{\bar{u}}}=(\partial _{\mathfrak {R}u}+\mathrm{i} \partial _{\mathfrak {I}u})/2\), \(\partial _{u}=(\partial _{\mathfrak {R}u}-\mathrm{i} \partial _{\mathfrak {I}u})/2\). Notice that

and that (using (1.17))

for any \(h\in H^{2}({\mathbb {T}}^{d};{\mathbb {C}})\), where the two-form \(\lambda \) is given by the push-forward \(\lambda =\lambda _{{\mathbb {R}}}\circ {\mathcal {C}}^{-1}\). In complex variables the Poisson bracket in (1.16) reads

where we set \(H=H_{{\mathbb {R}}}\circ {\mathcal {C}}^{-1}\), \(G=G_{{\mathbb {R}}}\circ {\mathcal {C}}^{-1}\). Let us introduce an additional notation:

Definition 1.1

If \(j \in ({\mathbb {Z}}^d)^r\) for some \(r\ge k\), then \(\mu _k(j)\) denotes the \(k\)-th largest number among \(|j_1|, \dots , |j_r|\) (multiplicities being taken into account). If there is no ambiguity we denote it simply by \(\mu _k\).
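Definition 1.1 can be transcribed directly. In this hypothetical helper, \(|j|\) is taken to be the Euclidean norm (consistent with \(\langle j\rangle =\sqrt{1+|j|^2}\)), and ties are kept, so \(\mu _1\) and \(\mu _2\) may coincide.

```python
def mu(k, js):
    """k-th largest among |j_1|, ..., |j_r| (Euclidean norms, multiplicities counted)."""
    if not 1 <= k <= len(js):
        raise ValueError("need 1 <= k <= r")
    norms = sorted((sum(x * x for x in j) ** 0.5 for j in js), reverse=True)
    return norms[k - 1]


js = [(3, 4), (0, 1), (3, -4), (1, 1)]
print(mu(1, js), mu(2, js), mu(3, js))   # the two modes of norm 5 give mu_1 = mu_2 = 5.0
```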

Let \(r\in {\mathbb {N}}\), \(r\ge n\). A Taylor expansion of the Hamiltonian H in (1.20) leads to

where

and \(H_k\), \(k=n,\ldots ,r-1\), is a homogeneous polynomial of order k of the form

with (noticing that the zero momentum condition \(\sum _{i=1}^k\sigma _i j_i=0\) implies \(\mu _1(j)\lesssim \mu _2(j)\))

and

The estimate above follows from Moser’s composition theorem in [27], section 2. Estimates (1.27) and (1.28) express the regularizing effect of the semi-linear nonlinearity in the Hamiltonian formulation of (1.11).
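For the reader’s convenience, here is the one-line argument behind the bound \(\mu _1(j)\lesssim \mu _2(j)\) quoted above (a sketch; the implicit constant is \(k-1\)). Let \(i_1\) be an index with \(|j_{i_1}|=\mu _1(j)\). The zero momentum condition gives \(\sigma _{i_1}j_{i_1}=-\sum _{i\ne i_1}\sigma _i j_i\), and each of the remaining \(k-1\) modes has norm at most \(\mu _2(j)\), so

$$\begin{aligned} \mu _1(j)=\Big |\sum _{i\ne i_1}\sigma _i j_i\Big |\le \sum _{i\ne i_1}|j_i|\le (k-1)\,\mu _2(j). \end{aligned}$$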

1.2 Scheme of the Proof of Theorem 1

As usual, Theorem 1 will be proved by a bootstrap argument. Let \(t\mapsto u(t,\cdot )\) be a small solution (whose local existence is given by the standard theory for semi-linear PDEs) of the Hamiltonian system generated by H given by (1.24). We want to control \(N_s(u(t)):=\Vert u(t)\Vert ^2_{H^s}\) for as long as possible, and at least longer than the existence time given by the local theory. To this end we control its derivative with respect to t. We have

By (1.28) we have \(\{N_s,R_r\}\lesssim \Vert u\Vert ^{r-1}_{H^s}\), and thus we can neglect this term by choosing r large enough. Then we define \(H^{\le N}_k\), the truncation of \(H_k\) at order N:

and we set \(H^{> N}_k=H_k-H^{\le N}_k\). As a consequence of (1.27) we have \(\{N_s,H_k^{>N}\}\lesssim N^{-2}\Vert u\Vert ^{k-1}_{H^s}\), and thus we can neglect these terms by choosing N large enough. So it remains to take care of \(\sum _{k=n}^{r-1}\{N_s, H_k^{\le N}\}\).
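The truncation formula is not reproduced above. Assuming, as in (3.5) below, that \(H_k^{\le N}\) keeps the monomials with \(\mu _2(j)\le N\), the splitting \(H_k=H_k^{\le N}+H_k^{>N}\) can be sketched in Python on a dictionary of coefficients indexed by \((\sigma ,j)\). This representation and the helper names are hypothetical. Because of the zero momentum condition, \(\mu _2(j)\le N\) forces \(\mu _1(j)\le (k-1)N\), so the truncated part involves only finitely many modes.

```python
import math


def mu2(js):
    """Second largest Euclidean norm among j_1, ..., j_k (multiplicities counted)."""
    return sorted((math.hypot(*j) for j in js), reverse=True)[1]


def split_at_order(coeffs, N):
    """Split {(sigma, js): coefficient} into the parts with mu_2(j) <= N and mu_2(j) > N."""
    low, high = {}, {}
    for (sigma, js), c in coeffs.items():
        target = low if mu2(js) <= N else high
        target[(sigma, js)] = c
    return low, high


# cubic monomials on Z^2, each satisfying sum_i sigma_i j_i = 0
H3 = {
    ((1, 1, -1), ((1, 0), (0, 1), (1, 1))): 1.0,    # mu_2 = 1
    ((1, -1, -1), ((5, 0), (4, 0), (1, 0))): 2.0,   # mu_2 = 4
    ((1, -1, 1), ((7, 1), (7, 0), (0, -1))): 0.5,   # mu_2 = 7
}
low, high = split_at_order(H3, 4)
print(len(low), len(high))
```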

The natural idea to eliminate \(H_k^{\le N}\) consists in using a Birkhoff normal form procedure (see [2, 23]). In order to do that, we have first to solve the homological equation

This is achieved in Lemma 3.6 and, thanks to the control of the small divisors given by Proposition 2.2, we get that there exists \(\alpha \equiv \alpha (d,k)>0\) such that for any \(\delta >0\)

From [2] we learn that the positive power of \(\mu _3(j)\) appearing in the right-hand side of (1.30) is not dangerous (taking s large enough; see Footnote 2), but the positive power of \(\mu _1(j)\) implies a loss of derivatives. So this step can be achieved only assuming \(d\le 3\). In that case the corresponding flow is well defined in \(H^s\) (with s large enough) and is controlled by \(N^{\delta }\) (see Lemma 3.7). In other words, this step is performed only when \(d=2,3\); when \(d\ge 4\) we go directly to the modified energy step.
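At the level of coefficients, the homological equation is solved by dividing by the small divisor. The following Python sketch makes several assumptions. It takes the Poisson bracket to be normalized so that \(\{Z_2,u_{j_1}^{\sigma _1}\cdots u_{j_k}^{\sigma _k}\}=-\mathrm{i}\,\Omega _\sigma (j)\,u_{j_1}^{\sigma _1}\cdots u_{j_k}^{\sigma _k}\) with \(\Omega _\sigma (j)=\sum _i\sigma _i\omega _{j_i}\); with the opposite sign convention, \(\chi \) changes sign. It also takes \(\omega _j=\sqrt{1+|j|_a^4}\). Monomials with vanishing divisor are kept in the resonant part. The function names, the value of a and the tolerance are illustrative assumptions. In the actual argument, an estimate such as (1.30) is what controls the size of the quotients.

```python
import math


def omega(j, a):
    """Linear frequency omega_j = sqrt(1 + |j|_a^4), |j|_a^2 = sum_i a_i j_i^2."""
    n2 = sum(ai * ji * ji for ai, ji in zip(a, j))
    return math.sqrt(1.0 + n2 * n2)


def divisor(sigma, js, a):
    """Omega(sigma, j) = sum_k sigma_k omega_{j_k}."""
    return sum(s * omega(j, a) for s, j in zip(sigma, js))


def solve_homological(H, a, tol=1e-12):
    """Coefficientwise solution (chi, Z) of {Z_2, chi} + H = Z under the assumed convention.

    Non-resonant monomials go into chi (so that -i*Omega*chi + H = 0);
    monomials with |Omega| <= tol are kept in the resonant part Z.
    """
    chi, Z = {}, {}
    for (sigma, js), h in H.items():
        om = divisor(sigma, js, a)
        if abs(om) <= tol:
            Z[(sigma, js)] = h
        else:
            chi[(sigma, js)] = h / (1j * om)
    return chi, Z


a = (math.sqrt(2.0), math.sqrt(3.0))
resonant = ((1, -1, 1, -1), ((2, 1), (2, 1), (0, 3), (0, 3)))
H = {
    ((1, 1, -1), ((1, 0), (0, 1), (1, 1))): 1.0,   # cubic monomial, zero momentum
    resonant: 0.5,                                 # divisor vanishes identically
}
chi, Z = solve_homological(H, a)
print(sorted(Z) == [resonant], len(chi))
```

The cubic monomial is removed, in line with the fact that an odd number of modes can never be resonant.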

For \(d=2,3\), let us focus on \(n=3\). After this Birkhoff normal form step, we are left with

where \(Q_4\) is a Hamiltonian of order 4 whose coefficients are bounded by \(\mu _1(j)^{d-3+\delta }\) (see Lemma 3.5, estimate (3.15)), and \(Z_3\) is a resonant Hamiltonian of order 3: \(\{Z_2,Z_3\}=0\). Actually, as a consequence of Proposition 2.2 (resonant indices only occur for an even number of modes, see Definition 2.1), \(Z_3=0\), and thus we have eliminated all the terms of order 3 in (1.29).

In the case \(d=2\), \(Q_4^{\le N}\) is still \((1-\delta )\)-regularizing and we can perform a second Birkhoff normal form. Actually, since in eliminating \(Q_4^{\le N}\) we create terms of order at least 6, we can eliminate both \(Q_4^{\le N}\) and \(Q_5^{\le N}\). So, for \(d=2\), we are left with

where \(Z_4\) is a resonant Hamiltonian of order 4 (Footnote 3), i.e. \(\{Z_2,Z_4\}=0\), and \(Q_6\) is a Hamiltonian of order 6 whose coefficients are bounded by \(N^{2\delta }\). Since resonant Hamiltonians commute with \(N_s\), the first contribution in (1.29) is \(\{N_s,Q_6\}\). This is essentially the statement of Theorem 2 (which will be stated in Sect. 3) in the case \(d=2\) and \(n=3\), and this completes the Birkhoff normal form step.

Let us describe the modified energy step only in the case \(d=2\) and \(n=3\) and let us focus on the worst term in \(\{N_s, {{\tilde{H}}}\}\), i.e. \(\{N_s,Q_6\}\). Let us write

From Proposition 2.2 we learn that if \(\sigma _1\sigma _2=1\) then the small divisor associated with \((j,\sigma )\) is controlled by \(\mu _3(j)\), and thus we can eliminate the corresponding monomial by one more Birkhoff normal form step (Footnote 4). Now if we assume \(\sigma _1\sigma _2=-1\) we have

where we used the zero momentum condition, \(\sum _{i=1}^6\sigma _i j_i=0\), to obtain \(|j_1-j_2|\le 4|j_3|\). This gain of one derivative, also known as the commutator trick, is central in many results about modified energy [6, 13] or growth of Sobolev norms [4, 5, 12, 14].
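The gain of one derivative can be made explicit on the weights produced by \(N_s\). Assume, as is standard, that the Poisson bracket of \(N_s\) with a monomial \(u_{j_1}^{\sigma _1}\cdots u_{j_6}^{\sigma _6}\) multiplies its coefficient by a factor proportional to \(\sum _k\sigma _k\langle j_k\rangle ^{2s}\). The following sketch, for \(s\ge 1/2\), \(|j_1|\ge \dots \ge |j_6|\) and \(\sigma _1\sigma _2=-1\), shows where the factor \(|j_3|\) comes from. It uses the mean value theorem, the fact that \(x\mapsto \sqrt{1+x^2}\) is 1-Lipschitz, and \(\sigma _1j_1+\sigma _2j_2=\sigma _1(j_1-j_2)\):

$$\begin{aligned} \big |\langle j_1\rangle ^{2s}-\langle j_2\rangle ^{2s}\big |&\le 2s\,\langle j_1\rangle ^{2s-1}\big |\langle j_1\rangle -\langle j_2\rangle \big | \le 2s\,\langle j_1\rangle ^{2s-1}|j_1-j_2|\\&= 2s\,\langle j_1\rangle ^{2s-1}\Big |\sum _{i=3}^6\sigma _i j_i\Big | \le 8s\,\langle j_1\rangle ^{2s-1}|j_3|. \end{aligned}$$

Thus one power of \(\langle j_1\rangle \) is traded for a power of \(|j_3|\).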

So if \(Q_6^-\) denotes the restriction of \(Q_6\) to monomials satisfying \(\sigma _1\sigma _2=-1\) we have essentially proved that

Then we can consider the modified energy \(N_s+E_6\) with \(E_6\) solving

in such a way that

Since this modified energy does not produce new terms of order 7, we can at the same time eliminate \(Q_7^{-,\le N_1}\). Thus we obtain a new energy, \(N_s+E_6+E_7\), which is equivalent to \(N_s\) in a neighborhood of the origin and such that, neglecting all the powers of \(N^\delta \) and \(N_1^\delta \) which appear when we work carefully (see (4.6) for a precise estimate),

Then a suitable choice of N and \(N_1\) and a standard bootstrap argument lead to \(T_\varepsilon =O(\varepsilon ^{-6})\) using this rough estimate, and to \(T_\varepsilon =O(\varepsilon ^{-6^-})\) using the precise estimate (see Sect. 5).

Remark 1.2

In principle a Birkhoff normal form procedure gives more than just the control of the \(H^s\) norm of the solutions: it gives an equivalent Hamiltonian system and therefore potentially more information about their dynamics. However, if one only wants to control the \(H^s\) norm of the solution, the modified energy method is sufficient and simpler. One could therefore imagine applying this last method from the beginning. However, when it is iterated, the modified energy method brings up terms that turn out to be zero when a Birkhoff procedure is applied. Unfortunately we have not been able to prove the cancellation of these terms directly by the modified energy method, which is why we successively use a Birkhoff normal form procedure and a modified energy procedure.

Notation

We shall use the notation \(A\lesssim B\) to denote \(A\le C B\) where C is a positive constant depending on parameters fixed once for all, for instance d, n. We will emphasize by writing \(\lesssim _{q}\) when the constant C depends on some other parameter q.

2 Small Divisors

As already remarked in the introduction, the proof of Theorem 1 is based on a normal form approach. In particular we have to deal with a small divisor problem involving linear combinations of the linear frequencies \(\omega _{j}\) in (1.12).

This section is devoted to establishing suitable lower bounds for generic (in a probabilistic sense) choices of the parameter \(\nu \), except for exceptional indices for which the small divisor is identically zero. According to the following definition, such indices are called resonant.

Definition 2.1

(Resonant indices) Given \(r\ge 3\), \(j_1,\dots ,j_r \in {\mathbb {Z}}^d\) and \(\sigma _1,\dots ,\sigma _r\in \{-1,1\}\), the couple \((\sigma ,j)\) is resonant if r is even and there exists a permutation \(\rho \in {\mathfrak {S}}_r\) such that

In this section we aim at proving the following proposition, whose proof is postponed to the end of this section (see Sect. 2.3). We recall that a is defined in terms of the lengths \(\nu \) of the torus by the relation \(a_i= \nu _i^2\) (see (1.12)).

Proposition 2.2

For almost all \(a\in (1,4)^d\), there exists \(\gamma >0\) such that for all \(\delta >0\), \(r\ge 3\), \(\sigma _1,\dots ,\sigma _r\in \{-1,1\}\), \(j_1,\dots ,j_r \in {\mathbb {Z}}^d\) satisfying \(\sigma _1 j_1+\dots +\sigma _r j_r = 0\) and \(|j_1|\ge \dots \ge |j_r|\), at least one of the following assertions holds:

- (i) \((\sigma ,j)\) is resonant (see Definition 2.1);

- (ii) \(\sigma _1 \sigma _2 = 1\) and

$$\begin{aligned} \left| \sum _{k=1}^r \sigma _k \sqrt{1+|j_k|_a^4} \right| \gtrsim _r \gamma \, (\langle j_3 \rangle \dots \langle j_r \rangle )^{-9dr^2}; \end{aligned}$$

- (iii) \(\sigma _1 \sigma _2 = -1\) and

$$\begin{aligned} \left| \sum _{k=1}^r \sigma _k \sqrt{1+|j_k|_a^4} \right| \gtrsim _{r,\delta } \gamma \, \langle j_1 \rangle ^{-(d-1+\delta )} (\langle j_3 \rangle \dots \langle j_r \rangle )^{-44dr^4}. \end{aligned}$$

We refer the reader to Lemma 2.9 and its corollary to understand how we get this degeneracy with respect to \(j_1\).
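The small divisors of Proposition 2.2 are easy to evaluate numerically. The Python sketch below computes \(\sum _k\sigma _k\sqrt{1+|j_k|_a^4}\) after checking the zero momentum condition. It then runs a brute-force search in the configuration of case (iii) with \(r=3\), \(\sigma =(1,-1,-1)\), a fixed small mode \(j_3\) and \(j_2=j_1-j_3\). The values \(a=(\sqrt{2},\sqrt{3})\), \(j_3=(1,-1)\), the search boxes and the helper names are arbitrary illustrative choices. Such an experiment says nothing about the “almost all a” statement; it only shows how the divisor behaves for one fixed a. By construction, the minimum can only decrease as the box grows.

```python
import math


def small_divisor(a, sigma, js):
    """sum_k sigma_k sqrt(1 + |j_k|_a^4), with |j|_a^2 = sum_i a_i j_i^2."""
    # refuse configurations violating the zero momentum condition sum_k sigma_k j_k = 0
    for ell in range(len(a)):
        if sum(s * j[ell] for s, j in zip(sigma, js)) != 0:
            raise ValueError("zero momentum condition fails")
    total = 0.0
    for s, j in zip(sigma, js):
        n2 = sum(ai * ji * ji for ai, ji in zip(a, j))
        total += s * math.sqrt(1.0 + n2 * n2)
    return total


def smallest_divisor(a, j3, M):
    """Brute-force min of |divisor| over j_1 in [-M, M]^2 (d = 2), for
    sigma = (1, -1, -1) and j_2 = j_1 - j_3, keeping |j_1| >= |j_2| >= |j_3|."""
    def sq(v):
        return sum(x * x for x in v)

    best = math.inf
    for x in range(-M, M + 1):
        for y in range(-M, M + 1):
            j1 = (x, y)
            j2 = (x - j3[0], y - j3[1])
            if sq(j1) >= sq(j2) >= sq(j3):
                best = min(best, abs(small_divisor(a, (1, -1, -1), (j1, j2, j3))))
    return best


a = (math.sqrt(2.0), math.sqrt(3.0))
for M in (10, 40, 80):
    print(M, smallest_divisor(a, (1, -1), M))
```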

2.1 A Weak Non-resonance Estimate

In this subsection we aim at proving the following technical lemma.

Lemma 2.3

If \(r\ge 1\), \((j_1,\dots ,j_r) \in ({\mathbb {N}}^d)^r\) is injective (Footnote 5), \(n \in ({\mathbb {Z}}^*)^r\) and \(\kappa \in {\mathbb {R}}^d\) satisfies \(\kappa _{i_{\star }}=0\) for some \(i_{\star }\in \llbracket 1,d\rrbracket \), then we have

Its proof (postponed to the end of this subsection) relies essentially on the following lemma.

Lemma 2.4

If I, J are two bounded intervals of \({\mathbb {R}}_+^*\), \(r\ge 1\), \((j_1,\dots ,j_r) \in ({\mathbb {N}}^d)^r\) is injective, \(n \in ({\mathbb {Z}}^*)^r\) and \(h: J^{d-1}\rightarrow {\mathbb {R}}\) is measurable then for all \(\gamma >0\) we have

where \((1,b):=(1,b_1,\dots ,b_{d-1})\in {\mathbb {R}}^d\).

Proof of Lemma 2.4

The proof of this lemma is classical and follows the lines of [1].

Without loss of generality, we assume that \(\gamma \in (0,1)\). Let \(\eta \in (0,1)\) be a positive number which will be optimized later with respect to \(\gamma \). If \(1\le i<k \le r\) then we have

Since, by assumption, \((j_1,\dots ,j_r)\) is injective, either there exists \(\ell \in \llbracket 2,d \rrbracket \) such that \(j_{i,\ell }\ne j_{k,\ell }\), or \(j_{i,1}\ne j_{k,1}\) and \(j_{i,\ell }=j_{k,\ell }\) for \(\ell = 2,\dots , d\). Note that in this second case we have \(|| j_i|_{1,b}^2 - | j_k|_{1,b}^2 |\ge 1\). In any case, since the dependence on b is affine, the set

Therefore, we have

In order to estimate this last measure we fix \(b\in J^{d-1}{\setminus } \bigcup _{i<k} {\mathcal {P}}_{\eta }^{(i,k)}\) and we define \(g:I\rightarrow {\mathbb {R}}\) by

By a straightforward calculation, for \(\ell \ge 1\), we have

Therefore, we have

Denoting by V this Vandermonde matrix, by \(|x|_{\infty } := \max |x_i|\) for \(x\in {\mathbb {R}}^d\) and also by \(|\cdot |_\infty \) the associated matrix norm, we deduce that

We recall that the inverse of V is given by

(this formula can be easily derived using the Lagrange interpolation polynomials) where \(S_{\ell } : {\mathbb {R}}^{r-1}\rightarrow {\mathbb {R}}\) is the \(\ell \)-th elementary symmetric function

Furthermore, we have

To estimate \(|V^{-1}|_{\infty } \) in (2.3), we use the estimates

Indeed, if \(||j_i|^4_{(1,b)} - |j_k|^4_{(1,b)}|\ge \frac{1}{2}|j_i|^4_{(1,b)} \) we have

and conversely, if \(||j_i|^4_{(1,b)} - |j_k|^4_{(1,b)}|\le \frac{1}{2}|j_i|^4_{(1,b)}\) then \(|j_i|^4_{(1,b)} \le 2 |j_k|^4_{(1,b)}\) and so, since \(b\in J^{d-1}{\setminus } \bigcup _{i<k} {\mathcal {P}}_{\eta }^{(i,k)}\), we have

Therefore by (2.5) and (2.4), we have

Consequently, we deduce from (2.3) that

Furthermore, considering (2.2), it is clear that

As a consequence, given \(\rho >0\) (to be optimized later) and applying Lemma B.1 of [17], we get N sub-intervals of I, denoted \(\Delta _{1},\dots ,\Delta _{N}\), such that

Observing that \(I {\setminus } (\Delta _1 \cup \dots \cup \Delta _N)\) can be written as the union of M intervals with \(M\lesssim 1 + N\), we deduce that

We optimize \(\rho \) to equalize the two terms in this last sum:

This provides the estimate

Finally, we optimize (2.1) by choosing

and, recalling that \(|n|_{\infty }\ge 1\), we get

Since this measure is obviously bounded by \(|I||J|^{d-1}\), the exponent \(r+\frac{r-1}{r}\) can be replaced by \(r+1\) in the above expression, which concludes the proof. \(\square \)
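The inverse-Vandermonde formula quoted in the proof of Lemma 2.4 (via Lagrange interpolation and the elementary symmetric functions \(S_\ell \)) can be checked in exact arithmetic with the following Python sketch. It assumes the convention \(V_{ik}=x_i^{k}\), with rows indexed by nodes and columns by powers. The matrix in the proof is built from the quantities in (2.2) and may be transposed or rescaled, which only transposes or rescales the inverse. The function names are hypothetical.

```python
from fractions import Fraction


def elementary_symmetric(xs):
    """Return [S_0, S_1, ..., S_m] for xs of length m (S_0 = 1)."""
    S = [Fraction(1)] + [Fraction(0)] * len(xs)
    for x in xs:
        # multiply the generating polynomial prod_m (1 + x_m t) by (1 + x t)
        for ell in range(len(xs), 0, -1):
            S[ell] += S[ell - 1] * x
    return S


def vandermonde_inverse(xs):
    """Exact inverse of V[i][k] = xs[i]**k built from Lagrange basis polynomials.

    Column k holds the monomial coefficients of L_k(x) = prod_{m != k} (x - x_m) / (x_k - x_m),
    so that sum_l xs[i]**l * inv[l][k] = L_k(xs[i]) = delta_{ik}.
    """
    r = len(xs)
    inv = [[Fraction(0)] * r for _ in range(r)]
    for k, xk in enumerate(xs):
        others = xs[:k] + xs[k + 1:]
        S = elementary_symmetric(others)
        denom = Fraction(1)
        for xm in others:
            denom *= xk - xm
        for ell in range(r):
            # coefficient of x^ell in prod_{m != k} (x - x_m) is (-1)^(r-1-ell) S_{r-1-ell}
            inv[ell][k] = (-1) ** (r - 1 - ell) * S[r - 1 - ell] / denom
    return inv


xs = [Fraction(x) for x in (1, 2, 5, -3)]
V = [[x ** k for k in range(len(xs))] for x in xs]
Vinv = vandermonde_inverse(xs)
product = [[sum(V[i][l] * Vinv[l][k] for l in range(len(xs))) for k in range(len(xs))]
           for i in range(len(xs))]
print(product == [[int(i == k) for k in range(len(xs))] for i in range(len(xs))])
```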

Now using Lemma 2.4, we prove Lemma 2.3.

Proof of Lemma 2.3

Without loss of generality we assume that \(i_{\star } = 1\). First, since \(\kappa _1=0\), we note that we have

where

Let us denote by \(\Psi \) the map \(a\mapsto (m,b)\). It is clearly smooth and injective. Furthermore, we have

Consequently, \(\Psi \) is a smooth diffeomorphism onto its image \(\Psi ((1,4)^d)\) which is included in the rectangle \(\left( \frac{1}{16},1\right) \times \left( \frac{1}{4},4\right) ^{d-1}\). Therefore, by a change of variable, we have

Finally, by applying Lemma 2.4, we get the expected estimate. \(\square \)

2.2 Non-resonance Estimates for Two Large Modes

In this subsection we fix \(r\ge 3\), \((j_k)_{k\ge 3} \in ({\mathbb {Z}}^d)^{r-2}\) and \(\sigma \in \{-1,1\}^r\) such that \(\sigma _1=-\sigma _2\). We define \(j_{\ge 3} \in {\mathbb {Z}}^d\) by

Being given \(j_1\in {\mathbb {Z}}^d\), we define implicitly \(j_2:= j_1+\sigma _1 j_{\ge 3}\) in order to satisfy the zero momentum condition

and we define the function \(g_{j_1}:(1,4)^d \rightarrow {\mathbb {R}}\) by

Finally, for \(\gamma >0\), we introduce the following sets (Footnote 6)

First, we prove the following technical lemma, whose Corollary 2.6 allows us to deal with the non-degenerate cases.

Lemma 2.5

If there exists \(i\in \llbracket 1,d\rrbracket \) such that

then for all \(\gamma >0\)

Proof

Without loss of generality we assume that \(\sigma _1=1\) and \(\sigma _2 = -1\). We compute the derivative with respect to \(a_1\)

Consequently, we have

Furthermore, we have

Consequently, we get

Observing that by definition we have \(|j_{1,i}^2 - j_{2,i}^2| = |j_{\ge 3,i}|\,|j_{1,i} + j_{2,i}|\), we deduce from the assumption (2.9) that

Since by (2.9) we know that \(j_{\ge 3,i} \in {\mathbb {Z}}{\setminus } \{0\}\), we deduce that

Therefore \(a_1\mapsto \sum _{k=1}^r \sigma _k \sqrt{1+|j_k|^4_a}\) is a diffeomorphism onto its image (it is a smooth, strictly monotonic function). Consequently, applying this change of coordinates, we directly get (2.10), which concludes the proof. \(\square \)

Corollary 2.6

For all \(\gamma >0\) we have

Proof of Corollary 2.6

Let \(j_1\in C_i\). By definition of \(j_2\), we have

Consequently, since \(j_1\in C_i\), we have

Therefore, since \(j_{\ge 3,i}\ne 0\), we have

Applying Lemma 2.5, we deduce that for all \(\gamma >0\)

Consequently, we have

\(\square \)

In the following lemma, we deal with most of the degenerate cases.

Lemma 2.7

For all \(\gamma >0\), we have

Proof

Without loss of generality, we assume that \(\gamma < \min ((2r)^{-2},(36d)^{-1})\). If \(j_1\in R_{\gamma }\) recalling that for \(x\ge 0\), we have \(|\sqrt{1+x}-1|\le x/2\), we deduce that

However, by definition of \(j_2\) and \(R_{\gamma }\), we have

Noting that, for \(a\in (1,4)^d\), we have \(|\cdot | \le |\cdot |_a\), we deduce that

Consequently, it is enough to prove that

To prove this estimate, we need the following result, whose proof is postponed to the end of this proof.

Lemma 2.8

If \(j_1\in R_{\gamma }\) then there exists \(\kappa _{j_1} \in {\mathbb {Z}}^d\) such that

Now we have to distinguish two cases.

- Case 1: \((\sigma _k,j_k)_{k\ge 3}\) is resonant. If \(j_1\in R_{\gamma }\), let \(\kappa _{j_1}\in {\mathbb {Z}}^d\) be given by Lemma 2.8. Note that \(\kappa _{j_1}\ne 0\), because otherwise we would have \(j_{1,i}^2=j_{2,i}^2\) for all \(i\in \llbracket 1,d \rrbracket \), and so \((\sigma ,j)\) would be resonant (which is excluded by definition of \(R_{\gamma }\)). Furthermore, here \(h_{j_1}(a)= \kappa _{j_1} \cdot a\) is a linear form. Consequently, for all \(\gamma >0\), we have the following estimate, which is much stronger than (2.13):

$$\begin{aligned}&|\{ a\in (1,4)^d : \exists j_1\in R_{1}, \ |h_{j_1}(a)|< \gamma \}| \\&\quad \le \left| \bigcup _{\begin{array}{c} \kappa \in {\mathbb {Z}}^d {\setminus } \{0\}\\ |\kappa |_{\infty } \le 10(\langle j_3 \rangle \dots \langle j_r \rangle )^3 \end{array}} \{ a\in (1,4)^d : |\kappa \cdot a|< \gamma \} \right| \\&\quad \le \sum _{\begin{array}{c} \kappa \in {\mathbb {Z}}^d {\setminus } \{0\}\\ |\kappa |_{\infty } \le 10(\langle j_3 \rangle \dots \langle j_r \rangle )^3 \end{array}} |\{ a\in (1,4)^d : |\kappa \cdot a| < \gamma \}| \le \gamma (20(\langle j_3 \rangle \dots \langle j_r \rangle )^3)^d. \end{aligned}$$

- Case 2: \((\sigma _k,j_k)_{k\ge 3}\) is non-resonant. If \(j_1\in R_{\gamma }\), \(h_{j_1}\) writes

$$\begin{aligned} h_{j_1}(a) = \kappa _{j_1} \cdot a + \sum _{k=1}^{{\widetilde{r}}} n_k \sqrt{1+|{\widetilde{j}}_k|^4_a} \end{aligned}$$

where \( \kappa _{j_1}\) is given by Lemma 2.8, \({\widetilde{r}}\le r-2\), \(({\widetilde{j}}_1,\dots ,{\widetilde{j}}_{{\widetilde{r}}}) \in ({\mathbb {N}}^d)^{{\widetilde{r}}}\) is injective, and \(n_k\in {\mathbb {Z}} {\setminus } \{0\}\) is defined by

$$\begin{aligned} n_k = \sum _{\begin{array}{c} i\in \llbracket 3,r \rrbracket \\ \forall \ell ,\ |j_{i,\ell }| = {\widetilde{j}}_{k,\ell } \end{array}} \sigma _1 \sigma _i. \end{aligned}$$

Consequently, by Lemma 2.8, we have

$$\begin{aligned}&|\{ a\in (1,4)^d : \exists j_1\in R_{1}, \ |h_{j_1}(a)|< \gamma \}|\\&\quad \le \left| \bigcup _{\begin{array}{c} \kappa \in {\mathbb {Z}}^d \\ |\kappa |_{\infty } \le 10(\langle j_3 \rangle \dots \langle j_r \rangle )^3 \\ \exists i_{\star },\ \kappa _{i_{\star }}=0 \end{array}} \left\{ a\in (1,4)^d : \left| \kappa \cdot a + \sum _{k=1}^{{\widetilde{r}}} n_k \sqrt{1+|{\widetilde{j}}_k|^4_a} \right|< \gamma \right\} \right| \\&\quad \le \sum _{\begin{array}{c} \kappa \in {\mathbb {Z}}^d \\ |\kappa |_{\infty } \le 10(\langle j_3 \rangle \dots \langle j_r \rangle )^3 \\ \exists i_{\star },\ \kappa _{i_{\star }}=0 \end{array}} \left| \left\{ a\in (1,4)^d : \left| \kappa \cdot a + \sum _{k=1}^{{\widetilde{r}}} n_k \sqrt{1+|{\widetilde{j}}_k|^4_a} \right| < \gamma \right\} \right| . \end{aligned}$$

Finally, by applying Lemma 2.3 we get

$$\begin{aligned} |\{ a\in (1,4)^d : \exists j_1\in R_{1}, \ |h_{j_1}(a)| < \gamma \}| \lesssim _{r,d} \gamma ^{\frac{1}{(r-2)(r-1)}} (\langle j_3 \rangle \dots \langle j_r \rangle )^{\frac{12}{r-1}+3d}, \end{aligned}$$

which is also stronger than (2.13). \(\square \)
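The slab estimate behind Case 1 can be sketched with an elementary argument; the text above only records the final bound. For \(\kappa \in {\mathbb {Z}}^d{\setminus }\{0\}\), choose i with \(|\kappa _i|=|\kappa |_\infty \ge 1\). For fixed \((a_m)_{m\ne i}\), the set of \(a_i\in (1,4)\) with \(|\kappa \cdot a|<\gamma \) is an interval of length at most \(2\gamma /|\kappa _i|\). By Fubini,

$$\begin{aligned} |\{ a\in (1,4)^d : |\kappa \cdot a|<\gamma \}|\le 3^{d-1}\,\frac{2\gamma }{|\kappa |_\infty }\le 2\cdot 3^{d-1}\gamma . \end{aligned}$$

Summing over the at most \((2M+1)^d\) vectors \(\kappa \) with \(|\kappa |_\infty \le M:=10(\langle j_3 \rangle \dots \langle j_r \rangle )^3\) gives a bound \(\lesssim _d \gamma (\langle j_3 \rangle \dots \langle j_r \rangle )^{3d}\). This has the same form as the bound used in Case 1.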

Proof of Lemma 2.8

First let us note that

First we aim at controlling \(|\kappa _{j_1}|_{\infty }\). If \(i\notin {\mathcal {I}}\) then \(j_{\ge 3,i} =0\) and so \(\kappa _{j_1,i}=0\). Otherwise, since \(j_1\in {\mathbb {Z}}^d {\setminus } \bigcup _{i\in I} C_i\), we have \(|j_{1,i}|\le 2(1+ \sum _{k\ge 3} |j_{k,i}|^2)\). Consequently, we deduce that

Now we assume by contradiction that \(\kappa _{j_1,i}\ne 0\) for all \(i\in \llbracket 1,d\rrbracket \). Consequently, we have \({\mathcal {I}}=\llbracket 1,d\rrbracket \) and so

However, since \(j_1\in R_{\gamma }\), we have \(|j_1| \ge \gamma ^{-1/2}(\langle j_3 \rangle \dots \langle j_r \rangle )^{2dr^2}\) which is in contradiction with (2.14) because we have assumed that \(\gamma < (36d)^{-1}\). \(\square \)

Finally, in the following lemma we deal with the general degenerate case.

Lemma 2.9

For all \(\gamma >0\), we have

Proof

Without loss of generality we assume that \(\gamma \in (0,1)\). Let \(\eta \in (0,1)\) be a small number that will be optimized with respect to \(\gamma \) later. From the decomposition \(S= R_{\eta }\cup (S{\setminus } R_{\eta })\) we get

To estimate the sum, we apply Lemma 2.3 (with \(\kappa =0\)) and we get

Furthermore, by the zero momentum condition (2.8), since \(\eta \in (0,1)\), we also have

Consequently, we have

Therefore, applying Lemma 2.7, we deduce from (2.16) that

Finally, we get (2.15) by optimizing this last estimate choosing

\(\square \)

2.3 Proof of Proposition 2.2

For \(r\ge 3\) let \({\mathcal {M}}_r\) and \({\mathcal {R}}_r\) be the sets defined by

On the one hand, as a direct corollary of Lemma 2.9 and Corollary 2.6, for all \(\gamma >0\) we have

where \(c_{r,d}>0\) is a constant depending only on r and d. Consequently, it is enough to prove that for all \(\gamma \in (0,1)\), we have

where \(\kappa _{r,d}\in (0,1)\) is another constant depending only on r and d (and that will be determined later). Indeed, by additivity of the measure, we have

Note that if \(|j_1| \ge 2\sqrt{r} \langle j_3 \rangle \dots \langle j_r \rangle \) and \(\sigma _1 \sigma _2 = 1\) then

and so \(\Big |\{ a \in (1,4)^d : \big | \sum _{k=1}^r \sigma _k \sqrt{1+|j_k|_a^4} \big | < \kappa _{r,d} \gamma ^{r(r+1)} (\langle j_3 \rangle \dots \langle j_r \rangle )^{-9d r^2}\} \Big | \) vanishes. Since the same holds with \(j_1\) replaced by \(j_2\), \(I_{\gamma }\) is bounded from above by

Now denoting by \(c_{r,d}>0\) the constant given by Lemma 2.3, we get

Consequently, we get another constant \({\widetilde{c}}_{r,d}>0\) such that

Noting that \(9 d \frac{r^2}{r(r+1)} -\frac{36}{r+1}\ge 2d\), we deduce that

This yields a natural choice of \(\kappa _{r,d}\) such that \(I_{\gamma }<\gamma \), which concludes the proof.
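The elementary inequality \(9 d \frac{r^2}{r(r+1)} -\frac{36}{r+1}\ge 2d\) used at the end of the proof can be checked directly. For \(d\ge 2\) and \(r\ge 3\), since \(d(7r-2)\) is increasing in both d and r,

$$\begin{aligned} 9d\,\frac{r^2}{r(r+1)}-\frac{36}{r+1}-2d=\frac{9dr-36-2d(r+1)}{r+1}=\frac{d(7r-2)-36}{r+1}\ge \frac{2\cdot 19-36}{r+1}>0. \end{aligned}$$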

3 The Birkhoff Normal Form Step

In the rest of the paper we fix the parameter \(\nu \) (see (1.2) and (1.12)), which defines the irrationality of the torus, in the full Lebesgue measure set given by Proposition 2.2. For \(d\ge 2\) and \(n\in {\mathbb {N}}\) we define

The main result of this section is the following.

Theorem 2

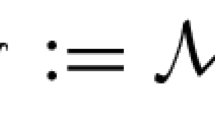

Let \(d=2,3\) and let \(r\in {\mathbb {N}}\) be such that \(M_{d,n}\le r\le 4n\). There exists \(\beta =\beta (d,r)>0\) such that for any \(N\ge 1\), any \(\delta >0\) and \(s\ge s_0=s_0(\beta )\), there exist \(\varepsilon _0\lesssim _{s,\delta } N^{-\delta }\) and two canonical transformations \(\tau ^{(0)}\) and \(\tau ^{(1)}\) making the following diagram commute

and close to the identity

such that, on \(B_s(0,2\varepsilon _0)\), \(H \circ \tau ^{(1)}\) can be written as

where \(M_{d,n}\) is given in (3.1) and where

(i) \(Z_{k}^{\le N}\), for \(k=n,\ldots , M_{d,n}-1\), are resonant Hamiltonians of order k given by the formula

$$\begin{aligned} Z_k^{\le N} = \sum _{\begin{array}{c} \sigma \in \{-1,1\}^k,\ j\in ({\mathbb {Z}}^{d})^{k},\ \mu _2(j)\le N\\ \sum _{i=1}^k\sigma _i j_i=0\\ \sum _{i=1}^k\sigma _i \omega _{j_i}=0 \end{array}} (Z_{k}^{\le N})_{\sigma ,j}u_{j_1}^{\sigma _1}\cdots u_{j_k}^{\sigma _k}, \quad |(Z_{k}^{\le N})_{\sigma ,j}|\lesssim _{\delta }N^{\delta } \frac{\mu _3(j)^\beta }{\mu _1(j)}; \end{aligned}$$(3.5)

(ii) \(K_k\), \(k=M_{d,n},\ldots , r-1\), are homogeneous polynomials of order k

$$\begin{aligned} K_k= \sum _{\begin{array}{c} \sigma \in \{-1,1\}^k,\ j\in ({\mathbb {Z}}^{d})^{k}\\ \sum _{i=1}^k\sigma _i j_i=0 \end{array}} (K_{k})_{\sigma ,j}u_{j_1}^{\sigma _1}\cdots u_{j_k}^{\sigma _k}, \quad |(K_k)_{\sigma ,j}|\lesssim _{\delta } N^{\delta } \mu _3(j)^\beta ; \end{aligned}$$(3.6)

(iii) \(K^{>N}\) and \({\tilde{R}}_{r}\) are remainders satisfying

$$\begin{aligned} \Vert X_{K^{>N}}(u)\Vert _{H^{s}}&\lesssim _{s,\delta }N^{-1+\delta }\Vert u\Vert _{H^{s}}^{n-1}, \end{aligned}$$(3.7)$$\begin{aligned} \Vert X_{{{\tilde{R}}}_r}(u)\Vert _{H^{s}}&\lesssim _{s,\delta } N^{\delta } \Vert u\Vert _{H^s}^{r-1}. \end{aligned}$$(3.8)

It is convenient to introduce the following class.

Definition 3.1

(Formal Hamiltonians) Let \(N\in {\mathbb {R}}\), \(k\in {\mathbb {N}}\) with \(k\ge 3\) and \(N\ge 1\).

(i) We denote by \( {\mathcal {L}}_k\) the set of Hamiltonians of homogeneity k which may be written in the form

$$\begin{aligned} G_{k}(u)&= \sum _{\begin{array}{c} \sigma _i\in \{-1,1\},\ j_i\in {\mathbb {Z}}^d\\ \sum _{i=1}^k\sigma _i j_i=0 \end{array}} (G_{k})_{\sigma ,j}u_{j_1}^{\sigma _1}\cdots u_{j_k}^{\sigma _k}, \quad (G_{k})_{\sigma ,j}\in {\mathbb {C}}, \quad \begin{array}{cl}&{}\sigma :=(\sigma _1,\ldots ,\sigma _k)\\ &{}j:=(j_1,\ldots ,j_k)\end{array} \end{aligned}$$(3.9)

with symmetric coefficients \((G_k)_{\sigma ,j}\), i.e. for any \(\rho \in {\mathfrak {S}}_{k}\) one has \((G_{k})_{\sigma ,j}=(G_{k})_{\sigma \circ \rho ,j\circ \rho }\).

(ii) If \(G_{k}\in {\mathcal {L}}_{k}\) then \(G_{k}^{>N}\) denotes the element of \({\mathcal {L}}_{k}\) defined by

$$\begin{aligned} (G_{k}^{>N})_{\sigma ,j}:=\left\{ \begin{array}{lll} &{}(G_{k})_{\sigma ,j},&{}\mathrm{if} \;\;\mu _{2}(j)>N,\\ &{}0, &{} \mathrm{else}. \end{array} \right. \end{aligned}$$(3.10)

We set \(G^{\le N}_{k}:=G_{k}-G^{>N}_{k}\).
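To fix ideas, the following Python sketch stores a finitely supported element of \({\mathcal {L}}_k\) as a dictionary mapping index pairs \((\sigma ,j)\) to coefficients, and implements the zero momentum condition, the symmetrization mentioned in Remark 3.2 and the splitting \(G_k=G_k^{\le N}+G_k^{>N}\) of (3.10). It is only an illustration on truncated data: the encoding and the function names are hypothetical, and we assume that \(\mu _\ell (j)\) denotes the \(\ell \)-th largest of the Euclidean norms \(|j_1|,\ldots ,|j_k|\) (the precise convention is fixed in Section 1).

```python
import itertools
import math

# Hypothetical encoding: a finitely supported G_k in L_k is a dict
# {(sigma, j): coeff}, sigma a tuple of +1/-1, j a tuple of integer vectors.

def mu(j, ell):
    """ell-th largest Euclidean norm among j_1, ..., j_k (assumed convention)."""
    norms = sorted((math.hypot(*ji) for ji in j), reverse=True)
    return norms[ell - 1]

def zero_momentum(sigma, j):
    """Check sum_i sigma_i j_i = 0 componentwise."""
    d = len(j[0])
    return all(sum(s * ji[c] for s, ji in zip(sigma, j)) == 0 for c in range(d))

def symmetrize(G):
    """Average the coefficients over all permutations of the k slots.

    The polynomial sum G_{sigma,j} u_{j_1}^{sigma_1} ... u_{j_k}^{sigma_k} is
    unchanged, and the new coefficients have the symmetry required in (3.9)."""
    out = {}
    for (sigma, j), c in G.items():
        perms = list(itertools.permutations(range(len(sigma))))
        for rho in perms:
            key = (tuple(sigma[i] for i in rho), tuple(j[i] for i in rho))
            out[key] = out.get(key, 0) + c / len(perms)
    return out

def split_high_low(G, N):
    """Return (G^{<=N}, G^{>N}) as in (3.10): the high part is where mu_2(j) > N."""
    high = {key: c for key, c in G.items() if mu(key[1], 2) > N}
    low = {key: c for key, c in G.items() if key not in high}
    return low, high

# Usage: a cubic monomial with zero momentum and mu_2(j) = sqrt(2).
sigma, j = (1, -1, 1), ((1, 0), (2, 1), (1, 1))
G = {(sigma, j): 1.0}
print(zero_momentum(sigma, j))        # True
print(len(split_high_low(G, 1)[1]))   # 1 (mu_2 > 1, high part)
print(len(split_high_low(G, 2)[0]))   # 1 (mu_2 <= 2, low part)
```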

Remark 3.2

Consider the Hamiltonian H in (1.20) and its Taylor expansion in (1.24). One can note that the Hamiltonians \(H_{k}\) in (1.26) belong to the class \({\mathcal {L}}_{k}\). This follows from the fact that, without loss of generality, one can replace the Hamiltonian \(H_{k}\) with its symmetrization.

We also need the following definition.

Definition 3.3

Consider the Hamiltonian \(Z_2\) in (1.25) and \(G_{k}\in {\mathcal {L}}_{k}\).

- (Adjoint action) We define the adjoint action \(\mathrm{ad}_{Z_2}G_k\) in \({\mathcal {L}}_{k}\) by

$$\begin{aligned} (\mathrm{ad}_{Z_2}G_k)_{\sigma ,j}:= \Big (\mathrm{i} \sum _{i=1}^{k}\sigma _i\omega _{j_i}\Big ) (G_{k})_{\sigma ,j}. \end{aligned}$$(3.11)

- (Resonant Hamiltonian) We define \(G_{k}^{\mathrm{res}}\in {\mathcal {L}}_{k}\) by

$$\begin{aligned} (G_{k}^{\mathrm{res}})_{\sigma ,j}:=(G_{k})_{\sigma ,j},\quad \mathrm{when}\quad \sum _{i=1}^{k}\sigma _i\omega _{j_i}=0 \end{aligned}$$

and \((G_{k}^{\mathrm{res}})_{\sigma ,j}=0\) otherwise.

- We define \(G_{k}^{(+1)}\in {\mathcal {L}}_{k}\) by

$$\begin{aligned}&(G_{k}^{(+1)})_{\sigma ,j}:=(G_{k})_{\sigma ,j},\quad \mathrm{when}\quad \exists i,p=1,\ldots ,k\;\mathrm{s.t.}\\&\mu _{1}(j)=|j_{i}|,\quad \mu _{2}(j)=|j_{p}| \quad \mathrm{and}\quad \sigma _{i}\sigma _{p}=+1, \end{aligned}$$

and \((G_{k}^{(+1)})_{\sigma ,j}=0\) otherwise. We define \(G_{k}^{(-1)}:=G_k-G_{k}^{(+1)}\).
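The three operations of Definition 3.3 act diagonally on the coefficients, which the following Python sketch makes explicit for finitely supported Hamiltonians stored as dictionaries \(\{(\sigma ,j): \text{coefficient}\}\). All names are hypothetical. We assume the dispersion relation \(\omega _j=\sqrt{1+|j|_a^4}\) with \(|j|_a^2=\sum _i a_i j_i^2\), as suggested by the expression \(\sqrt{1+|j_k|_a^4}\) in Sect. 2.3 (the exact normalization of \(\omega _j\) is fixed in Section 1), and that the indices i and p in the definition of \(G_k^{(+1)}\) are distinct. Resonance is detected with a floating-point tolerance, which is only a heuristic: by Remark 3.4, the exact resonances are those of Definition 2.1.

```python
import math

def omega(j, a):
    """Assumed dispersion relation omega_j = sqrt(1 + |j|_a^4), |j|_a^2 = sum a_i j_i^2."""
    ja2 = sum(ai * ji * ji for ai, ji in zip(a, j))
    return math.sqrt(1.0 + ja2 * ja2)

def divisor(sigma, j, a):
    """The quantity sum_i sigma_i omega_{j_i}."""
    return sum(s * omega(ji, a) for s, ji in zip(sigma, j))

def ad_Z2(G, a):
    """Adjoint action (3.11): multiply each coefficient by i * divisor."""
    return {(s, j): 1j * divisor(s, j, a) * c for (s, j), c in G.items()}

def resonant_part(G, a, tol=1e-12):
    """Keep the coefficients whose divisor vanishes (up to a float tolerance)."""
    return {(s, j): c for (s, j), c in G.items() if abs(divisor(s, j, a)) < tol}

def is_plus_one(sigma, j):
    """True if two distinct slots realising mu_1(j) and mu_2(j) carry equal signs."""
    sq = [sum(x * x for x in ji) for ji in j]   # exact integer values |j_i|^2
    m1, m2 = sorted(sq, reverse=True)[:2]
    return any(sigma[i] * sigma[p] == 1
               for i in range(len(j)) for p in range(len(j))
               if i != p and sq[i] == m1 and sq[p] == m2)

def split_plus_minus(G):
    """Return (G^{(+1)}, G^{(-1)})."""
    plus = {key: c for key, c in G.items() if is_plus_one(*key)}
    minus = {key: c for key, c in G.items() if key not in plus}
    return plus, minus

a = (math.sqrt(2.0), math.sqrt(3.0))          # a sample point of (1, 4)^2
k_res = ((1, -1, 1, -1), ((1, 0), (1, 0), (0, 1), (0, 1)))
k_non = ((1, 1, -1), ((1, 0), (0, 1), (1, 1)))
G = {k_res: 2.0, k_non: 3.0}
print(list(resonant_part(G, a)) == [k_res])   # True
print(split_plus_minus(G)[1] == {k_non: 3.0}) # True
```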

Remark 3.4

Notice that, in view of Proposition 2.2, the resonant Hamiltonians given in Definition 3.3 must be supported on indices \(\sigma \in \{-1,1\}^{k}\), \(j\in {\mathbb {Z}}^{kd}\) which are resonant according to Definition 2.1. We remark that \((G_{k})^{\mathrm{res}}\equiv 0\) if k is odd.

In the following lemma we collect some properties of the Hamiltonians in Definition 3.1.

Lemma 3.5

Let \(N\ge 1\), \(0\le \delta _i<1\), \(q_i\in {\mathbb {R}}\), \(k_i\ge 3\), consider \(G^i_{k_i}(u)\) in \({\mathcal {L}}_{k_i}\) for \(i=1,2\). Assume that the coefficients \((G^i_{k_i})_{\sigma ,j}\) satisfy

for some \(\beta _i>0\) and \(C_i>0\), \(i=1,2\).

(i) (Estimates on Sobolev spaces) Set \(k=k_i\), \(\delta =\delta _i\), \(q=q_i\), \(\beta =\beta _i\), \(C=C_i\) and \(G^i_{k_i}=G_k\) for \(i=1,2\). There is \(s_0=s_0(\beta ,d)\) such that for \(s\ge s_0\), \(G_{k}\) naturally defines a smooth function from \(H^{s}({\mathbb {T}}^{d})\) to \({\mathbb {R}}\). In particular, one has the following estimates:

$$\begin{aligned} |G_{k}(u)|&\lesssim _{s}CN^{\delta }\Vert u\Vert _{H^{s}}^{k}, \end{aligned}$$(3.13)$$\begin{aligned} \Vert X_{G_k}(u)\Vert _{H^{s+q}}&\lesssim _s CN^{\delta }\Vert u\Vert _{H^{s}}^{k-1}, \end{aligned}$$(3.14)$$\begin{aligned} \Vert X_{G_{k}^{>N}}(u)\Vert _{H^{s}}&\lesssim _{s} CN^{-q+\delta }\Vert u\Vert ^{k-1}_{H^{s}}, \end{aligned}$$(3.15)

for any \(u\in H^{s}({\mathbb {T}}^{d}).\)

(ii) (Poisson bracket) The Poisson bracket between \(G^1_{k_1}\) and \(G^{2}_{k_2}\) is an element of \({\mathcal {L}}_{k_1+k_2-2}\) and it satisfies the estimate

$$\begin{aligned} |(\{G^1_{k_1},G^2_{k_2}\})_{\sigma ,j}|\lesssim _{s} C_1 C_2 N^{\delta _1+\delta _2}\mu _{3}(j)^{\beta _1+\beta _2}\mu _1(j)^{-\min \{q_1,q_2\}}, \end{aligned}$$(3.16)

for any \(\sigma \in \{+1,-1\}^{k_1+k_2-2}\) and \( j\in {\mathbb {Z}}^{d(k_1+k_2-2)}.\)

Proof

We prove item (i). Concerning the proof of (3.13) it is sufficient to give the proof in the case \(q=0\). For convenience, without loss of generality, we assume \(C_{i}=1\), \(i=1,2\). We have

for any \(\epsilon >0\), which proves (3.13) with \(s_0=d/2+\epsilon +\beta \).

We now prove (3.14). Since the coefficients of \(G_k\) are symmetric, we have

Therefore, we have

We note that in the last sum above, we have \(\langle n\rangle \lesssim \langle j_1 \rangle \), \(\mu _{1}(j,n)\ge \langle j_1 \rangle \) and \(\mu _{3}(j,n)\le \langle j_2 \rangle \). As a consequence, we deduce that

Consequently, applying Young's convolution inequality, we get

The proof of (3.15) follows the same lines. The proof of item (ii) of the lemma is a direct consequence of the previous computations, definition (1.23) and the momentum condition. \(\square \)
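The key tool in the proof of (3.14) is Young's inequality for discrete convolutions, typically used in the form \(\Vert f*g\Vert _{\ell ^2}\le \Vert f\Vert _{\ell ^1}\Vert g\Vert _{\ell ^2}\); weighted \(\ell ^1\) norms such as \(\sum _j\langle j\rangle ^{\beta }|u_j|\) are controlled by \(\Vert u\Vert _{H^s}\) via Cauchy–Schwarz as soon as \(s>\beta +d/2\), which is consistent with the threshold \(s_0=d/2+\epsilon +\beta \). The following Python snippet is only a numerical sanity check of this inequality on random finitely supported sequences on \({\mathbb {Z}}\) (the same inequality holds on \({\mathbb {Z}}^d\)).

```python
import random

def convolve(f, g):
    """Discrete convolution (f*g)(n) = sum_m f(m) g(n-m) of finitely supported dicts on Z."""
    h = {}
    for m, fm in f.items():
        for k, gk in g.items():
            h[m + k] = h.get(m + k, 0.0) + fm * gk
    return h

def l1(f):
    return sum(abs(v) for v in f.values())

def l2(f):
    return sum(v * v for v in f.values()) ** 0.5

random.seed(0)
worst_ratio = 0.0
for _ in range(200):
    f = {m: random.uniform(-1, 1) for m in range(-5, 6)}
    g = {k: random.uniform(-1, 1) for k in range(-8, 9)}
    # Ratio ||f*g||_2 / (||f||_1 ||g||_2), which Young's inequality bounds by 1.
    worst_ratio = max(worst_ratio, l2(convolve(f, g)) / (l1(f) * l2(g)))
print(worst_ratio <= 1.0)   # True
```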

We are now in a position to prove the main Birkhoff normal form result.

Proof of Theorem 2

In the case \(d=2\) we perform two steps of the Birkhoff normal form procedure (see Lemmata 3.8 and 3.12). The case \(d=3\) is slightly different. Indeed, due to the small-divisor estimates of Proposition 2.2, the Hamiltonian in (3.24) already has the form (3.4), since for \(d=3\) the coefficients of the Hamiltonians \({\tilde{K}}_{k}\) (see (3.25), where \(q_3=0\)) no longer decay in the largest index \(\mu _1(j)\). For \(d=3\) the proof of Theorem 2 is then concluded after just one step of Birkhoff normal form.

Step 1 (\(d=2\) or \(d=3\)). We have the following lemma.

Lemma 3.6

(Homological equation 1) Let \(q_{d}=3-d\) for \(d=2,3\). For any \(N\ge 1\) and \(\delta >0\) there exist multilinear Hamiltonians \(\chi ^{(1)}_{k}\), \(k=n,\ldots , 2n-3\) in the class \({\mathcal {L}}_{k}\) with coefficients \((\chi _{k}^{(1)})_{\sigma ,j}\) satisfying

such that (recall Definition 3.3)

where \(Z_2\), \(H_k\) are given in (1.25), (1.26) and \(Z_{k}\) is the resonant Hamiltonian defined as

Moreover \(Z_{k}\) belongs to \({\mathcal {L}}_{k}\) and has coefficients satisfying (3.5).

Proof

Consider the Hamiltonians \(H_{k}\) in (1.26) with coefficients satisfying (1.27). Recalling Definition 3.1 we write

with \(Z_k\) as in (3.19). We define

where \(\mathrm{ad}_{Z_2}\) is given by Definition 3.3. In particular (recall formula (3.11)) their coefficients have the form

for indices \(\sigma \in \{-1,+1\}^{k}\), \(j\in ({\mathbb {Z}}^{d})^{k}\) such that

By (1.27) and Proposition 2.2 (with \(d=2,3\)) we deduce the bound (3.17) for some \(\beta >0\). The resonant Hamiltonians \(Z_{k}\) in (3.19) have the form (3.5). One can check by an explicit computation that Eq. (3.18) is verified. \(\square \)
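Coefficientwise, the homological equation of Lemma 3.6 amounts to a division by the small divisor \(\mathrm{i}\sum _{i}\sigma _i\omega _{j_i}\) of (3.11). The Python sketch below assumes that (3.18) reads \(\mathrm{ad}_{Z_2}\chi _k=H_k-Z_k\) (the sign depends on the Poisson bracket convention (1.23)) and uses the hypothetical dispersion relation \(\omega _j=\sqrt{1+|j|_a^4}\), \(|j|_a^2=\sum _i a_i j_i^2\), suggested by Sect. 2.3. It keeps the numerically resonant coefficients in \(Z_k\) and divides the others; in the actual proof, it is the lower bound on the divisors from Proposition 2.2 that turns this division into the estimate (3.17).

```python
import math

def omega(j, a):
    """Assumed dispersion relation omega_j = sqrt(1 + |j|_a^4), |j|_a^2 = sum a_i j_i^2."""
    ja2 = sum(ai * ji * ji for ai, ji in zip(a, j))
    return math.sqrt(1.0 + ja2 * ja2)

def solve_homological(H, a, tol=1e-12):
    """Split H = Z + ad_{Z_2} chi coefficientwise (hypothetical sketch).

    Z keeps the (numerically) resonant coefficients; on the other indices
    chi_{sigma,j} = H_{sigma,j} / (i * Omega), Omega = sum_i sigma_i omega_{j_i}."""
    Z, chi = {}, {}
    for (sigma, j), c in H.items():
        Omega = sum(s * omega(ji, a) for s, ji in zip(sigma, j))
        if abs(Omega) < tol:
            Z[(sigma, j)] = c
        else:
            chi[(sigma, j)] = c / (1j * Omega)
    return Z, chi

# Two sample monomials with zero momentum (one resonant, one not).
a = (math.sqrt(2.0), math.sqrt(3.0))
k_res = ((1, -1, 1, -1), ((1, 0), (1, 0), (0, 1), (0, 1)))
k_non = ((1, 1, -1), ((1, 0), (0, 1), (1, 1)))
H = {k_res: 0.5, k_non: 2.0 - 1.0j}
Z, chi = solve_homological(H, a)
print(Z)   # {((1, -1, 1, -1), ((1, 0), (1, 0), (0, 1), (0, 1))): 0.5}
```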

We shall use the Hamiltonians \(\chi ^{(1)}_{k}\) given by Lemma 3.6 to generate a symplectic change of coordinates.

Lemma 3.7

Let us define

There is \(s_0=s_0(d,r)\) such that for any \(\delta >0\), for any \(N\ge 1\) and any \(s\ge s_0\), if \(\varepsilon _0\lesssim _{s,\delta } N^{-\delta }\), then the problem

has a unique solution \(Z(\tau )=\Phi ^{\tau }_{\chi ^{(1)}}(u)\) belonging to \(C^{k}([-1,1];H^{s}({\mathbb {T}}^{d}))\) for any \(k\in {\mathbb {N}}\). Moreover the map \(\Phi _{\chi ^{(1)}}^{\tau } : B_{s}(0,\varepsilon _0)\rightarrow H^{s}({\mathbb {T}}^{d})\) is symplectic. The flow map \(\Phi ^{\tau }_{\chi ^{(1)}}\) and its inverse \(\Phi ^{-\tau }_{\chi ^{(1)}}\) satisfy

Proof

By estimate (3.17) and Lemma 3.5 (recall that \(q_d\ge 0\)), the vector field \(X_{\chi ^{(1)}}\) is a smooth map from \(H^{s}({\mathbb {T}}^{d})\) into itself, bounded on bounded sets. Hence the flow \(\Phi ^{\tau }_{\chi ^{(1)}}\) is well-posed by the standard theory of ODEs in Banach spaces. The estimates of the map and its differential follow by using the equation in (3.23), the fact that \(\chi ^{(1)}\) is multilinear and the smallness condition on \(\varepsilon _0\). Finally, the map is symplectic since it is generated by a Hamiltonian vector field. \(\square \)
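A one-degree-of-freedom toy model illustrates two properties used in Lemma 3.7: the flow of a cubic Hamiltonian exists up to time 1 only for small data, and it preserves the symplectic form. The Python sketch below integrates Hamilton's equations for the hypothetical Hamiltonian \(\chi (q,p)=q^2p\) (real variables, not the complex modes of the paper) with the classical fourth-order Runge–Kutta scheme. The exact solution \(q(\tau )=q_0/(1-q_0\tau )\), \(p(\tau )=p_0(1-q_0\tau )^2\) blows up at \(\tau =1/q_0\), so the time-one map is defined only for \(|q_0|<1\); in one degree of freedom symplecticity reduces to a unit Jacobian determinant, which is checked by finite differences.

```python
def vector_field(q, p):
    """Hamilton's equations for the toy Hamiltonian chi(q, p) = q**2 * p."""
    return q * q, -2.0 * q * p          # (d chi/dp, -d chi/dq)

def flow(q, p, tau=1.0, steps=2000):
    """Time-tau flow map approximated by the classical RK4 scheme."""
    h = tau / steps
    for _ in range(steps):
        k1 = vector_field(q, p)
        k2 = vector_field(q + 0.5 * h * k1[0], p + 0.5 * h * k1[1])
        k3 = vector_field(q + 0.5 * h * k2[0], p + 0.5 * h * k2[1])
        k4 = vector_field(q + h * k3[0], p + h * k3[1])
        q += h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6
        p += h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6
    return q, p

def jacobian_det(q, p, eps=1e-6):
    """Determinant of the differential of the flow map (central differences)."""
    qa, pa = flow(q + eps, p)
    qb, pb = flow(q - eps, p)
    qc, pc = flow(q, p + eps)
    qd, pd = flow(q, p - eps)
    dq_dq0, dp_dq0 = (qa - qb) / (2 * eps), (pa - pb) / (2 * eps)
    dq_dp0, dp_dp0 = (qc - qd) / (2 * eps), (pc - pd) / (2 * eps)
    return dq_dq0 * dp_dp0 - dq_dp0 * dp_dq0

# Small datum: the exact time-one values are q0/(1-q0) and p0*(1-q0)**2.
q1, p1 = flow(0.1, 0.3)
print(abs(q1 - 0.1 / 0.9) < 1e-10, abs(p1 - 0.3 * 0.81) < 1e-10)   # True True
print(abs(jacobian_det(0.1, 0.3) - 1.0) < 1e-6)                     # True
```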

We now study how the Hamiltonian H in (1.24) changes under the map \(\Phi ^{\tau }_{\chi ^{(1)}}\).
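The proof of the next lemma rests on the Lie series expansion \(H\circ \Phi _{\chi }=\sum _{p\ge 0}\frac{1}{p!}\mathrm{ad}_{\chi }^{p}H\), truncated at order L plus a remainder. As an illustration only, the following Python sketch computes the truncated series for polynomials in one real degree of freedom, with the assumed convention \(\{F,G\}=\partial _qF\,\partial _pG-\partial _pF\,\partial _qG\) and \(\mathrm{ad}_{\chi }H=\{H,\chi \}\) (the bracket (1.23) acts on complex modes and may differ by a sign or a factor \(\mathrm{i}\)). For \(\chi =q^2p\) and \(H=q\) the exact transformed Hamiltonian is \(q/(1-q)\), while the truncation at order L gives \(q+q^2+\dots +q^{L+1}\): the remainder is small only for small data.

```python
from fractions import Fraction
from math import factorial

# Polynomials in one real degree of freedom: {(a, b): c} stands for sum c * q**a * p**b.

def _clean(F):
    return {k: c for k, c in F.items() if c != 0}

def add(F, G, scale=1):
    """Return F + scale * G."""
    out = dict(F)
    for k, c in G.items():
        out[k] = out.get(k, 0) + scale * c
    return _clean(out)

def mul(F, G):
    out = {}
    for (a1, b1), c1 in F.items():
        for (a2, b2), c2 in G.items():
            out[(a1 + a2, b1 + b2)] = out.get((a1 + a2, b1 + b2), 0) + c1 * c2
    return _clean(out)

def d_dq(F):
    return {(a - 1, b): a * c for (a, b), c in F.items() if a > 0}

def d_dp(F):
    return {(a, b - 1): b * c for (a, b), c in F.items() if b > 0}

def bracket(F, G):
    """Assumed convention {F, G} = F_q G_p - F_p G_q."""
    return add(mul(d_dq(F), d_dp(G)), mul(d_dp(F), d_dq(G)), scale=-1)

def lie_series(H, chi, L):
    """Truncated Lie series sum_{p=0}^{L} ad_chi^p H / p!, with ad_chi H = {H, chi}."""
    total, term = dict(H), dict(H)
    for p in range(1, L + 1):
        term = bracket(term, chi)                       # ad_chi^p H
        total = add(total, term, Fraction(1, factorial(p)))
    return total

# H = q transported by the flow of chi = q**2 p (exact result q / (1 - q)):
series = lie_series({(1, 0): 1}, {(2, 1): 1}, 4)
# series holds the coefficients of q, q**2, ..., q**5, all equal to 1.
```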

Lemma 3.8

(The new Hamiltonian 1) There is \(s_0=s_0(d,r)\) such that for any \(N\ge 1\), \(\delta >0\) and any \(s\ge s_0\), if \(\varepsilon _0\lesssim _{s,\delta } N^{-\delta }\) then we have that

where

- \(\Phi _{\chi ^{(1)}}:=(\Phi ^{\tau }_{\chi ^{(1)}})_{|\tau =1}\) is the flow map given by Lemma 3.7;

- the resonant Hamiltonians \(Z_k\) are defined in (3.19);

- \({\widetilde{K}}_k\) are in \({\mathcal {L}}_{k}\) with coefficients \(({\widetilde{K}}_k)_{\sigma ,j}\) satisfying

$$\begin{aligned} |({\widetilde{K}}_k)_{\sigma ,j}|\lesssim _{\delta } N^{\delta } \mu _3(j)^{\beta }\mu _{1}(j)^{-q_{d}}, \quad k=2n-2,\ldots , r-1, \end{aligned}$$(3.25)

with \(q_{d}=3-d\) for \(d=2,3\);

- the Hamiltonian \({\widetilde{K}}^{>N}\) and the remainder \({\mathcal {R}}_r\) satisfy

$$\begin{aligned} \Vert X_{{\widetilde{K}}^{>N}}(u)\Vert _{H^{s}}&\lesssim _{s,\delta } N^{-1}\Vert u\Vert _{H^{s}}^{n-1}, \end{aligned}$$(3.26)$$\begin{aligned} \Vert X_{{\mathcal {R}}_r}(u)\Vert _{H^{s}}&\lesssim _{s,\delta } N^{\delta }\Vert u\Vert ^{r-1}_{H^{s}}, \quad \forall u\in B_{s}(0,2\varepsilon _0). \end{aligned}$$(3.27)

Proof

Fix \(\delta >0\) and assume \(\varepsilon _0N^{\delta }\) small enough. We apply Lemma 3.7 with \(\delta \rightsquigarrow \delta '\), where \(\delta '\le \delta \) is chosen small enough with respect to the fixed \(\delta \) (since \(N\ge 1\), this ensures that the smallness condition \(\varepsilon _0N^{\delta '}\lesssim _{s,\delta '}1\) of Lemma 3.7 is fulfilled). Let \(\Phi ^{\tau }_{\chi ^{(1)}}\) be the flow at time \(\tau \) of the Hamiltonian \(\chi ^{(1)}\). We note that

Then, for \(L\ge 2\), we get the Lie series expansion

where \(\mathrm{ad}_{\chi ^{(1)}}^{p}\) is defined recursively as

Recalling the Taylor expansion of the Hamiltonian H in (1.24) we obtain

We study each summand separately. First of all, by definition of \(\chi _{k}^{(1)}\) (see (3.18) in Lemma 3.6), we deduce that

One can check, using Lemma 3.5 (see (3.15)), that \({\widetilde{K}}^{>N}\) satisfies (3.26). Consider now the term in (3.29). By definition of \(\chi ^{(1)}\) (see (3.18) and (3.22)), we get, for \(p=2,\ldots , L\),

Therefore, by Lemma 3.5-(ii) and recalling (3.28), we get

where \({\widetilde{K}}_k\) are k-homogeneous Hamiltonians in \({\mathcal {L}}_{k}\). In particular, by (3.16), (3.17) and (1.27) (with \(\delta \rightsquigarrow \delta '\)), we have

for some \(\beta >0\) depending only on d, n. This implies the estimates (3.25) taking \(L\delta '\le \delta \), where L will be fixed later. Then formula (3.24) follows by setting

The estimate (3.27) holds true for \(X_{{\widetilde{K}}_k}\) with \(k=r,\ldots ,L(2n-3)+r-1-2L\), thanks to (3.25) and Lemma 3.5. It remains to study the terms appearing in (3.30), (3.31). We start with the remainder in (3.31). We note that

We obtain the estimate (3.27) on the vector field \(X_{R_{r}\circ \Phi }\) by using (1.28) and Lemma 3.7. In order to estimate the term in (3.30) we reason as follows. First notice that

with \(j=n,\ldots ,r-1\). Using Lemma 3.5 we deduce that

We choose \(L=9\), which implies \(Ln+n-2L\ge r\) since \(r\le 4n\) and \(n\ge 3\). Notice also that all the summands in (3.30) are of the form

Then we can estimate their vector fields by reasoning as for the Hamiltonian \(R_{r}\circ \Phi _{\chi ^{(1)}}\). This concludes the proof. \(\square \)
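The choice \(L=9\) is dictated by the worst case \(n=3\): the requirement \(Ln+n-2L\ge 4n\) is equivalent to \(L(n-2)\ge 3n\), i.e. \(L\ge 3n/(n-2)\), which equals 9 for \(n=3\) and decreases in n. The short Python check below is only a verification aid; it computes the smallest admissible L for the first few values of n.

```python
def smallest_L(n):
    """Smallest integer L >= 1 with L*n + n - 2*L >= 4*n (valid for n >= 3)."""
    L = 1
    while L * n + n - 2 * L < 4 * n:
        L += 1
    return L

# L = 9 works uniformly for n >= 3 (and r <= 4n); n = 3 is the binding case.
assert all(9 * n + n - 2 * 9 >= 4 * n for n in range(3, 500))
print([smallest_L(n) for n in range(3, 9)])   # [9, 6, 5, 5, 5, 4]
```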

Remark 3.9

(Case \(d=3\)) We remark that Theorem 2 for \(d=3\) follows from Lemmata 3.6, 3.7, 3.8, by setting \(\tau ^{(1)}:=\Phi _{\chi ^{(1)}}\) and recalling that (see (3.1)) \(M_{d,n}=2n-2\) for \(d=3\).

Step 2 (\(d=2\)). This step is performed only in the case \(d=2\). Consider the Hamiltonian in (3.24). Our aim is to put in Birkhoff normal form all the Hamiltonians \({\widetilde{K}}_{k}\) of homogeneity \(k=2n-2,\ldots , M_{2,n}-1\), where \(M_{2,n}\) is given in (3.1). We follow the same strategy as in the previous step.

Lemma 3.10

(Homological equation 2) Let \(N\ge 1\), \(\delta >0\) and consider the Hamiltonian in (3.24). There exist multilinear Hamiltonians \(\chi ^{(2)}_{k}\), \(k=2n-2,\ldots , M_{2,n}-1\) in the class \({\mathcal {L}}_{k}\), with coefficients satisfying

for some \(\beta >0\), such that

where \({\widetilde{K}}_k\) are given in Lemma 3.8 and \(Z_{k}\) is the resonant Hamiltonian defined as

Moreover \(Z_{k}\) belongs to \({\mathcal {L}}_{k}\) and has coefficients satisfying (3.5).

Proof

Recalling Definitions 3.1, 3.3, we write

with \(Z_{k}\) as in (3.36), and we define

The Hamiltonians \(\chi _{k}^{(2)}\) have the form (3.9) with coefficients

for indices \(\sigma \in \{-1,+1\}^{k}\), \(j\in ({\mathbb {Z}}^{d})^{k}\) such that

Recalling that we are in the case \(d=2\), by (3.25) and Proposition 2.2 we deduce (3.34). The resonant Hamiltonians \(Z_{k}\) in (3.36) have the form (3.5). Identity (3.35) follows by an explicit computation. \(\square \)

Lemma 3.11

Let us define

There is \(s_0=s_0(d,r)\) such that for any \(\delta >0\), for any \(N\ge 1\) and any \(s\ge s_0\), if \(\varepsilon _0\lesssim _{s,\delta } N^{-\delta }\), then the problem

has a unique solution \(Z(\tau )=\Phi ^{\tau }_{\chi ^{(2)}}(u)\) belonging to \(C^{k}([-1,1];H^{s}({\mathbb {T}}^{d}))\) for any \(k\in {\mathbb {N}}\). Moreover the map \(\Phi _{\chi ^{(2)}}^{\tau } : B_{s}(0,\varepsilon _0)\rightarrow H^{s}({\mathbb {T}}^{d})\) is symplectic. The flow map \(\Phi ^{\tau }_{\chi ^{(2)}}\), and its inverse \(\Phi ^{-\tau }_{\chi ^{(2)}}\), satisfy

Proof

It follows by reasoning as in the proof of Lemma 3.7. \(\square \)

We have the following.

Lemma 3.12

(The new Hamiltonian 2) There is \(s_0=s_0(d,r)\) such that for any \(N\ge 1\), \(\delta >0\) and any \(s\ge s_0\), if \(\varepsilon _0\lesssim _{s,\delta } N^{-\delta }\) then we have that \(H\circ \Phi _{\chi ^{(1)}}\circ \Phi _{\chi ^{(2)}}\) has the form (3.4) and satisfies items (i), (ii), (iii) of Theorem 2.

Proof

We fix \(\delta >0\) and apply Lemmata 3.8, 3.10 with \(\delta \rightsquigarrow \delta '\), where \(\delta '\) is chosen small enough with respect to the \(\delta \) fixed here.

Reasoning as in the previous step we have (recall (3.1), (3.28) and (3.24))

where \(\Phi ^{\tau }_{\chi ^{(2)}}\), \(\tau \in [0,1]\), is the flow at time \(\tau \) of the Hamiltonian \(\chi ^{(2)}\). We study each summand separately. First of all, thanks to (3.35), we deduce that

One can check, using Lemma 3.5, that \({\widetilde{K}}_{+}^{>N}\) satisfies

Consider now the terms in (3.42). First of all notice that we have

The Hamiltonian above has homogeneity at least \(4n-6\), which is larger than or equal to \(M_{2,n}\) (see (3.1)). The terms of lowest homogeneity in the sum (3.42) have degree exactly \(M_{2,n}\) and come from the term \(\mathrm{ad}_{\chi ^{(2)}}\Big [\sum _{k=n}^{2n-3}Z_{k}\Big ]\), recalling that (see Remark 3.4) \(Z_{n}\equiv 0\) if n is odd. Then, by (3.34), (3.25) and Lemma 3.5-(ii), we get

where \({\widetilde{K}}^{+}_k\) are k-homogeneous Hamiltonians of the form (3.6) with coefficients satisfying

for some \(\beta >0\). By the discussion above, using formulæ (3.40)–(3.44), we obtain that the Hamiltonian \(H\circ \Phi _{\chi ^{(1)}}\circ \Phi _{\chi ^{(2)}}\) has the form (3.4) with (recall (3.19), (3.36), (3.41), (3.45))

and with remainder \({\tilde{R}}_{r}\) defined as

Recalling (3.19), (3.36) and the estimates (1.27), (3.25), we have that \(Z_{k}^{\le N}\) in (3.48) satisfies the conditions of item (i) of Theorem 2. Similarly, \(K_{k}\) in (3.48) satisfies (3.6) thanks to (3.25) and (3.47), as long as \(\delta '\) is sufficiently small. The remainder \(K^{>N}\) in (3.49) satisfies the bound (3.7) by (3.46), (3.26) and Lemma 3.5-(i). It remains to show that the remainder defined in (3.50) satisfies the estimate (3.8). The claim follows for the terms \({\widetilde{K}}_{k}^{+}\), \(k=r,\ldots , L(M_{2,n}-1)+r-1-2L\), by (3.47) and Lemma 3.5. For the remainders in (3.43), (3.44) one can follow almost word for word the proof of the estimate of the vector field of \({\mathcal {R}}_r\) in (3.33) in the previous step. In this case we choose \(L+1=8\), which implies \(L+1\ge (r+n)/(2n-4)\) since \(r\le 4n\) and \(n\ge 3\). \(\square \)
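In Step 2 the condition \(L+1\ge (r+n)/(2n-4)\) is hardest to meet for the largest admissible value \(r=4n\), where the right-hand side equals \(5n/(2n-4)\); this is 7.5 for \(n=3\) and decreases in n, so \(L+1=8\) suffices. The Python check below (a verification aid only) computes the required ceiling exactly with rational arithmetic.

```python
import math
from fractions import Fraction

def smallest_L_plus_one(n, r):
    """Smallest integer m with m >= (r + n) / (2n - 4), computed exactly (n >= 3)."""
    return math.ceil(Fraction(r + n, 2 * n - 4))

# Worst case over n >= 3 and r <= 4n; it is attained at n = 3, r = 12.
worst = max(smallest_L_plus_one(n, r)
            for n in range(3, 200) for r in range(1, 4 * n + 1))
print(worst)  # 8
```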

Theorem 2 follows from Lemmata 3.8 and 3.12 by setting \(\tau ^{(1)}:=\Phi _{\chi ^{(1)}}\circ \Phi _{\chi ^{(2)}}\). The bound (3.3) follows from Lemmata 3.7 and 3.11.

4 The Modified Energy Step

In this section we construct a modified energy which is an approximate constant of motion for the Hamiltonian system of \(H\circ \tau ^{(1)}\) in (3.4), when \(d=2,3\), and for the Hamiltonian H in (1.24) when \(d\ge 4\). For compactness we shall write, for \(s\in {\mathbb {R}}\),

for \(u\in H^{s}({\mathbb {T}}^{d};{\mathbb {C}})\). For \(d\ge 2\) and \(n\in {\mathbb {N}}\) we define (recall (3.1))

Proposition 4.1

There exists \(\beta =\beta (d,n)>0\) such that for any \(\delta >0\), any \(N\ge N_1>1\) (\(N=N_1\) if \(d\ge 4\)) and any \(s\ge {\tilde{s}}_0\), for some \({\tilde{s}}_0={\tilde{s}}_0(\beta )>0\), if \(\varepsilon _0 \lesssim _{s,\delta } N^{-\delta }\), there are multilinear maps \(E_{k}\), \(k=M_{d,n},\ldots , {\widetilde{M}}_{d,n}-1\), in the class \({\mathcal {L}}_{k}\) such that the following holds:

- the coefficients \((E_{k})_{\sigma ,j}\) satisfy

$$\begin{aligned} |(E_{k})_{\sigma ,j}|\lesssim _{s,\delta }N^{\delta } N_1^{\kappa _{d}} \mu _{3}(j)^{\beta } \mu _1(j)^{2s}, \end{aligned}$$(4.3)

for \(\sigma \in \{-1,1\}^{k}\), \(j\in ({\mathbb {Z}}^{d})^{k}\), \(k=M_{d,n},\ldots ,{\widetilde{M}}_{d,n}-1\), where

$$\begin{aligned} \kappa _d:=0\; \mathrm{if}\; d=2,\;\;\;\;\;\; \kappa _d:=1\; \mathrm{if}\; d=3,\;\;\;\;\;\; \kappa _d:=d-4\; \mathrm{if}\; d\ge 4. \end{aligned}$$(4.4)

- for any \(u\in B_{s}(0,2\varepsilon _0)\), setting

$$\begin{aligned} E(u):=\sum _{k=M_{d,n} }^{{\widetilde{M}}_{d,n}-1}E_{k}(u), \end{aligned}$$(4.5)

one has

$$\begin{aligned} \begin{aligned} |\{N_{s}+E,H\circ \tau ^{(1)}\}|&\lesssim _{s,\delta } N_1^{\kappa _d} N^{\delta } \big (\Vert u\Vert ^{{\widetilde{M}}_{d,n}}_{H^{s}} +N^{-1}\Vert u\Vert ^{M_{d,n}+n-2}_{H^{s}}\big )\\&\quad +N_1^{-{\mathfrak {s}}_d+\delta }\Vert u\Vert _{H^{s}}^{M_{d,n}} +N^{-{\mathfrak {s}}_d+\delta }\Vert u\Vert _{H^{s}}^{n}, \end{aligned} \end{aligned}$$(4.6)

where

$$\begin{aligned} {\mathfrak {s}}_{d}:=1,\;\;\;\mathrm{for }\;\; d=2,3,\;\;\; \mathrm{and}\;\;\; {\mathfrak {s}}_{d}:=3,\;\;\;\mathrm{for }\;\; d\ge 4. \end{aligned}$$(4.7)

Remark 4.2

We remark that in the proposition above we introduced a second truncation parameter \(N_1\). This is needed in order to optimize the time of existence that we shall deduce from estimate (4.6). In Sect. 5 we shall choose \(N, N_1\) (depending on \(\varepsilon \)) in such a way that the last two summands on the r.h.s. of (4.6) are negligible w.r.t. the first two. Since for \(d=3\) the term \(\Vert u\Vert ^{n}_{H^{s}}\) is larger than \(\Vert u\Vert ^{M_{d,n}}_{H^{s}}\), it is convenient to choose \(N\gg N_1\) to make the last summand small enough. This is possible since the factor \(N_1^{\kappa _{d}}N^{\delta }\) grows very slowly in N, \(\delta \) being arbitrarily small. Note that in the case \(d\ge 4\) we need just one truncation since no preliminary Birkhoff normal form is performed. In the case \(d=2\) one could use the same truncation N since \(\kappa _d=0\).

We need the following technical lemma.

Lemma 4.3

(Energy estimate) Let \(N\ge 1\), \(0\le \delta <1\), \(p\in {\mathbb {N}}\), \(p\ge 3\). Consider the Hamiltonians \(N_{s}\) in (4.1), \(G_p\in {\mathcal {L}}_{p}\) and write \(G_{p}=G_{p}^{(+1)}+G_{p}^{(-1)}\) (recall Definition 3.3). Assume also that the coefficients of \(G_p\) satisfy

for some \(\beta >0\), \(C>0\) and \(q\ge 0\). Then the Hamiltonian \(Q_{p}^{(\eta )}:=\{N_s,G_{p}^{(\eta )}\}\), \(\eta \in \{-1,1\}\), belongs to the class \({\mathcal {L}}_{p}\) and has coefficients satisfying

Proof

Using formulæ (4.1), (1.23), (3.9) and recalling Definition 3.3 we have that the Hamiltonian \(\{N_s,G_{p}^{(\eta )}\}\) has coefficients

for any \(\sigma \in \{-1,+1\}^p\), \(j\in ({\mathbb {Z}}^{d})^{p}\) satisfying

for some \(i,k=1,\ldots ,p\). Then the bound (4.9) follows by the fact that

and using the assumption (4.8). \(\square \)

Proof of Proposition 4.1

Case \(d=2,3\). Consider the Hamiltonians \(K_{k}\) in (3.6) for \(k=M_{d,n},\ldots , {\widetilde{M}}_{d,n}-1\), where \( {\widetilde{M}}_{d,n}\) is defined in (4.2). Recalling Definition 3.3 we set \(E_{k}:=E_{k}^{(+1)}+E_{k}^{(-1)}\), where

for \(k=M_{d,n},\ldots , {\widetilde{M}}_{d,n}-1\). Notice that the formulæ in (4.10) are well-defined since \(\{N_{s}, K_{k}^{(+1)}\}\) and \(\{N_{s}, K_{k}^{(-1,\le N_1)}\}\) are in the range of the adjoint action \(\mathrm{ad}_{Z_2}\) thanks to Proposition 2.2. Clearly \(E_{k}\in {\mathcal {L}}_{k}\). Moreover, using the bounds on the coefficients \((K_{k})_{\sigma ,j}\) in (3.6) and item (ii) of Proposition 2.2 (with \(\delta \) therein possibly smaller than the one fixed here), one can check that the coefficients \((E_{k}^{(+1)})_{\sigma ,j}\) satisfy (4.3). By (3.6), Lemma 4.3 (in particular formula (4.9) with \(\eta =-1\)) and item (iii) of Proposition 2.2, the coefficients \((E_{k}^{(-1)})_{\sigma ,j}\) satisfy (4.3) as well. Using (4.10) we notice that

Combining Lemmata 3.5 and 4.3 we deduce

for s large enough with respect to \(\beta \). We define the energy E as in (4.5). We are now in position to prove the estimate (4.6).

Using the expansions (3.4) and (4.5) we get

We study each summand separately. First of all, by item (i) of Theorem 2 and Proposition 2.2, the right-hand side of (4.13) vanishes. Consider now the term in (4.14). Using the bounds (3.7), (3.8) and recalling (1.23), one can check that, for \(\varepsilon _0N^{\delta }\lesssim _{s,\delta }1\),

By (4.11) and (4.12) we deduce that

By (4.3), (3.4)–(3.8), Lemma 3.5 (recall also (4.2)) we get

The discussion above implies the bound (4.6) using that \(r\ge {\widetilde{M}}_{d,n}\). This concludes the proof in the case \(d=2,3\).

Case \(d\ge 4\). In this case we consider the Hamiltonian H in (1.24). Recalling Definition 3.3 we set

where

for \(k=M_{d,n},\ldots , {\widetilde{M}}_{d,n}-1\). Notice that the energies \(E_{k}^{(+1)}\), \(E_{k}^{(-1)}\) are in \({\mathcal {L}}_{k}\) with coefficients

and

with \(\sigma \in \{-1,+1\}^{k}\), \(j\in ({\mathbb {Z}}^{d})^{k}\). Recall that in this case \(M_{d,n}=n\) (see (3.1)). Using Proposition 2.2 and reasoning as in the proof of Lemma 4.3 one can check that estimate (4.3) on the coefficients of \(E_{k}^{(+1)}\) and \(E_{k}^{(-1)}\) holds true with \(\kappa _{d}\) as in (4.4). Equation (4.20) implies

where \({\widetilde{M}}_{d,n}-1=2n-1\) if n is odd and \({\widetilde{M}}_{d,n}-1=2n-2\) if n is even (see (4.2)). Recall that the coefficients of the Hamiltonian \(H_{k}\) satisfy the bound (1.27). Therefore, combining Lemmata 4.3 and 3.5, we deduce

for s large enough with respect to \(\beta \). Recalling (1.24) we have

One can obtain the bound (4.6) by reasoning as in the case \(d=2,3\), using (4.22), (1.28). This concludes the proof. \(\square \)

5 Proof of Theorem 1

In this section we show how to combine the results of Theorem 2 and Proposition 4.1 in order to prove Theorem 1.

Consider \(\psi _0\) and \(\psi _1\) satisfying (1.4) and let \(\psi (t,y)\), \(y\in {\mathbb {T}}_{\nu }^{d}\), be the unique solution of (1.1) with initial conditions \((\psi _0,\psi _1)\), defined for \(t\in [0,T]\) for some \(T>0\). By rescaling the space variable y and passing to the complex variable in (1.17), we consider the function u(t, x), \(x\in {\mathbb {T}}^{d} \), solving Eq. (1.18). We recall that (1.18) can be written in the Hamiltonian form

where H is the Hamiltonian function in (1.20) (see also (1.24)). Theorem 1 is a consequence of the following lemma.

Lemma 5.1

(Main bootstrap) There exists \(s_0=s_0(n,d)\) such that for any \(\delta >0\), \(s\ge s_0\), there exists \(\varepsilon _0=\varepsilon _0(\delta ,s)\) such that the following holds. Let u(t, x) be a solution of (5.1) with \(t\in [0,T)\), \(T>0\) and initial condition \(u(0,x)=u_0(x)\in H^{s}({\mathbb {T}}^{d})\). For any \(\varepsilon \in (0, \varepsilon _0)\) if

with \(\mathtt {a}=\mathtt {a}(d,n)\) in (1.6), then we have the improved bound \(\sup _{t\in [0,T)}\Vert u(t)\Vert _{H^{s}}\le \frac{3}{2} \varepsilon \).

In order to prove Lemma 5.1 we first need a preliminary result.

Lemma 5.2

(Equivalence of the energy norm) Let \(\delta >0\), \(N\ge N_1\ge 1\). Let u(t, x) be as in (5.2) with \(s\gg 1\). Then, for any \(0<c_0<1\), there exists \(C=C(\delta ,s,d,n,c_0)>0\) such that, if we have the smallness condition

the following holds true. Define

where \(\tau ^{(\sigma )}\), \(\sigma =0,1\), are the maps given by Theorem 2, \(N_{s}\) is defined in (4.1) and E is given by Proposition 4.1. We have

Proof

Thanks to (5.3) we have that Theorem 2 and Proposition 4.1 apply. Consider the function \(z=\tau ^{(0)}(u)\). By estimate (3.3) we have

where \({\tilde{C}}\) is some constant depending on s and \(\delta \). The latter inequality follows by taking C in (5.3) large enough. Reasoning similarly and using the bound (3.3) on \(\tau ^{(1)}\), one gets (5.5). Let us check (5.6). First notice that, by (4.3), (4.5) and Lemma 3.5,

for some \({\tilde{C}}>0\) depending on s and \(\delta \). Then, recalling (5.4), we get

where we used that \(M_{d,n}-2\ge 1\). This implies the first inequality in (5.6). On the other hand, using (5.5), (5.7) and (5.2), we have

Then, since \(M_{d,n}>2\) (see (3.1)), taking C in (5.3) large enough we obtain the second inequality in (5.6). \(\square \)

Proof of Lemma 5.1

Assume (5.2). We study how the Sobolev norm \(\Vert u(t)\Vert _{H^{s}}\) evolves for \(t\in [0,T]\) by inspecting the equivalent energy norm \({\mathcal {E}}_{s}(z)\) defined in (5.4). Notice that

Therefore, for any \(t\in [0,T]\), we have that

We now fix

with \(0<\alpha \le \gamma \) to be chosen properly. Hence we have

We choose \(\alpha >0\) such that

i.e.

We shall choose \(\gamma >0\) in such a way that the terms in (5.9) are negligible with respect to the terms in (5.8). In particular we set (recall (5.11))

Therefore estimates (5.8)–(5.9) become

where \(\mathtt {a}\) is defined in (1.6) and appears thanks to definitions (3.1), (4.2), (4.4), (4.7) and (5.11). Moreover we define

with \(\alpha ,\gamma \) given in (5.11) and (5.12). Notice that, since \(\delta >0\) is arbitrarily small, \(\delta '\) can be chosen arbitrarily small as well. Since \(\varepsilon \) can be chosen arbitrarily small with respect to s and \(\delta \), with these choices we get

as long as \(T\le \varepsilon ^{-\mathtt {a}+\delta '}\). Then, using the equivalence of norms (5.6) and choosing \(c_0>0\) small enough, we have

for times \(T\le \varepsilon ^{-\mathtt {a}+\delta '}\). This implies the thesis. \(\square \)

Notes

In fact, there are papers in which such a procedure is iterated; see for instance [15] and the references therein.

When the small divisors are controlled in terms of \(\mu _3(j)\) only, one can solve the homological equation at any order and obtain an almost global existence result in the spirit of [2]. This would be the case for the semi-linear beam equation on the square torus \({\mathbb {T}}^d\).

Notice that there is no resonant term of odd order by Proposition 2.2, in other words \(Z_3=Z_5=0\).

i.e. \(\forall k,\ell \in \llbracket 1,r \rrbracket , \ k\ne \ell \Rightarrow \ j_{k}\ne j_{\ell }\).

see Definition 2.1.

References

Bambusi, D.: Birkhoff normal form for some nonlinear PDEs. Commun. Math. Phys. 234, 253–283 (2003)

Bambusi, D., Grébert, B.: Birkhoff normal form for partial differential equations with tame modulus. Duke Math. J. 135(3), 507–567 (2006)

Bambusi, D., Delort, J.M., Grébert, B., Szeftel, J.: Almost global existence for Hamiltonian semi-linear Klein–Gordon equations with small Cauchy data on Zoll manifolds. Commun. Pure Appl. Math. 60, 1665–1690 (2007)

Bambusi, D., Grébert, B., Maspero, A., Robert, D.: Growth of Sobolev norms for abstract linear Schrödinger equations. To appear in JEMS (2020)

Bernier, J.: Bounds on the growth of high discrete Sobolev norms for the cubic discrete nonlinear Schrödinger equations on \(h{\mathbb{Z}}\). Discret. Contin. Dyn. Syst. A 39(6), 3179–3195 (2019)

Bernier, J., Faou, E., Grébert, B.: Long time behavior of the solutions of NLW on the \(d\)-dimensional torus. Forum Math. Sigma 8, 12 (2020)

Berti, M., Delort, J.M.: Almost global solutions of capillary-gravity water waves equations on the circle. In: UMI Lecture Notes (2017)

Berti, M., Feola, R., Pusateri, F.: Birkhoff normal form and long time existence for periodic gravity water waves (2018). Preprint at arXiv:1810.11549

Berti, M., Feola, R., Franzoi, L.: Quadratic life span of periodic gravity-capillary water waves. Water Waves (2020). https://doi.org/10.1007/s42286-020-00036-8

Berti, M., Feola, R., Pusateri, F.: Birkhoff normal form for gravity water waves. Water Waves (2020). https://doi.org/10.1007/s42286-020-00024-y

Bourgain, J.: Construction of approximative and almost periodic solutions of perturbed linear Schrödinger and wave equations. Geom. Funct. Anal. 6(2), 201–230 (1996)

Bourgain, J.: Growth of Sobolev norms in linear Schrödinger equations with quasi-periodic potential. Commun. Math. Phys. 204(1), 207–247 (1999)

Delort, J.M.: On long time existence for small solutions of semi-linear Klein–Gordon equations on the torus. J. d’Analyse Mathématique 107, 161–194 (2009)

Delort, J.M.: Growth of Sobolev norms of solutions of linear Schrödinger equations on some compact manifolds. Int. Math. Res. Not. 12, 2305–2328 (2010)

Delort, J.M.: Quasi-linear perturbations of Hamiltonian Klein–Gordon equations on spheres. Mem. Am. Math. Soc. (2015). https://doi.org/10.1090/memo/1103

Delort, J.M., Imekraz, R.: Long time existence for the semi-linear Klein–Gordon equation on a compact boundaryless Riemannian manifold. Commun. Partial Differ. Equ. 42, 388–416 (2017)

Eliasson, H.: Perturbations of linear quasi-periodic systems. In: Dynamical Systems and Small Divisors (Cetraro, Italy, 1998). Lect. Notes Math., vol. 1784, pp. 1–60. Springer, Berlin (2002)

Fang, D., Zhang, Q.: Long-time existence for semi-linear Klein–Gordon equations on tori. J. Differ. Equ. 249, 151–179 (2010)

Faou, E., Grébert, B.: A Nekhoroshev-type theorem for the nonlinear Schrödinger equation on the torus. Anal. PDE 6(6) (2013)

Feola, R., Grébert, B., Iandoli, F.: Long time solutions for quasi-linear Hamiltonian perturbations of Schrödinger and Klein–Gordon equations on tori. Preprint at arXiv:2009.07553 (2020)

Feola, R., Iandoli, F.: A non-linear Egorov theorem and Poincaré–Birkhoff normal forms for quasi-linear PDEs on the circle. Preprint at arXiv:2002.12448 (2020)

Feola, R., Iandoli, F.: Long time existence for fully nonlinear NLS with small Cauchy data on the circle. Annali della Scuola Normale Superiore di Pisa (Classe di Scienze). To appear (2019). https://doi.org/10.2422/2036-2145.201811-003

Grébert, B.: Birkhoff normal form and Hamiltonian PDEs. Séminaires et Congrès 15, 1–46 (2007)

Grébert, B., Imekraz, R., Paturel, E.: Normal forms for semilinear quantum harmonic oscillators. Commun. Math. Phys. 291, 763–798 (2009)

Imekraz, R.: Long time existence for the semi-linear beam equation on irrational tori of dimension two. Nonlinearity 29, 3067–3102 (2016)

Ionescu, A.D., Pusateri, F.: Long-time existence for multi-dimensional periodic water waves. Geom. Funct. Anal. 29, 811–870 (2019)

Moser, J.: A rapidly convergent iteration method and non-linear partial differential equations—I. Ann. Sc. Norm. Sup. Pisa Cl. Sci. III Ser. 20(2), 265–315 (1966)

Pausader, B.: Scattering and the Levandosky–Strauss conjecture for fourth-order nonlinear wave equations. J. Differ. Equ. 241(2), 237–278 (2007)

Zhang, Q.: Long-time existence for semi-linear Klein–Gordon equations with quadratic potential. Commun. Partial Differ. Equ. 35(4), 630–668 (2010)

Additional information

Communicated by Yingfei Yi.

In memory of Walter Craig whose beautiful voice, always relevant and friendly, we miss.

Felice Iandoli has been supported by ERC grant ANADEL 757996. Roberto Feola, Joackim Bernier and Benoit Grébert have been supported by the Centre Henri Lebesgue ANR-11-LABX-0020-01 and by ANR-15-CE40-0001-02 “BEKAM” of the ANR.

About this article

Cite this article

Bernier, J., Feola, R., Grébert, B. et al. Long-Time Existence for Semi-linear Beam Equations on Irrational Tori. J Dyn Diff Equat 33, 1363–1398 (2021). https://doi.org/10.1007/s10884-021-09959-3

DOI: https://doi.org/10.1007/s10884-021-09959-3