Abstract

One can get an integer solution for the one-dimensional cutting stock problem by means of the constructive or residual heuristic. In this work we propose a change in the Constructive Greedy Heuristic that consists in building a cutting pattern by sorting in descending order the items of pair or odd length, priority being given to those which appear more frequently in the problem, cut from objects in stock with pair or odd length respectively, with the aim of minimizing the quantity of cut objects. Computing tests that were carried out showed the efficiency of the proposed heuristic when compared with other methods in the literature that generate an integer solution for the problem and will be presented at the end of this work.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Cutting problems appear in several practical situations such as cutting steel sheets, wood, electric wires, paper rolls etc. There are many industries where such problems come up such as: car factories, aero-spatial, mechanics, naval, mobile factories, those of tubes and connections, petrol and gas etc; a lot of them being often applied to such areas as odontology, engineering, logistics, agronomy, fashion and designer, robotics, among others; for applications in these sectors we suggest to look up (Stadtler 1990; Farley 1990; Cui 2005; Johnson et al. 1997). These problems may also be seen as bin-packing problems present in food, pharmacy and construction materials industries etc. Specifically in the one-dimensional case, what we have is an optimization combinatorial problem that consists in cutting pieces longer than length L (objects) in order to produce shorter pieces of length \(l_i\) (items) so that one may supply the \(d_i\) (demand) orders in which \(i = 1,\ldots , m\) trying to optimize any objective function, which may be, for instance, the minimization of costs or the minimization of the quantity of cut objects. For this paper, we considered only one objective to be attained, but it is common in the literature to consider two or more objectives for the problem (see Wascher 1990). A solution for the problem consists in determining a set of patterns of cuttings and their frequency, that is, the quantity of times that each of them must be cut so that the demands of the items are respected. Be n a quantity of patterns in the solution, L the size of the object on stock, with utilization cost \(c_j\) and J the set of indexes of all variable patterns. The objective is to minimize the quantity of cut objects. Let’s assume that \(c_j=1\), \(\forall j \in J\). If \(\alpha _{ij}\) is the decision variable that indicates the quantity of items type i in the cutting pattern j with frequency \(x_j\), for \(i=1,\ldots ,m\) and \(j=1,\ldots ,n\), then the problem may be formulated as the following problem of integral linear programming:

Be \(A=[{\mathbf {a_1}} \ \ {\mathbf {a_2}} \ \ \cdot \ \cdot \ \cdot \ \ {\mathbf {a_n}} ]\) the matrix whose columns represent the n-possible patterns and \(\mathbf{d} = (d_{1} ,\ldots ,d_{i} ,\ldots ,d_{m})^T\) the demand vector of the items. Each column-j vector of A, \(\mathbf{a_j}=(\alpha _{1j} ,\ldots ,\alpha _{ij} ,\ldots ,\alpha _{mj})^T\), represents a variable cutting pattern, and must therefore satisfy the following restriction of the knapsack:

This problem is NP-hard (McDiarmid 1999), and which makes it difficult to solve are the integrality conditions attributed to the decision variables, added to the fact that the number of patterns to explicit increases a lot as the number of different items and their demands increases.

2 Relaxed solution for the cutting problem

The first efficient methods to solve the cutting stock problem (CSP) appeared the 1960’s with the works by Gilmore and Gomory (1961, 1963),who presented the method simplex generation of columns, a resolution technique in which the integrality requirement of the decision variables is abandoned, thus considering this a relaxed model. At each iteration of the simplex method (Bazaraa et al. 1990), a column in the model is generated (Barnhart et al. 1994). The algorithm starts with a basic initial solution for the one-dimensional cutting stock problem(1CSP), formed only by homogeneous patterns of the kind \({\mathbf {a_j}}=(0,\ldots ,\left\lfloor {\frac{L}{{l_i }}} \right\rfloor ,\ldots ,0 )^T\), \(i=1,\ldots ,m\); \(j \in J\). The basic matrix, in this case, is diagonal, in which the only non-null element of each column indicates the cut quantity of the item i, \(i=1,\ldots ,m\) :

2.1 Column generation

By following the procedure suggested in Gilmore and Gomory (1961) the column \(\mathbf{a_k}\) to enter the basis is corresponding to the variable \(x_k\) with the least relative cost, which means the resolution of the following subproblem:

If \((c_k-\pi ^T {\mathbf {a_k}})\ge 0\) thus the current basic solution \(\mathbf{x} _\mathbf{B} =\mathbf{B} ^{-1}{} \mathbf{d} \) is optimal, in which \(\pi \) is the simplex multiplying vector of the current iteration, B is the basic matrix \(m \times m\), \(\mathbf{x} _\mathbf{B }\) is the vector of the basic variables, \(\mathbf{d} \) is the vector of the demands. We need to determine a non-basic column \({\mathbf {a_j}}=(\alpha _{1j} ,\ldots ,\alpha _{ij} ,\ldots ,\alpha _{mj})^T\), corresponding to a feasible cutting pattern \(j \in J\), which is a candidate to enter the basis. Thus, since \(min \left\{ c_j-\pi ^T {\mathbf {a_j}}\right\} = c_j-max \left\{ \pi ^T {\mathbf {a_j}}\right\} \) the column comes from solving the restrict knapsack problem:

Techniques for solving the knapsack problem are found in Pisinger (1993) and Martello and Toth (1990). The method used in our work was that implicit enumeration suggested by Gilmore and Gomory (1963), based on depth search first, enabling through the use of bounding that the worst solutions be discarded without losing the optimal solution. For \(i = 1, \ldots , m\), \( v_i\) is the utility value of item-i. The complete algorithm is shown below.

To better understand the Knapsack Problem Algorithm we present a small example of the one-dimensional cutting stock problem, with three items. The size of the object in stock is \(L = 17\). The data are shown in the Table 1. With each iteration of the Simplex Column Generation, the steps P.2. and P.3. determine the simplex multiplier vector \(\pi =(\pi _1,\pi _2,\pi _3)\), \(\pi _i\) utility value of item-i, \(i = 1,2,3\). The solution to the relaxed problem is shown in Table 2. We will now detail the first iteration, whose vector \(\pi =(1,0.3333,0.25)\) is passed as utility vector of the knapsack, that is, \(v_1=1; v_2=0.333; v_3=0.25\). With these data the problem 4 becomes:

In P.1. Now defining \(\pi _i = v_i/ l_i \), the most valuable items are ordered by length unit. Suppose \(\pi _1 \ge \pi _2 \ge \pi _3\), so \(\pi \) is now given by \(\pi =(0.09;0.08;0.06)\). The initial solution according to P.2. is such that \(y_1 = min \left\{ \left\lfloor {\frac{17}{{11}}} \right\rfloor ,7 \right\} =1\), \(y_2 = min \left\{ \left\lfloor {\frac{17-11}{{3}}} \right\rfloor ,4 \right\} =2\), \(y_3 = min \left\{ \left\lfloor {\frac{17-11-6}{{5}}} \right\rfloor ,9\right\} =0\), so \(\mathbf{y }=(y_1,y_2,y_3)= (1,2,0)\). According P.3. \(g(\mathbf{y} ) = \sum \limits _{i = 1}^3 {v_i y_i }=1.5=\underline{G} \) and \(y^*=\mathbf{y }\). In P.4. \(k=2\) and \(\overline{G}= v_1y_1+v_2(y_2-1)+\frac{v_3}{l_3}(L-l_1y_1-l_2(y_2-1)=1.33\). Since \(\overline{G} \le \underline{G}\) in P.5. make \(y_2=0\) turning \(\mathbf{y }= (1,0,0)\) coming back to P.4. and repeating the process one has \(\overline{G}=0\) and again \(\overline{G} \le \underline{G}\), now k=1 then one makes \(y_1=0\) which turns \(\mathbf{y }= (0,0,0)\) and therefore the current solution saved in \(y^*\), \(\mathbf{y }= (1,2,0)\) which in the original problem corresponds to \(\mathbf{y }= (1,0,2)\) it is optimal and it is exactly the first pattern in the solution of the relaxed problem in Table 2. Repeating the process, the other patterns of the solution are determined.

3 Heuristics of rounding solutions

Be \(\mathbf{x} =(x_1,x_2,\ldots ,x_n)\) the optimal solution of the relaxed problem 1. Let’s admit that at least one component of \(\mathbf{x} \) not integer, otherwise the integer solution for the problem would already have been found. There are two possibilities in this case to determine an integer solution: one either round the components of vector \(\mathbf{x} \) for the inferior integer or for the superior integer. In the first case, the found solution is not always viable because the resulting rounding from the non-integer components of \(\mathbf{x} \) does not necessarily supply the demand of all items, while in the second case more items are produced. In both cases, it is necessary to solve at least one residual problem. Be \(x^*=\left( {\left\lceil {x_1 } \right\rceil ,\left\lceil {x_2 } \right\rceil ,\ldots ,\left\lceil {x_n } \right\rceil } \right) \)the rounding of the components of vector \(\mathbf{x} \) for the superior integer with objective function corresponding to \(f(x^*)=\sum \nolimits _{j = 1}^n {\left\lceil {x_j } \right\rceil }\) and \(f(\mathbf{x} )=\sum \nolimits _{j = 1}^n {x_j }\) the objective function of the relaxed solution. An lower bound (LB) for the objective function of problem 1 is given by \(LB={\left\lceil f(\mathbf{x} ) \right\rceil }\). We call \(integrality \ gap\) the difference \(\Delta (\mathbf{x} )=f(x^*)-f( \)x). According to Marcotte (1985) and Scheithauer and Terno (1995) if \(\Delta (\mathbf{x} )<1\) the (1CSP) 1 has IRUP (Integer Round-Up Property), that is, the integrality gap, the difference between the optimal value of the entire solution and the optimal value of the relaxed solution rounded to the nearest upper integer is less than or equal to 1 (one). If \(f(\mathbf{x} )=LB\) the solution is optimal.

3.1 Residual heuristics

We call residual heuristics each procedure that starts a search for an integer solution for the (1CSP) by means of rounding techniques applied to the relaxed solution. Since in such cases the original demand is not completely supplied one or more residual problems are solved with the remaining demand. One of the residual heuristics often used in the literature was proposed by Wascher and Gau (1996) and it consists in rounding the components of vector \(\mathbf{x} \) downwards, \(\overline{x}=\left( {\left\lfloor {x_1 } \right\rfloor ,\left\lfloor {x_2 } \right\rfloor ,\ldots ,\left\lfloor {x_n } \right\rfloor } \right) \) the corresponding objective function being given by \(f( \overline{x})=\sum \nolimits _{j = 1}^n {\left\lfloor {x_j } \right\rfloor }\) and in solving the residual problem, which corresponds to solving problem 1 by replacing the demand vector d by the residual demand \(\mathbf{r} = (r_{1} ,\ldots ,r_{i} ,\ldots ,r_{m})^T\) com \(r_i = d_i-\sum \nolimits _{j = 1}^n {a_{ij} \left\lfloor {x_j } \right\rfloor }, \ i = 1,\ldots ,m\). In case of another fractional solution, rounding for the inferior integer is again carried out, generating a new residual demand. One repeats the process until the rounding generates null frequencies. If there still remain items with non-null demands, one solves a final residual problem with any heuristic that furnishes an integer solution for the problem.

Another residual approach proposed by Poldi and Arenales (2009), at each iteration solves a relaxed cutting stock problem, sorts the solution vector \(\mathbf{x} \) in decreasing order, rounds the first frequency for the superior integer and tests the feasibility of the first pattern of the solution in order to verify that there were no excesses in the production of any item, otherwise, the frequency is reduced one unit until excesses be eliminated and one proceeds to the next frequency pattern. When the last generated cutting pattern is examined, one updates the demand and the stock. The residual problem is solved and the rounding procedure is repeated until all the demand is supplied. Since every time a relaxed problem is solved at least one cutting pattern is accepted to the solution, the Greedy Rounding Heuristic-GRH guarantees that every demand be supplied in a finite number of iterations. Two other versions of the heuristic, GRH2 and GRH3 sort the solution vector \(\mathbf{x} \) with priority for the patterns with the least waste and for patterns in which the solution vector \(\mathbf{x} \) presents the largest fractional part respectively.

3.2 Constructive heuristics

Another way to determine an integer solution for a one-dimensional cutting problem consists in constructing a good cutting pattern and use it as many times as possible (see Hinxman 1980), without excess in the production of any item. These are the so-called constructive heuristics. At each iteration of such procedure, the demand of the items is updated and the process is repeated until it is fully supplied, generating at last an integer solution for the problem.

3.2.1 FFD heuristic

FFD Heuristic consists in putting the largest item in the pattern the highest possible number of times without excess in the demand. If the selected item does not fit the pattern anymore, the second largest item is selected and so forth. The complete algorithm is presented below:

3.2.2 Greedy Heuristic

Greedy Heuristic follows the same idea as FFD Heuristic, except for step P.3. Instead of giving priority to the largest items to build the pattern, this is obtained by solving the restrict knapsack problem 4, making \(\pi _i=l_i\), in the objective function for \(i=1,\ldots ,m\) .

3.2.3 Modified Greedy Heuristic

Until the submission of this article, we did not find in the literature any works that propose any modification in the methodology of the pattern of Greedy Heuristic for the CSP. Cherri et al. (2009) modify the FFD and Greedy Heuristic without modifying the methodology of putting in the pattern the largest item in the list, the difference is that at each iteration this list is shorter since the pattern is only accepted if it is within the bounding acceptable loss, otherwise an item in the pattern (the largest one) is withdrawn, the pattern is generated with FFD or Greedy Heuristic and the loss is assessed, if the pattern is not accepted one withdraws the largest item and so forth. This procedure is repeated until the loss or rest is within the bounding defined as acceptable or the demand is totally supplied. Ongkunaruk (2005) presents a modification in the FFD Heuristic in order to solve the Bin Packing Problem—BPP, but the idea is the same contained in Cherri et al. (2009). The modification proposal that present in this work differs from the Greedy Heuristic in the way the items are arranged in the decreasing order. If there are more pair than odd numbers these are ordered first and the same happens in the opposite case.

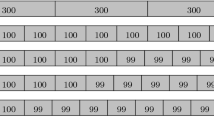

Following is an example of the cutting problem with 10 types of items \((m = 10)\). The length and the demand of each item are shown on Table 3. The objects in stock have lengths 1000 and 1001. Variation in the lengths of the objects in stock also appear in the works of Poldi and Arenales (2009) and Belov and Scheithauer (2002). On Tables 4, 5, 6 and 7 one sees the patterns and frequencies of the solutions obtained by generating columns and by the GRH, Greedy and Modified Greedy Heuristic respectively. The tables show that while the modified heuristic obtained a solution with the same 398 objects of the relaxed solution and 12 patterns, 02 more than that, the greedy heuristic produced 41 objects and 4 extra patterns. The GRH Heuristic obtained a solution very close to the relaxed solution with 01 object and 01 extra pattern. The only one to have obtained an optimal solution in this case was the Modified Greedy Heuristic (MGH) because according to the exposition on Sect. 3\(f(\mathbf{x} )=398=LB\) and the \(integrality\ gap\) \(\Delta (\mathbf{x} )=0\).

4 Computational experiments

For the computing experiments, the test problems were obtained by Cutgen1, a random generator of cutting problems of one-dimensional stock developed by Gau and Wascher (1995). We considered 18 classes of problems each one with 100 instances and we adopted in Cutgen1 the seed 1994. The classes are divided according to the quantity of items m, the average of the items demands \(\overline{\mathbf{d }}\), and different combinations of values \(v_1\) and \(v_2\) for determining the length of the items that are generated in the interval \([v_1L, v_2L]\), as shown on Table 8. In the carried out computing experiments, the considered size of the stock object was \(L = 1000\) for all implemented heuristics. For Modified Greedy Heuristic we used two sizes of objects, because for every problem one decision is taken to cut the items out of the object of length \(L = 1000\) or \(L = 1001\) inasmuch we have even or odd length respectively, a variation of \(0.1\%\) longer than the object used by the other heuristics. For FFD, Greedy, GRH, BPP and Modified Greedy Heuristic the Simplex Method of Column Generation and the knapsack algorithm were implemented in \(C_{++}\) and all the computing tests for this work were carried out in an Intel Core i5-2450M computer with 4GB memory (Ram).

5 Results analysis

On Table 9 one presents the results of the computing tests carried out with the eight tested heuristics: three constructive ones: FFD, Greedy, and Modified Greedy (MGH); and five residual ones: FFD, Greedy, Bin Packing (BPP), GRH and Modified Greedy. Each column of Table 9 provides the average of the number of cut objects from the 100 problems of each of the 18 classes that were used. The values in bold in the rows of Table 9 indicate the heuristics that presented the lowest average of cut objects. In this case we point out the optimal performance of the residual Modified Greedy Heuristic (MGH), which got a better performance than the others in 12 out of the 18 tested classes. The constructive heuristics obtained better performances in only 02 out of the 18 classes, thus confirming the tendency that the best solutions in this case be gotten by the residual heuristics, because the solution of the relaxed problem besides a technique of rounding solutions are demonstrably more efficient than building one pattern after another and use it exhaustively, as do the constructive heuristics.

The Table 10 provides the average of the number of patterns for each of the 18 tested classes. The values in bold in the rows indicate the heuristics with the lowest average of patterns used. We did not use any efforts in order to reduce the number of patterns as the methods proposed by Foerster and Wascher (1999) and Yanasse et al. (2011) which were used for example in the works by Yanasse and Limeira (2006), Cerqueira and Yanasse (2009) and Cui et al. (2008) among others. Even though that was not an objective of our work, by the data in the table we notice that the averages of the patterns used by the residual heuristics kept themselves very close to one another, and much better than the average of the constructive heuristics, something expected as we explained earlier. The residual GRH got the best results for the average of the objects, surpassing the others in 12 of the 18 classes. There were no significant variations in the average time of the heuristics as shown in Table 11.

The Table 12 shows the average rate of variation of objects and patterns between the constructive Modified Greedy Heuristic and Greedy Heuristic. The positive and negative values indicate respectively the upward and downward average of objects and patterns used by MGH as compared with Greedy Heuristic. One notices by the data in the table that in 12 of the 18 tested classes, MGH reduces enough the average of objects cut by Greedy Heuristic, virtually keeping the patterns average stable, reducing in 80.5 the average of the objects and 0.13 the average of the patterns in class 12. We calculated the average pattern deviation of objects in the solution of the Greedy Heuristic (G-XXX) and Modified Greedy Heuristic (M-XXX) in relation to other tested heuristics, which consists in calculating the difference of the average of objects among the generated solutions.

The results on Table 13 show how much each heuristic was better than the others, producing downward objects, if the values are negative, or upward if they are positive. As pointed out earlier we have not proposed as an objective to reduce patterns, even though we consider excellent the performance of MGH in relation to the average of patterns in the solution. In class 18, for instance, with a small rise of 0.57 in the average of patterns one cuts 137.08 objects fewer than Greedy Heuristic, and the few classes in which MGH does not reduce the average of objects and patterns, the upward variation rate is very low, in class 5 for instance, 0.14 and 0.59 respectively (Table 11).

6 Conclusion and future works

In this work, we presented the Modified Greedy Heuristic, a change of Greedy Heuristic, which instead of ordering in decreasing order of the size of all the items, it orders first the pair items or the odd ones in case there are more items in the problem, of pair and odd size respectively. The results of the computing tests carried out showed that the solutions generated by the MGH have the average of cut objects lower than the Greedy Heuristic. They were also assessed in other constructive and residual heuristics in the literature for the problem of cutting in the one-dimensional stock, the residual MGH being the one that got the best performance as for the proposed objective of minimizing the number of objects in the solution, as shown on Table 9. The average of patterns was also compared and GRH obtained the best performance, but the residual MGH got results very close to the other tested residual heuristics, since these obtain much better solutions than the constructive heuristics, as we explained earlier. For future works, we intend to carry out other computing simulations with MGH, assigning different pairs of values with the size of the pair and odd object and consider the problem with different sizes of the stocked object.

References

Barnhart C, Johnson EL, Nenhauser GL, Vance PH (1994) Solving binary cutting stock problems by colum generation and branch and bound. Comput Optim Appl 3:111–130

Bazaraa MS, Jarvis JJ, Sherali HD (1990) Linear programming and network flows, 2nd edn. Wiley, New York

Belov G, Scheithauer G (2002) Decomposition approaches for solving the integer one-dimensional cutting stock problem with different types of standard lengths. Eur J Oper Res 141:295–312

Cerqueira GRL, Yanasse HH (2009) A pattern reduction procedure in a one-dimensional cutting stock problem by grouping items according to their demands. J Comput Interdiscip Sci 2:159–164

Cherri AC, Arenales MN, Yanasse HH (2009) The one-dimensional cutting stock problem with usable leftover: a heuristic approach. Eur J Oper Res 196:897–908

Cui Y, Yang Y, Yu P (2008) A heuristic for the one-dimensional cutting stock problem with pattern reduction. Eng Manuf 222:677–685

Cui Y (2005) A cutting stock problem and its solution in the manufacturing industry of large electric generators. Comput Oper Res 32:1709–1721

Farley AA (1990) The cutting stock problem in the canvas industry. Eur J Oper Res 44:247–255

Foerster H, Wascher G (1999) Pattern reduction in one-dimensional cutting stock problems. Int J Prod Res 38:1657–676

Gau T, Wascher G (1995) Cutgen1: a problem generator for one-dimensional cutting stock problem. Eur J Oper Res 84:572–579

Gilmore P, Gomory R (1961) A linear programming approach to the cutting stock problem. Oper Res 9:849–859

Gilmore P, Gomory R (1963) A linear programming approach to the cutting stock problem-part II. Oper Res 6:863–888

Hinxman AI (1980) The trim-loss and assortment problems: a survey. Eur J Oper Res 5:8–18

Johnson MP, Rennick C, Zak E (1997) Skiving addition to the cutting stock problem in the paper industry. Soc Ind Appl Math 39:472–483

Marcotte O (1985) The cutting stock problem and integer rounding. Math Program 33:82–92

Martello S, Toth P (1990) Knapsack problems: algorithms and computer implementations. Wiley, New York

McDiarmid C (1999) Pattern minimisation in cutting stock problems. Discrete Appl Math 98:121–130

Ongkunaruk P (2005) Asymptotic worst-case analyses for the open bin packing problem. Faculty of the Virginia Polytechnic Institute

Pisinger D (1993) Algorithms for knapsack problems. Thesis-DIKU, University of Copenhagen, Copenhagen

Poldi KC, Arenales MN (2009) Heuristics for the one-dimensional cutting stock problem with limited multiple stock lengths. Comput Oper Res 36:2074–2081

Scheithauer G, Terno J (1995) The modified integer round-up property of the one-dimensional cutting stock problem. Eur J Oper Res 84:562–571

Stadtler H (1990) A one-dimensional cutting stock problem in the aluminium industry and its solution. Eur J Oper Res 44:209–223

Wascher G (1990) An lp-based approach to cutting stock problems with multiple objectives. Eur J Oper Res 44:175–184

Wascher G, Gau T (1996) Heuristics for the integer one-dimensional cutting stock problem: a computational study. OR Spektrum 18:131–144

Yanasse HH, Poldi KC, Cerqueira GRL (2011) Modified KOMBI to reduce the different patterns in cutting stock problems. International Federation of Operational Research Societies Melbourne, Australia

Yanasse HH, Limeira MS (2006) A hybrid heuristic to reduce the number of different patterns in cutting stock problems. Comput Oper Res 33:2744–2756

Acknowledgements

The authors thank Fapesb, Capes and CNPq for the financial support. The authors are also indebted to the reviewers for their valuable and helpful suggestions that improved the presentation of this paper.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that the research presented in this manuscript is in compliance with the rules of the institution’s Research Ethics Committee, and since it does not involve human beings, informed consent does not apply. The authors also declare that they have no personal, commercial, academic, political or financial conflicts of interest for the submission of this manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Cerqueira, G.R.L., Aguiar, S.S. & Marques, M. Modified Greedy Heuristic for the one-dimensional cutting stock problem. J Comb Optim 42, 657–674 (2021). https://doi.org/10.1007/s10878-021-00695-4

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10878-021-00695-4