Abstract

Online social networks have become popular media worldwide. However, they also allow rapid dissemination of misinformation causing negative impacts to users. With a source of misinformation, the longer the misinformation spreads, the greater the number of affected users will be. Therefore, it is necessary to prevent the spread of misinformation in a specific time period. In this paper, we propose maximizing misinformation restriction (\(\mathsf {MMR}\)) problem with the purpose of finding a set of nodes whose removal from a social network maximizes the influence reduction from the source of misinformation within time and budget constraints. We demonstrate that the \(\mathsf {MMR}\) problem is NP-hard even in the case where the network is a rooted tree at a single misinformation node and show that the calculating objective function is #P-hard. We also prove that objective function is monotone and submodular. Based on that, we propose an \(1{-}1/\sqrt{e}\)-approximation algorithm. We further design efficient heuristic algorithms, named \(\mathsf {PR}\)-\(\mathsf {DAG}\) to show \(\mathsf {MMR}\) in very large-scale networks.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Online social networks (OSNs) have provided an effective platform for interaction and communication for billions of users. Many people have integrated popular online social sites into their daily lives and considered them as the main source of information. For example, the news about the death of Bin Laden was released on Twitter before the US government officially announced it on social media (Sutter 2017). Furthermore, information spreads on OSNs can have substantial impact on the society, especially political trends. For instance, Facebook and Twitter had great impact on the US Presidential Election in 2008 and the Arab Spring in 2010 (Hughes and Palen 2009; Wolfsfeld et al. 2013).

Unfortunately, rapid dissemination of information on OSNs can be used to spread misinformation to a large amount of users. This may lead to huge economic losses as well as negative impacts on the community in real life. For example, false information about assassination of President Obama has indirectly caused a damage of $136.5 billion to the financial market (Domm 2013). In addition, misinformation on social networks had a significant impact on the US Presidential Election in 2016 (Gentzkow 2017). In order for the OSN to serve its users as a reliable channel of information, it is critical to have effective measures to detect the sources of misinformation and restrict their spread.

Identifying sources of misinformation is a basis for preventing misinformation. Some initial studies have used data mining methods to detect sources of misinformation/rumors (Qazvinian et al. 2011; Kwon et al. 2013). Recently, in order to prevent the outbreak of misinformation and rumors, a commonly used strategy is to block accounts and links which play a vital role in the propagation process. For example, in 2015, Twitter removed 125,000 accounts which was suspected to relate to terrorisms (Yadron 2017). Facebook deleted 30,000 fake accounts reporting rumors in France before the Presidential Election in 2017 (Kottasov 2017).

However, in reality, we cannot remove too many nodes and links because it can affect the spread of information and freedom of speech. Therefore, it is necessary to have an optimal solution for selecting edges and nodes needed to be removed. Information diffusion models provide the basis for the study of solutions to prevent the spread of misinformation in which two most used ones are linear threshold model (LT) and the independent cascade model (IC) (Kempe et al. 2003). Kimura et al. proposed heuristic methods to remove sets of edges from network in order to minimize influence from sources of misinformation (Kimura et al. 2008, 2009). In LT model, Khalil et al. (2014) proposed an algorithm with an approximation ratio of \(1{-}1/e- \epsilon \) for this problem. Recently, Zhang et al. has designed a strategy to vaccinate sets of edges or nodes in order to reduce the influence of sources of epidemics (Zhang and Prakash 2015, 2014). After that, (Zhang et al. 2016a, c) proposed a strategy of placing monitor machine to prevent the impact of sources of misinformation to important users. In essence, vaccinating and placing monitor on nodes are equivalent to removing nodes from the network, from the abstraction point of view.

A shortcoming of the above studies is that they do not take into account the time constraints when preventing spread of known sources of misinformation. In reality, information is spread from this user to another through only a few hops. The earlier the preventing of spread of misinformation, the smaller the amount of affected users. In addition, cost of removing one user from the network by placing monitor machine or vaccinating nodes are often different. Therefore, in this paper, we investigate a problem, Maximizing Misinformation Restriction which seeks the set of nodes to be removed satisfying two conditions: (1) the total cost to remove nodes does not exeed given budget; (2) the number of propagation hops is limited, such that the influence reduction of misinformation source is maximal. Our main contributions are summarized as follows:

-

We expand the LT model by combining the constraint of number of propagation step hops, called T-LT model, given a set of source of misinformation nodes, time constraint and limited budget. In this model, we formulate Maximizing Misinformation Restriction (\(\mathsf {MMR}\)) problem that seeks a set of nodes to remove from the network within limited budget and time constraints such that influence reduction of misinformation sources is maximized.

-

For complexity, we show that \(\mathsf {MMR}\) is NP-hard and even if when the network is a rooted tree at single misinformation source node, and prove that calculation of the objective function is #P-hard.

-

When the network has a form of tree rooted from only one misinformation source, we designed a Fully Polynomial - Time Approximation Scheme (FPTAS) algorithm which is the best approximation algorithm for NP-hard problem. In general case, we prove that the objective function is monotone and submodular, and thus a Greedy algorithm will have an approximation ratio of \(1{-}1/\sqrt{e}\). We further propose Speed-up Greedy (\(\mathsf {SG}\)) algorithm by using the state-of-the-art technique in Zhang et al. (2016b). \(\mathsf {SG}\) provide an approximation ratio of \(1{-}1/\sqrt{e} -\epsilon \). In order to make this solution scalable to billion of nodes and edges, we introduce an efficient heuristic algorithm, called \(\mathsf {PR}\)-\(\mathsf {DAG}\).

-

Experiments are performed on real-world social traces of Gnutella, Oregon, Epinions and EU Email datasets showed the performance of our purposed algorithms. In each of these networks, we observe that \(\mathsf {PR}\)-\(\mathsf {DAG}\) and \(\mathsf {SG}\) outperformed baseline methods and they gave the similar result in terms of maximizing the target function while \(\mathsf {PR}\)-\(\mathsf {DAG}\) run faster than \(\mathsf {SG}\) (upto 45 times faster). Experiments also sho that \(\mathsf {PR}\)-\(\mathsf {DAG}\) is scalable with EU Email networks, which is large-scale social network contain about 265K nodes and 420K edges.

1.1 Related work

Studying information propagation model is critical for research about influence maximization problem (Kempe et al. 2003) and reducing spread of misinformation/rumors on social networks (Zhang and Prakash 2015; Khalil et al. 2014; Kimura et al. 2008, 2009; Zhang and Prakash 2014; Zhang et al. 2013, 2016a, b; He et al. 2011; Budak et al. 2011; Nguyen et al. 2013). In Domingos and Richardson (2001) studied propagation of information on social networks based on data mining technique. Based on this study, Kempe et al. (2003) proposed two information propagation models which are the Linear Threshold (LT) and the Independent Cascade (IC). In these models, they proposed the problem of Influence Maximization (IM) and a greedy algorithm with a ratio of \(1 - 1/e\). Later, numerous works about proposed scalability and efficiency algorithms have been done (Goyal et al. 2012; Chen et al. 2010a, b; Nguyen and Zheng 2013). In addition, many related diffusion information and variable problems were proposed and studied (Liu et al. 2012; Chen et al. 2012; Zhang et al. 2013; Bhagat et al. 2012) and some studies have extended these two models (Zhang et al. 2013; Liu et al. 2012; Chen et al. 2012).

An important task of preventing misinformation is to identify the source of misinformation or rumors. They can be identified through collecting contents of posts, comments, shares (Qazvinian et al. 2011). Rumors can be identified by using features such as time, structures and language of users (Kwon et al. 2013). In order to prevent the spread of misinformation or bad information, some work proposed a campaign of injecting good information into some nodes in order to fight the misinformation (Budak et al. 2011; Nguyen et al. 2013; He et al. 2011). Budak et al. Budak et al. (2011) proposed a problem of selecting a seed set to create positive information so that the number of users believing in negative information is minimized. He et al. (2011) studied Influence Blocking Maximization problem which chose k nodes to initiate positive information in order to minimize influence of negative information. Nguyen et al. (2013) studied \(\beta _{T}^I\)-Node Protectors problem with the purpose of seeking the smallest set of nodes with good information to decontaminate misinformation. However, in order to apply all above strategies, we need to know the content of the negative information and create positive information equivalently. Furthermore, it is difficult to persuade users when they already believe in the false information.

Recently, another branch of work used vaccinating/monitoring (Zhang and Prakash 2014, 2015; Zhang et al. 2016a, b) on a set of edges or nodes which are equivalent to solution of removing edges or nodes in the network to reduce the influence of misinformation sources (Khalil et al. 2014). Zhang et al. (2016a) proposed \(\tau \)-MP problem that prevent propagation of misinformation from the sources to center set nodes at most guaranteed threshold \(\tau \in [0, 1]\) by placing monitors on some nodes. They showed that the problem was #P-hard under IC model and proposed greedy algorithm based on cut sets method. However, it is difficult to apply it for a large number of central nodes because the algorithm using a Monte–Carlo sampling technique which takes a long running time. Given a set of infected notes in a network, Zhang and Prakash (2015, 2014) proposed a vaccination strategy for the k remaining nodes so that the number of infected nodes after propagation is minimal under IC model. The similar method have also been applied for groups of edges and nodes to control propagation of epidemics under LT model (Zhang et al. 2016b).

Most of existing works ignored two important aspects which are the time constraints and the cost of monitoring/vaccinating/removing a set of nodes. Preventing the spread of misinformation within time constraints is essential to limit their harmful effects. Moreover, the recent studies showed that information is spread after few hops from the source and almost negligible in the next steps (Cha et al. 2009; Leskovec et al. 2007). In addition, we considered cost of monitoring/vaccinating/removing each node on network and the total cost is limited.

1.2 Organization

The rest of the paper is structured as follows. We formulate the propagation models and problem definition in Sect. 2. Section 3 introduces some hardness and complexity results. Sections 4, 5 present our proposed algorithms. The experiments on several datasets are presented in Sect. 6. Finally, we give some implication for future work and conclusion in Sect. 7.

2 Model and problem definitions

In this section, we define the diffusion model called Time constraint Linear Threshold (T-LT) based on the traditional LT model. We next formulate the \(\mathsf {MMR}\) problem, which aims to find a set of nodes to remove from the network with limited budget and time constraints. In Table 1, the frequently used notations are summarized.

2.1 Diffusion model

The most well known models are the LT model and the IC model (Kempe et al. 2003). However, they are not suitable for the time constraints of propagation process. In this subsection, we first describe the standard LT model and then present our extension that incorporates time constraints of the diffusion.

2.1.1 Linear threshold model

Let \(G=(V, E, w)\) be a social network with a set of nodes V and a directed edge E, with \(|V|=n\) and \(|E|=m\). In this paper, the concept of vertex is equivalent to node. Each directed edge \((u, v) \in E\) is associated with an influence weight \(w(u, v) \in [0, 1]\) such that \(\sum _{u \in N_-(v)} w(u, v) \le 1\).

Given a subset \(S=\{s_1, s_2, \ldots , s_p\} \subset V\) represents a source of misinformation (as the seed set in IM problem). In this paper, each node has two states, active and inactive, \(\sigma (S)\) denotes the expected number of activated nodes. In the LT model, the influence cascades in G are illustrated as follows. First, every node \(v\in V\) uniformly chooses a threshold \(\theta _v \in [0, 1]\), which represents the weighted fraction of u’s neighbors that must be active to activate u. Next, the influence propagation happens in round \(t=1,2,3..\).

-

At round 1, all nodes in S are activated and the others are inactive.

-

At round \(t > 1\), an inactive node v is activated if weighted number of its incoming active neighbors is greater than or equal its threshold:

$$\begin{aligned} \sum \limits _{\text {incoming infected neighbor}\,u} w(u, v)\ge \theta _v. \end{aligned}$$ -

Since a node becomes activated, its status remains in the spreading process. The influence propagation stops when no more nodes can be activated.

2.1.2 Time constraint linear threshold model

We are going to describe our extension to the LT model that incorporates time-constraint diffusion processes, called T-LT. Similar to the LT model, in this model, each node chooses a uniform random number in [0, 1] as a threshold, and the spreading process performs in discrete steps. The new aspect in our model is that the influence is limited to the nodes that are within \(d \ge 1\) hops from the source. In other words, the difference of T-LT is the propagation process ends after the d propagation steps. The LT model is a special case of T-LT with \(d = |V|\). Therefore, T-LT inherits some characteristics of LT model.

Kempe et al. (2003) proved LT to be equivalent to live-edge model and sample graph. In LT model, for every node \(v \in V\), picks at most one random incoming edge, such that the edge (u, v) is selected with probability w(u, v), and no edge is selected with probability \(1-\sum _{u \in N_-(v)} w(u, v)\). The selected edges are called live and all other edges are called blocked. \(\Pr [g|G]\) denotes the probability of sample graph g in G, we have:

where

R(g, S) denotes the set of nodes reachable from S in g, by claim 2.6 in Kempe et al. (2003), we have:

\(R_d(g, S)\) denotes the set of nodes reachable from S in g within distance d. In T-LT model, we notice that v is reachable from source S if and only if: (1) there exits at least one path consisting entirely of live edges (called live-path) from some nodes in S to v, and (2) the collective number of hops along the shortest live-path from S to v is not greater than d. By proof of Theorem 2.11 in Chen et al. (2013), the equivalence between LT and live-edge model is with respect to all active sets \(S_t\), where t is the number of step of propagation. Hence, the influence of S after d hops is:

The influence of S after removal of \(A \subset V \setminus S\) is equal to the influence of S on residual graph, the influence of S at this time is:

To simplify, instead of considering all sample graphs in G, we only need to consider the sample graphs in \(G_d(S)\). We define benefit of A is expected reduction of influence of S after removing A, that is,

For convenience, we simplify the symbol \(h_d(\cdot )\) by \(h(\cdot )\) because G and d is constant. Our purpose is to choose a set A with a given budget such that h(A) is maximal. The definition of the problem is presented in the next subsection.

2.2 Problem definition

Suppose that each node \(u \in V\) has a cost \(c(u)\ge 0\) for removing from \(V \setminus S\) and a limited cost is \(L >0\). When \(d = 1\) finding the solution is trivial and does not make much sense, so we only consider the case \(d \ge 2\). In this paper, we consider Maximum Misinformation Restriction (\(\mathsf {MMR}\)) which is defined as follows:

Definition 1

(\(\mathsf {MMR}\)) Given a graph \(G=(V, E, w)\) on T-LT model with \(d \ge 2\) be a number of hops of propagation, potential misinformation sources \(S=\{s_1, s_2,\ldots , s_p\} \subset V\), and a budget \(L > 0\). Find the set of vertices A with total cost \(c(A) =\sum _{u \in A}c(u) \le L\) to maximize h(A).

\(\mathsf {MMR}\) is generalized by the following cases:

-

When \(d= n\): Maximum Misinformation Restriction on whole process propagation of misinformation source S.

-

When \(c(u)=1\ \forall u\in U \subset V, c(u)=+ \infty , u \in V\setminus U\), find the set A in the allowed area U to maximize h(A).

3 Hardness and complexity

In this section, we show that \(\mathsf {MMR}\) is NP-hard even when the graph is the rooted tree by reducing from the 0-1 Knapsack problem. Furthermore, we prove that the exact calculation of h(A) is #P-hard.

Theorem 1

\(\mathsf {MMR}\) is NP-hard in T-LT model, even for \(d=2\) and the input graph is the rooted tree.

Proof

To prove that \(\mathsf {MMR}\) is NP-hard, we will reduce the decision of 0–1 Knapsack problem.

Knapsack Given \(2n+2\) positively integers: \(W, K, s_1, \ldots , s_n, c_1, \ldots , c_n \), determine whether there is a sequence \(\mathbf x =(x_1, x_2, \ldots , x_n) \in \{0,1\}^n\) such that \(\sum _{i=1}^{n}{w_ix_i} \le W\) and \(\sum _{i=1}^{n}{c_ix_i} \ge K\).

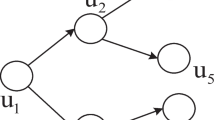

Construction Let \(\mathcal {I}_{1}=(W, K, w_1, \ldots , w_n, c_1, \ldots , c_n) \) is an instance of Knapsack. We construct a rooted tree \( \mathcal {I}_2=(G,S,d, L)\) which is an instance of \(\mathsf {MMR}\) as follows (Fig. 1):

-

We build only one source node \(S=\{s\}\). For each \(c_i\), we construct a direct simple path, in which: the starting node is s, \(c_i\) next nodes: \(u_{i, 1}, u_{i, 2}, \ldots , u_{i, c_i}\), the weight of all edges in this path is 1.

-

The cost of nodes: \(c(u_{1, i})=1, c(u_{2, i})=c(u_{3, i})=\ldots =c(u_{c_i, i})= +\infty \), \(i =1\ldots n\).

-

Finally, set \(L=W\) and \(Z=K\) and \(d=\max _{i=1\ldots n}\{c_i\}\).

By the construction, we obtain graph \(G=(V, E, w)\) satisfies LT model. Now, we prove that if \(\mathcal {I}_1\) has a solution \(\mathbf x =(x_1, x_2, \ldots , x_n)\) the \(\mathcal {I}_2\) has a solution \(A=\{u_{i, 1}|x_i=1\}\) such that \(h(A) \ge Z\) and vice versa.

(\(\rightarrow \)) Suppose \(\mathbf x =(x_1, x_2,\ldots , x_n)\) is solution of instance \(\mathcal {I}_1\). Now with our construction we see that, we chose set \(A=\{u_{i,1}| x_i=1\}\). We have \(c(A)=\sum _{i|x_i=1}w_i \le W=L\). According LT model, for each path, we select \(u_{i, 1}\) in set A, the influence from s to another nodes is blocked, implies the reduction of influence is \(c_i\). Therefore, \(h(A)=\sum _{i|x_i=1}c_i \ge K=Z\).

(\(\leftarrow \)) Otherwise, if \(\mathcal {I}_2\) has the solution A, A can not contain \(u_{i, j}\) for \(j \ge 2\) since the cost of this node is \(+ \infty \). Suppose \(A={u_{i, 1}}\), \(i \in I\), now we chose a vector \(\mathbf x =(x_1, x_2, \ldots , x_n)\) for \(x_i=1\) if \(u_{1,i} \in A \), otherwise \(x_i=0\). We have \(c(A)=\sum _{i|u_{i, 1} \in A} w_i = \sum _{i=1}^n x_i w_i \le L=W\) and \(h(A)=\sum _{i|u_{i, 1} \in A} c_i=\sum _{i=1}^n x_i c_i \ge Z= K\) implies \(\mathbf x \) is solution of \(\mathcal {I}_1\) \(\square \)

Theorem 2

It is \(\#P\)-hard to compute h(A) in T-LT model.

Proof

We will reduce from the s-t paths problem defined in the following. Given a directed graph \(G=(V, E), |V|=n, |E|=m\), computing the number of (directed) paths from node s to node t that visit every node at most once has been proved to be #P-complete (Valiant 1979). From G, we construct a graph \(G'\) by adding a new node \(t'\) and adding an edge from t to \(t'\) with weight \(w(t, t')=1\). The number of vertices of G is \(n + 1\). Let \(\varDelta \) be the maximum in-degree of any node in \(G'\). For \(e \ne (t, t'), e \in E\) we set \(w(e)=w=1/\varDelta \), this assumption satisfies the LT model since the total of in-neighbour weight is less than 1. We set \(S=\{s\}, A=\{t'\}\) and \(d=n+1\). We will show that if for any eligible w computing \(h(t')\) on \(G'\) is solvable, the s-t problem is also solvable (Fig. 2).

By the equivalence given claim 2.6 in Kempe et al. (2003), we have:

Eliminate the same elements in the two above equations, the remaining paths contain node \(t'\), we have

Since each simple path from s to \(t'\) in \(G'\) contains a simple path from s to t in G, we have:

where \(\alpha _i=|\mathsf {P}_i(G, s, t)|\). For Eq. (10), we can set weight w to n distinction values \(w_1, w_2, \ldots , w_n\) and we have value of \(h(t')\) corresponding to \(w_i\). Hence, we obtain a set of n linear equations with \(\{\alpha _0, \alpha _2, \ldots , \alpha _{n-1}\}\) as variables. The matrix of this equation is \(M_{n \times n}=\{m_{ij}\}\) and \(m_{ij}=w^{i}, i,j=0,..,n-1\) so this is Vandermonde matrix and we can easily to compute the unique solution \(\{\alpha _0, \alpha _2, \ldots , \alpha _{n-1}\}\) for the linear system of equations. The total of s-t paths in G is \(\sum _{i=0}^{n-1} \alpha _i\). If \(\mathcal {A}\) is a polynomial-time algorithm which can calculate exactly \(h(t')\), we can use \(h(t')\) to calculate s-t paths, implying that the exact calculaiton of \(h(t')\) is at least as hard as s-t paths problem \(\square \)

4 Approximation algorithms

In this section, we introduce some approximation algorithms for \(\mathsf {MMR}\) problem. First, we provide a Fully Polynomial Time Approximation Scheme (FPTAS) algorithm for the case when the network is a rooted tree at single misinformation source node. In the general case, we designed a fast greedy algorithm with a ratio of \(1-1/\sqrt{e}\) as the objective function is proved to be monotone and submodular.

4.1 FPTAS for rooted tree

In this section, we consider tree version of \(\mathsf {MMR}\) (called \(\mathsf {T}\)-\(\mathsf {MMR}\)) that means the network is a tree rooted at a source node I. We designed FPTAS for \(\mathsf {T}\)-\(\mathsf {MMR}\), the algorithm has the best approximation ratio (\(1-\epsilon \)) for NP-hard problem.

4.1.1 Calculate benefit of nodes

When the graph is a tree rooted at I, we consider the sub-tree rooted I that each node has a maximum depth d, called \(T_I\). If we select node u, the influence of I will not reach any descendant nodes of u. Inf(u, v) denotes the influence from u to v when u is activated, D(u) is the set of descendant nodes of u on \(T_I\), we have:

To calculate h(.) we propose a recursive algorithm based on Depth-First Search (DFS) described in Algorithm 1.

Lemma 1

Algorithm 1 has a complexity is \(\mathcal {O}(n_T)\), where \(n_T\) is number of nodes in \(T_I\).

Proof

The steps of the algorithm is similar to the algorithm DFS so it has a complexity \(\mathcal {O}(m_T+n_T)=\mathcal {O}(n_T)\) (due to \(T_I\) is a tree) \(\square \)

4.1.2 FPTAS for \(\mathsf {T}\)-\(\mathsf {MMR}\)

Based on the tree structure of the problem, we used dynamic programming method to design a Fully Polynomial Time Approximation Scheme (FPTAS). This algorithm is divided into two phases. In the first phase, we find sub-tree rooted at I with the depth d to satisfy the time constraint of the problem. Next, we standardize benefit value of nodes as well as the flow values. Basically, we try to bound them by a polynomial in n and \(\frac{1}{\epsilon }\). In the second phase, we use the dynamic programming to find the solution in polynomial time. The algorithm is summarized in Algorithm 2 (Fig. 3).

Phase 1: Preprocessing We find sub-tree root at I has the depth at most d. Next we will calculate the benefit of nodes by Algorithm 1 and standardize the benefit as shown in line 4 in Algorithm 2 then h(u) is scaled down by a factor K. This preprocessing step ensures that all h(u) is integer between 0 and \(\lceil \frac{n}{\epsilon } \rceil \).

Phase 2: Dynamic Programming In the second phase, we use dynamic programming to find an optimal solution for the \(\mathsf {MMR}\) problem instance (G, I, h) Now, given the tree \(G = (V, E)\) with \(|V | = n\), and the root I. In order to describe the dynamic algorithm, we use the following the notations:

-

\(T^u\): The sub-tree rooted at u in \(T_I\), with the set of vertices \(V^u\) and the set of edges \(E^u\).

-

t(u): The outcoming degree of node u.

-

\(u_1, u_2, \ldots , u_{t(u)}\): represent children of u.

-

\(T^u_i\): The i-th sub-tree of tree rooted at u i.e, the subtree rooted at i-th u’s child.

We define the following recursion functions:

-

\(F^u(p)\): The minimum total costs when removing a set of nodes in \(T^u\) so that the total benefits gained on \(T^u\) is at least p.

-

\(F^u_i(p)\): The minimum total costs to remove a set of nodes in \(T^u_i\) so that the total benefits gained on \(T^u_i\) is at least p.

-

\(H^u_i(p)\): The minimum total costs when removing the set of nodes in subtrees \(\{T^u_1, T^u_2, \ldots , T^u_i \}\) so that the total benefits gained is at lest p, where \(i=1\ldots t(u)\).

The core of the dynamic algorithm is to compute \(F^u(p)\) and \(H^u_i(p)\) through the following recursions:

The basic cases are as follows.

If node u is a leaf.

Finally, the maximum objective for instance \((G, I, h')\) is given at the root u by:

Lemma 2

Algorithm 2 finds an optimal solution for \(\mathsf {T}\)-\(\mathsf {MMR}\) instance \((G, I, h')\) has the complexity of \(\mathcal {O}(\epsilon ^{-2} n^5)\)

Proof

The accuracy of the dynamic programming algorithm comes from the sub-optimal structure of the problem. For calculation \(F^u(p)\), the major portion of running time is to compute \(F^u_i(p)\). Since node u has maximum \(n-1\) children and \(q \le p \le \lceil \frac{n^2}{\epsilon } \rceil \). The running time to compute is \(\mathcal {O}(n \cdot \lceil \frac{n^2}{\epsilon } \rceil \cdot \lceil \frac{n^2}{\epsilon } \rceil )=\mathcal {O}(\epsilon ^{-2}n^5)\) \(\square \)

Theorem 3

Algorithm 2 is FPTAS for \(\mathsf {T}\)-\(\mathsf {MMR}\).

Proof

Let \(A^*\) be an optimal solution of \(\mathsf {T}\)-\(\mathsf {MMR}\) instance \(\mathcal {I}_1=(G, I, h)\) with the objective value \(OPT=h(A^*)\). \(A'\) is an optimal solution of \(\mathsf {T}\)-\(\mathsf {MMR}\) instance \(\mathcal {I}_2=(G, I, h')\) by running Agorithm 2 with the objective value \(OPT'=h'(A)\), with \(h'(.)\) is value of for \(\mathcal {I}_2\). We see that \(A'\) is feasible solution of \(\mathcal {I}_1\) since it satisfies the condition that the highest cost is L. We need to prove:

Due to rounding down \(h'(u)\) in line 4 in Agorithm 2, we have:

Therefore:

Since we filtered nodes u with \(c(u) > L\), we have \(OPT \ge M\). Therefore,

Thus the objective of \(A'\) is within a factor \(1-\epsilon \) of OPT i.e. Algorithm 2 is \((1-\epsilon )\) approximation for \(0<\epsilon <1\) for \(\mathsf {T}\)-\(\mathsf {MMR}\). Combining lemma 2 this alg. has a time complexity of \(\mathcal {O} (\epsilon ^{-2}n^5)\) so it is a FPTAS \(\square \)

4.2 Approximation algorithm for general case

We next introduce our \((1- 1/\sqrt{e})\)-approximation algorithm. We first present the following lemmas and theorems.

Lemma 3

\(\sigma _{d, E}(S, X)\) is monotonically decreasing function of the set of edges X to be deleted.

Lemma 4

The function \(\sigma _{d, E}(S, X)\) is supermodular, i.e. for \(X \subseteq Y \subset E\) and \(\forall e \in E \setminus Y\) then:

The proofs of Lemmas 3 and 4 are presented in “Appendix”.

Theorem 4

The benefit function h(A) is monotone:

and submodular function:

where \( \forall A\subseteq T \subset V, v \notin T \)

Proof

Removing a vertex u is equivalent to the influence passing through edges adjacent to it blocked. Hence, \(\sigma _d(S, A)= \sigma _{d, E}(N_E(A))\). For vertex \(v \in V\) then \(N_E(A) \subseteq N_E(A+\{v\}) \) combining with Lemma 3, we obtain that \(\sigma _d(S, A)\) is monotonically decreasing which infers that h(A) is monotonically increasing.

Denote \(E_{T, v}=N_E(T+\{v\})\setminus N_E(T), E_{A, v}= N_E(A+\{v\}) \setminus N_E(A)\). Due to \(A \subseteq T\) the set of edges adjacent to v but not adjacent to any vertices in T is subset of set of edges adjacent with v but not adjacent any vertices in A, we have \(E_{T, v} \subseteq E_{A, v}\) and \(N_E(A)\cup E_{T, v} \subseteq N_E(A) \cup N_E(v) = N_E(A+ \{v\})\). Using Lemmas 4 and 3, we have:

Combine with the definition of (Eq. 5) we have:

This has completed the proof \(\square \)

In Algorithm 3, we make a greedy strategy. In each step, we select a node u that maximizes the marginal benefit over the cost ratio if the cost of u is not greater than the remaining budget. Let A be the set of currently selected vertices, the marginal benefit of node u over the cost ratio is calculated by the formula:

The algorithm terminates when at least one of the two conditions occurs: no budget remains, or no node can be added to A.

In the case that all nodes have the same cost, Algorithm 3 achieves an approximation ratio of \(1-1/e\). However, with different node costs, Algorithm 3 can have unbounded approximation ratio and it gives even bad results. For example, considering the directed network containing \(t+2\) nodes \(V=\{I, u, v_1, v_2, \ldots , v_t \}\), I is a source node, the set of edges \(E=\{(I, u), (I, v_1), (v_1, v_2), (v_2, v_3), \ldots , (v_{t-1}, v_t)\}\) and the weight of all edges is equal to 1. Let the cost \(c(u)=1- \epsilon \), \(c(v_i)=t, \forall \ i=1\ldots t\) and the budget \(L=t\). The optimal solution is node \(v_1\) which achieves a benefit of t. Algorithm 3 only chooses node u since it has the maximum benefit gained over cost ratio \(1/(1-\epsilon )\), resulting benefit is 1. Next, we inherit idea of Khuller et al. (1999) to modify the Algorithm 3 to achieve a constant approximation ratio. First, we remove all nodes that cost more than the budget because they are not feasible solutions. Let \(A_1\) be selected set of nodes by Algorithm 3. We will consider another candidate solution \(v_{max}\), which is the node that has the largest benefit. We compare the benefit of \(A_1\) and \(v_{max}\), then output the one with higher benefit. The process is summarized in Algorithm 4.

Theorem 5

Algorithm 4 provides a \((1-\frac{1}{\sqrt{e}})\)-approximation to find max h(S) problem.

Proof

Straightforward based on Khuller et al. (1999). \(\square \)

Complexity Let R be the maximum time needed to calculate the value of \(h(A), \forall A \subseteq V\). Algorithm 3 runs in \(\mathcal {O}(n^2R)\) where n is the number of nodes, k is the number of iterators. \(u_{max}\) can be determined in \(\mathcal {O}(nT)\) time. Hence, Algorithm 4 runs in \(\mathcal {O}(n^2R)\).

Note that we can improve the approximation ratio to \(1-1/e\) by inheriting greedy with partial heuristic enumeration (Khuller et al. 1999). However, the time complexity of \(\mathcal {O}(n^4 R)\) will become unfeasible. The difficulty in implementing the Algorithm 4 is computing. Kempe et al. (2003) used Monte–Carlo simulations method to estimate the influence function and this method can be applied for the algorithm. However, it is hard to apply to large graphs due to its high complexity. Therefore, to speed up the Algorithm 4, we will propose Speed-up Greedy (SA) algorithm based on two aspects: (1) simplifying the source nodes S into super source node I, and (2) and fast updating the function based on characteristics of generated samples.

4.2.1 Speed-up of the improved greedy algorithm

In this subsection, we apply GREEDY-LT method proposed by Zhang et al. (2016b) which is the state-of-the-art method to Speed-up IG algorithm. We first simplify the \(\mathsf {MMR}\) problem by merging set source \(S=\{s_1, s_2,\ldots , s_p\}\) into a supper source node I. In T-LT model if a healthy node has multiple neighbors which are source nodes, it will have a new edge weight which would be the sum of weights. For example, if node u has two source neighbors \(s_1\) and \(s_2\), the weight of merged node I would be: \(w(I, u)=w(s_1, u)+w(s_2, u)\). The details of this method are presented in Algorithm 5 and equivalence between the before and after instances of \(\mathsf {MMR}\) is proved in Proposition 1.

Proposition 1

Given an instance of the \(\mathsf {MMR}\) instance (G, S, w) under T-LT model, Algorithm 5 outputs an equivalent instance \((G', I, w')\) where I is the only source of misinformation node in the new graph \(G'\).

Proof

We will prove this proposition by induction. If a node v connects to two source nodes \(u_1\) and \(u_2\). In T-LT model, the influence of \(u_1\) and \(u_2\) to v will be \(w(u_1, v)+w(u_2, v)\) which is the same as the total influence of output of Algorithm 5 (line 9). When v has \(i+1\) incoming neighbours source nodes \(u_1, u_2,\ldots , u_{i+1}\). Denote by \(w'_i(I, v)\) is influence after merging i nodes. When merging a new source node \(u_{i+1}\), the total influence is \(w'_{i+1}(I, v)=w'_{i}(I, v)+ w(u_{i+1}, v)\) which is the same as the output of Algorithm 5 (line 9) \(\square \)

Complexity As the same method in Zhang and Prakash (2015), the complexity of alg. 5 is \(\mathcal {O}(p+|N(S)|)\), where |N(S)| is number of neighbours of S.

Next, to speed up Algorithm 4, we propose the Speed-up Greedy algorithm (SGA) summarized in Algorithm 6. Firstly, we merge S into a supper source node I and we obtain the new graph is \(G'\) (line 2). Let \(\mathcal {R}\) be the set of \(\eta \) samples generated from \(G'\). In each sample graph \(g \in \mathcal {G}(G')\), all nodes reachable from I is a tree root at I. Since the time constraint is d, denoted \(I_I^d \sim g \) is sub-tree of T root at I with height at most d, Let \(\mathcal {L}\) denote the set of \(\eta \) sample graphs and \(T=\{T_I^d \sim g|g \in \mathcal {G}(G')\}\), \(h(u, T_{I})\) denotes the benefit of node u in \(T^d_I\), we have \(h(u, T_{I})=|\{v|v \in subtree(u) \}|\) is the number of nodes that under the sub-tree root at u (including u) in \(T_{I}\). After that, we apply the framework of Algorithm 4 with the marginal benefit of node u over tree \(T_I\) is:

The problem of computing the \(h(\cdot )\) exactly has been shown to be #P-Hard. Thus we apply the Sample Average Approximation (SAA) framework to approximate the function \(h(\cdot )\) in near-linear time by using empirical average over set \(\mathcal {L}\). That is,

The marginal benefit of node u on G is approximated by the average marginal benefit for all tree \(T_I^d \in \mathcal {L}\).

We choose the node that has the largest marginal benefit \(\hat{\delta }(A, u)\) in line 7 and choose the set of nodes \(A_1\) by framework of Algorithm 3 (lines 8–19). We remove the node which is picked into \(A_1\) and update function \(h(u, T_{I}), u \in T_I\) (line 16) in two cases as follows: (1) for children of u, we can remove them because they are not connected to I, (2) for any ancestor v of u, \(h(v, T_{I}^d\setminus u) =h(v, T_{I}^d)-h(u, T_{I}^d )\), this task can be done in \(\mathcal {O}(1)\). The algorithm has an approximation ratio of \(1-1/\sqrt{e} -\epsilon \) where \(\epsilon \) is the approximation factor for estimating \(h(\cdot )\).

Complexity of \(\mathsf {SG}\) algorithm Denoted \(m_d=|E_d|, n_d=|V_d|\). Generating set tree T can be done by using BFS algorithm with the depth d, this task takes \(\mathcal {O}(\eta (m_d+n_d))\) (line 4). Calculating \(h(T_I, u), \forall u \in T_I\) can be done using Algorithm 1, the running time is \(\mathcal {O}(\eta n_d)\). For greedy phase, in each iterator, choosing node u which has the largest marginal benefit needs \(\mathcal {O}(n_d)\), updating the set of trees \(\mathcal {L}\) needs \(\mathcal {O}(\eta n_d)\). Hence, the total time of this phase is \(\mathcal {O}(k_1\eta n_d)\) where \(k_1\) is the number of iterations of greedy pharse (lines 8–19). Therefore, Algorithm 6 runs in \(\mathcal {O}(\eta ( m_d+k_1 n_d))\).

5 DAG-based algorithm

In the previous section, we introduced the approximation algorithms. However, difficult to apply these algorithms for large networks due to the fact that it estimate h(A) based on Monte–Carlo simulation method which runs for long time. Therefore, in this section, we will introduce an effective heuristic algorithm which is scalable for large networks. First, we are going to extract directed acyclic graph (DAG) from original graph and apply it to estimate the influence propagation. Whereby, we will provide a metric measuring the role of a node in misinformation propagation, called propagation role. Next, we put it into framework of Algorithm 4 instead of marginal benefit. To speed-up algorithm, we narrow candidate for solution set in DAG. The details of this algorithm are described in next subsections.

5.1 DAG construction

Recent studies have shown that the influence of a node is very small for a long path (Chen et al. 2010a) so we ignore these paths. On the other hand, the union of maximum influence paths is a good way to approximate the influence (Chen et al. 2010a, 2012, 2010b; Nguyen and Zheng 2013). Furthermore, for the time constraint of d in the \(\mathsf {MMR}\) problem, we only consider paths has length at most d. Therefore, we use the set of paths which have two characteristics above as a basis for building the DAG. The following definitions are the basis for our method.

Definition 2

(Influence path) For a path \(P(u, v)=\{u=x_1, x_2,\ldots , x_l=v\}\), of length l from a node u to v, define the influence of the path, \(\mathsf {Inf}(P(u, v))\), as:

Definition 3

(Maximum influence path) The Maximum Influence Path MIP(G, u, v) from u to v is defined as:

Definition 4

(Maximum influence path within length constraint) The Maximum Influence Path \(MIP_d(G, u, v)\) from u to v within length d is defined as:

\(MIP_d(G, u, v)\) is different from MIP(G, u, v). For \(MIP_d(G, u, v)\), we consider all paths from u to v which have the maximum length of d and then choose the path that has the greatest influence. Based on \(MIP_d(G, u, v)\), we define Maximum Influence Path Out-Arborescence with Length Constraint as follows:

Definition 5

For a graph G, an influence threshold \(\theta \), the Maximum Influence Out-Arborescence of a node \(u \in V, MIOA(G, u, d, \theta )\) with length of d, is:

\(MIOA(G, u, \theta )\) is defined as the union of all \(MIP_d(G, u, x), \forall x \in V\) with influence path greater than a threshold \(\theta \) and the maximum length of d. \(MIOA(G, u, d, \theta )\) gives the local influence regions (given lower bound) of u within d hops, so with different values of \(\theta \) we have different local influence regions sizes. \(MIOA(G, u, d, \theta )\) can be computed by modifying Dijkstra algorithm in graph \(G_d\) with edge weight \( - \log w(u, v)\) for edge (u, v).

Now, we use \(MIOA(G, I, d, \theta )\) to build the DAG and use it to approximate the influence from node to node in graph. DAG is a finite directed graph with no directed cycles which has at least a topological ordering. In the \(MIOA(G, u, d, \theta )\) tree, we add edges from the node with low depth to node with high depth (or high height to low height) in original graph, we obtain a DAG. d(I, u) denotes the depth of node u on the tree \(MIOA(G, I, d, \theta )\). The DAG constructing procedure is summarized in Algorithm 7 (Fig. 4).

In DAG, we design a metric to measure the propagation role of a node u in the diffusion from source I. The metric combines the two following aspects: (1) influence from I to u, and (2) influence from u to the other nodes in DAG. We define them as \(f_{in}(u)\) and \(f_{out}(u)\) respectively,

Finally, the propagation role of node u defined is,

For example, in Fig. 6, \(f_{in}(v_1)=w(I, v_1)=0.6\), \(f_{out}(v_1)= 1+ 0.2 \cdot 1 + 0.3 \cdot 1=1.5 \), \(r(v_1)=0.6 \cdot 1.5=0.9\). Similarly, \(r(v_2)=0.75, r(v_3)=0.12, r(v_4)=0.23, r(v_6)=0.2\).

Chen et al. (2010b) design a linear-time to estimate influence from seed set node on DAG. We use this method to calculate \(f_{in}(\cdot )\), \(f_ {out}(\cdot )\) and \(r(\cdot )\). The procedure is showed in Algorithm 8. We first assign \(f_{in}(I)= f_{out}(I)=1, f_{in}(u)=0, f_{out}(u)=1, \forall u \in \mathcal {D} \setminus I\) and topological sort all nodes in \(\mathcal {D}\) from I using DFS. We then calculate the influence from I to each node in order from first to last (lines 5–9). We use node u to update the influence from I to its out-neighbour v (line 7). For \(f_{out}(u)\), we do the same above works from the last to the first of list. This work can be performed concurrently with DFS topological sort.

5.2 Algorithm description

The heuristic algorithm is proposed based on a combination of the following aspects: (1) constructing DAG from original graph and calculating the propagation role measure of each node 8, (2) using the framework of Algorithm 4 in which margain benefit be replaced by propagation role. The algorithm is presented in Algorithm 9.

The algorithm starts by constructing the DAG graph from original graph G (line 1). Then, it selects the node that maximizes \(r(\cdot )\) with the cost less than the budget L is performed (line 4). From line 5 to 24, the greedy phase is showed. The set vertices of \(\mathcal {D}\) except I is considered as candidate set in order to choose the solution (line 3). For the repeat loop (lines 6–23), the node that has the ratio of propagation role per cost in current DAG is selected in each iteration. Note that, the current DAG is built from residual graph. The nodes has cost more than the remaining costs are remove from candidate set U(line 19). This phase stops when the candidate is empty (line 24) or no node has a cost that is less than the remaining cost (line 8). Finally, we approximate the influence of I on current graph by its influence on \(\mathcal {D}\),

Algorithm compares the influence of source when remove set \(A_1\) and \(v_{max}\) then returns the solution with the smaller propagation influence (line 26).

5.3 Running time

Next, we consider the worst-case running time of \(\mathsf {PR}\)-\(\mathsf {DAG}\). Suppose that \(n_\theta , m_\theta \) is the largest number of nodes and edges in \(\mathcal {D}\). Calculating \(MIOA(G, I, d, \theta )\) from G takes \(\mathcal {O}(m_\theta + n_\theta \log n_\theta )\), so constructing \(\mathcal {D}\) takes \(\mathcal {O}(m_\theta +n_\theta \log n_\theta )\) (line 1). Calculate propagation role of each node \(u \in \mathcal {D}\) using Algorithm 8 takes \(\mathcal {O}(n_\theta +m_\theta )\) time complexity (line 2). For the repeat loop from lines 6 to 32, denote \(k_1\) is the number of iterators. For each iterator, we have to construct DAG and calculate the propagation role of each node. This work can be done in \(\mathcal {O}(n_\theta + m_\theta ) + \mathcal {O}(n_\theta +n_\theta \log n_\theta )=\mathcal {O}( m_\theta + n_\theta \log n_\theta )\). The selection of nodes that maximize ratio propagation role per cost can be done in linear time. Hence, the total time of loop is \(\mathcal {O}(k_1 (m_\theta + n_\theta \log n_\theta ))\). This is also the complexity of the algorithm.

6 Experiments

In this section, we are going to experimentally evaluate and compare the performance of our proposed algorithms to several widely used methods in the following aspects: (1) objective of solution, and (2) running time on various real social network datasets.

6.1 Experiment settings

We have implemented the proposed algorithms in Python 2.7. All experiments are conducted using a 2 \(\times \) Intel(R) Xeon(R) CPU E5-2697 v4 @ 2.30 GHz with 8 \(\times \) 16 GB DIMM ECC DDR4 @ 2400 MHz main memory.

Baseline compared For a comparative analysis of the performance of our proposed algorithms, we will compare our proposed algorithms \(\mathsf {SG}\), \(\mathsf {PR}\)-\(\mathsf {DAG}\) to various baseline algorithms.

-

\(\mathsf {Random}\): Randomly select nodes within budget L among the \(N_d(S)\).

-

Degree Centrality (\(\mathsf {DC}\)): The heuristic algorithm base centrality measure. We select nodes with the highest degree among the \(N_d(S)\) and we keep on adding the highest-degree nodes until total costs of the selection of nodes exceed L.

6.1.1 Datasets

We run our experiment on multiple real datasets. In addition, we try to pick datasets of various sizes from different domains, in which the \(\mathsf {MMR}\) problem is especially applicable. Table 2 shows datasets we used.

Gnutella The snapshot of the Gnutella peer-to-peer file sharing network in August 2002. Nodes represent hosts in the Gnutella network topology and edges represent connections between the Gnutella hosts (Leskovec et al. 2007). It contains 20,777 links among 6,301 hosts.

Oregon This is a graph of Autonomous Systems (AS) peering information inferred from Oregon route-views in March 31 2001 and May 26 2001 (Leskovec et al. 2005).

Epinions The Epinions dataset was extracted (Richardson et al. 2003) and obtained from http://snap.stanford.edu/data. This is a who-trust-whom online social network of a a general consumer review site Epinions.com. Members of the site can decide whether to “trust” each other. All the trust relationships interact and form the Web of Trust which is then combined with review ratings to determine which reviews are shown to the user.

EU Email The network was generated using email data from a large European research institution. For a period from October 2003 to May 2005 (18 months) we have anonymized information about all incoming and outgoing emails of the research institution. Given a set of email messages, each node corresponds to an email address. There is a directed edge between nodes i and j, if i sends at least one message to j.

6.1.2 Parameter settings

Calculating the edge weights We assign the weights of edges in LT model according to previous studies (Goyal et al. 2012; Chen et al. 2010b; Khalil et al. 2014; Kempe et al. 2003; Zhang et al. 2016b). The weight of the edge (u, v) is calculated as follows:

Choice of Source nodes We use the methods in Nguyen et al. (2013); Zhang et al. (2016a) to select the source of misinformation. Accordingly, the nodes generating misinformation often have a small number of out-neighbors but are typically located in the vicinity of celebrities (high-degree nodes). Therefore, sources are chosen randomly from the neighbors of high-degree nodes. For each dataset, we choose a set of source nodes with size \(|S|=100\).

In all experiments, we choose different \(\theta \) for each different social network. Cha et al. (2009) showed that information mostly propagates within 2 to 5 hops so we choose \(d=3,4,5\). To get the expected benefits after removing the set of nodes, we run Mote–Carlo simulation 10,000 times and take the average.

6.2 Experiment results

6.2.1 Comparison of solution quality of \(\mathsf {MMR}\) in the case of general cost

In this experiment, we compare algorithms when d varies, the budget \(L = \{10, 15, 25, 40, 60, 80, 100\}\) and the costs of node are uniformly distributed in\([1.0, 3.0]\). Figures 5, 6, 7, and 8 show the performance of \(\mathsf {SG}\), \(\mathsf {PR}\)-\(\mathsf {DAG}\), \(\mathsf {DC}\) and \(\mathsf {Random}\) algorithms on Oregon, Epinion, Gnutella and EU Email networks. On avarage, we observe that \(\mathsf {SG}\) and \(\mathsf {PR}\)-\(\mathsf {DAG}\) give better performance compare to the others.

Compare \(\mathsf {PR}\)-\(\mathsf {DAG}\) with \(\mathsf {SG}\): for Oregon network, \(\mathsf {PR}\)-\(\mathsf {DAG}\) is better 1.37 to 12.6% than the result of \(\mathsf {SG}\). For Epinions, \(\mathsf {SG}\) is better than the result of \(\mathsf {PR}\)-\(\mathsf {DAG}\). There is not much diffirent between \(\mathsf {SG}\) and \(\mathsf {PR}\)-\(\mathsf {DAG}\) when \(d = 3, 4\) (2.6 and 4.8% times better). In case of \(d=5\), the distance is enlarged. For Gnutella, \(\mathsf {PR}\)-\(\mathsf {DAG}\) is better than \(\mathsf {SG}\) when \(d=3\) and worse than \(\mathsf {SG}\) when \(d=4,5\). In EU Email, the running time of \(\mathsf {SG}\) is over 72 h, so we ignore \(\mathsf {SG}\) on above dataset. However, as shown in Fig. 8, \(\mathsf {PR}\)-\(\mathsf {DAG}\) still performs better than the others. This shows that for large networks (with 265K nodes and 420K edges), \(\mathsf {PR}\)-\(\mathsf {DAG}\) finshed within allowed time and gives good performance. Comparing with \(\mathsf {DC}\) algorithm, the objective function of \(\mathsf {PR}\)-\(\mathsf {DAG}\) and \(\mathsf {SG}\) is 1.05 to 1.72 times better than \(\mathsf {DC}\). As the cost of selection of nodes increases, the gap between \(\mathsf {PR}\)-\(\mathsf {DAG}\) and the others is getting larger. \(\mathsf {Random}\) algorithm has a much worse results, indicating that a careful selection solution is critical to block misinformation propagation.

6.2.2 Comparison of solution quality of \(\mathsf {MMR}\) in the case of unit-cost version

To observe more clearly on the performance of these algorithms, we have conducted experiments with the case of all node costs are equal to 1 on datasets. We set L varies from 1 to 50, and d varies from 3 to 5. Figures 9, 10 and 11 display the objective function on Gnutella, Oregon and Epinions, respectively. \(\mathsf {PR}\)-\(\mathsf {DAG}\) is very close to \(\mathsf {SG}\). The larger value of k, the smaller the gap betwen \(\mathsf {PR}\)-\(\mathsf {DAG}\) and \(\mathsf {SG}\). Especially, with cost greater than 25, \(\mathsf {PR}\)-\(\mathsf {DAG}\) and the \(\mathsf {SG}\) achieve the same level of performance. It is very clear to prove that \(\mathsf {PR}\)-\(\mathsf {DAG}\) can find the solution during the constructing DAG from original graph which effectively approximate the influence between nodes. Like the previous test case, for EU Email, the running time of \(\mathsf {SG}\) is over 72 h, so we ignore \(\mathsf {SG}\) on above dataset. Therefore, \(\mathsf {SG}\) algorithm is not feasible when finding the solution on large networks. Comparing \(\mathsf {PR}\)-\(\mathsf {DAG}\) with \(\mathsf {DC}\), \(\mathsf {PR}\)-\(\mathsf {DAG}\) outperforms \(\mathsf {DC}\) algorithm in term of benefit of selected nodes. On avarage, \(\mathsf {PR}\)-\(\mathsf {DAG}\) is 1.51 times better on Gnutella, 5.12 times better on Oregon, 1.43 times better on Epinions, and 1.14 times better on EU Email. These results are also consistent with what have been observed in the previous test case.

6.2.3 Scalability

In this subsection, we show some running time results to demonstrate scalability. Tables 3 and 4 show the running time for \(\mathsf {PR}\)-\(\mathsf {DAG}\) and \(\mathsf {SG}\). We did not show the running time of \(\mathsf {Random}\) and \(\mathsf {DC}\) because they are fast heuristics that finish in the order of seconds. As expected, \(\mathsf {PR}\)-\(\mathsf {DAG}\) is much faster than \(\mathsf {SG}\). In average, \(\mathsf {PR}\)-\(\mathsf {DAG}\) is up to 32.5 and 45.4 times faster \(\mathsf {SG}\) on general cost and unit-cost case, respectively. We also show detail the running times of \(\mathsf {PR}\)-\(\mathsf {DAG}\) and \(\mathsf {SG}\) on Oregon in Figs. 12 and 13, for other networks we got similar results. We observe that as L increases, the gap between \(\mathsf {PR}\)-\(\mathsf {DAG}\) and \(\mathsf {SG}\) increases which is consistent with their analysis theoretical complexities. For the large-scale dataset EU Email, \(\mathsf {PR}\)-\(\mathsf {DAG}\) took less than 11 h to select the set nodes, while \(\mathsf {SG}\) could not finish in 72 h. This again emphasizes the scalability of \(\mathsf {PR}\)-\(\mathsf {DAG}\) for \(\mathsf {MMR}\) on large-scale networks.

7 Conclusion

In this paper, we investigated a new NP-hard problem of Maximizing Misinformation Restriction with the aim of maximizing the influence reduction of misinformation source on OSNs by removing the set of nodes within time constraints and the limited budget. We proved the hardness results, and provided the approximation algorithm to solve the problem. In addition, due to the high runtime complexity of this approximation algorithm, a much more efficient heuristic algorithm was also proposed, named \(\mathsf {PR}\)-\(\mathsf {DAG}\). This algorithm shows large performance advantage in both solution quality and running time on real social networks.

References

Bhagat S, Goyal A, Lakshmanan LV (2012) Maximizing product adoption in social networks. In: Proceedings of the fifth ACM international conference on Web search and data mining, Seattle, Washington, pp 603–612

Budak C, Agrawal D, Abbadi AE (2011) Limiting the spread of misinformation in social networks, In: Proceedings of the 20th international conference on world wide web, WWW ’11, ACM, New York, NY. https://doi.org/10.1145/1963405.1963499

Cha M, Mislove A, Gummadi KP (2009) A measurement-driven analysis of information propagation in the Flickr social network. In: Proceedings of the 18th international conference on world wide web, New York, USA, pp 721–730

Chen W, Lakshmanan LVS, Castillo C (2013) Information and influence propagation in social networks. Morgan and Claypool, San Rafael

Chen W, Lu W, Zang N (2012) Time-critical influence maximization in social networks with time-delayed diffusion process. In: Proceedings of the twenty-sixth AAAI conference on artificial intelligence, Toronto, Ontario, pp 592–598

Chen W, Wang C, Wang Y (2010) Scalable influence maximization for prevalent viral marketing in large-scale social networks. In: Proceedings of the 16th ACM SIGKDD international conference on knowledge discovery and data mining, Washington, DC, pp 1029–1038

Chen W, Wang C, Wang Y (2010) Scalable influence maximization in social networks under the linear threshold model. In: Proceedings of the 2010 IEEE international conference on data mining, Washington, pp 88–97

Domingos P, Richardson M (2001) Mining the network value of customers. In: Proceedings of the seventh ACM SIGKDD international conference on knowledge discovery and data mining, New York, USA, pp 57–66

Domm P (2013) False rumor of explosion at white house causes stocks to briefly plunge, AP Confirms Its Twitter Feed Was Hacked CNBC. http://www.cnbc.com/id/100646197(2013). Accessed 23 April 2013

Gentzkow M (2017) Social media and fake news in the 2016 election. Stanford Web. http://news.stanford.edu/2017/01/18/stanford-study-examines-fake-news-2016-presidential-election/ (2017). Accessed 24 June 2017

Goyal A, Lu W, Lakshmanan LVS (2012) SIMPATH: an efficient algorithm for influence maximization under the linear threshold model. In: Proceeding IEEE 11th international conference on data mining, Vancouver, BC, pp 211–220

He X, Song G, Chen W, Jiang Q (2011) Influence blocking maximization in social networks under the competitive linear threshold model technical report, CoRR abs/1110.4723

Hughes AL, Palen L (2009) Twitter adoption and use in mass convergence and emergency events. Int J Emerg Manage 6(3):248–260

Kempe D, Kleinberg J, Tardos E (2003) Maximizing the spread of influence through a social network. In: Proceedings of the ninth ACM SIGKDD international conference on knowledge discovery and data mining. https://doi.org/10.1145/956750.956769

Khalil EB, Dilkina B,Song L (2014) Scalable diffusion-aware optimization of network topology. In: Proceedings of the ACM SIGKDD international conference on knowledge discovery and data mining, New York, pp 1226–1235

Khuller S, Moss A, Naor JS (1999) The budgeted maximum coverage problem. Inf Process Lett 70(1):39–45

Kimura M, Saito K, Motoda H (2008) Solving the contamination minimization problem on networks for the linear threshold model. In: Pacific rim international conference on artificial intelligence. https://doi.org/10.1007/978-3-540-89197-0_94

Kimura M, Saito K, Motoda H (2009) Blocking links to minimize contamination spread in a social network. In: ACM transactions on knowledge discovery from data. https://doi.org/10.1145/1514888.1514892

Kottasov I (2017) Facebook targets 30,000 fake accounts in France. CNN media Web. http://money.cnn.com/2017/04/14/media/facebook-fake-news-france-election/index.html. Accessed 24 June 2017

Kwon S, Cha M, Jung K, Chen W, and Wang Y (2013) Prominent features of rumor propagation in online social media. In: Proceeding of IEEE 13th international conference on data mining. https://doi.org/10.1109/ICDM.2013.61

Leskovec J, Adamic LA, Huberman BA (2007) The dynamics of viral marketing. ACM Trans Web. https://doi.org/10.1145/1232722

Leskovec J, Kleinberg J, Faloutsos C (2005) Graphs over time: densification laws, shrinking diameters and possible explanations. In: Proceeding of ACM SIGKDD international conference on knowledge discovery and data mining. https://doi.org/10.1145/1081870.1081893

Leskovec J, Kleinberg J, Faloutsos C (2007) Graph evolution: densification and shrinking diameters. ACM Trans Knowl Discov Data 1(1):2

Liu B, Cong G, Xu D, Zheng Y (2012) Time constrained influence maximization in social networks. In: Proceeding of IEEE 12th international conference on data mining, Belgium, Brussels, pp 439–448

Nguyen H, Zheng R (2013) On budgeted influence maximization in social networks. IEEE J Sel Areas Commun 31(6):1084–1094

Nguyen NP, Yan G, Thai MT (2013) Analysis of misinformation containment in online social networks. Comput Netw 57:21332146

Qazvinian V, Rosengren E, Radev DR, Mei Q (2011) Rumor has it: identifying misinformation in microblogs. In: Proceedings of the conference on empirical methods in natural language processing, Edinburgh, pp 1589–1599

Richardson M, Agrawal R, Domingos P (2003)Trust management for the semantic web. In: Proceeding of international semantic web conference. https://doi.org/10.1007/978-3-540-39718-2_23

Sutter JD (2017) How bin Laden news spread on Twitter. CNN Web. http://edition.cnn.com/2011/TECH/social.media/05/02/osama.bin.laden.twitter/index.html. Accessed 23 June 2017

Valiant LG (1979) The complexity of enumeration and reliability problems. SIAM J Comput 8(3):410–421

Wolfsfeld G, Segev E, Sheafer T (2013) Social media and the Arab Spring: politics comes first. Int J Press Polit 18(2):115–137

Yadron D (2017) Twitter deletes 125,000 Isis accounts and expands anti-terror teams. The Guardian Web. https://www.theguardian.com/technology/2016/feb/05/twitter-deletes-isis-accounts-terrorism-online. Accessed 24 June 2017

Zhang H, Alim M, Li X, My TT, Nguyen H (2016a) Misinformation in online social networks: catch them all with limited budget. ACM Trans Inf Syst 34(3):18

Zhang Y, Adigay A, Saha S, Vullikanti A, Prakash A (2016b) Near-optimal algorithms for controlling propagation at group scale on networks. IEEE Trans Knowl Data Eng. https://doi.org/10.1109/TKDE.2016.2605088

Zhang H, Dinh TN, Thai MT (2013) Maximizing the spread of positive influence in online social networks. In: Proceeding IEEE 33rd international conference on distributed computing systems, Philadelphia, PA, pp 317-326

Zhang H, Kuhnle A, Zhang H, Thai MT (2016c) Detecting misinformation in online social networks before it is too late. In: Proceeding IEEE/ACM international conference on advances in social networks analysis and mining (ASONAM). https://doi.org/10.1109/ASONAM.2016.7752288

Zhang Y, Prakash B (2015) Data-aware vaccine allocation over large networks. ACM Trans Knowl Discov Data. https://doi.org/10.1145/2803176

Zhang Y, Prakash BA (2014) Scalable vaccine distribution in large graphs given uncertain data. In: Proceeding of the ACM international conference on information and knowledge management, Shanghai, pp 1719–1728

Author information

Authors and Affiliations

Corresponding author

Appendix

Appendix

We let \(e=(u, v), f=(u', v')\), \(\mathcal {G}^e(G\setminus X)\) is the set of sample graph where incoming edge \(e=(u, v)\) is selected for node v, \(\mathcal {G}^{\overline{e}}(G\setminus X)\) is the set of sample graph where a different incoming edge \(\overline{e}=(y, v)\) is selected for node v and \(\mathcal {G}^{\emptyset }(G\setminus X)\) is the set of sample graph where no incoming edge \(\overline{e}=(y, v)\) is selected for node v. According to Khalil et al. (2014), we have the following results:

Proposition 2

(Khalil et al. (2014), proposition 1) For every live-edge \(g \in \mathcal {G}^{\emptyset }(G \setminus X)\), there exits a corresponding live-edge graph \(\widetilde{g} \in \mathcal {G}^e(G \setminus X)\) and vice versa. If \(g=(V, E_g)\) then \(\widetilde{g}=(V, E_g \cup \{e\} )\).

Proposition 3

(Khalil et al. (2014), proposition 2) \(\mathcal {G}(G \setminus (X\cup \{e\})) \subseteq \mathcal {G}(G \setminus X)\) and furthermore \(\mathcal {G}(G \setminus (X\cup \{e\}))=\mathcal {G}^{\overline{e}}(G \setminus X) \cup \mathcal {G}^{\emptyset }(G \setminus X)\).

Proposition 4

(Khalil et al. (2014), proposition 3) Given \(f=(u', v') \in E \setminus X, v' \ne v\), let \(t=|\mathcal {G}^{\emptyset }(G \setminus (X \cup \{f\}))|\) then \(\mathcal {G}^{\emptyset }(G \setminus X)\) can be partitioned into t sets \(\{ \varPhi _i \}_{i=1}^t\) such that, for every \(\varPhi _i\) there exits a corresponding \(g_i \in \mathcal {G}^{\emptyset }( G \setminus (X \cup \{f\}))\) and vice versa.

Proposition 5

(Khalil et al. (2014), proposition 4) For every \(\varPhi _i \subseteq \mathcal {G}^{\emptyset }(G \setminus X)\) and its associated \(g_i \in \mathcal {G}^{\emptyset }(G \setminus (X \cup \{e\}))\), \(\Pr [g_i|G \setminus (X \cup \{f \})]= \sum _{H \in \varPhi _i} \Pr [H|G \setminus X]\).

Proof of Lemma 3

We need to show that \( \sigma _{d,E}(S, X) \ge \sigma _{d,E}(S, X \cup \{e\})\). The idea of the proof is similar to the theorem 5 in Khalil et al. (2014). Using Proposition 2 and 3, we have:

Recall that \(e=(u, v)\), for \(g \in \mathcal {G}^{\emptyset }(G \setminus X)\), we have:

For \(g \in \mathcal {G}^{\overline{e}}(G \setminus X)\) we have \(P(v, g, G \setminus X)=P(v, g, G \setminus (X\cup \{e\}))=w(\overline{e})\) which leads to \(\Pr [g|G \setminus X]=\Pr [g|G \setminus (X\cup \{e\})]\), it infers:

Using prop. 2, for \(g\in \mathcal {G}^{\emptyset }(G \setminus X)\) there exits a corresponding \(\widetilde{g} \in \mathcal {G}^e(G \setminus X)\) and \(\Pr [\widetilde{g}|G \setminus X]=w(u, v)\prod _{v' \ne v}p(v', \widetilde{g}, G \setminus X)\). Therefore,

We can see that g is a subgraph of \(\widetilde{g}\), the set of vertices which can reach from S in g is subset of the set of vertices which can reach from S in \(\widetilde{g}\). Hence, \(f_d(\widetilde{g}, S) - f_d(g, S) \ge 0\), which completes the proof. \(\square \)

Proof of Lemma 4

the idea of the proof is similar to that of theorem 6 in Khalil et al. (2014). For edge \(f \in E\), let \(t=|\mathcal {G}(G \setminus (X\{f\}))|\). From Proposition 4, we can partition \(\mathcal {G}^{\emptyset }(G \setminus X)\) into t sets \(\{\varPhi \}_{i=1}^t\), rewrite (36) as:

Using similar reasoning to that in Eq. (36) in the proof of lemma 1 for \(G \setminus (X \cup \{f \})\), we have:

We will compare two Eqs. (37) and (38) term by term for each \(g_i \in \mathcal {G}^{\emptyset }(G \setminus X), i=1,\ldots ,t \). It can be divided into two cases: (1) \(\varPhi _i =\{X_i\}\) in case in \(g_i\) has another incoming edge to \(v'\) not f, now the terms in two equations are equal; (2) in the case in \(g_i\) has only incoming edge to \(v'\) is f, then \(\varPhi _i=\{g_i, g_i'\}\), we need to prove:

Using prop. 5 in Khalil et al. (2014), we have:

Hence, inequality (39) is true if:

Note that \(\widetilde{g'_i}=(V, E_{g_i} \cup \{f\})\) and live-edge graphs are constructed in a way that each node has at most one incoming edge. We can see that: a reachability path in \(\widetilde{g_i}\) is clearly presented in \(\widetilde{g'_i}\), hence if removing edge e from \(\widetilde{g_i}\) results in unreachability of some nodes in \(g_i\). Similarly, some nodes become unreachable when removing e from \(\widetilde{g'_i}\). Removing edge e from \(\widetilde{g'_i}\) may disconnect some additional nodes whose paths derived from the source including edge f. Therefore, the reduction in reachable nodes when removing edge e from \(\widetilde{g'_i}\) is the same or larger than the reduction when removing e from \(\widetilde{g_i}\), it implies (41) is true. \(\square \)

Rights and permissions

About this article

Cite this article

Pham, C.V., Thai, M.T., Duong, H.V. et al. Maximizing misinformation restriction within time and budget constraints. J Comb Optim 35, 1202–1240 (2018). https://doi.org/10.1007/s10878-018-0252-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10878-018-0252-3