Abstract

We introduce a novel regulariser based on the natural vector field operations gradient, divergence, curl and shear. For suitable choices of the weighting parameters contained in our model, it generalises well-known first- and second-order TV-type regularisation methods including TV, ICTV and TGV\(^2\) and enables interpolation between them. To better understand the influence of each parameter, we characterise the nullspaces of the respective regularisation functionals. Analysing the continuous model, we conclude that it is not sufficient to combine penalisation of the divergence and the curl to achieve high-quality results; rather, it seems crucial that the penalty functional includes at least one component of the shear or suitable boundary conditions. We investigate which requirements regarding the choice of weighting parameters yield a rotationally invariant approach. To guarantee physically meaningful reconstructions, implying that conservation laws for vectorial differential operators remain valid, we need a careful discretisation, which we therefore discuss in detail.


1 Introduction

At the beginning of the 1990s, Rudin, Osher and Fatemi revolutionised image processing and in particular variational methods using sparsity-enforcing terms by introducing total variation (TV) regularisation [33]. Since then, it has served as a state-of-the-art concept for various imaging tasks including denoising, inpainting, medical image reconstruction, segmentation and motion estimation. Minimisation of the TV functional, which for \(u \in L^1(\varOmega )\) is given by

provides cartoon-like images with piecewise constant areas that are separated by sharp edges. Note that here and in the following \(\varOmega \subseteq \mathbb {R}^2\) is an open, bounded image domain with Lipschitz boundary and \(\alpha > 0\). With regard to the TV model, it is a well-known fact that there are two major drawbacks inherent in this method: on the one hand solutions typically suffer from a loss of contrast. On the other hand, they often exhibit the so-called staircasing effect, where areas of gradual intensity transitions are approximated by piecewise constant regions separated by sharp edges such that the intensity function along a line profile in 1D is reminiscent of a staircase. To address the former deficiency, Osher et al. proposed the use of Bregman iterations [30], a semi-convergent iterative procedure that allows for a regain of contrast and details in the recovered images. More recently, various debiasing techniques [14, 20, 21] have been introduced to compensate for the systematic error of the lost contrast. In this paper, we shall however focus on the latter issue. To this end, we propose a novel regularisation functional composed of natural vector field operators that is capable of providing solutions with sharp edges and smooth transitions between intensity values simultaneously. This approach certainly stands in the tradition of several modified TV-type regularisation functionals that have been contrived to cure the staircasing effect by incorporating penalisation of second-order total variation, which is given by (cf. for example [4, 34])

Here, \({{\mathrm{{\text {Sym}}}}}^2(\mathbb {R}^2)\) denotes the set of second-order symmetric tensor fields on \(\mathbb {R}^2\), i.e. the set of symmetric \(2 \times 2\)-matrices. Moreover, for a symmetric \(2 \times 2\)-matrix \(\varphi \), \({{\mathrm{{div}}}}(\varphi ) \in C_0^1(\varOmega ,\mathbb {R}^2)\) and \({{\mathrm{{div}}}}^2(\varphi ) \in C_0(\varOmega )\) are defined by

Let us briefly recall the most popular instances of second-order TV-type regularisers in a formal way. Note first that for \(u \in W^{1,1}(\varOmega )\), the (first-order) total variation functional can be rephrased as

where here and in the following we always denote by \(\nabla u\) the gradient of u in the sense of distributions and by \(\vert \cdot \vert \) the Euclidean norm. Against the backdrop of (TV), Chambolle and Lions [16] proposed to compose regularisers for image processing tasks by coupling several convex functionals of the gradient by means of the infimal convolution, defined for two functionals as

In particular, they suggested to use a combination of first and second derivatives

where here and in the following \(\alpha _1, \alpha _0 > 0\) and we denote by \(\vert \cdot \vert \) the Frobenius norm whenever the input argument is a matrix. Following this train of thought, Chan et al. [18] proposed another variant of such a composed regularisation functional, namely

Fig. 1 Illustration of the SVF image compression approach (SVF1) for \(\alpha = \frac{1}{15}\)

More recently, Bredies et al. [11] suggested to generalise the TV functional to the higher-order case in a different way. In comparison with the second-order TV functional (TV2*), they further constrained the set over which the supremum is taken by imposing an additional requirement on the supremum norm of the divergence of the symmetric tensor field. Thus, they introduced the total generalised variation (TGV) functional, which in the second-order case is given by

Considering the corresponding primal definition of this functional, we obtain the following unconstrained formulation:

In this case, one naturally obtains a minimiser for w in the space \(BD(\varOmega )\) of vector fields of bounded deformation, i.e. \(w \in L^1(\varOmega ,\mathbb {R}^2)\) such that the distributional symmetrised derivative \(\mathcal {E}(w)\) given by

is a \({{\mathrm{{\text {Sym}}}}}^2(\mathbb {R}^2)\)-valued Radon measure. Note that we will very briefly recall the definition of Radon measures and some related notions in the subsequent section. Looking closely at the (TGV) functional reveals similarities to and differences from the other second-order TV-type regularisation functionals introduced so far: all these approaches have in common that they employ the infimal convolution to balance between enforcing sparsity of the gradient of the function u and sparsity of some differential operator of a vector field resembling the gradient of u. Thus, they locally emphasise penalisation of either the first- or the second-order derivative information, which will become visually apparent in Sect. 6, Figs. 6 and 7. As a consequence, in comparison with the original TV regularisation, all the previously recalled second-order models introduce an additional optimisation problem. On the other hand, we can already observe a difference between the former two models and the latter approach: while in the ICTV and the CEP functional the gradient and the divergence operator, respectively, are applied to the gradient of \(u_2\), the symmetrised derivative in the TGV functional is applied to a vector field w that does not necessarily have to be a gradient field. We will come back to this point later on. In the course of this paper, we will moreover show that our novel functional, which will be introduced below, can be seen as a generalisation of all aforementioned first- and second-order TV-type models, since for suitable parameter choices we obtain each of these approaches (in the limit) as a special case. This way, we not only shed new light on the relation between these well-established regularisation functionals and provide a means of interpolating between them, but also discuss properties of further second-order TV-type approaches that can be obtained by different weightings between the natural vector field operators our model builds upon.
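The infimal convolution that all of these models rely on can be illustrated in one dimension. The following is a minimal sketch, not part of the models above: it assumes the standard definition \((J_1 \Box J_2)(x) = \inf _y J_1(x-y) + J_2(y)\) and evaluates it by brute force on a grid. The names `inf_conv`, `grid` and `huber` are hypothetical. For \(J_1 = \vert \cdot \vert \) and \(J_2 = \frac{1}{2}(\cdot )^2\) the result should coincide with the Huber function, which is quadratic near zero and linear elsewhere, a simple analogue of how the infimal convolution locally selects the cheaper of two penalties.

```python
import numpy as np

def inf_conv(f, g, grid):
    """Brute-force infimal convolution (f □ g)(x) = min_y f(x - y) + g(y) on a 1D grid."""
    x = grid[:, None]   # evaluation points, one per row
    y = grid[None, :]   # candidate splittings, one per column
    return np.min(f(x - y) + g(y), axis=1)

# Assumed test setup: spacing 0.01, so the grid contains 0 and ±1 up to rounding.
grid = np.linspace(-5.0, 5.0, 1001)
result = inf_conv(np.abs, lambda t: 0.5 * t**2, grid)

# Closed form for comparison: the Huber function with threshold 1.
huber = np.where(np.abs(grid) <= 1.0, 0.5 * grid**2, np.abs(grid) - 0.5)
max_error = np.max(np.abs(result - huber))
```

On this grid the minimising splitting always lies on a grid point, so `max_error` is at the level of floating-point rounding. For the functionals in the text the same construction acts on functions rather than numbers, and no brute-force evaluation is possible.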

Let us now introduce our novel approach in more detail. In [13], we proposed a variational model for image compression that was motivated by earlier PDE-based methods [28, 29]: essentially, images are first encoded by performing edge detection and by saving the intensity values at pixels on both sides of the edges and these data are then decoded by performing homogeneous diffusion inpainting. In this context, our key observation was that the encoding step amounts to the search for a suitable image representation by means of a vector field whose nonzero entries are concentrated at the edges of the image to be compressed. Therefore, we conceived a minimisation problem that directly promotes such a sparse vector field v and at the same time guarantees a certain fidelity of the decoded image u to the original image f:

or equivalently, defining \(w = \nabla u - v\),

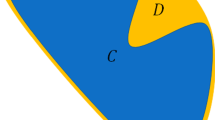

Fig. 2 Reconstruction of piecewise affine test image using (gSVF) for different vector operators S

where \(\chi _0\) denotes the characteristic function of the set of divergence-free vector fields w. Figure 1 illustrates the sparse vector fields (SVF) method for image compression in an intuitive way. The input image f (Fig. 1, left image) is encoded via the two components of the vector field v (second and third image) with the corresponding decoded image u (right image) satisfying \({{\mathrm{{div}}}}(\nabla u - v) = 0\). Looking at these results, we concluded that the support of v (fourth image) indeed corresponds well to an edge indicator, confirming the relation to [28, 29]. Moreover, we observed that on the one hand, our method preserves the main edges well while on the other hand, the decoded images (cf. Fig. 1, right) exhibit a higher spatial smoothness in comparison with the original input images (cf. Fig. 1, left). Since this increased smoothness did not come along with characteristic artefacts like the staircasing effect in case of the TV regularisation, this seemed attractive for further reconstruction tasks. Therefore, we already back then considered the SVF model for homogeneous diffusion inpainting-based image denoising. In order to obtain higher flexibility, we reformulated the minimisation problem to

with \(\beta >0\). In this form, the (SVF) model reveals strong similarities to the (CEP) model, the only difference being that w does not necessarily have to be a gradient field. However, we had to realise that the denoising performance of this model was not convincing, since point artefacts were created at reasonable choices of the regularisation parameter (cf. [13, Fig. 5]). In particular, these point artefacts are also apparent in the second image of Fig. 2 in Sect. 3.1. As we will elaborate in greater detail in Sect. 3, these artefacts are indeed inherent in this method. Against the backdrop of the Helmholtz decomposition theorem, stating that every vector field can be orthogonally decomposed into a divergence-free component and a curl-free one, we proposed in [13] to extend the SVF model by incorporating penalisation of the curl of w. However, as we shall discuss in Sect. 4, such an extended model still did not provide satisfactory results: the point artefacts could indeed be reduced, but were still visible. Hence, we concluded that further adjustments to our model were needed. Inspired by the idea to combine penalisations of divergence, curl and shear to regularise motion flow fields [35], we eventually contrived the following image denoising model, which (dependent on the weights chosen) enforces a joint vector operator sparsity (VOS) of divergence, curl and the two components of the shear:

where \(\alpha >0\) is a regularisation parameter in the classical sense while the \(\beta _i>0\) are determining the specific form of the regularisation functional.

In this paper, we will show results for image denoising, but similar to existing TV-type regularisers our novel approach is not limited to this field of application, but can rather be used as a regulariser for a large variety of image reconstruction problems. To apply the (VOS) model in the context of a different imaging task, the squared \(L^2\)-norm would have to be replaced by a suitable distance measure D(Au, f), where A denotes the bounded linear forward operator between two Banach spaces corresponding to the reconstruction problem to be solved. The fidelity term D(Au, f) would have to be chosen in dependence on the expected noise characteristics and specific application as it is common practice in variational modelling (cf. [6, 15]). However, for the sake of simplicity and to provide a good intuition for the effects of our novel regulariser on the reconstruction result, we will adhere to image denoising for the remainder of this paper.

To summarise our contributions, we provide a way of looking at well-established TV-type regularisation methods from a new angle. We introduce a functional that generalises both our model presented in [13] and the methods discussed above, formulated by applying sparsity constraints to common natural differential vector field operators. Rather than aiming to improve on state-of-the-art imaging methods, we focus on a sound mathematical analysis of our regulariser, including an analysis of the nullspaces, which allows us to draw conclusions on optimal parameter combinations. Moreover, we investigate under which conditions on the weighting parameters we obtain rotational invariance. We also show that we can achieve competitive denoising results that share the ability of second-order models to reconstruct sharp edges and smooth intensity transitions simultaneously. In addition, we highlight the fact that our model is able to interpolate between (ICTV) and (TGV) by modifying only one parameter. We also include a discussion of our discretisation, which differs from the one used for the latter models, but has its own merits with respect to compliance with conservation laws.

The remainder of this paper is organised as follows: In the subsequent section, we very briefly recall some notions in the context of Radon measures relevant for the further course of this work. Afterwards, exact definitions of the differential operators included in the (VOS) model will be stated in Sect. 3. We will investigate both theoretically and practically how regularisation where only one \(\beta _i\) is nonzero affects image reconstruction. In fact, all of the four resulting cases will involve certain characteristic artefacts that can be rigorously explained by studying the corresponding nullspaces of the regulariser. As we will show in Sect. 4, the VOS model is indeed capable of producing denoising results with sharp edges and smooth transitions between intensity values simultaneously at suitable choices of the weighting parameters. Moreover, a rigorous discussion and analysis of this model will reveal further properties and will pave the way for the insight that our novel approach is a means of unifying the well-known first- and second-order TV-type models introduced above and as such naturally offers possibilities for interpolation between them. In Sect. 5, the discretisation of our model is explained in detail, as its choice is not straightforward due to the various vector field operators involved. We compare our specific type of discretisation with the one in [11] and justify our choice by showing that we comply with various conservation laws. In Sect. 6, we briefly discuss the numerical solution of our model and compare our best results with those of state-of-the-art methods, illustrating that the proposed approach can indeed compete with existing second-order TV-type models. We furthermore present statistics on how various parameter combinations affect reconstructions with respect to different quality measures. We conclude the paper with a summary of our findings and future perspectives in Sect. 7.

2 Preliminaries

In the previous section, we have introduced the total variation of a function \(u \in L^1(\varOmega )\) as

On this basis, one defines the space of functions of bounded variation by

which equipped with the norm

constitutes a Banach space. It is a well-known fact (cf. e.g. [3, Chapter II]) that for \(u \in BV(\varOmega )\) the distributional gradient \(\nabla u\) of u can be identified with a finite vector-valued Radon measure, which can be characterised in the following way (cf. e.g. [1, Chapter I]): Let \(\mathcal {B}(\varOmega )\) denote the Borel \(\sigma \)-algebra generated by the open sets in \(\varOmega \). Then we call a mapping \(\mu :\mathcal {B}(\varOmega ) \rightarrow \mathbb {R}^d\), \(d \ge 1\), an \(\mathbb {R}^d\)-valued, finite Radon measure if \(\mu (\emptyset ) = 0\) and \(\mu \) is \(\sigma \)-additive, i.e. for any sequence \((A_n)_{n \in \mathbb {N}}\) of pairwise disjoint elements of \(\mathcal {B}(\varOmega )\) it holds that \( \mu \left( \bigcup _{n = 1}^\infty A_n \right) = \sum _{n =1}^\infty \mu (A_n) \). Moreover, we denote the space of \(\mathbb {R}^d\)-valued finite Radon measures by

By means of the Riesz–Markov representation theorem the space of the \(\mathbb {R}^d\)-valued finite Radon measures can be identified with the dual space of \(C_0(\varOmega ,\mathbb {R}^d)\) under the pairing

Consequently, we equip the space of the \(\mathbb {R}^d\)-valued finite Radon measures with the dual norm

yielding a Banach space structure for \(\mathcal {M}(\varOmega ,\mathbb {R}^d)\). Now taking into account that for \(u \in BV(\varOmega )\) the distributional gradient is a finite \(\mathbb {R}^2\)-valued Radon measure we can consider

By the density of the space of test functions \(C_c^\infty (\varOmega )\) in \(C_0(\varOmega )\), we moreover obtain the following identity:

where the second equality results from the definition of the distributional gradient. We thus see that for \(u \in BV(\varOmega )\) its total variation equals just the Radon norm of its distributional gradient. For this reason, an alternative approach towards the definition of the space of bounded variation characterises functions \(u \in L^1(\varOmega )\) as elements of \(BV(\varOmega )\) if their distributional gradient is representable by a finite \(\mathbb {R}^d\)-valued Radon measure. However, there also exists a dissimilarity between \(\Vert \nabla u \Vert _{\mathcal {M}(\varOmega ,\mathbb {R}^2)}\) and \(\text {TV}(u)\): while by its characteristic as a norm the former can only attain values in \(\left[ 0,\infty \right) \), the latter can not only be defined for functions in \(BV(\varOmega )\), but also for any function in \(L^1(\varOmega )\), since it can equal infinity. We will come back to this point shortly.
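To make the role of jumps concrete, the following minimal sketch evaluates a discrete total variation for a sharp edge and for a linear ramp with the same overall rise. It assumes forward differences with unit grid spacing and a zero difference at the last row and column; this is only an illustrative choice and not the discretisation discussed in Sect. 5. The names `discrete_tv`, `ramp` and `step` are hypothetical.

```python
import numpy as np

def discrete_tv(u):
    """Isotropic discrete TV: sum over pixels of the Euclidean norm of the forward-difference gradient."""
    dx1 = np.diff(u, axis=0, append=u[-1:, :])   # forward difference, zero in the last row
    dx2 = np.diff(u, axis=1, append=u[:, -1:])   # forward difference, zero in the last column
    return np.sum(np.sqrt(dx1**2 + dx2**2))

n = 64
ramp = np.tile(np.linspace(0.0, 1.0, n), (n, 1))                 # smooth transition from 0 to 1
step = np.tile((np.arange(n) >= n // 2).astype(float), (n, 1))   # sharp edge from 0 to 1

tv_ramp = discrete_tv(ramp)
tv_step = discrete_tv(step)
```

Both values agree up to rounding, since in each row the differences sum to the total rise of one. This mirrors the continuous statement that a jump contributes to the Radon norm of the distributional gradient in the same way as a gradual transition of equal height, which is why functions with edges have finite total variation.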

In view of the previously summarised insights, it seems natural to implement the infimal convolution to balance between enforcing sparsity of the distributional gradient of u and some differential operator of a finite \(\mathbb {R}^d\)-valued Radon measure w resembling \(\nabla u\) by means of Radon norms. In the following, we will thus pursue this approach. In doing so, we will however slightly abuse notation by extending the Radon norm to a broader class of generalised functions, similarly to \(\text {TV}\), which is defined for a broader class of functions than the actual Radon norm of the distributional gradient. Here, we will adhere to the notation of the Radon norm and just set it to infinity whenever the argument is not a finite \(\mathbb {R}^d\)-valued Radon measure, but only an element of the more general class of distributions.

3 Natural Differential Operators on Vector Fields

In Sect. 1 we recalled the (SVF) model for image denoising and already mentioned that due to point artefacts the obtained denoising results were unsatisfactory. Nevertheless, we decided to adhere to the idea of realising penalisation of second-order derivative information by applying natural vector operators to a two-dimensional vector field w resembling the gradient of u. Against the backdrop of the Helmholtz and the Hodge decomposition theorems and inspired by the work of Schnörr [35], the differential operators we are going to consider besides the divergence are the curl and the two components of the shear. In this section, we first give precise definitions of these operators in 2D. In a next step, we then reexamine the SVF model and moreover consider three alternatives, where the divergence operator is replaced by one of the aforementioned natural vector operators, namely the curl or one of the two components of the shear. We show denoising results for the respective models revealing that each regulariser leads to very distinct artefacts that we can explain rigorously by analysing the corresponding nullspaces.

3.1 Differential Operators on 2D Vector Fields

The curl is traditionally defined for three-dimensional vector fields and there is no unique way to define it in two dimensions. We chose the following definition of the curl of a 2D vector field z:

The definition of the divergence is well-known and is given as

As mentioned in Sect. 1, incorporating the shear as a component of a sparse regulariser for vector fields has first been introduced by Schnörr [35]. It consists of two components, each of which we consider separately. Their definitions also differ slightly in the literature and we decided to choose the following two:

3.2 Sparsity of Scalar-Valued Natural Differential Operators

In Fig. 2, we can see how enforcing sparsity of one of the four different aforementioned scalar-valued natural vector operators applied to the vector field w in (SVF) changes the reconstruction u. More precisely, we consider the model

where S corresponds to one of the vector field operators defined in (curl)–(sh2). Here and in the following we will slightly abuse notation and write derivatives of the measure w, which are however to be interpreted in a distributional sense. We first identify S(w) with the linear functional

If this linear functional is bounded in the predual space of \(\mathcal {M}(\varOmega )\), the space of continuous functions with compact support, then we can identify it with a Radon measure S(w) and define \(\Vert S(w) \Vert _{\mathcal {M}(\varOmega )} \), otherwise we set it to infinity.

In order to understand the appearance of artefacts as above, it is instructive to study the nullspaces of the differential operators, as the following lemma shows, providing a result similar to [5]:

Lemma 1

Let \(R: L^2(\varOmega ) \rightarrow \mathbb {R}\cup \{+\infty \}\) be a convex absolutely one-homogeneous functional, i.e. \(R(c u) = \vert c \vert R(u)\ \forall c \in \mathbb {R}\). Then for each \(u_0 \in L^2(\varOmega )\) with \(R(u_0) = 0\) we have

Moreover, let \(f=f_0+g\) with \(R(f_0)=0\) and \(\int _\varOmega f_0 g ~\mathrm{d}x = 0\). Then the minimiser \(\hat{u}\) of

is given by \(\hat{u} = f_0 + u_*\) with \(\int _\varOmega f_0 u_*~\mathrm{d}x = 0\) and

where \(\lambda _0\) is the smallest positive eigenvalue of R.

Proof

Convexity and positive homogeneity imply a triangle inequality, hence

and since \(R(u_0) = R(-u_0) = 0\), we conclude \(R(u+u_0) = R(u)\).

Now consider the variational model (2) and write \(u=cf_0 +v\) with \(\int _\varOmega v f_0~\mathrm{d}x = 0\). Then we have

The first term is minimised for \(c=1\) and the second for \(v = u_*\) with \(u_*\) being the solution of

It remains to verify that indeed \(\int _\varOmega u_* f_0~\mathrm{d}x = 0\). Since the Fréchet subdifferential of the functional to be minimised is the sum of the Fréchet derivative of the first term and the subdifferential of the regularisation term (cf. e.g. [32, Theorem 23.8]), the solution \(u_*\) satisfies the optimality condition \(u_* = g + \alpha p_*\) for \(p_* \in \partial R(u_*)\). We refer to [22, Chapter I, Sect. 5] for a formal definition of the subdifferential. Since by definition of a subgradient of R

we obtain the orthogonality relation because \(\int _\varOmega g f_0~\mathrm{d}x = 0\). The lower bound on \(\Vert u_* -g \Vert _2\) follows from a result in [5, Sect. 6], the upper bound on the regularisation follows from combining this estimate with

which is due to the fact that \(u_*\) is a minimiser of the functional with data g. \(\square \)
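The statement of Lemma 1 can be checked numerically on a toy example. The sketch below is an illustration under assumptions, not one of the regularisers studied in this paper: it uses \(R(u) = \Vert u - \bar{u} \Vert _2\) on \(\mathbb {R}^n\), with \(\bar{u}\) the mean of u, which is convex, absolutely one-homogeneous and vanishes exactly on constant vectors. For this choice the minimiser is available in closed form: the mean is kept and the mean-free part is shrunk. The names `R`, `denoise` and `objective` are hypothetical.

```python
import numpy as np

def R(u):
    """Toy regulariser: Euclidean norm of the mean-free part; vanishes exactly on constants."""
    return np.linalg.norm(u - u.mean())

def denoise(f, alpha):
    """Minimiser of 0.5*||u - f||^2 + alpha*R(u): keep the mean, shrink the mean-free part."""
    f0 = np.full_like(f, f.mean())        # nullspace component of the data
    g = f - f0                            # component orthogonal to the nullspace
    norm_g = np.linalg.norm(g)
    scale = max(0.0, 1.0 - alpha / norm_g) if norm_g > 0 else 0.0
    return f0 + scale * g

def objective(u, f, alpha):
    """Value of the variational model for a candidate u."""
    return 0.5 * np.sum((u - f) ** 2) + alpha * R(u)

rng = np.random.default_rng(0)
f = rng.normal(size=50) + 3.0             # assumed test data with a clear constant component
alpha = 2.0
u_hat = denoise(f, alpha)
u_star = u_hat - f.mean()                 # shrunk version of g = f - mean(f)
```

In this toy setting `u_hat` has the same mean as `f`, adding a constant to any vector leaves `R` unchanged, and `R(u_star)` does not exceed `R(f - f.mean())`, in line with the interpretation that the nullspace component is preserved while the orthogonal part is shrunk.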

Lemma 1 has a rather intuitive interpretation: while the nullspace component with respect to R in the signal is unchanged in the reconstruction, the part orthogonal to the nullspace is changed. Indeed, this part is shrunk in some sense: \(u_*\) has a smaller value of the regularisation functional than g. Hence, when rescaling the resulting image for visualisation, the nullspace component is effectively amplified. As a consequence, we proceed to a study of nullspaces for the different models with

- Let \(S = {{\mathrm{\text {curl}}}}\) and choose \(u \in C^2(\varOmega )\). Then we can set \(w= \nabla u\) and, since the curl of the gradient vanishes, the infimum is attained at zero. By a density argument, R vanishes on \(L^2(\varOmega )\). Hence, Lemma 1 with \(g =0 \) shows that the data f are exactly reconstructed by \(\hat{u}\).

- Let \(S = {{\mathrm{{div}}}}\), which corresponds exactly to (SVF); here we observe the point artefacts described above (cf. Fig. 2, second image). Those are more difficult to understand from the nullspace, which consists of harmonic functions (\(w = \nabla u,\ {{\mathrm{{div}}}}(w) = 0\)). The nullspace is, however, less relevant for discontinuous functions, which are far away from harmonic ones. We rather expect the divergence of w to be sparse, i.e. a linear combination of Dirac \(\delta \)-distributions. Hence, with this structure of \(\Delta u = {{\mathrm{{div}}}}(w)\) the resulting u would be the sum of a harmonic function and a linear combination of fundamental solutions of the Poisson equation, each of which exhibits a singularity at its centre in two dimensions. These singularities correspond to the visible point artefacts.

- With \(S = {{\mathrm{\text {sh}_1}}}\), we observe a stripe-like texture pattern in diagonal directions. Here, \(w=\nabla u\), \({{\mathrm{\text {sh}_1}}}(w) = 0\) yields a wave equation \(\frac{\partial ^2 u}{\partial x_1^2} = \frac{\partial ^2 u}{\partial x_2^2}\). According to d’Alembert’s formula (cf. e.g. [23, pp. 65–68]), the latter is solved by functions of the form \(u=U(x_1+x_2) + V(x_1-x_2)\), which corresponds exactly to structures along the diagonal.

- The artefacts in the case \(S = {{\mathrm{\text {sh}_2}}}\) look similar, but the stripe artefacts are parallel to the \(x_1\)- and \(x_2\)-axes. Now the nullspace is characterised by \(w=\nabla u,\ {{\mathrm{\text {sh}_2}}}(w) = 0\), which is equivalent to \(\frac{\partial ^2 u}{\partial x_1 \partial x_2} = 0\). This holds indeed for \(u=U_1(x_1) + U_2(x_2)\), i.e. structures parallel to the coordinate axes.
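The four nullspace characterisations above can be checked numerically. The following minimal sketch assumes the conventions \({{\mathrm{\text {curl}}}}(w) = \partial _1 w_2 - \partial _2 w_1\), \({{\mathrm{{div}}}}(w) = \partial _1 w_1 + \partial _2 w_2\), \({{\mathrm{\text {sh}_1}}}(w) = \partial _2 w_2 - \partial _1 w_1\) and \({{\mathrm{\text {sh}_2}}}(w) = \partial _2 w_1 + \partial _1 w_2\). The signs of the curl and of \({{\mathrm{\text {sh}_1}}}\) are an assumption, chosen only to be consistent with the equations in the list; they do not affect the nullspaces. Derivatives are approximated with `np.gradient`, and the helper names and test functions are hypothetical.

```python
import numpy as np

def vector_operators(w1, w2, h):
    """curl, div, sh1, sh2 of w = (w1, w2) via np.gradient; axis 0 is x1, axis 1 is x2."""
    d1w1, d2w1 = np.gradient(w1, h)
    d1w2, d2w2 = np.gradient(w2, h)
    return {
        "curl": d1w2 - d2w1,   # assumed sign convention
        "div": d1w1 + d2w2,
        "sh1": d2w2 - d1w1,    # assumed sign convention
        "sh2": d2w1 + d1w2,
    }

h = 0.05
x = np.arange(0.0, 2.0, h)
x1, x2 = np.meshgrid(x, x, indexing="ij")
inner = (slice(2, -2), slice(2, -2))      # skip the one-sided boundary stencils

def max_abs(name, u):
    """Largest interior value of |S(grad u)| for the operator called `name`."""
    w1, w2 = np.gradient(u, h)
    return np.max(np.abs(vector_operators(w1, w2, h)[name][inner]))

residuals = {
    "curl": max_abs("curl", np.sin(x1) * x2**2),              # any gradient field
    "div": max_abs("div", x1**2 - x2**2),                     # harmonic function
    "sh1": max_abs("sh1", np.sin(x1 + x2) + (x1 - x2)**2),    # U(x1 + x2) + V(x1 - x2)
    "sh2": max_abs("sh2", np.exp(x1) + np.cos(3 * x2)),       # U1(x1) + U2(x2)
}
```

All four residuals are at the level of floating-point rounding on the interior of this uniform grid, because the difference stencils along the two axes commute and treat both axes symmetrically. Replacing, for instance, the test function for \({{\mathrm{\text {sh}_2}}}\) by a product such as `x1 * x2` yields a clearly nonzero residual.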

As observed already in the SVF model, we see from the above examples that the functional using any single differential operator has a huge nullspace and will not yield a suitable regularisation in the space of functions of bounded variation. On the other hand, using norms of the symmetric or full gradient as in TGV or ICTV is known to yield a regularisation in this space [4, 11]. Thus, one may ask which and how many scalar differential operators one should combine to obtain a suitable functional. In the subsequent section, we will deduce an answer to this question, where in the end again a particular focus is laid on the four natural differential operators discussed above.

4 Unified Model

In view of the insights described in the previous section, we decided to consider a much more general approach, where no longer one natural vector operator is applied to w, but instead a general operator \(\mathcal {A}\) is applied to the Jacobian of w to penalise second-order derivative information. We give a rigorous dual definition of the regularisation functional and state the corresponding subdifferential. By rephrasing this very general approach appropriately, we are eventually able to show that for a suitable choice of the general operator we can return to a formulation based on a weighted combination of the aforementioned natural vector field operators. We analyse the thus obtained model with respect to nullspaces and prove the existence of BV solutions. In addition, we demonstrate that it is indeed justified to call the proposed approach a unified model, since (at least in the limit) we can obtain the well-known second-order TV-type models ICTV, CEP and TGV as well as variations of first-order total variation as special cases. Finally, we investigate under which conditions the presented approach is rotationally invariant.

4.1 General Second-Order TV-Type Regularisations

In a unified way, any of the above regularisation functionals can be written in the form

with a pointwise linear operator \(\mathcal {A}: \mathbb {R}^{2 \times 2} \rightarrow \mathbb {R}^m\) independent of x such that \(\nabla w(x) \mapsto \mathcal {A}\nabla w(x)\) if w has \(C^1\) density, where in the above context \(m = 1\). In the general setting, we can use the distributional gradient and identify \(\mathcal {A}\nabla w\) with the linear form

We are interested in the case where this linear functional is bounded on the predual space of \({\mathcal {M}(\varOmega ,\mathbb {R}^m)}\), i.e. the space of continuous vector fields, and thus identify \(\mathcal{A} \nabla w\) with such a vector measure, justifying the use of the norm in (3) (see also the equivalent dual definition below). Note that for \(m < 4\), \(\mathcal {A}\) will have a nullspace and hence \(\mathcal {A}\nabla w\) being a Radon measure does not imply that \(\nabla w\) is a Radon measure. The product is hence to be interpreted as a differential operator \(\mathcal {A}\nabla \) applied to the measure w rather than as \(\mathcal {A}\) multiplied with \(\nabla w\).
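The pointwise operator \(\mathcal {A}\) can be pictured as an \(m \times 4\) matrix acting on the vectorised Jacobian. The following minimal sketch assumes the ordering \((\partial _1 w_1, \partial _2 w_1, \partial _1 w_2, \partial _2 w_2)\) and the same sign conventions for the curl and \({{\mathrm{\text {sh}_1}}}\) as an illustrative choice; the names `ROWS` and `build_A` are hypothetical. It computes the dimension of the pointwise nullspace for \(m = 1\) and for the full set of four natural operators.

```python
import numpy as np

# Jacobian of w vectorised as (d1w1, d2w1, d1w2, d2w2); one row of A per scalar operator.
ROWS = {
    "curl": [0.0, -1.0, 1.0, 0.0],   # assumed sign convention
    "div":  [1.0, 0.0, 0.0, 1.0],
    "sh1":  [-1.0, 0.0, 0.0, 1.0],   # assumed sign convention
    "sh2":  [0.0, 1.0, 1.0, 0.0],
}

def build_A(names, weights=None):
    """Stack the selected operator rows into an m x 4 matrix, optionally weighted."""
    weights = weights or [1.0] * len(names)
    return np.array([wgt * np.array(ROWS[n]) for n, wgt in zip(names, weights)])

A_div = build_A(["div"])                          # m = 1
A_all = build_A(["curl", "div", "sh1", "sh2"])    # m = 4

nullity_div = 4 - np.linalg.matrix_rank(A_div)    # dimension of the pointwise nullspace
nullity_all = 4 - np.linalg.matrix_rank(A_all)
gram = A_all @ A_all.T                            # equals 2 * identity for these rows
```

With a single operator the pointwise nullspace is three-dimensional, whereas the four natural operators together have a trivial nullspace. Since `gram` is twice the identity, the Euclidean norm of (curl, div, sh1, sh2) equals \(\sqrt{2}\) times the Frobenius norm of the Jacobian, independently of the sign conventions assumed here.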

In view of (3), where as mentioned earlier \(m=1\), we can derive a rigorous dual definition starting from

Assuming that we can exchange the infimum and supremum, i.e.

we see that a value greater than \(-\infty \) in the infimum only appears if \(\varphi + {{\mathrm{{div}}}}(\mathcal{A}^*\psi ) = 0\). Thus, we can restrict the supremum to such test functions, which actually eliminates w and \(\varphi \), and obtain the following formula reminiscent of the TGV functional [11]:

We see that there is an immediate generalisation of the above definition when we want to use more than one scalar differential operator for regularising the vector-valued measure w: we simply need to introduce a pointwise linear operator \(\mathcal {A}: \mathbb {R}^{2 \times 2} \rightarrow \mathbb {R}^m\) with \(m \ge 1\). Then the definition (5) remains unchanged if we adapt the admissible set

Let us provide some analysis of the above formulations. First of all we show that the infimal convolution is exact, i.e. for given \(u \in BV(\varOmega )\) the infimum is attained for some \(\overline{w} \in \mathcal {M}(\varOmega ,\mathbb {R}^2)\).

Lemma 2

Let \(u \in BV(\varOmega )\), then there exists \(\overline{w} \in \mathcal {M}(\varOmega ,\mathbb {R}^2)\) such that

Proof

We consider the convex functional

First of all \(w=0\) is admissible and yields a finite value \(F(0) = \Vert \nabla u \Vert _{\mathcal {M}(\varOmega ,\mathbb {R}^2)} < \infty \), since \(u \in BV(\varOmega )\). Thus, we can look for a minimiser of F on the set \(F(w) \le F(0)\). For such w the triangle inequality yields the bound

In particular, w and \(\mathcal {A} \nabla w\) are uniformly bounded in \(\mathcal {M}(\varOmega ,\mathbb {R}^2)\), which consequently also holds for minimising sequences \(w_n\) and \(\mathcal {A} \nabla w_n\). A standard argument based on the Banach-Alaoglu theorem and the metrisability of the weak-star topology on bounded sets (or alternatively cf. [1, Theorem 1.59]) yields the existence of weak-star convergent subsequences \(w_{n_k}\) and \(\mathcal {A} \nabla w_{n_k}\). Let \(\overline{w} \in \mathcal {M}(\varOmega ,\mathbb {R}^2)\) denote the limit of the first subsequence \(w_{n_k}\). Taking into account the continuity of the operator \(\mathcal {A}\nabla \) in the space of distributions, the limit of the second subsequence \(\mathcal {A} \nabla w_{n_k}\) equals \(\mathcal {A} \nabla \overline{w}\). Then \(\overline{w}\) is a minimiser due to the weak-star lower semicontinuity of both summands of F. \(\square \)

Next, we show the equivalence of the problem formulations in (3) and (5).

Lemma 3

The definitions (3) and (5) with a pointwise linear operator \(\mathcal {A}: \mathbb {R}^{2 \times 2} \rightarrow \mathbb {R}^m\) are equivalent, i.e. for all \(u \in BV(\varOmega )\) we have

with \(\mathcal{B}_1^*\) given by (7).

Proof

The proof follows the line of argument in [10] (see also [12]) and is based on a Fenchel duality argument for the formulation, which we already sketched above. To this end, let \(R_P\) denote the primal formulation (3) and rewrite the dual formulation \(R_D\) given in (5) as

where we use the spaces \(X = C_0^1(\varOmega ,\mathbb {R}^2) \times C_0^2(\varOmega ,\mathbb {R}^m)\), \(Y = C_0^1(\varOmega ,\mathbb {R}^2)\), the linear operator \(\Lambda : X \rightarrow Y\), \(\Lambda (v_1,v_2) = -v_1 + {{\mathrm{{div}}}}(\mathcal{A}^* v_2)\), and the indicator functions

The equivalence of the supremal formulation on these spaces follows from the density of \(C_c^\infty (\varOmega )\) in \(C_0^k(\varOmega )\) for any k. Using the convex functionals \(G: Y \rightarrow \mathbb {R}\cup \{+\infty \}\) as the indicator function of the set \(\{0\}\) and \(F: X \rightarrow \mathbb {R}\cup \{+\infty \}\) given by

we can further write

In view of [10, p. 12] it is straightforward to verify that

where \(\text {dom}(F) = \{ x \in X : F(x) < \infty \}\) denotes the effective domain, and hence together with the convexity and lower semicontinuity the conditions for the Fenchel duality theorem [2, Corollary 2.3] are satisfied. Hence,

where the last conversion results from the definition of the Radon norm. Since \(u \in BV(\varOmega )\) the above functional only has a finite value for \(w \in \mathcal {M}(\varOmega ,\mathbb {R}^2).\) Hence the infimum in the larger space \(Y^*\) equals the infimum in \(\mathcal {M}(\varOmega ,\mathbb {R}^2).\) This yields the assertion. \(\square \)

Based on the dual formulation (5), we can also understand the subdifferential of the absolutely one-homogeneous functional R. We see that \(p \in \partial R(u)\) if \(p ={{\mathrm{{div}}}}^2( \mathcal{A}^*\psi )\) for \(\psi \in {\mathcal{B}_1^* }\) and

In general, subgradients will be elements of a larger set, namely a closure of \(\mathcal{B}_1^* \) in \(L^\infty (\varOmega )\) with the restriction that \({{\mathrm{{div}}}}( \mathcal{A}^*\psi )\) can be integrated with respect to the measure \(\nabla u\).

The domain of R and the topological properties introduced are unclear at first glance and depend on the specific choice of \(\mathcal{A}\). However, we can give a general result bounding R by the total variation.

Lemma 4

The functional R is a seminorm on \(BV(\varOmega )\) and satisfies \( R(u) \le TV (u) = \vert u \vert _{BV}\) for all \(u \in BV(\varOmega )\).

Proof

The fact that R is a seminorm is apparent from the dual definition (5). From the primal definition (3) we see that the infimum over all w is less than or equal to the value at \(w=0\), which is just \(|u|_{BV}\). \(\square \)

4.2 Combination of Natural Differential Operators

As an alternative to the above form, we can provide a matrix formulation when writing the gradient as a vector

Then the operator \(\mathcal{A}\) is represented by an \(m \times 4\) matrix \(\varvec{A}\), and we have \(\mathcal{A} \nabla w = \varvec{A} \nabla _V w\). For the four scalar operators used above, we obtain

Using the vector of natural differential operators

we can also write

We mention that due to the fact that we use the Frobenius norm, which has the property \(\Vert z \Vert = \Vert \mathbf{Q} z \Vert \) for every orthogonal matrix \(\mathbf{Q}\), two regularisations represented by matrices \(\mathbf{A}_1\) and \(\mathbf{A}_2\) will be equivalent if there exists an orthogonal matrix \(\mathbf{Q}\) with \(\mathbf{A}_2 = \mathbf{Q} \mathbf{A}_1\).
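The orthogonal-equivalence remark can be checked numerically in a few lines. The following sketch assumes the ordering \(\nabla _V w = (\partial _1 w_1, \partial _2 w_1, \partial _1 w_2, \partial _2 w_2)^{\top }\) and the sign conventions curl \(= \partial _1 w_2 - \partial _2 w_1\), div \(= \partial _1 w_1 + \partial _2 w_2\), sh\(_1 = \partial _2 w_2 - \partial _1 w_1\), sh\(_2 = \partial _1 w_2 + \partial _2 w_1\). Both the conventions and the names `N_OPS`, `gram`, `apply` and `norm` are illustrative assumptions, not notation fixed by the text.

```python
import math

# Rows: curl, div, sh1, sh2 acting on the vectorised gradient
# (d1 w1, d2 w1, d1 w2, d2 w2). Ordering and signs are assumptions.
N_OPS = [
    [0, -1, 1, 0],   # curl = d1 w2 - d2 w1
    [1, 0, 0, 1],    # div  = d1 w1 + d2 w2
    [-1, 0, 0, 1],   # sh1  = d2 w2 - d1 w1
    [0, 1, 1, 0],    # sh2  = d1 w2 + d2 w1
]


def gram(M):
    """Return the Gram matrix M M^T as a nested list."""
    return [[sum(a * b for a, b in zip(r1, r2)) for r2 in M] for r1 in M]


def apply(M, z):
    """Matrix-vector product M z."""
    return [sum(a * b for a, b in zip(row, z)) for row in M]


def norm(z):
    """Euclidean norm of a vector."""
    return math.sqrt(sum(c * c for c in z))


# The rows are mutually orthogonal with squared length 2,
# hence Q = N_OPS / sqrt(2) is an orthogonal matrix.
Q = [[c / math.sqrt(2) for c in row] for row in N_OPS]

z = [0.3, -1.2, 0.7, 2.0]            # arbitrary pointwise gradient values
print(gram(N_OPS))                    # two times the identity matrix
print(norm(z), norm(apply(Q, z)))     # both norms agree up to rounding
```

Under these assumptions, multiplying the vectorised gradient by `Q` leaves its pointwise norm unchanged, which is the mechanism behind the equivalence of \(\mathbf{A}_1\) and \(\mathbf{A}_2 = \mathbf{Q} \mathbf{A}_1\).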

The question we would like to investigate in detail in the following paragraphs is whether enforcement of joint sparsity of some or all of the four natural differential operators (curl)–(sh2) applied to the vector field w can improve the reconstruction results. Moreover, we shall characterise a variety of models in the literature as special cases. This is not surprising, as we can always choose a suitable matrix \(\varvec{A}\) for any of those, but interestingly they can all be described by a diagonal matrix

We will thus describe the regularisation functional solely in terms of the vector

as

with

where

and

Based on this regularisation, we will study the model problem

for \(f\in L^2(\varOmega )\), which of course can be extended directly to more general inverse problems and data terms. Note that \(\alpha \) is a regularisation parameter in the classical sense, while the parameters \(\beta _i\) are rather characterising the specific form of the regularisation functionals.

In Sect. 3, we have presented reconstruction results for the denoising problem (gSVF) and the effect of regularisation incorporating one of the four scalar-valued vector operations (curl)–(sh2), i.e. for only one of the weights \(\beta _i\) being nonzero. In the following, we demonstrate how our model behaves when two, three or all \(\beta _i\) are nonzero.

Fig. 3 Reconstruction of a piecewise affine test image corrupted by Gaussian noise with zero mean and variance \(\sigma ^2 = 0.05\) using (9) for different parameter combinations

In Fig. 3, we are given a piecewise affine test image and add Gaussian noise with zero mean and variance \(\sigma ^2 = 0.05\). For the task of denoising, we solve (9) and vary the weights \(\beta _i\). We optimise the parameters such that the structural similarity (SSIM) index is maximal. We can observe that setting two weights in our novel regulariser to zero still yields some artefacts in the reconstruction, especially in the case of enforcing a sparse curl in combination with one of the two components of the shear. As soon as we only set one of the four weights to zero, we obtain very good results, as can be seen in the bottom row of Fig. 3. On the top right, the reconstruction with all weights being nonzero is presented, which yields a comparably good result.

In the following, we demonstrate that we can recover special cases of already existing TV-type reconstruction models by modifying the weights in our regulariser (8). In particular, we show that we are able to retrieve (TV), (CEP), (TGV) and (ICTV). However, before we discuss the relation of our proposed model to these existing regularisers in detail and even demonstrate that we can interpolate between the latter two by adapting one single weight, we shall elaborate on nullspaces and the existence of BV solutions for our unified model given in (9).

4.3 Nullspaces and Existence of BV Solutions

Our numerical results indicate that we obtain a real denoising resembling at least the regularity of a BV solution if at least three of the \(\beta _i\) are not vanishing. It is thus interesting to further study the nullspace \(\mathcal{N}(R_{\varvec{\beta }})\) of the regularisation functional \(R_{\varvec{\beta }}\) in such cases and check whether it is finite-dimensional. Subsequently, a similar argument to [4] can be made showing that the regularisation functional is equivalent to the norm in \(BV(\varOmega )\) on a subspace that does not include the nullspace. If the nullspace components are sufficiently regular, Lemma 1 yields that minimisers of a variational model for denoising are indeed in \(BV(\varOmega )\). In the following, we thus aim at characterising the set of all \(u \in L^2(\varOmega )\) for which \(R_{\varvec{\beta }}(u) = 0\) holds. Note that we provide further details on the derivation of the subsequent results in Appendix A. First of all, we directly see that \(\beta _1\) plays a special role, since \({{\mathrm{\text {curl}}}}(\nabla u) = 0\). Thus, the case \(\beta _1=0\) will yield the same nullspace as \(\beta _1 > 0\). Hence, we only distinguish cases based on the other parameters:

- \(\beta _i > 0\), \(i=2,3,4\). In this case, we have \(\nabla u = w\) and \(\nabla w =0\), so the nullspace simply consists of affine linear functions (see also [4]).

- \(\beta _2=0\), \(\beta _3,\beta _4 > 0\). In this case, we can argue similarly to Sect. 3 and see that \(u=U(x_1+x_2) + V(x_1-x_2)=U_1(x_1)+U_2(x_2)\). Computation of second derivatives with respect to \(x_1\) and \(x_2\), respectively, yields the identity \(U''(x_1+x_2) + V''(x_1-x_2)=U_1''(x_1)=U_2''(x_2).\) Thus, \(U_1''\) and \(U_2''\) are equal and constant. Integrating those with the constraint that \(U_1\) and \(U_2\) can only depend on one variable yields that the nullspace can only be a linear combination of \(x_1^2+x_2^2\), \(x_1\), \(x_2\), 1. One easily checks that these functions are indeed elements of the nullspace.

- \(\beta _3=0\), \(\beta _2,\beta _4 > 0\). Now we see that u is harmonic and on the other hand \(u=U_1(x_1)+U_2(x_2)\), which yields \(U_1''(x_1) + U_2''(x_2) = 0\). The latter can only be true if \(U_1''\) and \(U_2''\) are constant, with constants summing to zero. Integrating those shows that the nullspace consists exactly of linear combinations of \(x_1^2-x_2^2\), \(x_1\), \(x_2\), 1.

- \(\beta _4=0\), \(\beta _2,\beta _3 > 0\). A similar argument as above now yields \(u=U(x_1+x_2) + V(x_1-x_2)\) and \(U''(x_1+x_2)+V''(x_1-x_2)=0\). Again we obtain that \(U''\) and \(V''\) are constant; after integration we see that the nullspace consists exactly of linear combinations of \(x_1 x_2\), \(x_1\), \(x_2\), 1.
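The quadratic nullspace elements listed above can be verified with a short script. The sketch below is only a sanity check under assumptions: it takes \(w = \nabla u\), uses the sign conventions sh\(_1 = \partial _2 w_2 - \partial _1 w_1\) and sh\(_2 = \partial _1 w_2 + \partial _2 w_1\), and evaluates second derivatives by central differences with unit step, which are exact for polynomials of degree at most two. The helper names `hessian` and `ops_of_gradient` are hypothetical.

```python
def hessian(u, x=3, y=5):
    """Second derivatives of u at (x, y) by central differences with step 1.
    These stencils are exact for polynomials of degree at most two."""
    uxx = u(x + 1, y) - 2 * u(x, y) + u(x - 1, y)
    uyy = u(x, y + 1) - 2 * u(x, y) + u(x, y - 1)
    uxy = (u(x + 1, y + 1) - u(x + 1, y - 1)
           - u(x - 1, y + 1) + u(x - 1, y - 1)) / 4
    return uxx, uxy, uyy


def ops_of_gradient(u):
    """div, sh1 and sh2 of w = grad u (the curl of a gradient vanishes)."""
    uxx, uxy, uyy = hessian(u)
    return {"div": uxx + uyy, "sh1": uyy - uxx, "sh2": 2 * uxy}


candidates = {
    "x1^2 + x2^2": lambda x, y: x * x + y * y,       # case beta_2 = 0
    "x1^2 - x2^2": lambda x, y: x * x - y * y,       # case beta_3 = 0
    "x1 * x2":     lambda x, y: x * y,               # case beta_4 = 0
    "affine":      lambda x, y: 2 * x - 3 * y + 1,   # all beta_i > 0
}

for name, u in candidates.items():
    print(name, ops_of_gradient(u))
```

In each case only the operator whose weight vanishes is allowed to be nonzero: the divergence for \(x_1^2+x_2^2\), the first shear component for \(x_1^2-x_2^2\) and the second shear component for \(x_1 x_2\), while affine functions annihilate all three.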

This leads us to the following result characterising further the topological properties of the regularisation functionals, based on a Sobolev–Korn-type inequality, which we state first.

Lemma 5

Let \(\beta _i \ge 0\) for \(i=1,\ldots ,4\) and assume that at most one of the parameters \(\beta _i\) vanishes. Then the Korn-type inequality

holds, where \(P_{\varvec{B}}\) is the projection onto the finite-dimensional nullspace \(\mathcal{N}(\varvec{B} \nabla _N w)\) of the differential operator \(\varvec{B} \nabla _N w\) and \(C_{\varvec{B}}\) is a constant depending on \(\varvec{B}\) only.

Proof

We will use the Korn inequality in measure spaces (cf. [9, Corollary 4.20]), stating that for vector fields of bounded deformation the inequality

holds, where \(\mathcal{E}_S(w)\) is the symmetric gradient and P a projector onto its nullspace. We can equivalently write the inequality as

where \(\mathcal {E}(w)\) is the vectorised symmetric gradient

Since on a bounded domain the total variation of a measure is a weaker norm than the \(L^2\) norm of its Lebesgue density, we find

In order to verify the Korn-type inequality it is crucial to have three coefficients \(\beta _i\) different from zero.

In this case, an elementary computation shows that there exists an invertible matrix \(\tilde{\varvec{B}} \in \mathbb {R}^{2\times 2}\) and an orthogonal matrix \(\varvec{Q} \in \mathbb {R}^{4 \times 4}\) such that

Thus, the Korn inequality applied to \(\tilde{w} = \tilde{\varvec{B}} w\) implies

Since \(P=\tilde{\varvec{B}} P_N (\tilde{\varvec{B}})^{-1}\) is a projector on the nullspace of \(\mathcal{E}\), we obtain

If all \(\beta _i\) are positive, we can use an analogous argument with \(\varvec{B} \nabla _N w = \nabla \tilde{\varvec{B}} w\) and the Poincaré-Wirtinger inequality in spaces of bounded variation [7]. \(\square \)

Lemma 6

Let \(\beta _i \ge 0\) for \(i=1,\ldots ,4\). Then for \(R_{\varvec{\beta }}\) defined in (8) the estimate \( R_{\varvec{\beta }}(u) \le |u|_{BV} \) holds for all \( u \in BV(\varOmega )\). Moreover, assume that at most one of the parameters \(\beta _i\) vanishes and let \(\mathcal{U} \subset BV(\varOmega )\) be the subspace of all BV functions orthogonal to \(\mathcal{N}(R_{\varvec{\beta }})\) in the \(L^2\) scalar product. Then there exists a constant \(c \in (0,1)\) depending only on \({\varvec{\beta }}\) and \(\varOmega \) such that \( R_{\varvec{\beta }}(u) \ge c |u|_{BV} \) for all \(u \in \mathcal{U} \).

Proof

The first estimate is a special case of Lemma 4. In order to verify the second inequality we proceed as in [10]. The key idea is to use the Korn-type inequality defined in Lemma 5. Given (10), we have

Thus, taking the infimum over all w yields

where the last equality results from the definition of the projection \(P_{\varvec{B}}\).

It is then easy to see that for \(u \in \mathcal{U}\) the optimal value is \(\tilde{w}=0\). This implies the desired estimate. \(\square \)

Remark 1

In the above analysis of the nullspaces, we figured out that due to our choice of the first term \(\Vert \nabla u - w \Vert _{\mathcal {M}(\varOmega ,\mathbb {R}^2)}\) of the regulariser \(R_{\varvec{\beta }}\), penalisation of the curl is irrelevant for the characterisation of the nullspaces of \(R_{\varvec{\beta }}\). Accordingly, the assertion of the above lemma can easily be extended to the cases \(\beta _1 = \beta _2 = 0\) and \(\beta _3,\beta _4 > 0\), \(\beta _1 = \beta _3 = 0\) and \(\beta _2,\beta _4 > 0\) as well as \(\beta _1 = \beta _4 = 0\) and \(\beta _2,\beta _3 > 0\), where the line of argument follows exactly the proof given above. For all remaining cases, the above proof fails however, since in these cases the resulting nullspaces are not finite-dimensional.

Theorem 1

Let \(f\in L^2(\varOmega )\) and \(\alpha > 0\). Moreover, let \(\beta _i \ge 0\) for \(i=1,\ldots ,4\) and let at most one of the parameters \(\beta _1,\ldots ,\beta _4\) vanish. Then there exists a unique solution \(\hat{u} \in BV(\varOmega )\) of (9).

Proof

We decompose \(u=u_0+(u-u_0)\) and \(f=f_0 +(f-f_0)\), where \(u_0\) respectively \(f_0\) are the \(L^2\) projections on the nullspace of \(R_{\varvec{\beta }}\). Then

Since \(f_0 \in BV(\varOmega )\), it is easy to see that the optimal solution is given by \(u=f_0 + v\), where v is a minimiser of

according to Lemma 1.

Since \(R_{\varvec{\beta }}\) is coercive on \(\mathcal{U}\) and the functionals are lower semicontinuous in the weak-star topology on bounded sets, we conclude the existence of a minimiser by standard arguments. Uniqueness follows from strict convexity of the first term and convexity of \(R_{\varvec{\beta }}\). \(\square \)

4.4 Special Cases

In the following, we discuss several special cases of second-order functionals in the literature, which arise either by a special choice of the vector \({\varvec{\beta }}\) or by letting elements in \({\varvec{\beta }}\), in particular \(\beta _1\), tend to infinity. For the sake of readability, we will in all cases consider the models with additional parameters equal to one; the case of other values follows by simple scaling arguments. Throughout this section, for simplicity we denote by \(\mathcal {E}(w)\) and \(\nabla (w)\) the respective vectorised versions, i.e.

and

4.4.1 TGV

The second-order TGV model (TGV) in a notation corresponding to our approach is given by

with \(\mathcal{E}(w)\) being the symmetric gradient, encoded via the matrix

Now let \(\mathbf{B}= \mathrm{diag}(0,\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}})\) and

We see that \( \mathbf{A}_{\text {TGV}} = \mathbf{Q} \mathbf{A}_1\) with the orthogonal matrix

Hence, the TGV functional can be considered as a special case of (8) with \({\varvec{\beta }}=(0,\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}})\).

4.4.2 TGV with full gradient matrix

A variant of the second-order TGV model is given by using the full gradient instead of the symmetric gradient, i.e.

This can simply be encoded via \(\mathbf{A}_{\text {TGVF}} = \mathbf{I}\) being the unit matrix in \(\mathbb {R}^{4\times 4}\). Choosing \(\mathbf{B} = \frac{1}{\sqrt{2}} \mathbf{I}\) we immediately see the equivalence, since

is already an orthogonal matrix and we obtain \(\mathbf{A}_{\text {TGVF}} = \mathbf{A}_1^{\top } \mathbf{A}_1\). Hence, the TGV functional with full gradient matrix can be considered as a special case of (8) with \({\varvec{\beta }}=(\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}})\).
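The two TGV cases can be made explicit by a pointwise computation. The following worked identity is a sketch under assumed conventions, namely \(\text{curl}(w) = \partial _1 w_2 - \partial _2 w_1\), \(\text{div}(w) = \partial _1 w_1 + \partial _2 w_2\), \(\text{sh}_1(w) = \partial _2 w_2 - \partial _1 w_1\) and \(\text{sh}_2(w) = \partial _1 w_2 + \partial _2 w_1\), with \(|\cdot |\) denoting the pointwise Frobenius norm:

```latex
\[
\begin{aligned}
|\mathcal{E}(w)|^2
  &= (\partial_1 w_1)^2 + (\partial_2 w_2)^2
     + \tfrac{1}{2}\,(\partial_2 w_1 + \partial_1 w_2)^2 \\
  &= \tfrac{1}{2}\left( \operatorname{div}(w)^2 + \operatorname{sh}_1(w)^2
     + \operatorname{sh}_2(w)^2 \right), \\[4pt]
|\nabla w|^2
  &= (\partial_1 w_1)^2 + (\partial_2 w_1)^2
     + (\partial_1 w_2)^2 + (\partial_2 w_2)^2 \\
  &= |\mathcal{E}(w)|^2 + \tfrac{1}{2}\,\operatorname{curl}(w)^2 .
\end{aligned}
\]
```

Read this way, weighting divergence and both shear components by \(\frac{1}{\sqrt{2}}\) reproduces the symmetric gradient, and additionally weighting the curl by \(\frac{1}{\sqrt{2}}\) reproduces the full gradient.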

4.4.3 ICTV

Let us now examine the relation to (ICTV), which rewritten in our notation becomes

In this case, we do not need to distinguish between the gradient of w and the symmetric gradient, since they are equal due to the vanishing curl. Note that we have replaced the assumption of w being a gradient by the equivalent assumption of vanishing curl, which corresponds better to our approach and indicates that we will need to consider the limit \(\beta _1 \rightarrow \infty \). Not surprisingly we will choose \(\beta _2=\beta _3=\beta _4=\frac{1}{2} \) as in the TGV case. Thus, we will study the limit of \(\beta _1 \rightarrow \infty \), using the notion of \(\Gamma \)-convergence ([8, 19]):

Theorem 2

Let \(\beta _2=\beta _3=\beta _4=\frac{1}{2}\). We define \({\varvec{\beta }^t}:=(t,\frac{1}{\sqrt{2}}, \frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}})\), \(t > 0\). Then \(R_{{\varvec{\beta }}^t}\) \(\Gamma \)-converges to ICTV strongly in \(L^p(\varOmega )\) for any \(p < 2\) as \(t\rightarrow \infty \), where we extend both functionals by infinity on \(L^p(\varOmega ) \setminus BV(\varOmega )\).

Proof

Let \(t > 0\), \(u_t \in BV(\varOmega )\) and let \(w_t \in \mathcal {M}(\varOmega ,\mathbb {R}^2)\) be a minimiser of

with \(\mathbf{B}^t\) being the diagonal matrix with diagonal \({\varvec{\beta }}^t\). First, we consider the lower bound inequality. To this end, we assume \(u_t \rightarrow u\) strongly in \(L^p(\varOmega )\). Then we either have \(\lim \inf _t R_{{\varvec{\beta }}^t}(u_t) = \infty \), which makes the lower bound inequality trivial, or \(R_{{\varvec{\beta }}^t}(u_t) \) bounded. If \(\lim \inf R_{{\varvec{\beta }}^t}(u_t)\) is finite, we immediately see from the norm equivalence and lower semicontinuity of the total variation that the limit u has finite norm in \(BV(\varOmega )\). Hence, for \(u \in L^p(\varOmega ) \setminus BV(\varOmega )\) the lower bound inequality holds. Thus, let us consider the remaining case of the limit inferior being finite and \(u \in BV(\varOmega )\). Then we see that

which implies that \( {{\mathrm{\text {curl}}}}(w_t)\) strongly converges to zero in \(\mathcal{M}(\varOmega )\). Since

for all w, we have

Due to the lower semicontinuity of the last term we see

where w is a weak-star limit of an appropriate subsequence of \(w_t\). The latter exists due to the boundedness of \(w_t\) and satisfies \({{\mathrm{\text {curl}}}}(w) = 0\). Since the infimum over all curl-free w is at most as large, we obtain the lower bound inequality

Next, we consider the upper bound inequality. For \(u \in L^p(\varOmega ) \setminus BV(\varOmega )\) the upper bound inequality follows trivially with the sequence \(u_t = u\) for all t. The upper bound inequality for \(u \in BV(\varOmega )\) is also easy to verify since for such u we have \( R_{{\varvec{\beta }}^t}(u) \le \text {ICTV}(u) \) due to the fact that we obtain exactly ICTV(u) when we restrict the infimum in the definition of \( R_{{\varvec{\beta }}^t}(u)\) to the subset of curl-free w. \(\square \)

An interesting observation is that we interpolate the two TGV models as well as the ICTV model solely by the parameter \(\beta _1\), from the TGV model with the symmetric gradient (\(\beta _1=0\)) over the one with the full gradient \((\beta _1=\frac{1}{2})\) to the ICTV model in the limit \(\beta _1 \rightarrow \infty \).

Fig. 4 Reconstruction of the parrot test image corrupted by Gaussian noise with zero mean and variance \(\sigma ^2 = 0.05\) using (9), demonstrating the ability to interpolate between (TGV) and (ICTV). Top three rows: denoised images u, error image showing the difference to the ground truth, difference image to the interpolated result. Lower four rows: different differential operators applied to vector field w

4.4.4 Interpolation between TGV and ICTV

In this paragraph, we illustrate the previously described ability of our approach to interpolate between the ICTV and TGV model by means of a numerical test case. To this end, we corrupted an image section of the parrot test image from the Kodak image database by Gaussian noise with zero mean and a variance of 0.05. Next, we applied the proposed denoising model (9) to the noisy image data, where we always chose \(\alpha = \frac{1}{4}\) and \(\beta _2 = \beta _3 = \beta _4 =\frac{1}{2}\) and varied \(\beta _1\) as follows: In order to obtain a second-order “TGV-type” reconstruction, we set \(\beta _1\) equal to zero. For the “ICTV-type” model recovery that we obtain if \(\beta _1\) tends to infinity, we chose \(\beta _1 = 10^{10}\). The corresponding denoising results are depicted in the left and right column of the first row of Fig. 4. Additionally, we calculated the respective interpolated denoising result for \(\beta _1 \in \lbrace 10^{-4}, 10^{-3},10^{-2},10^{-1}, 0.25, 1, 2, 4, 25, 100, 2500, 10^{4}, 10^{6}\rbrace \), where \(\beta _1=25\) yielded the best result with respect to the quality measure SSIM. The corresponding denoised image is shown in the middle of the top row of Fig. 4. It is a well-known fact that the TGV and the ICTV model yield results of comparable quality and thus it is not surprising that all three denoising results as well as the error images in the second row of Fig. 4 look very similar. This visual inspection is further confirmed by the quality measure SSIM, since the differences are only in the range of \(10^{-3}\). To point out that there are indeed slight differences between these denoising results, we also provide difference images between the result for \(\beta _1=25\) and the TGV respectively the ICTV result in the third row of Fig. 4. While in the first rows of Fig. 4 we can hardly recognise any visual difference between the results of the three methods under consideration, the lower four rows of Fig. 4 reveal that in some sense the interpolated model is indeed in between the TGV and the ICTV model. In these rows, we plot the four different operators (curl)–(sh2) applied to the vector field w corresponding to the “TGV-type”, “interpolated” and “ICTV-type” reconstruction in the top row, respectively. Looking at these results, we can observe that the plots of the divergence and the two components of the shear apparently are rather similar and exhibit the same structures. On the other hand, the plot of the curl of the ICTV-type model seems to be very close to zero in the whole image domain, while in the curl of the interpolated model slight structures become visible, which are even more evident in the respective plot of the TGV model, exactly as expected.

4.4.5 CEP

The CEP model (CEP) can be rewritten in our notation as

In this case, the apparent choice is \({\varvec{\beta }}^t:=(t,1,0,0)\), again considering the limit \(t \rightarrow \infty \) to recover the CEP functional as a limit of \(R_{{\varvec{\beta }}^t}\). However, here we are in a situation where more than one of the parameters \(\beta _i\) vanishes, thus we cannot guarantee the existence of a minimiser for such a model and consequently we cannot perform a rigorous analysis of the limit in \(BV(\varOmega )\). In the denoising case (9) one could still perform a convergence analysis for the functional including the data term with respect to weak \(L^2\) convergence, which is however not in the scope of our approach.

From the issues in the analysis and our previous discussion of artefacts when only using \({{\mathrm{{div}}}}\) and \({{\mathrm{\text {curl}}}}\) in the regularisation functional it is also to be expected that the CEP model produces some kind of point artefacts. Indeed those can be seen by close inspection of the results in [18], in particular Figure 4.

4.4.6 TV and Variants

We finally verify the relation of our model to the original total variation, which is of course to be expected as the parameters \(\beta _i\) converge to infinity. This is made precise by the following theorem, from which we see the \(\Gamma \)-convergence except on the finite-dimensional nullspace. The proof is analogous to Theorem 2 and omitted here.

Theorem 3

Let \(\beta _i \ge 0\) for \(i=1,\ldots ,4\) and let at most one of them vanish. Set \(\mathbf{B}= \mathrm{diag}(\sqrt{\beta _1},\ldots ,\sqrt{\beta _4})\) and \({\varvec{\beta }}^t = t {\varvec{\beta }}\), \(\mathbf{B}^t = t \mathbf{B}\). Then \(R_{{\varvec{\beta }}^t}\) \(\Gamma \)-converges to \(TV _{B }\) strongly in \(L^p(\varOmega )\) for any \(p < 2\) as \(t\rightarrow \infty \), where

and we extend both functionals by infinity on \(L^p(\varOmega ) \setminus BV(\varOmega )\).

4.5 Rotational Invariance

At the end of this section, we show that by imposing a simple condition on the choice of the weighting parameters \(\beta _1,\dots ,\beta _4\) we can control the rotational invariance of the regulariser \(R_{\varvec{\beta }}(u)\).

Theorem 4

Let \(\beta _i \ge 0\) for \(i = 1,\dots ,4\) and let \(\beta _3 = \beta _4\). Then the regulariser \(R_{\varvec{\beta }}(u)\) is rotationally invariant, i.e. for an orthonormal rotation matrix \(\varvec{Q} \in \mathbb {R}^{2\times 2}\) with

and for \(u \in BV(\varOmega )\) it holds that \(\check{u} \in BV(\varOmega )\), where \(\check{u}=u\circ \varvec{Q}\), i.e. \(\check{u}(x) = u(\varvec{Q}x)\) for a.e. \(x \in \varOmega \), and

Proof

See Appendix B. \(\square \)
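The role of the condition \(\beta _3 = \beta _4\) can be illustrated pointwise, independently of the proof in Appendix B. The sketch below assumes that for \(\check{u} = u \circ \varvec{Q}\) the associated vector field has Jacobian \(\varvec{Q}^{\top } J \varvec{Q}\), where J is the Jacobian of w, and that the pointwise penalty is the Euclidean norm of the \(\beta \)-weighted natural operators. The sign conventions for the operators and the names `natural_ops`, `rotate` and `weighted_norm` are assumptions made for illustration.

```python
import math


def matmul(A, B):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def natural_ops(J):
    """(curl, div, sh1, sh2) of a Jacobian J with J[k][l] = d_l w_k."""
    (a, b), (c, d) = J
    return (c - b, a + d, d - a, b + c)


def rotate(J, theta):
    """Jacobian after a rotation of the coordinates by theta: Q^T J Q."""
    cs, sn = math.cos(theta), math.sin(theta)
    Q = [[cs, -sn], [sn, cs]]
    Qt = [[cs, sn], [-sn, cs]]
    return matmul(matmul(Qt, J), Q)


def weighted_norm(J, beta):
    """Euclidean norm of the beta-weighted natural operators (assumed form)."""
    return math.sqrt(sum((b * op) ** 2 for b, op in zip(beta, natural_ops(J))))


J = [[0.3, -1.2], [0.7, 2.0]]
equal = (0.5, 1.0, 2.0, 2.0)           # beta_3 == beta_4
print(weighted_norm(J, equal), weighted_norm(rotate(J, 0.4), equal))

S = [[-1.0, 0.0], [0.0, 1.0]]          # pure sh1 deformation
unequal = (0.0, 0.0, 1.0, 0.0)         # beta_3 != beta_4
print(weighted_norm(S, unequal), weighted_norm(rotate(S, math.pi / 4), unequal))
```

Curl and divergence are unchanged by the rotation, whereas the pair (sh\(_1\), sh\(_2\)) is itself rotated, so only its Euclidean length is preserved. With unequal shear weights the second pair of printed values differs, since a rotation by \(\pi /4\) moves the whole deformation from the first to the second shear component.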

5 Discretisation

We devote a separate section of this paper to the discretisation of our novel approach and the contained natural vector operators as a basis for any numerical implementation. This seemed necessary, since we aim at complying not only with the standard requirement that it should hold that

but also with natural conservation laws such as

imposing additional constraints upon the choice of discretisation. We will use the finite differences-based discretisation proposed in the context of the congeneric second-order TGV model [11] as a starting point for our considerations in this section. However, as we shall see, the approach taken there fails to fulfil the aforementioned conservation laws (conservLaws). As a consequence, we suggest a similar, yet in several places adjusted and thus different discretisation, which all numerical results of our unified model (VOS) presented in this paper are based upon. We will compare solutions of the TGV model (TGV) obtained by means of the discretisation we suggest with images resulting from the discretisation proposed in [11]. Eventually, we will comment on chances and challenges of other discretisation strategies using staggered grids or finite element methods in the context of our unified model.

Abusing notation, we denote the involved functions and operators in the same way as in the continuous setting before, but from now on, we are thereby referring to their discretised versions. For the sake of simplicity, we assume the normalised images to be square, i.e. \(f,u \in [0,1]^{N \times N}\). Then we discretise the image domain by a two-dimensional regular Cartesian grid of size \(N \times N\), i.e. \(\varOmega = \lbrace (ih,jh): 1 \le i,j \le N\rbrace \), where h denotes the grid spacing and (i, j) denote the discrete pixels at location (ih, jh) in the image domain. Similarly as in [11] and as it is fairly customary in image processing, we use forward differences with Neumann boundary conditions to discretise the gradient \((\nabla )_{i,j}: \mathbb {R} \rightarrow \mathbb {R}^2\) of a scalar-valued function u, i.e.

where

and where we extend the definition by zero if \(i=N\) respectively \(j=N\). To avoid asymmetries and to preserve the adjoint structure (adjG), we discretise the first-order divergence operator \((\text {div})_{i,j}:\mathbb {R}^2 \rightarrow \mathbb {R}\) of a two-dimensional vector field \(w_{i,j} = (w_{i,j}^1,w_{i,j}^2)^{\mathrm {T}}\) using backward finite differences with homogeneous Dirichlet boundary conditions, i.e.

where

In [11] the authors moreover proposed to recursively apply forward and backward differences to the divergence operator of higher order such that the outermost divergence operator is based on backward differences with homogeneous Dirichlet boundary conditions. For the second-order divergence operator \((\text {div}^2)_{i,j}: \mathbb {R}^{2 \times 2} \rightarrow \mathbb {R}\) of a symmetric \(2 \times 2\)-matrix \((g)_{i,j}\) at every pixel location (i, j) (cf. (div2)) this means:

Further following the reasoning in [11], the discrete second-order derivative and discrete second-order divergence should also satisfy an adjointness condition. Consequently, we calculate the adjoint of \(\text {div}^2\) in order to obtain the Hessian matrix of a scalar-valued function u. Symmetrisation of the Hessian then yields the following discretisation of the symmetrised second-order derivative \((\mathcal {E}^2)_{i,j} : \mathbb {R} \rightarrow \mathbb {R}^{2 \times 2}\):

where for the first equality we used that since \((\nabla u)_{i,j}\) is a (1,0)-tensor, or in other words a vector, the symmetrised gradient \(\mathcal {E}\) of u is just equal to the gradient. To stay consistent, the symmetrised derivative \((\mathcal {E})_{i,j}:\mathbb {R}^2 \rightarrow \mathbb {R}^{2 \times 2}\) of a two-dimensional vector field \(w_{i,j} = (w_{i,j}^1,w_{i,j}^2)^{\mathrm {T}}\) should thus be discretised in the following way:

where \((\delta _{x-} w^2)_{i,j}\) and \((\delta _{y-} w^1)_{i,j}\) are defined analogously to () with \(w^1\) and \(w^2\) being interchanged. We have thus recalled the choice of discretisation of the second-order divergence and hence of the symmetrised derivative as suggested in [11].
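The adjoint relation between the forward-difference gradient and the backward-difference divergence can be tested directly on a small grid. The sketch below is not the paper's code: it sets \(h = 1\) and handles the boundary rows of the backward differences in the way that is common for this pairing (first row \(w_{1,j}\), last row \(-w_{N-1,j}\)), which is an assumption about the formulas referred to above. All function names are hypothetical.

```python
import random


def dx_plus(u):
    """Forward difference in the first index, zero in the last row (Neumann)."""
    n = len(u)
    return [[u[i + 1][j] - u[i][j] if i < n - 1 else 0 for j in range(n)]
            for i in range(n)]


def dy_plus(u):
    """Forward difference in the second index, zero in the last column."""
    n = len(u)
    return [[u[i][j + 1] - u[i][j] if j < n - 1 else 0 for j in range(n)]
            for i in range(n)]


def dx_minus(w):
    """Backward difference in the first index (assumed Dirichlet-type boundary)."""
    n = len(w)

    def entry(i, j):
        if i == 0:
            return w[0][j]
        if i == n - 1:
            return -w[n - 2][j]
        return w[i][j] - w[i - 1][j]

    return [[entry(i, j) for j in range(n)] for i in range(n)]


def dy_minus(w):
    """Backward difference in the second index (assumed Dirichlet-type boundary)."""
    n = len(w)

    def entry(i, j):
        if j == 0:
            return w[i][0]
        if j == n - 1:
            return -w[i][n - 2]
        return w[i][j] - w[i][j - 1]

    return [[entry(i, j) for j in range(n)] for i in range(n)]


def div(w1, w2):
    """Discrete divergence built from backward differences."""
    a, b = dx_minus(w1), dy_minus(w2)
    return [[p + q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def inner(a, b):
    """Sum of entrywise products of two grids."""
    return sum(p * q for ra, rb in zip(a, b) for p, q in zip(ra, rb))


rng = random.Random(0)
n = 6
# Integer data keep the arithmetic exact.
u, w1, w2 = ([[rng.randint(-9, 9) for _ in range(n)] for _ in range(n)]
             for _ in range(3))

lhs = inner(dx_plus(u), w1) + inner(dy_plus(u), w2)   # <grad u, w>
rhs = -inner(u, div(w1, w2))                          # -<u, div w>
print(lhs == rhs)
```

With this boundary treatment the two inner products coincide exactly, so the discrete divergence is the negative adjoint of the discrete gradient.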

With regard to Sect. 4.4, we conclude that in this setting the most natural discretisations of the curl operator \((\text {curl})_{i,j}: \mathbb {R}^2 \rightarrow \mathbb {R}\) of a two-dimensional vector field \(w_{i,j} = (w_{i,j}^1,w_{i,j}^2)^{\mathrm {T}}\) as well as of the two components of the shear \((\text {sh}_1)_{i,j}: \mathbb {R}^2 \rightarrow \mathbb {R}\) and \((\text {sh}_2)_{i,j}: \mathbb {R}^2 \rightarrow \mathbb {R}\) would all be based on backward finite differences with homogeneous Dirichlet boundary conditions, i.e.

However, this discretisation of the curl operator fails to comply with the conservation laws given in (conservLaws), since

can in general each be nonzero. To resolve this issue, we decided to instead discretise the curl operator \((\text {curl})_{i,j}: \mathbb {R}^2 \rightarrow \mathbb {R}\) of a two-dimensional vector field \(w_{i,j} = (w_{i,j}^1,w_{i,j}^2)^{\mathrm {T}}\) with forward finite differences, i.e.

In order to meet the theory derived for the continuous setting in Sect. 4.4, the discretisation of the curl operator by forward finite differences in combination with the discretisation of the divergence operator by backward finite differences requires that the first component of the shear \((\text {sh}_1)_{i,j}: \mathbb {R}^2 \rightarrow \mathbb {R}\) shall be discretised using backward finite differences while the second component \((\text {sh}_2)_{i,j}: \mathbb {R}^2 \rightarrow \mathbb {R}\) shall be discretised by means of forward finite differences, i.e.

As a side benefit of this choice of discretisation, we additionally obtain the identities

Vice versa, this approach leads to the following discretisation of the symmetrised derivative \((\mathcal {E})_{i,j}:\mathbb {R}^2 \rightarrow \mathbb {R}^{2 \times 2}\) of a vector field \(w_{i,j} = (w_{i,j}^1,w_{i,j}^2)^{\mathrm {T}}\):

that is we discretise the mixed derivatives differently than proposed in [11]. Further following the line of argument brought forward in this section, the corresponding discrete second-order divergence operator \((\text {div}^2)_{i,j}: \mathbb {R}^{2 \times 2} \rightarrow \mathbb {R}\) of a symmetric \(2 \times 2\)-matrix \((g)_{i,j}\) at every pixel location (i, j) (cf. (div2)) would be given by:

Paraphrasing this discretisation, one could say that with respect to the pure second partial derivatives, i.e. the diagonal entries of the Hessian, we stick to the idea of recursively applying forward and backward differences as proposed by Bredies et al. [11], while in regard to the mixed partial derivatives we repeatedly use forward differences. Being aware that this discretisation of the second-order divergence might seem a little less intuitive than the one proposed in [11], we nevertheless decided to adhere to the discretisation that we introduced in this section. This is because in the context of our unified model it seems crucial to find a discretisation that preserves the nullspaces of the continuous model and complies with natural conservation laws such that for example choosing \(\beta _1 > 0\) and \(\beta _2 = \beta _3 = \beta _4 = 0\) indeed returns the noisy image f as predicted by the theory for the continuous model.
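The difference between the two curl discretisations can be reproduced on a tiny grid. The following sketch assumes \(h = 1\), the sign convention curl \(= \partial _1 w_2 - \partial _2 w_1\), zero extension of forward differences in the last row or column, and the same assumed boundary rows for backward differences as is common for the divergence. The test function \(u_{i,j} = i^2 j\) and all function names are illustrative choices.

```python
def dx_plus(u):
    """Forward difference in the first index, zero in the last row."""
    n = len(u)
    return [[u[i + 1][j] - u[i][j] if i < n - 1 else 0 for j in range(n)]
            for i in range(n)]


def dy_plus(u):
    """Forward difference in the second index, zero in the last column."""
    n = len(u)
    return [[u[i][j + 1] - u[i][j] if j < n - 1 else 0 for j in range(n)]
            for i in range(n)]


def dx_minus(w):
    """Backward difference in the first index (assumed boundary rows)."""
    n = len(w)
    return [[w[0][j] if i == 0 else -w[n - 2][j] if i == n - 1
             else w[i][j] - w[i - 1][j] for j in range(n)] for i in range(n)]


def dy_minus(w):
    """Backward difference in the second index (assumed boundary columns)."""
    n = len(w)
    return [[w[i][0] if j == 0 else -w[i][n - 2] if j == n - 1
             else w[i][j] - w[i][j - 1] for j in range(n)] for i in range(n)]


def sub(a, b):
    """Entrywise difference of two grids."""
    return [[p - q for p, q in zip(ra, rb)] for ra, rb in zip(a, b)]


def curl_forward(w1, w2):
    """Curl with forward differences, matching the gradient stencil."""
    return sub(dx_plus(w2), dy_plus(w1))


def curl_backward(w1, w2):
    """Curl with backward differences, as in the first attempt."""
    return sub(dx_minus(w2), dy_minus(w1))


n = 5
u = [[i * i * j for j in range(n)] for i in range(n)]   # integer test image
g1, g2 = dx_plus(u), dy_plus(u)                         # discrete gradient

print(curl_forward(g1, g2))    # every entry is zero
print(curl_backward(g1, g2))   # contains nonzero entries
```

Because forward differences in the two directions commute, including at the zero-extended boundary, the forward-difference curl of a discrete gradient vanishes identically, whereas mixing forward and backward stencils leaves a nonzero remainder already in the interior.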

Comparison of our proposed discretisation (reconstructions in the left column) with the one in [11] (reconstructions in the middle column) and absolute difference of the two reconstructions (right column) for two different image sizes

To compare the effect of the two discretisation schemes on the reconstructed images, we corrupted a test image from the McMaster dataset [37] by Gaussian noise with mean 0 and variance 0.05 and computed denoising results with the TGV\(^2\) model (TGV) for both discretisation approaches discussed in this section so far: the one proposed by Bredies et al. [11] and our alternative satisfying the natural conservation laws. The outcome of this comparison is shown in Fig. 5. Visually, there is hardly any detectable difference between the corresponding denoised images, so both discretisation approaches provide very similar results. We therefore included the difference images in the figure to illustrate that the reconstructions based on the two discretisations are not identical but differ slightly, especially close to some of the edges and near the boundary of the image domain. With respect to the quality measure SSIM, the results for both discretisations are also in a similar range; however, the differences seem to become more significant with decreasing image resolution. This makes sense, since the proportion of pixels depicting an edge relative to the overall number of pixels increases with decreasing resolution, and this is where most of the differences due to the discretisation schemes occur. In light of the bottom row of Fig. 5, we conclude that at a relatively low image resolution our proposed discretisation apparently performs slightly worse than the one proposed by Bredies et al. [11]. We nevertheless decided to adhere to the proposed discretisation scheme, since this way we can guarantee that the conservation laws valid in the continuous setting also apply to the discretised model.

At the end of this section, we also briefly comment on alternative discretisation schemes for our unified model (VOS) that do not rely on finite differences. One option would be based on staggered grids, i.e. on two grids, often referred to as primal and dual grid, that are shifted with respect to each other by half a pixel. Following for example [25], one could define a discrete gradient operator of a scalar function mapping from the cell centres of the primal grid to the vertical and horizontal faces (normal to the sides) of the primal grid, which can be identified with the vertical and horizontal edges (tangential to the sides) of the dual grid. In this setting, one could then also define discrete versions of the natural vector field operators contained in our model: the curl would map from a vector field defined on the edges of the dual grid to a scalar function defined on the cell centres of the dual grid, which can be identified with the nodes of the primal grid. The same would apply to the second component of the shear. The divergence operator and the first component of the shear, by contrast, would map from a vector field defined on the faces of the primal grid to a scalar function defined on the cell centres of the primal grid. One would then have to face the question of how to add up the values of these different natural vector operators of a given vector field, since their codomains do not coincide. Of course, one may consider introducing averaging operators such that in the end one obtains values of the respective operators at the same locations [25], or one might try to resolve this issue by defining inner products and norms in a suitable way (cf. e.g. [26, 27, 36]). Again, however, it seems less obvious which is the best way to go.
Another alternative would be Raviart–Thomas-based finite element methods [31], where it would be quite straightforward to define the gradient and the divergence operator. Here, too, however, it would be less clear how to define the curl operator and the two components of the shear in the most natural way.
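To make the codomain mismatch on staggered grids concrete, the following sketch stores one operator output on the nodes of the primal grid and another on its cell centres, and then applies an averaging operator to bring both to the same locations. The four-node mean is merely one conceivable choice of averaging, not necessarily the one used in [25], and the arrays contain placeholder values rather than actual curl or divergence data.

```python
import numpy as np

def nodes_to_centres(v):
    """Average the four corner nodes of each cell: shape (n+1, m+1) -> (n, m)."""
    return 0.25 * (v[:-1, :-1] + v[1:, :-1] + v[:-1, 1:] + v[1:, 1:])

n = 4  # number of cells per direction (hypothetical toy size)

# Placeholder values: a node-based quantity (e.g. a curl) and a
# centre-based quantity (e.g. a divergence) live on arrays of different shape.
curl_on_nodes = np.arange((n + 1) ** 2, dtype=float).reshape(n + 1, n + 1)
div_on_centres = np.ones((n, n))

# After averaging, both quantities are available at the cell centres
# and can enter a common pointwise expression.
curl_on_centres = nodes_to_centres(curl_on_nodes)
combined = np.abs(curl_on_centres) + np.abs(div_on_centres)

print(curl_on_nodes.shape, div_on_centres.shape, combined.shape)
```

Any such averaging smooths the node-based quantity, which is one reason why the choice of operator is not obvious.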

Summing up, there seems to be no straightforward solution to the discretisation of our unified model (VOS) that meets all our demands. Despite the known demerits, we thus decided to stick to the simple finite-difference discretisation introduced earlier in this section. An extensive investigation of the most natural discretisation in the context of higher-order TV methods and the Hessian, taking into account the connection to the natural vector field operators and the related conservation laws, is beyond the scope of this paper and left to future research.

6 Results

In this section, we report on numerical denoising results obtained for two different greyscale test images: Trui (\(257 \times 257\) pixels), cf. Fig. 1, and the piecewise affine test image considered in Figs. 2 and 3 (\(256 \times 256\) pixels). We choose the denoising framework because of its straightforward implementation and simple interpretability, but would like to stress that our novel joint regulariser could be employed in any variational imaging model. First, we compare the best denoising result with respect to the structural similarity (SSIM) index obtained by using our unified model (VOS) with denoising models using TV, ICTV and second-order TGV regularisers and the same standard \(L^2\) data term. In addition, we present results of a large-scale parameter test solving our model (VOS) and examining how various parameter combinations lead to reconstructions of different quality.

In all experiments, we use the first-order primal-dual algorithm by Chambolle and Pock [17] for the convex optimisation. Moreover, we make use of both the step size adaptation and the stopping criterion presented in [24]. In order to solve our model (VOS), analogously to the implementation described in detail in [13], we define

where the image u and the vector field w are defined as above, \(y_1\) has the same size as w, \(y_2\) is a vector with four components, each of which has the same size as u, and I denotes the identity matrix. Using this notation we can now write our energy functional as a sum \(G(x) + F(Kx)\) according to [17] by defining

and apply the modified primal-dual algorithm in [24]. For the implementation of the TV, ICTV and TGV models, we employ the corresponding standard primal-dual implementations, using the discretisation proposed in the respective papers if applicable.
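The splitting \(G(x) + F(Kx)\) used by the primal-dual algorithm [17] can be illustrated on the simplest model in the comparison, TV denoising with an \(L^2\) data term. The following is a minimal sketch and not the implementation used for (VOS): it assumes that K is a forward-difference gradient with zero boundary values, scales the data term as \(\frac{1}{2}\Vert u - f\Vert ^2\), and uses fixed step sizes `tau` and `sigma` instead of the adaptation and stopping criterion of [24]. All function names are hypothetical.

```python
import numpy as np

def grad(u):
    """K: forward differences with zero boundary values (assumption)."""
    g = np.zeros((2,) + u.shape)
    g[0, :-1, :] = u[1:, :] - u[:-1, :]
    g[1, :, :-1] = u[:, 1:] - u[:, :-1]
    return g

def div(p):
    """Negative adjoint of grad, i.e. <grad(u), p> = -<u, div(p)>."""
    d = np.zeros(p.shape[1:])
    d[:-1, :] += p[0, :-1, :]
    d[1:, :] -= p[0, :-1, :]
    d[:, :-1] += p[1, :, :-1]
    d[:, 1:] -= p[1, :, :-1]
    return d

def tv_denoise(f, alpha, n_iter=200, tau=0.25, sigma=0.25):
    """Primal-dual iteration for min_u 0.5*||u - f||^2 + alpha*||grad u||_{2,1}.

    The fixed steps satisfy tau * sigma * ||K||^2 <= 0.25 * 0.25 * 8 < 1.
    """
    u = f.copy()
    u_bar = f.copy()
    p = np.zeros((2,) + f.shape)
    for _ in range(n_iter):
        # Dual step: the prox of F^* projects p pixelwise onto {|p| <= alpha}.
        p = p + sigma * grad(u_bar)
        p /= np.maximum(1.0, np.sqrt((p ** 2).sum(axis=0)) / alpha)
        # Primal step: the prox of G(u) = 0.5*||u - f||^2 has a closed form.
        u_old = u
        u = (u + tau * div(p) + tau * f) / (1.0 + tau)
        # Extrapolation with relaxation parameter theta = 1.
        u_bar = 2.0 * u - u_old
    return u

def energy(u, f, alpha):
    """Value of the TV denoising objective at u."""
    tv = np.sqrt((grad(u) ** 2).sum(axis=0)).sum()
    return 0.5 * ((u - f) ** 2).sum() + alpha * tv

# Toy example: noisy square on a 32 x 32 grid (hypothetical test data).
rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[8:24, 8:24] = 1.0
f = clean + 0.1 * rng.standard_normal(clean.shape)
u = tv_denoise(f, alpha=0.2)
print(energy(f, f, 0.2), energy(u, f, 0.2))
```

For (VOS) the same iteration structure would apply, with the primal variable collecting u and w and the dual variable collecting \(y_1\) and \(y_2\); only the operator K and the two proximal maps change.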

6.1 Comparison of Best VOS Result to State-Of-The-Art Methods

In the following, we compare the best result of our (VOS) model employing the discretisation described in Sect. 5 with denoising results obtained by using TV, ICTV and second-order TGV regularisation. We measure optimality with respect to SSIM.

In Fig. 6, we demonstrate that by using our unified model (VOS) we are able to obtain a reconstruction of the noisy Trui image superior to TV and comparable to ICTV and second-order TGV with respect to the quality measure SSIM. The task is to reconstruct the image on the top left, which has been corrupted by additive Gaussian noise with zero mean and variance \(\sigma ^2 = 0.05\) (top centre). We would like to stress that this noise level is relatively high compared with most publications on denoising, but we chose it in order to better highlight the visual differences in the reconstructions. In the TV-regularised reconstruction (top right), we choose \(\alpha = \frac{1}{4}\) and obtain an SSIM value of 0.7995. In the ICTV case (bottom left), we select \(\alpha _1 = \frac{1}{2}\) and \(\alpha _0 = \frac{1}{4}\), which gives SSIM = 0.8121. For the second-order TGV-type reconstruction, we set \(\alpha _1 = \alpha _0 = \frac{1}{4}\) and obtain an SSIM value of 0.8141. For better comparison with the ICTV result and the result of our unified model, we mention that the corresponding TGV result with our discretisation at this image resolution yields an SSIM of 0.8131. The best result using our model is shown on the bottom right, choosing \(\alpha = \frac{1}{4.5}, \beta _1 = 0, \beta _2 = \frac{1}{8}, \beta _3 = 1\) and \(\beta _4 = \frac{1}{2}\) and achieving an SSIM value of 0.8136. We would like to draw particular attention to the enhanced reconstruction of the chessboard-like pattern in the scarf as well as of the regions around the eyes and the mouth obtained with our model.

We now present analogous results for the denoising problem on the piecewise affine square test image in Fig. 7, again considering a noise variance of \(\sigma ^2 = 0.05\). In the case of TV denoising (top right), we choose \(\alpha = \frac{1}{2}\), yielding SSIM = 0.9153. On the bottom left, ICTV regularisation with \(\alpha _1 = 1\) and \(\alpha _0 = \frac{1}{2}\) leads to an SSIM value of 0.9509. The parameters for the second-order TGV reconstruction (bottom centre) are \(\alpha _1 = \frac{1}{2}\) and \(\alpha _0 = 2\), for which we obtain an SSIM value of 0.9775. The best result using our model is obtained by setting \(\alpha = \frac{1}{3}\), \(\beta _1 = 4.5\), \(\beta _2 = 90\) and \(\beta _3 = \beta _4 = 9\). This yields the highest SSIM index of the four methods, 0.9844.

6.2 Practical Study of Parameter Combinations

In order to get a better understanding of our novel regulariser and how zero and nonzero values of the different parameters in our model affect the denoising reconstructions, we set up large-scale parameter tests for both the Trui and the piecewise affine test image. We use the discretisation described in Sect. 5 for all experiments, solving (VOS) numerically as described at the beginning of this section. For the Trui image, we select \(\alpha \in \{ \frac{1}{5}, \frac{1}{4.5}, \frac{1}{4} \}\) and \(\beta _i \in \{ 0, \frac{1}{8}, \frac{1}{4}, \frac{1}{2}, 1, 2, 5, 20 \}\), \(i = 1,\dots ,4\), which leads to 12288 different combinations, and for the piecewise affine test image we choose \(\alpha \in \{ \frac{1}{4.5}, \frac{1}{4}, \frac{1}{3.5}, \frac{1}{3} \}\) and \(\beta _i = \frac{b}{\alpha ^2}\), \(b \in \{ 0, \frac{1}{8}, \frac{1}{4}, \frac{1}{2}, 1, 10 \}\), \(i = 1,\dots ,4\), which leads to 5184 different combinations. We use different parameter sets, as our images differ quite significantly in structure and we naturally need a stronger overall regularisation for the less textured and more homogeneous piecewise affine test image. Again, we consider the denoising problem explained above and corrupt the original image by additive Gaussian noise with zero mean and variance \(\sigma ^2 = 0.05\).
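The stated numbers of parameter combinations can be reproduced by enumerating both grids. The sketch below uses exact fractions to avoid rounding issues; the variable names are hypothetical, and each \(\beta _i\) of the piecewise affine grid is assumed to have its own independent value of b, which is what the count of 5184 suggests.

```python
from fractions import Fraction as Fr
from itertools import product

# Trui: alpha in {1/5, 1/4.5, 1/4}, each beta_i from a list of eight values.
trui_alphas = [Fr(1, 5), Fr(2, 9), Fr(1, 4)]
trui_betas = [Fr(0), Fr(1, 8), Fr(1, 4), Fr(1, 2), Fr(1), Fr(2), Fr(5), Fr(20)]
trui_grid = list(product(trui_alphas, *[trui_betas] * 4))

# Piecewise affine image: alpha in {1/4.5, 1/4, 1/3.5, 1/3}, beta_i = b / alpha^2.
affine_alphas = [Fr(2, 9), Fr(1, 4), Fr(2, 7), Fr(1, 3)]
affine_b = [Fr(0), Fr(1, 8), Fr(1, 4), Fr(1, 2), Fr(1), Fr(10)]
affine_grid = [
    (a, *(b / a ** 2 for b in bs))
    for a in affine_alphas
    for bs in product(affine_b, repeat=4)
]

print(len(trui_grid), len(affine_grid))  # 12288 5184
```

Both parameter sets reported as best in Sect. 6.1 are elements of these grids: \(\alpha = \frac{1}{3}\) with \(b = (\frac{1}{2}, 10, 1, 1)\) gives \(\beta = (4.5, 90, 9, 9)\) for the piecewise affine image.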

6.2.1 Trui Test Image

Figure 8 shows histograms for three quality measures we calculated for all obtained reconstructions of Trui in our parameter test: SSIM, PSNR and relative error. It can be immediately observed that in the majority of cases, we get competitive values.
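For orientation, the noise model and two of the three quality measures can be sketched as follows. The exact definitions used for the histograms are not given in this section, so the formulas below (PSNR with peak value 1 and relative error in the Euclidean norm) are common conventions assumed for illustration; SSIM is omitted because it would require an additional image processing library. The test image is a synthetic ramp, not one of the images from the experiments.

```python
import numpy as np

def add_gaussian_noise(u, variance, rng):
    """Additive Gaussian noise with zero mean and the given variance."""
    return u + np.sqrt(variance) * rng.standard_normal(u.shape)

def psnr(u, ref, peak=1.0):
    """Peak signal-to-noise ratio in dB, assuming intensities in [0, peak]."""
    mse = np.mean((u - ref) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

def relative_error(u, ref):
    """Euclidean norm of the error relative to the norm of the reference."""
    return np.linalg.norm(u - ref) / np.linalg.norm(ref)

rng = np.random.default_rng(0)
ref = np.tile(np.linspace(0.0, 1.0, 256), (256, 1))  # synthetic ramp image
noisy = add_gaussian_noise(ref, variance=0.05, rng=rng)

print("PSNR of noisy input [dB]:", psnr(noisy, ref))
print("relative error of noisy input:", relative_error(noisy, ref))
```

With a variance of 0.05 and intensities in \([0, 1]\), the noisy input has a PSNR of roughly \(10 \log _{10}(1/0.05) \approx 13\) dB, which gives a baseline against which reconstructions can be judged.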

Histograms for Trui considering all tested parameter combinations, sub-divided into four cases: (1) all \(\beta _i\) are nonzero (blue), (2) one \(\beta _i\) is equal to zero (orange), (3) two \(\beta _i\) are equal to zero (yellow), (4) three \(\beta _i\) are equal to zero (purple). Note that the bars do not have equal width (Color figure online)

In Fig. 9, we examine the occurrences of various quality measure values for different parameter combinations in more depth. More specifically, we subdivide the results into four classes, depending on how many \(\beta _i\) are nonzero. From this analysis, we can already conclude that scenarios where only one \(\beta _i\) is positive, and hence only a single differential operator acts on the vector field w in the joint vector operator sparsity regularisation term, yield the worst results with respect to our selected measures. Setting two of the \(\beta _i\) to zero seems to be the second-worst case. On the other hand, having all \(\beta _i\) activated yields the best-performing results, which confirms the usefulness and added value of our model and justifies the comparably large number of parameters.
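The sizes of these classes differ considerably, which is worth keeping in mind when reading the histograms. A short sketch, assuming the Trui grid with three values of \(\alpha \) and eight values per \(\beta _i\), counts the combinations by the number of vanishing \(\beta _i\); the variable names are hypothetical.

```python
from collections import Counter
from itertools import product

betas = [0, 1 / 8, 1 / 4, 1 / 2, 1, 2, 5, 20]
n_alpha = 3  # number of alpha values tested for Trui

# Key: number of beta_i equal to zero; value: number of parameter combinations.
counts = Counter()
for combo in product(betas, repeat=4):
    counts[sum(b == 0 for b in combo)] += n_alpha

for n_zero in sorted(counts):
    print(n_zero, counts[n_zero])
```

Under these assumptions, 7203 combinations have all \(\beta _i\) nonzero, 4116 have one zero, 882 have two zeros and 84 have three zeros. The remaining three combinations have all four \(\beta _i\) equal to zero and fall outside the four classes listed.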

Note at this point that for the multi-colour histograms throughout this section, we manually selected the very differently sized intervals for the bars and heavily customised them such that the different classes become well-separated. Consequently, if a bar still contains a variety of colours, they could not be separated further in a reasonable or meaningful manner.

Histograms for Trui in the scenario that two \(\beta _i\) are equal to zero: (1) \(\beta _1 = \beta _2 = 0\) (blue), (2) \(\beta _1 = \beta _3 = 0\) (orange), (3) \(\beta _1 = \beta _4 = 0\) (yellow), (4) \(\beta _2 = \beta _3 = 0\) (purple), (5) \(\beta _2 = \beta _4 = 0\) (green), (6) \(\beta _3 = \beta _4 = 0\) (cyan). Note that the bars do not have equal width (Color figure online)

Histograms for piecewise affine image considering all tested parameter combinations, sub-divided into four cases: (1) all \(\beta _i\) are nonzero (blue), (2) one \(\beta _i\) is equal to zero (orange), (3) two \(\beta _i\) are equal to zero (yellow), (4) three \(\beta _i\) are equal to zero (purple). Note that the bars do not have equal width (Color figure online)

In Fig. 10, we only consider a subset of our results and look at the case where one of the \(\beta _i\) is set to zero, i.e. where three differential operators are active in our joint regulariser. In this scenario, too, we recognise a certain trend. Including the curl in the regularisation does not seem to be essential, since the best results are achieved when its weight is set to zero. In contrast, the divergence appears to be more important, as setting its weight to zero produces worse results in general. This, however, depends strongly on the combination of all five parameters, including the overall regularisation weight \(\alpha \), and in some cases a zero weight on the divergence even yields very good results, especially with respect to the SSIM.