Abstract

Radiation-induced single transient faults (STFs) are expected to evolve into multiple transient faults (MTFs) at nanoscale CMOS technology nodes. For this reason, the reliability evaluation of logic circuits in the presence of MTFs is becoming an important aspect of the design process of deep submicron and nanoscale systems. However, an accurate evaluation of the reliability of large-scale and very large-scale circuits is both very complex and time-consuming. Accordingly, this paper presents a novel soft error reliability calculation approach for logic circuits based on a probability distribution model. The correctness or incorrectness of each logic element is regarded as a random event obeying a Bernoulli distribution. Subsequently, logic element conversion-based fault simulation experiments are conducted to analyze the logical masking effects of the circuit when one logic element fails or when two elements fail simultaneously. On this basis, the reliability boundaries of the logic circuits can be calculated efficiently using the proposed probability model and fault simulation results. The proposed solution can obtain an accurate reliability range through single fault and double faults simulations with small sample sizes, and also scales well with the variation of the error rate of the circuit element. To validate the proposed approach, we have calculated the reliability boundaries of ISCAS’85, ISCAS’89, and ITC’99 benchmark circuits. Statistical analysis and experimental results demonstrate that our method is effective and scalable, while also maintaining sufficiently close accuracy.


1 Introduction

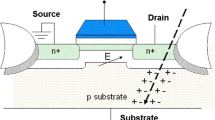

The continuous advances made in the field of semiconductor technology are leading to an increasingly aggressive reduction of device dimensions down to the nanometer scale. As a result, integrated circuits (ICs) are becoming more susceptible to soft errors induced by transient faults (TFs). These faults can be caused by a range of temporary environmental phenomena, such as high-energy particle strikes originating from cosmic rays, capacitive coupling, electromagnetic interference, or power transients [14, 16, 38, 42]. Of these factors, radiation-induced high-energy particle strikes have a particularly serious impact on circuit reliability [5, 55]. When a high-energy particle hits a sensitive transistor, a large number of electron-hole pairs are generated; these electron-hole pairs may change the stored state of flip-flops and latches in sequential circuits, and may also affect the logic value of combinational circuit elements [31]. The TFs caused by a single particle strike in sequential and combinational elements are referred to as single event upsets (SEUs) and single event transients (SETs), respectively [3, 12].

Furthermore, due to the fact that the distance between junctions is decreasing and the critical charge of internal nodes is reducing in today’s technology, the amount of energy contained in radiation particles that is required to cause a multiple transient fault (MTF) is decreasing [20, 23, 54]. Consequently, the probability that a single high-energy particle will affect more than one circuit node is no longer negligible [36, 39, 48]; moreover, if the affected nodes belong to different logic elements, MTFs can be generated and propagated to the primary outputs or flip-flops of circuits [9, 19, 22, 24, 28].

Reliability evaluation has proven to be essential in the early design stages for improving system lifetime [2, 11, 52]. A feasible and accurate approach to evaluating the reliability of logic circuits subject to MTFs is crucial for identifying the features required for future reliable circuits. In this paper, we present an efficient reliability calculation approach for logic circuits based on a probability distribution model. The solution can obtain an accurate reliability range through single fault and double faults simulations, and scales well with the size of logic circuits.

The paper is organized as follows. Related work is reviewed in Section 2. Section 3 introduces the soft error reliability of logic circuits and the probabilistic model for MTFs. The reliability evaluation approach is proposed in Section 4. Section 5 presents simulation experiments on standard benchmark circuits and analyzes the results to validate our approach. Finally, Section 6 concludes the paper.

2 Related Work

Many analysis methods have been proposed to evaluate the soft error-induced reliability of nanoscale logic circuits, as well as more complex systems [10, 36, 45, 49, 53, 56]. One common approach to analyzing the impact of TFs involves injecting numerous fault pulses into the target circuit and simulating the circuit for several different excitation-vectors to determine whether the faults propagate to the circuit outputs [15, 33, 46]. This approach can offer a high level of accuracy through fault simulation with large sample sizes; however, it is also very time-consuming and intractable for very large-scale integrated (VLSI) circuits. For instance, if only 1 millisecond is required to simulate the value of outputs of a circuit for each input vector, an exhaustive algorithm will spend about 50 days calculating the reliability for a relatively small circuit with only 32 input ports (i.e., the number of input vectors is \( 2^{32} \)) [27]. Therefore, the selected sample size directly affects both the evaluation accuracy and calculation time.
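The 50-day figure follows from simple arithmetic, which the short sketch below reproduces. The cost of 1 ms per vector is the text's own assumption; the variable names are illustrative only.

```python
# Exhaustive simulation cost for a circuit with 32 primary inputs,
# assuming (as in the text) 1 ms of simulation time per input vector.
num_inputs = 32
seconds_per_vector = 1e-3

num_vectors = 2 ** num_inputs                  # every possible input vector
total_seconds = num_vectors * seconds_per_vector
total_days = total_seconds / 86_400            # 86,400 seconds per day

print(f"{num_vectors} vectors -> {total_days:.1f} days")
```

Each additional input port doubles this cost, which is why the sample size, rather than exhaustive enumeration, governs the practical trade-off between accuracy and runtime.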

Another type of evaluation method involves analyzing the soft error rates (SERs) and the reliability of circuits by using signal probability theory [3, 4, 32]. This technique develops TF propagation rules for different types of logic gates and employs signal analysis of the fault pulse propagation probabilities through the sensitized paths. Some research suggests that the computational complexity of this approach is linear, and that analyzing the circuit reliability via analytical means is thus orders of magnitude faster than using simulation-based methods [4]. Nevertheless, this approach also requires a significant amount of calculation time when taking reconvergent fanout-induced signal correlations into account. In addition, once the simultaneous occurrence of MTFs is accounted for, the computational complexity of this type of approach increases dramatically, making it unsuitable for the evaluation of large-scale and very large-scale circuits.

A general computational tool based on probabilistic transfer matrices (PTMs) has also been introduced in order to analyze the effects of TFs on logic circuits [21, 29, 30, 37, 40]. The reliability of a given logic block can be represented by its PTM, which expresses all probabilities of its input/output occurrences [21, 29, 40]. The circuit is partitioned into proper logic blocks, and the reliability of the entire circuit is calculated based on the reliability values of all of these blocks. Specific mathematical tools, such as algebraic decision diagrams (ADDs), are used to implement and optimize PTM-based approaches to analyze the circuit reliability [34, 35, 36]. However, as circuit size increases, methods of this kind suffer from an explosion in the required storage space.

In [8], a transient fault propagation metrics (TFPMs)-based reliability evaluation method is proposed. The TFPM of each node is calculated via reverse topological traversal using Boolean operations in parallel. The reliability of the combinational circuits can be evaluated efficiently by means of these fault propagation features. In [26], the fault propagation probabilities from the fault site to the primary outputs or reachable flip-flops are computed; however, the influence of signal correlations cannot be completely resolved, which is also an issue faced by the TFPMs-based approach [8]. A new concept called the statistical vulnerability window (SVW) is proposed to analyze SER in [43]. An SVW is an inference of the necessary conditions for a SET to cause observable errors in the given circuit, and the SER is calculated using a probabilistic formulation based on the parameters of SVWs. In [44], a vulnerability analysis technique and a probabilistic computation model are presented, and the effects of all masking factors are considered in the SER estimation of the circuits without any dependency on the initial width of SETs. Furthermore, a soft error analysis approach for evaluating the vulnerability of combinational circuits is proposed in [10]; this approach can effectively handle single event multiple transients (SEMTs) by combining fault injection techniques with Monte Carlo simulation. In [17], a method with four-value logic is employed to compute the logical masking factor; this technique can handle the effects of both STF and MTF propagation in reconvergent paths. A probabilistic gate model (PGM) that relates the output signal probability to the error and input signal probabilities of an unreliable logic gate is presented in [25], while some approximate and accurate PGM-based computational algorithms for evaluating circuit reliability are also proposed in this work to meet the need for reliability evaluation techniques.

Fast and accurate evaluation of circuit reliability can help IC designers to make targeted fault-tolerant designs [47]. Most reliability evaluation approaches aim to provide an effective tradeoff between accuracy and runtime. However, the reliability evaluation of logic circuits for MTFs still needs improvement, especially for the case of VLSI circuits. Accordingly, in this paper, we present an efficient approach to evaluate the reliability of logic circuits in the presence of MTFs, based on fault simulation and probability analysis. The proposed approach involves decomposing the evaluation objective of the circuit affected by MTFs to different components, after which the principal component and the primary high-order component are calculated accurately. This approach has several advantages: 1) The proposed method can be used to solve the circuit reliability evaluation with multiple transient faults; 2) Since the fault injection and simulation technique is adopted, the influence of signal correlations is fully considered; 3) Simulation experiments and statistical analysis demonstrate that the runtimes of the single fault and double faults simulation are quite acceptable; 4) The results of the proposed method can be easily extended to other manufacturing processes; 5) Our method is suitable for VLSI circuits, even those that are industrial-sized.

3 Circuit Reliability and Probabilistic Model

In this paper, we mainly evaluate the soft error reliability of combinational circuits and extend the research to the sequential circuits with full-scan design structure.

3.1 Reliability of Combinational Circuit

The soft error reliability of a logic circuit is a metric concerning the probability that the values of outputs will be correct despite the occurrence of some TFs in this circuit. In simple terms, the reliability of a combinational circuit is defined as the probability of the outputs having the expected logic values. Let us first consider a combinational logic circuit with m inputs and n outputs, as shown in Fig. 1:

The reliability R of such a circuit can be determined by

where X and Y represent the input vector and output vector of the combinational circuit, respectively. Meanwhile, p(X) represents the probability of a given input vector X, and p(Y = correct|X) refers to the probability that a correct output vector occurs given an input vector X. Obviously, if the probabilities of all input vectors are equal, R can be expressed by

where Xw denotes the w-th input vector X, represented by the binary code of w. For example, when m = 4 and w = 6, X6 refers to the input vector (0110).
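Written out from the definitions above, the two expressions plausibly take the following form. This is a reconstruction from the surrounding text rather than the original typesetting; in particular, the index range of w (assumed here to run from 0 to \( 2^m-1 \), consistent with the binary-code example) is an assumption.

```latex
\begin{align}
R &= \sum_{X} p(X)\, p(Y = \text{correct} \mid X) \tag{1} \\
R &= \frac{1}{2^{m}} \sum_{w=0}^{2^{m}-1} p(Y = \text{correct} \mid X_w) \tag{2}
\end{align}
```

Equation (2) is simply (1) with \( p(X_w) = 1/2^m \) for every input vector.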

3.2 Reliability of Full-Scan Design Sequential Circuit

In this paper, we focus on the soft error reliability evaluation of combinational circuits. Our work here does not involve the reliability analysis of storage elements, nor the propagation and capture of TFs under multiple clock cycles in sequential circuits. However, it is worth noting that, for the purposes of testing, most sequential circuits use a scan design structure in which scan flip-flops (SFFs) are substituted for common D flip-flops (DFFs), as shown in Fig. 2.

In this full-scan design circuit, all storage elements are replaced with scan cells, which are then configured as one or more scan chains during the shift operation. As a result, all inputs to the combinational logic, including those driven by SFFs, can be controlled; moreover, all outputs from the combinational logic, including those driving SFFs, can be observed. That is to say, the outputs and inputs of SFFs can be treated as the primary inputs and primary outputs of the circuit, respectively [8, 51]. We can thereby extend our evaluation method to full-scan sequential circuits.

3.3 Probabilistic Model

When the manufacturing process of CMOS ICs enters the nanometer scale, the pipeline depth of most modules exceeds 20 or even 30, and the operating frequency of circuits increases dramatically. Therefore, the logical masking effect becomes the dominant masking factor in reliability evaluation, and our approach is primarily concerned with it. The calculation results could be refined further by considering technology-dependent factors such as the electrical masking effect and latching-window masking effect [1, 45, 50]. On this premise, the radiation-induced TFs in the circuit can be treated as equivalent to faults of the logic gates. We use the same fault model as in [25, 41], namely PGM, in which it is assumed that any logic gate will fail independently with a constant probability f [25]. While simplistic, this fault model can be extended to reflect the technologies used to build the logic gate by incorporating their failure mechanisms and gate structures. For example, we could develop more reliable models based on a gate’s physical area, transistor-level structure, and robustness to faults, then incorporate these factors into the expression of f [18].

In this paper, we suppose that the logic gates are characterized by an equal probability, f, of independently generating an incorrect output. Vector G = (g1 g2 ... gt) is defined as representing the status of all gates of a circuit, where t is the total number of these circuit gates. Each gi takes a value in {0, 1} such that gi = 1 indicates the occurrence of a fault and gi = 0 signifies the proper operation of the gate i. An example of a small-scale circuit with two faulty gates (gates 2 and 5) is presented in Fig. 3; the corresponding vector G is (0100100). The number of faulty gates in the target circuit is indicated by the number of 1s in vector G. Gt(k) is used to denote that the number of 1s in vector G is k, corresponding to a circuit in which k faults occur simultaneously. According to this notation, Gt(0) is the particular case corresponding to a fault-free circuit.
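The status-vector notation can be illustrated with a few lines of Python. This is only a sketch of the bookkeeping for the seven-gate example; the variable names are hypothetical and not part of the paper.

```python
from math import comb

# Status vector for the seven-gate example of Fig. 3: gates 2 and 5 are faulty.
G = [0, 1, 0, 0, 1, 0, 0]

t = len(G)                                                  # total number of gates
k = sum(G)                                                  # number of 1s, so G is one instance of G_t(k)
faulty_gates = [i + 1 for i, g in enumerate(G) if g == 1]   # 1-based gate indices

print(t, k, faulty_gates)   # 7 2 [2, 5]
print(comb(t, k))           # number of distinct double-fault patterns: 21
```

The count of 21 shows how quickly the number of fault patterns grows with k, which is why higher-order fault combinations are sampled rather than enumerated for large circuits.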

It should be noted here that the reliability R of a circuit includes both correct outputs resulting from fault-free operation and correct outputs resulting from fault masking; in short, some faults or their combinations may not be propagated to the circuit outputs in the end due to the effects of masking.

For a given input vector Xw, and in the presence of k faults, the Boolean equation in (3) indicates that all outputs of the circuit are correct:

where Y(Gt(k); Xw) and Y(Gt(0); Xw) represent the output vectors of the circuit with k faults and of the fault-free circuit under the excitation of Xw, respectively. The operator ‘\( \overline{\oplus} \)’ is the XNOR Boolean operator, applied bitwise to vectors of the same length.

We can rewrite the reliability R as a weighted combination of all possible functions p(k = i), as shown in (4):

Here, p(k = i) is the probability that the number of faulty gates is i, while p(Y = correct| k = i) represents the probability that a correct output vector occurs in the presence of i faults. Using (2), the reliability R can be determined as follows:

Here, p(Y = correct| Xw, k = i) represents the probability that a correct output vector occurs in the presence of i faults given input vector Xw. Considering that \( {C}_t^i \) denotes the number of possible combinations of t gates experiencing i faults simultaneously, such that \( {C}_t^i=\frac{t!}{\left(t-i\right)!i!} \), we use the expression Y(Gt(i): j; Xw) (j = 1, 2, ...,\( {C}_t^i \)) to denote the output vector that corresponds to one possible combination situation in the presence of i faults given input vector Xw. Equation (5) can be rewritten as follows:

Since each gate is considered to fail independently with a constant probability, the state of each gate is a Bernoulli random variable, and the number of faulty logic gates therefore follows a binomial distribution with parameters t and f; that is to say, p(k = i) can be expressed as follows:

Equations (6) and (7) can be combined and simplified to

where Ti denotes the probability that the output vectors of the circuit are correct in the presence of i faults, so Ti ∈[0, 1].
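The key relations of this derivation can be written out as follows. This is a reconstruction from the definitions in the text, not the original typesetting; it assumes that \( T_i \) is identified with \( p(Y=\text{correct}\mid k=i) \), averaged over all input vectors and all \( C_t^i \) fault combinations.

```latex
\begin{align}
R &= \sum_{i=0}^{t} p(k=i)\, p(Y=\text{correct} \mid k=i) \tag{4} \\
p(k=i) &= C_t^{i}\, f^{i} (1-f)^{t-i} \tag{7} \\
R &= \sum_{i=0}^{t} C_t^{i}\, f^{i} (1-f)^{t-i}\, T_i \tag{8}
\end{align}
```

Substituting (7) into (4) gives (8) directly, so the reliability is a binomially weighted average of the masking probabilities \( T_i \).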

4 Proposed Evaluation Approach

Our work aims to accurately and efficiently evaluate the soft error reliability for nanoscale logic circuits. Accordingly, in this section, we present some concepts pertaining to reliability boundaries, along with details of their expressions and calculation methods. In addition, we describe the fault simulation algorithms that will be used in the following experiments.

4.1 Reliability Boundaries

According to the description of Ti in (8), and considering that the circuit outputs correct results when all of its logic gates are fault-free, we can conclude that T0 = 1. We decompose (8) as follows:

It can be seen from (9) that \( R\ge {\left(1-f\right)}^t \). For a given f, the lowest limit of circuit reliability is expressed by \( {R}_{c\text{-}lower}={\left(1-f\right)}^t \), which is termed the conservative lower limit (Rc-lower). The latter part of (9) can be considered as the sum of i-order (i = 1, 2, ..., t) components of circuit reliability. The principal component superimposed on Rc-lower is the first-order component R1, which can be expressed as follows:

As the feature size and power supply voltage of ICs decrease, the error rate of logic elements struck by high-energy particles tends to increase, and the simultaneous occurrence of MTFs becomes more likely. Therefore, the influence of high-order components of circuit reliability cannot be ignored. This paper focuses on the calculation and analysis of first- and second-order components and evaluates the reliability of logic circuits in a reasonable time by combining mathematical statistics. Similar to the first-order component of reliability (i.e., R1) above, we represent the second-order component of circuit reliability using R2, which can be expressed as:
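Under the binomial model for the number of faulty gates and with T0 = 1, the decomposition and its first two components can be written as below. This is a reconstruction from the surrounding definitions and should be read as a sketch of equations (9) to (11), not as the original typesetting.

```latex
\begin{align}
R &= (1-f)^{t} + \sum_{i=1}^{t} C_t^{i}\, f^{i}(1-f)^{t-i}\, T_i \tag{9} \\
R_1 &= C_t^{1}\, f (1-f)^{t-1}\, T_1 = t f (1-f)^{t-1}\, T_1 \tag{10} \\
R_2 &= C_t^{2}\, f^{2} (1-f)^{t-2}\, T_2 \tag{11}
\end{align}
```

Each component is the probability of exactly i simultaneous faults multiplied by the probability that such a fault pattern is logically masked.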

We next define four reliability boundaries: these are referred to as the first-order lower limit, first-order upper limit, second-order lower limit, and second-order upper limit respectively, and are calculated using eqs. (12) to (15):

When we calculate the first-order lower limit of circuit reliability using (12), we are in fact ignoring all high-order components and assuming that the circuit will fail in the presence of MTFs, which is a pessimistic situation. Moreover, the calculation of the first-order upper limit of reliability using (13) is another extreme case: at this point, all the Ti (i ≥ 2) are regarded as 1, which is an optimistic situation. Equations (14) and (15), by contrast, take the second-order components of reliability into account, which will evidently make the circuit reliability calculation results more accurate.

By considering the equation \( \sum \limits_{i=0}^tP\left(k=i\right)=1 \), (12) to (15) can be further expressed as follows:
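A reconstruction of the four boundaries and of their closed forms, derived from the verbal definitions in this section (higher-order \( T_i \) taken as 0 for lower limits and as 1 for upper limits), is given below. The assignment of equation numbers follows the order in which the limits are named in the text and is an assumption.

```latex
\begin{align}
R_{lower1} &= R_{c\text{-}lower} + R_1 \tag{12} \\
R_{upper1} &= R_{c\text{-}lower} + R_1 + \sum_{i=2}^{t} p(k=i) \tag{13} \\
R_{lower2} &= R_{c\text{-}lower} + R_1 + R_2 \tag{14} \\
R_{upper2} &= R_{c\text{-}lower} + R_1 + R_2 + \sum_{i=3}^{t} p(k=i) \tag{15} \\[4pt]
R_{lower1} &= (1-f)^{t} + t f (1-f)^{t-1}\, T_1 \tag{16} \\
R_{upper1} &= 1 - t f (1-f)^{t-1} (1 - T_1) \tag{17} \\
R_{lower2} &= (1-f)^{t} + t f (1-f)^{t-1}\, T_1 + C_t^{2} f^{2} (1-f)^{t-2}\, T_2 \tag{18} \\
R_{upper2} &= 1 - t f (1-f)^{t-1} (1 - T_1) - C_t^{2} f^{2} (1-f)^{t-2} (1 - T_2) \tag{19}
\end{align}
```

The upper limits in (17) and (19) follow by replacing the tail sums in (13) and (15) with one minus the probabilities of zero, one, and (for the second order) two faults.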

The remainder of this section will focus on obtaining T1 and T2 by means of fault simulations.
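Once T1 and T2 are known, the boundaries reduce to a few arithmetic operations. The following sketch assumes the binomial fault-count model described in Section 3.3; the function name `reliability_bounds` and the example values are hypothetical and are not taken from the paper's tables.

```python
from math import comb

def reliability_bounds(t, f, T1, T2):
    """Return the conservative lower limit and the four reliability boundaries."""
    p0 = (1 - f) ** t                              # no gate fails
    p1 = t * f * (1 - f) ** (t - 1)                # exactly one gate fails
    p2 = comb(t, 2) * f**2 * (1 - f) ** (t - 2)    # exactly two gates fail
    return {
        "Rc_lower": p0,
        "Rlower1": p0 + p1 * T1,                        # all multi-fault cases assumed to fail
        "Rupper1": 1 - p1 * (1 - T1),                   # all multi-fault cases assumed masked
        "Rlower2": p0 + p1 * T1 + p2 * T2,              # three or more faults assumed to fail
        "Rupper2": 1 - p1 * (1 - T1) - p2 * (1 - T2),   # three or more faults assumed masked
    }

# Illustrative values only (not taken from the paper's tables).
bounds = reliability_bounds(t=1000, f=1e-4, T1=0.3, T2=0.1)
for name, value in bounds.items():
    print(f"{name}: {value:.6f}")
```

Because f enters only through these closed-form expressions, the same T1 and T2 can be reused to recompute the boundaries for any other gate failure probability without repeating the fault simulation.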

4.2 Fault Simulation Algorithm

Fault injection, combined with the simulation technique, is used in this paper to calculate the principal and second-order components of circuit reliability; because the whole circuit is simulated, the influence of reconvergent fanout-induced signal correlations is fully accounted for.

1) Single Fault Simulation:

The calculations of T1 and T2 are realized using the single fault simulation algorithm and the double faults simulation algorithm respectively. The steps for calculating T1 are outlined in Algorithm I below.

As shown in Algorithm I, for each excitation-vector, all logic gates in the circuit take turns at being faulty gates. Algorithm I converts the faulty gate into the corresponding opposite element to simulate the faulty circuit: for example, we convert an AND gate to a NAND gate, an XOR gate to an XNOR gate, and so on. Table 1 lists the various types of logic gates and their opposite elements.

We again consider the circuit in Fig. 3 as an example to illustrate the execution process of Algorithm I. As shown in Fig. 4, we load four input vectors into the primary inputs of the circuit to simulate the fault-free circuit. The correct logic values of all gates are labeled above the wires; by contrast, the simulated results of the faulty circuit are labeled below the corresponding wires. According to the primary outputs of gates 6 and 7, the fault induced by gate 2 can only be logically masked by the excitation of the input vector (11111) among the given 4 input vectors. As can be seen from this figure, in order to evaluate the circuit reliability, we need only to re-simulate the logic gates contained in the output cone of the faulty gate (the sections enclosed by the two dotted lines in Fig. 4). However, simulating the logic gates in the output cone will save only limited time if the faulty gate is close to the primary inputs, while searching for the output cone of the faulty gate will also be time-consuming. Therefore, we replace the faulty gate with its opposite element and re-simulate the entire replaced circuit in Algorithm I. Furthermore, we describe only a 4-bit parallel simulation in this example in the interests of simplicity; in our actual simulation experiment, a parallel simulation occupying a full number of bits per memory unit is used.
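The procedure described above can be sketched in Python as follows. This is a minimal illustration, not the authors' Algorithm I: the netlist format (a topologically ordered list of `(name, type, fan-in)` tuples), the function names, the 64-bit word width, and the opposite-element mapping are all assumptions, the last one following the AND/NAND and XOR/XNOR examples given in the text.

```python
import random
from functools import reduce

WIDTH = 64                      # input vectors simulated in parallel per word
MASK = (1 << WIDTH) - 1
BASE = {
    "AND": lambda v: reduce(lambda a, b: a & b, v),
    "OR":  lambda v: reduce(lambda a, b: a | b, v),
    "XOR": lambda v: reduce(lambda a, b: a ^ b, v),
    "BUF": lambda v: v[0],
}
INVERTED = {"NAND": "AND", "NOR": "OR", "XNOR": "XOR", "NOT": "BUF"}
# Opposite element of every gate type (assumed mapping, e.g. AND <-> NAND).
OPPOSITE = {**INVERTED, **{base: inv for inv, base in INVERTED.items()}}

def evaluate(gate_type, values):
    """Evaluate one gate on bit-packed input words."""
    if gate_type in BASE:
        return BASE[gate_type](values) & MASK
    return ~BASE[INVERTED[gate_type]](values) & MASK

def simulate(netlist, inputs, outputs, faulty=()):
    """Simulate the whole circuit; gates named in `faulty` are replaced by their opposites."""
    sig = dict(inputs)
    for name, gtype, fanin in netlist:
        if name in faulty:
            gtype = OPPOSITE[gtype]
        sig[name] = evaluate(gtype, [sig[x] for x in fanin])
    return [sig[o] for o in outputs]

def estimate_T1(netlist, pis, pos, n_words, rng):
    """Fraction of (input vector, single faulty gate) cases with all outputs correct."""
    correct = total = 0
    for _ in range(n_words):
        inputs = {pi: rng.getrandbits(WIDTH) for pi in pis}
        golden = simulate(netlist, inputs, pos)
        for name, _, _ in netlist:            # every gate takes its turn as the faulty gate
            faulty_out = simulate(netlist, inputs, pos, faulty={name})
            diff = 0
            for g, y in zip(golden, faulty_out):
                diff |= g ^ y                 # bit set where any output differs
            correct += WIDTH - bin(diff).count("1")
            total += WIDTH
    return correct / total

# Tiny hypothetical circuit: g1 = AND(a, b), g2 = OR(g1, c), output g2.
netlist = [("g1", "AND", ["a", "b"]), ("g2", "OR", ["g1", "c"])]
T1 = estimate_T1(netlist, ["a", "b", "c"], ["g2"], n_words=200, rng=random.Random(1))
print(f"T1 ~ {T1:.3f}")
```

In this toy circuit a fault in g2 is never masked, while a fault in g1 is masked exactly when c = 1, so the estimate should lie close to 0.25. As in the text, the sketch re-simulates the entire circuit for each faulty gate instead of searching for its output cone.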

2) Double Faults Simulation:

In our work, the number of simulated double faults is preset, and the locations of these double faults are randomly selected. Accordingly, the double faults include not only pairs of nodes that are adjacent in the logic-level netlist or in the layout [17], but also simultaneous faults of two distant nodes caused by multiple particle strikes. The main steps of the proposed calculation of T2 are summarized in Algorithm II below.

Two randomly selected faulty gates in the circuit may not affect each other; alternatively, one of the faulty gates may be the driving gate of the other. The locations and relationships of two faulty gates are presented in Fig. 5. In order to simulate the double faults circuit, we convert the two faulty gates into their corresponding opposite gates. Such an operation is not only applicable to two independent faulty gates, but is also well suited to the case in which one faulty gate drives the other.
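A minimal sketch of the double-fault procedure is given below. It is not the authors' Algorithm II: the two-input netlist format, the function names, and the sampling parameters `n_pairs` and `n_words` are hypothetical, and converting a gate to its opposite element is modelled simply as inverting its output.

```python
import random

WIDTH = 64                      # input vectors simulated in parallel per word
MASK = (1 << WIDTH) - 1

def gate_output(gtype, a, b, inverted):
    """Two-input gate on bit-packed words; `inverted` swaps in the opposite element."""
    out = {"AND": a & b, "OR": a | b, "XOR": a ^ b,
           "NAND": ~(a & b), "NOR": ~(a | b), "XNOR": ~(a ^ b)}[gtype]
    return (~out if inverted else out) & MASK

def simulate(netlist, inputs, outputs, faulty=frozenset()):
    """Simulate the whole circuit; the netlist is assumed to be in topological order."""
    sig = dict(inputs)
    for name, gtype, (x, y) in netlist:
        sig[name] = gate_output(gtype, sig[x], sig[y], name in faulty)
    return [sig[o] for o in outputs]

def estimate_T2(netlist, pis, pos, n_pairs, n_words, rng):
    """Fraction of (input vector, random faulty pair) cases with all outputs correct."""
    names = [name for name, _, _ in netlist]
    correct = total = 0
    for _ in range(n_pairs):                       # preset number of double faults
        pair = frozenset(rng.sample(names, 2))     # randomly selected fault locations
        for _ in range(n_words):
            inputs = {pi: rng.getrandbits(WIDTH) for pi in pis}
            golden = simulate(netlist, inputs, pos)
            faulty_out = simulate(netlist, inputs, pos, pair)
            diff = 0
            for g, y in zip(golden, faulty_out):
                diff |= g ^ y                      # bit set where any output differs
            correct += WIDTH - bin(diff).count("1")
            total += WIDTH
    return correct / total

# Hypothetical chain: g1 = AND(a, b) drives g2 = XOR(g1, c); output g2.
netlist = [("g1", "AND", ("a", "b")), ("g2", "XOR", ("g1", "c"))]
T2 = estimate_T2(netlist, ["a", "b", "c"], ["g2"], n_pairs=5, n_words=10, rng=random.Random(1))
print(T2)   # 1.0
```

The example illustrates the driving-gate relationship: inverting g1 and then replacing the XOR by an XNOR restores the original output for every input, so the two faults cancel and the double fault is always masked. Handling both faults in one full-circuit simulation captures such interactions without any special-case logic.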

The values of T1 and T2 are obtained using Algorithm I and Algorithm II, respectively. In the case of a given f, four circuit reliability boundaries can be calculated according to (16)–(19), after which the reliability of the circuit is analyzed on this basis.

Equation (8) shows that the more reliable a single logic gate is, the lower the probability of MTFs will be. Therefore, the impact of three or more transient faults on the overall reliability of the circuit is smaller in the current CMOS technology. Considering more transient faults may improve the accuracy of evaluation results, but it also consumes a lot of extra calculation time. In fact, our scheme can easily be extended to the case where three or more transient faults are considered.

5 Experimental Results

Using the proposed method of reliability boundary calculation and logic element conversion-based fault simulations, we have performed a deep analysis of the impact of MTFs on the reliability of nanoscale logic circuits. In order to verify the accuracy and validity of our proposed reliability evaluation approach, a series of experiments are carried out in this section and their results are presented and analyzed.

5.1 Fault Simulation of Benchmark Circuits

In this part, T1 and T2 of some benchmark circuits are calculated via fault simulation experiments. The characteristics and simulation results of the ISCAS’85 [6], ISCAS’89 [7], and ITC’99 [13] benchmark circuits are presented in Tables 2, 3, and 4, respectively. All experiments in this section were performed on a system equipped with an Intel(R) Xeon(R) E5 CPU at 2.7 GHz and 4 GB of main memory.

In Table 2, the first column presents the names of the ISCAS’85 benchmark circuits, while the second column shows the number of gates contained in each experimental circuit, followed in parentheses by the numbers of its primary inputs and primary outputs. The third and fourth columns present the values of T1 and T2 obtained by our proposed fault simulation algorithms. It should be noted here that the experimental circuit C432 in our paper consists of 18 XOR gates, which may be different from the netlist file of C432 in some other works. The time required for simulation depends not only on the size of the circuit, but also on the number of excitation-vectors applied. Depending on the number of primary inputs, the number of excitation-vectors applied to the benchmark circuits listed in this table ranges from 1000 to 10,000. Moreover, all figures for T1 and T2 are averages of the simulation results from 10 groups. The accuracy of these experimental results is explored further in Section 5.4.

The characteristics and fault simulation results of the ISCAS’89 benchmark circuits are listed in Table 3. The main difference between Tables 2 and 3 is the second column: the PPIs (pseudo-primary inputs) and PPOs (pseudo-primary outputs) in the second column of Table 3 are the outputs of SFFs and inputs of SFFs of the full-scan design sequential circuits respectively. For full-scan sequential circuits, PIs and PPIs constitute the inputs of the combinational logic, while POs and PPOs are outputs of the combinational logic.

Table 4 presents the results of some larger experimental circuits. Because the calculation time grows rapidly with circuit size, we set fewer excitation-vectors for b18 and b19, so the standard deviations of the results of multiple simulations may increase for these two benchmark circuits. However, as can be seen in Table 7, the values of T1 and T2 are less important to the reliability of such very large-scale circuits in some cases.

5.2 Circuit Reliability

We employ the reliability boundary evaluation method outlined in Section 4 to calculate the lower and upper limits of circuit reliability. The conservative lower limit (Rc-lower) and the four reliability boundaries (i.e., Rlower1, Rupper1, Rlower2, and Rupper2) of the three groups of benchmark circuits are presented in Tables 5, 6, and 7, respectively. All reliability boundaries are based on a constant failure probability f (f = 1e-4), which is consistent with the current development of CMOS technology; however, the proposed method applies equally to other values of f. As shown in Tables 5 to 7, for a given f, one basic trend that can be observed is that circuit reliability decreases as circuit size increases, while the distance between the upper and lower limits of reliability grows with circuit size.

5.3 Reliability Comparison

In this part, we select five large combinational circuits and five large full-scan design sequential circuits and plot their reliability boundaries in the form of curves; see Fig. 6(a) and (b), respectively. Taking Fig. 6(a) as an example, the reliability curve at the top connects the first-order upper limits of reliability of these five combinational circuits, while the next-highest curve represents the second-order upper limits of the reliability of the experimental circuits. By contrast, the bottom curve and the next-lowest curve describe the first-order lower limits and the second-order lower limits of the reliability of these circuits, respectively. Moreover, there is a red curve in the middle of the reliability curves, which is labeled “PGM-Simulation” and has been added for the sake of comparison. In PGM-Simulation, all logic gates fail with a specific probability; in line with this premise, we test a large number (over 1 million) of experimental samples, including various random input vectors, to calculate the PGM-based reliability of circuits. We take the results of PGM-Simulation as a relatively accurate reliability reference standard. As shown in these two figures, among the 10 experimental circuits, the PGM-Simulation results for only two circuits (i.e., C6288 and S35932) are outside the range of Rupper2 and Rlower2 (Section 5.4 shows that the calculation results of our method for these two circuits are still accurate and effective). In addition, we can see from Fig. 6 that the smaller the circuit size, the closer the calculated reliability boundaries are to the actual reliability of the circuit.

5.4 Confidence Interval of Reliability

Figure 6 shows that the PGM-Simulation results for C6288 and S35932 fall slightly outside the second-order boundaries of circuit reliability. The specific PGM-Simulation results for C6288 and S35932 are 0.805978 and 0.536673, both of which are slightly less than the second-order lower limits of these two experimental circuits. Accordingly, in order to demonstrate the accuracy and credibility of our reliability boundary calculation results, we next analyze the results of ten repetitions of the fault simulation experiment. Table 8 lists the ten sets of experimental data for C6288 and S35932. Taking T1 of C6288 as an example, it can be regarded as a random variable X, and the value of T1 obtained in each experiment is a random sample from the population. X is approximately normally distributed, i.e., \( X\sim N\left(\mu, {\sigma}^2\right) \). It follows that \( \frac{\overline{X}-\mu }{S/\sqrt{n}}\sim t\left(n-1\right) \), where \( \overline{X} \) and S are the sample mean and sample standard deviation of the n experiments. Thus, the confidence interval of μ is \( \left(\overline{X}\pm \frac{S}{\sqrt{n}}{t}_{\alpha /2}\left(n-1\right)\right) \) given the confidence level (1 − α). From Table 8, we obtain \( \overline{x}=0.105277 \) and s = 3.96092e-5. If the confidence level is 0.99, the confidence interval of T1 of C6288 is (0.105236, 0.105318); similarly, at a confidence level of 0.99, the confidence interval of T2 of C6288 is (0.028179, 0.028245). We can further calculate that the confidence intervals of T1 and T2 for S35932 are (0.661162, 0.661400) and (0.475286, 0.475356), respectively, at a confidence level of 0.99. According to (18) and (19), the value of Rlower2 reaches its minimum only when T1 and T2 take their minimum values (here, the lower ends of their confidence intervals). Therefore, the minimum Rlower2 of C6288 and the minimum Rlower2 of S35932 are 0.805976 and 0.536665, respectively; that is to say, even for these two circuits, the PGM-Simulation results have a probability in excess of 0.99 of falling inside the second-order boundaries of circuit reliability.
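The confidence interval for T1 of C6288 can be checked with a few lines of Python. The sketch below uses the sample statistics quoted in the text; the critical value of the t-distribution is hard-coded from standard tables instead of being computed, and the function name is hypothetical.

```python
from math import sqrt

def t_confidence_interval(mean, std, n, t_crit):
    """Two-sided confidence interval for the mean, using a Student-t critical value."""
    half_width = t_crit * std / sqrt(n)
    return mean - half_width, mean + half_width

# Sample statistics quoted in the text for T1 of C6288 (n = 10 experiments).
# T_CRIT is the two-sided 99% critical value of t(9) from standard tables
# (approximately 3.250); hard-coding it is an assumption made to avoid dependencies.
T_CRIT = 3.2498
low, high = t_confidence_interval(0.105277, 3.96092e-5, 10, T_CRIT)
print(f"({low:.6f}, {high:.6f})")   # (0.105236, 0.105318)
```

The result agrees with the interval reported in the text, and the same function applies unchanged to T2 and to the other benchmark circuits.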

The confidence intervals of our single fault and double faults simulation results for eight benchmark circuits in Fig. 6 (except for C6288 and S35932) are shown in Table 9. In this table, the second and third columns present the confidence intervals of T1 and T2 respectively at a given confidence level of 0.99. The relative errors of T1 and T2 (i.e., Δ1 and Δ2) are listed in the last two columns. Here, Δ1 signifies the ratio of the difference between the average value of 10 experiments of T1 (i.e., \( \overline{T_1} \)) and CImin to \( \overline{T_1} \), which can be expressed as follows:

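Alternatively, because the confidence interval is symmetric about \( \overline{T_1} \), Δ1 can also be expressed in terms of its upper endpoint CImax as

\( {\Delta}_1=\frac{{CI}_{\max }-\overline{T_1}}{\overline{T_1}} \)  (23)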

Similar to the above, Δ2 can be obtained by (22) or (23).
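As a worked illustration of this definition, the relative error equals the half-width of the confidence interval divided by the mean. The numbers below are the C6288 values for T1 quoted earlier; C6288 does not appear in Table 9, so they serve only to show the arithmetic:

\( {\Delta}_1=\frac{\overline{T_1}-{CI}_{\min }}{\overline{T_1}}=\frac{{t}_{\alpha /2}\left(n-1\right)\,S}{\sqrt{n}\,\overline{T_1}}=\frac{0.105277-0.105236}{0.105277}\approx 3.9\times {10}^{-4} \)

A relative error of this size means that the endpoints of the 0.99 confidence interval lie within about 0.04% of the mean.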

Table 9 shows that the single fault and double faults simulation results vary very little across the ten experiments, which supports the accuracy and effectiveness of our evaluation approach.

5.5 Impact of Parameter f on Reliability Boundaries

In this part, we study the influence of the failure probability f on the reliability boundary calculation. As shown in Table 7, the second-order boundaries of circuit reliability of b18 and b19 are meaningless when f is 1e-4. The reason is that, as the circuit size increases, the probability of more than two logic elements failing simultaneously becomes very large when f = 1e-4; under these circumstances, the third-order and even higher-order components of circuit reliability cannot be ignored.
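The effect can be made concrete with a short calculation. The sketch below assumes, in line with the Bernoulli model used in this paper, that each of N logic elements fails independently with probability f, so the number of simultaneous failures is binomial. The element count `N_HYPOTHETICAL` is an illustrative value chosen for this example, not the actual size of b18 or b19.

```python
import math


def prob_more_than_two_failures(n_elements, f):
    """P(more than two of n_elements fail), each failing independently w.p. f."""
    log_q = math.log1p(-f)  # log(1 - f), computed accurately for small f
    p_at_most_two = sum(
        math.comb(n_elements, k) * f**k * math.exp((n_elements - k) * log_q)
        for k in range(3)
    )
    return 1.0 - p_at_most_two


# Hypothetical circuit size, chosen only to illustrate the trend.
N_HYPOTHETICAL = 100_000

for f in (1e-4, 1e-5, 1e-6):
    p = prob_more_than_two_failures(N_HYPOTHETICAL, f)
    print(f"f = {f:.0e}: P(more than two failures) = {p:.4g}")
```

For a circuit of this assumed size, almost every trial at f = 1e-4 involves three or more failures, so the terms beyond second order dominate; at f = 1e-6 such events are rare and truncating at second order loses little.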

Figures 7(a) to 7(d) illustrate that the reliability boundaries are strongly affected by the parameter f. For four large-scale scan design sequential circuits, Figs. 7(a) to 7(d) show the second-order boundaries of circuit reliability as f is varied; note that the X-axes in these four figures use a negative logarithmic scale. As can be seen from these figures, as f decreases, the second-order upper limit and the second-order lower limit of reliability gradually approach each other until they finally coincide. Accordingly, this experiment demonstrates that the more reliable the logic elements, the more effective our proposed reliability evaluation approach, particularly for VLSI circuits.

5.6 Runtime

For medium-scale integrated (MSI) circuits and some large-scale integrated (LSI) circuits, the fault simulation time of our proposed method is slightly less than that of PGM-Simulation. As the circuit size reaches the very large-scale level, however, the time advantage of the single fault and double faults simulations gradually becomes apparent. Compared with PGM-Simulation, the single fault and double faults simulations require fewer simulation samples to obtain accurate results; moreover, once T1 and T2 are obtained, the circuit reliability can be calculated directly from the proposed probability distribution model, regardless of any change in f. In short, the time-consuming simulation experiments do not need to be repeated when f changes, which greatly reduces the time required for reliability evaluation. In addition, the time required by our method can be controlled by adjusting the number of simulations, provided that the accuracy of the evaluation results is not significantly affected. The runtimes of PGM-Simulation and the proposed fault simulation algorithms for six benchmark circuits are presented in Fig. 8; note that the Y-axis in this figure is logarithmic. As can be seen from Fig. 8, the speedup of our method grows with circuit size. For the VLSI circuits of the ISCAS’89 and ITC’99 benchmarks, our method is about two orders of magnitude faster than the large sample size-based PGM-Simulation, while still maintaining sufficiently close accuracy when f is appropriate.
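The reuse of T1 and T2 across different values of f can be sketched as follows. This is an illustration under stated assumptions, not a reproduction of equations (18) and (19), which remain the authoritative definitions: T1 and T2 are read as the probabilities that the output is still correct when exactly one or exactly two elements fail; the lower bound treats every case with three or more failures as an error and the upper bound treats every such case as masked; and the element count 2416 for C6288 is an assumed figure.

```python
import math


def binomial_pmf(n, k, f):
    """P(exactly k of n elements fail), each failing independently w.p. f."""
    return math.comb(n, k) * f**k * math.exp((n - k) * math.log1p(-f))


def second_order_bounds(n, f, t1, t2):
    """Assumed second-order reliability bounds (lower, upper).

    t1, t2: probability of a correct output given exactly one / two failures.
    """
    p0, p1, p2 = (binomial_pmf(n, k, f) for k in range(3))
    lower = p0 + p1 * t1 + p2 * t2                    # >= 3 failures: all wrong
    upper = lower + max(0.0, 1.0 - p0 - p1 - p2)      # >= 3 failures: all masked
    return lower, upper


N_C6288 = 2416                    # assumed element count for C6288
T1, T2 = 0.105236, 0.028179       # lower ends of the 0.99 intervals quoted earlier

# T1 and T2 are simulated once; only this cheap formula is re-evaluated per f.
for f in (1e-4, 1e-5, 1e-6):
    lower, upper = second_order_bounds(N_C6288, f, T1, T2)
    print(f"f = {f:.0e}: lower = {lower:.6f}, upper = {upper:.6f}")
```

Under these assumptions, the lower bound at f = 1e-4 comes out at approximately 0.80598, in line with the minimum Rlower2 quoted earlier for C6288, and the gap between the two bounds shrinks as f decreases.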

6 Conclusion and Future Work

To properly manage the tradeoffs between the conflicting goals of maximizing reliability and minimizing fault tolerance costs, it is essential that the reliability of future nanoscale logic circuits be accurately and efficiently evaluated. However, the large number of fault combinations and the exponential growth of the input vector space could effectively prohibit the efficient calculation of reliability for large-scale and very large-scale circuits in the absence of a suitable solution. Accordingly, the main contribution of this paper is the development of an efficient and scalable method for calculating the reliability boundaries of a circuit with high accuracy and within a reasonable time, even for VLSI circuits with highly reliable logic elements. Our approach combines a novel Bernoulli distribution model-based reliability calculation method, which decomposes the evaluation objective of circuits, with single fault and double faults simulations, which obtain the principal and primary high-order components of reliability. The proposed method scales well with circuit size, and its fault simulation results are independent of the error rate of the logic element. Moreover, the evaluation speed is orders of magnitude faster than the large sample size-based PGM-Simulation. By comparison, the accuracy of approximate analytical methods is very low because the influence of signal correlation is not considered, whereas accurate analytical methods must fully consider the influence of signal correlation, so their calculation time increases exponentially with the number of reconvergent fan-out nodes. Analytical methods also become more complicated in the presence of MTFs. The method proposed in this paper can be applied to the evaluation of large-scale and very large-scale circuits, where it exceeds the analytical methods in accuracy and scalability.

In the future, our approach will take into account the relationship between the location of the double fault nodes and the gate-level netlist or layout. In addition, we will conduct research into the acceleration of simulation techniques. For example, fault simulation speed could be improved by using graphics processing units (GPUs) for parallel implementations.

Responsible Editor: B. Ghavami

This work was supported by the National Natural Science Foundation of China (NSFC) (Grant No.61702052, No.61504013, No.61804037), Hunan Provincial Natural Science Foundation of China (Grant No. 2020JJ4622, No. 2019JJ50648), and the Scientific Research Fund of Hunan Provincial Education Department (Grant No.18A137, No.17B011).

Cai, S., He, B., Wang, W. et al. Soft Error Reliability Evaluation of Nanoscale Logic Circuits in the Presence of Multiple Transient Faults. J Electron Test 36, 469–483 (2020). https://doi.org/10.1007/s10836-020-05898-x

DOI: https://doi.org/10.1007/s10836-020-05898-x